Abstract

We introduce a method for computing probabilities for spontaneous activity and propagation failure of the action potential in spatially extended, conductance-based neuronal models subject to noise, based on statistical properties of the membrane potential. We compare different estimators with respect to the quality of detection, computational cost and robustness, and propose the integral of the membrane potential along the axon as an appropriate estimator to detect both spontaneous activity and propagation failure. Performing a model reduction, we obtain a simplified analytical expression based on the linearization at the resting potential (resp. the traveling action potential). This allows us to approximate the probabilities for spontaneous activity and propagation failure in terms of (classical) hitting probabilities of one-dimensional linear stochastic differential equations. The quality of the approximation with respect to the noise amplitude is discussed and illustrated with numerical results for the spatially extended Hodgkin-Huxley equations. Python simulation code is supplied on GitHub under the link https://github.com/deristnochda/Hodgkin-Huxley-SPDE.


1 Introduction

Noise is an inherent component of neural systems and gives rise to various problems in information processing at all levels of the nervous system; see e.g. the review by Faisal et al. (2008) for a detailed discussion. In particular, channel noise has been identified as an important source of variability in single neurons, for example in the noise-induced phenomena observed in Faisal and Laughlin (2007). The timing of action potentials can be highly sensitive to fluctuations in the opening and closing of ion channels, leading to jitter and stochastic interspike intervals (Horikawa 1991). This effect becomes important in thin axons with a diameter of less than 1 μm. Furthermore, stochastic patterns appear in the grouping of action potentials, and action potentials can vanish due to noise or emerge spontaneously without apparent synaptic input.

When modeling the membrane potential in axons, in particular in thin ones, channel noise therefore has to be taken into account. For a discussion and comparison of the various ways of adding noise to conductance-based neuronal models such as the classical Hodgkin-Huxley equations, we refer to Goldwyn and Shea-Brown (2011). Concerning spatially extended models, it has been shown, e.g. in Tuckwell (2008) and Tuckwell and Jost (2010, 2011), that even simple additive noise, uncorrelated in space and time, accounts for a large range of variability in the action potential. This includes variability in the repetitive generation of action potentials, deletion of action potentials or propagation failures, and spontaneously emerging action potentials, i.e. spontaneous activity. Because of this observation, we restrict ourselves to such a simple noise model, which, as a byproduct, reduces the computational and analytical complexity. However, the proposed detection and estimation method can be applied to more complex models; e.g. even the full Markov chain dynamics of channel noise can be used.

The purpose of this work is to introduce a method to compute the probabilities of the last two events, propagation failure and spontaneous activity, in a mathematically consistent way. This is done for general spatially extended neuronal models with additive noise, both numerically and theoretically, in terms of statistical quantities of the membrane potential. A suitable statistical estimator for such characteristics should have the following properties: it can be evaluated automatically, so that Monte-Carlo simulations are feasible; it strictly separates the considered event from other ones; it is a low-dimensional function of the observables; and it is relatively robust to stochastic perturbations and uncertainty in the observables. We compare different estimators with respect to the quality of detection, computational cost and robustness. In order to further reduce the computational cost and to obtain a simpler analytical description, we perform a consistent model reduction, with respect to these statistical quantities, to a one-dimensional linear stochastic differential equation that allows one to compute the desired characteristics without necessarily simulating the full system.

The method is illustrated in a case study using the Hodgkin-Huxley equations (Hodgkin and Huxley 1952) with two distinct parameter sets. With spatial diffusion, this is a system of partial differential equations that can serve as a model for the propagation of action potentials in the neuron’s axon. In particular, depending on the size of the stimulus there exist pulse-like solutions (action potentials) to these equations propagating along the spatial domain. Using these equations, we estimate the probabilities of spontaneous activity and propagation failure. Although we only focus on these two examples, the methods presented here can be used for a broader range of problems, in particular, similar model reductions can also be performed in order to compute time jitter and the variability in grouping patterns of action potentials.

In our setting, we consider a simple geometry of the axon, namely a cylindrical fiber, so that the relevant spatial domain is an interval [0, L]. We propose \(\Phi(u) := \int_0^L u(x)\,\mathrm{d}x\) as an estimator for the detection of spontaneous activity and propagation failures. Here, u is the space- and time-dependent observable whose solution is pulse-shaped; in the cases at hand, this is the membrane potential. Φ(u) is the area under the pulse, regarded as a graph over the space variable, and it has the following properties: it is easy to extract automatically from numerical simulations; it clearly separates the number of observed pulses; it is a linear functional of only one observable; and stochastic perturbations, in particular additive noise that is white in space (or of short correlation length), should cancel out through integration. The events of spontaneous activity and propagation failure can both be defined as threshold crossings of Φ(u) and can therefore easily be estimated by Monte-Carlo simulation. The results can be found in Section 3. In Section 4, we perform a model reduction for this quantity, assuming only a reasonable local stability of the pulse and resting solutions. In particular, we derive one-dimensional Ornstein-Uhlenbeck processes that capture both probabilities remarkably well, in particular for small noise intensities.

2 Hodgkin-Huxley type equations

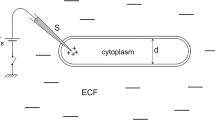

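In this article, we consider a spatially extended, conductance-based neuronal model on a simple one-dimensional domain (0, L) approximating the axon. This is most accurate for a long axon shaped as a cylinder with constant diameter. Our examples combine a Hodgkin-Huxley type model with diffusive spatial coupling to describe the evolution of the membrane potential u(t, x) in time and space by a system of partial differential equations involving the dimensionless potassium activation, sodium activation and sodium inactivation variables n(t, x), m(t, x) and h(t, x), respectively. This typically reads as

\[
\begin{aligned}
C_m\,\partial_t u &= \frac{d}{4R_i}\,\partial_x^2 u + g_K n^4 (E_K - u) + g_{Na} m^3 h\,(E_{Na} - u) + g_L (E_L - u), \\
\partial_t n &= \alpha_n(u)(1 - n) - \beta_n(u)\,n, \\
\partial_t m &= \alpha_m(u)(1 - m) - \beta_m(u)\,m, \\
\partial_t h &= \alpha_h(u)(1 - h) - \beta_h(u)\,h.
\end{aligned}
\tag{1}
\]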

Here, \(C_m\) is the membrane capacitance in μF/cm², d the axon diameter in cm, \(R_i\) the intracellular resistivity in Ω cm, \(g_K\), \(g_{Na}\), \(g_L\) the maximal potassium, sodium and leak conductances in mS/cm², and \(E_K\), \(E_{Na}\), \(E_L\) the corresponding reversal potentials. To further specify units, all times are in ms, voltages in mV and distances in cm. The following standard parameters from the original work of Hodgkin and Huxley (1952) are used throughout: \(R_i = 34.5\), \(C_m = 1\), \(g_K = 36\), \(g_{Na} = 120\), \(g_L = 0.3\), \(E_K = -12\), \(E_{Na} = 115\) and \(E_L = 10\). Note that the membrane potential is shifted by 65 mV compared to the original values. In order to be in the regime of thin, unmyelinated axons, we choose a diameter of d = 0.5 μm for all simulations and consider an axon length of L = 1 cm.

2.1 Two parameter sets for the (in)activation variables

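Equation (1) does not yet specify the rate functions that govern the evolution of the (in)activation variables. In the standard model following Hodgkin and Huxley (1952), these are

\[
\begin{aligned}
\alpha_n(u) &= \frac{0.01\,(10 - u)}{\exp\left(\frac{10 - u}{10}\right) - 1}, & \beta_n(u) &= 0.125\,\exp\left(-\frac{u}{80}\right), \\
\alpha_m(u) &= \frac{0.1\,(25 - u)}{\exp\left(\frac{25 - u}{10}\right) - 1}, & \beta_m(u) &= 4\,\exp\left(-\frac{u}{18}\right), \\
\alpha_h(u) &= 0.07\,\exp\left(-\frac{u}{20}\right), & \beta_h(u) &= \frac{1}{\exp\left(\frac{30 - u}{10}\right) + 1}.
\end{aligned}
\]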

In the following, we refer to this model as (HH). A second model (\(\widetilde {\text {HH}}\)) with a different behavior can be obtained by a slight modification. Set

which amounts to a change in the sensitivity of the sodium (in)activation rates, and leave the rest unchanged. The result is a neuron that is much less sensitive to input, i.e. one with a higher firing threshold. In the next section, models (HH) and (\(\widetilde {\text {HH}}\)) are used to illustrate the phenomena of spontaneous activity and propagation failure, respectively.
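To make the rate functions of (HH) concrete, the following Python sketch (our own illustration, not the code from the authors' GitHub repository) evaluates the standard rates in the shifted convention, computes the steady-state gating values \(\alpha/(\alpha + \beta)\), and locates the resting potential of the space-clamped (HH) model by bisection on the total ionic current. All function names are hypothetical, and only the (HH) parameter set is covered, since the modified rates of (\(\widetilde{\text{HH}}\)) are not reproduced here. With \(E_L = 10\) as listed, the root found this way lies within a fraction of a millivolt of 0.

```python
import numpy as np

# Maximal conductances (mS/cm^2) and reversal potentials (mV), shifted so that rest is near 0 mV.
G_K, G_NA, G_L = 36.0, 120.0, 0.3
E_K, E_NA, E_L = -12.0, 115.0, 10.0


def _x_over_expm1(x):
    """Return x / (exp(x) - 1), using the limit value 1 at the removable singularity x = 0."""
    x = np.asarray(x, dtype=float)
    safe = np.where(x == 0.0, 1.0, x)
    return np.where(x == 0.0, 1.0, safe / np.expm1(safe))


def rates(u):
    """Standard Hodgkin-Huxley opening/closing rates (1/ms) at the shifted potential u (mV)."""
    a_n = 0.1 * _x_over_expm1((10.0 - u) / 10.0)   # = 0.01 (10 - u) / (exp((10 - u)/10) - 1)
    b_n = 0.125 * np.exp(-u / 80.0)
    a_m = _x_over_expm1((25.0 - u) / 10.0)         # = 0.1 (25 - u) / (exp((25 - u)/10) - 1)
    b_m = 4.0 * np.exp(-u / 18.0)
    a_h = 0.07 * np.exp(-u / 20.0)
    b_h = 1.0 / (np.exp((30.0 - u) / 10.0) + 1.0)
    return a_n, b_n, a_m, b_m, a_h, b_h


def steady_state_gates(u):
    """Stationary values n_inf, m_inf, h_inf = alpha / (alpha + beta) at a fixed potential u."""
    a_n, b_n, a_m, b_m, a_h, b_h = rates(u)
    return a_n / (a_n + b_n), a_m / (a_m + b_m), a_h / (a_h + b_h)


def ionic_current(u, n, m, h):
    """Outward ionic current density (uA/cm^2); the reaction term of the u-equation is its negative."""
    return G_K * n**4 * (u - E_K) + G_NA * m**3 * h * (u - E_NA) + G_L * (u - E_L)


def resting_potential(lo=-10.0, hi=5.0, tol=1e-10):
    """Bisection for the potential at which the steady-state ionic current vanishes."""
    def f(u):
        return float(ionic_current(u, *steady_state_gates(u)))

    f_lo = f(lo)
    if f_lo * f(hi) > 0:
        raise ValueError("bracket [lo, hi] does not contain a sign change")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        f_mid = f(mid)
        if f_lo * f_mid <= 0:
            hi = mid
        else:
            lo, f_lo = mid, f_mid
    return 0.5 * (lo + hi)


u_rest = resting_potential()
n_rest, m_rest, h_rest = steady_state_gates(u_rest)
print(f"u* = {u_rest:.3f} mV, n* = {float(n_rest):.4f}, m* = {float(m_rest):.4f}, h* = {float(h_rest):.4f}")
```

The helper `_x_over_expm1` avoids the 0/0 at u = 10 and u = 25, where the textbook formulas for \(\alpha_n\) and \(\alpha_m\) are only defined by continuity.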

2.2 A mathematical model

Noisy perturbations of Eq. (1) can be realized as a stochastic partial differential equation (SPDE) on the Hilbert space \((H, \|\cdot\|) = L^2(0, L)\) with inner product \(\langle \cdot, \cdot \rangle\). The variables u(t), n(t), m(t) and h(t) are then function-valued, and we omit the x-dependence in the notation.

For the spatial diffusion, define the Laplace operator \(\Delta u := \partial_x^2 u\) supplemented with Neumann boundary conditions. We choose a sealed end at x = L, i.e. \(\partial_x u(t, L) = 0\) for all t ≥ 0, and model the input signal to the axon via an injected current at x = 0 in the form of a rectangular pulse

Here, \(T^* \le \infty\) is the duration and J > 0 the amplitude of the signal.

The question of how to add noise to Eq. (1) has been studied in the literature, see e.g. Goldwyn and Shea-Brown (2011). Although it has been shown that current noise, i.e. uncorrelated additive noise in the voltage variable, does not accurately approximate Markov chain models of ion channel dynamics, we use this form of noise in our study. The reason is twofold: first, even this simple kind of noise can qualitatively account for all of the phenomena observed in e.g. Faisal and Laughlin (2007), and second, its simplicity allows further analysis. Mathematically speaking, current noise is realized as a two-parameter white noise η that is defined in terms of a cylindrical Wiener process W such that \(\eta = \dot{W}\). The process \(W = (W(t))_{t \ge 0}\) is function-valued and can be formally represented by the infinite series

\[ W(t) = \sum_{n \in \mathbb{N}} \beta_n(t)\, e_n, \]

where \((\beta_n(t))_{n \in \mathbb{N}}\) is a family of iid real-valued Brownian motions and \((e_n)_{n \in \mathbb{N}}\) is an orthonormal basis of H. For f, g ∈ H, the covariance of this process is

\[ \mathbb{E}\left[ \langle W(t), f \rangle \langle W(s), g \rangle \right] = (t \wedge s)\, \langle f, g \rangle, \]

thus \(\mathbb{E}\left[ \eta(t,x)\, \eta(s,y) \right] = \delta(t-s)\, \delta(x-y)\), i.e. there is no correlation in either time or space. Formally speaking, a cylindrical Wiener process is thus time-integrated space-time white noise. Equation (1) then reads as

Together with suitable initial conditions, in our case the equilibrium values \((u^*, n^*, m^*, h^*)\), where \(u^* = 0\) for (HH) and \(u^* \approx -0.820\) for (\(\widetilde {\text {HH}}\)), as well as

we refer to Sauer and Stannat (2014) for well-posedness of Eq. (3).
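As a numerical illustration of the covariance structure described above, the following Python sketch (our own, with hypothetical function names) discretizes one time increment of the cylindrical Wiener process on a uniform grid of cells, scaling each independent cell value by \(\sqrt{\Delta t/\Delta x}\). It checks empirically that \(\langle \Delta W, f \rangle\) has variance close to \(\Delta t\,\|f\|^2\) and that the projection \(L^{-1/2} \langle \Delta W, \mathbb{1} \rangle\) behaves like the increment of a standard real-valued Brownian motion, the object that reappears in the model reduction of Section 4. The grid size, the midpoint quadrature and the cosine test function are assumptions chosen for the example.

```python
import numpy as np


def wiener_increments(n_cells, length, dt, n_samples, rng):
    """Samples of one time increment of a cylindrical Wiener process, represented by its values
    on n_cells uniform cells: each value is sqrt(dt/dx) * N(0, 1), independent across cells."""
    dx = length / n_cells
    return np.sqrt(dt / dx) * rng.standard_normal((n_samples, n_cells))


def pair(values, f_values, dx):
    """Midpoint-rule approximation of the L^2(0, L) inner product <values, f>."""
    return (values * f_values).sum(axis=-1) * dx


rng = np.random.default_rng(0)
L, n_cells, dt = 1.0, 200, 0.01
dx = L / n_cells
x = (np.arange(n_cells) + 0.5) * dx              # cell midpoints
dW = wiener_increments(n_cells, L, dt, 10_000, rng)

ones = np.ones(n_cells)
cosine = np.cos(np.pi * x / L)                    # ||cosine||^2 = L / 2

var_ones = pair(dW, ones, dx).var()               # expected: dt * L
var_cos = pair(dW, cosine, dx).var()              # expected: dt * L / 2
var_beta = (pair(dW, ones, dx) / np.sqrt(L)).var()  # expected: dt (standard Brownian increment)
print(var_ones, var_cos, var_beta)
```

The \(\sqrt{\Delta t/\Delta x}\) scaling is what makes the grid noise converge to space-time white noise: refining the grid increases the pointwise variance while keeping every projection onto a fixed function stable.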

2.3 Linear stability of pulse and resting state

If the injected input exceeds a certain threshold, the solution of Eq. (1) rapidly approaches a traveling pulse-like solution. Denote \(X = (u, n, m, h)^T\). Numerical simulations show that this traveling pulse is well approximated by a solution of the form \(X(t,x) = \hat{X}(x - ct)\) for a fixed reference profile \(\hat{X}\) and pulse speed c, as long as the pulse has not reached the boundary. We call this solution \(\hat{X}(t)\).

Without any external input, the system (1) remains in equilibrium if started there. Denote by \(X^*\) this solution, which is constant in time and space.

The phenomena of interest in this work correspond directly to the stability properties of the two solutions \(\hat{X}\) and \(X^*\). Although such stability results have so far only been proven for stochastic bistable equations, see e.g. Stannat (2014), we assume a linear stability condition that we expect to carry over to the higher-dimensional Hodgkin-Huxley system. This linear stability assumption is used only in Section 4 for the model reduction. For convenience of notation, we write Eq. (3) in the following abstract form

\[ \mathrm{d}X(t) = \left[ A X(t) + F(X(t)) \right] \mathrm{d}t + \sigma\, \mathrm{d}\mathbb{W}(t), \tag{4} \]

where \(A = (\Delta, 0, \dots)^T\), \(\mathbb{W} = (W, 0, \dots)^T\) and F is the corresponding nonlinear part of the drift. Also, denote by \(\mathcal{H} = H^4 = H \times H \times H \times H\) the state space of Eq. (4). We then assume the following geometric condition of Lyapunov type

implying that the resting solution is locally exponentially attracting in \(\mathcal {H}\), i.e. linearly stable. Moreover

for all \(t \in [T_0, T]\), where \(d_{\hat{X}}(t) = \dot{\hat{X}}(t)\). Here, \(T_0\) is the time until \(\hat{X}\) has attained its pulse shape and T denotes the time when the pulse reaches the boundary. The latter condition can be interpreted geometrically as follows: once it is formed, the traveling pulse solution is locally exponentially attracting in the subspace \(\bot_t := \{ h \in \mathcal{H} : \langle h, d_{\hat{X}}(t) \rangle_{\mathcal{H}} = 0 \} \subset \mathcal{H}\), which is orthogonal to the direction of propagation.

2.4 Numerical method

SPDE (3) is a reaction-diffusion equation coupled to a set of equations without spatial diffusion. Thus, from a numerical perspective, the main issue is the simulation of equations of the form

with Neumann boundary conditions as in Eq. (2). The numerical method chosen for the integration of such an SPDE is a finite difference approximation in both space and time, see Sauer and Stannat (2014, 2015) for details. For the space variable x, we use an equidistant grid \((x_i)\) with mesh size \(\Delta x = L/N\) and replace the second derivative by its two-sided difference quotient. Boundary conditions are approximated to second order, using the artificial points \(x_{-1}\) and \(x_{N+1}\). The time variable t is discretized to \((t_j)\) using \(\Delta t = 1/M\) and a semi-implicit Euler scheme. Approximating the variable u at the point \((x_i, t_j)\) yields the following scheme.

for \(1 \le i \le N-1\), where \(J_j\) is the discrete applied current and \((N_{i,j})_{0 \le i \le N,\, j \ge 1}\) is a sequence of iid \(\mathcal{N}(0,1)\)-distributed random variables. For details on convergence of this scheme and error rates, we refer to Sauer and Stannat (2015).
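The discrete scheme itself is not reproduced in this text. As a hedged illustration of the ingredients named above (equidistant grid, ghost points \(x_{-1}\) and \(x_{N+1}\) for second-order Neumann conditions, implicit diffusion, explicit reaction and noise), here is a minimal Python sketch for a scalar equation \(\mathrm{d}u = (D\,\partial_x^2 u + f(u))\,\mathrm{d}t + \sigma\,\mathrm{d}W\) with \(\partial_x u(t,0) = g(t)\) and \(\partial_x u(t,L) = 0\). The function names, the constant D and the `left_flux` argument standing in for the injected current are hypothetical; the exact sign and scaling of the input in the authors' scheme are not stated here.

```python
import numpy as np


def neumann_laplacian(n, dx):
    """(n+1) x (n+1) second-difference matrix on nodes x_0, ..., x_n. The ghost points
    x_{-1}, x_{n+1} are eliminated with central differences (zero flux, second order)."""
    lap = np.zeros((n + 1, n + 1))
    i = np.arange(1, n)
    lap[i, i - 1], lap[i, i], lap[i, i + 1] = 1.0, -2.0, 1.0
    lap[0, 0], lap[0, 1] = -2.0, 2.0
    lap[n, n], lap[n, n - 1] = -2.0, 2.0
    return lap / dx**2


def trapezoid_integral(u, dx):
    """Trapezoidal approximation of the integral of u over [0, L]."""
    return dx * (u.sum() - 0.5 * (u[0] + u[-1]))


def semi_implicit_euler(u0, reaction, diffusion, sigma, length, dt, n_steps, rng,
                        left_flux=lambda t: 0.0):
    """Integrate du = (D u_xx + f(u)) dt + sigma dW on [0, L] with du/dx(t, 0) = left_flux(t)
    and du/dx(t, L) = 0. Diffusion is implicit; reaction, boundary data and noise are explicit."""
    n = u0.size - 1
    dx = length / n
    # (I - dt D Lap) does not change in time, so invert it once (use a banded solver for large grids).
    step_matrix = np.linalg.inv(np.eye(n + 1) - dt * diffusion * neumann_laplacian(n, dx))
    u = np.array(u0, dtype=float)
    for j in range(n_steps):
        rhs = u + dt * reaction(u)
        # Ghost point u_{-1} = u_1 - 2 dx g adds -2 D g / dx to the discrete Laplacian at x_0.
        rhs[0] -= dt * diffusion * 2.0 * left_flux(j * dt) / dx
        # Space-time white noise: one independent N(0, 1) per node, scaled by sqrt(dt / dx).
        rhs += sigma * np.sqrt(dt / dx) * rng.standard_normal(n + 1)
        u = step_matrix @ rhs
    return u


def no_reaction(u):
    return np.zeros_like(u)


rng = np.random.default_rng(1)
length, n, dt, D = 1.0, 100, 0.01, 1e-3
x = np.linspace(0.0, length, n + 1)
u0 = np.exp(-0.5 * ((x - 0.5) / 0.05) ** 2)

# Pure diffusion with zero flux conserves the trapezoidal integral (up to round-off).
u_diff = semi_implicit_euler(u0, no_reaction, D, 0.0, length, dt, 200, rng)
# A constant inflow du/dx(0) = -1 raises the integral by D per unit time: 200 * 0.01 * 1e-3 = 2e-3.
u_in = semi_implicit_euler(np.zeros(n + 1), no_reaction, D, 0.0, length, dt, 200, rng,
                           left_flux=lambda t: -1.0)
print(trapezoid_integral(u0, length / n), trapezoid_integral(u_diff, length / n),
      trapezoid_integral(u_in, length / n))
```

Treating only the stiff diffusion term implicitly keeps the step stable for \(\Delta t\) much larger than \(\Delta x^2/D\), while the reaction and noise terms stay cheap to evaluate. The ghost-point boundary rows are exactly the ones for which the trapezoidal integral is conserved, which is a convenient sanity check.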

3 Reliability of signal transmission

Let us first specify the numerical parameters. We use N = 500 grid points, i.e. Δx = 0.02, and Δt = 0.01 to simulate the equations. Using an input of height J = 0.001 μA and duration \(T^* = 0.5\), a pulse forms at the left boundary in both models (HH) and (\(\widetilde {\text {HH}}\)) and travels to the right, see Fig. 1.

The problem at hand is how the presence of noise affects the generation and the reliability of transmission of action potentials in the axon, similar to the studies by Faisal and Laughlin (2007) for the Hodgkin-Huxley equations and Tuckwell (2008) for the FitzHugh-Nagumo equations. In particular, this section is concerned with two distinct phenomena observed in these two studies. Faisal and Laughlin found that in the (HH) model, action potential propagation is very secure, but that in certain cases action potentials emerge spontaneously somewhere along the axon due to noise (spontaneous activity). This is illustrated in Fig. 2, which shows an exemplary trajectory of such an event.

Fig. 2 A realization of the event spontaneous activity is given by the solid, light gray trajectory. The three plots show the membrane potential u using (HH) at times \(t_1 = 13.5\), \(t_2 = 14.5\), \(t_3 = 16.5\) from top to bottom. For comparison, we include a trajectory with only fluctuations around the resting potential (dashed, dark gray). For all of them, σ = 0.372.

On the other hand, Tuckwell observed that a primary effect of noise on the action potential can be a breakdown of the pulse without any secondary phenomena such as spontaneous activity (propagation failure). An illustration is given in Fig. 3, comparing a failure to a stable pulse. The equations Tuckwell used to model the neuron are, of course, different from those of Faisal and Laughlin. However, this discrepancy is not due to the choice of the neuron model but rather to the particular parameter values describing it, which are directly linked to the stability of the traveling pulse and the resting state. Indeed, by slightly modifying the sodium (in)activation in model (\(\widetilde {\text {HH}}\)), we observe occurrences of propagation failure but no spontaneous activity. In this work, (HH) is always used to study spontaneous activity and (\(\widetilde {\text {HH}}\)) to study propagation failures, since these are the prominent phenomena in the respective dynamical systems.

Fig. 3 A realization of the event propagation failure is given by the solid, light gray trajectory. The three plots show the membrane potential u using (\(\widetilde {\text {HH}}\)) at times \(t_1 = 14.5\), \(t_2 = 16\), \(t_3 = 18\) from top to bottom. For comparison, we include a trajectory where no propagation failure occurs (dashed, dark gray). For all of them, σ = 0.504.

We aim to propose a simple statistical estimator that allows the detection of both spontaneous activity and propagation failures. A first educated guess might suggest that checking for threshold crossings of the maximal height of the membrane potential, i.e. \(\sup_{x \in (0,L)} u(x) > \theta\), is a good choice. Note that such a criterion has been used in Faisal and Laughlin (2007) to detect arrival times of action potentials. However, we suggest a different method using the following linear functional of the (shifted) membrane potential,

\[ \Phi(u) := \int_0^L \left( u(x) - u^* \right) \mathrm{d}x. \]

This describes the area below the pulse of the membrane potential shifted by the resting potential \(u^*\). Since we can always change variables, in the following we assume w.l.o.g. \(u^* = 0\). We prefer the estimator Φ to pointwise criteria such as the supremum for the following reasons. First, Φ is a linear functional of only one observable. Second, the action potential is not a point charge that propagates along the axon; rather, it is spread out along a part of the axon that may reach up to a few cm in length. Thus, a global criterion as imposed by Φ is more reasonable than a pointwise one, and local fluctuations due to the noise should have a less pronounced effect. Third, Φ is not sensitive to fluctuations in the phase of the traveling pulse, as explained in Section 4.

Consider the deterministic solution \(\hat{u}\) (i.e. σ = 0), which is a traveling pulse, and denote \(\hat{\Phi} := \Phi(\hat{u})\). Once the pulse is formed and until it reaches the boundary, this quantity should stay approximately constant. In the following, with a slight abuse of notation, we write \(\Phi(u) := \Phi(u)/\hat{\Phi}\). For the example paths in Figs. 2 and 3, the corresponding time evolution of the area Φ is shown in Fig. 4.
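A minimal Python sketch of the normalized estimator, assuming the membrane potential is sampled on a uniform grid and integrated with the trapezoidal rule (the quadrature rule is our choice; the text does not state it). The Gaussian bump is a hypothetical stand-in for the reference pulse \(\hat{u}\), and the nonzero resting potential shows the role of the shift by \(u^*\). The normalized area comes out close to 0 at rest, close to 1 for one pulse and close to 2 for two well-separated pulses, which is the discrimination property used in the following subsections.

```python
import numpy as np


def area(u, dx, u_rest=0.0):
    """Phi(u) = integral over [0, L] of (u(x) - u*), trapezoidal rule on a uniform grid.
    Accepts a single snapshot of shape (n+1,) or a stack of snapshots of shape (..., n+1)."""
    v = np.asarray(u, dtype=float) - u_rest
    return dx * (v.sum(axis=-1) - 0.5 * (v[..., 0] + v[..., -1]))


def normalised_area(u, dx, phi_hat, u_rest=0.0):
    """Phi(u) / Phi_hat, where Phi_hat is the area of the deterministic reference pulse."""
    return area(u, dx, u_rest) / phi_hat


L, n = 1.0, 1000
x = np.linspace(0.0, L, n + 1)
dx = L / n
u_rest = -0.82                                    # a nonzero resting potential, for illustration


def bump(centre):
    """Hypothetical pulse shape (height 100 mV, width 0.03 cm), far from the boundaries."""
    return 100.0 * np.exp(-0.5 * ((x - centre) / 0.03) ** 2)


phi_hat = area(u_rest + bump(0.5), dx, u_rest)   # reference area of one pulse
snapshots = np.stack([
    np.full_like(x, u_rest),                     # resting state
    u_rest + bump(0.3),                          # one pulse
    u_rest + bump(0.3) + bump(0.7),              # two pulses
])
result = normalised_area(snapshots, dx, phi_hat, u_rest)
print(result)                                    # approximately [0, 1, 2]
```

Because the estimator is linear and vectorized over the leading axes, a whole simulated trajectory (time × space) can be reduced to a time series of areas in one call.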

Fig. 4 The evolution of the area Φ. (Top) For the same realizations as in Fig. 2 using (HH): spontaneous activity (light gray) and fluctuations around the resting potential (dark gray). (Bottom) For the same realizations as in Fig. 3 using (\(\widetilde {\text {HH}}\)): pulse with propagation failure (light gray), pulse without failure (dark gray).

3.1 Spontaneous activity

Since the estimator Φ reliably discriminates between no, one or more pulses, it can be used to estimate the probability of emerging secondary pulses. In this scenario, we start the model (HH) at the resting potential without any input signal through the Neumann boundary condition and observe the solution for the time T that the deterministic pulse \(\hat{u}\) would need to reach the right boundary. For a given critical value 𝜃, we define the event \(\sup_{t \in [0,T]} \Phi(u^\sigma(t)) \ge \theta\) as spontaneous activity for the noise amplitude σ. Accordingly, the probability of spontaneous activity is

\[ s_\sigma := \mathbb{P}\left( \sup_{t \in [0,T]} \Phi(u^\sigma(t)) \ge \theta \right). \]

In this definition, the threshold 𝜃 still has to be specified. Experience with different parameter sets and other neuron models has shown that a suitable threshold depends heavily on them. Here, suitable means that the estimator indeed detects an emerging action potential whenever there is one.

In the following, we use T = 60 and M = 10 000 realizations of (HH) to estimate \(s_\sigma\). Figure 5 shows that the curve \(\sigma \mapsto s_\sigma\) shifts to the right as 𝜃 is increased and stays unchanged for 𝜃 ≥ 0.52, which is therefore the suitable threshold to detect spontaneous activity in this case.
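Given simulated trajectories of the normalized area, the Monte-Carlo estimate of \(s_\sigma\) is the fraction of realizations whose running maximum reaches 𝜃. The following Python sketch assumes the trajectories are stored as an array of shape (M, number of time steps); the function names are hypothetical, and the binomial standard error is an addition for judging Monte-Carlo accuracy rather than something taken from the text. Scanning several thresholds gives the kind of threshold study described above.

```python
import numpy as np


def exceedance_probability(phi_paths, theta):
    """Monte-Carlo estimate of P(sup_t Phi(t) >= theta) and its binomial standard error.

    phi_paths: array of shape (M, n_times), one normalised area trajectory per realisation."""
    hits = np.max(phi_paths, axis=1) >= theta
    p = float(hits.mean())
    return p, float(np.sqrt(p * (1.0 - p) / hits.size))


def threshold_scan(phi_paths, thetas):
    """Estimated probabilities for a sequence of thresholds (one curve point per theta)."""
    running_max = np.max(phi_paths, axis=1)
    return np.array([(running_max >= th).mean() for th in thetas])


# Toy data: four realisations on a coarse time grid (a real study would use M = 10 000 paths).
paths = np.array([
    [0.10, 0.60, 0.20],
    [0.00, 0.10, 0.30],
    [0.50, 0.52, 0.10],
    [0.20, 0.20, 0.20],
])
print(exceedance_probability(paths, 0.52))        # (0.5, 0.25)
print(threshold_scan(paths, [0.25, 0.52, 0.7]))   # probabilities 0.75, 0.5 and 0.0
```

With M = 10 000 realizations, the standard error is at most \(\sqrt{0.25/M} = 0.005\), which indicates how finely neighboring curves in a threshold scan can be distinguished.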

3.2 Propagation failure

Obviously, Φ can also be used the other way round to detect a propagation failure using the model (\(\widetilde {\text {HH}}\)). Thus, we are in principle able to easily reproduce and generalize the observations made in Tuckwell (2008) in terms of variation of parameters, models and the number of Monte-Carlo realizations. Let \(T_0 > 0\) be a given, fixed initialization time until the pulse is formed, and recall that T denotes the time when the pulse reaches the boundary. Given a threshold 𝜃, we define the event \(\sup_{t \in [T_0, T]} \Phi(u^\sigma(t)) - \hat{\Phi} > \theta\) as a propagation failure for the noise amplitude σ. Accordingly, the probability of propagation failure is

Remark 1

Numerically, the stopping time T is implemented as follows. The axon is extended by a noiseless cable at the right boundary, which allows us to keep track of the pulse even after it has left the original part of the axon. Applying the estimator Φ to both the noisy and the noiseless part makes it possible to determine whether and when a pulse has successfully reached the axon terminal. With this, we can compute a reference value \(p_{\sigma}^{\text{ref}}\) to evaluate the quality of the estimator Φ.
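The bookkeeping of Remark 1 can be sketched as follows, under our own assumptions: the grid is extended by a block of nodes on which the noise amplitude is set to zero, and the arrival time is taken as the first time step at which the normalized area on the extension exceeds a level of 0.5. Neither the function names nor this level are taken from the text; they are hypothetical choices for illustration.

```python
import numpy as np


def noise_amplitude_profile(n_axon_nodes, n_extension_nodes, sigma):
    """Per-node noise amplitude: sigma on the original axon, zero on the appended noiseless cable."""
    return np.concatenate([np.full(n_axon_nodes, float(sigma)), np.zeros(n_extension_nodes)])


def arrival_step(phi_extension, level=0.5):
    """First time index at which the normalised area on the noiseless extension exceeds `level`,
    or None if the pulse never arrives within the simulated time window."""
    above = np.flatnonzero(np.asarray(phi_extension) > level)
    return int(above[0]) if above.size else None


profile = noise_amplitude_profile(501, 200, 0.3)
print(profile[:3], profile[-3:])        # sigma on the axon, zeros on the extension

# Toy area traces on the extension: the first pulse arrives at step 3, the second never does.
print(arrival_step([0.0, 0.1, 0.4, 0.8, 1.0]), arrival_step([0.0, 0.05, 0.02]))   # 3 None
```

In a finite-difference scheme, such a profile would simply multiply the per-node noise term elementwise, so the same solver can integrate both the noisy axon and its noiseless extension.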

With \(T_0 = 10\), Fig. 6 shows the probability of propagation failure \(p_\sigma\) versus σ for different threshold values, compared to \(p_{\sigma}^{\text{ref}}\). As 𝜃 decreases, the curves converge to the reference curve. In particular, 𝜃 = 0 appears to be a suitable threshold in this scenario.

4 Model reduction

Obtaining an analytical expression for \(p_\sigma\) and \(s_\sigma\) is out of reach, since these are exit-time probabilities of a nonlinear, infinite-dimensional problem. However, the linear stability assumptions on both the pulse and the resting state made in Section 2.3 can be used to obtain a simplified model. In this section, we show that a model reduction is indeed possible and propose a simple, one-dimensional equation that mimics the behavior of the original problem and captures the desired quantities, namely the probabilities of propagation failure and spontaneous activity. This has two implications: first, the computational costs are reduced, and second, we obtain a simplified analytical expression in terms of classical, well-known quantities.

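In view of assumption (6), our arguments for the use of Φ can be strengthened by a simple observation. Let \(\mathbb{1}_{u} = (\mathbb{1}, 0, \dots)^{T}\) be the constant function equal to 1 in the u-component. Then

\[ \langle \mathbb{1}_u, d_{\hat{X}}(t) \rangle_{\mathcal{H}} = \int_0^L \partial_t \hat{u}(t,x)\,\mathrm{d}x = \frac{\mathrm{d}}{\mathrm{d}t}\, \Phi(\hat{u}(t)) = 0 \]

for \(t \in [T_0, T]\), since the integral is invariant under translation of the pulse. Thus, \(\mathbb{1}_{u} \in \bot_{t}\) for all \(t \in [T_0, T]\).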
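The practical consequence of this translation invariance can be checked numerically. In the following Python sketch, a reference pulse and a slightly phase-shifted copy are compared; the Gaussian profile, its width and the shift are hypothetical choices, not the Hodgkin-Huxley pulse. The integral of the difference vanishes up to round-off, whereas the squared \(L^2\)-distance and the supremum distance stay clearly positive, even though the two snapshots describe the same pulse.

```python
import numpy as np


def trapezoid(v, dx):
    """Trapezoidal rule on a uniform grid."""
    return dx * (v.sum() - 0.5 * (v[0] + v[-1]))


L, n = 1.0, 1000
x = np.linspace(0.0, L, n + 1)
dx = L / n


def pulse(centre, width=0.03, height=100.0):
    """Hypothetical pulse profile located far from the boundaries."""
    return height * np.exp(-0.5 * ((x - centre) / width) ** 2)


u_hat = pulse(0.50)                        # reference pulse
u = pulse(0.52)                            # the same pulse, slightly ahead in phase
diff = u - u_hat

phase_blind = trapezoid(diff, dx)          # Phi(u - u_hat): zero up to round-off
l2_squared = trapezoid(diff**2, dx)        # ||u - u_hat||^2: clearly positive
sup_dist = np.abs(diff).max()              # sup_x |u - u_hat|: clearly positive
print(phase_blind, l2_squared, sup_dist)
```

A pure phase shift lies (to first order) along the direction \(d_{\hat{X}}(t)\) of propagation, which is exactly the direction that \(\mathbb{1}_u\) is orthogonal to.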

The implications of this are the following. Consider the solution Z(t) of the linearization of Eq. (4) around \(\hat{X}\), neglecting all higher-order terms. In particular, Z(t) is an Ornstein-Uhlenbeck process on \(\mathcal{H}\). Writing \(T(t,s) = \exp[\int_s^t A + \nabla F(\hat{X}(r))\,\mathrm{d}r]\) for the exponential of the linear operator, the solution can be written using Duhamel's principle as

\[ Z(t) = T(t, T_0)\, Z(T_0) + \sigma \int_{T_0}^{t} T(t,s)\, \mathrm{d}\mathbb{W}(s). \]

Z(t) is a Gaussian process, uniquely characterized by its mean and variance

where ∗ denotes the adjoint operator. Now, recall \(\Phi (u) = {{\int }_{0}^{L}} u \,\mathrm {d}x\), hence \(\Phi (u(t) - \hat {u}(t)) = \langle {u(t) - \hat {u}(t)}, {\mathbb {1}} \rangle _{H} \approx \langle {Z(t)}, {\mathbb {1}_{u}} \rangle _{\mathcal {H}}\). In particular, this is a linear functional of Z(t). Since Z is Gaussian, so is \(\langle {Z(t)}, {\mathbb {1}_{u}} \rangle _{\mathcal {H}}\) with mean and variance

Now, recall Eq. (6), i.e. the linear stability assumption for the pulse state. Note that \(\mathbb {1}_{u} \in \bot _{t}\), i.e. orthogonal to the direction of pulse propagation, and therefore \(\hat {C} \langle \mathbb {1}_{u} , d_{\hat {X}}(t)\rangle ^{2}_{\mathcal {H}} = 0\). Hence, the linear operator T(t, s) satisfies the following inequality:

In particular it follows that

Of course, this implies \(\mathbb{E}\left[ \langle Z(t), \mathbb{1}_{u} \rangle_{\mathcal{H}} \right] \to 0\), which is one of the main advantages of choosing the estimator Φ. In contrast, the squared \(L^2\)-norm \(\|u(t) - \hat{u}(t)\|_{H}^{2}\) or \(\sup_{x \in (0,L)} |u(t,x) - \hat{u}(t,x)|\) might also serve as a measure of how close u is to the pulse solution. However, neither of them converges to 0, since, due to the noise, u will never be exactly in phase with \(\hat{u}\). In our approach, we integrate the difference \(u - \hat{u}\) against a function orthogonal to the direction of propagation; hence our estimator does not perceive any phase shift and is locally exponentially stable around 0. Concerning the variance, we compute

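With the considerations above, the following Ansatz for a scalar-valued stochastic differential equation for Φ is reasonable:

\[ \mathrm{d}\langle u(t) - \hat{u}(t), \mathbb{1} \rangle_H = -\alpha\, \langle u(t) - \hat{u}(t), \mathbb{1} \rangle_H\, \mathrm{d}t + \tilde{\sigma}\, \mathrm{d}\beta(t), \]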

where \(\beta(t) := \sqrt{L}^{-1} \langle W(t), \mathbb{1} \rangle_{H}\) defines a real-valued standard Brownian motion and \(\tilde{\sigma} := \sqrt{L}\, \sigma\). Using linearity of Φ, \(\hat{\Phi} := \Phi(\hat{u}(t))\) and \(\Phi(t) := \Phi(u(t))\), it follows that

\[ \mathrm{d}\Phi(t) = -\alpha \left( \Phi(t) - \hat{\Phi} \right) \mathrm{d}t + \tilde{\sigma}\, \mathrm{d}\beta(t) \tag{7} \]

is the approximating dynamics, a simple one-dimensional Ornstein-Uhlenbeck process around the mean \(\hat{\Phi}\). Also, \(p_\sigma\) can be approximated by the exit-time probability

\(T_{1} = \mathbb{E}[T]\), that is, by a first-passage-time probability of the Ornstein-Uhlenbeck process. Such first-passage times have been studied intensively in connection with stochastic leaky integrate-and-fire (LIF) neurons, see Alili et al. (2005) and Sacerdote and Giraudo (2013), and they are, in addition, easily accessible numerically.
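As an illustration of this numerical accessibility, here is a minimal Python sketch that estimates by Euler-Maruyama simulation the probability that the Ornstein-Uhlenbeck process \(\mathrm{d}\Phi = -\alpha(\Phi - \hat{\Phi})\,\mathrm{d}t + \tilde{\sigma}\,\mathrm{d}\beta\), started at \(\hat{\Phi}\), falls to a lower level before a time horizon. The function name and arguments are hypothetical, and which level corresponds to a given threshold 𝜃 depends on how the failure event is defined; here the level is simply an input. Monitoring only on the time grid misses crossings between grid points, so the estimate is slightly biased downward; a finer dt reduces this bias. The upward case relevant for spontaneous activity follows by reflecting the process about its mean.

```python
import numpy as np


def ou_hitting_probability(alpha, sigma_tilde, phi_hat, level, t_end, dt, n_paths, rng, start=None):
    """Monte-Carlo estimate of P(min_{0 <= t <= t_end} Phi(t) <= level) for
        dPhi = -alpha (Phi - phi_hat) dt + sigma_tilde dbeta,  Phi(0) = start (default: phi_hat).
    Euler-Maruyama with monitoring on the time grid only (slight downward bias)."""
    phi = np.full(n_paths, float(phi_hat if start is None else start))
    crossed = phi <= level
    for _ in range(int(round(t_end / dt))):
        noise = sigma_tilde * np.sqrt(dt) * rng.standard_normal(n_paths)
        phi = phi - alpha * (phi - phi_hat) * dt + noise
        crossed |= phi <= level
    return float(crossed.mean())


# Hypothetical parameters: rate alpha = 1, level 0.2 below the mean, horizon 5 time units.
for s in (0.05, 0.1, 0.2):
    p = ou_hitting_probability(alpha=1.0, sigma_tilde=s, phi_hat=1.0, level=0.8,
                               t_end=5.0, dt=0.01, n_paths=2000, rng=np.random.default_rng(1))
    print(s, p)
```

Using the same seed for each noise amplitude means the paths differ only by the scaling of the same noise, so the estimated curve is monotone in \(\tilde{\sigma}\), which makes threshold and noise scans easier to read.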

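In this Ansatz, the whole complexity of the SPDE dynamics is reduced to the parameter α, and the solution to Eq. (7) can be written down explicitly as

\[ \Phi(t) = \hat{\Phi} + e^{-\alpha(t - T_0)} \left( \Phi(T_0) - \hat{\Phi} \right) + \tilde{\sigma} \int_{T_0}^{t} e^{-\alpha(t-s)}\, \mathrm{d}\beta(s). \]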

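Assuming the validity of this linear approximation, which is justified for small σ, we can estimate α using the mean and variance of Φ(t). In particular,

\[ \mathbb{E}[\Phi(t)] = \hat{\Phi} + e^{-\alpha(t - T_0)} \left( \mathbb{E}[\Phi(T_0)] - \hat{\Phi} \right), \qquad \operatorname{Var}[\Phi(t)] = e^{-2\alpha(t - T_0)} \operatorname{Var}[\Phi(T_0)] + \frac{L\sigma^2}{2\alpha} \left( 1 - e^{-2\alpha(t - T_0)} \right). \]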

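Hence, \(\operatorname{Var}[\Phi(t)] \to L\sigma^2/(2\alpha)\) as \(t \to \infty\), which can be used to estimate α for large t; in our simulations t = 45, so the difference to the limit is negligible. We apply the standard variance estimator

\[ \hat{V} = \frac{1}{M-1} \sum_{k=1}^{M} \left( \Phi_k(t) - \bar{\Phi}(t) \right)^2, \qquad \bar{\Phi}(t) = \frac{1}{M} \sum_{k=1}^{M} \Phi_k(t), \]

where \(\Phi_1(t), \dots, \Phi_M(t)\) denote independent realizations,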

for σ = 0.024, the smallest σ used in the simulations before. We arrive at

with again M = 10 000 realizations.
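The estimation step can be mimicked on synthetic data. The following Python sketch draws samples of Φ(t) directly from the Gaussian law implied by the explicit solution of the Ornstein-Uhlenbeck dynamics (mean relaxing to \(\hat{\Phi}\), variance \(\frac{L\sigma^2}{2\alpha}(1 - e^{-2\alpha t})\) after an elapsed time t from a deterministic start), applies the unbiased variance estimator and inverts the stationary relation \(\operatorname{Var}[\Phi] \approx L\sigma^2/(2\alpha)\). The "true" rate 0.3 is a hypothetical value, and in the study itself the samples come from the SPDE rather than from the reduced model. With M = 10 000 samples, the relative standard error of the variance estimate is about \(\sqrt{2/M} \approx 1.4\,\%\), which carries over to \(\hat{\alpha}\).

```python
import numpy as np


def sample_ou(alpha, sigma, length, phi_hat, phi_start, t, n_samples, rng):
    """Exact samples of Phi(t) for dPhi = -alpha (Phi - phi_hat) dt + sqrt(L) sigma dbeta,
    started deterministically at phi_start: Gaussian with
    mean     phi_hat + (phi_start - phi_hat) e^{-alpha t},
    variance L sigma^2 / (2 alpha) * (1 - e^{-2 alpha t})."""
    mean = phi_hat + (phi_start - phi_hat) * np.exp(-alpha * t)
    var = length * sigma**2 / (2.0 * alpha) * -np.expm1(-2.0 * alpha * t)
    return mean + np.sqrt(var) * rng.standard_normal(n_samples)


def estimate_alpha(samples, sigma, length):
    """alpha_hat = L sigma^2 / (2 V_hat), with V_hat the unbiased sample variance (ddof=1)."""
    v_hat = np.var(samples, ddof=1)
    return length * sigma**2 / (2.0 * v_hat)


rng = np.random.default_rng(3)
alpha_true, sigma, length = 0.3, 0.024, 1.0       # hypothetical rate; sigma as in the text
samples = sample_ou(alpha_true, sigma, length, phi_hat=1.0, phi_start=1.0, t=45.0,
                    n_samples=10_000, rng=rng)
alpha_hat = estimate_alpha(samples, sigma, length)
print(alpha_hat)                                 # close to 0.3
```

Sampling the exact transition law avoids any time-discretization error, so the only error left in this check is the Monte-Carlo error of the variance estimate.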

Using the linearization around \(X^*\) and the same Ansatz, we propose a similar Ornstein-Uhlenbeck process whose hitting probabilities approximate \(s_\sigma\). With \(\Phi(t) := \Phi(u(t)) = \langle u(t), \mathbb{1} \rangle_{H}\) and, of course, \(\Phi(u^*) = 0\), it reads

Also, \(\mathbb{E}[\Phi(t)] = 0\) and \(\operatorname{Var}[\Phi(t)] = \frac{L\sigma^2}{2\beta}\left(1 - e^{-2\beta t}\right)\), and we estimate the rate β using σ = 0.012 via

with M = 10 000 realizations. Figure 7 shows the probabilities \(\tilde{p}_{\sigma}\) and \(\tilde{s}_{\sigma}\), the latter being the corresponding hitting probability of this Ornstein-Uhlenbeck process, as a function of σ for different thresholds 𝜃, compared to the probabilities obtained using the SPDE. Note that the approximation becomes worse as 𝜃 and σ increase. This is expected, since the solution then approaches the other equilibrium state and the linearization is no longer valid.
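If the resting-state variance formula \(\operatorname{Var}[\Phi(t)] = \frac{L\sigma^2}{2\beta}(1 - e^{-2\beta t})\) is used at a finite observation time t rather than in its large-t limit, the rate β also appears inside the exponential, so the relation cannot simply be solved in closed form. Since the right-hand side is strictly decreasing in β, with limit \(L\sigma^2 t\) as \(\beta \to 0\), a bisection solves it reliably. The sketch below is our own illustration, assuming a sample variance \(\hat{V} \in (0, L\sigma^2 t)\) is available; the function names and the bracket are hypothetical.

```python
import numpy as np


def resting_variance(beta, sigma, length, t):
    """Var[Phi(t)] = L sigma^2 / (2 beta) * (1 - exp(-2 beta t)) for Phi(0) = 0."""
    return length * sigma**2 / (2.0 * beta) * -np.expm1(-2.0 * beta * t)


def estimate_beta(var_hat, sigma, length, t, lo=1e-8, hi=1e3, rel_tol=1e-12):
    """Solve resting_variance(beta) = var_hat for beta by bisection.
    The variance is strictly decreasing in beta and tends to L sigma^2 t as beta -> 0."""
    if not 0.0 < var_hat < length * sigma**2 * t:
        raise ValueError("var_hat must lie in (0, L sigma^2 t)")
    if resting_variance(hi, sigma, length, t) > var_hat:
        raise ValueError("upper bracket too small; increase hi")
    while hi - lo > rel_tol * hi:
        mid = 0.5 * (lo + hi)
        if resting_variance(mid, sigma, length, t) > var_hat:
            lo = mid        # variance still too large, so the rate must be larger
        else:
            hi = mid
    return 0.5 * (lo + hi)


# Round trip with hypothetical numbers: the exact variance at beta = 0.4 is mapped back to 0.4.
sigma, length, t = 0.012, 1.0, 5.0
v = resting_variance(0.4, sigma, length, t)
print(estimate_beta(v, sigma, length, t))
```

In practice `var_hat` would be the sample variance of Φ(t) over the Monte-Carlo realizations; `np.expm1` keeps the formula accurate when \(\beta t\) is small.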

5 Discussion

In this article, we have introduced a method to compute probabilities for spontaneous activity and propagation failure consistently with the underlying spatially extended, conductance-based neuronal models, based on certain statistical properties of the membrane potential. Since the action potential in the neuron's axon is not a point charge but rather spread out in space, we advocate the use of a non-local criterion such as the one based on Φ. It may be interesting to find out how the axon's length and diameter influence the quality of detection, since these are the relevant parameters concerning the width of an action potential.

A further reduction in computational costs and a simplified analytical description can be achieved by performing a model reduction with respect to the chosen estimator Φ, consistently with the underlying spatially extended neuronal model. This is based on the linearization at the resting potential (resp. the traveling action potential) and allows us to approximate the probabilities for spontaneous activity and propagation failure in terms of (classical) hitting-time probabilities of one-dimensional linear stochastic differential equations. Since the linearization is valid only locally, the approximations \(\tilde{p}_{\sigma}\) and \(\tilde{s}_{\sigma}\) become worse for growing 𝜃 and σ, as shown in Fig. 7. For reasonably small 𝜃 and σ, however, the hitting probabilities of the one-dimensional stochastic differential equations are a solid approximation to those of the full nonlinear, infinite-dimensional SPDE. On the other hand, Fig. 7 also shows that the model reduction can be used to find upper bounds for \(s_\sigma\) resp. \(p_\sigma\) over a considerably larger range of σ.

In this study, we used the modified model (\(\widetilde {\text {HH}}\)) to illustrate propagation failures. Although Faisal and Laughlin (2007) found action potential propagation to be very secure, with less than 1 % failures, we have shown that small changes in the parameters produce a dynamical system with a completely different behavior. More precisely, slightly raising the sodium inactivation rate, as in the modified Hodgkin-Huxley system (\(\widetilde {\text {HH}}\)), lowers the excitability of the neuron and increases the probability of propagation failure. Propagation failure may even become the predominant feature over spontaneous activity, similar to the case of the FitzHugh-Nagumo system, see Tuckwell (2008). It would be interesting to see whether this computational finding can be confirmed experimentally.

As generalizations, we may incorporate more general noise, e.g. as suggested in Goldwyn and Shea-Brown (2011) for the Hodgkin-Huxley model, and study how this affects signal transmission. Note that during the development of this study we have also used e.g. conductance noise as presented in Linaro et al. (2010). This does not qualitatively change the behavior concerning \(p_\sigma\) and \(s_\sigma\), but it should be analyzed in comparison to the results in Faisal and Laughlin (2007) for the Hodgkin-Huxley equations with ion channels modeled via Markov chains. Future work will also be concerned with the effect of noise on the generation of repetitive spiking, see Tuckwell and Jost (2010), and with the estimation of the speed of propagation.

References

Alili, L., Patie, P., & Pedersen, J. L. (2005). Representations of the first hitting time density of an Ornstein-Uhlenbeck process. Stochastic Models, 21(4), 967–980.

Faisal, A. A., & Laughlin, S. B. (2007). Stochastic simulations on the reliability of action potential propagation in thin axons. PLoS Computational Biology, 3(5), e79.

Faisal, A. A., Selen, L. P., & Wolpert, D. M. (2008). Noise in the nervous system. Nature Reviews Neuroscience, 9(4), 292–303.

Goldwyn, J. H., & Shea-Brown, E. (2011). The what and where of adding channel noise to the Hodgkin-Huxley equations. PLoS Computational Biology, 7(11), e1002247.

Hodgkin, A. L., & Huxley, A. F. (1952). A quantitative description of membrane current and its application to conduction and excitation in nerve. The Journal of Physiology, 117(4), 500–544.

Horikawa, Y. (1991). Noise effects on spike propagation in the stochastic Hodgkin-Huxley models. Biological Cybernetics, 66(1), 19–25.

Linaro, D., Storace, M., & Giugliano, M. (2010). Accurate and fast simulation of channel noise in conductance-based model neurons by diffusion approximation. PLoS Computational Biology, 7(3), e1001102.

Sacerdote, L., & Giraudo, M. T. (2013). Stochastic Integrate and Fire models: a review on mathematical methods and their applications. In: Stochastic biomathematical models (pp. 99–148). Springer.

Sauer, M., & Stannat, W. (2014). Analysis and approximation of stochastic nerve axon equations. arXiv:1402.4791, accepted for publication in Mathematics of Computation.

Sauer, M., & Stannat, W. (2015). Lattice approximation for stochastic reaction diffusion equations with one-sided Lipschitz condition. Mathematics of Computation, 84(292), 743–766.

Stannat, W. (2014). Stability of travelling waves in stochastic bistable reaction-diffusion equations. arXiv:1404.3853.

Tuckwell, H. C. (2008). Analytical and simulation results for the stochastic spatial Fitzhugh-Nagumo model neuron. Neural Computation, 20(12), 3003–3033.

Tuckwell, H. C., & Jost, J. (2010). Weak noise in neurons may powerfully inhibit the generation of repetitive spiking but not its propagation. PLoS Computational Biology, 6(5), e1000794.

Tuckwell, H. C., & Jost, J. (2011). The effects of various spatial distributions of weak noise on rhythmic spiking. Journal of Computational Neuroscience, 30(2), 361–371.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Action Editor: Brent Doiron

This work is supported by the BMBF, FKZ 01GQ1001B

Cite this article

Sauer, M., Stannat, W. Reliability of signal transmission in stochastic nerve axon equations. J Comput Neurosci 40, 103–111 (2016). https://doi.org/10.1007/s10827-015-0586-0

DOI: https://doi.org/10.1007/s10827-015-0586-0