Abstract

Synaptic plasticity is studied herein using a voltage-driven memristor model. A bidirectional weight update technique is demonstrated, and significant synaptic features, including nonlinear, threshold-based learning as well as long-term potentiation (LTP) and long-term depression (LTD), are emulated. The spike-timing-dependent plasticity (STDP) learning characteristic curve is obtained from exhaustive simulations. Then, using leaky integrate and fire neurons and memristive synapses, fully connected spiking neural networks with \(2\times 2\) and \(4\times 2\) architectures are constructed, and unsupervised learning with the STDP rule and a winner-takes-all strategy is evaluated in these networks for pattern classification.


1 Introduction

The human brain contains about \(10^{10}\) neurons, each connected to \(10^3-10^4\) other neurons via synapses [1]. The synapse is therefore the most abundant element in the brain's neural network. As a result, the development of high-density, biologically plausible spiking neural networks (SNNs) requires an efficient circuit component that realizes synaptic plasticity. Such a component must provide three functionalities: (1) storage of the synaptic weight, (2) updating of the weight according to the network activity (update rule), and (3) setting the strength of the communication between a pre- and postsynaptic neuron [2, 3]. Neurons in SNNs communicate with each other by transmitting signals in the form of voltage spikes. When a neuron is activated, it fires and generates spikes. The spikes pass through synaptic connections to the neurons of the next layer. There, modulated by the synaptic weights, they increase or decrease the membrane potential. A popular weight update algorithm is spike-timing-dependent plasticity (STDP), a refinement of Hebbian learning in which the weight of a synapse varies with the relative timing of the pre- and postsynaptic neuron spikes. According to STDP, if the presynaptic spikes arrive before the postsynaptic spikes, the weight of the synapse increases, leading to long-term potentiation (LTP). If they arrive after the postsynaptic spikes, the synaptic weight decreases, leading to long-term depression (LTD).
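
The pair-based STDP rule described above is often written with exponential timing windows. The Python sketch below uses that textbook form with the convention \(\varDelta t = t_{\text{post}} - t_{\text{pre}}\). The amplitudes and time constants (`a_plus`, `a_minus`, `tau_plus`, `tau_minus`) are hypothetical illustrative values, not taken from this work. The sketch only shows the sign and decay of the rule; in this work, the STDP curve instead emerges from the memristor model itself.

```python
import math


def stdp_dw(delta_t: float, a_plus: float = 0.01, a_minus: float = 0.012,
            tau_plus: float = 20e-3, tau_minus: float = 20e-3) -> float:
    """Pair-based exponential STDP window (textbook form, hypothetical parameters).

    delta_t = t_post - t_pre in seconds.
    delta_t > 0 (pre before post) -> positive change (LTP).
    delta_t < 0 (post before pre) -> negative change (LTD).
    """
    if delta_t > 0:
        return a_plus * math.exp(-delta_t / tau_plus)
    if delta_t < 0:
        return -a_minus * math.exp(delta_t / tau_minus)
    return 0.0  # coincident spikes: no change in this simple form


ltp = stdp_dw(5e-3)    # presynaptic spike 5 ms before the postsynaptic one
ltd = stdp_dw(-5e-3)   # postsynaptic spike 5 ms before the presynaptic one
```

The magnitude of the change decays as \(|\varDelta t|\) grows, which is the qualitative trend the memristor synapse is expected to reproduce.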

Several circuits have been proposed to achieve synapse functionality based on STDP learning rules. However, these circuits face several challenges that limit their application as efficient synapses in large-scale SNNs. The synaptic circuit proposed in Ref. [1] requires external controllers and several switches. Indiveri et al. [4] proposed a complementary metal–oxide–semiconductor (CMOS) circuit with 30 transistors to implement a single synapse with the STDP learning rule. Such a transistor count per synapse is impractical for large-scale implementation. In Ref. [5], synaptic plasticity was investigated using binary memristive synapses; however, a gradual, analog weight change is more desirable and also more biologically plausible. Hong et al. [6] proposed a structure with two memristors connected in reverse polarity to implement a single synapse that exhibits both potentiation and depression. Long et al. [7] proposed a memristive synapse comprising two memristors connected in series with reverse polarity and two transistors. Their memristor model followed the simple HP Labs model. Window functions have been proposed to improve this model by keeping the state variable within the device bounds. In the work presented herein, we use a single memristor with bidirectional, analog weight-change characteristics.

Nonvolatile storage is preferred for an efficient synapse circuit component so that the weight does not vanish over time. In addition, such devices should have a minimal footprint to facilitate large-scale integration. It is also preferable that the weight changes only when the applied signal exceeds a threshold, because this feature separates learning from regular operation, such as classification. All of the above characteristics are offered by a two-terminal device named the memristor, first introduced by Leon Chua in 1971 [8] and experimentally demonstrated by HP Labs in 2008 [9]. In particular, Chua proposed using memristors to fabricate synapses and neurons following the Hodgkin–Huxley formalism [10]. Jo et al. experimentally demonstrated the use of a memristor as a synapse [3]. The memristor exhibits a nonvolatile modification of its resistance (or conductance) in response to the current (charge) or voltage (flux) driving the device. Several models have been proposed to capture the electrical characteristics of a memristor [9, 11,12,13,14,15]. A physics-based model called the ThrEshold Adaptive Memristor (TEAM) model [14] takes into account the Simmons tunneling equations. It is compatible with several devices, such as spin-based memristive systems and ionic thin-film memristors made from materials such as \({\text {TiO}}_{2}\). Subsequently, the voltage-controlled TEAM (VTEAM) model [15] was introduced as a voltage-driven version of the TEAM model. VTEAM expresses the physical relations via relatively simple mathematical equations that are reasonably accurate. In addition, it defines a threshold level for learning, which is compatible with the requirements of synaptic weight updates. In recent years, the implementation of artificial synapses using memristors has attracted significant attention. Zamarreño-Ramos et al. [16] proposed a macro model to implement the memristor characteristic. 
Then, based on this macro model and the leaky integrate and fire (LIF) neuron circuit, they implemented STDP learning rules. Spiking neuron and memristor-based STDP learning circuits have also been designed to represent both the LTP and LTD processes in a single circuit [17]. Covi et al. [18] studied the performance of HfO\(_{2}\)-based memristors by placing the device between two spiking channels, i.e., two waveform generators and fast measurement units. They characterized the STDP mechanism by analyzing the delay between the pre- and postsynaptic signals. They then used this artificial synapse in a sample neural network that performed unsupervised learning to recognize five characters. The use of memristors as synapses in SNNs with Hodgkin–Huxley [19] and Morris–Lecar [20] neurons has been reported for pattern classification applications. These works used the current-controlled linear ion drift model [9] and the Biolek memristor model [21], respectively.

In the work presented herein, we use the VTEAM model for the memristor and investigate the resulting synaptic functionality in an SNN. To demonstrate the application of this modeling approach, we present two examples of fully connected SNN architectures for pattern classification. Both are built from LIF neurons and memristive synapses arranged in a crossbar array.

2 The memristor model

A voltage-controlled time-invariant model of a memristor device can be expressed as

\[\frac{{\rm d}w}{{\rm d}t}=f(w, v),\qquad i(t)=G(w, v)\, v(t) \tag{1}\]

where w is an internal state variable bounded by the physical dimension of the device, \(w \in [0, D]\), with D being the device length. Here, v(t) is the voltage applied to the device and i(t) is the device current. The mathematical relation for f(w, v) in the VTEAM model is defined as [15]

where \(k, \alpha _{{\text {on}}}\), and \(\alpha _{{\text {off}}}\) are constant parameters that can be considered as reinforcement coefficients. \(v_{{\text {on}}}\) and \(v_{{\text {off}}}\) are voltage thresholds. \(f_{{\text {on}}}(w)\) and \(f_{{\text {off}}}(w)\) are window functions defined in Ref. [14] as

where \(w_{\rm c}\) is a constant parameter. The current–voltage relationship can be defined as

\[i(t)=\frac{v(t)}{R_{{\text {on}}}\, e^{\lambda w/D}} \tag{5}\]

\(R_{{\text {off}}}\) and \(R_{{\text {on}}}\) are the memristance values at the bounds D and 0, respectively, and \({\lambda }\) is obtained as

\[\lambda =\ln \left( \frac{R_{{\text {off}}}}{R_{{\text {on}}}}\right) \tag{6}\]
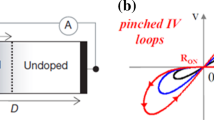

Figure 1a shows the variation of the memristance as a function of time for several values of the \({R}_{{\text {off}}} / {R}_{{\text {on}}}\) ratio. Increasing \({R}_{{\text {off}}} / {R}_{{\text {on}}}\) extends the dynamic range of the device, so the conductance can change over a wide range without saturating early. This feature is vital for precise control over, and analog variation of, the synaptic weight. However, practical devices have a limited dynamic range that must be taken into account. Figure 1b shows the hysteresis characteristic obtained from the model for different values of k. In effect, k acts as a gain parameter in our model, increasing or decreasing the rate of change of the state variable. Excessively large values of k cause the resistance to saturate under small changes of the applied voltage. The values of the model parameters are presented in Table 1. They were selected so that the memristor model functions properly as a synapse and remains compatible with practical devices. For example, the length of a practical device is less than 10 nm, and this parameter is set to 3 nm in the present model. The \(R_{{\text {off}}}/R_{{\text {on}}}\) ratio is selected based on dynamic range considerations. To study the feasibility of using the proposed device as a synapse, Fig. 2a shows the variation of the current over five positive and five negative voltage cycles. The memristor conductance increases (decreases) consecutively during the positive (negative) voltage cycles. When positive voltages are applied, the current and conductance gradually increase from their previous values. When negative pulses are then applied, the current decreases from its maximum value at each stage. This result suggests that the memristor-based synapse can preserve its weight and that the weight can be updated at any stage by applying an external voltage. 
Figure 2b illustrates the hysteresis characteristic in sequential voltage sweeps. When a train of positive (or negative) voltage pulses is applied, the conductance of the memristor gradually and continuously increases (or decreases). The magnitude of the weight change is considerable for the selected parameter values. This indicates that the proposed memristor model, with the specified parameter values, can display long-term potentiation (and depression) as an artificial synapse.
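
A minimal numerical sketch of this behavior is given below in Python. It rests on several assumptions:

- a VTEAM-like threshold law with the window functions omitted, so the state is simply clipped to [0, D];
- the exponential conductance mapping \(G = 1/(R_{{\text {on}}} e^{\lambda w/D})\) with \(\lambda = \ln(R_{{\text {off}}}/R_{{\text {on}}})\);
- hypothetical values for the thresholds, k, α, the resistances, and the pulse amplitude and width (only D = 3 nm is taken from the text);
- a sign convention, following the text, in which a positive over-threshold voltage raises the conductance.

Five positive pulses followed by five negative ones reproduce the stepwise increase and decrease described for Fig. 2a, and sub-threshold pulses leave the state unchanged.

```python
import math

# Hypothetical parameters: only D = 3 nm comes from the text; the rest are
# placeholders, not the values of Table 1.
D = 3e-9                      # device length [m]
R_ON, R_OFF = 1e3, 1e5        # memristance at w = 0 and at w = D [ohm]
V_POS, V_NEG = 0.5, -0.5      # potentiation / depression thresholds [V]
K, ALPHA = 1e-4, 3            # gain [m/s] and nonlinearity exponent
LAM = math.log(R_OFF / R_ON)  # lambda = ln(R_off / R_on)


def dw_dt(v: float) -> float:
    """Threshold-based state derivative (VTEAM-like, window functions omitted).

    Assumed sign convention: v > V_POS drives w toward 0 (higher conductance),
    v < V_NEG drives w toward D; sub-threshold voltages leave w unchanged.
    """
    if v > V_POS:
        return -K * (v / V_POS - 1.0) ** ALPHA
    if v < V_NEG:
        return K * (v / V_NEG - 1.0) ** ALPHA
    return 0.0


def conductance(w: float) -> float:
    """G(w) = 1 / (R_on * exp(lambda * w / D)), assuming the exponential I-V form."""
    return 1.0 / (R_ON * math.exp(LAM * w / D))


def apply_pulse(w: float, amplitude: float, width: float = 10e-6,
                dt: float = 1e-7) -> float:
    """Forward-Euler integration over one rectangular pulse; w is clipped to [0, D]."""
    for _ in range(round(width / dt)):
        w = min(max(w + dw_dt(amplitude) * dt, 0.0), D)
    return w


w = D  # start in the high-resistance (low-conductance) state
trace = []
for amp in [0.8] * 5 + [-0.8] * 5:  # five positive, then five negative pulses
    w = apply_pulse(w, amp)
    trace.append(conductance(w))
```

Clipping stands in for the window functions only to keep the example short. A faithful model would use \(f_{{\text {on}}}(w)\) and \(f_{{\text {off}}}(w)\) from Ref. [14] and the parameter values of Table 1.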

Fig. 1 a Effect of the \({R}_{{\text {off}}}/{R}_{{\text {on}}}\) ratio on the memristance as a function of time. The inset shows the applied stimulation voltage profile, with a maximum of 0.95 V and a frequency of 1.2 Hz. b Effect of different \(\vert k \vert \) values on the I–V characteristic

Fig. 4 Constant-current stimulation: a \(V_{{\text {pre}}}\), b \(V_{{\text {post}}}\), and c \(V_{{\text {post}}}-V_{{\text {pre}}}\) for LTP when the positive threshold is reached. d Gradual increase in the conductance under a train of identical spikes induced by the LIF neuron blocks. The three spikes are magnified in a–c

Fig. 5 Constant-current stimulation: a \(V_{{\text {pre}}}\), b \(V_{{\text {post}}}\), and c \(V_{{\text {post}}}-V_{{\text {pre}}}\) for LTD when the negative threshold is reached. d Gradual decrease in the conductance under a train of identical spikes induced by the LIF neuron blocks. The three spikes are magnified in a–c

3 The LIF neuron model

The leaky integrate and fire (LIF) neuron model is widely used in SNNs because it captures the main features of biological neurons without a high computational cost [22]. In the LIF model, when the membrane voltage crosses a threshold, the neuron fires and produces an output spike that is transmitted to other neurons via synapses. This electrical spike corresponds to the action potential of a biological neuron, and the synaptic weights control the impact of the spikes on the neurons in the next layer. Immediately after firing, the neuron's potential is reset to 0. The LIF neuron can be realized with a few circuit components: a capacitor that integrates the input current I(t) and a parallel resistor that models the leakage. The voltage across the capacitor is compared with a threshold. When the voltage reaches the threshold, a switch turns on and a spike is produced. The differential equation describing the circuit can be written as [23]

\[\tau \frac{{\rm d}v}{{\rm d}t}=-v(t)+R\, I(t) \tag{7}\]

where v is the membrane potential and \(\tau =RC\) is the time constant.
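
The LIF dynamics described above can be sketched with a forward-Euler loop. In this Python example, the resistance, capacitance, threshold, time step, and the function name `simulate_lif` are hypothetical. The 1 A drive matches the input amplitude quoted in Section 6, and the reset-to-0 rule follows the description above.

```python
from typing import Callable, List


def simulate_lif(current: Callable[[float], float], t_end: float = 0.1,
                 dt: float = 1e-5, r: float = 1.0, c: float = 1e-2,
                 v_th: float = 0.5) -> List[float]:
    """Forward-Euler LIF neuron: tau dv/dt = -v + R I(t), with tau = R C.

    When v reaches v_th, the spike time is recorded and v is reset to 0.
    All parameter values are hypothetical.
    """
    tau = r * c
    v = 0.0
    spikes: List[float] = []
    for n in range(int(round(t_end / dt))):
        t = n * dt
        v += dt / tau * (-v + r * current(t))  # leaky integration of the input
        if v >= v_th:
            spikes.append(t)
            v = 0.0  # reset immediately after firing
    return spikes


regular = simulate_lif(lambda t: 1.0)  # R*I = 1 V > v_th: periodic firing
silent = simulate_lif(lambda t: 0.4)   # R*I = 0.4 V < v_th: v saturates below threshold
```

For a constant input I with RI > v_th, the interspike interval approaches \(\tau \ln (RI/(RI - v_{\rm th}))\), which is about 6.9 ms for these values. With RI < v_th, the neuron never fires.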

Fig. 8 \({\text {max}}(V_{{\text {mem}}})\) as a function of \(\varDelta t\) for the pre- and postsynaptic pulse shapes shown in Fig. 7

4 Synaptic plasticity

Figure 3 illustrates the circuit designed in HSPICE to investigate the behavior of such memristor-based synapses [24]. In this circuit, a single memristor acts as the synaptic device, and no complex synaptic circuit is required. Unlike the other fundamental elements (the resistor, capacitor, and inductor), the memristor is not a built-in HSPICE element. It is therefore described in the Verilog-A behavioral modeling language, as are the pre- and postsynaptic neuron blocks.

To assess the learning ability through the STDP mechanism, the spike from either the pre- or postsynaptic neuron must reach the memristor synapse with a delay relative to the other neuron's spike. A delay block is therefore required for the LTP or LTD process. The delay block drawn with solid (dashed) lines is used in the LTP (LTD) mode. In the circuit-level model, when one neuron fires, the resulting spike is attenuated along its path to the synapse because of the loading effect between circuit stages. A voltage buffer block is therefore inserted into the circuit to isolate the network from the other circuit components.

Figures 4 and 5 show the response of the device to constant stimulation currents \(I_1\) and \(I_2\), which drive the neuron blocks to produce identical spike trains. When the voltage across the memristor synapse exceeds the positive threshold, the memristor conductance gradually increases, as shown in Fig. 4; this conductance is equivalent to the synaptic weight. The situation is reversed in the LTD mode, as shown in Fig. 5. In these figures, synaptic plasticity is observed for fixed spike times, i.e., with a constant \(|\varDelta t|\). The analog behavior of the device is also confirmed (Figs. 4d, 5d).

5 The STDP characteristics of the memristor synapse

To study the feasibility of implementing the STDP rule with the proposed memristor model, input signals in the form of pulse trains are applied to both terminals of the memristor [16]. The signals are shaped so that the voltage difference between the two terminals can exceed the threshold for both LTP and LTD weight updates. The delay \(\varDelta t\) between consecutive falling edges of these pulse trains is a crucial parameter for the STDP characteristics, because it defines the duration of the weight update.

Figure 6a depicts a typical set of pre- and postsynaptic pulse trains, and Fig. 6b shows the resulting voltage drop across the memristor, \(V_{{\text {mem}}}=V_{{\text {post}}}-V_{{\text {pre}}}\). Figure 6c shows the response of the proposed memristor to the applied spike trains. As expected from Eq. 2, the conductance of the memristor is updated only when \(V_{{\text {mem}}}\) exceeds a threshold (the red regions above and below the dashed lines in Fig. 6b). Figure 6b marks two examples each of potentiation and depression, with \(\varDelta t_{\rm a}, \varDelta t_{\rm b}, \varDelta t_{\rm c}\), and \(\varDelta t_{\rm d}\). For \(\varDelta t_{\rm a}\) and \(\varDelta t_{\rm b}\), the conductance increases because the presynaptic spike occurs before the postsynaptic one. For \(\varDelta t_{\rm c}\) and \(\varDelta t_{\rm d}\), the postsynaptic spike occurs before the presynaptic one, so the memristor conductance decreases, as shown in Fig. 6c. Comparing \(\varDelta t_{\rm a}\) with \(\varDelta t_{\rm b}\), and \(\varDelta t_{\rm c}\) with \(\varDelta t_{\rm d}\), shows that the smaller the value of \(\varDelta t\), the sharper the change in the conductance. Figure 6 thus confirms the bidirectional behavior of the memristor synapse.
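
The threshold gating described above can be illustrated with sampled waveforms. The Python helper below, `update_regions` (a hypothetical name), computes \(V_{{\text {mem}}} = V_{{\text {post}}} - V_{{\text {pre}}}\) sample by sample. It returns the indices at which \(V_{{\text {mem}}}\) lies above the positive threshold (potentiation) or below the negative one (depression). The ±0.5 V thresholds and the bipolar sample values in the example are assumptions. They are chosen only to show that the firing order sets the sign of the update.

```python
from typing import List, Tuple


def update_regions(v_pre: List[float], v_post: List[float],
                   v_pos: float = 0.5, v_neg: float = -0.5
                   ) -> Tuple[List[int], List[int]]:
    """Return (potentiation indices, depression indices) of V_mem = V_post - V_pre.

    Only samples beyond a threshold can change the conductance; the
    threshold values are hypothetical.
    """
    v_mem = [post - pre for pre, post in zip(v_pre, v_post)]
    potentiate = [i for i, v in enumerate(v_mem) if v > v_pos]
    depress = [i for i, v in enumerate(v_mem) if v < v_neg]
    return potentiate, depress


# Bipolar spikes (+0.3 V then -0.3 V); one spike lags the other by one sample.
pre_first = update_regions([0.3, -0.3, 0.0, 0.0], [0.0, 0.3, -0.3, 0.0])
post_first = update_regions([0.0, 0.3, -0.3, 0.0], [0.3, -0.3, 0.0, 0.0])
# pre_first  -> ([1], [])   pre before post: V_mem = +0.6 V at sample 1
# post_first -> ([], [1])   post before pre: V_mem = -0.6 V at sample 1
```

Neither spike alone exceeds a threshold. Only their superposition does, and its polarity depends on which neuron fires first.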

In Fig. 6, although the interval \(\varDelta t_{\rm a}\) is longer than \(\varDelta t_{\rm b}\), the incremental steps in the conductance are almost equal. This does not strictly follow the STDP rule, under which a longer interval should yield a smaller weight change. In Ref. [24], the STDP characteristics were obtained by applying current pulses to the LIF neuron blocks, each producing a single spike in the synaptic circuit (Fig. 3). The spikes displayed in Fig. 6 serve to show the bidirectional behavior of the memristor. With this spike shape, however, the conductance change varies little with the time difference between the pre- and postsynaptic spikes, so the shape is not suitable for STDP characterization. Therefore, different shapes are used for the pre- and postsynaptic spikes to reflect the effect of the delay on the weight update more effectively, as shown in Fig. 7. Figure 8 shows the maximum value of the voltage across the memristor, \({\text {max}}(V_{{\text {mem}}})\), for the pulse shapes in Fig. 7 as a function of \(\varDelta t\). The duration for which the voltage stays above the threshold is almost constant. The magnitude of the weight change is therefore governed by \({\text {max}}(V_{{\text {mem}}})\), and hence depends on \(\varDelta t\) as shown in Fig. 8. To obtain the STDP characteristics, the time difference \(\varDelta t\) between the pre- and postsynaptic neuron spikes is varied, and the voltage applied to the memristor, \(\varDelta v\), is calculated as

\[\varDelta v=V_{{\text {post}}}(t)-V_{{\text {pre}}}(t)\]

In each step, the conductance varies as

\[\varDelta G=G_1-G_0\]

where \(G_0\) and \(G_1\) are the conductance values (synaptic weights) before and after the update, respectively. The STDP characteristic \(\varDelta G\) is then obtained from exhaustive simulations (110 simulation steps) for different values of the delay \(\varDelta t\), and the results are shown in Fig. 9. In each simulation step, a value of \(\varDelta t\) is chosen and the input pulses are applied to both terminals of the memristor. The weight is updated according to the model whenever the threshold is crossed. Figures 8 and 9 reveal several features of the proposed memristor model, driven by these input spikes, that are essential for neuromorphic applications. The synaptic weight is affected only for delays up to about 100 \(\upmu \)s in magnitude; for longer delays between the pre- and postsynaptic signals, the weight change is negligible. The magnitude of the weight change increases as \(\varDelta t\) decreases. However, when \(\varDelta t\) is comparable to the postsynaptic pulse duration, the two consecutive weight changes (positive and negative) almost cancel out, so the weight change is negligible around \(\varDelta t=0\). Figure 9 follows the trend of the experimental results in Ref. [25]. This suggests that the device behaves promisingly and could be used as an artificial synapse.

6 Network architectures with memristor synapses

In this section, we demonstrate unsupervised learning based on the STDP rule with the proposed memristor synapse. The demonstration uses simple SNNs formed by connecting LIF neurons through voltage-driven memristors that act as synapses (Fig. 10a). The membrane voltage of each presynaptic (input) neuron is given by Eq. 7, with v and I(t) replaced by \(V_{{\text {pre}}}\) and \(I_{{\text {in}}}(t)\), respectively. Here, \(V_{{\text {pre}}}\) is the voltage of the presynaptic neuron, and \(I_{{\text {in}}}\) is the input current applied to it, with an amplitude of 1 A.

The excitatory current of each postsynaptic neuron is the current from the previous layer that passes through the memristor synapses. The membrane voltage of the postsynaptic neuron is given by Eq. 7, with v and I(t) replaced by \(V_{{\text {post}}}\) and \(I_{{\text {postsynaptic}}}(t)\), respectively. The current that stimulates each postsynaptic neuron is the sum of the currents passing through the synapses connected to that neuron,

\[I_{{\text {postsynaptic}}}(t)=\sum _{s=1}^{k} i_s(t)\]

When the voltage across a memristive synapse exceeds the positive or negative threshold of the device, LTP or LTD occurs, respectively, and the conductance of the synapse is altered. In an SNN with k input neurons, the input current \(i_s\) (\(s=1,\ldots ,k\)) delivered by synapse s to the postsynaptic neuron is obtained from Eq. 1.

\(G_{s}\) is the conductance of memristor synapse s and can be calculated using Eq. 5, where \({{w}_{s}(t)}\) is the instantaneous value of its state variable, obtained from Eq. 2. The behavior of the LIF neurons in the presence of memristor synapses is shown in Fig. 10b. Unsupervised learning is investigated first in a \(2\times 2\) network with four memristors and then in a \(4\times 2\) network with eight memristors.
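
The current summation described above can be written for a whole crossbar, column by column. The Python sketch below assumes that the current through synapse s flows from its presynaptic row into the postsynaptic column, i.e., \(i_s = G_s (V_{{\text {pre}},s} - V_{{\text {post}}})\). This sign convention belongs to the example and is not a statement from the text. The sketch also assumes the exponential conductance mapping \(G(w) = 1/(R_{{\text {on}}} e^{\lambda w/D})\) with hypothetical resistances; only D = 3 nm comes from the text. The function name `postsynaptic_currents` is hypothetical.

```python
import math
from typing import List

D, R_ON, R_OFF = 3e-9, 1e3, 1e5  # D from the text; resistances hypothetical
LAM = math.log(R_OFF / R_ON)


def conductance(w: float) -> float:
    """Synaptic conductance from the state variable (exponential form assumed)."""
    return 1.0 / (R_ON * math.exp(LAM * w / D))


def postsynaptic_currents(v_pre: List[float], v_post: List[float],
                          w: List[List[float]]) -> List[float]:
    """Column currents of a crossbar.

    I_j = sum_s G(w[s][j]) * (v_pre[s] - v_post[j]), where w[s][j] is the
    state of the synapse from input s to output j. Current is positive when
    it flows from the presynaptic row into the postsynaptic column (assumed).
    """
    return [
        sum(conductance(w[s][j]) * (v_pre[s] - v_post[j]) for s in range(len(v_pre)))
        for j in range(len(v_post))
    ]


# 2 x 2 example: input 1 at 1 V, input 2 idle; synapse (1,1) fully ON, (1,2) fully OFF.
currents = postsynaptic_currents([1.0, 0.0], [0.0, 0.0], [[0.0, D], [D, 0.0]])
# currents is approximately [1e-3, 1e-5] A
```

Each output's current is dominated by its most conductive synapse from an active input. This is why potentiated synapses make their output neuron reach threshold first.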

6.1 The \(2\times 2\) SNN using STDP learning

The \(2\times 2\) network with two input and two output LIF neurons is depicted in Fig. 11; it is fully connected through four memristive synapses. This SNN is used for unsupervised learning of the XOR pattern based on the two complementary input pulses shown in Fig. 11. The input waveform is divided into several time slots, and during each slot a two-pixel pattern is presented to the network. Black pixels stimulate their corresponding presynaptic neurons with level “1”, while white pixels are represented by input level “0”. The membrane potentials of the presynaptic neurons are shown in Fig. 12a, b. All output neurons share the same constant threshold, and the initial synaptic weights are assigned randomly. The spiking characteristics of the postsynaptic neurons are shown in Fig. 12c, d. As expected, the two output neurons compete to spike first in response to the input spikes. Following the winner-takes-all strategy, as soon as one neuron spikes, the current time slot is assigned to it, and potentiation and depression are applied to the corresponding synapses. At each step, the voltage drop across each memristor synapse is calculated and compared with the threshold value. If it exceeds the threshold, the synaptic weight is updated. When the class 1 input is applied, presynaptic neuron 1 spikes before output neuron 1, which fires first, so the synaptic weight \({W_{11}}\) is increased through LTP. Since output 2 is inactive in this time slot, the synaptic weight \({W_{12}}\) is reduced. A similar behavior occurs for the class 2 pattern. Figure 13 shows the time evolution of the weights of all the memristive synapses. 
At the end of the learning process, the synapse weights \({W_{11}}\) and \({W_{22}}\) are increased and almost become saturated at the maximum value, while \({W_{12}}\) and \({W_{21}}\) are decreased to the minimum value.
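
The winner-takes-all learning loop can be abstracted as in the Python sketch below. It is a deliberate simplification, not the circuit-level simulation used here. Synaptic weights are normalized to [0, 1] and changed by a fixed hypothetical step instead of through the memristor model. Because all output neurons share the same threshold, the output receiving the largest weighted input is taken to fire first. For every active input, the synapse to the winner is potentiated and the synapses to the other outputs are depressed, mirroring the \(W_{11}\)/\(W_{12}\) behavior described above. The function `train_wta` (a hypothetical name) accepts any \(n\times m\) crossbar, and the initial weights in the examples stand in for a random draw.

```python
from typing import List


def train_wta(patterns: List[List[int]], weights: List[List[float]],
              epochs: int = 20, step: float = 0.05,
              g_min: float = 0.0, g_max: float = 1.0) -> List[List[float]]:
    """Winner-takes-all STDP abstraction (hypothetical simplification).

    patterns: binary input vectors (1 = black pixel -> presynaptic spike).
    weights[i][j]: normalized weight of the synapse from input i to output j.
    """
    w = [row[:] for row in weights]
    n_out = len(w[0])
    for _ in range(epochs):
        for x in patterns:
            # Equal thresholds assumed: the largest weighted input fires first.
            drive = [sum(x[i] * w[i][j] for i in range(len(x))) for j in range(n_out)]
            winner = max(range(n_out), key=lambda j: drive[j])
            for i, active in enumerate(x):
                if not active:
                    continue  # no presynaptic spike -> no update
                for j in range(n_out):
                    delta = step if j == winner else -step  # LTP to winner, LTD otherwise
                    w[i][j] = min(max(w[i][j] + delta, g_min), g_max)
    return w


learned_2x2 = train_wta([[1, 0], [0, 1]], [[0.55, 0.45], [0.40, 0.50]])
# -> [[1.0, 0.0], [0.0, 1.0]]  (W11, W22 saturate high; W12, W21 go to the minimum)
learned_4x2 = train_wta([[1, 1, 0, 0], [0, 0, 1, 1]],
                        [[0.6, 0.4], [0.5, 0.45], [0.4, 0.6], [0.45, 0.5]])
# -> [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0]]
```

In this abstraction, which output ends up representing which class depends only on the initial weights. The opposite-direction drift of each input's two weights emerges directly from the winner/loser update.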

6.2 The \(4\times 2\) SNN using STDP learning

Figure 14 shows a crossbar framework for the LIF-based memristive SNN with four inputs and two outputs. The structure has eight memristive synapses and classifies two classes of four-pixel images. Each class is presented to the presynaptic neurons as four input waveforms (Fig. 15a–d). Figure 15e, f shows the output results for the two postsynaptic neurons. Whenever an input neuron spikes before an output neuron, the corresponding memristive synapse is potentiated; otherwise, it is depressed, as demonstrated in Fig. 16. Note that the weights \(W_{x1}\) and \(W_{x2}\) of the two synapses connecting each input neuron x to the two output neurons vary in opposite directions.

Other works have used the Hodgkin–Huxley and Morris–Lecar neuron models [19, 20], and Table 2 compares those results with the present work. Despite their high accuracy, these models suffer from a high computational cost due to their complexity. In this study, the LIF model is used for the memristive SNNs, since it is one of the most popular neuron models, with very low complexity and acceptable accuracy. During the learning process, the LIF neuron model is simulated directly rather than replaced by predefined neural spike shapes. Finally, the VTEAM model was applied here to achieve synaptic plasticity, and its synaptic features were analyzed thoroughly. It was also used for the first time as an artificial synapse in a sample SNN.

7 Conclusions

The feasibility of using a voltage-driven memristor model as a synapse in SNNs has been studied through circuit- and system-level simulations. The model is analyzed and parameterized to demonstrate the variation of the analog conductance in response to an applied excitation. The results of the simulations confirm the ability of the proposed model to demonstrate bidirectional characteristics in response to input spikes, including both long-term potentiation (LTP) and depression (LTD). The STDP characteristics of the proposed synapse are explored using exhaustive simulations under specific spike patterns. Unsupervised learning based on the STDP rule with the proposed synapse in \(2\times 2\) and \(4\times 2\) SNNs is further demonstrated. These results suggest that the proposed memristor-based synapse model might emulate synapses in spiking neural networks, including networks with temporal encoding [26].

References

Serrano-Gotarredona, T., Linares-Barranco, B.: Design of adaptive nano/CMOS neural architectures. In: 2012 19th IEEE International Conference on Electronics, Circuits, and Systems (ICECS 2012), pp. 949–952 (2012)

Stanley Williams, R.: How we found the missing memristor. World Sci. 1616, 483–489 (2013)

Jo, S.H., Chang, T., Ebong, I., Bhadviya, B.B., Mazumder, P., Lu, W.: Nanoscale memristor device as synapse in neuromorphic systems. Nano Lett. 10, 1297–1301 (2010)

Indiveri, G., Chicca, E., Douglas, R.: A VLSI array of low-power spiking neurons and bistable synapses with spike-timing dependent plasticity. IEEE Trans. Neural Netw. 17, 211–221 (2006)

Kim, Y., Jeong, W.H., Tran, S.B., Woo, H.C., Kim, J., Hwang, C.S., Min, K.-S., Choi, B.J.: Memristor crossbar array for binarized neural networks. AIP Adv. 9, 045131 (2019)

Hong, Q., Zhao, L., Wang, X.: Novel circuit designs of memristor synapse and neuron. Neurocomputing 330, 11–16 (2019)

Long, K., Zhang, X.: Memristive-synapse spiking neural networks based on single-electron transistors. J. Comput. Electron. 19, 435–450 (2020)

Chua, L.: Memristor-the missing circuit element. IEEE Trans. Circuit Theory 18, 507–519 (1971)

Strukov, D.B., Snider, G.S., Stewart, D.R., Williams, R.S.: The missing memristor found. Nature 453, 80 (2008)

Chua, L.: Memristor, Hodgkin–Huxley, and edge of chaos. Nanotechnology 24, 383001 (2013)

Lehtonen, E., Laiho, M.: CNN using memristors for neighborhood connections. In: 2010 12th International Workshop on Cellular Nanoscale Networks and their Applications (CNNA 2010), pp. 1–4 (2010)

Pickett, M.D., Strukov, D.B., Borghetti, J.L., Yang, J.J., Snider, G.S., Stewart, D.R., Williams, R.S.: Switching dynamics in titanium dioxide memristive devices. J. Appl. Phys. 106, 074508 (2009)

Rziga, F.O., Mbarek, K., Ghedira, S., Besbes, K.: An efficient Verilog-A memristor model implementation: simulation and application. J. Comput. Electron. 18, 1055–1064 (2019)

Kvatinsky, S., Friedman, E.G., Kolodny, A., Weiser, U.C.: TEAM: threshold adaptive memristor model. IEEE Trans. Circuits Syst. I Regul. Pap. 60, 211–221 (2012)

Kvatinsky, S., Ramadan, M., Friedman, E.G., Kolodny, A.: VTEAM: a general model for voltage-controlled memristors. IEEE Trans. Circuits Syst. II Express Briefs 62, 786–790 (2015)

Zamarreño-Ramos, C., Camuñas-Mesa, L.A., Perez-Carrasco, J.A., Masquelier, T., Serrano-Gotarredona, T., Linares-Barranco, B.: On spike-timing-dependent-plasticity, memristive devices, and building a self-learning visual cortex. Front. Neurosci. 5, 26 (2011)

Zhao, L., Hong, Q., Wang, X.: Novel designs of spiking neuron circuit and STDP learning circuit based on memristor. Neurocomputing 314, 207–214 (2018)

Covi, E., Brivio, S., Serb, A., Prodromakis, T., Fanciulli, M., Spiga, S.: Analog memristive synapse in spiking networks implementing unsupervised learning. Front. Neurosci. 10, 482 (2016)

Amirsoleimani, A., Ahmadi, M., Ahmadi, A., Boukadoum, M.: Brain-inspired pattern classification with memristive neural network using the Hodgkin–Huxley neuron. In: 2016 IEEE International Conference on Electronics, Circuits and Systems (ICECS), pp. 81–84 (2016)

Amirsoleimani, A., Ahmadi, M., Ahmadi, A.: STDP-based unsupervised learning of memristive spiking neural network by Morris–Lecar model. In: 2017 International Joint Conference on Neural Networks (IJCNN), pp. 3409–3414 (2017)

Biolek, Z., Biolek, D., Biolková, V.: SPICE model of memristor with nonlinear dopant drift. Radioengineering 18, 236 (2009)

Schuman, C.D., Potok, T.E., Patton, R.M., Birdwell, J.D., Dean, M.E., Rose, G.S., Plank, J.S.: A survey of neuromorphic computing and neural networks in hardware, arXiv preprint arXiv:1705.06963 (2017)

Gerstner, W., Kistler, W.M.: Spiking neuron models: single neurons, populations, plasticity. Cambridge University Press (2002)

Hajiabadi, Z., Shalchian, M.: Behavioral modeling and STDP learning characteristics of a memristive synapse. In: 2020 28th Iranian Conference on Electrical Engineering (ICEE), pp. 1–5 (2020)

Bi, G., Poo, M.: Synaptic modifications in cultured hippocampal neurons: dependence on spike timing, synaptic strength, and postsynaptic cell type. J. Neurosci. 18, 10464–10472 (1998)

Mirsadeghi, M., Shalchian, M., Kheradpisheh, S.R., Masquelier, T.: STiDi-BP: Spike time displacement based error backpropagation in multilayer spiking neural networks. Neurocomputing 427, 131–140 (2021)


About this article

Cite this article

Hajiabadi, Z., Shalchian, M. Memristor-based synaptic plasticity and unsupervised learning of spiking neural networks. J Comput Electron 20, 1625–1636 (2021). https://doi.org/10.1007/s10825-021-01719-2
