Abstract

Atypical processing and integration of sensory inputs are hypothesized to play a role in unusual sensory reactions and social-cognitive deficits in autism spectrum disorder (ASD). Reports on the relationship between objective metrics of sensory processing and clinical symptoms, however, are surprisingly sparse. Here we examined the relationship between neurophysiological assays of sensory processing and (1) autism severity and (2) sensory sensitivities, in individuals with ASD aged 6–17. Multiple linear regression indicated significant associations between neural markers of auditory processing and multisensory integration, and autism severity. No such relationships were apparent for clinical measures of visual/auditory sensitivities. These data support that aberrant early sensory processing contributes to autism symptoms, and reveal the potential of electrophysiology to objectively subtype autism.



Introduction

Individuals with autism spectrum disorder (ASD) have impaired social interaction and communication, and restricted, repetitive and stereotyped patterns of behavior and/or interests. Symptom expression and severity in these core domains, however, vary considerably within the ASD population (Jones and Klin 2009). Indeed, clinical observations, parent report, and behavioral studies indicate a complex and highly variable phenotype across the spectrum (e.g., Charman et al. 2011; Geschwind and Levitt 2007; Lord et al. 2000, 2012; Volkmar et al. 1989). Observations of atypical brain activity in ASD are ubiquitous (Ameis et al. 2011; Boddaert et al. 2004; Brandwein et al. 2013; Cardinale et al. 2013; Courchesne et al. 1985; Dunn et al. 2008; Fan et al. 2012; Fishman et al. 2014; Frey et al. 2013; Green et al. 2013; Jemel et al. 2010; Kemner et al. 1995; Mak-Fan et al. 2013; Nair et al. 2013; Roberts et al. 2010; Russo et al. 2009, 2010; Wolf et al. 2008), and it is reasonable to assume that such atypicalities relate systematically to variance in the autism phenotype. Bridging biological processes to clinical phenotype is clearly essential to understanding the neurobiology of ASD. There is a growing literature probing how neural processing differences relate to symptoms (e.g., Campbell et al. 2010; Coutanche et al. 2011; Edgar et al. 2013; Elsabbagh et al. 2011; Hu 2012; Roberts et al. 2011; Russo et al. 2009), yet much work remains to arrive at a thorough understanding of these relationships.

Event-related potentials (ERPs) provide a direct measure of the brain’s response to sensory inputs. Clearly identifiable transitions in scalp-topography of the ERP reflect successive cortical phases of analysis (Leavitt et al. 2007; Picton et al. 1974). These evolving processing stages are observed at the scalp as a set of positive and negative deflections in the evoked response, commonly referred to as components, each reflecting coordinated activity within or across a network of cortical regions. The high temporal resolution of electrophysiological recordings (i.e., electroencephalogram or EEG) allows one to parse the response in terms of early cortical sensory registration, sensory-perceptual processing, and later cognitive stages of processing (Foxe and Simpson 2002; Lucan et al. 2010; Naatanen and Picton 1987). Studies using EEG recordings of brain activity reveal the presence of differences in auditory (e.g., Dunn et al. 2008; Kemner et al. 1995; Lepisto et al. 2005) and visual (e.g., Frey et al. 2013; Jemel et al. 2010) sensory-perceptual processing, as well as decreased integration of multisensory inputs (e.g., Brandwein et al. 2013; Russo et al. 2010) in ASD. Neurophysiological indices of sensory processing atypicalities may reflect neuropathology underlying clinical symptoms of ASD, and as such serve as strong candidates for biomarkers of clinical phenotype.

Unusual responses to sensory information have long been noted in ASD, and were in fact documented in some of the original descriptions of the disorder provided by Kanner (1943) and Asperger (1944). Recent studies indicate that a significant proportion of individuals with ASD have aberrant and pathological responses to sensory events (Ben-Sasson et al. 2009; Rogers and Ozonoff 2005), with estimates ranging from 45 to 90 % (Ben-Sasson 2011; Ben-Sasson et al. 2008; Leekam et al. 2007; Tomchek and Dunn 2007). These can involve a wide range of atypical reactions to stimulation including outright aversion to certain touches or sounds, indifference to other sounds, and obsession with particular types of visual stimulation. Families report that their routines and activities are significantly affected by their child’s sensory-related behaviors (Schaaf et al. 2011), and a recent study suggested that sensory over-responsivity in toddlers with autism is associated with increased maternal stress, and with disruptions in family life (Ben-Sasson et al. 2013). It has been proposed that sensory processing differences contribute to the social, cognitive, and repetitive behaviors and restricted interests associated with ASD (Baranek et al. 2006; Cascio et al. 2012; Mongillo et al. 2008; Mottron et al. 2006a; Schaaf et al. 2014), and even that failure to develop normal modulation and integration of sensory inputs is at the root of autism (i.e., ‘Sensory Integration Theory’ from Ayres 1979; Brock et al. 2002; Frith 1996; Happé 2005; Hermelin and O’Connor 1970; Hutt et al. 1964; Just et al. 2004b; Mottron et al. 2006b; Ornitz 1974; Ornitz et al. 1977).

The current investigation was designed to probe the clinical significance of EEG indices of auditory and visual sensory processing and integration. That is, how well do these neurophysiological responses predict the severity of core and associated symptoms of autism in a well-characterized sample of children and adolescents with ASD? We tested the hypothesis that the amplitudes of auditory and visual sensory ERPs (e.g., the auditory N1-complex and the visual P1), and of ERPs associated with multisensory integration (MSI), are systematically related to (a) autistic symptom severity and/or (b) visual/auditory sensitivities. Because behavioral measures were also available, we likewise tested the hypothesis that variance in reaction times to auditory, visual, and audiovisual stimuli, as well as in a psychophysical index of MSI, is systematically related to (a) autistic symptom severity and/or (b) visual/auditory sensitivities. Analyses were restricted to a purely clinical sample (see Brandwein et al. 2013 for a group-level comparison between individuals with ASD and individuals with typical development), leveraging EEG and behavioral data from the ASD participants in Brandwein et al. (2013), plus additional data gathered from individuals with ASD in the intervening time. As predictors, we used EEG indices of early auditory and visual sensory processing and sensory integration (Brandwein et al. 2011, 2013; Di Russo et al. 2002; Foxe and Simpson 2002; Molholm et al. 2002; Naatanen and Picton 1987). A measure of autism severity was derived from the Autism Diagnostic Observation Schedule (ADOS) (Lord et al. 1999), a semi-structured observation of the individual designed to measure both the quantity and quality of social-communication skills, as well as stereotyped behaviors and restricted interests. To assess the presence of atypical reactions to visual and auditory sensory stimulation, we relied on the Short Sensory Profile (SSP) (McIntosh et al. 1999a), a questionnaire on which parents/caregivers rate their child’s reactions, preferences, and tendencies when confronted with everyday sensory stimuli and situations.

Methods

Participants

Participants consisted of a purely clinical sample of fifty-two individuals with ASD (6–17 years, seven females). For the analysis of symptom severity, nine of these individuals were excluded because severity scores are not available for Module 4 of the ADOS (resulting in a total N = 43 for this analysis). For the analysis of visual/auditory sensitivities, six individuals were excluded due to missing SSP data (resulting in a total N = 46 for this analysis). The ADOS was administered and scored by a research-reliable psychologist or trainee, and the ASD diagnosis was confirmed by a developmental history and clinical judgment. Intellectual functioning was assessed using the Wechsler Abbreviated Scale of Intelligence (WASI, Wechsler 1999). Table 1 describes the intellectual makeup of the full sample. Supplemental Tables 1 and 2 characterize the sample separately for the analyses of autism symptom severity and visual/auditory sensitivities, respectively. Exclusion criteria included a history of seizures (non-febrile) or head trauma, a performance IQ (PIQ) estimate below 80, or a known genetic disorder. The sample was 69 % Caucasian, 12 % African American, 12 % Asian American, 4 % mixed race, 2 % Native American, and 2 % unspecified. Regarding maternal education, 11 % of mothers had a high school degree or less, 22 % had a bachelor’s or associate’s degree or some college education, and 19 % reported a graduate or professional degree. Audiometric threshold evaluation confirmed that all participants had normal hearing. Participants were formally screened for normal or corrected-to-normal vision using a Snellen eye chart. Informed written consent was obtained from each participant’s parent or legal guardian prior to entering the study. Verbal or written assent was obtained from each participant.
All procedures were approved by the Institutional Review Boards of the Albert Einstein College of Medicine, the City College and the Graduate Center of the City University of New York, and were in accord with the ethical standards laid out in the declaration of Helsinki. Participants were recruited through the Human Phenotyping Core (a facility of the Rose F. Kennedy Intellectual and Developmental Disabilities Research Center), referrals from clinicians (primarily at the Albert Einstein College of Medicine), advertising, and at community health fairs.

Procedure

Clinical assessments, including the ADOS, WASI, and Short Sensory Profile, were administered at the participant’s initial visit to the laboratory. On the following visit, participants performed a simple reaction time task while continuous EEG was recorded. On average, the two visits were 3 months apart. The parameters of the task and the ERP acquisition, processing, and analysis procedures are briefly described here, and in more detail in Brandwein et al. (2013).

Audiovisual Simple Reaction Time Task

Participants performed a simple reaction time task consisting of three stimulus conditions presented with equal probability. The ‘auditory-alone’ condition was a 1,000-Hz tone (75 dB SPL; 5-ms rise/fall time) presented from a single speaker for 60 ms. The ‘visual-alone’ condition was an image of a red disc with a diameter of 3.2 cm (subtending 1.5° at a viewing distance of 122 cm), which appeared on a black background for 60 ms. The ‘audiovisual’ condition consisted of the ‘auditory-alone’ and ‘visual-alone’ stimuli presented simultaneously. Auditory stimuli were presented from a Harman Multimedia JBL Duet speaker located centrally atop the computer monitor (a Dell Ultrasharp 1704FTP) on which the visual stimuli were presented. The three stimulus conditions were presented in random order with an inter-stimulus interval (ISI) that varied randomly between 1,000 and 3,000 ms. Stimuli were presented in blocks of 100 trials each, and participants completed between 9 and 11 blocks (the vast majority completing 10 blocks). Participants were instructed to press a button on a response pad as quickly as possible when they saw the circle, heard the tone, or saw the circle and heard the tone together. The same response key was used for all three stimulus types. Breaks were encouraged between blocks to help maintain concentration and reduce restlessness or fatigue.

Behavioral Indices

Mean reaction times (RTs) were computed for each of the three stimulus conditions so that we could assess how well they predicted the clinical measures of interest. Only trials with RTs falling within 2 standard deviations of an individual’s average RT were considered valid; thus, the range of accepted RTs was determined at the level of the individual participant. Given the large age range and the focus on a clinical population, considerable intersubject variability in RT was expected. Using this individually defined window (roughly the central 95 % of a normal RT distribution) rather than an absolute cutoff allowed us to more accurately capture the range of RTs for each participant, an important factor in calculating the race model (described below). Hit rates, defined as the percentage of trials on which a button press occurred within the individual’s specific RT range, were calculated for each participant, and planned comparisons assessed differences in hit rates across the three stimulus conditions. Planned comparisons between the RTs for each of the three conditions tested for the presence of the ‘redundant signal effect’ (RSE), which in this case would indicate behavioral facilitation (i.e., faster RTs) for the multisensory condition compared to each of the unisensory conditions. Our psychophysical index of MSI, however, was based on Miller’s race model (Miller 1982), a stringent and established behavioral metric of MSI (e.g., Barutchu et al. 2009; Hughes et al. 1994; Maravita et al. 2008; Molholm et al. 2002; Neil et al. 2006). The race model assumes that an RSE can occur because the multisensory stimulus provides two inputs (e.g., auditory and visual) that can each trigger a response, and the faster input wins. This in turn can lead to a faster mean RT to multisensory stimuli due to probability summation. Miller’s race model tests whether RT facilitation exceeds that predicted by probability summation. When the race model is violated (i.e., when RT facilitation is greater than that predicted by the race model), it can be assumed that multisensory RT facilitation is due to the interaction of the unisensory inputs during processing.
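The trimming rule and hit-rate definition above can be sketched as follows. This is a minimal illustration, not the authors’ code: the function names are hypothetical, and we assume the ±2 SD window is computed from all of a participant’s responses pooled across the three conditions (a per-condition window is an equally plausible reading of the text).

```python
from statistics import mean, stdev

def rt_window(rts, n_sd=2.0):
    """Valid-RT bounds (ms): one participant's mean RT +/- n_sd standard deviations."""
    m, s = mean(rts), stdev(rts)
    return m - n_sd * s, m + n_sd * s

def summarize_rts(trials, n_sd=2.0):
    """Per-condition hit rate and mean valid RT for one participant.

    trials: list of (condition, rt_ms), with rt_ms = None when no button was pressed.
    Returns {condition: (hit_rate, mean_valid_rt_ms)}.
    """
    # Assumption: the window is computed from all of the participant's responses,
    # pooled across the three conditions.
    lo, hi = rt_window([rt for _, rt in trials if rt is not None], n_sd)
    summary = {}
    for cond in sorted({c for c, _ in trials}):
        rts = [rt for c, rt in trials if c == cond]
        valid = [rt for rt in rts if rt is not None and lo <= rt <= hi]
        hit_rate = len(valid) / len(rts)  # misses and out-of-window presses both count against it
        summary[cond] = (hit_rate, mean(valid) if valid else float("nan"))
    return summary

# Made-up trials: the 2,000-ms press falls outside this participant's window.
trials = [("A", 300), ("A", 320), ("A", None), ("V", 350), ("V", 360),
          ("AV", 280), ("AV", 290), ("AV", 2000)]
print(summarize_rts(trials))
```

Because the window scales with each participant’s own variability, a slow but consistent responder and a fast but variable one each keep most of their typical responses.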

Miller’s race model (Miller 1982) is tested as follows. An upper limit is placed on the cumulative probability (CP) of a response at a given latency for redundant signals (i.e., the multisensory condition): for any latency, t, the race model holds when this CP value is less than or equal to the sum of the CPs from each of the single-target conditions (the unisensory stimuli). For each individual, the range of valid RTs was calculated for the three stimulus types (auditory-alone, visual-alone, and audiovisual) and divided into quantiles from the 5th to the 100th percentile in 5 % increments (5, 10, …, 95, 100 %). Violations were expected to occur at quantiles representing the shorter RTs, because this is when it was most likely that interactions of the visual and auditory inputs would result in the fulfillment of a response criterion before either source alone satisfied the same criterion (Miller 1982; Ulrich et al. 2007). At each quantile, a ‘Miller inequality’ value is calculated by subtracting the value predicted by the race model (the summed unisensory CPs) from the multisensory CP; positive values indicate the presence and amount of race model violation. It is important to note that failure to violate the race model is not evidence that the two information sources did not interact; rather, the race model places an upper boundary on the RT facilitation that can be accounted for by probability summation. In the current study, we used maximum race model violation as the behavioral measure of MSI, defined here as the largest ‘Miller inequality’ value across the first third of each individual’s RT distribution. To assess race model violation at the group level, the ‘Miller inequality’ values (from each participant, at each quantile considered) are submitted to a t test. The group is said to violate the race model at quantiles at which the t test is significant and the ‘Miller inequality’ value is positive.
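In symbols, the race model holds when $P(RT_{AV} \le t) \le P(RT_A \le t) + P(RT_V \le t)$ for every latency $t$. The per-participant computation can be sketched as below. This is a hedged illustration under stated assumptions, not the study’s implementation: the latencies are taken as nearest-rank percentiles of the participant’s pooled valid RTs, the “first third” is taken as the quantiles at or below the 33rd percentile (5–30 %), and all function names are hypothetical.

```python
import math
from bisect import bisect_right

def ecdf(sorted_rts, t):
    """Empirical cumulative probability of a response at or before latency t."""
    return bisect_right(sorted_rts, t) / len(sorted_rts)

def percentile(sorted_vals, q):
    """Nearest-rank percentile, q in (0, 100] (an assumed definition)."""
    k = max(0, math.ceil(q * len(sorted_vals) / 100) - 1)
    return sorted_vals[k]

def miller_inequality(rt_a, rt_v, rt_av, step=5):
    """[(q, t, CP_AV(t) - (CP_A(t) + CP_V(t)))] for q = step, 2*step, ..., 100."""
    a, v, av = sorted(rt_a), sorted(rt_v), sorted(rt_av)
    pooled = sorted(a + v + av)  # assumption: latencies come from the pooled valid RTs
    out = []
    for q in range(step, 101, step):
        t = percentile(pooled, q)
        bound = ecdf(a, t) + ecdf(v, t)  # race-model (probability-summation) bound
        out.append((q, t, ecdf(av, t) - bound))
    return out

def max_violation(rt_a, rt_v, rt_av, max_q=100 / 3):
    """Largest Miller inequality over the first third of the RT distribution."""
    return max(d for q, _, d in miller_inequality(rt_a, rt_v, rt_av) if q <= max_q)

# Made-up RTs (ms) in which every AV response beats every unisensory response.
print(max_violation([300, 310, 320, 330, 340],
                    [350, 360, 370, 380, 390],
                    [200, 210, 220, 230, 240]))
```

A positive return value marks a violation for that participant; at the group level, the per-quantile values would then go to the t test described above.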

Electrophysiological Indices

ERP Acquisition

Continuous EEG was recorded from 70 scalp electrodes at a digitization rate of 512 Hz using the BioSemi ActiveTwo™ electrode system, with an open pass-band from DC to 103 Hz. The EEG was referenced to a common mode sense (CMS) active electrode and a driven right leg (DRL) passive electrode (for a description of the BioSemi active electrode system referencing and grounding conventions, visit www.biosemi.com/faq/cms&drl.htm).

ERP Processing

MATLAB was used for offline processing and analyses. A low-pass filter of 45 Hz with a slope of 24 dB/octave and a high-pass filter of 1.6 Hz with a slope of 12 dB/octave were applied to each participant’s continuous EEG. To generate ERPs, the EEG was divided into 600-ms epochs (100 ms pre-stimulus to 500 ms post-stimulus onset), with the baseline defined as −50 to +10 ms relative to stimulus onset. To ensure that participants were attending to the stimuli, only trials on which the participant made a response (button press) within a specific time window were included in the analysis.
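The epoching and baseline step can be illustrated for a single channel at the reported 512-Hz rate. This is a sketch under assumptions, not the study’s MATLAB code: the function names are hypothetical, millisecond boundaries are rounded to the nearest sample, the baseline window is treated as half-open, and the 1.6–45 Hz filtering described above is omitted.

```python
FS = 512  # Hz, digitization rate reported in the text

def ms_to_samples(ms, fs=FS):
    """Convert a duration in ms to a whole number of samples (rounded)."""
    return round(ms * fs / 1000)

def epoch_and_baseline(signal, onset, pre_ms=100, post_ms=500, base=(-50, 10), fs=FS):
    """Cut a -pre_ms..+post_ms epoch around a stimulus onset (sample index) from one
    channel and subtract the mean of the baseline window (ms re: onset)."""
    start = onset - ms_to_samples(pre_ms, fs)
    stop = onset + ms_to_samples(post_ms, fs)
    if start < 0 or stop > len(signal):
        return None  # epoch would run past the edge of the recording
    epoch = signal[start:stop]
    b0 = ms_to_samples(pre_ms + base[0], fs)  # index of -50 ms within the epoch
    b1 = ms_to_samples(pre_ms + base[1], fs)  # index of +10 ms within the epoch
    offset = sum(epoch[b0:b1]) / (b1 - b0)
    return [x - offset for x in epoch]

# A constant DC offset is removed entirely by the baseline correction.
ep = epoch_and_baseline([5.0] * 2000, onset=1000)
print(len(ep), max(abs(x) for x in ep))
```

At 512 Hz the 600-ms window spans about 307 samples, so the exact sample boundaries depend on how the millisecond limits are rounded.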

Artifact Rejection

Electrode channels with amplitudes exceeding ±120 μV during the epoch surrounding stimulus presentation were considered to contain excessive artifact, such as electromuscular activity or large eye movements, and were interpolated on a trial-by-trial basis using nearest-neighbor spline interpolation (Perrin et al. 1987, 1989). Channels with a standard deviation of less than 0.5 μV across a block were interpolated on a block-by-block basis. Finally, trials with more than four bad channels were rejected (i.e., no more than four channels were interpolated on any given trial). For a given condition, a minimum of 180 trials (with an average of about 250 trials) were included in each participant’s average. Epochs were sorted according to stimulus condition and averaged for each participant. The resulting auditory, visual, and audiovisual ERPs were re-referenced to the average of all electrodes. For each participant, a “sum” waveform was created by summing the auditory and visual ERPs (from the unisensory conditions), the purpose of which is described in the following section.
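The rejection rules, averaging, average re-referencing, and sum waveform can be sketched with plain lists, one list of samples per channel. This is a minimal illustration: all names are hypothetical, and the spline interpolation of flagged channels (Perrin et al. 1987, 1989) is deliberately not implemented; the sketch only decides which channels would need it and whether a trial is kept.

```python
from statistics import pstdev

def bad_channels(trial, threshold_uv=120.0):
    """Channels whose absolute amplitude exceeds threshold_uv anywhere in one epoch.
    (These would be spline-interpolated; interpolation is not implemented here.)"""
    return [ch for ch, x in trial.items() if max(abs(v) for v in x) > threshold_uv]

def flat_channels(block, min_sd_uv=0.5):
    """Channels whose SD across a whole block of data is below min_sd_uv."""
    return [ch for ch, x in block.items() if pstdev(x) < min_sd_uv]

def keep_trial(trial, max_bad=4):
    """Keep a trial only if at most max_bad channels would need interpolation."""
    return len(bad_channels(trial)) <= max_bad

def average(epochs):
    """Point-by-point mean of equal-length epochs."""
    return [sum(s) / len(epochs) for s in zip(*epochs)]

def average_reference(erp):
    """Re-reference {channel: waveform} to the mean of all channels at each sample."""
    chans = list(erp)
    n = len(erp[chans[0]])
    ref = [sum(erp[ch][i] for ch in chans) / len(chans) for i in range(n)]
    return {ch: [v - r for v, r in zip(erp[ch], ref)] for ch in chans}

def sum_waveform(erp_a, erp_v):
    """'Sum' waveform (A + V) to be compared with the audiovisual ERP."""
    return [a + v for a, v in zip(erp_a, erp_v)]
```

Keeping the rules as separate predicates mirrors the order described in the text: per-trial amplitude checks, per-block flat-channel checks, then the four-channel ceiling.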

ERP Analysis and Measures

EEG indices of early auditory and visual processing were based on the peak amplitudes of the auditory P1, N1a, N1b, and N1c and of the visual P1 and N1. The grand-averaged ERPs across the full dataset for each stimulus condition (auditory or visual) were used to identify the latency window and electrodes where each sensory evoked potential was largest (see Table 4). The largest amplitude value within each of these windows, at these electrodes, was then identified automatically. Multisensory interactions were measured by comparing the sum of the responses to the auditory and visual unisensory conditions (the sum waveform) to the response to the multisensory audiovisual (AV) condition. This well-established and commonly used approach to measuring MSI (e.g., Brandwein et al. 2011; Foxe et al. 2000; Giard and Peronnet 1999; Molholm et al. 2002; Murray et al. 2005; Russo et al. 2010; Teder-Salejarvi et al. 2002) is based on the principle of superposition of electrical fields: if the auditory and visual inputs are processed independently, the response to the AV condition should equal the sum of the two unisensory responses, whereas nonlinear summation signals an interaction. Accordingly, any significant divergence between the sum and multisensory waveforms indicates that the auditory and visual inputs were processed differently when presented simultaneously versus in isolation; i.e., that they interacted. EEG indices of MSI were based on the peak amplitudes of the MSI waveform (the difference between the sum and multisensory responses) between 100 and 120 ms over fronto-central scalp, 100–130 ms over parietal scalp, and 180–210 ms over parieto-occipital scalp. These latencies and regions were defined by where and when ASD participants showed MSI in our earlier study (Brandwein et al. 2013).
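The MSI measure can be sketched as a difference wave (sum minus AV) followed by a peak search inside a fixed latency window. This is an illustration under assumptions: the names are hypothetical, the electrode composite is assumed to be a simple mean of the chosen electrodes (Table 4 lists which ones), and whether the most positive or most negative value counts as the “peak” is left as a parameter because it depends on the component.

```python
FS = 512               # Hz, digitization rate from the text
EPOCH_START_MS = -100  # epochs begin 100 ms before stimulus onset

def ms_to_index(ms, fs=FS, start_ms=EPOCH_START_MS):
    """Sample index within an epoch for a latency in ms re: stimulus onset."""
    return round((ms - start_ms) * fs / 1000)

def composite(waves):
    """Assumed composite signal: mean of the waveforms from the chosen electrodes."""
    return [sum(s) / len(waves) for s in zip(*waves)]

def msi_wave(sum_wave, av_wave):
    """MSI difference wave: sum (A + V) minus the multisensory (AV) response."""
    return [s - m for s, m in zip(sum_wave, av_wave)]

def peak_in_window(wave, t0_ms, t1_ms, polarity=1):
    """Most positive (polarity=1) or most negative (polarity=-1) value in [t0_ms, t1_ms]."""
    segment = wave[ms_to_index(t0_ms):ms_to_index(t1_ms) + 1]
    return max(segment, key=lambda v: polarity * v)

# Usage with made-up data: the AV response dips below A + V at 110 ms only.
n = ms_to_index(500)
sum_w, av_w = [0.0] * n, [0.0] * n
av_w[ms_to_index(110)] = -3.0
print(peak_in_window(msi_wave(sum_w, av_w), 100, 130))  # parietal MSI window
```

Fixing the windows in advance (here, from the earlier study) avoids selecting latencies on the same data used to test the clinical associations.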

Clinical Indices

Autism Symptom Severity

Severity scores were derived from ADOS raw total scores using the conversion table from Gotham et al. (2009). Severity scores are on a 1–10 point scale with higher numbers representing increased severity of autistic symptoms. A score under 4 is associated with a non-spectrum classification (Gotham et al. 2009). In this dataset severity scores ranged from 5 to 10. The distribution of scores within the sample is presented in Table 2.

Visual and Auditory Sensitivities

Visual/auditory sensitivity (VAS) scores were computed by mapping each participant’s classification on the Visual/Auditory Sensitivity section of the SSP onto an ordinal 0–2 point scale such that 0 = ‘typical development’, 1 = ‘probable difference’, and 2 = ‘definite difference’. The distribution of VAS scores within the sample is presented in Table 2.

Statistical Analyses

Consideration of Participant Characteristics

Because certain demographic variables (e.g., maternal education, VIQ) have been shown, albeit inconsistently, to correlate with the expression and severity of ASD (Gotham et al. 2009; Howlin et al. 2004; Sell et al. 2012; van Eeghen et al. 2013a, b) and of sensory sensitivities (Ben-Sasson et al. 2009; Engel-Yeger et al. 2011; Gouze et al. 2009), participant characteristics (age, verbal IQ, performance IQ, sex, maternal education, and race) were evaluated as potential covariates. An initial correlation analysis assessed whether there were any significant relationships between the demographic characteristics of participants and the two clinical outcome measures. Participant characteristics found to correlate with ADOS severity scores or with VAS scores from the SSP were controlled for by entering them in Step 1 of a hierarchical regression. The preliminary correlation analysis showed that none of the participant characteristics considered were significantly related to ADOS severity scores. Verbal IQ (VIQ) was significantly related to VAS [r(46) = −.414, p < .01], such that a lower VIQ was associated with higher levels of visual and auditory sensitivities. To control for the potential effect of VIQ on VAS scores, VIQ was entered in Step 1 of the hierarchical regressions.

Predicting Autism Severity

Two multiple linear regression analyses were conducted to assess the extent to which (1) neurophysiological measures (the auditory P1, N1a, N1b, and N1c; the visual P1 and N1; and the three multisensory responses) and (2) behavioral measures (RTs for the three conditions and maximum race model violation) predict autism symptom severity as measured by ADOS severity scores. In the first regression, the nine ERP measures of auditory and visual processing and MSI were entered simultaneously as independent variables, with severity scores as the dependent variable. In the second, the four behavioral measures were entered simultaneously as independent variables, again with ADOS severity scores as the dependent variable. The R² associated with the linear combination of the independent variables was used to evaluate the extent to which neurophysiological and behavioral measures of auditory and visual sensory processing and integration were associated with autistic symptom severity. The importance of individual ERP components and behavioral response patterns was evaluated by examining their relative contributions to the variance in autism symptom severity scores.
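How R² and its overall F test arise from an ordinary least-squares fit can be sketched without external libraries. This is a minimal illustration, not the authors’ statistics package: the function names are hypothetical, the normal equations are solved by Gauss–Jordan elimination with no safeguard against collinear predictors, and the overall test uses $F = \frac{R^2/k}{(1-R^2)/(n-k-1)}$ with $k$ predictors and $n$ participants. Plugging in $R^2 = .444$, $n = 43$, and $k = 9$ gives $F \approx 2.93$, in line with the F(9, 33) = 2.928 reported in the Results.

```python
def solve(a, b):
    """Solve a x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [bi] for row, bi in zip(a, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        p = m[col][col]
        m[col] = [x / p for x in m[col]]
        for r in range(n):
            if r != col:
                f = m[r][col]
                m[r] = [x - f * y for x, y in zip(m[r], m[col])]
    return [row[-1] for row in m]

def ols_r2(X, y):
    """R^2 of y regressed on the columns of X (an intercept column is added)."""
    rows = [[1.0] + list(x) for x in X]
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(k)]
    beta = solve(xtx, xty)
    fitted = [sum(b * xi for b, xi in zip(beta, r)) for r in rows]
    ybar = sum(y) / len(y)
    ss_res = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
    ss_tot = sum((yi - ybar) ** 2 for yi in y)
    return 1.0 - ss_res / ss_tot

def overall_f(r2, n, k):
    """F statistic, df = (k, n - k - 1), for H0: all k slopes are zero."""
    return (r2 / k) / ((1.0 - r2) / (n - k - 1))

print(round(overall_f(0.444, 43, 9), 3))
```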

Predicting Visual and Auditory Sensitivities

Two hierarchical regression analyses were performed to assess the extent to which (1) neurophysiological measures (the auditory P1, N1a, N1b, and N1c; the visual P1 and N1; and the three multisensory responses) and (2) behavioral measures (RTs for the three conditions and maximum race model violation) are associated with VAS scores on the SSP, above and beyond the variance accounted for by VIQ. In both regression analyses, VIQ was entered in Step 1, as the preliminary analysis indicated that increased visual/auditory sensitivities are correlated with a lower VIQ. The nine ERP measures were entered in Step 2 of the regression examining neurophysiological predictors, and the four behavioral measures were entered in Step 2 of the regression examining behavioral predictors. The change in R² resulting from the addition of the ERP or behavioral variables was used to evaluate the extent to which these experimental indices are associated with VAS once VIQ is controlled for. The importance of individual ERP components and of reaction time patterns was evaluated by examining their relative contributions to the variance in VAS.
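The “change in R²” criterion corresponds to the standard incremental F test, $F_{\Delta} = \frac{\Delta R^2 / k_{added}}{(1 - R^2_{full}) / (n - k_{full} - 1)}$, with $(k_{added},\ n - k_{full} - 1)$ degrees of freedom. A minimal sketch follows; the function name is hypothetical and the numbers in the usage line are made up, not values from this study. In the design above, Step 1 holds VIQ alone, so $k_{full}$ would be 10 for the ERP model (VIQ plus nine ERP measures) and 5 for the behavioral model.

```python
def f_change(r2_reduced, r2_full, n, k_full, k_added):
    """Incremental F for the R^2 gained when k_added predictors join a model.

    Returns (F, df_num, df_den), with df = (k_added, n - k_full - 1).
    """
    df_den = n - k_full - 1
    num = (r2_full - r2_reduced) / k_added
    den = (1.0 - r2_full) / df_den
    return num / den, k_added, df_den

# Hypothetical example: Step 1 R^2 = .20, Step 2 adds 4 predictors, final R^2 = .30, n = 46.
print(f_change(0.20, 0.30, n=46, k_full=5, k_added=4))
```

The test asks whether the added block improves fit relative to the residual variance left by the full model, which is why the denominator uses $R^2_{full}$.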

Results

Behavioral Findings

Mean hit rates and RTs for the full dataset are presented in Table 3. As expected based on Brandwein et al. (2013), mean hit rates were highest for the AV condition and lowest for the visual-alone condition (auditory vs. visual: t(51) = 4.428, p < .01; auditory vs. AV: t(51) = 2.579, p < .05; visual vs. AV: t(51) = 6.008, p < .01). As a group, mean RTs were fastest to the AV stimuli and slowest to the visual-alone stimuli (auditory vs. visual: t(51) = 3.605, p < .01; auditory vs. AV: t(51) = 13.978, p < .01; visual vs. AV: t(51) = 15.045, p < .01). Also as expected, at the group level the race model was not significantly violated.
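The 51 degrees of freedom reported for these comparisons match within-subject (paired) tests across the 52 participants, since a paired test has df = n − 1. Assuming that design, a minimal sketch of the paired t statistic is shown below; the function name and the data in the usage line are hypothetical.

```python
from math import sqrt
from statistics import mean, stdev

def paired_t(x, y):
    """Paired t statistic and df for per-participant values x[i] vs y[i]."""
    d = [a - b for a, b in zip(x, y)]
    se = stdev(d) / sqrt(len(d))  # standard error of the mean difference
    return mean(d) / se, len(d) - 1

# Hypothetical per-participant mean RTs (ms): visual-alone vs audiovisual.
print(paired_t([420, 455, 390, 510], [380, 410, 370, 450]))
```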

Results of Regression Analyses

As described in the Methods, we entered the mean RTs for the three stimulus conditions and maximum race model violation (i.e., the largest ‘Miller inequality’ value) into each of the regression models as independent variables. The linear combination of these four behavioral variables did not predict ADOS severity scores [F(4, 38) = .326, p > .05] or VAS scores [after controlling for the effects of VIQ, R² change = .197, F(5, 40) = 4.674, p > .05].

Electrophysiological Findings

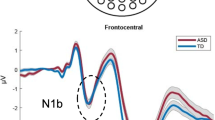

Clear auditory and visual responses were readily observable (see Fig. 2 and Supplemental Figure 1 for ERPs representing the composite signal from the electrodes used in the analyses). The auditory evoked potential was characterized by the typical P1–N1 complex, with a fronto-centrally focused positivity (P1) around 75 ms followed by a negativity (N1b) around 115 ms. Over lateral scalp regions, the auditory evoked potential included negativities that peaked around 75 ms (N1a) and 180 ms (N1c). The visual evoked potential was characterized by a large positivity (P1) over occipital areas peaking at about 150 ms, and a large bilateral negativity (N1) over lateral occipital areas that peaked around 225 ms. AV interactions, indicated by divergence between the multisensory (AV) and sum (A + V) waveforms, were observed over fronto-central and parietal scalp between 100 and 150 ms, and bilaterally over parieto-occipital scalp between 180 and 210 ms. See Table 4 for a breakdown of the electrodes and latency windows used in the analyses.

Results of Regression Analyses

As described in the Methods, we entered the peak amplitudes of the nine ERP measures (auditory P1, N1a, N1b, and N1c; visual P1 and N1; and the three multisensory responses) into each of the regression models as independent variables. The linear combination of the nine ERP measures was significantly related to ADOS severity scores, F(9, 33) = 2.928, p = .011. Approximately 44 % (R² = .444) of the variance in autistic symptom severity in the sample can be accounted for by the linear combination of ERP measures (Fig. 1). Table 5 presents the relative strength of the individual predictors. The auditory N1a and N1b were the strongest unisensory ERP predictors of autism symptom severity. The negative correlation between the N1a and severity scores suggested that a smaller N1a (i.e., a more positive amplitude value) was associated with less severe symptoms of autism (lower severity scores). The positive correlation between the N1b and severity scores indicated that a larger N1b (i.e., a more negative amplitude value) was associated with less severe symptoms of autism (lower severity scores). MSI between 100 and 130 ms over parietal scalp also contributed significantly to the variance in autism symptom severity, with larger MSI effects (the difference between the sum and multisensory responses) associated with less severe symptoms of autism. In contrast, the linear combination of the nine ERP measures did not account for a significant proportion of the variance in VAS scores after controlling for the effects of VIQ (R² change = .151, F(10, 35) = 1.675, p > .05).

Fig. 1 Scatterplot displaying the relationship between autism symptom severity (y-axis) and the linear combination of nine ERP peaks that measure auditory, visual, and audiovisual processing (x-axis). Severity scores are derived from the ADOS and range from 1 to 10, with higher scores indicating increased symptom severity. Each point represents a single participant. The p value associated with the R² of .444 is .012.

Schematic Representation of ERP Effects

For descriptive purposes only, we performed a median split of the ERP data as a function of the participant’s autism severity score. This allowed us to visualize the above effects in terms of the ERP response, albeit in summary form. Waveforms were generated to illustrate the three ERP effects significantly related to autism symptom severity: the auditory N1a, the auditory N1b, and the parietally focused MSI peak (between 100 and 130 ms). The split yielded an ‘ASD-moderate’ group with severity scores between 5 and 7 (N = 22) and an ‘ASD-severe’ group with severity scores between 8 and 10 (N = 21). The two groups did not differ significantly in age, estimated PIQ, or VIQ. Further, to visualize how the waveforms of the ‘ASD-moderate’ and ‘ASD-severe’ groups compared to those of typically developing (TD) children, we generated mean ERPs from a group of age- and PIQ-matched TD children (taken from a database of TD children run on the exact same paradigm and procedures). Waveforms for these three groups (Fig. 2a) show that the peak amplitude of the auditory N1a in the ‘ASD-moderate’ group falls midway between those of the ‘ASD-severe’ and TD groups. The auditory N1b component (Fig. 2b) in the ‘ASD-moderate’ group is very similar to, and in fact overlaps with, that of the TD group, whereas it is strikingly smaller in the ‘ASD-severe’ group. The parietally focused MSI peak between 100 and 130 ms is largest in the TD group and smallest in the ‘ASD-severe’ group, with the peak amplitude of the ‘ASD-moderate’ group falling midway between those of the severely autistic and TD children (Fig. 2c).

Fig. 2 Mean ERPs for the ASD-severe, ASD-moderate, and typically developing groups. a, b The three groups’ responses to the auditory-alone condition, with dashed ellipses indicating the components of interest (the auditory N1a and N1b). c A measure of audiovisual integration, represented by a difference wave (explained in the text) over parietal scalp, with a dashed ellipse indicating the response window of interest. Traces represent the composite signal from adjacent electrodes, the locations of which are indicated on the head models.

Discussion

This study revealed a significant relationship between neural indices of early auditory and visual processing and the severity of autistic symptoms, in a group of children and adolescents with ASD. A particularly robust relationship was observed between severity of autism and basic auditory processing and audiovisual integration. In contrast, our EEG indices and reaction time data did not predict visual/auditory sensitivities, as assessed by parent responses on the SSP.

A Role for Impaired Auditory Processing in the Severity of Autism

The strongest neurophysiological predictors of autistic symptom severity were the auditory N1a and auditory N1b. The N1 response reflects early sensory processing, and is associated with neural activity largely focused in auditory cortices in the temporal lobe (Naatanen and Picton 1987; Ruhnau et al. 2011; Scherg et al. 1989). Interestingly, post mortem studies reveal that typical neural patterning during development in these very same regions is disrupted in ASD (Casanova et al. 2002; Stoner et al. 2014). Further, there is considerable evidence from converging methods for impaired auditory processing in ASD (Boddaert et al. 2004; Bruneau et al. 1999; Courchesne et al. 1985; Ferri et al. 2003; Martineau et al. 1984; Oades et al. 1988; Roberts et al. 2010; Samson et al. 2011). Previous findings on the auditory N1 response in ASD, however, have been highly variable. For example while Bruneau et al. (1999) found smaller N1b amplitude in 4–8 year old children with ASD, Oades et al. (1988) found that the N1b was larger and had a shorter latency in a sample of 5–17 year olds with ASD. Other groups, in contrast, report the absence of significant N1b amplitude differences in children with ASD (Dunn et al. 2008; Ferri et al. 2003; Lincoln et al. 1995; Martineau et al. 1984). Such inconsistencies in the literature are undoubtedly due in part to differences in participant characteristics (e.g., age, cognitive level of functioning, language function) and experimental parameters (e.g., inter-stimulus interval, and active vs. passive tasks; see Dunn et al. 2008) across studies. The current findings make clear, however, that symptom severity is another key factor accounting for variance in basic auditory processing in ASD. It is reasonable to assume that this reflects a relationship between neuropathology in auditory cortices and the degree of autistic symptoms. 
We would also surmise that the magnitude of neuropathology in auditory cortices is indicative of neuropathology in other affected brain areas. To the extent that this is the case, associations between auditory responses (as indexed by neurophysiological recordings) and autism severity do not necessarily mean that auditory cortex is the only region involved. Nevertheless, it is worthwhile and valid to consider the possible role of auditory dysfunction in autism.

The auditory N1 typically shows developmental changes over childhood (Ceponiene et al. 2002; Gomes et al. 2001b; Ponton et al. 2000; Tonnquist-Uhlen et al. 2003). In young children (under ~9 years of age), the N1a is most prominent at lateral electrode sites, whereas response amplitude at these sites diminishes with increasing age (Gomes et al. 2001b; Tonnquist-Uhlen et al. 2003). The fronto-centrally focused N1 (N1b), a prominent early negative-going response in adults, is small or, under some circumstances, undetectable in young children (Ceponiene et al. 2002; Gomes et al. 2001b; Ponton et al. 2000), and reaches adult-like levels by about 15–16 years of age (Mahajan and McArthur 2012; Pang and Taylor 2000; Ponton et al. 2000). In contrast, the amplitude of the lateral N1 (a and c) decreases with increasing age (Gomes et al. 2001a; Tonnquist-Uhlen et al. 2003). In light of these documented changes in N1 morphology, the current findings, that more severe autism is associated with a larger lateral N1 (i.e., N1a) and a smaller fronto-central N1 (i.e., N1b), are consistent with immature responses to auditory stimuli during early stages of cortical processing. It is possible that differences in the microstructure of neural patterning, as observed in post-mortem morphological studies (Casanova et al. 2002; Stoner et al. 2014), would lead to such immature auditory responses. Importantly, age is unlikely to account for the current findings. Autism severity, as measured by the ADOS, is derived so as to be relatively independent of age, and in our preliminary processing of the data, autism severity and age did not correlate. Further, when the ASD participants were divided into two equal groups according to whether they fell into the “moderate autism” or “severe autism” category, there was no significant difference in age between the groups (mean ages of 10.8 and 10.4 years, respectively).
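The two age checks described above can be sketched as follows. The sketch assumes per-participant arrays of age and severity score; a median split on severity stands in for the “moderate” versus “severe” grouping, and a permutation test is used for the group difference in mean age because the specific test used in the study is not stated in this section. All function names and the simulated data are hypothetical.

```python
import numpy as np


def age_severity_checks(age, severity, n_perm=10_000, seed=0):
    """Return (pearson_r, mean_age_low, mean_age_high, perm_p).

    The sample is median-split on severity (hypothetical stand-in for the
    'moderate' vs 'severe' grouping) and the difference in mean age between
    halves is tested with a two-sided permutation test.
    """
    age = np.asarray(age, dtype=float)
    severity = np.asarray(severity, dtype=float)
    r = np.corrcoef(age, severity)[0, 1]

    # Rank by severity; ties are broken by input order (stable sort).
    order = np.argsort(severity, kind="stable")
    half = age.size // 2
    low, high = order[:half], order[half:]
    observed = age[high].mean() - age[low].mean()

    # Null distribution: randomly reassign ages to groups of the same sizes.
    rng = np.random.default_rng(seed)
    count = 0
    for _ in range(n_perm):
        shuffled = rng.permutation(age)
        diff = shuffled[half:].mean() - shuffled[:half].mean()
        if abs(diff) >= abs(observed):
            count += 1
    # Add-one correction keeps the p-value strictly positive.
    perm_p = (count + 1) / (n_perm + 1)
    return r, age[low].mean(), age[high].mean(), perm_p


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    ages = rng.uniform(6, 17, size=30)       # hypothetical ages in years
    scores = rng.integers(4, 11, size=30)    # hypothetical integer severity scores
    r, m_low, m_high, p = age_severity_checks(ages, scores)
    print(f"r = {r:.2f}; mean ages {m_low:.1f} vs {m_high:.1f}; p = {p:.3f}")
```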

We also examined the relationship between indices of early visual processing (visual P1 and N1 response amplitudes) and both autism symptom severity and SSP scores. In contrast to the auditory N1, these neurophysiological metrics of visual processing did not have significant predictive value for our outcome measures. A number of studies report differences in early visual processing in individuals with ASD compared to age- and IQ-matched healthy controls (Brandwein et al. 2013; Frey et al. 2013; McPartland et al. 2011; Vlamings et al. 2010). As such, we cannot rule out that neurophysiological responses to different visual stimuli, or to stimuli presented at more peripheral locations (Frey et al. 2013), would reveal such relationships. Indeed, we expect that such relationships exist. It is further possible that different and/or more specific clinical variables would bear a stronger relationship to the neurophysiological measures of visual processing that we investigated here. Alternatively, visual processing deficits may be less variable across the spectrum and therefore hold little predictive value with regard to clinical symptoms in ASD.

A Role for Impaired Multisensory Processing in the Severity of Autism

An additional neurophysiological predictor of autistic symptom severity was an early MSI response over parietal scalp (in the 100–130 ms post-stimulus time window). Although the functional role of this MSI response remains to be unraveled, the fact that it is smaller in individuals with more severe autism supports the thesis that deficits in MSI are associated with the core symptoms of autism (Brandwein et al. 2013; Foss-Feig et al. 2010; Foxe et al. 2013; Stevenson et al. 2014b; Woynaroski et al. 2013). Neuroimaging studies indicate that brain connectivity is abnormal in ASD (Courchesne and Pierce 2005; Just et al. 2004a; Muller et al. 2011; Supekar et al. 2013), and it has been speculated that this has implications for the integrity of MSI. There is now substantial evidence for impaired multisensory processing in autism from both behavioral (Brandwein et al. 2013; Collignon et al. 2013; Foss-Feig et al. 2010; Foxe et al. 2013; Kwakye et al. 2011; Stevenson et al. 2014a, b) and EEG (Brandwein et al. 2013; Magnee et al. 2011; Murphy et al. 2014; Russo et al. 2010) studies. It is reasonable to assume that suboptimal integration of multisensory inputs early in development would have cascading effects on the development of both language and social skills. For example, in typical development, early language learning involves combining incoming visual (lip movements) and auditory (speech sounds) information (Kuhl and Meltzoff 1982; Teinonen et al. 2008). We and others have shown that the ability to benefit from such multisensory inputs during speech perception is significantly impaired in autism (e.g., Foxe et al. 2013; Stevenson et al. 2014a). Regarding the development of non-linguistic social skills, emotion and speaker intention are communicated through multisensory signals such as facial expressions and changes in the prosody of the speech signal (Ethofer et al. 2006).
As such, we would expect impaired integration also to have implications for the development of social communication. It is also possible that individuals with ASD rely more heavily than typically developing individuals on redundant sensory inputs to learn social cues, perhaps because of poorer attunement to social cues. In this case, a reduced ability to benefit from multisensory inputs may compound existing deficits, exacerbating the severity of symptoms seen in ASD. In addition, we and others have proposed that the integration of multisensory inputs is essential to the orderly grouping of information that enters through the separate sensory systems (e.g., Molholm et al. 2004; Stein and Meredith 1990). Accordingly, deficits in MSI may lead to experiences of a disorganized sensory environment and ‘sensory overload’, which in turn may lead to withdrawal and defensive sensory behaviors.
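The MSI response is not defined in this section. Assuming the additive-model criterion common in this literature (e.g., Giard and Peronnet 1999; Molholm et al. 2002), in which the response to the audiovisual stimulus is compared with the sum of the unisensory auditory and visual responses, a per-participant amplitude for the 100–130 ms window could be computed roughly as below. The channel selection, sampling rate, function name and simulated data are hypothetical.

```python
import numpy as np


def msi_window_amplitude(erp_av, erp_a, erp_v, times_ms, window=(100.0, 130.0)):
    """Mean amplitude of the additive-model difference AV - (A + V).

    erp_*: (n_channels, n_times) trial-averaged ERPs for one participant,
        restricted to the channels of interest (e.g. a hypothetical
        parietal cluster).
    times_ms: (n_times,) sample times relative to stimulus onset, in ms.
    Returns the difference averaged over channels and over all samples
    falling inside the inclusive window.
    """
    diff = erp_av - (erp_a + erp_v)  # > 0 superadditive, < 0 subadditive
    mask = (times_ms >= window[0]) & (times_ms <= window[1])
    if not mask.any():
        raise ValueError("window contains no samples")
    return float(diff[:, mask].mean())


if __name__ == "__main__":
    times = np.arange(-100.0, 400.0, 2.0)  # hypothetical 500 Hz sampling
    rng = np.random.default_rng(2)
    shape = (5, times.size)                 # 5 hypothetical parietal channels
    erp_a, erp_v = rng.normal(size=shape), rng.normal(size=shape)
    erp_av = erp_a + erp_v + rng.normal(scale=0.2, size=shape)
    print(round(msi_window_amplitude(erp_av, erp_a, erp_v, times), 3))
```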

Neurophysiological Measures of Sensory Processing and Their Relationship to Reported Visual/Auditory Sensitivities

Whereas our neurophysiological indicators of auditory processing and audiovisual integration were good predictors of autism severity, these metrics failed to show a systematic relationship with participants’ auditory and visual sensitivities, as rated by their parents on the SSP. It is tempting to interpret this finding as evidence that no neurophysiological relationship with auditory and visual sensitivities exists in ASD. However, such an interpretation is premature for a number of reasons. First, we considered only relatively early-latency sensory processing, whereas sensory processing in later time frames might be more relevant to reported sensory sensitivities. Second, we must consider the limitations of using an indirect, parent-report measure to quantify visual/auditory sensitivities. The SSP was chosen as an outcome measure because it is currently the most commonly used scale of sensory processing in research. Nevertheless, it is far from ideal. Like most parent-report measures, the SSP is problematic because parents can be strongly influenced by the symptoms they believe to be related to their child’s disorder (Dahlgren and Gillberg 1989), as well as by their own personal experiences with sensory stimuli. The construct validity of the SSP rests on the finding that children who scored lower on the SSP (indicating more abnormal behaviors) had more abnormal physiological responses (as measured by electrodermal responses) to repeated sensory stimulation (McIntosh et al. 1999a, b). However, the sample size was small and the relationship between SSP scores and electrodermal responses was non-specific (i.e., scores did not differentiate between hyper- and hypo-responsive electrodermal responses).
In addition, the psychometric properties of the SSP are based on a small sample (N = 117) spanning a large age range (3–17 years), and the normative data are not age- or IQ-specific, which would seem particularly important given the known influences of age and cognitive level on sensory responses and behaviors (Crane et al. 2009; Kern et al. 2006). A final issue is that the VAS section of the SSP focuses on over-responsivity to visual stimuli and sounds (sample question: “holds hands over ears to protect ears from sound”). It may be that our experimental variables capture a different aspect of auditory and visual processing abnormalities, one not addressed by the questions in the SSP.

While the shortcomings of the SSP limit the conclusions that can be drawn from the current dataset, these issues highlight the need for an improved measure of sensory symptoms. The Sensory Integration and Praxis Tests (SIPT; Ayres 1989) are considered the gold-standard tool for assessing sensory integration and praxis (Schaaf et al. 2014). This well-standardized, reliable and valid battery has been used in children with ASD (Schaaf et al. 2014) and is valuable in that it involves direct observation of the child by a trained professional. However, it is not optimal for the current study, as it does not include assessments of auditory and visual symptoms, the focus of this investigation. One promising tool for characterizing sensory symptoms is the SensOR Assessment, an examiner-administered performance evaluation that measures sensory over-responsivity across seven domains, including auditory and visual (Schoen et al. 2008). Unfortunately, the SensOR is still in development and is not yet standardized. Establishing a valid measure of sensory symptoms is important not only for research purposes but also for clinical use, especially given the inclusion of sensory symptoms in the DSM-5 criteria for ASD.

Finally, we note that in both regression analyses, neither RTs to unisensory stimulation nor RT facilitation, as assessed by race model violation, predicted clinical symptomatology. This may indicate that these early-latency ERPs are more proximal to the underlying neurobiology of ASD than are RT data.
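Race model violation refers to Miller's (1982) inequality: if responses to audiovisual stimuli were produced by a race between independent auditory and visual processes, the cumulative distribution of audiovisual RTs could not exceed the sum of the unisensory cumulative distributions, F_AV(t) ≤ F_A(t) + F_V(t). The following minimal sketch evaluates that bound on a time grid from empirical distributions. It is a generic illustration with hypothetical function names and simulated RTs, not the study's procedure; published analyses often compare the distributions at fixed percentiles instead.

```python
import numpy as np


def ecdf(rts, t):
    """Empirical P(RT <= t) evaluated at each time point in t."""
    rts = np.sort(np.asarray(rts, dtype=float))
    return np.searchsorted(rts, t, side="right") / rts.size


def race_model_violation(rt_a, rt_v, rt_av, n_points=100):
    """Evaluate Miller's race model inequality on an evenly spaced time grid.

    Returns (grid, violation) with
        violation = F_AV(t) - min(1, F_A(t) + F_V(t)).
    Positive values mean audiovisual RTs are faster than a race between
    independent unisensory processes allows.
    """
    all_rts = np.concatenate([rt_a, rt_v, rt_av]).astype(float)
    grid = np.linspace(all_rts.min(), all_rts.max(), n_points)
    # The summed unisensory CDFs are capped at 1, since a CDF cannot exceed 1.
    bound = np.minimum(1.0, ecdf(rt_a, grid) + ecdf(rt_v, grid))
    return grid, ecdf(rt_av, grid) - bound


if __name__ == "__main__":
    rng = np.random.default_rng(3)
    rt_a = rng.normal(420, 60, 200)   # hypothetical auditory RTs (ms)
    rt_v = rng.normal(440, 60, 200)   # hypothetical visual RTs (ms)
    rt_av = rng.normal(360, 50, 200)  # hypothetical audiovisual RTs (ms)
    grid, viol = race_model_violation(rt_a, rt_v, rt_av)
    print("max violation:", round(float(viol.max()), 3))
```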

Conclusions

In conclusion, the current investigation reveals a relationship between neurophysiological indices of basic sensory processing and clinical measures of autism symptom severity. Clinical diagnosis is currently made on the basis of behavioral characteristics and symptoms whose assessment can be highly subjective and often requires a tremendous amount of clinical expertise. Biomarkers (whether genetic, neuroanatomical, or, as in this case, neurophysiological), on the other hand, can be measured objectively and systematically. Biomarkers may prove invaluable in sub-grouping this highly heterogeneous disorder and in developing targeted, individualized interventions tailored for maximum efficacy to the individual’s specific strengths and weaknesses. While much ground remains to be covered in identifying biomarkers of ASD, the hope is that combining robust neurophysiological indices of basic sensory processing with well-established clinical measures of autism will bring us closer to this goal.

References

Ameis, S. H., Fan, J., Rockel, C., Voineskos, A. N., Lobaugh, N. J., Soorya, L., et al. (2011). Impaired structural connectivity of socio-emotional circuits in autism spectrum disorders: A diffusion tensor imaging study. PLoS ONE, 6(11), e28044. doi:10.1371/journal.pone.0028044.

Asperger, H. (1944). Die ‘Autistischen Psychopathen’ im Kindesalter. Archiv für Psychiatrie und Nervenkrankheiten, 117, 76–136.

Ayres, A. J. (1979). Sensory integration and the child. Los Angeles: Western Psychological Services.

Ayres, A. J. (1989). The sensory integration and praxis tests. Los Angeles, CA: Western Psychological Services.

Baranek, G. T., David, F. J., Poe, M. D., Stone, W. L., & Watson, L. R. (2006). Sensory Experiences Questionnaire: Discriminating sensory features in young children with autism, developmental delays, and typical development. Journal of Child Psychology and Psychiatry, 47(6), 591–601. doi:10.1111/j.1469-7610.2005.01546.x.

Barutchu, A., Crewther, D. P., & Crewther, S. G. (2009). The race that precedes coactivation: Development of multisensory facilitation in children. Developmental Science, 12(3), 464–473. doi:10.1111/j.1467-7687.2008.00782.x.

Ben-Sasson, A. (2011). Parents’ search for evidence-based practice: A personal story. Journal of Paediatrics and Child Health, 47(7), 415–418. doi:10.1111/j.1440-1754.2011.02141.x.

Ben-Sasson, A., Cermak, S. A., Orsmond, G. I., Tager-Flusberg, H., Kadlec, M. B., & Carter, A. S. (2008). Sensory clusters of toddlers with autism spectrum disorders: Differences in affective symptoms. Journal of Child Psychology and Psychiatry, 49(8), 817–825. doi:10.1111/j.1469-7610.2008.01899.x.

Ben-Sasson, A., Hen, L., Fluss, R., Cermack, S., Engel-Yeger, B., & Gal, E. (2009). A meta-analysis of sensory modulation symptoms in Individuals with autism spectrum disorders. Journal of Autism and Developmental Disorders, 39, 1–11.

Ben-Sasson, A., Soto, T. W., Martinez-Pedraza, F., & Carter, A. S. (2013). Early sensory over-responsivity in toddlers with autism spectrum disorders as a predictor of family impairment and parenting stress. Journal of Child Psychology and Psychiatry, 54(8), 846–853. doi:10.1111/jcpp.12035.

Boddaert, N., Chabane, N., Belin, P., Bourgeois, M., Royer, V., Barthelemy, C., et al. (2004). Perception of complex sounds in autism: Abnormal auditory cortical processing in children. American Journal of Psychiatry, 161(11), 2117–2120. doi:10.1176/appi.ajp.161.11.2117.

Brandwein, A. B., Foxe, J. J., Butler, J. S., Russo, N. N., Altschuler, T. S., Gomes, H., et al. (2013). The development of multisensory integration in high-functioning autism: High-density electrical mapping and psychophysical measures reveal impairments in the processing of audiovisual inputs. Cerebral Cortex, 23(6), 1329–1341. doi:10.1093/cercor/bhs109.

Brandwein, A. B., Foxe, J. J., Russo, N. N., Altschuler, T. S., Gomes, H., & Molholm, S. (2011). The development of audiovisual multisensory integration across childhood and early adolescence: A high-density electrical mapping study. Cerebral Cortex, 21(5), 1042–1055.

Brock, J., Brown, C. C., & Boucher, J. (2002). The temporal binding deficit hypothesis of autism. Development and Psychopathology, 14, 209–224.

Bruneau, N., Roux, S., Adrien, J., & Barthelemy, C. (1999). Auditory associative cortex dysfunction in children with autism: Evidence from late auditory evoked potentials (N1 wave-T complex). Clinical Neurophysiology, 110, 1927–1934.

Campbell, D. B., Warren, D., Sutcliffe, J. S., Lee, E. B., & Levitt, P. (2010). Association of MET with social and communication phenotypes in individuals with autism spectrum disorder. American Journal of Medical Genetics Part B: Neuropsychiatric Genetics, 153B(2), 438–446. doi:10.1002/ajmg.b.30998.

Cardinale, R. C., Shih, P., Fishman, I., Ford, L. M., & Muller, R. A. (2013). Pervasive rightward asymmetry shifts of functional networks in autism spectrum disorder. JAMA Psychiatry, 70(9), 975–982. doi:10.1001/jamapsychiatry.2013.382.

Casanova, M. F., Buxhoeveden, D. P., & Brown, C. (2002). Clinical and macroscopic correlates of minicolumnar pathology in autism. Journal of Child Neurology, 17(9), 692–695.

Cascio, C. J., Moana-Filho, E. J., Guest, S., Nebel, M. B., Weisner, J., Baranek, G. T., et al. (2012). Perceptual and neural response to affective tactile texture stimulation in adults with autism spectrum disorders. Autism Research, 5(4), 231–244. doi:10.1002/aur.1224.

Ceponiene, R., Rinne, T., & Naatanen, R. (2002). Maturation of cortical sound processing as indexed by event-related potentials. Clinical Neurophysiology, 113, 870–882.

Charman, T., Pickles, A., Simonoff, E., Chandler, S., Loucas, T., & Baird, G. (2011). IQ in children with autism spectrum disorders: Data from the Special Needs and Autism Project (SNAP). Psychological Medicine, 41(3), 619–627. doi:10.1017/S0033291710000991.

Collignon, O., Charbonneau, G., Peters, F., Nassim, M., Lassonde, M., Lepore, F., et al. (2013). Reduced multisensory facilitation in persons with autism. Cortex, 49(6), 1704–1710. doi:10.1016/j.cortex.2012.06.001.

Courchesne, E., Lincoln, A. J., Kilman, B. A., & Galambos, R. (1985). Event-related brain potential correlates of the processing of novel visual and auditory information in autism. Journal of Autism and Developmental Disorders, 15(1), 55–76.

Courchesne, E., & Pierce, K. (2005). Why the frontal cortex in autism might be talking only to itself: Local over-connectivity but long-distance disconnection. Current Opinion in Neurobiology, 15(2), 225–230. doi:10.1016/j.conb.2005.03.001.

Coutanche, M. N., Thompson-Schill, S. L., & Schultz, R. T. (2011). Multi-voxel pattern analysis of fMRI data predicts clinical symptom severity. Neuroimage, 57(1), 113–123. doi:10.1016/j.neuroimage.2011.04.016.

Crane, L., Goddard, L., & Pring, L. (2009). Sensory processing in adults with autism spectrum disorders. Autism, 13, 215–228.

Dahlgren, S. O., & Gillberg, C. (1989). Symptoms in the first two years of life. A preliminary population study of infantile autism. European Archives of Psychiatry and Neurological Sciences, 238(3), 169–174.

Di Russo, F., Martinez, A., Sereno, M. I., Pitzalis, S., & Hillyard, S. A. (2002). Cortical sources of the early components of the visual evoked potential. Human Brain Mapping, 15(2), 95–111.

Dunn, M., Gomes, H., & Gravel, J. (2008). Mismatch negativity in children with autism and typical development. Journal of Autism and Developmental Disorders, 38, 52–71.

Edgar, J. C., Khan, S. Y., Blaskey, L., Chow, V. Y., Rey, M., Gaetz, W., et al. (2013). Neuromagnetic oscillations predict evoked-response latency delays and core language deficits in autism spectrum disorders. Journal of Autism and Developmental Disorders. doi:10.1007/s10803-013-1904-x.

Elsabbagh, M., Holmboe, K., Gliga, T., Mercure, E., Hudry, K., Charman, T., et al. (2011). Social and attention factors during infancy and the later emergence of autism characteristics. Progress in Brain Research, 189, 195–207. doi:10.1016/B978-0-444-53884-0.00025-7.

Engel-Yeger, B., Hardal-Nasser, R., & Gal, E. (2011). Sensory processing dysfunctions as expressed among children with different severities of intellectual developmental disabilities. Research in Developmental Disabilities, 32(5), 1770–1775.

Ethofer, T., Anders, S., Erb, M., Droll, C., Royen, L., Saur, R., et al. (2006). Impact of voice on emotional judgment of faces: An event-related fMRI study. Human Brain Mapping, 27(9), 707–714. doi:10.1002/hbm.20212.

Fan, J., Bernardi, S., Van Dam, N. T., Anagnostou, E., Gu, X., Martin, L., et al. (2012). Functional deficits of the attentional networks in autism. Brain and Behavior, 2(5), 647–660. doi:10.1002/brb3.90.

Ferri, R., Elia, M., Agarwal, N., Lanuzza, B., Musumeci, S., & Pennisi, G. (2003). The mismatch negativity and the P3a components of the auditory event-related potentials in autistic low-functioning subjects. Clinical Neurophysiology, 114, 1671–1680.

Fishman, I., Keown, C. L., Lincoln, A. J., Pineda, J. A., & Muller, R. A. (2014). Atypical cross talk between mentalizing and mirror neuron networks in autism spectrum disorder. JAMA Psychiatry, 71(7), 751–760. doi:10.1001/jamapsychiatry.2014.83.

Foss-Feig, J. H., Kwakye, L. D., Cascio, C. J., Burnette, C. P., Kadivar, H., Stone, W. L., et al. (2010). An extended multisensory temporal binding window in autism spectrum disorders. Experimental Brain Research, 203(2), 381–389. doi:10.1007/s00221-010-2240-4.

Foxe, J. J., Molholm, S., Del Bene, V. A., Frey, H. P., Russo, N. N., Blanco, D., et al. (2013). Severe multisensory speech integration deficits in high-functioning school-aged children with autism spectrum disorder (ASD) and their resolution during early adolescence. Cerebral Cortex. doi:10.1093/cercor/bht213.

Foxe, J. J., Morocz, I. A., Murray, M. M., Higgins, B. A., Javitt, D. C., & Schroeder, C. E. (2000). Multisensory auditory–somatosensory interactions in early cortical processing revealed by high-density electrical mapping. Cognitive Brain Research, 10(1–2), 77–83.

Foxe, J. J., & Simpson, G. V. (2002). Flow of activation from V1 to frontal cortex in humans. A framework for defining “early” visual processing. Experimental Brain Research, 142(1), 139–150. doi:10.1007/s00221-001-0906-7.

Frey, H. P., Molholm, S., Lalor, E. C., Russo, N. N., & Foxe, J. J. (2013). Atypical cortical representation of peripheral visual space in children with an autism spectrum disorder. European Journal of Neuroscience, 38(1), 2125–2138. doi:10.1111/ejn.12243.

Frith, U. (1996). Cognitive explanations of autism. Acta Paediatrica. Supplement, 416, 63–68.

Geschwind, D. H., & Levitt, P. (2007). Autism spectrum disorders: Developmental disconnection syndromes. Current Opinion in Neurobiology, 17(1), 103–111. doi:10.1016/j.conb.2007.01.009.

Giard, M. H., & Peronnet, F. (1999). Auditory–visual integration during multimodal object recognition in humans: A behavioral and electrophysiological study. Journal of Cognitive Neuroscience, 11(5), 473–490.

Gomes, H., Dunn, M., Ritter, W., Kurtzberg, D., Brattson, A., Kreuzer, J. A., et al. (2001a). Spatiotemporal maturation of the central and lateral N1 components to tones. Developmental Brain Research, 129, 147–155.

Gomes, H., Dunn, M., Ritter, W., Kurtzberg, D., Brattson, A., Kreuzer, J. A., et al. (2001b). Spatiotemporal maturation of the central and lateral N1 components to tones. Developmental Brain Research, 129(2), 147–155.

Gotham, K., Pickles, A., & Lord, C. (2009). Standardizing ADOS scores for a measure of severity in autism spectrum disorders. Journal of Autism and Developmental Disorders, 39(5), 693–705. doi:10.1007/s10803-008-0674-3.

Gouze, K. R., Hopkins, J., LeBailly, S. A., & Lavigne, J. V. (2009). Re-examining the epidemiology of sensory regulation dysfunction and comorbid psychopathology. Journal of Abnormal Child Psychology, 37(8), 1077–1087. doi:10.1007/s10802-009-9333-1.

Green, S. A., Rudie, J. D., Colich, N. L., Wood, J. J., Shirinyan, D., Hernandez, L., et al. (2013). Overreactive brain responses to sensory stimuli in youth with autism spectrum disorders. Journal of the American Academy of Child and Adolescent Psychiatry, 52(11), 1158–1172. doi:10.1016/j.jaac.2013.08.004.

Happé, F. (2005). The weak central coherence account of autism. In F. Volkmar, R. Paul, A. Klin, & J. Cohen (Eds.), Handbook of autism and pervasive developmental disorders, Vol. 1: Diagnosis, development, neurobiology, and behavior (3rd ed., pp. 640–649). New York: Wiley.

Hermelin, B., & O’Connor, N. (1970). Psychological experiments with autistic children. Oxford: Pergamon Press.

Howlin, P., Goode, S., Hutton, J., & Rutter, M. (2004). Adult outcome for children with autism. Journal of Child Psychology and Psychiatry, 45(2), 212–229.

Hu, V. W. (2012). Subphenotype-dependent disease markers for diagnosis and personalized treatment of autism spectrum disorders. Disease Markers, 33(5), 277–288. doi:10.3233/DMA-2012-0916.

Hughes, H., Reuter-Lorenz, P. A., Nozawa, G., & Fendrich, R. (1994). Visual–auditory interactions in sensorimotor processing: Saccades versus manual responses. Journal of Experimental Psychology: Human Perception and Performance, 20(1), 131–153.

Hutt, C., Hutt, S. J., Lee, D., & Ounsted, C. (1964). Arousal and childhood autism. Nature, 204, 909–919.

Jemel, B., Mimeault, D., Saint-Amour, D., Hosein, A., & Mottron, L. (2010). VEP contrast sensitivity responses reveal reduced functional segregation of mid and high filters of visual channels in autism. Journal of Vision, 10(6), 13. doi:10.1167/10.6.13.

Jones, W., & Klin, A. (2009). Heterogeneity and homogeneity across the autism spectrum: The role of development. Journal of the American Academy of Child and Adolescent Psychiatry, 48(5), 471–473. doi:10.1097/CHI.0b013e31819f6c0d.

Just, M. A., Cherkassky, V. L., Keller, T. A., & Minshew, N. J. (2004a). Cortical activation and synchronization during sentence comprehension in high-functioning autism: Evidence of underconnectivity. Brain, 127, 1811–1821.

Just, M. A., Cherkassky, V. L., Keller, T. A., & Minshew, N. J. (2004b). Cortical activation and synchronization during sentence comprehension in high-functioning autism: Evidence of underconnectivity. Brain, 127, 1811–1821.

Kanner, L. (1943). Autistic disturbances of affective contact. Nervous Child, 2, 217–250.

Kemner, C., Verbaten, M. N., Cuperus, J. M., Camfferman, G., & van Engeland, H. (1995). Auditory event-related brain potentials in autistic children and three different control groups. Biological Psychiatry, 38(3), 150–165.

Kern, J., Trivedi, M., Garver, C., Grannemann, B., Andrews, A., Salva, J., et al. (2006). The pattern of sensory processing abnormalities in autism. Autism, 10, 480–494.

Kuhl, P. K., & Meltzoff, A. N. (1982). The bimodal perception of speech in infancy. Science, 218(4577), 1138–1141.

Kwakye, L. D., Foss-Feig, J. H., Cascio, C. J., Stone, W. L., & Wallace, M. T. (2011). Altered auditory and multisensory temporal processing in autism spectrum disorders. Frontiers in Integrative Neuroscience, 4, 129. doi:10.3389/fnint.2010.00129.

Leavitt, V. M., Molholm, S., Ritter, W., Shpaner, M., & Foxe, J. J. (2007). Auditory processing in schizophrenia during the middle latency period (10–50 ms): High-density electrical mapping and source analysis reveal subcortical antecedents to early cortical deficits. Journal of Psychiatry and Neuroscience, 32(5), 339–353.

Leekam, S. R., Nieto, C., Libby, S. J., Wing, L., & Gould, J. (2007). Describing the sensory abnormalities of children and adults with autism. Journal of Autism and Developmental Disorders, 37(5), 894–910. doi:10.1007/s10803-006-0218-7.

Lepisto, T., Kujala, T., Vanhala, R., Alku, P., Huotilainen, M., & Naatanen, R. (2005). The discrimination of and orienting to speech and non-speech sounds in children with autism. Brain Research, 1066(1–2), 147–157. doi:10.1016/j.brainres.2005.10.052.

Lincoln, A. J., Courchesne, E., Harms, L., & Allen, M. (1995). Sensory modulation of auditory stimuli in children with autism and receptive developmental language disorder: Event-related brain potential evidence. Journal of Autism and Developmental Disorders, 25(5), 521–539.

Lord, C., Luyster, R., Guthrie, W., & Pickles, A. (2012). Patterns of developmental trajectories in toddlers with autism spectrum disorder. Journal of Consulting and Clinical Psychology, 80(3), 477–489. doi:10.1037/a0027214.

Lord, C., Risi, S., Lambrecht, L., Cook, E. H., Jr., Leventhal, B. L., DiLavore, P. C., et al. (2000). The autism diagnostic observation schedule-generic: A standard measure of social and communication deficits associated with the spectrum of autism. Journal of Autism and Developmental Disorders, 30(3), 205–223.

Lord, C., Rutter, M., DiLavore, P. C., & Risi, S. (1999). Autism diagnostic observation schedule. Los Angeles, CA: Western Psychological Services.

Lucan, J. N., Foxe, J. J., Gomez-Ramirez, M., Sathian, K., & Molholm, S. (2010). Tactile shape discrimination recruits human lateral occipital complex during early perceptual processing. Human Brain Mapping, 31(11), 1813–1821. doi:10.1002/hbm.20983.

Magnee, M. J., de Gelder, B., van Engeland, H., & Kemner, C. (2011). Multisensory integration and attention in autism spectrum disorder: Evidence from event-related potentials. PLoS ONE, 6(8), e24196. doi:10.1371/journal.pone.0024196.

Mahajan, Y., & McArthur, G. (2012). Maturation of auditory event-related potentials across adolescence. Hearing Research, 294(1–2), 82–94. doi:10.1016/j.heares.2012.10.005.

Mak-Fan, K. M., Morris, D., Vidal, J., Anagnostou, E., Roberts, W., & Taylor, M. J. (2013). White matter and development in children with an autism spectrum disorder. Autism, 17(5), 541–557. doi:10.1177/1362361312442596.

Maravita, A., Bolognini, N., Bricolo, E., Marzi, C. A., & Savazzi, S. (2008). Is audiovisual integration subserved by the superior colliculus in humans? NeuroReport, 19(3), 271–275.

Martineau, J., Garreau, B., Barthelemy, C., & Lelord, G. (1984). Evoked potentials and P300 during sensory conditioning in autistic children. Annals of the New York Academy of Sciences, 425, 362–369.

McIntosh, D. N., Miller, L. J., Shyu, J., & Dunn, W. (1999a). Short Sensory Profile. San Antonio, TX: The Psychological Corporation.

McIntosh, D. N., Miller, L. J., Shyu, V., & Hagerman, R. J. (1999b). Sensory-modulation disruption, electrodermal responses, and functional behaviors. Developmental Medicine and Child Neurology, 41(9), 608–615.

McPartland, J. C., Wu, J., Bailey, C. A., Mayes, L. C., Schultz, R. T., & Klin, A. (2011). Atypical neural specialization for social percepts in autism spectrum disorder. Social Neuroscience, 6(5–6), 436–451. doi:10.1080/17470919.2011.586880.

Miller, J. (1982). Divided attention: Evidence for coactivation with redundant signals. Cognitive Psychology, 14(2), 247–279.

Molholm, S., Ritter, W., Javitt, D. C., & Foxe, J. J. (2004). Multisensory visual–auditory object recognition in humans: A high-density electrical mapping study. Cerebral Cortex, 14(4), 452–465.

Molholm, S., Ritter, W., Murray, M. M., Javitt, D. C., Schroeder, C. E., & Foxe, J. J. (2002). Multisensory auditory–visual interactions during early sensory processing in humans: A high density electrical mapping study. Cognitive Brain Research, 14, 115–128.

Mongillo, E. A., Irwin, J. R., Whalen, D. H., Klaiman, C., Carter, A. S., & Schultz, R. T. (2008). Audiovisual processing in children with and without autism spectrum disorders. Journal of Autism and Developmental Disorders, 38(7), 1349–1358. doi:10.1007/s10803-007-0521-y.

Mottron, L., Dawson, M., Soulieres, I., Hubert, B., & Burack, J. (2006a). Enhanced perceptual functioning in autism: An update, and eight principles of autistic perception. Journal of Autism and Developmental Disorders, 36(1), 27–43. doi:10.1007/s10803-005-0040-7.

Mottron, L., Dawson, M., Soulieres, I., Hubert, B., & Burack, J. A. (2006b). Enhanced perceptual functioning in autism: An update, and eight principles of autistic perception. Journal of Autism and Developmental Disorders, 36(1), 27–43.

Muller, R. A., Shih, P., Keehn, B., Deyoe, J. R., Leyden, K. M., & Shukla, D. K. (2011). Underconnected, but how? A survey of functional connectivity MRI studies in autism spectrum disorders. Cerebral Cortex, 21(10), 2233–2243. doi:10.1093/cercor/bhq296.

Murphy, J. W., Foxe, J. J., Peters, J. B., & Molholm, S. (2014). Susceptibility to distraction in autism spectrum disorder: Probing the integrity of oscillatory alpha-band suppression mechanisms. Autism Research. doi:10.1002/aur.1374.

Murray, M. M., Molholm, S., Michel, C. M., Heslenfeld, D. J., Ritter, W., Javitt, D. C., et al. (2005). Grabbing your ear: Rapid auditory–somatosensory multisensory interactions in low-level sensory cortices are not constrained by stimulus alignment. Cerebral Cortex, 15(7), 963–974.

Naatanen, R., & Picton, T. (1987). The N1 wave of the human electric and magnetic response to sound: A review and an analysis of the component structure. Psychophysiology, 24(4), 375–425.

Nair, A., Treiber, J. M., Shukla, D. K., Shih, P., & Muller, R. A. (2013). Impaired thalamocortical connectivity in autism spectrum disorder: A study of functional and anatomical connectivity. Brain, 136(Pt 6), 1942–1955. doi:10.1093/brain/awt079.

Neil, P. A., Chee-Ruiter, C., Scheier, C., Lewkowicz, D. J., & Shimojo, S. (2006). Development of multisensory spatial integration and perception in humans. Developmental Science, 9(5), 454–464.

Oades, R. D., Walker, M. K., Geffen, L. B., & Stern, L. M. (1988). Event-related potentials in autistic and healthy children on an auditory choice reaction time task. International Journal of Psychophysiology, 6(1), 25–37.

Ornitz, E. M. (1974). The modulation of sensory input and motor output in autistic children. Journal of Autism and Childhood Schizophrenia, 4, 197–215.

Ornitz, E. M., Guthrie, D., & Farley, A. H. (1977). The early development of autistic children. Journal of Autism and Childhood Schizophrenia, 7, 207–229.

Pang, E. W., & Taylor, M. J. (2000). Tracking the development of the N1 from age 3 to adulthood: An examination of speech and non-speech stimuli. Clinical Neurophysiology, 111(3), 388–397.

Perrin, F., Pernier, J., Bertrand, O., & Echallier, J. F. (1989). Spherical splines for scalp potential and current density mapping. Electroencephalography and Clinical Neurophysiology, 72(2), 184–187.

Perrin, F., Pernier, J., Bertrand, O., Giard, M. H., & Echallier, J. F. (1987). Mapping of scalp potentials by surface spline interpolation. Electroencephalography and Clinical Neurophysiology, 66(1), 75–81.

Picton, T. W., Hillyard, S. A., Krausz, H. I., & Galambos, R. (1974). Human auditory evoked potentials. I. Evaluation of components. Electroencephalography and Clinical Neurophysiology, 36(2), 179–190.

Ponton, C. W., Eggermont, J. J., Kwong, B., & Don, M. (2000). Maturation of human central auditory system activity: Evidence from multi-channel evoked potentials. Clinical Neurophysiology, 111(2), 220–236.

Roberts, T. P., Cannon, K. M., Tavabi, K., Blaskey, L., Khan, S. Y., Monroe, J. F., et al. (2011). Auditory magnetic mismatch field latency: A biomarker for language impairment in autism. Biological Psychiatry, 70(3), 263–269. doi:10.1016/j.biopsych.2011.01.015.

Roberts, T. P., Khan, S. Y., Rey, M., Monroe, J. F., Cannon, K., Blaskey, L., et al. (2010). MEG detection of delayed auditory evoked responses in autism spectrum disorders: Towards an imaging biomarker for autism. Autism Research, 3(1), 8–18. doi:10.1002/aur.111.

Rogers, S. J., & Ozonoff, S. (2005). Annotation: What do we know about sensory dysfunction in autism? A critical review of the empirical evidence. Journal of Child Psychology and Psychiatry, 46(12), 1255–1268. doi:10.1111/j.1469-7610.2005.01431.x.

Ruhnau, P., Herrmann, B., Maess, B., & Schroger, E. (2011). Maturation of obligatory auditory responses and their neural sources: Evidence from EEG and MEG. Neuroimage, 58(2), 630–639. doi:10.1016/j.neuroimage.2011.06.050.

Russo, N., Foxe, J. J., Brandwein, A. B., Altschuler, T., Gomes, H., & Molholm, S. (2010). Multisensory processing in children with autism: High-density electrical mapping of auditory–somatosensory integration. Autism Research, 3(5), 253–267. doi:10.1002/aur.152.

Russo, N., Nicol, T., Trommer, B., Zecker, S., & Kraus, N. (2009). Brainstem transcription of speech is disrupted in children with autism spectrum disorders. Developmental Science, 12(4), 557–567.

Samson, F., Hyde, K. L., Bertone, A., Soulieres, I., Mendrek, A., Ahad, P., et al. (2011). Atypical processing of auditory temporal complexity in autistics. Neuropsychologia, 49(3), 546–555. doi:10.1016/j.neuropsychologia.2010.12.033.

Schaaf, R. C., Benevides, T., Mailloux, Z., Faller, P., Hunt, J., van Hooydonk, E., et al. (2014). An intervention for sensory difficulties in children with autism: A randomized trial. Journal of Autism and Developmental Disorders, 44(7), 1493–1506. doi:10.1007/s10803-013-1983-8.

Schaaf, R. C., Toth-Cohen, S., Johnson, S. L., Outten, G., & Benevides, T. W. (2011). The everyday routines of families of children with autism: Examining the impact of sensory processing difficulties on the family. Autism, 15(3), 373–389.

Scherg, M., Vajsar, J., & Picton, T. W. (1989). A source analysis of the late human auditory evoked potentials. Journal of Cognitive Neuroscience, 1(4), 336–355. doi:10.1162/jocn.1989.1.4.336.

Schoen, S. A., Miller, L. J., & Green, K. E. (2008). Pilot study of the Sensory Over-Responsivity Scales: Assessment and inventory. American Journal of Occupational Therapy, 62(4), 393–406.

Sell, N. K., Giarelli, E., Blum, N., Hanlon, A. L., & Levy, S. E. (2012). A comparison of autism spectrum disorder DSM-IV criteria and associated features among African American and white children in Philadelphia County. Disability and Health Journal, 5(1), 9–17.

Stein, B. E., & Meredith, M. A. (1990). Multisensory integration. Neural and behavioral solutions for dealing with stimuli from different sensory modalities. Annals of the New York Academy of Sciences, 608, 51–65; discussion 65–70.

Stevenson, R. A., Segers, M., Ferber, S., Barense, M. D., & Wallace, M. T. (2014a). The impact of multisensory integration deficits on speech perception in children with autism spectrum disorders. Frontiers in Psychology, 5, 379. doi:10.3389/fpsyg.2014.00379.

Stevenson, R. A., Siemann, J. K., Schneider, B. C., Eberly, H. E., Woynaroski, T. G., Camarata, S. M., et al. (2014b). Multisensory temporal integration in autism spectrum disorders. Journal of Neuroscience, 34(3), 691–697. doi:10.1523/JNEUROSCI.3615-13.2014.

Stoner, R., Chow, M. L., Boyle, M. P., Sunkin, S. M., Mouton, P. R., Roy, S., et al. (2014). Patches of disorganization in the neocortex of children with autism. New England Journal of Medicine, 370(13), 1209–1219. doi:10.1056/NEJMoa1307491.

Supekar, K., Uddin, L. Q., Khouzam, A., Phillips, J., Gaillard, W. D., Kenworthy, L. E., et al. (2013). Brain hyperconnectivity in children with autism and its links to social deficits. Cell Reports, 5(3), 738–747. doi:10.1016/j.celrep.2013.10.001.

Teder-Salejarvi, W. A., McDonald, J. J., Di Russo, F., & Hillyard, S. A. (2002). An analysis of audio-visual crossmodal integration by means of event-related potential (ERP) recordings. Cognitive Brain Research, 14(1), 106–114.

Teinonen, T., Aslin, R. N., Alku, P., & Csibra, G. (2008). Visual speech contributes to phonetic learning in 6-month-old infants. Cognition, 108(3), 850–855. doi:10.1016/j.cognition.2008.05.009.

Tomchek, S. D., & Dunn, W. (2007). Sensory processing in children with and without autism: A comparative study using the short sensory profile. American Journal of Occupational Therapy, 61(2), 190–200.

Tonnquist-Uhlen, I., Ponton, C. W., Eggermont, J. J., Kwong, B., & Don, M. (2003). Maturation of human central auditory system activity: The T-complex. Clinical Neurophysiology, 114(4), 685–701.

Ulrich, R., Miller, J., & Schroter, H. (2007). Testing the race model inequality: An algorithm and computer programs. Behavior Research Methods, 39(2), 291–302.

van Eeghen, A. M., Pulsifer, M. B., Merker, V. L., Neumeyer, A. M., van Eeghen, E. E., Thibert, R. L., et al. (2013a). Understanding relationships between autism, intelligence, and epilepsy: A cross-disorder approach. Developmental Medicine and Child Neurology, 55(2), 146–153. doi:10.1111/dmcn.12044.

van Eeghen, A. M., Pulsifer, M. B., Merker, V. L., Neumeyer, A. M., van Eeghen, E. E., Thibert, R. L., et al. (2013b). Understanding relationships between autism, intelligence, and epilepsy: A cross-disorder approach. Developmental Medicine and Child Neurology, 55(2), 146–153. doi:10.1111/dmcn.12044.

Vlamings, P. H., Jonkman, L. M., van Daalen, E., van der Gaag, R. J., & Kemner, C. (2010). Basic abnormalities in visual processing affect face processing at an early age in autism spectrum disorder. Biological Psychiatry, 68(12), 1107–1113. doi:10.1016/j.biopsych.2010.06.024.

Volkmar, F. R., Cohen, D. J., Bregman, J. D., Hooks, M. Y., & Stevenson, J. M. (1989). An examination of social typologies in autism. Journal of the American Academy of Child and Adolescent Psychiatry, 28(1), 82–86. doi:10.1097/00004583-198901000-00015.

Wechsler, D. (1999). Wechsler abbreviated scale of intelligence. San Antonio, TX: Psychological Corporation.

Wolf, J. M., Tanaka, J. W., Klaiman, C., Cockburn, J., Herlihy, L., Brown, C., et al. (2008). Specific impairment of face-processing abilities in children with autism spectrum disorder using the Let’s Face It! skills battery. Autism Research, 1(6), 329–340. doi:10.1002/aur.56.

Woynaroski, T. G., Kwakye, L. D., Foss-Feig, J. H., Stevenson, R. A., Stone, W. L., & Wallace, M. T. (2013). Multisensory speech perception in children with autism spectrum disorders. Journal of Autism and Developmental Disorders, 43(12), 2891–2902. doi:10.1007/s10803-013-1836-5.

Acknowledgments

Primary funding for this work was provided through a grant from the U.S. National Institute of Mental Health (MH085322 to S.M. and J.J.F.). The Human Clinical Phenotyping Core, where the children enrolled in this study were clinically evaluated, is a facility of the Rose F. Kennedy Intellectual and Developmental Disabilities Research Center (IDDRC) which is funded through a center grant from the Eunice Kennedy Shriver National Institute of Child Health & Human Development (NICHD P30 HD071593). A.B.B. received additional support from the Maternal & Child Health Interdisciplinary Leadership Education in Neurodevelopmental & Related Disabilities (LEND). The authors express their sincere gratitude to Sarah Ruberman, Frantzy Acluche, Dr. Ted Altschuler, Joanna Peters, Kristina Dumas, and for their invaluable support during the course of this research. We thank Dr. Natalie Russo for her contributions to the clinical phenotyping of a portion of our ASD cohort. We also extend our heartfelt gratitude to the children and families that have contributed their time to participate in this research. An earlier version of this manuscript was submitted to the Graduate Center of the City University of New York in partial fulfillment of the first author’s doctoral degree.

Cite this article

Brandwein, A.B., Foxe, J.J., Butler, J.S. et al. Neurophysiological Indices of Atypical Auditory Processing and Multisensory Integration are Associated with Symptom Severity in Autism. J Autism Dev Disord 45, 230–244 (2015). https://doi.org/10.1007/s10803-014-2212-9

DOI: https://doi.org/10.1007/s10803-014-2212-9