Abstract

Autism spectrum disorder (ASD) is a neurodevelopmental disorder characterized by a constellation of symptoms, including impairments in social communication, restricted interests, and repetitive behaviors. Although sensory issues have long been reported in clinical descriptions of ASD, only the most recent edition of the Diagnostic and Statistical Manual of Mental Disorders (DSM-5) has included differences in sensory processing as part of the diagnostic profile for ASD. Indeed, sensory processing differences are among the most prevalent findings in ASD and are increasingly recognized as a core component of the disorder. Furthermore, characterizing ASD phenotypes on the basis of sensory processing differences has been suggested as a constructive means of creating phenotypic subgroups of ASD, which may help tailor individualized treatment strategies. Although sensory processing differences in ASD are frequently approached from the perspective of deficits, there are a number of instances in which individuals with ASD outperform their neurotypical counterparts on tests of sensory function. Here, the current state of knowledge regarding sensory processing in ASD is reviewed, with a particular emphasis on auditory and multisensory (i.e., audiovisual) performance. In addition to characterizing the nature of these differences in sensory performance, the chapter focuses on their neurological correlates and on how differences in sensory function relate to the other core clinical features of ASD, with an emphasis on speech and language.



Keywords

- Auditory

- Binding

- Causal inference

- Cue combination

- Integration

- Neuroimaging

- Perception

- Psychophysics

- Sensory processing

- Visual

12.1 Introduction

This chapter focuses on the behavioral and neural underpinnings of altered sensory processing in autism spectrum disorder (ASD). It first compares perception in individuals with ASD and in those with neurotypical (NT) development for auditory (Sect. 12.1.1), visual (Sect. 12.1.2), and audiovisual (Sect. 12.1.3) processing. Next, it explores how these perceptual differences might be tied to both structural and functional changes in the brain, drawing first on insights from magnetic resonance imaging (Sect. 12.2.1) and then on electrophysiology (Sect. 12.2.2). The chapter concludes by examining the developmental trajectory of these populations and connecting sensory processing to the different clinical symptoms of ASD.

12.1.1 Perceptual Differences in Auditory Processing

A given auditory stimulus can be deconstructed into a number of dimensions, such as amplitude (loudness), frequency composition (pitch), and timbre. A great deal of human psychophysical work has focused on understanding how these stimulus attributes are processed by human observers. Intriguingly, children with ASD have been shown to exhibit superior performance in processing some of these primary attributes (Remington and Fairnie 2017). For example, several studies have noted superior pitch perception in children with ASD compared with their NT peers (Heaton 2003; O’Riordan and Passetti 2006). This includes both the perception of simple pure tones (Fig. 12.1A; Bonnel et al. 2003) and pitch perception in the context of full sentences (Järvinen-Pasley et al. 2008a, b). During adolescence, however, this advantage seems to disappear (Heaton et al. 2008; Jones et al. 2009). Somewhat paradoxically, older individuals with ASD who retain this advantage in pitch perception are more likely to have significant language difficulties (Bonnel et al. 2010).

Fig. 12.1 Evidence for enhanced and impaired sensory processing in individuals with ASD. (A) Individuals with ASD (clinical subjects) outperform matched NT individuals (control subjects) in both the discrimination (left) and categorization (right) of differing tones. Adapted from Bonnel et al. (2003), with permission. Error bars are ±SD. (B) Individuals with ASD (“high-functioning” autism [HFA]) outperform NT individuals in first-order (luminance-defined) grating discrimination but show deficits relative to the NT (typically developing [TD]) group when the gratings are defined using second-order characteristics (texture). V and H, vertical and horizontal axes, respectively, of the visual stripes. Error bars are SDs. *P < 0.05. Adapted from Bertone et al. (2005), with permission

Auditory thresholds, as measured by indices such as modulation depth discrimination, do not seem to differ between individuals with ASD and NT individuals (Haigh et al. 2016). However, across multiple standard audiological measures, individuals with ASD are more likely to show at least one abnormal finding; a common one is greater sensitivity to sounds (a lower behavioral threshold for rating a sound as uncomfortable) than in NT individuals (Demopoulos and Lewine 2016). Other findings of note include reduced otoacoustic emissions, which are vibrations reflected backward from the cochlea in response to sound and thus index the integrity of the ear’s transduction process. These reductions, however, have been found only at specific frequencies; across most frequencies, emissions do not differ from those of NT individuals (Bennetto et al. 2017). Additionally, brainstem auditory evoked potentials, which reflect auditory activity from the cochlea through the earliest processing stages in the brain, have longer latencies in a significant subset of children with ASD (Nagy and Loveland 2002; Ververi et al. 2015). Collectively, these low-level differences suggest that auditory processing is affected in ASD at some of the earliest stages at which auditory information is processed by the cochlea and brain.

Further along the processing hierarchy, different sound elements must be grouped into auditory objects to perceptually bind information coming from individual sources and to filter out background “noise” (Shinn-Cunningham et al. 2017). To do so, multiple acoustic features (spectral, temporal) must be integrated, a process that involves a number of brain regions (Christison-Lagay et al. 2015). At this level, individuals with ASD have more difficulty than NT individuals in both integrating and parsing auditory (as well as visual) scenes (Lin et al. 2017). ASD individuals show a reduced ability to perceive auditory objects, particularly when competing auditory stimuli must be filtered out, as measured by both behavioral and electrophysiological methods (Lodhia et al. 2014). In contrast to their enhanced pitch perception for sentences, children with ASD performed much worse than NT children when asked instead to judge the semantic content of the sentences (Järvinen-Pasley et al. 2008a). Furthermore, individuals with ASD show atypical neural responses when perceiving spatial cues such as interaural time and level differences, which are used to group auditory features into auditory objects (Lodhia et al. 2014, 2018). These difficulties in using auditory cues, especially for complex auditory stimuli like speech, have been hypothesized to contribute to the overwhelming nature of the complex everyday sensory environments reported by many ASD individuals (Markram and Markram 2010).

When taken as a whole, the enhanced processing of low-level auditory stimulus features, contrasted with the weakness in perceptual grouping/binding, represents the cornerstone of several of the more prevalent neurobiologically inspired theories of ASD. One that is strongly anchored in these data is weak central coherence, which posits that whereas local connectivity within brain circuits is either preserved or enhanced in autism, more global connectivity across brain regions is compromised (Happé 1999; Peiker et al. 2015).

12.1.2 Perceptual Differences in Visual Processing

As for audition, many measures of simple visual processing show similar or superior performance in ASD individuals compared with their NT peers. For example, individuals with ASD tend to perform better on various visual search tasks (Simmons et al. 2009). Using very short (160-ms) display times to focus on bottom-up processing, Shirama et al. (2016) found that adults with ASD are both faster and more accurate at finding visual targets embedded within a display of distractors. However, there are some exceptions to this general improvement in the processing of low-level stimulus features, including the ability to exploit statistical features of the stimuli over time. For example, individuals with ASD are poorer at detecting systematic biases in the location of a visual target across a series of trials (Pellicano et al. 2011) and appear to be less flexible in encoding the stimulus when it changes location (Harris et al. 2015). The ability to focus on more local features seems to result in less susceptibility to distracting features, such as surround suppression by visual stimuli in the periphery (Flevaris and Murray 2014), which appears to parallel the difference between local and global processing described in Sect. 12.1.1 for auditory stimuli. Extending these findings, children with ASD show atypical patterns during the perception of visual motion. Spatial suppression refers to the paradoxical finding that it is often more difficult to perceive the motion of large, high-contrast stimuli than that of smaller stimuli (Pack et al. 2005), whereas spatial facilitation refers to the relative boost in perceiving the motion direction of large, low-contrast stimuli (Tadin and Lappin 2005). Children with ASD show a reduction in spatial suppression (i.e., they show less of a performance decrement when perceiving motion with large vs. small high-contrast stimuli) as well as an enhancement of spatial facilitation (Foss-Feig et al. 2013; Sysoeva et al. 2017). One common feature in much of this work is that processing differences emerge when stimuli become dynamic, possibly reflecting challenges in temporal integrative processes (a topic that is returned to in Sect. 12.4.2).
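Spatial suppression and facilitation are often summarized as a single size index. The sketch below assumes one common convention from the motion psychophysics literature (not necessarily the exact analysis used in the studies cited above): the index is the difference between the log10 duration thresholds for large and small stimuli, so positive values indicate suppression and negative values indicate facilitation. The function name and the threshold values are hypothetical.

```python
import math


def size_index(threshold_small_ms: float, threshold_large_ms: float) -> float:
    """Log10 ratio of motion-direction duration thresholds (large vs. small stimulus).

    Hypothetical helper. Positive values mean the large stimulus needs a longer
    presentation than the small one (spatial suppression); negative values mean
    it needs a shorter presentation (spatial facilitation).
    """
    if threshold_small_ms <= 0 or threshold_large_ms <= 0:
        raise ValueError("thresholds must be positive durations")
    return math.log10(threshold_large_ms) - math.log10(threshold_small_ms)


# Hypothetical thresholds (ms) for one observer at two contrast levels.
high_contrast = size_index(threshold_small_ms=40.0, threshold_large_ms=80.0)
low_contrast = size_index(threshold_small_ms=100.0, threshold_large_ms=60.0)
print(f"high contrast: {high_contrast:+.3f}")
print(f"low contrast:  {low_contrast:+.3f}")
```

Under this convention, reduced suppression would appear as a smaller positive index for high-contrast stimuli, and enhanced facilitation as a more negative index for low-contrast stimuli.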

Although individuals with ASD are often superior at discerning distinct features of a complex visual stimulus, much as in audition, the grouping of these sensory cues into a single perceptual object seems to be weakened (Dakin and Frith 2005). In an orientation discrimination task, Bertone et al. (2005) found that ASD individuals performed better than NT individuals at discriminating simple, “first-order” (luminance-defined) orientations but worse at discerning more complex, “second-order” (texture-defined) orientations, which are likely to be processed later in the visual hierarchy (Fig. 12.1B). A more “traits-based” approach has also linked decreased perceptual grouping to autistic traits: surround suppression, in which the proximity of objects to a target impedes responses to that target, was reduced in individuals without a diagnosis of ASD who scored higher on a measure of autistic traits, the Autism Quotient (AQ; Flevaris and Murray 2014).

The processing of biological motion, which combines visually complex and social information, also seems to be altered in ASD. Children with ASD spend less time looking at biological motion (Annaz et al. 2012) and perform worse on tasks that rely on biological motion (Blake et al. 2003; Swettenham et al. 2013). Difficulties with biological motion seem to be exacerbated when the task requires using this motion to recognize emotions, for example, when identifying the emotion (e.g., happy, angry) conveyed by the body language of a walking point-light display (Nackaerts et al. 2012). Such a result underscores the weaknesses in the processing of social information in ASD. Differences in performance on biological motion tasks between individuals with ASD and NT individuals seem to diminish in adulthood (Murphy et al. 2009); however, there is evidence that this similar performance in ASD and NT adults may be mediated by distinct cortical networks (McKay et al. 2012).

As for auditory processing, the weight of the evidence regarding visual processing in ASD points toward selective enhancements in low-level abilities coupled with processing weaknesses for higher order stimuli. One illustrative and powerful example comes from work using the embedded figures test, in which subjects are asked to identify shapes that are embedded within larger illustrations (Fig. 12.2A). Children with autism show performance advantages in processing the component elements of a stimulus array but substantial deficits when asked to report on the whole image (Fig. 12.2B; Pellicano et al. 2005; Plaisted et al. 1999).

Fig. 12.2 Local and global perceptual processing in ASD. (A) Illustration of an image used in the children’s embedded figures task (CEFT). (B) Performance on three different visual tasks for a group of ASD and TD participants. The global dot-motion task is a two-alternative forced choice (2AFC) task in which participants indicate the general direction of motion of a group of dots (up or down); it taps visual processing later in the visual cortical hierarchy (top). Flicker contrast sensitivity is a two-interval forced choice (2IFC) task that quantifies the contrast at which participants can reliably identify (75% threshold) the interval containing a Gaussian blob with a 10-Hz sinusoidal flicker; it measures low-level visual processing (center). The CEFT measures how quickly participants can identify hidden features that, when assembled, result in a larger image with a different meaning (bottom). In the example shown, the clock (A) is made up of a number of triangles. Note that whereas neither dot-motion thresholds nor flicker contrast sensitivity differ between groups, there is a striking difference in the CEFT, with ASD children being much faster to identify the number of components making up the whole image. Box plots show the distribution of the middle 50% of the scores. Solid black lines, median of each box plot. Bars at top and bottom of each box plot extend to include all of the data, excepting outliers, which are marked individually. Adapted from Pellicano et al. (2005), with permission

12.1.3 Perceptual Differences in the Integration of Auditory and Visual Information

In addition to the processing differences observed within individual senses like audition and vision, there is growing evidence of changes in the processing and integration of information across the different sensory modalities in individuals with ASD (for a review, see Baum et al. 2015). As with the differences noted within the individual sensory modalities, the changes in multisensory processing observed in individuals with ASD are manifold and depend on a number of features, including both the type and the complexity of the stimuli that are combined.

One of the most salient cues for integrating multiple pieces of sensory information is the temporal relationship between the stimuli from the different modalities (Welch 1999; Stevenson and Wallace 2013). Stimuli that occur at the same time are likely to come from the same source and should be integrated, whereas stimuli that occur at different times should remain segregated. Overall, individuals with ASD are less able than their NT peers to accurately detect these multisensory temporal relationships (Foss-Feig et al. 2010; de Boer-Schellekens et al. 2013a), and emerging work suggests that these differences may be particularly pronounced for speech stimuli (Stevenson et al. 2014a), perhaps serving as a foundation for the communication deficits that often accompany ASD (Fig. 12.3). In addition to these difficulties in the temporal processing of audiovisual speech, Foxe et al. (2015) observed that children with ASD are less able to use visual speech information to improve speech comprehension and that this multisensory difference grows larger under noisy and more naturalistic conditions. This paradigm builds on the foundational evidence that seeing a speaker’s mouth provides a large gain in the intelligibility of the spoken signal (cf. Grant and Bernstein, Chap. 3) and that these visually mediated benefits grow larger under noisy conditions (Sumby and Pollack 1954; Ross et al. 2006).

Fig. 12.3 Alterations in audiovisual temporal function in ASD. (A) Performance on a simultaneity judgment task reveals differences between the ASD (gray) and NT (black) groups, with those with ASD showing a higher likelihood of reporting simultaneity for highly asynchronous audiovisual pairings. AV, auditory leads; VA, visual leads. *P < 0.05. (B) Group averages for the width of the audiovisual temporal binding window (TBW) as a function of stimulus complexity (flashbeep, visual flash with auditory beep; tools, video and audio of a handheld hammer hitting a table; speech, video and audio of the syllables /ba/ and /ga/) reveal differences that are most pronounced for speech-related stimuli. Error bars are ±SE of the mean. *P < 0.05. Adapted from Stevenson et al. (2014a), with permission
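The TBW widths in Fig. 12.3B summarize how quickly reports of “simultaneous” fall off as the auditory and visual stimuli are pulled apart in time. The sketch below estimates such a width from simultaneity-judgment data by linear interpolation, assuming a criterion of 0.5 (proportion “simultaneous”) and sorted stimulus onset asynchronies (SOAs), with auditory-leading trials negative. Published analyses, including the work cited here, typically fit psychometric functions and may use other criteria; the function name and data are hypothetical.

```python
from typing import Sequence


def binding_window_width(soas_ms: Sequence[float],
                         p_simultaneous: Sequence[float],
                         criterion: float = 0.5) -> float:
    """Width (ms) of the SOA range in which p('simultaneous') stays >= criterion.

    SOAs must be sorted ascending (negative = auditory leads, positive = visual
    leads). Crossings are found by linear interpolation between tested SOAs; if
    the curve never drops below criterion on one side, the outermost SOA is used.
    """
    if len(soas_ms) != len(p_simultaneous) or not soas_ms:
        raise ValueError("need one proportion per SOA")
    pairs = list(zip(soas_ms, p_simultaneous))
    peak = max(range(len(pairs)), key=lambda i: pairs[i][1])
    if pairs[peak][1] < criterion:
        return 0.0

    def crossing(i_in: int, i_out: int) -> float:
        # Interpolate between a point inside the window and its neighbour outside it.
        (x0, y0), (x1, y1) = pairs[i_in], pairs[i_out]
        return x0 + (criterion - y0) * (x1 - x0) / (y1 - y0)

    # Walk left from the peak until the next point falls below criterion.
    left = pairs[0][0]
    for i in range(peak, 0, -1):
        if pairs[i - 1][1] < criterion:
            left = crossing(i, i - 1)
            break
    # Walk right from the peak in the same way.
    right = pairs[-1][0]
    for i in range(peak, len(pairs) - 1):
        if pairs[i + 1][1] < criterion:
            right = crossing(i, i + 1)
            break
    return right - left


# Hypothetical data for one participant.
soas = [-300, -200, -100, 0, 100, 200, 300]
p_sim = [0.10, 0.30, 0.70, 0.95, 0.90, 0.60, 0.20]
width = binding_window_width(soas, p_sim)
print(f"estimated TBW width: {width:.0f} ms")
```

A wider window, as reported for individuals with ASD, means that more asynchronous audiovisual pairs are still judged to be simultaneous.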

Illusions are commonly used to study multisensory integration, and a number of audiovisual illusions have provided great insight into how auditory and visual information are synthesized (cf. Lee, Maddox, and Bizley, Chap. 4). For example, the sound-induced flash illusion consists of a single flash of light paired with two or more auditory stimuli (beeps) in quick succession. Participants are asked to report the number of flashes while ignoring the beeps, and the task-irrelevant beeps often result in the illusory perception of several flashes (Shams et al. 2000). Children with ASD appear less susceptible to this illusion (Stevenson et al. 2014b), although they also seem to perceive it over a wider range of temporal asynchronies (i.e., delays between the flashes and beeps) than their NT peers (Foss-Feig et al. 2010), providing further evidence for temporally based multisensory processing differences. Another common illusion, the McGurk effect, involves the presentation of an auditory syllable (e.g., /ba/) paired with an incongruent visual syllable (e.g., /ga/), which frequently results in the perception of a novel syllable (e.g., /da/) that reflects a synthesis of the auditory and visual cues (McGurk and MacDonald 1976). Many studies have found that individuals with ASD are less susceptible to this illusion (Irwin et al. 2011; Stevenson et al. 2014c). Recent work suggests that these differences in illusory perception may be due to differences in top-down factors (i.e., decision criterion) rather than in simple bottom-up stimulus integration (Magnotti and Beauchamp 2015).

One of the challenges in studying multisensory processing and the possible differences in ASD is teasing out the respective contributions of changes in unisensory function from changes in the integration of information across the different sensory modalities. As detailed in Sects. 12.1.1 and 12.1.2, there is substantial evidence in support of unisensory processing differences in ASD, and these differences may be responsible for many of the apparent changes in multisensory abilities. However, a number of studies have now attempted to dissociate these effects and have shown in many cases that the deficits seen in multisensory processing go beyond what is predicted from performance on unisensory tasks (Brandwein et al. 2013; Stevenson et al. 2014b).

One of the most powerful approaches to this question is the use of computational models that endeavor to separate the contributions of the individual sensory inputs from that of the process of integrating the individual cues. In particular, Bayesian modeling is increasingly being applied to sensory processing in ASD in an attempt to disentangle possible mechanisms for these sensory and multisensory processing differences (Pellicano and Burr 2012; Van de Cruys et al. 2014). Bayesian models of sensory processing formalize perception as statistical inference, in which incoming information is combined with expectations and prior experience to yield the final percept, and these perceptual experiences in turn provide updates that inform the processing of subsequent incoming information (cf. Shams and Beierholm 2010; Alais and Burr, Chap. 2). One theory of ASD posits that internal representations of the world (so-called Bayesian “priors”) are weak in ASD and thus provide a poor reference for incoming information, resulting in an overweighting of incoming sensory evidence (Pellicano and Burr 2012) and an overestimation of the volatility of this evidence (Lawson et al. 2017; Palmer et al. 2017). By formalizing possible mechanisms of perception, these models may help pinpoint, through testable hypotheses, how and where sensory perception diverges in ASD. For example, ongoing work suggests that internal sensory representations may actually be intact in individuals with ASD (Pell et al. 2016; Croydon et al. 2017) or impaired only in social situations (Chambon et al. 2017). Furthermore, some studies have shown that individuals with ASD can learn from and appropriately update their representation of the environment based on incoming sensory information in some contexts (Manning et al. 2017). Although more work is needed to fully characterize and understand perceptual differences in ASD, Bayesian models provide a powerful framework within which these different mechanisms may be tested.
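For Gaussian priors and likelihoods, the Bayesian combination rule has a closed form, which makes the weak-priors account easy to illustrate. In the sketch below (a textbook simplification, not the specific models of the cited authors; the names and numbers are hypothetical), the posterior mean is a precision-weighted average of the prior mean and the sensory measurement, and the weight on the sensory evidence is σ_prior² / (σ_prior² + σ_sensory²). Broadening the prior therefore pulls the percept toward the raw sensory measurement. Volatility estimation is not modeled here.

```python
from dataclasses import dataclass


@dataclass
class Gaussian:
    mean: float
    sd: float


def combine(prior: Gaussian, likelihood: Gaussian) -> Gaussian:
    """Posterior of a Gaussian prior times a Gaussian likelihood (precision weighting)."""
    precision_prior = 1.0 / prior.sd ** 2
    precision_sensory = 1.0 / likelihood.sd ** 2
    total = precision_prior + precision_sensory
    mean = (precision_prior * prior.mean + precision_sensory * likelihood.mean) / total
    return Gaussian(mean=mean, sd=total ** -0.5)


def sensory_weight(prior_sd: float, sensory_sd: float) -> float:
    """Fraction of the posterior mean contributed by the sensory measurement."""
    return prior_sd ** 2 / (prior_sd ** 2 + sensory_sd ** 2)


# Hypothetical example: expectation centred on 0, sensory sample at 10 (sd 2).
evidence = Gaussian(mean=10.0, sd=2.0)
for label, prior_sd in [("narrow prior", 2.0), ("broad (weak) prior", 6.0)]:
    post = combine(Gaussian(mean=0.0, sd=prior_sd), evidence)
    weight = sensory_weight(prior_sd, evidence.sd)
    print(f"{label}: posterior mean {post.mean:.2f}, sensory weight {weight:.2f}")
```

With the narrow prior, the percept falls halfway between expectation and evidence; with the broad prior, it sits close to the sensory sample, which is the overweighting of sensory evidence that the weak-priors account proposes.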

12.2 Neural Correlates of Sensory Processing in Autism Spectrum Disorder

12.2.1 Magnetic Resonance Imaging

Functional magnetic resonance (MR) imaging (fMRI) allows for the noninvasive investigation of the neural circuitry involved in sensory and perceptual processes. Although the hemodynamic response (i.e., changes in oxygenated and deoxygenated hemoglobin) that is the basis of the fMRI signal is slow, it is highly localized, which allows for a relatively high degree of spatial resolution (Glover 2011). In addition to studying functional brain activity, MRI can also be used to investigate the underlying structural networks (i.e., anatomical connectivity) that support various neural functions (structural MRI).
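The sluggishness of the hemodynamic response can be made concrete with a double-gamma model of the hemodynamic response function (HRF). The sketch below assumes gamma shape parameters of 6 and 16 (unit scale, in seconds) and an undershoot ratio of 1/6, values resembling widely used canonical HRFs; real responses vary across brain regions and individuals, and the function names are illustrative. Even for a brief neural event at t = 0, the modeled response peaks roughly 5 s later and dips below baseline well after that, which is why fMRI offers good spatial localization but coarse timing.

```python
import math


def gamma_pdf(t: float, shape: float) -> float:
    """Gamma probability density with unit scale."""
    return t ** (shape - 1) * math.exp(-t) / math.gamma(shape)


def double_gamma_hrf(t: float, peak_shape: float = 6.0,
                     undershoot_shape: float = 16.0, ratio: float = 1.0 / 6.0) -> float:
    """Assumed canonical-style HRF: a positive gamma minus a scaled, later undershoot."""
    if t <= 0:
        return 0.0
    return gamma_pdf(t, peak_shape) - ratio * gamma_pdf(t, undershoot_shape)


times = [i * 0.1 for i in range(0, 301)]  # 0 to 30 s in 100-ms steps
response = [double_gamma_hrf(t) for t in times]
peak_time = times[max(range(len(times)), key=response.__getitem__)]
print(f"modeled peak at about {peak_time:.1f} s after the event")
```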

As highlighted in Sect. 12.1.1 regarding perceptual differences in auditory processing, individuals with ASD show behavioral performance similar to that of their NT peers in the detection of simple tones. Brain imaging during this task shows a similar pattern of temporal (auditory) cortex activation in the ASD and NT groups, including the bilateral superior and middle temporal gyri (Brodmann areas 41, 42, and 22), but also a much broader set of activity extending into right prefrontal and premotor cortices in the ASD individuals (Gomot et al. 2008). More temporally complex (frequency-modulated) sounds evoked enhanced responses in the primary auditory cortex of individuals with ASD but reduced responses in areas surrounding the auditory cortex (Samson et al. 2011). Speech processing, which involves complex and socially relevant auditory information, is an area in which individuals with ASD are thought to be particularly affected. Although previous work reported a lack of voice-sensitive regions in individuals with ASD (Gervais et al. 2004), emerging work suggests that these regions do exist but show atypical activity during voice identity-recognition tasks (Schelinski et al. 2016). Intriguingly, children with ASD show reduced evoked responses to spoken speech but responses surprisingly similar to those of NT children when the speech is sung rather than spoken (Sharda et al. 2015), indicating that speech processing might be more affected in certain contexts. How these atypical neural networks and response patterns contribute to altered auditory processing in ASD is currently unclear.

Paralleling the behavioral findings of largely similar performance in tasks indexing low-level visual processing, a comprehensive study measuring visual contrast sensitivity across a range of spatial frequencies found no difference in brain activation patterns between ASD and NT individuals (Koh et al. 2010). In contrast, enhanced visual search has been a consistent finding of behavioral studies in ASD, and these enhancements seem to be accompanied by greater activity in regions of early visual cortex (Manjaly et al. 2007).

A large body of work in regard to visual processing in ASD has focused on face processing. Several studies have shown weaker activation in the fusiform face area in response to faces in individuals with ASD (Hubl et al. 2003; Corbett et al. 2009) whereas viewing pictures of inanimate items that are the focus of restricted interests (e.g., trains, cars) elicits greater activity in this same area (Foss-Feig et al. 2016). Furthermore, reductions in right fusiform face area activity in response to faces have been shown to be correlated with symptom severity in ASD (Scherf et al. 2015).

Structural and functional MRI have shown differences in the connectivity and lateralization of sensory networks in the brains of those with autism, including changes in the white matter integrity of auditory, language (Nagae et al. 2012; Berman et al. 2016), and visual (Thomas et al. 2011; Yamasaki et al. 2017) networks. In fact, these differences in network structure appear to be present well before ASD can be diagnosed. A large-scale study of infant siblings of children with ASD (who are thus at elevated risk of later being diagnosed with autism) found that infants who were later diagnosed with ASD showed an enlarged cortical surface area across a range of regions of interest, including auditory and visual cortices, as early as 6–12 months of age (Hazlett et al. 2017).

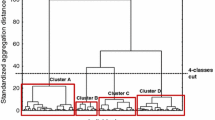

These differences in the structure of neural networks are also mirrored in functional connectivity findings. Reduced functional connectivity has been observed during traditional paradigms in ASD research such as theory-of-mind tasks, which probe the ability to infer intentions from others’ actions (Fig. 12.4A; Kana et al. 2014). Additionally, greater ASD symptom severity in regard to sensory features is correlated with reduced interhemispheric connectivity in auditory cortices (Heschl’s gyrus and superior temporal gyrus; Linke et al. 2018). Furthermore, differences in functional connectivity between voice-selective regions of the posterior superior temporal sulcus (pSTS) and nodes of the reward system predict social communication skills as indexed by both the Autism Diagnostic Observation Schedule (ADOS) and the Autism Diagnostic Interview (Fig. 12.4B; Abrams et al. 2013). Although ASD has traditionally been thought of as a disorder of hypoconnectivity, ongoing work has provided evidence for both hypo- and hyperconnectivity (Hull et al. 2017). Furthermore, both short- and long-range connectivity differ across the developmental trajectory in individuals with ASD, revealing an age-by-distance interaction (Long et al. 2016).

Fig. 12.4 Differences in functional connectivity between brain regions are a common feature of ASD. (A) Evidence for weaker functional connectivity while attributing causal explanations to the actions of others. Top, some of the actions that were explored in this study; bottom, strength of connectivity between several brain regions during the causal explanation task (both the numbers and the width of the lines reflect connectivity strength). A sample stimulus item from an experimental condition in the intentional causality vignette is depicted, with subjects asked to choose the intent of the person depicted after viewing the sequence shown in a, b, and c (correct answer is c). Adapted from Kana et al. (2014). (B) Reduced connectivity in language areas correlates with communication subtest scores of the Autism Diagnostic Observation Schedule (ADOS) and Autism Diagnostic Interview (ADI). Figure depicts connectivity strength between a seed in the posterior superior temporal sulcus (pSTS) region and a number of brain regions in the reward network. Yellow circles, brain regions of interest; red lines, functional connectivity between them, with the numbers showing the strength of the connectivity. Adapted from Abrams et al. (2013), with permission
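Functional connectivity of the kind summarized in Fig. 12.4 is commonly estimated as the correlation between the BOLD time courses of two regions, often Fisher z-transformed before group comparison. The sketch below is bare-bones and assumes already preprocessed, equally sampled time series (real pipelines also regress out motion and physiological noise and filter the data); the region labels and values are hypothetical.

```python
import math
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation between two equally long time series."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("time series must have the same length (at least 2 samples)")
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("a constant time series has undefined correlation")
    return sxy / math.sqrt(sxx * syy)


def fisher_z(r: float) -> float:
    """Variance-stabilizing transform of r (defined for -1 < r < 1)."""
    return math.atanh(r)


# Hypothetical BOLD samples (arbitrary units) from homologous auditory regions.
left_heschl = [0.2, 0.5, 0.9, 0.4, -0.1, -0.6, -0.3, 0.1]
right_heschl = [0.1, 0.6, 0.7, 0.5, 0.0, -0.4, -0.5, 0.2]
r = pearson_r(left_heschl, right_heschl)
print(f"interhemispheric r = {r:.2f}, z = {fisher_z(r):.2f}")
```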

These differences in both structural and functional connectivity between individuals with ASD and NT individuals are highly likely to affect multisensory processing, which relies on communication across both local and long-range networks. To date, however, few studies have focused specifically on multisensory function and its associated brain networks. In a recent study, Ross et al. (2017) studied NT adults carrying an autism risk-associated variant of the CNTNAP2 gene and explored whether this genetic variant mediated individual differences in multisensory gain, as measured in a speech-in-noise task. Multisensory gain was diminished in those with the risk-associated allele, who also had overall lower fractional anisotropy (FA), a measure of the structural integrity of white matter tracts, in clusters in the right precentral gyrus extending into the superior longitudinal fasciculus, as well as in the left corona radiata and the right superior temporal gyrus. An interesting functional corollary of this finding was that the effect of this genotype on multisensory gain was mediated by FA in the right precentral gyrus. Counterintuitively, decreased FA was linked to increased audiovisual gain. The authors suggest that although stronger structural and functional connectivity of the motor system is typically associated with improvements in speech perception (Du et al. 2014), this is usually the case in typical development, in which language function is left lateralized. Reduced lateralization of language function, which has been a frequent observation in autism (Floris et al. 2016), may then explain a greater reliance on the right hemisphere and reduced performance. Much more work is needed in both the functional and structural imaging realms to clarify the differences in multisensory circuits in those living with ASD.
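Fractional anisotropy, used above as an index of white-matter integrity, is computed from the three eigenvalues of the diffusion tensor estimated in each voxel. The standard formula is sketched below; the eigenvalues in the usage example are hypothetical values of the order typically seen in coherent white matter and in nearly isotropic tissue.

```python
import math


def fractional_anisotropy(l1: float, l2: float, l3: float) -> float:
    """FA from diffusion-tensor eigenvalues.

    FA = sqrt(3/2) * sqrt(sum((li - mean)^2)) / sqrt(sum(li^2)),
    which runs from 0 (isotropic diffusion) to 1 (diffusion along one axis only).
    """
    eigenvalues = (l1, l2, l3)
    norm = math.sqrt(sum(v * v for v in eigenvalues))
    if norm == 0:
        raise ValueError("all eigenvalues are zero")
    mean = sum(eigenvalues) / 3
    spread = math.sqrt(sum((v - mean) ** 2 for v in eigenvalues))
    return math.sqrt(1.5) * spread / norm


# Hypothetical eigenvalues (mm^2/s): an elongated tensor vs. a nearly isotropic one.
print(round(fractional_anisotropy(1.7e-3, 0.3e-3, 0.3e-3), 2))  # coherent tract
print(round(fractional_anisotropy(0.8e-3, 0.75e-3, 0.7e-3), 2))  # little directionality
```

Because FA is a ratio, the units of the eigenvalues cancel; lower FA means diffusion is less constrained to a single direction within the voxel.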

12.2.2 Electrophysiology

The neural underpinnings of ASD have also been investigated using electrophysiological techniques, including electroencephalography (EEG), which, unlike MRI, offers millisecond-level temporal resolution but relatively poor spatial resolution (Lee et al. 2014). EEG allows investigation of the temporal dynamics of neural activity, which can help pinpoint when atypical brain activity emerges during the processing of a sensory stimulus. Such measures may prove useful as biomarkers in both humans and animal models of ASD (for a review, see Modi and Sahin 2017).

In the auditory domain, EEG can be particularly powerful because of the high temporal precision of the auditory system and because studies can be conducted without the loud noise of the MRI scanner as a possible confound. Paralleling the behavioral results, EEG has revealed differences in auditory processing in ASD at very early stages. For example, the classic mismatch negativity (MMN), elicited by an auditory oddball (an infrequently presented variant of a repeated stimulus), has reduced amplitude in response to tone bursts in those with ASD (Abdeltawwab and Baz 2015). In an adaptation of the standard MMN paradigm, Lepistö et al. (2009) created a version of the task that either required auditory stream segregation (segregated condition) or did not (integrated condition) and found a reduced MMN in individuals with ASD only for the segregated condition. These reductions in the auditory MMN seem to be especially pronounced for speech stimuli compared with nonspeech sounds of similar spectral and temporal content (Fan and Cheng 2014) and have been interpreted as a deficit in the ability to accurately predict incoming inputs and map them onto expectations. These data can thus be viewed from the perspective of the weaknesses in predictive coding that have been hypothesized to play an important role in ASD (Van de Cruys et al. 2014).
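The MMN is typically quantified from a difference wave: the averaged response to deviants minus the averaged response to standards at each time point. In the sketch below, the measurement window (100–250 ms) and the use of a mean amplitude rather than a peak are assumptions, since studies differ on both; the function names and the single-channel epochs are hypothetical. Because the MMN is a negativity, a more negative value indicates a larger MMN, so the reduced MMN reported in ASD would appear as values closer to zero.

```python
from typing import List, Sequence, Tuple


def average_erp(epochs: Sequence[Sequence[float]]) -> List[float]:
    """Average single-trial epochs (one channel) sample by sample."""
    if not epochs:
        raise ValueError("need at least one epoch")
    return [sum(samples) / len(epochs) for samples in zip(*epochs)]


def mmn_amplitude(standard_epochs: Sequence[Sequence[float]],
                  deviant_epochs: Sequence[Sequence[float]],
                  times_ms: Sequence[float],
                  window_ms: Tuple[float, float] = (100.0, 250.0)) -> float:
    """Mean of the deviant-minus-standard difference wave inside an assumed window."""
    standard = average_erp(standard_epochs)
    deviant = average_erp(deviant_epochs)
    difference = [d - s for d, s in zip(deviant, standard)]
    start, stop = window_ms
    in_window = [v for v, t in zip(difference, times_ms) if start <= t <= stop]
    if not in_window:
        raise ValueError("no samples fall inside the measurement window")
    return sum(in_window) / len(in_window)


# Hypothetical microvolt values sampled every 50 ms from 0 to 300 ms.
times = [0, 50, 100, 150, 200, 250, 300]
standards = [[0.0, 1.0, 0.5, 0.0, -0.2, 0.0, 0.1],
             [0.1, 0.8, 0.4, 0.1, -0.1, 0.1, 0.0]]
deviants = [[0.0, 1.1, -1.5, -2.5, -1.8, -0.4, 0.0],
            [0.1, 0.9, -1.2, -2.1, -1.6, -0.2, 0.1]]
print(f"MMN mean amplitude: {mmn_amplitude(standards, deviants, times):.2f} µV")
```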

Similar to the results seen in the auditory system, examining visual activation patterns using EEG in ASD reveals both similarities to and differences from NT counterparts. For example, simple visual features such as retinotopic organization can be derived from EEG and have been found to be similar for the central visual field in ASD and NT individuals but to differ for the peripheral visual field (Frey et al. 2013). Other aspects of low-level visual brain activation that differ in ASD include boundary detection (Vandenbroucke et al. 2008), spatial acuity (Pei et al. 2014), and visual oddball detection (Baruth et al. 2010). In addition to these differences, which largely manifest as differences in response amplitude (the strength of some aspect of the EEG signal), differences in the lateralization of responses have also been noted, with the general finding of a shift toward less lateralization in ASD (Pei et al. 2014).

In addition to these changes in EEG markers of early visual function, there is growing evidence for differences in more complex aspects of visual processing. For example, there is ample evidence for differences in motion processing, and differences in the perception and processing of biological motion in particular have been a common observation (Kröger et al. 2014). Furthermore, the ability to learn regularities in a visual scene over repeated exposure (visual statistical learning) is known to be impaired in ASD, and differences in event-related potential (ERP) amplitudes can account for these performance differences in those with ASD (Jeste et al. 2015). Neural processing of explicitly social visual stimuli like faces is also atypical in ASD. For example, differences in the lateralization of EEG responses to faces are observed in children as early as 12 months and can predict ADOS scores at 36 months of age (Keehn et al. 2015). These differences seem to continue into adulthood, with adults with ASD showing less differentiation of ERP responses to upright versus inverted faces (Webb et al. 2012) as well as a reduction in the preferential processing of their own face compared with the faces of others (Cygan et al. 2014).

EEG can also be used to investigate brain rhythms (i.e., oscillations), which appear to be critical indices of information flow through cortical circuits. In particular, abnormal oscillatory power in a variety of frequency bands has been consistently identified in ASD (Simon and Wallace 2016). For example, power in the gamma band (>30 Hz), which is thought to play a key role in perceptual integration (Keil et al. 1999), has been found to be diminished in individuals with ASD (Snijders et al. 2013; Peiker et al. 2015). Alpha-band (8- to 14-Hz) abnormalities have also been observed, in the form of reduced power in individuals with ASD (Milne 2011; Murphy et al. 2014). Because alpha activity is typically thought to reflect the inhibition of task-irrelevant information (Klimesch et al. 2007), these reductions in alpha power may represent the neural correlates of deficits in sensory filtering. Furthermore, these alterations in oscillatory dynamics can be tied to ASD symptomology. For example, differences in both gamma and theta (4- to 7-Hz) activity in response to speech can predict the degree of verbal deficit and symptom severity in individuals with ASD (Jochaut et al. 2015). Differences in oscillatory power also seem to depend on task demands. Indeed, although gamma power is typically reduced in individuals with ASD during sensory processing, it is increased relative to NT individuals during the resting state, i.e., when subjects are not performing an explicit task (Cornew et al. 2012; Wang et al. 2013).
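Band-limited power of the kind compared across groups above is estimated from a power spectrum. The sketch below is a dependency-free illustration, assuming a short, artifact-free, single-channel signal. The 80 Hz upper edge for gamma and the function names are assumptions. Real pipelines typically use windowed, averaged estimates such as Welch's method (e.g., `scipy.signal.welch`) rather than a single raw periodogram.

```python
import cmath
import math

# Band edges follow the text (theta 4-7 Hz, alpha 8-14 Hz, gamma > 30 Hz);
# the 80 Hz upper edge for gamma is an illustrative assumption.
BANDS_HZ = {"theta": (4.0, 7.0), "alpha": (8.0, 14.0), "gamma": (30.0, 80.0)}


def power_spectrum(signal, fs_hz):
    """Unscaled one-sided power spectrum, |DFT|^2 / n, via a direct DFT.

    A direct O(n^2) DFT keeps the sketch dependency-free; it is only
    practical for short signals.
    """
    n = len(signal)
    mean = sum(signal) / n
    x = [v - mean for v in signal]  # remove the DC offset
    spectrum = []
    for k in range(n // 2 + 1):
        coeff = sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        spectrum.append((k * fs_hz / n, abs(coeff) ** 2 / n))
    return spectrum


def band_power(spectrum, band_hz):
    """Sum of spectral power over bins whose frequency lies inside band_hz."""
    lo, hi = band_hz
    return sum(p for f, p in spectrum if lo <= f <= hi)


# Synthetic 1 s "recording": a strong 10 Hz (alpha) and a weak 40 Hz (gamma) component.
fs = 256.0
sig = [2.0 * math.sin(2 * math.pi * 10 * t / fs) + 0.5 * math.sin(2 * math.pi * 40 * t / fs)
       for t in range(256)]
spec = power_spectrum(sig, fs)
powers = {name: band_power(spec, band) for name, band in BANDS_HZ.items()}
print(powers)
```

Because total power varies between recordings, group comparisons often also use relative power, that is, band power divided by total power.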

These differences in oscillatory function in ASD become increasingly important as knowledge grows concerning the central role of oscillatory processes in information encoding and transfer. Perhaps most important in the context of prevailing theories of autism is the fact that these oscillations index processes at various levels of the processing hierarchy, under a simple framework in which the higher the frequency of the oscillation, the more local the neural process that underlies it. Thus, high-frequency (i.e., gamma) oscillations are generally thought to reflect processes within very local circuits, whereas lower frequency oscillations are thought to index communication across broad regions. Further complicating matters, these oscillatory frequencies are not independent of one another, and changes within one frequency band almost invariably result in changes in other frequency bands, through processes described as phase-phase coupling and phase-amplitude coupling (Canolty and Knight 2010). Hence, changes in one frequency band generally propagate throughout the oscillatory network.
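Phase-amplitude coupling can be quantified in several ways. One common measure is the mean vector length, which asks whether the amplitude of a fast rhythm is consistently larger at particular phases of a slow rhythm. The following sketch assumes an ideal FFT-domain band-pass filter and a normalized variant of the measure (divided by mean amplitude). Both choices, and all names, are illustrative assumptions. Real analyses use proper filters (e.g., FIR filters followed by `scipy.signal.hilbert`) plus surrogate statistics.

```python
import cmath
import math


def dft(x):
    """Direct O(n^2) discrete Fourier transform (keeps the sketch dependency-free)."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def band_analytic(spectrum, fs_hz, lo_hz, hi_hz):
    """Analytic signal of the [lo_hz, hi_hz] band, via an ideal FFT-domain filter.

    Keeping only positive-frequency bins (doubled) and inverting gives the
    band-passed analytic signal, whose angle is phase and modulus is amplitude.
    """
    n = len(spectrum)
    kept = {k: 2 * spectrum[k] for k in range(1, (n + 1) // 2)
            if lo_hz <= k * fs_hz / n <= hi_hz}
    return [sum(c * cmath.exp(2j * math.pi * k * t / n) for k, c in kept.items()) / n
            for t in range(n)]


def normalized_mvl(signal, fs_hz, phase_band, amp_band):
    """|mean(A * exp(i*phi))| / mean(A): 0 = no coupling, larger = stronger."""
    spectrum = dft(signal)
    phase = [cmath.phase(z) for z in band_analytic(spectrum, fs_hz, *phase_band)]
    amp = [abs(z) for z in band_analytic(spectrum, fs_hz, *amp_band)]
    vector = sum(a * cmath.exp(1j * p) for a, p in zip(amp, phase)) / len(amp)
    return abs(vector) / (sum(amp) / len(amp))


# Synthetic 2 s signals at 200 Hz: a 5 Hz (theta) rhythm plus 40 Hz (gamma)
# activity whose amplitude either follows theta phase or stays constant.
fs, n = 200.0, 400
theta = [math.cos(2 * math.pi * 5 * t / fs) for t in range(n)]
carrier = [0.5 * math.cos(2 * math.pi * 40 * t / fs) for t in range(n)]
coupled = [th + (1 + 0.8 * th) * c for th, c in zip(theta, carrier)]
uncoupled = [th + c for th, c in zip(theta, carrier)]
for name, sig in (("coupled", coupled), ("uncoupled", uncoupled)):
    print(name, round(normalized_mvl(sig, fs, (4.0, 7.0), (30.0, 50.0)), 3))
```

The amplitude band is deliberately wider than the 40 Hz carrier because amplitude modulation at 5 Hz spreads gamma energy into sidebands at 35 and 45 Hz, which must be retained to recover the envelope.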

In the context of sensory processing, an important finding has been the apparent importance of oscillations and oscillatory coupling for communication across the senses (cf. Keil and Senkowski, Chap. 10). Studies have shown that, even in the earliest regions of the sensory cortex (e.g., primary auditory cortex), input from other senses can change the nature of the oscillations in that region (Thorne et al. 2011; Romei et al. 2012). For example, through a process known as phase reset, visual inputs have been shown to reset ongoing oscillations in the primary auditory cortex, thus changing the nature of information exchange at the earliest stages of auditory cortical processing. Although yet to be firmly established, such cross-modal phase resetting may provide valuable predictive information about the nature of a multisensory stimulus. For example, the articulatory movements of the mouth (i.e., visual speech) precede the audible speech signal. If such visual information has early access to the auditory cortex, it can provide predictive information about the auditory input that is about to arrive and thus boost (or suppress) the gain of that signal. Such findings may be particularly relevant to ASD, given the ample evidence for oscillatory dysfunction (Simon and Wallace 2016; Larrain-Valenzuela et al. 2017), multisensory temporal deficits (Brock et al. 2002; Stevenson et al. 2014a), and weaknesses in speech comprehension (Woynaroski et al. 2013; Stevenson et al. 2017) in individuals with ASD.
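Phase reset is often indexed by inter-trial phase coherence (ITPC). If a visual input resets an ongoing auditory-cortex oscillation, the phase of that oscillation should become more consistent across trials after the input. The sketch below assumes the phase on each trial has already been extracted (e.g., by wavelet or Hilbert analysis). The trial phases are invented for illustration, and the function name is hypothetical.

```python
import cmath
import math


def itpc(phases_rad):
    """Inter-trial phase coherence: length of the mean unit phase vector.

    Ranges from 0 (phases uniformly scattered across trials) to 1 (identical
    phase on every trial, as expected after a consistent phase reset).
    """
    if not phases_rad:
        raise ValueError("need at least one trial")
    mean_vector = sum(cmath.exp(1j * p) for p in phases_rad) / len(phases_rad)
    return abs(mean_vector)


# Hypothetical phases (radians) of an ongoing oscillation at one time point
# on each of 8 trials, before and after a cross-modal (visual) input.
before = [2 * math.pi * k / 8 for k in range(8)]      # evenly scattered
after = [0.3 + 0.1 * ((-1) ** k) for k in range(8)]    # tightly clustered
print(round(itpc(before), 3), round(itpc(after), 3))
```

Because ITPC ignores amplitude, an increase after the input points to phase alignment rather than to a simple added evoked response, although separating the two in real data requires additional controls.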

12.3 Developmental Trajectory of Sensory Processing in Autism Spectrum Disorder

12.3.1 Infancy and Early Childhood

ASD cannot currently be reliably diagnosed in children younger than 2 years of age (Lord et al. 2006; Luyster et al. 2009). Therefore, younger siblings of children with ASD, who are at a higher risk of being later diagnosed with ASD, provide a useful avenue of research for assessing early signs of ASD and developing potential biomarkers for the progression to autism (Ozonoff et al. 2011). Sensory function and the associated brain networks undergo dramatic changes in early life, and detailed characterization of neurotypical developmental trajectories provides an opportunity to delineate when maturation begins to deviate from the typical developmental pattern toward patterns characteristic of disorders such as autism.

In the auditory domain, infants at high risk for ASD show reduced habituation and sensitivity at 9 months in evoked EEG responses to repeated pure tones in an auditory MMN paradigm (Guiraud et al. 2011). In a similar paradigm using consonant-vowel stimuli (to assess the processing of more speech-like stimuli), high-risk infants showed hypersensitivity to the standard but responses to the deviant similar to those of infants at low risk for ASD (Seery et al. 2014). Although high-risk infants show a developmental progression similar to that of low-risk infants with regard to specialization for native speech sounds, they do not show the left-lateralized response to speech seen in low-risk infants between 6 and 12 months (Seery et al. 2013). This lack of left-lateralized responses to language is also observed in somewhat older children (i.e., 12–24 months old), and this pattern appears to worsen with age (Eyler et al. 2012). Another early auditory warning sign in infants who are later diagnosed with ASD is a failure to orient to their own name, as early as 9 months of age (Miller et al. 2017). Indeed, this lack of response, generally captured within the domain of hyporesponsivity, is often one of the earliest concerns parents report about their child.

In the visual domain, gaze-tracking studies have been conducted with infants at risk for ASD as a means of assessing sensory attention and as an early marker of how infants interact with their environment (Falck-Ytter et al. 2013). A preference for looking at nonsocial images, as measured by fixation time and number of saccades, seems to emerge as early as 15 months of age in children who will progress to autism (Pierce et al. 2016). In a longitudinal study tracking gaze to faces, fixation on the eye region of a face stimulus declined between 2 and 6 months of age in infants who would later be diagnosed with ASD (Jones and Klin 2013). As with the atypical lateralization for language observed in high-risk infants, a similar pattern emerges in face perception, where high-risk infants show left hemisphere lateralization for faces while low-risk infants show more right hemisphere lateralization (Keehn et al. 2015). In a study measuring resting-state EEG at multiple time points in the first 2 years of life, spectral power was lower in high-risk infants across the delta, theta, alpha, beta, and gamma frequency bands but converged with that of low-risk infants in all frequency bands by 24 months (Tierney et al. 2012). A possible confound in these studies is a difference in signal-to-noise ratio between low- and high-risk infants; however, such differences have not been systematically characterized.

Furthermore, as with differences in connectivity, differences in EEG power noted for individuals with ASD may be more nuanced than a mere reduction or enhancement of spectral power. For example, reduced power in the alpha band at frontal electrodes seems to predict worse expressive language (Levin et al. 2017), whereas increased power in the theta band at frontal electrodes is associated with greater sensory hyporesponsiveness (Simon et al. 2017). Clearly, much more work is needed to better understand how sensory development differs between ASD and NT infants, and to clarify how these differences relate to later differences in cognitive abilities. Additionally, little work has extended these studies into the multisensory arena to see if changes in multisensory function may serve as more sensitive indices of risk for progression to autism.

12.3.2 Adolescence and Young Adulthood

Many of the same patterns of neural processing differences between ASD and NT individuals persist into adolescence and young adulthood, particularly with regard to social stimuli such as speech and faces. Reduced left lateralization in language is present in adolescents and young adults with ASD across a wide range of tasks (Herringshaw et al. 2016), and individual differences in lateralization are tied to performance deficits in language tasks like letter fluency, which measures word knowledge and the ability to retrieve words (Kleinhans et al. 2008). Reduced specialization for native speech sounds also persists through adolescence (DePape et al. 2012). Furthermore, differences in functional connectivity between children, adolescents, and young adults with ASD and their NT peers are noted in language-processing areas across all three age groups (Lee et al. 2017).

Gaze differences observed in infancy seem to continue throughout development. School-age children with autism look at faces less in videos of social scenes (Rice et al. 2012) as well as in live social interactions (Noris et al. 2012). Furthermore, children with ASD frequently do not shift their gaze within the context of a social scene, such as following a back-and-forth conversation between two speakers (Hosozawa et al. 2012). Reduced fixation time on the eyes is also observed through adolescence and young adulthood in individuals with ASD (Sasson 2006; Frazier et al. 2017), and gaze may instead be shifted toward the mouth (Fig. 12.5; Neumann et al. 2006). These differences in gaze processing appear to continue into adulthood (Zalla et al. 2016).

Fig. 12.5 Differences in gaze characteristics in ASD. (A) Heat maps representing the time spent viewing various locations on the face. Note the significant differences in gaze patterns, with those with ASD seemingly avoiding looking at the eyes. (B) Quantification of the amount of time spent looking at the eyes versus the mouth. Error bars are SDs. *P < 0.05; ***P < 0.001. Adapted from Neumann et al. (2006), with permission

In regard to multisensory function, much work is still needed to delineate the developmental trajectories associated with both ASD and NT development. Initial work has suggested that these trajectories show some convergence in adolescence (de Boer-Schellekens et al. 2013b; Beker et al. 2018), but it is unclear how the relationship between multisensory integration and autism symptomology changes over the lifetime. In typical development, aspects of multisensory function such as audiovisual temporal acuity mature as late as adolescence or early adulthood (Hillock-Dunn and Wallace 2012). Such a delayed developmental trajectory may be of great interventional utility because it suggests that multisensory plasticity remains quite robust well into adolescence, thus providing great opportunity to strengthen multisensory abilities through training-based approaches.

12.4 Connecting Sensory Processing to Clinical Symptoms

12.4.1 Atypical Sensory Processing Patterns

Historically, sensory processing issues in individuals with autism have been treated as unrelated to impairments in the other core domains of ASD (Rogers and Ozonoff 2005). This seems shortsighted because these domains depend heavily on the integrity of incoming sensory information and its processing. Furthermore, a better understanding of sensory features will benefit the clinical assessment of ASD, including both diagnosis and treatment strategies (Schaaf and Lane 2015).

Abnormal sensory processing in individuals with ASD is typically broken down into three broad patterns: hypersensitivity, hyposensitivity, and sensory seeking (Baranek et al. 2006; Ben-Sasson et al. 2009). It is important to note that these distinctions and divisions have historically been made on the basis of survey and observational data but that there is a growing emphasis on more rigorous empirical characterization of sensory function using psychophysical and behavioral task batteries. These patterns can be seen across multiple sensory modalities even within the same individual and have been noted not only in ASD but also in other clinical groups characterized by developmental delays such as Down syndrome (Boyd et al. 2010). Furthermore, many of these abnormalities persist into adulthood (Crane et al. 2009), although individuals with ASD seem to “catch up” by adulthood to their NT peers on a subset of tasks (Beker et al. 2018).

Recent work has sought to bridge sensory function and the more classic domains of clinical dysfunction (i.e., social communication and restricted interests and repetitive behaviors) and strongly suggests that abnormalities across these core domains of ASD are related, at least in part, to differences in sensory function. For example, in children with ASD, sensory hyperresponsiveness is correlated with an increased presence of repetitive behaviors (like stereotypical hand flapping), whereas sensory seeking is associated with the presence of ritualistic behaviors and routines (Boyd et al. 2010). In a large-scale study, Mayer (2017) compared the presence of abnormal sensory processing patterns with specific autism traits as measured by the AQ. Across both NT and ASD adults, greater levels of abnormal sensory processing (failure to register sensory stimuli, sensory seeking, and sensory sensitivity) were correlated with lower functioning in multiple subdomains of autism symptomology (social skills, attention switching, and communication). In a more specific example that links directly to auditory function, difficulties in focusing on an auditory stream of interest in the presence of distractors (termed auditory filtering) have been connected to cognitive problems in the classroom (Ashburner et al. 2008). The Short Sensory Profile (McIntosh et al. 1999) characterizes auditory filtering by asking caregivers how well the child performs day-to-day activities in the presence of noise; 50% or more of caregivers of children with ASD marked “always” or “frequently” for items like “doesn’t respond when name is called but you know the child’s hearing is OK” and “is distracted or has trouble functioning if there is a lot of noise around.” More recent work has framed these perceptual differences as an increased capacity for processing sound, which carries both advantages (e.g., superior pitch perception) and disadvantages (e.g., a higher propensity for sensory overload; Remington and Fairnie 2017). This study reflects a growing emphasis in research on sensory perception in ASD on both the deficits and the benefits associated with differences in sensory abilities.

Atypical sensory processing has also been linked to symptom severity in the social, cognitive, and communication domains (Linke et al. 2018) and to self-injurious behavior (Duerden et al. 2012). Furthermore, these connections are found not only in individuals with ASD but also in individuals who do not have ASD but score high on measures of autistic traits (Mayer 2017). This suggests that the relationship between abnormal sensory processing and autistic symptoms occurs not just within the autism spectrum but also across the full range of clinical and subclinical autism symptomology.

12.4.2 Speech and Communication Skills

Several studies have shown that abnormal sensory processing also affects how individuals with ASD communicate and interact with their environment. For example, hyporesponsiveness is associated with increased severity on measures of social communication (Watson et al. 2011). In a study that combined laboratory measures of face processing with observation of live social interaction, facial recognition was a significant predictor of measures of playing alone and with others (Corbett et al. 2014). In particular, higher scores on a delayed recognition-of-faces task were associated with lower levels of self-play and of playing on playground equipment alone and with higher levels of playing with others. Finally, a growing body of work has begun to show strong links between audiovisual temporal function and clinical measures of speech perception (Woynaroski et al. 2013; Stevenson et al. 2014b) and receptive language functioning (Patten et al. 2014). One illustration of these links comes from examining the relationship between audiovisual temporal function and reports of the McGurk illusion (cf. Sect. 12.1.3). Here, a strong negative relationship was found between audiovisual temporal acuity (as measured by the size of the temporal window of integration) and reports of the McGurk illusion, suggesting that those with larger windows combine visual and auditory speech signals differently from those with smaller windows (Fig. 12.6; Stevenson et al. 2014a).

Fig. 12.6 Connections between temporal acuity and multisensory illusory perception. (A) Children with ASD and TD children show differences in their perception of a multisensory speech illusion (i.e., the McGurk effect). TD children report the illusory percept /da/ more often than children with ASD. Error bars are SEs of the mean. *P < 0.05. (B) Differences in susceptibility to the McGurk effect are associated with differences in multisensory temporal acuity (i.e., size of the temporal binding window [TBW]) in ASD for flash-beep (P < 0.05), tool (P < 0.03), and speech (P < 0.001) stimuli, such that larger TBWs (worse temporal acuity) are associated with reduced susceptibility to the McGurk effect. Note the strong negative relationship for speech stimuli, in which large TBWs are associated with less reporting of the McGurk illusion. Data are from children with ASD. Adapted from Stevenson et al. (2014a), with permission
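The TBW is commonly estimated from a simultaneity-judgment task in which participants report whether an auditory and a visual stimulus, presented at varying stimulus onset asynchronies (SOAs), occurred at the same time. The following sketch is a minimal illustration under stated assumptions: the window is taken as the SOA range over which "synchronous" reports stay at or above 75% of their peak, with crossings found by linear interpolation, whereas published studies typically fit sigmoid or Gaussian functions. The data, the sign convention for SOAs, and the function name are hypothetical.

```python
def temporal_binding_window(soas_ms, p_sync, criterion=0.75):
    """Width (ms) of the SOA range where p_sync stays at or above criterion * peak.

    soas_ms must be sorted ascending; p_sync[i] is the proportion of
    "synchronous" reports at soas_ms[i]. Crossings on either side of the
    peak are located by linear interpolation between neighbouring SOAs.
    """
    if len(soas_ms) != len(p_sync):
        raise ValueError("soas_ms and p_sync must have the same length")
    peak = max(range(len(p_sync)), key=lambda i: p_sync[i])
    thresh = criterion * p_sync[peak]

    def crossing(i_in, i_out):
        # i_in is at/above threshold and i_out below it, so the slope is nonzero.
        x0, y0, x1, y1 = soas_ms[i_in], p_sync[i_in], soas_ms[i_out], p_sync[i_out]
        return x0 + (thresh - y0) * (x1 - x0) / (y1 - y0)

    # Walk outward from the peak until the curve first drops below threshold.
    left = next((crossing(i, i - 1) for i in range(peak, 0, -1)
                 if p_sync[i - 1] < thresh), None)
    right = next((crossing(i, i + 1) for i in range(peak, len(p_sync) - 1)
                  if p_sync[i + 1] < thresh), None)
    if left is None or right is None:
        raise ValueError("curve does not drop below the criterion on both sides")
    return right - left


# Hypothetical data (negative SOA = auditory first, by assumption).
soas = [-300, -200, -100, 0, 100, 200, 300]
narrow = [0.10, 0.30, 0.80, 0.95, 0.90, 0.60, 0.20]
wide = [0.20, 0.60, 0.90, 0.95, 0.90, 0.60, 0.20]
print(temporal_binding_window(soas, narrow), temporal_binding_window(soas, wide))
```

Given per-participant TBW widths and McGurk report rates, a negative relationship like the one described above could then be assessed with a correlation coefficient, for example `statistics.correlation` (Python 3.10 and later).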

12.5 Summary and Future Directions of Research

Changes in auditory and multisensory (i.e., audiovisual) processing and the associated brain networks are a common feature of ASD. Although only recently added to the formal diagnostic framework for ASD, sensory features have long been recognized, but poorly understood, as a characteristic of the autism phenotype. Recent work has begun to establish links between sensory function and the more classic characteristics of autism, with the largest body of evidence showing strong relationships with social communication. Such links make great intuitive sense because higher order constructs such as communication skills and social interactive abilities are scaffolded on a sensory and multisensory foundation, in which the integrity of sensory information processing and the proper synthesis and integration across the sensory streams are key to social communicative development.

From a neurobiological perspective, a great deal of additional work is needed to better understand the circuit and network changes within sensory areas in ASD and how these changes relate to changes in brain regions supporting more cognitive abilities such as social communication. Although much of the prior neurophysiological and neuroimaging work in ASD has focused on differences in brain regions supporting these “higher order” abilities, there is a growing corpus of work oriented toward better elucidating differences in sensory regions of the brain in individuals with autism. Much like the behavioral research that has begun to establish strong associations between sensory function and social communication, these studies now need to address how processing differences in sensory regions and circuits impact the changes that are seen in brain regions responsible for more cognitively directed functions. Key nodes in this analysis will likely be multisensory regions that sit at the transition between unisensory domains of the auditory and visual cortices and regions of the parietal, temporal, and frontal cortices and that have been implicated in higher order functions including attention, executive control, and social cognition. Two of the most intriguing of these regions are the pSTS (cf. Beauchamp, Chap. 8) and areas along the intraparietal sulcus. In addition to being strongly implicated in the integration of auditory, visual, and tactile information, these areas are also centrally involved in processes integral for speech comprehension (Venezia et al. 2017) and attentional allocation (Corbetta and Shulman 2002), respectively.

Finally, greater knowledge of sensory processing in autism is likely to play an important role in intervention and remediation. Perhaps more important than ameliorating the altered sensory characteristics seen in ASD is the potential for this work to have cascading effects on domains such as social communication. The core question here is whether sensory-based training approaches focused on strengthening aspects of sensory function will have secondary effects on processes dependent on the integrity of this sensory information and the manner in which it is integrated. There is a strong developmental emphasis to this point because the maturation of brain regions responsible for sensory processes takes place before the maturation of those regions more integral for cognitive abilities, and early intervention focused in the sensory realm may set the stage for improving the developmental trajectory of these higher order regions.

References

Abdeltawwab, M. M., & Baz, H. (2015). Automatic pre-attentive auditory responses: MMN to tone burst frequency changes in autistic school-age children. The Journal of International Advanced Otology, 11(1), 36–41.

Abrams, D. A., Lynch, C. J., Cheng, K. M., Phillips, J., Supekar, K., Ryali, S., Uddin, L. Q., & Menon, V. (2013). Underconnectivity between voice-selective cortex and reward circuitry in children with autism. Proceedings of the National Academy of Sciences of the United States of America, 110(29), 12060–12065.

Annaz, D., Campbell, R., Coleman, M., Milne, E., & Swettenham, J. (2012). Young children with autism spectrum disorder do not preferentially attend to biological motion. Journal of Autism and Developmental Disorders, 42(3), 401–408.

Ashburner, J., Ziviani, J., & Rodger, S. (2008). Sensory processing and classroom emotional, behavioral, and educational outcomes in children with autism spectrum disorder. The American Journal of Occupational Therapy, 62(5), 564–573.

Baranek, G. T., David, F. J., Poe, M. D., Stone, W. L., & Watson, L. R. (2006). Sensory Experiences Questionnaire: Discriminating sensory features in young children with autism, developmental delays, and typical development. Journal of Child Psychology and Psychiatry, 47(6), 591–601.

Baruth, J. M., Casanova, M. F., Sears, L., & Sokhadze, E. (2010). Early-stage visual processing abnormalities in high-functioning autism spectrum disorder (ASD). Translational Neuroscience, 1(2), 177–187.

Baum, S. H., Stevenson, R. A., & Wallace, M. T. (2015). Behavioral, perceptual, and neural alterations in sensory and multisensory function in autism spectrum disorder. Progress in Neurobiology, 134, 140–160.

Beker, S., Foxe, J. J., & Molholm, S. (2018). Ripe for solution: Delayed development of multisensory processing in autism and its remediation. Neuroscience & Biobehavioral Reviews, 84, 182–192.

Bennetto, L., Keith, J. M., Allen, P. D., & Luebke, A. E. (2017). Children with autism spectrum disorder have reduced otoacoustic emissions at the 1 kHz mid-frequency region. Autism Research, 10(2), 337–345.

Ben-Sasson, A., Hen, L., Fluss, R., Cermak, S. A., Engel-Yeger, B., & Gal, E. (2009). A meta-analysis of sensory modulation symptoms in individuals with autism spectrum disorders. Journal of Autism and Developmental Disorders, 39(1), 1–11.

Berman, J. I., Edgar, J. C., Blaskey, L., Kuschner, E. S., Levy, S. E., Ku, M., Dell, J., & Roberts, T. P. L. (2016). Multimodal diffusion-MRI and MEG assessment of auditory and language system development in autism spectrum disorder. Frontiers in Neuroanatomy, 10, 30.

Bertone, A., Mottron, L., Jelenic, P., & Faubert, J. (2005). Enhanced and diminished visuo-spatial information processing in autism depends on stimulus complexity. Brain, 128(10), 2430–2441.

Blake, R., Turner, L. M., Smoski, M. J., Pozdol, S. L., & Stone, W. L. (2003). Visual recognition of biological motion is impaired in children with autism. Psychological Science, 14(2), 151–157.

Bonnel, A., Mottron, L., Peretz, I., Trudel, M., Gallun, E., & Bonnel, A.-M. (2003). Enhanced pitch sensitivity in individuals with autism: A signal detection analysis. Journal of Cognitive Neuroscience, 15(2), 226–235.

Bonnel, A., McAdams, S., Smith, B., Berthiaume, C., Bertone, A., Ciocca, V., Burack, J. A., & Mottron, L. (2010). Enhanced pure-tone pitch discrimination among persons with autism but not Asperger syndrome. Neuropsychologia, 48(9), 2465–2475.

Boyd, B. A., Baranek, G. T., Sideris, J., Poe, M. D., Watson, L. R., Patten, E., & Miller, H. (2010). Sensory features and repetitive behaviors in children with autism and developmental delays. Autism Research, 3(2), 78–87.

Brandwein, A. B., Foxe, J. J., Butler, J. S., Russo, N. N., Altschuler, T. S., Gomes, H., & Molholm, S. (2013). The development of multisensory integration in high-functioning autism: High-density electrical mapping and psychophysical measures reveal impairments in the processing of audiovisual inputs. Cerebral Cortex, 23(6), 1329–1341.

Brock, J., Brown, C. C., Boucher, J., & Rippon, G. (2002). The temporal binding deficit hypothesis of autism. Development and Psychopathology, 14(2), 209–224.

Canolty, R. T., & Knight, R. T. (2010). The functional role of cross-frequency coupling. Trends in Cognitive Sciences, 14(11), 506–515.

Chambon, V., Farrer, C., Pacherie, E., Jacquet, P. O., Leboyer, M., & Zalla, T. (2017). Reduced sensitivity to social priors during action prediction in adults with autism spectrum disorders. Cognition, 160, 17–26.

Christison-Lagay, K. L., Gifford, A. M., & Cohen, Y. E. (2015). Neural correlates of auditory scene analysis and perception. International Journal of Psychophysiology, 95(2), 238–245.

Corbett, B. A., Carmean, V., Ravizza, S., Wendelken, C., Henry, M. L., Carter, C., & Rivera, S. M. (2009). A functional and structural study of emotion and face processing in children with autism. Psychiatry Research: Neuroimaging, 173(3), 196–205.

Corbett, B. A., Newsom, C., Key, A. P., Qualls, L. R., & Edmiston, E. (2014). Examining the relationship between face processing and social interaction behavior in children with and without autism spectrum disorder. Journal of Neurodevelopmental Disorders, 6(1), 35.

Corbetta, M., & Shulman, G. L. (2002). Control of goal-directed and stimulus-driven attention in the brain. Nature Reviews Neuroscience, 3(3), 215–229.

Cornew, L., Roberts, T. P. L., Blaskey, L., & Edgar, J. C. (2012). Resting-state oscillatory activity in autism spectrum disorders. Journal of Autism and Developmental Disorders, 42(9), 1884–1894.

Crane, L., Goddard, L., & Pring, L. (2009). Sensory processing in adults with autism spectrum disorders. Autism, 13(3), 215–228.

Croydon, A., Karaminis, T., Neil, L., Burr, D., & Pellicano, E. (2017). The light-from-above prior is intact in autistic children. Journal of Experimental Child Psychology, 161, 113–125.

Cygan, H. B., Tacikowski, P., Ostaszewski, P., Chojnicka, I., & Nowicka, A. (2014). Neural correlates of own name and own face detection in autism spectrum disorder. PLoS One, 9(1), e86020.

Dakin, S., & Frith, U. (2005). Vagaries of visual perception in autism. Neuron, 48(3), 497–507.

de Boer-Schellekens, L., Eussen, M., & Vroomen, J. (2013a). Diminished sensitivity of audiovisual temporal order in autism spectrum disorder. Frontiers in Integrative Neuroscience, 7, 8.

de Boer-Schellekens, L., Keetels, M., Eussen, M., & Vroomen, J. (2013b). No evidence for impaired multisensory integration of low-level audiovisual stimuli in adolescents and young adults with autism spectrum disorders. Neuropsychologia, 51(14), 3004–3013.

Demopoulos, C., & Lewine, J. D. (2016). Audiometric profiles in autism spectrum disorders: Does subclinical hearing loss impact communication? Autism Research, 9(1), 107–120.

DePape, A.-M. R., Hall, G. B. C., Tillmann, B., & Trainor, L. J. (2012). Auditory processing in high-functioning adolescents with autism spectrum disorder. PLoS One, 7(9), e44084.

Du, Y., Buchsbaum, B. R., Grady, C. L., & Alain, C. (2014). Noise differentially impacts phoneme representations in the auditory and speech motor systems. Proceedings of the National Academy of Sciences of the United States of America, 111(19), 7126–7131.

Duerden, E. G., Oatley, H. K., Mak-Fan, K. M., McGrath, P. A., Taylor, M. J., Szatmari, P., & Roberts, S. W. (2012). Risk factors associated with self-injurious behaviors in children and adolescents with autism spectrum disorders. Journal of Autism and Developmental Disorders, 42(11), 2460–2470.

Eyler, L. T., Pierce, K., & Courchesne, E. (2012). A failure of left temporal cortex to specialize for language is an early emerging and fundamental property of autism. Brain, 135(3), 949–960.

Falck-Ytter, T., Bölte, S., Gredebäck, G., Klin, A., Martinez-Conde, S., Pollick, F., Bolton, P., Charman, T., Baird, G., Johnson, M., Gerig, G., Hazlett, H., Schultz, R., Styner, M., Zwaigenbaum, L., & Piven, J. (2013). Eye tracking in early autism research. Journal of Neurodevelopmental Disorders, 5(1), 28.

Fan, Y.-T., & Cheng, Y. (2014). Atypical mismatch negativity in response to emotional voices in people with autism spectrum conditions. PLoS One, 9(7), e102471.

Flevaris, A. V., & Murray, S. O. (2014). Orientation-specific surround suppression in the primary visual cortex varies as a function of autistic tendency. Frontiers in Human Neuroscience, 8, 1017.

Floris, D. L., Barber, A. D., Nebel, M. B., Martinelli, M., Lai, M.-C., Crocetti, D., Baron-Cohen, S., Suckling, J., Pekar, J. J., & Mostofsky, S. H. (2016). Atypical lateralization of motor circuit functional connectivity in children with autism is associated with motor deficits. Molecular Autism, 7(1), 35.

Foss-Feig, J. H., Kwakye, L. D., Cascio, C. J., Burnette, C. P., Kadivar, H., Stone, W. L., & Wallace, M. T. (2010). An extended multisensory temporal binding window in autism spectrum disorders. Experimental Brain Research, 203(2), 381–389.

Foss-Feig, J. H., Tadin, D., Schauder, K. B., & Cascio, C. J. (2013). A substantial and unexpected enhancement of motion perception in autism. The Journal of Neuroscience, 33(19), 8243–8249.

Foss-Feig, J. H., McGugin, R. W., Gauthier, I., Mash, L. E., Ventola, P., & Cascio, C. J. (2016). A functional neuroimaging study of fusiform response to restricted interests in children and adolescents with autism spectrum disorder. Journal of Neurodevelopmental Disorders, 8, 15.

Foxe, J. J., Molholm, S., Del Bene, V. A., Frey, H.-P., Russo, N. N., Blanco, D., Saint-Amour, D., & Ross, L. A. (2015). Severe multisensory speech integration deficits in high-functioning school-aged children with autism spectrum disorder (ASD) and their resolution during early adolescence. Cerebral Cortex, 25(2), 298–312.

Frazier, T. W., Strauss, M., Klingemier, E. W., Zetzer, E. E., Hardan, A. Y., Eng, C., & Youngstrom, E. A. (2017). A meta-analysis of gaze differences to social and nonsocial information between individuals with and without autism. Journal of the American Academy of Child & Adolescent Psychiatry, 56(7), 546–555.

Frey, H.-P., Molholm, S., Lalor, E. C., Russo, N. N., & Foxe, J. J. (2013). Atypical cortical representation of peripheral visual space in children with an autism spectrum disorder. European Journal of Neuroscience, 38(1), 2125–2138.

Gervais, H., Belin, P., Boddaert, N., Leboyer, M., Coez, A., Sfaello, I., Barthélémy, C., Brunelle, F., Samson, Y., & Zilbovicius, M. (2004). Abnormal cortical voice processing in autism. Nature Neuroscience, 7(8), 801–802.

Glover, G. H. (2011). Overview of functional magnetic resonance imaging. Neurosurgery Clinics of North America, 22(2), 133–139.

Gomot, M., Belmonte, M. K., Bullmore, E. T., Bernard, F. A., & Baron-Cohen, S. (2008). Brain hyper-reactivity to auditory novel targets in children with high-functioning autism. Brain, 131(9), 2479–2488.

Guiraud, J. A., Kushnerenko, E., Tomalski, P., Davies, K., Ribeiro, H., Johnson, M. H., & BASIS Team. (2011). Differential habituation to repeated sounds in infants at high risk for autism. Neuroreport, 22(16), 845–849.

Haigh, S. M., Heeger, D. J., Heller, L. M., Gupta, A., Dinstein, I., Minshew, N. J., & Behrmann, M. (2016). No difference in cross-modal attention or sensory discrimination thresholds in autism and matched controls. Vision Research, 121, 85–94.

Happé, F. (1999). Autism: Cognitive deficit or cognitive style? Trends in Cognitive Sciences, 3(6), 216–222.

Harris, H., Israeli, D., Minshew, N., Bonneh, Y., Heeger, D. J., Behrmann, M., & Sagi, D. (2015). Perceptual learning in autism: Over-specificity and possible remedies. Nature Neuroscience, 18(11), 1574–1576.

Hazlett, H. C., Gu, H., Munsell, B. C., Kim, S. H., Styner, M., Wolff, J. J., Elison, J. T., Swanson, M. R., Zhu, H., Botteron, K. N., Collins, D. L., Constantino, J. N., Dager, S. R., Estes, A. M., Evans, A. C., Fonov, V. S., Gerig, G., Kostopoulos, P., McKinstry, R. C., Pandey, J., Paterson, S., Pruett, J. R., Schultz, R. T., Shaw, D. W., Zwaigenbaum, L., Piven, J., & The IBIS Network. (2017). Early brain development in infants at high risk for autism spectrum disorder. Nature, 542(7641), 348–351.

Heaton, P. (2003). Pitch memory, labelling and disembedding in autism. Journal of Child Psychology and Psychiatry, 44(4), 543–551.

Heaton, P., Williams, K., Cummins, O., & Happé, F. (2008). Autism and pitch processing splinter skills: A group and subgroup analysis. Autism, 12(2), 203–219.

Herringshaw, A. J., Ammons, C. J., DeRamus, T. P., & Kana, R. K. (2016). Hemispheric differences in language processing in autism spectrum disorders: A meta-analysis of neuroimaging studies. Autism Research, 9(10), 1046–1057.

Hillock-Dunn, A., & Wallace, M. T. (2012). Developmental changes in the multisensory temporal binding window persist into adolescence. Developmental Science, 15(5), 688–696.

Hosozawa, M., Tanaka, K., Shimizu, T., Nakano, T., & Kitazawa, S. (2012). How children with specific language impairment view social situations: An eye tracking study. Pediatrics, 129(6), e1453–e1460.

Hubl, D., Bölte, S., Feineis-Matthews, S., Lanfermann, H., Federspiel, A., Strik, W., Poustka, F., & Dierks, T. (2003). Functional imbalance of visual pathways indicates alternative face processing strategies in autism. Neurology, 61(9), 1232–1237.

Hull, J. V., Jacokes, Z. J., Torgerson, C. M., Irimia, A., & Van Horn, J. D. (2017). Resting-state functional connectivity in autism spectrum disorders: A review. Frontiers in Psychiatry, 7, 205.

Irwin, J. R., Tornatore, L. A., Brancazio, L., & Whalen, D. H. (2011). Can children with autism spectrum disorders “hear” a speaking face? Child Development, 82(5), 1397–1403.

Järvinen-Pasley, A., Pasley, J., & Heaton, P. (2008a). Is the linguistic content of speech less salient than its perceptual features in autism? Journal of Autism and Developmental Disorders, 38(2), 239–248.

Järvinen-Pasley, A., Wallace, G. L., Ramus, F., Happé, F., & Heaton, P. (2008b). Enhanced perceptual processing of speech in autism. Developmental Science, 11(1), 109–121.

Jeste, S. S., Kirkham, N., Senturk, D., Hasenstab, K., Sugar, C., Kupelian, C., Baker, E., Sanders, A. J., Shimizu, C., Norona, A., Paparella, T., Freeman, S. F. N., & Johnson, S. P. (2015). Electrophysiological evidence of heterogeneity in visual statistical learning in young children with ASD. Developmental Science, 18(1), 90–105.

Jochaut, D., Lehongre, K., Saitovitch, A., Devauchelle, A.-D., Olasagasti, I., Chabane, N., Zilbovicius, M., & Giraud, A.-L. (2015). Atypical coordination of cortical oscillations in response to speech in autism. Frontiers in Human Neuroscience, 9, 171.

Jones, W., & Klin, A. (2013). Attention to eyes is present but in decline in 2–6-month-old infants later diagnosed with autism. Nature, 504(7480), 427–431.

Jones, C. R. G., Happé, F., Baird, G., Simonoff, E., Marsden, A. J., Tregay, J., Phillips, R. J., Goswami, U., Thomson, J. M., & Charman, T. (2009). Auditory discrimination and auditory sensory behaviours in autism spectrum disorders. Neuropsychologia, 47(13), 2850–2858.

Kana, R. K., Libero, L. E., Hu, C. P., Deshpande, H. D., & Colburn, J. S. (2014). Functional brain networks and white matter underlying theory-of-mind in autism. Social Cognitive and Affective Neuroscience, 9(1), 98–105.

Keehn, B., Vogel-Farley, V., Tager-Flusberg, H., & Nelson, C. A. (2015). Atypical hemispheric specialization for faces in infants at risk for autism spectrum disorder. Autism Research, 8(2), 187–198.

Keil, A., Müller, M. M., Ray, W. J., Gruber, T., & Elbert, T. (1999). Human gamma band activity and perception of a gestalt. The Journal of Neuroscience, 19(16), 7152–7161.

Kleinhans, N. M., Müller, R.-A., Cohen, D. N., & Courchesne, E. (2008). Atypical functional lateralization of language in autism spectrum disorders. Brain Research, 1221, 115–125.

Klimesch, W., Sauseng, P., & Hanslmayr, S. (2007). EEG alpha oscillations: The inhibition-timing hypothesis. Brain Research Reviews, 53(1), 63–88.

Koh, H. C., Milne, E., & Dobkins, K. (2010). Spatial contrast sensitivity in adolescents with autism spectrum disorders. Journal of Autism and Developmental Disorders, 40(8), 978–987.

Kröger, A., Bletsch, A., Krick, C., Siniatchkin, M., Jarczok, T. A., Freitag, C. M., & Bender, S. (2014). Visual event-related potentials to biological motion stimuli in autism spectrum disorders. Social Cognitive and Affective Neuroscience, 9(8), 1214–1222.

Larrain-Valenzuela, J., Zamorano, F., Soto-Icaza, P., Carrasco, X., Herrera, C., Daiber, F., Aboitiz, F., & Billeke, P. (2017). Theta and alpha oscillation impairments in autistic spectrum disorder reflect working memory deficit. Scientific Reports, 7(1), 14328.

Lawson, R. P., Mathys, C., & Rees, G. (2017). Adults with autism overestimate the volatility of the sensory environment. Nature Neuroscience, 20(9), 1293–1299.

Lee, A. K. C., Larson, E., Maddox, R. K., & Shinn-Cunningham, B. G. (2014). Using neuroimaging to understand the cortical mechanisms of auditory selective attention. Hearing Research, 307, 111–120.

Lee, Y., Park, B., James, O., Kim, S.-G., & Park, H. (2017). Autism spectrum disorder related functional connectivity changes in the language network in children, adolescents and adults. Frontiers in Human Neuroscience, 11, 418.

Lepistö, T., Kuitunen, A., Sussman, E., Saalasti, S., Jansson-Verkasalo, E., Nieminen-von Wendt, T., & Kujala, T. (2009). Auditory stream segregation in children with Asperger syndrome. Biological Psychology, 82(3), 301–307.

Levin, A. R., Varcin, K. J., O’Leary, H. M., Tager-Flusberg, H., & Nelson, C. A. (2017). EEG power at 3 months in infants at high familial risk for autism. Journal of Neurodevelopmental Disorders, 9(1), 34.

Lin, I.-F., Shirama, A., Kato, N., & Kashino, M. (2017). The singular nature of auditory and visual scene analysis in autism. Philosophical Transactions of the Royal Society B: Biological Sciences, 372(1714), 20160115.

Linke, A. C., Jao Keehn, R. J., Pueschel, E. B., Fishman, I., & Müller, R. A. (2018). Children with ASD show links between aberrant sound processing, social symptoms, and atypical auditory interhemispheric and thalamocortical functional connectivity. Developmental Cognitive Neuroscience, 29, 117–126.

Lodhia, V., Brock, J., Johnson, B. W., & Hautus, M. J. (2014). Reduced object related negativity response indicates impaired auditory scene analysis in adults with autistic spectrum disorder. PeerJ, 2, e261.

Lodhia, V., Hautus, M. J., Johnson, B. W., & Brock, J. (2018). Atypical brain responses to auditory spatial cues in adults with autism spectrum disorder. European Journal of Neuroscience, 47(6), 682–689.

Long, Z., Duan, X., Mantini, D., & Chen, H. (2016). Alteration of functional connectivity in autism spectrum disorder: Effect of age and anatomical distance. Scientific Reports, 6(1), 26527.

Lord, C., Risi, S., DiLavore, P. S., Shulman, C., Thurm, A., & Pickles, A. (2006). Autism from 2 to 9 years of age. Archives of General Psychiatry, 63(6), 694–701.

Luyster, R., Gotham, K., Guthrie, W., Coffing, M., Petrak, R., Pierce, K., Bishop, S., Esler, A., Hus, V., Oti, R., Richler, J., Risi, S., & Lord, C. (2009). The Autism Diagnostic Observation Schedule-toddler module: A new module of a standardized diagnostic measure for autism spectrum disorders. Journal of Autism and Developmental Disorders, 39(9), 1305–1320.

Magnotti, J. F., & Beauchamp, M. S. (2015). The noisy encoding of disparity model of the McGurk effect. Psychonomic Bulletin & Review, 22(3), 701–709.

Manjaly, Z. M., Bruning, N., Neufang, S., Stephan, K. E., Brieber, S., Marshall, J. C., Kamp-Becker, I., Remschmidt, H., Herpertz-Dahlmann, B., Konrad, K., & Fink, G. R. (2007). Neurophysiological correlates of relatively enhanced local visual search in autistic adolescents. Neuroimage, 35(1), 283–291.

Manning, C., Kilner, J., Neil, L., Karaminis, T., & Pellicano, E. (2017). Children on the autism spectrum update their behaviour in response to a volatile environment. Developmental Science, 20(5), e12435.

Markram, K., & Markram, H. (2010). The intense world theory – a unifying theory of the neurobiology of autism. Frontiers in Human Neuroscience, 4, 224.

Mayer, J. L. (2017). The relationship between autistic traits and atypical sensory functioning in neurotypical and ASD adults: A spectrum approach. Journal of Autism and Developmental Disorders, 47(2), 316–327.

McGurk, H., & MacDonald, J. (1976). Hearing lips and seeing voices. Nature, 264(5588), 746–748.

McIntosh, D., Miller, L., & Shyu, V. (1999). Development and validation of the short sensory profile. In W. Dunn (Ed.), Sensory Profile: User’s Manual (pp. 59–73). San Antonio: The Psychological Corporation.

McKay, L. S., Simmons, D. R., McAleer, P., Marjoram, D., Piggot, J., & Pollick, F. E. (2012). Do distinct atypical cortical networks process biological motion information in adults with autism spectrum disorders? Neuroimage, 59(2), 1524–1533.

Miller, M., Iosif, A.-M., Hill, M., Young, G. S., Schwichtenberg, A. J., & Ozonoff, S. (2017). Response to name in infants developing autism spectrum disorder: A prospective study. The Journal of Pediatrics, 183, 141–146.e1.

Milne, E. (2011). Increased intra-participant variability in children with autistic spectrum disorders: Evidence from single-trial analysis of evoked EEG. Frontiers in Psychology, 2, 51.

Modi, M. E., & Sahin, M. (2017). Translational use of event-related potentials to assess circuit integrity in ASD. Nature Reviews Neurology, 13(3), 160–170.

Murphy, P., Brady, N., Fitzgerald, M., & Troje, N. F. (2009). No evidence for impaired perception of biological motion in adults with autistic spectrum disorders. Neuropsychologia, 47(14), 3225–3235.

Murphy, J. W., Foxe, J. J., Peters, J. B., & Molholm, S. (2014). Susceptibility to distraction in autism spectrum disorder: Probing the integrity of oscillatory alpha-band suppression mechanisms. Autism Research, 7(4), 442–458.

Nackaerts, E., Wagemans, J., Helsen, W., Swinnen, S. P., Wenderoth, N., & Alaerts, K. (2012). Recognizing biological motion and emotions from point-light displays in autism spectrum disorders. PLoS One, 7(9), e44473.