Abstract

This paper considers the possibility of using some quantum tools in decision-making strategies. In particular, we consider a dynamical open quantum system helping two players, \(\mathcal {G}_{1}\) and \(\mathcal {G}_{2}\), to make their decisions in a specific context. We see that, within our approach, the final choices of the players do not depend in general on their initial mental states, but are driven essentially by the environment which interacts with them. The model proposed here also considers interactions of different nature between the two players, and it is simple enough to allow for an analytical solution of the equations of motion.

1 Introduction

In recent years the scientific literature has seen a growing interest in the possibility of using quantum ideas and quantum tools to describe some aspects of several macroscopic systems which, in the common understanding, are usually thought to be purely classical. This interest has touched very different fields of science, from finance to ecology, passing through psychology, decision making and so on. The literature is now very rich, and it grows almost every day. We just cite here some recent books, [1–6], which cover some of the areas mentioned above, among others.

In this paper we propose a dynamical approach to a very simple and well-known problem in decision making, in a slightly modified version. Our starting point is what was considered in [7, 8], which is a variation on the theme of the prisoners’ dilemma. This is just one of the several contributions in the literature related to decision-making processes and to brain dynamics, and just one of the contributions suggesting the relevance of something quantum in problems of this kind. For instance, Manousakis in [9] suggests that the condition of someone who must still make a choice could be thought of as a superposition of suitable states in a particular Hilbert space, whose coefficients are related to the probabilities of making a particular choice among the various possibilities. He also proposes a time evolution driven by some hyper-simplified hamiltonian. Other effective hamiltonians are used, in similar contexts, by other authors, [10, 11]. Of course these effective hamiltonians, as such, are usually quite ad hoc and can only be used to describe some particular aspect of the system under analysis.

Less dynamically oriented is the paper by Agrawal and Sharda [12], where the authors focus particularly on the probabilistic aspects of the process of decision making. Still other contributions are due, for instance, to Vitiello, Khrennikov et al. [13], and to Busemeyer et al. [14]. In particular, in this last paper the authors compare Markov models of human decision making with other models somehow connected to quantum mechanics. A common feature of almost all these papers has to do with the probabilistic interpretation of quantum mechanics, in which interference effects are introduced and described quite naturally, whereas a similar interpretation is not as natural when classical ideas are used. Other interesting references are given in [15–18].

Let us now go back to the problem we are interested in here. We adopt almost the same notation as in [7, 8]. We have two players, \(\mathcal {G}_{1}\) and \(\mathcal {G}_{2}\), and each of them can make two possible choices, “0” and “1”. For instance, \(0_{1}\) means that \(\mathcal {G}_{1}\) has chosen “0”, while \(1_{2}\) means that \(\mathcal {G}_{2}\) has chosen “1”. More compactly, this pair of choices is indicated as \(0_{1}1_{2}\). In the same way, \(1_{1}1_{2}\) means that both \(\mathcal {G}_{1}\) and \(\mathcal {G}_{2}\) made the same choice, “1”. And so on (see Footnote 1). Now, let us introduce, as in [7, 8], four real numbers a, b, c and d satisfying the inequalities c>a>d>b. The payoff of \(\mathcal {G}_{1}\) is a or c if \(\mathcal {G}_{2}\)’s choice is \(0_{2}\): \(0_{1}0_{2}\) corresponds to a payoff a, while \(1_{1}0_{2}\) corresponds to c. On the other hand, if \(\mathcal {G}_{2}\) chooses 1 (\(1_{2}\)), \(\mathcal {G}_{1}\)’s payoff is b or d: \(0_{1}1_{2}\) corresponds to b, while \(1_{1}1_{2}\) corresponds to d. The situation is summarized in the following table, [7, 8], where each entry lists the payoff of \(\mathcal {G}_{1}\) followed by the payoff of \(\mathcal {G}_{2}\):

$$ \begin{array}{c|cc} & 0_{2} & 1_{2} \\ \hline 0_{1} & (a,a) & (b,c) \\ 1_{1} & (c,b) & (d,d) \end{array} $$

This table represents the point of view of both \(\mathcal {G}_{1}\) and \(\mathcal {G}_{2}\). For instance, if the two players both choose 0 (\(0_{1}0_{2}\)), they both have a payoff a. Analogously, the choice \(1_{1}1_{2}\) corresponds to the same payoff d for \(\mathcal {G}_{1}\) and \(\mathcal {G}_{2}\). On the other hand, different choices of the players correspond to different payoffs: the choice \(0_{1}1_{2}\) produces a payoff b for \(\mathcal {G}_{1}\) and c for \(\mathcal {G}_{2}\), while \(1_{1}0_{2}\) produces a payoff c for \(\mathcal {G}_{1}\) and b for \(\mathcal {G}_{2}\). The table shows that if \(\mathcal {G}_{1}\) chooses 1, then he can get the maximum payoff, c (if \(\mathcal {G}_{2}\) chooses 0), or a small one, d (if \(\mathcal {G}_{2}\) chooses 1). Hence this could be the best choice, but it can also have bad consequences. On the other hand, if \(\mathcal {G}_{1}\) chooses 0, then he can get the minimum payoff, b (if \(\mathcal {G}_{2}\) chooses 1), or a better one, a (if \(\mathcal {G}_{2}\) chooses 0). Hence \(\mathcal {G}_{1}\) should make choice 1, hoping that \(\mathcal {G}_{2}\) makes choice 0, so that he gets the maximum payoff c. Of course, with this choice there is also the possibility that he gets d, which is less than a (corresponding to \(0_{1}0_{2}\)). But, choosing 1, \(\mathcal {G}_{1}\) will certainly not get b, which is the lowest possible payoff. Hence, if what \(\mathcal {G}_{1}\) really hopes is not to get the lowest payoff (see Footnote 2), he must choose 1. A similar analysis of the table from the point of view of \(\mathcal {G}_{2}\) suggests that, if \(\mathcal {G}_{2}\) also wants to avoid getting the worst payoff, he has to choose 1. Then, if \(\mathcal {G}_{1}\) and \(\mathcal {G}_{2}\) are rational players, meaning that they are both interested in not getting b, they should both choose 1 (\(1_{1}1_{2}\)).
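
The loss-aversion argument above can be sketched in a few lines of Python. The numerical payoffs below are purely illustrative assumptions (the text only fixes the ordering c>a>d>b), and the helper names `worst_case` and `loss_averse_choice` are hypothetical.

```python
# Illustrative payoffs only: the text fixes just the ordering c > a > d > b.
a, b, c, d = 3, 0, 5, 1
assert c > a > d > b

# payoff[(choice of G1, choice of G2)] = (payoff of G1, payoff of G2)
payoff = {
    (0, 0): (a, a),
    (0, 1): (b, c),
    (1, 0): (c, b),
    (1, 1): (d, d),
}

def worst_case(player, choice):
    """Smallest payoff that `player` (0 for G1, 1 for G2) can get with `choice`."""
    outcomes = []
    for other in (0, 1):
        key = (choice, other) if player == 0 else (other, choice)
        outcomes.append(payoff[key][player])
    return min(outcomes)

def loss_averse_choice(player):
    """Choice whose worst-case payoff is largest, i.e. the one that avoids b."""
    return max((0, 1), key=lambda ch: worst_case(player, ch))

choices = (loss_averse_choice(0), loss_averse_choice(1))
print(choices, payoff[choices])
```

With any payoffs respecting the ordering, both players select 1 and each receives d, which is the outcome \(1_{1}1_{2}\) described in the text.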

In [7, 8] this problem has been considered using quantum techniques: a mental state vector belonging to some suitable Hilbert space is associated with each possible choice of the players, and it is used to describe the situation. In particular, since we have here only four possible choices, the Hilbert space is four-dimensional. We will give more details, adapted to our aims, in Section 2. The dynamics of the vector is deduced from a master equation, and the final decision is related to the equilibrium solution of this equation.

In this paper we consider a similar system from a slightly different point of view, i.e. from the point of view of quantum open systems. In our opinion, this choice is more realistic, since we consider the possibility that the two players interact with the external world in order to make up their minds and reach their decisions. Of course, this is different from the standard version of the two-player game, where there is no interaction at all, and this is the reason why we talk of a “similar system”. In particular, in our case, the Hilbert space of the model is richer than the one considered in the existing literature. In fact, most of the papers considering a quantum approach to decision making deal with finite-dimensional Hilbert spaces. This is not the case for us: in our setting, while the players are attached to a four-dimensional Hilbert space, the reservoir is not. But, rather than being a problem, in our opinion this makes the structure more realistic. In fact, the presence of the reservoir mimics well the very many inputs that each player normally takes into account while making his choice. This is exactly our interpretation of the reservoir: it represents the set of rumors, ideas, suggestions and so on coming from the external world and reaching the players. For this reason, the dynamics of the players is provided by a hamiltonian, written following the rules proposed in [4], which describes not only the two players but also the reservoir and, above all, the possible interactions. As we have already said, similar ideas have already been used in the literature on decision making, see [9–11] for instance. However, in these cases, the hamiltonian is often a very simple matrix which, of course, can only be used to describe a particular aspect of the model. On the other hand, our hamiltonian contains rather general information on the system, and the different ingredients of H can be easily identified.

Finally, even if, to our knowledge, this is not done in any standard (or quantum) treatment of the two-player game, we will also consider here the possibility of having some interaction between \(\mathcal {G}_{1}\) and \(\mathcal {G}_{2}\), and we will discuss the consequences of this interaction. In particular, we will consider the case in which the two players react in the same way and the case in which they have opposite reactions. This will be clarified in the next section. Of course, the presence of this interaction between the players makes our system even more different from the one considered in [7, 8].

The paper is organized as follows: in the following section we propose the model and we derive its dynamics. Then we consider the case in which \(\mathcal {G}_{1}\) and \(\mathcal {G}_{2}\) do not interact and the cases in which they do, for different kinds of interaction. The analysis of the results and our conclusions are given in Section 3. Finally, to keep the paper self-contained, we discuss some important facts in quantum mechanics in the Appendix.

2 The Model and its Dynamics

In this section we will discuss the details of our model, constructing first the vectors of the players, the hamiltonian of the system, and deducing, out of it, the differential equations of motion and their solution, with particular interest to its asymptotic (in time) behavior.

In our game we have two players, \(\mathcal {G}_{1}\) and \(\mathcal {G}_{2}\). Each player can make two possible choices, 0 and 1. Hence we have four different possibilities, which, following [7, 8], we associate here to four different and mutually orthogonal vectors in a four-dimensional Hilbert space \(\mathcal {H}_{\mathcal {G}}\). These vectors are \(\varphi _{0,0}\), \(\varphi _{1,0}\), \(\varphi _{0,1}\) and \(\varphi _{1,1}\). The first vector, \(\varphi _{0,0}\), describes the fact that, at t = 0, the two players have both chosen 0 (\(0_{1}0_{2}\)). Of course, this is not a fixed choice, and it can change during the time evolution of the system. Analogously, \(\varphi _{0,1}\) describes the fact that, at t = 0, the first player has chosen 0, while the second has chosen 1 (\(0_{1}1_{2}\)). And so on. \(\mathcal {F}_{\varphi }=\{\varphi _{k,l},\,k,l=0,1\}\) is an orthonormal basis for \(\mathcal {H}_{\mathcal {G}}\). The general mental state vector of the system \(\mathcal {S}_{\mathcal {G}}\) (i.e. of the two players), for t = 0, is a linear combination

$$ {\Psi}=\sum\limits_{k,l=0}^{1}\alpha _{k,l}\,\varphi _{k,l}, $$(2.1)

where we assume that \({\sum }_{k,l=0}^{1}|\alpha _{k,l}|^{2}=1\) in order to normalize the total probability. Indeed \(|\alpha _{0,0}|^{2}\) is the probability that \(\mathcal {S}_{\mathcal {G}}\) is, at t = 0, in the state \(\varphi _{0,0}\), i.e. that both \(\mathcal {G}_{1}\) and \(\mathcal {G}_{2}\) have chosen 0. Notice, incidentally, that \({\Psi}={\Phi }_{1}\otimes {\Phi }_{2}\), where \({\Phi }_{k}=x_{0}^{(k)}\varphi _{0}^{(k)}+x_{1}^{(k)}\varphi _{1}^{(k)}\), k = 1,2, and where \(\alpha _{k,l}\) and \(x_{j}^{(k)}\) are related in an obvious way: \(\alpha _{0,0}=x_{0}^{(1)}x_{0}^{(2)}\), \(\alpha _{1,0}=x_{1}^{(1)}x_{0}^{(2)}\), \(\alpha _{0,1}=x_{0}^{(1)}x_{1}^{(2)}\) and \(\alpha _{1,1}=x_{1}^{(1)}x_{1}^{(2)}\). We see that the vectors describing \(\mathcal {G}_{1}\) and \(\mathcal {G}_{2}\) are independent, and Ψ is the tensor product of the two.
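
As a small numerical illustration of the product structure just described, the following sketch builds the coefficients \(\alpha _{k,l}\) from two single-player vectors and checks the normalization. The specific amplitudes and the name `product_state` are assumptions chosen only for the example.

```python
import math

def product_state(x1, x2):
    """Coefficients alpha[(k, l)] = x1[k] * x2[l] of Psi = Phi_1 (x) Phi_2."""
    return {(k, l): x1[k] * x2[l] for k in (0, 1) for l in (0, 1)}

# Hypothetical normalized single-player amplitudes (x_0, x_1).
x1 = (math.sqrt(0.3), math.sqrt(0.7))          # player G1
x2 = (1 / math.sqrt(2), 1j / math.sqrt(2))     # player G2, with a relative phase

alpha = product_state(x1, x2)
probs = {kl: abs(amp) ** 2 for kl, amp in alpha.items()}

total = sum(probs.values())                      # should be 1 up to rounding
p_g1_chooses_1 = probs[(1, 0)] + probs[(1, 1)]   # marginal probability for G1
print(total, p_g1_chooses_1)
```

Because Ψ is a tensor product, the marginal probability that \(\mathcal {G}_{1}\) chooses 1 depends only on \(x_{1}^{(1)}\), here \(|x_{1}^{(1)}|^{2}=0.7\).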

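The first essential difference with respect to what is done in [7, 8] is the way in which these vectors are constructed: we consider two fermionic operators, see the Appendix, i.e. two operators \(b_{1}\) and \(b_{2}\) satisfying the following canonical anti-commutation rules (CAR):

$$ \{b_{k},b_{l}^{\dagger }\}=\delta _{k,l}\,\mathbb {1},\qquad \{b_{k},b_{l}\}=0, $$(2.2)

where k,l = 1,2, \(\mathbb {1}\) is the identity operator, and {x,y}=xy+yx. Then we take \(\varphi _{0,0}\) as the vacuum of \(b_{1}\) and \(b_{2}\), \(b_{1}\varphi _{0,0}=b_{2}\varphi _{0,0}=0\), and construct the other vectors out of it:

$$ \varphi _{1,0}=b_{1}^{\dagger }\varphi _{0,0},\qquad \varphi _{0,1}=b_{2}^{\dagger }\varphi _{0,0},\qquad \varphi _{1,1}=b_{1}^{\dagger }b_{2}^{\dagger }\varphi _{0,0}. $$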

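The explicit expressions of these vectors and operators can be found in many textbooks on quantum mechanics, see [19] for instance: \(\varphi _{k,l}=\varphi _{k}^{(1)}\otimes \varphi _{l}^{(2)}\), where \(\varphi _{0}=\left (\begin {array}{c}1 \\0 \end {array}\right )\) and \(\varphi _{1}=\left (\begin {array}{c}0 \\1 \end {array}\right ) \). Then,

$$ \varphi _{0,0}=\left (\begin {array}{c}1 \\0 \\0 \\0 \end {array}\right ),\qquad \varphi _{1,0}=\left (\begin {array}{c}0 \\0 \\1 \\0 \end {array}\right ), $$

and so on. The matrix forms of the operators \(b_{j}\) and \(b_{j}^{\dagger }\) are also quite simple. For instance,

$$ b_{1}=\left (\begin {array}{cccc}0 & 0 & 1 & 0 \\0 & 0 & 0 & 1 \\0 & 0 & 0 & 0 \\0 & 0 & 0 & 0 \end {array}\right ), $$

and so on.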

Now let \(\hat {n}_{j}=b_{j}^{\dagger } b_{j}\) be the number operator of the j-th player: the CAR above imply that \(\hat {n}_{1}\varphi _{k,l}=k\varphi _{k,l}\) and \(\hat {n}_{2}\varphi _{k,l}=l\varphi _{k,l}\), k,l = 0,1. Then, as already stated, the eigenvalues of these operators correspond to the choices made by the two players at t = 0: for instance, \(\varphi _{1,0}\) corresponds to the choice \(1_{1}0_{2}\), just because one is the eigenvalue of \(\hat {n}_{1}\) and zero is the eigenvalue of \(\hat {n}_{2}\).
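
The properties above can be checked numerically. The sketch below assumes one possible 4×4 matrix realization of \(b_{1}\) and \(b_{2}\) (a Jordan–Wigner-type construction, with the basis ordered as \(\varphi _{0,0},\varphi _{0,1},\varphi _{1,0},\varphi _{1,1}\)); this realization and the helper names are assumptions made for illustration, chosen so that the CAR and the eigenvalue equations of the number operators hold.

```python
# Pure-Python 4x4 matrices stored as lists of rows.
# Basis order: phi_00, phi_01, phi_10, phi_11 (index = 2*k + l).

def kron(A, B):
    """Kronecker product of two square matrices."""
    return [[x * y for x in ra for y in rb] for ra in A for rb in B]

def matmul(A, B):
    n = len(A)
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def dagger(A):
    """Adjoint; all entries are real here, so this is just the transpose."""
    return [list(col) for col in zip(*A)]

def anticomm(A, B):
    AB, BA = matmul(A, B), matmul(B, A)
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(AB, BA)]

I2 = [[1, 0], [0, 1]]
SZ = [[1, 0], [0, -1]]     # sign factor that makes b1 and b2 anti-commute
LOWER = [[0, 1], [0, 0]]   # maps phi_1 = (0,1)^T to phi_0 = (1,0)^T

b1 = kron(LOWER, I2)       # lowers the choice of G1
b2 = kron(SZ, LOWER)       # lowers the choice of G2, with the ordering sign

ID4 = kron(I2, I2)
ZERO4 = [[0] * 4 for _ in range(4)]

n1 = matmul(dagger(b1), b1)
n2 = matmul(dagger(b2), b2)

# Diagonals list the eigenvalues on phi_00, phi_01, phi_10, phi_11.
print([n1[i][i] for i in range(4)])
print([n2[i][i] for i in range(4)])
```

The two printed diagonals give the value of k and of l on each basis vector, in agreement with \(\hat {n}_{1}\varphi _{k,l}=k\varphi _{k,l}\) and \(\hat {n}_{2}\varphi _{k,l}=l\varphi _{k,l}\).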

Remark 1

One might wonder why, in the description of our model, we use fermionic rather than bosonic operators, as we have done in several other applications in recent years, [4]. This is easily understood, since the eigenvalues of the fermionic number operators \(\hat {n}_{j}\) are exactly 0 and 1, which are the only possible choices of the players. On the other hand, see [20], the eigenvalues of the bosonic number operators are all the natural numbers (including 0): too many for us!

Our main effort now consists in giving a dynamics to the number operators \(\hat {n}_{j}\), following the scheme described in [4]. Therefore, what we first need is to introduce a hamiltonian H for the system. Then, we will use this hamiltonian to deduce the dynamics of the number operators as \(\hat {n}_{j}(t):=e^{iHt}\hat {n}_{j} e^{-iHt}\), and finally we will compute the mean values of these operators on a suitable state which describes, see below, the status of the system at t = 0. The rules needed to write down H are described in [4]. The main idea here is that the two players are just part of the full system: in order to make their decisions, they need to be somehow informed. In fact, it is really the information which creates the final decision. Hence, \(\mathcal {S}_{\mathcal {G}}\) must be open, meaning that there must be a reservoir \(\mathcal {R}=\mathcal {R}_{1}\otimes \mathcal {R}_{2}\), interacting with \(\mathcal {G}_{1}\) and \(\mathcal {G}_{2}\), which is responsible for this sort of information. The reservoir, compared with \(\mathcal {S}_{\mathcal {G}}\), is expected to be a very large system, since the information is created by several different sources. A possible hamiltonian is therefore the following:

$$ \left\{\begin{array}{l} h=H_{0}+H_{I},\\ H_{0}={\sum }_{j=1}^{2}\omega _{j}b_{j}^{\dagger } b_{j}+{\sum }_{j=1}^{2}{\int }_{\mathbb {R}}{\Omega }_{j}(k)B_{j}^{\dagger }(k)B_{j}(k)\,dk,\\ H_{I}={\sum }_{j=1}^{2}\lambda _{j}{\int }_{\mathbb {R}}\left (b_{j} B_{j}^{\dagger }(k)+B_{j}(k)b_{j}^{\dagger }\right )dk. \end{array}\right. $$(2.3)

Here \(\omega _{j}\) and \(\lambda _{j}\) are real quantities, and \({\Omega }_{j}(k)\) are real functions. In analogy with the \(b_{j}\)’s, we adopt fermionic operators \(B_{j}(k)\) and \(B_{j}^{\dagger }(k)\) to describe the reservoir. They depend on j = 1,2 (two different sub-reservoirs for the two players), and on the real variable k (see Footnote 3), and they satisfy the rules

$$ \{B_{j}(k),B_{l}^{\dagger }(q)\}=\delta _{j,l}\,\delta (k-q)\,\mathbb {1},\qquad \{B_{j}(k),B_{l}(q)\}=0, $$(2.4)

which have to be added to those in (2.2). Moreover each \(b_{j}^{\sharp }\) anti-commutes with each \(B_{j}^{\sharp }(k)\): \(\{b_{j}^{\sharp }, B_{l}^{\sharp }(k)\}=0\) for all j, l and k. Here \(X^{\sharp }\) stands for X or \(X^{\dagger }\).

2.1 An Interlude: Why Fermionic Operators, and why this Hamiltonian?

It may be useful to recall now that, as discussed in the Appendix, the fermionic operators considered above have a very useful characteristic for us: they can be used to construct new self-adjoint operators, the number operators \(\hat {n}_{j}=b_{j}^{\dagger } b_{j}\), which are diagonal in the \(\varphi _{k,l}\)’s, and whose eigenvalues are exactly zero and one. It is important to stress that these are the only two possible choices of our players. As we have already said, this is the core of our choice: the two possible choices of the players correspond exactly to the two eigenvalues of very simple matrices. Then, as we have already discussed, a rather natural possibility to describe the process of decision making is simply to give a dynamics to \(\hat {n}_{j}\). Our claim is that this dynamics is given (in part) by the hamiltonian (2.3). The full hamiltonian is given below, in (2.5).

Let us now concentrate on the meaning of h, beginning with the role of the parameters and of the functions. Of course, \(\lambda _{j}\) is an interaction parameter, measuring the strength of the interaction between \(\mathcal {G}_{j}\) and \(\mathcal {R}_{j}\). If, in particular, \(\lambda _{1}=\lambda _{2}=0\), then \(h=H_{0}\) and, since \([h,\hat {n}_{j}]=0\), this would imply that the number operators describing the choices of the two players stay constant in time. In other words, in this case the original choices of \(\mathcal {G}_{1}\) and \(\mathcal {G}_{2}\) are not affected by the time evolution (see Footnote 4). Both \(\omega _{j}\) and \({\Omega }_{j}(k)\) are related to a sort of inertia of the system, [4], i.e. to a tendency of a particular part of the system not to change its status too fast. For instance, we will see in Section 2.3 that \({\Omega }_{j}(k)\) is related to the time needed by \(\mathcal {G}_{j}\) to make his choice. In [4] it is also shown, in several concrete applications, that the values of \(\omega _{j}\) and \({\Omega }_{j}(k)\) are related to the magnitude of the oscillations of some relevant functions of the model. For this reason, in analogy with classical mechanics, we adopt the word inertia in connection with these quantities.

Let us now explain why we have chosen these particular forms of \(H_{0}\) and of \(H_{I}\).

\(H_{0}\) is a sum of diagonal operators, describing the free evolution of the operators of \(\mathcal {S}=\mathcal {S}_{\mathcal {G}}\otimes \mathcal {R}\). In fact, for instance, \({\sum }_{j=1}^{2}\omega _{j}b_{j}^{\dagger } b_{j}={\sum }_{j=1}^{2}\omega _{j}\hat n_{j}\), which is already diagonal in terms of the \(\varphi _{k,l}\). Slightly more complicated, but not particularly different, is the part of \(H_{0}\) which refers to the reservoirs: it is also diagonal. Now, suppose that \(\mathcal {G}_{1}\) and \(\mathcal {G}_{2}\) are, at t = 0, in a definite state, say \(\varphi _{0,1}\) (i.e. \(0_{1}1_{2}\)), and that the dynamics of the system is only given by \(H_{0}\). Then, at t>0, the two players will still be described by \(\varphi _{0,1}\): no change in their decisions. This is coherent with the fact that, as we have discussed before, \([H_{0},\hat {n}_{j}]=0\). Stated in different words, we could say that \(H_{0}\) is the simplest quadratic self-adjoint operator in our fermionic operators which commutes with \(\hat {n}_{1}\) and \(\hat {n}_{2}\). This ensures that, in the absence of interactions, \(\mathcal {G}_{1}\) and \(\mathcal {G}_{2}\) do not change their minds.
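
The statement that the free evolution leaves the decisions unchanged can be illustrated with a short sketch. It is written in the equivalent Schrödinger picture and restricted to the players’ part \(\omega _{1}\hat {n}_{1}+\omega _{2}\hat {n}_{2}\) of \(H_{0}\), which is an assumption justified only by the absence of any coupling in \(H_{0}\); the function names and the sample values of \(\omega _{j}\) and t are hypothetical.

```python
import cmath

def evolve_free(alpha, omega1, omega2, t):
    """Multiply each alpha[(k, l)] by exp(-i (omega1*k + omega2*l) t)."""
    return {(k, l): amp * cmath.exp(-1j * (omega1 * k + omega2 * l) * t)
            for (k, l), amp in alpha.items()}

def mean_n(alpha, player):
    """Mean value of n_1 (player=0) or n_2 (player=1) on the state alpha."""
    return sum(kl[player] * abs(amp) ** 2 for kl, amp in alpha.items())

# Start in the definite state phi_{0,1}: G1 has chosen 0, G2 has chosen 1.
alpha0 = {(0, 0): 0, (0, 1): 1, (1, 0): 0, (1, 1): 0}
alpha_t = evolve_free(alpha0, omega1=1.0, omega2=2.0, t=3.7)

print(mean_n(alpha0, 0), mean_n(alpha0, 1))
print(mean_n(alpha_t, 0), mean_n(alpha_t, 1))
```

Only the phases of the coefficients change, so the mean values of \(\hat {n}_{1}\) and \(\hat {n}_{2}\) are the same before and after the evolution, for this and for any other initial vector.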

More interesting is the role of \(H_{I}\). In order to explain its meaning, we have to recall that, see the Appendix, \(b_{j}\) and \(B_{j}(k)\) are lowering operators, while their adjoints \(b_{j}^{\dagger }\) and \(B_{j}^{\dagger }(k)\) are raising operators. For instance, if we consider \(b_{1}\varphi _{1,0}\), we obtain \(\varphi _{0,0}\). Then, the action of \(b_{1}\) modifies the original choice of the players, \(1_{1}0_{2}\), to the new choice, \(0_{1}0_{2}\). Similarly, since \(b_{1}^{\dagger }\varphi _{0,0}=\varphi _{1,0}\), the action of \(b_{1}^{\dagger }\) brings \(0_{1}0_{2}\) to \(1_{1}0_{2}\). The operators \(B_{j}(k)\) and \(B_{j}^{\dagger }(k)\) behave similarly for the reservoir.

Then, it is clear that \(H_{I}\) describes the interaction between the two components of \(\mathcal {R}\), \(\mathcal {R}_{1}\) and \(\mathcal {R}_{2}\), and the players: \(b_{j} B_{j}^{\dagger }(k)\) describes the fact that, when the amount of information reaching \(\mathcal {G}_{j}\) increases (because of \(B_{j}^{\dagger }(k)\)), \(\mathcal {G}_{j}\) tends to choose 0 (because of \(b_{j}\)). On the other hand, \(B_{j}(k)b_{j}^{\dagger }\) describes the fact that \(\mathcal {G}_{j}\) tends to choose 1 when the amount of information reaching him decreases. Now, recalling that, in our model, what the players really want to avoid is getting the smallest payoff b, and recalling that this is achieved by choosing 1, it is natural to interpret the information produced by the reservoir as information of bad quality: the more it reaches \(\mathcal {G}_{j}\), the more he moves away from his rational choice.

2.2 Enriching the Model

To make the situation richer and more interesting, we admit here the possibility that the two players also interact with each other, and we consider two different possible interactions, by adding a cooperative and an exchange effect. The full hamiltonian H is therefore

$$ H=h+\mu _{ex}\left (b_{1}^{\dagger } b_{2}+b_{2}^{\dagger } b_{1}\right )+\mu _{coop}\left (b_{1}^{\dagger } b_{2}^{\dagger }+b_{2} b_{1}\right ), $$(2.5)

where \(\mu _{ex}\) and \(\mu _{coop}\) are non-negative. In particular, they could both be equal to zero, and in this case H = h: \(\mathcal {G}_{1}\) and \(\mathcal {G}_{2}\) do not interact with each other. On the other hand, if \(\mu _{ex}\neq 0\) and \(\mu _{coop}=0\), then \(\mathcal {G}_{1}\) and \(\mathcal {G}_{2}\) are pushed to make different choices because of the terms \(b_{1}^{\dagger } b_{2}\) and \(b_{2}^{\dagger } b_{1}\), while they act cooperatively if \(\mu _{ex}=0\) and \(\mu _{coop}\neq 0\) (because of \(b_{1}^{\dagger } b_{2}^{\dagger }\) and \(b_{2} b_{1}\)). Finally, we also allow the possibility of having both these contributions, when \(\mu _{ex}\) and \(\mu _{coop}\) are simultaneously non-zero.
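
To see concretely which basis vectors the two interaction terms connect, one can let them act on the \(\varphi _{k,l}\). The sketch below represents a state as a dictionary of amplitudes; the sign rule used for \(b_{2}\) is one possible ordering convention compatible with the CAR (an assumption), and all function names are hypothetical.

```python
def apply_op(j, dagger, state):
    """Apply b_j (dagger=False) or b_j^dagger (dagger=True) to a state.

    A state is a dict {(k, l): amplitude}; k, l are the choices of G1 and G2.
    """
    out = {}
    for (k, l), amp in state.items():
        occ = [k, l]
        target = 0 if dagger else 1          # value required before acting
        if occ[j - 1] != target:
            continue                          # the operator annihilates this vector
        sign = -1 if (j == 2 and k == 1) else 1   # assumed ordering sign
        occ[j - 1] = 1 - target
        key = tuple(occ)
        out[key] = out.get(key, 0) + sign * amp
    return out

def apply_word(word, state):
    """Apply a product of operators, rightmost factor first."""
    for j, dagger in reversed(word):
        state = apply_op(j, dagger, state)
    return state

def add_states(s1, s2):
    out = dict(s1)
    for key, amp in s2.items():
        out[key] = out.get(key, 0) + amp
    return {key: amp for key, amp in out.items() if amp != 0}

def exchange(state):
    """(b1^dagger b2 + b2^dagger b1) applied to state."""
    return add_states(apply_word([(1, True), (2, False)], state),
                      apply_word([(2, True), (1, False)], state))

def cooperative(state):
    """(b1^dagger b2^dagger + b2 b1) applied to state."""
    return add_states(apply_word([(1, True), (2, True)], state),
                      apply_word([(2, False), (1, False)], state))

for kl in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(kl, exchange({kl: 1}), cooperative({kl: 1}))
```

In this realization the exchange term only acts on the vectors in which the two choices differ, swapping \(\varphi _{1,0}\) and \(\varphi _{0,1}\), while the cooperative term only connects \(\varphi _{0,0}\) and \(\varphi _{1,1}\), where the two players make the same choice.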

Before deducing the time evolution of the relevant observables of the system, it is interesting to discuss the presence, or the absence, of some integrals of motion for the model. In our context, these are (self-adjoint) operators which commute with the hamiltonian. In many concrete situations the existence of this kind of operator gives a hint on what the hamiltonian should look like, [4], and can sometimes be used to check how realistic our model is. In fact, this strategy was previously used to fix the form of \(H_{0}\). Let us introduce

$$ N_{j}:=b_{j}^{\dagger } b_{j}+{\int }_{\mathbb {R}}B_{j}^{\dagger }(k)B_{j}(k)\,dk,\qquad N:=N_{1}+N_{2}, $$(2.6)

with obvious notation. First of all, it is easy to check that \([N_{j},h]=0\), j = 1,2, so that [N,h]=0. Moreover, even if \(\left [N_{j},\mu _{ex}\left (b_{1}^{\dagger } b_{2}+b_{2}^{\dagger } b_{1}\right )\right ]\neq 0\), we find that

$$ \left [N,\mu _{ex}\left (b_{1}^{\dagger } b_{2}+b_{2}^{\dagger } b_{1}\right )\right ]=0, $$

so that N commutes also with \(h+\mu _{ex}\left (b_{1}^{\dagger } b_{2}+b_{2}^{\dagger } b_{1}\right )\). On the other hand, neither \(N_{j}\) nor N commutes with \(\mu _{coop}\left (b_{1}^{\dagger } b_{2}^{\dagger }+b_{2} b_{1}\right )\) so that, when \(\mu _{coop}\neq 0\), N ceases to be an integral of motion. This suggests that the cooperation destroys the integral of motion in (2.6). This is because the cooperative term in H forces \(\mathcal {G}_{1}\) and \(\mathcal {G}_{2}\) to behave in a similar way, forcing, as a consequence, the mean value of N to change with time. On the other hand, if \(\mu _{coop}=0\), the creation and the annihilation operators in H always compensate their actions, and for this reason N stays constant in time, even if its different contributions in (2.6) have a non-trivial time evolution.
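
The commutation properties just stated can be verified with a short computation. This is a sketch which uses only the CAR (2.2) and restricts attention to the players’ contribution \(\hat {n}_{1}+\hat {n}_{2}\) to N; the reservoir contribution is bilinear in the \(B_{j}^{\sharp }(k)\), each of which anti-commutes with each \(b_{l}^{\sharp }\), so it commutes with both interaction terms and plays no role here. From \(b_{j}^{2}=0\) and \(\{b_{j},b_{j}^{\dagger }\}=\mathbb {1}\) one gets

$$ [\hat {n}_{j},b_{j}^{\dagger }]=b_{j}^{\dagger },\qquad [\hat {n}_{j},b_{j}]=-b_{j},\qquad [\hat {n}_{j},b_{l}^{\sharp }]=0\quad (l\neq j), $$

and therefore

$$ \left\{\begin{array}{l} [\hat {n}_{1}+\hat {n}_{2},\,b_{1}^{\dagger } b_{2}]=b_{1}^{\dagger } b_{2}-b_{1}^{\dagger } b_{2}=0,\\ {[}\hat {n}_{1}+\hat {n}_{2},\,b_{1}^{\dagger } b_{2}^{\dagger }+b_{2} b_{1}]=2\left (b_{1}^{\dagger } b_{2}^{\dagger }-b_{2} b_{1}\right )\neq 0. \end{array}\right. $$

The first line, together with its adjoint, gives the vanishing commutator with the exchange term, while \([\hat {n}_{1},b_{1}^{\dagger } b_{2}]=b_{1}^{\dagger } b_{2}\neq 0\) shows why the single \(N_{j}\) is not conserved. The second line shows that the cooperative term changes the players’ total number by two units.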

We can now go back to the analysis of the dynamics of the system. The Heisenberg equations of motion \(\dot {X}(t)=i[H,X(t)]\), see Appendix, can be deduced by using the CAR (2.2) and (2.4) above:

j = 1,2. The third equation can be rewritten as

and, taking \({\Omega }_{j}(k)={\Omega }_{j}k\), \({\Omega }_{j}>0\), standard computations produce

We refer to [4] for details of this computation and for a discussion on the physical genesis of this approach. If we now replace (2.8) in the equation for \(\dot {b}_{j}(t)\) in (2.7), we can write

where we have introduced \(\nu _{j}=i\omega _{j}+\pi \frac {{\lambda _{j}^{2}}}{{\Omega }_{j}}\), \(\beta _{j}(t)={\int }_{\mathbb {R}}B_{j}(k)e^{-i{\Omega }_{j} k t}~dk\), j = 1,2, and

The solution of (2.9) is easily found in a matrix form:

which is now the starting point for our analysis below.

2.3 \(\mathcal {G}_{1}\) and \(\mathcal {G}_{2}\) do not Interact

This is almost the classical two-player game, since the players do not interact with each other, but both still communicate with their environments. As we have discussed before, in this case \(\mu _{ex}=\mu _{coop}=0\). Then U is a diagonal matrix, and \(e^{iUt}\) is diagonal as well. Then, from (2.10) we easily deduce that

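j = 1,2. From this equation we can obtain \(b_{j}^{\dagger }(t)\) and, consequently, the number operator \(\hat {n}_{j}(t)=b_{j}^{\dagger }(t)b_{j}(t)\). However, what is relevant for us is not really \(\hat {n}_{j}(t)\) itself, but its mean value on some suitable state on \(\mathcal {S}\). These states are assumed to be tensor products of vector states for \(\mathcal {S}_{\mathcal {G}}\) and states on the reservoir which obey a standard equation, see below. In more detail, for each operator of the form \(X_{\mathcal {S}}\otimes Y_{\mathcal {R}}\), \(X_{\mathcal {S}}\) being an operator of \(\mathcal {S}_{\mathcal {G}}\) and \(Y_{\mathcal {R}}\) an operator of the reservoir, we consider

$$ \left \langle X_{\mathcal {S}}\otimes Y_{\mathcal {R}}\right \rangle :=\left \langle {\Psi},X_{\mathcal {S}}{\Psi}\right \rangle \,\omega _{\mathcal {R}}(Y_{\mathcal {R}}). $$

Here Ψ is the vector introduced in (2.1), while \(\omega _{\mathcal {R}}(.)\) is a state satisfying the following standard properties, [4]:

$$ \omega _{\mathcal {R}}(\mathbb {1}_{\mathcal {R}})=1,\qquad \omega _{\mathcal {R}}(B_{j}(k))=\omega _{\mathcal {R}}(B_{j}^{\dagger }(k))=0,\qquad \omega _{\mathcal {R}}(B_{j}^{\dagger }(k)B_{l}(q))=N_{j}\,\delta _{j,l}\,\delta (k-q), $$(2.11)

for some constant \(N_{j}\). Also, \(\omega _{\mathcal {R}}(B_{j}(k)B_{l}(q))=0\), for all j and l. These formulas play, for the reservoir, the same role as the analogous expressions for \(\mathcal {S}_{\mathcal {G}}\). Then

$$ n_{j}(t):=\left \langle \hat {n}_{j}(t)\right \rangle =e^{-\frac {2\pi {\lambda _{j}^{2}}}{{\Omega }_{j}}t}\,\|b_{j}{\Psi}\|^{2}+N_{j}\left (1-e^{-\frac {2\pi {\lambda _{j}^{2}}}{{\Omega }_{j}}t}\right ). $$(2.12)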

What is interesting here is that, if \(\lambda _{j}\neq 0\),

$$ n_{j}(\infty ):=\lim _{t\rightarrow \infty }n_{j}(t)=N_{j} $$(2.13)

does not depend on the original state of mind of the two players, but only on what the reservoir suggests. In fact, independently of the vector Ψ describing probabilistically, at t = 0, the choices of both \(\mathcal {G}_{1}\) and \(\mathcal {G}_{2}\), if \(\mathcal {R}_{1}\) (the part of the reservoir interacting with \(\mathcal {G}_{1}\)) has \(N_{1}=0\) in (2.11), then after a sufficiently long time 0 will be exactly \(\mathcal {G}_{1}\)’s choice. On the other hand, if \(N_{1}=1\), then \(\mathcal {G}_{1}\) will eventually choose 1. A similar conclusion can be deduced for \(\mathcal {G}_{2}\). Therefore, when \(\mathcal {G}_{1}\) and \(\mathcal {G}_{2}\) do not interact, their choices are only dictated by their environments. This conclusion looks quite reasonable in the present context.

Remark 2

(1) Notice that, if \(\lambda _{j}=0\), formula (2.12) reduces to \(n_{j}(t)=\|b_{j}{\Psi}\|^{2}=n_{j}(0)\), \(\forall \,t\). This is not surprising, since it reflects what was already deduced before in the absence of interactions of any kind. In this case, in fact, we have seen that the initial state of mind is what really matters for the final decision, since there is no time evolution of the operator \(\hat {n}_{j}\) at all.

(2) More generally, formula (2.12) suggests the introduction of a sort of characteristic time for \(\mathcal {G}_{j}\), \(\tau _{j}=\frac {{\Omega }_{j}}{2{\pi \lambda _{j}^{2}}}\). The more t approaches \(\tau _{j}\), the bigger the influence of \(\mathcal {R}_{j}\) on \(\mathcal {G}_{j}\) is. In particular, if \(\lambda _{j}\rightarrow 0\), \(\tau _{j}\) diverges. Hence we recover our previous conclusions: \(\mathcal {G}_{j}\) is not influenced at all by \(\mathcal {R}_{j}\), even after a long time. A similar behavior is deduced also when \({\Omega }_{j}\) increases: the larger its value, the larger the value of \(\tau _{j}\). In other words, for large \({\Omega }_{j}\) the influence of the environment is effective only after a sufficiently long interval. This is not very different from what we have deduced in other systems, [4], where analogous parameters of the hamiltonian measure the inertia of that particular part of the system. Of course, \(\tau _{j}\) can be considered as a sort of decision time.

(3) Since the rational choice of both players is 1, (2.13) shows that rationality really belongs to \(\mathcal {R}_{j}\), rather than to \(\mathcal {G}_{j}\): in our version of the game, the players \(\mathcal {G}_{j}\) do not need to be rational, at least if their reservoirs behave rationally!
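
The role of the characteristic time in Remark 2 can be made concrete with a small sketch. It assumes an exponential relaxation law, \(n_{j}(t)=n_{j}(0)e^{-t/\tau _{j}}+N_{j}(1-e^{-t/\tau _{j}})\), which is consistent with the limits discussed in the remark but is stated here as an assumption; the function names and the initial value 0.2 are hypothetical.

```python
import math

def tau(lam, Omega):
    """Characteristic time tau_j = Omega_j / (2 pi lambda_j^2) of Remark 2."""
    return Omega / (2 * math.pi * lam ** 2)

def n_of_t(t, n0, N, lam, Omega):
    """Assumed relaxation law: n(t) = n0 e^{-t/tau} + N (1 - e^{-t/tau})."""
    if lam == 0:
        return n0          # no coupling: the initial mean value is frozen
    decay = math.exp(-t / tau(lam, Omega))
    return n0 * decay + N * (1 - decay)

lam, Omega = 0.5, 0.1      # values used later in the text for both players
n0, N = 0.2, 1.0           # hypothetical initial mean value and reservoir constant

print("tau =", tau(lam, Omega))
for t in (0.0, tau(lam, Omega), 5 * tau(lam, Omega), 50 * tau(lam, Omega)):
    print(t, n_of_t(t, n0, N, lam, Omega))
```

With these parameters the decision time is \(0.2/\pi \), and after a few multiples of it the mean value is already dominated by the reservoir constant, whatever the initial value.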

2.4 The Effect of Exchange Interaction

In the following we will fix \(\mu _{coop}=0\), allowing \(\mu _{ex}\) to be different from zero. In particular, from now on, for concreteness we fix the following values of the other parameters in the hamiltonian: \(\omega _{1}=1\), \(\omega _{2}=2\), \(\lambda _{1}=\lambda _{2}=0.5\), \({\Omega }_{1}={\Omega }_{2}=0.1\). This choice is meant to give almost identical players and reservoirs: the only difference between \(\mathcal {G}_{1}\) and \(\mathcal {G}_{2}\) lies in the values of \(\omega _{1}\) and \(\omega _{2}\).

After a few computations, calling \(V(t)=e^{iUt}\) and \(V_{k,l}(t)\) its (k,l)-matrix element, we deduce that

and

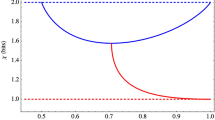

To begin with, we consider three different choices for \(\mu _{ex}\): (a) \(\mu _{ex}=0.01\), (b) \(\mu _{ex}=0.05\) and (c) \(\mu _{ex}=0.1\). In all these cases it is possible to check that both \(V_{1,1}(t)\) and \(V_{1,2}(t)\) converge to zero when t diverges. On the other hand, neither \({{\int }_{0}^{t}}dt_{1}|V_{1,1}(t-t_{1})|^{2}\) nor \({{\int }_{0}^{t}}dt_{1}|V_{1,2}(t-t_{1})|^{2}\) converges to zero. All these computations can be performed analytically and the explicit result, in case (a), is the following:

As we can see, these are symmetrical, and not very different from the result in (2.13): the two players modify their decisions with respect to the case \(\mu _{ex}=0\), but just a little bit! This is because \(\mu _{ex}\) is too small. In fact, let us consider the case (b) above, \(\mu _{ex}=0.05\). In this case, repeating the same computations, we conclude that

which shows that the mixing between \(N_{1}\) and \(N_{2}\) increases a little bit. And, in fact, this mixing increases even more in case (c), when \(\mu _{ex}=0.1\): we get

To clarify further the role of the exchange hamiltonian, we now consider much higher values of \(\mu _{ex}\), keeping again \(\mu _{coop}=0\). Hence we take: (d) \(\mu _{ex}=10\) and (e) \(\mu _{ex}=100\). In the first case, \(\mu _{ex}=10\), we find

while in case (e), \(\mu _{ex}=100\), we obtain

We believe that, for \(\mu _{ex}\gg \mu _{coop}=0\), the two players eventually reach a common choice, which should be \(n_{1}(\infty )=n_{2}(\infty )=\frac {1}{2}(N_{1}+N_{2})\): perfect mixing! Once again, then, the decisions of \(\mathcal {G}_{1}\) and \(\mathcal {G}_{2}\) are driven by the reservoirs but, in this case, the stronger the interaction between \(\mathcal {G}_{1}\) and \(\mathcal {G}_{2}\), the more symmetrically \(\mathcal {R}_{1}\) and \(\mathcal {R}_{2}\) affect the two players.
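
The trend toward equal weights can be explored numerically with a rough sketch. It rests on assumptions that are not taken from the text: the exchange-only dynamics is reduced to the pair of equations \(\dot {b}_{1}=-\nu _{1}b_{1}-i\mu _{ex}b_{2}+\ldots \), \(\dot {b}_{2}=-\nu _{2}b_{2}-i\mu _{ex}b_{1}+\ldots \) (dots standing for the reservoir noise), with \(\nu _{j}=i\omega _{j}+\pi \lambda _{j}^{2}/{\Omega }_{j}\), and the weight of \(N_{l}\) in \(n_{1}(\infty )\) is taken as \(\frac {2\pi \lambda ^{2}}{\Omega }{\int }_{0}^{\infty }|V_{1,l}(t)|^{2}dt\), a normalization chosen so that \(\mu _{ex}=0\) gives back \(n_{1}(\infty )=N_{1}\). The names `rk4_step` and `mixing_weights` are hypothetical.

```python
import math

def rk4_step(f, v, dt):
    """One classical Runge-Kutta step for dv/dt = f(v), v a list of complex numbers."""
    k1 = f(v)
    k2 = f([x + 0.5 * dt * k for x, k in zip(v, k1)])
    k3 = f([x + 0.5 * dt * k for x, k in zip(v, k2)])
    k4 = f([x + dt * k for x, k in zip(v, k3)])
    return [x + dt * (a + 2 * b + 2 * c + d) / 6
            for x, a, b, c, d in zip(v, k1, k2, k3, k4)]

def mixing_weights(mu_ex, omega=(1.0, 2.0), lam=0.5, Omega=0.1, T=2.0, dt=2e-4):
    """Weights of N_1 and N_2 in n_1(infinity) under the assumed reduced model."""
    gamma = math.pi * lam ** 2 / Omega
    nu = [complex(gamma, w) for w in omega]

    def rhs(v):
        # Assumed reduced equations: damping, free rotation and exchange coupling.
        return [-nu[0] * v[0] - 1j * mu_ex * v[1],
                -1j * mu_ex * v[0] - nu[1] * v[1]]

    col1, col2 = [1 + 0j, 0j], [0j, 1 + 0j]     # columns of V(0) = identity
    steps = int(round(T / dt))
    w_self = w_other = 0.0
    for n in range(steps + 1):
        weight = 0.5 if n in (0, steps) else 1.0          # trapezoidal rule
        w_self += weight * abs(col1[0]) ** 2 * dt         # |V_11(t)|^2
        w_other += weight * abs(col2[0]) ** 2 * dt        # |V_12(t)|^2
        col1, col2 = rk4_step(rhs, col1, dt), rk4_step(rhs, col2, dt)
    return 2 * gamma * w_self, 2 * gamma * w_other

w_self, w_other = mixing_weights(1.0)
print(w_self, w_other)
```

Within this simplified model the two weights always add up to one, the weight of the other player's reservoir is below one percent for \(\mu _{ex}=1\), and it grows toward one half as \(\mu _{ex}\) increases, in line with the perfect mixing conjectured above.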

2.5 The Effect of Cooperative Interaction

We now consider the case in which only the cooperative part of the hamiltonian is switched on, \(\mu _{coop}\neq 0\), while the exchange contribution is turned off, \(\mu _{ex}=0\). As before, and for the same reasons, we take the following values of the other parameters in the hamiltonian: \(\omega _{1}=1\), \(\omega _{2}=2\), \(\lambda _{1}=\lambda _{2}=0.5\), \({\Omega }_{1}={\Omega }_{2}=0.1\), and then we put (a) \(\mu _{coop}=0.01\), (b) \(\mu _{coop}=0.05\) and (c) \(\mu _{coop}=0.1\).

In this case we deduce that

and

Again it is possible to check that all the functions \(V_{k,l}(t)\) above converge to zero when t diverges. On the other hand, \({{\int }_{0}^{t}}dt_{1}|V_{k,l}(t-t_{1})|^{2}\) admits a non-zero limiting value for t→∞. The results are the following: in case (a), \(\mu _{coop}=0.01\) and \(\mu _{ex}=0\), we have

In case (b), \(\mu _{coop}=0.05\) and \(\mu _{ex}=0\), we have

while in case (c), \(\mu _{coop}=0.1\) and \(\mu _{ex}=0\), we get

Again we observe that the higher the value of \(\mu _{coop}\), the higher the mixing between the effects of the two sub-reservoirs. Hence we are led to a conclusion similar to the one of the previous situation, and we expect that, for \(\mu _{coop}\gg \mu _{ex}=0\), the two players arrive at the following asymptotic (in time) choice: \(n_{1}(\infty )=\frac {1}{2}(N_{1}+(1-N_{2}))\), \(n_{2}(\infty )=\frac {1}{2}((1-N_{1})+N_{2})\).

2.6 Full Hamiltonian

In this last part, we consider together the effects of the exchange and of the cooperative hamiltonians, still keeping unchanged the values \(\omega _{1}=1\), \(\omega _{2}=2\), \(\lambda _{1}=\lambda _{2}=0.5\), \({\Omega }_{1}={\Omega }_{2}=0.1\). Now both \(\mu _{ex}\) and \(\mu _{coop}\) will be taken different from zero. In particular, we will consider the situation in which \(\mu _{ex}\) and \(\mu _{coop}\) are significantly different from each other (which we do not expect to differ much from the cases considered before), and the case in which they are similar. In more detail, these will be our choices of parameters: (a) \(\mu _{ex}=0.01\) and \(\mu _{coop}=100\); (b) \(\mu _{ex}=0.01\) and \(\mu _{coop}=1\); (c) \(\mu _{ex}=\mu _{coop}=0.5\); (d) \(\mu _{ex}=1\) and \(\mu _{coop}=0.01\); and (e) \(\mu _{ex}=100\) and \(\mu _{coop}=0.01\).

In this case we deduce that

and

The following are the results we have deduced in the five cases listed above. We have:

- Case (a), \(\mu_{ex}=0.01\) and \(\mu_{coop}=100\):

$$ \left\{\begin{array}{l} n_{1}(\infty)\simeq 0.50317 N_{1}+0.49682 (1-N_{2}),\\ n_{2}(\infty)\simeq 0.49682 (1-N_{1})+0.50317 N_{2}. \end{array}\right. $$(2.28)

- Case (b), \(\mu_{ex}=0.01\) and \(\mu_{coop}=1\):

$$ \left\{\begin{array}{l} n_{1}(\infty)\simeq 0.91914 N_{1}+0.08075 (1-N_{2}),\\ n_{2}(\infty)\simeq 0.08075 (1-N_{1})+0.91914 N_{2}. \end{array}\right. $$(2.29)

- Case (c), \(\mu_{ex}=0.5\) and \(\mu_{coop}=0.5\):

$$ \left\{\begin{array}{ll} n_{1}(\infty)&\simeq 0.85428 N_{1}+0.00626 (1-N_{1})+0.11974 N_{2}+0.01917 (1-N_{2})\\ &=0.84802 N_{1}+0.10057 N_{2}+0.02543,\\ n_{2}(\infty)&\simeq 0.11974 N_{1}+0.01917 (1-N_{1})+0.85428 N_{2}+0.00626 (1-N_{2})\\ &=0.10057 N_{1}+0.84802 N_{2}+0.02543. \end{array}\right. $$(2.30)

Notice that, in these equations, the first form has been written explicitly simply because, in this way, the different contributions arising from (2.26) and (2.27) can be easily identified.

- Case (d), \(\mu_{ex}=1\) and \(\mu_{coop}=0.01\):

$$ \left\{\begin{array}{l} n_{1}(\infty)\simeq 0.99210 N_{1}+0.00795 N_{2},\\ n_{2}(\infty)\simeq 0.00795 N_{1}+0.99210 N_{2}. \end{array}\right. $$(2.31)

- Finally, case (e), \(\mu_{ex}=100\) and \(\mu_{coop}=0.01\):

$$ \left\{\begin{array}{l} n_{1}(\infty)\simeq 0.50308 N_{1}+0.49692 N_{2}, \\ n_{2}(\infty)\simeq 0.49692 N_{1}+0.50308 N_{2}. \end{array}\right. $$(2.32)

We postpone the detailed analysis of these and of the previous results to the next section. Here we just add that, contrary to what we have seen in Section 2.1, in this more general case we expect the characteristic time to depend also on \(\mu_{ex}\) and \(\mu_{coop}\), so that these parameters are expected to contribute to the decision time.

3 Analysis of the Results and Conclusions

The first clear outcome of our analysis is that, when \(\mathcal {G}_{1}\) and \(\mathcal {G}_{2}\) do not directly interact, it is really the environment which produces their decisions. Hence the rationality of the players is strongly linked to the nature of the reservoirs: if both reservoirs have \(N_{j}=1\), \(j=1,2\), then \(n_{j}(\infty )=1\), and the two players make the most rational choice according to the loss aversion rule. More interesting is the situation in which we allow some interaction between \(\mathcal {G}_{1}\) and \(\mathcal {G}_{2}\). In particular our results show that, when at least one of \(\mu_{ex}\) and \(\mu_{coop}\) is different from zero, but small, the value of \(n_{j}(\infty )\) is again essentially decided by the j-th part of the reservoir. However, when the numerical value of one of the two parameters increases, some mixing becomes possible. For instance, we see that when \(\mu_{ex}=100\) and \(\mu_{coop}=0\), \(n_{1}(\infty )\simeq 0.50154N_{1}+0.49846N_{2}\) and \(n_{2}(\infty )\simeq 0.49846N_{1}+0.50154N_{2}\). This means that, even if the two components of the reservoir do not mutually interact, the existence of a direct interaction between \(\mathcal {G}_{1}\) and \(\mathcal {G}_{2}\) reshuffles the cards: the final decision of each player is not only related to the value of his own part of the reservoir (i.e. to \(N_{1}\) or to \(N_{2}\)), but it is a mixture of the two and, at least for this high value of \(\mu_{ex}\), \(N_{1}\) and \(N_{2}\) have almost the same weights in this mixture for both \(\mathcal {G}_{1}\) and \(\mathcal {G}_{2}\). A similar behavior is observed when \(\mu_{ex}=0\) while \(\mu_{coop}\) increases: again the mixing of the effects of \(\mathcal {R}_{1}\) and \(\mathcal {R}_{2}\) becomes stronger and stronger as \(\mu_{coop}\) increases. However, as we see from (2.23)–(2.25), \(N_{1}\) mixes with \(1-N_{2}\) (rather than with \(N_{2}\)) and \(N_{2}\) with \(1-N_{1}\) (rather than with \(N_{1}\)). Hence this contribution to the hamiltonian behaves differently from the other one, which is natural given the different nature of the two interactions.

When we consider both contributions in \(h_{int}\), the two effects come together, as we see in formulas (2.28)–(2.32). From these formulas we also see that in the extreme situations (when \(\mu_{ex}\) is much smaller or much larger than \(\mu_{coop}\)), not unexpectedly, the final decisions of \(\mathcal {G}_{1}\) and \(\mathcal {G}_{2}\) are similar to those of the previous cases (i.e. to the cases in which one of the \(\mu \)'s was zero). On the other hand, when \(\mu_{ex}=\mu_{coop}\), the two effects are both clearly visible, see formula (2.30).

In order to compare these results with those in the Introduction, we begin with a very evident fact: the initial state of mind of the players plays absolutely no role in the final decision, except when there is no interaction at all. Whatever their state was at \(t=0\), its effect simply disappears as t increases. This clearly reflects the fact that our model is not really the two-player game proposed in [7, 8], as we have already stressed, but a slightly different version of it.

Let us now consider four different cases, depending on the values \(N_{j}\) of \(\mathcal {R}_{j}\). Case (I): \(N_{1}=N_{2}=0\); Case (II): \(N_{1}=0\) and \(N_{2}=1\); Case (III): \(N_{1}=1\) and \(N_{2}=0\); Case (IV): \(N_{1}=N_{2}=1\). From the formulas of Section 2 we deduce the following:

1. The only way in which both \(\mathcal {G}_{1}\) and \(\mathcal {G}_{2}\) choose 1 is when \(N_{1}=N_{2}=1\), but not for all values of \(\mu_{ex}\) and \(\mu_{coop}\). For instance, this is apparently not so when \(\mu_{ex}\ll \mu_{coop}\), see (2.28). However, when this happens, \(n_{1}(\infty )\) and \(n_{2}(\infty )\) still coincide.

2. On exactly the opposite side, when \(N_{1}=N_{2}=0\) the values of \(n_{1}(\infty )\) and \(n_{2}(\infty )\) always stay very low, except again when \(\mu_{ex}\ll \mu_{coop}\). Even then, \(n_{1}(\infty )\) and \(n_{2}(\infty )\) still coincide. The larger \(\mu_{coop}\) is with respect to \(\mu_{ex}\), the larger the common value \(n_{1}(\infty )=n_{2}(\infty )\), which, as our computations suggest, asymptotically approaches the value \(\frac {1}{2}\).

3. When \(N_{1}=0\) and \(N_{2}=1\), in most of the cases considered here \(n_{1}(\infty )\) stays close to 0 while \(n_{2}(\infty )\) is close to 1. However, when \(\mu_{coop}\ll \mu_{ex}\), our numerical results suggest that \(n_{1}(\infty )\simeq n_{2}(\infty )\simeq \frac {1}{2}\), see (2.32). Mirror-image conclusions hold when \(N_{1}=1\) and \(N_{2}=0\).

4. While there is apparently no other way to get \(n_{1}(\infty )=n_{2}(\infty )=1\) than having \(N_{1}=N_{2}=1\), there exist several possibilities to have \(n_{1}(\infty )=n_{2}(\infty )\). Therefore, in a slightly modified version of the game in which we look for equal decisions (not necessarily equal to 1), there are plenty of situations in which this happens.
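The observations above can be cross-checked by evaluating (2.28)–(2.32) on the four reservoir configurations (I)–(IV). What follows is only a minimal sketch: the coefficients are copied from those formulas, while the names `COEFFICIENTS`, `RESERVOIRS` and `asymptotic_choices` are introduced here for illustration and are not part of the model.

```python
# Each case is stored as (p, q, r, s), meaning
#   n1(inf) = p*N1 + q*(1 - N1) + r*N2 + s*(1 - N2),
# and n2(inf) is obtained by exchanging N1 and N2, as in (2.28)-(2.32).
COEFFICIENTS = {
    "a": (0.50317, 0.0, 0.0, 0.49682),          # mu_ex = 0.01, mu_coop = 100
    "b": (0.91914, 0.0, 0.0, 0.08075),          # mu_ex = 0.01, mu_coop = 1
    "c": (0.85428, 0.00626, 0.11974, 0.01917),  # mu_ex = mu_coop = 0.5
    "d": (0.99210, 0.0, 0.00795, 0.0),          # mu_ex = 1,    mu_coop = 0.01
    "e": (0.50308, 0.0, 0.49692, 0.0),          # mu_ex = 100,  mu_coop = 0.01
}

# Reservoir configurations (I)-(IV) as pairs (N1, N2).
RESERVOIRS = {"I": (0, 0), "II": (0, 1), "III": (1, 0), "IV": (1, 1)}


def asymptotic_choices(case, N1, N2):
    """Return (n1(inf), n2(inf)) for one of the cases (a)-(e)."""
    p, q, r, s = COEFFICIENTS[case]
    n1 = p * N1 + q * (1 - N1) + r * N2 + s * (1 - N2)
    n2 = p * N2 + q * (1 - N2) + r * N1 + s * (1 - N1)
    return n1, n2


# Print the asymptotic decisions for every case and every configuration.
for case in COEFFICIENTS:
    for label, (N1, N2) in RESERVOIRS.items():
        n1, n2 = asymptotic_choices(case, N1, N2)
        print(f"case ({case}), reservoir {label}: n1 = {n1:.5f}, n2 = {n2:.5f}")
```

Storing each formula in its unexpanded form keeps the contributions of \(N_{j}\) and \(1-N_{j}\) separate, so the exchange-like and cooperative-like terms remain visible in the table of coefficients.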

Remark 3

(1) A different possibility, which may be closer to the usual interpretation of what is quantum in decision making, is to read the \(n_{j}(\infty )\) deduced above in a probabilistic way. For instance, rather than taking \(n_{j}(\infty )\) as the actual decision of \(\mathcal {G}_{j}\), we could regard it as a sort of probability that \(\mathcal {G}_{j}\) chooses 0 or 1. Then, instead of looking at the square moduli of the coefficients of the vectors in Ψ, we look directly at \(n_{j}(\infty )\). But this does not fit well with our general interpretation, see [4], and we will not insist on it here.

(2) It should probably be stressed that the payoffs a, b, c and d do not enter explicitly in the definition of the hamiltonian, at least in the model considered here. In fact, we are interested here in the possibility that \(\mathcal {G}_{j}\) makes the rational choice for a fixed choice of parameters satisfying \(c>a>d>b\), whatever this choice is. Changing their values while maintaining these inequalities does not affect the players’ behavior, of course. Nevertheless, it could be interesting to look for a different model in which the role of the payoffs is evident in the hamiltonian of the system itself, or directly in the state describing the system at \(t=0\). The (probably) easiest way to include the payoffs directly in the hamiltonian is to assume, for instance, that \(\mu_{ex}\) and \(\mu_{coop}\) depend explicitly on a, b, c and d. For instance, if this dependence is such that \(\mu_{ex}>\mu_{coop}\), then the effect of the exchange interaction would be stronger than that of the cooperative term in \(h_{int}\). More sophisticated dependencies could be considered, like for instance some nonlinear extra term in H depending on the payoffs. But this would make it very hard, if not impossible, to get an exact analytical solution, and perturbative expansions would possibly have to be used.

This is probably just the beginning of the story: several aspects remain to be considered. First of all, we have considered here just one particular choice of the many parameters of H. A natural question is what changes when these parameters, and in particular those which reflect the nature of the players, are fixed in a different way. For instance, in view of the meaning of the \(\omega_{j}\)’s we have deduced for other systems, [4], we could expect a larger inertia of, say, \(\mathcal {G}_{1}\) with respect to \(\mathcal {G}_{2}\) if \(\omega_{1}\gg \omega_{2}\): \(\mathcal {G}_{1}\) changes his original idea slowly when compared to \(\mathcal {G}_{2}\). However, from the point of view of \(n_{1}(\infty )\) and \(n_{2}(\infty )\), we do not expect this to change our conclusions much, but at most some (minor) details, like the decision time. Moreover, the hamiltonian considered here is just one among all the possible choices. In our opinion it is a rather natural one and, compared with other possibilities, it allows a more natural interpretation. Still, one could look for other possibilities, for instance by adding nonlinearities to the model. In this case, however, numerical techniques would most probably have to be adopted. Another interesting question is the following: is there any other problem in decision making theory to which the method proposed here could be applied? We believe this is very plausible. These are some of the aspects we plan to consider in the near future.

Notes

Notice that in any choice X 1 Y 2 the indices 1 and 2 refer to the players, while X and Y refer to the possible choices of the players, 0 or 1.

This is what in the literature is called loss aversion.

In principle we should use a discrete variable to label each element of the reservoirs. However, since integrals are quite often easier to be computed than series, as usually done in the literature we consider this label to be real.

References

Baaquie, B.E.: Quantum Finance. Cambridge University Press, Cambridge (2004)

Khrennikov, A.Y.: Information Dynamics in Cognitive, Psychological, Social and Anomalous Phenomena. Kluwer, Dordrecht (2004)

Khrennikov, A.: Ubiquitous Quantum Structure: from Psychology to Finances. Springer, Berlin (2010)

Bagarello, F.: Quantum Dynamics for Classical Systems: with Applications of the Number Operator. Wiley, New York (2012)

Haven, E., Khrennikov, A.: Quantum Social Science. Cambridge University Press, New York (2013)

Busemeyer, J.R., Bruza, P.D.: Quantum Models of Cognition and Decision. Cambridge University Press, Cambridge (2012)

Asano, M., Ohya, M., Tanaka, Y., Basieva, I., Khrennikov, A.: Quantum-like model of brain’s functioning: decision making from decoherence. J. Theor. Biol. 281, 56–64 (2011)

Asano, M., Ohya, M., Tanaka, Y., Basieva, I., Khrennikov, A.: Quantum-like dynamics of decision-making. Phys. A 391, 2083–2099 (2012)

Manousakis, E.: Quantum formalism to describe binocular rivalry. Biosystems 98(2), 57–66 (2009)

Martínez-Martínez, I.: A connection between quantum decision theory and quantum games: the Hamiltonian of strategic interaction. J. Math. Psychol. 58, 33–44 (2014)

Busemeyer, J.R., Pothos, E.M.: A quantum probability explanation for violations of ’rational’ decision theory. Proc. R. Soc. B. doi:10.1098/rspb.2009.0121

Agrawal, P.M., Sharda, R.: OR Forum - Quantum mechanics and human decision making. Oper. Res. 61(1), 1–16 (2013)

Conte, E., Todarello, O., Federici, A., Vitiello, F., Lopane, M., Khrennikov, A., Zbilut, J.P.: Some remarks on an experiment suggesting quantum-like behavior of cognitive entities and formulation of an abstract quantum mechanical formalism to describe cognitive entity and its dynamics. Chaos Solitons Fractals 31, 1076–1088 (2006)

Busemeyer, J.R., Wang, Z., Townsend, J.T.: Quantum dynamics of human decision-making. J. Math. Psychol. 50, 220–241 (2006)

Pothos, E.M., Perry, G., Corr, P.J., Matthew, M.R., Busemeyer, J.R.: Understanding cooperation in the Prisoners Dilemma game. Personal. Individ. Differ. 51, 210–215 (2011)

Manousakis, E.: Founding quantum theory on the basis of consciousness. Found. of Phys. 36(6), 795–838 (2006)

Donald, M.J.: Quantum theory and the brain. Proc. Roy. Soc. Lond. A 427, 43–93 (1990)

Khrennikov, A.: On the physical basis of the theory of mental waves. Neuroquantology Suppl. 1 8(4), S71–S80 (2010)

Roman, P.: Advanced Quantum Mechanics. Addison–Wesley, New York (1965)

Merzbacher, E.: Quantum Mechanics. Wiley, New York (1970)

Acknowledgments

The author acknowledges partial support from Palermo University and from G.N.F.M. The author also thanks the referees for their suggestions, useful to produce an improved version of the manuscript.


Appendix: Few Results on the Number Representation


To keep the paper self-contained, we discuss here a few important facts in quantum mechanics and in the so–called number representation. More details can be found, for instance, in [19, 20], as well as in [4].

Let \(\mathcal {H}\) be a Hilbert space, and \(B(\mathcal {H})\) the set of all the (bounded) operators on \(\mathcal {H}\). Let \(\mathcal {S}\) be our physical system, and \(\mathfrak {A}\) the set of all the operators useful for a complete description of \(\mathcal {S}\), which includes the observables of \(\mathcal {S}\). For simplicity, it is convenient (but not really necessary) to assume that \(\mathfrak {A}\) coincides with \(B(\mathcal {H})\) itself. The description of the time evolution of \(\mathcal {S}\) is related to a self–adjoint operator \(H=H^{\dagger }\) which is called the Hamiltonian of \(\mathcal {S}\), and which in standard quantum mechanics represents the energy of \(\mathcal {S}\). In this paper we have adopted the so–called Heisenberg representation, in which the time evolution of an observable \(X\in \mathfrak {A}\) is given by

$$ X(t)=e^{iHt}Xe^{-iHt}, $$(A.1)

or, equivalently, by the solution of the differential equation

$$ \frac {dX(t)}{dt}=i[H,X(t)], $$(A.2)

where \([A,B]:=AB-BA\) is the commutator between A and B. The time evolution defined in this way is a one–parameter group of automorphisms of \(\mathfrak {A}\).

An operator \(Z\in \mathfrak {A}\) is a constant of motion if it commutes with H. Indeed, in this case, equation (A.2) implies that \(\dot Z(t)=0\), so that Z(t)=Z for all t.
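The two statements above can be checked numerically on a small example. The sketch below assumes the standard Heisenberg form \(X(t)=e^{iHt}Xe^{-iHt}\) (in units where \(\hbar =1\)), and uses toy \(2\times 2\) matrices that are purely illustrative and are not taken from the model of Section 2; the function names are introduced here only for this example.

```python
import numpy as np


def heisenberg(X, H, t):
    """Return X(t) = exp(iHt) X exp(-iHt) for a Hermitian matrix H (hbar = 1)."""
    energies, vectors = np.linalg.eigh(H)
    # exp(iHt) built from the spectral decomposition of H
    U = vectors @ np.diag(np.exp(1j * energies * t)) @ vectors.conj().T
    return U @ X @ U.conj().T


def commutator(A, B):
    return A @ B - B @ A


# Toy self-adjoint Hamiltonian and observable (illustrative values only).
H = np.array([[1.0, 0.3], [0.3, 2.0]])
X = np.array([[0.0, 1.0], [1.0, 0.0]])
Z = H @ H  # any function of H commutes with H, so Z should not evolve

t, dt = 0.7, 1e-5
X_t = heisenberg(X, H, t)

# Central finite difference approximating dX(t)/dt.
dX_dt = (heisenberg(X, H, t + dt) - heisenberg(X, H, t - dt)) / (2 * dt)

# Residual of the equation of motion dX/dt = i [H, X(t)].
eom_residual = np.max(np.abs(dX_dt - 1j * commutator(H, X_t)))

# Distance between Z(t) and Z: zero (up to rounding) for a constant of motion.
drift_of_Z = np.max(np.abs(heisenberg(Z, H, t) - Z))

print("equation-of-motion residual:", eom_residual)
print("drift of the constant of motion:", drift_of_Z)
```

Both printed numbers should be at the level of numerical noise: the first reflects the equivalence between the explicit evolution and the differential equation, the second the fact that an operator commuting with H does not change in time.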

In some previous applications, [4], a special role was played by the so–called canonical commutation relations. Here, these are replaced by the so–called canonical anti–commutation relations (CAR): we say that a set of operators \(\{a_{\ell },~a_{\ell }^{\dagger }, \ell =1,2,\ldots ,L\}\) satisfies the CAR if the conditions

$$ \{a_{\ell },a_{n}^{\dagger }\}=\delta _{\ell ,n}\mathbb {1},\qquad \{a_{\ell },a_{n}\}=\{a_{\ell }^{\dagger },a_{n}^{\dagger }\}=0 $$(A.3)

hold true for all \(\ell ,n=1,2,\ldots ,L\). Here, \(\mathbb {1}\) is the identity operator and \(\{x,y\}:=xy+yx\) is the anticommutator of x and y. These operators, which are analyzed in any textbook on quantum mechanics (see, for instance, [19, 20]), are those used to describe L different modes of fermions. From these operators we can construct \(\hat {n}_{\ell }=a_{\ell }^{\dagger } a_{\ell }\) and \(\hat {N}={\sum }_{\ell =1}^{L} \hat {n}_{\ell }\), which are both self–adjoint. In particular, \(\hat {n}_{\ell }\) is the number operator for the ℓ–th mode, while \(\hat {N}\) is the number operator of \(\mathcal {S}\). Compared with bosonic operators, the operators introduced here have a very important feature: if we try to square them (or to raise them to higher powers), we simply get zero: for instance, from (A.3), we have \(a_{\ell }^{2}=0\). This is related to the fact that fermions satisfy the Pauli exclusion principle [19].
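A concrete matrix realization may help to make the CAR less abstract. The sketch below builds L fermionic modes as \(2^{L}\times 2^{L}\) real matrices by means of the Jordan–Wigner construction, which is an assumption made here for illustration (the text does not fix any particular representation), and then checks the anticommutation rules and the nilpotency \(a_{\ell }^{2}=0\). All function names are hypothetical.

```python
from functools import reduce

import numpy as np


def fermionic_modes(L):
    """Return matrices a_1, ..., a_L acting on a 2**L-dimensional space.

    Jordan-Wigner construction: a_l = sign x ... x sign x lower x one x ... x one,
    with `lower` in position l. This is one possible representation of the CAR.
    """
    lower = np.array([[0.0, 1.0], [0.0, 0.0]])  # single-mode annihilation operator
    sign = np.diag([1.0, -1.0])                 # parity factor, anticommutes with `lower`
    one = np.eye(2)
    modes = []
    for ell in range(L):
        factors = [sign] * ell + [lower] + [one] * (L - ell - 1)
        modes.append(reduce(np.kron, factors))
    return modes


def anticommutator(x, y):
    return x @ y + y @ x


def satisfies_car(modes):
    """Check {a_l, a_n^dagger} = delta_{l,n} 1 and {a_l, a_n} = 0 for all l, n."""
    dim = modes[0].shape[0]
    for i, a_i in enumerate(modes):
        for j, a_j in enumerate(modes):
            expected = np.eye(dim) if i == j else np.zeros((dim, dim))
            # The matrices are real, so the adjoint is just the transpose.
            if not np.allclose(anticommutator(a_i, a_j.T), expected):
                return False
            if not np.allclose(anticommutator(a_i, a_j), 0.0):
                return False
    return True


modes = fermionic_modes(3)
print("CAR satisfied:", satisfies_car(modes))
print("a_l squared vanishes:", all(np.allclose(a @ a, 0.0) for a in modes))
```

The relation \(\{a_{\ell }^{\dagger },a_{n}^{\dagger }\}=0\) is not tested separately, since it is the adjoint of \(\{a_{n},a_{\ell }\}=0\).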

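The Hilbert space of our system is constructed as follows: we introduce the vacuum of the theory, that is a vector \(\varphi _{0}\) which is annihilated by all the operators \(a_{\ell }\): \(a_{\ell }\varphi _{0}=0\) for all \(\ell =1,2,\ldots ,L\). Such a nonzero vector surely exists. Then we act on \(\varphi _{0}\) with the operators \(a_{\ell }^{\dagger }\) (but not with higher powers, since these powers are simply zero!):

$$ \varphi _{n_{1},n_{2},\ldots ,n_{L}}:=(a_{1}^{\dagger })^{n_{1}}(a_{2}^{\dagger })^{n_{2}}{\cdots } (a_{L}^{\dagger })^{n_{L}}\varphi _{0}, $$(A.4)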

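\(n_{\ell }=0,1\) for all ℓ. These vectors form an orthonormal set and are eigenstates of both \(\hat {n}_{\ell }\) and \(\hat {N}\): \(\hat {n}_{\ell }\varphi _{n_{1},n_{2},\ldots ,n_{L}}=n_{\ell }\varphi _{n_{1},n_{2},\ldots ,n_{L}}\) and \(\hat {N}\varphi _{n_{1},n_{2},\ldots ,n_{L}}=N\varphi _{n_{1},n_{2},\ldots ,n_{L}},\) where \(N={\sum }_{\ell =1}^{L}n_{\ell }\). Moreover, using the CAR, we deduce that

$$ \hat {n}_{\ell }\left(a_{\ell }\varphi _{n_{1},n_{2},\ldots ,n_{L}}\right)=\left\{\begin{array}{ll} (n_{\ell }-1)\left(a_{\ell }\varphi _{n_{1},n_{2},\ldots ,n_{L}}\right), & n_{\ell }=1,\\ 0, & n_{\ell }=0, \end{array}\right. $$(A.5)

and

$$ \hat {n}_{\ell }\left(a_{\ell }^{\dagger }\varphi _{n_{1},n_{2},\ldots ,n_{L}}\right)=\left\{\begin{array}{ll} (n_{\ell }+1)\left(a_{\ell }^{\dagger }\varphi _{n_{1},n_{2},\ldots ,n_{L}}\right), & n_{\ell }=0,\\ 0, & n_{\ell }=1, \end{array}\right. $$(A.6)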

for all ℓ. Then \(a_{\ell }\) and \(a_{\ell }^{\dagger }\) are called the annihilation and the creation operators. Notice that, in some sense, \(a_{\ell }^{\dagger }\) is also an annihilation operator since, acting on a state with \(n_{\ell }=1\), we destroy that state.

The Hilbert space \(\mathcal {H}\) is obtained by taking the linear span of all these vectors. Of course, \(\mathcal {H}\) has a finite dimension. In particular, for just one mode of fermions, \(\dim (\mathcal {H})=2\). This also implies that, contrary to what happens for bosons, all the fermionic operators are bounded.
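For a single mode everything can be written down explicitly with \(2\times 2\) matrices. The following minimal sketch uses the basis in which the vacuum is the first canonical vector; this choice of basis, like the variable names, is an assumption made only for illustration.

```python
import numpy as np

a = np.array([[0.0, 1.0], [0.0, 0.0]])  # annihilation operator
a_dag = a.T                              # creation operator (real matrix: adjoint = transpose)
n_hat = a_dag @ a                        # number operator

phi0 = np.array([1.0, 0.0])              # vacuum, annihilated by a
phi1 = a_dag @ phi0                      # the only other number state

# The two number states span the whole space, so dim(H) = 2.
basis = np.column_stack([phi0, phi1])
dimension = np.linalg.matrix_rank(basis)

# Mean values of the number operator on the two basis vectors.
occupations = [float(v @ n_hat @ v) for v in (phi0, phi1)]

print("dimension of the Hilbert space:", dimension)
print("occupation numbers:", occupations)
print("a acting on the vacuum:", a @ phi0)
print("a_dag acting on the occupied state:", a_dag @ phi1)
print("operator norm of a:", np.linalg.norm(a, 2))
```

The last line illustrates the boundedness mentioned above: on a finite-dimensional space the operator norm is simply the largest singular value of the matrix, which is finite.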

The vector \(\varphi _{n_{1},n_{2},\ldots ,n_{L}}\) in (A.4) defines a vector (or number) state over the algebra \(\mathfrak {A}\) as

$$ \omega _{n_{1},n_{2},\ldots ,n_{L}}(X)=\langle \varphi _{n_{1},n_{2},\ldots ,n_{L}},X\varphi _{n_{1},n_{2},\ldots ,n_{L}}\rangle , $$(A.7)

where \(\langle \,,\,\rangle \) is the scalar product in \(\mathcal {H}\). As we have discussed in [4], these states are useful to project from quantum to classical dynamics and to fix the initial conditions of the considered system.

Cite this article

Bagarello, F. A Quantum-Like View to a Generalized Two Players Game. Int J Theor Phys 54, 3612–3627 (2015). https://doi.org/10.1007/s10773-015-2599-x

DOI: https://doi.org/10.1007/s10773-015-2599-x