Abstract

In this paper, Type-2 Information Set (T2IS) features and Hanman Transform (HT) features are proposed as Higher Order Information Set (HOIS) based features for text-independent speaker recognition. The speech signals of different speakers, represented by Mel Frequency Cepstral Coefficients (MFCC), are converted into T2IS and HT features by taking into account the cepstral and temporal possibilistic uncertainties. The features are classified by the Improved Hanman Classifier (IHC), Support Vector Machine (SVM) and k-Nearest Neighbours (kNN). The performance of the proposed approaches is evaluated in terms of speed, computational complexity, memory requirement and accuracy on three datasets, namely NIST-2003, the VoxForge 2014 speech corpus and the VCTK speech corpus, and compared with that of baseline features such as MFCC, ∆MFCC, ∆∆MFCC and GFCC under white Gaussian noise at different signal-to-noise ratios. The proposed features reduce the feature size, computation time and complexity, and their performance is not degraded in the noisy environment.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

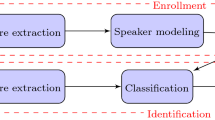

1 Introduction

Speaker-based biometric authentication in forensic and social media applications is emerging as a viable technology because speech data are easy and economical to acquire. Such systems have proved effective in noise-free environments, in the absence of channel variations and when sufficient data are available (Jayanna et al. 2009). The present work addresses noisy conditions, which pose a real-world challenge. The traditional features for speaker recognition are Mel Frequency Cepstral Coefficients (MFCC), which are sensitive to noise. MFCCs were first introduced by Davis and Mermelstein (1980) for word recognition, and many variants such as delta-MFCC and delta–delta MFCC (Kumar et al. 2011) were later proposed to make them robust in noisy environments. Robustness has also been pursued through feature normalization, such as cepstral mean and variance normalization (CMVN), RASTA filtering (Hermansky and Morgan 1994) and feature warping (Pelecanos and Sridharan 2001). Zhao et al. (2012) proposed a new speaker feature, Gammatone Frequency Cepstral Coefficients (GFCC), which is more robust to noise than the commonly used MFCC. As explained in (Zhao and Wang 2013), the frequency scale (Mel scale) employed in the filter bank and the nonlinear rectification (i.e., cubic root) used in deriving the cepstral coefficients yield features that are robust to noise.

The Gaussian mixture model (GMM) (Reynolds and Rose 1995; Reynolds 1995) is still the most common approach to speaker modeling in text-independent speaker recognition (Togneri and Pullella 2011). Reynolds et al. (2000) adapted speaker GMMs from a Universal Background Model (UBM) for speaker verification, an approach that has been found to be efficient.

Fuzzy logic has been used to handle uncertainty at the modeling, decision and feature dimensionality reduction stages, with promising results. The literature contains a large number of fuzzy-based modeling techniques, most of which are fuzzified versions of existing modeling and decision techniques.

Yuan et al. (1993) developed a fuzzy mathematical algorithm to extract speaker features based on line spectrum pair frequencies and the cepstrum derived from linear prediction analysis. Pierre Castellano (Yuan et al. 1993) used fuzzy set theory to provide a second-stage (post-processing) classification after an Artificial Neural Network (ANN) that performs the first stage of discrimination. Jawarkar et al. (2011) use the fuzzy min–max neural network for text-independent speaker identification. This three-layer feed-forward network uses fuzzy sets as pattern classes and grows adaptively to meet the demands of the problem. It yields good results compared with GMM.

Lee (2004) partitions the data space into several disjoint clusters by fuzzy clustering and then performs PCA using the fuzzy covariance matrix of each cluster. The speaker GMM is then obtained from the transformed, reduced-dimension feature vectors in each cluster. Compared with the conventional GMM with a diagonal covariance matrix, this method needs less storage and is faster. Lung (2004) extracts wavelet-transform-based features derived from fuzzy c-means clustering and finds that, with fuzzy c-means clustering, decreasing the number of training frames does not reduce the recognition rate. Wang et al. (2008) propose local PCA and kernel-based fuzzy clustering for feature extraction. These methods remove the time dependence and noise of speech, reduce the feature vector dimension and outperform the standard SVM and GMM. Mirhassani and Ting (2014) extract MFCCs with a narrower filter bank followed by a fuzzy-based feature selection method, which provides relevant, discriminative and complementary features and reduces dimensionality without compromising the speech recognition rate. Pinheiro et al. (2016) describe a novel GMM-UBM based system that deals with the session noise variability problem. The system uses a Type-2 fuzzy GMM framework in which the speaker GMM parameters are considered uncertain within an interval.

Fuzzy sets are characterized by a set of information source values and a membership function (MF) that maps these values to membership grades (degrees of belongingness or association) in the set. We, however, are interested in representing the uncertainty associated with a fuzzy set as a whole. The membership grades do not provide this overall uncertainty; they can only represent the degree to which an information source value belongs to, or is associated with, a vague concept represented by an MF.

Information set theory was proposed in (Aggarwal and Hanmandlu 2015) to overcome two shortcomings of fuzzy set theory. The first is that the elements of a fuzzy set are pairs whose components, though related, are delinked. The second is that it has no provision for representing both probabilistic and possibilistic uncertainties. We therefore use information set theory to handle the possibilistic uncertainty present in speech signals for text-independent speaker recognition.

1.1 Motivation

Although information set features have been used to develop speaker-based authentication systems, they cannot fully account for the uncertainty in MFCCs, and hardly any effort has been made in the literature to account for higher order uncertainty in MFCCs. This motivates us to represent higher order uncertainty in two ways: by using type-2 membership functions (MFs) in place of the type-1 MFs of the original information set features, leading to Type-2 Information Set features, and by applying the Hanman transform to the original information source values. As MFCCs derived in noisy environments are subject to higher order uncertainty, we investigate how effectively these approaches represent it.

2 Related topics

2.1 Mel-frequency cepstral coefficients

The framework for extracting Mel-Frequency Cepstral Coefficients (MFCC) from a speech signal is shown in Fig. 1 and consists of the following steps.

Step 1: Pre-process the speech signal \({\text{s(n)}}\) to boost the high-frequency components and eliminate the spectral tilt by applying a first-order high-pass (pre-emphasis) filter with \(\alpha =0.97\) as follows:

\({\text{s}}_p(n)={\text{s}}(n)-\alpha\,{\text{s}}(n-1)\)

This pre-emphasis has little impact on the robustness of MFCCs to noise. Because speech is a quasi-stationary signal, features extracted from the whole pre-processed signal are not reliable. The signal is, however, approximately stationary within a window of short duration, so features extracted within such a window are reliable. The signal is therefore divided into frames of 32 ms duration with a 16 ms overlap.

Step 2: Discard the silent periods using Voice Activity Detection (Sohn et al. 1999; Ephraim and Malah 1984) and retain only the voiced frames, since silent frames contribute little to the extraction of speaker-specific features.

Step 3: Calculate the power spectrum (periodogram) of each frame. This is motivated by the human cochlea (an organ in the ear), which vibrates at different spots depending on the frequency of the incoming sound. The periodogram estimate likewise identifies which frequencies are present in the frame.

Step 4: Take the periodogram bins and compute the energy of each frequency band by applying a Mel filter bank (Davis and Mermelstein 1980) with 40 triangular filters. This is motivated by the fact that the cochlea cannot discern the difference between two closely spaced frequencies. The filters are placed non-linearly across the bandwidth according to the Mel scale \(\mathcal{M}\), given by:

\(\mathcal{M}(f)=2595\,\log_{10}\left(1+\frac{f}{700}\right)\)

where \(f\) is the frequency in Hz.

Step 5: Apply the cubic root for non-linear rectification. As shown in (Zhao et al. 2012; Zhao and Wang 2013), the Mel power spectrum rectified with the cubic root is more robust to noise than that rectified with the logarithm.

Step 6: Because the filters overlap, the filter bank energies are highly correlated with each other, and the DCT decorrelates them. In the traditional MFCC, not all DCT coefficients are retained, because the higher DCT coefficients represent fast changes in the filter bank energies that degrade performance. In the proposed methodology, however, all the coefficients are retained so that no information is discarded.
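The six steps above can be sketched in plain Python as follows. This is a minimal, unoptimized illustration under stated assumptions, not the authors' implementation: the Mel mapping uses the common 2595·log10(1 + f/700) form, a simple energy threshold (a hypothetical `threshold_db` of 30 dB) stands in for the statistical model-based VAD of Sohn et al. (1999), no analysis window is applied because none is mentioned, and the DFT is computed naively for readability. All function names are hypothetical.

```python
import cmath
import math

SAMPLE_RATE = 8000  # Hz; all corpora are used at 8 kHz


def pre_emphasis(signal, alpha=0.97):
    """Step 1: first-order high-pass filter s_p(n) = s(n) - alpha * s(n - 1)."""
    return [signal[0]] + [signal[n] - alpha * signal[n - 1] for n in range(1, len(signal))]


def frame_signal(signal, frame_ms=32, shift_ms=16, sample_rate=SAMPLE_RATE):
    """Split into 32 ms frames with a 16 ms shift (256 and 128 samples at 8 kHz)."""
    size = sample_rate * frame_ms // 1000
    shift = sample_rate * shift_ms // 1000
    return [signal[s:s + size] for s in range(0, len(signal) - size + 1, shift)]


def energy_vad(frames, threshold_db=30.0):
    """Step 2 stand-in (not Sohn et al.'s VAD): keep frames whose energy is
    within threshold_db of the most energetic frame."""
    energies = [10 * math.log10(sum(x * x for x in f) / len(f) + 1e-12) for f in frames]
    peak = max(energies)
    return [f for f, e in zip(frames, energies) if e >= peak - threshold_db]


def periodogram(frame):
    """Step 3: |DFT|^2 / N for the non-negative frequency bins (naive O(N^2) DFT)."""
    n = len(frame)
    return [abs(sum(x * cmath.exp(-2j * math.pi * k * i / n) for i, x in enumerate(frame))) ** 2 / n
            for k in range(n // 2 + 1)]


def hz_to_mel(f):
    return 2595.0 * math.log10(1.0 + f / 700.0)


def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)


def mel_filterbank(n_filters=40, n_fft=256, sample_rate=SAMPLE_RATE):
    """Step 4: triangular filters whose edges are equally spaced on the Mel scale."""
    top = hz_to_mel(sample_rate / 2)
    edges_hz = [mel_to_hz(top * i / (n_filters + 1)) for i in range(n_filters + 2)]
    bins = [int((n_fft + 1) * f / sample_rate) for f in edges_hz]
    bank = []
    for j in range(1, n_filters + 1):
        left, centre, right = bins[j - 1], bins[j], bins[j + 1]
        weights = [0.0] * (n_fft // 2 + 1)
        for k in range(left, centre):  # rising edge
            weights[k] = (k - left) / (centre - left)
        for k in range(centre, right):  # falling edge
            weights[k] = (right - k) / (right - centre)
        bank.append(weights)
    return bank


def dct_ii(values):
    """Step 6: orthonormal DCT-II that keeps every coefficient (none are dropped)."""
    n = len(values)
    return [(math.sqrt(1 / n) if k == 0 else math.sqrt(2 / n))
            * sum(v * math.cos(math.pi * k * (2 * i + 1) / (2 * n)) for i, v in enumerate(values))
            for k in range(n)]


def cube_root_mfcc(signal):
    """Return one 40-dimensional coefficient vector per retained frame."""
    bank = mel_filterbank()
    features = []
    for frame in energy_vad(frame_signal(pre_emphasis(signal))):
        power = periodogram(frame)
        energies = [sum(w * p for w, p in zip(weights, power)) for weights in bank]
        # Step 5: cubic-root rectification in place of the logarithm.
        features.append(dct_ii([e ** (1 / 3) for e in energies]))
    return features
```

On 0.1 s of a 440 Hz tone sampled at 8 kHz, `cube_root_mfcc` returns five 40-dimensional vectors. A practical implementation would replace the naive DFT with an FFT.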

2.2 Information set theory

The information-theoretic entropy function called the Hanman-Anirban entropy function (Hanmandlu and Das 2011) represents possibilistic uncertainty by virtue of the parameters in its exponential gain function. This entropy function was generalized by Mamta and Hanmandlu (2014) and by Medikonda et al. (2016). Of these, the one in (Mamta and Hanmandlu 2014) is the most general, followed by the one in (Medikonda et al. 2016), which is pursued in the present work.

The concept of the information set was mooted in (Hanmandlu 2011) and used in (Mamta and Hanmandlu 2014) for infrared face recognition. By taking recourse to the Hanman-Anirban entropy function, information set theory eliminates the shortcomings of fuzzy set theory and allows the uncertainty in granularized information source values to be represented via their membership function values. The theory converts each pair of an information source value and its membership function value in a fuzzy set into a product, termed the information value; these values constitute the information set. The sum of the information values gives the overall uncertainty, which we call the information. A brief description of information set theory is given below.

To convert a fuzzy set into an information set, consider a set of values, termed the information source values, of an attribute \(\Phi =\{ {\varphi _1}, \ldots ,{\varphi _n}\}\). This set is denoted by \({{\text{X}}_\Phi }\):

\({\text{X}}_\Phi=\{{\text{x}}_\Phi(\varphi_1),{\text{x}}_\Phi(\varphi_2),\ldots,{\text{x}}_\Phi(\varphi_n)\}\)

Information set theory permits the use of agents by expanding the functionality of an MF, called the empowered MF, which plays only a limited role in a fuzzy set. Recall the role of an agent in artificial intelligence: it perceives its environment and performs the assigned task accordingly. For example, a robotic agent has sensors that perceive its surroundings and performs tasks ranging from simple pick-and-place operations to complex ones. Acting as an agent, the empowered MF can do much more than an ordinary MF.

Consider the generalized entropy of Medikonda et al. (2016), defined in Eq. (4) as follows:

The gain function in Eq. (4) is defined as follows:

\(G_\Phi(\varphi_i)=e^{-(a_\Phi\,{\text{x}}_\Phi(\varphi_i)+b_\Phi)^{\beta_\Phi}}\quad (5)\)

where \(\{ {a_\Phi },{b_\Phi },{\beta _\Phi }\}\) are real-valued parameters, treated as variables to make the entropy function adaptive.

We have employed Eq. (4) with \({G_\Phi }\) taken from Eq. (5) and \({\alpha _\Phi }=1\). By an appropriate choice of the parameters \(\{ {a_\Phi },{b_\Phi },{\beta _\Phi }\}\), this gain function can take the form of many commonly used membership functions.

2.2.1 Gain function as the membership function

Taking \({a_\Phi }={1}/{{\sqrt 2 \sigma }},\,{b_\Phi }={{ - \mu }}/{{\sqrt 2 \sigma }},\,{\beta _\Phi }=2\) in Eq. (5), the gain function becomes the Gaussian membership function, where \(\mu\) and \(\sigma\) are the mean and standard deviation of the information source values \({{\text{x}}_\Phi }\). This choice of parameters converts the gain function \({G_\Phi }\left( {{\varphi _i}} \right)\) into a Gaussian function representing the possibilistic distribution of the information source values. In the parlance of fuzzy sets, this function, called the Gaussian MF \({\mathcal{G}_\Phi }\left( {{\varphi _i}} \right)\), is given by:

\(\mathcal{G}_\Phi(\varphi_i)=e^{-\frac{({\text{x}}_\Phi(\varphi_i)-\mu)^2}{2\sigma^2}}\quad (6)\)

The versatility of the entropy function in Eq. (4) is that the information source values \(\left\{ {{{\text{x}}_\Phi }\left( {{\varphi _i}} \right)} \right\}\) can be taken from any domain, say, probabilistic, possibilistic or a combination of both. Thus we have eliminated two shortcomings of a fuzzy set firstly by connecting the information source value and its MF value that form a pair as the product called the information value and secondly by extending the entropy function to deal with both possibilistic and probabilistic uncertainties.
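The parameter substitution that turns the gain function into the Gaussian MF can be checked numerically with a short sketch (function names are hypothetical): with \(a_\Phi=1/(\sqrt2\sigma)\), \(b_\Phi=-\mu/(\sqrt2\sigma)\) and \(\beta_\Phi=2\), the exponent \((a_\Phi x+b_\Phi)^2\) equals \((x-\mu)^2/(2\sigma^2)\), so both functions agree for every input.

```python
import math


def gain(x, a, b, beta):
    """Gain function G(x) = exp(-(a*x + b)**beta).

    With a negative base, beta must be integer-valued to keep the result real.
    """
    return math.exp(-((a * x + b) ** beta))


def gaussian_mf(x, mu, sigma):
    """Gaussian membership function exp(-(x - mu)^2 / (2 sigma^2))."""
    return math.exp(-((x - mu) ** 2) / (2.0 * sigma ** 2))


def gain_as_gaussian(x, mu, sigma):
    """Gain function with a = 1/(sqrt(2)*sigma), b = -mu/(sqrt(2)*sigma), beta = 2."""
    a = 1.0 / (math.sqrt(2.0) * sigma)
    b = -mu / (math.sqrt(2.0) * sigma)
    return gain(x, a, b, beta=2)
```

Other choices of `a`, `b` and `beta` give other membership shapes, which is what makes the gain function a flexible stand-in for the MF.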

The information value \(I_\Phi(\varphi_i)\) corresponding to the information source value \({{\text{x}}_\Phi }\left( {{\varphi _i}} \right)\) is computed from the generalized entropy function of (Medikonda et al. 2016), with \({\alpha _\Phi }=1\), as the product

\(I_\Phi(\varphi_i)={\text{x}}_\Phi(\varphi_i)\,G_\Phi(\varphi_i)\)

The set of these information values constitutes the information set \({\mathcal{S}_\Phi }\), given by

\(\mathcal{S}_\Phi=\{I_\Phi(\varphi_i)\}_{i=1}^{n}\)

The sum of the information values in the information set \({\mathcal{S}_\Phi }\) is called the information, denoted by \({{\rm I}_\Phi }\). The normalized effective information \({H_\Phi }\) of the collected information source values is then given by
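The information set computations of this section can be sketched as follows, taking the gain function as the Gaussian MF with \(\alpha_\Phi=1\), so that each information value is the product of a source value and its membership grade. The normalization by the number of values in `normalized_information`, and the fallback \(\sigma=1\) for constant inputs, are assumptions made for illustration, since the exact normalization is not reproduced here; all names are hypothetical.

```python
import math
import statistics


def gaussian_memberships(values):
    """Gaussian MF grades using the mean and population std of the values."""
    mu = statistics.mean(values)
    sigma = statistics.pstdev(values) or 1.0  # assumption: avoid division by zero
    return [math.exp(-((x - mu) ** 2) / (2 * sigma ** 2)) for x in values]


def information_set(values):
    """Information values: each source value times its membership grade."""
    return [x * g for x, g in zip(values, gaussian_memberships(values))]


def information(values):
    """Information: the sum of the information values."""
    return sum(information_set(values))


def normalized_information(values):
    """Hypothetical normalization: divide the information by the number of values."""
    return information(values) / len(values)
```

For the source values 1 and 3, both membership grades are \(e^{-1/2}\), so the information is \(4e^{-1/2}\).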

3 Proposed higher order information set based features

We now present two approaches for representing higher order uncertainty in MFCCs. In the first approach the basic information values are modified by replacing type-1 MF with type-2 MF. The features obtained from this approach are called type-2 Information set features. In the second approach we compute the features by applying the Hanman transform directly on the information source values. These approaches are now discussed in detail.

3.1 Type-2 information set features

In this approach, we adapt the Mamdani-type fuzzy rule to define the corresponding information rule, in which the input is a set of fuzzy sets but the output is an information set. Depending on whether type-1 or type-2 membership functions are used in the input fuzzy sets of the antecedent, we define the consequent as a type-1 or type-2 information set, unlike the output fuzzy set of the Mamdani rule. We accordingly name the rule a type-1 Information rule or a type-2 Information rule. Type-2 Information rules, which help represent higher order possibilistic uncertainty, are generalizations of type-1 Information rules.

A pair of type-1 Information rules (T1IRs) is formed from the MFCCs, corresponding to the spatial (cepstral) and temporal components. T1IRa for the temporal component and T1IRb for the cepstral component take the following form:

T1IRa:

T1IRb:

where \({{\varvec{x}}_1},{{\varvec{x}}_2},\ldots,{{\varvec{x}}_\tau }\) and \({{\varvec{y}}_1},{{\varvec{y}}_2},\ldots,{{\varvec{y}}_d}\) are the input vectors (information source values). We denote the information source values by \({{\varvec{x}}_\tau }=\{ {x_{\tau 1}},{x_{\tau 2}}, \ldots ,{x_{\tau d}}\}\) and \({{\varvec{y}}_d}=\{ {x_{1d}},{x_{2d}}, \ldots ,{x_{\tau d}}\}\).

The corresponding fuzzy sets are denoted by:

\({\mathcal{H}_T}~{\text{and}}~{\mathcal{H}_D}\) are the output Information Sets. As these are derived from the proposed entropy function they are defined as follows:

where \({\mathcal{G}_{{\mathcal{A}_i}}}\) and \({\mathcal{G}_{{\mathcal{B}_j}}}\) are the type-1 membership functions for rules T1IRa and T1IRb, respectively.

The combined output information set is taken as,

The combined effective information of the system is computed from:
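A minimal sketch of how the two type-1 information rules might be applied to an MFCC matrix, with rows as cepstral dimensions and columns as frames as described in the Conclusions. Temporal information values use a Gaussian MF fitted to each row, cepstral information values use one fitted to each column, and the two are added at every position, as the Conclusions state. Averaging over frames to obtain one feature per dimension is a hypothetical choice, as are the helper names and the \(\sigma=1\) fallback.

```python
import math
import statistics


def info_values(values):
    """Type-1 information values x * G(x) with a Gaussian MF fitted to `values`."""
    mu = statistics.mean(values)
    sigma = statistics.pstdev(values) or 1.0  # assumption: guard constant inputs
    return [x * math.exp(-((x - mu) ** 2) / (2 * sigma ** 2)) for x in values]


def t1is_features(mfcc):
    """mfcc: d rows (cepstral dimensions) x tau columns (frames); returns d features."""
    d, tau = len(mfcc), len(mfcc[0])
    # Temporal component: each dimension's trajectory across frames (one row).
    temporal = [info_values(row) for row in mfcc]
    # Cepstral component: each frame's coefficients across dimensions (one column).
    columns = [info_values([mfcc[i][t] for i in range(d)]) for t in range(tau)]
    cepstral = [[columns[t][i] for t in range(tau)] for i in range(d)]
    # Add the two components at every position, then average over frames
    # (the averaging step is a hypothetical choice for illustration).
    return [statistics.mean([temporal[i][t] + cepstral[i][t] for t in range(tau)])
            for i in range(d)]
```

With 40 MFCCs per frame this yields 40 values per speech sample, matching the feature count reported in the experiments, although the paper's own combination rule may differ.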

We now describe the type-2 Information rule (T2IR) in which the antecedent part contains type-2 input fuzzy sets but the consequent part is similar to that of T1IR. The type-2 information rule consists of two parts: one corresponding to the upper MF and another corresponding to the lower MF. Thus corresponding to each T1IRa we will have two type-2 information rules, T2IRUa and T2IRLa and similarly we will have two rules, T2IRUb and T2IRLb corresponding to T1IRb, defined as:

T2IRUa & T2IRLa:

T2IRUb & T2IRLb:

The output of T1IRa is obtained by

The output of T1IRb is obtained by

where \({{\varvec{x}}_1},{{\varvec{x}}_2},\ldots,{{\varvec{x}}_\tau }\) and \({{\varvec{y}}_1},{{\varvec{y}}_2},\ldots,{{\varvec{y}}_d}\) are the input vectors (information source values), with \({{\varvec{x}}_\tau }=\{ {x_{\tau 1}},{x_{\tau 2}}, \ldots ,{x_{\tau d}}\}\) and \({{\varvec{y}}_d}=\{ {x_{1d}},{x_{2d}}, \ldots ,{x_{\tau d}}\}\). \({\overline {\mathbb{A}}_1},{\overline {\mathbb{A}}_2}, \ldots ,{\overline {\mathbb{A}}_\tau }\) and \({\underline {\mathbb{A}} _1},{\underline {\mathbb{A}} _2}, \ldots ,{\underline {\mathbb{A}} _\tau }\) are the upper and lower fuzzy sets of T2IRUa and T2IRLa, represented as

\({\overline {{\varvec{\mathcal{H}}}}_T}\,{\text{and}}\,{\underline {{\varvec{\mathcal{H}}}} _T}\) are the upper and lower Information Sets of T2IRUa and T2IRLa and \({\overline {{\varvec{\mathcal{H}}}}_D}\,{\text{and}}\,{\underline {{\varvec{\mathcal{H}}}} _D}\) are the corresponding upper and lower Information Sets of T2IRUb and T2IRLb respectively. The combined output of a system is calculated using Eqs. (12, 13).

Many type-1 fuzzy membership functions exist in the literature, e.g. triangular, Gaussian, trapezoidal, sigmoidal and pi-shaped. They can easily be converted into type-2 membership functions by making their parameters uncertain. A type-1 Gaussian membership function, for example, becomes type-2 when either its mean or its standard deviation is varied.

The mathematical expressions for the type-1 Gaussian membership functions in Cepstro-temporal cases are:

For T1IRa:

For T1IRb:

The type-1 antecedent parameters are modified by changing the mean and the standard deviation. The upper mean and standard deviation of the type-2 Gaussian membership functions are defined as follows:

For T2IRUa:

For T2IRUb:

The type-2 upper Gaussian membership functions for the Cepstro-temporal cases are defined as follows:

For T2IRUa:

For T2IRUb:

where \({\overline {\mu } _i}\) and \({\overline {\sigma } _i}\) are the mean and width of the upper membership grade for T2IRUa, and \({\overline {\mu } _j}\) and \({\overline {\sigma } _j}\) are the mean and width of the upper membership grade for T2IRUb.

We now consider interval type-2 sets, in which the lower mean and standard deviation of the type-2 lower Gaussian membership function are obtained by scaling the upper mean and standard deviation of the type-2 upper Gaussian membership function:

\(\underline{\mu}_i=\gamma_1\,\overline{\mu}_i,\qquad \underline{\sigma}_i=\gamma_2\,\overline{\sigma}_i\)

and likewise for the index \(j\), where \({\gamma _1}~{\text{and}}~{\gamma _2}\) are the scaling factors. We apply distinct upper and lower values only to the standard deviation, i.e. \({\gamma _1}=1\). In view of this, the two lower membership functions are defined as follows:

For T2IRLa:

For T2IRLb:

where \({\underline {\mu } _i}\) and \({\underline {\sigma } _i}\) are the mean and width of the lower membership grade for T2IRLa, and \({\underline {\mu } _j}\) and \({\underline {\sigma } _j}\) are the mean and width of the lower membership grade for T2IRLb.
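The interval type-2 Gaussian MF with a shared mean (\(\gamma_1=1\)) and a scaled lower width can be sketched as below, using \(\gamma=0.2\), the value reported in the experiments. Taking the type-1 mean and standard deviation of the values as the upper MF parameters, and averaging the lower and upper information values into a single value, are assumptions made for illustration; the function names are hypothetical.

```python
import math
import statistics


def interval_type2_gaussian(x, mu, sigma_upper, gamma=0.2):
    """Return (lower, upper) membership grades of an interval type-2 Gaussian MF.

    The mean is shared (gamma_1 = 1) and the lower width is gamma * sigma_upper,
    so for gamma < 1 the lower MF is narrower and never exceeds the upper MF.
    """
    d2 = (x - mu) ** 2
    upper = math.exp(-d2 / (2 * sigma_upper ** 2))
    lower = math.exp(-d2 / (2 * (gamma * sigma_upper) ** 2))
    return lower, upper


def type2_information_values(values, gamma=0.2):
    """(lower, upper, averaged) information values x * G for each source value."""
    mu = statistics.mean(values)
    sigma = statistics.pstdev(values) or 1.0  # assumption: guard constant inputs
    result = []
    for x in values:
        lower, upper = interval_type2_gaussian(x, mu, sigma, gamma)
        # Hypothetical type reduction: average of lower and upper information values.
        result.append((x * lower, x * upper, x * (lower + upper) / 2))
    return result
```

The gap between the lower and upper grades (the footprint of uncertainty) widens as `gamma` shrinks, which is the extra degree of freedom the type-2 features exploit.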

3.2 Hanman transform based features

Information sets can also be used to assess higher order uncertainty in the information source values, starting from the initial uncertainty representation. This is the concept behind the Hanman transform, which follows from the possibilistic version of the adaptive Hanman-Anirban entropy function (Hanmandlu and Das 2011) with variable parameters. Recall Eq. (9)

where \({{\varvec{G}}_\Phi }\left( {{\varphi _i}} \right)={e^{ - {{({a_\Phi }{{\text{x}}_\Phi }\left( {{\varphi _i}} \right)+{b_\Phi })}^{{\beta _\Phi }}}}}\). Assuming its parameters to be variables and substituting \({a_\Phi }={\varvec{\mathcal{G}}_\Phi }\left( {{\varphi _i}} \right)\) from Eq. (6); \({b_\Phi }=0;\,{\beta _\Phi }=1\) we obtain Hanman Transform value set, \(\mathfrak{H}\) as,

This transform has an intuitive real-world interpretation. When we gather information about an unknown person of interest, we obtain a first level of information (set); evaluating the person again in light of it yields a second level of information coupled with the first.
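The substitution that defines the Hanman transform can be checked numerically: setting \(a_\Phi\) to the Gaussian membership grade, \(b_\Phi=0\) and \(\beta_\Phi=1\) turns the gain into \(e^{-\mathcal{G}_\Phi(\varphi_i)\,{\text{x}}_\Phi(\varphi_i)}\), the exponential of the negative information value. Weighting it by the source value, as in the information value, gives the transform value \({\text{x}}\,e^{-{\text{x}}\,\mathcal{G}}\). This sketch assumes that weighting and fits the Gaussian MF with the mean and population standard deviation of the values; names are hypothetical.

```python
import math
import statistics


def gain(x, a, b, beta):
    """Gain function G(x) = exp(-(a*x + b)**beta)."""
    return math.exp(-((a * x + b) ** beta))


def hanman_transform(values):
    """Hanman transform values x * exp(-G(x) * x) with a Gaussian MF G."""
    mu = statistics.mean(values)
    sigma = statistics.pstdev(values) or 1.0  # assumption: guard constant inputs
    transformed = []
    for x in values:
        g = math.exp(-((x - mu) ** 2) / (2 * sigma ** 2))  # Gaussian membership grade
        # a = g, b = 0, beta = 1 turns the gain into exp(-g * x), i.e. the exponential
        # of the negative information value; weighting by x gives the transform value.
        transformed.append(x * gain(x, a=g, b=0.0, beta=1))
    return transformed
```

Because the membership grade now sits inside the exponent, each value is re-evaluated in light of its first-level information value, mirroring the two-level interpretation above.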

To derive the Hanman transform features, recall the two information rules, T1IRa and T1IRb, defined as

T1IRa:

T1IRb:

where \({{\varvec{x}}_1},~{{\varvec{x}}_2} \ldots ~{{\varvec{x}}_\tau }\) and \({{\varvec{y}}_1},~{{\varvec{y}}_2} \ldots ~{{\varvec{y}}_d}\) are the input vectors. \({\mathfrak{H}_T}\;{\text{and}}\;{\mathfrak{H}_D}\) are the higher order Information Sets using Hanman transform. \({\mathfrak{A}_i}\;{\text{and}}\;{\mathfrak{B}_{j~}}\) are the gain functions derived from the Information sets of T1IRa and T1IRb for the ith and jth input vectors respectively. These gain functions are functions of the Information values, defined as

where \({{\varvec{x}}_i}{\mathcal{G}_{{\mathcal{A}_i}}}\) and \({{\varvec{y}}_j}{\mathcal{G}_{{\mathcal{B}_j}}}\) are the type-1 output information values of T1IRa and T1IRb defined in Eqs. (18, 19), respectively. The combined output of the system is calculated using Eq. (13).

4 Experiments and results

4.1 Database description

The proposed approach is tested on standard databases: NIST-2003, VoxForge 2015 and the VCTK speech corpus.

The Switchboard NIST (2003) evaluation database consists of telephone recordings of 356 speakers, 2 min per speaker, sampled at 8 kHz with 16-bit resolution. We have divided each speaker's single recording into 5 samples of 50 s duration for training and testing.

VoxForge (2015) is a collection of transcribed speech for use with open-source speech recognition engines (SREs). It contains a large number of speakers from different regions of the world, from which we randomly chose 100 speakers. Each speaker reads out 10 sentences in English, recorded at a sampling rate of 8 kHz over different channels, such as microphones, mobile phones and laptops.

The CSTR VCTK Corpus (VCTK 2009) includes speech data collected from 109 native speakers of English with various accents. Each speaker reads out about 400 sentences, from which we randomly selected 5 samples. All speech data were recorded with an identical setup: an omni-directional head-mounted microphone (DPA 4035) at a 48 kHz sampling frequency and 16 bits. For our experiments we downsampled the data to 8 kHz at 16 bits.

4.2 Results and discussions

The other classifiers used in this study are the Gaussian Mixture Model (GMM), the Support Vector Machine (SVM) and k-Nearest Neighbours (kNN). They are described briefly below.

4.2.1 Gaussian mixture model (GMM)

A GMM is a probability density function represented as a weighted sum of Gaussian component densities. It is commonly used as a parametric model of the probability distribution of continuous measurements or features in a biometric system, such as vocal-tract-related spectral features in a speaker recognition system. The GMM parameters, namely the mean, covariance and weight of each Gaussian component, are estimated from the training data using the iterative Expectation–Maximization (EM) algorithm or by Maximum A Posteriori (MAP) estimation from a well-trained prior model. In this work we have used 16 Gaussian mixtures to model the MFCC feature vectors in the training set.

4.2.2 Support vector machine (SVM)

This is a discriminative classifier in which a decision hyperplane is constructed from the training feature vectors that lie closest to it, called the support vectors; a test feature vector is classified according to its position relative to this hyperplane. In this work we have used the radial basis function (RBF) kernel, with the degree parameter set to 3 (in LIBSVM this parameter affects only the polynomial kernel).

4.2.3 k-nearest neighbours (kNN)

kNN computes the Euclidean distance between the test feature vector and each training feature vector and identifies the unknown user by a majority vote among the k nearest training vectors. In this work we have considered k = 1, 3 and 5.
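The kNN rule described above can be sketched in a few lines of standard-library Python. Here `train` is a hypothetical list of (feature vector, speaker label) pairs, and ties in the vote go to the label whose first member was nearest, a tie-breaking choice the text does not specify.

```python
import math
from collections import Counter


def knn_predict(train, test_vector, k=3):
    """Return the majority speaker label among the k nearest training vectors."""
    # Sort training vectors by Euclidean distance to the test vector.
    neighbours = sorted(train, key=lambda item: math.dist(item[0], test_vector))[:k]
    # Counter keeps first-seen order, so equal vote counts favour the nearer label.
    votes = Counter(label for _, label in neighbours)
    return votes.most_common(1)[0][0]
```

For example, with two training vectors of speaker `'A'` near the origin and three of speaker `'B'` near (5, 5), a test vector at (0.2, 0.3) is assigned to `'A'` for k = 1 and k = 3.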

For the extraction of the standard MFCC, we have used frames of 20 ms with a frame shift of 10 ms and 26 Mel filters, which provide 13 MFCC features per frame. Appending the delta coefficients gives 26 features per frame (∆MFCC), and appending the delta–delta coefficients gives 39 features per frame (∆∆MFCC). A GMM is used to model the training data of each speaker, and the log-likelihood is used for classification.
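Classification with speaker GMMs, as used for the baseline features, can be sketched as the average per-frame log-likelihood under a diagonal-covariance mixture, computed with the log-sum-exp trick for numerical stability; the speaker with the highest score is selected. Training by EM or MAP is not shown: the weights, means and variances are assumed to be given, and all names are hypothetical.

```python
import math


def log_gaussian_diag(x, mean, var):
    """Log density of a diagonal-covariance Gaussian at vector x."""
    return -0.5 * sum(math.log(2 * math.pi * v) + (xi - m) ** 2 / v
                      for xi, m, v in zip(x, mean, var))


def gmm_avg_log_likelihood(frames, weights, means, variances):
    """Average per-frame log-likelihood under a GMM, via log-sum-exp."""
    total = 0.0
    for x in frames:
        comps = [math.log(w) + log_gaussian_diag(x, m, v)
                 for w, m, v in zip(weights, means, variances)]
        peak = max(comps)
        total += peak + math.log(sum(math.exp(c - peak) for c in comps))
    return total / len(frames)


def identify(frames, speaker_models):
    """speaker_models: {speaker: (weights, means, variances)}; returns best speaker."""
    return max(speaker_models,
               key=lambda s: gmm_avg_log_likelihood(frames, *speaker_models[s]))
```

With 16 mixtures per speaker, as in this work, each model would hold 16 weights, 16 mean vectors and 16 variance vectors.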

A frame length of 32 ms with a frame shift of 16 ms and 40 Mel filters lead to 40 MFCCs per frame. From each speech sample we compute 40 T2IS features and 40 HT features. For the T2IS features, we have used the following classifiers: IHC (Medikonda et al. 2016), SVM (Chang and Lin 2011) and k-Nearest Neighbours (k = 1, 3, 5).

Fig. 2 plots the average recognition rate (%) against the scaling factor \(\gamma\). To generate the type-2 Gaussian membership function, we generate upper and lower standard deviations (\(\sigma\)). The test samples are corrupted with additive white Gaussian noise at Signal-to-Noise Ratios (SNRs) from 0 dB to 30 dB in steps of 5 dB. Experimentally, \(\gamma =0.2\) was found to yield the best results on all three databases. The scaling factor \(\gamma\) could also be learned with a suitable learning technique, but here it was chosen experimentally.
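The noisy test conditions can be sketched by scaling white Gaussian noise so that the signal-to-noise power ratio matches the target exactly, \(\mathrm{SNR}=10\log_{10}(P_s/P_n)\). This is one common way to do it, not necessarily the authors' procedure; the function name and the `seed` argument are hypothetical.

```python
import math
import random


def add_white_noise(signal, snr_db, seed=None):
    """Return signal + white Gaussian noise scaled to the requested SNR in dB."""
    rng = random.Random(seed)
    noise = [rng.gauss(0.0, 1.0) for _ in signal]
    p_signal = sum(s * s for s in signal) / len(signal)
    p_noise = sum(n * n for n in noise) / len(noise)
    # Choose the scale so that 10*log10(p_signal / (scale**2 * p_noise)) == snr_db.
    scale = math.sqrt(p_signal / (p_noise * 10 ** (snr_db / 10)))
    return [s + scale * n for s, n in zip(signal, noise)]


# SNR levels from 0 dB to 30 dB in steps of 5 dB, as in the experiments.
SNR_LEVELS_DB = list(range(0, 31, 5))
```

Scaling by the measured noise power, rather than by the nominal unit variance, makes the achieved SNR exact for every test sample instead of only on average.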

Table 1 presents the average k-fold identification accuracy (%) using T1IS features on the NIST, VoxForge and VCTK databases. SVM and IHC give comparable results, whereas kNN with k = 3 and 5 yields better results, with average improvements of 10, 8 and 13% on NIST, VoxForge and VCTK, respectively.

Tables 2, 3 and 4 compare the average k-fold accuracy (%) of the MFCC, ΔMFCC, ΔΔMFCC and GFCC features using GMM with that of the T2IS features using IHC, SVM and kNN (k = 1, 3, 5) on the NIST, VoxForge and VCTK databases, respectively.

On the NIST-2003 database, the proposed methods outperform the other features, with an average improvement of about 26% over MFCC, as can be seen from Tables 2 and 5. Compared with GFCC, the average improvement is 10% when kNN (k = 3, 5) is used and 5% at 0–5 dB SNR when the IHC and SVM classifiers are used. The extent of the improvement varies with the SNR. Similar trends hold for the VoxForge 2014 and VCTK databases, as shown in Tables 3, 4 and 5.

The proposed method also reduces the size of the feature vector. As Table 6 shows, the multi-dimensional feature vector of about 18,000 values (\(\sim 13\, \times \,1385\)) for MFCC and about 31,000 values (\(\sim 22\, \times \,1385\)) for GFCC is drastically reduced to an information set based feature vector (T2IS, HT) of about 30 values after feature selection using MDA.

Another significant benefit of the proposed method is reduced computation time: Table 7 shows that the proposed method requires less computation time than the standard state-of-the-art methods.

5 Conclusions

This paper formulates Type-2 Information Set (T2IS) features and Hanman Transform (HT) features based on information set theory for a robust text-independent speaker identification system in the presence of white Gaussian noise at six different SNRs. The aim was to extract speaker-specific information in noisy environments without any noise reduction.

In the first phase, the audio signal is partitioned into frames and Mel Frequency Cepstral Coefficients (MFCC) are extracted from each frame. The MFCCs are arranged in a matrix in which each row corresponds to a dimension and each column to a frame. This matrix representation facilitates the derivation of T2IS and HT features: processing the frames yields the type-2 and HT cepstral information, and processing the dimensions yields the type-2 and HT temporal information. Thus each position in the matrix carries two information components, which are added to obtain the T2IS features. After the feature vectors are extracted from all samples of a user, some are set aside as the training set and the rest are used as test feature vectors.

The proposed method is applied to three datasets (NIST 2003, VoxForge 2015, VCTK 2009) with four types of noise. For comparison, the three types of MFCC (MFCC, ΔMFCC, ΔΔMFCC) and GFCC with GMM are used as baseline methods. The proposed T2IS features are found to be robust and to outperform the baseline methods, which vindicates their effectiveness over the existing features. Moreover, the number of T2IS features is very small, which reduces the computational complexity.

References

Aggarwal, M., & Hanmandlu, M. (2015). Representing uncertainty with information sets. IEEE Transactions on Fuzzy Systems, 24, 1–15.

Chang, C.-C., & Lin, C.-J. (2011). LIBSVM: A library for support vector machines. ACM Transactions on Intelligent Systems and Technology, 2, 1–27.

Davis, S., & Mermelstein, P. (1980). Comparison of parametric representations for monosyllabic word recognition in continuously spoken sentences. IEEE Transactions on Acoustics, Speech and Signal Processing, 28, 357–366.

Ephraim, Y., & Malah, D. (1984). Speech enhancement using a minimum-mean square error short-time spectral amplitude estimator. IEEE Transactions on Acoustics, Speech and Signal Processing, 32, 1109–1121.

Hanmandlu, M. (2011). Information sets and information processing. Defence Science Journal, 61, 405–407.

Hanmandlu, M., & Das, A. (2011). Content-based image retrieval by information theoretic measure. Defence Science Journal, 61, 415–430.

Hermansky, H., & Morgan, N. (1994). RASTA processing of speech. IEEE Transactions on Speech and Audio Processing, 2, 578–589.

Jawarkar, N. P., Holambe, R. S., & Basu, T. K. (2011). Use of fuzzy min-max neural network for speaker identification. In 2011 International Conference on Recent Trends in Information Technology (ICRTIT) (pp. 178–182).

Jayanna, H. S., & Mahadeva Prasanna, S. R. (2009). Multiple frame size and rate analysis for speaker recognition under limited data condition. IET Signal Processing, 3(3), 189–204.

Kumar, K., Kim, C., & Stern, R. M. (2011). Delta-spectral cepstral coefficients for robust speech recognition. In 2011 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) (pp. 4784–4787).

Lee, K. Y. (2004). Local fuzzy PCA based GMM with dimension reduction on speaker identification. Pattern Recognition Letters, 25, 1811–1817.

Lung, S.-Y. (2004). Further reduced form of wavelet feature for text independent speaker recognition. Pattern Recognition, 37, 1565–1566.

Lung, S.-Y. (2004). Adaptive fuzzy wavelet algorithm for text-independent speaker recognition. Pattern Recognition, 37, 2095–2096.

Mamta, & Hanmandlu, M. (2014). Robust authentication using the unconstrained infrared face images. Expert Systems with Applications, 41, 6494–6511.

Mamta, & Hanmandlu, M. (2014). A new entropy function and a classifier for thermal face recognition. Engineering Applications of Artificial Intelligence, 36, 269–286.

Medikonda, J., Madasu, H., & Panigrahi, B. K. (2016). Information set based gait authentication system. Neurocomputing, 207, 1–14.

Mirhassani, S. M., & Ting, H.-N. (2014). Fuzzy-based discriminative feature representation for children’s speech recognition. Digital Signal Processing, 31, 102–114.

NIST (2003). The NIST year 2003 speaker recognition evaluation plan. Available: http://www.itl.nist.gov/iad/mig/tests/sre/2003/2003-spkrec-evalplan-v2.2.pdf.

Pelecanos, J., & Sridharan, S. (2001). Feature warping for robust speaker verification. Presented at A Speaker Odyssey: The Speaker Recognition Workshop, Crete.

Pinheiro, H. N. B., Vieira, S. R. F., Ren, T. I., Cavalcanti, G. D. C., & de Mattos Neto, P. S. G. (2016). Type-2 fuzzy GMM for text-independent speaker verification under unseen noise conditions. In 2016 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) (pp. 5490–5494).

Reynolds, D. A., & Rose, R. C. (1995). Robust text-independent speaker identification using Gaussian mixture speaker models. IEEE Transactions on Speech and Audio Processing, 3, 72–83.

Reynolds, D. A. (1995). Speaker identification and verification using Gaussian mixture speaker models. Speech Communication, 17, 91–108.

Reynolds, D. A., Quatieri, T. F., & Dunn, R. B. (2000). Speaker verification using adapted gaussian mixture models. Digital Signal Processing, 10, 19–41.

Sohn, J., Kim, N. S., & Sung, W. (1999). A statistical model-based voice activity detection. IEEE Signal Processing Letters, 6, 1–3.

Togneri, R., & Pullella, D. (2011). An overview of speaker identification: Accuracy and robustness issues. IEEE Circuits and Systems Magazine, 11, 23–61.

VCTK (2009). The Centre for Speech Technology Research VCTK Corpus.

VoxForge (2015). VoxForge speech corpus. Available: http://www.repository.voxforge1.org/downloads/SpeechCorpus/Trunk/Audio/Main/.

Wang, Y., Liu, X., Xing, Y., & Li, M. (2008). A novel reduction method for text-independent speaker identification. In 2008 Fourth International Conference on Natural Computation (pp. 66–70).

Yuan, Z. X., Yu, C. Z., & Fang, Y. (1993). Text independent speaker identification using fuzzy mathematical algorithm. In 1993 IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP-93) (Vol. 2, pp. 403–406).

Zhao, X., & Wang, D. L. (2013). Analyzing noise robustness of MFCC and GFCC features in speaker identification. In IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) (pp. 7204–7208).

Zhao, X., Shao, Y., & Wang, D. L. (2012). CASA-based robust speaker identification. IEEE Transactions on Audio, Speech, and Language Processing, 20, 1608–1616.


Cite this article

Medikonda, J., Madasu, H. Higher order information set based features for text-independent speaker identification. Int J Speech Technol 21, 451–461 (2018). https://doi.org/10.1007/s10772-017-9472-7


DOI: https://doi.org/10.1007/s10772-017-9472-7