Abstract

Digital watermarking technology is concerned with solving the problem of copyright protection, data authentication, content identification, distribution, and duplication of the digital media due to the great developments in computers and Internet technology. Recently, protection of digital audio signals has attracted the attention of researchers. This paper proposes a new audio watermarking scheme based on discrete wavelet transform (DWT), singular value decomposition (SVD), and quantization index modulation (QIM) with a synchronization code embedded with two encrypted watermark images or logos inserted into a stereo audio signal. In this algorithm, the original audio signal is split into blocks, and each block is decomposed with a two-level DWT, and then the approximate low-frequency sub-band coefficients are decomposed by SVD transform to obtain a diagonal matrix. The prepared watermarking and synchronization code bit stream is embedded into the diagonal matrix using QIM. After that, we perform inverse singular value decomposition (ISVD) and inverse discrete wavelet transform (IDWT) to obtain the watermarked audio signal. The watermark can be blindly extracted without knowledge of the original audio signal. Experimental results show that the transparency and imperceptibility of the proposed algorithm is satisfied, and that robustness is strong against popular audio signal processing attacks. High watermarking payload is achieved through the proposed scheme.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Nowadays, with the large utilization of the Internet and digital multimedia technology, digital watermarking (Cox et al. 2000; Gonzalez and Hernandez 1999; Singh 2011; Megha et al. 2013; Saini and Shrivastava 2014; Singh and Chadha 2013) is considered as a sequel to cryptographic procedures (Bao et al. 2012; Kaushik 2012; Mitali et al. 2014) that can preserve digital media like text, audio, image, or video from tampering. Digital watermarking schemes are capable of embedding important information inside the media contents of any type. Several algorithms have been proposed for digital image, audio, and video watermarking. Watermarking schemes do not actually restrict access to the data, but actually provide full access.

Watermarking is strongly required to achieve robustness so that the hidden watermark data becomes a part of the final produced data. The watermark must not to be recognized, and it must be difficult to remove. Watermarking schemes must be resistant to different attacks or signal processing modifications. Attacks on the watermarked audio signals usually affect the security and robustness of the watermarks. These attacks depend on certain manipulations of the data as a whole.

The rest of this paper is organized as follows. Section 2 shows the related works to the proposed algorithm. Theoretical background is introduced in Sect. 3. The proposed embedding procedure is demonstrated in Sect. 4. The proposed extraction procedure is discussed in Sect. 5. Our simulation results and performance evaluation along with necessary discussions are presented in Sect. 6. Finally, the conclusion of this work is discussed in Sect. 7.

2 Related works

Several audio watermarking techniques have been proposed to embed robust watermarks, while maintaining the original audio signals fidelity. Based on the domain in which the watermark is embedded, these techniques are classified into time-domain or transform-domain techniques. There are several related works to the proposed algorithm.

In Elshazly et al. (2012) proposed an algorithm, which uses DWT with QIM where an encrypted image is embedded into the low frequency component of the transformed audio signal. The watermark is blindly extracted. The performance of this algorithm is highly robust against common signal processing attacks. In Abd El-Samie (2009) proposed an SVD algorithm, where the host audio signal is converted into a 2-dimensional matrix, and then decomposed using SVD into three matrices; U, S, and V. The contents of the watermark are inserted to matrix S producing a new matrix Sw. The watermarked audio signal is obtained by applying inverse SVD to U, Sw, and V. The extraction process of the watermark is implemented by inverting the embedding procedure.

In Lalitha et al. (2011) proposed an algorithm, which uses a DWT-SVD technique for audio watermarking. The DCT-SVD algorithm has been compared with the DWT-SVD algorithm. The DWT-SVD algorithm is more robust than DCT-SVD algorithm. In Al-Haj and Mohammad (2010), the authors proposed an algorithm of audio watermarking, which is considered robust and imperceptible through using two important transforms DWT and SVD. They started with DWT transform and then applied the SVD for obtaining the S matrix, which is used to embed the bits of a binary watermark image. They suggest a duplication for the details D sub-bands in the formation of the DC matrix produced. Also, they suggested a value of 0.2 for the intensity parameter of the watermark to satisfy the required SNR, which is compatible with the IFPI standards. An evaluation of their algorithm on pop music and speech audio signals has been presented.

An improved audio watermarking algorithm has been proposed in Al-Haj and Mohammad (2010) and improved in Al-Haj et al. (2011) with two levels of DWT for watermark embedding. The authors in Al-Yaman et al. (2011) modified the proposed audio watermarking scheme in Al-Haj and Mohammad (2010), where they suggested embedding the thumbprint of the owner as a watermark for verification of the ownership. Also, they decreased the interval of the host audio by lessening the total number of watermark bits using a cryptographic hash function producing and embedding the assimilation of the thumbprint watermark instead of the image itself. In Al-Yaman et al. (2012), the authors improved the algorithms proposed by Al-Haj and Mohammad (2010), Al-Haj et al. (2011), Al-Yaman et al. (2011) through amalgamating a new framing of audio signals, where they take one frame and skip the next 29 frames. They introduced a new DWT matrix format for reducing the elements of the details D sub-bands in the formation of the DC matrix produced.

3 Theoretical background

3.1 Singular value decomposition

Singular value decomposition (SVD) is a mathematical tool which is mainly used to analyze matrices. A matrix A, can be decomposed into three matrices U, S, V. Let A(i,j) of dimension m × m be an arbitrary matrix with SVD of the form

where U and V are orthogonal m × m matrices such that \({U^T}U=I,\,{V^T}V=I,\) and S is an m × m diagonal matrix with nonnegative elements. The diagonal entries of S are called the singular values (SVs) of A, where S = diag\(({\lambda _1},{\lambda _2}, \ldots {\lambda _m}),{\text{ }}{\lambda _1} \ge {\lambda _2} \ge \ldots \ge {\lambda _m} \ge 0\) are the SVs of A, the columns of U are called the left singular vectors of A, and the columns of V are called the right singular vectors of A (Abd El-Samie 2009). The SVD has some interesting properties: (1) the sizes of the matrices from SVD transformation are not fixed, and the matrices need not to be square, (2) changing the SVs slightly does not affect the quality of the signal, (3) the SVs are invariant under common signal processing operations, and (4) the SVs satisfy intrinsic algebraic properties.

3.2 Quantization index modulation

The simplest quantization method used with the majority of audio watermarking schemes to embed or extract the watermark bit stream is the quantization index modulation (QIM) (Chen and Wornell 2001). The authors Chen and Wornell (2001) proposed a QIM watermarking algorithm in which the values of the samples are rounded to even or odd quantization steps, where 0 or 1 of the watermark bit stream was embedded. They found that a single sample quantization technique is more susceptible than the mean quantization method. Using the mean value instead of the sample values achieves minimization of the bit error rate (BER). The tradeoff between signal-to-noise-ratio (SNR) and both the normalized cross correlation (NCC), and the BER is affected by the choice of the value of the embedding factor, q.

3.3 Synchronization code

Synchronization code (SC) in digital systems is a sequence of bits introduced into a transmitted signal to achieve or maintain synchronism. It is an important portion of any data communications system. As any audio watermarking scheme is considered as a communication system, we can apply synchronization. The codes are inserted through the hidden data so that self-synchronization is achieved. To increase the robustness of the proposed scheme, a synchronization code not only contains the information about the position of the 1st bit of the watermark stream, but also is inserted with the binary watermark images. The robustness to any cropping, cutting, or shifting attacks is increased. The length of the SC is very important as the longer it is, the more robust it becomes. Figure 1 shows the watermark data structure that will be embedded in the host audio. Barker code (Hossain et al. 2012) is one of the main binary phase codes that produce compressed waveforms with constant side lobe levels equal to unity. A Barker code of length n is denoted as Bn. There are only seven known Barker codes that share this unique property, and they can have complementary forms that have the same characteristics. We have used 13-bit Barker code where the original bit sequence is given by:

The modified Barker code is

where we replace − 1 symbol by 0 symbol.

4 Embedding procedure

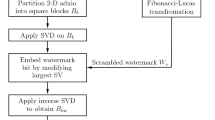

The watermark bit stream that will be embedded into the host audio signal with the watermarking images shown in Fig. 2 is prepared using the procedure shown in Fig. 3 as follows:

-

1.

Load the watermark images \({w_1}\left( {i,j} \right){\text{ and }}{w_2}\left( {i,j} \right){\text{.}}\)

-

2.

Encrypt both watermark images, \({w_1}\left( {i,j} \right){\text{ and }}{w_2}\left( {i,j} \right)\) using an Arnold chaotic map with a secret key to produce encrypted versions \({w_{1e}}\left( {i,j} \right){\text{ and }}{w_{2e}}\left( {i,j} \right).\)

-

3.

Lower the dimensions of both encrypted versions \({w_{1e}}\left( {i,j} \right){\text{ and }}{w_{2e}}\left( {i,j} \right)\) to produce one dimension vectors \({w_{1e}}\left( k \right),{w_{2e}}\left( k \right),\) where k = m × n, m is the number of rows, and n is the number of columns of the original watermark image.

-

4.

Insert the synchronization code within the lowered-dimension vector with the structure shown in Fig. 1 to form the watermark bit streams.

The complete embedding procedure is shown in Fig. 4, and the embedding steps are as follows

-

1.

Start with loading the 2-D binary watermark images, \({w_1}(i,j)\) and \({w_2}(i,j),\) and encrypt both images with chaos sequence of a secrete key, k, producing \({w_{1e}}(i,j)\) and \({w_{2e}}(i,j)\)

-

2.

Lower the dimensions of the watermark images to be 1-D arrays for embedding the watermarks in 1-D audio signals. \({w_{1e}}\) and \({w_{2e}}\) are the bit streams after this process,

-

3.

Perform coding of both sequences \({w_{1e}}\) and \({w_{2e}}\) using modified Barker code (using symbols 1 and 0 instead of 1 and − 1) with length 13 bits (B13), and the sequences become \({w_{1c}}\left( k \right)\) and \({w_{2c}}\left( k \right).\)

-

4.

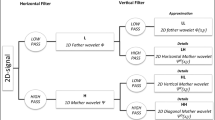

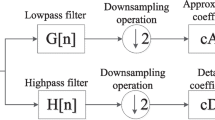

Decompose the host audio signal, A with the two-level wavelet transform to get the decomposed vectors \({V_1}\), \({V_2}\) in the form:

-

5.

Calculate the SVD of each block of CA 2 for the two channels to get SVD1 and SVD2 and select S1(1,1) for the 1st channel and S2(1,1) of the 2nd channel.

-

6.

Obtain the integer values, P 1 and P 2 for both channels such that,

where └ ┘is the down integer operation, q is the embedding strength, L is the frame length.

-

7.

Obtain the modified versions of \({S_1}(1,1)\) and \({S_2}(1,1)\) due to watermark embedding as follows:

where mod(x,y) results in the reminder of x/y.

-

8.

Apply inverse SVD after replacing \({{S_{1} }}(1,1)\) and \({{S_{2} }}(1,1)\) by \(\widetilde{{S_{1} }}(1,1)\) and \(\widetilde{{S_{2} }}(1,1)\).

-

9.

Reconstruct the watermarked audio signal \({A^{\prime}_1}\) for channel 1 and \({A^{\prime}_2}\) for channel 2 by IDWT as follows

-

10.

Formulate the watermarked audio signal \(A^{\prime}\) as:

5 Extraction procedure

The extraction procedure for the watermark images from the watermarked audio signal is performed blindly, i.e. there is no need for the original host audio signal. The procedure of extraction of the watermark images is shown in Fig. 5 as follows:

-

1.

Decompose the watermarked audio signal, A′ with two-level DWT and obtain the decomposition vectors\({V^{\prime}_1}\), and \({V^{\prime}_2}\) as follows,

$${V^{\prime}_1}={\{ C{A^{\prime}_2},C{D_2},C{D_1}\} _1},{\text{ for channel 1}}$$(13)$${V^{\prime}_2}={\{ C{A^{\prime}_2},C{D_2},C{D_1}\} _2},{\text{ for channel 2}}$$(14)\(C{A^{\prime}_2}\) is selected for both channels.

-

2.

Calculate the SVD of each block of \(C{A^{\prime}_2}\) for both channels and select \({s^{\prime}_1}\left( {1,1} \right)\) for the 1st channel and \({s^{\prime}_2}\left( {1,1} \right)\) of the 2nd channel.

-

3.

Reconstruct the watermark bit stream for both channels as follows:

-

4.

Search for the synchronization code on the reconstructed bit stream on \({w^{\prime}_{1c}}\) and \({w^{\prime}_{2c}}\) to obtain the 1-D scrambled bit streams \({w^{\prime}_{e1}}\left( k \right)\) and \({w^{\prime}_{e2}}\left( k \right).\)

-

5.

Rearrange all detected bit streams to form the scrambled binary watermark images \({w^{\prime}_{e1}}\left( {i,j} \right)\) and \({w^{\prime}_{e2}}\left( {i,j} \right).\)

-

6.

Descramble \({w^{\prime}_{e1}}\left( {i,j} \right)\) and \({w^{\prime}_{e2}}\left( {i,j} \right)\) to obtain the original watermark images \({w^{\prime}_1}\left( {i,j} \right)\) and \({w^{\prime}_2}\left( {i,j} \right).\)

6 Simulation results

Simulations are performed with MATLAB 7.5 and Cool Edit pro 2.0. Five different types of music (classical-folk-trumpet-blues-country) have been used for performance evaluation of the proposed scheme. We have used different types of audio signals, because they have different perceptual properties, characteristics, and energy distributions. All audio signals are a 16-bit, stereo files in wave format and have 44.1 kHz sampling rate. The length of each is 5 s. We have used a 64 × 64 bits binary images as watermarks as shown in Fig. 2a, b. A 13-bit Barker code {1 1 1 1 1 0 0 1 1 0 1 0 1} is used as a synchronization code embedded within the watermark bit streams.

The authors Elshazly et al. (2012) made a comparison between two wavelet functions; Haar and db4 using three different criteria; BER, normalized cross-correlation (NCC), and peak signal-to-noise-ratio (PSNR). The results in Elshazly et al. (2012) showed that db-4 wavelet filter gives the maximum NCC, and PSNR, and the minimum BER. Therefore, db-4 wavelet is applied with two decomposition levels. Generally, the embedding strength q ranges from 0 (fragile watermarking with high SNR) to 1 (robust watermarking with very low SNR, i.e. the signal is deteriorated). So the choice of the value of the embedding strength is a very important issue in our algorithm to compromise between the robustness and imperceptibility.

Figure 6 shows a plot of embedding strength value, q, versus SNR for folk music, where the plot showed that the value of 0.49 is considered as a critical value because any increase of embedding strength more than this value will cause the SNR to be decreased lower than 20 dB and will not satisfy the IFPI standards.

The performance evaluation of the proposed scheme depends on four objectives; imperceptibility, robustness, payload, and complexity.

6.1 Imperceptibility

Imperceptibility is evaluated with many objective measures of quality. A commonly used method in the simulations is the signal-to-noise ratio (SNR):

where A is the original audio signal, A′ is the watermarked audio signal. The algorithm is said to be imperceptible (inaudible) if the SNR is greater than 20 dB due to the IFPI standards. Table 1 shows the SNR for different audio signals, where folk music gives a higher SNR than that of the other music signals. Comparing with other algorithms from the SNR perspective, the proposed algorithm gives higher SNR values than those of the other algorithms in Table 2. Figure 7 shows waveforms of the original audio signal, watermarked audio signal, and difference signal for folk music.

6.2 Robustness

To satisfy robustness, the embedded watermarks should not be removed or eliminated by unauthorized distributors using common signal processing techniques. NCC, and BER are good measurements of robustness.

The NCC and BER are given by:

where M × N is the size of the watermark image.

where \({b_{error}}\) is the number of bit errors.

Table 3 shows a comparison between the different algorithms in the presence of different attacks.

6.3 Complexity

Complexity is also a criterion that used to evaluate the performance of the proposed audio watermarking algorithm. The complexity depends on the CPU time consumed with Matlab software version 7.5 on a PC of Intel(R) core™ (i3) 2.4 MHz Processor, 4 GB of RAM, for both embedding and extraction processes. The embedding time is 1.03 s, and extraction time is 0.18 s, which shows that a short time is taken in the embedding or extraction procedures.

6.4 Payload

Data payload is defined as the number of bits, which could be inserted to the host audio signal within a unit of time in bits per second (bps). Our formula for data payload DPL is defined as:

where L is the length of the original audio signal in seconds, and \({N_t}\) is the total number of watermark bits embedded into the original audio signal. For any watermarking method, the data payload should be more than 20 bps. Table 4 shows a general comparison between the proposed algorithm and several recent algorithms in terms of data payload, where the proposed algorithm achieves a higher data payload than those achieved by other algorithms.

7 Conclusion

A new audio watermarking algorithm has been proposed in this paper. It is based on SVD, DWT, and QIM. Performance evaluation showed that the proposed algorithm satisfies self-synchronization, and increase watermark robustness. A very good imperceptibility, and a high robustness to various signal processing attacks such as noise addition, cropping, resampling, re-quantization, low pass filtering and MP3 compression have been satisfied. Moreover, the proposed algorithm achieves high data payloads.

References

Abd El-Samie, F. E. (2009). An efficient singular value decomposition algorithm for digital audio watermarking. International Journal of Speech Technology, 12(1), 27–45.

Al-Haj, A., & Mohammad, A. (2010). Digital audio watermarking based on the discrete wavelets transform and singular value decomposition. European Journal of Scientific Research, 39, 6–21.

Al-Haj, A., Mohammad, A., & Bata, L. (2011). DWT-based audio watermarking. The International Arab Journal of Information Technology, 8, 326–333.

Ali, A. H., & Ahmad, M. (2010). Digital audio watermarking based on the discrete wavelet transform and singular value decomposition. European Journal of Scientific Research, 39(1), 6–21.

Al-Yaman, M. S., Al-Taee, M. A., & Alshammas, H. A. (2012). Audio-watermarking based ownership verification system using enhanced DWT-SVD technique. In Proceedings of the 9th international multi-conference on systems, signals and devices (SSD’12), Chemnitz, March 20–23.

Al-Yaman, M. S., Al-Taee, M. A., Shahrour, A. T., & Al-Husseini, I. A. (2011). Biometric based audio ownership verification using discrete wavelet transform and SVD techniques. In Proceedings of the 8th international multi-conference on systems, signals and devices (SSD’11), Sousse, March 22–25.

Bao, F., Samarati, P., & Zhou, J. (2012). Applied cryptography and network security. In 10th International conference, ACNS 2012, Singapore, June 26–29.

Bhat, V. K., Sengupta, I., & Das, A. (2010). An adaptive audio watermarking based on the singular value decomposition in the wavelet domain. Digital Signal Processing, 20(6), 1547–1558.

Chen, B., & Wornell, G.W. (2001). Quantization index modulation methods for digital watermarking and information embedding of multimedia. Journal of VLSI Signal Processing 27, 7–33.

Chen, S. T., Wu, G. D., & Huang, H. N. (2010). Wavelet-domain audio watermarking scheme using optimization-based quantization. IET Signal Processing, 4(6), 720–727.

Cox, I. J., Miller, M. L., & Bloom, J. A. (2000). Watermarking applications and their properties. In International conference on information technology 2000, Las Vegas.

Elshazly, A. R., Fouad, M. M., & Nasr, M. E. (2012). Secure and robust high quality DWT domain audio watermarking algorithm with binary image. In Computer engineering and systems (ICCES), 2012, seventh international conference on IEEE.

Gonz´alez, F. P., & Hern´andez, J. R. (1999). A tutorial on digital watermarking. In Proceedings of IEEE 33rd annual 1999 international carnahan conference on security technology, Madrid, October 5–7.

Hossain, M. A., Islam, M. S., & Ali, M. S. (2012). Performance analysis of baker code based on their correlation property in multiuser environment. International Journal of Information Sciences and Techniques (IJIST), 2, 1.

Kaushik, S., & Singhal, A. (2012). Network security using cryptographic techniques. International Journal of Advanced Research in Computer Science and Software Engineering, 2, 12.

Khaldi, K., & Boudraa, A. O. (2013). “Audio watermarking via EMD. IEEE Transactions on Audio, Speech and Language Processing, 21(3), 675–680.

Kumar, P. K., & Shimamura, T. (2014). Audio watermarking in transform domain based on singular value decomposition and Cartesian-polar transformation. International Journal of Speech Technology, 17(2), 133–144.

Lalitha, N. V., Suresh, G., & Sailiaja, V. (2011). Improved audio watermarking using DWT-SVD. International Journal of Scientific & Engineering Research, 2, 6.

Megha, K. D., Vaidya, N. P., & Patel, K. (2013). Digital watermarking: Data hiding techniques using DCT-DWT algorithm. International Journal of Advanced Research in Computer and Communication Engineering, 2, 6.

Megías, D., Serra-Ruiz, J., & Fallahpour, M. (2010). Efficient self-synchronized blind audio watermarking system based on time domain and FFT amplitude modification. Signal Processing, 90(12), 3078–3092.

Mitali, V., Kumar, & Sharma, A. (2014). A survey on various cryptography techniques. International Journal of Emerging Trends & Technology in Computer Science (IJETTCS), 3, 4.

Ozer, H., Sankur, B., & Memon, N. (2005). An SVD-based audio watermarking technique, In Proceedings of the 7th ACM workshop on multimedia and security, pp. 51–56.

Saini, L. K., & Shrivastava, V. (2014). A survey of digital watermarking techniques and its applications. International Journal of Computer Science Trends and Technology (IJCST), 2, 3.

Singh, P., & Chadha, R. S. (2013). A survey of digital watermarking techniques, applications and attacks. International Journal of Engineering and Innovative Technology (IJEIT), 2(9), 165–175.

Singh, V. (2011). Digital watermarking: A tutorial. Journal of Selected Areas in Telecommunications (JSAT), 2, 10–21.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Elshazly, A.R., Nasr, M.E., Fouad, M.M. et al. High payload multi-channel dual audio watermarking algorithm based on discrete wavelet transform and singular value decomposition. Int J Speech Technol 20, 951–958 (2017). https://doi.org/10.1007/s10772-017-9462-9

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10772-017-9462-9