Abstract

The ability to judge the visual attention of others is a key aspect of human social cognition and communication. While evidence has shown that chimpanzees can discriminate human attention based on eye cues alone, findings for gorillas and orangutans have been less consistent. In addition, it is currently unclear whether gorillas and orangutans attempt to attract the visual attention of inattentive recipients using “attention-getting” behaviors. We replicated and extended previous work by testing whether six orangutans (Pongo pygmaeus and hybrid) and six gorillas (Gorilla gorilla gorilla) modified the use of their visual and auditory signals based on the attentional state of a human experimenter. We recorded all communicative behaviors produced by the apes for 30 s while a human experimenter stood in front of them with a food reward in a variety of postures, both visually attentive (facing the apes) and inattentive (body and/or head facing away or eyes covered). Both species produced visual behaviors more often when the experimenter was looking at them than when she had her face turned away, but only the orangutans discriminated attention based on eye cues alone. When we removed human-reared apes from the analyses (N = 3), the remaining mother-reared apes showed sensitivity to eye cues from the experimenter. However, further analyses found that the orangutans and gorillas relied more heavily on the body and head orientation of the experimenter than on her eye cues. Neither species produced more vocalizations or nonvocal auditory behaviors, such as mesh and object banging, mesh rubbing, or clapping, in the inattentive conditions than in the attentive conditions. Our results reveal that while orangutans and gorillas preferentially use visual gestures when a human is attending to them, they do not appear to produce auditory behaviors, including vocalizations, with the intention of manipulating the recipient’s attention state.

Introduction

Understanding visual attention in others is crucial to human social cognition and communication. The ability to attribute visual perception allows us to assess what others are attending to, what they can see and what they might know, based on what they have perceived (Gómez 2009). In addition, an understanding of visual attention is crucial for intentional communication using visual signals; a signaler should display visual gestures only when the intended recipient is visually attentive (Liebal et al. 2014). Researchers have therefore spent several decades investigating this ability in nonhuman primates (Hare et al. 2000; Povinelli et al. 1996; Tempelmann et al. 2011). Observations of conspecific interactions have shown that chimpanzees (Pan troglodytes), gorillas (Gorilla gorilla gorilla), and orangutans (Pongo pygmaeus) use visual gestures more often when a recipient is visually attending, as opposed to not attending, thus revealing discrimination of visual attention in the signaler (Genty et al. 2009; Liebal et al. 2004a, 2006). Several experimental studies have used human–ape interactions to assess this capacity in the nonhuman great apes (hereafter, great apes), thereby allowing systematic manipulation of the cues used to determine attentional state. Initial studies used a two-experimenter paradigm in which chimpanzees could choose to beg from either an attending or nonattending experimenter and found that body orientation, rather than head direction or eye gaze, influenced which human the chimpanzee begged from (Povinelli et al. 1996). However, this task was later considered to be fairly complex and thus some subsequent studies used a simpler version, whereby a single experimenter varies his or her attentional state and records the great apes’ behavior under the various conditions.
Using this latter paradigm, chimpanzees, bonobos (Pan paniscus), and gorillas were sensitive to the body orientation of human experimenters by producing more behaviors when the human experimenter was facing them than when the experimenter was facing away (Kaminski et al. 2004). The authors found no difference between conditions in which the experimenter’s eyes were shut vs. open, although the behaviors measured in this study were not separated by modality (grouping together behaviors such as knocking on the Plexiglas and lip pouts). Using this same paradigm, studies found that chimpanzees produced more visual behaviors when a human was facing them and when the human’s eyes were visible as opposed to covered (Hostetter et al. 2001, 2007).

As is common in comparative cognition, chimpanzees have received more research attention than the other great apes and thus, whereas we have evidence that chimpanzees are capable of using eye gaze alone to judge human attention, the evidence is less clear for orangutans and gorillas (Kaminski 2015). Orangutans and gorillas produce more visual behaviors when a human is attending to them, based on body and face orientation (Poss et al. 2006; Tempelmann et al. 2011). In addition, all great ape species generally used visual behaviors more often when a human experimenter was facing them (Liebal et al. 2004b). However, if the experimenter turned away, yet left the food, orangutans and gorillas, unlike bonobos and chimpanzees, did not consistently move in front of the experimenter or use more visual gestures when the experimenter was facing them than when not. The authors suggest these results show a greater sensitivity in chimpanzees and bonobos, compared to orangutans and gorillas, to human visual attention when gesturing (Liebal et al. 2004b). This greater sensitivity in chimpanzees and bonobos was also found in another study wherein orangutans were less skilled, relative to the other great apes, at understanding the relevance of a window compared to a solid barrier when following human gaze (Okamoto-Barth et al. 2007). A subset of orangutans, however, appeared to modify some behaviors based on human eye cues, and gorillas showed some sensitivity to human eye cues in a two-experimenter paradigm (Bania and Stromberg 2013; Kaminski et al. 2004).

In addition to examining the conditions under which apes use visual gestures, these studies raise a further interesting question: To what extent do apes attempt to actively manipulate the attention of others? Some of the aforementioned studies measured attention-getting behaviors in primates. Attention-getters are “proposed to be signals that function to attract the attention of the recipient” and are composed of both auditory and tactile behaviors (Liebal et al. 2014, p. 180). Their function is not to convey a certain message, but to attract the attention of the recipient who can then be communicated with further once attentive. Attention-getters are proposed to provide partial evidence for intentional communication, as their use would suggest an understanding of the need to obtain visual attention from a recipient before the use of visually communicative behaviors (Liebal et al. 2014). However, again the evidence for these behaviors in primates is mixed. Studies have found that chimpanzees used vocalizations more when the human experimenter was inattentive, although they did not always use more when only provided with eye cues (Hostetter et al. 2001, 2007; Leavens et al. 2004). Conversely, in a later study when researchers separated behavior by modality, they found that apes did not modify their use of auditory, bimodal, or “attention-getting” behaviors depending on the attentional state of the human (unlike their visual behaviors; Tempelmann et al. 2011). A similar result was found in a study of orangutans and gorillas in which the apes did not modify their use of vocalizations, nor nonvocal auditory signals, such as mesh bangs and claps, depending on whether a human was visually attentive (Poss et al. 2006).
This is consistent with results from conspecific interactions during which chimpanzees and orangutans did not appear to use auditory and tactile behaviors to attract the attention of a conspecific before performing a visual behavior (Liebal et al. 2004a; Tempelmann and Liebal 2012).

Discrimination of visual attention in nonhuman primates has been studied far more than discrimination of auditory attention, although some studies have attempted to assess the latter with varied results among species (Costes-Thiré et al. 2014; Melis et al. 2006; Santos et al. 2006). We introduced two additional conditions in which the human experimenter made herself less available to auditory communication, by covering her ears, to assess whether this had any impact on the auditory behaviors produced.

Our study had three primary aims: (1) to examine whether orangutans and gorillas can determine human attention state based on body, head, and, specifically, eye cues, as measured by their use of visual behaviors; (2) to examine whether they attempt to manipulate a human’s attention state with the use of “attention-getters,” as measured by their use of vocalizations and nonvocal auditory signals; and (3) to examine whether the apes understand the role of the ears in human auditory communication, as measured by their use of vocalizations and nonvocal auditory signals. We replicated and extended previous work with orangutans and gorillas (Poss et al. 2006) by testing them under a wider range of experimental conditions to more fully understand how these apes discriminate human attention and therefore gain insight into the evolution of more complex social cognitive processes.

We predicted that if the apes discern and use recipient visual attention to moderate their use of visual signals, they would produce more visual behaviors when the experimenter was visually attending to them than when she was looking away and that, if they understand the role of the eyes in visual communication, these results would also extend to when only eye cues are given. We also predicted that if vocal and nonvocal auditory behaviors serve as “attention-getters,” then they would be used more frequently when the human was visually inattentive, compared to attentive and that if the apes understood the role of the ears in human auditory communication, they would use fewer auditory behaviors when the experimenter’s ears were covered.

Methods

Subjects

Subjects were six western lowland gorillas and two Bornean and four hybrid (Pongo pygmaeus × Pongo abelii) orangutans housed at the Smithsonian’s National Zoological Park, Washington, DC. All subjects were housed socially either in fixed or dynamic social groups. One orangutan, Batang, had an infant who was 3 mo old at the start of testing and the infant was with his mother during all tests. Two orangutans and one gorilla were human reared and the others were mother reared (or foster mother reared; details in Electronic Supplementary Material [ESM] Table SI). Gorilla and orangutan diets consist of fruits, vegetables and primate chow which was either scatter-fed or hand-fed to the apes and water was available ad libitum. Subjects were never deprived of food or water during the test period. We conducted testing ≥1 h after the morning or afternoon feed and tested subjects individually in their living quarters after they separated voluntarily for testing. We conducted tests between November 8 and December 20, 2016.

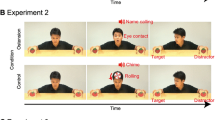

Conditions

We conducted trials across seven conditions in which the experimenter varied her posture and attentional state toward the ape. We chose these conditions to both replicate and extend previous work with these species. The experimenter was either absent (“Absent”), had her head and body facing 180 degrees away from the ape (“Backward”), her head and body facing the ape (“Forward”), her body facing but head turned 90 degrees away from the ape (“Head away”), or her head and body facing the ape with her left hand covering her eyes (“Eyes covered”). In addition, to assess any changes in the use of auditory behaviors stemming from the apparent auditory availability of the experimenter’s ears, we also included conditions that replicated Backward and Forward except that both of the experimenter’s hands covered her ears (“Backward ears” and “Forward ears,” respectively). In conditions Forward, Backward, Head away and Eyes covered, the food reward was held in the experimenter’s right hand. In conditions Absent, Forward ears and Backward ears, the food reward was placed on the floor at the experimenter’s feet. A session consisted of one trial in each of the seven conditions in a randomized order, such that the order differed for each ape on each day. Each ape participated in 4 sessions and thus 4 trials per condition, totaling 28 trials each.
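The session structure described above can be illustrated with a minimal sketch. It assumes that each session's order is an independent random shuffle of the seven conditions; the function name `make_schedule` and the seed are hypothetical, and an independent shuffle does not by itself guarantee that no two orders coincide, so this is an illustration of the design and not the procedure actually used.

```python
import random

# The seven conditions named in the Conditions section.
CONDITIONS = [
    "Absent", "Backward", "Forward", "Head away",
    "Eyes covered", "Backward ears", "Forward ears",
]

def make_schedule(n_sessions=4, seed=None):
    """Return one independently shuffled condition order per session."""
    rng = random.Random(seed)
    schedule = []
    for _ in range(n_sessions):
        order = CONDITIONS[:]   # copy so the master list is left untouched
        rng.shuffle(order)      # one trial per condition, in random order
        schedule.append(order)
    return schedule

# Seed chosen arbitrarily, only so the example is reproducible.
schedule = make_schedule(n_sessions=4, seed=1)
total_trials = sum(len(order) for order in schedule)
```

With four sessions of seven trials, `total_trials` equals the 28 trials per ape stated above.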

Protocol

A session began once an ape was separated from his or her group mates and either sat or clung to the mesh in front of the experimenter. The experimenter (J. Botting) stood 36 in. from the enclosure with her feet on a marked line to ensure consistency. Depending on condition, the food reward (a half-inch slice of banana) was either in the experimenter’s hand or on the floor at her feet. Most of the subjects were tested in enclosures where the floor was raised such that, while standing, the experimenter was roughly at eye level with the apes. For those tested in floor-level enclosures, the experimenter sat on a small stool for all trials so that she was at eye level with the apes. Owing to the recent birth of an infant orangutan, the experimenter wore a face mask (covering only the mouth and nose) while testing all the orangutans; all humans that they encountered during this period (beginning 3 mo prior) wore the same face masks.

A trial began when the experimenter had assumed the position as dictated by condition and continued for 30 s, after which the experimenter passed the ape the food reward (turning to face the ape to do so). In between each test trial were two filler trials, consisting of the experimenter giving two grapes without waiting and assuming a normal feeding position. This was to sustain the apes’ motivation throughout the test session. We conducted one session with each ape per day. We recorded all test sessions using a Panasonic HD video camera (HC-X920M) on a tripod, angled at the subject.

Behavioral Ethogram

We coded data from the video footage using the coding software BORIS v. 3.12 (Friard and Gamba 2016). We did not count behaviors that began before the start of the 30-s test period. We used an ethogram of potentially communicative behaviors based on Poss et al. (2006), with additions relevant to the subjects tested (Table I). As the aim of the study was to test the apes’ understanding of visual attention, for the visual behaviors category we analyzed behaviors that could be perceived only if the human was visually attentive, including body present and trade as well as gesture and facial expressions. Because of the variable size of the mesh and the ape hands/fingers, we did not require the apes’ hands to extend beyond the mesh when gesturing. We coded yawns as distinct from the “open mouth” facial expression in that the apes did not bare their teeth in the open mouth expression, but did so during a yawn. We coded all noises produced by the ape’s mouth or throat as vocalizations. We coded the auditory behaviors mesh and object bangs, body rubs, and claps as nonvocal (Table I). Although all behaviors have some visual component (such as the motion of clapping hands together or facial changes when vocalizing), we coded those that created an obvious noise in addition to this visual aspect as auditory. One of the orangutans, Lucy, had been taught at a previous institution to perform certain “poses” when given a certain visual command. This command was very similar to the posture adopted by the experimenter in the Eyes covered condition. While these poses could be considered a type of visual behavior (body present), we did not include them in the analyses of visual behaviors, as they were triggered very specifically by this condition.

Statistical Analyses

To test our research questions, we analyzed visual behaviors, vocalizations, and nonvocal auditory behaviors separately. The numbers of visual behaviors (gestures, facial expressions, trades, and presents), vocalizations, and nonvocal auditory behaviors (mesh and object bangs, body rubs, and claps) produced by the apes across all four trials were entered as the dependent variables into separate models. Our data were count data with nonnormal distributions (Shapiro test; Visual behaviors, W = 0.51, P < 0.001; Vocalizations, W = 0.61, P < 0.001; Auditory signals, W = 0.47, P < 0.001) and thus we used generalized linear mixed models (GLMMs) with Poisson error structures, using the package blme (Chung et al. 2013) in RStudio (R Core Team 2013). In all models, condition and test session were entered as fixed effects and the subject was entered as a random intercept. We also tested the frequency of facial expressions and gestures separately, as these had not been explicitly trained like trades and presents. Given the differences found between ape species in social cognitive tests, we analyzed each species separately where there were sufficient data to do so (Liebal et al. 2004b). We compared all conditions against the Forward condition, but added comparisons between Backward and Backward ears and between Absent and Backward for the analysis of the vocalizations and nonvocal auditory signals. Alpha levels were adjusted for multiple comparisons using the Holm–Bonferroni method (Holm 1979).
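As an illustration of how Holm–Bonferroni adjusted alpha levels arise, the following is a minimal sketch of the step-down procedure. The function name `holm_thresholds` and the example P values are hypothetical and are not taken from our data; the number of comparisons in each family (six here) is likewise an assumption made for the example.

```python
def holm_thresholds(p_values, alpha=0.05):
    """Apply the Holm step-down procedure.

    Returns a list of (p, adjusted_alpha, reject) tuples, ordered from the
    smallest to the largest p value.
    """
    m = len(p_values)
    results = []
    still_rejecting = True
    for rank, p in enumerate(sorted(p_values)):
        adjusted_alpha = alpha / (m - rank)  # alpha/m, alpha/(m-1), ..., alpha/1
        # Step-down rule: once one comparison fails, all larger p values fail too.
        still_rejecting = still_rejecting and p <= adjusted_alpha
        results.append((p, adjusted_alpha, still_rejecting))
    return results

# Hypothetical p values for six comparisons against a baseline condition.
example = holm_thresholds([0.0004, 0.003, 0.012, 0.041, 0.20, 0.65])
decisions = [reject for _, _, reject in example]
```

For six comparisons the strictest threshold is 0.05/6 ≈ 0.008, so in this hypothetical family a comparison with P = 0.041 is not significant even though it falls below the unadjusted 0.05 level.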

To further disentangle which bodily cues the apes primarily use to determine human visual attention, we also compared a series of GLMMs that combined data from the different conditions based on the primary bodily cue and arranged it into two levels, Away and Facing. Table II shows how the data from each condition were combined to allow us to test models based on specific bodily cues. For example, for the Body model, the conditions in which the experimenter’s body was facing the ape (Forward, Forward ears, Head away, and Eyes covered) were combined in the Facing level, whereas the conditions in which the experimenter’s body faced away from the ape (Backward, Backward ears) were combined as the Away level. The fit of these models to the data was then compared using Akaike’s information criterion (AIC; Akaike 1974), with the frequency of visual behaviors produced as the outcome variable and subject as a random intercept. The Ears model was only included when analyzing the number of vocalizations and nonvocal auditory signals used to test our hypothesis about auditory availability.
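The recoding of conditions into Facing and Away levels can be sketched as a simple lookup. The Body column below follows the grouping described in the text; the Head, Eyes, and Ears columns are assumptions inferred from the posture descriptions in the Conditions section (Table II is the authoritative mapping), and the Absent condition is omitted on the assumption that it carries no bodily cue. The names `POSTURE`, `cue_level`, and `group_by_level` are hypothetical.

```python
# True = cue oriented toward the ape (or uncovered); False = away (or covered).
# Only the "Body" column is stated in the text; the others are assumptions.
POSTURE = {
    "Forward":       {"Body": True,  "Head": True,  "Eyes": True,  "Ears": True},
    "Forward ears":  {"Body": True,  "Head": True,  "Eyes": True,  "Ears": False},
    "Head away":     {"Body": True,  "Head": False, "Eyes": False, "Ears": True},
    "Eyes covered":  {"Body": True,  "Head": True,  "Eyes": False, "Ears": True},
    "Backward":      {"Body": False, "Head": False, "Eyes": False, "Ears": True},
    "Backward ears": {"Body": False, "Head": False, "Eyes": False, "Ears": False},
}

def cue_level(condition, cue):
    """Recode one condition as 'Facing' or 'Away' for the given cue model."""
    return "Facing" if POSTURE[condition][cue] else "Away"

def group_by_level(cue):
    """List the conditions that fall into each level for a cue model."""
    groups = {"Facing": [], "Away": []}
    for condition in POSTURE:
        groups[cue_level(condition, cue)].append(condition)
    return groups

body_groups = group_by_level("Body")
```

Each cue model then uses the two-level factor returned by `cue_level` in place of the original condition factor, so that the models differ only in how the same trials are grouped.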

Finally, we analyzed the effect of condition on the modality of the first behavior produced. The first communicative behavior in each trial was coded as either visual or auditory (the latter including both vocalizations and nonvocal auditory signals). Only trials in which a behavior from one of these categories was produced were included in the analyses (N = 196); the modality of the first behavior was entered as the outcome variable in a GLMM with a binomial error structure.

Owing to the small number of data points, an interaction between rearing history and condition could not always be entered into the same GLMM. We therefore assessed the potential influence of rearing history by additionally analyzing data from the mother-reared individuals separately, based on the premise that human-reared apes often outperform mother-reared apes in social cognitive tests (Leavens et al. 2017). To assess interobserver reliability, a second coder, blind to the experimental conditions, coded behaviors in 6% of the trials. For all communicative behaviors combined, Cohen’s κ for agreement between the two coders was 0.79.
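For readers unfamiliar with Cohen’s κ, the statistic compares observed agreement between two coders with the agreement expected by chance. The sketch below is a minimal illustration; the function name `cohens_kappa` and the ten example codes are hypothetical and do not come from our data set.

```python
from collections import Counter

def cohens_kappa(coder_a, coder_b):
    """Cohen's kappa for two coders who labeled the same items."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("Both coders must label the same non-empty set of items.")
    n = len(coder_a)
    # Proportion of items on which the coders agree.
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Chance agreement from each coder's marginal label frequencies.
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if expected == 1.0:
        raise ValueError("Kappa is undefined when chance agreement is 1.")
    return (observed - expected) / (1 - expected)

# Hypothetical codes for ten behaviors (illustration only).
coder_a = ["visual", "visual", "vocal", "nonvocal", "visual",
           "vocal", "visual", "nonvocal", "vocal", "visual"]
coder_b = ["visual", "visual", "vocal", "nonvocal", "vocal",
           "vocal", "visual", "nonvocal", "vocal", "visual"]
kappa = cohens_kappa(coder_a, coder_b)
```

In this hypothetical example the coders agree on 9 of 10 items (observed agreement 0.90) and chance agreement is 0.36, giving κ = 0.54/0.64 ≈ 0.84.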

Data Availability

The data sets generated and analyzed during the current study are publicly available in the OSF repository using the following link: https://osf.io/yurfs/?view_only=665a37e8c7e643cd92bf51afec2dbf5e

Ethical Note

Ethical permission for this study was gained from the Smithsonian Institution Animal Care and Use Committee and all welfare standards were adhered to. The authors confirm that they have no conflict of interest.

Results

Visual Behaviors

The orangutans used significantly more visual behaviors in the Forward condition than in all the other conditions (Table III and Fig. 1), including Eyes covered. The gorillas did not use more visual behaviors in the Forward condition than in the Eyes covered or Forward ears conditions (Table III and Fig. 1), but did use more in the Forward condition than in all other conditions. When only mother-reared apes were analyzed (N = 9), they used significantly more visual behaviors in the Forward condition than in any other condition (ESM Fig. S1 and Table SII). There was considerable variation between individuals, although 8 out of 12 individuals gestured more in the Forward condition than in the Head away, Eyes covered, or Backward conditions (ESM Fig. S2). The apes used facial expressions and gestures significantly more often in the Forward condition than in the inattentive conditions (Table IV).

Fig. 1 Mean frequency of visual behaviors displayed across all trials, by condition, by gorillas (dark gray) and orangutans (light gray) in a study of use of communicative behaviors toward a recipient with varying attention state in Gorilla gorilla gorilla and Pongo pygmaeus and hybrid (Pongo pygmaeus × Pongo abelii) at the Smithsonian’s National Zoo between November 8 and December 20, 2016. Error bars represent SE. A = Absent; B = Backward; BE = Backward ears; EYC = Eyes covered; F = Forward; FE = Forward ears; HA = Head away.

Vocalizations and Nonvocal Auditory Behaviors

Both species used vocalizations more in the Forward condition than in the Absent condition, but this was statistically significant only in the orangutans (Table V). Neither species produced significantly more vocalizations in the inattentive conditions (Backward, Backward ears, Head away, or Eyes covered) than in the Forward condition (Table V and Fig. 2a). Similarly, neither species produced significantly more nonvocal auditory behaviors in the inattentive conditions than in the Forward condition (Table V and Fig. 2b). Neither species showed significant differences in vocalizations or nonvocal auditory behaviors when the ears were covered vs. uncovered (between the Forward and Forward ears conditions and between the Backward and Backward ears conditions; Table V).

Fig. 2 Mean frequency across all trials of (a) vocalizations and (b) nonvocal auditory behaviors produced by gorillas (dark gray) and orangutans (light gray), by condition, in a study of use of communicative behaviors toward a recipient with varying attention state in Gorilla gorilla gorilla and Pongo pygmaeus and hybrid (Pongo pygmaeus × Pongo abelii) at the Smithsonian’s National Zoo between November 8 and December 20, 2016. Error bars represent SE. A = Absent; B = Backward; BE = Backward ears; EYC = Eyes covered; F = Forward; FE = Forward ears; HA = Head away.

When analyzed separately, the mother-reared apes used significantly fewer vocalizations in the Absent condition than in the Forward (estimate = −1.61[0.40], z = −4.01, P < 0.001, adjusted α = 0.006) and Backward conditions (estimate = −1.22[0.42], z = −2.93, P = 0.003, adjusted α = 0.007) and test session had no significant effect. The number of nonvocal auditory signals produced did not differ significantly between any of the conditions.

Modality of First Behavior

There was no significant effect of condition on the modality of the first communicative behavior for either species. Both species produced more auditory and fewer visual behaviors first in the Backward condition than in the Forward condition, but this difference was not statistically significant after adjustment for multiple comparisons (gorillas, estimate = −1.79[0.88], z = −2.05, P = 0.041, adjusted α = 0.008; orangutans, estimate = −2.16[1.03], z = −2.10, P = 0.036, adjusted α = 0.008).

Use of Bodily Cues

When we compared models to examine which bodily cues the apes primarily used to regulate production of visual behaviors, the best-fitting models were the Head model for the gorillas and the Body model for the orangutans (all other models had a ΔAIC of >4 and therefore little support; Burnham and Anderson 2004; Table VI). This suggests that the gorillas primarily used the experimenter’s head orientation to regulate their use of visual gestures, whereas the orangutans more often used the experimenter’s body orientation to judge human attention. When production of vocalizations was considered, the gorillas relied more on head and body cues than on eye or ear cues. The orangutans tended to rely on the orientation of the head, but this model did not have clear support as the best model. When only nonvocal auditory signals were considered, there was no strong support for any one model, suggesting that the apes did not regulate these behaviors based on bodily orientation.
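The ΔAIC criterion used above can be made concrete with a short sketch. Each model's ΔAIC is its AIC minus the lowest AIC in the candidate set, and models with ΔAIC > 4 are treated as having little support. The function names and the AIC values below are hypothetical and are not the values reported in Table VI.

```python
def delta_aic(aic_by_model):
    """Return each model's AIC difference from the best (lowest-AIC) model."""
    best = min(aic_by_model.values())
    return {name: aic - best for name, aic in aic_by_model.items()}

def supported_models(aic_by_model, cutoff=4.0):
    """Models whose delta-AIC does not exceed the cutoff."""
    return [name for name, d in delta_aic(aic_by_model).items() if d <= cutoff]

# Hypothetical AIC values for three cue models (illustration only).
example_aic = {"Body": 412.3, "Head": 401.8, "Eyes": 409.5}
deltas = delta_aic(example_aic)
supported = supported_models(example_aic)
```

In this hypothetical set the Head model has ΔAIC = 0 and the other two exceed the cutoff of 4, so only the Head model would be retained as supported.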

Discussion

We found that the apes used more visual behaviors, including gestures, facial expressions, trades and body presents, when the human experimenter was visually attentive compared to when she was facing away. This pattern remained when only facial expressions and gestures were included in the analyses. These results are consistent with previous research (Poss et al. 2006; Tempelmann et al. 2011) and provide further evidence that orangutans and gorillas can discriminate the attentional state of a human based on the body and head orientation. The results also provide evidence that these apes use facial expressions and gestures primarily when a recipient can see them, fulfilling one of the criteria for intentional communication (Liebal et al. 2014).

Our study also extended previous research by testing whether the apes consider the availability of the human’s eyes when discriminating attention in others. While a sensitivity to eye cues has been shown in chimpanzees in a previous study with a similar paradigm, there is a lack of evidence to show that orangutans and gorillas modify their use of communicative behaviors based on eye cues alone (Hostetter et al. 2007; Kaminski 2015). We found that the orangutans produced more visual behaviors when the experimenter’s eyes were visible, although the gorillas did not. These findings therefore indicate that orangutans do show some sensitivity to eye cues when discerning attention, which suggests that they may have some understanding of the role of the eyes in visual attention.

Our analyses also revealed, however, that the visual availability of the eyes was not the best predictor of visual behavior production for either species. Instead, the orientation of the experimenter’s body best predicted the orangutans’ use of visual behaviors, while the orientation of the experimenter’s head best predicted the gorillas’ use of visual behaviors. This suggests that while orangutans can use eye cues to some extent to determine human attention, both species rely more heavily on body and head cues, a finding that is consistent with previous findings with the great apes (Gómez 1996; Tomasello et al. 2007). This apparent reliance on body and head cues is perhaps unsurprising given the morphological difference between the eyes of the other great apes and the human eye, the latter having adaptations that appear to allow for enhanced gaze following based on eye cues (Kobayashi and Kohshima 2001). Reliance on larger body cues may be a more adaptive system for animals often living in areas of dense vegetation, such as gorillas and orangutans, to allow for faster detection of visual attention. In addition, avoidance of eye gaze (due to the threatening nature of direct eye contact) may help explain why gorillas did not attend to the experimenter’s eye cues.

We suggest that the apes’ more frequent use of visual behaviors when the recipient was watching them warrants further investigation into their potential ability to attribute visual perception. While findings from a recent study indicated that orangutans are capable of attributing false belief to others (Krupenye et al. 2016), a previous study also revealed that orangutans apparently failed to attribute visual perception to humans using a competitive paradigm in which they had to avoid taking a route visible to a competitor to obtain a contested item (Gretscher et al. 2012). However, the orangutans in the latter study were all fairly young (range of 7.5–12 yr), which may have impacted their performance at this relatively complex cognitive task. Therefore, the ability of orangutans and gorillas to attribute perception to others remains somewhat unclear and further studies examining this, particularly in gorillas, would be beneficial.

Vocalizations and Nonvocal Auditory Signals

We aimed to test whether the apes would attempt to attract the attention of a nonattentive experimenter by using signals with an auditory component, another behavior often cited as indicating intentional communication in nonhumans (Liebal et al. 2014). We found that the apes produced fewer vocalizations and fewer nonvocal auditory behaviors in the Absent condition than in the Forward condition, although this was statistically significant only for the orangutan vocalizations. This may have been due to the absence of an effect in the gorillas, but could also be due to low overall frequencies of nonvocal auditory signals (Table V). The lower rate of signaling when no human was present suggests that the apes used these signals with the intention of communicating with the human experimenter and is consistent with findings from orangutans, gorillas, and chimpanzees in similar paradigms (Hopkins et al. 2007; Poss et al. 2006). However, it could also be argued that these behaviors may be expressions of frustration, and the presence of an apparently unhelpful human with food, compared to food alone (as in the Absent condition), may have elicited more frustration in the apes.

Neither the orangutans nor gorillas produced more auditory (vocal or nonvocal) signals when the experimenter was visually inattentive than when she was attentive, based on body, head, or eye cues. These results thus indicate that the apes did not try to attract the visual attention of the experimenter by using either vocalizations or nonvocal auditory signals. The findings are consistent with studies that show that orangutans and gorillas do not appear to use auditory signals strategically to attract the attention of a recipient, either in conspecific interactions or in experimental interactions with humans (Genty et al. 2009; Poss et al. 2006; Tempelmann and Liebal 2012; Tempelmann et al. 2011). These studies found that auditory and/or tactile behaviors are used when the recipient is both inattentive and attentive, a pattern also found in the current study. From our results we can reasonably surmise that, while vocalizations are seemingly directed toward a human, they, along with behaviors such as mesh and object banging, rubbing, and clapping, are not being used in an attempt to manipulate the attentional state of that human. Together with past research, these findings suggest that behaviors often regarded as attention-getters, such as mesh banging and clapping, may instead serve another purpose (Poss et al. 2006). They could either be simply expressions of frustration as discussed earlier, or they may serve a more general communicative purpose other than the manipulation of the recipient’s visual attention. Further research into the contexts in which these behaviors are produced would help clarify this point.

Our results are in apparent contrast to the results of some studies with chimpanzees, which have been shown to use vocalizations more in conditions in which the experimenter is visually inattentive, even when only eye cues are available (Hostetter et al. 2001, 2007; Leavens et al. 2004). However, Hostetter and colleagues did not find a difference in frequency of nonvocal auditory signals based on human attention (Hostetter et al. 2001, 2007). In addition, other studies with chimpanzees have failed to find evidence for a strategic use of these behaviors in both human and conspecific interactions (Liebal et al. 2004b; Tempelmann et al. 2011; Theall and Povinelli 1999). Liebal and colleagues found instead that chimpanzees interacting with conspecifics tended to use other strategies to ensure visual gestures were displayed to an attentive audience, such as gesturing more when the recipients were attending and moving to place themselves in view of the conspecific, a tactic that chimpanzees also use when interacting with humans in captive paradigms (Liebal et al. 2004a, 2004b). It seems the participants in our study may have used a similar strategy; while the experimental setup meant they were unable to move into the line of sight of the experimenter, they used visual gestures more when the experimenter was attentive, but did not attempt to attract her attention when she was not.

Finally, we included exploratory conditions with the experimenter’s ears covered to assess whether this would affect the apes’ production of auditory behaviors. There were no significant differences in the production of either vocalizations or nonvocal auditory signals between the “ears covered” (Forward ears and Backward ears) and corresponding “ears uncovered” conditions (Forward and Backward). Orangutans did use fewer vocalizations in the Forward ears condition than in the Forward condition, although this difference was not statistically significant (Table V). This may have been a response to the lessened auditory availability of the experimenter, learned through an individual’s own experience that covering the ears reduces what can be heard. However, given that this trend was shown in the forward-facing, but not the backward-facing, conditions, we suggest that it was more likely a response to the location of the food. Although the experimenter’s visual attention was consistent across both conditions, the food reward was on the floor in the Forward ears condition as opposed to in the experimenter’s hand in the Forward condition. The apparent availability of a human to give food has been shown to affect great apes’ and monkeys’ behavior in similar paradigms, and food held in the hand may have increased the apes’ motivation to communicate by indicating that the human was ready to hand it over (Hattori et al. 2010; Tempelmann et al. 2011). However, the placement of the food would need to be varied across all conditions to either confirm or refute this.

Species Differences

In terms of nonvocal auditory signals, the gorillas and orangutans produced similar results, with neither species directing more auditory behaviors at an inattentive human. Similarly, neither species used vocalizations more in the presence of inattentive vs. attentive humans, although orangutans produced notably more vocalizations overall than did gorillas. However, when we examined the use of visual behaviors, some differences between the species emerged in terms of which bodily cues were used to discriminate visual attention. The orangutans used significantly fewer visual behaviors when the human’s eyes were covered than when they were uncovered, but the gorillas did not. Indeed, the direction of the head appears to be an important cue for gorillas when producing visual signals, but not necessarily for the orangutans. This may reflect species differences in social interactions; gorillas often avoid eye contact, which can be considered a threat, with experimenters and, during some interactions, with conspecifics, often doing so by displaying a prominent turn of the head. Therefore gorillas might be expected to attend more to the head direction than to the eyes of another when assessing visual attention.

There was a slight difference in protocol between the two species; the experimenter had to wear a face mask covering the nose and mouth when testing the orangutans because of the presence of a newborn orangutan. This may have affected how the experimenter’s attention was discerned; the covered mouth may even have focused the attention of the orangutans on the human’s eyes and caused them to use fewer visual signals when the eyes were covered than they might have if the experimenter’s whole face had been visible. A further test without the face mask would be necessary to determine whether such an effect occurred.

Finally, we aimed to assess whether orangutans and gorillas would modify their communicative behaviors depending on the attentional state of the experimenter and, as such, we used behaviors considered to have been used previously in interactions with humans. However, testing great apes with humans through a mesh presents a very different environment from one that would occur naturally (Leavens et al. 2017). The tactile gestures that orangutans may use with conspecifics could not be used with the experimenter, which constrained the behaviors from their repertoire that they were able to use (Liebal et al. 2006). In addition, while we found that mother-reared apes were as efficient at determining attentional state as human-reared apes, the communicative interaction that exists between humans and zoo-housed great apes remains highly specific, and this should be borne in mind when considering the cues that an ape may use with a conspecific when assessing visual attention.

Conclusions

In summary, we found evidence that orangutans and gorillas use visual signals more frequently when a human is looking at them than when the human is looking away or, in the case of orangutans, has her eyes covered. The orangutans were able to make this judgment using eye cues alone, although they appeared to rely more heavily on body cues, and the position of the head seemed to be the most salient cue for the gorillas. Finally, neither species used vocalizations or nonvocal auditory signals more often when the experimenter was inattentive, suggesting that they were not attempting to manipulate the attentional state of the experimenter.

References

Akaike, H. (1974). A new look at the statistical model identification. IEEE Transactions on Automatic Control, 19(6), 716–723.

Bania, A. E., & Stromberg, E. E. (2013). The effect of body orientation on judgments of human visual attention in western lowland gorillas (Gorilla gorilla gorilla). Journal of Comparative Psychology, 127(1), 82–90.

Burnham, K. P., & Anderson, D. R. (2004). Multimodel inference: Understanding AIC and BIC in model selection. Sociological Methods & Research, 33(2), 261–304.

Chung, Y., Rabe-Hesketh, S., Dorie, V., Gelman, A., & Liu, J. (2013). A nondegenerate penalized likelihood estimator for variance parameters in multilevel models. Psychometrika, 78(4), 685–709.

Costes-Thiré, M., Levé, M., Uhlrich, P., De Marco, A., & Thierry, B. (2014). Lack of evidence that Tonkean macaques understand what others can hear. Animal Cognition, 18, 251–258. https://doi.org/10.1007/s10071-014-0795-3.

Friard, O., & Gamba, M. (2016). BORIS: A free, versatile open-source event-logging software for video/audio coding and live observations. Methods in Ecology and Evolution, 7(11), 1325–1330.

Genty, E., Breuer, T., Hobaiter, C., & Byrne, R. W. (2009). Gestural communication of the gorilla (Gorilla gorilla): Repertoire, intentionality and possible origins. Animal Cognition, 12(3), 527–546.

Gómez, J. C. (1996). Non-human primate theories of (non-human primate) minds: Some issues concerning the origins of mind-reading. In P. Carruthers & P. K. Smith (Eds.), Theories of theories of mind (pp. 330–343). Cambridge: Cambridge University Press.

Gómez, J. C. (2009). Apes, monkeys, children, and the growth of mind. Cambridge: Harvard University Press.

Gretscher, H., Haun, D. B., Liebal, K., & Kaminski, J. (2012). Orang-utans rely on orientation cues and egocentric rules when judging others' perspectives in a competitive food task. Animal Behaviour, 84(2), 323–331.

Harcourt, A. H., Stewart, K. J., & Hauser, M. (1993). Functions of wild gorilla “close” calls. I. Repertoire, context, and interspecific comparison. Behaviour, 124(1), 89–122.

Hardus, M. E., Lameira, A. R., Singleton, I., Morrogh-Bernard, H. C., Knott, C. D., et al. (2009). A description of the orangutan’s vocal and sound repertoire, with a focus on geographic variation. In S. A. Wich, S. S. Utami Atmoko, T. Mitra Setia, & C. P. van Schaik (Eds.), Orangutans: Geographic variation in behavioral ecology and conservation (pp. 49–60). Oxford: Oxford University Press.

Hare, B., Call, J., Agnetta, B., & Tomasello, M. (2000). Chimpanzees know what conspecifics do and do not see. Animal Behaviour, 59(4), 771–785.

Hattori, Y., Kuroshima, H., & Fujita, K. (2010). Tufted capuchin monkeys (Cebus apella) show understanding of human attentional states when requesting food held by a human. Animal Cognition, 13(1), 87–92.

Holm, S. (1979). A simple sequential rejective multiple test procedure. Scandinavian Journal of Statistics, 6, 65–70.

Hopkins, W. D., Taglialatela, J. P., & Leavens, D. A. (2007). Chimpanzees differentially produce novel vocalizations to capture the attention of a human. Animal Behaviour, 73(2), 281–286.

Hostetter, A. B., Cantero, M., & Hopkins, W. D. (2001). Differential use of vocal and gestural communication by chimpanzees (Pan troglodytes) in response to the attentional status of a human (Homo sapiens). Journal of Comparative Psychology, 115(4), 337–343.

Hostetter, A. B., Russell, J. L., Freeman, H., & Hopkins, W. D. (2007). Now you see me, now you don’t: Evidence that chimpanzees understand the role of the eyes in attention. Animal Cognition, 10(1), 55.

Kaminski, J. (2015). Theory of mind: A primatological perspective. In W. Henke & I. Tattersall (Eds.), Handbook of paleoanthropology (pp. 1741–1757). Berlin and Heidelberg: Springer.

Kaminski, J., Call, J., & Tomasello, M. (2004). Body orientation and face orientation: Two factors controlling apes’ begging behavior from humans. Animal Cognition, 7(4), 216–223.

Kobayashi, H., & Kohshima, S. (2001). Unique morphology of the human eye and its adaptive meaning: Comparative studies on external morphology of the primate eye. Journal of Human Evolution, 40(5), 419–435.

Krupenye, C., Kano, F., Hirata, S., Call, J., & Tomasello, M. (2016). Great apes anticipate that other individuals will act according to false beliefs. Science, 354(6308), 110–114.

Leavens, D. A., Hostetter, A. B., Wesley, M. J., & Hopkins, W. D. (2004). Tactical use of unimodal and bimodal communication by chimpanzees, Pan troglodytes. Animal Behaviour, 67(3), 467–476.

Leavens, D. A., Bard, K. A., & Hopkins, W. D. (2017). The mismeasure of ape social cognition. Animal Cognition, 1–18.

Liebal, K., Call, J., & Tomasello, M. (2004a). Use of gesture sequences in chimpanzees. American Journal of Primatology, 64(4), 377–396.

Liebal, K., Call, J., Tomasello, M., & Pika, S. (2004b). To move or not to move: How apes adjust to the attentional state of others. Interaction Studies, 5(2), 199–219.

Liebal, K., Pika, S., & Tomasello, M. (2006). Gestural communication of orangutans (Pongo pygmaeus). Gesture, 6(1), 1–38.

Liebal, K., Waller, B. M., Slocombe, K. E., & Burrows, A. M. (2014). Primate communication: A multimodal approach. Cambridge: Cambridge University Press.

Melis, A. P., Call, J., & Tomasello, M. (2006). Chimpanzees (Pan troglodytes) conceal visual and auditory information from others. Journal of Comparative Psychology, 120(2), 154–162.

Okamoto-Barth, S., Call, J., & Tomasello, M. (2007). Great apes’ understanding of other individuals’ line of sight. Psychological Science, 18(5), 462–468.

Poss, S. R., Kuhar, C., Stoinski, T. S., & Hopkins, W. D. (2006). Differential use of attentional and visual communicative signaling by orangutans (Pongo pygmaeus) and gorillas (Gorilla gorilla) in response to the attentional status of a human. American Journal of Primatology, 68(10), 978–992.

Povinelli, D. J., Eddy, T. J., Hobson, R. P., & Tomasello, M. (1996). What young chimpanzees know about seeing. Monographs of the Society for Research in Child Development, i–189.

Santos, L. R., Nissen, A. G., & Ferrugia, J. A. (2006). Rhesus monkeys, Macaca mulatta, know what others can and cannot hear. Animal Behaviour, 71(5), 1175–1181.

R Core Team (2013). R: A language and environment for statistical computing. Vienna, Austria: R Foundation for Statistical Computing.

Tempelmann, S., & Liebal, K. (2012). Spontaneous use of gesture sequences in orangutans. Developments in Primate Gesture Research, 6, 73.

Tempelmann, S., Kaminski, J., & Liebal, K. (2011). Focus on the essential: All great apes know when others are being attentive. Animal Cognition, 14(3), 433–439.

Theall, L. A., & Povinelli, D. J. (1999). Do chimpanzees tailor their gestural signals to fit the attentional states of others? Animal Cognition, 2(4), 207–214.

Tomasello, M., Hare, B., Lehmann, H., & Call, J. (2007). Reliance on head versus eyes in the gaze following of great apes and human infants: The cooperative eye hypothesis. Journal of Human Evolution, 52(3), 314–320.

Acknowledgments

This research was funded by a generous grant from the David Bohnett Foundation. The authors would like to thank the staff at the Smithsonian’s National Zoo for their assistance during data collection and are additionally grateful to Betsy Herrelko and Alexandra Reddy for advice and assistance with behavioral coding. Our thanks go to Jo Setchell and two anonymous reviewers whose constructive suggestions greatly improved the final manuscript.

Additional information

Handling Editor: Joanna M. Setchell

Electronic supplementary material

ESM 1

(DOCX 93 kb)

About this article

Cite this article

Botting, J., Bastian, M. Orangutans (Pongo pygmaeus and Hybrid) and Gorillas (Gorilla gorilla gorilla) Modify Their Visual, but Not Auditory, Communicative Behaviors, Depending on the Attentional State of a Human Experimenter. Int J Primatol 40, 244–262 (2019). https://doi.org/10.1007/s10764-019-00083-0

DOI: https://doi.org/10.1007/s10764-019-00083-0