Abstract

Despite some theoretical and technical criticism, scholars largely acknowledge the influence of universities’ ranking positions on the preferences of fund providers, academics and students, nationally and internationally. Considering their noticeable contribution to university rankings, prominent indicators can guide university leaders to develop better strategies by targeting common aspects of international ranking systems. The purpose of this research is therefore to specify the significant indicators and to examine their individual weight through an expanded indicator-set of international university rankings. The research benefited from the predictive approach of correlational research. The dataset was composed of universities’ scores in the 2018 ARWU, THE, QS and URAP world university rankings and includes the scores of 224 universities. The data were re-organised following the expanded indicator-set previously formulated by the researcher. Regression analyses were then employed in two steps to explore significant predictors through the expanded indicator-set. The researcher also re-calculated the percentage values of seven combined indicators: citation, income, internationalisation, prize, publication, reputation and ratios/degrees. The findings showed that while all these indicators are statistically significant, the components of research reputation contribute 73.71% to universities’ ranking scores. On the other hand, income is the only negative contributor with a weight of − 1.78%. The research also revealed that when comparing two scores based on re-calculated and assigned weights, only 19 universities occupy the same position among the 224 universities. Following these results, the researcher then discusses various policies and practices with the potential to expedite universities’ ranking success. Considering the data reliability and longitudinal feasibility, several recommendations were also developed for further research on university ranking systems.

Introduction

Through institutional webpages, many universities highlight their places in the ranking tables to assert their higher-achieving performance. University leaders also frequently refer to the ranking achievements of their institutions to impress fund providers and attract the attention of academics and students, nationally and internationally. Therefore, university managers largely follow these ranking schemes to develop organisational policies and practices to enhance the ranking success of their own institutions (Hazelkorn 2015; Heffernan and Heffernan 2018; Shin et al. 2011; Tapper and Filippakou 2009). While university ranking systems mainly use publication and citation rates as common metrics, most of them also include a variety of different indicators such as reputation scores, award winners, faculty-per-student ratios, postgraduate-to-undergraduate student rates, doctoral graduates, number of foreign staff and students, international research collaboration, or income components (through teaching, research and services to industry and business). On this point, to know which indicators significantly contribute to ranking success may be helpful in formulating potential strategies at universities to increase their position in ranking systems.

Since the first announcement of the Academic Ranking of World Universities (ARWU) in 2003, there has been a growing number of university ranking systems (Jajo and Harrison 2014). These systems naturally intensify world-class university discussion through international ranking outputs (Altbach and Salmi 2011). Hence, higher education policymakers in many countries have generated national policies to enhance the ranking of their universities; for example, World Class 1.0, 2010 and Double First Class (or World Class 2.0), 2017 in China (PREC Edu Services, n.d.), Excellence Initiative 1.0, 2005 and 2.0, 2012 in Germany (DFG n.d.), or Academic Excellence Project (or Project 5–100), 2013 in Russia (5top100 n.d.). Another example, from the researcher’s home country, is the government-selected list of the 10 best universities in Turkey announced in 2017, as the result of a national “research university” policy to include extra financial support and human resource expansion to achieve better results in international rankings (YÖK 2017). Considering both the potential expansion of funds and the positive influence on institutional choices of successful researchers and students, university managers mostly give special attention to ranking indicators when drawing up their organisational development strategies (Hazelkorn 2015). However, the content of indicator-sets and the calculation of ranking scores have raised many theoretical and technical questions.

Researchers have argued that while selected metrics in most international rankings are very useful to measure a university’s research productivity and academic reputation, these rankings do not fully reflect the level of teaching quality and social/economic impact of universities (Lim 2018; Shattock 2017; Shin et al. 2011). International rankings have also been criticised for excluding graduate employment rates as another important metric regarding training quality in universities (Çakır et al. 2015; Uslu 2017a). In addition to these theoretical aspects, researchers have highlighted some technical fallacies associated with international university rankings. For example, Bougnol and Dula (2015) examined several ranking systems in terms of the handling of the data, exposing mistakes of logic and interpretation issues, and concluded that the rankings contained four ‘pitfalls.’ These are anti-isotonic attributes (positive weights do not guarantee positive score contribution), rewarding inefficiency (the same level of inputs might create smaller or higher outcomes for different universities), co-linearity in the data (due to their conjoint features, some attributes can be removed during the recalculation of weights) and transparency-reproducibility (considering the recalculation possibility, researchers cannot access indicator scores or indicator weights in many rankings). Soh (2017) summarised ‘the seven deadly sins’ of international university rankings as follows: spurious precision, weight discrepancies, assumed mutual compensation, indicator redundancy, inter-system discrepancy, negligence of indicator scores and lastly inconsistency between changes in ranking and overall score. In a previous study, however, Soh (2015) used the 2013 data of ARWU, Times Higher Education (THE) and Quacquarelli Symonds (QS) rankings to show how various indicator scores contributed to universities’ overall scores.

Despite the possible multicollinearity between various ranking metrics, some researchers examined the indicator-sets of various rankings and their influence on ranking achievements. Through their cross-national analysis of university ranking systems, Dill and Soo (2005, pp. 500–502) theoretically combined the performance indicators under four dimensions: (i) input (faculty, students, financial resources and facilities), (ii) process (teaching), (iii) output (satisfaction, graduation, value-added, learning progress, employment) and (iv) reputation. Similarly, Hendel and Stolz (2008, p. 180) compared the ranking systems in Europe and defined four categories, namely, input (beginning characteristics students, faculty and staff, financial resources, program design and amenities), throughput (program/course proceedings, organisation and management, student support system and teaching quality), output (career prospects, reputation, outcome measurements (e.g. degree awarded, completion rate, student satisfaction) and value added) and research (e.g. grants per faculty, international publications, citations per publication, patents per faculty). Furthermore, using ARWU, THE and QS metrics as latent variables, Jajo and Harrison (2014) statistically examined ranking achievements and found the beta coefficients (see Footnote 1) regarding the achievement data to be β = .662 for ARWU, β = .220 for QS and β = .188 for THE; in addition to β = .159–.230 for ARWU metrics, β = .184–.384 for QS metrics and β = .090–.414 for THE metrics. In another study, Luque-Martínez and Faraoni (2019, p. 10) clustered universities using indicator scores in ARWU, National Taiwan University Ranking (NTU), QS, THE and URAP rankings and associated indicators under seven university categories: (1) internationalised, (2) connected to industry, good reputation, (3) female students, (4) minor quality research, (5) global leaders, (6) low scientific production, quality and reputation and (7) intermediate majority.
The researcher also examined the increase and decrease rates of universities’ ranking positions through the expanded indicator-set of ARWU, QS, THE and URAP systems (see details in Methodology) and found that reputation and citation scores caused the highest increase/decrease in universities’ ranking positions, by comparing how the increase/decrease rates originated according to publications, prizes, ratios/degrees, internationalisation and income scores (Uslu 2018a).

While some of the researchers mentioned above outline the theoretical and technical shortcomings of international rankings, they largely accept the impact of ranking systems on the international composition of the higher education sector. Others have focused on the metric schemes of these international rankings to explore the value of each indicator for universities’ ranking success. The researcher has also shown what indicators expedite higher ratings in various international university rankings. However, none of the studies in the reviewed literature provides clarification about the contribution of each indicator within a combination of various ranking systems. Considering the influence of international rankings on institutional and even national higher education policies, on the other hand, statistically prominent indicators and their individual values can guide higher education policymakers and university managers towards developing better strategies targeting common aspects of international ranking systems. Accordingly, the purpose of this research is to identify the most significant indicators and to examine their individual weight by means of an expanded indicator-set of international university rankings. With this aim, the research questions are

1. Which indicators (and their sub-components) are statistically significant within the expanded indicator-set?

2. What is the individual weight of each significant indicator (and its sub-components) eliminating multicollinear connections within the expanded indicator-set?

3. How do universities’ ranking positions differ comparing the overall scores of re-calculated individual weights with the total score of assigned weights within the expanded indicator-set?

Conceptual framework

Focusing on international ranking systems alone does not provide sufficient information to outline the organisational structure of high-ranked universities. However, one of the major approaches “to define a world-class university (WCU) is to identify the common features of the current top-ranking universities to provide some insights into the institutionalization of a WCU” (Shin 2013, p. 19). This apparent connection between global rankings and the WCU definition enriches the theoretical perspective needed to depict the general characteristics of high-ranked universities (see Fig. 1). In this respect, a well-known study by Salmi (2009, p. 32) presents the essential requirements to establish a WCU, as follows: concentration of talent (students, teaching staff, researchers, internationalisation), abundant resources (public budget resources, endowment revenues, tuition fees, research grants) and favourable governance (supportive regulatory framework, autonomy, academic freedom, leadership team, strategic vision, culture of excellence). While Salmi (2009, p. 32) identified the major outcomes of WCUs in the general form of graduates, research outputs and technology transfer, Alden and Lin (2004) generated a long list of key characteristics of WCUs. Their list can be easily categorised in terms of potential indicators of universities’ ranking performance; namely, citation, income, internationalisation, prize, publication, ratios/degrees and reputation (see Fig. 1).

Additionally, Alden and Lin (2004) added ‘supportive (research and educational) environment’ as another important component to empower both the students’ learning experience and the academic productivity of staff by establishing a high-quality campus and facilities in high-ranked universities. Further, through excellence initiatives, Liu et al. (2019, p. 10) recently argued for the role of national higher education policies (targeting better ranking positions) to support the financial and staffing aspects of universities in their countries. Lastly, while showing institutional actions regarding global rankings, Hazelkorn (2015, p. 209) indicated the re-contribution of marketing strategies that highlight their ranking position, thus adding to their reputation. All in all, the combination of these theoretical components illustrated in Fig. 1 provides a good basis to analyse the values of common indicators of university rankings and to discuss institutional strategies operated by universities to improve their ranking position.

Methodology

This research was designed as a correlational study. In the correlational research design, researchers assess the relation(s) between variables (e.g. views, opinions, attitudes, terms, phenomena, scores, points) by the explanatory or predictive approach through quantitative analysis (Ary et al. 2006; Tekbıyık 2014). In line with this definition, the data were first collected from various international university rankings, and then regression analyses were performed to explore the predictive relations between universities’ ranking performance and the combination of indicators in the selected rankings.

Expanded indicator-set

The dataset includes universities’ ranking scores re-calculated using the expanded indicator-set of international university rankings. The researcher followed here the expanded indicator-set of ARWU, QS, THE and URAP systems in his previous study (Uslu 2018a). He selected these ranking systems because of the availability of (full) indicator scores as well as their assigned weights (as percentage values). This expansion process fully complies with the potential ranking indicators outlined in the conceptual framework (see Fig. 1). While the conceptual framework provides well-established theoretical bases to discuss the analysis results, the expanded indicator-set also offers a comprehensive perspective to statistically examine each indicator.

Although the researcher took their similarities into consideration when re-grouping the indicators of ARWU, QS, THE and URAP, the richness of this expanded set comes from differences between the indicators of the selected rankings. For example, ARWU and URAP draw on the Web of Science (WOS) database to determine the publication and citation scores of universities, while QS and THE use the outputs of the SCOPUS database. To enhance the comprehensiveness of the reputation category, the expanded set combines four different parts from the QS and THE reputation surveys, as follows: QS-Academic Reputation, QS-Employer Reputation, THE-Research Reputation and THE-Teaching Reputation. Similarly, under the ratios/degrees category, this research expanded the ranking indicators through students-per-staff rates and doctoral degree ratios (against bachelor degrees and the number of academic personnel in universities) in the QS and THE rankings. In terms of internationalisation, QS and THE contribute to the expanded set by including international student and staff ratios, while THE and URAP enrich the set with international collaboration scores. Further, ARWU and THE add two unique criteria to the expanded indicator-set; namely, ARWU-Prize (awarded to staff or alumni) and THE-Income (from teaching/research/industry-based work). All in all, the researcher formed the expanded indicator-set from seven indicator groups: five are derived from several rankings, while the remaining two are each based on a single, but different, ranking system (see Table 1).

Dataset

In this research, the dataset was prepared by entering the score for each indicator in each of the selected ranking systems separately into Excel during the first half of 2018. Although 959 universities featured in the QS World University Rankings 2018, more than one indicator score was missing for most of the universities in the list, leaving 225 universities in this dataset that had individual scores for all indicators within the ARWU, QS, THE and URAP rankings. While ARWU and URAP in 2018 provided a full score for each indicator, a couple of universities in the THE rankings had a missing indicator score that could easily be recovered by subtracting the other indicator scores from the full scores. Although the QS rankings only provided an overall score for the first 400 universities (from all around the world), many of these top universities had one or more missing indicator scores. Overall, there is no clear pattern in the missing indicator scores in the QS 2018 Rankings, although relatively more figures are absent for the international faculty and student ratios of Asian universities.
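The recovery of a single missing indicator score mentioned above can be illustrated with a minimal sketch. It assumes that the overall score is a weighted sum of the indicator scores; the pillar names, the weights in `ASSUMED_THE_WEIGHTS` and the example scores are illustrative assumptions, not values taken from the dataset, and the function name is hypothetical.

```python
# Sketch only: recover one missing indicator score from an overall score,
# assuming overall = sum(weight_i * score_i). Weights below are assumed
# for illustration and should be checked against the ranking's methodology.
ASSUMED_THE_WEIGHTS = {
    "teaching": 0.30,
    "research": 0.30,
    "citations": 0.30,
    "international_outlook": 0.075,
    "industry_income": 0.025,
}


def recover_missing_score(overall, known_scores, weights):
    """Return (name, score) of the single indicator absent from known_scores."""
    missing = [name for name in weights if name not in known_scores]
    if len(missing) != 1:
        raise ValueError("exactly one indicator score must be missing")
    # Weighted contribution of the indicators whose scores are known.
    known_part = sum(weights[name] * score for name, score in known_scores.items())
    name = missing[0]
    # The remainder of the overall score belongs to the missing indicator.
    return name, (overall - known_part) / weights[name]


# Hypothetical university with one unpublished indicator score.
known = {
    "teaching": 60.0,
    "research": 70.0,
    "citations": 80.0,
    "international_outlook": 90.0,
}
name, value = recover_missing_score(71.0, known, ASSUMED_THE_WEIGHTS)
print(name, round(value, 2))
```

Because published overall scores are rounded, a score recovered in this way is approximate, and the error grows when the missing indicator carries a small weight.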

Further, ARWU announced their 2018 ranking results on August 15, 2018 (ARWU 2018a), while QS, THE and URAP had previously released their 2018 results. Therefore, the researcher updated the dataset adding ARWU 2018 ranking scores on Excel to ensure the inclusion of 2018 scores from all four selected ranking systems. Finally, by eliminating one more university because of its exclusion from ARWU 2018 rankings, the Excel sheet included ranking outputs (with all indicator scores) for 224 universities via their 2018 scores in ARWU, QS, THE and URAP world university rankings. The country distribution of these 224 universities largely reflects the continental distribution of universities in the selected rankings, as follows: 28 from Asia (including universities from 7 countries), 107 from Europe (including universities from 14 countries), 2 from the Middle East (1 Israeli and 1 Saudi Arabian), 63 from North America (12 Canadian and 51 American), 23 from Oceania (19 Australian and 4 New Zealander) and 1 from South Africa (see Table 3 in the Appendix).

Data analysis

After completing the dataset of 224 universities’ ranking scores, the researcher first transferred the data from the Excel sheet to the SPSS programme interface. To calculate the individual value of each criterion in the expanded indicator-set, he preferred to use standardised Beta (β) coefficients of regression analysis. While un-standardised β coefficients include collinear relations between variables, a standardised β coefficient shows the contribution of the related variable excluding its contribution through other variables on the dependent variable (Hair, Black, Babin and Anderson, 2010). Unlike the general assumption in regression-based analyses, as in predicting a dependent variable through one or more independent variables (e.g. $X = \text{constant} + a\beta_a + b\beta_b + c\beta_c + \dots$), this research adopted the approach of predicting a dependent variable via its sub-components (e.g. $X = \text{constant} + X_a\beta_{X_a} + X_b\beta_{X_b} + X_c\beta_{X_c} + \dots$). This naturally means obtaining a coefficient of determination of one ($R^2 = 1$), the sign of full prediction/explanation of the dependent variable, while exploring the contribution of sub-components through their standardised β coefficients (see Footnote 2).
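The idea of regressing a total score on its own sub-components can be sketched as follows. This is an illustration under stated assumptions, not the SPSS procedure used in the research: the three components, their weights and the synthetic scores are hypothetical, the regression is solved through the normal equations in plain Python, and standardised coefficients are obtained with the usual conversion β = b × SD(x) / SD(y).

```python
import random
from statistics import mean, pstdev


def solve(a, b):
    """Solve the linear system a.x = b by Gauss-Jordan elimination."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        # Partial pivoting keeps the elimination numerically stable.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [x - f * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def ols(columns, y):
    """Return [intercept, b1, b2, ...] from the normal equations."""
    rows = [[1.0] + list(vals) for vals in zip(*columns)]
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(k)]
    return solve(xtx, xty)


random.seed(0)
n = 224  # same sample size as the dataset; the scores are synthetic
unweighted = [[random.uniform(0, 100) for _ in range(n)] for _ in range(3)]
weights = [0.5, 0.3, 0.2]  # hypothetical assigned weights
total = [sum(w * col[i] for w, col in zip(weights, unweighted)) for i in range(n)]

coefs = ols(unweighted, total)
fitted = [coefs[0] + sum(b * col[i] for b, col in zip(coefs[1:], unweighted))
          for i in range(n)]
ss_res = sum((t - f) ** 2 for t, f in zip(total, fitted))
ss_tot = sum((t - mean(total)) ** 2 for t in total)
r_squared = 1 - ss_res / ss_tot  # equals 1 up to rounding error

# Standardised beta: unstandardised slope rescaled by the SD ratio.
std_betas = [b * pstdev(col) / pstdev(total) for b, col in zip(coefs[1:], unweighted)]
print(round(r_squared, 6), [round(s, 3) for s in std_betas])
```

Since the total is an exact weighted sum of its components, the fit is perfect and the unstandardised slopes simply return the weights; the standardised coefficients additionally reflect how much each component varies across universities.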

As the starting point, the researcher calculated the weighted sub-criteria scores for each university in the dataset (e.g. the university’s score in the HiCi of ARWU × 22.22%) using the Compute Variable function of SPSS 21. Following the calculation of the weighted score for each sub-criterion, he also calculated the combined scores for the seven indicators in the expanded set. The researcher then employed Multiple Regression Analysis on the dataset to explore the predictive relations between universities’ ranking performance and their indicator scores. Firstly, pre-tests were applied to ensure the suitability of the dataset for the regression analysis (Hair et al. 2010), as follows: (1) In terms of the sample size, the ranking scores of 224 universities are adequate for a regression analysis including seven independent variables (considering the general calculation approach of 50 + [8 × 7 indicators] = 106). (2) The dependent variable and total ranking scores (and also the scores of each indicator for further regression analyses) are normally distributed in accordance with Skewness-Kurtosis values between ±2 (Skw = .950; Krt = .712; for the seven indicators: Skw = −.117 to 1.399; Krt = −.969 to 2.144). (3) Multicollinearity between the research variables is at an acceptable level considering the Tolerance > .10 and VIF < 10 criteria of Collinearity Statistics (see Table 2). (4) According to the Durbin-Watson test (criterion of D-W ≈ 2), there is no autocorrelation between the error terms in the analysis (see Table 2).
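The four pre-tests listed above can be sketched in a few helper functions. This is a minimal illustration, not a reproduction of the SPSS output: the function names are hypothetical, and the skewness and kurtosis helpers use simple moment-based formulas, whereas SPSS applies sample-size corrections, so values will differ slightly on small samples.

```python
from statistics import mean


def min_sample_size(n_predictors):
    """Rule of thumb quoted in the text: 50 + 8 per predictor."""
    return 50 + 8 * n_predictors


def _central_moment(xs, order):
    m = mean(xs)
    return sum((x - m) ** order for x in xs) / len(xs)


def skewness(xs):
    """Moment-based skewness (no small-sample correction)."""
    return _central_moment(xs, 3) / _central_moment(xs, 2) ** 1.5


def excess_kurtosis(xs):
    """Moment-based kurtosis minus 3, so a normal distribution gives 0."""
    return _central_moment(xs, 4) / _central_moment(xs, 2) ** 2 - 3


def vif(r_squared):
    """Variance inflation factor of a predictor whose regression on the
    other predictors has the given R-squared; tolerance is 1 / VIF."""
    return 1 / (1 - r_squared)


def durbin_watson(residuals):
    """Values near 2 suggest no first-order autocorrelation."""
    num = sum((residuals[i] - residuals[i - 1]) ** 2
              for i in range(1, len(residuals)))
    return num / sum(e * e for e in residuals)


print(min_sample_size(7))           # threshold for seven predictors
print(skewness([1, 2, 3, 4, 5]))    # symmetric toy data
print(durbin_watson([1, -1, 1, -1]))  # strongly alternating toy residuals
```

Read against the criteria in the text, a predictor is acceptable when `vif(...)` stays below 10 (tolerance above .10), and the residuals are acceptable when `durbin_watson(...)` is close to 2.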

Having ensured the necessary assumptions for regression analysis, the researcher ran the first multiple regression analysis by assigning universities’ overall ranking scores as the dependent (predicted) variable and the unweighted scores of the seven indicators in the expanded set as independent (predictive) variables. During further regression analyses, he used the total score for each indicator as the dependent (predicted) variable and the unweighted scores of the sub-criteria of the related indicator as independent (predictive) variables. For the regression analyses in the current research, the significance level was set at .05. In the last phase, the researcher calculated the percentage value of each indicator (and also of its sub-criteria) in the expanded set using their standardised β coefficients.

Findings

The analyses and calculations were carried out sequentially as per the research questions. First, regression analysis was employed to explore the predictive relations between the seven indicators of the expanded set and the overall (weighted) ranking score through ARWU, QS, THE and URAP rankings. The results are summarised in Table 2.

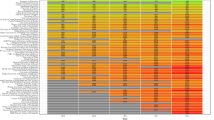

As can be seen in Table 2 (see Footnote 3), each indicator score is a significant predictor of universities’ ranking performance (t = −2.831 to 33.979; p ≤ .05). While the other scores made different levels of contribution (standardised β = .078–.313) to universities’ ranking position, income (β = −.021) was the only predictor negatively influencing the overall ranking scores. The percentage values of each of the seven indicators (see Footnote 4) were calculated using their standardised β values and added to Fig. 2.
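One plausible way to turn standardised β values into percentage values is to divide each coefficient by the signed sum of all coefficients. The sketch below assumes this normalisation, because the footnote describing the exact calculation is not reproduced in this section; the β values are hypothetical placeholders rather than the coefficients in Table 2, and the function name is illustrative.

```python
def betas_to_percentages(betas):
    """Express each standardised beta as a share of the signed sum (assumed method)."""
    total = sum(betas.values())
    return {name: 100 * beta / total for name, beta in betas.items()}


# Hypothetical standardised betas, for illustration only.
hypothetical_betas = {
    "indicator_a": 0.30,
    "indicator_b": 0.25,
    "indicator_c": 0.20,
    "indicator_d": 0.10,
    "indicator_e": -0.02,  # a negative contributor keeps its sign
}

percentages = betas_to_percentages(hypothetical_betas)
for name, pct in percentages.items():
    print(f"{name}: {pct:.2f}%")
```

Under this assumption the percentages always add up to 100, and a negative β yields a negative percentage, which matches the way a negative weight is reported for income.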

In the second-order analysis, each indicator within the expanded set was individually examined to observe the predictive relations between the indicator (weighted) score and its sub-indicators (through their unweighted scores). The results of the indicator level-regression analysis were added to Fig. 1 in order to see the complete path of universities’ ranking performance. For each indicator in the expanded set, the percentage values of its sub-indicators (see Footnote 5) were also calculated via their standardised β values (see Fig. 2).

According to Fig. 2, publication (26.46%) makes the highest contribution to universities’ ranking score, with reputation second (24.09%) and citation third (23.16%). The remaining four indicators together contribute 26.28% to universities’ ranking performance. Taking the 400% total of ARWU, QS, THE and URAP rankings into account, the percentage values of the seven indicators were compared with a quarter of their assigned percentages. This comparison shows that ratios/degrees made the highest percentage gain with a 4.49% increase (see Footnote 6), against the highest percentage loss (4.47%) in the income scores. Further, THE-staff/student ratio and THE-doctorate/bachelor’s ratio were not significant predictors for ratios/degrees, while THE-proportion of international students and THE-proportion of international staff were not significant predictors for internationalisation. In addition, QS-academic reputation had the highest value with 12.79%, whereas THE-research income had the lowest (−1.02%). Among sub-indicators, however, QS-faculty/student ratio showed the greatest increase with a 4.26% gain (see Footnote 7), and THE-research income showed the greatest decrease (2.52%).
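The arithmetic behind these figures can be checked with a short sketch that uses only the percentages reported in this article. The "implied" values are derived here by subtraction (re-calculated percentage minus reported gain or loss) and are not quoted from Table 1; variable names are illustrative.

```python
# Re-calculated percentage values of the seven indicators as reported in the text.
recalculated = {
    "publication": 26.46,
    "reputation": 24.09,
    "citation": 23.16,
    "ratios/degrees": 12.68,
    "prize": 8.79,
    "internationalisation": 6.59,
    "income": -1.78,
}

top_three = ["publication", "reputation", "citation"]
research_reputation = sum(recalculated[k] for k in top_three)
remaining = sum(v for k, v in recalculated.items() if k not in top_three)
total = sum(recalculated.values())

# Gain (+) or loss (-) against a quarter of the assigned percentage,
# so the implied quarter share is: recalculated - change.
reported_change = {"ratios/degrees": 4.49, "income": -4.47}
implied_quarter_share = {
    name: round(recalculated[name] - change, 2)
    for name, change in reported_change.items()
}

print(round(research_reputation, 2))  # share of the three research reputation components
print(round(remaining, 2))            # share of the other four indicators
print(round(total, 2))                # close to 100; the gap is rounding in the source
print(implied_quarter_share)
```

The three leading indicators add up to the 73.71% attributed to research reputation, and the seven values sum to 99.99% rather than exactly 100% because each reported percentage is rounded to two decimals.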

Lastly, the total ranking scores were calculated using the assigned percentages of indicators in the ARWU, QS, THE and URAP ranking systems (see Table 1). Universities’ ranking scores were also calculated using the percentages of indicators (through their standardised β coefficients) within the expanded set. The results of these two rankings, which were based on the assigned and re-calculated percentages, were then compared (see Appendix). According to this comparison, only 19 universities occupy the same position in both rankings of the expanded indicator-set, assigned and re-calculated, while 205 universities had different ranks. Whereas the highest decrease for universities in the calculated rankings was 21 places against the assigned rankings of the expanded indicator-set, the maximum increase was 22 (see Appendix). As can be seen in the Appendix, all but one (from China) of the 19 universities holding the same position are from Western, mostly Anglo-Saxon, countries, as follows: seven from the United States of America, four from the United Kingdom, two from Australia, one from Canada, one from Germany, one from New Zealand, one from Norway and one from Switzerland. With greater or lesser transposition (from 1 place to 22 places), the remaining 91.52% of the 224 universities show a highly divergent picture compared to their original positions in the selected four ranking systems (see Appendix).
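The comparison of the two rankings can be sketched as below. The university names and scores are hypothetical toy data, and the helper names are illustrative; only the final line uses figures from the text (205 of 224 universities changing position).

```python
def rank_positions(scores):
    """Map each university to its position (1 = highest score).
    Ties are broken by input order because sorted() is stable."""
    ordered = sorted(scores, key=scores.get, reverse=True)
    return {name: position for position, name in enumerate(ordered, start=1)}


def compare_rankings(assigned_scores, recalculated_scores):
    """Return (unchanged count, largest rise, largest fall) in places."""
    assigned = rank_positions(assigned_scores)
    recalculated = rank_positions(recalculated_scores)
    # Positive shift: the university moves up in the re-calculated ranking.
    shifts = {name: assigned[name] - recalculated[name] for name in assigned}
    unchanged = sum(1 for shift in shifts.values() if shift == 0)
    return unchanged, max(shifts.values()), min(shifts.values())


# Hypothetical total scores under assigned and re-calculated weights.
assigned_scores = {"U1": 90.0, "U2": 80.0, "U3": 70.0, "U4": 60.0}
recalculated_scores = {"U1": 91.0, "U2": 68.0, "U3": 75.0, "U4": 70.0}

result = compare_rankings(assigned_scores, recalculated_scores)
print(result)

# Share of universities whose position changed, from the reported counts.
share_changed = round(100 * 205 / 224, 2)
print(share_changed)
```

In the toy data one university keeps its place, the largest rise is one place and the largest fall is two places; applied to the full dataset, the same comparison would yield the counts summarised in the Appendix.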

Discussion and conclusion

This research re-ranked universities taking into account the individual contributions of score criteria within the expanded indicator-set. The expanded indicator-set includes the ranking metrics used by ARWU, THE, QS and URAP for their 2018 world university rankings. Although it appears there are no mysterious results, the research revealed a common path for ranking success through the contribution of each significant indicator in the expanded set.

Indeed, the results strongly confirmed the comment of one of the researcher’s former interviewees, as follows: “For the rankings, the most important thing is research reputation [a combination of research productivity, research impact and academic reputation]. The second most important thing is, again, research reputation” (Uslu 2017a). For example, this research showed that publications are the primary source of universities’ ranking performance, and, with a value of 26.46%, they are 1.6% more influential than the assigned percentage average (24.86%) for the selected four ranking systems. This result is expected when we consider the strong ties between publication volume and the second and third highest contributor indicators, reputation and citation. It is important to remember here that the selected ranking systems use the output of WOS or SCOPUS databases to calculate universities’ publication score. Although other factors increasing a university’s reputation in global academia are discussed below, publication volume in these international databases is obviously the main feed line for their research reputation (Bowman and Bastedo 2011; Collins and Park 2016; Hazelkorn 2015). In addition to attracting more attention in global academia, the publications indexed in prestigious databases also gain more citations from other researchers. The reason is simply a high correlation between citation rates and the impact factors of the related journals (Jarwal et al. 2009; Shin et al. 2011). Accordingly, WOS and SCOPUS, as the two most prestigious databases, include academic journals with a high impact factor; the publications in these journals then assist in increasing universities’ citation score, with 23.16% value in the expanded indicator-set.

Unlike accessible (and reviewable) data provided by WOS or SCOPUS on publication and citation, reputation surveys employed by two ranking systems (QS and THE) lead us to pose questions in terms of valid and reliable representation of the global academic community. Further, these reputation surveys can be criticised on the basis of their approach, purpose and calculations. Although Spence (2019) suggested preferring ‘judgement’ rather than ‘metrics’ for measurement of performance in higher education, the ‘judgement’ of reputation in international university rankings does not comply with the main approach of measurement, as in “the measurement of outcomes and their correlation with… ‘input’… aims to provide an evidence-base for [higher] educational practice” (Biesta 2009, p. 34). In the end, as Shin and Toutkoushian (2011) clearly stated, “reputation measures have limitations in reflecting the quality of teaching and research” (p. 5) because of their validity, reliability and measurability shortages. However, among the sub-indicators within the expanded set, academic reputation (from QS with 12.79% value, against 3.25% for employer reputation) is still the highest contributor to universities’ ranking performance. As the researcher’s previous study (Uslu 2018a) revealed, universities’ reputation scores are also the main indicator designating their increase or decrease in the selected rankings. This increase/decrease pattern based on the reputation scores of universities is the main factor behind the inconsistency between the two rankings, prepared based on assigned and calculated values of indicators in the expanded set (see Appendix). The greatest gap is generally between universities’ calculated rankings and positions in QS where half of the overall score comes from their reputation survey (see Appendix). 
Accordingly, this gap might be the reason for a slight increase of non-western universities compared to their western competitors in the calculated rankings through the expanded indicator-set (see Appendix).

In every case, considering that the research reputation components (research productivity, research impact and academic reputation) together account for 73.71% of international ranking scores, university managers should first develop strategies to enhance their publication and citation rates in globally prestigious databases. Here, the components of the conceptual framework in the present study provide insights into the importance of having talented staff, large resources, high-quality facilities and influential management practices. Accordingly, there are two basic approaches for university managers to increase their research reputation. As Hazelkorn (2015) argued, one is “recruit[ing]/head-hunt[ing] international high-achieving/HiCi scholars” (p. 209), if universities have the resources to attract them. The other approach is to raise their own stars through academic support mechanisms and well-established institutional norms (Uslu 2017b). Examples from all over the world include integrated tenure/promotion and reward/incentive schemes (Cadez et al. 2017; Macfarlane 2007), writing support mechanisms (McGrail et al. 2006) and publication support (especially for open access options) incorporated in project budgets (Gargouri et al. 2010). At the very top of international rankings, however, it is very challenging for universities to force their way to the forefront, even if they achieve higher scores in the publication and citation categories. The top places are almost completely occupied by older universities (including two that were founded in medieval times), and they seem to have a self-feeding loop on ‘the road to academic excellence’, using the advantages of their long-standing historical prestige to obtain larger resources and attract productive researchers and better students (Altbach and Salmi 2011; Uslu 2017b). Therefore, despite their smaller contribution, other indicators gain importance for universities seeking to further raise their position in international rankings.

While the number of ‘students’ and ‘doctoral degrees’ per faculty, as the sub-indicators of the ratios/degrees category, contributes 12.68% to universities’ ranking scores, award-winning academics and alumni can provide an 8.79% increase in ranking scores. As the QS Methodology comments, “The [Faculty/Student Ratio criterion] recognizes that a high number of faculty members per student will reduce the teaching burden on each individual academic” (QS 2018); a lower student/faculty ratio is therefore a sign of more research time for academics. Doctoral degree completions per faculty also indicate the significant contribution that doctoral students make to university publication records, especially through peer-reviewed articles and chapters co-authored by PhD researchers and their advisors (Horta and Santos 2016). The award indicator only includes highly prestigious scientific prizes and medals such as the Nobel Prize and Fields Medal (ARWU 2018b); therefore, award-winning staff and alumni naturally promote the reputation of their institutions within both global academia and the international community. The internationalisation indicator also adds 6.59% to universities’ ranking scores via its contribution to institutional reputation, as well as research productivity. Given the similar contributions of the internationalisation components (from 1.51 to 1.74%), it appears to be equally important for universities to widen their reputation by having more international staff and students (Chapleo 2010; Delgado-Marquez et al. 2013) and to enrich their research portfolio with international collaborations (Abramo et al. 2011; Kwiek 2015). Therefore, university managers can prioritise the appointment of highly qualified foreign researchers and institute scholarship programmes targeting international students to strengthen their global visibility and international publication profile.

In these two respects of the conceptual framework (see Fig. 1), governments all around the world have provided remarkable support for universities within the framework of various internationalisation policies and programmes. Examples include the Laureate Fellowships in Australia, Research Chairs in Canada, Thousand Talents Professorship in China, DAAD Scholarships in Germany, UKRI Research Training Funds in the UK and Türkiye Scholarships in Turkey. This is in addition to the initiatives of multinational funding bodies, such as the Marie Skłodowska-Curie Actions exchange, co-funding and fellowship programmes of the European Commission. Further, as two other common strategies (Uslu 2017b; Wang and Shapira 2011), university managers may establish (at least a limited number of) institutional advanced grants and/or starting funds for project applications to national and international research councils in order to support ambitious research endeavours, given their potential for international scientific awards (Tatsioni et al. 2010). Here, external image management, another component of the conceptual framework, would play an important role for universities in promoting their achievements from local to global level. Therefore, to enhance the reputation of their institutions both in the academic community and in the public eye (Uslu 2018b), university managers can use different media channels to publicise success stories such as ranking performance, entrepreneurial and social projects, award winners and the international composition of their institutions.

Unlike the others, the income indicator exerts a negative influence (− 1.78% against a 2.69% average assigned value) on universities’ ranking scores. This finding should be interpreted with caution because of the data source(s) used for income metrics. THE (the only ranking that includes income components) largely uses accessible data sources, such as academic databases for publication and citation or official statistics for faculty/student and degree completion ratios, and even applies data weighting (by subject and/or continent) in its reputation surveys (e.g. THE methodological explanation (THE 2018b)). For institutional income figures, however, it would seem to have no option other than data self-reported by individual universities. This creates a data-reliability problem for the income component within university rankings. For example, the researcher looked at two institutions in his home country. While the top 10 universities in the general rankings did not achieve 90 points, these two universities were among the highest in the industry income rankings with over 90 points (THE 2019a), despite their positions at around 20th in the Entrepreneurial and Innovative University Index of Turkey (TÜBİTAK 2019); by contrast, the top university in the national list received 64 points for industry income in the THE rankings. Apart from theoretical criticism (Harvey 2008; Shin et al. 2011), this sort of reliability concern, together with other technical issues such as weight discrepancies and collinearity in the data (Bougnol and Dula 2015; Soh 2017) within ‘research reputation’ rankings, has possibly led to alternative ranking mechanisms gaining more importance in global academia and more visibility in the international community.
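
The percentage values discussed above can be reproduced from the standardised β coefficients listed in the Notes. The following is a minimal Python sketch of that arithmetic, not the researcher's actual procedure: the names `percentage_values` and `gap_to_assigned` are hypothetical, and the 32.75% assigned total for ratios/degrees is the figure given in the Notes.

```python
# Standardised beta coefficients as reported in the Notes.
STANDARDISED_BETAS = {
    "publication": 0.313,
    "reputation": 0.285,
    "citation": 0.274,
    "ratios/degrees": 0.150,
    "prizes": 0.104,
    "internationalisation": 0.078,
    "income": -0.021,
}


def percentage_values(betas):
    """Express each beta as a percentage of the signed sum of all betas."""
    total = sum(betas.values())
    return {name: beta / total * 100 for name, beta in betas.items()}


def gap_to_assigned(recalculated_pct, assigned_total_pct, n_rankings=4):
    """Difference between a re-calculated weight and the average assigned weight.

    assigned_total_pct is the indicator's share in the combined 400% of the
    four rankings, so dividing by n_rankings gives its average assigned value.
    """
    return recalculated_pct - assigned_total_pct / n_rankings


weights = percentage_values(STANDARDISED_BETAS)

# Research reputation combines publication, reputation and citation.
research_reputation = (
    weights["publication"] + weights["reputation"] + weights["citation"]
)

for name, value in weights.items():
    print(f"{name}: {value:.2f}%")
print(f"research reputation: {research_reputation:.2f}%")
print(f"ratios/degrees gap: {gap_to_assigned(weights['ratios/degrees'], 32.75):.2f}")
```

Because the income coefficient is negative, it reduces the denominator, so the six positive weights sum to slightly more than 100%. The sketch works with unrounded intermediate values, whereas the Notes round at each step; for the figures shown here the two approaches agree to two decimal places.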

However, similar problems are also seen in alternative ranking outputs. As its latest product, THE prepared the University Impact Rankings 2019, following 11 of the 17 Sustainable Development Goals (SDGs) published by the United Nations (THE 2019b). While “Research metrics [we]re derived from data supplied by Elsevier” for this new ranking, THE announced that “Institutions provide[d] and sign[ed] off their institutional data for use in the rankings” for various SDGs (e.g. good health and well-being, gender equality or climate action), which again raises the question of the reliability of institutions’ self-reported data (THE 2019b). Another alternative ranking list, Reuters Top 100: The World’s Most Innovative Universities, used 10 different metrics (with equal weights) (Reuters 2018a), but released only three scores, namely Total Patents Filed, Patents Granted (as two direct metrics) and Commercial Impact (Reuters 2018b). When the 100 universities are re-ranked using these three scores and their assigned percentage values, the positions of nearly all the universities differ from the published list (see the re-calculation formula in the Notes). This is a clear sign of the ‘Transparency and Reproducibility’ problem (Bougnol and Dula 2015), which arises from insufficient explanation of the metric score methodology and the use of a vague statistical procedure to produce the overall scores.
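
The re-ranking described above can be sketched with the re-calculation formula given in the Notes. The three institutions and their scores below are invented purely for illustration and are not Reuters data; `Entry`, `composite_score`, `rerank` and `changed_positions` are hypothetical names introduced for this sketch.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Entry:
    name: str
    published_rank: int       # position in the published list
    patents_filed: float      # number of patent applications
    granted_pct: float        # granted patent percentage
    commercial_impact: float  # commercial impact score


def composite_score(e):
    """Weighted sum from the Notes: 10% + 10% + 80% of the three released scores."""
    return (
        (e.patents_filed / 100) * 10
        + (e.granted_pct / 100) * 10
        + (e.commercial_impact / 100) * 80
    )


def rerank(entries):
    """Return a mapping of name -> new position, ordered by composite score."""
    ordered = sorted(entries, key=composite_score, reverse=True)
    return {e.name: pos for pos, e in enumerate(ordered, start=1)}


def changed_positions(entries):
    """Names of entries whose re-calculated position differs from the published one."""
    new_ranks = rerank(entries)
    return [e.name for e in entries if new_ranks[e.name] != e.published_rank]


# Hypothetical sample data, NOT taken from the Reuters list.
sample = [
    Entry("University A", 1, patents_filed=120, granted_pct=40, commercial_impact=60),
    Entry("University B", 2, patents_filed=80, granted_pct=50, commercial_impact=70),
    Entry("University C", 3, patents_filed=50, granted_pct=30, commercial_impact=40),
]

print(rerank(sample))
print(changed_positions(sample))
```

In this invented sample, the heavily weighted commercial impact score moves University B ahead of University A, which mirrors the kind of position change the re-calculation produced for the published list.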

All in all, through the comprehensive structure of the expanded indicator-set, this research identified a common path for ranking success in universities. In doing so, the analysis assessed the percentage value of each indicator (and also its sub-components) by eliminating multicollinearity between the ranking indicators. However, because its data were collected from the four selected international rankings, this research naturally has limitations in terms of data reliability and reproducibility. Therefore, the reliability of data source(s) is potentially a research topic of its own, considering each of the ranking indicators. Additionally, further research might focus on the re-examination of selected ranking cases or different expanded university ranking schemes. Researchers may also apply the same analysis to long-term data from the four selected rankings (starting from 2010, with the first announcement of URAP rankings, the newest ranking scheme in the study) in order to examine the longitudinal feasibility of the findings of this research.

Notes

In predictive analyses, the beta coefficient (β) indicates the individual contribution of an independent variable to the dependent variable. Here, for example, β = .662 for ARWU means that a 1-point increase in ARWU brings a .662-point increase in the combination of ARWU, QS and THE.

In the predictive analysis, the coefficient of determination (R²) indicates the proportion of the dependent (predicted) variable explained by the independent (predictor) variables. Thus, R² = 1 means that the predictor variables together explain the whole of the predicted variable. In this research, while the un-standardised beta (β) coefficient of each indicator shows its assigned value by the related ranking system (ARWU, QS, THE or URAP), the standardised β coefficient reveals the individual contribution of the related indicator (see the example in the first note above).

While the seven indicators together almost fully explain universities’ ranking scores (R² = .997), the standardised β values give “Ranking Score = .313 × (publication) + .285 × (reputation) + .274 × (citation) + .150 × (ratios/degrees) + .104 × (prizes) + .078 × (internationalisation) − .021 × (income)”. The t and p values together also indicate valid test results for the individual contribution of each indicator at the chosen significance level (here .05).

e.g. Percentage value of publication = [.313 / (.313 + .285 + .274 + .150 + .104 + .078 − .021)] × 100 = 26.46%.

e.g. Percentage value of ARWU-Publication = [.266 / (.266 + .265 + .231 + .172 + .099 + .045)] × 26.46 = 6.53%.

e.g. 32.75% in the total 400% of ARWU, QS, THE and URAP (see Table 1); 32.75% / 4 = 8.19%; 12.68% − 8.19% = 4.49%.

e.g. 20% in the total 400% of ARWU, QS, THE and URAP (see Table 1); 20% / 4 = 5%; 9.26% − 5.00% = 4.26%.

e.g. ([Number of Patent applications/100] × 10) + ([Granted Patent Percentage/100] × 10) + ([Commercial Impact Score/100] × 80)

Re-calculation by the above formula resulted in different ranks for 95 universities, and the top university lost ground to sixth position.

References

5top100. (n.d.). Russian Academic Excellence Project: World-class Russian education. Accessed (July 23, 2019): https://www.5top100.ru/en/about/more-about/

Abramo, G., D’Angelo, C. A., & Solazzi, M. (2011). The relationship between scientists’ research performance and the degree of internationalization of their research. Scientometrics, 86(3), 629–643. https://doi.org/10.1007/s11192-010-0284-7.

Alden, J., & Lin, G. (2004). Benchmarking the characteristics of a world-class university: Developing an international strategy at university level. London: Leadership Foundation for Higher Education.

Altbach, P. G., & Salmi, J. (2011). The road to academic excellence: The making of world-class research universities. Washington, DC: The World Bank.

ARWU. (2018a). ShanghaiRanking’s Academic Ranking of World Universities 2018 Press Release. Accessed (August 15, 2018): http://www.shanghairanking.com/Academic-Ranking-of-World-Universities-2018-Press-Release.html.

ARWU. (2018b). Methodology, Academic Ranking of World Universities. Accessed (August 16, 2018): http://www.shanghairanking.com/ARWU-Methodology-2018.html.

Ary, D., Jacobs, L. C., Razavieh, A., & Sorensen, C. (2006). Introduction to research in education (7th ed.). Belmont, CA: Thomson Wadsworth.

Biesta, G. (2009). Good education in an age of measurement: on the need to reconnect with the question of purpose in education. Educational Assessment, Evaluation and Accountability, 21(1), 33–46. https://doi.org/10.1007/s11092-008-9064-9.

Bougnol, M.-L., & Dula, J. H. (2015). Technical pitfalls in university rankings. Higher Education, 69(5), 859–866. https://doi.org/10.1007/s10734-014-9809-y.

Bowman, N. A., & Bastedo, M. N. (2011). Anchoring effects in world university rankings: Exploring biases in reputation scores. Higher Education, 61(4), 431–444. https://doi.org/10.1007/s10734-010-9339-1.

Cadez, S., Dimovski, V., & Groff, M. Z. (2017). Research, teaching and performance evaluation in academia: The salience of quality. Studies in Higher Education, 42(8), 1455–1473. https://doi.org/10.1080/03075079.2015.1104659.

Çakır, M. P., Acartürk, C., Alaşehir, O., & Çilingir, C. (2015). A comparative analysis of global and national university ranking systems. Scientometrics, 103(3), 813–848. https://doi.org/10.1007/s11192-015-1586-6.

Chapleo, C. (2010). What defines “successful” university brands? International Journal of Public Sector Management, 23(2), 169–183. https://doi.org/10.1108/09513551011022519.

Collins, F. L., & Park, G.-S. (2016). Ranking and the multiplication of reputation: reflections from the frontier of globalizing higher education. Higher Education, 72(1), 115–129. https://doi.org/10.1007/s10734-015-9941-3.

Delgado-Marquez, B. L., Escudero-Torres, M. A., & Hurtado-Torres, N. E. (2013). Being highly internationalised strengthens your reputation: an empirical investigation of top higher education institutions. Higher Education, 66(5), 619–633. https://doi.org/10.1007/s10734-013-9626-8.

DFG. (n.d.). Excellence Initiative (2005–2017/19). Accessed (July 23, 2019): https://www.dfg.de/en/research_funding/programmes/excellence_initiative/index.html.

Dill, D. D., & Soo, M. (2005). Academic quality, league tables, and public policy: a cross-national analysis of university ranking systems. Higher Education, 49(4), 495–533. https://doi.org/10.1007/s10734-004-1746-8.

Gargouri, Y., Hajjem, C., Lariviere, V., Gingras, Y., Carr, L., Brody, T., & Harnad, S. (2010). Self-selected or mandated, open access increases citation impact for higher quality research. PLoS One, 5(10), e13636. https://doi.org/10.1371/journal.pone.0013636.

Hair, J. F., Black, W. C., Babin, B. J., & Anderson, R. E. (2010). Multivariate data analysis (7th ed.). Upper Saddle River, NJ: Pearson Prentice Hall.

Harvey, L. (2008). Rankings of higher education institutions: a critical review. Quality in Higher Education, 14(3), 187–207. https://doi.org/10.1080/13538320802507711.

Hazelkorn, E. (2015). Rankings and the reshaping of higher education: The battle for world-class excellence (2nd ed.). London: Palgrave Macmillan.

Heffernan, T. A., & Heffernan, A. (2018). Language games: university responses to ranking metrics. Higher Education Quarterly, 72(1), 29–39. https://doi.org/10.1111/hequ.12139.

Hendel, D. D., & Stolz, I. (2008). A comparative analysis of higher education ranking systems in Europe. Tertiary Education and Management, 14(3), 173–189. https://doi.org/10.1080/13583880802228158.

Horta, H., & Santos, J. M. (2016). The impact of publishing during PhD studies on career research publication, visibility, and collaborations. Research in Higher Education, 57(1), 28–50. https://doi.org/10.1007/s11162-015-9380-0.

Jajo, N. K., & Harrison, J. (2014). World university ranking systems: an alternative approach using partial least squares path modelling. Journal of Higher Education Policy and Management, 36(5), 471–482. https://doi.org/10.1080/1360080X.2014.936090.

Jarwal, S. D., Brion, A. M., & King, M. L. (2009). Measuring research quality using the journal impact factor, citations and ‘ranked journals’: blunt instruments or inspired metrics? Journal of Higher Education Policy and Management, 31(4), 289–300. https://doi.org/10.1080/13600800903191930.

Kwiek, M. (2015). The internationalization of research in Europe: a quantitative study of 11 national systems from a micro-level perspective. Journal of Studies in International Education, 19(4), 341–359. https://doi.org/10.1177/1028315315572898.

Lim, M. A. (2018). The building of weak expertise: the work of global university rankers. Higher Education, 75(3), 415–430. https://doi.org/10.1007/s10734-017-0147-8.

Liu, Z., Moshi, G. L., & Awuor, C. M. (2019). Sustainability and indicators of newly formed world-class universities (NFWCUs) between 2010 and 2018: empirical analysis from the rankings of ARWU, QSWUR and THEWUR. Sustainability, 11(10), 1–29. https://doi.org/10.3390/su11102745.

Luque-Martínez, T., & Faraoni, N. (2019). Meta-ranking to position world universities. Studies in Higher Education (Online First: 1-15). https://doi.org/10.1080/03075079.2018.1564260.

Macfarlane, B. (2007). Defining and rewarding academic citizenship: the implications for university promotions policy. Journal of Higher Education Policy and Management, 29(3), 261–273. https://doi.org/10.1080/13600800701457863.

McGrail, M. R., Rickard, C. M., & Jones, R. (2006). Publish or perish: a systematic review of interventions to increase academic publication rates. Higher Education Research & Development, 25(1), 19–35. https://doi.org/10.1080/07294360500453053.

PREC Edu Service. (n.d.). China’s New World-Class University Policy. Accessed (July 22, 2019): https://www.studyinchina.com.my/web/page/chinas-new-world-class-university-policy/.

QS. (2018). Methodology, QS World University Rankings. Accessed (January 13, 2018): https://www.topuniversities.com/qs-world-university-rankings/methodology.

Reuters. (2018a). Methodology: Top 100 Innovative Universities – 2018. Accessed (July 13, 2019): https://www.reuters.com/innovative-universities-2018/methodology.

Reuters. (2018b). Reuters Top 100: The World's Most Innovative Universities - 2018. Accessed (July 13, 2019): https://www.reuters.com/article/us-amers-reuters-ranking-innovative-univ/reuters-top-100-the-worlds-most-innovative-universities-2018-idUSKCN1ML0AZ.

Salmi, J. (2009). The challenge of establishing world-class universities. Washington, DC: The World Bank.

Shattock, M. (2017). The ‘world class’ university and international ranking systems: What are the policy implications for governments and institutions? Policy Reviews in Higher Education, 1(1), 4–21. https://doi.org/10.1080/23322969.2016.1236669.

Shin, J. C. (2013). The world-class university: Concept and policy initiatives. In J. C. Shin & B. M. Kehm (Eds.), Institutionalization of world-class university in global competition (pp. 17–32). Dordrecht: Springer.

Shin, J. C., & Toutkoushian, R. K. (2011). The past, present, and future of university rankings. In J. C. Shin, R. K. Toutkoushian, & U. Teichler (Eds.), University rankings: Theoretical basis, methodology and impacts on global higher education (pp. 1–16). Dordrecht: Springer.

Shin, J. C., Toutkoushian, R. K., & Teichler, U. (2011). University rankings: Theoretical basis, methodology and impacts on global higher education. Dordrecht: Springer.

Soh, K. (2015). What the overall doesn’t tell about world university rankings: examples from ARWU, QSWUR, and THEWUR in 2013. Journal of Higher Education Policy and Management, 37(3), 295–307. https://doi.org/10.1080/1360080X.2015.1035523.

Soh, K. (2017). The seven deadly sins of world university ranking: a summary from several papers. Journal of Higher Education Policy and Management, 39(1), 104–115. https://doi.org/10.1080/1360080X.2016.1254431.

Spence, C. (2019). ‘Judgement’ versus ‘metrics’ in higher education management. Higher Education, 77(5), 761–775. https://doi.org/10.1007/s10734-018-0300-z.

Tapper, T., & Filippakou, O. (2009). The world-class league tables and the sustaining of international reputations in higher education. Journal of Higher Education Policy and Management, 31(1), 55–66. https://doi.org/10.1080/13600800802383091.

Tatsioni, A., Vavva, E., & Ioannidis, J. P. A. (2010). Sources of funding for Nobel Prize-winning work: public or private? The FASEB Journal, 24(5), 1335–1339. https://doi.org/10.1096/fj.09-148239.

Tekbıyık, A. (2014). İlişkisel tarama yöntemi [Correlational survey model]. In M. Metin (Ed.), Kuramdan uygulamaya eğitimde bilimsel araştırma yöntemleri [from theory to practices, research methods in education] (pp. 99–114). Ankara: Pegem.

THE. (2018a). World University Rankings 2018 Methodology. Accessed (January 14, 2018): https://www.timeshighereducation.com/world-university-rankings/methodology-world-university-rankings-2018.

THE. (2018b). THE World Reputation Rankings 2018: Methodology explained. Accessed (July 13, 2019): https://www.timeshighereducation.com/world-university-rankings/world-reputation-rankings-2018-methodology.

THE. (2019a). World University Rankings 2019. Accessed (July 13, 2019): https://www.timeshighereducation.com/world-university-rankings/2019/world-ranking#!/page/0/length/25/sort_by/rank/sort_order/asc/cols/stats.

THE. (2019b). THE University Impact Rankings 2019: Methodology. Accessed (July 13, 2019): https://www.timeshighereducation.com/world-university-rankings/methodology-impact-rankings-2019.

TÜBİTAK. (2019). 2018 Entrepreneurial and Innovative University Index, Turkey. Accessed (July 13, 2019): http://www.tubitak.gov.tr/sites/default/files/289/gyue_ilk50.pdf.

URAP. (2018). World Ranking Indicators. Accessed (January 15, 2018): http://www.urapcenter.org/2018/indicator.php?q=1.

Uslu, B. (2017a). Appearing in international rankings: How do academics from high-ranked universities comment? The 2nd International Higher Education Studies Conference (IHEC 2017), October 12–14. Turkey: Antalya.

Uslu, B. (2017b). The influence of organisational features in high-ranked universities: the case of Australia. Journal of Higher Education Policy and Management, 39(5), 471–486. https://doi.org/10.1080/1360080X.2017.1354755.

Uslu, B. (2018a). Dünya Üniversiteler Sıralaması: Genişletilen gösterge setine göre sıralamada oluşan farklılıklar [The World University Rankings: Differentiations in rankings according to the expanded indicator set]. Yükseköğretim ve Bilim Dergisi, 8(3), 457–470. https://doi.org/10.5961/jhes.2018.287.

Uslu, B. (2018b). The components of communication systems in universities: Their influence on academic work life. Tertiary Education and Management, 24(1), 34–48. https://doi.org/10.1080/13583883.2017.1359662.

Wang, J., & Shapira, P. (2011). Funding acknowledgement analysis: An enhanced tool to investigate research sponsorship impacts: The case of nanotechnology. Scientometrics, 87(3), 563–586. https://doi.org/10.1007/s11192-011-0362-5.

YÖK. (2017). Araştırma üniversitesi olarak belirlenen üniversiteler Cumhurbaşkanı Erdoğan tarafından açıklandı [The universities assigned as research university were announced by President Erdoğan]. Accessed (July 22, 2019): https://www.yok.gov.tr/Sayfalar/Haberler/arastirma-universitesi-olarak-belirlenen-universiteler-cumhurbaskani-tarafindan-aciklandi.aspx.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Cite this article

Uslu, B. A path for ranking success: what does the expanded indicator-set of international university rankings suggest? High Educ 80, 949–972 (2020). https://doi.org/10.1007/s10734-020-00527-0

DOI: https://doi.org/10.1007/s10734-020-00527-0