Abstract

In this paper we present a heuristic approach to two-stage mixed-integer linear stochastic programming models with continuous second stage variables. A common solution approach for these models is Benders decomposition, in which a sequence of (possibly infeasible) solutions is generated, until an optimal solution is eventually found and the method terminates. As convergence may require a large amount of computing time for hard instances, the method may be unsatisfactory from a heuristic point of view. Proximity search is a recently-proposed heuristic paradigm in which the problem at hand is modified and iteratively solved with the aim of producing a sequence of improving feasible solutions. As such, proximity search and Benders decomposition naturally complement each other, in particular when the emphasis is on seeking high-quality, but not necessarily optimal, solutions. In this paper, we investigate the use of proximity search as a tactical tool to drive Benders decomposition, and computationally evaluate its performance as a heuristic on instances of different stochastic programming problems.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Stochastic programming (SP) is an important framework for dealing with uncertainty in optimization. SP models tend to be huge, and their solution typically requires a decomposition method to break the model into manageable parts. Benders decomposition (Benders 1962) is one of the most widely used approaches for SP. In Benders decomposition, a sequence of solutions to a “master” problem is generated, each of which is checked for feasibility by solving one or more subproblems. Without applying some heuristic to recover feasibility, the solution to the master problem, typically, cannot be used to update the incumbent. Even in the case that the solution to the master is feasible, its actual cost must be computed a-posteriori by solving the subproblems, which may reveal that the solution is worse than the incumbent. This iterative process continues until a feasible solution to the master problem is found for which the estimated cost of each subproblem is correct, i.e., the solution is optimal, and the method terminates. In other words, Benders decomposition primarily acts as a “dual algorithm” that rarely, and incidentally, improves the incumbent solution. As a consequence, because convergence may require a large amount of computing time for hard instances, the method may be unsatisfactory from a heuristic point of view.

Proximity search (PS) is a general approach focused on improving a given feasible “reference solution”, and seeking to quickly produce a sequence of improving feasible solutions (Fischetti and Monaci 2014). The approach is related to large-neighborhood search (LNS) heuristics (Shaw 1998) that explore a neighborhood defined by constraints restricting the search space, in particular to Local Branching (Fischetti and Lodi 2003), which adds a constraint that eliminates all solutions that are not “sufficiently close” to a reference solution.

The generic Mixed-Integer Linear Program (MILP) of interest has the form

where A is an \(m\times n\) input matrix, b and c are input vectors of dimension m and n, respectively, and the variable index set \(\mathcal {N}:=\{ 1,\dots , n \}\) is partitioned into \({\mathcal {B}}, {\mathcal {G}}\), and \({\mathcal {C}}\), with \(\mathcal {B}\) the index set of the 0–1 variables, \(\mathcal {G}\) the index set of general integer variables, and \(\mathcal {C}\) the index set of continuous variables. Removing the integrality requirement on variables with indices in \(\mathcal {B}\cup \mathcal {G}\) gives the LP relaxation.

PS works in stages, each aimed at producing an improved feasible solution. In each stage, a reference solution \(\widetilde{x}\) is given, and one seeks to improve it. To this end, an explicit cutoff constraint

is added to the original MILP, where \(\theta >0\) is a given tolerance that specifies the minimum improvement required. The objective function of the problem can then be replaced by the proximity function

to be minimized. One then applies the MILP solver, as a black box, to the modified problem in the hope of finding a nearby solution better than \(\widetilde{x}\) (see Algorithm 1 for more details).

A powerful variant of the above scheme, called “proximity search with incumbent”, is based on the idea of providing \(\widetilde{x}\) to the MILP solver as a starting solution. To avoid \(\widetilde{x}\) being rejected because of the cutoff constraint (1), the latter is weakened to its “soft” version

while minimizing \(\Delta (x,\widetilde{x}) + M z\) instead of just \(\Delta (x,\widetilde{x})\), where \(z \ge 0\) is a continuous variable, \(M\gg 0\) is a large penalty and \(\Delta (x, \widetilde{x})\) is defined by (2).

Computational experience confirms that PS is quite successful (at least, on some classes of problems), due to the fact that the proximity function improves the “relaxation grip” of the model, meaning that the solutions of the LP relaxation tend to have a large number of integer components, thus improving the success rate of the heuristics embedded within the MILP solver. This is true, in particular, for the MILP models where feasibility is enforced dynamically through cut generation. In fact, the solutions to the LP-relaxation tend to be similar to the reference solution, thus most feasibility constraints are likely to be satisfied without the need to explicitly impose them.

PS has a “primal nature”, meaning that it proceeds from a feasible solution to a “nearby” feasible solution of improved value. As such, PS and Benders decomposition naturally complement each other, in particular when the emphasis is on seeking heuristic solutions. In this paper, we investigate the use of PS as a tactical tool to drive Benders decomposition, and computationally evaluate its performance as a heuristic on instances of different stochastic programming problems.

The main contribution of our work is the development of a method that is able to produce good-quality feasible solutions to large-scale instances of (certain types of) stochastic programming problems, for which standard MILP solvers are unable to do so either because the instances are too big to load into memory or the time to solve even the root node relaxation is prohibitive.

The remainder of the paper is organized as follows. In Sect. 2, we introduce Proximity Benders, a variant of proximity search that is specifically designed to handle MILPs arising in stochastic programming. In Sect. 3, we present a heuristic that can enhance the performance of Proximity Benders. In Sect. 4, we discuss the results of a set of computational experiments that demonstrate the efficacy of Proximity Benders on instances of three stochastic programming problems. We conclude, in Sect. 5, with some final remarks and future research directions.

2 Proximity Benders

In what follows, we will concentrate on a MILP of the form:

where \(Y = P_0 \cap \{0,1\}^m\) and each set \(P_k\) (\(k=0,\ldots ,K\)) is a polyhedron. In other words, we assume that problem P has a block structure allowing for a decomposition into a master problem that involves binary variables y along with K continuous variables \(\mu _k\), plus K subproblems arising after fixing y, each being a Linear Program (LP) on the \((x_k,\mu _k)\) variables. In addition, we assume that it is not hard to determine a feasible (sub-optimal) solution of P. MILPs of this type frequently arise in stochastic programming, and are typically attacked by Benders decomposition.

We next describe our PS heuristic scheme for problem P. Given a feasible solution \((\widetilde{y}, \widetilde{x}, \widetilde{\mu })\), PS adds the cutoff constraint

to P, where \(U = c_0^T \widetilde{y} + \sum _{k=1}^K \widetilde{\mu }_k\) and \(\theta >0\) is a given tolerance, and replaces the objective function with the Hamming distance

with respect to \(\widetilde{y}\). In our implementation, we used the “proximity search with incumbent” variant, see (3), where the cutoff constraint is imposed in a soft way by introducing a new continuous variable \(z_0\ge 0\) with a large cost \(M_0\gg 0\) in the objective function (8), along with the constraint

At any stage of the algorithm, we have a collection of previously-generated Benders cuts involving the master variables, which we enforce by requiring that \((y,\mu ) \in \Gamma \), where \(\Gamma \) is the polyhedron defined by the current collection of cuts (initially, \(\Gamma = \mathfrak {R}^{m+K}\)). Therefore, the master problem has the form,

and can be solved by any black-box MILP solver.

According to classical Benders’ decomposition, given an optimal solution of the master problem, say \((\overline{y}, \overline{\mu })\), one solves each subproblem,

independently to possibly derive violated cuts to be added to the master. In our implementation, we followed the cut-generation recipe described in Fischetti et al. (2010), i.e., for each k, we introduce two additional nonnegative variables \(z_k\) and \(\nu _k\) and rewrite the corresponding subproblem as

where \(M_k\gg 0\) is a sufficiently large penalty used to drive \(z_k\) as close to zero as possible and \(\mathbf 1 \) is the vector of all ones. If the optimal solution value of \(\widetilde{P}_k(\overline{y}, \overline{\mu }_k)\) is strictly positive, a new cut can be derived and added to the master. Specifically, let \((x_k^*,z_k^*,\nu _k^*)\) denote the optimal solution found for the k-th subproblem \(\widetilde{P}_k(\overline{y}, \overline{\mu }_k)\). If \(z^*_k > 0\), problem \(P_k(\overline{y}, \overline{\mu }_k)\) is infeasible, and one can derive a Benders’ feasibility cut of the form

to add to the master. Otherwise, i.e., \(z^*_k = 0\) and \(\nu ^*_k > 0\), a Benders’ optimality cut of the form

can be added to the master. In both cases, the cut may be derived using the optimal solution of the master and exploiting strong duality for a linear program. The reader is referred to Fischetti et al. (2010) for further details. In the remainder, we will sometimes refer to subproblem \(\widetilde{P}_k(\overline{y}, \overline{\mu }_k)\) simply as subproblem k.

Obviously, if \(z^*_k=0\) for all k and \(c_0^T \overline{y} + \sum _{k=1}^K c_k^T x^*_k < U\), then one can update the incumbent \((\widetilde{y}, \widetilde{x}, \widetilde{\mu } )\) and iterate proximity search starting from this new solution. A more formal description of Proximity Benders is given in Algorithm 2.

A sequence of passes through the “while” loop in which the incumbent \(\widetilde{y}\) is not updated is called an outer iteration, while an inner iteration is a single pass through the “while” loop where the master problem and the K subproblems are solved.

The idea of mixing Benders decomposition with local search is not new. In particular, Rei et al. (2009) use local branching to produce a pool of (possibly infeasible) integer solutions of the master problem, from which diversified Benders cuts can be derived. However, our approach reverses the role of local search and Benders decomposition: instead of using local search inside a Benders method, we apply a black-box Benders solver within an external local search scheme, i.e., PS. In doing so, we modify the objective function of the master problem, thus drastically changing the sequence of points provided by the various masters, as well as their associated Benders cuts.

3 A repair heuristic

Due to its particular structure, finding a heuristic solution to problem P involves deciding the values of the y variables, as the x and \(\mu \) variables can easily be computed by solving a sequence of LP subproblems, provided of course that these subproblems are feasible.

We say that \(\overline{y}\) is feasible (for problem P) if fixing \(y=\overline{y}\) does not produce an infeasible subproblem. In many applications, the feasible y’s satisfy the following monotonicity property: let \(\overline{y}\in \{0,1\}^m\) and \(y'\in \{0,1\}^m\) with \(y' \ge \overline{y}\); if \(\overline{y}\) is feasible, then \(y'\) is also feasible. This implies, in particular, that \(\overline{y}=(1,\ldots ,1)\) is always a feasible (though likely very bad) solution.

Assuming monotonicity, one can easily derive a repair heuristic that starts with an infeasible \(\overline{y}\), and iteratively increases some of its components until a feasible \(y'\) is found. Of course, the quality of the final solution depends on how cleverly the components to be increased are chosen. In this respect, the following repair heuristic seems a reasonable option, and is applied in an inner iteration of Algorithm 2 to enforce feasibility of the current \(\overline{y}\) when only a few subproblems k have \(z^*_k > 0\).

Given the current infeasible solution \(\overline{y}\) we consider each subproblem \(\overline{k}\) with \(z^*_{\overline{k}} > 0\), in turn, and solve the following auxiliary problem

obtained from problem P by eliminating all variables and constraints associated with subproblems \(k \not = \overline{k}\) and imposing the additional constraint \(y \ge \overline{y}\). In this way, we model the effect of changing some variables \(y_j\) with \(\overline{y}_j=0\) to retrieve feasibility for the current subproblem. The resulting problem is then solved heuristically with a certain time limit imposed (or by simply rounding up the root node LP-solution) to quickly get an integer solution \(y'\). Then we set \(\overline{y}= y'\) and iterate on the next subproblem k with \(z^*_k > 0\). A pseudo-code of the resulting procedure is given in Algorithm 3.

In some cases, \(1-y\) (instead of y) satisfies the monotonicity property, and the repair heuristic can still be applied by replacing \(y \ge \overline{y}\) with \(y \le \overline{y}\) in (15). This happens, e.g., in the stochastic network interdiction problem described in Sect. 4.3.

4 Computational experiments

The Proximity Benders algorithm presented in Sect. 2, denoted as ProxyBenders in the following, has been implemented in C using IBM-ILOG Cplex 12.6.1 as the MILP solver. We ran the algorithm on different benchmark instances in order to test its effectiveness, namely:

-

instances of a stochastic capacitated facility location problem described, for example, by Bodur et al. (2014);

-

instances of a stochastic network interdiction problems described, for example, by Bodur et al. (2014); and

-

instances of a stochastic fixed charge multi-commodity network design problem derived from those introduced by Crainic et al. (2014).

To evaluate the performance of Proximity Benders, we also considered alternative approaches and ran, on the same benchmark instances, the following algorithms:

-

IBM-ILOG Cplex 12.6.1 in its default settings (Cplex in the following) on the MILP associated with an instance; and

-

A natural implementation of a Benders decomposition algorithm (Benders in what follows) in which the proximity paradigm is not exploited.

In our implementation, we set \(M_k=100,000\) to enforce feasibility in the subproblems. The minimum improvement required in the cutoff constraint of ProxyBenders is set as \(\theta = 10^{-5} z_0\), where \(z_0\) is the incumbent solution value. Thus, we use a non-aggressive policy and we let the value of \(\theta \) decrease during the execution of the algorithm. Each algorithm was run in single-thread mode with a time limit of 1 h per instance on an Intel Xeon E3-1220V2 running at 3.10 GHz, with 16GB of RAM.

ProxyBenders requires an initial feasible solution. A natural idea is to use the repair heuristic starting from infeasible solution \(\overline{y} = 0\). This produces reasonable results for the stochastic facility location and the stochastic fixed-charge multi-commodity network design problems, but not for the stochastic network interdiction problem, as the repair heuristic is unable to improve it. To have a more meaningful initial solution, in all cases we also run algorithm Benders and stop it when the incumbent has been updated 10 times. The best of the two feasible solutions produced is provided as initial feasible solution to all algorithms (for all instances in our testbed).

4.1 Metrics

To compare the performance of the different heuristics, we use an indicator recently proposed in Achterberg et al. (2012) and Berthold (2013), aimed at measuring the trade-off between the computational effort required to produce a solution and the quality of the solution itself. Specifically, let \(\widetilde{z}_{opt}\) denote the optimal solution value for a given problem, and z(t) be the value of the best heuristic solution found at a time t. Then, a primal gap function p can be computed as

where \(\gamma (\cdot ) \in [0,1]\) is the primal gap, defined as

Finally, the primal integral (PI) of a run until time \(t_{\max }\) is defined as

and is actually used to measure the quality of primal heuristics: the smaller \(P(t_{\max })\), the better the expected quality of the incumbent solution if we stopped computation at an arbitrary time before \(t_{\max }\).

We also count the number of instances for which an algorithm produced the best solution at the time limit (#w for number of wins), the number of instances for which a solution is found that improves the initial feasible solution (#i), and the total number of improving solutions found (#s).

4.2 Stochastic capacitated facility location

Our first benchmark includes instances of a stochastic variant of the capacitated facility location problem (CAP), as described by Louveaux (1986). In this variant, first-stage variables determine the set of facilities to be opened before observing the actual realizations of customer demands. The second-stage variables determine the fraction of customer demands allocated to the open facilities. Denoting by I the set of potential facilities, by J the set of customers, and by K the set of scenarios, the problem can be formulated as follows (see Bodur et al. 2014 for further details)

where \(f_i\) and \(s_i\) represent the fixed cost and capacity, respectively, of facility \(i \in I\), \(\lambda _j^k\) is the realized demand of customer \(j \in J\) in scenario \(k \in K\), and \(q_{ij}\) denotes the cost of sending a unit of demand from facility \(i \in I\) to customer \(j \in J\).

We used all the CAP instances considered in Bodur et al. (2014), obtained using networks from the OR-Library (Beasley 1990) and randomly generating the customers’ demands. In particular, we have 4 classes each with 4 instances with 250 scenarios and 4 classes each with 4 instances with 500 scenarios.

Table 1 reports, for each algorithm and for different time limits (namely, 100 s, 600 s and 1 h), the outcome of our experiments for each instance class. Instances are grouped as in Bodur et al. (2014) according to (K, CAP #), thus each row refers to 4 instances. Note that for all these instances, the optimal solution value is known, allowing for an exact computation of the primal integral, see (16).

The results in Table 1 show that ProxyBenders performs better than Cplex for small time limits, but that it loses its edge when more computation time is available. This is due to the fact that these instances are not extremely hard and that all but two of them can be solved to optimality by Cplex within the 1-h time limit. On this benchmark, Benders is outperformed by the other two algorithms, though the presence of redundant constraint (17) in the model ensures that each subproblem is feasible for any first stage solution, allowing Benders (and ProxyBenders) to find a feasible solution at each iteration. This fact typically increases the chances of finding a high-quality solution, and, in fact, deactivates the repair heuristic described in Sect. 3.

The results above are encouraging and demonstrate the potential of Proximity Benders, but the instances are inadequate to fully reveal the power of Proximity Benders. As mentioned, most of the instances can be solved to optimality by Cplex in less than 1 h. That is not the setting for which a decomposition approach is designed. Proximity Benders is designed to be used in settings where the instances are large, difficult, and cannot be solved in a reasonable amount of time by providing the MILP formulation to a solver, indeed for which solving the root relaxation may already be computationally prohibitive. In the next two subsections, we present results on instances that are (somewhat) more appropriate to show the benefits of a decomposition approach, and of Proximity Benders in particular.

4.3 Stochastic network interdiction

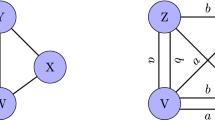

Our second set of benchmark instances among those described by Bodur et al. (2014) includes instances of the stochastic network interdiction problem (SNIP) described by Pan and Morton (2008). In SNIP one is given a directed graph \(G=(N,A)\) and a subset of candidate arcs D on which sensors can be installed, so as to maximize the probability of catching an intruder that traverses some path in the graph. First-stage decisions concern the installation of the sensors. In the second stage, a scenario corresponds to an intruder selecting a path that has minimum probability of being detected when traversing the path from the intruder’s origin to his destination. Denoting by K the set of scenarios, the problem can be formulated as follows

where \(p_k\) is the probability of scenario k, which corresponds to a path from origin \(s^k\) to destination \(t^k\), \(c_{ij}\) is the cost of installing a sensor on arc \((i,j) \in D\), b is the available budget, and \(q_{ij}\) and \(r_{ij}\) denote the probability of failing to detect the intruder with and without a sensor on each arc (i, j), respectively. Finally, coefficients \(\psi ^k_j\) represent the value of the maximum-reliability path from j to \(t^k\) when no sensors are placed and can be determined by shortest-path computation. In this case too, the reader is referred to Bodur et al. (2014) for a complete description of objective function and constraints.

We focus our experiments on the more difficult instances, which are obtained by drawing \(r_{ij}\) uniform randomly from [0.3, 0.6] and setting \(q_{ij} = 0.1 r_{ij}\) (Class 3 instances) and \(q_{ij} = 0\) (Class 4 instances) for all \((i,j) \in A\), respectively. The available budget ranges from 30 to 90. Table 2 gives the corresponding results.

For the instances in this testbed, Benders tends to perform better than Cplex as it takes advantage of the decomposition, which is crucial for these large instances. However, results show that the proximity paradigm produces even better results: ProxyBenders has a performance similar to Cplex and Benders for the smallest time limit, while it outperforms the other two methods (for both metrics) for larger time limits. The reason why ProxyBenders performs so well on these instances is that it continues to find improving solutions during the 1 h execution, whereas Cplex does not and Benders cannot.

In Fig. 1, we show the best known solution value for each of the heuristics over time for one of the instances (namely, the fourth instance of Class 4 with a budget of 80). We see that all algorithms start with the same initial solution of value 0.199, and ProxyBenders finds many improving feasible solutions during its execution; the final one after about 1600 s has a value equal to 0.155. Cplex, on the other hand, finds the first improving feasible solution of value 0.187 after 1200 s and terminates with a solution of value 0.178 found after about 2800 s. As to Benders, it finds about ten improving solutions: after 1000 s it computes a solution of value 0.158 which is slightly improved to 0.157 after 2200 s.

4.4 Stochastic fixed-charge multi-commodity network design

Finally, we consider large instances of a stochastic fixed charge multi-commodity network design problem. Given a directed network \(G=(N,A)\) and a set K of commodities, the deterministic problem seeks a minimum cost set of arcs that allows the required amount of flow for each commodity to be send from its origin to its destination (i.e., to satisfy demand). In the stochastic version of the problem, first-stage binary variables determine the network design, i.e., the set of arcs to be installed, whereas second-stage continuous variables determine the flow of a commodity along an arc for a given scenario (i.e., for a given demand realization). Denoting by S the set of scenarios, the model reads as follows

where \(f_{ij}\) and \(u_{ij}\) denote the fixed cost and capacity, respectively, of arc \((i,j) \in A\), \(c_{ij}^k\) denotes the cost of sending one unit of commodity \(k\in K\) along arc \((i,j)\in A\), \(d_i^{ks}\) denotes the net flow (i.e., the difference between inflow and outflow) at node \(i \in N\) for commodity \(k \in K\) in scenario \(s \in S\), and \(p_s\) denotes the probability of scenario \(s \in S\). Further details about the model can be found in Crainic et al. (2014).

We consider seven of the instance classes (namely, instance classes 4 through 10) used in Crainic et al. (2014), which, in turn, were derived from the set of R-instances of Crainic et al. (2011). For each instance class, we consider five networks (namely, networks 1, 3, 5, 7, and 9) with an increasing ratio of fixed to variable costs and total demand to capacity. For each of the 35 networks, Crainic et al. (2014) generated 5 instances with 64 scenarios. In order to have instances with a larger number of scenarios, we generate scenarios as follows. For each commodity and for each network, we determine the minimum and maximum demand over all scenarios considered in Crainic et al. (2014). Then, we generate the demand for the commodity in each of the |S| scenarios by drawing uniform randomly from the interval determined by the minimum and maximum demand.

Table 3 provides results for instances with 2000 scenarios. The table reports the same information as Tables 1 and 2, but each line now refers to a specific instance. Because for many instances the optimal solution value is not known, the primal integral has been computed with respect to the best known solution value.

For two instances, none of the heuristics was able to improve on the initial feasible solution in 1 h. For these instances all entries in the table are set to zero. For twenty instances, Cplex was unable to even solve the LP relaxation in 1 h. These instances are marked by a star.

We see that Benders performs poorly on these instances, as it only improves the initial feasible solution for 7 instances out of 35. On the other hand, ProxyBenders is reasonably effective, especially for the largest instance classes (namely, instance classes 8, 9 and 10). When restricting ourselves to these instance classes, we see that Cplex finds improving solutions for only 1 of the 15 instances whereas ProxyBenders finds improving solutions for 10 instances. Although ProxyBenders is not guaranteed to find a feasible solution at each iteration, using a large value \(M_k\) in problem \(\widetilde{P}_k(\overline{y}, \overline{\mu }_k)\) enforces feasibility in many cases. And, whenever this is not the case, the repair heuristic recovers feasibility, which may or may not provide an improving solution.

The results clearly exhibit the expected pattern. When given enough time, Cplex will ultimately overtake ProxyBenders, in terms of primal integral, number of instances for which it finds the best solution, and the number of instances for which it finds an improving solution. However, for this testbed too, we see that the proximity paradigm has a positive effect on the performance of a decomposition algorithm. Indeed, Benders never ends up with the best solution after 100 s, whereas this happens for 6 instances using ProxyBenders. Allowing a 10-min time limit, Benders improves the solution only for 4 instances, and for 5 instance with a 1-h time lime. For these time limes, ProxyBenders improves 14 and 21 solutions, respectively.

These result reinforce that Proximity Benders should be the method of choice in situations where high-quality solutions to very large instances of stochastic programming problems need to be found quickly.

5 Final remarks

The computational results presented in this paper provide a proof-of-concept demonstration of the potential of Proximity Benders. The computational results suggest that Proximity Benders should be the method of choice in situations where high-quality solutions to very large instances of certain types of stochastic programming problems need to be found quickly. This is true, in particular, for situations in which solving the LP relaxation is already computationally prohibitive. Proximity Benders naturally and easily parallelizes, which will further amplify its advantages and benefits.

We next mention just a few possible directions for future research. Investigating the sensitivity of Proximity Benders to the quality of the initial feasible solution is of interest. We have used a non-aggressive policy for setting the minimum improvement required in the cutoff constraint of Proximity Benders. Investigating the performance of Proximity Benders for different (more aggressive) schemes for setting and updating the minimum improvement required is of interest. Exploring whether the efficiency of Proximity Benders can be improved by incorporating more sophisticated cut management schemes or by not solving all subproblems in each inner iteration is also of interest. There are similarities between Proximity Benders and the Feasibility Pump for finding feasible solutions to MILP (Fischetti et al. 2005). The use of randomization is critical to the performance of the feasibility pump and, therefore, randomization schemes for Proximity Benders, e.g., randomizing \(\tilde{y}\), are worth studying.

Finally, we observe that our proof-of-concept implementation did not target a specific problem, and we anticipate that tailored implementations for specific problems would likely be far more efficient. Thus, we expect that Proximity Benders can lead to quite effective ad-hoc heuristics for very large stochastic programming applications.

References

Achterberg, T., Berthold, T., Hendel, G.: Rounding and propagation heuristics for mixed integer programming. In: Operation Research Proceedings 2011, pp. 71–76. Springer, Berlin (2012)

Beasley, J.: Or-library: Distributing test problems by electronic mail. J. Oper. Res. Soc. 41, 1069–1072 (1990)

Benders, J.: Partitioning procedures for solving mixed-variables programming problems. Numer. Math. 4(1), 238–252 (1962)

Berthold, T.: Measuring the impact of primal heuristics. Oper. Res. Lett. 41(6), 611–614 (2013)

Bodur, M., Dash, S., Günlück, O., Luedtke, J.: Strengthened Benders cuts for stochastic integer programs with continuous recourse. Technical Report. Optimization Online 2014-03-4263 (2014)

Crainic, T., Fu, X., Gendreau, M., Rei, W., Wallace, S.: Progressive hedging-based metaheuristics for stochastic network design. Networks 58(2), 114–124 (2011)

Crainic, T., Hewitt, M., Rei, W.: Partial decomposition strategies for two-stage stochastic integer programs. Technical Report 13, CIRRELT (2014)

Fischetti, M., Glover, F., Lodi, A.: The feasibility pump. Math. Program. 104(1), 91–104 (2005)

Fischetti, M., Lodi, A.: Local branching. Math. Program. 98, 23–47 (2003)

Fischetti, M., Monaci, M.: Proximity search for 0–1 mixed-integer convex programming. J. Heuristics 20(6), 709–731 (2014)

Fischetti, M., Salvagnin, D., Zanette, A.: A note on the selection of Benders cuts. Math. Program. 124(1–2), 175–182 (2010)

Louveaux, L.: Discrete stochastic location models. Ann. Oper. Res. 6, 23–34 (1986)

Pan, F., Morton, D.: Minimizing a stochastic maximum-reliability path. Networks 52(3), 111–119 (2008)

Rei, W., Cordeau, J.-F., Gendreau, M., Soriano, P.: Accelerating Benders decomposition by local branching. INFORMS J. Comput. 21(2), 333–345 (2009)

Shaw, P.: Using constraint programming and local search methods to solve vehicle routing problems. In: Maher M., Puget J.-F. (eds.) Principles and Practice of Constraint Programming CP98. Lecture Notes in Computer Science, vol. 1520, pp. 417–431. Springer, Berlin, Heidelberg (1998)

Acknowledgments

This research of Fischetti and Monaci was supported by the University of Padova (Progetto di Ateneo “Exploiting randomness in Mixed Integer Linear Programming”), and by MiUR, Italy (PRIN project “Mixed-Integer Nonlinear Optimization: Approaches and Applications”). We thank Merve Bodur and Mike Hewitt for their support and for providing the data used in the computational analysis. Thanks are also due to three anonymous referees for their helpful comments leading to an improved presentation.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Boland, N., Fischetti, M., Monaci, M. et al. Proximity Benders: a decomposition heuristic for stochastic programs. J Heuristics 22, 181–198 (2016). https://doi.org/10.1007/s10732-015-9306-1

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10732-015-9306-1