Abstract

Healthcare systems face resource scarcity, so they must be managed efficiently. It is commonly assumed that the more resources a hospital consumes, the more it produces, although this does not always hold in practice. Congestion on inputs is an economic concept that describes this situation: it is defined as a decrease in outputs caused by the overuse of some resources. The problem is aggravated when the high severity of inpatients requires strict and effective resource management, as happens in Intensive Care Units (ICUs). The present paper employs a set of nonparametric models to evaluate congestion levels, sources and determinants in Portuguese ICUs. Nonparametric models based on Data Envelopment Analysis are employed to assess both radial and non-radial (in)efficiency levels and sources. Environment adjustment models and bootstrapping are used to correct possible bias, to remove the deterministic nature of nonparametric models and to provide a statistical background for the results. Considerable inefficiency and congestion levels were identified, as well as the congestion determinants, including the ICU specialty and complexity, the hospital differentiation degree and population demography. Staff costs and length of stay are the main sources of (weak) congestion in ICUs. ICU managers should therefore make efforts in resource allocation to prevent the congestion effect. In general, those efforts should focus on staff costs and hospital days, although these congestion sources may vary across hospitals and ICU services, since several congestion determinants were identified.

1 Introduction

The congestion effect is particularly important in healthcare systems. By definition, congestion refers to situations where the reduction of one or more inputs generates an increase of at least one output. Cooper et al. [1] state that evidence of congestion is present when reductions in one or more inputs can be associated with increases in one or more outputs or when increases in one or more inputs can be associated with decreases in one or more outputs, without worsening any other variable. Most health managers, policy makers and even the public may think that an increase in resources will always return an increase in outputs. This is not true in practice, as congestion is not as unusual as it sounds. Particularly in a period marked by both the need to save resources and a growing demand for healthcare, any source of inefficiency (as input congestion is) must be carefully analyzed.

In 2013, health spending in Portugal amounted to about 9.1 % of the gross domestic product (GDP). Healthcare demand predictions are necessary for hospital management in order to avoid situations such as poor service quality, inefficiency and congestion, health care delivery systems being particularly prone to congestion. According to Nayar et al. [2], hospitals face pressures to maximize performance in terms of production efficiency and quality, especially due to difficult financial environment factors, such as the expansion of managed care, changes in public policy, growing market competition for certain services, and growth in the number of uninsured, [3]. In most situations, clinical practice may be characterized by intense consumption of health resources, e.g. larger drug supplies, more surgeries and more hospital days than necessary, which is not always beneficial for patients and may eventually lead to congestion. That is why greater expenditures do not necessarily imply better quality in health care outputs.

By definition, the Intensive Care Unit (ICU) is a specific inpatient ward dealing with critically ill patients, i.e. those who need advanced, close and constant life support 24 h a day due to life-threatening illnesses or injuries (e.g. trauma, multiple organ failure, sepsis, preterm birth, congenital disorders, birth complications, cardiac arrest, acute myocardial infarction, intracranial hemorrhage). Most patients arriving at the ICU are admitted from the emergency department. After treatment, ICU patients are usually transferred to another medical unit for further care once their severity of illness is no longer high enough to justify ICU care.

ICUs account for a considerable share of hospital costs across the world, with, historically, up to two-fold variation in risk-adjusted mortality, [4–5]. More recently, Halpern et al. [6] stated that the ICU is the most expensive, technologically advanced and resource-intensive area of medical care, consuming about 13 % of hospital costs. As a consequence, ICUs should, in general, be reserved for patients with severe and complex illness. Portuguese health care data show that, on average, an ICU patient costs much more than the national average: ICU services in Portugal cost about seven times more per inpatient than a standard service, defined as the geometric mean of the unit costs of all Portuguese hospital services. Additionally, Barrett et al. [7] state that critical care costs have been rising for decades, making critical care a costly segment of health care spending. Given these facts and the high severity of the inpatients treated in ICU wards, the resources allocated to ICUs must be managed as efficiently and effectively as possible.

Several studies are devoted to measuring the efficiency of this service. For instance, Puig-Junoy [8] studied the performance of Spanish ICUs. Other notable studies on ICU efficiency assessment include Tsekouras et al. [9] and Dervaux et al. [10]. The latter uses a robust partial, non-parametric and non-conditional frontier to assess the efficiency of some French ICU services. The former analyses the performance of Greek ICU services and the impact of the “significant amount of financial resources [that] has been devoted by the Greek Government and the European Union” to ICUs, using a bootstrap-based bias-corrected efficiency measurement and the double bootstrap. Spain, France and Greece have demographic and epidemiological patterns similar to those of Portugal.

Several authors have dealt with the congestion phenomenon in healthcare provision. Table 5 in Appendix A provides a literature review on congestion measurement and/or ICU performance evaluation, as well as some comments and critiques on previous literature. For example, Clement et al. [11], Valdmanis et al. [12], Ferrier et al. [13], Arrieta and Guillén [14] and Matranga and Sapienza [15] employ an output-oriented nonparametric method to assess whether some undesirable outputs (e.g. mortality rates) are congesting hospital performance. In this case, congestion is investigated over the bad outputs and how they could be reduced so as to improve the efficiency and effectiveness of hospitals. In all cases, radial models were employed, ignoring the environment effect, the existence of other inefficiency sources and the possible presence of noise in the data. A robustness analysis of their results is therefore lacking, which may jeopardize the conclusions drawn from them. Moreover, they investigated solely the impact of undesirable outputs, ignoring the fact that resources may also play a role in congestion (i.e. congestion over inputs), which is the approach taken in the present study, in line with Simões and Marques [16]. This seems to be the most appropriate route in a period of resource scarcity, which in turn may have a considerable impact on ICU management and on the capacity to treat critically ill patients. Maxims like “the more, the better” are common among the public and healthcare managers, but they are usually wrong precisely because of the congestion effect, which may in turn jeopardize the quality of care, in particular in ICUs. Ensuring the best resource allocation while saving lives is a hot topic in ICU management. Since congestion is a component of inefficiency, it must be mitigated.
Although ICU congestion has been previously studied using queuing theory, e.g. [17–18], so far no study has simultaneously employed a bias-corrected, environment-based nonparametric congestion model with both radial and non-radial (in)efficiency measures for ICU services and identified the main sources of this phenomenon on a strong statistical background, nor has any study investigated the impact of the environment on ICU congestion. This paper tries to fill those gaps, with an empirical application to the Portuguese ICU case.

This study is structured as follows: section 2 presents different ways to measure congestion and its sources; section 3 presents the sample, the variables and the methods for environment and bias adjustment; section 4 presents and discusses the main results; finally, section 5 concludes.

2 Measuring the congestion levels and sources

2.1 Measuring the efficiency through non-parametric methods: an overview

The assessment of technical efficiency through nonparametric linear envelopment of the data dates back to Farrell’s work, [19]. Charnes et al. [20] introduced the Data Envelopment Analysis (DEA) estimator of technical efficiency. The technical efficiency of each decision making unit (DMU) is obtained by measuring its distance to an efficient frontier formed by the best practices, [21], i.e. the units that use the lowest level of inputs for a given output level, or produce the highest output level for a given level of resources. A DMU is an entity that is compared with others operating in similar conditions and that produces the same kind of outputs from the same kind of resources (usually in different proportions).

DEA has become the dominant approach to efficiency measurement in health care, as well as in many other sectors of the economy, such as education or justice, [22]. Ruggiero [23] and De Witte and Marques [24] point out several advantages of nonparametric methods over parametric ones, such as the possibility of including multiple inputs and multiple outputs and the fact that the frontier shape need not be defined a priori. Indeed, unlike parametric approaches, where the analysis is driven by economic theory, DEA is a data-driven approach, [25]. In health care, the techniques used are mainly based on DEA, [26], which is consistent with the economic theory of optimizing behavior; frontier deviations can be interpreted as inefficiency, and there are several ways to deal with noise. These are the reasons why DEA is adopted hereafter.

The following DEA radial model, Eq. (1) [θ−model], evaluates the (in)efficiency of a specific DMU0 producing s different outputs, \( {y}_{rj}\in {\mathbb{R}}_{+\cup \left\{0\right\}}^s \), r = 1...s, from m different inputs, \( {x}_{ij}\in {\mathrm{\mathbb{R}}}_{+}^m \), i = 1...m, [27−29], under the potential influence of ϑ exogenous variables, \( {z}_{hj}\in {\mathbb{R}}^{\vartheta } \), h = 1… ϑ. In Eq. (1), ε is a non-Archimedean infinitesimal (ε ~ 0), and \( {p}_i^{-}\in {\mathbb{R}}_{+\cup \left\{0\right\}}^m \) and \( {p}_r^{+}\in {\mathbb{R}}_{+\cup \left\{0\right\}}^s \) are slacks (non-radial inefficiencies) optimized by the linear model (1). The unit DMU0 \( \left({x}_{i0},{y}_{r0},{z}_{h0}\right)\in {\mathrm{\mathbb{R}}}_{+}^{m+s}\times {\mathrm{\mathbb{R}}}^{\vartheta } \) is evaluated against a set of comparable units, Ω0, which empirically determines a conditional frontier (see subsection 3.3). This model is output-oriented, thus \( {\theta}_0^{*}\ge 1 \).Footnote 1 A DMU0 is technically efficient if and only if \( {\theta}_0^{*}=1 \), i.e., its outputs cannot be radially expanded without increasing at least one input. However, it is strongly efficient with respect to the strong disposability hull (SDH) if and only if \( {\theta}_0^{*}=1 \) and \( {p}_i^{-*}={p}_r^{+*}=0 \) for all i = 1…m and r = 1…s.
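For reference, a standard output-oriented formulation consistent with the definitions above is sketched below; it is a reconstruction from the surrounding text rather than the paper's typeset Eq. (1). The convexity constraint \( \sum_j \lambda_j = 1 \) (variable returns to scale) is an assumption, since the text only states that μ in the dual controls for returns to scale; dropping it gives the constant-returns version.

```latex
\begin{aligned}
\theta_0^{*}=\max_{\theta_0,\,\lambda_j,\,p_i^{-},\,p_r^{+}}\ & \theta_0+\varepsilon\Big(\sum_{i=1}^{m}p_i^{-}+\sum_{r=1}^{s}p_r^{+}\Big)\\
\text{s.t.}\ & \sum_{j=1;\,j\in\Omega_0}^{n}\lambda_j x_{ij}+p_i^{-}=x_{i0}, && i=1,\dots,m\\
& \sum_{j=1;\,j\in\Omega_0}^{n}\lambda_j y_{rj}-p_r^{+}=\theta_0\,y_{r0}, && r=1,\dots,s\\
& \sum_{j=1;\,j\in\Omega_0}^{n}\lambda_j=1,\quad \lambda_j\ge 0,\ p_i^{-}\ge 0,\ p_r^{+}\ge 0
\end{aligned}
```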

2.2 Measuring the congestion

By replacing the objective function of Eq. (1) by \( {\beta}_0^{*}=\underset{\beta_0,{\lambda}_j}{ \max}\left({\beta}_0+\varepsilon {\displaystyle {\sum}_{r=1}^s{\tilde{p}}_r^{+}}\right) \) and the second constraint by \( {\displaystyle {\sum}_{j=1;\kern0.5em j\in {\Omega}_0}^n{\lambda}_j{y}_{rj}-{\tilde{p}}_r^{+}={\beta}_0{y}_{r0}} \), and by imposing the input constraints as equalities, \( {\displaystyle {\sum}_{j=1;\kern0.5em j\in {\Omega}_0}^n{\lambda}_j{x}_{ij}={x}_{i0}} \) (cf. subsection 2.3), we get a weak disposability output-oriented model, henceforth the β−model, cf. Eq. (2), [30]. The DMU0 is then strongly efficient with respect to the weak disposability hull (WDH) if and only if \( {\beta}_0^{*}=1 \) and \( {\tilde{p}}_r^{+*}=0 \) for all r = 1…s. In this model, \( {\tilde{p}}_r^{+} \) has the same meaning as \( {p}_r^{+} \) in Eq. (1).
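Applying these substitutions to Eq. (1) gives the following sketch of the β-model, under the same variable-returns-to-scale assumption. The input equalities (no input slacks) are inferred from the statement in subsection 2.3 that Eq. (7) corresponds to replacing the first constraint of Eq. (1) by an equality; this is a reconstruction, not the paper's typeset Eq. (2).

```latex
\begin{aligned}
\beta_0^{*}=\max_{\beta_0,\,\lambda_j,\,\tilde p_r^{+}}\ & \beta_0+\varepsilon\sum_{r=1}^{s}\tilde p_r^{+}\\
\text{s.t.}\ & \sum_{j=1;\,j\in\Omega_0}^{n}\lambda_j x_{ij}=x_{i0}, && i=1,\dots,m\\
& \sum_{j=1;\,j\in\Omega_0}^{n}\lambda_j y_{rj}-\tilde p_r^{+}=\beta_0\,y_{r0}, && r=1,\dots,s\\
& \sum_{j=1;\,j\in\Omega_0}^{n}\lambda_j=1,\quad \lambda_j\ge 0,\ \tilde p_r^{+}\ge 0
\end{aligned}
```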

It can be shown that, under the output-oriented framework, \( {\theta}_0^{*}\ge {\beta}_0^{*}\ge 1 \). In view of that, the output congestion score, \( {C}_0 \), is constructed in the standard way, as in Eq. (3), [31]. \( {C}_0<1 \) indicates the presence of congestion in the evaluated DMU, [30–35]. The value of \( {C}_0 \) indicates how much outputs should be increased to reach the non-congested situation, so the lower \( {C}_0 \), the higher the congestion level. If \( {C}_0=1 \), the DMU0 is not congested, i.e., there is no congestion inefficiency. However, even an inefficient DMU may be non-congested: it suffices that it does not belong to a weak disposability region, i.e., \( {\theta}_0^{*}={\beta}_0^{\ast }>1 \).
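A minimal numerical sketch of the radial parts of Eqs. (1) and (2) is given below. It assumes variable returns to scale, omits the ε-weighted slack phase, and takes the congestion score as the ratio \( {C}_0={\beta}_0^{*}/{\theta}_0^{*} \), which is consistent with \( {\theta}_0^{*}\ge {\beta}_0^{*}\ge 1 \) and with \( {C}_0<1 \) signalling congestion (an assumption about the exact form of Eq. (3)). It uses SciPy's `linprog` with the HiGHS solver; the function names, the `ref` argument standing in for Ω0, and the toy data are hypothetical.

```python
import numpy as np
from scipy.optimize import linprog


def radial_scores(X, Y, j0, ref=None):
    """Return (theta_0*, beta_0*) for DMU j0: output orientation, VRS, radial phase only.

    X: (n, m) inputs; Y: (n, s) outputs; ref: indices of the reference set
    Omega_0 (all units when None; it must contain j0).
    """
    X = np.asarray(X, dtype=float)
    Y = np.asarray(Y, dtype=float)
    idx = np.arange(len(X)) if ref is None else np.asarray(ref)
    Xr, Yr = X[idx], Y[idx]
    k = len(idx)
    x0, y0 = X[j0], Y[j0]
    # Decision vector: [phi, lambda_1, ..., lambda_k]; maximise phi by minimising -phi.
    c = np.r_[-1.0, np.zeros(k)]
    A_out = np.c_[y0, -Yr.T]                  # phi * y_r0 - sum_j lambda_j y_rj <= 0
    A_in = np.c_[np.zeros(len(x0)), Xr.T]     # sum_j lambda_j x_ij (compared with x_i0)
    A_conv = np.r_[0.0, np.ones(k)][None, :]  # sum_j lambda_j = 1 (VRS)
    bounds = [(None, None)] + [(0, None)] * k

    # Strong disposability (theta-model): input constraints as inequalities.
    strong = linprog(c, A_ub=np.r_[A_out, A_in], b_ub=np.r_[np.zeros(len(y0)), x0],
                     A_eq=A_conv, b_eq=[1.0], bounds=bounds, method="highs")
    # Weak disposability (beta-model): input constraints as equalities.
    weak = linprog(c, A_ub=A_out, b_ub=np.zeros(len(y0)),
                   A_eq=np.r_[A_conv, A_in], b_eq=np.r_[1.0, x0],
                   bounds=bounds, method="highs")
    return -strong.fun, -weak.fun


def congestion_score(theta, beta):
    """C_0 = beta_0* / theta_0*; values below 1 signal congestion."""
    return beta / theta


# Hypothetical toy data: one input, one output, three units.
X = [[2.0], [4.0], [6.0]]
Y = [[2.0], [4.0], [3.0]]
theta, beta = radial_scores(X, Y, j0=2)
print(theta, beta, congestion_score(theta, beta))
```

In the toy data the third unit uses more input than the second but produces less output: under strong disposability it could reach the second unit's output (θ0* = 4/3), whereas under weak disposability it must use all of its input and cannot expand (β0* = 1), so C0 = 0.75 < 1.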

Let \( {f}_q:{\mathrm{\mathbb{R}}}^q\to \mathrm{\mathbb{R}} \) be an aggregation function of q arguments, e.g. the geometric mean. We then define the following inefficiency index, \( {\tilde{\Lambda}}_0 \), where the optima \( \left({\theta}_0^{\ast },{p}_i^{-\ast },{p}_r^{+\ast },{\beta}_0^{\ast },{\tilde{p}}_r^{+*}\right)\in {\mathbb{R}}_{+\cup \left\{0\right\}}^{m+2s+2} \) are obtained from Eqs. (1–2) using all ϑ environment variables:

If \( {\forall}_{i=1\dots m}{p}_i^{-*}=0{\displaystyle \cap }{\forall}_{r=1\dots s}{p}_r^{+*}={\tilde{p}}_r^{+*}=0{\displaystyle \cap }{\theta}_0^{*}={\beta}_0^{*}=1 \), then the DMU0 is technically efficient regarding the SDH and \( {\tilde{\Lambda}}_0=1 \). However, \( {\tilde{\Lambda}}_0<1 \) does not imply that congestion is present. Indeed, if \( {\forall}_{r=1\dots s}{p}_r^{+*}={\tilde{p}}_r^{+*}=0{\displaystyle \cap }{\theta}_0^{*}={\beta}_0^{*}=1{\displaystyle \cap }{\exists}_{i=1\dots m}{p}_i^{-*}\ne 0 \), then \( {f}_m\left(\frac{x_{i0}-{p}_i^{-*}}{x_{i0}}\right)<1 \) and \( {f}_s\left(\frac{\beta_0^{*}{y}_{r0}+{\tilde{p}}_r^{+*}}{\theta_0^{*}{y}_{r0}+{p}_r^{+*}}\right)=1 \), so \( {\tilde{\Lambda}}_0<1 \) and the DMU0 is merely technically inefficient regarding the SDH. Congestion can be identified only when \( {f}_s\left(\frac{\beta_0^{*}{y}_{r0}+{\tilde{p}}_r^{+*}}{\theta_0^{*}{y}_{r0}+{p}_r^{+*}}\right)<1 \), [36]. This means that the non-radial input inefficiency component must be removed from Eq. (4), leading to a congestion composite index:

Λ0 is comparable to \( {C}_0\left({\theta}_0^{*},{\beta}_0^{*}\right) \). Indeed, if \( {\forall}_{r=1\dots s}{p}_r^{+*}={\tilde{p}}_r^{+*}=0 \), then \( {\Lambda}_0={C}_0\left({\theta}_0^{*},{\beta}_0^{*}\right) \). Still, Λ0 encompasses both radial and non-radial inefficiency sources, so it is a more robust measure of congestion than \( {C}_0\left({\theta}_0^{*},{\beta}_0^{*}\right) \). Additionally, the ratio \( \frac{{\tilde{\Lambda}}_0}{\Lambda_0} \) measures the extent of congestion in the whole inefficiency. It is easy to show that \( {f}_m\left(\frac{x_{i0}-{p}_i^{-*}}{x_{i0}}\right)\le 1 \), cf. Eq. (4), which means that \( \frac{{\tilde{\Lambda}}_0}{\Lambda_0}\le 1 \).
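The two composite indices can be sketched with the geometric mean as the aggregation function f. The product form used here, \( {\tilde{\Lambda}}_0={f}_m\left(\frac{x_{i0}-{p}_i^{-*}}{x_{i0}}\right)\cdot {\Lambda}_0 \) with \( {\Lambda}_0={f}_s\left(\frac{\beta_0^{*}{y}_{r0}+{\tilde{p}}_r^{+*}}{\theta_0^{*}{y}_{r0}+{p}_r^{+*}}\right) \), is inferred from the statements above (Λ0 reduces to C0 when output slacks vanish, and \( {\tilde{\Lambda}}_0/{\Lambda}_0\le 1 \) because the input term is at most 1); the typeset Eqs. (4)–(5) are not reproduced, so treat it as an assumption. The optima passed in are hypothetical.

```python
from math import prod


def geometric_mean(values):
    """Aggregation function f_q, taken here as the geometric mean of q ratios."""
    values = list(values)
    return prod(values) ** (1.0 / len(values))


def composite_indices(x0, y0, theta, beta, p_minus, p_plus, p_tilde):
    """Return (Lambda_tilde_0, Lambda_0) for one DMU from the optima of Eqs. (1)-(2)."""
    # Lambda_0: WDH projection over SDH projection, aggregated over outputs.
    lam = geometric_mean((beta * y + pt) / (theta * y + pp)
                         for y, pt, pp in zip(y0, p_tilde, p_plus))
    # Non-radial input component f_m((x_i0 - p_i^-*) / x_i0), always <= 1.
    input_part = geometric_mean((x - pm) / x for x, pm in zip(x0, p_minus))
    return input_part * lam, lam


# Hypothetical optima for a DMU with two inputs and one output.
lam_tilde, lam = composite_indices(x0=[10.0, 20.0], y0=[5.0], theta=1.25, beta=1.0,
                                   p_minus=[0.0, 5.0], p_plus=[0.0], p_tilde=[0.0])
print(lam_tilde, lam, lam_tilde / lam)
```

With zero output slacks, Λ0 equals β0*/θ0* = 1/1.25 = 0.8, matching C0 as stated in the text.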

2.3 Marginal Products and Scale Elasticities

The dual formulation of Eq. (1) is given by Eq. (6), where \( {u}_r \) and \( {v}_i \) represent, respectively, the virtual weights of the r-th output and the i-th input, μ is a variable that controls for returns to scale (RTS) (CRS – constant returns to scale, VRS – variable returns to scale), and ε (a non-Archimedean) ensures that the variables’ weights are non-zero. Replacing the first constraint of Eq. (1) by \( {\displaystyle {\sum}_{j=1;\kern0.5em j\in {\Omega}_0}^n{\lambda}_j{x}_{ij}={x}_{i0}} \) is equivalent to removing the restriction \( {v}_{i0}\ge \varepsilon \) in Eq. (6), [30]. That is, \( {\beta}_0^{*}=\underset{{\tilde{v}}_{10}\dots {\tilde{v}}_{m0},{\tilde{u}}_{10}\dots {\tilde{u}}_{s0},{\tilde{\mu}}_0\ }{ \min}\left[{\displaystyle {\sum}_{i=1}^m{\tilde{v}}_{i0}{x}_{i0}-{\tilde{\mu}}_0}\right] \) if \( {\tilde{v}}_{i0} \) is free in sign, ∀i = 1 … m, cf. Eq. (7), [31, 35]. In Eqs. (6) and (7), \( {u}_{r0} \) and \( {\tilde{u}}_{r0} \) share the same meaning, as do \( {v}_{i0} \) and \( {\tilde{v}}_{i0} \); tildes are used only to distinguish them. As there is usually no reason to believe that one input has a considerably higher impact on congestion than the remaining resources, no further restrictions on Eq. (7) are required. That is, Eq. (7) allows identifying which inputs are congestion sources with no further assumptions.

The Law of Variable Proportions (LVP) states that as the quantity of one factor increases, keeping the other factors fixed, the marginal product (MP), or marginal rate of production, of that factor will eventually decline after a certain stage, [32–34]. When the variable factor becomes abundant, the MP may become negative. The total product (defined as the total output resulting from the efforts of all factors of production) then decreases if and only if the MP of that factor becomes negative, i.e., when the entity is congested. Additionally, Sueyoshi [35] demonstrated that the MP between the i-th input \( {x}_{i0} \) and the r-th output \( {y}_{r0} \) of DMU0 is given by Eq. (8), which is derived from the first restriction of Eq. (7). Under the weak disposability assumption, \( {\tilde{v}}_{i0}^{*} \) can be negative; if this is the case, and since \( {\tilde{u}}_{r0}^{*}\ge \varepsilon >0 \) by Eq. (7), then \( MP\left({x}_{i0},{y}_{r0}\right) \) is negative as well, which means that the i-th input is a source of congestion, because an increase in that input reduces the quantity of the r-th output. That is, a negative MP between the i-th input and the r-th output, \( MP\left({x}_{i0},{y}_{r0}\right)<0 \), reveals congestion in DMU0, and then \( {\beta}_0^{*}<{\theta}_0^{*} \) and Λ0 < 1; in other words, the i-th input of DMU0 is congested. Congestion sources can thus be easily identified as the inputs leading to a negative MP (which, in turn, is consistent with the economic meaning of congestion).
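The sign argument above can be sketched as follows, assuming Eq. (8) takes the usual supporting-hyperplane form \( MP\left({x}_{i0},{y}_{r0}\right)={\tilde{v}}_{i0}^{*}/{\tilde{u}}_{r0}^{*} \), obtained by differentiating \( \sum_i {\tilde{v}}_{i0}x_i-\sum_r {\tilde{u}}_{r0}y_r-{\tilde{\mu}}_0=0 \) along the frontier. This is consistent with the text (a negative \( {\tilde{v}}_{i0}^{*} \) and a positive \( {\tilde{u}}_{r0}^{*} \) give a negative MP), but it is not a transcription of Eq. (8). The dual weights and input names are made up; in practice they would come from solving Eq. (7).

```python
def marginal_products(v_tilde, u_tilde):
    """MP(x_i0, y_r0) = v_i0* / u_r0* for every input i (rows) and output r (columns)."""
    return [[v / u for u in u_tilde] for v in v_tilde]


def congestion_sources(v_tilde, u_tilde, names, tol=1e-12):
    """Names of inputs with a negative MP towards at least one output."""
    mp = marginal_products(v_tilde, u_tilde)
    return [name for name, row in zip(names, mp) if any(val < -tol for val in row)]


# Hypothetical optimal dual weights of Eq. (7): v_tilde free in sign, u_tilde >= epsilon > 0.
v_tilde = [0.02, -0.01, 0.005]
u_tilde = [0.5]
print(marginal_products(v_tilde, u_tilde))
print(congestion_sources(v_tilde, u_tilde, ["x1", "x2", "x3"]))
```

Here only the second input has a negative weight, so it is the only one flagged as a congestion source.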

Consistent with the preceding argument, one can easily compute the corresponding scale elasticity, \( {\rho}_0 \), as in Eq. (9), with \( {\rho}_0^{+*} \) given by Eq. (10), [30, 36]Footnote 2:

Clearly, \( {\rho}_0^{+*}\ge {\rho}_0^{-*} \), which means that if \( {\rho}_0^{+*}<0 \), then \( {\rho}_0<0 \) as well, i.e., the Degree of Scale (Dis)Economies (DSE) is negative for the DMU0 \( \left({x}_{i0},{y}_{r0},{z}_{h0}\right) \). However, a DMU can exhibit \( {\rho}_0<0 \) with \( {\rho}_0^{+*}\ge 0 \), which happens when \( {\rho}_0^{-*}<-{\rho}_0^{+*} \). Negative RTS exist if and only if \( {\rho}_0^{+}<0 \), which in turn implies \( {\rho}_0<0 \), [30, 36], i.e., \( MP\left({x}_{i0},{y}_{r0}\right)<0\iff {\rho}_0^{+}<0\Rightarrow {\rho}_0<0 \).

2.4 Weak congestion

The previous approach assumes that, under congestion, a “proportional reduction in all inputs warrants an increase in all outputs”, [30], which is a rather restrictive assumption. Tone and Sahoo [36] call this strong congestion; they relax the assumption by introducing the weak congestion concept, according to which “an increase in one or more inputs causes a decrease in one or more outputs”, [30]. Strong congestion implies weak congestion, but the converse is not necessarily true, [36]. To measure weak congestion, their proposal relies on a semi-radial (and units-invariant) approach, as in Eq. (11), where \( {t}_r^{+} \) and \( {t}_i^{-} \) are slacks to be optimized, ε is, as before, a non-Archimedean number, and \( \left({\overset{\vee }{x}}_{i0},{\overset{\vee }{y}}_{r0}\right)=\left({x}_{i0},{\beta}_0^{*}{y}_{r0}+{\tilde{p}}_r^{+*}\right) \), i.e., \( \left({x}_{i0},{y}_{r0}\right) \) is projected onto the WDH, cf. Eq. (2), so that \( \left({\overset{\vee }{x}}_{i0},{\overset{\vee }{y}}_{r0}\right) \) is efficient with respect to the WDH technology (frontier).

From the optima \( \left\{{t}_1^{+*},\dots, {t}_s^{+*},{t}_1^{-*},\dots, {t}_m^{-*}\right\} \) obtained in Eq. (11), it is possible to construct a ratio of the average improvement in outputs to the average reduction in inputs, [30, 36], as in Eq. (12), where \( {s}' \) and \( {m}' \) represent, respectively, the numbers of positive \( {t}_r^{+*} \) and positive \( {t}_i^{-*} \), [30]. In short, this ratio is a DSE measure for weakly congested units. Moreover, the sources of congestion can be identified from the non-zero slacks, i.e., when \( \left\{{t}_1^{+*},\dots, {t}_s^{+*},{t}_1^{-*},\dots, {t}_m^{-*}\right\}\ne \overrightarrow{0} \).
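A sketch of the ratio in Eq. (12) follows, assuming it is built from the relative slacks \( {t}_r^{+*}/{\overset{\vee }{y}}_{r0} \) and \( {t}_i^{-*}/{\overset{\vee }{x}}_{i0} \), each averaged over the s′ and m′ positive slacks only. The exact typeset form, including any sign convention, is not reproduced here, so the function returns the unsigned ratio. The slack values are hypothetical; in practice they would come from Eq. (11).

```python
def weak_congestion_ratio(t_plus, t_minus, y_check, x_check, tol=1e-9):
    """Average relative output improvement over average relative input reduction.

    Averages are taken over the positive slacks only (s' outputs, m' inputs).
    Returns None when there are no positive output or input slacks.
    """
    out = [t / y for t, y in zip(t_plus, y_check) if t > tol]
    inp = [t / x for t, x in zip(t_minus, x_check) if t > tol]
    if not out or not inp:
        return None
    return (sum(out) / len(out)) / (sum(inp) / len(inp))


def weak_congestion_sources(t_minus, names, tol=1e-9):
    """Inputs with a positive slack t_i^-* in Eq. (11)."""
    return [name for name, t in zip(names, t_minus) if t > tol]


ratio = weak_congestion_ratio(t_plus=[2.0], t_minus=[1.0, 0.0, 4.0],
                              y_check=[10.0], x_check=[10.0, 5.0, 20.0])
print(ratio, weak_congestion_sources([1.0, 0.0, 4.0], ["x1", "x2", "x3"]))
```

Only the first and third inputs have positive slacks, so m′ = 2 and the zero slack of the second input does not dilute the average.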

As in Eq. (5), we define a composite congestion index for weak congestion:

where Λ0 is obtained from Eq. (5), \( {p}_r^{+*} \) from Eq. (1), and \( \left\{{t}_1^{+*}\dots {t}_s^{+*},{t}_1^{-*}\dots {t}_m^{-*}\right\} \) from Eq. (11). It is worth mentioning that strong congestion implies weak congestion (\( {\Lambda}_0<1\Rightarrow {\Lambda}_{weak,0}<1 \)), but even DMUs with no strong congestion can exhibit weak congestion, i.e., \( {\Lambda}_0=1\nRightarrow {\Lambda}_{weak,0}=1 \). Indeed, \( {\Lambda}_0=1\Rightarrow {\Lambda}_{weak,0}={f}_m\left(1-\frac{t_i^{-*}}{x_{i0}}\right)/{f}_s\left(\frac{\theta_0^{*}{y}_{r0}+{p}_r^{+*}+{t}_r^{+*}}{y_{r0}}\right) \), which is unitary if and only if \( {\forall}_{r=1,\dots, s}{p}_r^{+*}={t}_r^{+*}=0{\displaystyle \cap }{\forall}_{i=1,\dots, m}{t}_i^{-*}=0{\displaystyle \cap }{\theta}_0^{*}=1 \), i.e., if the unit is technically efficient regarding the SDH. If there is no weak congestion (\( {\Lambda}_{weak,0}=1 \)), then by definition \( {\forall}_{r=1\dots s}{t}_r^{+*}=0{\displaystyle \cap }{\forall}_{i=1\dots m}{t}_i^{-*}=0 \), which means that the DMU \( \left({\overset{\vee }{x}}_{i0},{\overset{\vee }{y}}_{r0}\right) \) is strongly efficient regarding the SDH and \( \left\{{\theta}_0^{*}=1{\displaystyle \cap }{\forall}_{r=1\dots s}{p}_r^{+*}=0\right\}\Rightarrow \left\{{\beta}_0^{*}=1{\displaystyle \cap }{\forall}_{r=1\dots s}{\tilde{p}}_r^{+*}=0\right\} \), and finally, Λ0 = 1. That is, \( {\Lambda}_{weak,0}=1\Rightarrow {\Lambda}_0=1 \): if the DMU0 shows no evidence of weak congestion, then it is not congested. Hence, \( {\Lambda}_{weak,0} \) links the weak and the strong congestion measures, and the ratio \( {\Lambda}_{weak,0}/{\Lambda}_0 \) gives the extent of weak congestion in the whole congestion. It is easy to show that \( 0<\frac{\Lambda_{weak,0}}{\Lambda_0}\le 1 \).

2.5 Final considerations regarding the congestion models

Input-congestion models have been defined so far. However, a strategy is needed to check whether DMUs are effectively congested and, if so, whether they are strongly or weakly congested. This strategy is summarized in Table 1.
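One way to operationalize such a strategy is sketched below. It is built only from the statements in section 2 (strong congestion when Λ0 < 1, which also implies weak congestion; otherwise weak congestion when the slacks of Eq. (11) are not all zero) and is not a transcription of Table 1; the function name and tolerance are hypothetical.

```python
def classify_congestion(lambda_0, t_plus, t_minus, tol=1e-6):
    """Hypothetical decision rule returning 'strong', 'weak' or 'none' for one DMU.

    lambda_0: strong-congestion index of Eq. (5) (or C_0 = beta*/theta*).
    t_plus, t_minus: optimal slacks of the weak-congestion model, Eq. (11).
    """
    if lambda_0 < 1.0 - tol:
        # Strong congestion (which also implies weak congestion).
        return "strong"
    if any(t > tol for t in list(t_plus) + list(t_minus)):
        # No strong congestion, but non-zero slacks at the WDH projection.
        return "weak"
    return "none"


print(classify_congestion(0.8, [0.0], [0.0]))
print(classify_congestion(1.0, [0.5], [0.0, 0.0]))
print(classify_congestion(1.0, [0.0], [0.0, 0.0]))
```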

3 Data and methodological issues

3.1 Sample

In this paper, each DMU is a different ICU service in a specific hospital. That is, a hospital with several different ICU services contributes several DMUs. A homogenization measure is therefore required, as discussed below. The sample comprises 630 DMUs, distributed across 8 years (2002–2009),Footnote 3 and four ICU specialties: (a) Polyvalent ICUs, # = 278 DMUs, (b) Cardiology ICUs, # = 143 DMUs, (c) Pediatric, Gynecology, Obstetrics and Neonatology ICUs, # = 105 DMUs, and (d) Surgical ICUs, # = 104 DMUs. The Surgical ICU classification includes “General surgical ICUs” (# = 41), “Neurosurgical ICUs” (# = 36), “Cardiothoracic surgical ICUs” (# = 9), “Transplant ICUs” (# = 6) and “Burn Units” (# = 12). These 630 DMUs are spread over 25–40 hospitals (depending on the year), giving an average of 2–3 ICUs per hospital. Still, the DMU remains defined as the ICU specialty service.

3.2 Variables

Given the (at least theoretical) financial unsustainability of health systems and/or the high funding provided to this department by most governments, [9], an economic perspective is desirable. Therefore, the following inputs were chosen, [27, 28, 9–10]Footnote 4 , Footnote 5:

i. X1 – Costs of Goods Sold and Consumed (CGSC) – expenditures on drugs and clinical materials;
ii. X2 – Supplies and External Services (SEServ) – expenditures on outsourced external labor;
iii. X3 – Staff Costs (StaffC) – expenditures on staff, including salaries and bonuses to physicians, nurses and other (non-administrative) ancillary staff;
iv. X4 – Capital Costs (CapC) – expenditures on technological asset investments;
v. X5 – (ICU) Hospital Days (HospDays) – total number of days used by all inpatients treated in the ICU service within 1 year, [27], as time is a resource required to produce the output(s), [10].

Additionally, the following output was chosen, [27, 28, 9, 10]:

i. Y – Inpatient Discharges (InpD) – total number of patients treated within a specific ICU service (DMU) in a year, excluding deaths.Footnote 6

All costs (inputs i. to iv.) were expressed in 2009 prices using the GDP deflator. Note that the longer the length of stay (HospDays), the higher the probability of other conditions appearing, such as nosocomial infections and pressure ulcers in bedridden patients, which increases the ICU mortality rate (or, at least, the associated probability of death), as stated by Ferreira and Marques [28] and Chan et al. [18]. Given the limited capacity (number of beds) of the service, the higher the number of (ICU) hospital days, the lower the possible number of discharges. That is, HospDays may contribute to ICU congestion. We also assume that the remaining inputs are prone to congestion. Indeed, we observed a considerable positive correlation between all costs and HospDays, which is expected because more inpatient days require more expenses with staff (nurses), drugs and other clinical material. Hence, since we suspect that HospDays can be a source of congestion, the remaining inputs can be as well. By definition, if some entity produces more InpD than a specific DMU0 while spending fewer resources, say StaffC, with the remaining inputs unchanged, then StaffC is congested in DMU0, because production could be increased while StaffC is decreased. The advantage of the nonparametric methods described above is that no strong assumptions over the inputs are needed; we only assume that they can eventually be congested.
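The price adjustment applied to inputs i. to iv. amounts to rescaling each nominal cost by the ratio of the 2009 deflator to the deflator of the year in which the cost was incurred. A minimal sketch, with made-up index values (not actual Portuguese data):

```python
def to_2009_prices(nominal_cost, year, gdp_deflator):
    """Convert a nominal cost of `year` into 2009 prices: cost * D(2009) / D(year)."""
    return nominal_cost * gdp_deflator[2009] / gdp_deflator[year]


# Hypothetical deflator index values.
deflator = {2005: 90.0, 2009: 100.0}
print(to_2009_prices(900.0, 2005, deflator))
```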

Table 2 contains the descriptive statistics of the main variables used in our analysis, by ICU specialty. There is considerable resource consumption in ICUs, but also huge heterogeneity in both resource consumption and output production (standard deviations and averages are quite close). In general, ICU resource consumption lies essentially in staff costs (StaffC) and costs with drugs and clinical material (CGSC), while capital investments and outsourcing expenses are generally low compared with the other costs. Surgical ICUs are responsible for the majority of inpatients treated, followed by Cardiology ICUs. Furthermore, if we assume that the average length of stay is a measure of inpatient complexity (because the longer the average stay, the higher the expected inpatient needs and complexity, [38]), as is the case-mix, then we also observe significant diversity. As expected, the most complex services (such as Burn Units and Transplant ICUs) deal with more complex inpatients, who present a higher a priori probability of death and therefore require longer stays.

3.3 Adjusting for internal and external environment variables

Section 2 has detailed the models used to assess both congestion levels and sources. However, for the sake of comparability, the units forming the appropriate reference set are gathered in Ω0, which is computed for each unit \( \left({x}_{i0},{y}_{r0},{z}_{h0}\right)\in {\mathbb{R}}_{+}^m\times {\mathbb{R}}_{+}^s\times {\mathbb{R}}^{\vartheta } \). It is commonly accepted that DMU efficiency must be assessed taking into account the environment the units face, which may hinder or benefit their performance. By environment we mean both the internal (e.g. legal status) and the external environment (e.g. demographic patterns). Adjusting for the environment allows the sample to be homogenized.

The question is how to derive the set Ω0. Consider a generic unit \( \left({x}_{i0},{y}_{r0}\right)\in {\mathrm{\mathbb{R}}}_{+}^m\times {\mathrm{\mathbb{R}}}_{+}^s \), characterized by ϑ different characteristics, \( {z}_{h0}\in {\mathbb{R}}^{\vartheta } \), h = 1… ϑ. These features (variables) can be either independent, \( {z}_{h_u0}\in {\mathrm{\mathbb{R}}}^{\vartheta_u} \), \( {h}_u=1\dots {\vartheta}_u \), or dependent, \( {z}_{h_w0}\in {\mathrm{\mathbb{R}}}^{\vartheta_w} \), \( {h}_w=1\dots {\vartheta}_w \), with \( \vartheta ={\vartheta}_w+{\vartheta}_u \) and \( {z}_{h0}=\left\{{z}_{h_u0}{\displaystyle \cup }{z}_{h_w0}\right\} \). Let also \( {K}_{h_u}:\mathrm{\mathbb{N}}\to {\mathrm{\mathbb{R}}}_{\left[0;1\right]} \) and \( {K}_{h_w}:\mathrm{\mathbb{R}}\to {\mathrm{\mathbb{R}}}_{\left[0;1\right]} \) be two kernel functions with compact support (e.g. Epanechnikov), and \( {H}_{h_u}>0 \) and \( {H}_{h_w}>0 \) appropriate bandwidths for those kernels, which govern the comparability between units for each criterion. In other words, only those DMUs whose operational environment variables are close to those of DMU \( \left({x}_{i0},{y}_{r0},{z}_{h0}\right)\in {\mathrm{\mathbb{R}}}_{+}^m\times {\mathrm{\mathbb{R}}}_{+}^s\times {\mathrm{\mathbb{R}}}^{\vartheta } \) can be used to compose the latter’s reference set, Ω0. This reference set is then obtained through a global kernel function, as discussed below.

Under a multivariate framework, i.e., ϑ > 1, it is common to assume that the environmental variables are mutually independent, so a natural choice for the global kernel, \( K:{\mathbb{R}}^{\vartheta }\to {\mathbb{R}}_{\left[0;1\right]} \), is the product of all univariate kernels, \( {K}_h:\mathbb{R}\to {\mathbb{R}}_{\left[0;1\right]} \), [27], as in Eq. (14), where \( \overrightarrow{u}=\left\{{u}_h;h=1\dots \vartheta \right\}=\left\{\frac{z_{h0}-{z}_{hj}}{H_h};h=1\dots \vartheta \right\}\in {\mathbb{R}}^{\vartheta } \).
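Under the independence assumption, Ω0 can be obtained as the set of units with a strictly positive product-kernel weight. The sketch below uses the Epanechnikov kernel for every variable; the function names, bandwidth values and data are hypothetical.

```python
def epanechnikov(u):
    """Univariate Epanechnikov kernel with compact support [-1, 1] and maximum 0.75."""
    return 0.75 * (1.0 - u * u) if abs(u) <= 1.0 else 0.0


def product_kernel(z0, zj, bandwidths, kernel=epanechnikov):
    """Product of univariate kernels with u_h = (z_h0 - z_hj) / H_h."""
    value = 1.0
    for a, b, h in zip(z0, zj, bandwidths):
        value *= kernel((a - b) / h)
    return value


def reference_set(Z, j0, bandwidths, kernel=epanechnikov):
    """Omega_0: indices of units with a strictly positive kernel weight for DMU j0."""
    return [j for j, zj in enumerate(Z)
            if product_kernel(Z[j0], zj, bandwidths, kernel) > 0.0]


# Hypothetical environment data: (category code, continuous variable).
Z = [(1, 10.0), (1, 12.0), (2, 10.0), (1, 30.0)]
print(reference_set(Z, j0=0, bandwidths=(0.5, 5.0)))
```

Because the kernels have compact support, a single variable falling outside its bandwidth (the category code of the third unit, the continuous value of the fourth) is enough to exclude a unit from Ω0.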

However, environment variables are not always independent. Unless their correlation is quite low, we may take advantage of such dependence. To do so, we adapt the approach proposed by Daraio and Simar [38], which can be summarized as in Eq. (15), where S is the covariance matrix of the ϑ variables \( {u}_h \), n is, as usual, the sample size and \( \mathbb{I} \) is the indicator function.

Splitting environment variables into independent (categorical), u, and dependent (either discrete, categorical or continuous), w, allows us to create a global multivariate kernel, \( K:{\mathrm{\mathbb{R}}}^{\vartheta_w}\times {\mathrm{\mathbb{R}}}^{\vartheta_u}\to {\mathrm{\mathbb{R}}}_{\left[0;1\right]} \), as follows:

where \( \overrightarrow{w}=\left\{{w}_{h_w};{h}_w=1\dots {\vartheta}_w\right\}=\left\{{z}_{h_w0}-{z}_{h_wj};{h}_w=1\dots {\vartheta}_w\right\}\in {\mathrm{\mathbb{R}}}^{\vartheta_w} \), \( \overrightarrow{u}=\left\{{u}_{h_u};{h}_u=1\dots {\vartheta}_u\right\}=\left\{\frac{z_{h_u0}-{z}_{h_uj}}{H_{h_u}};{h}_u=1\dots {\vartheta}_u\right\}\in {\mathrm{\mathbb{R}}}^{\vartheta_u} \), and S only concerns the dependent variables. Kernel functions for independent variables should use a small bandwidth, so that no units belonging to different categories can be used for comparison; in other words, as those categories are usually defined by integer values (typically 1, 2, 3...), the bandwidth \( {H}_{h_u} \) should be lower than 1, i.e., \( {H}_{h_u}\in \mathrm{\mathbb{R}}:0<{H}_{h_u}<1 \); in this work, we impose \( {H}_{h_u}=0.5,{\forall}_{h_u=1\dots {\vartheta}_u} \), a choice that does not affect the final results, [40]. Finally, the comparability set Ω0 for the DMU0 \( \left({x}_{i0},{y}_{r0},{z}_{h0}\right)\in {\mathrm{\mathbb{R}}}_{+}^m\times {\mathrm{\mathbb{R}}}_{+}^s\times {\mathrm{\mathbb{R}}}^{\vartheta } \) consists of only those DMUs verifying \( K\left(\overrightarrow{u},\overrightarrow{w}\right)>0 \).
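A sketch of how the combined kernel could be evaluated is given below: a uniform kernel with \( {H}_{h_u}=0.5 \) on each independent categorical variable, multiplied by a kernel on the Mahalanobis distance of the dependent variables, built from their covariance matrix S. The Epanechnikov-type profile and the bandwidth `h_w` are assumptions standing in for the exact form of Eq. (15), which is not reproduced here; the function name and data are hypothetical.

```python
import numpy as np


def omega_0(Z_u, Z_w, j0, h_w, H_u=0.5):
    """Return (indices of Omega_0, kernel weights) for DMU j0.

    Z_u: (n, q_u) independent categorical codes; uniform kernel with bandwidth H_u.
    Z_w: (n, q_w) dependent variables; Epanechnikov-type kernel on the
         Mahalanobis distance of w = z_w0 - z_wj, scaled by the assumed bandwidth h_w.
    """
    Z_u = np.asarray(Z_u, dtype=float)
    Z_w = np.asarray(Z_w, dtype=float)
    # Uniform kernel (height 0.5 on [-1, 1]) for each categorical variable:
    # with H_u = 0.5 it is non-zero only for units in the same category.
    u = (Z_u[j0] - Z_u) / H_u
    k_u = np.all(np.abs(u) <= 1.0, axis=1) * 0.5 ** Z_u.shape[1]
    # Inverse covariance of the dependent variables (pseudo-inverse for robustness).
    S_inv = np.linalg.pinv(np.atleast_2d(np.cov(Z_w, rowvar=False)))
    w = Z_w[j0] - Z_w
    d2 = np.einsum("ij,jk,ik->i", w, S_inv, w) / h_w ** 2
    k_w = np.clip(1.0 - d2, 0.0, None)
    K = k_u * k_w
    return np.flatnonzero(K > 0.0), K


# Hypothetical data: one categorical variable (specialty code), one dependent variable.
Z_u = [[1], [1], [2], [1], [1]]
Z_w = [[0.0], [0.5], [0.0], [10.0], [-0.5]]
idx, K = omega_0(Z_u, Z_w, j0=0, h_w=1.0)
print(idx.tolist())
```

The third unit is excluded by its category code, even though its dependent variable matches exactly, while the fourth is excluded because its Mahalanobis distance exceeds the kernel support.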

Hospitals can be classified from different points of view, and their performance is meaningfully affected by their environment [27, 28, 37]. Efficiency scores must therefore be adjusted for this environment, which can be either internal or external. Similarly, each ICU faces (a) the same external environment as the whole hospital and (b) specific ICU-related environment variables, mostly due to the inherent complexity of the inpatients it cares for. Table 3 identifies and describes the environment variables, either internal or external, used in the present study.

Adopting a single independent categorical variable, Z1, i.e., \( \vartheta_u=1 \) under the proposed framework (with a sufficiently small bandwidth, \( {H}_{h_u}=0.5 \), and a uniform kernel, \( {K}_{h_u} \)), avoids inadvisable comparisons among DMUs from different ICU specialties. With the variable Z1 – ICU Specialty, DMUs from a given ICU specialty are compared only with DMUs from that same specialty. For instance, ‘Polyvalent ICUs’ are not compared with ‘Cardiology ICUs’. In other words, the best-practice frontier of each DMU is ICU specialty-specific.

On the other hand, variables such as Z2 – Year, Z3 – Legal Status, Z4 – Hospital Type and Z5 – Merging Status are defined as dependent categorical variables. They enter the multidimensional kernel function defined in Eqs. (15)-(16) so as to capture possible interactions among them and with the continuous variables Z6 to Z10, giving \( \vartheta_w=9 \) (Footnote 7).

Table 6 (Appendix E) contains the Pearson correlation coefficients for the dependent discrete and continuous environment variables. Some of these variables are considerably correlated, which justifies the adoption of the multivariate kernel of Eq. (16). The SMI, Z6, is not correlated with the remaining environment variables, because their effect (as well as the technical inefficiency of resource consumption) was filtered out in the SMI computation (see Appendix A). Finally, the GSI and the demographic variables are significantly correlated. Hospitals located in urban regions, where population density and purchasing power are higher and aging indexes are lower, tend to offer more medical specialties.

3.4 Methodological issues

Efficiency under both SDH and WDH is computed using the models presented in section 2, within the conditional framework defined in subsection 3.3 (Footnote 8). However, because of their deterministic nature, those models do not provide robust bias-corrected efficiency estimates. Nor do they provide statistically grounded results (such as confidence intervals) or allow inference tests on hypotheses. Accordingly, we apply the bootstrap technique introduced by Simar and Wilson [47], detailed in Appendix D, over a pooled conditional frontier. This technique yields B pseudo-frontiers (B large, say B ≈ 1000) that approximate the true, still unobserved, frontier. The models additionally assume VRS and output orientation, in line with the models described in section 2 (Footnote 9). This study adopts the strategy defined in Table 1 (section 2). The bootstrap iterations allow us to run a set of statistical tests to check whether:

(1) Strong congestion levels are not significant across the entire sample, i.e., \( H_{0(1)}:p_{r=1}^{+*}=\tilde{p}_{r=1}^{+*}=0\ \cap\ \beta_0^{*}=\theta_0^{*}=1\iff\Lambda_0=1\ vs\ H_{1(1)}:p_{r=1}^{+*}\ne\tilde{p}_{r=1}^{+*}\ne 0\ \cup\ \beta_0^{*}>\theta_0^{*}\iff\Lambda_0<1 \)

(2) Strong and weak congestion levels are similar, i.e., \( H_{0(2)}:\Lambda_0=\Lambda_{weak,0}\ vs\ H_{1(2)}:\Lambda_0>\Lambda_{weak,0} \)

(3) Weak congestion levels are not noteworthy, i.e., \( H_{0(3)}:f_m\left(1-\frac{t_i^{-*}}{x_{i0}}\right)=\Lambda_0\cdot\theta_0^{*}+\frac{\Lambda_0\cdot p_r^{+*}+t_r^{+*}}{y_{r0}}\ vs\ H_{1(3)}:f_m\left(1-\frac{t_i^{-*}}{x_{i0}}\right)<\Lambda_0\cdot\theta_0^{*}+\frac{\Lambda_0\cdot p_r^{+*}+t_r^{+*}}{y_{r0}} \)

Let us define the following general tests to evaluate the second hypothesis (Footnote 10):

Here, as before, \( f_n \) is an aggregating function of n arguments (n being the sample size). The p-value is computed as follows:

Here, \( \mathbb{I} \) is the indicator function. A very small p (say, p < 0.05) allows us to reject the null hypothesis at the 5 % level. In this paper, we use the arithmetic mean, the geometric mean, the median and the 10 % trimmed mean as aggregating functions \( f_n \).
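The four aggregating functions, together with one possible bootstrap p-value for hypothesis (2), can be sketched as follows. The test statistic itself is not reproduced in this section, so the version below is an assumption. It uses \( T_b=f_n(\Lambda_{0,b})-f_n(\Lambda_{weak,0,b}) \), and p is the share of bootstrap replicates b in which \( T_b\le 0 \). The 10 % trimmed mean is also assumed to drop 10 % of the observations from each tail. The replicate values are hypothetical.

```python
import statistics
from typing import Callable, Dict, Sequence

Aggregator = Callable[[Sequence[float]], float]


def trimmed_mean(values: Sequence[float], proportion: float = 0.10) -> float:
    """Mean after removing `proportion` of the sorted values from each tail (assumption)."""
    data = sorted(values)
    k = int(proportion * len(data))
    kept = data[k:len(data) - k]
    return sum(kept) / len(kept)


# Aggregating functions f_n named in the text.
AGGREGATORS: Dict[str, Aggregator] = {
    "arithmetic mean": lambda v: sum(v) / len(v),
    "geometric mean": statistics.geometric_mean,  # requires strictly positive values
    "median": statistics.median,
    "10% trimmed mean": trimmed_mean,
}


def bootstrap_p_value(strong: Sequence[Sequence[float]],
                      weak: Sequence[Sequence[float]],
                      f_n: Aggregator) -> float:
    """Hypothetical p-value for H0(2): Lambda_0 = Lambda_weak,0 vs H1(2): Lambda_0 > Lambda_weak,0.

    strong[b] and weak[b] hold the n congestion coefficients of bootstrap replicate b.
    """
    hits = sum(1 for s, w in zip(strong, weak) if f_n(s) - f_n(w) <= 0.0)
    return hits / len(strong)


# Two hypothetical bootstrap replicates for three DMUs.
strong_reps = [[0.45, 0.50, 0.40], [0.47, 0.52, 0.44]]
weak_reps = [[0.20, 0.25, 0.30], [0.22, 0.24, 0.28]]
for name, f in AGGREGATORS.items():
    print(name, bootstrap_p_value(strong_reps, weak_reps, f))
```

Reporting the test under several aggregating functions, as the text does, guards against conclusions that hinge on a few extreme coefficients.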

Measuring the impact of a specific environment variable, \( z_h,\ h=1\dots\vartheta \), requires running the previous models with and without that variable. As there are 10 environment variables, the analysis is conducted 11 times: once with all variables and once excluding each of them. Let \( \Lambda_0(z) \) (resp. \( \Lambda_0(z\backslash z_h) \)) be the congestion index computed using all environment variables (resp. computed when \( z_h \) is excluded). We use the ratios \( \Gamma_0(z_h)=\Lambda_0(z)/\Lambda_0(z\backslash z_h) \) and \( \Gamma_{weak,0}(z_h)=\Lambda_{weak,0}(z)/\Lambda_{weak,0}(z\backslash z_h) \), \( \forall h=6\dots 10 \), to test whether the continuous variable \( z_h \) affects congestion. A positive slope, \( \partial\Gamma_0(z_h)/\partial z_h>0 \), means that the higher \( z_h \), the lower the congestion levels. The nonparametric regressions use the Nadaraya-Watson estimator with Gaussian kernels and Silverman's bandwidth.
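A compact sketch of this step follows. The ratio \( \Gamma_0(z_h) \) is computed DMU by DMU and then smoothed against \( z_h \) with a Nadaraya-Watson estimator. The sketch assumes a Gaussian kernel and the common form of Silverman's rule of thumb, \( 0.9\min(\hat{\sigma},\mathrm{IQR}/1.34)\,n^{-1/5} \). The paper does not state which variant of the rule was used, and the confidence bands of Fig. 1 are not reproduced. All data values are hypothetical.

```python
import math
import statistics
from typing import List, Sequence


def gamma_ratio(lambda_all: Sequence[float], lambda_without: Sequence[float]) -> List[float]:
    """Gamma_0(z_h) = Lambda_0(z) / Lambda_0(z without z_h), computed for each DMU."""
    return [a / b for a, b in zip(lambda_all, lambda_without)]


def silverman_bandwidth(x: Sequence[float]) -> float:
    """Silverman's rule of thumb: 0.9 * min(sd, IQR / 1.34) * n^(-1/5) (assumed variant)."""
    n = len(x)
    sd = statistics.stdev(x)
    q1, _, q3 = statistics.quantiles(x, n=4)
    iqr = q3 - q1
    spread = min(sd, iqr / 1.34) if iqr > 0 else sd
    return 0.9 * spread * n ** (-1 / 5)


def nadaraya_watson(x: Sequence[float], y: Sequence[float], x0: float, h: float) -> float:
    """Gaussian-kernel weighted average of y around x0 (local-constant regression)."""
    weights = [math.exp(-0.5 * ((x0 - xj) / h) ** 2) for xj in x]
    return sum(w * yj for w, yj in zip(weights, y)) / sum(weights)


# Hypothetical SMI values and congestion coefficients (with / without z_6) for six DMUs.
smi = [0.6, 0.8, 1.0, 1.2, 1.4, 1.6]
gamma = gamma_ratio([0.42, 0.45, 0.50, 0.55, 0.60, 0.66],
                    [0.50, 0.50, 0.52, 0.53, 0.55, 0.58])
h = silverman_bandwidth(smi)
curve = [nadaraya_watson(smi, gamma, x0, h) for x0 in smi]
print(h, curve)
```

Under the reading given in the text, an upward-sloping fitted curve indicates that higher values of the variable go with lower congestion.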

4 Empirical Results

4.1 Identifying Congestion levels

4.1.1 Global results of technical efficiency

Table 4 provides the main results of the bias- and environment-corrected efficiency and congestion measures, by category. The 3rd column covers the whole sample, while the 4th column onwards shows the results for congested units only. ICUs are generally highly technically inefficient. Keeping their resources unchanged and ignoring the congestion effect, their outputs (InpD) fall about 78 % short of the frontier (\( =1-{\left[{\theta}_0^{*}\right]}^{-1}=1-{\left[4.5694\right]}^{-1}\approx 0.78 \)). This high inefficiency is prominent in Pediatric, Gynecology, Obstetrics and Neonatology ICUs and in Surgical ICUs, two specialties with similar levels of (in)efficiency. These inefficiency levels increased over time, but the differences are not statistically significant according to the Kruskal-Wallis nonparametric test. Moreover, criteria such as the hospital legal status, merging status or type do not explain these inefficiency levels, at least at the 1 % significance level. Differences do remain, however, across ICU specialties, reflecting the inherent complexity of the inpatients they treat. Neither the latest hospital reforms (merging and legal statuses) nor possible economies of scope and scale improved the technical efficiency of ICUs in the period 2002-2009. However, if the sample is divided into congested and non-congested DMUs, the inefficiency is significantly lower in congested ICUs from differentiated hospitals. Furthermore, non-congested ICUs operate, on average, in the increasing RTS region, as shown in Table 7 (Appendix E).
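The Kruskal-Wallis test, used throughout this section, compares the rank distributions of several groups, here for example ICU specialties or years. Below is a minimal, dependency-free sketch of its H statistic with the usual tie correction. Under the null hypothesis, H is approximately χ² with k − 1 degrees of freedom for k groups. A p-value can therefore be obtained with, e.g., `scipy.stats.chi2.sf(H, k - 1)`, or the whole test run with `scipy.stats.kruskal`. The group values are hypothetical.

```python
from itertools import chain
from typing import Dict, List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the average of the ranks they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1  # mean of positions i+1 .. j+1
        i = j + 1
    return ranks


def kruskal_wallis_h(groups: Sequence[Sequence[float]]) -> float:
    """Kruskal-Wallis H statistic, corrected for ties."""
    pooled = list(chain.from_iterable(groups))
    n = len(pooled)
    ranks = average_ranks(pooled)
    total, start = 0.0, 0
    for g in groups:
        rank_sum = sum(ranks[start:start + len(g)])
        total += rank_sum ** 2 / len(g)
        start += len(g)
    h = 12.0 / (n * (n + 1)) * total - 3.0 * (n + 1)
    counts: Dict[float, int] = {}
    for v in pooled:
        counts[v] = counts.get(v, 0) + 1
    correction = 1.0 - sum(t ** 3 - t for t in counts.values()) / (n ** 3 - n)
    return h / correction if correction > 0 else 0.0


# Hypothetical efficiency scores for three ICU specialties.
print(kruskal_wallis_h([[1.2, 1.5, 1.9], [2.4, 2.8, 3.1], [4.0, 4.5, 5.2]]))
```

Because the test uses only ranks, it needs no normality assumption. This suits efficiency and congestion scores, which are bounded and typically skewed.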

4.1.2 Global results of congestion

The sample exhibits considerable levels of strong congestion, as shown in Table 4 (7th column) and confirmed by the bootstrap-based test of the hypothesis \( H_{0(1)}:p_{r=1}^{+*}=\tilde{p}_{r=1}^{+*}=0\ \cap\ \beta_0^{*}=\theta_0^{*}=1\iff\Lambda_0=1 \). The statistics \( T_b \) returned p ≈ 0 for all the aggregating functions employed, so the null hypothesis can be rejected at any usual significance level. Because no output slacks, \( p_{r=1}^{+*} \) and \( \tilde{p}_{r=1}^{+*} \), were identified, Λ0 (Eq. (5)) and \( C_0\left(\theta_0^{*},\beta_0^{*}\right) \) (Eq. (3)) coincide. On average, congested ICUs could have increased their production (number of discharges) by about 120 % (\( ={\Lambda}_0^{-1}-1={\left[0.4539\right]}^{-1}-1\approx 1.20 \)) by reducing the resources they consumed. Overall, 45 % of the sample was identified as congested; of these, 65 % were weakly congested and the remaining 35 % strongly congested. We also test the hypotheses \( H_{0(2)}:\Lambda_0=\Lambda_{weak,0} \) and \( H_{0(3)}:f_m\left(1-\frac{t_i^{-*}}{x_{i0}}\right)=\Lambda_0\cdot\theta_0^{*}+\frac{\Lambda_0\cdot p_r^{+*}+t_r^{+*}}{y_{r0}} \) by bootstrap. Once again, the p-values are close to zero. We conclude that the weak congestion coefficient is effectively lower than the strong congestion coefficient and that weak congestion is statistically significant. Accordingly, very low weak congestion coefficients were obtained, revealing that weakly congested ICUs show considerable congestion levels. As a consequence, there is huge room for improving input management and for cost savings. This is consistent with the average DSE value of −3.5178 found for weakly congested ICUs (Table 7, Appendix E). That is, about 7 new discharges could have occurred through an average decrease in inputs of 2,000€ (in costs) and/or 2 hospital days.
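The two percentages quoted in subsections 4.1.1 and 4.1.2 follow from simple transformations of the average scores, which the sketch below reproduces. It assumes output orientation, so that \( \theta_0^*\ge 1 \) is an output expansion factor and \( 0<\Lambda_0\le 1 \). The middle function is added only for comparison. It shows that the same \( \theta_0^* \) corresponds to a relative output increase of \( \theta_0^*-1 \), which is a different quantity from the shortfall \( 1-1/\theta_0^* \).

```python
def output_shortfall(theta: float) -> float:
    """Share of the frontier output that is not produced: 1 - 1/theta."""
    return 1.0 - 1.0 / theta


def output_expansion(theta: float) -> float:
    """Relative output increase needed to reach the frontier: theta - 1."""
    return theta - 1.0


def congestion_gain(lam: float) -> float:
    """Relative output increase attainable by removing congestion: 1/Lambda - 1."""
    return 1.0 / lam - 1.0


print(round(output_shortfall(4.5694), 2))   # 0.78
print(round(output_expansion(4.5694), 2))   # 3.57
print(round(congestion_gain(0.4539), 2))    # 1.2
```

These conversions apply the formulas to the reported averages, as the text does. They are not averages of DMU-level conversions, which would generally differ.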

4.1.3 Determinants on congestion levels

Categorical and discrete environment variables

The ICU specialty is clearly a determinant of both technical efficiency and congestion. When the sample is split by specialty, the Kruskal-Wallis test indicates significantly different efficiency and congestion distributions. Cardiology ICUs present the lowest strong and weak congestion levels. They are followed, ex aequo, by Polyvalent ICUs and Surgical ICUs.

Time did not prove to be a determinant of congestion, either strong or weak, at least at the 1 % significance level. Congestion distributions therefore do not change significantly over time, and those significant congestion levels can be expected to persist today.

As with time, the legal status of the hospital where the ICU(s) is (are) located has no significant impact on congestion distributions, so it is not a determinant of congestion. Likewise, neither the hospital type nor the hospital merging status is a congestion determinant. As in the efficiency case, these government reforms have not yet produced the desired reduction in resource waste. That reduction was expected under New Public Management, the philosophy that guided those reforms.

SMI

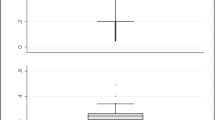

Figure 1 shows the Nadaraya-Watson nonparametric regressions (and the 95 % CIs) of \( \Gamma_0(z_6)=\Lambda_0(z)/\Lambda_0(z\backslash z_6) \) and \( \Gamma_{weak,0}(z_6)=\Lambda_{weak,0}(z)/\Lambda_{weak,0}(z\backslash z_6) \), which capture the impact of the SMI on the strong and weak congestion measures. It is evident (and statistically significant) that the higher the ICU complexity, the lower the strong congestion. These services (e.g., transplant and burn units) thus seem to allocate and manage resources better than other, less complex, ICU services. On the other hand, the SMI has little (though statistically non-null) impact on weak congestion.

GSI

Appendix E contains the Nadaraya-Watson regressions for the remaining continuous environment variables, \( z_h,\ h=7\dots 10 \) (Figs. 2, 3, 4 and 5). The hospital specialization degree (GSI) is positively associated with strong congestion: the higher that degree, the higher the strong congestion level. This variable seems to have no meaningful impact on weak congestion. Hence, highly differentiated hospitals are more prone to strong congestion. The comparison between undifferentiated hospitals and oncology centers (Table 4) points in the same direction. The latter (more differentiated) present slightly higher congestion levels than the former. These differences are not statistically significant according to the Kruskal-Wallis test, which can be attributed to the considerable range of GSI values among undifferentiated hospitals. For instance, general hospitals deal with the most complex cases (in terms of illness) and present the highest service complexity (measured by the SMI). Yet they are quite undifferentiated (low GSI) and are therefore less congested than differentiated hospitals. The SMI and GSI results are thus consistent.

Population density

Population density affects only weak congestion: the higher the population density, the higher the weak congestion levels. Rural regions usually have low population density, and their hospitals typically offer less complex services (low SMI). On the other hand, urban regions such as Lisbon and Oporto host a set of highly differentiated hospitals (e.g., oncology centers; see Table 6, Appendix E), which, as discussed for the GSI, are prone to congestion. These results seem consistent with previous findings.

Wealth index

As with the SMI, the higher the wealth index, the lower the congestion levels. The positive correlation between this index and population density (Table 6) might suggest otherwise. However, following the Grossman model [48], wealthier populations can be expected to have higher education levels, so their health relies more on prevention than on treatment. Their morbidity and probability of unexpected death are therefore expected to be lower than those of poorer people. A priori, they also consume fewer resources and require less differentiated care (e.g., more prevention, fewer cancers), and such care is more prone to congestion.

Aging index

Like the SMI and the wealth index, the aging index affects both strong and weak congestion levels: the higher the index, the lower the congestion in ICUs. The aging index and the population density are negatively correlated (see Table 6), i.e., aging populations tend to live in rural areas. The hospitals where they are treated therefore lack highly complex ICU services, such as transplant and burn units, and are thus more congested, as predicted by the SMI impact. When these (elderly) patients need highly complex ICU treatment, they are transferred to urban general hospitals.

4.2 Identifying Congestion main sources

To identify the congestion sources, we compute the MP of each input. As there is only one output, Eq. (8) reduces to \( MP\left({x}_{i0},{y}_{10}\right)={y}_{10}\cdot {\tilde{v}}_{i0}^{*},\ i=1\dots m \). This quantity is negative whenever \( {\tilde{v}}_{i0}^{*}<0 \), revealing congestion. Table 8 (Appendix E) summarizes the MP values (10 % trimmed means) by category. Under strong congestion, all inputs are strong congestion sources, so the results in Table 8 concern only the weak congestion cases. They should be interpreted as follows. Keeping the remaining inputs unchanged, an increase of 1 HospDay, or of 1,000€ in a specific cost-related input, raises (resp. lowers) the number of discharges by \( \left|MP\left({x}_{i0},{y}_{10}\right)\right| \) if MP is positive (resp. negative). For instance, taking the global results, an increase of 1,000€ in medicines and clinical material (CGSC) increases the number of discharges by about 18 inpatients, on average. Likewise, a decrease of 2 hospital days is likely to increase discharges by about 3 inpatients. This shows that the length of stay in the ICU is its main congestion source. It is particularly relevant in specialties such as Cardiology and Surgical ICUs, where a decrease of 1 HospDay would increase the number of discharges by 4-5. This is an expected result, as the input HospDays strongly depends on bed availability and on the average length of stay, which is considerable in ICUs owing to the level of care required. Accordingly, we recall and confirm our assumption that HospDays contributes to service congestion, as long stays likely promote the onset of other diseases, such as nosocomial infections.
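Under the single-output simplification of Eq. (8), computing MPs and flagging congestion sources takes only a few lines. The sketch below is illustrative only. The dual values \( \tilde{v}_{i0}^* \) and the output level are hypothetical numbers chosen to mimic the magnitudes quoted in the text (about +18 discharges per 1,000€ of CGSC, about −1.5 discharges per HospDay, and about 37,600€ of StaffC per discharge). They are not values from Table 8.

```python
from typing import Dict, List


def marginal_products(y10: float, v_tilde: Dict[str, float]) -> Dict[str, float]:
    """MP(x_i0, y_10) = y_10 * v~_i0* for each input i (single output)."""
    return {name: y10 * v for name, v in v_tilde.items()}


def congestion_sources(mp: Dict[str, float]) -> List[str]:
    """Inputs with a negative marginal product, i.e., congestion sources."""
    return sorted(name for name, value in mp.items() if value < 0.0)


def discharge_change(mp_i: float, delta_x: float) -> float:
    """Approximate change in discharges when input i changes by delta_x units
    (1 HospDay or 1,000 EUR), keeping the other inputs unchanged."""
    return mp_i * delta_x


# Hypothetical dual values for one weakly congested ICU with 100 discharges.
mp = marginal_products(100.0, {"HospDays": -0.015, "StaffC": -0.000266, "CGSC": 0.18})
print(congestion_sources(mp))                  # ['HospDays', 'StaffC']
print(discharge_change(mp["HospDays"], -2.0))  # cutting 2 HospDays: roughly +3 discharges
```

The linear `discharge_change` is only a local approximation around the observed input mix, which is how the per-unit interpretation in the text should be read.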

Staff costs also play a role in congestion, although a weak one on average. An average decrease of about 37,600€ in StaffC would be needed to increase InpD by just one, which is unlikely in practice. However, this role becomes meaningful in Polyvalent ICUs, in Pediatric, Gynecology, Obstetrics and Neonatology ICUs, in Oncology centers and in EPE hospitals (a legal status that came into force in 2005). We also observe that, from 2005 onwards, StaffC replaces HospDays as the most important congestion source. According to Ferreira and Marques [28], the corporatization reform (legal status) “refers to the application of private management tools to the public sector”, so EPE hospitals have more autonomy in hiring staff. This freedom may be the reason why StaffC is a congestion source. On the other hand, HospDays is no longer a major source of congestion in the ICUs of EPE hospitals. This suggests that the private management rules introduced in corporatized hospitals may have forced a reduction in the average length of stay, even in ICUs. Unnecessary and even harmful HospDays were thus reduced to a minimum.

Furthermore, StaffC and HospDays are strongly correlated (τ = 0.5067): the more severe and complex the inpatients' illness, the longer the hospitalization and the higher the costs with doctors and nurses. Hence, as there is a surplus of HospDays, there is also a surplus of StaffC. This also seems to explain why StaffC is a congestion source in hospital centers. The (horizontal) merging reform did not change staffing levels; rather, some services were closed so as to exploit potential economies of scope and scale. In ICUs, this appears to have had a perverse effect, resulting in some staff surplus.

Finally, CGSC, SEServ and CapC are not meaningful sources of congestion in ICUs, which reveals good management of clinical material (including medicines), outsourcing and capital investments. Given their considerable positive MPs, these three inputs may also help improve ICU production.

5 Concluding remarks

The objective of this paper is threefold: first, to estimate the bias- and environment-corrected congestion of ICUs; second, to check whether any of the environment variables, either internal or external, is a determinant of congestion; and third, to verify which (if any) of the inputs act as congestion sources. Considerable and statistically significant congestion levels were identified. ICU management should therefore be careful in resource allocation so as to prevent congestion and improve production (in this case, the number of inpatients leaving the ICU alive for other hospital services). This resource allocation should focus on the dimensions identified as congestion sources: staff costs and hospital days. The remaining inputs seem to be well managed in terms of congestion, although they may still exhibit other signs of inefficiency. Nevertheless, they do not seem to negatively affect the number of ICU discharges. Both congestion levels and sources depend on several determinants, including the ICU specialty, the ICU complexity, the hospital differentiation degree and the demographic patterns of the population. ICU management should therefore also take these factors into account in the resource allocation process. Despite the relative age of the database, time was shown not to be a determinant of congestion levels. Congestion levels can therefore be expected to remain roughly unchanged at present, and some results may be extrapolated to the current situation. This must, of course, be confirmed with a more recent database.

Finally, this study, like any other, is not flawless. An important issue left for further research is the adjustment of inpatients by their probability of death at ICU admission. However, our model is adjusted for the environment, which, according to Ferreira and Marques [27], mimics inpatient complexity and accounts for heterogeneity among ICUs. With a more recent database, this kind of data should be included and this hypothesis confirmed. Additionally, no quality data were considered in this study. Unfortunately, such information exists neither for the ICU services nor for the period considered (2002-2009). Nevertheless, we believe that quality plays a very important role in the performance of ICU services (and of hospitals in general). Including quality data (such as in-ICU mortality rates) is therefore an important topic for further research.

Notes

Hereinafter, an asterisk (*) denotes the optimal value of a linear programming model variable.

\( \rho_0^{-*} \) is obtained by minimizing, instead of maximizing, the linear program in Eq. (10).

We are aware that the data may be somewhat old. Still, there is no apparent reason to believe that either the congestion sources or the environmental impact on congestion has changed significantly to the present day.

We do not include “work force” variables, such as the number of nurses and doctors, as inputs. Staff are multidisciplinary and work across different hospital departments, and the information provided by the official sources does not allow the staff working in ICUs to be distinguished from those in other departments.

All data required for this research are available from the official database of the Portuguese Ministry of Health, the Central Administration of Health Systems (cf. http://www.acss.min-saude.pt/), from the statutory annual reports of each hospital, and from http://www.pordata.pt/en/Municipalities.

The adjustment for environment (subsection 3.3) should be sufficient to account for inpatient complexity, as claimed by Ferreira and Marques [27].

This results in \( \sqrt[{\vartheta}_w+4]{\frac{16}{n^2{\left({\vartheta}_w+2\right)}^2}}=\sqrt[9+4]{\frac{16}{630^2{\left(9+2\right)}^2}}\approx 0.3175 \), which is the bandwidth used for the multidimensional kernel function.

All computational frameworks were developed by the authors in Matlab®.

Multiple optima (solutions) are not problematic in the present case. Indeed, our results are consistent with those obtained through the approach proposed by Sueyoshi and Sekitani [31]. However, to avoid an overly long paper and to keep the analysis as simple as possible, those results are not displayed but can be provided upon request.

Mutatis mutandis, it can be easily adapted to the other two hypotheses.
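The bandwidth value quoted in the notes above can be reproduced numerically. The sketch below evaluates \( \left(16/(n^2(\vartheta_w+2)^2)\right)^{1/(\vartheta_w+4)} \) for n = 630 and \( \vartheta_w=9 \). It also checks that this expression equals the square of the normal-reference rule \( \left(4/((\vartheta_w+2)n)\right)^{1/(\vartheta_w+4)} \). That equivalence is an algebraic observation added here, not a statement from the paper.

```python
import math


def kernel_bandwidth(n: int, d: int) -> float:
    """(16 / (n^2 (d + 2)^2))^(1 / (d + 4)), as written in the note."""
    return (16.0 / (n ** 2 * (d + 2) ** 2)) ** (1.0 / (d + 4))


def normal_reference(n: int, d: int) -> float:
    """Multivariate normal-reference (Silverman-type) bandwidth (4 / ((d + 2) n))^(1 / (d + 4))."""
    return (4.0 / ((d + 2) * n)) ** (1.0 / (d + 4))


h = kernel_bandwidth(n=630, d=9)
print(round(h, 4))                                      # 0.3175
print(math.isclose(h, normal_reference(630, 9) ** 2))  # True
```

The squared form is natural if the bandwidth scales a covariance matrix such as S rather than a standard deviation, although the text does not make this explicit.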

Abbreviations

- CapC: Capital Costs
- CI: Confidence Interval
- CGSC: Costs of Goods Sold and Consumed
- CRS: Constant Returns to Scale
- DEA: Data Envelopment Analysis
- DMU: Decision Making Unit
- DSE: Degree of Scale Economies
- EPE: Entidade Pública Empresarial (public business entity)
- EU: European Union
- GDP: Gross Domestic Product
- GSI: Gini’s Specialization Index
- HC: Hospital Center
- HospDays: Hospital Day(s)
- ICU: Intensive Care Unit
- InpD: Inpatient Discharge(s)
- LHU: Local Health Unit
- LVP: Law of Variable Proportions
- MP: Marginal Product
- RTS: Returns to Scale
- SA: Sociedade Anónima (public limited company)
- SDH: Strong Disposability Hull
- SEServ: Supplies and External Services
- SH: Singular Hospital
- SMI: Service-Mix Index
- SPA: Serviço Público Administrativo (administrative public service)
- StaffC: Staff Costs
- VRS: Variable Returns to Scale
- WDH: Weak Disposability Hull

References

Cooper WW, Seiford LM, Zhu J (2000) A unified additive model approach for evaluating inefficiency and congestion with associated measures in DEA. Socio-Econ Plan Sci 34:1–25

Nayar P, Ozcan YA, Yu F, Nguyen AT (2013) Benchmarking urban acute care hospitals: Efficiency and quality perspectives. Health Care Manag Rev 38(2):137–145

Hsieh HM, Clement DG, Bazzoli GJ (2010) Impacts of market and organizational characteristics on hospital efficiency and uncompensated care. Health Care Manag Rev 35(1):77–87

Kahn JM, Goss CH et al (2006) Hospital volume and the outcomes of mechanical ventilation. N Engl J Med 355(1):41–50

Khandelwal N, Benkeser DC, Coe NB, Curtis JR (2016) Potential Influence of Advance Care Planning and Palliative Care Consultation on ICU Costs for Patients with Chronic and Serious Illness. Crit Care Med 44(8):1474–1481

Halpern NA, Pastores SM, Greenstein RJ (2004) Critical care medicine in the United States 1985-2000: An analysis of bed numbers, use, and costs. Crit Care Med 32(6):1254–1259

Barrett ML, Smith MW, Elixhauser A, Honigman LS, Pines JM (2014) Utilization of Intensive Care Services, 2011. HCUP Statistical Brief #185. Agency for Healthcare Research and Quality, Rockville. https://www.hcup-us.ahrq.gov/reports/statbriefs/sb185-Hospital-Intensive-Care-Units-2011.jsp. Accessed 15 September 2016

Puig-Junoy J (1998) Technical Efficiency in the Clinical Management of Critically Ill Patients. Health Econ 7:263–277

Tsekouras K, Papathanassopoulos F, Kounetas K, Pappous G (2010) Does the adoption of new technology boost productive efficiency in the public sector? The case of ICUs system. Int J Prod Econ 128:427–433

Dervaux B, Leleu H, Minvielle E, Valdmanis V, Aegerter P, Guidet B (2009) Performance of French intensive care units: A directional distance function approach at the patient level. Int J Prod Econ 120:585–594

Clement J, Valdmanis V, Bazzoli G, Zhao M, Chukmaitov A (2008) Is more, better? An analysis of hospital outcomes and efficiency with a DEA model of output congestion. Health Care Manag Sci 11:67–77

Valdmanis V, Rosko M, Muller R (2008) Hospital quality, efficiency, and input slack differentials. Health Serv Res 43:1830–1848

Ferrier GD, Rosko M, Valdmanis V (2006) Analysis of uncompensated hospital care using a DEA model of output congestion. Health Care Manag Sci 9:181–188

Arrieta A, Guillén J (2015) Output congestion leads to compromised care in Peruvian public hospital neonatal units. Health Care Manag Sci. doi:10.1007/s10729-015-9346-y

Matranga D, Sapienza F (2015) Congestion analysis to evaluate the efficiency and appropriateness of hospitals in Sicily. Health Policy 119(3):324–332

Simões P, Marques RC (2011) Performance and Congestion Analysis of the Portuguese Hospital Services. CEJOR 19:39–63

Mathews KS, Long EF (2015) A Conceptual Framework for Improving Critical Care Patient Flow and Bed Use. Ann Am Thorac Soc 12(6). doi:10.1513/AnnalsATS.201409-419OC

Chan CW, Farias VF, Escobar GJ (2016) The Impact of Delays on Service Times in the Intensive Care Unit. Manag Sci. doi:10.1287/mnsc.2016.2441

Farrell MJ (1957) The measurement of productive efficiency. J R Stat Soc Ser A 120:253–281

Charnes A, Cooper WW, Rhodes E (1978) Measuring efficiency of decision-making units. Eur J Oper Res 2:429–444

Ozcan YA (2008) Health Care Benchmarking and Performance Evaluation: An Assessment using Data Envelopment Analysis (DEA). Springer, New York

Hollingsworth B (2003) Non-parametric and parametric applications measuring efficiency in health care. Health Care Manag Sci 6:203–218

Ruggiero J (2007) A comparison of DEA and the stochastic frontier model using panel data. Int Trans Oper Res 14:259–266

De Witte K, Marques R (2009) Capturing the environment, a metafrontier approach to the drinking water sector. Int Trans Oper Res 16:257–271

Jacobs R, Smith PC, Street A (2006) Measuring Efficiency in Health Care: Analytic Techniques and Health Policy. Cambridge University Press, Cambridge

Hollingsworth B (2008) The Measurement of Efficiency and Productivity of Health Care Delivery. Health Econ 17(10):1107–1128

Ferreira D, Marques RC (2016) Should inpatients be adjusted by their complexity and severity for efficiency assessment? Evidence from Portugal. Health Care Manag Sci 19(1):43–57

Ferreira D, Marques RC (2015) Did the corporatization of Portuguese hospitals significantly change their productivity? Eur J Health Econ 16(3):289–303

Marques RC, Carvalho P (2012) Estimating the efficiency of Portuguese hospitals using an appropriate production technology. Int Trans Oper Res 20(2):1–17

Cooper WW, Seiford LM, Tone K (2007) Data Envelopment Analysis: A Comprehensive Text with Models, Applications, References and DEA-solver Software. Kluwer Academic Publishers, Boston

Sueyoshi T, Sekitani K (2009) DEA congestion and returns to scale under an occurrence of multiple optimal projections. Eur J Oper Res 194(2):592–607

Färe R, Grosskopf S, Lovell CAK (1985) The measurement of efficiency of production. Kluwer-Nijhoff Publishing

Färe R, Grosskopf S, Lovell CAK (1994) Production Frontiers. Cambridge University Press, Cambridge

Färe R, Svensson L (1980) Congestion of production factors. Econometrica 48:1745–1753

Sueyoshi T (2003) DEA Implications of Congestion. Asia Pacific Manag Rev 8(1):59–70

Tone K, Sahoo BK (2004) Degree of scale economies and congestion: a unified DEA approach. Eur J Oper Res 158:755–772

Ferreira D, Marques RC (2016) Malmquist and Hicks-Moorsteen Productivity Indexes for Clusters Performance Evaluation. Int J Inf Technol Decis Mak, forthcoming

Herr A (2008) Cost and technical efficiency of German hospitals: does ownership matter? Health Econ 17(9):1057–1071

Daraio C, Simar L (2007) Advanced robust and nonparametric methods in efficiency analysis: Methodology and applications. Springer Science + Business Media, LLC, New York

Ferreira D, Marques RC, Pedro I (2016) Comparing efficiency of holding business model and individual management model of airports. J Air Transp Manag, forthcoming

Rego G, Nunes R, Costa J (2010) The challenge of corporatisation: the experience of Portuguese public hospitals. Eur J Health Econ 11:367–381

Ozcan YA, Luke RD (1993) A national study of the efficiency of hospitals in urban markets. Health Serv Res 28(6):719–739

Ozcan YA (1993) Sensitivity analysis of hospital efficiency under alternative output/input and peer groups: a review. Knowl Policy 5(4):1–29

Lobo MSC, Ozcan YA, Lins MPE, Silva ACM, Fiszman R (2014) Teaching hospitals in Brazil: findings on determinants for efficiency. Int J Healthcare Manag 7(1):60–68

Daidone S, D’Amico F (2009) Technical efficiency, specialization and ownership form: evidences from a pooling of Italian hospitals. J Prod Anal 32:203–216

Lindlbauer I, Schreyögg J (2014) The relationship between hospital specialization and hospital efficiency: do different measures of specialization lead to different results? Health Care Manag Sci 17:365–378

Simar L, Wilson PW (1998) Sensitivity analysis of efficiency scores: how to bootstrap in nonparametric frontier models. Manag Sci 44:49–61

Grossman M (1972) On the Concept of Health Capital and the Demand for Health. J Polit Econ 80(2):223–255

Kuntz L, Sülz S (2011) Modeling and notation of DEA with strong and weak disposable outputs. Health Care Manag Sci 14(4):385–388

Acknowledgments

We would like to thank three anonymous referees, whose beneficial comments significantly improved this paper’s quality, clarity and structure. We also acknowledge the financial support of the Portuguese Foundation for Science and Technology (FCT): SFRH/BD/113038/2015. The second author thanks the FCT (Portuguese national funding agency for science, research and technology) for supporting his sabbatical leave at Cornell University, USA, during which part of this research took place.


Appendices

Appendix

1.1 Literature review

See Table 5.

Service-Mix Index

The inefficiency-corrected SMI for the ICU service DMU0, defined by the pair \( (x_{i0}, y_{r0}) \in \mathbb{R}_{+}^{5+1} \), can be computed as follows:

1) Use Eq. (19) to obtain the optima \( \{\theta_0^{*}, p_1^{-*}, \dots, p_5^{-*}, p_1^{+*}\}_{SDH} \) and project \( (x_{i0}, y_{r0}) \) onto the SDH frontier using the transformation \( (\check{x}_{i0}, \check{y}_{10}) = (\theta_0^{*} \cdot x_{i0} - p_i^{-*}, \; y_{10} + p_1^{+*}) \in \mathbb{R}_{+}^{5+1} \). This projection removes the unit's technical inefficiency. To obtain Ω, we follow the strategy proposed in Subsection 3.3, considering all environment variables except Z6, for obvious reasons.

2) Repeat step 1) for all DMUs and obtain the set \( \nabla = \{ (\sum_{i=1}^{4} \check{x}_{ij}, \check{y}_{1j}) = (\theta_j^{*} \cdot \sum_{i=1}^{4} x_{ij} - \sum_{i=1}^{4} p_{ij}^{-*}, \; y_{1j} + p_{1j}^{+*}) \in \mathbb{R}_{+}^{2}, \; j = 1, \dots, n \} \). ∇ only contains data on the single output and the first four inputs (monetary resources).

3) Compute, for the j-th unit, the ratio \( \xi_j = (\sum_{i=1}^{4} \check{x}_{ij}) / \check{y}_{1j}, \; j = 1, \dots, n \), which represents the efficient unit cost of DMU j.

4) Compute \( \mathcal{B} = \prod_{j=1}^{n} \xi_j^{1/n} \), the geometric mean of the efficient unit costs, which serves as the national baseline.

5) The SMI of DMU0 is then \( SMI_0 = \xi_0 / \mathcal{B} \), where \( \xi_0 = (\sum_{i=1}^{4} \check{x}_{i0}) / \check{y}_{10} \).
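Once the DEA optima are known, the SMI procedure reduces to simple arithmetic. The Python sketch below is illustrative only: it assumes that θ*, p⁻* and p⁺* from Eq. (19) have already been obtained for every DMU by some DEA solver (not shown), and the helper names `project_sdh` and `service_mix_index` are hypothetical. The baseline 𝓑 is computed in log space, which equals the product of ξ_j^{1/n} but avoids overflow or underflow when n is large.

```python
import math


def project_sdh(x0, y0, theta, p_minus, p_plus):
    """Efficient projection of one unit: inputs theta* x_i - p_i^-*, output y_1 + p_1^+*.
    theta, p_minus and p_plus are assumed to come from Eq. (19), solved elsewhere."""
    x_proj = [theta * xi - pi for xi, pi in zip(x0, p_minus)]
    return x_proj, y0 + p_plus


def service_mix_index(projections):
    """projections: list of (x_proj, y_proj) pairs, one per DMU.
    Only the first four (monetary) inputs enter the unit cost."""
    # Efficient unit cost: xi_j = (sum of the four monetary inputs) / output
    xi = [sum(x[:4]) / y for x, y in projections]
    # Baseline B = prod_j xi_j^(1/n), i.e. the geometric mean, computed via logs
    baseline = math.exp(sum(math.log(v) for v in xi) / len(xi))
    # SMI_j = xi_j / B
    return [v / baseline for v in xi]
```

For instance, two units with efficient unit costs of 4 and 8 give a baseline of √32 ≈ 5.66 and SMIs of about 0.71 and 1.41.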

Gini’s Specialization Index

The \( GSI_k \) for hospital k is computed as follows [45, 46]:

1) Let ℒ be the number of Disease Related Groups (DRG);

2) Sort the DRGs by the number of discharges treated, in ascending order;

3) Let \( \mathcal{D}_w^k \) be the number of discharges in the w-th DRG of hospital k;

4) Let \( q_i^k, \; i = 1, \dots, \mathcal{L}-1 \), be the share of total discharges treated in the first i DRGs, i.e., \( q_i^k = \sum_{\ell=1}^{i} \mathcal{D}_{\ell}^k / \sum_{w=1}^{\mathcal{L}} \mathcal{D}_w^k \);

5) Compute \( GSI_k \in [0; 1] \) using Eq. (20).
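Eq. (20) is not reproduced in this appendix. Assuming it takes the common Gini form \( GSI_k = \sum_{i=1}^{\mathcal{L}-1}(p_i - q_i^k) / \sum_{i=1}^{\mathcal{L}-1} p_i \) with \( p_i = i/\mathcal{L} \) (our assumption, to be checked against Eq. (20)), the steps above can be sketched in Python as follows. The function name is hypothetical; the sketch needs at least two DRGs and a positive total number of discharges.

```python
def gini_specialization(discharges):
    """discharges[w]: discharges of hospital k in DRG w (at least two DRGs).
    Assumes the Gini form sum(p_i - q_i) / sum(p_i) with p_i = i / L."""
    d = sorted(discharges)             # DRGs sorted by discharges, ascending
    n_drg, total = len(d), sum(d)
    num = den = 0.0
    cum = 0
    for i in range(1, n_drg):          # i = 1, ..., L - 1
        cum += d[i - 1]
        p_i = i / n_drg                # share of DRGs among the first i
        q_i = cum / total              # share of total discharges in the first i DRGs
        num += p_i - q_i
        den += p_i
    return num / den
```

Equal discharges across DRGs give 0 (no specialization), concentrating all discharges in a single DRG gives 1, and discharges (1, 2, 3, 4) give 1/3.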

Bootstrapping

Based on Simar and Wilson [47] and Daraio and Simar [38], the output-oriented bootstrap algorithm is as follows:

1) Compute the n output-oriented DEA efficiency scores under the strong or the weak disposability assumption, Eqs. (6) and (7), respectively. For the sake of generality, suppose we obtain the set of efficiency scores \( \Phi = \{\theta_j, \; j = 1, \dots, n\} \), with standard deviation \( \sigma_{\Phi} \) and interquartile range \( r_{\Phi} \).

2) Reflect Φ to obtain the 2n-length set \( \Phi' = \{2 - \theta_1, 2 - \theta_2, \dots, 2 - \theta_n, \theta_1, \theta_2, \dots, \theta_n\} \).

3) Consider only those p DMUs such that \( \theta_b > 1, \; b = 1, \dots, p < n \); from Φ′, create the 2p-length set \( \Phi'' = \{2 - \theta_1, 2 - \theta_2, \dots, 2 - \theta_p, \theta_1, \theta_2, \dots, \theta_p\} \subset \Phi' \). Φ″ has standard deviation \( \sigma_{\Phi''} \) and interquartile range \( r_{\Phi''} \).

4) Compute the bandwidth \( d \approx \left(1.06 \cdot \sigma_{\Phi} \cdot \min\left\{\sigma_{\Phi''}, \frac{r_{\Phi''}}{1.34}\right\} (2p)^{4/5}\right) / \left(n \cdot \sigma_{\Phi''}\right) \).

5) Randomly draw, with replacement, an n-length sample from Φ′ (step 2)) and obtain the set \( \Phi^{\star} = \{\tilde{\theta}_j^{\star}, \; j = 1, \dots, n\} \), with standard deviation σ⋆ and arithmetic mean m⋆.

6) Use a perturbation \( \chi_j = d \cdot \zeta_j \), where \( \zeta_j \sim \mathcal{N}(\mu = 0, \sigma = 1) \), to obtain the set

$$ \Phi^{\star\star} = \left\{ \tilde{\theta}_j^{\star\star} = \frac{\tilde{\theta}_j^{\star} + \chi_j - m^{\star}}{\sqrt{1 + \left(d / \sigma^{\star}\right)^2}} + m^{\star} \; : \; \chi_j = d \cdot \zeta_j, \; \zeta_j \sim \mathcal{N}(\mu = 0, \sigma = 1), \; \tilde{\theta}_j^{\star} \in \Phi^{\star}, \; j = 1, \dots, n \right\} $$(21)

7) Reflect the n units of Φ⋆⋆ as follows:

$$ \theta_j^{\star\star} = \begin{cases} 2 - \tilde{\theta}_j^{\star\star} & \text{if } \tilde{\theta}_j^{\star\star} < 1 \\ \tilde{\theta}_j^{\star\star} & \text{otherwise} \end{cases} $$(22)

8) Create the pseudo-sample \( \Im^{\star\star} = \{(x_{ij}^{\star}, y_{rj}^{\star}) \in \mathbb{R}_{+}^{m+s} : \; x_{ij}^{\star} = x_{ij}, \; y_{rj}^{\star} = y_{rj} \, \theta_j / \theta_j^{\star\star}, \; j = 1, \dots, n\} \), and re-run Eqs. (6) and (7) to project the units onto the new frontier and obtain the bootstrap-based efficiency scores under the strong or the weak disposability assumption, respectively.

9) Repeat steps 5) to 8) B times, where B is large, e.g., B ≈ 1,000 iterations.

10) Let \( m_{Bj} \) and \( \sigma_{Bj} \) be the arithmetic mean and the standard deviation of the B bootstrap-based efficiency scores of unit j. The bias is then \( bias_j \approx m_{Bj} - \theta_j \), and the bias-corrected DEA efficiency score is \( \hat{\theta}_j = \theta_j - bias_j \approx 2 \cdot \theta_j - m_{Bj} \). However, this bias correction should not be performed if \( |bias_j| \le \sigma_{Bj}/4 \).
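The Python sketch below mirrors steps 2) to 10) of the bootstrap using only the standard library. Several choices are our assumptions rather than details given in the text: sample standard deviations (`statistics.stdev`), the interquartile range taken from `statistics.quantiles` with its default method, the bandwidth transcribed exactly as printed in step 4), and the reading of step 8) as scoring each original unit against the frontier of the pseudo-sample. The DEA models of Eqs. (6) and (7) are not implemented here; `dea` is a hypothetical callback that the caller must supply.

```python
import math
import random
import statistics


def reflect(scores):
    """Steps 2-3: the set {2 - theta} followed by {theta}."""
    return [2.0 - t for t in scores] + list(scores)


def bandwidth(scores):
    """Step 4, transcribed as printed (needs at least one score above 1)."""
    n = len(scores)
    above = [t for t in scores if t > 1.0]       # the p units with theta_b > 1
    phi2 = reflect(above)                        # Phi'' of length 2p
    sd_phi = statistics.stdev(scores)
    sd_phi2 = statistics.stdev(phi2)
    q1, _, q3 = statistics.quantiles(phi2, n=4)  # r_Phi'' = q3 - q1 (assumed method)
    spread = min(sd_phi2, (q3 - q1) / 1.34)
    return 1.06 * sd_phi * spread * len(phi2) ** 0.8 / (n * sd_phi2)


def pseudo_scores(scores, d, rng):
    """Steps 5-7: resample Phi', perturb and rescale (Eq. 21), reflect (Eq. 22)."""
    pool = reflect(scores)                             # Phi', length 2n
    draw = [rng.choice(pool) for _ in scores]          # n draws with replacement
    m_star = statistics.fmean(draw)
    s_star = statistics.stdev(draw)
    # 1 / sqrt(1 + (d / sigma*)^2); its limit is 0 as sigma* -> 0
    shrink = 1.0 / math.sqrt(1.0 + (d / s_star) ** 2) if s_star > 0 else 0.0
    out = []
    for t in draw:
        chi = d * rng.gauss(0.0, 1.0)                  # chi_j = d * zeta_j
        t2 = m_star + (t + chi - m_star) * shrink      # Eq. (21)
        out.append(2.0 - t2 if t2 < 1.0 else t2)       # Eq. (22)
    return out


def bootstrap(x, y, theta, dea, B=1000, seed=0):
    """Steps 5-9. `dea(x0, y0, X, Y)` is a hypothetical caller-supplied solver for
    Eq. (6) or (7) returning the output score of (x0, y0) against (X, Y)."""
    rng = random.Random(seed)
    d = bandwidth(theta)
    n = len(theta)
    boot = [[] for _ in range(n)]
    for _ in range(B):
        t_ss = pseudo_scores(theta, d, rng)
        # Step 8: inputs unchanged, outputs rescaled by theta_j / theta_j**
        y_star = [[v * theta[j] / t_ss[j] for v in y[j]] for j in range(n)]
        for j in range(n):
            boot[j].append(dea(x[j], y[j], x, y_star))
    return boot


def bias_corrected(theta_j, boot_j):
    """Step 10: 2 * theta_j - m_Bj, skipped when |bias_j| <= sigma_Bj / 4."""
    m_b = statistics.fmean(boot_j)
    s_b = statistics.stdev(boot_j)
    bias = m_b - theta_j
    return theta_j if abs(bias) <= s_b / 4 else theta_j - bias
```

A caller would compute θ_j with the same `dea` function on the original data, run `bootstrap(...)` with B around 1,000, and then apply `bias_corrected(theta[j], boot[j])` unit by unit.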

Additional figures and tables

Please see Figs. 2, 3, 4 and 5, as well as Tables 6, 7 and 8.

Cite this article

Ferreira, D., Marques, R.C. Identifying congestion levels, sources and determinants on intensive care units: the Portuguese case. Health Care Manag Sci 21, 348–375 (2018). https://doi.org/10.1007/s10729-016-9387-x

DOI: https://doi.org/10.1007/s10729-016-9387-x