Abstract

Traditional approaches to group decision making (GDM) problems for ranking a finite set of alternatives terminate when the experts involved in the GDM process reach a consensus. This paper proposes ways for analyzing the final results after a consensus has been reached in GDM. Results derived from this last step can be used to further enhance the understanding of possible hidden dynamics of the problem under consideration. The proposed approach for post-consensus analysis is based in part on preference maps (PMs), a novel idea introduced recently in the literature for describing rankings when ties are allowed. An original contribution of this paper is a definition of the difference between two PMs. This is achieved by using a metric known as the Marczewski–Steinhaus distance. Approaches for analyzing the final results of a GDM process after consensus has been reached may reveal hidden but crucial insights into the way the experts reached the consensus, as well as new insights related to the alternatives. These approaches rely on the concept of differences in the rankings, defined either by traditional means or as the difference between two PMs as defined in this paper. They form the second group of original contributions made in this paper. The various issues are illustrated with numerical examples and an application inspired by a real-world problem described in the literature. The new contributions described in this study offer the potential to enrich the group decision making process considerably.



1 Introduction

Most research on group decision making (GDM) focuses on approaches that can guide a group of decision makers (also known as decision experts or just experts) to reach a consensus when ranking a finite set of alternatives (Tindale et al. 2003; Saaty and Peniwati 2008; Ureña et al. 2019; Pérez et al. 2014). However, to the best of our knowledge, no work has been done on what happens after a consensus has been reached. The argument can be made that by studying the decision makers’ individual rankings of the alternatives and the consensus reached, one might be able to discover interesting issues related to key aspects of the group’s decision making process. In this way one may gain deeper insights into the alternatives and also into the decision makers themselves. More knowledge on the various dynamics of the particular decision problem under consideration may ultimately lead to a better understanding of the problem and thus of its final conclusions. Thus, research on this important subject may offer a new, and so far unused, opportunity to benefit the GDM process.

The structure of the decision problem considered in this paper is as follows. Available is a finite set of alternatives which have been considered in a GDM process. This set of alternatives is denoted as {A1, A2, …, An}, where n is a positive integer. These alternatives have been evaluated and ranked by some experts denoted as E1, E2, …, Em, where m is a positive integer. The experts have evaluated these alternatives by considering some evaluative criteria and using a multi-criteria decision making (MCDM) method such as the ones described by Triantaphyllou (2000). Next, a GDM approach [such as the ones described by Hou and Triantaphyllou (2019), Davis (2014), Dong et al. (2015), or Herrera et al. (1996)] was followed and a consensus was reached on how these alternatives should be ranked. Is this the end of the group decision making process, or is there something more that could be done to gain useful insights? This paper describes how further analysis can be performed at this stage to potentially derive useful and unexpected results.

This paper is organized as follows. Section 2 describes some relevant developments from the literature, namely various approaches for determining the difference between rankings. Special emphasis is given to a recently developed method which can be used when rankings involve ties, that is, when multiple ranks are assigned to the same alternative. This method is based on developments first introduced by Hou (2015a, b, 2016) and later refined in Hou and Triantaphyllou (2019), which constitute what is referred to as preference maps (PMs). Sections 3 and 4 present two novel definitions and some theoretical results related to PMs, in particular how to analyze the information described in a PM and how to express the difference between two PMs. This is achieved by defining the concept of the “expansion of a PM” and then using it to define the “difference between two PMs.” These two definitions and the three relevant theorems in Sects. 3 and 4 are some of the original contributions of this paper. These concepts, along with the traditional ways for defining the difference between two rankings, are used in Sect. 5 to demonstrate how the final results of a GDM process can be analyzed after a consensus has been reached. Some illustrative examples are used for this purpose. The proposed approaches for analyzing the final results after a consensus has been reached are the second group of original contributions made in this study. An example, inspired by a real-life application described in the literature, is analyzed in Sect. 6 to further demonstrate the applicability of the new concepts and approaches. Finally, the paper ends with Sect. 7, which summarizes the main contributions and highlights some areas for possible future research.

2 Some Relevant Developments from the Literature

This section presents some relevant developments from the literature that describe how differences in rankings can be quantified. It considers two general settings. In the first setting each alternative is assigned to a unique rank. The second setting is more general. Now an alternative may be assigned to multiple ranks, which must be represented by consecutive positive integers. These two settings are elaborated in the following two subsections, respectively.

2.1 When Each Alternative is Assigned to a Unique Rank

This is the most common scenario. There are multiple ways to define the difference between two such rankings. Ray and Triantaphyllou (1999) describe five different methods for determining the difference between two rankings (with no ties). For illustrative purposes, consider the two rankings R1 = (1, 2, 3) and R2 = (2, 3, 1). The values these five methods produce are as follows:

(1) Number of disagreements: difference between R1 and R2 = 3

(2) Weighted number of disagreements: difference between R1 and R2 = 7

(3) Sum-of-squares of differences in rank: difference between R1 and R2 = 6

(4) Normalized sum-of-squares of differences in rank: difference between R1 and R2 = 0.75

(5) Sum of the absolute values of differences in rank: difference between R1 and R2 = 4

For the second method the three weights were assumed to be W1 = 3.0, W2 = 2.0, and W3 = 1.0. For the fourth method one first computes the value as in the third method and then divides it by the largest possible value for two ranking vectors of size 3 (as is the case in the previous example). That value is equal to 8 (attained when one ranking is the reverse of the other), and hence the corresponding result is equal to 0.75 (= 6/8). Another relevant study is Ray and Triantaphyllou (1998), which evaluates rankings by considering the possible number of agreements and conflicts. Many more such methods exist and one may easily define others; how best to measure the difference between two rankings is an open problem that cannot be answered exhaustively.
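The arithmetic behind methods (1), (3), (4), and (5) can be sketched in a few lines of Python. This is a minimal illustration with our own function names, for tie-free rankings given as tuples of ranks; method (2) is left out because the exact weighting scheme of Ray and Triantaphyllou (1999) is not spelled out here. The normalizer in method (4) uses the fact that the sum of squared rank differences is largest when one ranking is the reverse of the other.

```python
def num_disagreements(r1, r2):
    """Method (1): number of alternatives whose ranks differ."""
    return sum(a != b for a, b in zip(r1, r2))


def sum_of_squares(r1, r2):
    """Method (3): sum of squared rank differences."""
    return sum((a - b) ** 2 for a, b in zip(r1, r2))


def normalized_sum_of_squares(r1, r2):
    """Method (4): method (3) divided by its largest possible value.

    For tie-free rankings of size n the maximum is reached when one ranking
    is the reverse of the other (rank i against rank n + 1 - i).
    """
    n = len(r1)
    max_value = sum((i - (n + 1 - i)) ** 2 for i in range(1, n + 1))
    return sum_of_squares(r1, r2) / max_value


def sum_abs_differences(r1, r2):
    """Method (5): sum of absolute rank differences."""
    return sum(abs(a - b) for a, b in zip(r1, r2))


R1, R2 = (1, 2, 3), (2, 3, 1)
print(num_disagreements(R1, R2))          # 3
print(sum_of_squares(R1, R2))             # 6
print(normalized_sum_of_squares(R1, R2))  # 0.75
print(sum_abs_differences(R1, R2))        # 4
```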

2.2 When an Alternative May be Assigned to Multiple Ranks

Now it is assumed that the experts’ preferences can be expressed as ties-permitted ordinal rankings. Such rankings can be expressed in terms of so-called preference sequence vectors (PSVs) (Hou 2015a, b, 2016). The preference sequence vector was later renamed the preference map (PM) by Hou and Triantaphyllou (2019). The notion of the preference map is described next along with some key definitions and related concepts from the literature.

Let \( [S_{i}]_{n \times 1} \) denote a column vector of size n × 1 (i.e., with n entries), whose ith entry is denoted as \( S_{i} \), for i = 1, 2, 3, …, n. This notation is used to formally define the concept of a preference sequence vector (PSV) as it was first introduced by Hou (2015a, b, 2016). Note that in the following definitions the term PM is used instead of the original term PSV.

Definition 1

(Hou 2015b) A vector \( \left[ {S_{i} } \right]_{n \times 1} \) is called a preference map (PM) of a ties-permitted ordinal ranking on the alternative set \( X = \{ A_{1} ,A_{2} , \ldots ,A_{n} \} \) with respect to a weak order relation \( { \preccurlyeq } \) such that

\( S_{i} = \{ |P_{i}| + 1, |P_{i}| + 2, \ldots, |P_{i}| + |T_{i}| \} \)   (1)

where \( P_{i} = \{ A_{j} |A_{j} \in X,A_{j} \succ A_{i} \} \) is the set containing the alternatives that predominate \( A_{i} \), and \( T_{i} = \{ A_{r} |A_{r} \in X,A_{i} \sim A_{r} \} \) is the set containing the alternatives that are indifferent to \( A_{i} \) (including \( A_{i} \) itself), for i = 1, 2, 3, …, n.

We illustrate the PM idea by means of a simple example. Suppose that we have the following ties-permitted ordinal ranking: \( A_{1} \sim A_{2} \succ A_{3} \). This ranking states that alternatives A1 and A2 are tied and should occupy positions 1 and 2, while alternative \( A_{3} \) is dominated by both A1 and A2 and hence is ranked at position 3. We represent these preferences by the following PM:

\( [S_{i}]_{3 \times 1} = \left( \{1, 2\}, \{1, 2\}, \{3\} \right)^{T} \),

whose entries indicate the alternatives’ possible ranking positions. These positions are deduced by using formula (1). For instance, according to the preferences contained in the ranking, the predominating sets of A1 and \( A_{3} \) are:

\( P_{1} = \emptyset \) and \( P_{3} = \{ A_{1}, A_{2} \} \),

respectively. Meanwhile, their indifference sets are:

\( T_{1} = \{ A_{1}, A_{2} \} \) and \( T_{3} = \{ A_{3} \} \),

respectively. Therefore, by formula (1) we obtain their corresponding entries in the PM as \( \{ 1,2\} \) and \( \{ 3\} \), which are, in fact, their possible ranking positions under the ordinal ranking \( A_{1} \sim A_{2} \succ A_{3} \).
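Formula (1) can be sketched as follows, assuming the weak order is supplied as an ordered partition of the alternatives (groups of tied alternatives, best group first). For each group, |P_i| is the number of alternatives in earlier groups and |T_i| is the size of the group itself. The function name `preference_map` is our own.

```python
def preference_map(tie_groups, alternatives):
    """Build a PM from a weak order given as an ordered partition.

    tie_groups lists groups of mutually indifferent alternatives, best
    group first; e.g. [["A1", "A2"], ["A3"]] encodes A1 ~ A2 > A3.
    Returns one set of possible rank positions per alternative, in the
    order given by `alternatives`, following formula (1).
    """
    entry = {}
    n_better = 0  # |P_i|: alternatives strictly preferred to the current group
    for group in tie_groups:
        n_tied = len(group)  # |T_i|: the whole group, A_i itself included
        positions = frozenset(range(n_better + 1, n_better + n_tied + 1))
        for alternative in group:
            entry[alternative] = positions
        n_better += n_tied
    return [entry[a] for a in alternatives]


pm = preference_map([["A1", "A2"], ["A3"]], ["A1", "A2", "A3"])
print([sorted(s) for s in pm])  # [[1, 2], [1, 2], [3]]
```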

Currently, the term ‘preference map’ is also used in a different context to graphically communicate, based on statistical analysis, the relationships between product characteristics and consumer preferences in some application areas [see e.g. Gere et al. (2014) and Yenket et al. (2011)]. By contrast, the term ‘preference map’ used in this paper, as defined by Definition 1, is a sequence whose entries are sets containing the alternatives’ possible ranking positions. Each such PM corresponds to exactly one ordered partition of the alternative set (the groups of tied alternatives, listed from best to worst), and vice versa.

The introduction of the PM concept brings much-needed convenience and flexibility for describing ranking preferences that are constructed based on weak order relations. Moreover, a powerful mathematical definition of consensus can now be constructed as well, as shown in the following definition taken from the literature.

Definition 2

(Hou 2015b) Let \( \left[ {S_{i}^{(1)} } \right]_{n \times 1} \), \( \left[ {S_{i}^{(2)} } \right]_{n \times 1} \), …, \( \left[ {S_{i}^{(m)} } \right]_{n \times 1} \) be PMs. These PMs are said to be in consensus if and only if \( \forall i\left( {\bigcap\nolimits_{k = 1}^{m} {S_{i}^{(k)} } \ne \emptyset } \right) \), or equivalently, \( \forall i,j,k\left( {S_{i}^{(j)} \cap S_{i}^{(k)} \ne \emptyset } \right) \). Moreover, the consensus PM is defined by \( \left[ {S_{i}^{(c)} } \right]_{n \times 1} = \left[ {\bigcap\nolimits_{k = 1}^{m} {S_{i}^{(k)} } } \right]_{n \times 1} \).

The above mathematical definition of consensus based on PMs is intuitively appealing because it reflects two fundamental observations in GDM (Hou and Triantaphyllou 2019): (1) two experts’ preferences may not be identical, but they may still exhibit a type of consensus; and (2) consensus may not be transitive among experts. For example, as demonstrated in Hou (2015a, b) and Hou and Triantaphyllou (2019), suppose that there are three persons, say Rudy, Scott and Tracy, and two drinks, say coffee and tea. Assume that their preferences are as follows: Rudy prefers coffee to tea, Scott is indifferent between coffee and tea, while Tracy prefers tea to coffee. Evidently, Rudy’s and Scott’s preferences exhibit a type of consensus. Likewise, Scott’s and Tracy’s preferences exhibit some consensus, while Rudy’s and Tracy’s preferences do not exhibit any consensus. This simple example illustrates some key observations on consensus in GDM as defined in Definition 2.
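Definition 2 and the coffee-and-tea example can be checked mechanically. In this minimal sketch (function names are ours) a PM is a list of sets of positions, one per alternative, and the consensus PM is the entry-wise intersection.

```python
def in_consensus(*pms):
    """Definition 2: for every alternative, all PMs share at least one position."""
    return all(set.intersection(*map(set, entries)) for entries in zip(*pms))


def consensus_pm(*pms):
    """Entry-wise intersection of PMs that are in consensus (Definition 2)."""
    if not in_consensus(*pms):
        raise ValueError("the PMs are not in consensus")
    return [set.intersection(*map(set, entries)) for entries in zip(*pms)]


# Alternatives ordered as (coffee, tea).
rudy = [{1}, {2}]         # prefers coffee to tea
scott = [{1, 2}, {1, 2}]  # indifferent between coffee and tea
tracy = [{2}, {1}]        # prefers tea to coffee

print(in_consensus(rudy, scott))   # True
print(in_consensus(scott, tracy))  # True
print(in_consensus(rudy, tracy))   # False: consensus is not transitive
print(consensus_pm(rudy, scott))   # [{1}, {2}]
```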

Consider the following three PMs assumed to be the judgments of three experts when they have reached a consensus (as defined in Definition 2) in a GDM process:

These three PMs imply that the consensus PM of the three experts is the PM termed as V4 depicted below:

The above PM termed as V4 can be derived as the intersection of the previous three PMs which express the judgments of the three experts. For instance, the third entry of V4 is the element {3} which is derived as follows:

A similar interpretation holds for the rest of the entries of V4.

The problem examined in this paper is described next. Given are the individual final ranking decisions produced by a group of experts after they have reached a consensus (defined as in Definition 2 or otherwise). The individual ranking decisions and the consensus are described by PMs. They can be rankings where ties are permitted (in which case the concept of PM as described above needs to be used) or they can be of the traditional type with no ties. Furthermore, the consensus PM may or may not be the result of performing the intersection operation on the PMs that described the experts’ final ranking decisions. Given the above PMs the main question examined in this paper is described as follows: What more can be inferred about the experts involved and the alternatives at the end of the formal GDM process that could potentially lead to a better understanding of the GDM process and the derived consensus? Before this question is addressed, the concept of PM is analyzed to facilitate a better understanding of how the difference between two PMs can be quantified.

3 Analyzing a Preference Map (PM)

Sections 3 and 4 introduce a number of original concepts related to group decision making and especially when conducting a post-consensus analysis by using PMs. To help fix ideas, consider the previous PM termed as V1 (i.e., the final ranking as proposed by expert E1) which is repeated here for convenience:

The above PM implies that the following nine rankings (also PMs), including V1 itself, are in consensus with V1. These nine PMs are derived by expanding the entries of V1 and then considering all possible combinations. Since V1 contains the entry {1, 2} twice and the entry {3, 4} twice, each of these two pairs of tied alternatives can either be kept tied or be ordered in one of two ways (three options each), while the remaining entry {5} admits only one option. Hence this expansion has 3 × 3 = 9 members, which are exhaustively enumerated next.

We will call this set of nine members (i.e., the PMs following the arrow after V1) the expansion of PM V1. Obviously, the expansion of the PM termed as V4 in Sect. 2.2 is the intersection of the expansions of the PMs termed as V1, V2, and V3 in Sect. 2.2. Formally, we define the notion of PM expansion as follows:

Definition 3

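Let vector \( V = [S_{k}]_{n \times 1} \) be a PM. Then its expansion, denoted as EXPANSION (V), is the set of all PM vectors \( V_{i} = [S_{k}^{(i)}]_{n \times 1} \) such that \( V_{i} \) denotes a ranking which is not in conflict with the rankings implied by V, that is, each entry of \( V_{i} \) is a subset of the corresponding entry of V. Formally:

\( \text{EXPANSION}(V) = \{ V_{i} \mid V_{i} \text{ is a PM and } S_{k}^{(i)} \subseteq S_{k} \text{ for } k = 1, 2, \ldots, n \} \)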

The set of PMs defined as the expansion of a PM V has some interesting properties. Suppose that the vector V is the entry-wise intersection of m PMs denoted as Vj, for j = 1, 2, 3, …, m (as is the consensus PM in Definition 2). Then the following theorem follows directly from the previous definition:

Theorem 1

Let \( V = \bigcap\nolimits_{j = 1}^{m} V_{j} \). Then the following relation is true: \( \text{EXPANSION}(V) = \bigcap\nolimits_{j = 1}^{m} \text{EXPANSION}(V_{j}) \).
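Definition 3 and Theorem 1 can be checked by brute force for small n. The Python sketch below enumerates every PM (one per weak order), takes EXPANSION (V) to be the PMs whose entries are subsets of the corresponding entries of V (our reading of "not in conflict with V"), and confirms Theorem 1 exhaustively for three alternatives. Function names are ours; the enumeration is exponential and only meant for small examples.

```python
def ordered_partitions(items):
    """Yield every ordered partition (weak order) of `items`, best block first."""
    if not items:
        yield []
        return
    n = len(items)
    for mask in range(1, 2 ** n):  # every nonempty choice of the first block
        first = [items[i] for i in range(n) if mask >> i & 1]
        rest = [items[i] for i in range(n) if not mask >> i & 1]
        for tail in ordered_partitions(rest):
            yield [first] + tail


def all_pms(n):
    """Every PM on n alternatives, each a tuple of frozensets of positions."""
    pms = []
    for blocks in ordered_partitions(list(range(n))):
        entry, start = [None] * n, 1
        for block in blocks:
            positions = frozenset(range(start, start + len(block)))
            for i in block:
                entry[i] = positions
            start += len(block)
        pms.append(tuple(entry))
    return pms


def expansion(pm):
    """EXPANSION(V): all PMs W whose entries are subsets of V's entries."""
    v = tuple(frozenset(s) for s in pm)
    return {w for w in all_pms(len(v)) if all(a <= b for a, b in zip(w, v))}


def intersect(*pms):
    """Entry-wise intersection of PMs, as used for the consensus PM."""
    return tuple(frozenset.intersection(*map(frozenset, e)) for e in zip(*pms))


# A1 ~ A2 > A3 expands to A1 > A2 > A3, A2 > A1 > A3, and itself.
print(len(expansion([{1, 2}, {1, 2}, {3}])))  # 3

# Exhaustive check of Theorem 1 for every pair of PMs on three alternatives.
pms3 = all_pms(3)
print(all(expansion(intersect(x, y)) == expansion(x) & expansion(y)
          for x in pms3 for y in pms3))  # True
```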

Let V be a PM. Next define as UNIQUE (V) the ordered list of all the distinct members of V. For instance, UNIQUE (V1) = (U1, U2, U3) = ({1, 2}, {3, 4}, {5}). That is, U1 = {1, 2}, U2 = {3, 4}, and U3 = {5}. This definition can be used next to compute the size (cardinality) of the set EXPANSION (V) of the PM termed as V. The following theorem is based on a result reported in Maassen and Bezembinder (2002). According to that result, the total number of weak orders on a finite set of m alternatives is:

\( \sum\nolimits_{k = 1}^{m} k!\,S\left( {m,k} \right) \),

where \( S\left( {m,k} \right) \), a Stirling number of the second kind, indicates the number of partitions of a set of \( m \) elements into \( k \) nonempty subsets. When this result is used in conjunction with the previous definition of the UNIQUE (V) ordered list, then the following theorem follows:

Theorem 2

Let V be a PM and let the members of the corresponding UNIQUE (V) list be U1, U2, U3, …, Ut. Then the size of the set EXPANSION (V) is equal to \( \prod\nolimits_{j = 1}^{t} \left[ \sum\nolimits_{k = 1}^{|U_{j}|} k!\,S\left( |U_{j}|, k \right) \right] \), where |Uj|, for j = 1, 2, 3, …, t, are the sizes of the members of UNIQUE (V), and \( S\left( {m,k} \right) \) is a Stirling number of the second kind.

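When this theorem is applied to the vector V1, each of the two members {1, 2} and {3, 4} of UNIQUE (V1) contributes a factor of 1!·S(2, 1) + 2!·S(2, 2) = 1 + 2 = 3, while {5} contributes a factor of 1. Hence the set EXPANSION (V1) has 3 × 3 × 1 = 9 members.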
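Theorem 2 only needs Stirling numbers of the second kind, which can be computed with the standard recurrence S(m, k) = k·S(m − 1, k) + S(m − 1, k − 1). The sketch below (helper names are ours) computes the number of weak orders on m items and applies Theorem 2 to UNIQUE (V1) = ({1, 2}, {3, 4}, {5}).

```python
from math import factorial, prod


def stirling2(m, k):
    """Stirling number of the second kind S(m, k), via the standard recurrence."""
    table = [[0] * (k + 1) for _ in range(m + 1)]
    table[0][0] = 1
    for i in range(1, m + 1):
        for j in range(1, min(i, k) + 1):
            table[i][j] = j * table[i - 1][j] + table[i - 1][j - 1]
    return table[m][k]


def weak_order_count(m):
    """Number of weak orders on m items: sum over k of k! * S(m, k)."""
    return sum(factorial(k) * stirling2(m, k) for k in range(1, m + 1))


def expansion_size(unique_entries):
    """Theorem 2: product of weak_order_count(|U_j|) over the members of UNIQUE(V)."""
    return prod(weak_order_count(len(u)) for u in unique_entries)


print([weak_order_count(m) for m in range(1, 6)])  # [1, 3, 13, 75, 541]
print(expansion_size([{1, 2}, {3, 4}, {5}]))       # 9, for UNIQUE(V1)
```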

4 Assessing the Difference Between Two PMs

Suppose that given are two PMs. For instance, let us consider V1 and V3 as defined in Sect. 2.2. How can one best describe their difference? In other words, how similar or dissimilar are the two experts who submitted the rankings implied by these two PMs? These two experts are in consensus but still their final decisions are described by two distinct PMs. The answer to this question will be determined by using the notion of the corresponding EXPANSION (V) sets. If these two sets are identical, then we can say that the two PMs are identical too in the way they ranked the alternatives. Therefore, it is reasonable to express the difference of the two PMs in terms of how different the corresponding EXPANSION sets are. This is achieved by introducing the following definition.

Definition 4

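Let X and Y be two PMs. Then their relative difference, denoted as DIFFERENCE (X, Y), is defined as follows:

\( \text{DIFFERENCE}(X, Y) = 1 - \frac{\left| \text{EXPANSION}(X) \cap \text{EXPANSION}(Y) \right|}{\left| \text{EXPANSION}(X) \cup \text{EXPANSION}(Y) \right|} \)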

To appreciate the intuition behind the previous definition, consider the following issues. If DIFFERENCE (X, Y) is equal to 0, then the set in the numerator and the set in the denominator of the fraction in the previous formula are of equal size; since the intersection of two sets is contained in their union, the two EXPANSION sets must then be identical, which implies that the two PMs X and Y are identical. On the other hand, if the two EXPANSION sets do not have any elements in common, then their intersection is the empty set and thus DIFFERENCE (X, Y) is equal to 1. In other words, DIFFERENCE (X, Y) takes values in the closed interval [0, 1]. If the two PMs correspond to a situation where the experts have reached a consensus as described in Definition 2, then the value of the difference will never be equal to 1 (as the intersection of the corresponding EXPANSION sets will never be empty). Since the union in the denominator always contains at least X and Y themselves, it is never empty, and hence the previous ratio is always defined.

As an illustrative example, let us consider the case of the PMs V1 and V3 defined in Sect. 2. That is, we need to compute the value of DIFFERENCE (V1, V3). The set EXPANSION (V1) was given in Sect. 3. The case of EXPANSION (V3) is described next.

Given the above analysis, it turns out that the intersection of the two sets EXPANSION (V1) and EXPANSION (V3) is equal to the set S1:

Therefore, its size is equal to 3, and this is the value of the numerator of the expression in Definition 4. Working similarly, it can be easily verified that the size of the union of these two EXPANSION sets is equal to 15. This is the value of the denominator of the expression in Definition 4. Hence, the value of DIFFERENCE (V1, V3) is equal to 1 − (3/15) = 12/15 = 0.800.
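Definition 4 can be sketched by brute force, reusing the subset reading of EXPANSION (V) and exact fractions. The displayed PMs of the three experts are missing from this version of the text, so the vectors V1, V2, and V3 below are our reconstruction, chosen because they reproduce the figures reported for them in Sects. 3 to 5 (nine members in EXPANSION (V1), 3 shared and 15 total members, 0.800, and 0.769); treat them as an assumption. Helper names are ours.

```python
from fractions import Fraction


def ordered_partitions(items):
    """Yield every ordered partition (weak order) of `items`, best block first."""
    if not items:
        yield []
        return
    n = len(items)
    for mask in range(1, 2 ** n):  # every nonempty choice of the first block
        first = [items[i] for i in range(n) if mask >> i & 1]
        rest = [items[i] for i in range(n) if not mask >> i & 1]
        for tail in ordered_partitions(rest):
            yield [first] + tail


def all_pms(n):
    """Every PM on n alternatives, each a tuple of frozensets of positions."""
    pms = []
    for blocks in ordered_partitions(list(range(n))):
        entry, start = [None] * n, 1
        for block in blocks:
            positions = frozenset(range(start, start + len(block)))
            for i in block:
                entry[i] = positions
            start += len(block)
        pms.append(tuple(entry))
    return pms


def expansion(pm):
    """All PMs whose entries are subsets of the corresponding entries of pm."""
    v = tuple(frozenset(s) for s in pm)
    return {w for w in all_pms(len(v)) if all(a <= b for a, b in zip(w, v))}


def difference(x, y):
    """Definition 4 as an exact fraction."""
    ex, ey = expansion(x), expansion(y)
    return 1 - Fraction(len(ex & ey), len(ex | ey))


# Reconstructed (assumed) PMs of experts E1, E2, E3 for A1..A5.
V1 = [{1, 2}, {1, 2}, {3, 4}, {5}, {3, 4}]
V2 = [{1, 2, 3}, {1, 2, 3}, {1, 2, 3}, {4, 5}, {4, 5}]
V3 = [{1, 2}, {1, 2}, {3}, {4, 5}, {4, 5}]

e1, e3 = expansion(V1), expansion(V3)
print(len(e1), len(e1 & e3), len(e1 | e3))  # 9 3 15
print(float(difference(V1, V3)))            # 0.8
print(round(float(difference(V2, V3)), 3))  # 0.769
```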

Moreover, suppose that X, Y and Z are any three PMs. Then the following properties hold for the quantity defined in Definition 4:

(1) DIFFERENCE (X, Y) ≥ 0 (non-negativity property)

(2) DIFFERENCE (X, Y) = 0 if and only if X = Y (non-degeneracy property)

(3) DIFFERENCE (X, Y) = DIFFERENCE (Y, X) (symmetry property)

(4) DIFFERENCE (X, Y) + DIFFERENCE (Y, Z) ≥ DIFFERENCE (X, Z) (triangle inequality)

The above relationships hold because DIFFERENCE (X, Y), as defined in Definition 4, is the Marczewski–Steinhaus distance (Marczewski and Steinhaus 1958) between the sets EXPANSION (X) and EXPANSION (Y); for finite sets this distance is also known as the Jaccard distance. Therefore, DIFFERENCE (X, Y) is a metric on the set of PMs. The Marczewski–Steinhaus distance has received considerable attention in the literature [see, for instance, the work by Karoński and Palka (1977), and Heine (1973)]. It has been applied in fields such as biology (Dunn 2000), transportation (Kubiak 2007), ecology (Safford and Harrison 2001), computer vision and pattern recognition (Gardner et al. 2014), and microbiology (Engel et al. 2012), among many others. More recent references to this distance measure can be found in Ricotta and Podani (2017) and Yao and Deng (2014).
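The four metric properties can be confirmed exhaustively on a small instance. This sketch, an illustration rather than a proof, enumerates all 13 PMs on three alternatives, takes EXPANSION (V) to be the PMs whose entries are subsets of the corresponding entries of V, and checks each property with exact fractions to avoid rounding issues. Helper names are ours.

```python
from fractions import Fraction
from itertools import product


def ordered_partitions(items):
    """Yield every ordered partition (weak order) of `items`, best block first."""
    if not items:
        yield []
        return
    n = len(items)
    for mask in range(1, 2 ** n):  # every nonempty choice of the first block
        first = [items[i] for i in range(n) if mask >> i & 1]
        rest = [items[i] for i in range(n) if not mask >> i & 1]
        for tail in ordered_partitions(rest):
            yield [first] + tail


def all_pms(n):
    """Every PM on n alternatives, each a tuple of frozensets of positions."""
    pms = []
    for blocks in ordered_partitions(list(range(n))):
        entry, start = [None] * n, 1
        for block in blocks:
            positions = frozenset(range(start, start + len(block)))
            for i in block:
                entry[i] = positions
            start += len(block)
        pms.append(tuple(entry))
    return pms


pms = all_pms(3)
# EXPANSION of each PM: the PMs whose entries are subsets of its entries.
expansions = {x: {w for w in pms if all(a <= b for a, b in zip(w, x))} for x in pms}


def difference(x, y):
    """Definition 4 as an exact fraction, using the precomputed expansions."""
    ex, ey = expansions[x], expansions[y]
    return 1 - Fraction(len(ex & ey), len(ex | ey))


pairs = list(product(pms, repeat=2))
nonneg = all(difference(x, y) >= 0 for x, y in pairs)
identity = all((difference(x, y) == 0) == (x == y) for x, y in pairs)
symmetric = all(difference(x, y) == difference(y, x) for x, y in pairs)
triangle = all(difference(x, y) + difference(y, z) >= difference(x, z)
               for x, y, z in product(pms, repeat=3))
print(len(pms), nonneg, identity, symmetric, triangle)  # 13 True True True True
```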

The following Theorem 3 states a useful property for the case in which the EXPANSION set of one PM is a subset of the EXPANSION set of another PM. Its proof follows directly from Definition 4. It is based on the fact that if a set Y is a subset of another set X (i.e., Y \( \subseteq \) X), then Y ∩ X = Y and Y ∪ X = X. This theorem is useful if one wishes to compute DIFFERENCE (X, Y) when EXPANSION (Y) \( \subseteq \) EXPANSION (X), as is the case when Y is a consensus ranking obtained as in Definition 2 and X is a ranking proposed by any of the GDM experts.

Theorem 3

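Let X, Y be two PMs defined on the same set of alternatives such that EXPANSION (Y) \( \subseteq \) EXPANSION (X). Then the following relation is always true:

\( \text{DIFFERENCE}(X, Y) = 1 - \frac{\left| \text{EXPANSION}(Y) \right|}{\left| \text{EXPANSION}(X) \right|} \)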
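Theorem 3 means DIFFERENCE (X, Y) can be computed from two counts alone when EXPANSION (Y) ⊆ EXPANSION (X). In the sketch below (helper names are ours) the counts come from Theorem 2, with the number of weak orders computed by an equivalent recurrence that first picks the k items tied at the top; the inclusion test assumes that EXPANSION (V) consists of the PMs whose entries are subsets of those of V. The PMs V2 and V3 are our reconstruction of the missing displays in Sect. 2.2, inferred from the figures reported in the text, and should be treated as an assumption.

```python
from fractions import Fraction
from functools import lru_cache
from math import comb, prod


@lru_cache(maxsize=None)
def weak_order_count(m):
    """Ordered Bell number: choose the k items tied at the top, then recurse."""
    if m == 0:
        return 1
    return sum(comb(m, k) * weak_order_count(m - k) for k in range(1, m + 1))


def expansion_size(pm):
    """Theorem 2 applied to the distinct entries of a PM."""
    return prod(weak_order_count(len(u)) for u in {frozenset(s) for s in pm})


def difference_nested(x, y):
    """Theorem 3: DIFFERENCE(X, Y) when EXPANSION(Y) is a subset of EXPANSION(X)."""
    # Under the subset reading of Definition 3 the inclusion holds exactly
    # when each entry of y lies inside the corresponding entry of x.
    if not all(set(b) <= set(a) for a, b in zip(x, y)):
        raise ValueError("EXPANSION(Y) is not a subset of EXPANSION(X)")
    return 1 - Fraction(expansion_size(y), expansion_size(x))


scott, rudy = [{1, 2}, {1, 2}], [{1}, {2}]  # coffee and tea example
print(difference_nested(scott, rudy))  # 2/3

# Reconstructed (assumed) PMs of experts E2 and E3 for A1..A5.
V2 = [{1, 2, 3}, {1, 2, 3}, {1, 2, 3}, {4, 5}, {4, 5}]
V3 = [{1, 2}, {1, 2}, {3}, {4, 5}, {4, 5}]
print(round(float(difference_nested(V2, V3)), 3))  # 0.769
```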

5 Some Applications of the Proposed Difference Measurement

The difference measure DIFFERENCE (X, Y) (regardless of which way was used to define it) offers new opportunities for gaining a deeper understanding of key issues involved in a GDM process after consensus has been reached and when ties in the rankings of alternatives are permitted.

If the rankings do not have ties, then any of the methods described in Sect. 2.1 may be applicable or any other method from the literature that expresses the difference of two rankings may be applicable. The following three applications illustrate the kind of analyses one may seek to perform after a consensus ranking has somehow been reached as part of the GDM process.

5.1 Application #1: Grouping the Experts Based on Their Final Recommendations When All the Alternatives are Considered

Suppose that m experts have been involved in ranking a set of n alternatives and a consensus has been reached by using PM vectors to express individual expert judgments. Next, it is proposed that one compares in a pairwise manner the final recommendations made by these m experts and the consensus ranking. That is, one computes all possible DIFFERENCE (Vi, Vj) quantities by using Definition 4, where Vi and Vj range over the final recommendations of the experts and the consensus ranking. As there are m + 1 entities to compare, there are ((m + 1) × m)/2 comparisons to be considered. If ties in the ranks are not permitted, then the function DIFFERENCE (Vi, Vj) can be replaced by any other function that expresses the difference between two rankings (such as the ones mentioned in Sect. 2.1).

These values can be used to explore any potential clusters that may be identifiable based on these difference measures. Such clusters can be identified by applying some of the well-known clustering methods, such as the ones described by Tan et al. (2013), Larose and Larose (2014), and Witten et al. (2016). The general idea is that members of the same cluster should be similar to each other in some way, while members of different clusters should be dissimilar. A key issue here is the number of clusters, which is usually chosen in an ad-hoc manner. Clustering is by nature an open-ended issue, and thus there are multiple clustering approaches. In this application we used a simple approach, as explained next.

Once clusters are determined, an immediate result is the identification of subsets of experts who ranked the n alternatives in a highly similar manner. Such clustering knowledge may offer new insights into the way the alternatives are ranked, by next identifying the reasons why the experts within a cluster assessed them the way they did.

The above concepts are applied to all possible pairs when the final recommendations of the three experts in the illustrative example described in Sect. 2.2 are used along with the consensus ranking. The case of DIFFERENCE (V1, V3) was calculated in the previous section. Table 1 presents the numerical results of all possible pairwise comparisons when the three experts are considered. The same table also considers the consensus decision as described in Sect. 2.2 (i.e., the one described by the PM termed V4).

If we consider the case of having two clusters, then Experts E2 and E3 are (relatively speaking) the closest to each other, as their DIFFERENCE (V2, V3) value (equal to 0.769) is the smallest among the expert-to-expert (non-diagonal) values in Table 1. Expert E1 is rather distinct from either E2 or E3. Thus, one cluster is comprised of Experts E2 and E3, while the second cluster consists of Expert E1. Other scenarios are to have three clusters (with one expert in each) or a single cluster (with all the experts together). The derived consensus decision is also quite distinct from the final ranking recommendations made by each of the three experts of this illustrative example. This indicates that each expert had to compromise quite a bit in order for a consensus to be reached. In summary, results of this kind can be derived by following an analytical approach based on the concept of difference between two rankings, as demonstrated in this application.
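Application #1 can be sketched end to end: compute all pairwise DIFFERENCE values and then merge the closest groups. The paper does not prescribe a clustering algorithm, so this sketch uses single-linkage agglomerative clustering as one simple choice (helper names are ours). The three expert PMs are our reconstruction of the missing displays in Sect. 2.2, inferred from the figures reported in the text, and are an assumption; the consensus PM could be added to the dictionary in the same way.

```python
from fractions import Fraction
from itertools import combinations


def ordered_partitions(items):
    """Yield every ordered partition (weak order) of `items`, best block first."""
    if not items:
        yield []
        return
    n = len(items)
    for mask in range(1, 2 ** n):  # every nonempty choice of the first block
        first = [items[i] for i in range(n) if mask >> i & 1]
        rest = [items[i] for i in range(n) if not mask >> i & 1]
        for tail in ordered_partitions(rest):
            yield [first] + tail


def all_pms(n):
    """Every PM on n alternatives, each a tuple of frozensets of positions."""
    pms = []
    for blocks in ordered_partitions(list(range(n))):
        entry, start = [None] * n, 1
        for block in blocks:
            positions = frozenset(range(start, start + len(block)))
            for i in block:
                entry[i] = positions
            start += len(block)
        pms.append(tuple(entry))
    return pms


def expansion(pm):
    """All PMs whose entries are subsets of the corresponding entries of pm."""
    v = tuple(frozenset(s) for s in pm)
    return {w for w in all_pms(len(v)) if all(a <= b for a, b in zip(w, v))}


def single_linkage(names, dist, k):
    """Merge the two closest clusters (single linkage) until k clusters remain."""
    clusters = [[name] for name in names]
    while len(clusters) > k:
        i, j = min(
            combinations(range(len(clusters)), 2),
            key=lambda p: min(dist[a, b] for a in clusters[p[0]] for b in clusters[p[1]]),
        )
        clusters[i].extend(clusters.pop(j))  # i < j, so index i is unaffected
    return clusters


# Reconstructed (assumed) final PMs of the three experts for A1..A5.
experts = {
    "E1": [{1, 2}, {1, 2}, {3, 4}, {5}, {3, 4}],
    "E2": [{1, 2, 3}, {1, 2, 3}, {1, 2, 3}, {4, 5}, {4, 5}],
    "E3": [{1, 2}, {1, 2}, {3}, {4, 5}, {4, 5}],
}
exp = {name: expansion(pm) for name, pm in experts.items()}
# All pairwise DIFFERENCE values (Definition 4), stored for both orders.
dist = {(a, b): 1 - Fraction(len(exp[a] & exp[b]), len(exp[a] | exp[b]))
        for a in exp for b in exp if a != b}

print(single_linkage(list(experts), dist, k=2))  # [['E1'], ['E2', 'E3']]
```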

5.2 Application #2: Grouping the Experts Based on Their Final Recommendations When a Single or a Subset of the Alternatives are Considered

This application is similar to the previous one, but now the interest is narrower as it focuses on a single alternative or a non-empty subset of the set of the alternatives. For illustrative purposes consider only alternative A4 and again the two experts E1 and E3 whose EXPANSION sets were determined earlier. The only difference now is the way the intersection and union operations are defined in a modified version of Definition 4. In this illustrative example alternative A4 has been ranked by Expert E1 as {5} (i.e., as having rank value equal to 5) and by Expert E3 as {4, 5} (see also the two corresponding PMs in Sect. 2.2). Thus, the intersection returns only the set {{5}} while the union operation returns the set {{4}, {5}, {4, 5}}. Hence, a modified version of Definition 4 would result in the following calculations for the adjusted quantity (where “(V1, V3)/A4” indicates the pair of experts E1 and E3 when it is examined in terms of the way they ranked alternative A4): DIFFERENCE ((V1, V3)/A4) = 1 – (1/3) = 0.667.
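For a single alternative, the modified operations of Application #2 act on the expansions of individual PM entries, that is, on their nonempty runs of consecutive ranks. This sketch (helper names are ours) reproduces the A4 calculation for experts E1 and E3. How the operations extend to a subset of several alternatives is not spelled out here, so the sketch covers a single alternative only.

```python
from fractions import Fraction


def sub_intervals(entry):
    """Expansion of one PM entry: all nonempty runs of consecutive ranks in it."""
    lo, hi = min(entry), max(entry)
    return {frozenset(range(a, b + 1))
            for a in range(lo, hi + 1) for b in range(a, hi + 1)}


def alternative_difference(entry_x, entry_y):
    """Definition 4 restricted to one alternative's entries in two PMs."""
    ex, ey = sub_intervals(entry_x), sub_intervals(entry_y)
    return 1 - Fraction(len(ex & ey), len(ex | ey))


# Alternative A4 as ranked by E1 ({5}) and by E3 ({4, 5}).
print(alternative_difference({5}, {4, 5}))  # 2/3
```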

Next, all ((m + 1) × m)/2 pairwise comparisons can be calculated similarly to the previous illustrative example, and again the m experts can be clustered as in the first application. However, now the focus is limited to a single alternative (i.e., to alternative A4) or a non-empty subset of the alternatives. This approach may reveal hidden aspects of the way the m experts ranked single alternatives or subgroups of them. As was the case with the previous application, if ties in the rankings are not permitted, then the difference function described in Definition 4 can be replaced by other definitions of ranking difference (such as the ones mentioned in Sect. 2.1).

5.3 Application #3: Analysis of Individual Alternatives by Using the Final Recommendations of the Experts

This type of analysis focuses directly on the alternatives, one at a time. It expands on the previous ideas regarding ways for assessing the differences between pairs of experts. Given an alternative, say A1 of the illustrative example presented in Sect. 2.2, a ratio is formed as follows. The numerator is the size of the set obtained by taking the modified intersection of the rankings of this alternative made by all the experts (when represented as PMs) and then expanding it. For this case the intersection is the set {1, 2}, and thus it expands into the set {{1}, {2}, {1, 2}}. Since this set has three members, the numerator of the ratio is the number 3. The denominator is determined by first forming the modified union of the sets that correspond to all the ranking decisions made by the experts (expressed as PMs). This is the set {{1, 2}, {1, 2, 3}}, which expands to the set {{1}, {2}, {3}, {1, 2}, {2, 3}, {1, 2, 3}}. This set has six members (note that {1, 3} is excluded, as it cannot be an entry of a PM because the integers 1 and 3 are not consecutive). Therefore, the denominator of the ratio is the number 6. As a result, the value of this ratio for alternative A1 is equal to 3/6 = 0.500.

When a similar analysis is performed for alternatives A2, A3, A4, and A5, the corresponding ratios are equal to 3/6 = 0.500, 1/8 = 0.125, 1/3 = 0.333, and 1/5 = 0.200, respectively. Obviously, the value of these ratios is at most equal to 1 (when all the experts agree with each other), and it is strictly greater than 0, because a consensus has been reached (according to Definition 2) and thus the intersection set is never empty. The above results for the illustrative example are summarized in Table 2. If the approach based on PMs is not used (i.e., when ties in the ranks are not allowed), then the same approach can still be applied, but some ratios may be equal to 0 (if not all the experts agree on the ranking of an alternative).
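The ratio of Application #3 can be computed in the same way: the numerator expands the intersection of all the experts' entries for the alternative, and the denominator expands each entry and takes the union. The first call below uses the three entries for A1 given in the text. The full PMs V1 to V3 used for the remaining alternatives are our reconstruction of the missing displays (an assumption); with them the sketch returns the ratios listed above. Helper names are ours.

```python
from fractions import Fraction


def sub_intervals(entry):
    """Expansion of one PM entry: all nonempty runs of consecutive ranks in it."""
    lo, hi = min(entry), max(entry)
    return {frozenset(range(a, b + 1))
            for a in range(lo, hi + 1) for b in range(a, hi + 1)}


def agreement_ratio(entries):
    """|expansion of the common entry| / |union of the entries' expansions|."""
    common = set.intersection(*map(set, entries))
    numerator = len(sub_intervals(common)) if common else 0
    denominator = len(set().union(*(sub_intervals(e) for e in entries)))
    return Fraction(numerator, denominator)


print(agreement_ratio([{1, 2}, {1, 2, 3}, {1, 2}]))  # 1/2 (alternative A1)

# Reconstructed (assumed) PMs of experts E1, E2, E3 for A1..A5.
V1 = [{1, 2}, {1, 2}, {3, 4}, {5}, {3, 4}]
V2 = [{1, 2, 3}, {1, 2, 3}, {1, 2, 3}, {4, 5}, {4, 5}]
V3 = [{1, 2}, {1, 2}, {3}, {4, 5}, {4, 5}]
print([str(agreement_ratio(e)) for e in zip(V1, V2, V3)])
# ['1/2', '1/2', '1/8', '1/3', '1/5']
```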

The results (final ratio values) can be clustered by using the methods described in the previous applications. For this particular illustrative application, from the last column (entitled “Final Value”) of Table 2 one may observe that alternatives A1, A2, and perhaps A4 can be viewed as belonging to the same group (cluster), while the remaining two alternatives (i.e., alternatives A3 and A5) seem to belong to a different group. The values for alternatives A1, A2, and A4 (0.500, 0.500, and 0.333, respectively) are relatively high, that is, closer to the maximum value of 1. This indicates that the three experts ranked these three alternatives more consistently with each other than they ranked the other two alternatives (i.e., A3 and A5). Perhaps the latter two alternatives are more complex in nature than the former three. This suggests that some additional evaluative criteria and further deliberations might shed more light on the nature of the GDM process of this illustrative example.

In an ideal situation all five alternatives should result in values which would be very close to the maximum possible value of 1. On the contrary, if the values are very close to the minimum possible value of 0, the opposite is true. That is, now the implication is that the experts involved may have reached a consensus on how the alternatives should be ranked, but the way they reached their individual final ranking decisions is significantly different from each other.

The results of the previous analysis can be very sensitive to the ranking decision of a single expert. This happens because a single ranking decision may impact the composition of the union set (which is used to compute the value of the dominator) in a significant manner. A similar situation may also occur for the intersection set. This is more likely to occur with large groups of experts. Thus, a complementary approach to the previous idea is to follow a more traditional method based on some simple statistical concepts.

To help fix ideas, let us consider again alternative A1. There are three ranking decisions regarding this alternative (see also Sect. 2): {1, 2}, {1, 2, 3}, and {1, 2}, made by experts E1, E2, and E3, respectively. Each ranking decision has a starting (smallest) rank and an ending (largest) rank (in general, these two ranks may be identical or different). The starting ranks by the three experts are 1, 1, and 1 (i.e., they are the same), while the ending ranks are 2, 3, and 2, respectively. The average of the starting ranks is equal to 1.000, with a standard deviation equal to 0.000 (as all three numbers are identical). For the ending ranks the average is equal to 2.333, and the (population) variance is equal to 0.222, which corresponds to a standard deviation of about 0.471. We define as “length” the difference between the ending rank and the starting rank of an expert's ranking decision for a particular alternative. Thus, for alternative A1 the three lengths are equal to 1, 2, and 1 for experts E1, E2, and E3, respectively. The average of these values is equal to 1.333, while their (population) variance is again equal to 0.222. Table 3 presents these results along with the results for all the alternatives, computed in a similar manner. Of interest in Table 3 is the observation that the average length of the ranking decisions (i.e., the fifth column) is equal to 1 for alternatives A3 and A5, while it is noticeably different from 1 for the rest. That is, the spread of the rankings for these two alternatives was smaller than that for the other three alternatives.
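The statistics for alternative A1 can be reproduced with Python's standard `statistics` module. This is a sketch and the variable names are illustrative. Note that the value 0.222 quoted in the text for the ending ranks and for the lengths equals the population variance (`pvariance`); the corresponding population standard deviation (`pstdev`) is about 0.471.

```python
from statistics import mean, pstdev, pvariance

# Ranking decisions (rank sets) for alternative A1 by experts E1, E2, and E3.
A1_RANK_SETS = [{1, 2}, {1, 2, 3}, {1, 2}]

starts = [min(s) for s in A1_RANK_SETS]          # starting (smallest) ranks
ends = [max(s) for s in A1_RANK_SETS]            # ending (largest) ranks
lengths = [e - s for s, e in zip(starts, ends)]  # "length" = ending rank - starting rank

for name, values in [("start", starts), ("end", ends), ("length", lengths)]:
    print(f"{name:<6} mean={mean(values):.3f} "
          f"pvariance={pvariance(values):.3f} pstdev={pstdev(values):.3f}")
# start  mean=1.000 pvariance=0.000 pstdev=0.000
# end    mean=2.333 pvariance=0.222 pstdev=0.471
# length mean=1.333 pvariance=0.222 pstdev=0.471
```

Replacing `A1_RANK_SETS` with the rank sets of any other alternative gives the corresponding row of statistics in the same way.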

A comparison of the results in Tables 2 and 3 shows that both tables suggest that alternatives A1, A2, and A4 can be grouped together, while alternatives A3 and A5 form a second group, as explained earlier. However, the results in Table 3 are more compact and easier to interpret. In general, it is suggested that these two analytical approaches be used together in a complementary manner.

6 An Illustrative Example Based on a Real-World GDM Application

This section examines an illustrative example taken from the literature (Boroushaki and Malczewski 2010). The real-world problem was to select the best location for a new major parking facility for the city of Canmore, Alberta, Canada. The actual application used a software package (ParticipatoryGIS.com), created for that purpose, to collect input from the public and then analyze it. Those authors do not provide the raw data for the actual problem because the data set is very large (58 decision makers took part); instead, they describe a smaller problem of a similar nature for illustrative purposes. In this section we use the same small data set to demonstrate some of the previous concepts and approaches.

The small data set is summarized in Table 4. The integer numbers in this table represent ranks. This table corresponds to Table 1 of the original publication (Boroushaki and Malczewski 2010). These data describe how a sample of five candidate locations (alternatives A1, A2, …, A5) were ranked by six experts (E1, E2, …, E6), using some criteria relevant to this location problem. A consensus solution was eventually reached; it is presented in the last column of Table 4.

The rankings implied by the six experts and by the consensus solution are listed next. Note that ties are allowed in the original data. Table 5 is derived from Table 4 and presents the PMs of the rankings made by the six experts plus the PM of the consensus ranking.

The rankings by the six experts and the consensus solution are as follows:

- Expert E1: \( A_{4} \succ A_{1} \succ A_{3} \succ A_{2} \succ A_{5} \)
- Expert E2: \( A_{5} \succ A_{1} \succ A_{2} \succ A_{4} \succ A_{3} \)
- Expert E3: \( A_{1} \succ A_{4} \sim A_{5} \succ A_{2} \succ A_{3} \)
- Expert E4: \( A_{1} \sim A_{5} \succ A_{2} \sim A_{3} \sim A_{4} \)
- Expert E5: \( A_{1} \succ A_{5} \succ A_{4} \succ A_{2} \succ A_{3} \)
- Expert E6: \( A_{1} \succ A_{5} \succ A_{4} \succ A_{2} \succ A_{3} \)
- Consensus: \( A_{1} \succ A_{5} \succ A_{2} \succ A_{4} \succ A_{3} \)
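To make the step from these rankings to PMs concrete, the following Python sketch encodes each ranking as a list of tie groups (best group first) and assigns every alternative the set of consecutive ranks its group occupies. This is an assumed encoding that agrees with the rank sets quoted in the text (for example, {2, 3} for alternative A5 under expert E3); Definition 1 may use different notation, and `preference_map` and `RANKINGS` are hypothetical names. The consensus is stored under the label E0, as in Fig. 1.

```python
# Rankings listed above, as tie groups ordered from best to worst.
RANKINGS = {
    "E1": [["A4"], ["A1"], ["A3"], ["A2"], ["A5"]],
    "E2": [["A5"], ["A1"], ["A2"], ["A4"], ["A3"]],
    "E3": [["A1"], ["A4", "A5"], ["A2"], ["A3"]],
    "E4": [["A1", "A5"], ["A2", "A3", "A4"]],
    "E5": [["A1"], ["A5"], ["A4"], ["A2"], ["A3"]],
    "E6": [["A1"], ["A5"], ["A4"], ["A2"], ["A3"]],
    "E0": [["A1"], ["A5"], ["A2"], ["A4"], ["A3"]],  # consensus solution
}


def preference_map(ranking):
    """Map each alternative to the frozenset of consecutive ranks its tie group occupies."""
    pm, position = {}, 1
    for group in ranking:
        ranks = frozenset(range(position, position + len(group)))
        for alternative in group:
            pm[alternative] = ranks
        position += len(group)  # the next group starts after all tied positions
    return pm


for expert in ("E3", "E4"):
    pm = preference_map(RANKINGS[expert])
    print(expert, {alt: sorted(pm[alt]) for alt in sorted(pm)})
# E3 {'A1': [1], 'A2': [4], 'A3': [5], 'A4': [2, 3], 'A5': [2, 3]}
# E4 {'A1': [1, 2], 'A2': [3, 4, 5], 'A3': [3, 4, 5], 'A4': [3, 4, 5], 'A5': [1, 2]}
```

Frozensets are used so that tied alternatives can safely share one immutable rank set.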

The intersection of almost any pair of the PMs depicted in Table 5 is the empty set. For instance, the intersection of the PMs derived from the rankings by experts E1 and E3 is the empty set. Therefore, the distance between most pairs of experts would be equal to the maximum value (i.e., 1.00) and thus would not be informative. However, this particular application has a special characteristic: it aims at finding the best location for a parking facility. In other words, one is interested in identifying the alternative with the top (i.e., #1) rank. Thus, it makes sense to focus the analysis on the alternative(s) that have received many top ranks. For the current application, this is the subset of alternatives A1 and A5 (see also Table 5). When the rankings (PMs) of the six experts and the consensus solution are considered in terms of only these two alternatives (i.e., A1 and A5), some interesting observations can be derived.
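The choice of A1 and A5 can be checked with a short count. In this sketch (illustrative names; each ranking encoded as tie groups, best first), an alternative can hold rank #1 exactly when it belongs to an expert's first tie group, so counting first-group memberships over experts E1 to E6 gives the number of top-rank assignments each alternative received.

```python
from collections import Counter

# Expert rankings (E1-E6) as tie groups, best group first; the consensus is not counted.
RANKINGS = {
    "E1": [["A4"], ["A1"], ["A3"], ["A2"], ["A5"]],
    "E2": [["A5"], ["A1"], ["A2"], ["A4"], ["A3"]],
    "E3": [["A1"], ["A4", "A5"], ["A2"], ["A3"]],
    "E4": [["A1", "A5"], ["A2", "A3", "A4"]],
    "E5": [["A1"], ["A5"], ["A4"], ["A2"], ["A3"]],
    "E6": [["A1"], ["A5"], ["A4"], ["A2"], ["A3"]],
}

# Rank #1 is in an alternative's rank set exactly when it is in the first tie group.
top_counts = Counter(alt for groups in RANKINGS.values() for alt in groups[0])
print(top_counts.most_common())  # [('A1', 4), ('A5', 2), ('A4', 1)]
```

A1 and A5 are the only alternatives that receive rank #1 more than once, which matches the subset selected in the text.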

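For instance, let us consider the pair of experts E3 and E4. The PMs of experts E3 and E4 in terms of only these two alternatives, denoted as PM3 and PM4, respectively, are as follows (see also Table 5): in PM3, alternative A1 has the rank set {1} and alternative A5 has the rank set {2, 3}; in PM4, both A1 and A5 have the rank set {1, 2}.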

However, it can now be observed that the entity denoted as “PM3” does not strictly satisfy Definition 1, because {2, 3} cannot be a legitimate ranking set when there are just two alternatives. Thus, we call such entities “partial preference maps” (PPMs). The union and intersection operations defined previously can be performed on them as usual.

Therefore, the previous two entities will be called PPM3 and PPM4, respectively. When the function DIFFERENCE is applied to this pair of PPMs, the following result is derived:

In a similar manner, any pair of PPMs can be analyzed.
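The restriction to A1 and A5 can be sketched in Python as follows, together with the Marczewski–Steinhaus distance |A Δ B| / |A ∪ B| between two finite sets. The sketch only compares corresponding rank sets of PPM3 and PPM4 one alternative at a time; it does not reproduce the DIFFERENCE function of Definition 4, which is defined in Sect. 4. The helper names `preference_map`, `restrict`, and `ms_distance` are hypothetical.

```python
def preference_map(ranking):
    """Map each alternative to the frozenset of consecutive ranks its tie group occupies."""
    pm, position = {}, 1
    for group in ranking:
        ranks = frozenset(range(position, position + len(group)))
        for alternative in group:
            pm[alternative] = ranks
        position += len(group)
    return pm


def restrict(pm, alternatives):
    """Partial preference map: keep only the chosen alternatives, with their original rank sets."""
    return {alt: pm[alt] for alt in alternatives}


def ms_distance(a, b):
    """Marczewski-Steinhaus distance |A ^ B| / |A | B| between finite sets (0 for two empty sets)."""
    union = a | b
    return len(a ^ b) / len(union) if union else 0.0


TOP = ["A1", "A5"]
ppm3 = restrict(preference_map([["A1"], ["A4", "A5"], ["A2"], ["A3"]]), TOP)  # expert E3
ppm4 = restrict(preference_map([["A1", "A5"], ["A2", "A3", "A4"]]), TOP)      # expert E4
print(ppm3)  # {'A1': frozenset({1}), 'A5': frozenset({2, 3})}
for alt in TOP:
    print(alt, round(ms_distance(ppm3[alt], ppm4[alt]), 3))
# A1 0.5
# A5 0.667
```

The rank set {2, 3} survives the restriction unchanged, which is exactly why PPM3 is not a PM in the sense of Definition 1.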

In Boroushaki and Malczewski (2010) the authors computed the proximity (distance) of two rankings based on a method originally proposed in Herrera-Viedma et al. (2002). That approach is based on the absolute values of the differences between the ranks of the alternatives, taken one at a time, i.e., a method similar (but not identical) to method 5 presented in Sect. 2.1. Such an approach, however, fails to capture the essence of PMs when ties are present. Therefore, the proposed approach based on Definition 4 (in Sect. 4) is more appropriate.

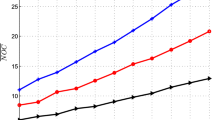

Table 6 presents all the pairwise differences derived when one considers the PMs shown in Table 5 but only in terms of the two alternatives A1 and A5 (i.e., to consider the PPMs as defined above). As this table (matrix) is a symmetric one, only the entries in the upper-right half of it are shown for simplicity of the presentation. The data in Table 6 are next used to explore any relationships that may exist among the experts involved in this GDM process. These relationships can be explored by following a clustering approach as follows (see also Fig. 1). The same results are also presented in tabular form in Table 7.

Clusters defined among the experts and the consensus solution (each denoted by the small solid rectangle symbol) which are derived by analyzing the data in Table 6

The data in Table 6 indicate that experts E5 and E6, along with the consensus solution, are identical (their pairwise differences are equal to 0) when one focuses on the rankings of the two best alternatives (i.e., A1 and A5). This is why experts E5 and E6 and the consensus ranking (denoted as E0 in Fig. 1 and Table 7) are the only members of the innermost cluster in Fig. 1 (see also Table 7).

Next, one can observe that if the notion of belonging to the same cluster is expanded (i.e., the distance threshold increases from 0 to 0.667), then experts E2 and E4 form a single cluster. At the same time, expert E3 can form a cluster with E5, E6, and E0, and another cluster is formed by E4 together with E5, E6, and E0. However, experts E3 and E4 cannot yet be placed inside the same cluster. For this to happen, one needs to further relax the notion of belonging to the same cluster by considering difference values less than or equal to the threshold value 0.800 (see also Table 6). Then E3, E4, E5, E6, and E0 are all members of the same cluster. However, expert E1 is still considered too different from the rest to be placed within the same cluster, while expert E2 forms a cluster only with expert E4. For experts E1 and E2 also to be included in the same cluster, the threshold distance needs to be expanded to the highest value (i.e., 1). In this way everybody would be included in a single cluster (see also Fig. 1 and Table 7). However, such a scenario might be practically meaningless.
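The threshold-based grouping described above can be sketched as follows. The sketch assumes that a cluster at a given threshold is a maximal group whose pairwise differences are all at most the threshold (a maximal clique of the threshold graph). This interpretation is consistent with E3 and E4 each joining E5, E6, and E0 at 0.667 without being grouped with each other, but it is an assumption rather than a procedure stated in the text. The distances below are hypothetical toy values, not the entries of Table 6, and `threshold_clusters` is a hypothetical name.

```python
from itertools import combinations


def threshold_clusters(labels, dist, threshold):
    """Maximal groups in which every pair of members is within `threshold`.

    `dist` maps frozenset({x, y}) to a difference value. Subsets are enumerated
    from largest to smallest, so any group contained in one already found is
    skipped; this brute force is adequate for a handful of experts.
    """
    clusters = []
    for size in range(len(labels), 0, -1):
        for combo in combinations(labels, size):
            members = set(combo)
            if any(members <= found for found in clusters):
                continue  # not maximal: already inside a larger cluster
            if all(dist[frozenset(pair)] <= threshold for pair in combinations(combo, 2)):
                clusters.append(members)
    return clusters


# Hypothetical toy differences among five members a-e (NOT the values of Table 6).
LABELS = ["a", "b", "c", "d", "e"]
TOY = {frozenset(pair): value for pair, value in [
    (("a", "b"), 0.0), (("a", "c"), 0.5), (("b", "c"), 0.5),
    (("a", "d"), 0.5), (("b", "d"), 0.5), (("c", "d"), 0.8),
    (("a", "e"), 1.0), (("b", "e"), 1.0), (("c", "e"), 1.0), (("d", "e"), 1.0),
]}

for t in (0.0, 0.6, 0.8, 1.0):
    print(t, [sorted(c) for c in threshold_clusters(LABELS, TOY, t)])
# 0.0 [['a', 'b'], ['c'], ['d'], ['e']]
# 0.6 [['a', 'b', 'c'], ['a', 'b', 'd'], ['e']]
# 0.8 [['a', 'b', 'c', 'd'], ['e']]
# 1.0 [['a', 'b', 'c', 'd', 'e']]
```

In the toy data, c and d each join the identical core {a, b} at 0.6 without joining each other until 0.8, and e stays apart until the threshold reaches 1, mirroring the pattern described in the text. Single-linkage clustering would instead merge c and d already at 0.6 through their common neighbors.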

In summary, the previous clustering analysis reveals that experts E5 and E6 have practically identical ranking preferences regarding the top two alternatives, and these preferences coincide with the consensus ranking. The next level of similarity involves experts E3 and E4, as indicated in Fig. 1 (or Table 7), and so on.

The previous results cannot be attained unless one follows the analytical procedures described in this study. Depending on the nature of the application at hand, the definition of difference between two rankings may change. However, one would still have to compute all possible pairwise differences and then examine how clusters among the experts may form. These clusters have the potential to uncover actionable new knowledge pertinent to the group decision making process and the current application. The proposed methods are very versatile and can be adapted to different problem settings. Current GDM methods do not offer this kind of post-consensus exploration.

7 Concluding Remarks

Sound decision making requires a feedback mechanism at each stage of the decision making process, and group decision making is no exception. When a consensus has been reached after a (possibly) laborious decision making process, this should not be treated as the end of the process. A feedback step that further analyzes the final results may offer the last opportunity to uncover hidden but nevertheless critical dynamics of the problem under consideration, or even of the experts themselves.

The proposed methodology analyzes differences among pairs of experts when all the alternatives are considered simultaneously, and also when a single alternative or a subset of alternatives is considered. A way to analyze the decisions made regarding each alternative is presented as well. The results of such analyses, after a consensus has been reached, may shed light on aspects of the problem or the experts that cannot be explored unless a consensus has first been reached and comprehensive analyses like the ones proposed in this paper are undertaken.

In summary, this paper makes original contributions on two fronts. First, it defines the concept of difference when rankings are allowed to include ties, that is, when one alternative can be assigned to multiple (but consecutive) ranks. This type of ranking can capture more realistically the way decision makers express ranking preferences. This issue has not been explored adequately so far, and this study offers some exciting new developments. The second group of original contributions concerns ways for analyzing the final results of a GDM process after a consensus has been reached. These provide the means to infer potentially useful new knowledge regarding the group of experts and the alternatives that were evaluated during the GDM process.

These analyses are proposed to be performed post-consensus. The original GDM problem may need to be re-examined if new insights uncover important reasons to do so, especially if certain patterns seem to hold true over time when multiple GDM sessions with the same group of experts are considered. For instance, it may be discovered that the same subgroup of experts very often makes decisions in an identical or nearly identical manner. Such a finding may be considered an actionable insight. The proposed methodology can be executed automatically, and its results can be presented to the experts and/or to any other relevant stakeholder in an intuitive manner. This is a novel aspect of this study; since it has not been explored in other studies, there are no published results to compare with.

Future research may focus on how to explore other ways for defining the difference between two rankings, especially when ties are permitted. For instance, consider the following three PPMs:

According to Definition 4 in Sect. 4, the difference between PPM1 and PPM0 is equal to 1, and the same is true for the difference between PPM2 and PPM0. However, one may argue that PPM1 is closer to PPM0 than PPM2 is to PPM0. This is not captured by this definition. Thus, in the future the concept of PM (or PPM) difference may need to be enhanced to be able to capture such issues.

Another possible future extension is to consider the various stages a GDM process may go through during a single session. Usually, consensus is not reached in a single stage, and the process may have to go through a sequence of iterations (stages) before it is reached. Information gathered during each stage, and also across different GDM sessions (with the same or similar groups of experts), may be analyzed in a similar manner. Such data may offer more ways for exploring the dynamics of GDM processes and of the experts involved in them. As a final note, the results of this study have been used in Triantaphyllou et al. (2020) as the foundation for a post-consensus analysis that considers multiple GDM sessions, each session having a number of stages. That study considers some graph theoretic formulations and then employs an association rules mining approach to derive potentially important relations in the way experts make decisions during GDM processes.

References

Boroushaki S, Malczewski J (2010) Measuring consensus for collaborative decision-making: a GIS-based approach. Comput Environ Urban Syst 34(4):322–332

Davis JH (2014) Group decision making and quantitative judgments: a consensus model (Chapter 3). In: Witte EH, Davis JH (eds) Understanding group behavior. Psychology Press, New York, pp 43–68

Dong Y, Chen X, Herrera F (2015) Minimizing adjusted simple terms in the consensus reaching process with hesitant linguistic assessments in group decision making. Inf Sci 297:95–117

Dunn RR (2000) Isolated trees as foci of diversity in active and fallow fields. Biol Conserv 95(3):317–321

Engel M, Behnke A, Bauerfeld S, Bauer C, Buschbaum C, Volkenborn N, Stoeck T (2012) Sample pooling obscures diversity patterns in intertidal ciliate community composition and structure. FEMS Microbiol Ecol 79(3):741–750

Gardner A, Kanno J, Duncan CA, Selmic R (2014) Measuring distance between unordered sets of different sizes. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 137–143

Gere A, Kovács S, Pásztor-Huszár K, Kókai Z, Sipos L (2014) Comparison of preference mapping methods: a case study on flavored kefirs. J Chemometr 28(4):293–300

Heine MH (1973) Distance between sets as an objective measure of retrieval effectiveness. Information Storage and Retrieval (Continued as Inform Process Manag) 9(3):181–198

Herrera F, Herrera-Viedma E, Verdegay JL (1996) A model of consensus in group decision making under linguistic assessments. Fuzzy Sets Syst 78(1):73–87

Herrera-Viedma E, Herrera F, Chiclana F (2002) A consensus model for multiperson decision making with different preference structures. IEEE Trans Syst Man Cybern A Syst Hum 32(3):394–402

Hou F (2015a) A consensus gap indicator and its application to group decision making. Group Decis Negot 24(3):415–428

Hou F (2015b) The parametric-based GDM selection procedure under linguistic assessments. In: Proceedings of the 2015 IEEE international conference on fuzzy systems (FUZZ-IEEE). IEEE, pp 1–8

Hou F (2016) The prametric-based GDM procedure under fuzzy environment. Group Decis Negot 25(5):1071–1084

Hou F, Triantaphyllou E (2019) An iterative approach for achieving consensus when ranking a finite set of alternatives by a group of experts. Eur J Oper Res 275(2):570–579

Karoński M, Palka Z (1977) On Marczewski–Steinhaus type distance between hypergraphs. Appl Math 1(16):47–57

Kubiak M (2007) Distance measures and fitness-distance analysis for the capacitated vehicle routing problem. In: Doerner KF, Gendreau M, Greistorfer P, Gutjahr W, Hartl RF, Reimann M (eds) Metaheuristics. Operations research/computer science interfaces series, vol 39. Springer, Boston, MA, pp 345–364

Larose DT, Larose CD (2014) Discovering knowledge in data: an introduction to data mining. Wiley, Hoboken

Maassen H, Bezembinder T (2002) Generating random weak orders and the probability of a Condorcet winner. Soc Choice Welf 19(3):517–532

Marczewski E, Steinhaus H (1958) On a certain distance of sets and the corresponding distance of functions. Colloq Math 6(1):319–327

Pérez IJ, Cabrerizo FJ, Alonso S, Herrera-Viedma E (2014) A new consensus model for group decision making problems with non-homogeneous experts. IEEE Trans Syst Man Cybern Syst 44(4):494–498

Ray TG, Triantaphyllou E (1998) Evaluation of rankings with regard to the possible number of agreements and conflicts. Eur J Oper Res 106(1):129–136

Ray T, Triantaphyllou E (1999) Procedures for the evaluation of conflicts in rankings of alternatives. Comput Ind Eng 36(1):35–44

Ricotta C, Podani J (2017) On some properties of the Bray-Curtis dissimilarity and their ecological meaning. Ecol Complex 31:201–205

Saaty TL, Peniwati K (2008) Group decision making: drawing out and reconciling differences. RWS Publications, Pittsburgh

Safford HD, Harrison SP (2001) Grazing and substrate interact to affect native versus exotic diversity in roadside grasslands. Ecol Appl 11(4):1112–1122

Tan PN, Steinbach M, Kumar V (2013) Data mining cluster analysis: basic concepts and algorithms. In: Xiong H (ed) Introduction to data mining. Lecture notes for chapter 8. Rutgers University, New Jersey

Tindale RS, Kameda T, Hinsz VB (2003) Group decision making. In: Hogg MA, Cooper J (eds) The Sage handbook of social psychology. Sage Publications, London, pp 381–403

Triantaphyllou E (2000) Multi-criteria decision making methods: a comparative study. Kluwer Academic Publishers (currently under Springer), Boston

Triantaphyllou E, Yanase J, Hou F (2020) Post-consensus analysis of group decision making processes by means of a graph theoretic and an association rules mining approach. Omega. https://doi.org/10.1016/j.omega.2020.102208

Ureña R, Kou G, Dong Y, Chiclana F, Herrera-Viedma E (2019) A review on trust propagation and opinion dynamics in social networks and group decision making frameworks. Inf Sci 478:461–475

Witten IH, Frank E, Hall MA, Pal CJ (2016) Data Mining: Practical machine learning tools and techniques. Morgan Kaufmann, Boston

Yao Y, Deng X (2014) Quantitative rough sets based on subsethood measures. Inf Sci 267:306–322

Yenket R, Chambers ED IV, Adhikari K (2011) A comparison of seven preference mapping techniques using four software programs. J Sens Stud 26(2):135–150

Acknowledgements

The authors are very appreciative to the two anonymous reviewers whose valuable comments helped them to significantly improve the quality of the original version of the paper.


About this article

Cite this article

Triantaphyllou, E., Hou, F. & Yanase, J. Analysis of the Final Ranking Decisions Made by Experts After a Consensus has Been Reached in Group Decision Making. Group Decis Negot 29, 271–291 (2020). https://doi.org/10.1007/s10726-020-09655-5


DOI: https://doi.org/10.1007/s10726-020-09655-5