Abstract

How can probabilities make sense in a deterministic many-worlds theory? We address two facets of this problem: why should rational agents assign subjective probabilities to branching events, and why should branching events happen with relative frequencies matching their objective probabilities. To address the first question, we generalise the Deutsch–Wallace theorem to a wide class of many-world theories, and show that the subjective probabilities are given by a norm that depends on the dynamics of the theory: the 2-norm in the usual Many-Worlds interpretation of quantum mechanics, and the 1-norm in a classical many-worlds theory known as Kent’s universe. To address the second question, we show that if one takes the objective probability of an event to be the proportion of worlds in which this event is realised, then in most worlds the relative frequencies will approximate well the objective probabilities. This suggests that the task of determining the objective probabilities in a many-worlds theory reduces to the task of determining how to assign a measure to the worlds.


1 Introduction

We are used to thinking of a probabilistic situation as one where either an event E or an event \(\lnot E\) happens, with probabilities p and \(1-p\). In many-world theories, however, probabilistic situations are treated as deterministic branching situations, where some worlds are created where event E happens, and some worlds are created where event \(\lnot E\) happens. Does it still make sense to assign probabilities p and \(1-p\) to the worlds with events E and \(\lnot E\)?

This question was already raised at the inception of the Many-Worlds interpretation by Everett in 1957 [1]. Several early attempts were made to understand probability from a frequentist point of view [2,3,4,5,6] that were mathematically mistaken [7, 8]. Progress had to wait until 1999, when Deutsch proposed a proof of the Born rule from decision-theoretical assumptions, that was subsequently clarified and improved upon by Wallace [9,10,11,12]. In Deutsch and Wallace’s proofs probabilities are understood as tools rational agents use to make decisions about branching situations, in analogy to single-world decision theory.

Other derivations of the Born rule in the Many-Worlds interpretation have been proposed since then by Żurek, Vaidman, Carroll, and Sebens [13,14,15].

In this paper, we investigate the probability problem by focussing on general many-world theories that have a measurement-like branching situation, where a finite number of possible outcomes can occur, and one set of worlds is created with each outcome. Each set of worlds has a coefficient associated to them, with the only constraint that if the coefficient is zero, then no worlds exist with that outcome.

Two concrete many-world theories that fit into this framework are the usual Many-Worlds interpretation, where the coefficients are the usual complex amplitudes, and a classical many-worlds theory introduced by Kent [16]. There, agents live in a deterministic computer simulation, and the coefficients are non-negative integers that literally indicate the number of copies of the simulation that are run with each outcome after branching. We generalise the Deutsch–Wallace theorem to these many-world theories, and show that it implies, in Kent’s universe, that the subjective probabilities must come from the 1-norm of the coefficients, and in Many-Worlds from the 2-norm.

The decision-theoretical approach has been criticized by several authors [16,17,18,19,20,21,22]. Besides objections against specific aspects of Deutsch’s and Wallace’s proofs that do not apply to the current work, the criticism focuses on three main points: (i) the claim that to derive the branching structure of Many-Worlds via decoherence one needs to assume the Born rule in the first place, which would make the Deutsch–Wallace argument circular, (ii) the observation that there are irrational agents who do not use probabilities to make their decisions, and (iii) the claim that it is incoherent to use decision theory to derive probabilities that are actually objective. With regards to (i), it is not true that the derivation of the branching structure depends on the Born rule. It depends only on being able to say that quantum states that are close in some metric give rise to similar physics, which is standard scientific practice [23]. In any case, in this paper we are not concerned with deriving the branching structure, but rather with making sense of probabilities given that such a branching structure exists. With regards to (ii) and (iii), one should note that these criticisms apply equally well to many-world and single-world versions of decision theory, so they are actually against the whole idea of subjective probability. To a subjectivist they ring hollow, as for them probability is nothing but a tool used by rational agents. Nevertheless, it is hard to deny that there is something objective about quantum probabilities.

To address this point, we show that for any many-worlds theory where a fairly general measure can be assigned to the sets of worlds with a given outcome, an analogue of the law of large numbers can be proved, which says that in most worlds the relative frequency of some outcome will be close to the proportion of worlds with that outcome. This suggests that we should define the objective probability of an outcome as the proportion of worlds with that outcome. While in Kent’s universe it is obvious how to calculate the proportion of worlds, and thus the objective probabilities, this is not the case in Many-Worlds. There, the best this argument can do is say that one should take the relative frequencies as a guess for the proportion of worlds, as in most worlds one will be right.

We also explore a non-symmetric branching scenario where Kent’s universe and Many-Worlds assign fundamentally different proportions of worlds to each outcome, and thus the analogy between these many-world theories breaks down. We argue that this is due to the fact that the number of worlds in Kent’s universe increases upon branching, while in Many-Worlds the measure of worlds is conserved. To support this argument we introduce a reversed version of Kent’s universe where branching conserves the number of worlds, and show that it assigns proportions of worlds compatible with Many-Worlds. This breakdown suggests that one of the key rationality principles that Deutsch and Wallace used in their proofs of the Born rule—called substitutibility by Deutsch and diachronic consistency by Wallace—is not valid in Kent’s universe, and therefore should not be accepted for every many-worlds theory. The proof of the generalised Deutsch–Wallace theorem presented here does not assume this principle, but instead derives it as a theorem in Many-Worlds and the reverse Kent’s universe.

2 Naïve Decision Theory

The generalised many-world theories we shall be concerned with have very little structure. We only need them to admit a measurement-like branching situation with n possible outcomes, where after branching n sets of worlds are created, one with each outcome. Each outcome i has a complex coefficient \(c_i\) associated to it whose precise meaning depends on the physics underlying the many-worlds theory, but obeys the general constraint that if the coefficient is equal to zero, then no worlds are created with this outcome.

We want to use this branching situation to play a game where an agent receives a reward \(r_i\) in the worlds that are created with outcome i. The game is then defined by a vector of coefficients \({\mathbf {c}} \in {\mathbb {C}}^n\), and a vector of rewards \({\mathbf {r}} \in {\mathbb {R}}^n\), and can be represented by the \(n \times 2 \) matrix

$$\begin{aligned} G = \begin{pmatrix} c_1 &{} \quad r_1 \\ \vdots &{} \quad \vdots \\ c_n &{} \quad r_n \end{pmatrix}, \end{aligned}$$

where row i specifies the coefficient and reward associated to the worlds with outcome i.

We are concerned with the price at which a rational agent with full knowledge about the situation would accept to buy or sell a ticket for playing a game G. This fair price is called the value of the game, and is denoted by V(G). From this price we shall infer subjective probabilities that are attributed by the agent to the outcomes. To determine this fair price we shall use a fairly basic decision theory, taken from Ref. [24], that is essentially a formalisation of the Dutch book argument.

The first rationality axiom we demand is that if in all worlds the game gives the same reward \(r_1\), the agent must assign value \(r_1\) to the game. If the value were higher, the agent would lose money in all worlds when buying a ticket for this game; if it were lower, the agent would lose money in all worlds when selling a ticket for this game. This axiom implies that the agent is indifferent to branching per se, and only cares about the rewards their future selves get. This might be false, as we can easily conceive of an agent who is reluctant about branching, and would only accept to pay a value smaller than \(r_1\) to play this game. In this case the agent would also be reluctant to sell a ticket for this game, and demand a price higher than \(r_1\), so there is no fair price that can be agreed upon, and presumably the game simply will not be played. We deem this behaviour to be irrational. This is especially so in theories where branching is ubiquitous and unavoidable, such as Many-Worlds.

The axiom is then

-

Constancy If for some game \(G = ({\mathbf {c}},{\mathbf {r}})\) all rewards \(r_i\) are equal to \(r_1\), then \(V(G) = r_1\).

The second axiom we demand is that if for a pair of games with equal coefficients G and \(G'\) the rewards of the first game are larger than or equal to the rewards of the second game in all worlds, then the agent must value the first game no less than the second game. This could be false, for example, if some agent actively wants to hurt their future selves that receive rewards smaller than the maximal one, and accepts to pay more for a game where this happens. We deem this behaviour to be irrational.

The axiom is then (Footnote 1)

-

Dominance Let \(G = ({\mathbf {c}},{\mathbf {r}})\) and \(G' = ({\mathbf {c}},\mathbf {r'})\) be two games that differ only in their rewards. If \({\mathbf {r}} \ge \mathbf {r'}\), then \(V(G) \ge V(G')\).

The third axiom we need is that it should not matter if a ticket for a game with rewards \({\mathbf {r}} + \mathbf {r'}\) is sold at once, or broken down into first a ticket for a game with rewards \({\mathbf {r}}\) followed by a ticket for another game with rewards \(\mathbf {r'}\), where these games are played using the same branching situation. If the price of the composed ticket were higher than the sum of the values of the individual tickets, the agent would lose money in all worlds when buying the composed ticket and selling the individual tickets. On the other hand, if the price of the composed ticket were lower, the agent would lose money in all worlds when buying both individual tickets and selling the composed ticket. The axiom is then

-

Additivity Let \(G = ({\mathbf {c}},{\mathbf {r}})\) and \(G' = ({\mathbf {c}},\mathbf {r'})\) be two games that differ only in their rewards. Then the game \(G'' = ({\mathbf {c}},{\mathbf {r}}+\mathbf {r'})\) has value \(V(G'') = V(G) + V(G').\)

This Additivity axiom is eminently reasonable in the scenario considered, where the agent should be willing to act as bookie and bettor in the game. In decision theory, however, one usually considers scenarios where an agent is simply offered the gamble and is trying to decide how much to pay for it, without needing to act as a bookie. In this case it is not irrational to set \(V({\mathbf {c}},{\mathbf {r}}+\mathbf {r'}) < V({\mathbf {c}},{\mathbf {r}})+V({\mathbf {c}},\mathbf {r'})\); it is in fact necessary to avoid pathological decisions such as Pascal’s Wager and the St. Petersburg paradox. As shown by Wallace, this scenario can be dealt with using a more sophisticated decision theory, such as Savage’s [12, 25]. But we believe that such decision-theoretical sophistication distracts from the physical arguments at hand, and will stick with the simpler bettor/bookie scenario. One should note, anyway, that even in Savage’s theory Additivity is a good approximation when the values being gambled are small compared to the bettor’s total wealth, and one could constrain the analysis to games in which this condition is satisfied.

The fourth and last axiom we need from classical decision theory is that the value function must be continuous on the coefficients and rewards:

-

Continuity Let \((G_k)\) be a sequence (Footnote 2) of games such that \(\lim _{k\rightarrow \infty }G_k = G\). Then \(V(G) = \lim _{k\rightarrow \infty } V(G_k)\).

This axiom is included only for mathematical convenience. It can be left out entirely if one is happy to restrict the rewards to be rational numbers (as more realistically they would be integer multiples of eurocents) and the coefficients to belong to some countable subset of the complex numbers that depends on the particular many-worlds theory (as it would be physically suspect to demand them to be specified with infinite precision).

The axioms presented up to this point are already enough to imply that the agent must assign subjective probabilities to the outcomes of the measurement in the game. The proof is an elementary exercise in decision theory, but we shall include it here anyway because it is short, enlightening, and mostly unfamiliar to physicists:

Theorem 1

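Let \(G = ({\mathbf {c}},{\mathbf {r}})\) be a game with n outcomes. A rational agent must assign it value

$$\begin{aligned} V(G) = \sum _{i=1}^n r_i V({\mathbf {c}},{\mathbf {e}}_i), \end{aligned}$$

where the vectors \({\mathbf {e}}_i\) are the standard basis, and \(V({\mathbf {c}},{\mathbf {e}}_i)\) are the subjective probabilities, as

$$\begin{aligned} V({\mathbf {c}},{\mathbf {e}}_i) \ge 0 \quad \text {and} \quad \sum _{i=1}^n V({\mathbf {c}},{\mathbf {e}}_i) = 1. \end{aligned}$$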

Proof

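By Additivity

$$\begin{aligned} V({\mathbf {c}},{\mathbf {r}}) = \sum _{i=1}^n V({\mathbf {c}},r_i{\mathbf {e}}_i), \end{aligned}$$

so we only need to compute the value of games with a single non-zero reward, e.g. \(({\mathbf {c}},r_1{\mathbf {e}}_1)\), upon which we shall now focus. Using Additivity again, we see that for any positive integer b we have that

$$\begin{aligned} V({\mathbf {c}},b r_1{\mathbf {e}}_1) = b V({\mathbf {c}},r_1{\mathbf {e}}_1), \end{aligned}$$

and setting \(r_1=1/b\) shows us that

$$\begin{aligned} V\left( {\mathbf {c}},\tfrac{1}{b}{\mathbf {e}}_1\right) = \frac{1}{b} V({\mathbf {c}},{\mathbf {e}}_1). \end{aligned}$$

Another application of Additivity lets us conclude that for any positive integer a we have that

$$\begin{aligned} V\left( {\mathbf {c}},\tfrac{a}{b}{\mathbf {e}}_1\right) = \frac{a}{b} V({\mathbf {c}},{\mathbf {e}}_1), \end{aligned}$$

so this homogeneity is valid for any positive rational number. To extend it for any rational number q we use Constancy to conclude that \(V({\mathbf {c}},{\mathbf {0}}) = 0\), where \({\mathbf {0}}\) is the vector of all zeroes, and Additivity again to show that

$$\begin{aligned} V({\mathbf {c}},q{\mathbf {e}}_1) + V({\mathbf {c}},-q{\mathbf {e}}_1) = V({\mathbf {c}},{\mathbf {0}}) = 0, \end{aligned}$$

and therefore that

$$\begin{aligned} V({\mathbf {c}},-q{\mathbf {e}}_1) = -V({\mathbf {c}},q{\mathbf {e}}_1), \end{aligned}$$

so that \(V({\mathbf {c}},q{\mathbf {e}}_1) = qV({\mathbf {c}},{\mathbf {e}}_1)\) holds for every rational q. To extend this to any real number \(\mu \), let \((q_k)\) be a sequence of rational numbers converging to it. Then by Continuity

$$\begin{aligned} V({\mathbf {c}},\mu {\mathbf {e}}_1) = \lim _{k\rightarrow \infty } V({\mathbf {c}},q_k{\mathbf {e}}_1) = \lim _{k\rightarrow \infty } q_k V({\mathbf {c}},{\mathbf {e}}_1) = \mu V({\mathbf {c}},{\mathbf {e}}_1), \end{aligned}$$

and therefore for any reward vector r we have that

$$\begin{aligned} V({\mathbf {c}},{\mathbf {r}}) = \sum _{i=1}^n r_i V({\mathbf {c}},{\mathbf {e}}_i), \end{aligned}$$

so the computation of the value of an arbitrary game reduces to the computation of the values of the elementary games \(({\mathbf {c}},{\mathbf {e}}_i)\), which are by definition the subjective probabilities. To see that they are non-negative and normalised first notice that by Dominance

$$\begin{aligned} V({\mathbf {c}},{\mathbf {e}}_i) \ge V({\mathbf {c}},{\mathbf {0}}) = 0, \end{aligned}$$

and that by Constancy

$$\begin{aligned} \sum _{i=1}^n V({\mathbf {c}},{\mathbf {e}}_i) = V({\mathbf {c}},{\mathbf {1}}) = 1, \end{aligned}$$

where \({\mathbf {1}}\) is the vector of all ones. \(\square \)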

This is as far as we can go by using the naïve decision theory. Rational agents must reason about the games by assigning a probability to each outcome, but the decision theory is silent about what the probabilities must be.

One can go further, though, by assuming an additional axiom reminiscent of the disreputable (Footnote 3) Principle of Indifference from classical probability [26]. It states that one should assign uniform probabilities to symmetric situations: coins and dice should be regarded as equiprobable because there is no preferred side of the coin or face of the die.

In a deterministic single-world theory the principle is nonsense, as a truly symmetric situation would be incapable of producing an output. Deterministic single-world theories that are capable of producing outcomes, such as Bohmian mechanics, must have a variable breaking the symmetry. A rational agent that knows the value of this variable should definitely not assign uniform probabilities, but rather probability one to the outcome that will actually happen.

Probabilistic single-world theories fare a bit better, as one can have a perfectly symmetric situation before the measurement. But after the measurement the symmetry is necessarily broken, as only one outcome actually happens, and a rational agent that knows which should definitely not assign uniform probabilities.

In both deterministic and probabilistic single-world theories, therefore, an indifference principle can only apply to agents with restricted knowledge: about the present, in the deterministic case, or about the future, in the probabilistic case. Only in many-world theories can the symmetry remain rigorously unbroken, even after the measurement is made, and agents with full knowledge about the game can apply the indifference principle. Note that even if the agent’s future selves could relay information to the past about which outcome they experience it would not help, as the agent already knows that they will have future selves experiencing all outcomes.

The axiom is then

-

Indifference Let \(G = ({\mathbf {c}},{\mathbf {r}})\) and \(\sigma \) be a permutation. Then the game with permuted coefficients and rewards \(G_\sigma = (\sigma ({\mathbf {c}}),\sigma ({\mathbf {r}}))\) has value \(V(G_\sigma ) = V(G)\)

Notice that this axiom is not merely saying that it does not matter whether we write down 1 or 2 to label the outcome of a coin toss, but rather that it does not matter if we exchange the coefficients and the rewards of the set of worlds with outcome 1 with those of the set of worlds with outcome 2. Worlds 1 and worlds 2 are physically different, if nothing else for having different measurement results. Indifference implies that whatever these differences are, the coefficients and rewards are the only relevant ones. This is clearly not true if outcome 1, and not 2, happens.

This axiom suffices to prove that symmetric games have uniform probabilities:

Lemma 2

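(Symmetry). Let \(G = ({\mathbf {c}},{\mathbf {r}})\) be a game with n outcomes such that all coefficients \(c_i\) are equal to \(c_1\). Then

$$\begin{aligned} V(G) = \frac{1}{n}\sum _{i=1}^n r_i. \end{aligned}$$

Proof

Let

$$\begin{aligned} G_{\sigma _i} = (\sigma _i({\mathbf {c}}),\sigma _i({\mathbf {r}})) \end{aligned}$$

be a version of G with the ith cyclic permutation applied to the coefficients and rewards, so that by Indifference \(V(G_{\sigma _i}) = V(G)\). If one then defines the reward vector

$$\begin{aligned} \varvec{\rho } = \sum _{i=1}^n \sigma _i({\mathbf {r}}), \end{aligned}$$

all its components are equal to \(\sum _{i=1}^n r_i\), so by Constancy the game \(\Gamma = ({\mathbf {c}},\varvec{\rho })\) has value

$$\begin{aligned} V(\Gamma ) = \sum _{i=1}^n r_i, \end{aligned}$$

but also by Additivity

$$\begin{aligned} V(\Gamma ) = \sum _{i=1}^n V({\mathbf {c}},\sigma _i({\mathbf {r}})) = \sum _{i=1}^n V(G_{\sigma _i}) = n V(G), \end{aligned}$$

where the second equality uses \(\sigma _i({\mathbf {c}}) = {\mathbf {c}}\), which holds because all coefficients are equal, and therefore

$$\begin{aligned} V(G) = \frac{1}{n}\sum _{i=1}^n r_i. \end{aligned}$$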

\(\square \)
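The cyclic-permutation step in the proof of Lemma 2 can be checked numerically. The following is a minimal sketch, not part of the original argument: the helper names `cyclic_shifts` and `symmetric_value` are hypothetical, and exact rational arithmetic is used only to avoid rounding noise.

```python
from fractions import Fraction


def cyclic_shifts(rewards):
    """Return all n cyclic permutations of the reward vector."""
    n = len(rewards)
    return [rewards[i:] + rewards[:i] for i in range(n)]


def symmetric_value(rewards):
    """Value of a symmetric game as stated in Lemma 2: the plain average."""
    return sum(rewards, Fraction(0)) / len(rewards)


rewards = [Fraction(3), Fraction(-1), Fraction(4)]

# rho is the component-wise sum of all cyclic shifts of the reward vector.
# Every component equals the total reward, so Constancy fixes the value of
# the game (c, rho), and Additivity plus Indifference give n * V(G).
rho = [sum(column) for column in zip(*cyclic_shifts(rewards))]
total = sum(rewards)
```

Here `rho` is the constant vector whose entries all equal `total`, and dividing `total` by the number of outcomes reproduces `symmetric_value(rewards)`.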

Now, to deal with games with unequal coefficients, we shall use a fine-graining argument to reduce them to symmetric games. How the fine-graining works depends on the precise physics of the many-worlds theory, so we shall have different fine-graining axioms for different theories.

3 Kent’s Universe

In Kent’s universe the agent lives in a deterministic computer simulation run by some advanced civilization. The branching happens at the press of a button that is displayed on a wall, that in addition contains a list of non-negative integers \({\mathbf {m}} = (m_1,\ldots ,m_n)\) and real numbers \({\mathbf {r}} = (r_1,\ldots ,r_n)\). These integers play the role of the coefficients in the game, and \(m_i\) is literally the number of successor worlds which are created with reward \(r_i\) in them.

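In this case it is clear how to do fine-graining: if one plays the game

$$\begin{aligned} G = \begin{pmatrix} 1 &{} \quad r_1 \\ 2 &{} \quad r_2 \end{pmatrix} \end{aligned}$$

or the game

$$\begin{aligned} G' = \begin{pmatrix} 1 &{} \quad r_1 \\ 1 &{} \quad r_2 \\ 1 &{} \quad r_2 \end{pmatrix} \end{aligned}$$

in both games three successor worlds are created, one with reward \(r_1\) in it, and two with reward \(r_2\). The only difference is that while in game G both worlds with reward \(r_2\) are labelled with outcome 2, in game \(G'\) one of the worlds with reward \(r_2\) is labelled with outcome 2 and the other with outcome 3. We postulate that this difference does not matter, and that \(V(G') = V(G)\). Since \(G'\) is symmetric, we can evaluate \(V(G')\) using Lemma 2, and thus determine that

$$\begin{aligned} V(G) = \frac{r_1 + 2r_2}{3}. \end{aligned}$$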

Generalising this argument, we can determine the value of any game by fine-graining it into a symmetric game. The formal postulate we need is

-

1-Fine-graining Let

$$\begin{aligned} G = \begin{pmatrix} m_1 &{} \quad r_1 \\ m_2 &{} \quad r_2 \\ \vdots &{} \quad \vdots \\ m_n &{} \quad r_n \end{pmatrix} \end{aligned} \tag{23}$$

be a game with n outcomes. Then for any non-negative integers \(m_{11},m_{12}\) such that \(m_{11} + m_{12} = m_1\) the fine-grained game with \(n+1\) outcomes

$$\begin{aligned} G' = \begin{pmatrix} m_{11} &{} \quad r_1 \\ m_{12} &{}\quad r_1 \\ m_2 &{} \quad r_2 \\ \vdots &{}\quad \vdots \\ m_n &{} \quad r_n \end{pmatrix} \end{aligned} \tag{24}$$

has value \(V(G') = V(G)\).

Theorem 3

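Let \(G = ({\mathbf {m}},{\mathbf {r}})\) be a game. A rational agent in Kent’s universe must assign it value

$$\begin{aligned} V(G) = \frac{1}{\Vert {\mathbf {m}}\Vert _1}\sum _{i=1}^n m_i r_i. \end{aligned}$$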

Proof

Starting from an arbitrary n-outcome game \(G = ({\mathbf {m}},{\mathbf {r}})\), we can repeatedly apply 1-Fine-graining to take it to a symmetric game \(G' = ({\mathbf {1}}^{(\sum _{i=1}^nm_i)},\mathbf {r'})\), where \({\mathbf {1}}^{(\sum _{i=1}^nm_i)}\) is the vector of \(\sum _{i=1}^nm_i\) ones. The reward vector \(\mathbf {r'}\) consists of \(m_1\) rewards \(r_1\), \(m_2\) rewards \(r_2\), etc., and can be written as \(\mathbf {r'} = \bigoplus _{i=1}^n {\mathbf {r}}_i^{(m_i)}\). Its value \(V(G')\) can then be calculated via Lemma 2, and is given by

$$\begin{aligned} V(G') = \frac{1}{\sum _{i=1}^n m_i}\sum _{i=1}^n m_i r_i = \frac{1}{\Vert {\mathbf {m}}\Vert _1}\sum _{i=1}^n m_i r_i. \end{aligned}$$

\(\square \)
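The fine-graining argument for Kent’s universe can be illustrated with a short sketch. It assumes only what the text states: each outcome i contributes \(m_i\) successor worlds with reward \(r_i\), and the resulting symmetric game is valued by the plain average of Lemma 2. The function names `fine_grain` and `kent_value` are hypothetical.

```python
from fractions import Fraction


def fine_grain(copies, rewards):
    """Expand a game (m, r) into a symmetric game.

    Each outcome i with m_i successor worlds becomes m_i outcomes with
    coefficient 1 and reward r_i; only the expanded rewards are returned.
    """
    expanded = []
    for m_i, r_i in zip(copies, rewards):
        expanded.extend([r_i] * m_i)
    return expanded


def kent_value(copies, rewards):
    """Value of the fine-grained symmetric game: the average reward per world."""
    expanded = fine_grain(copies, rewards)
    if not expanded:
        raise ValueError("at least one successor world is required")
    return sum(expanded, Fraction(0)) / len(expanded)


# One world with reward 3 and two worlds with reward 6.
value = kent_value([1, 2], [3, 6])
```

For this example the fine-grained game has three worlds with rewards 3, 6, 6, so the value coincides with the weighted average \(\sum _i m_i r_i / \sum _i m_i\).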

4 Many-Worlds

In the Many-Worlds interpretation a measurement is a unitary transformation that, via decoherence, gives rise to superpositions of quasi-classical worlds. We shall not discuss how this happens, as this has been extensively explored elsewhere [27,28,29,30]. Rather, we shall take for granted that this is indeed the case, and that one can instantiate the games by doing measurements on quantum states, with the complex amplitudes playing the role of the coefficients.

The game is started by giving the agent a state

$$\begin{aligned} \sum _{i=1}^n \alpha _i | i \rangle , \end{aligned}$$

where the \(| i \rangle \) are distinguishable states of some infinite-dimensional degree of freedom, such as position. The agent then does a measurement in the computational basis: a unitary transformation that acts on the basis state \(| i \rangle \) together with the measurement device in the ready state \(| M_? \rangle \) and takes the measurement device to the state with outcome i called \(| M_i \rangle \):

$$\begin{aligned} | i \rangle | M_? \rangle \mapsto | i \rangle | M_i \rangle . \end{aligned}$$

The game is concluded by giving the agent reward \(r_i\) in the worlds with outcome i, with the whole process taking the initial state \(\sum _{i=1}^n \alpha _i | i \rangle | M_? \rangle | r_? \rangle \) to the final state

$$\begin{aligned} \sum _{i=1}^n \alpha _i | i \rangle | M_i \rangle | r_i \rangle . \end{aligned}$$

Since the measurement is done in a fixed basis, the game is completely defined by the vector of coefficients \(\varvec{\alpha }\) and the vector of rewards \({\mathbf {r}}\), and so we can represent it by the matrix \(G = (\varvec{\alpha },{\mathbf {r}})\).

We want to proceed as in Kent’s universe, and use a fine-graining postulate to reduce arbitrary games to symmetric games. It is not so obvious, however, how to do fine-graining in the Many-Worlds picture, as we cannot count worlds in order to say that games are equivalent if they assign the same number of worlds to each reward. As argued by Wallace, the continuous nature of quantum mechanics and the arbitrariness of the border between worlds make it impossible to count them in a physically meaningful way [11]. One could try, instead, to arbitrarily postulate that there is one world for each measurement outcome with nonzero coefficient, as is often proposed (Footnote 4) [5, 31].

To see that this is untenable, consider the historical Stern–Gerlach experiment [32], that measured the spin (Footnote 5) of silver atoms by letting them accumulate on a glass plate; if some atom ended up on the left hand side of the glass plate it had spin \(+\hbar \frac{1}{2}\), and if it ended up on the right hand side it had spin \(-\hbar \frac{1}{2}\). Their apparatus, however, was not doing a left/right measurement, but rather a much more precise position measurement, that needs to be coarse-grained in order to obtain a two-outcome spin measurement. Should we then consider the large number of distinguishable positions to be the number of worlds produced in this experiment? But if so, what would we make of a more modern Stern–Gerlach experiment, that instead of the glass plate uses a Langmuir–Taylor detector, a single hot wire that is scanned across the neutral atom beam [33, 34]? Should it be taken to produce two worlds, one in which the atom hit the wire and another in which it did not? And what if we do a Frankenstein version of the Stern–Gerlach experiment, with a glass plate on the left-hand side and a Langmuir–Taylor detector on the right-hand side? Does it create more worlds with spin \(+\hbar \frac{1}{2}\) than with spin \(-\hbar \frac{1}{2}\)?

This should make it clear that the number of outcomes in a measurement is largely arbitrary, and of little relevance. What actually matters is that atoms with spin \(+\hbar \frac{1}{2}\) go left and atoms with spin \(-\hbar \frac{1}{2}\) go right; these different experimental setups are equivalent ways to do the measurement, and we shall base the fine-graining argument in Many-Worlds precisely on this equivalence.

Consider then a game where a measurement is made on the state

reward \(r_1\) is given in the worlds with outcome 1, and reward \(r_2\) in the worlds with outcome 2, taking it to the final state

which can also be represented as the game matrix

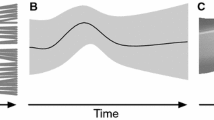

An equivalent way to play this game, shown in Fig. 1, is to make a measurement on the state

instead, where a unitary U was applied to \(| 2 \rangle \), and apply the label 2 and give reward \(r_2\) to both outcomes 2 and 3, leading to the final state

where \(| M_2' \rangle \) is a measurement result physically distinct from \(| M_2 \rangle \), but with the same label.

This second way to do the measurement still gives outcomes 1 and 2 whenever the initial state is \(| 1 \rangle \) and \(| 2 \rangle \), so it respects the definition of measurement given above and normal practice in real laboratories.

Fig. 1. One can measure a state in the \(\{| 1 \rangle ,| 2 \rangle \}\) basis by applying a unitary U to \(| 2 \rangle \) that takes it to a superposition of \(| 2 \rangle \) and \(| 3 \rangle \), measuring the state in the \(\{| 1 \rangle ,| 2 \rangle ,| 3 \rangle \}\) basis, and labelling both results 2 and 3 as 2.

If one does not, however, coarse-grain the outcomes 2 and 3 together, but rather leaves them distinct, then we are playing instead the three-outcome game

We postulate that this labelling choice does not make a difference, so a rational agent must assign the same value to games G and \(G'\). This suffices to fine-grain arbitrary games into symmetric games. As an illustration, consider the game

Doing the fine-graining with a unitary U that takes the state \(| 2 \rangle \) to

we take G to

Applying similar unitaries to \(| 2 \rangle \) and \(| 3 \rangle \), we take \(G'\) to

which is a symmetric game, and so \(V(G'') = V(G)\) can be evaluated via Lemma 2, resulting in

We can now prove the Born rule in the general case by formalising this argument via the following axiom:

-

2-Fine-graining Let

$$\begin{aligned} G = \begin{pmatrix} \alpha _1 &{} \quad r_1 \\ \alpha _2 &{}\quad r_2 \\ \vdots &{} \quad \vdots \\ \alpha _n &{}\quad r_n \end{pmatrix} \end{aligned} \tag{41}$$

be a game with n outcomes. Then for any complex numbers \(\alpha _{11},\alpha _{12}\) such that \({\sqrt{|\alpha _{11}|^2 + \left| \alpha _{12}\right| ^2} = \left| \alpha _1\right| }\) the fine-grained game with \(n+1\) outcomes

$$\begin{aligned} G' = \begin{pmatrix} \alpha _{11} &{}\quad r_1 \\ \alpha _{12} &{}\quad r_1 \\ \alpha _2 &{}\quad r_2 \\ \vdots &{}\quad \vdots \\ \alpha _n &{}\quad r_n \end{pmatrix} \end{aligned} \tag{42}$$

has value \(V(G') = V(G)\).

Theorem 4

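(Deutsch–Wallace). Let \(G = (\varvec{\alpha },{\mathbf {r}})\) be a game. A rational agent in Many-Worlds must assign it value

$$\begin{aligned} V(G) = \frac{1}{\Vert \varvec{\alpha }\Vert _2^2}\sum _{i=1}^n |\alpha _i|^2 r_i. \end{aligned}$$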

Proof

Let then \(G = (\varvec{\alpha },{\mathbf {r}})\) be an n-outcome game such that the coefficients \(\alpha _j\) are of the form \(\alpha _j = \sqrt{\frac{a_j}{b_j}}e^{i\theta _j}\), and do a trivial 2-Fine-graining in each outcome to take it to an n-outcome game \(G' = (\varvec{\alpha '},{\mathbf {r}})\) with coefficients \(\alpha _i' = |\alpha _i| = \sqrt{\frac{a_i}{b_i}}\).

Let then \(d=\prod _{i=1}^n b_i\), and define the integer \(a_i' = da_i/b_i\) so that \(\alpha _i' = \sqrt{\frac{a'_i}{d}}\). One can then fine-grain each coefficient \(\alpha _i'\) into \(a'_i\) coefficients equal to \(1/\sqrt{d}\), obtaining a symmetric game \(G'' = ({\mathbf {1}}^{(\sum _{i=1}^n a_i')}/\sqrt{d},\mathbf {r'})\) with reward vector \(\mathbf {r'} = \bigoplus _{i=1}^n {\mathbf {r}}_i^{(a_i')}\). By Lemma 2 the value of \(G''\) is given by

$$\begin{aligned} V(G'') = \frac{1}{\sum _{i=1}^n a_i'}\sum _{i=1}^n a_i' r_i = \frac{1}{\Vert \varvec{\alpha }\Vert _2^2}\sum _{i=1}^n |\alpha _i|^2 r_i, \end{aligned}$$

where the second equality uses \(a_i' = d|\alpha _i|^2\), and by 2-Fine-graining this is equal to V(G).

To generalise this argument for a game \(G = (\varvec{\alpha },{\mathbf {r}})\) with arbitrary complex coefficients \(\alpha _j\), it is enough to notice that numbers of the form \(\sqrt{\frac{a}{b}}e^{i\theta }\) are dense in the complex plane. Let then \(\varvec{\alpha }_k\) be a sequence of coefficient vectors such that \(\lim _{k\rightarrow \infty }\varvec{\alpha }_k = \varvec{\alpha }\) and that for all k we have \(\alpha _{kl} = \sqrt{\frac{a_{kl}}{b_{kl}}}e^{i\theta _l}\). Let then \(G_k = (\varvec{\alpha }_k,{\mathbf {r}})\). By Continuity, we have that

$$\begin{aligned} V(G) = \lim _{k\rightarrow \infty } V(G_k) = \lim _{k\rightarrow \infty } \frac{1}{\Vert \varvec{\alpha }_k\Vert _2^2}\sum _{l=1}^n |\alpha _{kl}|^2 r_l = \frac{1}{\Vert \varvec{\alpha }\Vert _2^2}\sum _{l=1}^n |\alpha _l|^2 r_l. \end{aligned}$$

\(\square \)

Note that we did not have to assume that the initial state was normalised, as the proof implies that a factor of \(\Vert \varvec{\alpha }\Vert ^2_2\) must appear on the denominator of the value function. We did have to assume that the transformations between quantum states are done via unitaries, but this comes from the background assumption of Many-Worlds quantum mechanics.
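The reduction used in the proof of Theorem 4 can be sketched for coefficients with rational squared moduli. This is only an illustration under stated assumptions: the function name `born_value` is hypothetical, the inputs are the squared moduli \(|\alpha _i|^2\) rather than the amplitudes themselves (the phases having been removed by the trivial fine-graining), and the common denominator is taken as the product of the denominators, as in the proof.

```python
from fractions import Fraction
from math import prod


def born_value(squared_moduli, rewards):
    """Value of a game via fine-graining into equal-amplitude outcomes.

    squared_moduli: rational values of |alpha_i|^2, not necessarily normalised.
    """
    weights = [Fraction(w) for w in squared_moduli]
    d = prod(w.denominator for w in weights)       # d = product of the b_i
    counts = [int(w * d) for w in weights]         # a_i' = d * a_i / b_i
    # Symmetric game: a_i' outcomes of amplitude 1/sqrt(d) with reward r_i.
    expanded = [r for a, r in zip(counts, rewards) for _ in range(a)]
    if not expanded:
        raise ValueError("at least one nonzero coefficient is required")
    return sum(expanded, Fraction(0)) / len(expanded)   # Lemma 2 average


normalised = born_value([Fraction(1, 3), Fraction(2, 3)], [3, 6])
unnormalised = born_value([1, 2], [3, 6])
```

Both calls describe the same game up to normalisation, and both give the same value, in line with the remark that the factor \(\Vert \varvec{\alpha }\Vert ^2_2\) appears in the denominator of the value function.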

In some textbooks one postulates the Born rule as a fundamental principle, and motivates unitary evolution from conservation of probabilities, see e.g. Refs. [35,36,37]. Such an approach would make deriving the Born rule from the unitary evolution a bit circular. Other textbooks, however, postulate unitarity as fundamental, motivated by analogy with Hamiltonian classical mechanics, and consider the Born rule a separate postulate, see e.g. Refs. [38,39,40]. This latter approach parallels the historical development of the Schrödinger equation and the Born rule [41, 42].

4.1 Generalisation

To emphasize that the Born rule comes from fine-graining through unitary transformations that preserve the 2-norm, we’d like to generalise the fine-graining argument to transformations that preserve some other norm. Which norms should we consider? As we show in Appendix A, some weak consistency conditions imply that fine-graining can only be done if the transformations preserve the p-norm of the vectors.

Consider, then, some hypothetical (Footnote 6) many-worlds theory where the p-norm is preserved. In such a theory we can fine-grain the game

by using a transformation T that takes the state \(| 2 \rangle \) into (Footnote 7)

The fine-grained game is then

where \({\mathbf {1}}^{(2^p)}\) is the vector of \(2^p\) ones, and from the analogous argument we conclude that

The general p-Born rule can then be proven via the following axiom:

-

p-Fine-graining Let

$$\begin{aligned} G = \begin{pmatrix} \alpha _1 &{}\quad r_1 \\ \alpha _2 &{}\quad r_2 \\ \vdots &{}\quad \vdots \\ \alpha _n &{}\quad r_n \end{pmatrix} \end{aligned} \tag{50}$$

be a game with n outcomes. Then for any complex numbers \(\alpha _{11},\alpha _{12}\) such that \({\left( |\alpha _{11}|^p + \left| \alpha _{12}\right| ^p\right) ^{\frac{1}{p}} = \left| \alpha _1\right| }\) the fine-grained game with \(n+1\) outcomes

$$\begin{aligned} G' = \begin{pmatrix} \alpha _{11} &{}\quad r_1 \\ \alpha _{12} &{}\quad r_1 \\ \alpha _2 &{}\quad r_2 \\ \vdots &{}\quad \vdots \\ \alpha _n &{}\quad r_n \end{pmatrix} \end{aligned} \tag{51}$$

has value \(V(G') = V(G)\).

Theorem 5

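Let \(G = (\varvec{\alpha },{\mathbf {r}})\) be a game. A rational agent in a many-worlds theory where transformations preserve the p-norm must assign it value

$$\begin{aligned} V(G) = \frac{1}{\Vert \varvec{\alpha }\Vert _p^p}\sum _{i=1}^n |\alpha _i|^p r_i. \end{aligned} \tag{52}$$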

Proof

The proof is similar to the Many-Worlds case, so we shall only sketch it: consider a game \(G = (\varvec{\alpha },{\mathbf {r}})\) with coefficients \(\alpha _j = \left( \frac{a_j}{b_j}\right) ^{\frac{1}{p}}e^{i\theta _j}\), do a trivial p-Fine-graining to get rid of the phase, rewrite the coefficients as \(\left( \frac{a_i'}{d}\right) ^{\frac{1}{p}}\) for \(d=\prod _{i=1}^n b_i\) and \(a_i' = da_i/b_i\), fine-grain it into a game with \(a_i'\) coefficients equal to \(\left( 1/d\right) ^{\frac{1}{p}}\) for each outcome i, use Lemma 2 to conclude that the value of the fine-grained game is given by equation (52), and use Continuity to show that this formula is valid for any game. \(\square \)
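As a concrete illustration of Theorem 5, the following minimal sketch computes the value of a game by weighting each reward with \(|\alpha _i|^p\), and checks numerically that a p-Fine-graining of the first outcome leaves the value unchanged. The function names and the `share` parameter are illustrative assumptions of ours, not notation from the text.

```python
def p_born_value(alphas, rewards, p):
    """Value of a game under the p-Born rule: rewards weighted by |alpha_i|**p."""
    weights = [abs(a) ** p for a in alphas]
    return sum(w * r for w, r in zip(weights, rewards)) / sum(weights)


def fine_grain_first(alphas, rewards, p, share):
    """Split the first outcome into two branches carrying the same reward.

    `share` (hypothetical parameter, 0 < share < 1) is the fraction of
    |alpha_1|**p given to the first new branch, so that the p-norm of the
    two new coefficients equals |alpha_1|, as p-Fine-graining requires.
    """
    a1 = abs(alphas[0])
    a11 = a1 * share ** (1.0 / p)
    a12 = a1 * (1.0 - share) ** (1.0 / p)
    new_alphas = [a11, a12] + list(alphas[1:])
    new_rewards = [rewards[0], rewards[0]] + list(rewards[1:])
    return new_alphas, new_rewards


# Example game: coefficients (1, 2i) with rewards (0, 3), evaluated for p = 3.
alphas, rewards, p = [1, 2j], [0.0, 3.0], 3
fine_alphas, fine_rewards = fine_grain_first(alphas, rewards, p, share=0.25)
value_before = p_born_value(alphas, rewards, p)
value_after = p_born_value(fine_alphas, fine_rewards, p)
```

Here `value_before` and `value_after` agree up to floating-point error, whatever `share` is chosen.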

Notice that for the \(\max \)-norm (often referred to as \(p=\infty \)) not only does the proof fail, but the result is also false, as it is not possible to fine-grain all games into symmetric games via transformations that preserve the \(\max \)-norm. For example, any fine-graining of the game

$$\begin{aligned} G = \begin{pmatrix} 1 &{}\quad r_1 \\ 2 &{}\quad r_2 \end{pmatrix} \end{aligned}$$(53)

will have at least one coefficient 1 associated to reward \(r_1\) and at least one coefficient 2 associated to reward \(r_2\). One could try, nevertheless, to arbitrarily define

$$\begin{aligned} V(G) = \frac{1}{|M|}\sum _{i\in M} r_i, \end{aligned}$$(54)

where M is the set of i such that \(|\alpha _i| = \max _j |\alpha _j|\). This \(\max \)-Born rule would be consistent with Constancy, Dominance, Additivity, and Indifference, but not with Continuity.
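The failure of Continuity can be seen in a two-outcome example. The sketch below implements the \(\max \)-Born rule as the average reward over the outcomes with maximal \(|\alpha _i|\) (our reading of the definition via the set M) and evaluates it on a symmetric game and on an arbitrarily small perturbation of it. The function name is an assumption made for illustration.

```python
def max_born_value(alphas, rewards):
    """Average reward over the outcomes whose coefficient has maximal modulus."""
    moduli = [abs(a) for a in alphas]
    top = max(moduli)
    winners = [r for m, r in zip(moduli, rewards) if m == top]
    return sum(winners) / len(winners)


rewards = [0.0, 1.0]
symmetric = max_born_value([1.0, 1.0], rewards)         # both outcomes are in M
perturbed = max_born_value([1.0 + 1e-9, 1.0], rewards)  # only outcome 1 is in M
```

The perturbed games converge to the symmetric one as the perturbation shrinks, but their values stay at \(r_1\) while the symmetric game has value \((r_1+r_2)/2\), so the value function jumps at the limit.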

5 Objective Probabilities

We do not accept that the behaviour of a rational decision maker should play a role in modelling physical systems—Richard Gill [17].

Up to now the discussion has been exclusively about subjective probabilities. We have argued only that it would be irrational to assign probabilities different than those given by the generalised Deutsch–Wallace theorem because one would unjustifiably break the symmetries of the theory. We have not argued, though, that it would be irrational to assign different probabilities because they would predict different relative frequencies. Such an argument would be suspect: why should these explicitly subjective probabilities be connected to the clearly objective relative frequencies? Should not relative frequencies be connected to objective probabilities instead?

The situation is not as bad as it sounds, because although subjective, these probabilities depend only on the coefficients of the game, which are objective, and their derivation is done from defensible rationality axioms together with facts about the world and the theory describing it; it would be rather surprising if these subjective probabilities turned out to be completely fantastic. In fact, such well-grounded subjective probabilities are even taken to be equal to the objective probabilities by Lewis’ Principal Principle [44], which states that

that is, the objective probability of an event E is equal to the subjective probabilities assigned to E by a rational agent that knows the history of the world up to this point H and the theory that describes the world T.

This is still a bit unsatisfactory, because the relationship between objective probabilities and relative frequencies should not depend on the opinions of rational agents or even their existence. We believe, after all, that radioactive elements had been decaying on Earth long before humans appeared to reason about them, and it would be absurd to assume that the relative frequencies budged at all when we arrived.

We can do better, though. We shall propose a definition of objective probability in terms of the proportion of worlds in which an event is realised, and show that the connection of these objective probabilities with relative frequencies is mathematically identical to the one in single-world theories. In this way they fulfil the main role (Footnote 8) objective probabilities must play, as defended by Saunders in Ref. [45].

This connection, in single-world theories, is given by the law of large numbers, which says roughly that for a large number of trials the relative frequencies will be close to the objective probability, with high objective probability (Footnote 9). More precisely, if the objective probability of observing some event is p, then after N trials the objective probability that the frequency \(f_N\) will be farther than \(\varepsilon \) away from p is upper bounded by a function that is decreasing in N and \(\varepsilon \), that is,

$$\begin{aligned} \Pr \left( |f_N - p| > \varepsilon \right) \le 2e^{-2N\varepsilon ^2}, \end{aligned}$$(56)

where the precise form of the upper bound is not relevant, but for concreteness we used the one given by the Hoeffding inequality.
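To make the bound concrete, the following sketch compares the exact binomial tail probability with the standard two-sided Hoeffding bound \(2e^{-2N\varepsilon ^2}\). The function names and the chosen values of N, p, and \(\varepsilon \) are illustrative assumptions.

```python
from math import comb, exp


def binomial_tail(N, p, eps):
    """Exact probability that |k/N - p| > eps when k ~ Binomial(N, p)."""
    return sum(
        comb(N, k) * p**k * (1 - p) ** (N - k)
        for k in range(N + 1)
        if abs(k / N - p) > eps
    )


def hoeffding_bound(N, eps):
    """Two-sided Hoeffding bound on the same tail probability."""
    return 2 * exp(-2 * N * eps**2)


# Exact tail versus bound for a few sample sizes (p = 0.3, eps = 0.1).
comparison = {N: (binomial_tail(N, 0.3, 0.1), hoeffding_bound(N, 0.1))
              for N in (10, 50, 200)}
```

For every N in `comparison` the exact tail lies below the bound, and both shrink as N grows; the bound is loose for small N, which is why only its qualitative behaviour matters for the argument.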

To see how this works in many-world theories, we shall first consider how the relative frequencies behave in Kent’s universe, and then generalise. Consider then that one is collecting relative frequencies by performing N repetitions of an n-outcome measurement, where \(m_i\) worlds are created with outcome i. After N trials there are \(n^N\) sets of worlds, each set identified by a sequence of outcomes \({\mathbf {s}} = (s_1,\ldots ,s_N)\), with \(s_i \in \{1,\ldots ,n\}\). The number of worlds where a sequence \({\mathbf {s}}\) is obtained is clearly given by

$$\begin{aligned} \prod _{i=1}^n m_i^{k_i}, \end{aligned}$$(57)

where \(k_i\) is the number of outcomes i in \({\mathbf {s}}\). If we define then the proportion of worlds with outcome sequence \({\mathbf {s}}\) as

$$\begin{aligned} \sharp _{\mathbf {s}} := \frac{\prod _{i=1}^n m_i^{k_i}}{\left( \sum _{j=1}^n m_j\right) ^N} = \prod _{i=1}^n \sharp _i^{k_i}, \quad \sharp _i := \frac{m_i}{\sum _{j=1}^n m_j}, \end{aligned}$$(58)

then the proportion of worlds where the relative frequency \(f_N\) of outcome 1 is k / N is

$$\begin{aligned} \left( {\begin{array}{c}N\\ k\end{array}}\right) \sharp _1^k (1-\sharp _1)^{N-k}, \end{aligned}$$(59)

which is formally identical to the binomial distribution, and therefore allows us to prove a law of large numbers for the proportion of worlds, which says that in most worlds the relative frequency of outcome 1 will be close to \(\sharp _1\), or more precisely that

$$\begin{aligned} \sharp \left( |f_N - \sharp _1| > \varepsilon \right) \le 2e^{-2N\varepsilon ^2}. \end{aligned}$$(60)
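The world counting in Kent’s universe can be checked by brute force. The sketch below enumerates all \(n^N\) outcome sequences, counts the worlds carrying each one as the product of the \(m_i\), and groups them by the number of times outcome 1 occurs. The function name and the example values of \(m_i\) are our own assumptions.

```python
from fractions import Fraction
from itertools import product
from math import comb, prod


def frequency_proportions(m, N):
    """Proportion of worlds in which outcome 1 occurs k times, for k = 0..N.

    m[i] is the number of worlds created with outcome i+1 at each branching.
    """
    counts = [0] * (N + 1)
    for s in product(range(len(m)), repeat=N):
        worlds = prod(m[i] for i in s)  # worlds carrying this outcome sequence
        counts[s.count(0)] += worlds    # index 0 stands for outcome 1
    total = sum(m) ** N                 # every trial multiplies each world by sum(m)
    return [Fraction(c, total) for c in counts]


m, N = [1, 2, 3], 4
share_1 = Fraction(m[0], sum(m))  # proportion of worlds with outcome 1 per trial
by_counting = frequency_proportions(m, N)
by_binomial = [comb(N, k) * share_1**k * (1 - share_1) ** (N - k)
               for k in range(N + 1)]
```

With exact rational arithmetic, `by_counting` and `by_binomial` coincide, which is the binomial form used in the text.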

This suggests that we could try identifying objective probabilities with the proportion of worlds in a general many-worlds theory. Consider then that in such a theory one is collecting frequencies by performing N repetitions of an n-outcome measurement as in Kent’s universe. Now the branching coefficients are not the number of worlds created with each outcome, however, and as argued in Sect. 4 we do not think that it is possible to assign a sensible world count. We can, however, assign a sensible world measure (Footnote 10): a function \(\Lambda \) that assigns a real number \(\Lambda _{\mathbf {s}} \ge 0\) to the set of worlds with outcome sequence \({\mathbf {s}}\), and defines the measure of a set of worlds with outcome sequences in some set S to be

$$\begin{aligned} \Lambda _S := \sum _{{\mathbf {s}} \in S} \Lambda _{\mathbf {s}}. \end{aligned}$$(61)

Since the branchings are completely independent, it is natural to postulate that

$$\begin{aligned} \Lambda _{\mathbf {s}} = \prod _{i=1}^N \Lambda _{s_i}, \end{aligned}$$(62)

that is, that the measure of the set of worlds with outcomes \({\mathbf {s}}\) is the product of the single-trial measures, and this is enough to show that in most worlds the relative frequencies will be right. Defining the proportion of worlds with outcome sequence \({\mathbf {s}}\) to be

$$\begin{aligned} \lambda _{\mathbf {s}} := \frac{\Lambda _{\mathbf {s}}}{\sum _{{\mathbf {s}}'} \Lambda _{{\mathbf {s}}'}}, \end{aligned}$$(63)

it follows that the proportion of worlds with relative frequency k / N of outcome 1 is

$$\begin{aligned} \left( {\begin{array}{c}N\\ k\end{array}}\right) \lambda _1^k (1-\lambda _1)^{N-k}, \quad \lambda _1 := \frac{\Lambda _1}{\sum _{j=1}^n \Lambda _j}, \end{aligned}$$(64)

which is again formally identical to the binomial distribution, and thus the law of large numbers for the proportion of worlds follows.

This implies that in any many-worlds theory with such a product measure it is eminently reasonable to count relative frequencies, and use them to guess which is the proportion of worlds with a given outcome: in most worlds one will be right.

This works perfectly well in Many-Worlds, if we take the measure of worlds to be the one suggested by the Born rule, or, more generally, the p-Born rule. To see that, consider again the N repetitions of the n-outcome measurement, which in Many-Worlds is represented by the transformation

where the final state \(| w \rangle \) can be written as the superposition of the \(n^N\) sets of worlds

If we then postulate the measure of the set of worlds \(| w_{\mathbf {s}} \rangle \) to be

then it does decompose as a product of the single-trial measures, as required. Defining again the proportion of worlds with outcomes \({\mathbf {s}}\) to be

we see that the proportion of worlds with relative frequency \(f_N = k/N\) is again given by the binomial distribution, from which the law of large numbers for the proportion of worlds again follows.

In summary, if the correct way to measure the proportion of worlds is via the p-norm, then in most worlds we will observe frequencies conforming to the p-Born rule. Conversely, if we observe relative frequencies conforming to the 2-Born rule, we should bet that the correct way to measure the proportion of worlds is via the 2-norm.
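The dependence on the choice of norm can be illustrated numerically. The sketch below assumes that the single-trial measure of outcome i is \(|\alpha _i|^p\) and uses the binomial form of the proportion of worlds; it then asks what proportion of worlds sees a relative frequency of outcome 1 close to a given target, for the same coefficients under the 1-norm and the 2-norm. Function names and numerical values are illustrative assumptions.

```python
from math import comb


def single_trial_proportion(alphas, p):
    """Proportion of worlds with outcome 1, measuring worlds by |alpha_i|**p."""
    weights = [abs(a) ** p for a in alphas]
    return weights[0] / sum(weights)


def proportion_near(alphas, p, N, target, eps):
    """Proportion of worlds (p-norm measure) in which |f_N - target| <= eps."""
    lam = single_trial_proportion(alphas, p)
    return sum(
        comb(N, k) * lam**k * (1 - lam) ** (N - k)
        for k in range(N + 1)
        if abs(k / N - target) <= eps
    )


alphas, N = [1, 2], 400
# Target frequency 1/5 is what the 2-Born rule assigns to outcome 1 here.
near_if_2_norm = proportion_near(alphas, 2, N, target=0.2, eps=0.05)
near_if_1_norm = proportion_near(alphas, 1, N, target=0.2, eps=0.05)
```

If worlds are measured by the 2-norm, almost all of them see a frequency near 1/5; if they are measured by the 1-norm, almost none do, since the frequencies then concentrate around 1/3. Observed frequencies therefore discriminate between candidate measures.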

6 Multiplying or Splitting

The analogy between Kent’s universe and Many-Worlds is not perfect, however, and it breaks down in the Once-or-Twice scenario shown in Fig. 2 (discussed by Wallace [23] and Sebens and Carroll [46]). In it, an agent performs a measurement described by the coefficients \(c_1\) and \(c_2\), and in the worlds where outcome 2 is obtained they perform the same measurement again. What are the subjective and objective probabilities of the sequences of outcomes (1), (2, 1), and (2, 2)?

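First we shall consider the objective probabilities. In Kent’s universe it seems clear what they are: there are in total \(c_1+c_1c_2+c_2^2\) worlds, of which \(c_1\) have outcome (1), \(c_2c_1\) have outcomes (2, 1), and \(c_2^2\) have outcomes (2, 2), so the proportions are

$$\begin{aligned} \sharp _1 = \frac{c_1}{c_1+c_1c_2+c_2^2}, \quad \sharp _{21} = \frac{c_2c_1}{c_1+c_1c_2+c_2^2}, \quad \sharp _{22} = \frac{c_2^2}{c_1+c_1c_2+c_2^2}. \end{aligned}$$(69)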

If we measure frequencies, which are after all what objective probabilities should be connected to, then in most worlds they will be close to these proportions.

In Many-Worlds (and more generally in the hypothetical p-theories), however, Once-or-Twice is described by the transformation

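and the measure of worlds is given by Eq. (67), which tells us that

$$\begin{aligned} \lambda _1 = \frac{|c_1|^p}{|c_1|^p+|c_2|^p}, \quad \lambda _{21} = \frac{|c_2|^p|c_1|^p}{\left( |c_1|^p+|c_2|^p\right) ^2}, \quad \lambda _{22} = \frac{|c_2|^{2p}}{\left( |c_1|^p+|c_2|^p\right) ^2}, \end{aligned}$$(71)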

a fundamentally different result. If \(c_1=c_2=1\), for example, \(\sharp _1 = 1/3\), but \(\lambda _1 = 1/2\).

What is the source of this disconnect? Our proposal is that while in Kent’s universe the number of worlds is quite literally being multiplied upon branching, this is clearly not the case in Many-Worlds, as branching increases neither the number of particles nor the energy. In fact, the total measure of worlds, as given by Eq. (67), is conserved. A closer analogue to Many-Worlds would be a reversed version of Kent’s universe where branching conserves the number of worlds. There the computer simulation starts with a large number of worlds, and when a measurement is made each outcome is imprinted in a subset of the worlds, with the relative sizes of these subsets determined by the coefficients. In the Once-or-Twice case, it suffices to start with \((c_1+c_2)^2\) worlds. After the first branching \((c_1+c_2)c_1\) are imprinted with outcome 1, and \((c_1+c_2)c_2\) with outcome 2. Out of the \((c_1+c_2)c_2\) with outcome 2 a further \(c_2c_1\) receive a 1, and \(c_2^2\) receive another 2. The proportions of worlds are given by

$$\begin{aligned} \sharp _1 = \frac{c_1}{c_1+c_2}, \quad \sharp _{21} = \frac{c_2c_1}{(c_1+c_2)^2}, \quad \sharp _{22} = \frac{c_2^2}{(c_1+c_2)^2}, \end{aligned}$$(72)

now matching Many-Worlds (Footnote 11).
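The two world counts for Once-or-Twice can be compared directly. The sketch below follows the counting described in the text for the regular Kent’s universe (worlds are multiplied at each branching) and for the reverse one (a fixed stock of \((c_1+c_2)^2\) worlds is imprinted); the function names are our own.

```python
from fractions import Fraction


def regular_kent(c1, c2):
    """Proportions of worlds when each branching multiplies the worlds."""
    total = c1 + c2 * c1 + c2 * c2
    return {
        (1,): Fraction(c1, total),
        (2, 1): Fraction(c2 * c1, total),
        (2, 2): Fraction(c2 * c2, total),
    }


def reverse_kent(c1, c2):
    """Proportions of worlds when branching conserves the number of worlds."""
    start = (c1 + c2) ** 2
    return {
        (1,): Fraction((c1 + c2) * c1, start),
        (2, 1): Fraction(c2 * c1, start),
        (2, 2): Fraction(c2 * c2, start),
    }


regular = regular_kent(1, 1)
reverse = reverse_kent(1, 1)
```

For \(c_1=c_2=1\) the regular universe gives outcome (1) a proportion of 1/3, while the reverse universe gives it 1/2, which is the disagreement discussed above.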

Now turning to the subjective probabilities, we face a difficulty, because the generalised Deutsch–Wallace theorem proved here deals only with simple measurements, not sequences of measurements. The original versions by Deutsch and Wallace do attribute probabilities to sequences of measurements, though, through an axiom that Deutsch called substitutibility and Wallace called diachronic consistency. It says that the value of a game does not change if one of its rewards is replaced by a game of equal value, so if an agent attributes value V(G) to some game G, they really must be indifferent between receiving reward V(G) or playing G, even when this reward would be given as part of another game. More formally, it is

- Substitution Let \(G = ({\mathbf {c}},{\mathbf {r}})\) be a game, and \(G' = ({\mathbf {c}}',{\mathbf {r}}')\) another game such that \(V(G') = r_1\). Then the sequential game where an agent plays G but instead of receiving reward \(r_1\) they play \(G'\) has value equal to V(G).

With it, we can prove that the subjective probabilities in Many-Worlds match the proportion of worlds from equations (71), as expected, but Substitution also implies that in both the normal and the reverse Kent’s universe the subjective probabilities match those from equations (72). Here the Principal Principle raises a red flag, as in the regular Kent’s universe they should match equations (69) instead.

What went wrong? Well, Substitution implies that, in the case where \(c_1=c_2=1\), one should be indifferent between playing a game where one future self gets reward \(r_1\) and another reward \(r_2\), and another game where one future self gets reward \(r_1\) but two get reward \(r_2\). If all these future selves are equal, as is the case in Kent’s universe, one should definitely not be indifferent! One should prefer the second game, and assign probability 1 / 3 to each outcome (Footnote 12). This is also the answer one obtains from Elga’s indifference principle [47].

We should, therefore, reject Substitution as a rationality principle valid for general many-world theories, as it seems valid only for those where the total measure of worlds is conserved upon branching. As a substitute, in both the regular and the reverse Kent’s universe we can unproblematically follow the objective probabilities, and say that a sequential game is equivalent to a simple game where the same rewards are given in the same number of worlds. In Many-Worlds the objective probabilities are not well-established, so instead we argue that different ways of doing a measurement are equivalent, as done in Sect. 4. Specifically, we say that measuring a state \(| \psi \rangle \) and then a state \(| \varphi \rangle \) in the worlds with outcome 2 is equivalent to doing a joint measurement on \(| \psi \rangle | \varphi \rangle \) and coarse-graining the outcomes of the measurement on \(| \varphi \rangle \) in the worlds where the outcome of the measurement on \(| \psi \rangle \) was different than 2. This allows us to derive Substitution as a theorem in Many-Worlds and in the reverse Kent’s universe, but not in the regular Kent’s universe. This is done in detail in Appendix B.

In all many-world theories, these arguments imply that the agents should be indifferent to the number of branchings, caring only about which coefficients are associated to which rewards, thus satisfying the Principal Principle.

7 Conclusion

We investigated how subjective and objective probabilities work in many-world theories.

With respect to subjective probabilities, we generalised the Deutsch–Wallace theorem, and showed how it can be broken down into three parts: the first is a decision-theoretical core common to both single-world and many-world theories, which says that rational agents should use probabilities to make their decisions. The second part is a symmetry argument very natural for many-world theories, but less so for single-world ones, which implies that rational agents should assign uniform probabilities to physically symmetric games. The last part is a fine-graining argument used to reduce arbitrary games to symmetric ones. This reduction takes different forms depending on the precise physics of the many-worlds theory in question: in Kent’s universe it implies that the probabilities come from the 1-norm, while in Many-Worlds it implies that the probabilities come from the 2-norm. While in Kent’s universe the fine-graining is motivated explicitly from many-worlds considerations, the Many-Worlds version depends only on operational assumptions, so if one can stomach the symmetry argument, this derivation of the Born rule can also be considered valid for single-world versions of quantum mechanics.

With respect to objective probabilities, we have argued that they should be identified with the proportion of worlds in which an event happens, and showed that for any measure of worlds that has a product form an analogue of the law of large numbers can be proven: in most worlds the relative frequency will be close to the proportion of worlds. In Kent’s universe, the motivating example, this argument can tell us what the objective probabilities are, as it is obvious how to measure the proportion of worlds. Furthermore, one can use the Principal Principle as a consistency check, and note that they match the subjective probabilities from the generalised Deutsch–Wallace theorem.

In Many-Worlds, however, it is not clear how to measure the proportion of worlds, so this argument cannot be used to derive the objective probabilities. It could be used in the other direction, though: if one takes both the Deutsch–Wallace theorem and the Principal Principle as true, then one could show that worlds should be measured via the 2-norm. Alternatively, the argument can simply be used to say that if you want to find out what the proportion of worlds is, gathering relative frequencies is a good idea, as in most worlds you’ll get the right answer.

We have also shown that the analogy between Many-Worlds and Kent’s universe breaks down in the Once-or-Twice scenario, and used this breakdown to argue that a key rationality principle used in the original version of the Deutsch–Wallace theorem—that when playing a sequential game agents should be indifferent between receiving a reward or playing a subgame with the same value—is not valid in general many-world theories, and thus one should rely on other arguments to calculate the value of sequential games.

Notes

Dominance will only be needed to prove Theorem 1, that says that rational agents in a many-worlds theory assign subjective probabilities to the worlds. All other results follow without it, including Theorems 3 and 4, that say that rational agents in Kent’s universe and Many-Worlds bet according to the appropriate version of the Born rule.

For concreteness, convergence is defined in the metric induced by the norm \(\Vert G\Vert := \Vert {\mathbf {c}}\Vert _3 + \Vert {\mathbf {r}}\Vert _1\).

It is untenable in classical probability because in a given situation there are often several different plausible symmetries that give rise to different probability assignments. This problem does not arise here, as the symmetry at hand will be the one between the coefficients of the worlds, or the amplitudes of the quantum state.

To the best of our knowledge there has been no attempt to calculate the number of worlds in an even remotely realistic model of a measurement.

Note that Stern and Gerlach were not aware that they were measuring spin; rather, they interpreted the experiment as a proof of the Bohr–Sommerfeld spatial quantization hypothesis.

One should take seriously the “hypothetical” here, as theories where the p-norm is preserved are rather pathological. As shown in Ref. [43], the only linear transformations that preserve the p-norm of all vectors for \(p\ne 1,2\) are permutations composed with phases. Here we get around this by only asking the transformation T to preserve the norm of the computational basis.

In this example p must be of the form \(\log _2 n\) for integer \(n\ge 2\), but in general any real \(p\ge 1\) works.

We talk about the roles objective probabilities play instead of the definition of objective probabilities because this is the best we can do. Unlike subjective probabilities, there is no widely accepted definition of objective probability that we could try to satisfy.

One often hears a different story, that in the limit of an infinite number of trials the relative frequency is equal to the objective probability, full stop. This is simply mistaken, but given that the mathematics we present here are identical, those that want to insist on this mistake can do it equally well in many-world theories.

Which does include the counting measure as a particular case, so we are by no means assuming that worlds cannot be counted.

It should be clear that neither the regular nor the reverse Kent’s universe are realistic analogues of Many-Worlds: both of them require an exponential number of worlds, either in the end or in the beginning of the simulation.

Sebens and Carroll claim, however, that an agent that assigns these probabilities can be Dutch-booked [46]: After the first measurement, but before the second, an agent that is ignorant of the outcome will assign probability 1 / 2 to being in each world, and will therefore accept a bet that pays 3€ in the world with outcome 1 and \(-3\)€ in the world with outcome 2. After the second measurement, the agent will assign probability 1 / 3 to being in each world, and will therefore accept a bet that pays \(-4\)€ in the world with a single outcome 1 and 2€ in the worlds with outcomes (2, 1) and (2, 2). If the agent accepts both bets, then in all worlds they will lose 1€.

The problem with this argument is that the agent would not have accepted the first bet if they knew that they would be multiplied in the world with outcome 2: the assignment of probability 1 / 2 was a mistake, that the agent corrected after learning of the second multiplication. Given that they accepted the incorrect bet, however, the agent knows that accepting the second, fair, bet will cause them to be Dutch-booked, so they would reject it. Note, furthermore, that making two bets about the same situation using two different probabilities generically leads to Dutch-booking, even in single-world theories. Consider an agent that believes a coin to be fair, and therefore accepts a bet that pays 3€ if heads, and \(-3\)€ if tails. If the agent changes their mind, and decides instead that the coin has probability 2 / 3 of coming up tails, and accepts a bet that pays \(-4\)€ if heads and 2€ if tails, then the agent will lose 1€ independently of the result of the coin flip.

This raises the question of how a realistic agent in Kent’s universe could ever assign probabilities, since they would depend on the whole tree of future branchings. The agent could simply recognize that it is not possible to determine the objective probabilities, and use only the part of the branching tree that they can foresee to calculate their subjective probabilities. Analogously, a grandparent could decide to divide their inheritance among their children weighted by the number of grandchildren each of them begat, but refuse to speculate about how many children each grandchild will have.
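The betting arithmetic in the Dutch-book discussion of this note can be verified in a few lines. The sketch below encodes the two bets with the payoffs quoted from Sebens and Carroll, computes their expected values under the credences 1 / 2 and 1 / 3 respectively, and adds up the net payoff in each final world. The variable names are our own.

```python
from fractions import Fraction

# First bet is settled on the first outcome; second bet on the final world.
first_bet = {1: 3, 2: -3}
second_bet = {(1,): -4, (2, 1): 2, (2, 2): 2}

half, third = Fraction(1, 2), Fraction(1, 3)

# Each bet looks fair under the credences held when it is offered.
expected_first = sum(half * payoff for payoff in first_bet.values())
expected_second = sum(third * payoff for payoff in second_bet.values())

# Net payoff in every final world if both bets are accepted.
net = {world: first_bet[world[0]] + second_bet[world] for world in second_bet}
```

Both expectations vanish, yet `net` is a loss of 1€ in every world. The same numbers reproduce the single-world coin example, with heads in place of outcome 1 and tails in place of outcome 2.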

References

Everett, H.: “Relative state” formulation of quantum mechanics. Rev. Mod. Phys. 29, 454–462 (1957)

Finkelstein, D.: The logic of quantum physics. Trans. N. Y. Acad. Sci. 25, 621–637 (1963)

Hartle, J.B.: Quantum mechanics of individual systems. Am. J. Phys. 36, 704 (1968)

DeWitt, B.S.: Quantum mechanics and reality. Phys. Today 23, 30 (1970)

Graham, N.: The measurement of relative frequency. In: DeWitt, B., Graham, N. (eds.) The Many-Worlds Interpretation of Quantum Mechanics. Princeton University Press, Princeton (1973)

Farhi, E., Goldstone, J., Gutmann, S.: How probability arises in quantum mechanics. Ann. Phys. 192, 368–382 (1989)

Squires, E.J.: On an alleged “proof” of the quantum probability law. Phys. Lett. A 145, 67–68 (1990)

Caves, C.M., Schack, R.: Properties of the frequency operator do not imply the quantum probability postulate. Ann. Phys. 315, 123–146 (2005)

Deutsch, D.: Quantum theory of probability and decisions. Proc. R. Soc. Lond. A 455, 3129 (1999)

Wallace, D.: Everettian rationality: defending Deutsch’s approach to probability in the Everett interpretation. Stud. Hist. Philos. Sci. B 34, 415–439 (2003)

Wallace, D.: Quantum probability from subjective likelihood: improving on Deutsch’s proof of the probability rule. Stud. Hist. Philos. Sci. B 38, 311–332 (2007)

Wallace, D.: A formal proof of the Born rule from decision-theoretic assumptions. In: Saunders, S., Barrett, J., Kent, A., Wallace, D. (eds.) Many Worlds? Everett, Quantum Theory & Reality. Oxford University Press, Oxford (2010)

Zurek, W.H.: Environment-assisted invariance, entanglement, and probabilities in quantum physics. Phys. Rev. Lett. 90, 120404 (2003)

Vaidman, L.: Probability in the many-worlds interpretation of quantum mechanics. In: Ben-Menahem, Y., Hemmo, M. (eds.) The Probable and the Improbable: Understanding Probability in Physics, Essays in Memory of Itamar Pitowsky. Springer, Berlin (2011)

Carroll, S.M., Sebens, C.T.: Many worlds, the Born rule, and self-locating uncertainty. In: Struppa, D.C., Tollaksen, J.M. (eds.) Quantum Theory: A Two-Time Success Story, p. 157. Springer, Milano (2014)

Kent, A.: One world versus many: the inadequacy of Everettian accounts of evolution, probability, and scientific confirmation. In: Saunders, S., Barrett, J., Kent, A., Wallace, D. (eds.) Many Worlds? Everett, Quantum Theory & Reality. Oxford University Press, Oxford (2010)

Gill, R.D.: On an argument of David Deutsch. In: Schürmann, M., Franz, U. (eds.) Quantum Probability and Infinite Dimensional Analysis: From Foundations to Applications, pp. 277–292. World Scientific, Hackensack (2005)

Baker, D.J.: Measurement outcomes and probability in Everettian quantum mechanics. Stud. Hist. Philos. Sci. B 38, 153–169 (2007)

Albert, D.: Probability in the Everett picture. In: Saunders, S., Barrett, J., Kent, A., Wallace, D. (eds.) Many Worlds? Everett, Quantum Theory & Reality. Oxford University Press, Oxford (2010)

Dawid, R., Thébault, K.: Many worlds: decoherent or incoherent? Synthese 192, 1559–1580 (2015)

Mandolesi, A.L.G.: Analysis of Wallace’s proof of the Born rule in Everettian quantum mechanics: formal aspects. Found. Phys. 48, 751–782 (2018)

Mandolesi, A.L.G.: Analysis of Wallace’s proof of the Born rule in Everettian quantum mechanics II: concepts and axioms. arXiv:1803.08762 [quant-ph]

Wallace, D.: The Emergent Multiverse: Quantum Theory According to the Everett Interpretation. Oxford University Press, Oxford (2012)

Joyce, J.: The Foundations of Causal Decision Theory. Cambridge University Press, Cambridge (2008)

Savage, L.: The Foundations of Statistics. Dover Publications, New York (1972)

van Fraassen, B.: Indifference: the symmetries of probability. In: van Fraassen, B. (ed.) Laws and Symmetry. Oxford University Press, Oxford (1989)

Saunders, S.: Decoherence, relative states, and evolutionary adaptation. Found. Phys. 23, 1553–1585 (1993)

Wallace, D.: Everett and structure. Stud. Hist. Philos. Sci. B 34, 87–105 (2003)

Zurek, W.H.: Decoherence, einselection, and the quantum origins of the classical. Rev. Mod. Phys. 75, 715–775 (2003)

Hartle, J.B.: The quasiclassical realms of this quantum universe. Found. Phys. 41, 982–1006 (2011)

Price, H.: Decisions, decisions, decisions: can Savage salvage Everettian probability? In: Saunders, S., Barrett, J., Kent, A., Wallace, D. (eds.) Many Worlds? Everett, Quantum Theory & Reality. Oxford University Press, Oxford (2010)

Gerlach, W., Stern, O.: Der experimentelle Nachweis der Richtungsquantelung im Magnetfeld. Z. Phys. 9, 349–352 (1922)

Phipps, T.E., Stern, O.: Über die Einstellung der Richtungsquantelung. Z. Phys. 73, 185–191 (1932)

Frisch, R., Segrè, E.: Über die Einstellung der Richtungsquantelung. II. Z. Phys. 80, 610–616 (1933)

Sakurai, J.-J.: Modern Quantum Mechanics. Addison-Wesley, Boston (1993)

Feynman, R., Leighton, R., Sands, M.: The Feynman Lectures on Physics, vol. 3. Addison-Wesley, Boston (1977)

Landau, L., Lifshitz, E.: Quantum Mechanics: Non-relativistic Theory. Pergamon, Oxford (1965)

von Neumann, J.: Mathematische Grundlagen der Quantenmechanik. Springer, Berlin (1932)

Nielsen, M., Chuang, I.: Quantum Computation and Quantum Information. Cambridge University Press, New York (2000)

Peres, A.: Quantum Theory: Concepts and Methods. Springer, Dordrecht (2006)

Schrödinger, E.: An undulatory theory of the mechanics of atoms and molecules. Phys. Rev. 28, 1049–1070 (1926)

Born, M.: Zur Quantenmechanik der Stoßvorgänge. Z. Phys. 37, 863–867 (1926)

Aaronson, S.: Is quantum mechanics an island in theoryspace? arXiv:quant-ph/0401062

Lewis, D.: A subjectivist’s guide to objective chance. In: Jeffrey, R.C. (ed.) Studies in Inductive Logic and Probability, vol. 2. University of California Press, Berkeley (1980)

Saunders, S.: Chance in the Everett interpretation. In: Saunders, S., Barrett, J., Kent, A., Wallace, D. (eds.) Many Worlds? Everett, Quantum Theory & Reality. Oxford University Press, Oxford (2010)

Sebens, C.T., Carroll, S.M.: Self-locating uncertainty and the origin of probability in Everettian quantum mechanics. Br. J. Philos. Sci. 69, 25–74 (2018)

Elga, A.: Defeating Dr. Evil with self-locating belief. Philos. Phenomenol. Res. 69, 383–396 (2004)

Bohnenblust, F.: An axiomatic characterization of \(L_p\)-spaces. Duke Math. J. 6, 627–640 (1940)

Acknowledgements

We thank Koenraad Audenaert, Časlav Brukner, Eric Cavalcanti, Fabio Costa, Daniel Süß, David Gross, Markus Heinrich, Philipp Höhn, Felipe M. Mora, Jacques Pienaar, Simon Saunders, and David Wallace for useful discussions. This work has been supported by the Excellence Initiative of the German Federal and State Governments (Grant ZUK 81).

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Consistent Fine-Graining

For which norms can the fine-graining argument work?

There are multiple ways to fine-grain a game with unequal coefficients into a symmetric game. For example, if \(\mu = \big \Vert \big (\Vert {\mathbf {1}}^{(n)}\Vert ,\Vert {\mathbf {1}}^{(m)}\Vert \big )\big \Vert \), where \({\mathbf {1}}^{(n)}\) is the vector with n ones, one can fine-grain the game

either by first taking it to

and then applying two more fine-grainings to take it to

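or by taking G directly to \(G''\), which will be possible only if \(\mu = \Vert {\mathbf {1}}^{(n+m)}\Vert \). We want all possible ways of fine-graining a game to give the same result, so we demand the norm to be such that for all vectors \({\mathbf {v}}\) and \({\mathbf {w}}\) with disjoint support

$$\begin{aligned} \Vert {\mathbf {v}}+{\mathbf {w}}\Vert = \big \Vert \big (\Vert {\mathbf {v}}\Vert ,\Vert {\mathbf {w}}\Vert \big )\big \Vert . \end{aligned}$$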

We also demand the norm to be permutation-invariant, as it seems unphysical to attribute meaning to the labelling of the vectors, and that \(\Vert (1,1)\Vert \ne 1\), because otherwise \(\Vert {\mathbf {1}}^{(n)}\Vert =1\) for all n, and it is therefore impossible to fine-grain any non-trivial game.

These conditions are enough to show that these norms must be equivalent to p-norms when restricted to vectors of rational numbers, as can be seen by adapting an argument by Bohnenblust [48]. We have then

Theorem 6

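Let \(\Vert \cdot \Vert :{\mathbb {C}}^N \rightarrow {\mathbb {R}}\) be a permutation-invariant norm for \(N\ge 3\) such that \(\Vert (1,1)\Vert \ne 1\) and

$$\begin{aligned} \Vert {\mathbf {v}}+{\mathbf {w}}\Vert = \big \Vert \big (\Vert {\mathbf {v}}\Vert ,\Vert {\mathbf {w}}\Vert \big )\big \Vert \end{aligned}$$

for all vectors \({\mathbf {v}},{\mathbf {w}}\) with disjoint support. Then for any vector \({\mathbf {c}}\) such that the absolute value of its components is rational,

$$\begin{aligned} \Vert {\mathbf {c}}\Vert = \Big (\sum _i |c_i|^p\Big )^{\frac{1}{p}} \end{aligned}$$

for some real number \(p \ge 1\).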

Proof

Let f be such that \(f(1):=\Vert 1\Vert \) and \(f(n+1) := \Vert (1,f(n))\Vert \). First note that \(f(1) = 1\), as \(\Vert 1\Vert = \Vert (1,0)\Vert = \Vert (\Vert (1,0)\Vert ,\Vert (0,0)\Vert )\Vert = \Vert (1,0)\Vert ^2\).

We need to show that f(n) is monotonic, and that \(f(n^k) = f^k(n)\). For the former, consider the identity \(2(f(n),0) = (f(n),1) + (f(n),-1)\) and take the norm of both sides. By the triangle inequality

$$\begin{aligned} 2f(n) \le \Vert (f(n),1)\Vert + \Vert (f(n),-1)\Vert = 2f(n+1). \end{aligned}$$

For the latter, first we show that \(f(n+m) = \Vert (f(m),f(n))\Vert \). Assume that it holds for some m. Then

Since it holds for \(m=1\), by induction it holds for all m. Now assume that \(f(nm) = f(n)f(m)\) holds for some m. Then

and therefore by induction this is true for all m, as it obviously holds for \(m=1\). This implies that \(f(n^k) = f^k(n)\).

Now let \(m,n\ge 2\) be some fixed integers, and h the integer such that for any positive integer k

Applying \(f(\cdot )\) to these numbers, it follows that

and using the fact that \(h \le k \frac{\log n}{\log m}\) and \(h > k \frac{\log n}{\log m}-1\) we have that

Taking the limit of k going to infinity lets us conclude that

which means that this fraction is a constant independent of n (and different than 0 as \(f(2)>1)\). Calling this constant 1 / p, we conclude that

Now for any rational number m / n we have that

so by homogeneity \(\Vert (a,b)\Vert = (|a|^p + |b|^p)^{\frac{1}{p}}\) for any rationals |a| and |b|, and by induction for any vector \({\mathbf {c}}\) such that the absolute values of the components are rational numbers

\(\square \)
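The steps of the proof can be illustrated numerically. The Python sketch below builds f from the recursion \(f(1)=1\), \(f(n+1)=\Vert (1,f(n))\Vert \) using a p-norm with a chosen p, and then evaluates the sandwich bounds obtained from \(m^h \le n^k < m^{h+1}\), namely \(h\log f(m) \le k\log f(n) \le (h+1)\log f(m)\). The function names and the choice \(p=2\), \(m=2\), \(n=3\) are assumptions made for illustration. Because the sketch starts from a p-norm, it only illustrates the argument and does not replace the proof.

```python
import math


def p_norm(vec, p):
    """Return (sum_i |c_i|^p)^(1/p)."""
    return sum(abs(c) ** p for c in vec) ** (1.0 / p)


def f_table(n_max, p):
    """Tabulate f(1) = 1, f(n+1) = ||(1, f(n))||_p for n up to n_max."""
    f = {1: 1.0}
    for n in range(1, n_max):
        f[n + 1] = p_norm([1.0, f[n]], p)
    return f


def sandwich_bounds(m, n, k, f):
    """Bounds on log f(n) / log n implied by m^h <= n^k < m^(h+1)."""
    h = 0
    while m ** (h + 1) <= n ** k:  # exact integer arithmetic
        h += 1
    scale = math.log(f[m]) / (k * math.log(n))
    return h, h * scale, (h + 1) * scale


p = 2.0
f = f_table(50, p)

# Quantity that the proof shows to be independent of n.
ratio = math.log(f[3]) / math.log(3)

# The gap between the bounds shrinks like 1/k.
bounds = {k: sandwich_bounds(2, 3, k, f) for k in (1, 10, 100, 1000)}
widths = {k: upper - lower for k, (_, lower, upper) in bounds.items()}
```

In this sketch `ratio` stays close to \(1/p\) and the interval width decreases as k grows, which is the content of the limit taken in the proof.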

If one furthermore assumes some regularity condition, then the result is valid for any complex vector.

Reducing Sequential Games to Simple Games

Consider the sequential game

$$\begin{aligned} G = \begin{pmatrix} c_1 &{} r_1 \\ c_2 &{} H \end{pmatrix}, \end{aligned}$$

where in the worlds with outcome 1 reward \(r_1\) is given, but in the worlds with outcome 2 the subgame \(H = \begin{pmatrix} d_1 &{} s_1 \\ d_2 &{} s_2 \end{pmatrix}\) is played. We want to reduce it to a simple game in both Kent’s universes and Many-Worlds.

In the regular Kent’s universe \(c_1\) worlds are created with reward \(r_1\), \(c_2d_1\) worlds are created with reward \(s_1\), and \(c_2d_2\) worlds are created with reward \(s_2\), so it seems natural to postulate that G is equivalent to the simple game

$$\begin{aligned} G' = \begin{pmatrix} c_1 &{} r_1 \\ c_2d_1 &{} s_1 \\ c_2d_2 &{} s_2 \end{pmatrix}. \end{aligned}$$

In the reverse Kent’s universe, there are \(M(c_1+c_2)(d_1+d_2)\) worlds in the beginning. After the first branching, \(Mc_1(d_1+d_2)\) worlds are imprinted with outcome 1, and the remaining \(Mc_2(d_1+d_2)\) worlds are split again, with \(Mc_2d_1\) being imprinted with a further outcome 1, and \(Mc_2d_2\) with outcome 2. In the end there are \(Mc_1(d_1+d_2)\) worlds with reward \(r_1\), \(Mc_2d_1\) worlds with reward \(s_1\), and \(Mc_2d_2\) worlds with reward \(s_2\), so it seems natural to postulate that G is equivalent to the simple game

$$\begin{aligned} G'' = \begin{pmatrix} c_1(d_1+d_2) &{} r_1 \\ c_2d_1 &{} s_1 \\ c_2d_2 &{} s_2 \end{pmatrix}. \end{aligned}$$
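The world counting in the two Kent’s universes can be made concrete with a short Python sketch. The function names, the reward labels, and the sample integers below are illustrative assumptions. The coefficients are taken to be positive integers, as the counting argument requires.

```python
from collections import Counter


def regular_kent_worlds(c1, c2, d1, d2):
    """Worlds per reward when each branching creates new worlds."""
    # Outcome 1 creates c1 worlds; each of the c2 worlds with outcome 2
    # branches again into d1 + d2 worlds.
    return Counter({"r1": c1, "s1": c2 * d1, "s2": c2 * d2})


def reverse_kent_worlds(M, c1, c2, d1, d2):
    """Worlds per reward when pre-existing worlds are imprinted with outcomes."""
    total = M * (c1 + c2) * (d1 + d2)
    first_1 = M * c1 * (d1 + d2)          # imprinted with outcome 1
    first_2 = total - first_1             # M * c2 * (d1 + d2), split again
    second_1 = first_2 * d1 // (d1 + d2)  # M * c2 * d1
    second_2 = first_2 - second_1         # M * c2 * d2
    return Counter({"r1": first_1, "s1": second_1, "s2": second_2})


# Hypothetical sample values.
regular = regular_kent_worlds(c1=1, c2=3, d1=2, d2=5)
reverse = reverse_kent_worlds(M=2, c1=1, c2=3, d1=2, d2=5)
```

With these sample values the regular universe ends with 1, 6 and 15 worlds for the three rewards, while the reverse universe ends with 14, 12 and 30, so the proportion of worlds with reward \(r_1\) differs between the two.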

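Note that in the reverse Kent’s universe Substitution is satisfied: the value of the subgame H is

$$\begin{aligned} V(H) = \frac{d_1s_1 + d_2s_2}{d_1+d_2} \end{aligned}$$

and the value of G there is

$$\begin{aligned} V(G) = \frac{c_1(d_1+d_2)r_1 + c_2d_1s_1 + c_2d_2s_2}{(c_1+c_2)(d_1+d_2)} = \frac{c_1r_1 + c_2V(H)}{c_1+c_2}, \end{aligned}$$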

which is equal to the value of \(\begin{pmatrix} c_1 &{} r_1 \\ c_2 &{} V(H) \end{pmatrix}\), as required. We shall not prove the general case, as that is quite straightforward.
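The Substitution check can be reproduced with exact arithmetic. The Python sketch below assumes that the value of a simple game in a Kent’s universe is the average of the rewards weighted by the number of worlds (the 1-norm), and it uses hypothetical integer coefficients and rewards. The function `value` and the variable names are introduced here for illustration only.

```python
from fractions import Fraction


def value(game):
    """World-count-weighted average reward of [(coefficient, reward), ...]."""
    total = sum(c for c, _ in game)
    return sum(Fraction(c) * r for c, r in game) / total


# Hypothetical sample values.
c1, c2, d1, d2 = 1, 3, 2, 5
r1, s1, s2 = 10, 0, 7

v_subgame = value([(d1, s1), (d2, s2)])

# Game obtained by substituting the subgame with its value.
v_substituted = value([(c1, r1), (c2, v_subgame)])

# Simple game postulated for the reverse Kent's universe.
v_reverse = value([(c1 * (d1 + d2), r1), (c2 * d1, s1), (c2 * d2, s2)])

# Simple game postulated for the regular Kent's universe, for comparison.
v_regular = value([(c1, r1), (c2 * d1, s1), (c2 * d2, s2)])
```

For these numbers `v_reverse` and `v_substituted` coincide, while `v_regular` takes a different value, which is consistent with Substitution being singled out as a property of the reverse universe.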

In Many-Worlds, the game G is instantiated by making a measurement on the state \(| \psi \rangle = c_1| 1 \rangle + c_2| 2 \rangle ,\) giving reward \(r_1\) in the worlds with outcome 1, and in the worlds with outcome 2 doing a measurement on the state \(| \varphi \rangle = d_1| 1 \rangle + d_2| 2 \rangle ,\) finally giving rewards \(s_1\) and \(s_2\) in the worlds with the second outcomes 1 and 2. These measurements take the initial state \(| \psi \rangle | M_? \rangle | \varphi \rangle | D_? \rangle \) to the final state

An equivalent way to play this game is to make a joint measurement on the state

but in the worlds where the measurement on \(| \psi \rangle \) resulted in 1 apply the label ? to both outcomes of the measurement on \(| \varphi \rangle \), leading to the final state

where \(| D_?' \rangle \) and \(| D_?'' \rangle \) are measurement results physically distinct from \(| D_? \rangle \), but with the same label.

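If one does not, however, coarse-grain the results (1, 1) and (1, 2) together, then this measurement procedure can be regarded as playing the simple game

$$\begin{aligned} G''' = \begin{pmatrix} c_1d_1 &{} r_1 \\ c_1d_2 &{} r_1 \\ c_2d_1 &{} s_1 \\ c_2d_2 &{} s_2 \end{pmatrix} \end{aligned}$$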

instead. We postulate therefore that a rational agent in Many-Worlds should regard G and \(G'''\) as equivalent, or more formally that:

Reduction

The sequential game

$$\begin{aligned} G = \begin{pmatrix} \alpha _1 &{}\quad r_1 \\ \vdots &{}\quad \vdots \\ \alpha _n &{}\quad \beta _1 &{}\quad s_1 \\ &{}\quad \vdots &{}\quad \vdots \\ &{}\quad \beta _m &{}\quad s_m \end{pmatrix} \end{aligned}$$(97)

where the subgame \((\varvec{\beta },{\mathbf {s}})\) is played in the worlds with outcome \(n\), has the same value as the simple game

$$\begin{aligned} G' = \begin{pmatrix} \alpha _1\varvec{\beta } &{}\quad r_1 \\ \vdots &{}\quad \vdots \\ \alpha _{n-1}\varvec{\beta } &{}\quad r_{n-1} \\ \alpha _n\beta _1 &{}\quad s_1 \\ \vdots &{}\quad \vdots \\ \alpha _n\beta _{m-1} &{}\quad s_{m-1} \\ \alpha _n\beta _m &{}\quad s_m \end{pmatrix}. \end{aligned}$$(98)

This Reduction postulate suffices to prove Substitution as a theorem, as the value of the subgame \((\varvec{\beta },{\mathbf {s}})\) is

and the value of G is

as required.
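The compatibility of Reduction with Substitution can be checked numerically. The Python sketch below assumes that the value of a simple game in Many-Worlds is the average of the rewards weighted by the squared modulus of the amplitudes (the 2-norm), and it expands each row \(\alpha _i\varvec{\beta }\) of the reduced game into the fine-grained amplitudes \(\alpha _i\beta _j\) with the same reward. Both choices, as well as the function names and sample amplitudes, are assumptions made for this illustration. It is a consistency check under those assumptions, not the derivation given in the text.

```python
import math


def born_value(game):
    """2-norm-weighted average reward of [(amplitude, reward), ...]."""
    weights = [abs(a) ** 2 for a, _ in game]
    rewards = [r for _, r in game]
    return sum(w * r for w, r in zip(weights, rewards)) / sum(weights)


def reduce_sequential(alphas, rewards, betas, sub_rewards):
    """Build the simple game of Reduction; the subgame follows the last outcome."""
    game = []
    for a, r in zip(alphas[:-1], rewards):
        # Rows alpha_i * beta, all carrying the reward r_i.
        game.extend((a * b, r) for b in betas)
    # Rows alpha_n * beta_j with the subgame rewards s_j.
    game.extend((alphas[-1] * b, s) for b, s in zip(betas, sub_rewards))
    return game


# Hypothetical sample amplitudes and rewards.
alphas = [0.6, 0.8j]
rewards = [10.0]
betas = [1, 1j, 2]
sub_rewards = [0.0, 3.0, 6.0]

v_subgame = born_value(list(zip(betas, sub_rewards)))
v_substituted = born_value([(alphas[0], rewards[0]), (alphas[1], v_subgame)])
v_reduced = born_value(reduce_sequential(alphas, rewards, betas, sub_rewards))
```

Under these assumptions `v_reduced` agrees with `v_substituted` up to floating-point error, because the common factor \(\Vert \varvec{\beta }\Vert ^2\) cancels between numerator and denominator.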

Cite this article

Araújo, M. Probability in Two Deterministic Universes. Found Phys 49, 202–231 (2019). https://doi.org/10.1007/s10701-019-00241-7

DOI: https://doi.org/10.1007/s10701-019-00241-7