Abstract

The emergence of chatbots and language models, such as ChatGPT, has the potential to aid university students’ learning experiences. However, despite its potential, ChatGPT is relatively new. There are limited studies that have investigated its usage readiness, and perceived usefulness among students for academic purposes. This study investigated university students’ academic help-seeking behaviour, with a particular focus on their readiness, and perceived usefulness in using ChatGPT for academic purposes. The study employed a sequential explanatory mixed-method research design. Data were gathered from a total of 373 students from a public university in Malaysia. SPSS software version 27 was used to determine the reliability of the research instrument, and descriptive statistics were used to assess the students’ readiness, and perceived usefulness of ChatGPT for academic purposes. Responses to the open-ended questions were analysed using a four-step approach with ATLAS.ti 22. Research data from both the quantitative and qualitative methods were integrated. Findings indicated that students have the proficiency, willingness, and the requisite technological infrastructure to use ChatGPT, with a large majority attesting to its ability to augment their learning experience. The findings also showed students’ positive perception of ChatGPT’s usefulness in facilitating task and assignment completions, and its resourcefulness in locating learning materials. The results of this study provide practical implications for university policies, and instructor adoption practices on the utilisation of ChatGPT, and other AI technologies, in academic settings.

1 Introduction

Artificial intelligence (AI) is transforming the way university students learn and understand new things (Ifenthaler & Schumacher, 2023; Rawas, 2023). However, unlike face-to-face lectures where instructors can support university students in regulating their own learning, the use of chatbots, powered by artificial intelligence, provides learners with high levels of autonomy, and low levels of instructor presence (Jin et al., 2023). Students can now tailor and adapt their learning to their individual needs, goals, and abilities. This decreases opportunities for student-instructor face-to-face interactions (Adams et al., 2020). Consequently, a type of interaction that is potentially under threat is help-seeking. Academic help-seeking behaviour is an important self-regulated learning strategy (Won et al., 2021), which is critical to a student’s academic success (Adams et al., 2021; Yan, 2020).

University students seek help from their instructors and friends for a variety of purposes, such as obtaining course advice and information, navigating course content and resources, verifying their understanding of the subject matter, or discussing personal matters (Broadbent & Lodge, 2021). In the usage of chatbots, and language models like ChatGPT, university students commonly seek help to answer questions, provide explanations, and create study materials (Foroughi et al., 2023). ChatGPT can also enhance students’ educational experience (Kuhail et al., 2023) by simulating conversations, and providing immediate support and feedback to students (Pillai et al., 2023). Its other capabilities include providing students with personalised learning experiences, and automating administrative tasks, contributing to enhanced student engagement (Foroughi et al., 2023; Mijwil & Aljanabi, 2023). However, there are concerns regarding the accuracy of the information and advice given. Some believe that relying solely on it could disrupt genuine learning experiences, particularly in the context of self-regulated learning (Wu et al., 2023). Ray (2023) highlighted this potential drawback, emphasizing the need for users to cross-reference and critically evaluate the information provided.

This raises the question of how university students’ help-seeking behaviours can be supported in using ChatGPT. This is a timely issue as the number of students using ChatGPT is on the rise (“Students Turn to ChatGPT for Learning Support,” 2023). While past studies have revealed the benefits of using AI-based chatbots for learning (e.g., Al-Sharafi et al., 2022; Hwang & Chang, 2021), a growing number of studies have also highlighted that many students grapple with effective self-regulation when using them (Gupta et al., 2019; Sáiz-Manzanares et al., 2023; Tsivitanidou & Ioannou, 2021). University students’ help-seeking behaviour in the usage of AI-based chatbots can be influenced by factors such as readiness (Hammad & Bahja, 2023; Uren & Edwards, 2023), and perceived usefulness (Kasneci et al., 2023). Thus, to support help-seeking behaviours in the long term, it is necessary to investigate students’ readiness, and perceived usefulness of ChatGPT.

With the ability to adapt and improvise in the long term, ChatGPT could revolutionize education by potentially enhancing its effectiveness and accessibility for students worldwide (Mijwil et al., 2023; Rawas, 2023). However, despite its potential, ChatGPT is relatively new. There are limited studies that have investigated its usage readiness, and perceived usefulness among students for academic purposes. In the Malaysian context, ChatGPT serves as a potential tool for promoting academic assistance among students. Due to an intrinsic shyness observed in Malaysian students when seeking help, as highlighted by Low et al. (2016), they frequently opt for intermediary platforms to ask questions. ChatGPT could provide a non-judgmental and accessible medium, making it easier for them to seek the guidance they require. However, its efficacy and impact in this setting warrant further investigation. Therefore, the current study aimed to investigate the use of ChatGPT for academic help-seeking, with a focus on the perceptions of students in higher education. In particular, it investigated the university students’ readiness to use ChatGPT, and its perceived usefulness for academic purposes.

The emergence of chatbots and language models, such as ChatGPT, which can act as a digital tutor (Olga et al., 2023), has the potential to aid university students’ learning experiences, enabling them to make informed decisions, and draw reliable conclusions in their studies (Foroughi et al., 2023). When a given technology provides accurate information, students are more likely to trust and adopt it for learning (Iranmanesh et al., 2022). This opens new possibilities to support help-seeking behaviours among university students. The results of this study provide practical implications for university policies, and instructor practices on the utilisation of ChatGPT, and other AI technologies in academic settings.

2 Literature review

2.1 Academic help-seeking behaviour

Academic help-seeking behaviour among university students is a complex phenomenon influenced by a multitude of factors (Fan & Lin, 2023; Kassarnig et al., 2018; Payne et al., 2021). In general, help-seeking behaviour refers to the actions or steps that individuals take to obtain assistance, information, or advice when facing a problem or challenge (Li et al., 2023). In the academic context, it refers to students seeking assistance or clarification when facing academic challenges or uncertainties (Payne et al., 2021). In this study, academic help-seeking behaviour is aligned to principles of self-regulated learning as it represents the active strategies students employ when they encounter challenges. By seeking help, students are exercising metacognitive awareness, recognizing their limitations, and taking steps to address them. This aligns with the principles of self-regulated learning, which emphasize goal setting, self-monitoring, and strategic adjustment (Carter et al., 2020; Puustinen & Pulkkinen, 2001). In essence, academic help-seeking fosters self-regulation, promoting a proactive and resilient approach to learning, which could potentially improve their academic performance.

A study by Kassarnig et al. (2018) found that the most informative indicators of academic performance are based on social ties, suggesting the presence of a strong peer effect among university students. This finding indicates that students who seek help from their peers tend to perform better academically. In addition to social ties, behavioural patterns also play a significant role in academic performance. Yao et al. (2019) collected longitudinal behavioural data from students’ smart cards, and proposed three major types of discriminative behavioural factors: diligence, orderliness, and sleep patterns. The empirical analysis demonstrated that these behavioural factors are strongly correlated with academic performance. Furthermore, the study found a significant correlation between each student’s academic performance, and that of their behaviourally similar peers. These studies show that peer influence is a strong indicator of university students’ help-seeking behaviour. However, this reliance on peers could potentially be problematic when peers are absent, or lack expertise.

Hence, the role of online learning platforms and technological tools in shaping academic help-seeking behaviour among university students should not be overlooked (Amador & Amador, 2014; Chyr et al., 2017; Mandalapu et al., 2021). Mandalapu et al. (2021) explored a student-centric analytical framework for Learning Management System (LMS) activity data. Their analysis showed that student login volume, compared to other login behaviour indicators, is both strongly correlated, and causally linked to student academic performance, especially among those with low academic performance. This suggests that students who frequently engage with online learning resources are more likely to seek academic help. The context in which students seek help can also influence their behaviour. For instance, Hassan et al. (2019) found that students’ perceptions of course outcomes, and instructor ratings on online academic forums can influence their help-seeking behaviour.

Owing to the adoption of artificial intelligence (AI) in education (Adams & Chuah, 2022), virtual tutors, widely known as chatbots, are also integrated into various e-learning platforms and applications with the goal of providing real-time help to students (Chen et al., 2023; Chuah & Kabilan, 2021). Artificially intelligent chatbots are seen as a feasible solution in enhancing the help-seeking experience among students. These chatbots can provide immediate responses to students’ queries, making them a valuable tool. Windiatmoko et al. (2022) developed a chatbot using deep learning models to provide students with various information, such as information on the curriculum, new student admissions, lecture course schedules, and student grades. Similarly, Broadbent and Lodge (2021) explored students’ perceptions of the use of live chat technology for online academic help-seeking within higher education. The study specifically focused on comparing the perspectives of online and blended learners. Their findings suggest that live chats can be effective tools in supporting self-regulated help-seeking behaviours in both online and blended learning environments. Another increasingly popular chatbot that has penetrated the academic setting is ChatGPT.

2.2 ChatGPT in education

ChatGPT, developed by OpenAI, is a powerful chatbot running on a large language model, and it has found a significant presence in academic settings (Adiguzel et al., 2023; Kasneci et al., 2023; Lund & Wang, 2023). It leverages machine learning to understand and generate human-like text, providing users with insightful responses to a wide range of queries (OpenAI, 2022). Released in November 2022, it has rapidly gained the attention of administrators, instructors, and students, particularly due to its capability to perform various academic-related tasks, such as writing essays, or solving complex problems across different subjects in seconds (Lo, 2023; Sok & Heng, 2023). The body of scholarly research exploring the effects of ChatGPT on the broader educational landscape is also steadily expanding. These studies critically evaluate not only students’ readiness to incorporate this tool into their learning strategies, but also its practical benefits for academic achievement, in addition to its academic integrity and ethical implications.

Kasneci et al. (2023), for example, explored the usefulness of ChatGPT in education. They stipulated that it has potential applications in creating personalised learning experiences for students that are catered to their individual needs and preferences. Dwivedi et al. (2023) further highlighted the importance of ChatGPT as an additional tool to assist academics in their teaching and learning, and research obligations. However, they also outlined the challenges that need to be addressed in the deployment of such AI tools. One major concern revolves around biases that may be inherent in the AI system itself.

While ChatGPT has been studied extensively in the context of various educational tools, there is limited research specifically on its potential use and impact on help-seeking behaviour among students. One study that is related to this scope is by Zhang (2023), who conducted a lab experiment with students answering multiple-choice questions across 25 academic subjects with the assistance of ChatGPT. The study found that the students were more likely to give weight to advice from the chatbot if they were unfamiliar with the topic, had used ChatGPT in the past, or had previously received accurate advice. In addition, Olga et al. (2023) highlighted that ChatGPT can provide automated review and feedback for assignments, act as a digital tutor to answer questions, and generate examples for learning activities. On the other hand, they also noted that ChatGPT could ironically misguide students due to the system’s limitations and biases. This drawback may reduce the reliability of ChatGPT as an academic help-seeking medium among students.

To date, studies have primarily focused on the overall performance outcomes associated with ChatGPT’s use in education, overlooking the potential influence of these technologies on students’ approaches to seeking academic help. Given the 24/7 availability and accessibility of AI platforms like ChatGPT, which may alter traditional dynamics of help seeking, it is imperative for future research to explore this domain. Such investigations would provide crucial insights into the ways in which AI tools could encourage or deter help-seeking behaviour among students, and how these behaviours might subsequently impact their learning outcomes.

2.3 Research context

Since 1963, Malaysia’s higher education system has emerged and evolved into a binary structure. As acknowledged in the Malaysia Education Blueprint (Higher Education) (MOE, 2015), its higher education is governed by the Federal government through the Ministry of Higher Education (MOHE), with the exception of central agencies such as the Public Service Department and the Treasury, whose jurisdiction includes budgetary, financial, and human resource matters (Sirat & Wan, 2022). This limits the institutional autonomy of the public universities. More importantly, several legislations were enacted between 1971 and 2007 to govern higher education institutions. The Universities and University Colleges Act 1971 (Act 30) is the legislation that governs relations between the state and public universities. Conversely, the Private Higher Educational Institution Act 1996 (Act 555) provides the legal standing to establish private higher education institutions and empowers the Minister of Higher Education to regulate these private institutions. The higher education system in Malaysia comprises the public and private sectors with a minimal interface between them. At present, there are 20 public universities and 426 private universities, university colleges, and colleges (Sirat & Wan, 2022).

Within the Malaysian higher education landscape, there is a dynamic interplay of challenges and opportunities. These include the need to address the quality of education (Morshidi et al., 2020), provide equal access to diverse student populations, and enhance the international competitiveness of Malaysian universities (MOE, 2015). Moreover, the advent of Artificial Intelligence (AI) has introduced both promises and complexities. AI’s potential to aid university students’ learning experiences is undeniable. However, it also brings forth a spectrum of challenges and ethical considerations. The integration of AI, such as ChatGPT, into educational settings necessitates careful deliberation on issues related to self-regulated learning, data privacy, bias, and the impact on traditional pedagogical methods.

3 Methods

This study employed a sequential explanatory mixed-method research design to investigate university students’ readiness, and perceived usefulness of using ChatGPT for academic purposes. The use of mixed methods allowed for a comprehensive exploration of the research objectives by integrating quantitative and qualitative data collection and analysis techniques, where the intent was to use the qualitative methods to help explain the quantitative results in more depth (Creswell, 2015; Tashakkori et al., 2020). We fully acknowledge that qualitative research is primarily designed to provide a rich understanding of complex phenomena from the participants’ perspectives, focusing on context and nuances. Our rationale for employing open-ended questions in a questionnaire format lies in our intent to capture both quantitative and qualitative data concurrently, allowing us to complement quantitative insights with qualitative narratives (Creswell & Plano Clark, 2017). This mixed-methods approach was chosen to offer a more comprehensive understanding of the phenomenon under investigation (Tashakkori et al., 2020).

3.1 Sample

A non-probability sampling technique was chosen due to its ability to focus on particular characteristics of a population that are of interest (Etikan et al., 2016). In this study, a total of 373 students of different fields of study from a public university in Malaysia participated. Key demographic information of the participants is illustrated in Table 1.

As indicated in Table 1, a higher percentage of the participants (54.2%) were female, which is indicative of the gender distribution in higher education institutions in Malaysia (Norman & Kaur, 2022). Most of them (85.8%) were within the age group of 20 to 29 years old. Furthermore, a large majority of them were in the first year of their university education (95%), with most of them studying via online mode (98%), which is particularly relevant for this study as this allows for insights into their help-seeking behaviours in the usage of ChatGPT. The participants’ frequency of using ChatGPT is shown in Table 2.

Only 12.3% of the participants noted that they used ChatGPT every day while most of them used it occasionally during the week. The different levels of usage provided an opportunity for this study to gauge students’ perceived usefulness of ChatGPT in assisting in their academic tasks.

3.2 Instrumentation

A cross-sectional quantitative survey method was employed in this study. A questionnaire was developed to gauge university students’ readiness, and the perceived usefulness of using ChatGPT for academic purposes. It contained four main sections: Section A contained five basic demographic questions (i.e., gender, age, mode of learning, year of study, and frequency of using ChatGPT); Section B was on the students’ readiness to use ChatGPT; Section C was on their perceived usefulness of ChatGPT while Section D covered four open-ended questions to gather the participants’ views and suggestions on the use of ChatGPT for academic purposes. For Sections B and C, the instrument consisted of 20 items on a four-point Likert scale, with 1 being strongly disagree, and 4 being strongly agree.

3.3 Data collection

Respondents were administered an online questionnaire in April 2023. With regard to ethical considerations, students’ consent to take part in this study was sought before they filled in the questionnaire. On the front cover of the questionnaire, it was stated that the respondents were given the choice to either take part in the survey, or otherwise. Participation was strictly voluntary, and anonymous. Thus, by completing the questionnaire, the respondents had given their consent.

3.4 Data analysis

The quantitative data from the questionnaires were imported into IBM’s Statistical Product and Service Solutions (SPSS) software version 27, and screened. A valid data set of 373 surveys was used for analysis. Prior to further analysis, the validity and reliability of the instrument were determined. The Cronbach’s alpha coefficient value (0.92) suggested that the instrument had very good internal consistency, and was considered highly reliable (Bond & Fox, 2015).
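For readers who wish to see how such a coefficient is obtained, the following is a minimal sketch of the standard Cronbach’s alpha formula, alpha = (k / (k − 1)) × (1 − sum of item variances / variance of total scores), where k is the number of items. It is not the procedure used in this study, which relied on SPSS; the function name `cronbach_alpha` and the small `demo` data set are hypothetical and serve only as an illustration.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Return Cronbach's alpha for a list of respondent rows.

    Each row holds one respondent's scores on the same k items.
    Sample variances (n - 1 denominator) are used throughout.
    """
    k = len(responses[0])
    if k < 2:
        raise ValueError("Cronbach's alpha needs at least two items")
    # Variance of each item (column) across respondents.
    item_vars = [variance([row[j] for row in responses]) for j in range(k)]
    # Variance of each respondent's total score.
    total_var = variance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# Hypothetical four-point Likert responses (5 respondents, 4 items);
# these values are NOT data from the study.
demo = [
    [3, 3, 4, 3],
    [2, 2, 3, 2],
    [4, 4, 4, 3],
    [1, 2, 2, 1],
    [3, 4, 3, 3],
]
alpha = cronbach_alpha(demo)
print(f"alpha = {alpha:.2f}")
```

Values closer to 1 indicate that the items vary together, which is the sense in which a coefficient such as the 0.92 reported above is read as high internal consistency.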

Descriptive statistics (mean, frequency count, and standard deviation) were used to show the participants’ overall level of agreement to each item. In the context of a four-point Likert scale, where 4 is the highest level of agreement, a mean score of 3.0 would suggest a fairly high level of agreement on average among respondents. This score is above the scale’s midpoint of 2.5, indicating that the majority of responses lean towards agreement on the statement (Sullivan & Artino, 2013).
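As an illustration of these descriptive statistics, the sketch below computes the mean, standard deviation, and frequency counts for a single four-point Likert item and compares the mean with the scale midpoint of 2.5. The function name `describe_item` and the scores in `demo_scores` are hypothetical and are not drawn from the study’s data, which were analysed in SPSS.

```python
from collections import Counter
from statistics import mean, stdev

SCALE = (1, 2, 3, 4)  # 1 = strongly disagree ... 4 = strongly agree
MIDPOINT = (min(SCALE) + max(SCALE)) / 2  # 2.5 on a four-point scale


def describe_item(scores):
    """Summarise one Likert item: mean, sample SD, and frequency counts."""
    counts = Counter(scores)
    item_mean = mean(scores)
    return {
        "mean": item_mean,
        "sd": stdev(scores),
        # Report every scale point, including those nobody selected.
        "counts": {point: counts.get(point, 0) for point in SCALE},
        "above_midpoint": item_mean > MIDPOINT,
    }


# Hypothetical responses from ten students to one item (not study data).
demo_scores = [4, 3, 3, 2, 4, 3, 1, 3, 4, 3]
summary = describe_item(demo_scores)
print(summary)
```

Reporting the frequency counts alongside the mean lets a reader check directly how many responses fall on the agree side of the scale, rather than inferring this from the mean alone.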

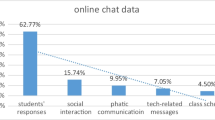

Recognizing that responses to the open-ended items varied in length, from just a few words to more detailed descriptions and examples, we employed a deductive thematic analysis, using predefined themes based on our research questions to guide our analysis (Miles et al., 2019). The open-ended questions in our survey were designated as mandatory as we aimed to maximize the completeness of the dataset. This approach helped ensure that we received responses from all participants, providing a more comprehensive dataset for analysis (Dillman et al., 2014).

Responses to the open-ended questions were analysed using a four-step approach. First, all data sets were read to ensure familiarity. Next, themes and propositions were generated and coded using the constant comparative analysis technique (Lester et al., 2020) in a qualitative data analysis tool, ATLAS.ti 22. Third, each code label was examined. Codes that were similar or overlapping were merged. Finally, specific quotes from the open-ended questions were highlighted to support the quantitative findings, adding more depth and richness to the study. The combination of both types of data from the quantitative and qualitative methods provides the robust analysis required for a mixed-method design (Tashakkori et al., 2020). Students’ responses were coded Student 1 (S1) to Student 373 (S373) to ensure respondent confidentiality.

3.5 Findings

3.5.1 Students’ readiness to use ChatGPT for academic purposes

This section on quantitative findings covers the responses from students who have used ChatGPT. Table 3 shows their level of agreement on items concerning their readiness to use it.

The reported mean scores substantiated an overall positive predisposition towards employing ChatGPT among respondents albeit with some degree of variability. The respondents expressed a strong conviction in their capacity to master the use of ChatGPT, as evidenced by an impressive mean score of 3.09 out of a possible 4. Notably, those respondents with prior exposure indicated a considerable understanding of its capabilities, with a mean score of 3.07. There is a further substantial degree of assurance in their current skills to manipulate ChatGPT as underscored by the mean score of 2.98.

The data also unearthed a more-than-average assurance among respondents in the potential of ChatGPT to augment their learning experience, registering a high mean score of 3.33. Equally promising is the discovery that respondents have the requisite technological infrastructure to access ChatGPT, with adequate internet connection and smart devices, as denoted by a score of 3.21. However, juxtaposed against this positivity is a significant revelation indicating a perception among respondents that the university falls short in providing adequate guidelines for the utilisation of AI tools such as ChatGPT. This sentiment registered the lowest mean score of 2.52, highlighting a visible gap in institutional support and guidance that warrants immediate attention.

In the context of integrating and accrediting ChatGPT-generated content in academic tasks or assignments, scores hovered just below the 3-mark (2.95 and 2.93 respectively). This reflects a moderate level of comfort and propensity, but not an overwhelming enthusiasm. It might be inferred that lingering ethical or academic integrity considerations pertaining to the use of AI-generated content exist. Intriguingly, the statement regarding the necessity for caution in divulging sensitive information when deploying ChatGPT earned the highest mean score of 3.35. This implies a heightened level of vigilance and discernment pertaining to data privacy and security in the deployment of AI tools among the respondent population.

Excerpts from the open-ended questions support the quantitative data findings. Students demonstrated a readiness to integrate ChatGPT into their daily tasks, with their feedback highlighting the tool’s user-friendliness and resourcefulness. Their sentiments collectively indicated a high level of preparedness to incorporate ChatGPT into their academic routine.

A recurring theme in the students’ responses was their recognition of ChatGPT’s ability to provide simple and comprehensible explanations. This perception often contrasted with their experience in traditional classroom settings. A significant number of students conveyed that ChatGPT’s straightforward answers surpassed their classroom encounters with lecturers, and provided a clearer understanding of course material:

“[ChatGPT] explains the course contents in a much simpler, and more understandable way, better than my lecturer. When I am stuck on a coding problem, or when I have general questions, because it can give accurate answers straight away, it is better than Google. I like how it uses simple words when explaining things as if a human is answering my questions.” (S103).

“Some of the lecture notes did not have enough examples. So, I use ChatGPT to help me [on] how to use some of the formulas.” (S126).

The students’ sentiments also centred on the efficiency and accuracy of ChatGPT’s responses. They valued its ability to promptly provide precise answers, streamlining their search for information:

“Because ChatGPT only compresses [one] answer for each question asked. It’s different from Google [that] displays many answers, and [you] must choose [from] its own description. [ChatGPT is] able to explain a question in a complete and easy-to-understand manner, [and] display a complete answer. I don’t have to spend a lot of time choosing the best answer.” (S111).

“Google will give me different answers, and I’m not sure which is the most accurate one. [But] I will ask ChatGPT which is the correct one. Once you key in your question, you only [need to] wait around 5 seconds to get your answer without [having to view] the websites one by one.” (S368).

Convenience emerged as a prominent attribute of ChatGPT for students. They highlighted the tool’s accessibility especially to academic concepts and theories whenever and wherever needed. This was coupled with an appreciation for the tool’s ability to simplify complex concepts, aiding in retention and understanding:

“It helps me to better understand and visualise some concepts in subjects as it could explain in simple terms, and relate to other items, making the concept easier to be remembered. I like ChatGPT because [I can] access it anywhere and anytime I want.” (S325).

“[With] theory explanation, it could explain it using clear and classic, relevant examples.” (S297).

As a whole, student feedback indicated their enthusiastic willingness to embrace ChatGPT as a valuable tool for their academic endeavours. They cited its user-friendly interface, resourcefulness, and capacity to provide clear and accurate explanations as driving factors behind their readiness to integrate it into their learning routine. The students’ recognition of ChatGPT’s efficiency in delivering on-point answers, paired with its availability, and ability to simplify complex concepts, further solidified their positive perception of its implications for their academic journey.

3.5.2 Students’ perceived usefulness of ChatGPT for academic purposes

This section covers the quantitative findings from students on the perceived usefulness of the usage of ChatGPT for academic purposes. Table 4 shows their level of agreement on items concerning its usefulness.

As shown in Table 4, the findings demonstrated a positive perception of ChatGPT’s impact on the learning experience, and task completion. The mean scores, ranging from 2.99 to 3.30 on a scale of 4, indicated a predominantly positive evaluation from the participants. Particularly noteworthy was the highest mean score of 3.30, signifying the respondents’ firm belief that ChatGPT plays a significant role in facilitating task and assignment completion. Additionally, a mean score of 3.27 reflected the respondents’ conviction that ChatGPT has the potential to enhance learning experiences. Equally notable was its ability to expedite the process of locating resources essential for task accomplishment (mean = 3.27).

The perceived capacity of ChatGPT to promote a deeper comprehension of complex concepts, and alleviate the stress associated with completing tasks or assignments was also met with favour, as indicated by the mean scores of 3.26 and 3.21 respectively. The lowest mean score of 2.99, although still relatively high, was assigned to two specific perceptions: that ChatGPT will enhance critical thinking and problem-solving skills, and that it complements the participants’ own effort and creativity. This finding implies that, while participants recognise the value of ChatGPT, they concurrently acknowledge the vital role their own input and creativity play in the learning process.

Consequently, these results underscore the status of ChatGPT as a valuable tool for supporting learning, and task completion while also emphasising the potential for further refinement in areas such as enhancing critical thinking skills, and complementing students’ creativity. To explain the quantitative findings, the perceived usefulness of ChatGPT was explored through the open-ended questions. In general, about 60% of the students (n = 224) acknowledged the usefulness of ChatGPT in their academic pursuits. They reported employing the tool to assist with assignments, and task completion, emphasising its ability to help them elaborate on points effectively. One student (S76) expressed,

[It helps] me with the assignment… It can help me to elaborate on my point without [my] wasting time thinking of them.

Students revealed that ChatGPT serves as a springboard for initiating assignments, and guiding their research direction though they remain cautious about the accuracy and reliability of the information obtained. As noted by another student (S151),

[It is a] springboard to start my assignments. I use it to give me an idea of where to start my research before I write an essay. I am aware that its facts might not be accurate or reliable, [and] that’s why I fact-check all ChatGPT’s info.

Moreover, students identified ChatGPT’s role in aiding in their assessments, and enhancing their knowledge base. A student (S346) commented,

ChatGPT helps me a lot with my assessments whenever I felt stuck, or I don’t have any [ideas]. It’s easy to use, convenient, and improves my ability, and [helps me] gain new knowledge.

Several students underlined ChatGPT’s resource-finding capabilities, affirming its efficiency in complementing lecture content. One student (S135) clarified,

“Normally stuff provided in lectures and notes isn’t enough to tackle [the] weekly questions in tutorials and labs, so I opt for ChatGPT to find such information. By all means, I do not use ChatGPT to cheat, or get answers quickly; it’s more [about knowing] where to find certain information [more easily]. It makes my studying more efficient; instead of scouring through hundreds of slides, books, and notes, I could just give a prompt to find what I’m looking for. It’s very time efficient, and cost efficient.”

Furthermore, students highlighted ChatGPT’s ability to simplify complex concepts, aiding comprehension. Students acknowledged its contribution to overcoming challenges such as “brain fog” in science-related fields (S72), and its proficiency in providing detailed explanations (S164), and solving mathematical and scientific problems swiftly (S39). More importantly, ChatGPT was also recognised for its impact on students’ productivity, and time management. Respondents consistently attested to ChatGPT’s assistance that led to stress reduction, and timely completion of assignments:

“ChatGPT reduces my stress about doing unnecessarily hard assignments that cost me too much time.” (S23).

“Using Chat GPT helps me find the answers to my questions [easily]. So, this situation saves [me] time [on going] to the library, or [doing] research on Google.” (S42).

“ChatGPT can understand my question.” (S77).

However, concerns were raised regarding overreliance on AI tools like ChatGPT. Students noted the potential detrimental effects on critical thinking, and problem-solving skills:

“Relying solely on AI tools for academic work may result in a lack of originality in the work. It is important for students to develop their own critical thinking skills, and perspectives.” (S79).

“Students [who] rely on ChatGPT will lose problem-solving skills as they will be [too] lazy to think.” (S310).

Furthermore, the students expressed apprehension that excessive reliance on ChatGPT could stifle creativity, and discourage independent thinking:

“It may kill the creativity of students, and it will increase the number of students with the attitude of waiting to be spoon-fed if they do not understand how to use ChatGPT ethically.” (S26).

In summary, student perceptions of ChatGPT’s usage for academic purposes are predominantly positive, highlighting its role in enhancing assignment completion, aiding comprehension of complex concepts, and facilitating efficient resource searching. Nonetheless, students acknowledged the importance of maintaining a balance between utilising AI assistance, and cultivating critical thinking, problem-solving skills, and creativity.

4 Discussion

This study investigated university students’ readiness to use ChatGPT, and its perceived usefulness for academic purposes. The study further postulates practical implications for academic help-seeking behaviour among students in the usage of ChatGPT.

First, the results of this study indicated that students have the proficiency, willingness, and the requisite technological infrastructure to use ChatGPT, with a large majority concurring with its ability to augment their learning experience. The results corroborate the findings of Kasneci et al. (2023), Mijwil and Aljanabi (2023), and Kuhail et al. (2023), who found that ChatGPT has the potential to enhance learning, and create individualised educational experiences for students. Second, students were ready to integrate ChatGPT into their learning routines due to its ability to provide clear and accurate explanations (Foroughi et al., 2023). Furthermore, they shared that the tool’s ability to simplify complex concepts, and deliver straightforward answers was the main reason behind their motivation to use the software (Olga et al., 2023).

The findings also showed students’ positive perception of ChatGPT’s usefulness in facilitating task and assignment completions, and its resourcefulness in locating learning materials. These findings corroborate other studies that found that university students used ChatGPT as the main resource for learning, and completing their academic tasks, such as assignments (e.g., Chen et al., 2023; Gupta et al., 2019). In addition, students also found it to be useful in alleviating their stress, and assisting them in comprehending complex concepts in their studies (Crawford et al., 2023; Smith et al., 2023). However, the findings also indicated that students acknowledged the need to maintain a balance in the learning process between AI assistance, and their own critical thinking, problem-solving skills, and creativity (Huang, 2021; Matthee & Turpin, 2019).

4.1 Limitations and recommendations for future research

This study comes with several limitations. First, the results cannot be generalised because the sample was drawn from only one public university in Malaysia, and involved students from different fields of study. Second, the sample size was small. Therefore, future research should include more public universities in Malaysia, possibly with the added perspective of instructors as well.

5 Conclusion

The purpose of this study was to investigate university students’ readiness to use ChatGPT, and its perceived usefulness for academic purposes. From the results, there are two important implications for practice. First, the overall findings of this study revealed that students were ready to use ChatGPT for academic purposes. However, students felt that universities lacked adequate guidelines for the utilisation of AI tools such as ChatGPT, an aspect that requires urgent attention. In light of this, the present study’s findings could be used as a starting point for universities to formulate guidelines for using ChatGPT, and other AI tools, and to nurture self-regulated learning among students. Second, students raised concerns about overreliance on AI tools like ChatGPT, and its negative impacts on critical thinking, creativity, and problem-solving skills. To address these concerns, instructors and universities must carefully consider how to incorporate AI technology such as ChatGPT into the learning and teaching process in a way that supports critical thinking, and self-regulated learning.

It can be concluded that students’ readiness for, and use of, ChatGPT and other AI tools are closely bound by university policies, and the instructors’ adoption practices (Adams et al., 2022). As the thrust towards implementing AI in classrooms gathers pace (Ifenthaler & Schumacher, 2023), training and awareness programmes must be implemented to facilitate the adoption process for both students, and instructors. Students need to be made aware that the originality of their work is essential for effective assessment, and improved learning outcomes, while instructors are able to instil a strong sense of academic integrity by helping students navigate the complex ethical questions that arise when using AI technology in academic settings.

Data Availability

The datasets generated during and/or analysed during the current study are available from the corresponding author on reasonable request.

References

Adams, D., & Chuah, K. M. (2022). Artificial intelligence-based tools in research writing: Current trends and future potentials. In P. P. Churi, S. Joshi, M. Elhoseny, & A. Omrane (Eds.), Artificial intelligence in higher education: A practical approach (pp. 169–184). CRC Press. https://doi.org/10.1201/9781003184157.

Adams, D., Mabel, H. J. T., & Sumintono, B. (2020). Students’ readiness for blended learning in a leading Malaysian private higher education institution. Interactive Technology and Smart Education, 1–20. https://doi.org/10.1108/ITSE-03-2020-0032.

Adams, D., Chuah, K. M., Sumintono, B., & Mohamed, A. (2021). Students’ readiness for e-learning during the COVID-19 pandemic in a South-East Asian university: A Rasch analysis. Asian Education and Development Studies, 1–16. https://doi.org/10.1108/AEDS-05-2020-0100.

Adams, D., Chuah, K. M., Mohamed, A., Sumintono, B., Moosa, V., & Shareefa, M. (2022). Bricks to clicks: Students’ engagement in e-learning during the COVID-19 pandemic. Asia Pacific Journal of Educators and Education, 36(2), 99–117. https://doi.org/10.21315/apjee2021.36.2.6.

Adiguzel, T., Kaya, M. H., & Cansu, F. K. (2023). Revolutionizing education with AI: Exploring the transformative potential of ChatGPT. Contemporary Educational Technology, 15(3). https://doi.org/10.30935/cedtech/13152.

Al-Sharafi, M. A., Al-Emran, M., Iranmanesh, M., Al-Qaysi, N., Iahad, N. A., & Arpaci, I. (2022). Understanding the impact of knowledge management factors on the sustainable use of AI-based chatbots for educational purposes using a hybrid SEM-ANN approach. Interactive Learning Environments, 1–20. https://doi.org/10.1080/10494820.2022.2075014.

Amador, P., & Amador, J. (2014). Academic advising via Facebook: Examining student help seeking. The Internet and Higher Education, 21, 9–16. https://doi.org/10.1016/j.iheduc.2013.10.003.

Bond, T. G., & Fox, C. M. (2015). Applying the Rasch model: Fundamental measurement in the human sciences (3rd ed.). Routledge.

Broadbent, J., & Lodge, J. (2021). Use of live chat in higher education to support self-regulated help seeking behaviours: A comparison of online and blended learner perspectives. International Journal of Educational Technology in Higher Education, 18(1), 1–20. https://doi.org/10.1186/s41239-021-00253-2.

Carter, R. A., Rice, M., Yang, S., & Jackson, H. A. (2020). Self-regulated learning in online learning environments: Strategies for remote learning. Information and Learning Sciences, 121(5/6), 321–329.

Chen, Y., Jensen, S., Albert, L. J., Gupta, S., & Lee, T. (2023). Artificial intelligence (AI) student assistants in the classroom: Designing chatbots to support student success. Information Systems Frontiers, 25(1), 161–182. https://doi.org/10.1007/s10796-022-10291-4.

Chuah, K. M., & Kabilan, M. (2021). Teachers’ views on the Use of Chatbots to Support English Language Teaching in a Mobile Environment. International Journal of Emerging Technologies in Learning (iJET), 16(20), 223–237. https://doi.org/10.3991/ijet.v16i20.24917.

Chyr, W. L., Shen, P. D., Chiang, Y. C., Lin, J. B., & Tsai, C. W. (2017). Exploring the effects of online academic help-seeking and flipped learning on improving students’ learning. Journal of Educational Technology & Society, 20(3), 11–23.

Crawford, J., Cowling, M., & Allen, K. A. (2023). Leadership is needed for ethical ChatGPT: Character, assessment, and learning using artificial intelligence (AI). Journal of University Teaching & Learning Practice, 20(3), 02.

Creswell, J. W. (2015). A concise introduction to mixed methods research. SAGE publications.

Creswell, J. W., & Plano Clark, V. L. (2017). Designing and conducting mixed methods research. Sage publications.

Dillman, D. A., Smyth, J. D., & Christian, L. M. (2014). Internet, phone, mail, and mixed-mode surveys: The tailored design method (4th ed.). Wiley.

Dwivedi, Y. K., Kshetri, N., Hughes, L., Slade, E. L., Jeyaraj, A., Kar, A. K., & Wright, R. (2023). So what if ChatGPT wrote it? Multidisciplinary perspectives on opportunities, challenges and implications of generative conversational AI for research, practice and policy. International Journal of Information Management, 71, 102642. https://doi.org/10.1016/j.ijinfomgt.2023.102642.

Etikan, I., Musa, S. A., & Alkassim, R. S. (2016). Comparison of convenience sampling and purposive sampling. American Journal of Theoretical and Applied Statistics, 5(1), 1–4. https://doi.org/10.11648/j.ajtas.20160501.11.

Fan, Y. H., & Lin, T. J. (2023). Identifying university students’ online academic help-seeking patterns and their role in internet self-efficacy. The Internet and Higher Education, 56, 100893. https://doi.org/10.1016/j.iheduc.2022.100893.

Foroughi, B., Senali, M. G., Iranmanesh, M., Khanfar, A., Ghobakhloo, M., Annamalai, N., & Naghmeh-Abbaspour, B. (2023). Determinants of intention to use ChatGPT for educational purposes: Findings from PLS-SEM and fsQCA. International Journal of Human–Computer Interaction, 1–20.

Gupta, S., Jagannath, K., Aggarwal, N., Sridar, R., Wilde, S., & Chen, Y. (2019). Artificially Intelligent (AI) Tutors in the Classroom: A Need Assessment Study of Designing Chatbots to Support Student Learning. In PACIS 2019 Proceedings (Vol. 213). Retrieved from https://aisel.aisnet.org/pacis2019/213.

Hammad, R., & Bahja, M. (2023). Opportunities and Challenges in Educational Chatbots. Trends, Applications, and Challenges of Chatbot Technology, 119–136.

Hassan, T., Edmison, B., Cox, L., Louvet, M., & Williams, D. (2019, August). Exploring the context of course rankings on online academic forums. In Proceedings of the 2019 IEEE/ACM International Conference on Advances in Social Networks Analysis and Mining (pp. 553–556).

Huang, X. (2021). Aims for cultivating students’ key competencies based on artificial intelligence education in China. Education and Information Technologies, 26, 5127–5147.

Hwang, G. J., & Chang, C. Y. (2021). A review of opportunities and challenges of chatbots in education. Interactive Learning Environments, 1–14. https://doi.org/10.1080/10494820.2021.1952615.

Ifenthaler, D., Schumacher, C., & Kuzilek, J. (2023). Investigating students’ use of self-assessments in higher education using learning analytics. Journal of Computer Assisted Learning, 39(1), 255–268.

Iranmanesh, M., Annamalai, N., Kumar, K. M., & Foroughi, B. (2022). Explaining student loyalty towards using WhatsApp in higher education: An extension of the IS success model. The Electronic Library, 40(3), 196–220. https://doi.org/10.1108/EL-08-2021-0161.

Jin, S. H., Im, K., Yoo, M., Roll, I., & Seo, K. (2023). Supporting students’ self-regulated learning in online learning using artificial intelligence applications. International Journal of Educational Technology in Higher Education, 20, 32.

Kasneci, E., Seßler, K., Küchemann, S., Bannert, M., Dementieva, D., Fischer, F., & Kasneci, G. (2023). ChatGPT for good? On opportunities and challenges of large language models for education. Learning and Individual Differences, 103, 102274. https://doi.org/10.1016/j.lindif.2023.102274.

Kassarnig, V., Mones, E., Bjerre-Nielsen, A., Sapiezynski, P., Lassen, D. D., & Lehmann, S. (2018). Academic performance and behavioral patterns. EPJ Data Science, 7, 1–16.

Kuhail, M. A., Alturki, N., Alramlawi, S., & Alhejori, K. (2023). Interacting with educational chatbots: A systematic review. Education and Information Technologies, 28(1), 973–1018. https://doi.org/10.1007/s10639-022-11177-3.

Lester, J. N., Cho, Y., & Lochmiller, C. R. (2020). Learning to do qualitative data analysis: A starting point. Human Resource Development Review, 19(1), 94–106.

Li, R., Hassan, C. N., & Saharuddin, N. (2023). College student’s academic help-seeking behavior: A systematic literature review. Behavioral Sciences, 13(8), 637.

Lo, C. K. (2023). What is the impact of ChatGPT on education? A rapid review of the literature. Education Sciences, 13(4), 410. https://doi.org/10.3390/educsci13040410.

Low, S. K., Pheh, K. S., Lim, Y., & Tan, S. A. (2016, April). Help Seeking Barrier of Malaysian Private University Students. In Proceedings of the International Conference on Disciplines in Humanities and Social Sciences (DHSS-2016), Bangkok, Thailand (pp. 26–27).

Lund, B. D., & Wang, T. (2023). Chatting about ChatGPT: How may AI and GPT impact academia and libraries? Library Hi Tech News, 40(3), 26–29. https://doi.org/10.1108/LHTN-01-2023-0009.

Mandalapu, V., Chen, L. K., Chen, Z., & Gong, J. (2021). Student-Centric Model of Login Patterns: A Case Study with Learning Management Systems. Proceedings of the 14th International Conference on Educational Data Mining (EDM 2021), Paris, France.

Matthee, M., & Turpin, M. (2019). Teaching critical thinking, problem solving, and design thinking: Preparing IS students for the future. Journal of Information Systems Education, 30(4), 242–252.

Mijwil, M., & Aljanabi, M. (2023). Towards artificial intelligence-based cybersecurity: The practices and ChatGPT generated ways to combat cybercrime. Iraqi Journal for Computer Science and Mathematics, 4(1), 65–70. https://doi.org/10.52866/ijcsm.2023.01.01.0019.

Miles, M. B., Huberman, A. M., & Saldana, J. (2019). Qualitative data analysis: A methods sourcebook (4th ed.). Sage Publications.

Ministry of Education (MoE). (2015). Malaysia Education Blueprint 2015–2025 (higher education). Ministry of Education Malaysia.

Morshidi, S., Abdul Karim, A., Hazri, J., Wan Zuhainis, S., Muhamad Saiful Bahri, Y., Munir, S., Mahiswaran, S., Muhammad, M., Ghasemy, M., & Mazlinawati, M. (2020). Flexible learning pathways in Malaysian higher education: Balancing human resource development and equity policies. Commonwealth Tertiary Education Facility (CTEF).

Noman, M., & Kaur, A. (2022). Gender equity and the Lost Boys in Malaysian education. In D. Adams (Ed.), Education in Malaysia: Developments, reforms and prospects (pp. 19–33). Routledge. https://doi.org/10.4324/9781003244769.

Olga, A., Tzirides, A., Saini, A., Zapata, G., Searsmith, D., Cope, B., Kalantzis, M., Castro, V., Kourkoulou, T., Jones, J., da Silva, R. A., Whiting, J., & Kastania, N. P. (2023). Generative AI: Implications and applications for education. arXiv Preprint arXiv:2305.07605.

OpenAI (2022, November 30). Introducing ChatGPT. https://openai.com/blog/chatgpt.

Payne, T., Muenks, K., & Aguayo, E. (2021). Just because I am first gen doesn’t mean I’m not asking for help: A thematic analysis of first-generation college students’ academic help-seeking behaviors. Journal of Diversity in Higher Education. https://doi.org/10.1037/dhe0000382.

Pillai, R., Sivathanu, B., Metri, B., & Kaushik, N. (2023). Students’ adoption of AI-based teacher-bots (T-bots) for learning in higher education. Information Technology & People, 1–25. https://doi.org/10.1108/ITP-02-2021-0152.

Puustinen, M., & Pulkkinen, L. (2001). Models of self-regulated learning: A review. Scandinavian Journal of Educational Research, 45(3), 269–286.

Rawas, S. (2023). ChatGPT: Empowering lifelong learning in the digital age of higher education. Education and Information Technologies. Advance online publication. https://doi.org/10.1007/s10639-023-12114-8.

Ray, P. P. (2023). ChatGPT: A comprehensive review on background, applications, key challenges, bias, ethics, limitations and future scope. Internet of Things and Cyber-Physical Systems.

Sáiz-Manzanares, M. C., Marticorena-Sánchez, R., Martín-Antón, L. J., Díez, I. G., & Almeida, L. (2023). Perceived satisfaction of university students with the use of chatbots as a tool for self-regulated learning. Heliyon, 9(1).

Sirat, M., & Wan, C. D. (2022). Higher education in Malaysia. In L. P. Symaco, & M. Hayden (Eds.), International Handbook on Education in South East Asia. Springer International Handbooks of Education. Springer. https://doi.org/10.1007/978-981-16-8136-3_14-1.

Smith, A., Hachen, S., Schleifer, R., Bhugra, D., Buadze, A., & Liebrenz, M. (2023). Old dog, new tricks? Exploring the potential functionalities of ChatGPT in supporting educational methods in social psychiatry. International Journal of Social Psychiatry, 00207640231178451.

Sok, S., & Heng, K. (2023). ChatGPT for education and research: A review of benefits and risks. SSRN Electronic Journal. https://doi.org/10.2139/ssrn.4378735.

Students Turn to ChatGPT for Learning Support. (2023, February 2). The New York Times. https://www.nytimes.com/2023/02/02/learning/students-chatgpt.html.

Sullivan, G. M., & Artino, A. R. (2013). Analyzing and interpreting data from Likert-type scales. Journal of Graduate Medical Education, 5(4), 541–542. https://doi.org/10.4300/JGME-5-4-18.

Tashakkori, A., Johnson, R. B., & Teddlie, C. (2020). Foundations of mixed methods research: Integrating quantitative and qualitative approaches in the social and behavioral sciences. Sage publications.

Tsivitanidou, O., & Ioannou, A. (2021). Envisioned pedagogical uses of chatbots in higher education and perceived benefits and challenges. In P. Zaphiris, & A. Ioannou (Eds.), Learning and collaboration technologies: Games and virtual environments for learning. HCII 2021 (Lecture Notes in Computer Science, Vol. 12785, pp. 230–250). Springer. https://doi.org/10.1007/978-3-030-77943-6_15.

Uren, V., & Edwards, J. S. (2023). Technology readiness and the organizational journey towards AI adoption: An empirical study. International Journal of Information Management, 68, 102588.

Windiatmoko, Y., Hidayatullah, A. F., Fudholi, D. H., & Rahmadi, R. (2022). Mi-Botway: A deep learning-based intelligent university enquiries chatbot. International Journal of Artificial Intelligence Research, 6(1). https://doi.org/10.29099/ijair.v6i1.247.

Won, S., Hensley, L. C., & Wolters, C. A. (2021). Brief research report: Sense of belonging and academic help-seeking as self-regulated learning. The Journal of Experimental Education, 89(1), 112–124.

Wu, T. T., Lee, H. Y., Li, P. H., Huang, C. N., & Huang, Y. M. (2023). Promoting self-regulation progress and knowledge construction in blended learning via ChatGPT-based learning aid. Journal of Educational Computing Research. https://doi.org/10.1177/07356331231191125.

Yan, Z. (2020). Self-assessment in the process of self-regulated learning and its relationship with academic achievement. Assessment & Evaluation in Higher Education, 45(2), 224–238.

Yao, H., Lian, D., Cao, Y., Wu, Y., & Zhou, T. (2019). Predicting academic performance for college students: A campus behavior perspective. ACM Transactions on Intelligent Systems and Technology (TIST), 10(3), 1–21. https://doi.org/10.1145/3299087.

Zhang, P. (2023). Taking Advice from ChatGPT. arXiv preprint arXiv:2305.11888.

Ethics declarations

Conflict of interest

None.

Cite this article

Adams, D., Chuah, KM., Devadason, E. et al. From novice to navigator: Students’ academic help-seeking behaviour, readiness, and perceived usefulness of ChatGPT in learning. Educ Inf Technol 29, 13617–13634 (2024). https://doi.org/10.1007/s10639-023-12427-8

DOI: https://doi.org/10.1007/s10639-023-12427-8