Abstract

This paper studies an optimal ON-OFF control problem for a class of discrete event systems with real-time constraints. Our goal is to minimize the overall costs, including the operating cost and the wake-up cost, while still guaranteeing the deadline of each individual task. In particular, we consider the homogeneous case in which it takes the same amount of time to serve each task and each task needs to be served by d seconds upon arrival. The problem involves two subproblems: (i) finding the best time to wake up the system and (ii) finding the best time to let the system go to sleep. We study the two subproblems in both off-line and on-line settings. In the off-line case that all task information is known a priori, we combine sample path analysis and dynamic programming to come up with the optimal solution. In the on-line scenario where future task information is completely unknown, we show that the optimal time to wake up the system can be obtained without relying on future task arrivals. We also perform competitive analysis for on-line control and derive the competitive ratios for both deterministic and random controllers.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

There exists a large amount of Discrete Event Systems (DESs) that involve allocation of resources to satisfy real-time constraints. One commonality of these DESs is that certain tasks must be completed by their deadlines in order to guarantee Quality-of-Service (QoS). Examples arise in wireless networks and computing systems, where communication and computing tasks must be transmitted/processed before the information they contain becomes obsolete (Miao et al. 2017; Liu 2000), and in manufacturing systems, where manufacturing tasks must be completed before the specified time in the production schedule (Pepyne and Cassandras 2000). Another commonality of these DESs is that they all require the minimization of cost (e.g., energy). An interesting question then arises naturally: how can we allocate resources to such DESs so that the cost is minimized and the real-time constraints are also satisfied? To answer this question, one often has to study the trade-off between minimizing the cost and satisfying the real-time constraints: processing the tasks at a higher speed makes it easier to satisfy the real-time constraints but harder to reduce the cost; conversely, processing the tasks at a lower speed makes it harder to satisfy the real-time constraints but easier to reduce the cost. This trade-off is often referred to as the energy-latency trade-off and has been widely studied in the literature (Miao et al. 2017; Gamal et al. 2002; Zafer and Modiano 2009a).

In this paper, our objective is to utilize the energy-latency trade-off to minimize the cost while guaranteeing the real-time constraint for each task. Different from most existing papers that assume the system’s service rate (the control variable) is a continuous function of time, we assume that the DES only operates at one of the two states: ON and OFF. One motivating example of such DES is wireless sensor networks, in which operation simplicity must be maintained. For example, the radio of a ZigBee wireless device can be either completely off or transmitting at a fixed-rate, e.g., 250kb/s in the 2.4GHz band. Another difference between this paper and others is that we assume that a wake-up cost is incurred whenever the system transits from the OFF state to the ON state.

We solve both off-line and on-line optimal ON-OFF control problems. Our main contributions are two-fold: (i) We combine sample path analysis and Dynamic Programming (DP) to obtain the optimal off-line solution and (ii) We perform competitive analysis and derive the competitive ratios of both deterministic and random on-line controllers. Some results of this paper are previously shown in two conference papers: Miao and Xu (2015) and (Miao 2017), which primarily focus on off-line control. One new contribution of this paper is the competitive analysis for on-line control. Another new contribution is that we introduce an idling cost in the system model. We point out that the addition of this idling cost makes our problem formulation more realistic because it often exists in real-world applications. For example, energy is consumed when a motor is spinning without any load attached and when a sensor is turned on, but not actively processing information.

Due to page limitation, we do not include the results and discussions that are available in the conference papers and all the proofs; instead, we let the readers refer to Miao et al. (2018) for all these details. The organization of the rest of the paper is as follows: in Section 2, we discuss related work; we introduce the system model and formulate our optimization problem in Section 3; the off-line and on-line results are presented in Sections 4 and 5, respectively; finally, we conclude in Section 6.

2 Related work

There are two lines of work that are closely related to this paper. One is transmission scheduling for wireless networks, in which the transmission rate of a wireless device is adjusted so as to minimize the transmission cost and satisfy real-time constraints. This line of work is initially studied in Uysal-Biyikoglu et al. (2002) with follow-up work in Gamal et al. (2002) where a homogeneous case is considered for a convex optimization problem, assuming all packets have the same deadline and number of bits. The rate of convergence of the proposed “MoveRight” algorithm is obtainable for a special case of the problem when all packets have identical energy functions. Zafer and Modiano (2009b) study an optimal rate control problem over a time-varying wireless channel, in which the channel state was modeled as a Markov process. In particular, they consider the scenario that B units of data must be transmitted by a common deadline T, and they obtain an optimal rate-control policy that minimizes the total energy expenditure subject to short-term average power constraints. In Zafer and Modiano (2007, 2008), the case of identical arrival time and individual deadline is studied by Zafer et. al. In Chen et al. (2007), the case of identical packet size and identical delay constraint is studied by Neely et. al. They extend the result for the case of individual packet size and identical delay constraint in Chen et al. (2009). In Zafer and Modiano (2009a), Zafer et. al. use a graphical approach to analyze the case that each packet has its own arrival time and deadline. However, there are certain restrictions in their setting; for example, the packet that arrives later must have later deadlines. Wang and Li (2013) analyze scheduling problems for bursty packets with strict deadlines over a single time-varying wireless channel. Assuming slotted transmission and changeable packet transmission order, they are able to exploit structural properties of the problem to come up with an algorithm that solves the off-line problem. In Poulakis et al. (2013), Poulakis et. al. also study energy efficient scheduling problems for a single time-varying wireless channel. They consider a finite-horizon problem where each packet must be transmitted before Dmax. Optimal stopping theory is used to find the optimal start transmission time between [0, Dmax] so as to minimize the expected energy consumption and the average energy consumption per unit of time. Zhong and Xu (2008) formulated optimization problems that minimize the energy consumption of a set of tasks with task-dependent energy functions and packet lengths. In their problem formulation, the energy functions include both transmission energy and circuit power consumption. To obtain the optimal solution for the off-line case with backlogged tasks only, they develop an iterative algorithm RADB whose complexity is O(n2) (n is the number of tasks). The authors show via simulation that the RADB algorithm achieves good performance when used in on-line scheduling. Miao et al. (2017) studies a transmission control problem for task-dependent cost functions and arbitrary task arrival time, deadline, and number of bits. They propose a GCTDA algorithm that solves the off-line problem efficiently by identifying certain critical tasks. The GCTDA algorithm is an extension to the CTDA algorithm (Mao et al. 2007) designed by Mao and Cassandras for dynamic voltage scaling related applications. They extend the CTDA algorithm to multilayer scenarios in Mao and Cassandras (2014). Our model is different from all the above works by letting the system operate in one of the discrete modes and also including a wake-up cost at each time instant that the system transitions from OFF to ON state.

The other line of research studies On-OFF scheduling in Wireless Sensor Networks (WSNs). Solutions in the Medium Access Control (MAC) layer, such as the S-MAC protocol (Ye et al. 2004), have been developed to coordinate neighboring sensors’ ON-OFF schedule in order to reduce both energy consumption and packet delay. These approaches do not provide specific end-to-end latency guarantee. In Lai and Paschalidis (2006), routing problems are considered in WSNs where each sensor switches between ON and OFF states. The authors formulate an optimization problem to pick the best path that minimizes the weighted sum of the expected energy cost and the exponent of the latency probability. In another work in Ning and Cassandras (2006), Ning and Cassandras formulate a dynamic sleep control problem in order to reduce the energy consumed in listening to an idle channel. The idea is to sample the channel more frequently when it is likely to have traffic and less frequently when it is not. The authors extend their work in Ning and Cassandras (2008), by formulating an optimization problem with the goal of minimizing the expected total energy consumption at the transmitter and the receiver. Dynamic programming is used to come up with an optimal policy that is shown to be more effective in cost saving than the fixed sleep time. Cohen and Kapchits (2009) studies the ON-OFF scheduling in wireless mesh networks. By assuming a fixed routing tree topology used for task transmission, each child in the tree knows exactly when its parents will wake up, and the traffic is only generated by the leaves of the tree, the authors formulate and solve an optimization problem that minimizes the total transmission energy cost while satisfying the latency and maximum energy constraints on each individual node. The major difference between this paper and the existing ones in this line of research is that we study a system with a real-time constraint for each individual task. To the best of our knowledge, ON-OFF scheduling with a real-time constraint for each individual task has not been studied extensively.

It is worth noting that there also exists papers related to the service rate control problem, in which the optimal service rate policy of either single-server or multi-server queueing systems are derived in order to minimize an average cost. A recent representative work along this line can be found in Xia et al. (2017) where Xia et al. study the service rate problem for tandem queues with power constraints. They formulate the model as a Markov decision process with constrained action space and use sensitivity-based optimization techniques to derive the conditions of optimal service rates, the optimality of the vertexes of the feasible domain for linear and concave operating cost, and an iterative algorithm that provides the optimal solution. Our problem formulation is different from these works in two aspects: (i) We consider tasks with real-time constraints and (ii) We include system wake-up cost on top of the service cost.

3 System model and problem formulation

We consider a finite horizon scenario that a DES processes N tasks with real-time constraints. In particular, task i, i = 1,…,N, has arrival time ai (generally random), deadline di = ai + d, and B number of operations. Both d and B are constants. In the off-line setting, we assume that the task arrival time ai is known to the controller a priori. The DES can only operate in one of the two modes: ON and OFF. When it is in the OFF mode, there is no operating cost associated. When it is in the ON or active mode, the system can either be busy or idling. When the system is busy, it processes the tasks at a constant rate R with fixed operating cost CB per unit time. When the system is idling, no tasks are waiting to be served, and the system cost is CI (CI ≤ CB) per unit time. Furthermore, we assume that whenever a transition from the OFF mode to the ON mode occurs, a fixed wake-up cost CW is incurred; examples of such costs include: the large amount of current (known as inrush current) required when a motor is turned on, the energy needed to initialize electric circuits when RF radio is turned on in a wireless device, and so on. Note that the wake-up cost may also include system wearout cost, if the system can only be turned on for certain number of times during its lifetime. In our previous work in Miao and Xu (2015) and Miao (2017), CI = CB. As we will show later, when CI is different from CB, it does not make the analysis significantly harder, and the off-line optimal solution can still be obtained by DP.

As we mentioned earlier, the task information is known to the controller a priori in the off-line setting. Our objective is to find the optimal ON and OFF time periods so as to (i) finish all the tasks by their deadlines and (ii) minimize the cost.

Definition 1

Suppose the system is woken up at t1, put to sleep at t2 (t1 < t2), and kept active from t1 to t2. Then, we call the time interval [t1,t2] an Active Period(AP).

Definition 2

In any AP, the periods during which the system is actively serving tasks are known as Busy Periods(BPs). The rest of the time periods in that AP are known as Idle Periods(IPs).

Let r(t) be the rate that the system is capable of serving tasks at time t. It is piecewise constant and at any given time t, it can only be either 0 (when the system is OFF) or R (when the system is ON). Note that r(t) is the potential service rate, instead of the the actual service rate, since the system is only serving tasks during the BPs, not the IPs.

We now introduce the control variables. Our first control variable is α, the number of APs. The second control variable is a α × 2 array t that contains 2α time instants. These time instants satisfy: ti,1 < ti,2 < tj,1 < tj,2, ∀i,j ∈{1,…,α}, i < j and define α number of APs. The off-line problem Q(1,N) can then be formulated:

where xj is the departure time of task j, u(t) is the unit step function, and τi,B is the length of the busy periods in the i-th AP. The first constraint ensures that exactly B number of operations are executed for each task. The second one is the real-time constraint. The third one makes sure that the processing rate is R only during each AP. Note that τi,B is dependent on the number of tasks served in APi. To represent τi,B, we use \({N_{i}^{S}}\) and \({N_{i}^{E}}\) to denote the first (starting) task and the last (ending) task in APi, respectively:\( {N_{i}^{E}}=\underset {j\in \{1,{\ldots } ,N\}}{\arg \max }(d_{j}\le t_{i,2}), \text { } {N_{i}^{S}}=\underset {j\in \{1,{\ldots } ,N\}}{\arg \min }(a_{j}\ge t_{i,1}),\) and \( \tau _{i,B}=\max (({N_{i}^{E}}-{N_{i}^{S}}+ 1)\frac {B}{R},0) \).

Notice that Q(1,N) above may not always be feasible. In this paper, we only consider the case that Q(1,N) is indeed feasible. See Assumption 1 and Lemma 1 in Miao et al. (2018) for the assumption that guarantees feasibility.

Q(1,N) is a hard optimization problem, due to the nondifferentiable terms in the constraints and the objective function. It cannot be easily solved by standard optimization software. In what follows, we will first discuss optimal off-line control, using which we will then establish the results for on-line control.

4 Off-line control

In this section, we focus on the off-line control problem, in which all task arrivals are known to us a priori. We need to find out when the system should wake up and start to serve the first task in an AP. Similar to the “just-in-time” idea exploited in Gamal et al. (2002) for adaptive modulation, the system should wake up as late as possible so that it may potentially reduce the active time. The question is how late the system should wake up. This is answered by Lemmas 2-4 in Miao et al. (2018).

We now find out when the system should go to sleep. Lemma 5 in Miao et al. (2018) provides a sufficient but not necessary condition of ending an AP on the optimal sample path. Let d0 = −∞ and aN+ 1 = ∞. We introduce the following definition.

Definition 3

Consecutive tasks {k,…,n}, 1 ≤ k ≤ n ≤ N, belong to a super active period (SAP) in problem Q(1,N) if dk− 1 + CW/CI < ak, dn + CW/CI < an+ 1, and dj + CW/CI ≥ aj+ 1, ∀j ∈{k + 1,…,n − 1}.

Each SAP contains one or more APs. SAPs can be easily identified by simply examining all the task deadlines and arrival times and applying Lemma 5 in Miao et al. (2018). It implies that instead of working on the original problem Q(1,N), we now only need to focus on each SAP, which is essentially a subproblem Q(k,n).

We now define our decision points in each SAP. A decision point xt, t ∈{k,…,n − 1}, is the departure time of task t satisfies xt < at+ 1. If xt ≥ at+ 1,0 then xt is not a decision point because the system should stay active at xt and process task t + 1. At each decision point, the control is letting the system either go to sleep or stay awake, and the optimal control depends on future task arrivals. A first look at the problem seems to suggest that the problem is intractable; however, a closer look indicates that the off-line optimal ON-OFF control problem can be solved by DP, which has been widely used to solve a large class of problems with special structural properties. In the context of DES, however, its usage has been very limited to date. For example, in Mao et al. (2007) and Miao et al. (2017) where the problem formulation is similar to the one in this paper, both CTDA and GCTDA algorithms are not DP-based. We will show next that for the DES studied by this paper, DP and sample path analysis can be used together to obtain the optimal solution. In particular, it is done by introducing two types of tasks: starting and following.

Definition 4

In problem Q(k,n), where tasks {k,…,n} form an SAP, the first task of any AP is called a starting task. Tasks that are not starting tasks are known as following tasks.

For any task i ∈{k,…,n}, it must either be a starting task or a following one. We use QS(i,n) and QF(i,n) to denote the optimization problems of serving tasks {i,…,n} when task i is a starting and following task, respective. Let \({J_{i}^{S}}\) and \({J_{i}^{F}}\) be the minimum cost of QS(i,n) and QF(i,n), respectively.

Theorem 1

\({J_{k}^{S}}\)isthe optimal cost of problemQ(k,n).

This theorem shows that when the algorithm in Table 1 in Miao et al. (2018) stops, \({J_{k}^{S}}\) is the optimal cost of problem Q(k,n). The corresponding optimal control, i.e., the starting time and ending time of each AP, can be traced back iteratively by identifying the \({J_{l}^{S}}\) or \({J_{l}^{F}}\) that each \(J_{i-1}^{S}\) or \( J_{i-1}^{F}\) points to. The procedure is provided in Table 4 in Miao et al. (2018).

Next, we use simulation results to show how the optimal solution performs compared with a naive approach, in which the controller simply goes to sleep when there is no backlog and wakes up when a new task arrives. Let optimal to naive ratio be the ratio between the optimal cost and the cost of the naive controller. Figure 1 shows how the optimal to naive ratio varies when the task arrival process and the wake-up cost CW change. In the simulation, we have 100 runs that correspond to 100 maximum interarrival time from 1ms to 100ms with step size 1ms. For the purpose of statistical regularity, each run has 1000 tasks. The interarrival time between two adjacent tasks is uniformly distributed between 0 and the maximum interarrival time in each run. The values of the other parameters are as follows: d = 20ms, CB= 30mW, CI= 100μW, and β = 1ms.

We have a couple of observations. First, the cost saving of the optimal solution is greater when CW is larger. Second, the maximum cost saving occurs when the interarrival time is not too small or too large: when it is too small, a single AP will be sufficient to complete all the tasks, and the optimal and the naive solutions are essentially the same; when it is too large, many APs are needed, and the advantage of the optimal controller gets smaller. As we can see from the result, the cost saving of the DP algorithm in the CW = 28mJ case is as large as 50%, and it will be ever greater when CW is higher.

5 On-line control

In this section, we study on-line control where future task arrival information is unknown to the controller. Essentially, the controller needs to decide the starting time and ending time for each AP. When to start an AP has been previously studied and see Lemma 6 in Miao et al. (2018) for details. We now focus on ending an AP in on-line control.

When all backlogged tasks have been served in an on-line setting, the controller needs to decide when to end an AP and put the system to sleep. This decision depends on future task information and the values of idling cost CI and wake-up cost CW. For example, if the next task t + 1 arrives very soon, the optimal control at decision point xt might be letting the system stay active; conversely, if the next task t + 1 arrives after a long time, then the system perhaps should go to sleep at decision point xt.

When some future task information is known, techniques such as Receding Horizon Control (RHC) can be utilized to make decisions. In this paper, we focus on the scenario that no future task information is available at all.

In general, the control at each decision point is the following: let the system stay awake for another 𝜃t seconds. If no task arrives within the 𝜃t seconds, then put the system to sleep after 𝜃t seconds; o.w., serve the newly arrived tasks and wait for the next decision point. Note that the subscript t indicates 𝜃t could be different at each decision point.

Let J∗ be the optimal cost of the off-line problem Q(1,N) and \(\widetilde {J}\) be the cost of the on-line controller. Our objective is to develop competitive on-line controllers which can quantify their worst-case performance deviation from the optimal off-line solution.

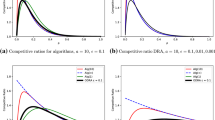

One challenge of competitive analysis is to find out the worst-case scenario. In our problem, the unnecessary cost in on-line control occurs when the system is idling: the controller must decide if and when to sleep. Therefore, the worst case occurs when each AP contains only one task so that the decision has to be made over and over again for every single task. This property actually simplifies our analysis, and in particular, we tackle the competitive ratio problem from two different aspects: a deterministic controller and a randomized one.

5.1 Deterministic controller

We first consider a deterministic controller in which 𝜃t is a fixed constant value 𝜃. The on-line controller is c-competitive if \(\widetilde {J}(I,\theta )\le c J^{*}, \forall I\in \mathscr{I}\), where \(\mathscr{I}\) is the set of all possible task arrival instances and I is one task arrival instance. c is called the competitive ratio of the deterministic on-line controller and is essentially the upper-bound (i.e., worst case) of the ratio between the on-line cost \(\widetilde {J}\) and the off-line optimal cost J∗.

Lemma 1

The best competitive ratioc∗of a deterministic controller is obtained when𝜃 = CW/CI,and\(\lim _{N\to \infty } c^{*}=(2+\gamma )/(1+\gamma )\),where N is the number of tasks andγ = CBβ/CW.

Lemma 1 shows that the competition ratio of a deterministic algorithm depends on the ratio between CBβ, the cost of serving one task, and CW, the cost of waking up the system. If this ratio is very small, then the competitive ratio is close to 2; if the ratio is very large, then the competitive ratio is close to 1.

5.2 Randomized controller

In a different methodology, we assume that 𝜃t is determined by a randomized algorithm that returns a value based on certain probability distribution P. During on-line control, the controller essentially is playing a game with an adversary (i.e., the task arrival process). Our job is to find the optimal probability distribution and the corresponding competitive ratio. We point out that the competitive ratio of a randomized on-line algorithm A is defined with respect to a specific type of adversary. In this paper, we assume an oblivious adversary (Ben-David et al. 1994), in which the worst instance for the randomized algorithm A is chosen without the the knowledge of the realization of the random variable used by A. We say randomized algorithm A is c-competitive if \(E_{P}[\widetilde {J}(A,I)] \le cJ^{*}(I), \forall I \in \mathscr{I}\), where \(\widetilde {J}(A,I)\) is the cost of algorithm A under task arrival instance I in on-line control and J∗(I) is the corresponding off-line optimal cost. Note that the task arrival instance I must be fixed before the expectation is taken. The competition ratio of randomized algorithm AP (algorithm A using probability distribution P) is: \(c(A_{P})=\underset {I\in \mathscr{I}}{\sup }\frac {E_{P}[\widetilde {J}(A_{P},I)]}{J^{*}(I)}\). Our goal is to find the best possible probability distribution that yields the best competitive ratio \(c^{*}=\underset {P}{\inf }\text { }\underset {I\in \mathscr{I}}{\sup }\frac {E_{P}[\widetilde {J}(A_{P},I)]}{J^{*}(I)}\). This is essentially a minimax problem, and one way of solving it is to use Yao’s minimax principle (Yao 1977), which states: a randomized algorithm may be viewed as a random choice between deterministic algorithms; in particular, the competitive ratio of a randomized algorithm against any oblivious adversary is the same as that of the best deterministic algorithm under the worst-case distribution of the adversary’s input. In our case, the adversary’s input is the task arrival instance after each AP. Let its probability distribution be G. Using Yao’s principle and von Neumann minimax theorem, we get: \(c^{*}=\underset {G}{\sup }\text { }\underset {A\in \mathscr{A}}{\inf }\frac {E_{G}[\widetilde {J}(A,I_{G})]}{J^{*}(I_{G})} \), where \(\mathscr{A}\) is the set of all randomized algorithms, IG is a specific task arrival instance under probability distribution G, and the expectation is now performed with respect to G. We now use the following lemma to find c∗.

Lemma 2

The best competitive ratioc∗of a randomized controller is obtained when𝜃tis a random variable X, whose probability density function is

When this controller is used,\(\lim _{N\to \infty } c^{*}=(\gamma + 1.58)/(\gamma + 1)\),whereγ = CBβ/CW.

Lemma 2 shows that the competition ratio of a random controller also depends on the ratio between CBβ and CW. If this ratio is very small, then the competitive ratio is close to 1.58; if the ratio is very large, then the competitive ratio is close to 1.

6 Conclusions

In this paper, we study the optimal ON-OFF control problem for a class of DESs with real-time constraints. The DESs have operating costs CB and CI per unit time and wake-up cost CW. Our goal is to switch the system between the ON and the OFF states so as to minimize cost and satisfy real-time constraints. In particular, we consider a homogeneous case that all tasks have the same number of operations and each one’s deadline is d seconds after the arrival time. For the off-line scenario that all task information is known to the controller a priori, we show that the optimal solution can be obtained via a two-fold decomposition: (i) super active periods that contain one or more active periods can be identified easily using the task arrival times and deadlines and (ii) the optimal solution to each super active period can be solved using dynamic programming. Simulation results show that compared with a simple heuristic, the cost saving of the DP algorithm can be 50% or more.

In on-line control, we show that the best time to start an AP can be obtained via an iterative algorithm and is guaranteed to be the same as the off-line problem. To decide the best time to end an AP in the on-line setting where no future task arrival information is available, we evaluate both deterministic and random controllers and derive their competitive ratios; these results quantify the worst-case on-line performance deviation from the optimal off-line solution.

References

Ben-David S, Borodin A, Karp R, Tardos G, Wigderson A (1994) On the power of randomization in on-line algorithms. Algorithmica 11(1):2–14

Chen W, Mitra U, Neely M (2009) Energy-efficient scheduling with individual packet delay constraints over a fading channel. ACM Wireless Networks 15:601–618

Chen W, Neely M, Mitra U (2007) Energy efficient scheduling with individual packet delay constraints: offline and online results. In: IEEE Infocom, Anchorage

Cohen R, Kapchits B (2009) An optimal wake-up scheduling algorithm for minimizing energy consumption while limiting maximum delay in a mesh sensor network. IEEE/ACM Trans Networking 17:570–581

Gamal AE, Nair C, Prabhakar B, Uysal-Biyikoglu E, Zahedi S (2002) Energy-efficient scheduling of packet transmissions over wireless networks. In: Proceedings of IEEE INFOCOM, vol 3, 23-27. New York City, pp 1773–1782

Lai W, Paschalidis IC (2006) Routing through noise and sleeping nodes in sensor networks: latency vs. energy trade-offs. In: The 45th IEEE conference on decision and control, San Diego, pp 2716–2721

Liu JWS (2000) Real - Time Systems. NJ, Prentice Hall Inc.

Mao J, Cassandras CG (2014) Optimal control of multilayer discrete event systems with real-time constraint guarantees. IEEE Trans Syst Man Cybern Syst Hum 44(10):1425–1434

Mao J, Cassandras CG, Zhao Q (2007) Optimal dynamic voltage scaling in energy-limited nonpreemptive systems with real-time constraints. IEEE Trans Mob Comput 6(6):678–688

Miao L (2017) Optimal on-off scheduling for a class of discrete event systems with real-time constraints. In: American control conference, Seattle

Miao L, Mao J, Cassandras CG (2017) Optimal Energy-Efficient Downlink Transmission Scheduling for Real-Time Wireless Networks. IEEE Trans Control Netw Syst 4(4):692–706

Miao L, Xu L (2015) Optimal wake-up scheduling for energy efficient fixed-rate wireless transmissions with real-time constraints. In: 14th wireless telecommunications symposium, New York

Miao L, Xu L, Jiang D (2018) Optimal On-Off Control for a Class of Discrete Event Systems with Real-Time Constraints. arXiv:1809.01711

Ning X, Cassandras CG (2006) Dynamic sleep time control in event-driven wireless sensor networks. In: The 45th IEEE conference on decision and control, San Diego, pp 2722–2727

Ning X, Cassandras CG (2008) Optimal dynamic sleep time control in wireless sensor networks. In: The 47th IEEE conference on decision and control, Cancun, pp 2332–2337

Pepyne DL, Cassandras CG (2000) Optimal control of hybrid systems in manufacturing. Proc IEEE 88(7):1108–1123

Poulakis MI, Panagopoulos AD, Canstantinou P (2013) Channel-aware opportunistic transmission scheduling for energy-efficient wireless links. IEEE Trans Veh Technol 62:192–204

Uysal-Biyikoglu E, Prabhakar B, Gamal AE (2002) Energy-efficient packet transmission over a wireless link. IEEE/ACM Trans Networking 10:487–499

Wang X, Li Z (2013) Energy-efficient transmissions of bursty data packets with strict deadlines over time-varying wireless channels. IEEE Trans Wirel Commun 12:2533–2543

Xia L, Miller D, Zhou Z, Bambos N (2017) Service rate control of tandem queues with power constraints. IEEE Trans Autom Control 62(10):5111–5123

Yao AC-C (1977) Probabilistic computations: toward a unified measure of complexity. In: 18th annual symposium on foundations of computer science, 1977, IEEE, pp 222–227

Ye W, Heidemann JS, Estrin D (2004) Medium access control with coordinated adaptive sleeping for wireless sensor networks. IEEE/ACM Trans Networking 12:493–506

Zafer M, Modiano E (2007) Delay-constrained energy efficient data transmission over a wireless fading channel. In: IEEE Information theory and applications workshop, San Diego

Zafer M, Modiano E (2008) Optimal rate control for delay-constrained data transmission over a wireless channel. IEEE Trans Inf Theory 54:4020–4039

Zafer M, Modiano E (2009) A calculus approach to energy-efficient data transmission with quality-of-service constraints. IEEE/ACM Trans Networking 17:898–911

Zafer M, Modiano E (2009) Minimum energy transmission over a wireless channel with deadline and power constraints. IEEE Trans Autom Control 54:2841–2852

Zhong X, Xu C (2008) Online energy efficient packet scheduling with delay constraints in wireless networks. In: IEEE Infocom, Phoenix

Acknowledgements

We would like to thank Dallas Leitner for his help in generating the off-line simulation results. We also would like to thank the anonymous reviewers for the constructive feedback they provided in the review process.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

The authors’ work is supported in part by a start-up funding provided by Middle Tennessee State University.

Rights and permissions

About this article

Cite this article

Miao, L., Xu, L. & Jiang, D. Optimal on-off control for a class of discrete event systems with real-time constraints. Discrete Event Dyn Syst 29, 79–90 (2019). https://doi.org/10.1007/s10626-019-00277-x

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10626-019-00277-x