Abstract

The issue of opinion sharing and formation has received considerable attention in the academic literature, and a few models have been proposed to study this problem. However, existing models are limited to interactions among nearest neighbors, with second-, third-, and higher-order neighbors considered only indirectly, despite the fact that higher-order interactions occur frequently in real social networks. In this paper, we develop a new model for opinion dynamics by incorporating long-range interactions based on higher-order random walks, which can explicitly tune the degree of influence of higher-order neighbor interactions. We prove that the model converges to a fixed opinion vector, which may differ greatly from that of models without higher-order interactions. Since direct computation of the equilibrium opinion vector is computationally expensive, involving huge-scale matrix multiplication and inversion, we design an estimation algorithm with a theoretical convergence guarantee that approximates the equilibrium opinion vector in nearly linear space and time with respect to the number of edges in the graph. We conduct extensive experiments on various social networks, demonstrating that the new algorithm is both highly efficient and effective.

1 Introduction

Recent years have witnessed an explosive growth in social media and online social networks, which have increasingly become an important part of our lives (Smith and Christakis 2008). For example, online social networks can increase the diversity of opinions, ideas, and information available to individuals (Kim 2011; Lee et al. 2014). At the same time, people may use online social networks to broadcast information on their lives and their opinions about some topics or issues to a large audience. It has been reported that social networks and social media have resulted in a fundamental change in the ways that people share and shape opinions (Das et al. 2014; Fotakis et al. 2016; Auletta et al. 2018). Recently, there has been a concerted effort to model opinion dynamics in social networks, in order to understand the effects of various factors on the formation dynamics of opinions (Jia et al. 2015; Dong et al. 2018; Anderson and Ye 2019).

One of the popular opinion dynamics models is the Friedkin-Johnsen (FJ) model (Friedkin and Johnsen 1990). Although simple and succinct, the FJ model can capture complex behavior of real social groups by incorporating French’s “theory of social power” (French 1956), and thus has been extensively studied. A sufficient condition for the stability of this standard model was obtained in (Ravazzi et al. 2015), the average innate opinion was estimated in (Das et al. 2013), and the unique equilibrium expressed opinion vector was derived in (Das et al. 2013; Bindel et al. 2015). Some explanations of this natural model were consequently explored from different perspectives (Ghaderi and Srikant 2014; Bindel et al. 2015). In addition, based on the FJ opinion dynamics model, some social phenomena have been quantified and studied (Xu et al. 2021), including polarization (Matakos et al. 2017; Musco et al. 2018), disagreement (Musco et al. 2018), conflict (Chen et al. 2018), and controversy (Chen et al. 2018). Moreover, some optimization problems (Abebe et al. 2018) for the FJ model were also investigated, such as opinion maximization (Gionis et al. 2013).

Other than studying the properties, interpretations and related quantities of the FJ model, many extensions or variants of this popular model have been developed (Jia et al. 2015). In (Abebe et al. 2018), the impact of susceptibility to persuasion on opinion dynamics was analyzed by introducing a resistance parameter to modify the FJ model. In (Semonsen et al. 2019), a varying peer-pressure coefficient was introduced to the FJ model, aiming to explore the role of increasing peer pressure on opinion formation. In (Chitra and Musco 2020), the FJ model was augmented to include algorithmic filtering, to analyze the effect of filter bubbles on polarization. Some multidimensional extensions were developed for the FJ model (Friedkin 2015; Parsegov et al. 2015, 2017; Friedkin et al. 2016), extending the scalar opinion to vector-valued opinions corresponding to several settings, either independent (Friedkin 2015) or interdependent (Parsegov et al. 2015, 2017; Friedkin et al. 2016).

The above related works on opinion dynamics models provide deep insights into the understanding of opinion formation, since they capture various important aspects affecting opinion shaping, including individuals’ attributes, interactions among individuals, and opinion update mechanisms. However, existing models consider only the interactions among the nearest neighbors, allowing interactions with higher-order neighbors only indirectly via their immediate neighbors, in spite of the fact that direct higher-order interactions are commonly encountered in real natural (Schunack et al. 2002) and social (Ghasemiesfeh et al. 2013; Lyu et al. 2022) networks. In a natural example (Schunack et al. 2002), long jumps spanning multiple lattice spacings play a dominating role in the diffusion of molecules. In a social network example (Ghasemiesfeh et al. 2013; Lyu et al. 2022), an individual can make use of the local, partial, or global knowledge corresponding to his direct, second-order, and even higher-order neighbors to search for opinions about a concerned issue or to diffuse information and opinions in an efficient way. It has been suggested by many existing theories and models that long ties are more likely to persist than other social ties, and that many of them constantly function as social bridges (Lyu et al. 2022). Schawe and Hernández (2022) defined a higher-order Deffuant model (Deffuant et al. 2000), generalizing the original pairwise interaction model for bounded-confidence opinion dynamics to interactions involving a group of agents. To date, there is still a lack of a comprehensive higher-order FJ opinion dynamics model on social networks, although it has been observed that long-range non-nearest-neighbor interactions could play a fundamental role in opinion dynamics.

In this paper, we make a natural extension of the classical FJ opinion dynamics model to explicitly incorporate the higher-order interactions between individuals and their non-nearest neighbors by leveraging higher-order random walks. We prove that the higher-order model converges to a unique equilibrium opinion vector, provided that each individual has a non-zero resistance parameter measuring his susceptibility to persuasion. We show that the equilibrium opinions of the higher-order FJ model differ greatly from those of the classical FJ model, demonstrating that higher-order interactions have a significant impact on opinion dynamics.

Basically, the equilibrium opinions of the higher-order FJ model on a graph are the same as those of the standard FJ model on a corresponding dense graph with a loop at each node. That is, at each time step, every individual updates his opinion according to his innate opinion, as well as the currently expressed opinions of his nearest neighbors on the dense graph. Since the transition matrix of the dense graph is a combination of the powers of that on the original graph, direct construction of the transition matrix for the dense graph is computationally expensive. To reduce the computation cost, we construct a sparse matrix, which is spectrally close to the dense matrix, nearly linearly in both space and time with respect to the number of edges on the original graph. This sparsified matrix maintains the information of the dense graph, such that the difference between the equilibrium opinions on the dense graph and the sparsified graph is negligible.

Based on the obtained sparsified matrix, we further introduce an iterative algorithm, which has a theoretical convergence guarantee and can approximate the equilibrium opinions of the higher-order FJ model quickly. Finally, we perform extensive experiments on different networks of various scales, and show that the new algorithm achieves high efficiency and effectiveness. In particular, this algorithm is scalable and can approximate the equilibrium opinions of the second-order FJ model on large graphs with millions of nodes. It is expected that the new model sheds light on further understanding of opinion formation, and that the new algorithm can be helpful for various applications, such as the computations of polarization and disagreement in opinion dynamics.

A preliminary version of our work has been published in (Zhang et al. 2020). In this paper, we extend our preliminary results in several directions. First, we present proof details previously omitted in (Zhang et al. 2020) for several important theorems, including the convergence analysis and approximation error bound of the proposed algorithm. Second, we add an illustrative example in Sect. 3.2, in order to better understand and demonstrate the difference between the traditional FJ model and the higher-order model. Finally, we provide additional experimental results for different innate opinion distributions and provide a thorough parameter analysis in Sect. 6.

2 Preliminaries

In this section, some basic concepts in graph and matrix theories, as well as the Friedkin–Johnsen (FJ) opinion dynamics model are briefly reviewed.

2.1 Graphs and related matrices

Consider a simple, connected, undirected social network (graph) \(\mathcal {G}=(\mathcal {V},\mathcal {E})\), where \(\mathcal {V}=\{1,2,\ldots ,n\}\) is the set of n agents and \(\mathcal {E}=\{(i,j)|i,j\in \mathcal {V}\}\) is the set of m edges describing relations among nearest neighbors. The topological and weighted properties of \(\mathcal {G}\) are encoded in its adjacency matrix \(\varvec{ A }=(a_{ij})_{n\times n}\), where \(a_{ij}=a_{ji}=w_e\) if i and j are linked by an edge \(e=(i,j)\in \mathcal {E}\) with weight \(w_e\), and \(a_{ij}=0\) otherwise. Let \(\mathcal {N}_i=\{j|(i,j)\in \mathcal {E}\}\) denote the set of neighbors of node i and \(d_i=\sum _{j\in \mathcal {N}_i} a_{ij}\) denote the degree of i. The diagonal degree matrix of graph \(\mathcal {G}\) is defined to be \(\varvec{ D }= \textrm{diag}(d_1,d_2,\ldots ,d_n)\), and the Laplacian matrix of \(\mathcal {G}\) is \(\varvec{ L }=\varvec{ D }-\varvec{ A }\). Let \(\varvec{ e }_i\) denote the i-th standard basis vector of appropriate dimension. Let \(\textbf{1}\) (\(\textbf{0}\)) be the vector with all entries being ones (zeros). Then, it can be verified that \(\varvec{ L }\textbf{1}=\textbf{0}\). The random walk transition matrix for \(\mathcal {G}\) is defined as \(\varvec{ P }=\varvec{ D }^{-1}\varvec{ A }\), which is row-stochastic (i.e., each row-sum equals 1).
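The matrices defined above can be built in a few lines. The following is a minimal sketch using dense NumPy arrays; the function name `graph_matrices` and the three-node weighted path are illustrative assumptions, not part of the paper.

```python
import numpy as np

def graph_matrices(A):
    """Return (D, L, P) for a symmetric, non-negative adjacency matrix A.

    Assumes the graph is connected, so every degree d_i is positive.
    """
    A = np.asarray(A, dtype=float)
    d = A.sum(axis=1)          # weighted degrees d_i = sum_j a_ij
    D = np.diag(d)             # diagonal degree matrix
    L = D - A                  # graph Laplacian
    P = A / d[:, None]         # random walk transition matrix D^{-1} A
    return D, L, P

# Hypothetical toy graph: a path 1 - 2 - 3 with edge weights 2 and 1.
A = np.array([[0.0, 2.0, 0.0],
              [2.0, 0.0, 1.0],
              [0.0, 1.0, 0.0]])
D, L, P = graph_matrices(A)
```

On this toy graph, `L @ np.ones(3)` is the zero vector and each row of `P` sums to one, matching the two properties stated above.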

2.2 Norms of a vector or matrix

For a non-negative vector \(\varvec{ x }\), \(x_{\max }\) and \(x_{\min }\) denote the maximum and minimum entry, respectively. For an \(n\times n\) matrix \(\varvec{ A }\), \(\sigma _i(\varvec{ A }), i=1,2,\ldots ,n\) denote its singular values. Given a vector \(\varvec{ x }\), its 2-norm is defined as \(\Vert \varvec{ x } \Vert _2=\root 2 \of {\sum _i{|x_i|^2}}\) and the \(\infty\)-norm is defined as \(\Vert \varvec{ x } \Vert _{\infty }=\max _i{|x_i|}\). It is easy to verify that \(\Vert \varvec{ x } \Vert _{\infty }\le \Vert \varvec{ x } \Vert _2\le \sqrt{n}\Vert \varvec{ x } \Vert _{\infty }\) for any n-dimensional vector \(\varvec{ x }\). For a matrix \(\varvec{ A }\), its 2-norm is defined to be \(\Vert \varvec{ A } \Vert _2=\max _{\varvec{ x }} \Vert \varvec{ A }\varvec{ x } \Vert _2/\Vert \varvec{ x } \Vert _2\). By definition, \(\Vert \varvec{ A }\varvec{ x } \Vert _{2}\le \Vert \varvec{ A } \Vert _2\Vert \varvec{ x } \Vert _2\). It is known that the 2-norm of the matrix \(\varvec{ A }\) is equal to its maximum singular value \(\sigma _{\max }\), satisfying \(\Vert \varvec{ x }^\top \varvec{ A }\varvec{ y } \Vert _2\le \sigma _{\max }\Vert \varvec{ x } \Vert _2\Vert \varvec{ y } \Vert _2\) for any vectors \(\varvec{ x }\) and \(\varvec{ y }\) (Golub and Van Loan 2012).

2.3 Friedkin-Johnsen opinion dynamics model

The Friedkin-Johnsen (FJ) model is a classic opinion dynamics model (Friedkin and Johnsen 1990). For a specific topic, the FJ model assumes that each agent \(i\in \mathcal {V}\) is associated with an innate opinion \(s_i\in [0,1]\), where higher values signify more favorable opinions, and a resistance parameter \(\alpha _i\in (0,1]\) quantifying the agent’s stubbornness, with a higher value corresponding to a lower tendency to conform with his neighbors’ opinions. Let \(\varvec{ x }^{(t)}\) denote the opinion vector of all agents at time t, with element \(x_i^{(t)}\) representing the opinion of agent i at that time. At every timestep, each agent updates his opinion by taking a convex combination of his innate opinion and the weighted average of the expressed opinions of his neighbors in the previous timestep. Mathematically, the opinion of agent i evolves according to the following rule:

\[x_i^{(t+1)}=\alpha _i s_i+(1-\alpha _i)\sum _{j\in \mathcal {N}_i}\frac{a_{ij}}{d_i}\,x_j^{(t)}. \tag{1}\]

The evolution rule can be rewritten in matrix form as

\[\varvec{ x }^{(t+1)}=\varvec{\varLambda }\varvec{ s }+(\varvec{ I }-\varvec{\varLambda })\varvec{ P }\varvec{ x }^{(t)}, \tag{2}\]

where \(\varvec{ s }=(s_1,s_2,\ldots ,s_n)^\top\) is the vector of innate opinions, \(\varvec{\varLambda }\) denotes the diagonal matrix \(\textrm{diag}(\alpha _1,\alpha _2,\ldots ,\alpha _n)\), and \(\varvec{ I }\) is the identity matrix.

It has been proved (Das et al. 2013) that the above opinion formation process converges to a unique equilibrium \(\varvec{ z }\) when \(\alpha _i>0\) for all \(i\in \mathcal {V}\). The equilibrium vector \(\varvec{ z }\) can be obtained as the unique fixed point of equation (2), i.e.,

\[\varvec{ z }=\left( \varvec{ I }-(\varvec{ I }-\varvec{\varLambda })\varvec{ P }\right) ^{-1}\varvec{\varLambda }\varvec{ s }. \tag{3}\]

The i-th entry \(z_i\) of \(\varvec{ z }\) is the expressed opinion of agent i.

A straightforward way to calculate the equilibrium vector \(\varvec{ z }\) requires inverting a matrix, which is expensive and intractable for large networks. In (Chan et al. 2019), the iteration process of the opinion dynamics model is used to obtain an approximation of vector \(\varvec{ z }\), which has a theoretical guarantee of convergence. The method is very efficient, scalable to networks with millions of nodes.
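To make the contrast between the two approaches concrete, here is a minimal sketch that computes the FJ equilibrium both by a direct linear solve and by iterating the update rule. It assumes the matrix-form update \(\varvec{ x }^{(t+1)}=\varvec{\varLambda }\varvec{ s }+(\varvec{ I }-\varvec{\varLambda })\varvec{ P }\varvec{ x }^{(t)}\); the function names, the stopping tolerance, and the four-node cycle are illustrative assumptions and do not reproduce the implementation of Chan et al. (2019).

```python
import numpy as np

def fj_equilibrium_direct(P, alpha, s):
    """Solve (I - (I - Lambda) P) z = Lambda s with a dense solver (O(n^3))."""
    n = len(s)
    M = np.eye(n) - (1.0 - alpha)[:, None] * P   # row i of P scaled by 1 - alpha_i
    return np.linalg.solve(M, alpha * s)

def fj_equilibrium_iterative(P, alpha, s, tol=1e-10, max_iter=10_000):
    """Iterate x <- Lambda s + (I - Lambda) P x until successive iterates agree."""
    x = s.copy()
    for _ in range(max_iter):
        x_new = alpha * s + (1.0 - alpha) * (P @ x)
        if np.max(np.abs(x_new - x)) < tol:
            return x_new
        x = x_new
    return x

# Hypothetical toy instance: unweighted 4-cycle, equal stubbornness,
# a single agent with a favorable innate opinion.
A = np.array([[0, 1, 0, 1],
              [1, 0, 1, 0],
              [0, 1, 0, 1],
              [1, 0, 1, 0]], dtype=float)
P = A / A.sum(axis=1, keepdims=True)
alpha = np.full(4, 0.5)
s = np.array([1.0, 0.0, 0.0, 0.0])

z_direct = fj_equilibrium_direct(P, alpha, s)
z_iter = fj_equilibrium_iterative(P, alpha, s)
```

Each iteration costs one matrix-vector product, which is proportional to the number of edges when \(\varvec{ P }\) is stored in a sparse format; the dense arrays here are used only to keep the sketch short.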

3 Higher-order opinion dynamics model

The classical FJ model has many advantages; for example, it captures some complex human behavior in social networks. However, this model considers only the interactions among nearest neighbors, without explicitly considering the higher-order interactions existing in social networks and social media. To fix this deficiency, in this section, we generalize the FJ model to a higher-order setting by using the random walk matrix polynomials describing higher-order random walks.

3.1 Random walk matrix polynomial

For a network \(\mathcal {G}\), its random walk matrix polynomial is defined as follows (Cheng et al. 2015):

Definition 1

Let \(\varvec{ A }\) and \(\varvec{ D }\) be, respectively, the adjacency matrix and diagonal degree matrix of a graph \(\mathcal {G}\). For a non-negative vector \(\varvec{\beta }=(\beta _1,\beta _2,\ldots ,\beta _T)\) satisfying \(\sum _{r=1}^T \beta _r=1\), the matrix

\[\varvec{ L }_{\varvec{\beta }}(\mathcal {G})=\varvec{ D }-\sum _{r=1}^T\beta _r\varvec{ D }\left( \varvec{ D }^{-1}\varvec{ A }\right) ^r \tag{4}\]

is a T-degree random walk matrix polynomial of \(\mathcal {G}\).

The Laplacian matrix \(\varvec{ L }\) is a particular case of \(\varvec{ L }_{\varvec{\beta }}(\mathcal {G})\), which can be obtained from \(\varvec{ L }_{\varvec{\beta }}(\mathcal {G})\) by setting \(T=1\) and \(\beta _1=1\). In fact, it can be proved that, for any \(\varvec{\beta }\), there always exists a graph \(\mathcal {G}'\) with loops, whose Laplacian matrix is \(\varvec{ L }_{\varvec{\beta }}(\mathcal {G})\), as characterized by the following theorem.

Theorem 1

(Proposition 25 in Cheng et al. (2015)) The random walk matrix polynomial \(\varvec{ L }_{\varvec{\beta }}(\mathcal {G})\) is a Laplacian matrix.

Define matrix \(\varvec{ L }_{\mathcal {G}_r}=\varvec{ D }-\varvec{ D }\left( \varvec{ D }^{-1}\varvec{ A }\right) ^r\), which is a particular case of matrix \(\varvec{ L }_{\varvec{\beta }}(\mathcal {G})\) corresponding to \(T=r\) and \(\beta _r=1\). In fact, \(\varvec{ L }_{\mathcal {G}_r}\) is the Laplacian matrix of graph \(\mathcal {G}_r\), constructed from graph \(\mathcal {G}\) by performing r-step random walks on graph \(\mathcal {G}\). The ij-th element of the adjacency matrix \(\varvec{ A }_{\mathcal {G}_r}\) for graph \(\mathcal {G}_r\) is equal to the product of the degree \(d_i\) for node i in \(\mathcal {G}\) and the probability that a walker starts from node i and ends at node j after performing r-step random walks in \(\mathcal {G}\). Thus, the matrix polynomial \(\varvec{ L }_{\varvec{\beta }}(\mathcal {G})\) is a combination of matrices \(\varvec{ L }_{\mathcal {G}_r}\) for \(r=1,2,\ldots ,T\).

Based on the random walk matrix polynomials, one can define a generalized transition matrix \(\varvec{ P }^*=\varvec{ P }^*_{\varvec{\beta }}\) for graph \(\mathcal {G}\) as follows.

Definition 2

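Given an undirected weighted graph \(\mathcal {G}\) and a coefficient vector \(\varvec{\beta }=(\beta _1,\beta _2,\ldots ,\beta _T)\) with \(\sum _{r=1}^T\beta _r=1\), the matrix

\[\varvec{ P }^*_{\varvec{\beta }}=\sum _{r=1}^T\beta _r\left( \varvec{ D }^{-1}\varvec{ A }\right) ^r=\varvec{ I }-\varvec{ D }^{-1}\varvec{ L }_{\varvec{\beta }}(\mathcal {G}) \tag{5}\]

is a T-order transition matrix of \(\mathcal {G}\) with respect to vector \(\varvec{\beta }\).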

Note that the generalized transition matrix \(\varvec{ P }^*\) for graph \(\mathcal {G}\) is actually the transition matrix for another graph \(\mathcal {G}'\).
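The construction can be checked numerically on a small graph. The sketch below assumes that the generalized transition matrix is the \(\varvec{\beta }\)-weighted sum of powers of \(\varvec{ D }^{-1}\varvec{ A }\), and that \(\varvec{ L }_{\varvec{\beta }}(\mathcal {G})=\varvec{ D }-\varvec{ D }\varvec{ P }^*\), in line with the expression for \(\varvec{ L }_{\mathcal {G}_r}\) given above; the function name and the toy graph are illustrative assumptions.

```python
import numpy as np

def higher_order_transition(A, beta):
    """Return P* = sum_r beta_r (D^{-1} A)^r for r = 1, ..., T (dense sketch)."""
    A = np.asarray(A, dtype=float)
    beta = np.asarray(beta, dtype=float)
    if np.any(beta < 0) or not np.isclose(beta.sum(), 1.0):
        raise ValueError("beta must be non-negative and sum to 1")
    d = A.sum(axis=1)
    P = A / d[:, None]
    P_star = np.zeros_like(P)
    P_power = np.eye(len(d))
    for b in beta:
        P_power = P_power @ P      # now holds P^r for the current r
        P_star += b * P_power
    return P_star

# Hypothetical toy graph: weighted path 1 - 2 - 3 with weights 2 and 1.
A = np.array([[0.0, 2.0, 0.0],
              [2.0, 0.0, 1.0],
              [0.0, 1.0, 0.0]])
D = np.diag(A.sum(axis=1))
P_star = higher_order_transition(A, [0.3, 0.7])
L_beta = D - D @ P_star            # random walk matrix polynomial
```

Here `P_star` is row-stochastic and `L_beta` is symmetric with zero row sums, which is what one expects from the Laplacian of a weighted graph with loops. Forming matrix powers densely is only feasible for small graphs, which is the motivation for the sparsification discussed later.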

3.2 Higher-order FJ model

To introduce the higher-order FJ model, first modify the update rule in equation (2) by replacing \(\varvec{ P }\) with \(\varvec{ P }^*\). In other words, the opinion vector evolves as follows:

\[\varvec{ x }^{(t+1)}=\varvec{\varLambda }\varvec{ s }+(\varvec{ I }-\varvec{\varLambda })\varvec{ P }^*\varvec{ x }^{(t)}. \tag{6}\]

In this way, individuals update their opinions by incorporating those of their higher-order neighborhoods at each timestep. Moreover, by adjusting the coefficient vector \(\varvec{\beta }\), one can choose different weights for neighbors of different orders.

Note that for the case of \(\varvec{ P }^*=\varvec{ P }\), the higher-order FJ model is reduced to the classic FJ model. For the case of \(\varvec{ P }^*\ne \varvec{ P }\), however, the higher-order FJ model can lead to very different results in comparison with the standard FJ model, as shown in the following example.

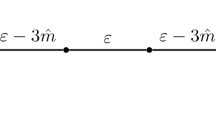

Example. Consider the tree shown in Fig. 1. The nodes from the center to the periphery are colored in red, yellow and blue, respectively. Suppose that for the red node the pair \((s_i,\alpha _i)\) is given by (1, 1), implying that the center node has a favorable opinion and is insusceptible to persuasion by others. Suppose further that the yellow and blue nodes have values (0, 0.6) and (0, 0.01), respectively. Then, calculate the equilibrium opinion vector for the following three cases:

- I. \(\beta _1=1,\beta _2=0\). This case corresponds to the classic FJ model. At every timestep, the opinion of each node is influenced by the opinions of its nearest neighbors. At equilibrium, the expressed opinions of red, yellow and blue nodes are 1, 0.181 and 0.179, respectively. This is consistent with the intuition, since the red and yellow nodes are stubborn and thus prone to their innate opinions, while the blue nodes are susceptible to their neighboring nodes, the yellow ones.

- II. \(\beta _1=0,\beta _2=1\). This case is associated with the second-order FJ model. In this case, only the influences of the second-order neighbors are considered. The equilibrium expressed opinions of the red, yellow and blue nodes are 1, 0 and 0.971, respectively. This can be explained as follows. Since any yellow node is the second-order neighbor of the other two yellow nodes, they are influenced by each other, so they all stick to their innate opinions. In contrast, the blue nodes are highly affected by the center node.

- III. \(\beta _1=\beta _2=0.5\). This is in fact a hybrid case of the above two cases, with interactions between a node and both of its first- and second-order neighbors with equal weights. For this case, the equilibrium opinions for red, yellow and blue nodes are 1, 0.142 and 0.351, respectively. The opinion of each node lies between the opinions of the above two cases.

Besides the expressed opinions of individual nodes, the sums of the expressed opinions over all nodes also differ significantly across the three cases: they are equal to 2.617, 6.826 and 3.532, respectively.

This example demonstrates that the interactions between nodes and their higher-order neighbors can have substantial impact on network opinions. Moreover, as will be seen in Sect. 6.2, the higher-order interactions also strongly affect the opinion dynamics on real-world social networks.
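The three cases of the example can be recomputed with a short script. The sketch below rests on an assumption about Fig. 1 that the text does not state explicitly: the tree is taken to have one red center, three yellow children, and two blue leaves under each yellow node (ten nodes in total), which is the structure consistent with the reported values. The helper names are illustrative.

```python
import numpy as np

def tree_example():
    """Assumed tree: node 0 red; nodes 1-3 yellow; nodes 4-9 blue (two per yellow)."""
    n = 10
    A = np.zeros((n, n))
    for y in (1, 2, 3):
        for nb in (0, 2 * y + 2, 2 * y + 3):   # center plus two blue leaves
            A[y, nb] = A[nb, y] = 1.0
    s = np.zeros(n)
    s[0] = 1.0                                  # only the center is favorable
    alpha = np.array([1.0] + [0.6] * 3 + [0.01] * 6)
    return A, s, alpha

def equilibrium(A, s, alpha, beta):
    """Solve (I - (I - Lambda) P*) z = Lambda s, with P* = sum_r beta_r P^r."""
    P = A / A.sum(axis=1, keepdims=True)
    P_star = sum(b * np.linalg.matrix_power(P, r)
                 for r, b in enumerate(beta, start=1))
    M = np.eye(len(s)) - (1.0 - alpha)[:, None] * P_star
    return np.linalg.solve(M, alpha * s)

A, s, alpha = tree_example()
results = {}
for name, beta in (("I", [1.0, 0.0]), ("II", [0.0, 1.0]), ("III", [0.5, 0.5])):
    z = equilibrium(A, s, alpha, beta)
    results[name] = z
    # Print the red, yellow and blue opinions and the total opinion.
    print(name, np.round(z[[0, 1, 4]], 3), round(float(z.sum()), 3))
```

Under this assumed tree, the per-node opinions agree with the values quoted in the three cases to three decimals, and the totals agree with the quoted sums up to the rounding of the per-node values.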

4 Convergence analysis

In this section, the convergence of the higher-order FJ model is analyzed. It will be shown that if all \(\alpha _i\) are positive, the model has a unique equilibrium and will converge to that equilibrium after sufficiently many iterations. The high-level ideas of our proof are adapted from Das et al. (2013).

First, recall the Gershgorin Circle Theorem.

Lemma 1

(Gershgorin Circle Theorem Bell (1965)) Given a square matrix \(\varvec{ A }\in {\mathbb {R}}^{n\times n}\), let \(R_i=\sum _{j\ne i}|a_{ij}|\) be the sum of the absolute values of the non-diagonal entries in the i-th row and \(D(a_{ii},R_i)\in {\mathbb {C}}\) be a closed disc centered at \(a_{ii}\) with radius \(R_i\). Then, every eigenvalue of \(\varvec{ A }\) lies in at least one of the discs \(D(a_{ii},R_i)\).

Now, the following main result is established.

Theorem 2

The higher-order FJ model defined in (6) has a unique equilibrium if \(\alpha _i>0\) for all \(i \in \mathcal {V}\).

Proof

Any equilibrium \(\varvec{ z }^*\in {\mathbb {R}}^n\) of (6) must be a solution of the following linear system:

Let \(\varvec{ M }=\varvec{ I }-(\varvec{ I }-\varvec{\varLambda })\varvec{ P }^*\). It suffices to show that \(\varvec{ M }\) is non-singular. First, every diagonal entry of \(\varvec{ M }\) is non-negative and every non-diagonal entry is non-positive, since every entry of \(\varvec{ P }^*\) lies in the interval [0, 1] and \(1-\alpha _i\in [0,1)\). Thus, since \(\varvec{ P }^*\) is row-stochastic, for any row i of \(\varvec{ M }\), the sum of absolute values of its non-diagonal elements is

\[\sum _{j\ne i}|M_{ij}|=(1-\alpha _i)\sum _{j\ne i}P^*_{ij}=(1-\alpha _i)\left( 1-P^*_{ii}\right) =M_{ii}-\alpha _i,\]

where \(M_{ij}\) and \(P^*_{ij}\) denote the (i, j)th elements of \(\varvec{ M }\) and \(\varvec{ P }^*\), respectively. Then, according to Lemma 1, every eigenvalue \(\lambda\) of \(\varvec{ M }\) lies within at least one of the discs \(\{z:|z-M_{ii}|\le M_{ii}-\alpha _i\}\). Every point of such a disc has real part at least \(\alpha _i>0\), so the set of all eigenvalues of \(\varvec{ M }\) excludes 0. Therefore, matrix \(\varvec{ M }\) is invertible, and thus the equilibrium is unique. \(\square\)
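The Gershgorin argument can be verified numerically on a small instance. The following sketch builds \(\varvec{ M }=\varvec{ I }-(\varvec{ I }-\varvec{\varLambda })\varvec{ P }^*\) for a hypothetical triangle graph with \(\varvec{\beta }=(0.5,0.5)\) and arbitrary resistance values, then compares the disc radii with \(M_{ii}-\alpha _i\); all names and numbers are illustrative assumptions.

```python
import numpy as np

def gershgorin_data(P_star, alpha):
    """Return M, its diagonal, its Gershgorin radii, and its eigenvalues."""
    n = len(alpha)
    M = np.eye(n) - (1.0 - alpha)[:, None] * P_star
    diag = np.diag(M).copy()
    radii = np.abs(M).sum(axis=1) - np.abs(diag)   # off-diagonal absolute row sums
    eigvals = np.linalg.eigvals(M)
    return M, diag, radii, eigvals

# Hypothetical instance: unweighted triangle, P* = 0.5 P + 0.5 P^2.
A = np.ones((3, 3)) - np.eye(3)
P = A / A.sum(axis=1, keepdims=True)
P_star = 0.5 * P + 0.5 * (P @ P)
alpha = np.array([0.2, 0.5, 0.9])

M, diag, radii, eigvals = gershgorin_data(P_star, alpha)
```

Each radius equals `diag[i] - alpha[i]`, so every disc lies in the half-plane with real part at least `alpha[i]`, and the computed eigenvalues stay away from zero accordingly.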

Hence, \(\varvec{ z }^*= \left( \varvec{ I }-(\varvec{ I }-\varvec{\varLambda })\varvec{ P }^*\right) ^{-1}\varvec{\varLambda }\varvec{ s }\) is the unique equilibrium of the opinion dynamics model defined by (6). Next, it will be proved that after sufficiently many iterations, the new higher-order FJ model will converge to this equilibrium.

Theorem 3

If \(\alpha _i>0\) for all \(i\in \mathcal {V}\), then the higher-order FJ model converges to its unique equilibrium \(\varvec{ z }^*= \left( \varvec{ I }-(\varvec{ I }-\varvec{\varLambda })\varvec{ P }^*\right) ^{-1}\varvec{\varLambda }\varvec{ s }\).

Proof

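Define the error vector \(\varvec{\epsilon }^{(t)}\) at the t-th iteration as \(\varvec{\epsilon }^{(t)}=\varvec{ x }^{(t)}-\varvec{ z }^*\). It will be shown that as t approaches infinity, the error term \(\varvec{\epsilon }^{(t)}\) tends to zero. Substituting (6) into the formula of \(\varvec{\epsilon }^{(t)}\) and using the fact that \(\varvec{ z }^*\) is a fixed point of (6), one obtains \(\varvec{\epsilon }^{(t+1)}=(\varvec{ I }-\varvec{\varLambda })\varvec{ P }^*\varvec{\epsilon }^{(t)}\). In addition, because the sum of all the entries in each row of \(\varvec{ P }^*\) is one and \((1-\alpha _i)\in [0,1)\), the elements of \(\varvec{\epsilon }^{(t)}\) become smaller as t grows. This can be explained as follows. Let \(\epsilon ^{(t+1)}_{\max }\) be the element of vector \(\varvec{\epsilon }^{(t+1)}\) that has the largest absolute value, attained at some index i. Then,

\[\left| \epsilon ^{(t+1)}_{\max }\right| =(1-\alpha _i)\left| \sum _{j=1}^n P^*_{ij}\epsilon ^{(t)}_j\right| \le (1-\alpha _i)\sum _{j=1}^n P^*_{ij}\left| \epsilon ^{(t)}_j\right| \le \left( 1-\min _{k\in \mathcal {V}}\alpha _k\right) \left| \epsilon ^{(t)}_{\max }\right| .\]

Since \(\min _{k\in \mathcal {V}}\alpha _k>0\), the largest absolute error decays geometrically to zero, which completes the proof of the theorem. \(\square\)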
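The convergence statement can be illustrated by tracking the error \(\Vert \varvec{ x }^{(t)}-\varvec{ z }^* \Vert _{\infty }\) along the iteration. The sketch below assumes the update \(\varvec{ x }^{(t+1)}=\varvec{\varLambda }\varvec{ s }+(\varvec{ I }-\varvec{\varLambda })\varvec{ P }^*\varvec{ x }^{(t)}\) started from \(\varvec{ x }^{(0)}=\varvec{ s }\); the toy graph, the parameter values and the function name are illustrative assumptions.

```python
import numpy as np

def error_norms(P_star, alpha, s, steps=20):
    """Return the max-norm errors ||x^(t) - z*|| for t = 0, ..., steps - 1."""
    n = len(s)
    M = np.eye(n) - (1.0 - alpha)[:, None] * P_star
    z_star = np.linalg.solve(M, alpha * s)       # reference equilibrium
    x = s.copy()
    norms = []
    for _ in range(steps):
        norms.append(np.max(np.abs(x - z_star)))
        x = alpha * s + (1.0 - alpha) * (P_star @ x)
    return np.array(norms)

# Hypothetical instance: unweighted triangle with P* = 0.5 P + 0.5 P^2.
A = np.ones((3, 3)) - np.eye(3)
P = A / A.sum(axis=1, keepdims=True)
P_star = 0.5 * P + 0.5 * (P @ P)
alpha = np.array([0.2, 0.5, 0.9])
s = np.array([1.0, 0.0, 0.5])

norms = error_norms(P_star, alpha, s)
```

On this instance every step shrinks the error by at least the factor `1 - alpha.min()`, which is the contraction that the row-stochasticity of \(\varvec{ P }^*\) yields in the proof.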

5 Fast estimation of equilibrium opinion vector

Computing the equilibrium expressed opinion vector \(\varvec{ z }^*\) requires calculating matrix \(\varvec{ P }^*\) and inverting a matrix, both of which are time-consuming. In general, for a network \(\mathcal {G}\), sparse or dense, its r-step random walk graph \(\mathcal {G}_r\) could be very dense. In particular, for a small-world network with a moderately large r, its r-step random walk graph \(\mathcal {G}_r\) is a weighted and almost complete graph. This makes it infeasible to compute the generalized transition matrix \(\varvec{ P }^*\) for huge networks.

In this section, the spectral graph sparsification technique is utilized to obtain an approximation of matrix \(\varvec{ P }^*\). Then, a fast convergent algorithm is developed to approximate the expressed opinion vector \(\varvec{ z }^*\), which avoids matrix inverse operation. The pseudocode of this new algorithm is shown in Algorithm 1.

5.1 Random-walk matrix polynomial sparsification

First, we introduce the concept of spectral similarity and the technique of random-walk matrix polynomial sparsification.

Definition 3

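(Spectral Similarity of Graphs; Spielman and Srivastava 2011) Consider two weighted undirected networks \(\mathcal {G}=(\mathcal {V},\mathcal {E})\) and \({\tilde{\mathcal{G}}} = ({\mathcal{V}},{\tilde{\mathcal{E}}})\). Let \(\varvec{ L }\) and \(\varvec{\tilde{ L }}\) denote, respectively, their Laplacian matrices. Graphs \(\mathcal {G}\) and \({\tilde{\mathcal{G}}}\) are \((1+\epsilon )\)-spectrally similar if

\[(1-\epsilon )\,\varvec{ x }^\top \varvec{\tilde{ L }}\varvec{ x }\le \varvec{ x }^\top \varvec{ L }\varvec{ x }\le (1+\epsilon )\,\varvec{ x }^\top \varvec{\tilde{ L }}\varvec{ x }\quad \text {for all } \varvec{ x }\in {\mathbb {R}}^n.\]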

Next, recall the sparsification algorithm of Cheng et al. (2015). For a given graph \(\mathcal {G}=(\mathcal {V},\mathcal {E})\), start from an empty graph \({\tilde{\mathcal{G}}}\) with the same node set \(\mathcal {V}\) and an empty edge set. Then add M edges into the sparsifier \({\tilde{\mathcal{G}}}\) iteratively by a sampling technique. At each iteration, randomly pick an edge \(e=(u,v)\) from \(\mathcal {E}\) as an intermediate edge and an integer r from \(\{1,2,\ldots ,T\}\) as the length of the random-walk path. Then, run the PathSampling(e, r) algorithm (Cheng et al. 2015) to sample an edge by performing r-step random walks, and add the sampled edge, together with its corresponding weight, into the sparsifier \({\tilde{\mathcal{G}}}\). Note that multiple edges between the same pair of nodes are merged into a single edge by summing up their weights. Finally, the algorithm generates a sparsifier \({\tilde{\mathcal{G}}}\) for the original graph \(\mathcal {G}\) with no more than M edges.

In Cheng et al. (2015), an algorithm is designed to obtain a sparsifier \({\tilde{\mathcal{G}}}\) with \(O(n\epsilon ^{-2}\log n)\) edges for \(\varvec{ L }_{\varvec{\beta }}(\mathcal {G})\), which consists of two steps: The first step uses random walk path sampling to get an initial sparsifier with \(O(Tm\epsilon ^{-2}\log n)\) edges. The second step utilizes the standard spectral sparsification algorithm proposed in Spielman and Srivastava (2011) to further reduce the edge number to \(O(n\epsilon ^{-2}\log n)\). Since a sparsifier with \(O(Tm\epsilon ^{-2}\log n)\) edges is sparse enough for the present purposes, only the first step will be taken, while skipping the second step, to avoid unnecessary computations.

To sample an edge by performing r-step random walks, the procedure of PathSampling algorithm Cheng et al. (2015) is characterized in Lines 5–9 of Algorithm 1. To sample an edge, first draw a random integer k from \(\{1,2,\ldots ,r\}\) and then perform, respectively, \((k-1)\)-step and \((r-k)\)-step walks starting from two end nodes of the edge \(e=(u,v)\). This process samples a length-r path \(\varvec{ p }=(u_0,u_1,\ldots ,u_r)\). At the same time, compute

The algorithm returns the two endpoints of path \(\varvec{ p }\) as the sample edge \((u_0,u_r)\) and the quantity \(Z(\varvec{ p })\) for the calculation of weight.
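The sampling procedure just described can be sketched as follows. Two points are assumptions rather than statements from the text: the quantity \(Z(\varvec{ p })\) is taken to be \(\sum _{i=1}^{r}2/a_{u_{i-1}u_i}\), the form commonly used with PathSampling in Cheng et al. (2015), and neighbors are drawn from a dense adjacency row, which costs O(n) per step instead of the \(O(\log n)\) obtainable with a suitable sampling structure. The function names are illustrative.

```python
import numpy as np

def weighted_step(A, u, rng):
    """Move from node u to a neighbor v with probability a_uv / d_u."""
    w = A[u]
    return int(rng.choice(len(w), p=w / w.sum()))

def path_sampling(A, e, r, rng):
    """Sample a length-r path through edge e = (u, v); return endpoints, Z, path."""
    u, v = e
    k = int(rng.integers(1, r + 1))          # k uniform in {1, ..., r}
    left = [u]
    for _ in range(k - 1):                   # (k-1)-step walk starting from u
        left.append(weighted_step(A, left[-1], rng))
    right = [v]
    for _ in range(r - k):                   # (r-k)-step walk starting from v
        right.append(weighted_step(A, right[-1], rng))
    path = left[::-1] + right                # u_0, ..., u_{k-1}=u, u_k=v, ..., u_r
    # Assumed form of Z(p): sum over path edges of 2 / (edge weight).
    Z = sum(2.0 / A[a, b] for a, b in zip(path[:-1], path[1:]))
    return (path[0], path[-1]), Z, path

# Hypothetical toy graph: unweighted cycle on 6 nodes.
n = 6
A = np.zeros((n, n))
for i in range(n):
    A[i, (i + 1) % n] = A[(i + 1) % n, i] = 1.0

rng = np.random.default_rng(0)
edge, Z, path = path_sampling(A, (0, 1), 4, rng)
```

For an unweighted graph every term of the assumed \(Z(\varvec{ p })\) equals 2, so the returned value is simply 2r; the weight assigned to the sampled edge in the sparsifier is not computed here, since its exact expression is given by Algorithm 1 rather than by this passage.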

Theorem 4

(Spectral Sparsifiers of Random-Walk Matrix Polynomials; Cheng et al. 2015) For a graph \(\mathcal {G}\) with random-walk matrix polynomial

\[\varvec{ L }_{\varvec{\beta }}(\mathcal {G})=\varvec{ D }-\sum _{r=1}^T\beta _r\varvec{ D }\left( \varvec{ D }^{-1}\varvec{ A }\right) ^r,\]

where \(\sum _{r=1}^T \beta _r=1\) and \(\beta _r\) are non-negative, one can construct, in time \(O(T^2m\epsilon ^{-2}\log ^2 n)\), a \((1+\epsilon )\)-spectral sparsifier, \(\varvec{\tilde{ L }}\), with \(O(n\epsilon ^{-2}\log n)\) non-zeros.

Now one can approximate the generalized transition matrix using the Laplacian \({\tilde{\varvec{L}}}( {\tilde{\mathcal{G}}} )\) of the sparse graph \({\tilde{\mathcal{G}}}\):

\[\varvec{ P }^*=\varvec{ I }-\varvec{ D }^{-1}\varvec{ L }_{\varvec{\beta }}(\mathcal {G})\approx \varvec{ I }-\varvec{ D }^{-1}{\tilde{\varvec{L}}}( {\tilde{\mathcal{G}}} )=\varvec{\tilde{ P }}^*.\]

Complexity analysis. Regarding the time and space complexity of the sparsification process of Algorithm 1, the main time cost of sparsification (Lines 2–9) is the M calls of the PathSampling routine. In PathSampling, it requires \(O(\log n)\) time to sample a neighbor from the weighted network, and thus takes \(O(r\log n)\) time to sample a length-r path. In total, the time complexity of the sparsification process is \(O(MT\log n)\). As for space complexity, it takes \(O(n+m)\) space to store the original graph \(\mathcal {G}\) and additional O(M) space to store the sparsifier \({\tilde{\mathcal{G}}}\). Thus, for appropriate size M, the sparsifier is computable.

5.2 Approximating the equilibrium opinion vector via the iteration method

With the spectral graph sparsification technique, it is possible to approximate \(\varvec{ P }^*\) with a sparse matrix. Nevertheless, directly computing the equilibrium still involves the matrix inverse operation, which is computationally expensive for large networks, such as those with millions of nodes. To approximate the equilibrium vector \(\varvec{ z }^*\) using the recurrence defined in (6) and multiple iterations, in this section, we develop a convergent approximation algorithm. For this purpose, an important lemma is first introduced.

Lemma 2

(Lemma 4 in Qiu et al. (2019)) Let \(\varvec{\mathcal { L }}=\varvec{ D }^{-1/2}\varvec{ L }\varvec{ D }^{-1/2}\), \(\varvec {{\tilde{\mathcal{L}}}} =\varvec{ D }^{-1/2}{\tilde{\varvec{L}}}\varvec{ D }^{-1/2}\) and \(\epsilon <0.5\). Then all the singular values of \(\varvec {{\tilde{\mathcal{L}}}} -\varvec{\mathcal { L }}\) satisfy that for all \(i\in \{1,2,\ldots ,n\}\), \(\sigma _i(\varvec {{\tilde{\mathcal{L}}}} -\varvec{\mathcal { L }})<4\epsilon\).

Now, we are in a position to introduce a new iteration method for approximating the equilibrium vector \(\varvec{ z }^*\). First, set \(\varvec{\tilde{ x }}^{(0)}=\varvec{ x }^{(0)}=\varvec{ s }\). Then, in every timestep, update the opinion vector with the approximate transition matrix \(\varvec{\tilde{ P }}^*\), i.e., \(\varvec{\tilde{ x }}^{(t+1)}=\varvec{\varLambda }\varvec{ s }+(\varvec{ I }-\varvec{\varLambda })\varvec{\tilde{ P }}^*\varvec{\tilde{ x }}^{(t)}\). Let \(\alpha _{\min }\) be the smallest value of \(\alpha _i\) among all \(i\in \mathcal {V}\).

Lemma 3

For every \(t\ge 0\),

Proof

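Inequality (12) is proved by induction. The case of \(t=0\) is trivial, since \(\varvec{\tilde{ x }}^{(0)}=\varvec{ x }^{(0)}=\varvec{ s }\). Assume that (12) holds for some integer t. Then, it remains to show that (12) also holds for \(t+1\). To this end, split \(\Vert \varvec{\tilde{ x }}^{(t+1)}-\varvec{ x }^{(t+1)} \Vert _{\infty }\) into two terms by using the triangle inequality:

\[\Vert \varvec{\tilde{ x }}^{(t+1)}-\varvec{ x }^{(t+1)} \Vert _{\infty }\le \Vert (\varvec{ I }-\varvec{\varLambda })(\varvec{\tilde{ P }}^*-\varvec{ P }^*)\varvec{ x }^{(t)} \Vert _{\infty }+\Vert (\varvec{ I }-\varvec{\varLambda })\varvec{\tilde{ P }}^*(\varvec{\tilde{ x }}^{(t)}-\varvec{ x }^{(t)}) \Vert _{\infty }. \tag{14}\]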

For every coordinate of the first term in (14), an upper bound can be derived as follows:

where the second inequality is obtained by using the inequality that \(\varvec{ x }^\top \varvec{ A }\varvec{ y }\le \sigma _{\max }(\varvec{ A }) \Vert \varvec{ x }\Vert _2 \Vert \varvec{ y }\Vert _2\) for any matrix \(\varvec{ A }\), the third one follows from \(\Vert \varvec{ D }^{-1}\left( \varvec{ L }_{\varvec{\beta }}-{\tilde{\varvec{L}}}_{\varvec{\beta }}\right) \Vert _2\le \Vert \varvec{ D }^{-1/2}\Vert _2\Vert \varvec{ D }^{1/2}\Vert _2\Vert \varvec{\mathcal { L }}_{\varvec{\beta }}-\varvec {{\tilde{\mathcal{L}}}} _{\varvec{\beta }}\Vert _2\), and the last inequality follows from the fact that \(x^{(t)}_i\le 1\) for all \(i\in \{1,2,\ldots ,n\}\).

Next, consider the second term in (14). One has

where the equality is due to the fact that \(\Vert \varvec{ I }-\varvec{ D }^{-1}\varvec{\tilde{ L }}_{\varvec{\beta }} \Vert _{\infty }=1\), which can be understood as follows. Since every entry of \(\varvec{ I }-\varvec{ D }^{-1}\varvec{\tilde{ L }}_{\varvec{\beta }}\) is non-negative and \(\varvec{\tilde{ L }}_{\varvec{\beta }}\textbf{1}=\textbf{0}\), one has

Substituting (15) and (16) into (14), one obtains

as required. \(\square\)
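The split used in the induction step can be written out as follows. This is a reconstruction from the definitions in the text, under the assumption (suggested by the norms appearing in the proof) that \(\varvec{ P }^*=\varvec{ I }-\varvec{ D }^{-1}\varvec{ L }_{\varvec{\beta }}\) and \(\varvec{\tilde{ P }}^*=\varvec{ I }-\varvec{ D }^{-1}\varvec{\tilde{ L }}_{\varvec{\beta }}\). It is not a reproduction of the numbered displays of the proof.

```latex
\begin{align*}
\varvec{\tilde{x}}^{(t+1)}-\varvec{x}^{(t+1)}
 &= (\varvec{I}-\varvec{\varLambda})
    \left(\varvec{\tilde{P}}^*\varvec{\tilde{x}}^{(t)}-\varvec{P}^*\varvec{x}^{(t)}\right)\\
 &= (\varvec{I}-\varvec{\varLambda})\left(\varvec{\tilde{P}}^*-\varvec{P}^*\right)\varvec{x}^{(t)}
  + (\varvec{I}-\varvec{\varLambda})\varvec{\tilde{P}}^*
    \left(\varvec{\tilde{x}}^{(t)}-\varvec{x}^{(t)}\right),\\
\varvec{\tilde{P}}^*-\varvec{P}^*
 &= \varvec{D}^{-1}\left(\varvec{L}_{\varvec{\beta}}-\varvec{\tilde{L}}_{\varvec{\beta}}\right),\\
\left\Vert (\varvec{I}-\varvec{\varLambda})\varvec{\tilde{P}}^*
    \left(\varvec{\tilde{x}}^{(t)}-\varvec{x}^{(t)}\right)\right\Vert_{\infty}
 &\le (1-\alpha_{\min})
    \left\Vert \varvec{\tilde{x}}^{(t)}-\varvec{x}^{(t)}\right\Vert_{\infty}.
\end{align*}
```

The first term on the second line is the one controlled through the spectral bound \(4\epsilon\), while the last line uses \(\Vert \varvec{\tilde{ P }}^*\Vert _{\infty }=1\) together with \(1-\alpha _i\le 1-\alpha _{\min }\).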

To show the convergence of this method, it remains to prove that, after sufficiently many iterations, the error between \(\varvec{ x }^{(t)}\) and \(\varvec{ z }^*\) will be sufficiently small, as characterized by the following lemma.

Lemma 4

For every \(t\ge 0\),

Proof

Expanding with the series \(\left( \varvec{ I }-\left( \varvec{ I }-\varvec{\varLambda }\right) \varvec{ P }^*\right) ^{-1}=\sum \limits _{j=0}^{\infty } \left( \left( \varvec{ I }-\varvec{\varLambda }\right) \varvec{ P }^*\right) ^j\) leads to

Below, by induction, it will be shown that for any \(\varvec{ x }\in [0,1]^n\), the relation \(\Vert \left( \left( \varvec{ I }-\varvec{\varLambda }\right) \varvec{ P }^*\right) ^j\varvec{ x } \Vert _{\infty }\le (1-\alpha _{\min })^j\) holds for all \(j\ge 0\). Since every coordinate of \(\varvec{ x }\) lies in the interval [0, 1], the relation clearly holds for \(j=0\). Suppose that, for some \(j\ge 0\), every coordinate of \(\varvec{ y }=\left( \left( \varvec{ I }-\varvec{\varLambda }\right) \varvec{ P }^*\right) ^j\varvec{ x }\) has magnitude at most \((1-\alpha _{\min })^j\). Since \(\varvec{ P }^*\) is row-stochastic, it follows that \(\left\| \varvec{ P }^*\varvec{ y } \right\| _{\infty }\le (1-\alpha _{\min })^j\). In addition, because \(\alpha _i\ge \alpha _{\min }\) for all \(i\in \mathcal {V}\), one has \(\left\| \left( \varvec{ I }-\varvec{\varLambda }\right) \varvec{ P }^*\varvec{ y } \right\| _{\infty }\le (1-\alpha _{\min })^{j+1}\), completing the induction proof.

Finally, since both \(\sum _{j=t}^{\infty }\left( \left( \varvec{ I }-\varvec{\varLambda }\right) \varvec{ P }^*\right) ^j\varvec{\varLambda }\varvec{ s }\) and \(\left( \left( \varvec{ I }-\varvec{\varLambda }\right) \varvec{ P }^*\right) ^t\varvec{ s }\) have non-negative coordinates, one has

as claimed by the lemma. \(\square\)
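The geometric decay that the induction argument suggests can be checked numerically on a small example. The sketch below assumes that the bound takes the form \((1-\alpha _{\min })^t\) on the sup-norm distance between \(\varvec{ x }^{(t)}\) and \(\varvec{ z }^*\); this form is inferred from the proof rather than quoted from the lemma. The 4-node cycle, the parameter values, and the helper name `fj_step` are hypothetical.

```python
def fj_step(P, alpha, s, x):
    """One update x <- Lambda*s + (I - Lambda)*P*x."""
    n = len(s)
    return [alpha[i] * s[i]
            + (1.0 - alpha[i]) * sum(P[i][j] * x[j] for j in range(n))
            for i in range(n)]


# Hypothetical 4-node cycle with heterogeneous resistance values.
P = [[0.0, 0.5, 0.0, 0.5],
     [0.5, 0.0, 0.5, 0.0],
     [0.0, 0.5, 0.0, 0.5],
     [0.5, 0.0, 0.5, 0.0]]
alpha = [0.3, 0.6, 0.4, 0.9]
s = [1.0, 0.2, 0.0, 0.7]
alpha_min = min(alpha)

# Reference equilibrium: iterate until the geometric tail is negligible.
z = list(s)
for _ in range(2000):
    z = fj_step(P, alpha, s, z)

# Record the sup-norm error of x^(t) next to the assumed bound.
x = list(s)
errors, bounds = [], []
for t in range(31):
    errors.append(max(abs(a - b) for a, b in zip(x, z)))
    bounds.append((1.0 - alpha_min) ** t)
    x = fj_step(P, alpha, s, x)

# True when every recorded error stays below the assumed bound.
bound_holds = all(e <= b + 1e-12 for e, b in zip(errors, bounds))
```

A check of this kind only illustrates the behavior on one instance and does not replace the proof.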

Combining Lemmas 3 and 4, a convergent approximate iteration method can be summarized as stated in the following theorem.

Theorem 5

(Approximation Error) For every \(t\ge 0\),

In the sequel, this approximate iteration algorithm is referred to as Approx. It should be mentioned that Theorem 5 provides only a rough upper bound. The experiments in Sect. 6.3 show that Approx works well in practice, leading to very accurate results for real networks.

6 Experiments on real networks

In this section, we conduct extensive experiments on real-world social networks to evaluate the performance of the algorithm Approx.

6.1 Setup

Machine Configuration and Reproducibility. All experiments were run on a Linux machine with a 16-core 3.00GHz Intel Xeon E5-2690 CPU and 64GB of main memory. All algorithms are programmed in Julia v1.3.1. The source code is publicly available at https://github.com/HODynamic/HODynamic.

Datasets. We test the algorithm on a large set of realistic networks, all of which are collected from the Koblenz Network Collection (Kunegis 2013) and Network Repository (Rossi and Ahmed 2015). For those networks that are disconnected originally, we perform experiments on their largest connected components. The statistics of these networks are summarized in the first three columns of Table 1, where we use \(n'\) and \(m'\) to denote, respectively, the numbers of nodes and edges in their largest connected components. The smallest network consists of 4,991 nodes, while the largest network has more than one million nodes. In Table 1, the networks are listed in increasing order of the number of nodes in their largest connected components.

Input Generation. For each dataset, we use the network structure to generate the input parameters in the following way. The innate opinions are generated according to three different distributions, that is, uniform distribution, exponential distribution, and power-law distribution, where the latter two are generated by the randht.py file provided by Clauset et al. (2009). For the uniform distribution, we generate the opinion \(s_i\) of node i at random in the range [0, 1]. For the exponential distribution, we use the probability density \(\textrm{e}^{ x_{\min }}\textrm{e}^{-x}\) to generate \(n'\) positive real numbers x with minimum value \(x_{\min }>0\). Then, we normalize these \(n'\) numbers to be within the range [0, 1] by dividing each x by the maximum observed value. Similarly, for the power-law distribution, we choose the probability density \((\alpha -1)x_{\min }^{\alpha -1}x^{-\alpha }\) with \(\alpha =2.5\) to generate \(n'\) positive real numbers, and then normalize them to be within the interval [0, 1] as the innate opinions. In practice, we set \(x_{\min }=1\) for both the exponential and power-law distributions. We note that, for the latter two distributions, there is always a node with innate opinion 1 due to the normalization. We generate the resistance parameters uniformly at random within the interval (0, 1).
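The input generation described above can be sketched with inverse-transform sampling. This is a stand-in, not the randht.py script: the function name `innate_opinions`, the seed, and the sample size are assumptions, and the parameter `exponent` plays the role of \(\alpha =2.5\) in the power-law density (renamed to avoid confusion with the resistance parameters).

```python
import math
import random


def innate_opinions(n, dist, rng, x_min=1.0, exponent=2.5):
    """Draw n innate opinions in [0, 1] from one of three distributions."""
    if dist == "uniform":
        return [rng.random() for _ in range(n)]
    if dist == "exponential":
        # Density e^{x_min} e^{-x} on x >= x_min: x = x_min - ln(1 - u).
        xs = [x_min - math.log(1.0 - rng.random()) for _ in range(n)]
    elif dist == "power-law":
        # Density (a-1) x_min^{a-1} x^{-a}: x = x_min (1 - u)^{-1/(a-1)}.
        xs = [x_min * (1.0 - rng.random()) ** (-1.0 / (exponent - 1.0))
              for _ in range(n)]
    else:
        raise ValueError("unknown distribution: " + dist)
    # Normalize by the maximum observed value, so one node gets opinion 1.
    x_max = max(xs)
    return [x / x_max for x in xs]


rng = random.Random(0)  # fixed seed, chosen only for reproducibility
s_uni = innate_opinions(1000, "uniform", rng)
s_exp = innate_opinions(1000, "exponential", rng)
s_pow = innate_opinions(1000, "power-law", rng)
# Resistance parameters; random() returns values in [0, 1), so an exact 0
# is possible in principle and would need to be redrawn for the open interval.
resistance = [rng.random() for _ in range(1000)]
```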

6.2 Comparison between standard FJ model and second-order FJ model

To show the impact of higher-order interactions on the opinion dynamics, we compare the equilibrium expressed opinions between the second-order FJ model and the standard FJ model on four real networks: PagesTVshow, PagesCompany, Gplus, and GemsecRO. For both models, we generate innate opinions and resistance parameters for each node according to the uniform distribution. We set \(\beta _1=1, \beta _2=0\) for the standard FJ model, and \(\beta _1=0, \beta _2=1\) for the second-order FJ model.

Figure 2 illustrates the distribution of the difference between the final expressed opinions of each node in the classic and second-order FJ models on the four considered real networks. It can be observed that, for each of these four networks, the difference of expressed opinions between the two models is larger than 0.01 for more than half of the nodes. In particular, for over \(10\%\) of the agents, the difference of equilibrium opinions is greater than 0.1, which may place them on opposite sides under the two models. Thus, the opinion dynamics of the second-order FJ model differs considerably from that of the classic FJ model, indicating that the effects of higher-order interactions are not negligible.
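The comparison can be reproduced in miniature. The sketch below assumes that the transition matrix of the second-order model is \(\beta _1\varvec{ P }+\beta _2\varvec{ P }^2\), which matches the description of a convex combination of powers of the original transition matrix. The 4-node path graph, the parameter values, and all function names are hypothetical.

```python
def matmul(A, B):
    """Dense product of two square matrices given as lists of rows."""
    n = len(A)
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def second_order_matrix(P, beta1, beta2):
    """Convex combination beta1*P + beta2*P^2 (assumed form of P*)."""
    n = len(P)
    P2 = matmul(P, P)
    return [[beta1 * P[i][j] + beta2 * P2[i][j] for j in range(n)]
            for i in range(n)]


def equilibrium(P_star, alpha, s, num_iters=200):
    """Approximate the equilibrium by repeated FJ updates from x = s."""
    n = len(s)
    x = list(s)
    for _ in range(num_iters):
        x = [alpha[i] * s[i]
             + (1.0 - alpha[i]) * sum(P_star[i][j] * x[j] for j in range(n))
             for i in range(n)]
    return x


def fraction_above(z_a, z_b, threshold):
    """Fraction of nodes whose opinions differ by more than threshold."""
    return sum(1 for a, b in zip(z_a, z_b) if abs(a - b) > threshold) / len(z_a)


# Hypothetical path graph 0 - 1 - 2 - 3.
P = [[0.0, 1.0, 0.0, 0.0],
     [0.5, 0.0, 0.5, 0.0],
     [0.0, 0.5, 0.0, 0.5],
     [0.0, 0.0, 1.0, 0.0]]
alpha = [0.5, 0.5, 0.5, 0.5]
s = [1.0, 0.0, 0.0, 0.0]

z_standard = equilibrium(second_order_matrix(P, 1.0, 0.0), alpha, s)
z_second = equilibrium(second_order_matrix(P, 0.0, 1.0), alpha, s)
frac_small = fraction_above(z_standard, z_second, 0.01)
frac_large = fraction_above(z_standard, z_second, 0.1)
```

The two fractions correspond to the thresholds 0.01 and 0.1 discussed for Figure 2, computed here on a toy graph rather than on the real networks.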

6.3 Performance evaluation

To evaluate the performance of the new algorithm Approx, we implement it on various real networks and compare its running time and accuracy with those of the standard Exact algorithm. Exact computes the equilibrium vector by calculating the random-walk matrix polynomials via matrix multiplication and directly inverting the matrix \(\varvec{ I }-(\varvec{ I }-\varvec{\varLambda })\varvec{ P }^*\). Here, we use the second-order random-walk matrix polynomial to simulate the opinion dynamics with \(\beta _1=\beta _2=0.5\). For Approx, we set the number M of samples to \(10\times T\times m\) and approximate the equilibrium vector with 100 iterations. Using a fixed maximum number of iterations as the stopping criterion avoids the numerical errors and instability that other stopping criteria may cause, and thus makes the efficiency comparison more stable. To evaluate the running time objectively, we force both Exact and Approx to run on a single thread on all considered networks. For the last seven networks, marked with asterisks, we cannot run Exact due to its very high cost in space and time.
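The direct computation performed by Exact can be sketched as a dense linear solve of \(\left( \varvec{ I }-(\varvec{ I }-\varvec{\varLambda })\varvec{ P }^*\right) \varvec{ z }=\varvec{\varLambda }\varvec{ s }\). The sketch assumes \(\varvec{ P }^*=\beta _1\varvec{ P }+\beta _2\varvec{ P }^2\) and uses plain Gaussian elimination in place of an explicit inverse. It is not the authors' Julia implementation, and the function names and the toy graph are hypothetical.

```python
def solve_linear(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):  # back substitution
        tail = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - tail) / M[i][i]
    return x


def exact_equilibrium(P, alpha, s, beta1=0.5, beta2=0.5):
    """Dense second-order equilibrium; cubic time and quadratic space in n."""
    n = len(s)
    P2 = [[sum(P[i][k] * P[k][j] for k in range(n)) for j in range(n)]
          for i in range(n)]
    A = [[(1.0 if i == j else 0.0)
          - (1.0 - alpha[i]) * (beta1 * P[i][j] + beta2 * P2[i][j])
          for j in range(n)] for i in range(n)]
    rhs = [alpha[i] * s[i] for i in range(n)]
    return solve_linear(A, rhs)


# Hypothetical toy input: path graph 0 - 1 - 2.
P = [[0.0, 1.0, 0.0],
     [0.5, 0.0, 0.5],
     [0.0, 1.0, 0.0]]
alpha = [0.5, 0.5, 0.5]
s = [1.0, 0.0, 0.0]
z_first_order = exact_equilibrium(P, alpha, s, beta1=1.0, beta2=0.0)
z_second_order = exact_equilibrium(P, alpha, s)
```

The dense matrix product and the elimination both scale cubically with the number of nodes, which is why a computation of this kind becomes infeasible on the larger networks.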

Efficiency. We present the running time of algorithms Approx and Exact for all networks in Table 1. For the last seven networks, we only run Approx, since Exact would take an extremely long time. For each of the three innate opinion distributions in different networks, we record the running time of Approx and Exact. From Table 1, we observe that for small networks with fewer than 10,000 nodes, the running time of Approx is slightly longer than that of Exact. Thus, Approx shows no advantage for small networks. However, for networks with more than twenty thousand nodes, Approx significantly improves the computational efficiency compared with Exact. For example, for the moderately large network GemsecRO with 41,773 nodes, Approx is \(60\times\) faster than Exact. Finally, for large graphs, Approx shows a clear efficiency advantage. Table 1 indicates that for networks with over 100 thousand nodes, Approx finishes within 12 minutes, whereas Exact fails to run. We note that for large networks, the running time of Approx grows nearly linearly with respect to \(m'\), consistent with the above complexity analysis, while the running time of Exact grows cubically in \(n'\).

Accuracy. In addition to its high efficiency, the new algorithm Approx provides a good approximation of the equilibrium opinion \(\varvec{ z }^* =( \varvec{ z }_1^*,\varvec{ z }_2^*,\ldots , \varvec{ z }_{n'}^*)^\top\) in practice. To show this, we compare the approximate results of Approx for the second-order FJ model with the exact results obtained by Exact, for all the examined networks shown in Table 1, except the last seven, which are too big for Exact to handle. For each of the three distributions of the innate opinions, Table 2 reports the mean absolute error \(\sigma = \sum \nolimits _{i=1}^{n'} |\varvec{ z }^*_i - \varvec{\tilde{ z }}^*_i|/n'\), where \(\varvec{\tilde{ z }}^*=( \varvec{\tilde{ z }}_1^*,\varvec{\tilde{ z }}_2^*,\ldots , \varvec{\tilde{ z }}_{n'}^*)^\top\) is the estimated vector obtained by Approx. From Table 2, we observe that the actual mean absolute errors \(\sigma\) are all less than 0.008, and thus negligible. Furthermore, for all networks we tested, the mean absolute errors \(\sigma\) are smaller than the theoretical ones provided by Theorem 5. Therefore, the new algorithm Approx provides a very desirable approximation of the equilibrium opinion vector in applications.
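The error measure \(\sigma\) defined above is straightforward to compute. The sketch below uses a hypothetical function name and made-up opinion vectors purely to illustrate the formula.

```python
def mean_absolute_error(z_exact, z_approx):
    """Mean absolute error: sum_i |z_i - z~_i| / n'."""
    if len(z_exact) != len(z_approx):
        raise ValueError("vectors must have the same length")
    return sum(abs(a - b) for a, b in zip(z_exact, z_approx)) / len(z_exact)


# Made-up vectors for illustration only (not results from Table 2).
z_exact = [0.20, 0.50, 0.90]
z_approx = [0.21, 0.49, 0.90]
sigma = mean_absolute_error(z_exact, z_approx)
```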

6.4 Parameter analysis

We finally discuss how the parameters affect the performance and efficiency of Approx. We report all the parameter analyses on four networks, namely HamstersterFriends, HamstersterFull, PagesTVshow, and Facebook (NIPS). All experiments here are performed for the second-order FJ opinion dynamics model with \(\beta _1=\beta _2=0.5\).

The number of non-zeros M. As shown in Sect. 5.1, \(M = O(Tm\epsilon ^{-2}\log n)\) is required to guarantee the approximation error in theory. We first explore how the number of non-zeros influences the performance of the algorithm Approx in implementation. Without loss of generality, we empirically set M to be \(k\times T\times m\), with k being 1, 10, 100, 200, 500, 1000 and 2000, respectively. In Fig. 3, we report the mean absolute error of Approx, which drops as we increase the number of samples M. This is because matrix \(\varvec{ P }^*\) is approximated more accurately for larger M. On the other hand, although increasing M may have a positive influence on the accuracy of Approx, this marginal benefit diminishes gradually. Figure 3 shows that \(M = 10\times T\times m\) is in fact a desirable choice, which balances the trade-off between the effectiveness and efficiency of Approx.

The number of iterations on small networks. In Sect. 5.2, we present a convergence guarantee for the iteration method Approx, with the accuracy of Approx depending on the number of iterations. In Fig. 4, we plot the mean absolute error of Approx as a function of the number of iterations. In all experiments, M is set to be \(10\times T\times m\). As demonstrated in Fig. 4, the second-order FJ model converges within several iterations for all four networks tested.

The number of iterations on large networks. We also analyze the influence of the number of iterations on large networks. Since we cannot run Exact for these four large networks, we instead use the mean absolute error between two consecutive iterations of Approx as an indicator of convergence in Fig. 5. In all experiments, M is set to be \(10\times T\times m\). As demonstrated in Fig. 5, the second-order FJ model converges within dozens of iterations for all four networks tested. Therefore, one hundred iterations are enough to obtain desirable approximation results for networks with millions of nodes. For all the experiments shown in Table 1, the difference between the opinion vectors of two consecutive iterations for the second-order FJ model is insignificant after dozens of iterations.
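The convergence indicator used when no exact solution is available can be sketched as follows. The function name `consecutive_changes` and the toy graph are assumptions. The sketch records the mean absolute difference between consecutive iterates and makes no attempt to reproduce the curves in Fig. 5.

```python
def consecutive_changes(P, alpha, s, num_iters):
    """Return the mean absolute change between successive iterates."""
    n = len(s)
    x = list(s)
    changes = []
    for _ in range(num_iters):
        x_next = [alpha[i] * s[i]
                  + (1.0 - alpha[i]) * sum(P[i][j] * x[j] for j in range(n))
                  for i in range(n)]
        changes.append(sum(abs(a - b) for a, b in zip(x_next, x)) / n)
        x = x_next
    return changes


# Hypothetical toy input: path graph 0 - 1 - 2.
P = [[0.0, 1.0, 0.0],
     [0.5, 0.0, 0.5],
     [0.0, 1.0, 0.0]]
alpha = [0.5, 0.5, 0.5]
s = [1.0, 0.0, 0.0]
changes = consecutive_changes(P, alpha, s, 100)
```

A small change between iterates indicates that the iteration has stabilized. It is a proxy for the error against the true equilibrium, not a measurement of it.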

7 Conclusion

In this paper, we presented a significant extension of the classic Friedkin-Johnsen (FJ) model by considering not only nearest-neighbor interactions, but also long-range interactions modeled by higher-order random walks. We showed that the proposed model has a unique equilibrium expressed opinion vector, provided that each individual holds an innate opinion. We also demonstrated that the resultant expressed opinion vector of the new model may be significantly different from that of the FJ model, indicating the important impact of higher-order interactions on opinion dynamics.

The expressed opinion vector of the new model can be considered as an expressed opinion vector of the FJ model in a dense graph with a loop at every node, whose transition matrix is a convex combination of powers of the transition matrix for the original graph. However, direct computation of the transition matrix for the dense graph is computationally expensive, since it involves multiple matrix multiplication and inversion operations. As a remedy, we leveraged the state-of-the-art Laplacian sparsification technique and the nearly linear-time algorithm in Cheng et al. (2015) to obtain a sparse matrix that is spectrally similar to the original dense matrix and thus preserves its essential information. Based on the obtained sparse matrix, we further proposed a convergent iteration algorithm, which approximates the equilibrium opinion vector in nearly linear space and time. We finally conducted extensive experiments on diverse social networks, which demonstrate that the new algorithm achieves both good efficiency and effectiveness.

It should be mentioned that in this paper, we focus only on the impact of higher-order interactions on the sum of expressed opinions. In addition to the opinion sum, there are many other related quantities for opinion dynamics, including convergence rate, polarization (Dandekar et al. 2013; Matakos et al. 2017; Musco et al. 2018), disagreement (Musco et al. 2018; Zhu et al. 2021), and so on. Higher-order interactions are expected to have an important influence on these quantities as well. Although these subjects are beyond the scope of this paper, we provide below a heuristic explanation of why higher-order interactions affect polarization. As shown in Theorem 3, the vector of expressed opinions is determined simultaneously by three factors: the innate opinion \(s_i\) and resistance parameter \(\alpha _i\) of every agent i, as well as the higher-order interactions encoded in the matrix \(\varvec{ P }^*\). Thus, higher-order interactions play a significant role in opinion polarization, since this quantity is also simultaneously affected by these three factors (Musco et al. 2018). Finally, it is worth emphasizing that although our model incorporates higher-order interactions and thus generates an opinion vector different from that of the classic FJ model, it is difficult to judge which opinion vector is superior or more compelling. In fact, these two models are not mutually exclusive. The choice of model depends on the specific aim of the application, such as minimizing polarization (Musco et al. 2018), disagreement (Zhu et al. 2021), or conflict (Zhu and Zhang 2022; Wang and Kleinberg 2023).

References

Abebe R, Kleinberg J, Parkes D, Tsourakakis CE (2018) Opinion dynamics with varying susceptibility to persuasion. In: Proceedings of the 24th ACM SIGKDD international conference on knowledge discovery & data mining, ACM, pp 1089–1098

Anderson BD, Ye M (2019) Recent advances in the modelling and analysis of opinion dynamics on influence networks. Int J Autom Comput 16(2):129–149

Auletta V, Ferraioli D, Greco G (2018) Reasoning about consensus when opinions diffuse through majority dynamics. In: Twenty-seventh international joint conference on artificial intelligence, pp 49–55

Bell HE (1965) Gershgorin’s theorem and the zeros of polynomials. Am Math Mon 72(3):292–295

Bindel D, Kleinberg J, Oren S (2015) How bad is forming your own opinion? Games Econ Behav 92:248–265

Chan T, Liang Z, Sozio M (2019) Revisiting opinion dynamics with varying susceptibility to persuasion via non-convex local search. In: Proceedings of the 2019 world wide web conference, ACM, pp 173–183

Chen X, Lijffijt J, De Bie T (2018) Quantifying and minimizing risk of conflict in social networks. In: Proceedings of the 24th ACM SIGKDD international conference on knowledge discovery and data mining, ACM, pp 1197–1205

Cheng D, Cheng Y, Liu Y, Peng R, Teng SH (2015) Efficient sampling for Gaussian graphical models via spectral sparsification. In: Proceedings of the 28th conference on learning theory, pp 364–390

Chitra U, Musco C (2020) Analyzing the impact of filter bubbles on social network polarization. In: Proceedings of the thirteenth ACM international conference on web search and data mining, ACM, pp 115–123

Clauset A, Shalizi CR, Newman ME (2009) Power-law distributions in empirical data. SIAM Rev 51(4):661–703

Dandekar P, Goel A, Lee DT (2013) Biased assimilation, homophily, and the dynamics of polarization. Proc National Acad Sci 110(15):5791–5796

Das A, Gollapudi S, Panigrahy R, Salek M (2013) Debiasing social wisdom. In: Proceedings of the 19th ACM SIGKDD international conference on Knowledge discovery and data mining, ACM, pp 500–508

Das A, Gollapudi S, Munagala K (2014) Modeling opinion dynamics in social networks. In: Proceedings of the 7th ACM international conference on web search and data mining, ACM, pp 403–412

Deffuant G, Neau D, Amblard F, Weisbuch G (2000) Mixing beliefs among interacting agents. Adv Complex Syst 3(01n04):87–98

Dong Y, Zhan M, Kou G, Ding Z, Liang H (2018) A survey on the fusion process in opinion dynamics. Inf Fusion 43:57–65

Fotakis D, Palyvos-Giannas D, Skoulakis S (2016) Opinion dynamics with local interactions. In: Twenty-fifth international joint conference on artificial intelligence, pp 279–285

French JR Jr (1956) A formal theory of social power. Psychol Rev 63(3):181–194

Friedkin NE (2015) The problem of social control and coordination of complex systems in sociology: a look at the community cleavage problem. IEEE Control Syst Mag 35(3):40–51

Friedkin NE, Johnsen EC (1990) Social influence and opinions. J Math Sociol 15(3–4):193–206

Friedkin NE, Proskurnikov AV, Tempo R, Parsegov SE (2016) Network science on belief system dynamics under logic constraints. Science 354(6310):321–326

Ghaderi J, Srikant R (2014) Opinion dynamics in social networks with stubborn agents: equilibrium and convergence rate. Automatica 50(12):3209–3215

Ghasemiesfeh G, Ebrahimi R, Gao J (2013) Complex contagion and the weakness of long ties in social networks: revisited. In: Proceedings of the fourteenth ACM conference on electronic commerce, pp 507–524

Gionis A, Terzi E, Tsaparas P (2013) Opinion maximization in social networks. In: Proceedings of the 2013 SIAM international conference on data mining, SIAM, pp 387–395

Golub GH, Van Loan CF (2012) Matrix computations. JHU Press

Jia P, MirTabatabaei A, Friedkin NE, Bullo F (2015) Opinion dynamics and the evolution of social power in influence networks. SIAM Rev 57(3):367–397

Kim Y (2011) The contribution of social network sites to exposure to political difference: the relationships among SNSs, online political messaging, and exposure to cross-cutting perspectives. Comput Human Behav 27(2):971–977

Kunegis J (2013) KONECT: the Koblenz network collection. In: Proceedings of the 22nd international conference on world wide web, ACM, New York, USA, pp 1343–1350

Lee JK, Choi J, Kim C, Kim Y (2014) Social media, network heterogeneity, and opinion polarization. J Commun 64(4):702–722

Lyu D, Yuan Y, Wang L, Wang X, Pentland A (2022) Investigating and modeling the dynamics of long ties. Commun Phys 5(1):1–9

Matakos A, Terzi E, Tsaparas P (2017) Measuring and moderating opinion polarization in social networks. Data Mining Knowl Discov 31(5):1480–1505

Musco C, Musco C, Tsourakakis CE (2018) Minimizing polarization and disagreement in social networks. In: Proceedings of the 2018 world wide web conference, ACM, pp 369–378

Parsegov SE, Proskurnikov AV, Tempo R, Friedkin NE (2015) A new model of opinion dynamics for social actors with multiple interdependent attitudes and prejudices. In: Proceedings of the IEEE conference on decision and control, IEEE, pp 3475–3480

Parsegov SE, Proskurnikov AV, Tempo R, Friedkin NE (2017) Novel multidimensional models of opinion dynamics in social networks. IEEE Trans Autom Control 62(5):2270–2285

Qiu J, Dong Y, Ma H, Li J, Wang C, Wang K, Tang J (2019) NetSMF: Large-scale network embedding as sparse matrix factorization. In: Proceedings of the world wide web conference, ACM, pp 1509–1520

Ravazzi C, Frasca P, Tempo R, Ishii H (2015) Ergodic randomized algorithms and dynamics over networks. IEEE Trans Control Netw Syst 1(2):78–87

Rossi R, Ahmed N (2015) The network data repository with interactive graph analytics and visualization. In: Proceedings of the twenty-ninth AAAI conference on artificial intelligence, AAAI, pp 4292–4293

Schawe H, Hernández L (2022) Higher order interactions destroy phase transitions in Deffuant opinion dynamics model. Commun Phys 5(1):1–9

Schunack M, Linderoth TR, Rosei F, Lagsgaard E, Stensgaard I, Besenbacher F (2002) Long jumps in the surface diffusion of large molecules. Phys Rev Lett 88(15):156102

Semonsen J, Griffin C, Squicciarini A, Rajtmajer S (2019) Opinion dynamics in the presence of increasing agreement pressure. IEEE Trans Cybern 49(4):1270–1278

Smith KP, Christakis NA (2008) Social networks and health. Annual Rev Sociol 34(1):405–429

Spielman DA, Srivastava N (2011) Graph sparsification by effective resistances. SIAM J Comput 40(6):1913–1926

Wang Y, Kleinberg J (2023) On the relationship between relevance and conflict in online social link recommendations. In: Oh A, Neumann T, Globerson A, Saenko K, Hardt M, Levine S (eds) Advances in neural information processing systems, Curran Associates, Inc., vol 36, pp 36708–36725

Xu W, Bao Q, Zhang Z (2021) Fast evaluation for relevant quantities of opinion dynamics. In: Proceedings of the web conference, ACM, pp 2037–2045

Zhang Z, Xu W, Zhang Z, Chen G (2020) Opinion dynamics incorporating higher-order interactions. In: Proceedings of the IEEE international conference on data mining, IEEE, pp 1430–1435

Zhu L, Zhang Z (2022) A nearly-linear time algorithm for minimizing risk of conflict in social networks. In: Proceedings of the 28th ACM SIGKDD conference on knowledge discovery and data mining, pp 2648–2656

Zhu L, Bao Q, Zhang Z (2021) Minimizing polarization and disagreement in social networks via link recommendation. Proc Adv Neural Inf Process Syst 34:2072–2084

Acknowledgements

This work was supported by the National Natural Science Foundation of China (Nos. 62372112, U20B2051, and 61872093).

Additional information

Responsible editor: Johannes Fürnkranz.


Zhang, Z., Xu, W., Zhang, Z. et al. Opinion dynamics in social networks incorporating higher-order interactions. Data Min Knowl Disc (2024). https://doi.org/10.1007/s10618-024-01064-5

DOI: https://doi.org/10.1007/s10618-024-01064-5