Abstract

In this paper, we reconsider the model in Bischi and Lamantia (J Econ Interact Coord 17:3–27, 2022) and reformulate it in a two-population context. There, the Cournot duopoly market examined is in equilibrium (Cournot-Nash-equilibrium quantities are produced) conditional on the players’ (heterogeneous) attitudes toward cooperation. To accommodate these attitudes, each player’s objective function partly includes the opponent’s profit. This results in greater (partial) cooperation with, or hostility toward, the opponent than in the standard duopoly setting. An evolutionary selection mechanism determines the survival of cooperative or competitive strategies in the duopoly. The game is symmetric, and Bischi and Lamantia (J Econ Interact Coord 17:3–27, 2022) assume that the two players start the game by choosing the same strategic profile. In this way, the full-fledged two-population game reduces to a one-dimensional map. In this paper, we relax this assumption. On the one hand, this approach allows us to fully investigate the dynamics of the model and the evolutionary stability of the Nash equilibria of the static game implicit in the evolutionary setup. In fact, the one-population model represents only the dynamics occurring in an invariant subset of the phase space. As a remarkable result, this extension shows that the steady state of the evolutionary model in which all players are cooperative can be an attractor, although only in the weak sense, even when it is not a Nash equilibrium. This occurs when firms have a very high propensity to switch to the better-performing strategy. On the other hand, this approach allows us to accommodate players’ heterogeneity (non-symmetric version of the game), whose analysis confirms the main insights obtained in the homogeneous setting.

1 Introduction

An intriguing research question in game theory concerns the evolution of cooperation in prisoner’s dilemmas. In this regard, one of the most widely studied topics is the theory of oligopolies. Indeed, it is well known that in these contexts agents (firms) are trapped in sub-optimal outcomes relative to what they could potentially achieve with a higher level of cooperation. Cyert and DeGroot (1973) provide one of the earliest contributions analyzing oligopoly under partial cooperation. They assume that each firm’s objective function is augmented to partially take into account the opponent’s objective. Partial cooperation can be justified in real cases by cross holdings between firms, as illustrated in Clayton and Jørgensen (2005). Chapter 4 in Bischi et al. (2010) provides a thorough dynamic analysis of oligopoly models with partial cooperation. Alongside more cooperative behavior, firms may also adopt more aggressive behavior. For example, the managers’ incentive scheme may weigh the firm’s market share against that of the rival, as suggested in Fershtman and Judd (1987), a seminal contribution to the literature on managers’ incentives.

In the context of oligopolies, many works have employed the evolutionary approach (Weibull 1997; Friedman 1991) by coupling population dynamics with market dynamics. This makes it possible to classify Nash equilibria into evolutionarily stable and evolutionarily unstable ones. It also allows one to study the (non-)sustainability of cooperation from a Darwinian point of view. An interesting result in this direction shows that the Walrasian equilibrium (where firms are price-takers) is evolutionarily stable, while the more profitable Cournot-Nash equilibrium is not; see Vega-Redondo (1997), Radi (2017) and Anufriev and Kopányi (2018).

By studying the evolutionary stability of Nash equilibria, it is also possible to investigate forms of (non-)cooperation in emerging scenarios. Examples include the rise of heterogeneous strategies or the adoption of different informational or behavioral rules among players. Among many possible applications, we can mention those with firms endowed with different information sets, as in Droste et al. (2002), Bischi et al. (2015), Hommes et al. (2018), Cerboni Baiardi et al. (2015), Baiardi and Naimzada (2019) and Mignot et al. (2023). Others consider firms choosing different market competition strategies, as in Kopel et al. (2014) and Kopel and Lamantia (2018), different incentive schemes for managers, as in De Giovanni and Lamantia (2016), or different available technologies, as in Hommes and Zeppini (2014) and Lamantia and Radi (2018). For an overview of evolutionary modeling in oligopoly games, we refer the reader to Bischi et al. (2018).¹

More recently, Bischi and Lamantia (2022) consider a model of oligopoly with partial cooperation or partial hostility in an evolutionary setting. The evolutionary mechanism is described by a modification of the discrete-time replicator dynamics suggested in Cabrales and Sobel (1992). Specifically, Bischi and Lamantia (2022) ask under what conditions a certain behavior can prevail. They assume that firms can choose between aggressive and cooperative strategies and can, over time, change their attitudes through a process of evolutionary adaptation. Firms thus choose their actions by maximizing an extended objective function, which adds a (positive or negative) share of the competitor’s profit. Evolutionary pressure, instead, follows average profits, in line with the so-called indirect evolutionary approach described in Königstein and Müller (2001). To complete the investigation, Bischi and Lamantia (2022) introduce a memory process to study whether it can sustain more cooperation in the long run.

The evolutionary model in Bischi and Lamantia (2022) is based on the implicit assumption that the two players involved in the game choose to be cooperative or hostile with the same probability. This assumption reduces the full-fledged two-population model to a one-population model. It is a common assumption when studying the evolutionary stability of Nash equilibria in symmetric games such as prisoner’s dilemma, prisoner’s delight, or coordination games. The model proposed in Bischi and Lamantia (2022) is indeed a symmetric game. It can be either a prisoner’s dilemma (both players are hostile at the Nash equilibrium) or a prisoner’s delight (both players are cooperative at the Nash equilibrium). However, considering the two-population version of the game, we point out in this paper that the game can also be an anti-coordination game. Its Nash equilibria then have one player cooperative and the other one hostile. The two-population setup proposed here is therefore required to investigate the evolutionary stability of these Nash equilibria, which are overlooked in Bischi and Lamantia (2022). In the anti-coordination setup, the steady state of higher cooperation between firms is not a Nash equilibrium and is therefore always unstable in the sense of Lyapunov.² Turning, however, to a global analysis of the two-population model, we find, surprisingly, that the same steady state attracts a set of initial conditions of positive measure. This occurs when the players’ propensity to switch to the most profitable strategy is sufficiently high (large intensity of choice). In more technical terms, the equilibrium of higher cooperation can be an attractor in the weak (or Milnor) sense for the two-population evolutionary system (see Ashwin et al. 1996). This requires the agents’ choices to be sufficiently impulsive. These results can be compared with what occurs in finite populations, namely a greater propensity toward cooperative outcomes; see, e.g., Nowak and Sigmund (2005).
The advantage of our approach is that the selection of cooperation is obtained within an easily tractable deterministic dynamic model. Remarkably, the steady state that is a Milnor attractor but not a Nash equilibrium is also optimal. The players earn a higher payoff when both are cooperative than when both are hostile.³

To verify the robustness of our results, the two-population setup is further extended by considering heterogeneous populations. Specifically, we consider populations of firms with different degrees of impulsiveness in revising their choices (intensities of choice), different production costs and different propensities to cooperate or not to cooperate. The robustness check confirms the main insights. In particular, the Milnor attractor persists. It is an equilibrium of the replicator dynamics that is not a Nash equilibrium but is evolutionarily stable, although only in the Milnor sense. We say that such an equilibrium is evolutionarily Milnor stable. It is known that any evolutionarily stable equilibrium of a system of exponential replicator dynamics is a Nash equilibrium; see, e.g., Hofbauer and Weibull (1996), Hofbauer and Sigmund (1998), Hofbauer and Sigmund (2003). We show here, in contrast, that a non-Nash equilibrium can be evolutionarily Milnor stable.

The paper is organized as follows. Section 2 describes the setup of the full-fledged evolutionary game and provides some properties of the static bimatrix game that is implicit in it. Section 3 investigates the competition among firms in the one-population version of the game and reconsiders some results from Bischi and Lamantia (2022). The full-fledged evolutionary game, that is, the two-population model, is then investigated in Sect. 4, which collects the main results of this work. The asymmetric setting of the full-fledged evolutionary model is investigated in Sect. 5 and confirms the results obtained with the symmetric setup. Section 6 concludes.

2 Model

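As in Bischi and Lamantia (2022), we assume a Cournot duopoly market. Each player \(i\in \left\{ 1,2\right\}\) produces the quantity \(q_{i}\) of a commodity, while its competitor \(-i\) produces the quantity \(q_{-i}\) of the same commodity. The total production is sold at a price determined according to the following linear inverse demand⁴:

$$\begin{aligned} p\left( q_{i}+q_{-i}\right) =a-b\left( q_{i}+q_{-i}\right) ,\quad a,b>0 \end{aligned}$$(1)

while the linear function \(C\left( q\right) =c q\), where \(c\in \left( 0,a\right)\), is the cost of producing the quantity q of the commodity. Then, player i’s profit reads

$$\begin{aligned} \pi _{i}\left( q_{i},q_{-i}\right) =\left[ a-b\left( q_{i}+q_{-i}\right) \right] q_{i}-c q_{i} \end{aligned}$$(2)

and, by symmetry, the payoff of its competitor is

$$\begin{aligned} \pi _{-i}\left( q_{-i},q_{i}\right) =\left[ a-b\left( q_{i}+q_{-i}\right) \right] q_{-i}-c q_{-i} \end{aligned}$$(3)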
Therefore, when there is no risk of confusion we can drop the subscripts i and \(-i\) from \(\pi\) and denote by \(\pi \left( q_{i},q_{-i}\right)\) the payoff of player i and by \(\pi \left( q_{-i},q_{i}\right)\) the payoff of player \(-i\).

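Unlike in the classical Cournot duopoly game, where each player i produces the quantity that maximizes (2), here each player i produces the output that maximizes the following function

$$\begin{aligned} \pi \left( q_{i},q_{-i}\right) +\theta \, \pi \left( q_{-i},q_{i}\right) \end{aligned}$$(4)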
where \(\theta \in \left[ -1,1\right]\). Clearly, a positive (negative) value of \(\theta\) corresponds to a cooperative (aggressive) attitude toward the opponent.

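Heterogeneity among agents is then introduced through different possible values of \(\theta\). Let \(\theta _{i}\), resp. \(\theta _{-i}\), denote the attitude of player i, resp. of its opponent (player \(-i\)). Standard calculations show that at the Cournot-Nash equilibrium the quantity produced by player i and its profit are:

$$\begin{aligned} q^{*}\left( \theta _{i},\theta _{-i}\right) =\frac{\left( a-c\right) \left( 1-\theta _{i}\right) }{b\left( 3-\theta _{i}-\theta _{-i}-\theta _{i}\theta _{-i}\right) }\quad \text {and}\quad \pi \left( q^{*}\left( \theta _{i},\theta _{-i}\right) ,q^{*}\left( \theta _{-i},\theta _{i}\right) \right) =\frac{\left( a-c\right) ^{2}\left( 1-\theta _{i}\right) \left( 1-\theta _{i}\theta _{-i}\right) }{b\left( 3-\theta _{i}-\theta _{-i}-\theta _{i}\theta _{-i}\right) ^{2}} \end{aligned}$$(5)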
respectively, where by symmetric arguments \(q^{*}\left( \theta _{-i},\theta _{i}\right)\) is the level of production of the competitor, player \(-i\), and \(\pi \left( q^{*}\left( \theta _{-i},\theta _{i}\right) ,q^{*} \left( \theta _{i},\theta _{-i}\right) \right)\) is its payoff.
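As a sanity check on the closed form (5), the following Python sketch solves the two first-order conditions of the extended objectives (4) directly and compares the result with the formulas. The parameter values a = 10, b = 1, c = 2 and all function names are illustrative assumptions, not taken from the paper.

```python
# Illustrative parameters (assumptions): a = 10, b = 1, c = 2, so (a - c)^2 / b = 64.
A, B, C = 10.0, 1.0, 2.0


def cournot_nash(theta_i, theta_j, a=A, b=B, c=C):
    """Solve the first-order conditions of the extended objectives (4).

    d/dq_i [pi(q_i, q_j) + theta_i * pi(q_j, q_i)] = 0 gives
        2 b q_i + b (1 + theta_i) q_j = a - c,
    and symmetrically for player j; the 2x2 linear system is solved by Cramer's rule.
    """
    a11, a12 = 2 * b, b * (1 + theta_i)
    a21, a22 = b * (1 + theta_j), 2 * b
    rhs = a - c
    det = a11 * a22 - a12 * a21  # zero only if theta_i = theta_j = 1
    q_i = (rhs * a22 - a12 * rhs) / det
    q_j = (a11 * rhs - a21 * rhs) / det
    return q_i, q_j


def q_star(theta_i, theta_j, a=A, b=B, c=C):
    """Closed-form equilibrium quantity of player i (first part of (5))."""
    return (a - c) * (1 - theta_i) / (b * (3 - theta_i - theta_j - theta_i * theta_j))


def pi_star(theta_i, theta_j, a=A, b=B, c=C):
    """Closed-form equilibrium profit of player i (second part of (5))."""
    d = 3 - theta_i - theta_j - theta_i * theta_j
    return (a - c) ** 2 * (1 - theta_i) * (1 - theta_i * theta_j) / (b * d ** 2)


def profit(q_i, q_j, a=A, b=B, c=C):
    """Actual profit (2) of the firm producing q_i."""
    return (a - b * (q_i + q_j)) * q_i - c * q_i


for ti, tj in [(0.0, 0.0), (-0.5, 0.0), (0.3, -0.2)]:
    qi, qj = cournot_nash(ti, tj)
    print(ti, tj, qi, q_star(ti, tj), profit(qi, qj), pi_star(ti, tj))
```

The second-order condition holds because the extended objective of player i is strictly concave in its own quantity (second derivative equal to -2b). The stationary point found above is therefore each player's best response.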

Remark 1

Note that \(q^{*}\left( \theta _{i},\theta _{-i}\right) \gtreqless q^{*}\left( \theta _{-i},\theta _{i}\right)\) and \(\pi \left( q^{*}\left( \theta _{i},\theta _{-i}\right) ,q^{*}\left( \theta _{-i},\theta _{i}\right) \right) \gtreqless \pi \left( q^{*}\left( \theta _{-i},\theta _{i}\right) ,q^{*}\left( \theta _{i},\theta _{-i}\right) \right)\) when \(\theta _{i}\lesseqgtr \theta _{-i}\). Moreover, note that \(\pi \left( q^{*}\left( \theta ,\theta \right) ,q^{*}\left( \theta ,\theta \right) \right)\) is increasing in \(\theta\).
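The monotonicity claim in Remark 1 can be made explicit. Set \(\theta _{i}=\theta _{-i}=\theta\) in (5) and use \(3-2\theta -\theta ^{2}=\left( 1-\theta \right) \left( 3+\theta \right)\). A short calculation, added here as an illustrative check valid for \(\theta <1\), then gives

$$\begin{aligned} \pi \left( q^{*}\left( \theta ,\theta \right) ,q^{*}\left( \theta ,\theta \right) \right) &=\frac{\left( a-c\right) ^{2}\left( 1-\theta \right) \left( 1-\theta ^{2}\right) }{b\left( 1-\theta \right) ^{2}\left( 3+\theta \right) ^{2}}=\frac{\left( a-c\right) ^{2}\left( 1+\theta \right) }{b\left( 3+\theta \right) ^{2}},\\ \frac{d}{d\theta }\,\frac{\left( a-c\right) ^{2}\left( 1+\theta \right) }{b\left( 3+\theta \right) ^{2}}&=\frac{\left( a-c\right) ^{2}\left( 1-\theta \right) }{b\left( 3+\theta \right) ^{3}}>0\quad \text {for } \theta <1 \end{aligned}$$

For example, \(\theta =0\) yields the standard Cournot profit \(\left( a-c\right) ^{2}/\left( 9b\right)\) and \(\theta =-1\) yields zero profit. The limit \(\theta \rightarrow 1\) yields \(\left( a-c\right) ^{2}/\left( 8b\right)\), half of the monopoly profit.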

Regarding the value of \(\theta\), in the following we assume that a player can choose between two values, say \(\theta \in \left\{ \underline{\theta },\overline{\theta }\right\}\), where \(\underline{\theta }<\overline{\theta }\). In this way, both agents can be of the same type, i.e., both include the share \(\underline{\theta }\) or the share \(\overline{\theta }\) of the opponent’s profit, or they can have different levels of \(\theta\). Note that in the latter case, duopolists can be both cooperators (\(0<\underline{\theta }<\overline{\theta } \le 1\)), both aggressive (\(-1 \le \underline{\theta }<\overline{\theta } < 0\)), or one cooperator against one aggressive player (\(-1 \le \underline{\theta }<0 \le \overline{\theta } \le 1\)).

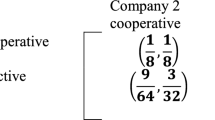

We assume that the choice of the value of \(\theta\) is a strategic action by a player. Specifically, in a pre-commitment phase, each agent chooses ex-ante a certain attitude (cooperative or hostile) toward the opponent. Then, the type of the competitor is observed, and the quantities supplied to the market correspond to the Cournot-Nash equilibrium of the underlying duopoly game. Therefore, the choice of \(\theta\) can be represented by a game with the following payoff bimatrix:

| Row player / Column player | \(\overline{\theta }\) | \(\underline{\theta }\) |
|---|---|---|
| \(\overline{\theta }\) | \(\left( \pi ^{*}\left( \overline{\theta },\overline{\theta }\right) ,\pi ^{*}\left( \overline{\theta },\overline{\theta }\right) \right)\) | \(\left( \pi ^{*}\left( \overline{\theta },\underline{\theta }\right) ,\pi ^{*}\left( \underline{\theta },\overline{\theta }\right) \right)\) |
| \(\underline{\theta }\) | \(\left( \pi ^{*}\left( \underline{\theta },\overline{\theta }\right) ,\pi ^{*}\left( \overline{\theta },\underline{\theta }\right) \right)\) | \(\left( \pi ^{*}\left( \underline{\theta },\underline{\theta }\right) ,\pi ^{*}\left( \underline{\theta },\underline{\theta }\right) \right)\) |

where, for notational simplicity, we have set \(\pi \left( q^{*}\left( \theta _{i},\theta _{-i}\right) ,q^{*}\left( \theta _{-i},\theta _{i}\right) \right) =\pi ^{*}\left( \theta _{i},\theta _{-i}\right)\) for \(\theta _{i},\theta _{-i}\in \left\{ \underline{\theta },\overline{\theta }\right\}.\)

Note that this is a classical symmetric bimatrix game where the payoff of one strategy does not depend on the player playing it but only on the strategy played by the opponent. In these types of games, the payoff of the opponent is obtained by switching the order of the vector of strategies played. Moreover, the payoffs satisfy the following properties.

Property 1

In the game under consideration, the following relations hold:

- (i) \(\pi ^{*}\left( \underline{\theta },\overline{\theta }\right) >\pi ^{*}\left( \overline{\theta },\underline{\theta }\right)\);

- (ii) \(\pi ^{*}\left( \overline{\theta },\overline{\theta }\right) >\pi ^{*}\left( \underline{\theta },\underline{\theta }\right)\);

- (iii) \(\Gamma = \pi ^{*}\left( \overline{\theta },\overline{\theta }\right) +\pi ^{*}\left( \underline{\theta },\underline{\theta }\right) - \pi ^{*}\left( \overline{\theta },\underline{\theta }\right) -\pi ^{*}\left( \underline{\theta },\overline{\theta }\right) <0\);

- (iv) \(\pi ^{*}\left( \underline{\theta },\underline{\theta }\right) >\pi ^{*}\left( \overline{\theta },\underline{\theta }\right) \Rightarrow \pi ^{*}\left( \overline{\theta },\overline{\theta }\right) <\pi ^{*}\left( \underline{\theta },\overline{\theta }\right)\);

- (v) \(\pi ^{*}\left( \overline{\theta },\overline{\theta }\right) >\pi ^{*}\left( \underline{\theta },\overline{\theta }\right) \Rightarrow \pi ^{*}\left( \underline{\theta },\underline{\theta }\right) <\pi ^{*}\left( \overline{\theta },\underline{\theta }\right)\).

Proof of Property 1

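Properties (i) and (ii) follow from Remark 1. Property (iii) can be shown with the same arguments considered in Bischi and Lamantia (2022), where, however, \(\overline{\theta }\) and \(\underline{\theta }\) had different signs. Properties (iv) and (v) follow from Property (iii). Rewrite (iii) as \(\pi ^{*}\left( \overline{\theta },\overline{\theta }\right) -\pi ^{*}\left( \underline{\theta },\overline{\theta }\right) <\pi ^{*}\left( \overline{\theta },\underline{\theta }\right) -\pi ^{*}\left( \underline{\theta },\underline{\theta }\right)\). A negative right-hand side then forces a negative left-hand side, which is (iv). A positive left-hand side forces a positive right-hand side, which is (v). \(\square\)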
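Items (i)–(iii) of Property 1 can also be spot-checked numerically. The Python sketch below does this on a grid of pairs \(\underline{\theta }<\overline{\theta }\); it is a sanity check, not a proof. It assumes the closed-form profit (5) normalized by \(\left( a-c\right) ^{2}/b=1\), which does not affect any of the comparisons. The grid and the function names are illustrative choices.

```python
from itertools import combinations


def pi_star(ti, tj):
    """Equilibrium profit (5) normalized by (a - c)^2 / b (assumed = 1 here)."""
    d = 3 - ti - tj - ti * tj
    return (1 - ti) * (1 - ti * tj) / d ** 2


def property_1_holds(lo, hi):
    """Check items (i)-(iii) of Property 1 for a pair lo < hi."""
    p_hh, p_ll = pi_star(hi, hi), pi_star(lo, lo)
    p_hl, p_lh = pi_star(hi, lo), pi_star(lo, hi)
    gamma = p_hh + p_ll - p_hl - p_lh
    return p_lh > p_hl and p_hh > p_ll and gamma < 0


# Grid -1.0, -0.9, ..., 0.9; theta = 1 is excluded because (5) is undefined at theta_i = theta_j = 1.
grid = [round(-1 + 0.1 * k, 10) for k in range(20)]
violations = [(lo, hi) for lo, hi in combinations(grid, 2) if not property_1_holds(lo, hi)]
print(len(violations))
```

`itertools.combinations` returns the pairs in grid order, so each pair automatically satisfies lo < hi.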

The following result on the pure-strategy Nash equilibria of the game above, and on its classification, follows from Property 1.

Proposition 1

The bimatrix game under consideration always has a Nash equilibrium in pure strategies. Moreover, the bimatrix game above cannot be a coordination game, that is, \(\left( \overline{\theta },\overline{\theta }\right)\) and \(\left( \underline{\theta },\underline{\theta }\right)\) cannot be Nash equilibria in pure strategies at the same time. In addition:

- (I) The bimatrix game is a prisoner’s dilemma and its unique Nash equilibrium in pure strategies is \(\left( \underline{\theta },\underline{\theta }\right)\), when

$$\begin{aligned} \pi ^{*}\left( \underline{\theta },\underline{\theta }\right) >\pi ^{*}\left( \overline{\theta },\underline{\theta }\right) \quad \text {and}\quad \pi ^{*}\left( \overline{\theta },\overline{\theta }\right) <\pi ^{*}\left( \underline{\theta },\overline{\theta }\right) \end{aligned}$$(6)

- (II) The bimatrix game is an anti-coordination game and its Nash equilibria in pure strategies are \(\left( \overline{\theta },\underline{\theta }\right)\) and \(\left( \underline{\theta },\overline{\theta }\right)\), when

$$\begin{aligned} \pi ^{*}\left( \underline{\theta },\underline{\theta }\right)<\pi ^{*}\left( \overline{\theta },\underline{\theta }\right) \quad \text {and}\quad \pi ^{*}\left( \overline{\theta },\overline{\theta }\right) <\pi ^{*}\left( \underline{\theta },\overline{\theta }\right) \end{aligned}$$(7)

- (III) The bimatrix game is a prisoner’s delight game and its unique Nash equilibrium in pure strategies is \(\left( \overline{\theta },\overline{\theta }\right)\), when

$$\begin{aligned} \pi ^{*}\left( \underline{\theta },\underline{\theta }\right) <\pi ^{*}\left( \overline{\theta },\underline{\theta }\right) \quad \text {and}\quad \pi ^{*}\left( \overline{\theta },\overline{\theta }\right) >\pi ^{*}\left( \underline{\theta },\overline{\theta }\right) \end{aligned}$$(8)

Proof of Proposition 1

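By definition, the bimatrix game under consideration is a \(2\times 2\) symmetric game. Then, the existence of at least one pure-strategy Nash equilibrium follows from the results in Cheng et al. (2004). Moreover, the pure strategies \(\left( \overline{\theta },\overline{\theta }\right)\) and \(\left( \underline{\theta },\underline{\theta }\right)\) are both Nash equilibria if and only if

$$\begin{aligned} \pi ^{*}\left( \overline{\theta },\overline{\theta }\right) \ge \pi ^{*}\left( \underline{\theta },\overline{\theta }\right) \quad \text {and}\quad \pi ^{*}\left( \underline{\theta },\underline{\theta }\right) \ge \pi ^{*}\left( \overline{\theta },\underline{\theta }\right) \end{aligned}$$(9)

Summing these two inequalities gives \(\Gamma \ge 0\), which contradicts Property 1(iii). Therefore, according to the definition of a coordination game, the bimatrix game cannot belong to this category. Regarding (I), note that condition (6) and Property 1(ii) imply

$$\begin{aligned} \pi ^{*}\left( \underline{\theta },\overline{\theta }\right)>\pi ^{*}\left( \overline{\theta },\overline{\theta }\right)>\pi ^{*}\left( \underline{\theta },\underline{\theta }\right) >\pi ^{*}\left( \overline{\theta },\underline{\theta }\right) \end{aligned}$$(10)

It follows that, according to their definitions, \(\left( \underline{\theta },\underline{\theta }\right)\) is the unique Nash equilibrium (prisoner’s dilemma Nash equilibrium), and the bimatrix game is a prisoner’s dilemma game. Regarding (II), note that condition (7) implies that \(\left( \overline{\theta },\underline{\theta }\right)\) and \(\left( \underline{\theta },\overline{\theta }\right)\) are the only pure-strategy Nash equilibria of the game. Therefore, according to its definition, the bimatrix game is an anti-coordination game. Regarding (III), note that condition (8) and Property 1(i) imply

$$\begin{aligned} \pi ^{*}\left( \overline{\theta },\overline{\theta }\right)>\pi ^{*}\left( \underline{\theta },\overline{\theta }\right)>\pi ^{*}\left( \overline{\theta },\underline{\theta }\right) >\pi ^{*}\left( \underline{\theta },\underline{\theta }\right) \end{aligned}$$(11)

It follows that, according to their definitions, \(\left( \overline{\theta },\overline{\theta }\right)\) is the unique Nash equilibrium (prisoner’s delight Nash equilibrium), and the bimatrix game is a prisoner’s delight game. \(\square\)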
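Proposition 1 translates directly into a small classifier. The Python sketch below illustrates it, assuming the closed-form profit (5) normalized by \(\left( a-c\right) ^{2}/b=1\). The function name and the example pairs are hypothetical choices made for illustration only.

```python
def pi_star(ti, tj):
    """Equilibrium profit (5), normalized so that (a - c)^2 / b = 1 (an assumption)."""
    d = 3 - ti - tj - ti * tj
    return (1 - ti) * (1 - ti * tj) / d ** 2


def classify(lo, hi):
    """Classify the 2x2 attitude game for theta_underbar = lo < theta_bar = hi."""
    p_hh, p_ll = pi_star(hi, hi), pi_star(lo, lo)
    p_hl, p_lh = pi_star(hi, lo), pi_star(lo, hi)
    if p_ll > p_hl and p_hh < p_lh:
        return "prisoner's dilemma"   # condition (6)
    if p_ll < p_hl and p_hh < p_lh:
        return "anti-coordination"    # condition (7)
    if p_ll < p_hl and p_hh > p_lh:
        return "prisoner's delight"   # condition (8)
    # Remaining cases are payoff ties; a coordination game is ruled out by Proposition 1.
    return "non-generic"


for pair in [(-0.5, 0.5), (-0.5, 0.0), (-0.9, -0.5)]:
    print(pair, classify(*pair))
```

The three example pairs illustrate the three generic cases. All of them involve an aggressive \(\underline{\theta }<-1/3\), the range in which a non-dilemma outcome is possible in the one-population analysis below.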

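We now introduce mixed strategies. Denote by \(m_{1}\in \left[ 0,1\right]\) the probability that the row player chooses strategy \(\overline{\theta }\) and by \(m_{2}\in \left[ 0,1\right]\) the probability that the column player chooses strategy \(\overline{\theta }\). Then \(\left( m_{1}^{*},m_{2}^{*}\right)\), with \(m_{1}^{*},m_{2}^{*}\in \left( 0,1\right)\), is a mixed strategy Nash equilibrium of the game when

$$\begin{aligned} \bar{\pi }\left( 1,m_{2}^{*}\right) =\bar{\pi }\left( 0,m_{2}^{*}\right) \quad \text {and}\quad \bar{\pi }\left( 1,m_{1}^{*}\right) =\bar{\pi }\left( 0,m_{1}^{*}\right) \end{aligned}$$(12)

where

$$\begin{aligned} \bar{\pi }\left( 1,m\right) =m\,\pi ^{*}\left( \overline{\theta },\overline{\theta }\right) +\left( 1-m\right) \pi ^{*}\left( \overline{\theta },\underline{\theta }\right) \end{aligned}$$(13)

and

$$\begin{aligned} \bar{\pi }\left( 0,m\right) =m\,\pi ^{*}\left( \underline{\theta },\overline{\theta }\right) +\left( 1-m\right) \pi ^{*}\left( \underline{\theta },\underline{\theta }\right) \end{aligned}$$(14)

are the average profits of a player choosing \(\overline{\theta }\) and \(\underline{\theta }\), respectively, when the opponent chooses \(\overline{\theta }\) with probability m.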
Regarding the mixed-strategy Nash equilibria, the following result holds.

Proposition 2

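The mixed strategy Nash equilibrium \(\left( m_{1}^{*},m_{2}^{*}\right)\) is such that \(m_{1}^{*}=m_{2}^{*}=m^{*}\), where

$$\begin{aligned} m^{*}=\frac{\pi ^{*}\left( \underline{\theta },\underline{\theta }\right) -\pi ^{*}\left( \overline{\theta },\underline{\theta }\right) }{\pi ^{*}\left( \overline{\theta },\overline{\theta }\right) +\pi ^{*}\left( \underline{\theta },\underline{\theta }\right) -\pi ^{*}\left( \overline{\theta },\underline{\theta }\right) -\pi ^{*}\left( \underline{\theta },\overline{\theta }\right) } \end{aligned}$$(15)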
and it exists if and only if the bimatrix game is an anti-coordination game (case (II) of Proposition 1).

Proof of Proposition 2

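Assume that there exists a mixed strategy Nash equilibrium \(\left( m_{1}^{*},m_{2}^{*}\right)\) such that \(m_{1}^{*}\ne m_{2}^{*}\) with \(m_{1}^{*},m_{2}^{*}\in \left( 0,1\right)\). By definition of mixed strategy Nash equilibrium, each player is then indifferent between its two pure strategies, so the following relations hold:

$$\begin{aligned} \bar{\pi }\left( 1,m_{2}^{*}\right) =\bar{\pi }\left( 0,m_{2}^{*}\right) \quad \text {and}\quad \bar{\pi }\left( 1,m_{1}^{*}\right) =\bar{\pi }\left( 0,m_{1}^{*}\right) \end{aligned}$$(16)

that is, using the definitions of the average profits,

$$\begin{aligned} m_{j}^{*}\,\Gamma +\pi ^{*}\left( \overline{\theta },\underline{\theta }\right) -\pi ^{*}\left( \underline{\theta },\underline{\theta }\right) =0,\quad j=1,2 \end{aligned}$$(17)

Since \(\Gamma <0\) by Property 1(iii), the two equations in (17) have the same unique solution. Hence \(m_{1}^{*}=m_{2}^{*}\), which contradicts the assumption. This proves that if a mixed strategy Nash equilibrium exists, then it is symmetric, that is, it is given by \(\left( m^{*},m^{*}\right)\). Finally, note that

$$\begin{aligned} \bar{\pi }\left( 1,m\right) -\bar{\pi }\left( 0,m\right) =\Gamma m+\pi ^{*}\left( \overline{\theta },\underline{\theta }\right) -\pi ^{*}\left( \underline{\theta },\underline{\theta }\right) \end{aligned}$$(18)

Therefore, \(m^{*}\) must satisfy

$$\begin{aligned} \Gamma m^{*}+\pi ^{*}\left( \overline{\theta },\underline{\theta }\right) -\pi ^{*}\left( \underline{\theta },\underline{\theta }\right) =0 \end{aligned}$$(19)

from which we obtain

$$\begin{aligned} m^{*}=\frac{\pi ^{*}\left( \underline{\theta },\underline{\theta }\right) -\pi ^{*}\left( \overline{\theta },\underline{\theta }\right) }{\pi ^{*}\left( \overline{\theta },\overline{\theta }\right) +\pi ^{*}\left( \underline{\theta },\underline{\theta }\right) -\pi ^{*}\left( \overline{\theta },\underline{\theta }\right) -\pi ^{*}\left( \underline{\theta },\overline{\theta }\right) } \end{aligned}$$(20)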
From Property 1(iii), the denominator of \(m^{*}\) in (20) is negative, so that \(m^{*}>0\) if and only if \(\pi \left( \overline{\theta },\underline{\theta }\right) >\pi \left( \underline{\theta },\underline{\theta }\right)\). Moreover, \(m^{*}<1\) if and only if \(\pi \left( \underline{\theta },\overline{\theta }\right) >\pi \left( \overline{\theta },\overline{\theta }\right)\). By Proposition 1, it then follows that the inner equilibrium exists if and only if the bimatrix game is an anti-coordination game. \(\square\)
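The existence condition in Proposition 2 can be illustrated numerically. The Python sketch below computes the candidate \(m^{*}\) of (20), returns it only if it lies in (0, 1), and lets one check the indifference condition \(\bar{\pi }\left( 1,m^{*}\right) =\bar{\pi }\left( 0,m^{*}\right)\). It assumes the closed-form profit (5) normalized by \(\left( a-c\right) ^{2}/b=1\), which leaves \(m^{*}\) unchanged. The function names are hypothetical.

```python
def pi_star(ti, tj):
    """Equilibrium profit (5), normalized so that (a - c)^2 / b = 1 (an assumption)."""
    d = 3 - ti - tj - ti * tj
    return (1 - ti) * (1 - ti * tj) / d ** 2


def avg_profit(s, m, lo, hi):
    """pi_bar(s, m): average profit of theta_bar (s=1) or theta_underbar (s=0)
    against an opponent who plays theta_bar with probability m."""
    own = hi if s == 1 else lo
    return m * pi_star(own, hi) + (1 - m) * pi_star(own, lo)


def mixed_equilibrium(lo, hi):
    """Candidate m* from (20); returns None when it falls outside (0, 1)."""
    gamma = pi_star(hi, hi) + pi_star(lo, lo) - pi_star(hi, lo) - pi_star(lo, hi)
    m = (pi_star(lo, lo) - pi_star(hi, lo)) / gamma
    return m if 0 < m < 1 else None


for lo, hi in [(-0.5, 0.0), (-0.5, 0.5), (-0.9, -0.5)]:
    print(lo, hi, mixed_equilibrium(lo, hi))
```

Only the first pair, an anti-coordination game, yields an interior \(m^{*}\). The prisoner's dilemma and prisoner's delight pairs give values outside (0, 1), as the proof predicts.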

Summarizing, the action space of the game is given by \(\left( m_{1},m_{2}\right) \in \left[ 0,1\right] \times \left[ 0,1\right]\). To analyze the evolutionary stability of the Nash equilibria, we can introduce an adjustment process such as the exponential replicator dynamics; see, e.g., Hofbauer and Sigmund (2003). This mechanism describes how the probabilities \(m_{1}\) and \(m_{2}\) with which the two players adopt the more cooperative behavior evolve over time. Before introducing the exponential replicator dynamics, however, note what drives the spread of the different strategies under the evolutionary mechanism. It is the profit obtained by playing them, given in (2), and not the value of the extended objective functions, which add or subtract part of the competitor’s profit and are given in (4). This assumption follows the so-called indirect evolutionary approach; see Königstein and Müller (2000), Königstein and Müller (2001) and Kopel et al. (2014). Indirect evolution thus places the actions of individual agents, which may result from the maximization of certain objective functions, on a different plane from the evolutionary success of the available strategies.

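Then, as usual in evolutionary game theory, the system of difference equations that describes the evolution of the probabilities \(m_{1}\) and \(m_{2}\) is given by

$$\begin{aligned} m_{i}\left( t+1\right) =\frac{m_{i}\left( t\right) e^{\beta \bar{\pi }\left( 1,m_{-i}\left( t\right) \right) }}{m_{i}\left( t\right) e^{\beta \bar{\pi }\left( 1,m_{-i}\left( t\right) \right) }+\left( 1-m_{i}\left( t\right) \right) e^{\beta \bar{\pi }\left( 0,m_{-i}\left( t\right) \right) }},\quad i=1,2 \end{aligned}$$(21)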
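where \(\beta >0\) is the intensity of choice under the exponential replicator. Moreover, \(\bar{\pi }\left( 1,m_{-i}\left( t\right) \right)\) is the average profit of player i when it is cooperative (plays \(\overline{\theta }\)), and \(\bar{\pi }\left( 0,m_{-i}\left( t\right) \right)\) is its average profit when it is aggressive (plays \(\underline{\theta }\)). Both are computed given that its opponent plays \(\overline{\theta }\) with probability \(m_{-i}\left( t\right)\).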
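System (21) can be iterated numerically. The Python sketch below is one possible implementation of the two-population exponential replicator. It assumes the closed-form profits (5) normalized by \(\left( a-c\right) ^{2}/b=1\); with other parameter values, \(\beta\) simply rescales. The helper names, \(\beta =10\), the \(\theta\) pairs and the initial conditions are hypothetical, illustrative choices.

```python
import math


def pi_star(ti, tj):
    """Equilibrium profit (5), normalized so that (a - c)^2 / b = 1 (an assumption)."""
    d = 3 - ti - tj - ti * tj
    return (1 - ti) * (1 - ti * tj) / d ** 2


def avg_profit(s, m_opp, lo, hi):
    """Average profit of a player using theta_bar (s=1) or theta_underbar (s=0)
    when the opponent uses theta_bar with probability m_opp."""
    own = hi if s == 1 else lo
    return m_opp * pi_star(own, hi) + (1 - m_opp) * pi_star(own, lo)


def replicator_step(m1, m2, beta, lo, hi):
    """One iteration of the two-population exponential replicator (21)."""
    def update(m_own, m_opp):
        w1 = m_own * math.exp(beta * avg_profit(1, m_opp, lo, hi))
        w0 = (1 - m_own) * math.exp(beta * avg_profit(0, m_opp, lo, hi))
        return w1 / (w1 + w0)
    return update(m1, m2), update(m2, m1)


def simulate(m1, m2, beta, lo, hi, steps):
    """Return the orbit [(m1(0), m2(0)), ..., (m1(steps), m2(steps))]."""
    orbit = [(m1, m2)]
    for _ in range(steps):
        m1, m2 = replicator_step(m1, m2, beta, lo, hi)
        orbit.append((m1, m2))
    return orbit


# Prisoner's dilemma example (lo = -0.5, hi = 0.5): both probabilities decay toward E_{0,0}.
print(simulate(0.5, 0.5, beta=10.0, lo=-0.5, hi=0.5, steps=200)[-1])
# Off-diagonal start in an anti-coordination example (lo = -0.5, hi = 0.0).
print(simulate(0.6, 0.2, beta=10.0, lo=-0.5, hi=0.0, steps=200)[-1])
```

An orbit that starts on the diagonal \(m_{1}=m_{2}\) stays there, because both players' updates are then identical. Starting off the diagonal lets one explore the full two-population dynamics.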

Denote by \(E_{0,0}\), \(E_{1,1}\), \(E_{1,0}\) and \(E_{0,1}\) the points \(\left( 0,0\right)\), \(\left( 1,1\right)\), \(\left( 1,0\right)\) and \(\left( 0,1\right)\), respectively. These four points are always equilibria of the system of exponential replicator dynamics (21). They correspond, respectively, to the following actions of the bimatrix game:

- \(\left( \underline{\theta },\underline{\theta }\right)\): the two players are aggressive;
- \(\left( \overline{\theta },\overline{\theta }\right)\): the two players are cooperators;
- \(\left( \overline{\theta },\underline{\theta }\right)\): the row player is a cooperator while the column player is aggressive;
- \(\left( \underline{\theta },\overline{\theta }\right)\): the row player is aggressive while the column player is a cooperator.

Moreover, with \(m^{*}\) as in Proposition 2, system (21) can have only one additional equilibrium, given by \(\left( m^{*},m^{*}\right)\) and denoted by \(E_{m^{*},m^{*}}\). As we will see, it is an equilibrium if and only if it is also a mixed-strategy Nash equilibrium of the game above. Regarding the relationship between the equilibria of the system of exponential replicator dynamics (21) and the Nash equilibria of the game above, we point out the following.

Remark 2

By the Folk Theorem of Evolutionary Game Theory, see, e.g., Cressman and Tao (2014) or Hofbauer and Sigmund (1998), all Nash equilibria of the game above are also equilibria of the exponential replicator dynamics (21), while not all equilibria of the exponential replicator dynamics (21) are Nash equilibria of the original game. However, if an equilibrium of the exponential replicator dynamics (21) is locally asymptotically stable, then it is also a Nash equilibrium.

The setup analyzed so far corresponds to the one in Bischi and Lamantia (2022). However, instead of addressing the full-fledged model (21), Bischi and Lamantia (2022) further assume that \(m_{1}\left( 0\right) =m_{2}\left( 0\right) =m\left( 0\right)\). Since the bimatrix game above is symmetric, this implies \(m_{1}\left( t\right) =m_{2}\left( t\right) =m\left( t\right)\) for all \(t>0\). Therefore, Bischi and Lamantia (2022) analyze the dynamics of model (21) only in an invariant subspace of the action space, namely on the diagonal \(m_{1}=m_{2}\) of the state space \(\left[ 0,1\right] \times \left[ 0,1\right]\). This does not, however, provide information about the evolutionary stability of the Nash equilibria in the case of an anti-coordination game, which requires the analysis of the full-fledged model (21). Before analyzing the full-fledged model (21), we recap in the next section the main results in Bischi and Lamantia (2022).

3 Evolutionary Setting with One Population

Let us start by investigating the dynamics of the evolutionary model (21) on the invariant set \(m_{1}=m_{2}\). To do so, let us assume \(m_{1}\left( 0\right) =m_{2}\left( 0\right)\), which implies \(m_{1}\left( t\right) =m_{2}\left( t\right)\) for all \(t\in \mathbb {N}\), and let us set \(m_{1}\left( t\right) =m_{2}\left( t\right) =m\left( t\right)\). Then, we recover the one-population setup investigated in Bischi and Lamantia (2022), in which the probability \(m\left( t\right)\) is updated by an exponential replicator equation. That is, the dynamics of \(m\left( t\right)\) is modeled by the one-dimensional map⁵

$$\begin{aligned} m\left( t+1\right) =\frac{m\left( t\right) }{m\left( t\right) +\left( 1-m\left( t\right) \right) e^{-\beta F\left( m\left( t\right) \right) }} \end{aligned}$$(22)
where \(\beta >0\) is again the intensity of choice under the exponential replicator, while \(F\left( m\left( t\right) \right) =\bar{\pi }\left( 1,m\left( t\right) \right) -\bar{\pi }\left( 0,m\left( t\right) \right)\) is the difference between average profits. That is, F is the average competitive advantage of playing \(\overline{\theta }\) over \(\underline{\theta }\) given the probability \(m\left( t\right)\) that the competitor chooses \(\overline{\theta }\). In a more compact form, F(m(t)) can be rewritten as

$$\begin{aligned} F\left( m\left( t\right) \right) =\Gamma m\left( t\right) +\Psi \end{aligned}$$(23)
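where \(\Gamma = \pi ^{*}\left( \overline{\theta },\overline{\theta }\right) +\pi ^{*}\left( \underline{\theta },\underline{\theta }\right) - \pi ^{*}\left( \overline{\theta },\underline{\theta }\right) -\pi ^{*}\left( \underline{\theta },\overline{\theta }\right)\), already defined in Property 1(iii), becomes, using (5),

$$\begin{aligned} \Gamma =\frac{\left( a-c\right) ^{2}}{b}\left[ \frac{1+\overline{\theta }}{\left( 3+\overline{\theta }\right) ^{2}}+\frac{1+\underline{\theta }}{\left( 3+\underline{\theta }\right) ^{2}}-\frac{\left( 1-\overline{\theta }\,\underline{\theta }\right) \left( 2-\overline{\theta }-\underline{\theta }\right) }{\left( 3-\overline{\theta }-\underline{\theta }-\overline{\theta }\,\underline{\theta }\right) ^{2}}\right] \end{aligned}$$(24)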
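and \(\Psi = \pi ^{*}\left( \overline{\theta },\underline{\theta }\right) -\pi ^{*}\left( \underline{\theta },\underline{\theta }\right)\) becomes

$$\begin{aligned} \Psi =\frac{\left( a-c\right) ^{2}}{b}\left[ \frac{\left( 1-\overline{\theta }\right) \left( 1-\overline{\theta }\,\underline{\theta }\right) }{\left( 3-\overline{\theta }-\underline{\theta }-\overline{\theta }\,\underline{\theta }\right) ^{2}}-\frac{1+\underline{\theta }}{\left( 3+\underline{\theta }\right) ^{2}}\right] \end{aligned}$$(25)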
Equation (23) shows a linear relationship, with slope \(\Gamma\), between the average advantage of playing \(\overline{\theta }\) over \(\underline{\theta }\) and the share of players playing \(\overline{\theta }\). By Property 1(iii), \(\Gamma\) is negative under all possible parameter configurations, regardless of the signs of \(\overline{\theta }\) and \(\underline{\theta }\), so the relationship is decreasing. In other words, the greater the proportion of agents employing \(\overline{\theta }\), the smaller the expected payoff differential of the more cooperative strategy versus the more aggressive strategy. The constant \(\Psi\) in (23) measures the benefit (when \(\Psi >0\)) or loss (when \(\Psi <0\)) associated with playing \(\overline{\theta }\) rather than \(\underline{\theta }\) when the opponent plays \(\underline{\theta }\).
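For completeness, the linear form (23) can be checked directly from the definitions of the average profits, \(\bar{\pi }\left( 1,m\right) =m\,\pi ^{*}\left( \overline{\theta },\overline{\theta }\right) +\left( 1-m\right) \pi ^{*}\left( \overline{\theta },\underline{\theta }\right)\) and \(\bar{\pi }\left( 0,m\right) =m\,\pi ^{*}\left( \underline{\theta },\overline{\theta }\right) +\left( 1-m\right) \pi ^{*}\left( \underline{\theta },\underline{\theta }\right)\). The following worked step is a sketch added for illustration:

$$\begin{aligned} F\left( m\right) &=\bar{\pi }\left( 1,m\right) -\bar{\pi }\left( 0,m\right) \\ &=m\left[ \pi ^{*}\left( \overline{\theta },\overline{\theta }\right) -\pi ^{*}\left( \underline{\theta },\overline{\theta }\right) \right] +\left( 1-m\right) \left[ \pi ^{*}\left( \overline{\theta },\underline{\theta }\right) -\pi ^{*}\left( \underline{\theta },\underline{\theta }\right) \right] \\ &=m\underbrace{\left[ \pi ^{*}\left( \overline{\theta },\overline{\theta }\right) +\pi ^{*}\left( \underline{\theta },\underline{\theta }\right) -\pi ^{*}\left( \overline{\theta },\underline{\theta }\right) -\pi ^{*}\left( \underline{\theta },\overline{\theta }\right) \right] }_{\Gamma }+\underbrace{\pi ^{*}\left( \overline{\theta },\underline{\theta }\right) -\pi ^{*}\left( \underline{\theta },\underline{\theta }\right) }_{\Psi } \end{aligned}$$

In particular, \(F\left( 0\right) =\Psi\) and \(F\left( 1\right) =\Gamma +\Psi\). These are the values that govern the boundary equilibria \(E_{0}\) and \(E_{1}\).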

In the following we shall see that the sign of \(\Psi\) depends on the specific parameter configuration and affects the existence and stability of the equilibria of the exponential replicator (22). Specifically, \(E_{0}=0\) and \(E_{1}=1\) are always equilibria of the map. They refer to the actions \(\left( \underline{\theta },\underline{\theta }\right)\) (the two players are aggressive) and \(\left( \overline{\theta },\overline{\theta }\right)\) (the two players are cooperators), respectively. Moreover, \(E_{0}\) and \(E_{1}\) correspond, respectively, to the equilibria \(E_{0,0}\) and \(E_{1,1}\) of the map (21). It is possible to prove that \(E_{0}\), resp. \(E_{1}\), is locally asymptotically stable if and only if \(E_{0,0}\), resp. \(E_{1,1}\), is locally asymptotically stable.⁶ Therefore, if \(E_{0}\), resp. \(E_{1}\), is locally asymptotically stable, then \(\left( \underline{\theta },\underline{\theta }\right)\), resp. \(\left( \overline{\theta },\overline{\theta }\right)\), is a Nash equilibrium of the game.
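The local stability of the boundary equilibria can be read off the derivative of the one-dimensional map. Write the exponential replicator as \(f\left( m\right) =m/\left[ m+\left( 1-m\right) e^{-\beta F\left( m\right) }\right]\), with \(F\left( m\right) =\Gamma m+\Psi\) as in (23). A direct differentiation, sketched here for illustration, gives the multipliers

$$\begin{aligned} f^{\prime }\left( 0\right) =e^{\beta F\left( 0\right) }=e^{\beta \Psi },\qquad f^{\prime }\left( 1\right) =e^{-\beta F\left( 1\right) }=e^{-\beta \left( \Gamma +\Psi \right) } \end{aligned}$$

Both multipliers are positive. Hence, for every \(\beta >0\), \(E_{0}\) is locally asymptotically stable when \(\Psi <0\) and \(E_{1}\) when \(\Psi >-\Gamma\), consistent with the conditions listed in Proposition 3.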

The same considerations do not hold for the inner equilibrium. The unique inner equilibrium of the exponential replicator (22) is given by \(m^{*}\) and will be denoted by \(E_{m^{*}}\). This equilibrium represents the mixed strategy Nash equilibrium \(\left( m^{*},m^{*}\right)\) of the game above and is related to the equilibrium \(E_{m^{*},m^{*}}\) of model (21). However, when \(E_{m^{*}}\) is locally asymptotically stable for (22), \(E_{m^{*},m^{*}}\) can be a stable equilibrium, but it can also be a saddle. In the latter case, the evolutionarily stable Nash equilibria of the game will be different from \(\left( m^{*},m^{*}\right)\). Therefore, by investigating (22) we obtain only partial information about the Nash equilibria of the game above and their evolutionary stability.

That said, the inner equilibrium \(E_{m^{*}}\) must satisfy the iso-profit condition \(\bar{\pi }\left( 1,m^{*}\right) =\bar{\pi }\left( 0,m^{*}\right)\), that is, \(F\left( m^{*}\right) =0\). From the latter condition we get

$$\begin{aligned} m^{*}=-\frac{\Psi }{\Gamma } \end{aligned}$$(26)
Note that, due to the symmetric setting of the game that we assume, \(m^{*}\) is independent of the economic parameters a, b, c as well as of the intensity of choice \(\beta\). In terms of the aggregate parameters \(\Psi\) and \(\Gamma\), \(m^{*}\) is feasible, i.e., \(m^{*}\in \left( 0,1\right)\), when \(0<\Psi <-\Gamma\) (recall that \(\Gamma <0\) for all parameter values).
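The stability of \(E_{m^{*}}\) under (22) depends on \(\beta\). Differentiating the map at \(m^{*}\), where \(F\left( m^{*}\right) =0\), gives the multiplier \(1+\beta m^{*}\left( 1-m^{*}\right) \Gamma\). This multiplier equals -1 at the threshold \(\beta _{flip}=2\Gamma /\left( \Psi \left( \Gamma +\Psi \right) \right)\) reported in Proposition 3.

The Python sketch below checks this numerically for one anti-coordination example. It assumes the profits (5) normalized by \(\left( a-c\right) ^{2}/b=1\); \(\beta _{flip}\) scales inversely with that factor. It uses a central finite difference for the multiplier, and all helper names are hypothetical.

```python
import math


def pi_star(ti, tj):
    """Equilibrium profit (5), normalized so that (a - c)^2 / b = 1 (an assumption)."""
    d = 3 - ti - tj - ti * tj
    return (1 - ti) * (1 - ti * tj) / d ** 2


def gamma_psi(lo, hi):
    """Aggregate parameters Gamma (Property 1(iii)) and Psi of (23)."""
    gamma = pi_star(hi, hi) + pi_star(lo, lo) - pi_star(hi, lo) - pi_star(lo, hi)
    psi = pi_star(hi, lo) - pi_star(lo, lo)
    return gamma, psi


def one_pop_map(m, beta, gamma, psi):
    """Map (22): m -> m / (m + (1 - m) exp(-beta F(m))), with F(m) = Gamma m + Psi."""
    return m / (m + (1 - m) * math.exp(-beta * (gamma * m + psi)))


def multiplier(m, beta, gamma, psi, h=1e-6):
    """Central finite-difference estimate of the derivative of (22) at m."""
    return (one_pop_map(m + h, beta, gamma, psi) - one_pop_map(m - h, beta, gamma, psi)) / (2 * h)


gamma, psi = gamma_psi(-0.5, 0.0)  # anti-coordination example: 0 < Psi < -Gamma
m_star = -psi / gamma              # inner equilibrium (26)
beta_flip = 2 * gamma / (psi * (gamma + psi))
# Analytically, the multiplier at m* is 1 + beta * m* (1 - m*) * Gamma, equal to -1 at beta_flip.
print(m_star, beta_flip, multiplier(m_star, beta_flip, gamma, psi))
```

Below the threshold, for instance at half of \(\beta _{flip}\), the multiplier lies inside (-1, 1) and \(E_{m^{*}}\) is stable along the diagonal.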

The following proposition summarizes the stability of the different equilibria for the map (22).

Proposition 3

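(Stability of the equilibria of the one-population model and their bifurcations) Consider map (22) and define the following functions of \(\underline{\theta }\)

$$\begin{aligned} G\left( \underline{\theta }\right) =\frac{3+6\underline{\theta }-\underline{\theta }^{2}}{3\underline{\theta }^{2}+6\underline{\theta }-1}\quad \text {and}\quad H\left( \underline{\theta }\right) =\frac{3\left( 1-\underline{\theta }\right) -2\sqrt{3\underline{\theta }^{2}-2\underline{\theta }+3}}{3\underline{\theta }+1} \end{aligned}$$(27)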
-

For \(\Psi <0\) (equivalently, \(\pi \left( \underline{\theta },\underline{\theta }\right) >\pi \left( \overline{\theta },\underline{\theta }\right)\) and \(\pi \left( \overline{\theta },\overline{\theta }\right) <\pi \left( \underline{\theta },\overline{\theta }\right)\)), that is when

$$\begin{aligned} G\left( \underline{\theta }\right) <\overline{\theta }\le 1 \quad \text {or} \quad \underline{\theta }\ \ge -\frac{1}{3} \end{aligned}$$(28)the boundary equilibrium \(E_{0}\) is locally asymptotically stable and \(E_{1}\) is unstable.

-

At \(\Psi =0\) (equivalently, \(\pi \left( \underline{\theta },\underline{\theta }\right) =\pi \left( \overline{\theta },\underline{\theta }\right)\) and \(\pi \left( \overline{\theta },\overline{\theta }\right) <\pi \left( \underline{\theta },\overline{\theta }\right)\)), that is when

$$\begin{aligned} \overline{\theta }=G\left( \underline{\theta }\right) \quad \text {with} \quad -1\le \underline{\theta }< -\frac{1}{3} \end{aligned}$$(29)a transcritical bifurcation occurs at which the inner equilibrium \(E_{m^{*}}\) is created, with an exchange of stability between \(E_{m^{*}}\) and \(E_{0}\).

-

For \(0<\Psi <-\Gamma\) (equivalently, \(\pi \left( \underline{\theta },\underline{\theta }\right) <\pi \left( \overline{\theta },\underline{\theta }\right)\) and \(\pi \left( \overline{\theta },\overline{\theta }\right) <\pi \left( \underline{\theta },\overline{\theta }\right)\)), that is when

$$\begin{aligned} H\left( \underline{\theta }\right)<\overline{\theta }<G\left( \underline{\theta }\right) \quad \text {with} \quad -1\le \underline{\theta }< -\frac{1}{3} \end{aligned}$$(30)the interior equilibrium \(E_{m^{*}}\) is locally asymptotically stable provided that

$$\begin{aligned} \beta <\beta _{flip}=\frac{2 \Gamma }{\Psi (\Gamma +\Psi )} \end{aligned}$$(31)and \(E_{m^{*}}\) loses stability through a flip bifurcation at \(\beta =\beta _{flip}\).

-

At \(\Psi =-\Gamma\) (equivalently, \(\pi \left( \underline{\theta },\underline{\theta }\right) <\pi \left( \overline{\theta },\underline{\theta }\right)\) and \(\pi \left( \overline{\theta },\overline{\theta }\right) =\pi \left( \underline{\theta },\overline{\theta }\right)\)), that is at

$$\begin{aligned} \overline{\theta }=H\left( \underline{\theta }\right) \quad \text {with} \quad -1 \le \underline{\theta }< -\frac{1}{3} \end{aligned}$$(32)a transcritical bifurcation occurs at which the inner equilibrium \(E_{m^{*}}\) merges with \(E_{1}\).

-

For \(\Psi >-\Gamma\) (equivalently, \(\pi \left( \underline{\theta },\underline{\theta }\right) <\pi \left( \overline{\theta },\underline{\theta }\right)\) and \(\pi \left( \overline{\theta },\overline{\theta }\right) >\pi \left( \underline{\theta },\overline{\theta }\right)\)), that is when

$$\begin{aligned} \overline{\theta }<H\left( \underline{\theta }\right) \quad \text {with} \quad -1 \le \underline{\theta }< -\frac{1}{3} \end{aligned}$$(33)\(E_{m^{*}}\) leaves the unitary interval \(\left[ 0,1\right]\), the boundary equilibrium \(E_{1}\) is locally asymptotically stable and \(E_{0}\) is unstable.

Proof of Proposition 3

The eigenvalue at a generic \(m\in \left[ 0,1\right]\) of the map (22) is:

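where \(\frac{\partial F}{\partial m}=\Gamma\). It follows that the eigenvalue associated to equilibrium \(E_{0}\) is

$$\begin{aligned} \lambda \left( 0\right) =e^{\beta F\left( 0\right) }=e^{\beta \Psi } \end{aligned}$$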

Since \(\beta >0\), this eigenvalue is smaller than one if and only if \(F\left( 0\right) <0\), that is, if and only if \(\bar{\pi }\left( 1,0\right) <\bar{\pi }\left( 0,0\right)\), or equivalently \(\Psi = \pi \left( \overline{\theta },\underline{\theta }\right) -\pi \left( \underline{\theta },\underline{\theta }\right) <0\), which implies by Property 1(iv) that \(\pi \left( \overline{\theta },\overline{\theta }\right) <\pi \left( \underline{\theta },\overline{\theta }\right)\). By straightforward algebra, \(\Psi <0\) if and only if one of the conditions in (28) holds. Moreover, the eigenvalue associated to equilibrium \(E_{1}\) is

$$\begin{aligned} \lambda \left( 1\right) =e^{-\beta F\left( 1\right) }=e^{-\beta \left( \Psi +\Gamma \right) } \end{aligned}$$

Since \(\beta >0\), this eigenvalue is smaller than one if and only if \(F\left( 1\right) >0\), that is, if and only if \(\bar{\pi }\left( 1,1\right) >\bar{\pi }\left( 0,1\right)\), or equivalently \(\Psi +\Gamma = \pi \left( \overline{\theta },\overline{\theta }\right) -\pi \left( \underline{\theta },\overline{\theta }\right) >0\), which implies by Property 1(v) that \(\Psi >0\). By straightforward algebra, \(\Psi >0\) and \(\Psi +\Gamma >0\) if and only if the condition in (33) holds. Since \(F\left( m^{*}\right) =0\) and \(\frac{\partial F\left( m\right) }{\partial m}<0\), the eigenvalue associated to the equilibrium \(m^{*}\) is

$$\begin{aligned} \lambda \left( m^{*}\right) =1+\beta \Gamma m^{*}\left( 1-m^{*}\right) =1-\beta \frac{\Psi \left( \Gamma +\Psi \right) }{\Gamma } \end{aligned}$$

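Since \(\Psi >0\), \(\Gamma <0\) and \(\Gamma +\Psi <0\) whenever \(m^{*}\in \left( 0,1\right)\), the condition \(\lambda \left( m^{*}\right) <1\) always holds. Therefore, the equilibrium is locally asymptotically stable when \(\lambda \left( m^{*}\right) >-1\), that is, when

$$\begin{aligned} \beta <\frac{2\Gamma }{\Psi \left( \Gamma +\Psi \right) }=\beta _{flip} \end{aligned}$$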

Finally, by Proposition 2 we know that \(m^{*}\in \left( 0,1\right)\) if and only if \(\pi \left( \underline{\theta },\underline{\theta }\right) <\pi \left( \overline{\theta },\underline{\theta }\right)\) and \(\pi \left( \overline{\theta },\overline{\theta }\right) <\pi \left( \underline{\theta },\overline{\theta }\right)\), which is equivalent to \(-\Gamma>\Psi >0\). By straightforward algebra, \(-\Gamma>\Psi >0\) if and only if the condition in (30) holds. This completes the proof. \(\square\)
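The eigenvalues used in the proof can be checked by finite differences. In this sketch we assume that map (22) is the standard exponential replicator \(m'=m/\left[ m+(1-m)e^{-\beta F(m)}\right]\) with \(F(m)=\Psi +\Gamma m\) (the map itself is not reproduced in this section), and the values of `PSI` and `GAMMA` are illustrative, chosen by us to satisfy \(0<\Psi <-\Gamma\).

```python
import math

PSI, GAMMA = 0.845, -3.595  # illustrative values with 0 < PSI < -GAMMA


def replicator(m, beta):
    """Assumed form of map (22): m' = m / (m + (1 - m) * exp(-beta * F(m)))."""
    fitness_gap = PSI + GAMMA * m  # F(m)
    return m / (m + (1.0 - m) * math.exp(-beta * fitness_gap))


def slope(m, beta, h=1e-6):
    """Finite-difference derivative of the map, one-sided at m = 0 and m = 1."""
    if m <= 0.0:
        return (replicator(h, beta) - replicator(0.0, beta)) / h
    if m >= 1.0:
        return (replicator(1.0, beta) - replicator(1.0 - h, beta)) / h
    return (replicator(m + h, beta) - replicator(m - h, beta)) / (2.0 * h)


beta = 1.0
m_star = -PSI / GAMMA
checks = {
    "E0": (slope(0.0, beta), math.exp(beta * PSI)),
    "E1": (slope(1.0, beta), math.exp(-beta * (PSI + GAMMA))),
    "Em*": (slope(m_star, beta), 1.0 - beta * PSI * (GAMMA + PSI) / GAMMA),
}
for name, (numeric, analytic) in checks.items():
    print(f"{name}: finite difference {numeric:.5f}, formula {analytic:.5f}")
```

With these values \(E_{0}\) and \(E_{1}\) are both unstable, while \(E_{m^{*}}\) is stable for \(\beta =1\), in line with the anti-coordination case of Proposition 3.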

Summarizing, Proposition 3 states that: 1) \(E_{0}\) is a locally asymptotically stable equilibrium of the exponential replicator dynamics (22), while the other equilibrium \(E_{1}\) is unstable, if and only if the bimatrix game is a prisoner's dilemma game; 2) \(E_{m^{*}}\) is a locally asymptotically stable equilibrium of the exponential replicator dynamics (22), while the other equilibria of (22), given by \(E_{0}\) and \(E_{1}\), are unstable, if and only if the bimatrix game above is an anti-coordination game and \(\beta <\beta _{flip}\); 3) \(E_{1}\) is a locally asymptotically stable equilibrium of the exponential replicator dynamics (22), while the other equilibrium \(E_{0}\) is unstable, if and only if the bimatrix game is a prisoner's delight game.
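The case distinction of Proposition 3 can be condensed into a small lookup. The sketch below takes \(\Psi\), \(\Gamma\) and \(\beta\) as inputs and returns the game type and the locally stable fixed points of (22); the enum names and the \((\Psi ,\Gamma )\) pairs in the example are illustrative choices of ours, and the bifurcation values \(\Psi =0\) and \(\Psi =-\Gamma\) are deliberately rejected rather than classified.

```python
from enum import Enum


class GameType(Enum):
    PRISONERS_DILEMMA = "prisoner's dilemma"
    ANTI_COORDINATION = "anti-coordination"
    PRISONERS_DELIGHT = "prisoner's delight"


def classify(psi, gamma, beta):
    """Game type and locally stable equilibria of map (22), as in Proposition 3."""
    if gamma >= 0:
        raise ValueError("Gamma < 0 is assumed throughout")
    if psi == 0 or psi == -gamma:
        raise ValueError("bifurcation point (transcritical case of Proposition 3)")
    if psi < 0:
        return GameType.PRISONERS_DILEMMA, ["E0"]
    if psi > -gamma:
        return GameType.PRISONERS_DELIGHT, ["E1"]
    beta_flip = 2 * gamma / (psi * (gamma + psi))
    # Beyond beta_flip no fixed point is stable: cycles or chaos around m*.
    return GameType.ANTI_COORDINATION, (["E_m*"] if beta < beta_flip else [])


for psi, gamma, beta in [(-0.26, -4.6, 1.0), (0.845, -3.595, 1.0),
                         (0.845, -3.595, 3.5), (1.278, -1.216, 1.0)]:
    game, stable = classify(psi, gamma, beta)
    print(f"Psi={psi}, Gamma={gamma}, beta={beta}: {game.value}, stable: {stable}")
```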

The results described so far are a direct extension of those in Bischi and Lamantia (2022). Indeed, in Bischi and Lamantia (2022) the admissible values of \(\underline{\theta }\) and \(\overline{\theta }\) are always of opposite sign, with \(-1 \le \underline{\theta }<0 \le \overline{\theta } \le 1\). Here we relax this assumption and allow \(\underline{\theta }\) and \(\overline{\theta }\) to have the same sign, that is, both attitudes can be aggressive (or both cooperative), to different degrees. This explains why the equilibrium \(E_{1}\), in which all agents choose the higher level \(\overline{\theta }\) (with \(\underline{\theta }<\overline{\theta }<0\)), can be stable here (case \(\Psi >-\Gamma\)), in contrast to what is shown in Bischi and Lamantia (2022). Put differently, imposing \(-1 \le \underline{\theta }<0 \le \overline{\theta } \le 1\) overlooks the case of a prisoner's delight game.

Regarding the global dynamics of the model (22) in the cases described in Proposition 3, note that \(F\left( m\right) =\Psi +\Gamma m\) is decreasing in m because \(\Gamma <0\). Hence the condition \(\Psi <0\) implies \(F\left( m\left( t\right) \right) \le F\left( 0\right) <0\), that is, the aggressive strategy dominates the cooperative strategy for each fraction m(t). Similarly, the condition \(\Psi >-\Gamma\) implies \(F\left( m\left( t\right) \right) \ge F\left( 1\right) =\Psi +\Gamma >0\), that is, the cooperative strategy dominates the aggressive strategy for each fraction \(m\left( t\right)\). In both cases, the evolution of strategies under the (exponential) replicator converges monotonically toward the dominant strategy.

Moreover, Proposition 3 shows that \(\underline{\theta } \ge -\frac{1}{3}\) implies \(\Psi <0\), so that only the equilibrium \(E_{0}\) is stable. Therefore, in the following we assume \(\underline{\theta } < -\frac{1}{3}\) to rule out uninteresting cases. In terms of nonlinear dynamics, the most interesting case of Proposition 3 is \(0<\Psi <-\Gamma\). A unique internal equilibrium \(m^*\) exists and no strategy is dominant for every share m of more cooperative players: the cooperative (hostile) strategy yields a higher expected utility when \(m(t)<m^*\) (\(m(t)>m^*\)). If the intensity of choice \(\beta\) is low enough, the underlying dynamic mechanism ensures convergence to the equilibrium \(m^*\). However, when agents switch more impulsively toward the better-performing strategy (higher \(\beta\)), the exponential replicator dynamics no longer guarantees convergence, because the share overshoots the equilibrium \(m^*\). From equation (38), it is straightforward to observe that \(\beta _{flip}>0\) only in the case of an anti-coordination game. In addition, (38) shows that the greater the difference in profits from playing differently than the opponent, the lower the \(\beta _{flip}\) required for instability.

This dynamic behavior is typical of such discrete-time models and is widely discussed in the literature, see, e.g., Hofbauer and Sigmund (2003), Harting and Radi (2020), Bischi and Lamantia (2022) and references therein.

We show some typical examples of the previous proposition through staircase diagrams. Let us fix parameters as \(a=4\), \(b=0.15\), \(c=0.5\), \(\underline{\theta }=-0.7\) and consider different values of \(\overline{\theta }\) and \(\beta\). Notice that \(G\left( -0.7\right) \approx 0.453083\) and \(H\left( -0.7\right) \approx -0.231258\). Thus, when \(\overline{\theta }>G\left( -0.7\right)\) (case \(\Psi <0\)), regardless of the value of \(\beta\), all agents will be aggressive in the long run, that is, they will augment their objective function by adding \(\underline{\theta }<0\) times the competitor's profit (see Fig. 1a, where \(\overline{\theta }=0.5\) and \(\beta =1\)).
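The thresholds \(G(-0.7)\) and \(H(-0.7)\) quoted above can be recovered numerically as the roots of \(\Psi =0\) and \(\Psi +\Gamma =0\) in \(\overline{\theta }\). The Python sketch below does this by bisection. It rests on our assumption that the reduced-form profit is proportional to \((1-\theta _i)(1-\theta _i\theta _j)/[4-(1+\theta _i)(1+\theta _j)]^2\) (linear demand \(p=a-bQ\), constant marginal cost c) and drops the positive factor \((a-c)^2/b\), which does not move the roots. The brackets are our own choice: both exclude the trivial root \(\overline{\theta }=\underline{\theta }\), at which \(\Psi\) and \(\Psi +\Gamma\) vanish identically.

```python
def scaled_profit(t_i, t_j):
    """Reduced-form profit divided by the positive factor (a - c)**2 / b.

    Assumption: linear inverse demand p = a - b*Q, constant marginal cost c.
    """
    d = 4.0 - (1.0 + t_i) * (1.0 + t_j)
    return (1.0 - t_i) * (1.0 - t_i * t_j) / d ** 2


def bisect(f, lo, hi, tol=1e-12):
    """Plain bisection; f(lo) and f(hi) must have opposite signs."""
    f_lo = f(lo)
    if f_lo * f(hi) > 0:
        raise ValueError("root not bracketed")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        f_mid = f(mid)
        if f_lo * f_mid <= 0:
            hi = mid
        else:
            lo, f_lo = mid, f_mid
    return 0.5 * (lo + hi)


T_LO = -0.7


def psi(t_hi):
    """Psi up to the factor (a - c)**2 / b: gain of t_hi against a t_lo opponent."""
    return scaled_profit(t_hi, T_LO) - scaled_profit(T_LO, T_LO)


def psi_plus_gamma(t_hi):
    """Psi + Gamma up to the same factor: gain of t_hi against a t_hi opponent."""
    return scaled_profit(t_hi, t_hi) - scaled_profit(T_LO, t_hi)


G = bisect(psi, 0.0, 1.0)              # Psi > 0 at 0 and < 0 at 1
H = bisect(psi_plus_gamma, -0.5, 0.0)  # Psi + Gamma > 0 at -0.5 and < 0 at 0
print(f"G(-0.7) = {G:.6f}, H(-0.7) = {H:.6f}")
```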

For \(H\left( -0.7\right)<\overline{\theta }<G\left( -0.7\right)\) (case \(0<\Psi <-\Gamma\)), the inner equilibrium \(m^*\) belongs to the interval (0, 1) and is locally asymptotically stable for a low value of \(\beta\). For the sake of discussion, let us fix \(\overline{\theta }=0.25\). In this particular case, equilibrium \(m^*\approx 0.235179\) is stable for \(\beta \in \left( 0, \beta _{flip}\right)\), where \(\beta _{flip} \approx 3.09308\), see Fig. 1b obtained with \(\beta =1\). Equilibrium \(m^*\) loses stability through a flip bifurcation and chaotic dynamics arise through a cascade of flip bifurcations for higher values of the intensity of choice, see Fig. 1c with \(\beta =3.5\), Fig. 1d with \(\beta =6\) and Fig. 1e with \(\beta =20\).

Finally, for \(\overline{\theta }<H\left( -0.7\right)\) (case \(\Psi >-\Gamma\)), the system admits only equilibria \(E_0\) (unstable) and \(E_1\) (stable), see Fig. 1f with \(\overline{\theta }=-0.25\) and \(\beta =1\).
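The three regimes just described can be reproduced by iterating map (22). The Python sketch below assumes, as our own choice since neither is reproduced in this section, linear inverse demand \(p=a-bQ\) with constant marginal cost and the standard exponential replicator form \(m'=m/\left[ m+(1-m)e^{-\beta F(m)}\right]\). It prints the last few iterates after a long transient for the parameter values of Fig. 1a, 1b, 1c and 1f.

```python
import math


def profit(t_i, t_j, a=4.0, b=0.15, c=0.5):
    """Assumed reduced-form profit (p = a - b*Q, constant marginal cost c)."""
    d = 4.0 - (1.0 + t_i) * (1.0 + t_j)
    return (a - c) ** 2 * (1.0 - t_i) * (1.0 - t_i * t_j) / (b * d ** 2)


def orbit(t_lo, t_hi, beta, m0=0.1, n_transient=2000, n_keep=4):
    """Iterate the assumed form of map (22); return the last n_keep iterates and m*."""
    psi = profit(t_hi, t_lo) - profit(t_lo, t_lo)
    gamma = profit(t_hi, t_hi) - profit(t_lo, t_hi) - psi
    m, tail = m0, []
    for t in range(n_transient + n_keep):
        m = m / (m + (1.0 - m) * math.exp(-beta * (psi + gamma * m)))
        if t >= n_transient:
            tail.append(m)
    return tail, -psi / gamma


# (t_hi, beta) as in Fig. 1a, 1b, 1c and 1f, with t_lo = -0.7
for t_hi, beta in [(0.5, 1.0), (0.25, 1.0), (0.25, 3.5), (-0.25, 1.0)]:
    tail, m_star = orbit(-0.7, t_hi, beta)
    print(f"t_hi={t_hi:+.2f}, beta={beta}: tail={[round(x, 4) for x in tail]}, m*={m_star:.4f}")
```

For \(\overline{\theta }=0.25\) the tail settles on \(m^{*}\) at \(\beta =1\) but keeps oscillating around it at \(\beta =3.5\), which lies above \(\beta _{flip}\approx 3.09308\).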

Staircase diagrams of map (22) with different initial conditions. Parameters: \(a=4\), \(b=0.15\), \(c=0.5\), \(\underline{\theta }=-0.7\). a \(\Psi <0\) with \(\overline{\theta }=0.5\) and \(\beta =1\). b \(0<\Psi <-\Gamma\) with \(\overline{\theta }=0.25\) and \(\beta =1\). c \(0<\Psi <-\Gamma\) with \(\overline{\theta }=0.25\) and \(\beta =3.5\). d \(0<\Psi <-\Gamma\) with \(\overline{\theta }=0.25\) and \(\beta =6\). e \(0<\Psi <-\Gamma\) with \(\overline{\theta }=0.25\) and \(\beta =20\). f \(\Psi >-\Gamma\) with \(\overline{\theta }=-0.25\) and \(\beta =1\)

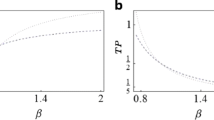

The bifurcation diagrams in Fig. 2 show the scenarios obtained as \(\overline{\theta }\) increases. In both cases, the intensity of choice is high enough for the inner equilibrium \(m^*\) to undergo a flip bifurcation and for chaotic dynamics to occur. In Fig. 2a the intensity of choice is \(\beta =6\), whereas in Fig. 2b, where \(\beta =20\), the chaotic attractor is wider.
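Data for a diagram like Fig. 2 can be generated by iterating map (22) over a grid of \(\overline{\theta }\) values and recording the long-run states. The sketch assumes, as our own choice, the reduced-form profit of linear Cournot competition (\(p=a-bQ\), constant marginal cost c) and the standard exponential replicator form of (22). The grid is open at \(\overline{\theta }=1\), because the assumed profit formula becomes 0/0 at \(\theta _i=\theta _j=1\); plotting is left to any scatter-plot tool.

```python
import math


def profit(t_i, t_j, a=4.0, b=0.15, c=0.5):
    """Assumed reduced-form profit (p = a - b*Q, constant marginal cost c)."""
    d = 4.0 - (1.0 + t_i) * (1.0 + t_j)
    return (a - c) ** 2 * (1.0 - t_i) * (1.0 - t_i * t_j) / (b * d ** 2)


def attractor_sample(t_hi, beta, t_lo=-0.7, m0=0.5, n_transient=3000, n_keep=64):
    """Long-run iterates of the assumed form of map (22) for one value of t_hi."""
    psi = profit(t_hi, t_lo) - profit(t_lo, t_lo)
    gamma = profit(t_hi, t_hi) - profit(t_lo, t_hi) - psi
    m, sample = m0, []
    for t in range(n_transient + n_keep):
        m = m / (m + (1.0 - m) * math.exp(-beta * (psi + gamma * m)))
        if t >= n_transient:
            sample.append(m)
    return sample


def bifurcation_data(beta, n_grid=200, t_lo=-0.7):
    """(t_hi, m) points for t_hi on an open grid of (t_lo, 1)."""
    points = []
    for k in range(1, n_grid + 1):
        t_hi = t_lo + (1.0 - t_lo) * k / (n_grid + 1)
        points.extend((t_hi, m) for m in attractor_sample(t_hi, beta, t_lo=t_lo))
    return points


data = bifurcation_data(beta=6.0)
print(len(data), "points; scatter-plot t_hi against m to obtain the diagram")
```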

Bifurcation diagrams of map (22) when \(0<\Psi <-\Gamma\) with varying \(\overline{\theta }\) and different intensities of choice. Parameters: \(a=4\), \(b=0.15\), \(c=0.5\), \(\underline{\theta }=-0.7\), \(\overline{\theta } \in \left( -0.7,1\right]\). a \(\beta =6\). b \(\beta =20\)

4 Two-Population Evolution

Let us now consider the full-fledged two-population framework given by the system of exponential replicator dynamics (21). The square \(\left[ 0,1\right] \times \left[ 0,1\right]\) is the domain of the two-population model, while the corner points \(E_{0,0}=\left( 0,0\right)\), \(E_{0,1}=\left( 0,1\right)\), \(E_{1,0}=\left( 1,0\right)\) and \(E_{1,1}=\left( 1,1\right)\) are the boundary equilibria. Under the condition specified in Proposition 2, the model admits a further inner equilibrium, \(E_{m^{*},m^{*}}\).

The following proposition characterizes the stability of the equilibria of the model (21). These results are closely connected with those for the one-population model in Proposition 3, and we therefore underline differences and analogies. In particular, we show that the inner equilibrium, whenever it exists, is unstable (either a repellor or a saddle) instead of being stable as it can be for the one-population model (22). Moreover, the corner equilibria \(E_{0,1}\) and \(E_{1,0}\) are overlooked by model (22), although they can be Nash equilibria of the bimatrix game above.

Proposition 4

(Stability of the equilibria of the two-population model and their bifurcations) Consider map (21) and the functions \(G\left( \underline{\theta }\right)\) and \(H\left( \underline{\theta }\right)\) defined in Proposition 3.

-

For \(\Psi <0\) (equivalently, \(\pi \left( \underline{\theta },\underline{\theta }\right) >\pi \left( \overline{\theta },\underline{\theta }\right)\) and \(\pi \left( \overline{\theta },\overline{\theta }\right) <\pi \left( \underline{\theta },\overline{\theta }\right)\)), that is when

$$\begin{aligned} G\left( \underline{\theta }\right) <\overline{\theta }\le 1 \quad \text {or} \quad \underline{\theta }\ge -\frac{1}{3} \end{aligned}$$(39)the corner equilibrium \(E_{0,0}\) is locally asymptotically stable whereas \(E_{1,0}\), \(E_{0,1}\) and \(E_{1,1}\) are unstable.

-

At \(\Psi =0\) (equivalently, \(\pi \left( \underline{\theta },\underline{\theta }\right) =\pi \left( \overline{\theta },\underline{\theta }\right)\) and \(\pi \left( \overline{\theta },\overline{\theta }\right) <\pi \left( \underline{\theta },\overline{\theta }\right)\)), that is when

$$\begin{aligned} \overline{\theta }=G\left( \underline{\theta }\right) \quad \text {with} \quad -1\le \underline{\theta }< -\frac{1}{3} \end{aligned}$$(40)\(E_{m^{*},m^{*}}\) merges with \(E_{0,0}\) (transcritical bifurcation) and the corner equilibria \(E_{0,1}\) and \(E_{1,0}\) undergo a bifurcation of eigenvalue 1.

-

For \(0<\Psi <-\Gamma\) (equivalently, \(\pi \left( \underline{\theta },\underline{\theta }\right) <\pi \left( \overline{\theta },\underline{\theta }\right)\) and \(\pi \left( \overline{\theta },\overline{\theta }\right) <\pi \left( \underline{\theta },\overline{\theta }\right)\)), that is when

$$\begin{aligned} H\left( \underline{\theta }\right)<\overline{\theta }<G\left( \underline{\theta }\right) \quad \text {with} \quad -1\le \underline{\theta }< -\frac{1}{3} \end{aligned}$$(41)\(E_{m^{*},m^{*}}\in \left[ 0,1\right] \times \left[ 0,1\right]\) is a saddle for \(\beta <\beta _{f}\), while it is a repellor for \(\beta >\beta _{f}\) and undergoes a bifurcation of eigenvalue \(-1\) (flip bifurcation) at \(\beta =\beta _{f}\), where

$$\begin{aligned} \beta _{f}:=\frac{2 \Gamma }{\Psi \left( \Gamma +\Psi \right) } \end{aligned}$$(42)while \(E_{0,1}\) and \(E_{1,0}\) are locally asymptotically stable and \(E_{0,0}\) and \(E_{1,1}\) are unstable.

-

At \(\Psi =-\Gamma\) (equivalently, \(\pi \left( \underline{\theta },\underline{\theta }\right) <\pi \left( \overline{\theta },\underline{\theta }\right)\) and \(\pi \left( \overline{\theta },\overline{\theta }\right) =\pi \left( \underline{\theta },\overline{\theta }\right)\)), that is at

$$\begin{aligned} \overline{\theta }=H\left( \underline{\theta }\right) \quad \text {with} \quad -1 \le \underline{\theta }< -\frac{1}{3} \end{aligned}$$(43)\(E_{m^{*},m^{*}}\) merges with \(E_{1,1}\) (transcritical bifurcation).

-

For \(\Psi >-\Gamma\) (equivalently, \(\pi \left( \underline{\theta },\underline{\theta }\right) <\pi \left( \overline{\theta },\underline{\theta }\right)\) and \(\pi \left( \overline{\theta },\overline{\theta }\right) >\pi \left( \underline{\theta },\overline{\theta }\right)\)), that is when

$$\begin{aligned} \overline{\theta }<H\left( \underline{\theta }\right) \quad \text {with} \quad -1 \le \underline{\theta }< -\frac{1}{3} \end{aligned}$$(44)\(E_{1,1}\) is locally asymptotically stable, \(E_{m^{*},m^{*}}\notin \left[ 0,1\right] \times \left[ 0,1\right]\), whereas \(E_{0,0}\), \(E_{0,1}\) and \(E_{1,0}\) are unstable.

Proof of Proposition 4

Let us denote the one-time advance operator by \(^{'}\). We have that:

where

The Jacobian matrix of system (21) is given by:

It follows that:

Therefore, the eigenvalues associated to \(E_{0,0}\) are \(\lambda _{1,2}^{E_{0,0}}=e^{\beta \Psi }>0\), and \(\lambda _{1,2}^{E_{0,0}}<1\) if and only if \(\Psi <0\). Moreover, we have that

Therefore, the eigenvalues associated to \(E_{1,1}\) are \(\lambda _{1,2}^{E_{1,1}}=e^{-\beta \left( \Gamma + \Psi \right) }>0\), and \(\lambda _{1,2}^{E_{1,1}}<1\) if and only if \(\Gamma + \Psi >0\). Regarding \(E_{1,0}\), we have that:

In this case, the eigenvalues associated to \(E_{1,0}\) are \(\lambda _{1}^{E_{1,0}}=e^{-\beta \Psi }>0\) and \(\lambda _{2}^{E_{1,0}}=e^{\beta \left( \Gamma + \Psi \right) }>0\), and both are smaller than one if and only if \(\Psi >0\) and \(\Gamma + \Psi <0\). By symmetric arguments, we have that:

with eigenvalues \(\lambda _{1}^{E_{0,1}}=\lambda _{2}^{E_{1,0}}\) and \(\lambda _{2}^{E_{0,1}}=\lambda _{1}^{E_{1,0}}\). It follows that \(E_{0,1}\) is locally asymptotically stable if and only if \(E_{1,0}\) is so. Furthermore, it is easy to verify that:

with eigenvalues

In particular, the eigenvalue \(\lambda _{1}=1+\frac{\Psi }{\Gamma } \beta \left| \Gamma +\Psi \right| <1\), with eigenvector along the diagonal \(m_{2}=m_{1}\) of \(\left[ 0,1\right] ^{2}\), causes a bifurcation of eigenvalue \(-1\), which occurs for \(\beta = \beta _{f}\). Numerical simulations confirm that this bifurcation of eigenvalue \(-1\) is a flip bifurcation (Footnote 7). The other eigenvalue is \(\lambda _2>1\), with the associated eigenvector along the direction \(\left( 1,-1\right)\), that is, parallel to the anti-diagonal of \(\left[ 0,1\right] ^{2}\). To complete the proof, note that \(\Psi <0\) if and only if one of the conditions in (39) is satisfied, \(-\Gamma>\Psi >0\) if and only if the conditions in (41) are satisfied and, finally, \(\Psi >-\Gamma\) if and only if the conditions in (44) are satisfied. \(\square\)
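The saddle/repellor structure of \(E_{m^{*},m^{*}}\) can be illustrated numerically. Assuming, since (21) is not reproduced in this section, that it consists of two coupled exponential replicators in which each population responds to \(F\) evaluated at the other population's share, the sketch computes a central finite-difference Jacobian at \((m^{*},m^{*})\) and its eigenvalues. `PSI` and `GAMMA` are illustrative values of our choosing in the anti-coordination range.

```python
import math

PSI, GAMMA = 0.845, -3.595  # illustrative values with 0 < PSI < -GAMMA


def two_pop_map(m1, m2, beta1, beta2):
    """Assumed form of (21): each exponential replicator is driven by F
    evaluated at the other population's share."""
    def update(m_own, m_other, beta):
        gap = PSI + GAMMA * m_other  # F(m_other)
        return m_own / (m_own + (1.0 - m_own) * math.exp(-beta * gap))
    return update(m1, m2, beta1), update(m2, m1, beta2)


def jacobian(m1, m2, beta1, beta2, h=1e-6):
    """Central finite-difference Jacobian entries (j11, j12, j21, j22)."""
    p = two_pop_map(m1 + h, m2, beta1, beta2)
    q = two_pop_map(m1 - h, m2, beta1, beta2)
    j11, j21 = [(p[k] - q[k]) / (2 * h) for k in (0, 1)]
    p = two_pop_map(m1, m2 + h, beta1, beta2)
    q = two_pop_map(m1, m2 - h, beta1, beta2)
    j12, j22 = [(p[k] - q[k]) / (2 * h) for k in (0, 1)]
    return j11, j12, j21, j22


def eigenvalues_2x2(j11, j12, j21, j22):
    """Eigenvalues of a 2x2 matrix with real spectrum, in increasing order."""
    half_trace = 0.5 * (j11 + j22)
    root = math.sqrt(half_trace ** 2 - (j11 * j22 - j12 * j21))
    return half_trace - root, half_trace + root


m_star = -PSI / GAMMA
beta_f = 2 * GAMMA / (PSI * (GAMMA + PSI))
for beta in (1.0, beta_f, 6.0):
    lam1, lam2 = eigenvalues_2x2(*jacobian(m_star, m_star, beta, beta))
    print(f"beta={beta:.4f}: lambda1={lam1:.4f}, lambda2={lam2:.4f}")
```

For \(\beta <\beta _{f}\) one eigenvalue lies in \((-1,1)\) and the other exceeds one (saddle); at \(\beta =\beta _{f}\) the smaller one equals \(-1\); beyond it both lie outside the unit circle (repellor).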

To summarize, Proposition 4 shows that: 1) \(E_{0,0}\) is a locally asymptotically stable equilibrium of the exponential replicator dynamics (21), while the other equilibria \(E_{1,1}\), \(E_{0,1}\) and \(E_{1,0}\) are unstable, if and only if the bimatrix game is a prisoner's dilemma game; 2) \(E_{0,1}\) and \(E_{1,0}\) are locally asymptotically stable equilibria of the exponential replicator dynamics (21), while the other equilibria \(E_{0,0}\), \(E_{1,1}\) and \(E_{m^{*},m^{*}}\) are unstable, if and only if the bimatrix game above is an anti-coordination game; 3) \(E_{1,1}\) is a locally asymptotically stable equilibrium of the exponential replicator dynamics (21), while the other equilibria \(E_{0,0}\), \(E_{0,1}\) and \(E_{1,0}\) are unstable, if and only if the bimatrix game is a prisoner's delight game.

Interestingly, and contrary to what happens for the one-dimensional map (22), the inner equilibrium is always unstable under the exponential replicator dynamics (21). In particular, for parameter configurations such that the bimatrix game is an anti-coordination game, we need to study the full-fledged model to establish that the evolutionarily stable equilibria are the pure-strategy Nash equilibria \(E_{0,1}\) and \(E_{1,0}\) and not the inner equilibrium \(E_{m^{*},m^{*}}\).

Regarding the global dynamics of the exponential replicator dynamics (21), numerical experiments indicate that: (1) when \(E_{0,0}\) is the locally asymptotically stable equilibrium (prisoner's dilemma game), it is also globally stable, see Fig. 3a; (2) when \(E_{0,1}\) and \(E_{1,0}\) are locally asymptotically stable equilibria (anti-coordination game) and the intensity of choice is sufficiently low, \(E_{m^{*},m^{*}}\) is a saddle equilibrium, the basin of attraction of \(E_{0,1}\) is \(\mathcal {B}\left( E_{0,1}\right) =\left\{ \left. \left( m_{1},m_{2}\right) \right| m_{2}>m_{1}\right\}\), and the basin of attraction of \(E_{1,0}\) is \(\mathcal {B}\left( E_{1,0}\right) =\left\{ \left. \left( m_{1},m_{2}\right) \right| m_{1}>m_{2}\right\}\), see Fig. 3b, c; (3) when \(E_{0,1}\) and \(E_{1,0}\) are locally asymptotically stable equilibria (anti-coordination game) and the intensity of choice is sufficiently high, \(E_{1,1}\) is a Milnor attractor, that is, its basin of attraction \(\mathcal {B}\left( E_{1,1}\right)\) has positive Lebesgue measure although \(E_{1,1}\) is not attracting in the Lyapunov sense, while the basin of attraction of \(E_{0,1}\) is \(\mathcal {B}\left( E_{0,1}\right) =\left\{ \left. \left( m_{1},m_{2}\right) \right| m_{2}>m_{1}\right\} \setminus \mathcal {B}\left( E_{1,1}\right)\), and the basin of attraction of \(E_{1,0}\) is \(\mathcal {B}\left( E_{1,0}\right) =\left\{ \left. \left( m_{1},m_{2}\right) \right| m_{1}>m_{2}\right\} \setminus \mathcal {B}\left( E_{1,1}\right)\), see Fig. 3d, e; (4) when \(E_{1,1}\) is the locally asymptotically stable equilibrium (prisoner's delight game), it is also globally stable, see Fig. 3f.
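Items (1) and (2) can be illustrated with a short simulation. The sketch assumes, since (21) is not reproduced in this section, coupled exponential replicators in which each population reacts to the other population's share, and uses illustrative values of \(\Psi\) and \(\Gamma\) of our choosing in the anti-coordination range. It iterates the map from a few initial conditions at \(\beta =1\).

```python
import math

PSI, GAMMA = 0.845, -3.595  # illustrative anti-coordination values (0 < PSI < -GAMMA)


def step(m1, m2, beta):
    """One step of the assumed symmetric two-population exponential replicator."""
    g1 = math.exp(-beta * (PSI + GAMMA * m2))  # population 1 reacts to m2
    g2 = math.exp(-beta * (PSI + GAMMA * m1))  # population 2 reacts to m1
    return m1 / (m1 + (1.0 - m1) * g1), m2 / (m2 + (1.0 - m2) * g2)


def long_run(m1, m2, beta, n=2000):
    """State reached after n iterations from (m1, m2)."""
    for _ in range(n):
        m1, m2 = step(m1, m2, beta)
    return m1, m2


for start in [(0.2, 0.6), (0.6, 0.2), (0.5, 0.5)]:
    end = long_run(*start, beta=1.0)
    print(start, "->", tuple(round(x, 6) for x in end))
```

Starting points above the diagonal end at \(E_{0,1}\), those below at \(E_{1,0}\), and points on the diagonal stay on it and converge to \(E_{m^{*},m^{*}}\), consistent with the diagonal being the stable set of the saddle. Item (3), the Milnor attractor \(E_{1,1}\), requires much larger \(\beta\) and a fine grid of initial conditions, which this sketch does not attempt.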

Basins of attraction: In red the basin of attraction of the locally asymptotically stable equilibrium \(E_{1,0}\), in blue the basin of attraction of the locally asymptotically stable equilibrium \(E_{0,1}\), in green the basin of attraction of the equilibrium \(E_{1,1}\), in magenta the basin of attraction of the equilibrium \(E_{0,0}\), in yellow the basin of attraction of the chaotic set in the diagonal. Parameters: \(a=4\), \(b=0.15\), \(c=0.5\) and \(\underline{\theta }=-0.7\). Panel a \(\overline{\theta }= 0.5\) and \(\beta =1\). Panel b \(\overline{\theta }= 0.25\) and \(\beta =1\). Panel c \(\overline{\theta }= 0.25\) and \(\beta =51\). Panel d \(\overline{\theta }= 0.25\) and \(\beta =100\). Panel e \(\overline{\theta }= 0.25\) and \(\beta =200\). Panel f \(\overline{\theta }= -0.25\) and \(\beta =1\). In Panels b–e the common inner equilibrium is \(E_{m^{*},m^{*}}\) with \(m^{*} \approx 0.235179\) and \(\beta _{f}\approx 3.09308\)

Surprisingly, in the case of an anti-coordination game, an equilibrium representing coordination among players, namely \(E_{1,1}\), can also be reached by evolutionary selection. That is, despite the anti-coordination nature of the game, players can learn to coordinate and play the strategy profile that gives both of them the highest payoff among the coordination profiles. This is clearly related to a nonlinear phenomenon that deserves to be investigated in future work. Here we just remark that, for the first time, we observe that an equilibrium that is not a Nash equilibrium can be reached through evolutionary pressure despite being evolutionarily (locally) unstable. The robustness of this result is confirmed by a large variety of numerical experiments not reported here for the sake of space. Moreover, to check whether this result is robust to heterogeneous populations, in the next section we generalize the model setup (21) by accommodating heterogeneous intensities of choice, heterogeneous attitudes towards cooperation, and heterogeneous production costs.

Before moving to the asymmetric case, let us put into perspective the result that \(E_{1,1}\) can be a Milnor attractor under exponential replicator dynamics when the static game is an anti-coordination game. To do so, note that the symmetry of the map (21) with respect to the diagonal \(m_{1}=m_{2}\), together with the local stability of the equilibria \(E_{0,1}\) and \(E_{1,0}\) while all the other equilibria are unstable, suggests that if at time \(t=0\) one of the two populations has a greater propensity to adopt a certain strategy than the other population, then in each population the initially prevailing strategy would also prevail eventually. From the dynamic point of view, the diagonal would then be the boundary between the basins of attraction of the attractors \(E_{0,1}\) and \(E_{1,0}\). This is perfectly in line with what is observed in continuous-time two-population games, as described in Hofbauer and Sigmund (1998), where it is shown that under the continuous replicator with two populations the internal equilibrium is either a center or a saddle, whose stable manifold delimits the basins of the corner equilibria. In the case of an anti-coordination game, the stable corner equilibria are \(E_{0,1}\) and \(E_{1,0}\) only. Instead, we have observed for the first time that under discrete-time multi-population dynamics, when the internal equilibrium exists and the intensity of choice is sufficiently high, with a repelling (chaotic) saddle on the diagonal, the corner equilibrium \(E_{1,1}\), while not stable in the Lyapunov sense, turns out to be a Milnor attractor, since it attracts a set of points of positive Lebesgue measure in the square \([0,1] \times [0,1]\); see Ashwin et al. (1996) and Buescu (1997) for details.

5 Two-Heterogeneous-Population Evolution

To conduct a robustness check, let us now reconsider the previous setup in a two-population framework, where populations are heterogeneous. In particular, let us introduce heterogeneities among competing firms, which are related to asymmetric costs, different propensities to cooperation, different levels of aggressiveness and different intensities of choice. For simplicity, let us imagine here that we have two populations and consider a duopoly in which the first firm belongs to Population 1 while the second firm belongs to Population 2.

Considering a duopoly with a homogeneous product and heterogeneous linear costs of production, at a Nash-Cournot equilibrium we have that a firm in population i produces

Assuming, without loss of generality, that \(c_1 \ge c_2\), quantities are non-negative provided that \(\theta _1 \in \left[ -1, \frac{a-2c_1+c_2}{a-c_2} \right]\). The reduced-form profits at \(\left( \tilde{q}_{i}^*\left( \theta _{i},\theta _{-i}\right) ,\tilde{q}_{-i}^*\left( \theta _{i},\theta _{-i}\right) \right)\) read

where the firm in population i has marginal cost \(c_i\) and sets a level of cooperativeness \(\theta _i \in \left( -1,1\right)\), while the opponent in population \(-i\) has marginal cost \(c_{-i}\) and sets a level of cooperativeness \(\theta _{-i} \in \left( -1,1\right)\).
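For reference, the following Python sketch solves the first-order conditions for the Nash-Cournot quantities with heterogeneous costs. The demand specification is our assumption (inverse demand \(p=a-b(q_1+q_2)\), constant marginal costs \(c_1\), \(c_2\)); under it, \(q_1\) vanishes exactly at the upper bound \(\theta _1=\frac{a-2c_1+c_2}{a-c_2}\) stated above, whatever the value of \(\theta _2\), and the profits reduce to the homogeneous expressions when \(c_1=c_2\).

```python
def cournot_quantities(t1, t2, a, b, c1, c2):
    """Cournot-Nash quantities when firm i maximizes pi_i + t_i * pi_j.

    Assumption: inverse demand p = a - b*(q1 + q2), marginal costs c1, c2.
    First-order conditions (solved by Cramer's rule):
        2 b q1 + b (1 + t1) q2 = a - c1
        b (1 + t2) q1 + 2 b q2 = a - c2
    """
    det = b * b * (4.0 - (1.0 + t1) * (1.0 + t2))
    q1 = (2.0 * b * (a - c1) - b * (1.0 + t1) * (a - c2)) / det
    q2 = (2.0 * b * (a - c2) - b * (1.0 + t2) * (a - c1)) / det
    return q1, q2


def profits(t1, t2, a, b, c1, c2):
    """Profits (pi_1, pi_2) at the Cournot-Nash quantities."""
    q1, q2 = cournot_quantities(t1, t2, a, b, c1, c2)
    price = a - b * (q1 + q2)
    return (price - c1) * q1, (price - c2) * q2


a, b, c1, c2 = 4.0, 0.15, 0.6, 0.5
t1_max = (a - 2 * c1 + c2) / (a - c2)  # upper bound for theta_1 when c1 >= c2
print(t1_max, cournot_quantities(t1_max, 0.25, a, b, c1, c2))
```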

In population i (\(i=1,2\)), heterogeneity in the levels of cooperativeness is synthesized by two values of the corresponding parameter \(\underline{\theta }_i<\overline{\theta }_i\).

Let \(m_1\left( t\right)\) be the probability at time t that a player from population 1 sets a level of cooperativeness \(\overline{\theta }_1\) and let \(m_2(t)\) be the probability that a player from population 2 sets a level of cooperativeness \(\overline{\theta }_2\). Then, the expected profit of a player in population 1 by setting \(\overline{\theta }_1\) at time t is

whereas by setting \(\underline{\theta }_1\), expected profit at time t is

Similarly, the expected payoff of a firm in population 2 at time t by setting \(\overline{\theta }_2\) is

whereas by setting \(\underline{\theta }_2\), it is

In each population, the shares of adopters of the two behaviors change over time according to an exponential replicator dynamics, one for each population. This leads to the following two-dimensional map:

where \(\beta _i\) is the intensity of choice in population i.

Being a two-population model in the square \(\left[ 0,1\right] \times \left[ 0,1\right]\), the two-dimensional map (60) always admits as equilibria the corner points \(E_{0,0}\), \(E_{0,1}\), \(E_{1,0}\) and \(E_{1,1}\). By the linearity in \(m_{-i}\) of the difference \(\Pi ^{i}_{c}\left( m_{-i}\right) -\Pi ^{i}_{a}\left( m_{-i}\right)\), \(i=1,2\), it follows that at most one inner equilibrium exists, which reduces to (26) in the homogeneous case (also when \(\beta _1 \ne \beta _2\)). Moreover, note that in a heterogeneous framework the diagonal \(m_{1}=m_{2}\) of the state space \(\left[ 0,1\right] \times \left[ 0,1\right]\) is no longer an invariant set. This occurs when at least one of the following conditions holds \(\beta _{1}\ne \beta _{2}\), \(\overline{\theta }_{1}\ne \overline{\theta }_{2}\), \(\underline{\theta }_{1}\ne \underline{\theta }_{2}\) or \(c_{1}\ne c_{2}\).
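Because each expected-payoff difference is linear in the other population's share, the inner equilibrium of (60) can be computed without reference to \(\beta _1\) and \(\beta _2\): the indifference of population 1 pins down \(m_2^{*}\), and that of population 2 pins down \(m_1^{*}\). The sketch below illustrates this under our assumed Cournot specification (linear demand, constant marginal costs); the function names and the tuple convention (index 0 for population 1, index 1 for population 2) are our own.

```python
def cournot_profits(t1, t2, a, b, c1, c2):
    """Assumed model: p = a - b*(q1 + q2), constant marginal costs c1, c2."""
    det = b * b * (4.0 - (1.0 + t1) * (1.0 + t2))
    q1 = (2.0 * b * (a - c1) - b * (1.0 + t1) * (a - c2)) / det
    q2 = (2.0 * b * (a - c2) - b * (1.0 + t2) * (a - c1)) / det
    price = a - b * (q1 + q2)
    return (price - c1) * q1, (price - c2) * q2


def payoff(i, own, other, a, b, costs):
    """Profit of the firm from population i (0 or 1) playing `own` against `other`."""
    if i == 0:
        return cournot_profits(own, other, a, b, costs[0], costs[1])[0]
    return cournot_profits(other, own, a, b, costs[0], costs[1])[1]


def fitness_gap(i, m_other, theta_lo, theta_hi, a, b, costs):
    """Expected gain of theta_hi over theta_lo in population i, given m_other."""
    j = 1 - i
    coop = (m_other * payoff(i, theta_hi[i], theta_hi[j], a, b, costs)
            + (1 - m_other) * payoff(i, theta_hi[i], theta_lo[j], a, b, costs))
    aggr = (m_other * payoff(i, theta_lo[i], theta_hi[j], a, b, costs)
            + (1 - m_other) * payoff(i, theta_lo[i], theta_lo[j], a, b, costs))
    return coop - aggr


def inner_equilibrium(theta_lo, theta_hi, a, b, costs):
    """(m1*, m2*): the gap of population i is linear in m_other, so its root
    gives the share of the *other* population."""
    shares = []
    for i in (0, 1):
        psi = fitness_gap(i, 0.0, theta_lo, theta_hi, a, b, costs)
        psi_plus_gamma = fitness_gap(i, 1.0, theta_lo, theta_hi, a, b, costs)
        shares.append(-psi / (psi_plus_gamma - psi))
    m2_star, m1_star = shares
    return m1_star, m2_star


print(inner_equilibrium((-0.7, -0.7), (0.25, 0.25), 4.0, 0.15, (0.5, 0.5)))
print(inner_equilibrium((-0.7, -0.6), (0.25, 0.2), 4.0, 0.15, (0.6, 0.5)))
```

In the homogeneous case (first call) both shares coincide with the value given by (26).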

5.1 Heterogeneous Intensity of Choices

Let us set \(c_1=c_2=c\), \(\underline{\theta }_1=\underline{\theta }_2=\underline{\theta }\) and \(\overline{\theta }_1=\overline{\theta }_2=\overline{\theta }\). Then, the fitness gain from playing \(\overline{\theta }\) in population i for a given share \(m_{-i}\) of agents playing \(\overline{\theta }\) in population \(-i\), that is \(\Pi ^{i}_{c}\left( m_{-i}\right) -\Pi ^{i}_{a}\left( m_{-i}\right)\), is given by the same function \(F\left( m_{-i}\right)\) in (23), where \(m_{-i}\) is the probability that \(\overline{\theta }\) is played in the competing population. Clearly, the homogeneous model with \(\beta _1=\beta _2\) coincides with the model (21), and its dynamics along the diagonal \(m_1=m_2\) coincides with that of the homogeneous model studied in Bischi and Lamantia (2022) when memory is disregarded, i.e. map (22).

The following proposition characterizes the stability of the equilibria of the model in which agents in the two populations can differ in their intensity of choice. It is closely connected with Proposition 4, which concerns the same model with a homogeneous intensity of choice.

Proposition 5

(Stability of the equilibria of the two-population model with heterogeneous intensity of choices and their bifurcations) Consider map (60) with \(c_1=c_2=c\), \(\underline{\theta }_1=\underline{\theta }_2=\underline{\theta }\), \(\overline{\theta }_1=\overline{\theta }_2=\overline{\theta }\) and the functions \(G\left( \underline{\theta }\right)\) and \(H\left( \underline{\theta }\right)\) defined in Proposition 3.

-

For \(\Psi <0\) (equivalently, \(\pi \left( \underline{\theta }, \underline{\theta }\right) >\pi \left( \overline{\theta },\underline{\theta }\right)\) and \(\pi \left( \overline{\theta },\overline{\theta }\right) <\pi \left( \underline{\theta },\overline{\theta }\right)\)), that is when

$$\begin{aligned} G\left( \underline{\theta }\right) <\overline{\theta }\le 1 \quad \text {or} \quad \underline{\theta }\ge -\frac{1}{3} \end{aligned}$$(61)the corner equilibrium \(E_{0,0}\) is locally asymptotically stable whereas \(E_{1,0}\), \(E_{0,1}\) and \(E_{1,1}\) are unstable.

-

At \(\Psi =0\) (equivalently, \(\pi \left( \underline{\theta },\underline{\theta }\right) =\pi \left( \overline{\theta },\underline{\theta }\right)\) and \(\pi \left( \overline{\theta },\overline{\theta }\right) <\pi \left( \underline{\theta },\overline{\theta }\right)\)), that is when

$$\begin{aligned} \overline{\theta }=G\left( \underline{\theta }\right) \quad \text {with} \quad -1\le \underline{\theta }< -\frac{1}{3} \end{aligned}$$(62)\(E_{m^{*},m^{*}}\) merges with \(E_{0,0}\) (transcritical bifurcation) and the corner equilibria \(E_{0,1}\) and \(E_{1,0}\) undergo a bifurcation of eigenvalue 1.

-

For \(0<\Psi <-\Gamma\) (equivalently, \(\pi \left( \underline{\theta },\underline{\theta }\right) <\pi \left( \overline{\theta },\underline{\theta }\right)\) and \(\pi \left( \overline{\theta },\overline{\theta }\right) <\pi \left( \underline{\theta },\overline{\theta }\right)\)), that is when

$$\begin{aligned} H\left( \underline{\theta }\right)<\overline{\theta }<G\left( \underline{\theta }\right) \quad \text {with} \quad -1\le \underline{\theta }< -\frac{1}{3} \end{aligned}$$(63)\(E_{m^{*},m^{*}}\in \left[ 0,1\right] \times \left[ 0,1\right]\) is a saddle for \(\beta _1 \beta _2<\tilde{\beta }_{f}\), while it is a repellor for \(\beta _1 \beta _2>\tilde{\beta }_{f}\) and undergoes a bifurcation of eigenvalue \(-1\) (flip bifurcation) at \(\beta _1 \beta _2=\tilde{\beta }_{f}\), where

$$\begin{aligned} \tilde{\beta }_{f}=\frac{4 \Gamma ^2}{\Psi ^2 \left( \Gamma +\Psi \right) ^2} \end{aligned}$$(64)while \(E_{0,1}\) and \(E_{1,0}\) are locally asymptotically stable and \(E_{0,0}\) and \(E_{1,1}\) are (locally) unstable.

-

At \(\Psi =-\Gamma\) (equivalently, \(\pi \left( \underline{\theta },\underline{\theta }\right) <\pi \left( \overline{\theta },\underline{\theta }\right)\) and \(\pi \left( \overline{\theta },\overline{\theta }\right) =\pi \left( \underline{\theta },\overline{\theta }\right)\)), that is at

$$\begin{aligned} \overline{\theta }=H\left( \underline{\theta }\right) \quad \text {with} \quad -1 \le \underline{\theta }< -\frac{1}{3} \end{aligned}$$ (65)
\(E_{m^{*},m^{*}}\) merges with \(E_{1,1}\) (transcritical bifurcation).

-

For \(\Psi >-\Gamma\) (equivalently, \(\pi \left( \underline{\theta },\underline{\theta }\right) <\pi \left( \overline{\theta },\underline{\theta }\right)\) and \(\pi \left( \overline{\theta },\overline{\theta }\right) >\pi \left( \underline{\theta },\overline{\theta }\right)\)), that is for

$$\begin{aligned} \overline{\theta }<H\left( \underline{\theta }\right) \quad \text {with} \quad -1 \le \underline{\theta }< -\frac{1}{3} \end{aligned}$$ (66)
\(E_{1,1}\) is locally asymptotically stable and \(E_{m^{*},m^{*}}\notin \left[ 0,1\right] \times \left[ 0,1\right]\), whereas \(E_{0,0}\), \(E_{0,1}\) and \(E_{1,0}\) are unstable.

Proof of Proposition 5

It follows from Proposition 5 by noting that none of the results depends on the intensities of choice, except those concerning the inner equilibrium. For the inner equilibrium, it is easy to verify that the Jacobian matrix computed there takes the form

with eigenvalues

In particular, since \(\Psi /\Gamma <0\) when \(0<\Psi <-\Gamma\), the eigenvalue \(\lambda _{1}=1+\frac{\Psi }{\Gamma } \sqrt{\beta _1 \beta _2 \left( \Gamma +\Psi \right) ^2}\) satisfies \(\lambda _{1}<1\), and it causes a bifurcation of eigenvalue \(-1\) (numerical simulations confirm that it is a flip bifurcation) when \(\lambda _{1}=-1\), that is, for \(\beta _1 \beta _2 = \tilde{\beta }_{f}\). The other eigenvalue satisfies \(\lambda _2>1\). Therefore, the inner equilibrium is either a saddle (for \(\beta _1 \beta _2<\tilde{\beta }_{f}\)) or a repellor (for \(\beta _1 \beta _2>\tilde{\beta }_{f}\)). \(\square\)
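As a quick numerical check of the statements above, the following Python sketch encodes the case list, the threshold (64) and the eigenvalue \(\lambda _{1}\). It is illustrative only: the function names are hypothetical, \(\Psi\) and \(\Gamma\) are passed in as plain numbers (their definitions are not repeated in this section), and the requirement \(\Gamma <0\) is an assumption read off the case list, since the range \(0<\Psi <-\Gamma\) would otherwise be empty. The classification of the inner equilibrium relies on \(\lambda _2>1\), as stated in the proof.

```python
import math


def stable_corners(psi: float, gamma: float) -> set:
    """Corner equilibria that are locally asymptotically stable, per the case list.

    Hypothetical guard: assumes gamma < 0, so the cases are ordered along psi as
    psi < 0, psi = 0, 0 < psi < -gamma, psi = -gamma, psi > -gamma.
    """
    if gamma >= 0:
        raise ValueError("this sketch assumes gamma < 0")
    if psi < 0:
        return {"E00"}
    if 0 < psi < -gamma:
        return {"E01", "E10"}
    if psi > -gamma:
        return {"E11"}
    return set()  # psi == 0 or psi == -gamma: bifurcation values, no verdict


def flip_threshold(psi: float, gamma: float) -> float:
    """tilde beta_f = 4 Gamma^2 / (Psi^2 (Gamma + Psi)^2), eq. (64)."""
    return 4 * gamma**2 / (psi**2 * (gamma + psi) ** 2)


def lambda1(psi: float, gamma: float, beta1: float, beta2: float) -> float:
    """lambda_1 = 1 + (Psi / Gamma) * sqrt(beta1 * beta2 * (Gamma + Psi)^2)."""
    return 1 + (psi / gamma) * math.sqrt(beta1 * beta2 * (gamma + psi) ** 2)


def inner_type(psi: float, gamma: float, beta1: float, beta2: float) -> str:
    """Type of the inner equilibrium for 0 < psi < -gamma, using lambda_2 > 1."""
    if not 0 < psi < -gamma:
        raise ValueError("the inner equilibrium is in the square only for 0 < psi < -gamma")
    lam = lambda1(psi, gamma, beta1, beta2)
    if math.isclose(lam, -1.0):
        return "flip"  # beta1 * beta2 equals tilde beta_f
    # lambda_1 in (-1, 1) with lambda_2 > 1 -> saddle; lambda_1 < -1 -> repellor
    return "saddle" if lam > -1 else "repellor"
```

Only the product \(\beta _1 \beta _2\) enters \(\lambda _{1}\), so, for instance, \(\beta _1=4, \beta _2=16\) and \(\beta _1=\beta _2=8\) give the same verdict.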

Up to this point, the local dynamic analysis confirms the robustness of the results of the original model: whenever a pure strategy dominates, equilibrium \(E_{0,0}\) or \(E_{1,1}\) is a global attractor of the dynamical system. This situation is depicted in Fig. 4, panels (a) and (f), in which \(a=4\), \(b=0.15\), \(c=0.5\) and \(\underline{\theta }=-0.7\) as in Fig. 3, while \(\beta _1=100\), \(\beta _2=1\), and \(\overline{\theta }= 0.5\) in panel (a) and \(\overline{\theta }= -0.25\) in panel (f).

When both equilibria \(E_{0,1}\) and \(E_{1,0}\) are stable, the inner equilibrium is unstable, and convergence to an asymmetric corner equilibrium is expected for any slight mismatch between the players’ initial choices. See Fig. 4b, c, where the basin of attraction of \(E_{0,1}\) is the blue region and that of \(E_{1,0}\) is the red region. It is easy to observe that \(\beta _{2}>\beta _{1}\) enlarges the basin of attraction of \(E_{1,0}\), that is, it increases the probability that player 2 becomes aggressive and player 1 cooperative. Therefore, a higher intensity of choice increases a firm’s chances of being more profitable than its competitor. Apart from this, the same conclusions as in the symmetric case can be drawn.

Focusing on the case in which \(E_{0,1}\) and \(E_{1,0}\) are stable equilibria (condition \(0<\Psi <-\Gamma\) holds), the most interesting aspect is that, for high intensities of choice, the corner point \(E_{1,1}\) keeps a basin of attraction of positive measure; see the green region in Fig. 4d, where \(\beta _{1}=100\) and \(\beta _{2}=50\). As the gap between the intensities of choice widens further, the green region shrinks, while the basin of attraction of one asymmetric corner point (\(E_{0,1}\) or \(E_{1,0}\), depending on which player has the higher intensity of choice) expands; see Fig. 4e, where \(\beta _{1}=200\) and \(\beta _{2}=50\).

Basins of attraction of model (60) (asymmetric case). Parameters as in Fig. 3 but: a \(\beta _{1}=100\), \(\beta _{2}=1\) and \(\overline{\theta }= 0.5\); b \(\beta _{1}=1\) and \(\beta _{2}=2\); c \(\beta _{1}=170\) and \(\beta _{2}=3\); d \(\beta _{1}=100\) and \(\beta _{2}=50\); e \(\beta _{1}=200\) and \(\beta _{2}=50\); f \(\beta _{1}=100\), \(\beta _{2}=1\) and \(\overline{\theta }= -0.25\)
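Basin plots such as Fig. 4 are obtained by iterating the map from a grid of initial conditions and recording where each orbit ends up. Map (60) is not restated in this section, so the Python sketch below iterates a hypothetical stand-in, not the paper’s map: an exponential-replicator (logit-type) update in which the payoff differential of population \(i\) between the cooperative and the aggressive type is linear in the rival’s share, \(\Delta _i=(1-m_j)A_i+m_jB_i\). The inputs \(A_i\) and \(B_i\) (the profit gains of cooperating against an all-aggressive and an all-cooperative rival population) and the names `step`, `basins` and `CORNERS` are assumptions for illustration. By the equivalences in the case list, the sign pattern \(A_i>0>B_i\) plays the role of the regime \(0<\Psi <-\Gamma\). The sketch makes no claim to reproduce the specific features of Fig. 4.

```python
import numpy as np


def step(m1, m2, A, B, beta):
    """One iteration of a HYPOTHETICAL two-population exponential-replicator map.

    m1, m2: shares of cooperative firms in populations 1 and 2 (arrays allowed).
    A[i], B[i]: profit gain of the cooperative type of population i against an
    all-aggressive / all-cooperative rival population. beta[i]: intensity of choice.
    """
    d1 = (1 - m2) * A[0] + m2 * B[0]  # payoff differential of population 1
    d2 = (1 - m1) * A[1] + m1 * B[1]  # payoff differential of population 2
    # clip the exponents so that exp() never overflows or underflows to zero
    e1 = np.exp(np.clip(-beta[0] * d1, -700.0, 700.0))
    e2 = np.exp(np.clip(-beta[1] * d2, -700.0, 700.0))
    return m1 / (m1 + (1 - m1) * e1), m2 / (m2 + (1 - m2) * e2)


# integer label for each corner equilibrium E_{i,j}
CORNERS = {(0, 0): 0, (0, 1): 1, (1, 0): 2, (1, 1): 3}


def basins(A, B, beta, n=200, iters=2000, tol=1e-3):
    """Label an n x n grid of initial conditions by the corner their orbit reaches.

    Returns an (n, n) int array indexed [k1, k2] with m1 = (k1 + 0.5) / n and
    m2 = (k2 + 0.5) / n; -1 marks orbits not within tol of a corner after iters steps.
    """
    grid = (np.arange(n) + 0.5) / n  # cell centres, strictly inside (0, 1)
    m1, m2 = np.meshgrid(grid, grid, indexing="ij")
    for _ in range(iters):
        m1, m2 = step(m1, m2, A, B, beta)
    labels = np.full(m1.shape, -1, dtype=int)
    for (i, j), label in CORNERS.items():
        labels[(np.abs(m1 - i) < tol) & (np.abs(m2 - j) < tol)] = label
    return labels
```

For example, `basins((0.2, 0.2), (-0.1, -0.1), (1.0, 2.0))` corresponds to a case with \(A_i>0>B_i\), in which the asymmetric corners attract; the returned label array can be passed to any image-plotting routine to draw the colored regions.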

5.2 Heterogeneous Level of Aggressiveness

The equilibrium \(E_{1,1}\) is the one in which agents adopt a higher degree of cooperation (or a lower degree of hostility if both \(\underline{\theta }\) and \(\overline{\theta }\) are negative). The persistence of its basin of attraction is particularly interesting, since higher cooperation in both populations can be selected even when the instability of this equilibrium would seem to rule it out. This occurs only when agents are sufficiently impulsive in selecting the objective function to maximize. If we break the symmetry of the intensities of choice, the diagonal of the square loses its role as an invariant set. Nevertheless, as observed in the previous subsection, where \(\beta _{1}\ne \beta _{2}\), the dynamics produce similar results.

Here, we show that a similar phenomenon occurs even if we break the symmetry in the level of aggressiveness; see the basins of attraction in Fig. 5, where \(a=4\), \(b=0.15\), \(c=0.5\), \(\underline{\theta }_{1}=\underline{\theta }_{2}=-0.7\), \(\overline{\theta }_{1}= -0.1\) and \(\overline{\theta }_{2}= 0.25\). In panel (a), where \(\beta _1=\beta _2=1\), the example is analogous to Fig. 3b, c. Figure 5b, where \(\beta _1=\beta _2=100\), is analogous to Fig. 3d, e and displays a disconnected basin of attraction of equilibrium \(E_{1,1}\).

Basins of attraction (asymmetric case): In red the basin of attraction of the locally asymptotically stable equilibrium \(E_{1,0}\), in blue the basin of attraction of the locally asymptotically stable equilibrium \(E_{0,1}\), in green the basin of attraction of the equilibrium \(E_{1,1}\). Panel a: Parameters as in Fig. 3b but \(\overline{\theta }_{1}= -0.1\) and \(\overline{\theta }_{2}= 0.25\). Panel b: Parameters as in Fig. 3e but \(\overline{\theta }_{1}= -0.1\) and \(\overline{\theta }_{2}= 0.25\). The common inner equilibrium is \((m^*_1,m^*_2)\approx (0.334136, 0.518768)\)
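The inner equilibrium in the caption does not depend on the intensities of choice, so it can be computed from equilibrium profits alone. The Python sketch below does this under assumptions that are not restated in this section and should be read as such: inverse demand \(p=a-b(q_1+q_2)\) with a common marginal cost \(c\), each firm maximizing its own profit plus \(\theta\) times the rival’s profit, random matching (so expected profits are linear in the rival population’s share), and the inner equilibrium defined by equal expected profits of the two types in each population. The function names are hypothetical. Under these assumptions, `inner_equilibrium(-0.7, -0.1, 0.25)` returns approximately (0.334136, 0.518768), matching the caption.

```python
def profit(th_own, th_rival, a=4.0, b=0.15, c=0.5):
    """Cournot-Nash profit of a firm that maximizes pi_own + th_own * pi_rival.

    Assumes inverse demand p = a - b * (q1 + q2) and a common marginal cost c,
    so the first-order conditions read 2 q_i + (1 + th_i) q_j = (a - c) / b.
    """
    k = (a - c) / b
    d = 3 - th_own - th_rival - th_own * th_rival  # = 4 - (1 + th_own)(1 + th_rival)
    return b * k**2 * (1 - th_own) * (1 - th_own * th_rival) / d**2


def inner_equilibrium(th_low, th_high1, th_high2, **market):
    """Shares (m1*, m2*) of cooperative firms that leave both populations indifferent.

    Population i compares its cooperative type (th_high_i) with the common
    aggressive type (th_low) against a rival population with m_j cooperators.
    """
    def gains(th_high_own, th_high_rival):
        # A: advantage of the cooperative type against an aggressive rival
        # B: advantage of the cooperative type against a cooperative rival
        A = profit(th_high_own, th_low, **market) - profit(th_low, th_low, **market)
        B = (profit(th_high_own, th_high_rival, **market)
             - profit(th_low, th_high_rival, **market))
        return A, B

    A1, B1 = gains(th_high1, th_high2)
    A2, B2 = gains(th_high2, th_high1)
    # indifference of population i: (1 - m_j) A_i + m_j B_i = 0  =>  m_j = A_i / (A_i - B_i)
    return A2 / (A2 - B2), A1 / (A1 - B1)
```

With a common marginal cost, every profit shares the factor \(b\left( (a-c)/b\right) ^2\), which cancels in \(A_i/(A_i-B_i)\); hence the shares do not depend on \(a\), \(b\) and \(c\). With heterogeneous costs, as in Fig. 6, this cancellation no longer holds, and the first-order conditions would need firm-specific costs.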

5.3 Heterogeneous Costs of Production

The same results hold when the symmetry in production costs is broken. See Fig. 6a, obtained with the same parameters as Fig. 3d but \(c_{1} = 0.5\) and \(c_{2}= 0.4\), where the green region persists. Therefore, \(E_{1,1}\) is a Milnor attractor even with asymmetric production costs. The same is observed in Fig. 6b, obtained with the same parameters as Fig. 3e but \(c_{1} = 0.5\) and \(c_{2}= 0.4\). Summarizing, the Milnor attractor \(E_{1,1}\), observed in the symmetric setting for certain parameter configurations, persists under parameter perturbations that introduce asymmetric production costs.

Basins of attraction (asymmetric case): In red the basin of attraction of the locally asymptotically stable equilibrium \(E_{1,0}\), in blue the basin of attraction of the locally asymptotically stable equilibrium \(E_{0,1}\), in green the basin of attraction of the equilibrium \(E_{1,1}\). Panel a: Parameters as in Fig. 3d but \(c_{1} = 0.5\) and \(c_{2}= 0.4\). Panel b: Parameters as in Fig. 3e but \(c_{1} = 0.5\) and \(c_{2}= 0.4\). The common inner equilibrium is \((m^*_1,m^*_2)\approx (0.220573, 0.250546)\)

6 Conclusions

Evolutionary modeling helps shed light on behaviors that emerge from strategic interaction, as evidenced in Anufriev et al. (2018). In this paper, we reconsidered the evolutionary oligopoly model proposed in Bischi and Lamantia (2022), in which firms face duopoly games with objective functions that may include instances of partial cooperation or partial hostility toward the competitor. First, we extended the investigation in Bischi and Lamantia (2022) by considering the full-fledged two-population version of the model. This setup allows us to formalize the model in a more general context and to easily introduce heterogeneity among firms. From the dynamic point of view, while the one-dimensional model remains illustrative of the dynamics of two types of firms that are perfectly homogeneous in both parameters and initial decisions, the two-population model also accounts for asymmetries in the players’ initial decisions. Such asymmetries, if accompanied by a sufficiently high intensity of choice, can lead to convergence to the more cooperative equilibrium, as the analysis of the basins of attraction of the two-dimensional map shows. We believe that these aspects are a starting point for further investigation of multi-population models in economics.

Notes

All the mentioned contributions, as well as most evolutionary games in economics, assume interactions among agents from infinite populations of players. A different approach to explaining the selection of cooperation in evolutionary games is based on strategic interaction within a finite population of players. Nowak and Sigmund (2005) report stronger forms of reciprocity that can lead to more cooperation in finite populations. In this work, however, we still consider infinite populations of agents.

Here, optimality is understood from the industry perspective, while the consumer standpoint is left out. An evolutionary model in the spirit of this paper that incorporates shares of the consumer objective function into the firms’ objectives has been proposed in Kopel et al. (2014). For an examination of possible alternatives to profit as the firms’ objective (without levels of aggression or cooperation), we refer to Fanti et al. (2017).

Clearly, a non-negativity constraint on the price should be included. However, we omit it because it is verified ex post at the equilibrium quantities considered in the model.

In Bischi and Lamantia (2022), to accommodate memory, the function F also accounts for past profits. Here, we neglect memory and focus instead on the two-population version of the evolutionary game.

Consider map (21). If \(E_{0,0}\) is locally asymptotically stable, it attracts all points in a neighborhood of it, including the points on the diagonal \(m_{1}=m_{2}\), where the dynamics are conjugate to those of (23); therefore, \(E_{0}\) is also locally asymptotically stable for (23). Conversely, assume that \(E_{0}\) is locally asymptotically stable for (23). Since the dynamics of (21) on \(m_{1}=m_{2}\) are conjugate to those of (23), all points in a neighborhood of \(E_{0,0}\) that lie on the diagonal are attracted to \(E_{0,0}\). Then, \(E_{0,0}\) is either a saddle or a stable equilibrium. To be a saddle, however, it would require the diagonal \(m_{1}=m_{2}\) to be an eigendirection (the stable manifold) of the equilibrium. But the eigenvectors of \(E_{0,0}\) lie along the two borders of \(\left[ 0,1\right] ^{2}\) that meet at the origin, namely \(m_{1}=0\) and \(m_{2}=0\). It follows that \(E_{0,0}\) must be a stable equilibrium.

References

Anufriev, M., & Kopányi, D. (2018). Oligopoly game: Price makers meet price takers. Journal of Economic Dynamics & Control, 91, 84–103.

Anufriev, M., Radi, D., & Tramontana, F. (2018). Some reflections on past and future of nonlinear dynamics in economics and finance. Decisions in Economics and Finance, 41, 91–118.

Ashwin, P., Buescu, J., & Stewart, I. (1996). From attractor to chaotic saddle: A tale of transverse instability. Nonlinearity, 9(3), 703–737.

Baiardi, L. C., & Naimzada, A. (2019). An evolutionary Cournot oligopoly model with imitators and perfect foresight best responders. Metroeconomica, 70, 458–475.

Bischi, G. I., & Lamantia, F. (2022). Evolutionary oligopoly games with cooperative and aggressive behaviors. Journal of Economic Interaction and Coordination, 17, 3–27. https://doi.org/10.1007/s11403-020-00298-y

Bischi, G. I., Chiarella, C., Kopel, M., & Szidarovszky, F. (2010). Nonlinear oligopolies: Stability and bifurcations. Springer-Verlag.

Bischi, G. I., Lamantia, F., & Radi, D. (2015). An evolutionary Cournot model with limited market knowledge. Journal of Economic Behavior & Organization, 116, 219–238.

Bischi, G. I., Lamantia, F., & Radi, D. (2018). Evolutionary oligopoly games with heterogeneous adaptive players. In Handbook of game theory and industrial organization, Volume I: Theory. Edward Elgar. EISBN: 978 1 78536 328 3

Buescu, J. (1997). Exotic attractors. Birkhäuser.

Cabrales, A., & Sobel, J. (1992). On the limit points of discrete selection dynamics. Journal of Economic Theory, 57(2), 407–419.

Cerboni Baiardi, L., Lamantia, F., & Radi, D. (2015). Evolutionary competition between boundedly rational behavioral rules in oligopoly games. Chaos, Solitons & Fractals, 79, 204–225.

Cheng, S. F., Reeves, D., Vorobeychik, Y., & Wellman, M. (2004). Notes on equilibria in symmetric games. In Proceedings of the 6th International Workshop on Game Theoretic and Decision Theoretic Agents (GTDT 2004) (pp. 71–78).

Clayton, M. J., & Jørgensen, B. (2005). Optimal cross holding with externalities and strategic interactions. The Journal of Business, 78(4), 1505–1522.

Cressman, R., & Tao, Y. (2014). The replicator equation and other game dynamics. Proceedings of the National Academy of Sciences (PNAS), 111(3), 10810–10817.

Cyert, R., & DeGroot, M. H. (1973). An analysis of cooperation and learning in a duopoly context. American Economic Review, 63(1), 24–37.

De Giovanni, D., & Lamantia, F. (2016). Control delegation, information and beliefs in evolutionary oligopolies. Journal of Evolutionary Economics, 27(5), 877–903.

Devaney, R. L. (1989). An introduction to chaotic dynamical systems. CRC Press.

Droste, E., Hommes, C. H., & Tuinstra, J. (2002). Endogenous fluctuations under evolutionary pressure in Cournot competition. Games and Economic Behavior, 40(2), 232–269.

Fanti, L., Gori, L., & Sodini, M. (2017). Managerial delegation theory revisited. Managerial and Decision Economics, 38(4), 490–512.

Fershtman, C., & Judd, K. L. (1987). Equilibrium incentives in oligopoly. American Economic Review, 77(5), 927–940.

Friedman, D. (1991). Evolutionary games in economics. Econometrica, 59(3), 637–666.

Gardini, L., Schmitt, N., Sushko, I., Tramontana, F., & Westerhoff, F. (2021). Necessary and sufficient conditions for the roots of a cubic polynomial and bifurcations of codimension-1, -2, -3 for 3D maps. Journal of Difference Equations and Applications, 27(4), 557–578.

Harting, P., & Radi, D. (2020). Residential segregation: The role of inequality and housing subsidies. Journal of Economic Behavior & Organization, 178, 801–819.

Hofbauer, J., & Sigmund, K. (1998). Evolutionary games and population dynamics. Cambridge University Press.