Abstract

We consider a cumulative scheduling problem where a task duration and resource consumption are not fixed. The consumption profile of the task, which can vary continuously over time, is a decision variable of the problem to be determined and a task is completed as soon as the integration over its time window of a non-decreasing and continuous processing rate function of the consumption profile has reached a predefined amount of energy. The goal is to find a feasible schedule, which is an NP-hard problem. For the case where functions are concave and piecewise linear, we present two propagation algorithms. The first one is the adaptation to concave functions of the variant of the energetic reasoning previously established for linear functions. Furthermore, a full characterization of the relevant intervals for time-window adjustments is provided. The second algorithm combines a flow-based checker with time-bound adjustments derived from the time-table disjunctive reasoning for the cumulative constraint. Complementarity of the algorithms is assessed via their integration in a hybrid branch-and-bound and computational experiments on small-size instances.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

This paper deals with a scheduling problem involving a set of tasks and a continuously-divisible renewable resource of limited capacity shared by the tasks. We consider the case where tasks resource requirement is not fixed but is part of the problem and has to be determined. Thus, the duration of a task is not fixed either but is determined by the resource requirement function as the task is finished once it has received a necessary amount of energy. Furthermore, we consider that the total energy received by a task is not equal to the total amount of the resource used by it. Instead we have processing rate (or efficiency) functions, which translates the required resource amounts into energy.

We perform an analysis of the structural properties of the problem for concave piecewise linear processing rate functions. To our knowledge, this variant of the cumulative constraint has never been considered in the literature. We show first that the resource demand profile of a task can be restricted to a piecewise constant function with break points at the starts and ends of tasks. From these theoretical properties, we are able to compute the minimal resource consumption of a task inside an interval in O(1) and we prove that the set of the relevant intervals of polynomial size that was shown sufficient for energetic reasoning with linear functions is also sufficient in our case. Furthermore, a full characterization of relevant intervals for time-window adjustments is provided, completing the work for linear function. We also define a new propagation algorithm together with a satisfiability test, which relies on the time-table disjunctive reasoning for the cumulative constraint [6] for the first one and on a flow-based linear program for the latter one. Finally, complementarity of the algorithms is assessed via their integration in a hybrid branch-and-bound and computational experiments on small-size instances.

1 Problem statement, properties and context

In this section, we formally define the Continuous Energy-Constrained Scheduling Problem (CECSP). Then, we present a foundry application in details and finally, we exhibit some properties of the CECSP, which we will use throughout the paper.

1.1 Problem definition

In the CECSP, a set of tasks \(\mathcal {A}=\{1,\dots ,n\}\) has to be scheduled using a continuous, cumulative and renewable resource of capacity B. The resource amount that a task requires during its processing time is not fixed but instead the resource usage of a task \(i \in \mathcal {A}\) is a function of time, b i (t), that must be determined in a continuous time setting (\(t \in \mathbb R^{+}\)). Once the task is started and until its finishing time, its resource usage is constrained to lie between a maximum and a minimum requirement, \(b_{i}^{max}\) and \(b_{i}^{min} \neq 0\), respectively.Footnote 1 In addition, when a task uses a part of the resource, it receives a certain amount of energy and we say that a task is finished when it has received an energy W i . This energy is computed using a continuous, non-decreasing, concave and piecewise linear power processing rate function [3]:

Furthermore, each task has a release date r i and a deadline d i , and has to be fully executed in [r i , d i ].

The CECSP consists of finding, for each task, a start time \(st_{i}\in \mathbb [est_{i},lst_{i}]\), an end time \(et_{i}\in \mathbb [eet_{i},let_{i}]\), and its resource usage \(b_{i}(t)\in \mathbb R^{+}\), \(\forall t\in \mathbb R^{+}\) such that:

Example 1

In the example of Fig. 1, the energy received by task 2 is equal to (2 × 3 + 1) + (2 × 4 + 1) + (2 × 4 + 1) = 25; the amount of resource consumed is equal to 3 + 4 + 4 = 11.

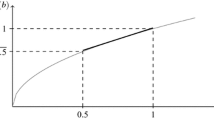

Throughout this paper, we will use the following expression for function f i (see Fig. 2):

with P i being the number of pieces of f i and \({x^{i}_{p}}, p \in \{1,\dots ,P_{i}-1\}\) its breakpoints.

1.2 Context

The CECSP comes from an industrial problem. This problem, presented in [1], arises in a pipe-manufacturing plant and more precisely, the foundry where metal is melted in induction furnaces. In this department, melting and heating use a huge amount of energy especially electricity. The expenses of the plant for electricity represent more than half of the annual energy costs. The cost of electricity depends on the total energy consumed and on penalties for power overrun in reference to a subscribed maximal power. The goal is to minimize the energy bill.

The foundry has several production lines (furnaces) and each metal operation has to be assigned to a furnace and scheduled within a time window. Furthermore, an operation has a variable duration that depends on the power given to the furnace. Thus, the electrical power of the furnaces, which can be adjusted at any time to avoid exceeding a maximum limit, can be seen as a continuous function of time to be determined. However, the function must lie within a limit (due to physical and operational considerations); thus, a minimum and a maximum power level must be satisfied for the melting operation. A melting job is composed of three sequential parts: loading, heating and unloading. The duration of loading and unloading are known but heating duration has to be determined. The heating operation can be stopped once the necessary energy has been received.

To solve this problem, the authors suppose that jobs have a piecewise constant consumption profile and define a two-step method. In the first step, scheduling of jobs on the furnaces is performed, using a constraint programming model, with fixed job durations. The task sequence and assignment are then used as data in the second step and a MILP model is used to determine their consumption profile and duration. The algorithm then returns to the first step with new jobs durations and it stops when the objective function is no more improved.

Unfortunately, the initially considered energy model was not sufficient to achieve a good energy consumption. Therefore, a new problem, which can be used to determine task starting and finishing times as well as their consumption profile was introduced in [1], the Energy Scheduling Problem (EnSP). Due to the complexity of the problem, the proposed model considers a time discretization, which can lead to suboptimal or infeasible solutions by over-constraining the problem. Furthermore, efficiency functions were not considered. In a continuous time setting but still without considering the efficiency functions, constraint propagation algorithms based on the energetic reasoning concept were proposed in [2] for the CECSP, which is the continuous version of the EnSP.

Despite the fact that processing rate functions were not considered in these papers, actual processing rate functions in scheduling problems that involve energy-consuming tasks are intrinsically continuous and non-linear. As a typical example, the non-linear function given in Fig. 3 gives the shape of the processing rate function of a fuel cell [7]. An extension of the work of [2] to linear processing rate functions as well as several solution methods was proposed in [12, 13]. A preliminary version of the work presented in this paper can be found in [14].

1.3 Properties and remarks

In [12], the authors use linear functions to approximate real-world ones. Despite the fact that there exist many real-world processing functions, which are concave [8, 11], approximating a function by a concave piecewise linear one is always at least as good as an approximation by a linear function (see Example 2 below). Furthermore, the authors of [12] prove the NP-hardness of their problem using a reduction from the cumulative problem. This proof is still valid in our case and then the CECSP with concave functions is NP-hard.

Example 2

Consider the neither concave nor convex function described in Fig. 3. We approximate the function from below (to obtain a relaxation) with a linear function (left side of the figure) or with a concave piecewise linear one (right side of the figure).

Clearly, the approximation is tighter with the concave piecewise linear function. Note also that this result is also valid for approximation from above or at the median point.

In order to define an efficient solution method for our problem, we prove that if there exists a solution for the CECSP, then a solution where all resource usage functions, b i (t), are piecewise constant exists. This is the statement of the following theorem:

Theorem 1

Let \(\mathcal {I}\) be a feasible instance of CECSP, with non-decreasing, concave piecewise linear functions f i . A solution such that, for all i ∈ A , b i (t) is piecewise constant, exists. Furthermore, ∀i ∈ A the breakpoints of b i (t) can be restricted to the start and end times of the tasks.

Let us assume a solution S with non-piecewise constant b i (t) exists. Then, S can be transform into a new solution \(S^{\prime }\) with piecewise constant \(b_{i}^{\prime }(t)\), \(\forall i \in \mathcal {A}\).

Before proving Theorem 1, we prove that, if ∃[t 1, t 2] where b i (t) is not constant, then b i (t) can be set to its mean value over [t 1, t 2]. Doing so, i will consume the same resource quantity in [t 1, t 2] and will received more energy.

Lemma 1

Let \(b_{iq}= \frac {{\int }_{t_{1}}^{t_{2}} b_{i}(t) dt}{t_{2}-t_{1}}\) . Then, we have:

Proof

Equation (5) is trivially verified by replacing b i q by its value. To prove that (6) is satisfied, we can use the following theorem, due to Jensen [9].

Theorem 2 (Jensen)

Let α(t)and g(t)be two integrable functions on [t 1, t 2]such that α(t) ≥ 0, ∀t ∈ [t 1, t 2]. We have:

where ϕ is a continuousconcave function in \([\min _{t \in [t_{1},t_{2}]} g(t)][\max _{t \in [t_{1},t_{2}]} g(t)]\).

Replacing ϕ(t) by f i (t), g(t) by b i (t) and α(t) by the constant function equal to 1 gives the desired result. □

Example 3

Consider a task i and the concave piecewise linear efficiency function described in Example 1, f 3(b).

Consider an interval [t 1, t 2 = t 1 + 6] and functions (Fig. 4):

Thus, \({\int }_{t_{1}}^{t_{2}}b_{i}(t)dt=12\) and \({\int }_{t_{1}}^{t_{2}}f_{i}(b_{i}(t))dt=27\). Applying Lemma 1 we can replace b i (t) by b i q = 12/6 = 2 between t 1 and t 2, which yields f i (b i q ) = 5, \({\int }_{t_{1}}^{t_{2}}b_{iq}dt=12\) and \({\int }_{t_{1}}^{t_{2}}f_{i}(b_{iq})dt=30 \ge 27\).

Proof

(Theorem 1) Let S be a feasible solution of \(\mathcal {I}\) and let (t q ){q = 1..Q} be the increasing series of distinct start and end time values (Q ≤ 2n). \(S^{\prime }\) can be defined as follows:

-

\(\begin {array}{lllll} b^{\prime }_{i}(t) = \left \{ \begin {array}{lll} b_{i0} &\quad & \text {if}\, t \in [t_{0}, t_{1}]\\ \multicolumn {2}{c}{\vdots } & \\ b_{i(Q-1)} &\quad & \text {if}\, t \in [t_{Q-1},t_{Q}] \end {array} \right . & \text {with}\, b_{iq}=\frac {{\int }_{t_{q}}^{t_{q+1}} b_{i}(t) dt}{t_{q+1}-t_{q}} \end {array}\)

-

\( st^{\prime }_{i}=st_{i} \)

-

\(et^{\prime }_{i}=\min (\tau | {\int }_{st_{i}}^{\tau } f_{i}(b_{i}^{\prime }(t))dt=W_{i})\) and then \(b_{i}^{\prime }(t) =0,\ \forall t > et^{\prime }_{i}\)

\(S^{\prime }\) clearly verifies the energy constraints (3) since it is defined in this way. \(S^{\prime }\) also satisfies the time window constraints (1) since \(st_{i} \le st^{\prime }_{i}\) and \(et_{i} \ge et^{\prime }_{i}\) (Fig. 5).

In addition, as S is a feasible solution, we have \(\forall q \in \{1,\dots ,Q\}\) and ∀t ∈ [t q , t q+1]:

and \(S^{\prime }\) also verifies the resource capacity constraints (4).

Finally, we can show that \(S^{\prime }\) satisfies the resource requirement constraints (2) in a similar way. □

An interesting remark can be made about Theorem 1. Actually, in order to find a solution to CECSP, we only have to find, for each task, its start time s t i , its end time e t i and the quantity of resource allocated to i between two consecutive start/end times b i q .

2 Time-table based reasoning

In this section, we first describe an algorithm which allows us to detect some infeasibilities and then, another algorithm performing time-bound adjustments is presented. Both are based on the well-known Time-Table reasoning [10] but the first one combines it with a flow-based algorithm, whereas the second one is an adaptation of the Time-Table Disjunctive reasoning for the cumulative constraints [6].

The Time-Table reasoning is based on the following observation: if the earliest end time e e t i of a task i is higher than its latest start time l s t i then i must be in process during interval [l s t i , e e t i ]; this interval is called the compulsory part of i. For the CECSP, as the resource usage of i is not fixed, we can only deduce that the task will consume at least \(b_{i}^{min}\) units of resource (see Fig. 6).

Aggregating all compulsory parts, we can compute in O(n 2) the minimum profile of the resource, denoted by T T(t),∀t and use it to detect infeasibility and to perform time-bound adjustments.

2.1 Time-table flow based reasoning

The first algorithm described in this paper embeds the concept of compulsory part into a flow-based linear program. This linear program allows us to detect some infeasibility. Indeed, if the program has no solution, then the considered CECSP instance is infeasible.

To describe this program, let \((t_{q})_{q \in \mathcal {Q}}\) be the increasing series of distinct variable domain bounds, i.e. t q gather all distinct latest/earliest start/end times of all tasks. Clearly, \(|\mathcal {Q}| \le 4\cdot n\). Then, ∀[t q , t q+1], let β i q (resp. w i q ) represent the quantity of consumed resource (resp. received energy) in this interval. Thus, the following linear program can be used as a checker:

where M is some large enough constant.

The compulsory part constraints are expressed by constraints (9). Constraints (8) model the resource capacity limitations. Constraints (10) impose that the maximum resource requirements are satisfied. Constraints (11) set the resource consumption of task i in [t q , t q+1] to be equal to 0, if \([t_{q},t_{q+1}] \not \subseteq [r_{i},d_{i}]\). Constraints (12) together with constraints (13) ensure a correct resource conversion. Indeed, as f i is concave and piecewise linear, the first constraints ensure that w i q can take the value \(f_{i}(\frac {\beta _{iq}}{t_{q+1}-t_{q}})\cdot (t_{q+1}-t_{q})\) whereas the second ones set w i q to zero if β i q = 0. Finally, constraints (14) state that the tasks received the required energy.

Note that this test ensures the same level of consistency than the Time-Table Overload Check [16]. Indeed, it does the Overload Check at the same time as the Time-Table. This is the same consistency check that performs Time-Table Edge-Finding [15].

We now describe the adaptation of the Time-Table Disjunctive reasoning for the cumulative constraint [6] to our problem. This reasoning will be used to perform time-bound adjustments on the start and end time variables.

2.2 Time-table disjunctive reasoning

The idea of the Time-Table Disjunctive reasoning is to take advantage of the minimum resource profile to detect disjunctive pairs of tasks, i.e. tasks that cannot be processed in parallel, dynamically.

Indeed, the disjunctive reasoning only considers pairs of tasks that exceed the capacity of the resource if they are scheduled in parallel, i.e. \(b_{i}^{min}+b_{j}^{min} > B\). However, tasks in \(\mathcal {A} \setminus \{i,j\}\) may not leave B units of resource available during the overlap of i and j.

Furthermore, if task i has no compulsory part then an additional filtering can be done when starting a task j at e s t j would make it overlap i in every schedule. This is due to the fact that j cannot contain a time interval that i must overlap.

Therefore, the authors in [6] defined the smallest interval overlapping i in every solution, called the minimum overlapping interval and denoted by m o i i . For the CECSP, this interval is exactly [e s t i , l s t i ] ∖ [e s t i , e e t i [, i.e the smallest interval containing [e e t i , l s t i ] (cf. Fig. 7). Note that, if a task has a compulsory part, then m o i i = ∅.

Let \(et_{i}^{max}= r_{i} + W_{i}/f_{i}(b_{i}^{min}) \). Then, using the minimum resource profile to compute the available resource and to search for disjunctive pairs of tasks, the time-table disjunctive reasoning can be stated as follows:

Proposition 1

Let i ≠ j be two tasks having no compulsory consumption and such that \(b_{i}^{min}+b_{j}^{min} +min_{t \in moi_{i}} TT(t)> B\) . If \(moi_{i} \subseteq [est_{j},et_{j}^{max}]\) we must have e e t i ≤ s t j and so e s t j can be adjusted to e e t i .

Example 4

Consider the example of Fig. 8 adapted from [6]. Due to the minimum profile, i and j cannot overlap and, since j cannot be scheduled before i (\(moi_{i} \subseteq [est_{j},et_{j}^{max}]\)), j has to be scheduled after and then we can set e s t j to e e t i .

A symmetrical reasoning allows to make adjustment of l e t j . Furthermore, Proposition 1 can easily be extended to the case where tasks i and j has no compulsory consumption following the method presented in [6] for the cumulative constraint.

3 Energetic reasoning

In this section, we present the extension of the well-known energetic reasoning to the CECSP with concave and piecewise linear processing rate functions. This work extends the work of [13] for linear processing rate functions. The full characterization of relevant intervals for the time-bound adjustments is also provided, extending the result for the cumulative constraint [4]. This characterization was not presented in [13].

First, we present the central idea of the reasoning and we use it to provide a satisfiability test as well as time-bound adjustments. The second part of this section will be dedicated to the characterization of relevant intervals.

3.1 Satisfiability test and time-bound adjustments

The idea of the energetic reasoning is to test whether the available resource within an interval [t 1, t 2] is sufficient to provide the minimum resource quantity needed by each task i in this interval, denoted by \(\underline {\beta }(i,t_{1},t_{2})\). If not, then the corresponding instance of the CECSP is infeasible.

Theorem 3

[5] Let \(\mathcal {I}\) be an instance of CECSP. If there exists (t 1, t 2) such that \(B(t_{2}-t_{1})-{\sum }_{i\in A}\underline {\beta }(i,t_{1},t_{2})<0\) then \(\mathcal {I}\) is infeasible.

In order to compute the minimum resource consumption of task i in [t 1, t 2], we first have to compute the minimum energy requirement of i in this interval, denoted by \(\underline {w}(i,t_{1},t_{2})\). This minimum requirement is obtained by scheduling as much energy as possible outside the interval [t 1, t 2] while satisfying constraints (1)–(4). This always corresponds to a case where the activity is:

-

left-shifted: the task starts at e s t i and is scheduled at its maximum requirement between e s t i and t 1,

-

right-shifted: the task ends at l e t i and is scheduled at its maximum requirement between t 2 and l e t i ,

-

or both: when scheduling at minimum requirement inside [t 1, t 2] implies to have a non-zero requirement both in [e s t i , t 1] and in [t 2, l e t i ].

We denote by ω L S (i, t 1, t 2) (resp. ω R S (i, t 1, t 2)) the minimum energy requirement of task i if it is shifted as early as possible in [t 1, t 2] (resp. as late as possible). Since both cases are symmetric, we only describe the first one. Thus, ω L S (i, t 1, t 2) is equal to:

Expression | Condition | Figure |

|---|---|---|

0 | t 1 ≥ e e t i ∨ t 2 ≤ e s t i | – |

W i | t 1 ≤ e s t i ∧ l e t i ≤ t 2 | – |

\( W_{i} - f_{i}(b_{i}^{max})(t_{1}-est_{i}) \) | e s t i ≤ t 1 ≤ e e t i ∧ l e t i ≤ t 2 | Fig. 9a |

\(\min {\left (\begin {array}{c} W_{i} - f_{i}(b_{i}^{max})(t_{1}-est_{i}) , \\ \max {\left \{ \begin {array}{c} W_{i}-f_{i}(b_{i}^{max})(t_{1}-est_{i}+let_{i}-t_{2})),\\ f_{i}(b_{i}^{min})(t_{2}-t_{1}) \end {array} \right \}} \end {array} \right )} \) | e s t i ≤ t 1 < t 2 ≤ l e t i | Fig. 9b |

\(\max {\left \{ \begin {array}{c} W_{i}-f_{i}(b_{i}^{max})(let_{i}-t_{2})),\\ f_{i}(b_{i}^{min})(t_{2}-est_{i}) \end {array} \right \} }\) | t 1 ≤ e s t i ∧ t 2 < l e t i | Fig. 9c |

The three last cases are illustrated in Fig. 9.

Thus, the minimum energy requirement in [t 1, t 2] is:

The minimum energy requirement is then used to compute the minimum resource requirement. First, let \(I=[est_{i},let_{i}] \cap [t_{1},t_{2}]\), then \(\underline {\beta }(i,t_{1},t_{2})\) can be computed by solving the following program:

Indeed, the goal is to find the minimum resource quantity (17) i has to consume in I to receive an energy \(\underline {w}(i,t_{1},t_{2})\) (18). In addition, we have to make sure that the minimum requirement constraints are satisfied by the solution of the program (19).

Then, Lemma 1 can be used to simplify the program. Actually, the lemma applied to the problem involving only task i implies a solution with constant b i (t), say \(b_{i}(t)=\tilde {b_{i}}\) exists and then the program can be rewritten as follows:

Thus, we have two cases to consider: either the task can be scheduled at \(b_{i}^{min}\) during the whole interval I or not. In the first case, we can remove the second constraint and we obtain:

Since f i is concave and piecewise linear, \(f_{i}^{-1}\) can easily be computedFootnote 2 and \(\tilde {b_{i}}|I|=f_{i}^{-1}(\underline {w}(i,t_{1},t_{2})/|I|)\cdot |I|=\underline {\beta }(i,t_{1},t_{2})\) is equal to:

The second case corresponds to the case where executing the task at \(b_{i}^{min}\) during the whole interval I give too much energy to the task, i.e. \(\underline {w}(i,t_{1},t_{2}) <|I|\cdot f_{i}(b_{i}^{min})\). In this case, \(\underline {\beta }(i,t_{1},t_{2})\) is equal to \(b_{i}^{min} \cdot \underline {w}(i,t_{1},t_{2})/f_{i}(b_{i}^{min})\).

Finally, the expression of the minimum resource consumption is:

Example 5

Consider the instance described in Example 1. In particular, we compute \(\underline {w}(i,t_{1},t_{2})\) and \(\underline {\beta }(i,t_{1},t_{2})\) for task 3 and interval [0,4] and [0,6].

For interval [0,6], we have \(\underline {w}(3,0,6)=W_{3}=21.5\) since \([est_{3},let_{3}] \subseteq [t_{1},t_{2}]\). Then, the computation of \(\underline {\beta }(3,0,6)\) falls into the case where \( \underline {w}(3,0,6) \ge f_{3}(b_{i}^{min}[3]){\cdots } |I| = 18\). Thus, \( \underline {w}(3,0,6)/|I|=21.5/6 \simeq 3.583\) belonging to interval [f 3(1), f 3(2)[= [2,5[, we have (see Fig. 10a):

For interval [0,4], we have \(\underline {w}(3,0,4)=W_{3} - 2\cdot f_{3}(b_{i}^{max}[3]) =8.5\). Here, the computation of \(\underline {\beta }(3,0,4)\) falls into the case where \( \underline {w}(3,0,4) < f_{3}(b_{i}^{min}[3]){\cdots } |I| = 12\). Thus, we have (see Fig. 10b):

We now describe how this quantity is used to perform time-bound adjustments. These adjustments are similar to the ones for linear functions described in [12], so we just briefly present them.

We start by defining some notation. We denote by β L S (i, t 1, t 2) (respectively β R S (i, t 1, t 2)) the minimal resource consumption corresponding to ω L S (i, t 1, t 2) (resp. ω R S (i, t 1, t 2)).

We have:

and a similar expression for β R S (i, t 1, t 2).

Lemma 2

If t 1 > r i and there exists [t 1, t 2]such that:

then,

and, if\(b_{i}^{min}\neq 0\),

Indeed, the only configuration for which task i starts after t 1 and leading to the minimum resource consumption inside [t 1, t 2] is if the task is right-shifted. Therefore, \({\sum }_{\begin {array}{llllll}j \in A;\ j \neq i \end {array}}\underline {\beta }(j,t_{1},t_{2}) + \beta _{RS}(i,t_{1},t_{2})\) is the total minimum resource consumption in [t 1, t 2] when task i starts after t 1. Hence, if this quantity is greater than the quantity of available resource in [t 1, t 2], i has to start before t 1. Otherwise \({\sum }_{\begin {array}{llllll}j \in A;\ j \neq i \end {array}}\underline {\beta }(j,t_{1},t_{2}) + {\int }_{t_{1}}^{t_{2}} b_{i}(t) \ge {\sum }_{\begin {array}{llllll}j \in A;\ j \neq i \end {array}}\underline {\beta }(j,t_{1},t_{2})+ \beta _{RS}(i,t_{1},t_{2}) \ge B(t_{2}-t_{1})\).

Furthermore, \({\sum }_{j \in A;\ j\neq i}\underline {\beta }(t_{1},t_{2},j) + \beta _{RS}(i,t_{1},t_{2})- B(t_{2}-t_{1})\) is the minimum amount of resource that has to be allocated to i before t 1. Hence, we can divide this number by \(b_{i}^{max}\) to obtain a valid upper bound of the start time of i. Similar arguments lead to the adjustment on l e t i . An example of such adjustments can be found in [12].

3.2 Relevant intervals for the energetic reasoning

In [12], the authors present a full characterization of the relevant intervals for the satisfiability test in the case where processing rate functions are linear. These intervals are exactly the ones that are relevant for the case of concave piecewise linear functions. Furthermore, as these intervals are included in the set of relevant intervals for the time-bound adjustments, we only present the second results.

Recall that an adjustment can be performed on task i if \(B(t_{2}-t_{1}) - {\sum }_{i\neq j}\underline {\beta }(j,t_{1},t_{2})] - \beta _{RS}(i,t_1,t_2) <0 \). Thus, we are looking for all intervals [t 1, t 2] such that the function \(B(t_2-t_1) - {\sum }_{i\neq j}\underline {\beta }(j,t_{1},t_{2}) - \beta _{RS}(i,t_1,t_2)\) is negative.

Theorem 4

[4] \(B(t_2-t_1) - {\sum }_{i\neq j}\underline {\beta }(j,t_{1},t_{2}) - \beta _{RS}(i,t_1,t_2)\) is locally minimum in interval [t 1, t 2] only if one of the four following conditions is satisfied:

with \(\frac {\delta ^{+} f}{\delta t_2}\) (resp. \(\frac {\delta ^{-} f}{\delta t_2}\) ) the right (resp. left) derivative of f w.r.t. t 2 .

This theorem is then used to characterize, for a task i and a fixed t 1, the value of function \(t_2 \rightarrow \underline {\beta }(i,t_{1},t_{2})\) or \(t_2 \rightarrow \beta _{RS}(i,t_1,t_2)\) for which its left derivative is greater than its right, and reciprocally for fixed t 2. Then, the two results are combined to obtain the list of relevant intervals [t 1, t 2].

Since there are many cases to consider, we only present how we obtain relevant t 2 for the case where \(W_i > f_i(b_{i}^{min})(let_i-est_i)\). The other cases to consider are \(b_{i}^{min}=b_{i}^{max}\) (see [4]) and \(W_i \le f_i(b_{i}^{min})(let_i-est_i)\).

First, let H (resp. \(I^{\prime }\)) be the intersection point of line \(f_i(b_{i}^{min})(t_2-t_1)=W_i-(let_i-t_2)f_i(b_{i}^{max})\) and \(f_i(b_{i}^{min})(t_2-t_1)=W_i-(t_1-est_i)f_i(b_{i}^{max})\) (resp. \(W_i-(t_1-est_i+let_i-t_2)f_i(b_{i}^{max})= f_i(b_{i}^{min})(t_2-t_1)\) and t 2 = d i ).

Also, let U(t 1) (resp. D(t 1)) be the point t 2 such that \( f_i(b_{i}^{min})(t_2-t_1) = W_i-(t_1-est_i)f_i(b_{i}^{max})\) (resp. \(f_i(b_{i}^{min})(t_2-t_1) = W_i-(let_i-t_2)f_i(b_{i}^{max})\)

Lemma 3

Let i be a task s.t. \(W_i >f_i(b_i^{min})(let_i-est_i)\) . Then, for any fixed t 1 , at most two intervals [t 1, t 2]satisfying the second condition of (21) exist and at most two intervals [t 1, t 2]satisfying the second condition of (22) exist. These intervals are described in Table 1.

With \(H_{t_1}\)(resp. \(I^{\prime }_{t_1}\))being the projection on the x-axis of point H(resp.\(I^{\prime }\)).

Proof

We only present how to obtain relevant t 2 for the second line of Table 1. The other cases can be obtained in a similar way. In order to prove the lemma, we analyze the variation of \(t_2 \rightarrow \underline {\beta }(i,t_{1},t_{2})\). Figure 11 represents these variations.

Where \(expr_{ip}=\frac {1}{a_{ip}}\left (W_i- f_i(b_{i}^{max})(let_i-t_2+t_1-est_i) - c_{ip}(t_2-t_1)\right )\) The intervals for which the left derivative is smaller than the right are [t 1, l e t i ] and [t 1, D(t 1)]. Indeed, since f i is a concave piecewise linear function, we have a i p > a i p+1 and c i p < c i p+1, and we have \(\frac {\delta ^{-}expr_{ip}}{\delta t_2}< \frac {\delta ^{+}expr_{ip+1}}{\delta t_2}\) □

4 Computational results

In this section, we start by presenting how we have conducted our experiments on the algorithms stated in this paper. Then, we describe the results of these experiments.

Our propagation algorithms and satisfiability tests were embedded in a hybrid branch-and-bound combining branching scheme and mixed-integer linear programming (MILP). This procedure is an adaptation of the one in [12] for linear functions.

Hybrid branch-and-bound

At first, a branch-and-bound algorithm is used to reduce the size of possible start and end intervals (until their size is less than a given 𝜖 > 0) and, then, an event-based MILP is used in order to find exact task start and end times and to determine the quantity of resource allocated to i between two consecutive events.

The branching procedure is as follows. At the beginning, a task can start (resp. end) at any time s t i ∈ [e s t i , l s t i ] (resp e t i ∈ [e e t i , l e t i ]). The idea is, at each node, to reduce the size of one of these intervals by creating two nodes splitting the interval into two parts of equal size.

At each node, we apply one or both of the satisfiability tests described above and, if the test does not fail, we perform the corresponding time-window adjustments. We continue this procedure using a depth-first strategy until all intervals are smaller than an 𝜖. When it happens, the remaining solution space is searched via the event-based MILP.

The MILP used in our algorithm is based on the on/off event-based formulation for the CECSP with linear processing rate functions [12]. In this formulation, an event corresponds either to a task start or a task end time. These events are represented by a set of continuous variables t e and \(\mathcal {E}=\{1,\dots ,2n\}\) represents the index set of these events. We use a binary variable z i e to assign the different event dates to the start and end times of the tasks. Indeed, z i e is equal to 1 if and only if task i is in process during interval [t e , t e+1]. Finally, two continuous variables b i e and w i e are also defined. These variables stand for the quantity of resource used by task i and for the energy received by i between events t e and t e+1. Since the event-based MILP used for our case is almost the same than the one used for linear functions, we are not describing the model here. The main difference lies in the constraints converting resource into energy. These constraints were as follows in the previous model:

and are replaced by the following ones in the new model:

Experiments

The experiments are conducted on an Intel Core i7-4770 processor with 4 cores and 8 gigabytes of RAM under the 64-bit Ubuntu 12.04 operating system. The hybrid branch-and-bound algorithm is coded in C++ and uses CPLEX 12.6 with 2 threads at each leaf.

The heuristic tested for choosing the variable on which the algorithm will branch is the following one: we choose the variable corresponding to the smallest size interval among all [e s t i , l s t i ] and [e e t i , l e t i ]. The parameter 𝜖 used in the experiments is equal to 5 since this parameter value provides the best results for the case of linear efficiency functions [12].

To generate instances with concave piecewise linear processing rate functions, we use instances of [12] with identical functions. First, the instances were solved using the time-indexed mixed integer linear program described in [12]. In this formulation, the planning horizon is discretized in T time periods of size 1 and a variable b i t is used to represent the resource consumption of task i in period t.

The efficiency functions f i are generated by randomly selecting a number of pieces P i . The interval \([b_{i}^{min},b_{i}^{max}]\) is then divided into P i parts. For each piece p, a random coefficient a i p such that a i p < a i p−1 is generated and c i p is computed to ensure the continuity of the function. Finally, W i is set to \(W_i={\sum }_{t=1}^{T} f_i(b_{it})\). We repeat this process until we obtain 80 instances with 10 tasks and 140 instances with 20 tasks.

Table 2 presents the results of the hybrid branch-and-bound with a time limit of 7200 seconds. The first row describes the results of the hybrid branch-and-bound with the time-table flow based reasoning (TTFlow), the second row with the energetic reasoning (ER), the third row with the time-table disjunctive reasoning (TTDR) and the last row with both ER and TTFlow tests. For each row, the first column presents the time needed to solve the instances, the second column shows the time spent in the tree, the third column the percentage of solved instances, the fourth column the number of solved MILPs (if the instance is solved), the fifth column the number of explored nodes (if the instance is solved), and the last column describes the percentage of “out of memory”.

We can see that the time-table flow and the time-table disjunctive reasoning provide the best results. For the 10-task instances, the time-table flow solves the instances faster but the time spent in the tree is higher than for the time-table disjunctive reasoning while the number of nodes and MILP solved is equivalent for both algorithms. For the 20-task instances, both algorithms achieved similar performances both in terms of solving time and solved instances.

The energetic reasoning has the worst performances and the combination of the time-table flow and the energetic reasoning improves significantly the performances of the solution method in comparison with the use of the energetic reasoning alone. However, the time-table flow and time-table disjunctive reasoning is better than these two algorithms.

Another remark we can do concerns the huge difference in terms of number of MILPs and nodes between the resolution of the 10-task and 20-task instances with the energetic reasoning. This can be explained by the small number of solved instances for the 20-task instances. Indeed, the number of nodes/MILPs in the table is associated only with solved instances.

In addition, we can see that most of the time is spent in the MILP resolution. Therefore, improving the formulation is one of the main perspectives of this work.

5 Conclusions

This paper presents the extension of the energetic reasoning and the time-table disjunctive reasoning to the CECSP with concave and piecewise linear processing rate functions. A full characterization of the relevant intervals on which the time-bound adjustments of the energetic reasoning has to be applied is provided. Both methods are then embedded in a hybrid branch-and-bound and tested on small-size instances.

The interest of considering concave piecewise processing rate functions is shown through examples and experiments. Furthermore, all the new results described in this paper are still valid for linear functions.

For the perspectives, time has to be spent on the instance generation and on the resolution of larger instances. One way of doing this will be by improving the MILP. Also, the method can be improved by the use of dedicated branching heuristics. Finally, the consideration of objective functions is an important perspective of this work.

Notes

Although all results presented in this paper can be adapted when \(b_{i}^{min}=0\), for ease of notation, we assume \(b_{i}^{min} \neq 0\).

Indeed, suppose that f i is constant in some pieces the first one being p ∈{1,…, P i }, which would cause problem to compute \(f_{i}^{-1}\). In that case, f i must be constant on all pieces {p,…, P i } due to concavity. It follows that \(b_{i}^{max}\) can be reduced to the start of piece p.

References

Artigues, C., Lopez, P., & Haït, A. (2013). The energy scheduling problem: industrial case study and constraint propagation techniques. International Journal of Production Economics, 143(1), 13–23.

Artigues, C., & Lopez, P. (2015). Energetic reasoning for energy-constrained scheduling with a continuous resource. Journal of Scheduling, 18(3), 225–241.

BłaŻewicz, J., Machowiak, M., Wȩglarz, J., Kovalyov, M., & Trystram, D. (2004). Scheduling malleable tasks on parallel processors to minimize the makespan. Annals of Operations Research, 129(1–4), 65–80.

Derrien, A., & Petit, T. (2014). A new characterization of relevant intervals for energetic reasoning. In International conference on principles and practice of constraint programming, CP 2014 vol. 8656 of lecture notes in computer science (289–297). Springer International Publishing.

Erschler, J., & Lopez, P. (1990). Energy-based approach for task scheduling under time and resources constraints. In 2nd International workshop on project management and scheduling (pp 115–121). Compiègne.

Gay, S., Hartert, R., & Schaus, P. (2015). Time-table disjunctive reasoning for the cumulative constraint. In International conference on AI and OR techniques in constraint programming for combinatorial optimization problems, CPAIOR 2015, vol. 9075 of lecture notes in computer science (pp. 157–172). Springer International Publishing.

Ngueveu, S.U., Artigues, C., & Lopez, P (2016). Scheduling under a non-reversible energy source: an application of piecewise linear bounding of non-linear demand/cost functions. Discrete Applied Mathematics, 208, 98–113.

Hung, M.N., Le Van, C., & Michel, P. (2005). Non-convex aggregative technology and optimal economic growth. Cahiers de la Maison des Sciences Économiques 2005.95 - ISSN : 1624-0340.

Jensen, J.L.W.V. (1906). Sur les fonctions convexes et les inégalités entre les valeurs moyennes. Acta Mathematica, 30(1), 175–193.

Lahrichi, A. (1982). Ordonnancements. La notion de parties obligatoires et son application aux problèmes cumulatifs. RAIRO - Operations Research, 16(3), 241–262.

Lewis, J. Algebra symposium: optimizing fuel consumption. http://homepages.math.uic.edu/~jlewis/math165/asavgcost.pdf.

Nattaf, M., Artigues, C., Lopez, P., & Rivreau, D. (2015). Energetic reasoning and mixed-integer linear programming for scheduling with a continuous resource and linear efficiency functions. OR Spectrum, 1–34.

Nattaf, M., Artigues, C., & Lopez, P. (2015). A hybrid exact method for a scheduling problem with a continuous resource and energy constraints. Constraints, 20 (3), 304–324.

Nattaf, M., Artigues, C., & Lopez, P. (2015). Flow and energy based satisfiability tests for the continuous energy-constrained scheduling problem with concave piecewise linear functions. In CP Doctoral Program 2015 (pp. 70–81). Cork.

Vilím, P. (2011). Timetable edge finding filtering algorithm for discrete cumulative resources, Integration of AI and OR techniques in constraint programming for combinatorial optimization problems. CPAIOR 2011 vol 6697 of lecture notes in computer science. Berlin: Springer.

Wolf, A., & Schrader, G. (2006). O(n n) overload checking for the cumulative constraint and its application. In Umeda, M., Wolf, A., Bartenstein, O., Geske, U., Seipel, D., & Takata, O. (Eds.), Declarative programming for knowledge management. INAP 2005 lecture notes in computer science, Vol. 4369. Berlin: Springer.

Acknowledgments

The authors thank José Verschae for enlightening discussions. This study was partially supported by project “Energy-Efficient and Robust approaches for the Scheduling of Production, Services and Urban Transport”, ECOS/CONICYT, N◦ C13E04.

Author information

Authors and Affiliations

Corresponding author

Additional information

This article belongs to the Topical Collection: Integration of Artificial Intelligence and Operations Research Techniques in Constraint Programming

Guest Editors: Michele Lombardi and Domenico Salvagnin

Rights and permissions

About this article

Cite this article

Nattaf, M., Artigues, C. & Lopez, P. Cumulative scheduling with variable task profiles and concave piecewise linear processing rate functions. Constraints 22, 530–547 (2017). https://doi.org/10.1007/s10601-017-9271-4

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10601-017-9271-4