Abstract

Is it possible for a first-order method, i.e., only first derivatives allowed, to be quadratically convergent? For univariate loss functions, the answer is yes—the Steffensen method avoids second derivatives and is still quadratically convergent like Newton method. By incorporating a specific step size we can even push its convergence order beyond quadratic to \(1+\sqrt{2} \approx 2.414\). While such high convergence orders are a pointless overkill for a deterministic algorithm, they become rewarding when the algorithm is randomized for problems of massive sizes, as randomization invariably compromises convergence speed. We will introduce two adaptive learning rates inspired by the Steffensen method, intended for use in a stochastic optimization setting and requires no hyperparameter tuning aside from batch size. Extensive experiments show that they compare favorably with several existing first-order methods. When restricted to a quadratic objective, our stochastic Steffensen methods reduce to randomized Kaczmarz method—note that this is not true for SGD or SLBFGS—and thus we may also view our methods as a generalization of randomized Kaczmarz to arbitrary objectives.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In minimizing a univariate function f with an iteration \(x_{k+1} = x_k - f'(x_k)/g(x_k)\), possibilities for g include

with different orders of convergence q, i.e., \(|x_{k+1} - x^* |\le c|x_k - x^* |^q\). Gradient descent has \(q =1\), secant method \(q = (1+\sqrt{5})/2\), Newton and Steffensen methods both have \(q = 2\).

Steffensen method [1, 2] is a surprise. Not only does it not require second derivatives (like Newton) to achieve quadratic convergence, it also does not achieve its superior convergence through the use of multisteps (like secant). In other words, the kth Steffensen iterate only depends on \(x_k\) but not \(x_{k-1}, x_{k-2}\), etc.

Nevertheless, while the other three methods have widely used multivariate generalizations (secant method has several, as quasi-Newton methods, as Barzilai–Borwein step size, etc), all existing multivariate generalizations of Steffensen method [3,4,5,6,7,8,9,10,11,12,13,14,15] involve multivariate divided differences that require \(O(n^2)\) function evaluations and are no less expensive than using the full Hessian. Furthermore these multivariate generalizations are no longer one-step methods. As a result they have not found widespread use.

Our contributions are as follows:

-

(i)

We show that by incorporating a carefully chosen step size parameter the convergence of Steffensen method may be further improved beyond quadratic to \(q = 1 + \sqrt{2}\).

-

(ii)

We extend Steffensen method to a multivariate setting as an adaptive learning rate, avoiding divided differences, requiring just two gradient evaluations, and remaining a one-step method.

-

(iii)

We show that when used in a randomized setting, our methods outperform SGD, SVRG, and SLBFGS on a variety of standard machine learning tasks on real data sets.

The performance in iii is measured in actual running time. But aside from speed, our methods have two advantages over SLBFGS, which has become a gold standard in machine learning:

-

(a)

Quasi-Newton methods may involve matrix–vector product, a two-loop recursion with \(O(d^2)\) computation. Although deterministic LBFGS does not form matrix–vector product explicitly, stochastic LBFGS does. Our multivariate Steffensen methods, whether deterministic or stochastic, are free of such products.

-

(b)

Quasi-Newton methods come in two flavors: Hessian or inverse Hessian updates. The latter seems a nobrainer as it avoids matrix inversion but this is a fallacy. It is common knowledge among practitioners [16, Section 4.5.2.2] that the inverse Hessian version often conceals an ill-conditioned approximate Hessian; one should instead update the Cholesky factors of the approximate Hessian in order to detect ill-conditioning. By its design, LBFGS inevitably uses the inverse Hessian version. Our multivariate Steffensen methods are not quasi-Newton methods and do not involve approximate Hessians, avoiding this issue entirely.

A theoretical counterpart to iii is that when measured by the number of stochastic gradient evaluations, we have the following complexity estimates:

to minimize a d-variate convex function of the form \(f = f_1 + \dots + f_n\) to \(\varepsilon \)-accuracy. So our proposed methods SSM and SSBB are at least an order of magnitude fasterFootnote 1 than SVRG–BB and SLBFGS in terms of the condition number \(\kappa \). Here h refers to the ‘history size’ of SLBFGS. The algorithms and quantities involved will all be explained in due course.

Johan Steffensen first proposed his eponymous method [1] in 1933. See [19] for an informative history of the method and a biography of its inventor. The method was described in the classic books of Henrici [5, pp. 91–95] and Householder [20, p. 164] but has remained more of a textbook curiosity. One reason, as we mentioned above and will elaborate in Sect. 2.2, is that there has been no viable multivariate version.

Another reason, as we will speculate, is that much like the Kaczmarz method [21, 22] for iterative solution of linear systems had lingered in relative obscurity until it was randomized [23], Steffensen method is also most effective in a randomized setting. This is in fact more than an analogy; we will show in Sect. 2.4 that the stochastic Steffensen method we propose reduces to randomized Kaczmarz method when applied to a quadratic objective—not true for SGD, SVRG, or SLBFGS. So one may also view our stochastic Steffensen method as a generalization of randomized Kaczmarz method to arbitrary differentiable objective functions.

In Sect. 3 we will supply proofs of linear convergence of our methods and a theoretical comparison with other existing methods. In Sect. 4 we show how to adapt our methods for nonsmooth functions. In the numerical experiments in Sect. 5, we will see that our stochastic Steffensen method compares favorably with SGD, SVRG (with or without Barzilai–Borwein step size), and SLBFGS across different tasks in the LIBSVM datasets: ridge regression, logistic regression, and support vector machines with squared hinge loss.

1.1 Background

As in the usual setting for stochastic gradient descent and its variants, our goal is to minimize an objective function of the form

where \(x\in \mathbb {R}^d\) is the model parameter. Such functions are ubiquitous in machine learning, arising from the empirical risk minimization (ERM) problem where \(f_i\) takes the form

with \(\ell :\mathbb {R}\times \mathbb {R}\rightarrow \mathbb {R}_+\) the loss function, \(R: \mathbb {R}^d\rightarrow \mathbb {R}_+\) the regularizer, \(\lambda \ge 0\) the regularization parameter, and \(\{(w_i,y_i)\in \mathbb {R}^d \times \mathbb {R}:i=1,\dots , n\}\) the training set with labels. Different choices of \(\ell \) and R give \(l^2\)-regularized logistic regression, lasso regression, soft-margin support vector machine, etc.

The challenge here is that the dimension d and sample size n are extremely large in modern situations, mandating the use of first-order methods that rely only on first derivatives. But when n is large, even computing the full gradient of all \(f_1,\dots , f_n\) is intractable, and we need stochastic optimization methods that update x only after processing a small subset of data, permitting progress in the time deterministic methods make only a single step. Consequently, stochastic first-order methods have become the method of choice, with stochastic gradient descent (SGD) [24] and its many variants [25,26,27] and various stochastic quasi-Newton methods [28,29,30] ruling the day.

Stochastic optimization has grown into a vast subject. We have limited our comparison in this article to stochastic variants of classical methods that rely primarily on gradients. We did not include more sophisticated stochastic optimization algorithms that bring in additional features like moments [31, 32] or momentum [33,34,35,36] for two reasons. Firstly these more sophisticated algorithms invariably require heavy tuning compared to purely gradient-based methods. Secondly we view them as enhancements to gradients-based methods and our proposed stochastic Steffensen methods likewise lend themselves to such enhancements. As such, the most appropriate and equitable comparisons for us would be the aforementioned gradient-based methods.

1.2 Convention

In this article, we use the terms learning rate and step size slightly differently. Take for example our Steffensen–Barzilai–Borwein iteration in (10):

the coefficient

will be called a learning rate whereas the coefficient

will be called a step size. In general, the term ‘learning rate’ will be used exclusively to refer to the coefficient of a search direction, which may be a gradient, a stochastic gradient, a variance-reduced stochastic gradient, etc. The term ‘step size’ will be used for coefficients in other contexts like \(\beta _k\) in the definition of the learning rate \(\eta ^{{\scriptscriptstyle \textsf{SBB}}}_k\).

We will use \(\eta _k\) to denote a general learning rate. For the learning rate of a particular algorithm, we will indicate the algorithm in superscript. For example, \(\eta ^{{\scriptscriptstyle \textsf{SBB}}}_k\) above is the learning rate of Steffensen–Barzilai–Borwein method (SBB). The Barzilai–Borwein step size above will always be denoted \(\beta _k\) throughout.

2 Stochastic multivariate Steffensen methods

Our three-step strategy is to (a) push the convergence order of the univariate Steffensen method to its limit, (b) extend the resulting method to a multivariate setting, and then (c) randomize the multivariate algorithm. For (a), we are led naturally to the Barzilai–Borwein step size; for (b), we emulate the multivariate extension of secant method into quasi-Newton method; and for (c), we draw inspiration from stochastic gradient descent and its various derivatives.

2.1 Deterministic univariate setting

As we saw in Sect. 1, univariate Steffensen method:

avoids second-order derivatives and yet preserves quadratic convergence with the use of two first-order derivatives \(f'(x_k + f'(x_k))\) and \(f'(x_k)\). With modern hindsight, it is clear that we may obtain an immediate improvement in (2), one that is essentially free, by incorporating a coefficient \(\beta _k\) that only depends on quantities already computed. The analysis in the next two results will lead us to an appropriate choice of \(\beta _k\). Note that although the algorithms require only first derivatives of f, the convergence results assume that f has a higher degree of smoothness.

Proposition 1

(Convergence order of Steffensen method) Let f be a function that is \(C^3\) in a neighborhood of a stationary point \(x^*\) with \(f'(x^*) = 0\) and \(f''(x^*) \ne 0\). Let \(\alpha \in \mathbb {R}\) be a nonzero constant parameter and

for \(k = 0,1,2,\dots .\) If \(\lim _{k \rightarrow \infty } x_k = x^*\), then

where \(\varepsilon _k {:}{=}x_k - x^*\) denotes the error in iteration k.

Proof

Let \(\varepsilon _k = x_k - x^*\). Subtracting \(x^*\) from both sides, we get

Taylor expanding \(f'(x_k + \alpha f'(x_k))\) about \(x_k\), we get

for some \(\xi _k\) between \(x_k\) and \(x_k + \eta f'(x_k)\). Combining the previous two equations, we have

Taylor expanding \(f'\) about \(x_k\), we get

for some \(\xi _k^*\) between \(x_k\) and \(x^*\). Plugging \(f'(x_k)\) into (3) gives us

Taking limit \(k \rightarrow \infty \) and using continuity of \(f'\), \(f''\), and \(f'''\) at \(x^*\), we have

as required. \(\square \)

We next show that with an appropriate choice of \(\alpha \), we can push Steffensen method into the superquadratically convergent regime. The quadratic convergence in Proposition 1 is independent of the value \(\alpha \) and we may thus choose a different \(\alpha \) at every step. Of course if we simply set \(\alpha _k = -1/f''(x_k)\) in Proposition 1, we will obtain a cubically convergent algorithm. However since we want a first-order method whose learning rate depends only on previously computed quantities, we set \(\alpha _k = -(x_k - x_{k-1})/[f'(x_k) - f'(x_{k-1})]\) to be the finite difference to avoid second derivatives — as it turns out, this improves convergence order to \(1 + \sqrt{2}\).

Theorem 2

(Convergence order of Steffensen method with Barzilai–Borwein step size) Let f be a function that is \(C^4\) in a neighborhood of a stationary point \(x^*\) with \(f'(x^*) = 0\) and \(f''(x^*) \ne 0\). Let

and

for \(k =0,1,2,\dots .\) If \(\lim _{k \rightarrow \infty } x_k \rightarrow x^*\), then

In particular, the order of convergence of (4) is superquadratic with \(1+\sqrt{2} \approx 2.414\).

Proof

Taylor expanding \(f'(x_k+\beta _kf'(x_k))\) at \(x_k\), we get

for some \(\xi _k\) between \(x_k\) and \(x_k + \eta _kf'(x_k)\). Let \(\varepsilon _k = x_k - x^*\), we have

Taylor expanding \(f'(x^*)\) at \(x_k\) to 4th, 3th, and 2nd order, we get

Plugging these into (5) and defining

we obtain

Since \(\beta _k = -(x_k - x_{k-1})/(f'(x_k) - f'(x_{k-1}))\), we may Taylor expand \(f'(x_{k-1})\) at \(x_k\) to get

for some \(\xi _k^\ddagger \) between \(x_{k-1}\) and \(x_k\). Plugging it into

gives us

We deduce that

and therefore

Hence the convergence order is \(1 + \sqrt{2}\). \(\square \)

The choice of \(\beta _k\) above is exactly the Barzilai–Borwein (BB) step size for a univariate function [37]. In the multivariate setting, \(\beta _k\) will be replaced by the multivariate BB step size. Theorem 2 provides the impetus for a first-order method with Steffensen updates and BB step size, namely, it is superquadratically convergent for univariate functions. Such a high convergence order is clearly an overkill for a deterministic algorithm but our experiments in Sect. 5 show that they are rewarding when the algorithm is randomized, as randomization inevitably compromises convergence speed. For easy comparison, we tabulate the convergence order, i.e., the largest q such that \(|\varepsilon _{k+1} |\le c|\varepsilon _k |^q\) for some \(c >0\) and all k sufficiently large, of various methods below:

Method | Convergence | Derivatives | Steps |

|---|---|---|---|

Steepest descent | 1 | 1st | Single step |

Secant = Barzilai–Borwein = quasi-Newton | \((1 + \sqrt{5})/2 \) | 1st | Mutltistep |

Newton | 2 | 2nd | Single step |

Steffensen | 2 | 1st | Single step |

Steffensen–Barzilai–Borwein | \(1 + \sqrt{2} \) | 1st | Multistep |

Note that for a univariate function, Barzilai–Borwein step size and any quasi-Newton method with Broyden class updates (including BFGS, DFP, SR1) reduce to the secant method. In particular, they are all two-step methods, i.e., its iterate at step k depends on both \(x_k\) and \(x_{k-1}\). As a result Steffensen–Barzilai–Borwein method is also a two-step method as it involves the Brazlai–Borwein step size but Steffensen method is a one-step method.

2.2 Deterministic multivariate setting

There have been no shortage of proposals for extending Steffensen method to a multivariate or even infinite-dimensional setting [3,4,5,6,7,8,9,10,11,12,13,14,15]. However all of them rely on various multivariate versions of divided differences that require evaluation and storage of \(O(n^2)\) first derivatives in each step. Although they do avoid second derivatives, computationally they are just as expensive as Newton method and are unsuitable for modern large scale applications like training deep neural networks.

We will propose an alternative class of multivariate Steffensen methods that use only O(n) first derivatives, by emulating quasi-Newton methods [38,39,40,41] and Barzilai–Borwein method [37] respectively. Our observation is that expensive multivariate divided differences can be completely avoided if we just use the ideas in Sect. 2.1 to define learning rates. Another advantage is that these learning rates could be readily used in conjunction with existing stochastic optimization methods, as we will see in Sect. 2.3.

The key idea behind quasi-Newton method is the extension of univariate secant method to a multivariate objective function \(f:\mathbb {R}^d\rightarrow \mathbb {R}\) by replacing the finite difference approximation of \(f''(x_k)\), i.e., \(h_k = [f'(x_k) - f'(x_{k-1})]/(x_k - x_{k-1})\), with the secant equation \(H_k s_k = y_k\) or

where \(s_k = x_k - x_{k-1}\) and \(y_k = \nabla f(x_k) - \nabla f(x_{k-1})\), avoiding the need to divide vectorial quantitites. Here \(H_k\) (resp. \(B_k\)) is the approximate (resp. inverse) Hessian.

We use the same idea to extend Steffensen method to a multivariate setting, solving (6) with

Note that with these choices, (6) roughly says that “\(B_k = s_k/y_k = \nabla f(x_k)/[\nabla f(x_k + \nabla f(x_k)) - \nabla f(x_k) ]\),” which gives us \(f'(x_k)/[f'(x_k + f'(x_k)) - f'(x_k)]\) as in the univariate Steffensen method when \(d=1\) but is of course meaningless when \(d > 1\). Nevertheless we may pick a minimum-norm solution to (6), which is easily seen to be given by the rank-one matrix

regardless of whether \(\Vert \, \cdot \, \Vert \) is the Frobenius or spectral norm. Hence we obtain a multivariate analogue of Steffensen method (2) as

We will call this quasi-Steffensen method in analogy with quasi-Newton methods.

The key idea behind the Barzilai–Borwein method [37] is an alternative way of treating the secant equation (6), whereby the approximate Hessian \(B_k\) is assumed to take the form \(B_k = \sigma _k I\) for some scalar \(\sigma _k > 0\). Since in general it is not possible to find \(\sigma _k\) so that (6) holds exactly with \(B_k = \sigma _k I\), a best approximation is used instead. We seek \(\sigma _k\) so that the residual of the secant equation \(\Vert y_k - (1/\sigma _k) s_k\Vert ^2\) or \(\Vert \sigma _k y_k - s_k\Vert ^2\) is minimized. The first minimization problem gives us

and the second minimization gives the same expression as (7). We will call the resulting iteration

Steffensen method since it most resembles the univariate Steffensen method in (2). Note that the Barzilai–Borwein step size derived in [37] is

and differs significantly from (8). In particular, \(x_{k+1} = x_k - \beta _k \nabla f(x_k)\) is a multistep method whereas \(x_{k+1} = x_k - \sigma _k \nabla f(x_k)\) remains a single step method.

Both (7) and (8) reduce to (2) when f is univariate. Motivated by the univariate discussion before Theorem 2, we combine features from (8) and (9) to obtain a Steffensen–Barzilai–Borwein method in analogy with the univariate case (4):

Note that (10) reduces to (4) when f is univariate. The stochastic version of (10) will be our method of choice, supported by extensive empirical evidence some of which we will present in Sect. 5.

In summary, we have four plausible learning rates:

Here \(\beta _k\) is the Barzilai–Borwein step size in (9). For a univariate function, the iterations with \(\eta ^{{\scriptscriptstyle \textsf{qS}}}_k\) and \(\eta ^{{\scriptscriptstyle \textsf{S}}}_k\) reduce to (2) whereas those with \(\eta ^{{\scriptscriptstyle \textsf{qSBB}}}_k\) and \(\eta ^{{\scriptscriptstyle \textsf{SBB}}}_k\) reduce to (4). The computational costs of all four learning rates are the same: two gradient evaluations and two inner products.

Note that our muiltivariate Steffensen and quasi-Steffensen methods are one-step methods — \(\eta ^{{\scriptscriptstyle \textsf{S}}}_k\) and \(\eta ^{{\scriptscriptstyle \textsf{qS}}}_k\) depend only on \(x_k\) — just like the univariate Steffensen method. Steffensen–Barzilai–Borwein and quasi-Steffensen–Barzilai–Borwein are inevitably two-step methods because they involve the Barzilai–Borwein step size \(\beta _k\), which has a two-step formula.

The main difference between our multivariate Steffensen methods and those in the literature [3,4,5,6,7,8,9,10,11,12,13,14,15] is that ours are encapsulated as learning rates and avoid expensive multivariate divided differences. Recall that for \(g = (g_1,\dots ,g_n): \mathbb {R}^n \rightarrow \mathbb {R}^n\), its divided difference [42] at \(x,y\in \mathbb {R}^n\) is the matrix \(\llbracket x,y \rrbracket \in \mathbb {R}^{n \times n}\) whose (i, j)th entry is

for \(i,j =1,\dots ,n\).

In a stochastic setting, the learning rates \(\eta ^{{\scriptscriptstyle \textsf{S}}}_k,\eta ^{{\scriptscriptstyle \textsf{qS}}}_k,\eta ^{{\scriptscriptstyle \textsf{SBB}}}_k,\eta ^{{\scriptscriptstyle \textsf{qSBB}}}_k\) share the same upper and lower bounds in Lemma 7 and as a result, the linear convergence conclusion in Theorem 10 applies alike to all four of them. Our experiments also indicate that \(\eta ^{{\scriptscriptstyle \textsf{qS}}}_k\) and \(\eta ^{{\scriptscriptstyle \textsf{S}}}_k \) have similar performance and likewise for \(\eta ^{{\scriptscriptstyle \textsf{qSBB}}}_k\) and \(\eta ^{{\scriptscriptstyle \textsf{SBB}}}_k \), although there is a slight difference between \(\eta ^{{\scriptscriptstyle \textsf{S}}}_k \) and \(\eta ^{{\scriptscriptstyle \textsf{SBB}}}_k\). One conceivable advantage of the ‘quasi’ variants is that for a given \(\nabla f(x_k)\), the denominator vanishes only at a single point, e.g., when \(\nabla f(x_k +\nabla f(x_k)) = \nabla f(x_k)\), as opposed to a whole hyperplane, e.g., whenever \(\nabla f(x_k + \nabla f(x_k)) - \nabla f(x_k) \perp \nabla f(x_k)\). Nevertheless, in all our experiments on their stochastic variants, this has never been an issue.

We prefer the slightly simpler expressions of the Steffensen and Steffensen–Barzilai–Borwein methods and will focus our subsequent discussions on them. Their ‘quasi’ variants may be taken as nearly equivalent alternatives for users who may have some other reasons to favor them.

2.3 Stochastic multivariate setting

Encapsulating Steffensen method in the form of learning rates offers an additional advantage — it is straightforward to incorporate them into many stochastic optimization algorithms, which we will do next.

Standard gradient descent applied to (1) requires the evaluation of n gradients. The stochastic gradient descent (SGD), instead of using the full gradient \(\nabla f(x_k)\), relies on an unbiased estimator \(g_k\) with \(\mathbb {E}[g_k] = \nabla f(x_k)\) [24]. One common randomization is to draw \(i_k \in \{1, \dots , n\}\) randomly and set \(g_k = \nabla f_{i_{k}}(x_k)\), giving the update:

Note that \(\mathbb {E}[\nabla f_{i_k}(x_k) \mid x_k] = \nabla f(x_k)\) and its obvious advantage is that each step relies only on a single gradient \(\nabla f_{i_k}\), resulting in a computational cost that is 1/n that of the standard gradient descent. While we could adopt this procedure to randomize our Steffensen and Steffensen–Barzilai–Borwein iterations, we will use a slightly more sophisticated variant with variance reduction and minibatching.

The price of randomization is paid in the form of variance, as the stochastic gradient \(\nabla f_{i_k}(x_k)\) equals the gradient \(\nabla f(x_k)\) only in expectation but each \(\nabla f_{i_k}(x_k)\) is different. Of the many variance reduction strategies, one of the best known and simplest is the stochastic variance reduced gradient method (SVRG) [27], based on the tried-and-tested notion of control variates in Monte Carlo methods. We will emulate SVRG to randomize (7) and (10).

The basic idea of SVRG is to compute the full gradient once every m iterations for some fixed m and use it to generate stochastic gradients with lower variance in the next m iterations:

Here \(\widetilde{x}\) denotes the point where full gradient is computed. Notice that when \(k\rightarrow \infty \), \(x_k\) and \(\widetilde{x}\) are very close to the optimal point \(x^*\). As \(x_k\) and \(\widetilde{x}\) are highly correlated, the variability of the stochastic gradient is reduced as a result [27].

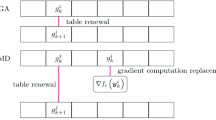

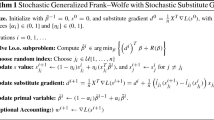

We may similarly randomize multivariate Steffensen method. Our stochastic Steffensen method (SSM) in Algorithm 1 operates in two nested loops. In the kth iteration of the outer loop, we compute two full gradients \(\nabla f(x_k)\) and \(\nabla f(x_k + \nabla f(x_k))\). Note that \(x_k\) plays the role of \(\widetilde{x}\) in the above paragraph. These two terms are used for computing the Steffensen learning rate:

In the \((t+1)\)th iteration of the inner loop, we use \(\nabla f(x_k)\) to generate the stochastic gradient with lower variance

with \(i_t \in \{1,\dots ,n\}\) sampled uniformly. The updating rule takes the form

where the search direction is known as the variance-reduced stochastic gradient. Note that the learning rate \(\eta _k\) given by (11) has an extra \(1/\sqrt{m}\) factor to guarantee the linear convergence. The \(1/\sqrt{m}\) factor may be replaced by \(1/m^p\) for any \(p \in (0,1)\), although not \(p =1\), while preserving linear convergence. The optimal complexity, as measured by the number of stochasic gradient evaluations, is achieved when \(p = 1/2\), with details to follow in Sect. 3.

Aside from variance reduction, we include another common enhancement called minibatching. Minibatched SGD is a trade-off between SGD and gradient descent (GD) where the cost function (and therefore its gradient) is averaged over a small number of samples. SGD has a batch size of one whereas GD has a batch size that includes all training samples. In each iteration, we sample a minibatch \(S_{k}\subseteq \{1,\dots ,n\}\) with \(|S_{k}|=b\) a small number and update

Minibatched SGD smooths out some of the noise in SGD but maintains the ability to escape local minima. The minibatch size b is kept small, thus preserving the cost-saving benefits of SGD. As in the discussion after (11), the coefficient \(1/\sqrt{m}\) may be replaced by \(1/m^p\) for any \(p\in (0,1)\) while preserving linear convergence, but \(p = 1/2\) gives the minimum number of stochastic gradient evaluations, as we will see in Sect. 3.

Upon incorporating (i) a Barzilai–Borwein step size, (ii) variance reduction, and (iii) minibatching, we arrive at the stochastic Steffensen–Barzilai–Borwein method (SSBB) in Algorithm 2. This is our method of choice in this article.

Although we did not include minibatching in Algorithm 1’s pseudocode to avoid clutter, we will henceforth assume that it is also minibatched. The randomization, variance reduction, and minibatching all apply verbatim when the learning rates in Algorithms 1 and 2 are replaced respectively by the quasi-Steffensen and quasi-Steffensen–Barzilai–Borwein learning rates on p. 10. Nevertheless, as we have mentioned, our numerical experiments do not show that the resulting algorithms differ in performance from that of Algorithms 1 and 2.

2.4 Randomized Kaczmarz method as a special case

Given \(A\in \mathbb {R}^{m \times n}\) of full row rank with row vectors \(a_1,\dots ,a_m \in \mathbb {R}^n\) and \(b\in \mathbb {R}^m\) in the image of A, the Kaczmarz method [21, 22] solves the consistent linear system \(Ax=b\) via

with \(i = k \mod m\), \(i =1,\dots , m\). The iterative method has remained relatively obscure, almost unheard of in numerical linear algebra, until it was randomized in [23], which essentially does

where \(i_k \in \{1,\dots ,m\}\) is now sampled with probability \(\Vert a_{i_k}\Vert ^2/\Vert A \Vert ^2\).

We will see that randomized Kaczmarz method is equivalent to applying stochastic Steffensen method, with or without Barzilai–Borwein step size, to minimize the quadratic function \(f: \mathbb {R}^n \rightarrow \mathbb {R}\),

While it is sometimes claimed that SGD has this property, this is not quite true. Suppose \(i_k \in \{1,\dots ,m\}\) is the random row index sampled at the kth step, the update rule in SGD gives

and the update rule in SLBFGS is even further from this. So one needs to impose further assumptions [43] on the learning rate to get randomized Kaczmarz method, which requires that \(\eta _k = 1/\Vert a_{i_k}^2\Vert \). If we use the Steffensen method, we get from (10) that

and using Steffensen–Barzilai–Borwein method makes no difference:

as \(\beta _k = \Vert x_k - x_{k-1}\Vert ^2/(x_k - x_{k-1})^{\scriptscriptstyle \textsf{T}}[\nabla f_{i_k}(x_k) - \nabla f_{i_k}(x_{k-1})] = 1/\Vert a_{i_k}\Vert ^2\).

3 Convergence analysis

In this section, we establish the linear convergence of our stochastic Steffensen methods Algorithm 1 (SSM) and Algorithm 2 (SSBB) for solving (1) under standard assumptions. We would like to stress that these convergence results are intended to provide a minimal theoretical guarantee and do not really do justice to the actual performance of SSBB. The experiments in Sect. 5 indicate that the convergence of SSBB is often superior to other existing methods like SGD and SVRG, with or without Barzilai–Borwein step size, or even SLBFGS. However, we are unable to prove this theoretically, only that it is linearly convergent like the other methods.

For easy reference, we reproduce the minibatched SVRG algorithm in [44, Algorithm 1] as Algorithm 3.

We need to establish the linear convergence of Algorithm 3 for our own convergence results in Sects. 3.1 and 3.2 but we are unable to find such a result in the literature. In particular, the convergence results in [44, Propositions 2–4] and [45, Theorem 1] are for more sophisticated variants of Algorithm 3. So we will provide a version following the same line of arguments in [45, Theorem 1] but tailored to our own requirements.

Our linear convergence proofs for SSM and SSBB are a combination of the proofs in [45, 46] adapted for our purpose. In particular, we quote [46, Lemma A] and prove a simplied version of [45, Lemma 3] for easy reference.

Lemma 3

[Nitanda] Let \(\xi _1,\dots ,\xi _n \in \mathbb {R}^{d}\) and \(\bar{\xi } {:}{=}\frac{1}{n} \sum _{i=1}^n\xi _i\). Let S be a b-element subset chosen uniform randomly from all b-element subsets of \(\{1,2, \ldots , n\}\). Then

Here \(\mathbb {E}_{\mathcal {S}}\) denotes expectation of the random subset \(S \subseteq \{1,\dots ,n\}\) and \(\mathbb {E}_i\) that of the uniform random variable \(i \in \{1,\dots ,n\}\). More specifically, if \(S = \{i_1, \dots , i_b\}\), then

For the rest of this section, we will need to assume, as is customary in such proofs of linear convergence, that our objective f is \(\mu \)-strongly convex, the gradient of each additive component \(f_i\) is L-Lipschitz continuous (and therefore so is \(\nabla f\)), and that all iterates are well-defined (the denominators appearing in our learning rates \(\eta _k \) are nonzero).

Assumption 1

Assume that the function f in (1) satisfies

for any \(v, w \in \mathbb {R}^{d}\), \(i =1,\dots ,n\).

Applying Lemma 3 with \(\xi _i = v_i^{k,t}\) and [45, Corollary 3], we may bound the variance of a minibatched variance-reduced gradient as follows.

Lemma 4

Let f be as in Assumption 1 with \(x^*{:}{=}\mathop {\textrm{argmin}}\limits _{x} f(x)\). Let

Then

The next lemma, a simplified version of [45, Lemma 3], gives a lower bound of the optimal value \(f(x^*)\) useful in our proof of linear convergence.

Lemma 5

Let \(\Delta _{k,t} {:}{=}v_{k,t} - \nabla f(x_{k,t})\) and \(\eta _k\) be a learning rate with \(0<\eta _k \le 1 / L\). Then with the same assumptions and notations in Lemma 4, we have

Proof

By the strong convexity of f, we have

By the smoothness of f, we have

Summing the two inequalities, we get

The second term on the right simplifies as

If the learning rate satisfies \(0 < \eta _k \le 1/L\), then

as required. \(\square \)

Theorem 6

(Linear convergence of Algorithm 3) Let f be as in Assumption 1 with \(x^*{:}{=}\mathop {\textrm{argmin}}\limits _{x} f(x)\). For the \((k+1)\)th iteration of outer loop in Algorithm 3,

If m, \(\eta _k\), and b are chosen so that

then Algorithm 3 converges linearly in expectation with

Proof

For the iteration in the inner loop, we apply Lemma 5 to get

Lemma 5 requires that the learning rate \(\eta _k\le 1/L\). Let \(\bar{x}_{k,t+1} {:}{=}x_{k,t}-\eta _k\nabla f(x_{k,t})\). Then the last term in (12) may be written as

Plugging this into (12) and taking expectations on both sides conditioned on \(x_{k,t}\) and \(x_k\) respectively, we get

where the last equality follows from \(\mathbb {E}[\Delta _{k,t}] = 0\). Set \(\gamma {:}{=}8L\eta _k^2/b\). By Lemma 4, we have

For \(t=0,\dots ,m-1\), we have

Summing this inequality over all \(t=0,\dots ,m-1\), the left hand side becomes

and the right hand side becomes

By the definition of \(x_{k+1}\) in Algorithm 3,

and so, bearing in mind that \(\text {LHS} \le \text {RHS}\),

where the last step follows by replacing \(\sum _{t=0}^{m-1}\) with \(\sum _{t=0}^{m}\), which preserves inequality. Thus

Rearranging terms and applying strong convexity of f, we have

Here we require that \(2\eta _k > 8L\eta _k^2 / b\) and thus \(\eta _k < b / (4\,L)\), leading to

with

Choose \(m, \eta _k\) so that \(\rho _k \le \rho < 1\) and apply the last inequality recursively, we get

as required. \(\square \)

3.1 Linear convergence of stochastic Steffensen method

With Theorem 6, we may deduce the linear convergence of Algorithm 1 as a special case of Algorithm 3 with \(b = 1\) (no minibatching) and \(\eta _k = \eta ^{{\scriptscriptstyle \textsf{SS}}}_k\) (SSM learning rate).

Lemma 7

Let f be as in Assumption 1. Then the stochastic Steffensen learning rate

satisfies

Proof

Since \(\nabla f\) is L-Lipschitz, a lower bound is given by

The required upper bound follows the \(\mu \)-strong convexity of f. \(\square \)

Corollary 8

(Linear convergence of SSM) Let f be as in Assumption 1 with \(x^*{:}{=}\mathop {\textrm{argmin}}\limits _{x} f(x)\). If m is chosen so that

where \(\kappa = L / \mu \) is the condition number, then Algorithm 1 converges linearly in expectation with

Proof

By Theorem 6, we have

as long as \(\eta ^{{\scriptscriptstyle \textsf{SS}}}_k < 1/(4L)\). Lemma 7 shows that this holds for \(m > 16\kappa ^2\), which follows from \(\rho <1\). The upper and lower bounds in Lemma 7 also give

Hence if m is chosen so that \(\rho < 1\), we have

as required. \(\square \)

3.2 Linear convergence of stochastic Steffensen–Barzilai–Borwein

The linear convergence of Algorithm 2 likewise follows from Theorem 6 with \(\eta _k = \eta ^{{\scriptscriptstyle \textsf{SSBB}}}_k\).

Lemma 9

Let f be as in Assumption 1. Then the stochastic Steffensen–Barzilai–Borwein learning rate

satisfies

Proof

Similar to that of Lemma 7. \(\square \)

Corollary 10

(Linear convergence of SSBB) Let f be as in Assumption 1 with \(x^*{:}{=}\mathop {\textrm{argmin}}\limits _{x} f(x)\). If m and b are chosen so that

where \(\kappa = L / \mu \) is the condition number, then Algorithm 2 converges linearly in expectation with

Proof

Because SSBB is a special case of Algorithm 3, then we can easily get

when \(\eta ^{{\scriptscriptstyle \textsf{SSBB}}}_k < b/(4\,L)\) and \(\eta ^{{\scriptscriptstyle \textsf{SSBB}}}_k < 1/L\). From Lemma 9, this is valid for \(m > \max (\kappa ^2, 16\kappa ^2/b^2)\), which holds because \(\rho < 1\). Also, from Lemma 9, we have

Hence if m and b are chosen so that \(\rho < 1\), we have

as required. \(\square \)

3.3 Optimal number of stochastic gradient evaluations

Observe that in the proof of Corollary 8 and 10, we may replace \(1/\sqrt{m}\) by \(1/m^p\) for any \(p \in (0,1)\) without affecting the linear convergence conclusion. More precisely, to reach \(\varepsilon \)-accuracy, the proofs of Corollaries 8 and 10 show that when we set \(b = O(1)\) and \(m = O\bigl (\max (\kappa ^{1/p}, \kappa ^{1/(1-p)})\bigr )\), then both SSM and SSBB require evaluation of

stochastic gradients. Clearly, this is minimized when \(p = 1/2\). It follows that for \(p = 1/2\), \(b = O(1)\), and \(m = O(\kappa ^2)\), both SSM and SSBB require evaluation of

stochastic gradients to reduce to \(\varepsilon \)-accuracy.

3.4 Comparison with other methods

For a theoretical comparison with other methods on equal footing, we will have to limit ourselves to the ones that do not leave the step size \(\eta _k\) unspecified. This automatically excludes SGD and SVRG, which treat \(\eta _k\) as a hyperparameter to be tuned separately. A standard choice is to choose \(\eta _k\) to be the Barzilai–Borwein step size, resulting in the SVRG–BB method [47], which requires evaluation of

stochastic gradients to achieve \(\varepsilon \)-accuracy when \(\kappa \) is sufficiently large. On the other hand, the SLBFGS method [28] requires evaluation of

stochastic gradients where h is ‘history size’, the number of previous updates kept in LBFGS. Evidently both SVRG–BB and SLBFGS are at least an order of magnitude slower than SSM and SSBB as measured by the condition number \(\kappa \). It is worth noting that for a d-variate objective, the number of stochastic gradients required by SLBFGS depends on d. Our methods, like SVRG–BB, are free of such dependence. Note that the lower bound of \(O \bigl ( n + \sqrt{n\kappa } \log (1/\epsilon ) \bigr )\) in [18] and upper bound of \(O \bigl ( (n + \sqrt{n\kappa }) \log (1/\epsilon ) \bigr )\) in [17] assume a constant multiple of the stochastic gradient throughout. We restrict our comparison to adaptive methods where the stochastic gradient is modified by a nonconstant (scalar or matrix) multiple of the stochastic gradient that changes from step to step.

4 Proximal variant

As shown in [45], SGD and SVRG may be easily extended to cover nondifferentiable objective functions of the form

where f satisfies Assumption 1 and R is a nondifferentiable function such as \(R(x) = \Vert x\Vert _1\). In this section we will see that SSBB may likewise be extended, and the linear convergence is preserved.

To solve (13), the proximal gradient method does

with a proximal map defined by

As in [45], we replace the update rule \(x_{k,t+1} = x_{k,t} - \eta ^{{\scriptscriptstyle \textsf{SSBB}}}_kv_{k,t}\) in Algorithm 2 by

We will see that the resulting algorithm, which we will call prox-SSBB, remains linearly convergent as long as the following assumption holds for some \(\mu > 0\).

Assumption 2

The function R is \(\mu \)-strongly convex in the sense that

for all \(x\in {{\,\textrm{dom}\,}}(R)\), \(g(x)\in \partial R(x)\), \(y\in \mathbb {R}^d\), and \(R(y) {:}{=}+\infty \) whenever \(y \notin {{\,\textrm{dom}\,}}(R)\). Here \(\partial R(x)\) denotes subgradient at x.

It is a standard fact [48, p. 340] that if R is a closed convex function on \(\mathbb {R}^d\), then

for all \(x,y\in \text {dom}(R)\). We will write \(\mu _f\) for the strong convexity parameter of f in Assumption 1 and \(\mu _R\) for that of R in Assumption 2. This implies that the overall objective function F is strongly convex with \(\mu \ge \mu _f + \mu _R\).

To establish linear convergence for prox-SSBB, we need an analogue of Lemma 5, which is provided by [45, Lemma 3], reproduced here for easy reference.

Lemma 11

(Xiao–Zhang) Let f be as in Assumption 1, R as in Assumptions 1, and \(F = f + R\) with \(x^*{:}{=}\mathop {\textrm{argmin}}\limits _{x} F(x)\). Let \(\Delta _{k,t} {:}{=}v_{k,t} - \nabla f(x_{k,t})\) and

If \(0< \eta _k < 1/L\), then

Corollary 12

(Linear convergence of prox-SSBB) Let F and \(x^*\) be as in Lemma 11 and \(\eta _k =\eta ^{{\scriptscriptstyle \textsf{SSBB}}}_k\). Then Corollary 10 holds verbatim with F in place of f.

Proof

To apply Lemma 11, we need \(\eta _k\le 1/L\) and this holds as we have \(m \ge (L/\mu )^2 = \kappa ^2\) among the assumptions of Lemma 9. In the notations of Lemma 11, the update (14) is equivalent to \(x_{k,t+1} = x_{k,t} - \eta _kg_{k,t}\). So

By Lemma 11, we have

Therefore,

We bound the middle term on the right. Let \(\bar{x}_{k,t+1} {:}{=}{{\,\textrm{prox}\,}}_{\eta _kR}(x_{k,t} - \eta _k\nabla f(x_{k,t}))\). Then

where the first inequality is Cauchy–Schwarz and the second follows from Lemma 15. The remaining steps are as in the proofs of Theorem 6 and Corollary 10 with F in place of f. \(\square \)

5 Numerical experiments

As mentioned earlier, for smooth objectives, our method of choice is Algorithm 2, the stochastic Steffensen–Barzilai–Borwein method (SSBB) with minibatching. We will compare it with several benchmarking algorithms: stochastic gradient descent (SGD), stochastic variance reduced gradient (SVRG) [27], stochastic LBFGS [28], and the first two with Barzilai–Borwein step size (SGD–BB and SVRG–BB) [47]. For nonsmooth objectives, we compare Algorithm 4, the proximal stochastic Steffensen–Barzilai–Borwein method (prox-SSBB), with proximal variants of the previously mentioned algorithms: prox-SGD, prox-SVRG [45], prox-SLBFGS, and prox-SVRG–BB.

We test these algorithms on popular empirical risk minimization problems — ridge regression, logistic regression, support vector machines with squared hinge loss, \(l^1\)-regularized logistic regression — on standard datasets in LIBSVM.Footnote 2 The parameters involved are summarized in Table 1. Our experiments show that SSBB and prox-SSBB compare favorably with these benchmark algorithms. All our codes are available at https://github.com/Minda-Zhao/stochastic-steffensen.

For a fair comparison, all algorithms are minibatched. We set a batch size of \(b = 4\) for ridge regression, \(b = 16\) for logistic loss and squared hinge loss, \(b=32\) for \(l^1\)-regularized logistic loss. The inner loop size is set at \(m = 2n\) or 4n. The learning rates in SGD, SVRG, and SLBFGS are hyperparameters that require separate tuning; we pick the best possible values with a grid search. SLBFGS requires more hyperparameters: As suggested by the authors of [28], we set the Hessian update interval to be \(L = 10\), Hessian batch size to be \(b_H= L b\), and history size to be \(h = 10\). All experiments are initialized with \(x_0 =0\). We repeat every experiment ten times and report average results.

In all figures, we present the convergence trajectory of each method. The vertical axis represents in log scale the value \(f(x_k) - f(x^*)\) where we estimate \(f(x^*)\) by running full gradient descent or Newton method multiple times. The horizontal axis represents computational cost as measured by either number of gradient computations divided by n or the actual running time — we present both. In all experiments, we note that the convergence trajectories of SSBB and prox-SSBB agree with the linear convergence established in Sects. 3 and 4.

5.1 Ridge regression

Figure 1 shows a simple ridge regression on a synthetic dataset generated in a controlled way to give us the true global solution. We generate \(x^* \in \mathbb {R}^d\) with \(x^*_i\sim \mathcal {N}(0,1)\) and \(A\in \mathbb {R}^{n\times d}\) with row vectors \(a_1,\dots ,a_n \in \mathbb {R}^d\) and entries \(a_{ij} \sim \mathcal {N}(0,1)\). We form \(y = Ax^* + b\) with b an n-dimensional standard normal variate. We then attempt to recover \(x^*\) from A and y by optimizing, with \(\lambda _2 = 10^{-5}\),

5.2 Logistic regression

Figure 2 shows the results of a binary classification problem on the w6a dataset from LIBSVM using an \(l^2\)-regularized binary logistic regression. The associated optimization problem with regularization \(\lambda _2 = 10^{-4}\) and labels \(y_i\in \{-1, +1\}\) is

5.3 Squared hinge loss

Figure 3 shows the results of a support vector machine classifier with \(l^2\)-regularized squared hinge loss and \(\lambda _2=10^{-3}\) on the a6a dataset from LIBSVM. The optimization problem in this case is

The results are clear: SSBB solves the problems to high levels of accuracy and is the fastest, whether measured by running time or by number of passes through data, in all experiments. When measured by running times, SLBFGS performs relatively poorly because of the additional computational cost of its matrix–vector products that other methods avoid.

5.4 Proximal variant

Figure 4 shows the results of a binary classification problem on the w6a dataset from LIBSVM using a binary logistic regression with both \(l^2\)- and \(l^1\)-regularizations, a problem considered in [45]:

We set regularization parameters to be \(\lambda _2 = 10^{-4}\), \(\lambda _1 = 10^{-4}\).

The results obtained are consistent with those in Sects. 5.1, 5.2 and 5.3, demonstrating that prox-SSBB solves the problem to high levels of accuracy and is the fastest among all algorithms compared, whether measured by running time or by the number of passes through data.

6 Conclusion

The stochastic Steffensen methods introduced in this article are (i) simple to implement, (ii) efficient to compute, (iii) easy to incorporate, (iv) tailored for massive data and high dimensions, have (v) minimal memory requirements and (vi) a negligible number of hyperparameters to tune. The last point is in contrast to more sophisticated methods involving moments [31, 32] or momentum [33,34,35,36], which require heavy tuning of many more hyperparameters. SSM and SSBB require just two — minibatch size b and inner loop size m.

The point (iii) also deserves special mention. Since SSM and SSBB are ultimately encapsulated in the respective learning rates \(\eta _k^{{\scriptscriptstyle \textsf{SS}}}\) and \(\eta _k^{{\scriptscriptstyle \textsf{SSBB}}}\), they are versatile enough to be incorporated into other methods such as those in [31,32,33,34,35,36], assuming that we are willing to pay the price in hyperparameters tuning. We hope to explore this in future work.

Data availability

We do not analyze or generate any datasets. The numerical experiments in Sect. 5 rely on standard datasets in LIBSVM that is publicly available from https://github.com/cjlin1/libsvm. The authors declare no conflict of interest.

Notes

References

Steffensen, J.F.: Remarks on iteration. Skand. Aktuarietidskr. 1, 64–72 (1933)

Steffensen, J.F.: Further remarks on iteration. Skand. Aktuarietidskr. 28, 44–55 (1945)

Amat, S., Ezquerro, J.A., Hernández-Verón, M.A.: On a Steffensen-like method for solving nonlinear equations. Calcolo 53(2), 171–188 (2016)

Ezquerro, J.A., Hernández-Verón, M.A., Rubio, M.J., Velasco, A.I.: An hybrid method that improves the accessibility of Steffensen’s method. Numer. Algorithms 66(2), 241–267 (2014)

Henrici, P.: Elements of Numerical Analysis. John Wiley, New York (1964)

Huang, H.Y.: Unified approach to quadratically convergent algorithms for function minimization. J. Optim. Theory Appl. 5, 405–423 (1970)

Johnson, L.W., Scholz, D.R.: On Steffensen’s method. SIAM J. Numer. Anal. 5, 296–302 (1968)

Nedzhibov, G.H.: An approach to accelerate iterative methods for solving nonlinear operator equations. In: Applications of Mathematics in Engineering and Economics (AMEE’11). AIP Conf. Proc., vol. 1410, pp. 76–82. Amer. Inst. Phys., Melville (2011)

Nievergelt, Y.: Aitken’s and Steffensen’s accelerations in several variables. Numer. Math. 59(3), 295–310 (1991)

Nievergelt, Y.: The condition of Steffensen’s acceleration in several variables. J. Comput. Appl. Math. 58(3), 291–305 (1995)

Noda, T.: The Aitken-Steffensen method in the solution of simultaneous nonlinear equations. Sūgaku 33(4), 369–372 (1981)

Noda, T.: The Aitken-Steffensen method in the solution of simultaneous nonlinear equations. II. Sūgaku 38(1), 83–85 (1986)

Noda, T.: The Aitken-Steffensen method in the solution of simultaneous nonlinear equations. III. Proc. Jpn. Acad. Ser. A Math. Sci. 62(5), 174–177 (1986)

Noda, T.: The Aitken-Steffensen formula for systems of nonlinear equations. IV. Proc. Jpn. Acad. Ser. A Math. Sci. 66(8), 260–263 (1990)

Noda, T.: The Aitken-Steffensen formula for systems of nonlinear equations. V. Proc. Jpn. Acad. Ser. A Math. Sci. 68(2), 37–40 (1992)

Gill, P.E., Murray, W., Wright, M.H.: Numerical linear algebra and optimization. In: Classics in Applied Mathematics, vol. 83. Society for Industrial and Applied Mathematics, Philadelphia (2021)

Allen-Zhu, Z.: Katyusha: The first direct acceleration of stochastic gradient methods. In: Hatami, H., McKenzie, P., King, V. (eds.) STOC’17: Proceedings of the 49th Annual ACM SIGACT Symposium on Theory of Computing. Annual ACM Symposium on Theory of Computing, pp. 1200–1205 (2017)

Woodworth, B., Srebro, N.: Tight complexity bounds for optimizing composite objectives. In: Lee, D., Sugiyama, M., Luxburg, U., Guyon, I., Garnett, R. (eds.) Advances in Neural Information Processing Systems (NIPS 2016). Advances in Neural Information Processing Systems, vol. 29 (2016)

Brezinski, C., Redivo-Zaglia, M.: Extrapolation and Rational Approximation–the Works of the Main Contributors. Springer, Cham (2020)

Householder, A.S.: The Numerical Treatment of a Single Nonlinear Equation. International Series in Pure and Applied Mathematics, McGraw-Hill, New York (1970)

Kaczmarz, S.: Angenäherte auflösung von systemen linearer gleichungen. Bull. Int. Acad. Polon. Sci. A 57(6), 355–357 (1937)

Kaczmarz, S.: Approximate solution of systems of linear equations. Int. J. Control 57(6), 1269–1271 (1993)

Strohmer, T., Vershynin, R.: A randomized Kaczmarz algorithm with exponential convergence. J. Fourier Anal. Appl. 15(2), 262–278 (2009)

Robbins, H., Monro, S.: A stochastic approximation method. Ann. Math. Stat. 22, 400–407 (1951)

Roux, N.L., Schmidt, M., Bach, F.R.: A stochastic gradient method with an exponential convergence rate for finite training sets. In: Advances in Neural Information Processing Systems 25, pp. 2672–2680 (2012)

Defazio, A., Bach, F.R., Lacoste-Julien, S.: SAGA: a fast incremental gradient method with support for non-strongly convex composite objectives. In: Advances in Neural Information Processing Systems 27, pp. 1646–1654 (2014)

Johnson, R., Zhang, T.: Accelerating stochastic gradient descent using predictive variance reduction. In: Advances in Neural Information Processing Systems 26, pp. 315–323 (2013)

Moritz, P., Nishihara, R., Jordan, M.I.: A linearly-convergent stochastic L-BFGS algorithm. In: Proceedings of the 19th International Conference on Artificial Intelligence and Statistics, AISTATS. JMLR Workshop and Conference Proceedings, vol. 51, pp. 249–258 (2016)

Byrd, R.H., Hansen, S.L., Nocedal, J., Singer, Y.: A stochastic quasi-Newton method for large-scale optimization. SIAM J. Optim. 26(2), 1008–1031 (2016)

Zhao, R., Haskell, W.B., Tan, V.Y.: Stochastic L-BFGS: improved convergence rates and practical acceleration strategies. IEEE Trans. Signal Process. 66(5), 1155–1169 (2018)

Duchi, J.C., Hazan, E., Singer, Y.: Adaptive subgradient methods for online learning and stochastic optimization. J. Mach. Learn. Res. 12, 2121–2159 (2011)

Kingma, D.P., Ba, J.: Adam: a method for stochastic optimization. In: 3rd International Conference on Learning Representations, ICLR (2015)

Nesterov, Y.E.: A method for solving the convex programming problem with convergence rate \(O(1/k^{2})\). Dokl. Akad. Nauk SSSR 269(3), 543–547 (1983)

Poljak, B.T.: Some methods of speeding up the convergence of iterative methods. Ž. Vyčisl. Mat i Mat. Fiz. 4, 791–803 (1964)

Qian, N.: On the momentum term in gradient descent learning algorithms. Neural Netw. 12(1), 145–151 (1999)

Reddi, S.J., Kale, S., Kumar, S.: On the convergence of Adam and beyond. arXiv:1904.09237 (2019)

Barzilai, J., Borwein, J.M.: Two-point step size gradient methods. IMA J. Numer. Anal. 8(1), 141–148 (1988)

Broyden, C.G.: The convergence of a class of double-rank minimization algorithms. II. The new algorithm. J. Inst. Math. Appl. 6, 222–231 (1970)

Fletcher, R.: A new approach to variable metric algorithms. Comput. J. 13(3), 317–322 (1970)

Goldfarb, D.: A family of variable-metric methods derived by variational means. Math. Comput. 24, 23–26 (1970)

Shanno, D.F.: Conditioning of quasi-Newton methods for function minimization. Math. Comput. 24, 647–656 (1970)

Potra, F.A.: On an iterative algorithm of order \(1.839\cdots \) for solving nonlinear operator equations. Numer. Funct. Anal. Optim. 7(1), 75–106 (1984/85)

Needell, D., Srebro, N., Ward, R.: Stochastic gradient descent, weighted sampling, and the randomized Kaczmarz algorithm. Math. Program. 155(1–2, Ser. A), 549–573 (2016)

Babanezhad, R., Ahmed, M.O., Virani, A., Schmidt, M., Konečný, J., Sallinen, S.: Stopwasting my gradients: practical SVRG. In: Advances in Neural Information Processing Systems 28, pp. 2251–2259 (2015)

Xiao, L., Zhang, T.: A proximal stochastic gradient method with progressive variance reduction. SIAM J. Optim. 24(4), 2057–2075 (2014)

Nitanda, A.: Accelerated stochastic gradient descent for minimizing finite sums. In: Proceedings of the 19th International Conference on Artificial Intelligence and Statistics, AISTATS. JMLR Workshop and Conference Proceedings, vol. 51, pp. 195–203 (2016)

Tan, C., Ma, S., Dai, Y., Qian, Y.: Barzilai-borwein step size for stochastic gradient descent. In: Advances in Neural Information Processing Systems 29, pp. 685–693 (2016)

Rockafellar, R.T.: Convex Analysis. Princeton Landmarks in Mathematics, Princeton University Press, Princeton (1997)

Acknowledgements

This work is partially supported by DARPA HR00112190040, NSF DMS-1854831, NSF ECCS-2216912, ONR N000142312863, and the Eckhardt Faculty Fund. We thank Nati Srebro for his exceptionally pertinent pointers and the two anonymous referees for their helpful comments. LHL thanks Junjie Yue for helpful discussions.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Zhao, M., Lai, Z. & Lim, LH. Stochastic Steffensen method. Comput Optim Appl 89, 1–32 (2024). https://doi.org/10.1007/s10589-024-00583-7

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10589-024-00583-7