Abstract

We propose a modified BFGS algorithm for multiobjective optimization problems with global convergence, even in the absence of convexity assumptions on the objective functions. Furthermore, we establish a local superlinear rate of convergence of the method under usual conditions. Our approach employs Wolfe step sizes and ensures that the Hessian approximations are updated and corrected at each iteration to address the lack of convexity assumption. Numerical results shows that the introduced modifications preserve the practical efficiency of the BFGS method.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Multiobjective optimization problems involve the simultaneous minimization of multiple objectives that may be conflicting. The goal is to find a set of solutions that offer different trade-offs between these objectives, helping decision makers in identifying the most satisfactory solution. Pareto optimality is a fundamental concept used to characterize such solutions. A solution is said to be Pareto optimal if none of the objectives can be improved without deterioration to at least one of the other objectives.

Over the last 2 decades, significant research has focused on extending iterative methods originally developed for single-criterion optimization to the domain of multiobjective optimization, providing an alternative to scalarization methods [19, 41]. This line of research was initiated by Fliege and Svaiter in 2000 with the extension of the steepest descent method [23] (see also [32]). Since then, several methods have been studied, including Newton [12, 22, 28, 31, 51], quasi-Newton [1, 33, 34, 39, 42, 44, 46,47,48], conjugate gradient [27, 29, 37], conditional gradient [2, 10], projected gradient [3, 20, 24, 25, 30], and proximal methods [5, 8, 9, 11, 13].

Proposed independently by Broyden [6], Fletcher [21], Goldfarb [26], and Shanno [49] in 1970, the BFGS is the most widely used quasi-Newton method for solving unconstrained scalar-valued optimization problems. As a quasi-Newton method, it computes the search direction using a quadratic model of the objective function, where the Hessian is approximated based on first-order information. Powell [45] was the first to prove the global convergence of the BFGS method for convex functions, employing a line search that satisfies the Wolfe conditions. Some time later, Byrd and Nocedal [7] introduced additional tools that simplified the global convergence analysis, enabling the inclusion of backtracking strategies. For over 3 decades, the convergence of the BFGS method for nonconvex optimization remained an open question until Dai [15], in the early 2000 s, provided a counterexample showing that the method can fail in such cases (see also [16, 40]). Another research direction focuses on proposing suitable modifications to the BFGS algorithm that enable achieving global convergence for nonconvex general functions while preserving its desirable properties, such as efficiency and simplicity. Notable works in this area include those by Li and Fukushima [35, 36].

The BFGS method for multiobjective optimization was studied in [33, 34, 39, 42, 44, 46,47,48]. However, it is important to note that, except for [46, 47], the algorithms proposed in these papers are specifically designed for convex problems. The assumption of convexity is crucial to ensure that the Hessian approximations remain positive definite over the iterations, guaranteeing the well-definedness of these methods. In [47], Qu et al. proposed a cautious BFGS update scheme based on the work [36]. This approach updates the Hessian approximations only when a given safeguard criterion is satisfied, resulting in a globally convergent algorithm for nonconvex problems. In [46], Prudente and Souza proposed a BFGS method with Wolfe line searches which exactly mimics the classical BFGS method for single-criterion optimization. This variant is well defined even for general nonconvex problems, although global convergence cannot be guaranteed in this general case. Despite this, it has been shown to be globally convergent for strongly convex problems.

In the present paper, inspired by the work [35], we go a step further than [46] and introduce a modified BFGS algorithm for multiobjective optimization which possesses a global convergence property even without convexity assumption on the objective functions. Furthermore, we establish the local superlinear convergence of the method under certain conditions. Our approach employs Wolfe step sizes and ensures that the Hessian approximations are updated and corrected at each iteration to overcome the lack of convexity assumption. Numerical results comparing the proposed algorithm with the methods introduced in [46, 47] are discussed. Overall, the modifications made to the BFGS method to ensure global convergence for nonconvex problems do not compromise its practical performance.

The paper is organized as follows: Sect. 2 presents the concepts and preliminary results, Sect. 3 introduces the proposed modified BFGS algorithm and discusses its global convergence, Sect. 4 focuses on the local convergence analysis with superlinear convergence rate, Sect. 5 presents the numerical experiments, and Sect. 6 concludes the paper with some remarks. Throughout the main text, we have chosen to omit proofs that can be easily derived from existing literature to enhance overall readability. However, these proofs are provided in the Appendix for self-contained completeness.

Notation. \(\mathbb {R}\) and \(\mathbb {R}_{++}\) denote the set of real numbers and the set of positive real numbers, respectively. As usual, \(\mathbb {R}^n\) and \(\mathbb {R}^{n\times p}\) denote the set of n-dimensional real column vectors and the set of \({n\times p}\) real matrices, respectively. The identity matrix of size n is denoted by \(I_n\). \(\Vert \cdot \Vert \) is the Euclidean norm. If \(u,v\in \mathbb {R}^n\), then \(u\preceq v\) (or \(\prec \)) is to be understood in a componentwise sense, i.e., \(u_i\le v_i\) (or <) for all \(i=1,\ldots ,n\). For \(B\in \mathbb {R}^{n\times n}\), \(B \succ 0\) means that B is positive definite. In this case, \(\langle \cdot ,\cdot \rangle _B\) and \(\Vert \cdot \Vert _B\) denote the B-energy inner product and the B-energy norm, respectively, i.e., for \(u,v\in \mathbb {R}^n\), \(\langle u,v\rangle _B:=u^\top B v\) and \(\Vert u\Vert _B:=\sqrt{\langle u,u\rangle _B}\). If \(K = \{k_1, k_2, \ldots \} \subseteq \mathbb {N}\), with \(k_j < k_{j+1}\) for all \(j\in \mathbb {N}\), then we denote \(K \displaystyle \mathop {\subset }_{\infty }\mathbb {N}\).

2 Preliminaries

In this paper, we focus on the problem of finding a Pareto optimal point of a continuously differentiable function \(F: \mathbb {R}^n \rightarrow \mathbb {R}^m\). This problem can be denoted as follows:

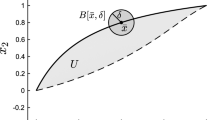

A point \(x^{*}\in \mathbb {R}^n\) is Pareto optimal (or weak Pareto optimal) of F if there is no other point \(x \in \mathbb {R}^n\) such that \(F(x) \preceq F(x^{*})\) and \(F(x) \ne F(x^{*})\) (or \(F(x) \prec F(x^{*})\)). These concepts can also be defined locally. We say that \(x^{*}\in \mathbb {R}^n\) is a local Pareto optimal (or local weak Pareto optimal) point if there exists a neighborhood \(U \subset \mathbb {R}^n\) of \(x^{*}\) such that \(x^{*}\) is Pareto optimal (or weak Pareto optimal) for F restricted to U. A necessary condition (but not always sufficient) for the local weak Pareto optimality of \(x^{*}\) is given by:

where \(JF(x^{*})\) denotes the Jacobian of F at \(x^{*}\). A point \(x^{*}\) that satisfies (2) is referred to as a Pareto critical point. It should be noted that if \(x\in \mathbb {R}^n\) is not Pareto critical, then there exists a direction \(d\in \mathbb {R}^n\) such that \(\nabla F_j(x)^\top d < 0\) for all \(j = 1, \ldots , m\). This implies that d is a descent direction for F at x, meaning that there exists \(\varepsilon > 0\) such that \(F(x + \alpha d) \prec F(x)\) for all \(\alpha \in (0,\varepsilon ]\). Let \(\mathcal {D}: \mathbb {R}^n \times \mathbb {R}^n \rightarrow \mathbb {R}\) be defined as follows:

The function \(\mathcal {D}\) characterizes the descent directions for F at a given point x. Specifically, if \(\mathcal {D}(x,d) < 0\), then d is a descent direction for F at x. Conversely, if \(\mathcal {D}(x,d) \ge 0\) for all \(d \in \mathbb {R}^n\), then x is a Pareto critical point.

We define \(F: \mathbb {R}^n \rightarrow \mathbb {R}^m\) as convex (or strictly convex) if each component \(F_j: \mathbb {R}^n \rightarrow \mathbb {R}\) is convex (or strictly convex) for all \(j = 1, \ldots , m\), i.e., for all \(x,y \in \mathbb {R}^n\) and \(t \in [0,1]\) (or \(t \in (0,1)\)),

The following result establishes a relationship between the concepts of criticality, optimality, and convexity.

Lemma 2.1

[22, Theorem 3.1] The following statements hold:

-

(i)

if \(x^{*}\) is local weak Pareto optimal, then \(x^{*}\) is a Pareto critical point for F;

-

(ii)

if F is convex and \(x^{*}\) is Pareto critical for F, then \(x^{*}\) is weak Pareto optimal;

-

(iii)

if F is strictly convex and \(x^{*}\) is Pareto critical for F, then \(x^{*}\) is Pareto optimal.

The class of quasi-Newton methods used to solve (1) consists of algorithms that compute the search direction d(x) at a given point \(x \in \mathbb {R}^n\) by solving the optimization problem:

where \(B_j\in \mathbb {R}^{n\times n}\) serves as an approximation of \(\nabla ^2 F_j(x)\) for all \(j=1,\ldots ,m\). If \(B_j\succ 0\) for all \(j=1,\ldots ,m\), then the objective function of (4) is strongly convex, ensuring a unique solution for this problem. We denote the optimal value of (4) by \(\theta (x)\), i.e.,

and

One natural approach is to use a BFGS–type formula, which updates the approximation \(B_j\) in a way that preserves positive definiteness. In the case where \(B_j=I_n\) for all \(j=1,\ldots ,m\), d(x) represents the steepest descent direction (see [23]). Similarly, if \(B_j=\nabla ^2 F_j(x)\) for all \(j=1,\ldots ,m\), d(x) corresponds to the Newton direction (see [22]).

In the following discussion, we assume that \(B_j\succ 0\) for all \(j=1,\ldots ,m\). In this scenario, (4) is equivalent to the convex quadratic optimization problem:

The unique solution to (7) is given by \((t,d):=(\theta (x), d(x))\). Since (7) is convex and has a Slater point (e.g., \((1, 0)\in \mathbb {R}\times \mathbb {R}^n\)), there exists a multiplier \(\lambda (x) \in \mathbb {R}^m\) such that the triple \((t,d,\lambda ):=(\theta (x), d(x),\lambda (x)) \in \mathbb {R}\times \mathbb {R}^n\times \mathbb {R}^m\) satisfies its Karush-Kuhn-Tucker system given by:

In particular, (8) and (9) imply that

and

where \(\Delta _m\) represents the m-dimensional simplex defined as:

Now, by summing (10) over \(j=1,\ldots ,m\) and using (11)–(12), we obtain

Lemma 2.2

Let \(d:\mathbb {R}^n\rightarrow \mathbb {R}^n\) and \(\theta :\mathbb {R}^n\rightarrow \mathbb {R}\) given by (5) and (6), respectively. Assume that \(B_j\succ 0\) for all \(j=1,\ldots ,m\). Then, we have:

-

(i)

x is Pareto critical if and only if \(d(x)=0\) and \(\theta (x)=0\);

-

(ii)

if x is not Pareto critical, then \(d(x)\ne 0\) and \(\mathcal {D}(x,d(x))< \theta (x)<0\) (in particular, d(x) is a descent direction for F at x).

Proof

See [22, Lemma 3.2] and [44, Lemma 2]. \(\square \)

As previously mentioned, if \(B_j=I_n\) for all \(j=1,\ldots ,m\), the solution of (4) corresponds to the steepest descent direction, denoted by \(d_{SD}(x)\):

Taking the above discussion into account, we can observe that there exists

such that

Next, we will review some useful properties related to \(d_{SD}(\cdot )\).

Lemma 2.3

Let \(d_{SD}:\mathbb {R}^n\rightarrow \mathbb {R}^n\) be given by (14). Then:

-

(i)

x is Pareto critical if and only if \(d_{SD}(x)=0\);

-

(ii)

if x is not Pareto critical, then we have \(d_{SD}(x)\ne 0\) and \(\mathcal {D}(x,d_{SD}(x))<-(1/2)\Vert d_{SD}(x)\Vert ^2<0\) (in particular, \(d_{SD}(x)\) is a descent direction for F at x);

-

(iii)

the mapping \(d_{SD}(\cdot )\) is continuous;

-

(iv)

for any \(x\in \mathbb {R}^n\), \(-d_{SD}(x)\) is the minimal norm element of the set

$$\begin{aligned} \{u\in \mathbb {R}^n \mid u = \displaystyle \sum _{j=1}^{m} \lambda _j\nabla F_j (x),\lambda \in \Delta _m \}, \end{aligned}$$i.e., in the convex hull of \(\{\nabla F_1(x),\ldots ,\nabla F_m(x)\}\);

-

(v)

if \(\nabla F_j\), \(j=1,\ldots ,m\), are L-Lipschitz continuous on a nonempty set \(U\subset \mathbb {R}^n\), i.e.,

$$\begin{aligned} \Vert \nabla F_j(x)-\nabla F_j(y)\Vert \le L\Vert x-y\Vert , \quad \forall x,y\in U, \quad \forall j=1,\ldots ,m, \end{aligned}$$then the mapping \(x\mapsto \Vert d_{SD}(x)\Vert \) is also L-Lipschitz continuous on U.

Proof

For items (i), (ii), and (iii), see [32, Lemma 3.3]. For items (iv) and (v), see [50, Corollary 2.3] and [50, Theorem 3.1], respectively. \(\square \)

We end this section by presenting an auxiliary result.

Lemma 2.4

The following statements are true.

-

(i)

The function \(h(t):=1-t+\ln (t)\) is nonpositive for all \(t>0\).

-

(ii)

For any \(\bar{t}<1\), we have \(\ln (1-\bar{t})\ge -\bar{t}/(1-\bar{t})\).

Proof

For item (i), see [43, Exercise 6.8]. For item (ii), consider \(\bar{t}<1\). By applying item (i) with \(t=1/(1-\bar{t})\), we obtain

\(\square \)

3 The algorithm and its global convergence

In this section, we define the main algorithm employed in this paper and study its global convergence, with a particular focus on nonconvex multiobjective optimization problems. Let us suppose that the following usual assumptions are satisfied.

Assumption 3.1

-

(i)

F is continuously differentiable.

-

(ii)

The level set \(\mathcal{{L}}:=\{x\in \mathbb {R}^n\mid F(x) \; \preceq \; F(x^0)\}\) is bounded, where \(x^0\in \mathbb {R}^n\) is the given starting point.

-

(iii)

There exists an open set \(\mathcal{N}\) containing \(\mathcal{{L}}\) such that \(\nabla F_j\) is L-Lipschitz continuous on \(\mathcal{N}\) for all \(j = 1, \dots , m\), i.e.,

$$\begin{aligned} \Vert \nabla F_j(x)-\nabla F_j(y)\Vert \le L \Vert x-y\Vert , \quad \forall x,y\in \mathcal{N}, \quad \forall j = 1,\ldots ,m. \end{aligned}$$

The algorithm is formally described as follows.

Some comments are in order. First, by expressing the search direction subproblem (4) as the convex quadratic optimization problem (7), we can apply well-established techniques and solvers to find its solution at Step 1. Second, some practical stopping criteria can be considered at Step 2. It is usual to use the gap function \(\theta (x^k)\) in (6) or the norm of \(d_{SD}(x^k)\) in (14) to measure criticality, see Lemmas 2.2 and 2.3, respectively. Third, at Step 3, we require that \(\alpha _k\) satisfies (17)–(18), which corresponds to the multiobjective standard Wolfe conditions originally introduced in [37]. Under Assumption 3.1(i)–(ii), given \(d^k\in \mathbb {R}^n\) a descent direction for F at \(x^k\), it is possible to show that there are intervals of positive step sizes satisfying (17)–(18), see [37, Proposition 3.2]. As we will see, under suitable assumptions, the unit step size \(\alpha _k=1\) is eventually accepted, which is essential to obtain fast convergence. Furthermore, an algorithm to calculate Wolfe step sizes for vector-valued problems was proposed in [38]. Fourth, the usual BFGS scheme for \(F_j\) consists of the update formula given in (22) with \(y_j^k\) in place of \(\gamma _j^k\). In this case, the product \((y_j^k)^\top s^k\) in the denominator of the third term on the right-hand side of (22) can be nonpositive for some \(j\in \{1,\ldots ,m\}\), even when the step size satisfies the Wolfe conditions (17)–(18), see [46, Example 3.3]. This implies that update scheme (with \(y_j^k\) in place of \(\gamma _j^k\)) may fail to preserve positive definiteness of \(B_j^k\). Fifth, note that \(B_j^{k+1}s^k= y_j^k + r_j^ks^k\) for each \(j=1,\ldots ,m\). Thus, if \(r_j^k\) is small, this relation can be seen as an approximation of the well-known secant equation \(B_j^{k+1}s^k= y_j^k\) for \(F_j\), see [35].

Theorem 3.1

Algorithm 1 is well-defined.

Proof

The proof is by induction. We start by assuming that \(B_j^k\succ 0\) for all \(j=1,\ldots ,m\), which is trivially true for \(k=0\). This makes subproblem (5) in Step 1 solvable. If \(x^k\) is Pareto critical, Algorithm 1 stops at Step 2, thereby concluding the proof. Otherwise, Lemma 2.2(ii) implies that \(d^k\) is a descent direction of F at \(x^k\). Thus, taking into account Assumption 3.1(i)–(ii), there exist intervals of positive step sizes satisfying conditions (17)–(18), as shown in [37, Proposition 3.2]. As a result, \(x^{k+1}\) can be properly defined in Step 3. To complete the proof, let us show that \(B_j^{k+1}\) remains positive definite for all \(j=1,\ldots ,m\). By the definitions of \(\gamma _j^{k}\) and \(\eta _j^k\) in (21) and (19), respectively, we have

Therefore, by the definition of \(r_j^k\) in (20), Lemma 2.3(iv), and Lemma 2.3(ii), it follows that

Thus, the updating formulas (22) are well-defined. Now, for each \(j\in \{1,\ldots ,m\}\) and any nonzero vector z, we have

where the first inequality is a direct consequence of the Cauchy-Schwarz inequality, which gives \(\langle z,s^k \rangle _{B_j^{k}}^2\le \Vert z\Vert _{B_j^{k}}^2 \Vert s^k\Vert _{B_j^{k}}^2\). Finally, assume by contradiction that \(z^\top B_j^{k+1} z=0\). In this case, it follows from (24) that

The second equation in (25) implies that \(|\langle z,s^k \rangle _{B_j^{k}}|= \Vert z\Vert _{B_j^{k}} \Vert s^k\Vert _{B_j^{k}}\), so there exists \(\tau \in \mathbb {R}\) such that \(z=\tau s^k\). Combining this with the first equation in (25), we obtain \(\tau (\gamma _j^{k})^\top s^k=0\). Taking into account (23), we can deduce that \(\tau =0\), which contradicts the fact that z is a nonzero vector. \(\square \)

Hereafter, we assume that \(x^k\) is not Pareto critical for all \(k\ge 0\). Thus, Algorithm 1 generates an infinite sequence of iterates. The following result establishes that the sequence \(\{x^k,d^k\}\) satisfies a Zoutendijk-type condition, which will be crucial in our analysis. Its proof is based on [37, Proposition 3.3] and will be provided in the Appendix.

Proposition 3.2

Consider the sequence \(\{x^k,d^k\}\) generated by Algorithm 1. Then,

Our analysis also exploits insights developed by Byrd and Nocedal [7] in their analysis of the classical BFGS method for single-valued optimization (i.e., for \(m=1\)). They provided sufficient conditions that ensure that the angle between \(s^k\) and \(B_1^ks^k\) (which coincides with the angle between \(d^k\) and \(-\nabla F_1(x^k)\) in the scalar case) remains far from 0 for an arbitrary fraction of the iterates. Recently, this result was studied in the multiobjective setting in [46]. Under some mild conditions, similar to the approach taken in [46], we establish that the angles between \(s^k\) and \(B_j^ks^k\) remain far from 0, simultaneously for all objectives, for an arbitrary fraction p of the iterates. The proof of this result can be constructed as a combination of [7, Theorem 2.1] and [46, Lemma 4.2] and will therefore be postponed to the Appendix.

Proposition 3.3

Consider the sequence \(\{x^k\}\) generated by Algorithm 1. Let \(\beta _j^k\) be the angle between the vectors \(s^k\) and \(B_j^ks^k\), for all \(k\ge 0\) and \(j=1,\ldots ,m\). Assume that

for some positive constants \(C_1,C_2>0\). Then, given \(p\in (0,1)\), there exists a constant \(\delta >0\) such that, for all \(k \ge 1\), the relation

holds for at least \(\lceil p(k+1) \rceil \) values of \(\ell \in \{0,1,\ldots ,k\}\), where \(\lceil \cdot \rceil \) denotes the ceiling function.

The following technical result forms the basis for applying Proposition 3.3.

Lemma 3.4

Let \(\{x^k\}\) be a sequence generated by Algorithm 1. Then, for each j = 1,...,m and all \(k\ge 0\), there exist positive constants \(c_1,c_2>0\) such that:

-

(i)

\(\dfrac{(\gamma _j^{k})^\top s^k}{\Vert s^k\Vert ^2}\ge c_1 \Vert d_{SD}(x^k)\Vert \);

-

(ii)

\(\dfrac{\Vert \gamma _j^{k}\Vert ^2}{(\gamma _j^{k})^\top s^k}\le \dfrac{c_2}{\Vert d_{SD}(x^k)\Vert }\).

Proof

Let \(k\ge 0\) and \(j\in \{1,\ldots ,m\}\) be given. As in (23), we have

Thus, taking \(c_1:=\underline{\vartheta }\), we conclude item (i). Now consider item (ii). By the Cauchy–Schwarz inequality and Assumption 3.1(iii), it follows that

On the other hand, since \(\{x^k\}\subset \mathcal{{L}}\) and \(\mu ^k\in \Delta _m\), by Assumption 3.1(i)–(ii), there exists a constant \(\bar{c}>0\), independent of k, such that \(\Vert \sum _{i=1}^m\mu _i^k \nabla F_i(x^k)\Vert \le \bar{c}\). The definition of \(r_j^k\) together with the last two inequalities yields

and hence

By squaring the latter inequality and using item(i), we obtain

Thus, taking \(c_2:=(2L+ \bar{\vartheta }\bar{c})^2/c_1\), we conclude the proof. \(\square \)

From now on, let \(\lambda ^k:= \lambda (x^k)\in \mathbb {R}^m\) be the Lagrange multiplier associated with \(x^k\) satisfying (11)–(12). We are now able to prove the main result of this section. We show that Algorithm 1 finds a Pareto critical point of F, without imposing any convexity assumptions.

Theorem 3.5

Let \(\{x^k\}\) be a sequence generated by Algorithm 1. Then

As a consequence, \(\{x^k\}\) has a limit point that is Pareto critical.

Proof

Assume by contradiction that there is a constant \(\varepsilon >0\) such that

From Lemma 3.4, taking \(C_1:=c_1\varepsilon \) and \(C_2:=c_2/\varepsilon \), we have

showing that the assumptions of Proposition 3.3 are satisfied. Thus, there exist a constant \(\delta >0\) and \(\mathbb {K}\displaystyle \mathop {\subset }_{\infty }\mathbb {N}\) such that

Hence, by the definitions of \(\cos \beta _j^k\) and \(s^k\), we have, for all \(j = 1,\ldots ,m\),

which implies

Therefore, from Lemma 2.2(ii) and (13), it follows that

Thus, from the triangle inequality, (11), (12), Lemma 2.3(iv), and (29), we obtain

Hence,

which contradicts the Zoutendijk condition (26). Therefore, we conclude that (28) holds.

Now, (28) implies that there exists \(\mathbb {K}_1\displaystyle \mathop {\subset }_{\infty }\mathbb {N}\) such that \(\lim _{k\in \mathbb {K}_1}\Vert d_{SD}(x^k)\Vert =0\). On the other hand, given that \(\{x^k\}\subset \mathcal{{L}}\) and \(\mathcal{{L}}\) is compact, we can establish the existence of \(\mathbb {K}_2\subseteq \mathbb {K}_1\) and \(x^{*}\in \mathcal{{L}}\) such that \(\lim _{k\in \mathbb {K}_2}x^k= x^{*}\). Thus, from Lemma 2.3(iii), we deduce that \(d_{SD}(x^{*})=0\). Consequently, based on Lemma 2.3(i), we conclude that \(x^{*}\) is Pareto critical. \(\square \)

Even though the primary focus of this article is on nonconvex problems, we conclude this section by establishing full convergence of the sequence generated by Algorithm 1 in the case of strict convexity of F. Note that, under Assumption 3.1(i)–(ii), the existence of at least one Pareto optimal point is assured in this particular case.

Theorem 3.6

Let \(\{x^k\}\) be a sequence generated by Algorithm 1. If F is strictly convex, then \(\{x^k\}\) converges to a Pareto optimal point of F.

Proof

According to Theorem 3.5 and Theorem 2.1(iii), there exists a limit point \(x^{*}\in \mathcal{{L}}\) of \(\{x^k\}\) that is Pareto optimal. Let \(\mathbb {K}_1\displaystyle \mathop {\subset }_{\infty }\mathbb {N}\) be such that \(\lim _{k\in \mathbb {K}_1}x^k=x^{*}\). To show the convergence of \(\{x^k\}\) to \(x^{*}\), let us suppose by contradiction that there exist \(\bar{x}\in \mathcal {L}\), where \(\bar{x}\ne x^{*}\), and \(\mathbb {K}_2\displaystyle \mathop {\subset }_{\infty }\mathbb {N}\) such that \(\lim _{k\in \mathbb {K}_2}x^k=\bar{x}\). We first claim that \(F(\bar{x})\ne F(x^{*})\). In fact, if \(F(\bar{x})= F(x^{*})\), based on (3), for all \(t\in (0,1)\), we would have

which contradicts the fact that \(x^{*}\) is a Pareto optimal point. Hence, \(F(\bar{x})\ne F(x^{*})\), as we claimed. Now, since \(x^{*}\) is Pareto optimal, there exists \(j_*\in \{1,\ldots ,m\}\) such that \(F_{j_*}(x^{*})<F_{j_*}(\bar{x})\). Therefore, considering that \(\lim _{k\in \mathbb {K}_1}x^k=x^{*}\) and \(\lim _{k\in \mathbb {K}_2}x^k=\bar{x}\), we can choose \(k_1\in \mathbb {K}_1\) and \(k_2\in \mathbb {K}_2\) such that \(k_1<k_2\) and \(F_{j_*}(x^{k_1})<F_{j_*}(x^{k_2})\). This contradicts (17) which implies, in particular, that \(\{F_{j_*}(x^k)\}\) is decreasing. Thus, we can conclude that \(\lim _{k\rightarrow \infty }x^k=x^{*}\), completing the proof. \(\square \)

4 Local convergence analysis

In this section, we analyze the local convergence properties of Algorithm 1. The findings presented here are applicable to both convex and nonconvex problems. We will assume that the sequence \(\{x^k\}\) converges to a local Pareto optimal point \(x^{*}\) and show, under appropriate assumptions, that the convergence rate is superlinear.

4.1 Superlinear rate of convergence

Throughout this section, we make the following assumptions.

Assumption 4.1

-

(i)

F is twice continuously differentiable.

-

(ii)

The sequence \(\{x^k\}\) generated by Algorithm 1 converges to a local Pareto optimal point \(x^{*}\) where \(\nabla ^2 F_j(x^{*})\) is positive definite for all \(j=1,\ldots ,m\).

-

(iii)

For each \(j=1,\ldots ,m\), \(\nabla ^2 F_j(x)\) is Hölder continuous at \(x^{*}\), i.e., there exist constants \(\nu \in (0,1]\) and \(M>0\) such that

$$\begin{aligned} \Vert \nabla ^2 F_j(x)-\nabla ^2 F_j(x^{*})\Vert \le M \Vert x-x^{*}\Vert ^{\nu }, \quad \forall j=1,\ldots ,m, \end{aligned}$$(30)for all x in a neighborhood of \(x^{*}\).

Under Assumption 4.1(ii), there exist a neighborhood U of \(x^{*}\) and constants \(\underline{L}>0\) and \(L>0\) such that

for all \(z \in \mathbb {R}^n\) and \(x \in U\). In particular, (31) implies that \(F_j\) is strongly convex and has Lipschitz continuous gradients on U. Note that constant L in (31) aligns with the L defined in Assumption 3.1(iii) as part of the L-Lipschitz continuity condition for \(\nabla F_j\), maintaining consistent notation for the Lipschitz constant across our analysis. Throughout this section, we assume, without loss of generality, that \(\{x^k\}\subset U\) and that Assumption 4.1(iii) holds in U, i.e., (30) and (31) hold at \(x^k\) for all \(k\ge 0\).

We also introduce the following additional assumption about \(\{r_j^k\}\), which will be considered only when explicitly mentioned. In Sect. 4.2, we will explore practical choices for \(\{r_j^k\}\) that satisfy such an assumption.

Assumption 4.2

For each \(j=1,\ldots ,m\), \(\{r_j^k\}\) satisfies \(\sum _{k \ge 0} r_j^k < \infty .\)

The following result, which is related to the linear convergence of the sequence \(\{x^k\}\) and is based on [46, Theorem 4.6], has its proof in the Appendix.

Proposition 4.1

Suppose that Assumption 4.1(i)–(ii) holds. Let \(\{x^k\}\) be a sequence generated by Algorithm 1. Then, for all \(\nu >0\), we have

As usual in quasi-Newton methods, our analysis relies on the Dennis–Moré [17] characterization of superlinear convergence. To accomplish this, we use a set of tools developed in [7] (see also [45]). For every \(k\ge 0\), we define the average Hessian as:

This leads to the relationship:

We also introduce, for each \(j=1,\ldots ,m\) and \(k\ge 0\), the following quantities:

and

Note that

and

where the first inequality follows from the left hand side of (31). Considering that \(\tilde{B}_j^k\succ 0\) and, based on (34), it follows that \((\tilde{\gamma }_j^k)^\top \tilde{s}_j^k>0\), we can follow the same arguments as in the proof of Theorem 3.1 to show that \(\tilde{B}_j^{k+1}\succ 0\) for all \(j=1,\ldots ,m\) and all \(k\ge 0\). In connection with Proposition 3.3 and Lemma 3.4, we additionally define the following quantities:

Another useful tool combines the trace and the determinant of a given positive definite matrix B, through the following function:

Given that, for all \(j = 1,\ldots ,m\),

and

we can perform some algebraic manipulations to obtain:

We are now ready to prove the central result of the superlinear convergence analysis: We establish that the Dennis–Moré condition holds individually for each objective function \(F_j\). A similar result in the scalar case was given in [35, Theorem 3.8].

Theorem 4.2

Suppose that Assumptions 4.1 and 4.2 hold. Let \(\{x^k\}\) be a sequence generated by Algorithm 1. Then, for each \(j=1,\ldots ,m\), we have

and

Proof

Let \(j\in \{1,\ldots , m\}\) be an arbitrary index. From (33), we obtain

and hence

for all \(k\ge 0\). Therefore, by the definition of \(\bar{G}_j^k\) and (30), we obtain

where \(\bar{c}_j:=M \Vert \nabla ^2F_j(x^{*})^{-1/2}\Vert ^2\) and \(\varepsilon _k:=\max \{\Vert x^{k+1}-x^{*}\Vert ^{\nu },\Vert x^{k}-x^{*}\Vert ^{\nu }\}\). Now, since \(|\Vert \tilde{y}_j^k\Vert -\Vert \tilde{s}_j^k\Vert |\le \Vert \tilde{y}_j^k-\tilde{s}_j^k\Vert \), it follows from (39) that

Without loss of generality, let us assume that \(1-\bar{c}_j\varepsilon _k>0\), for all \(k\ge 0\). Therefore, the left hand side of (40) together with (39) yields

so that

By the definition of \(\tilde{a}_j^k\), we have

Thus, by (31) and (41), we obtain

From the definition of \(\tilde{b}_j^k\), (34), the right hand side of (40), and (42), by performing some manipulations, we also obtain

where \(C_1:=\Vert \nabla ^2F_j(x^{*})^{-1/2}\Vert \Vert \nabla ^2F_j(x^{*})^{-1}\Vert \Vert \nabla ^2F_j(x^{*})^{1/2}\Vert \) and \(C_2:=\Vert \nabla ^2F_j(x^{*})^{-2}\Vert \Vert \nabla ^2F_j(x^{*})\Vert \). Assumptions 4.1(ii) and 4.2 imply that \(\varepsilon _k \rightarrow 0\) and \(r_j^k\rightarrow 0\), respectively. Thus, by using (42) and (43), for all sufficiently large k, we have \(\bar{c}_j\varepsilon _k <1/2\), \((r_j^k)^2\le r_j^k\), and there exists a constant \(C>\max \{3\bar{c}_j,(2LC_1+C_2)/\underline{L}\}\) such that

where the second inequality follows from Lemma 2.4 (ii) (with \(\bar{t}=\bar{c}_j\varepsilon _k\)), and

Let \(k_0\in \mathbb {N}\) be such that the latter two inequalities hold for all \(k\ge k_0\). Therefore, by (36), we obtain

for all \(k\ge k_0\). By summing this expression and making use of (32) and Assumption 4.2, we have

Since \(\ln (1/\cos ^2\tilde{\beta }_j^\ell )>0\) for all \(\ell \ge k_0\) and, by Lemma 2.4 (i), the term in the square brackets is nonpositive, we have

and hence

Note that the second limit in (44) is equivalent to (37). Now, it follows that

where the last equality follows from (44). The above limit trivially implies (38), concluding the proof. \(\square \)

Based on the Dennis–Moré characterization established in Theorem 4.2, we can easily replicate the proofs presented in [46, Theorem 5.5] and [46, Theorem 5.7] to show that unit step size eventually satisfies the Wolfe conditions (17)–(18) and the rate of convergence is superlinear, as detailed in the Appendix. We formally state the results as follows.

Theorem 4.3

Suppose that Assumptions 4.1 and 4.2 hold. Let \(\{x^k\}\) be a sequence generated by Algorithm 1. Then, the step size \(\alpha _k =1\) is admissible for all sufficiently large k and \(\{x^k\}\) converges to \(x^{*}\) at a superlinear rate.

4.2 Suitable choices for \(r_j^k\)

As we have seen, Algorithm 1 is globally convergent regardless of the particular choice of \(r_j^k\). On the other hand, the superlinear convergence rate depends on whether \(r_j^k\) satisfies Assumption 4.2. Next, we explore suitable choices for the multiplier \(\mu ^k\in \Delta _m\) in (20) to ensure that \(r_j^k\) satisfies the aforementioned assumption. In what follows, we will assume that Assumption 4.1 holds. First note that, as in (34), we have \(\eta _j^k = (y_{j}^k)^\top s^k/\Vert s^k\Vert ^2\ge \underline{L}>0\) and hence

Choice 1: One natural choice is to set \(\mu ^k:=\lambda ^{SD}(x^k)\in \Delta _m\) for all \(k\ge 0\), where \(\lambda ^{SD}(x^k)\) is the steepest descent Lagrange multiplier associated with \(x^k\) as in (16). In this case, by Lemma 2.3(i) and Lemma 2.3(v), we have

By summing this expression and making use of (32), we conclude that \(r_j^k\) satisfies Assumption 4.2 for all \(j=1,\ldots ,m\). One potential drawback of this approach is the need to compute the multipliers \(\lambda ^{SD}(x^k)\), which involves solving the subproblem in (14).

Choice 2: Another natural choice is to set \(\mu ^k:=\lambda ^k \in \Delta _m\) for all \(k\ge 0\), where \(\lambda ^k\) is the Lagrange multiplier corresponding to the search direction \(d^k\), see (11). Since the subproblem in Step 2 is typically solved in the form of (7) using a primal-dual algorithm, this approach does not require any additional computational cost. Let us assume that the sequences \(\{B_j^k\}\) and \(\{(B_j^k)^{-1}\}\) are bounded for all \(j=1,\ldots ,m\). In this case, using [28, Lemma 6], there exists a constant \(\delta >0\) such that \(\Vert \sum _{i=1}^m\lambda _i^k \nabla F_i(x^k)\Vert \le \delta \Vert d_{SD}(x^k)\Vert \) for all \(k\ge 0\). Therefore, for all \(j=1,\ldots ,m\) and \(k\ge 0\), similarly to the previous choice, we have

and hence \(r_j^k\) satisfies Assumption 4.2 for all \(j=1,\ldots ,m\).

5 Numerical experiments

In this section, we present some numerical experiments to evaluate the effectiveness of the proposed scheme. We are particularly interested in verifying how the introduced modifications affect the numerical performance of the method. Toward this goal, we considered the following methods in our tests.

-

Algorithm 1 (Global BFGS): our globally convergent algorithm with \(r_j^k\) chosen according to Choice 2 (see Sect. 4.2) and \(\vartheta _k=0.1\) for all \(k\ge 0\). It is worth noting that preliminary numerical tests demonstrated the superior efficiency of Choice 2 over Choice 1.

-

BFGS-Wolfe [46]: a BFGS algorithm in which the Hessian approximations are updated, for each \(j=1,\ldots ,m\), by

$$\begin{aligned} \begin{aligned} B_j^{k+1} {:}{=}&B_j^{k} - \dfrac{(\rho _j^k)^{-1}B_j^{k}s^k(s^k)^\top B_j^{k}+[(s^k)^\top B_j^{k}s^k] y_j^{k} (y_j^{k})^\top }{\left[ (\rho _j^k)^{-1}-(y_j^{k})^\top s^k\right] ^2 + (\rho _j^k)^{-1}(s^k)^\top B_j^ks^k} \\&+ \left[ (\rho _j^k)^{-1} - (y_j^{k})^\top s^k\right] \dfrac{y_j^{k}(s^k)^\top B_j^{k} + B_j^{k}s^k(y_j^{k})^\top }{\left[ (\rho _j^k)^{-1}-(y_j^{k})^\top s^k\right] ^2 + (\rho _j^k)^{-1}(s^k)^\top B_j^ks^k}, \end{aligned} \end{aligned}$$where

$$\begin{aligned} \rho _j^k {:}{=}\left\{ \begin{array}{ll} 1/\left( (y_j^{k})^\top s^k\right) , &{} \text{ if } (y_j^{k})^\top s^k > 0\\ 1/\left( \mathcal {D}(x^{k+1},s^k)-\nabla F_j(x^k)^\top s^k\right) , &{} \text{ otherwise }.\\ \end{array}\right. \end{aligned}$$and the step sizes are calculated satisfying the Wolfe conditions (17)–(18). We point out that this algorithm is well-defined for nonconvex problems, although it is not possible to establish global convergence in this general case. Additionally, in the case of scalar optimization (\(m = 1\)), it retrieves the classical scalar BFGS algorithm.

-

Cautious BFGS-Armijo [47]: a BFGS algorithm in which the Hessian approximations are updated, for each \(j=1,\ldots ,m\), by

$$\begin{aligned} B_j^{k+1}\!{:}{=}\!\left\{ \hspace{-5pt} \begin{array}{ll} B_j^{k}\! -\! \dfrac{B_j^{k}s^k(s^k)^\top B_j^{k}}{(s^k)^\top B_j^ks^k}\! +\!\dfrac{y_j^{k}(y_j^{k})^\top }{(y_j^{k})^\top s^k}, &{} \text{ if }\, (y_j^{k})^\top s^k\ge \varepsilon \min \{1,|\theta (x^k)|\},\\ B_j^{k}, &{} \text{ otherwise }, \end{array}\right. \end{aligned}$$where \(\varepsilon >0\) is an algorithmic parameter and the step sizes are calculated satisfying the Armijo-type condition given in (17). In our experiments, we set \(\varepsilon =10^{-6}\). This combination also leads to a globally convergent scheme, see [47].

We implemented the algorithms using Fortran 90. The search directions \(d(x^k)\) (see (5)) and optimal values \(\theta (x^k)\) (see (6)) were obtained by solving subproblem (7) using the software Algencan [4]. To compute step sizes satisfying the Wolfe conditions (17)–(18), we employed the algorithm proposed in [38]. This algorithm utilizes quadratic/cubic polynomial interpolations of the objective functions, combining backtracking and extrapolation strategies, and is capable of finding step sizes in a finite number of iterations. Interpolation techniques were also used to calculate step sizes satisfying only the Armijo-type condition. We set \(\rho =10^{-4}\), \(\sigma =0.1\), and initialized \(B_j^0\) as the identity matrix for all \(j=1,\ldots ,m\). Convergence was reported when \(|\theta (x^k)| \le 5 \times \texttt {eps}^{1/2}\), where \(\texttt {eps}=2^{-52}\approx 2.22 \times 10^{-16}\) represents the machine precision. When this criterion is met, we consider the problem successfully solved. The maximum number of allowed iterations was set to 2000. If this limit is reached, it means an unsuccessful termination. Our codes are freely available at https://github.com/lfprudente/GlobalBFGS.

The chosen set of test problems consists of both convex and nonconvex multiobjective problems commonly found in the literature and coincides with the one used in [46]. Table 1 presents their main characteristics: The first column contains the problem name, while the “n” and “m” columns provide the number of variables and objectives, respectively. The column “Conv.” indicates whether the problem is convex or not. For each problem, the starting points were chosen within a box defined as \(\{x\in \mathbb {R}^n \mid \ell \le x \le u\}\), where the lower and upper bounds, denoted by \(\ell \) and \(u \in \mathbb {R}^n\), are presented in the last two columns of Table 1. It is important to note that the boxes specified in the table were used solely for defining starting points and were not employed as constraints during the algorithmic processes. For detailed information regarding the references and corresponding formulations of each problem, we refer the reader to [46].

In multiobjective optimization, the primary objective is to estimate the Pareto frontier of a given problem. A commonly used strategy is to execute the algorithm from multiple distinct starting points and collect the Pareto optimal points found. Thus, each problem listed in Table 1 was addressed by running all algorithms from 300 randomly generated starting points within their respective boxes. In this first stage, each problem/starting point was considered an independent instance and a run was considered successful if an approximate critical point was found, regardless of the objective functions values. Figure 1 presents the comparison of the algorithms in terms of CPU time using a performance profile [18]. As can be seen, Algorithm 1 and the BFGS-Wolfe algorithm exhibited virtually identical performance, outperforming the Cautious BFGS-Armijo algorithm. All methods proved to be robust, successfully solving more than \(98\%\) of the problem instances. It is worth noting that although the BFGS-Wolfe algorithm enjoys (theoretical) global convergence only under convexity assumptions, it also performs exceptionally well for nonconvex problems, which is consistent with observations in the scalar case.

In the following, we evaluate the algorithms based on their ability to properly generate Pareto frontiers. To assess this, we employ the widely recognized Purity and (\(\Gamma \) and \(\Delta \)) Spread metrics. In summary, the Purity metric measures the solver’s ability to identify points on the Pareto frontier, while the Spread metric evaluates the distribution quality of the obtained Pareto frontier. For a detailed explanation of these metrics and their application together with performance profiles, we refer the reader to [14]. It is important to note that, at this stage, data referring to all starting points are combined for each problem, taking into account the objective function values found. The results in Fig. 2 indicate that Algorithm 1 performed slightly better in terms of the Purity and \(\Delta \)-Spread metrics, with no significant difference observed for the \(\Gamma \)-Spread metric among the three algorithms.

The numerical results allow us to conclude that the modifications made to the BFGS method to ensure global convergence for nonconvex problems do not compromise its practical performance.

6 Final remarks

Based on the work of Li and Fukushima [35], we presented a modified BFGS scheme that achieves global convergence without relying on convexity assumptions for the objective function F. The global convergence analysis depends only on the requirement of F having continuous Lipschitz gradients. Furthermore, we showed that by appropriately selecting \(r_j^k\) to satisfy Assumption 4.2 and under suitable conditions, the rate of convergence becomes superlinear. We also discussed some practical choices for \(r_j^k\). The introduced modifications preserve the simplicity and practical efficiency of the BFGS method. It is worth emphasizing that the assumptions considered in our approach are natural extensions of those commonly employed in the context of scalar-valued optimization.

Data availibility

The codes supporting the numerical experiments are freely available in the Github repository, https://github.com/lfprudente/GlobalBFGS.

References

Ansary, M.A., Panda, G.: A modified quasi-Newton method for vector optimization problem. Optimization 64(11), 2289–2306 (2015)

Assunção, P.B., Ferreira, O.P., Prudente, L.F.: Conditional gradient method for multiobjective optimization. Comput. Optim. Appl. 78(3), 741–768 (2021)

Bello Cruz, J.Y., Lucambio Pérez, L.R., Melo, J.G.: Convergence of the projected gradient method for quasiconvex multiobjective optimization. Nonlinear Anal. 74(16), 5268–5273 (2011)

Birgin, E., Martínez, J.: Practical Augmented Lagrangian Methods for Constrained Optimization. SIAM, Philadelphia (2014)

Bonnel, H., Iusem, A.N., Svaiter, B.F.: Proximal methods in vector optimization. SIAM J. Optim. 15(4), 953–970 (2005)

Broyden, C.G.: The Convergence of a Class of Double-rank Minimization Algorithms 1. General Considerations. IMA J. Appl. Math. 6(1), 76–90 (1970)

Byrd, R.H., Nocedal, J.: A tool for the analysis of quasi-Newton methods with application to unconstrained minimization. SIAM J. Numer. Anal. 26(3), 727–739 (1989)

Ceng, L.C., Mordukhovich, B.S., Yao, J.C.: Hybrid approximate proximal method with auxiliary variational inequality for vector optimization. J. Optimiz. Theory App. 146(2), 267–303 (2010)

Ceng, L.C., Yao, J.C.: Approximate proximal methods in vector optimization. Eur. J. Oper. Res. 183(1), 1–19 (2007)

Chen, W., Yang, X., Zhao, Y.: Conditional gradient method for vector optimization. Comput. Optim. Appl. 85(3), 857–896 (2023)

Chuong, T.D.: Generalized proximal method for efficient solutions in vector optimization. Numer. Funct. Anal. Optim. 32(8), 843–857 (2011)

Chuong, T.D.: Newton-like methods for efficient solutions in vector optimization. Comput. Optim. Appl. 54(3), 495–516 (2013)

Chuong, T.D., Mordukhovich, B.S., Yao, J.C.: Hybrid approximate proximal algorithms for efficient solutions in vector optimization. J. Nonlinear Convex Anal. 12(2), 257–285 (2011)

Custódio, A.L., Madeira, J.F.A., Vaz, A.I.F., Vicente, L.N.: Direct Multisearch for Multiobjective Optimization. SIAM J. Optim. 21(3), 1109–1140 (2011)

Dai, Y.-H.: Convergence properties of the BFGS algorithm. SIAM J. Optim. 13(3), 693–701 (2002)

Dai, Y.-H.: A perfect example for the BFGS method. Math. Program. 138(1–2), 501–530 (2013)

Dennis, J.E., Moré, J.J.: A characterization of superlinear convergence and its application to quasi-Newton methods. Math. Comp. 28(126), 549–560 (1974)

Dolan, E.D., Moré, J.J.: Benchmarking optimization software with performance profiles. Math. Program. 91(2), 201–213 (2002)

Eichfelder, G.: Adaptive Scalarization Methods in Multiobjective Optimization. Springer, Berlin Heidelberg (2008)

Fazzio, N.S., Schuverdt, M.L.: Convergence analysis of a nonmonotone projected gradient method for multiobjective optimization problems. Optim. Lett. 13(6), 1365–1379 (2019)

Fletcher, R.: A new approach to variable metric algorithms. Comput. J. 13(3), 317–322 (1970)

Fliege, J., Graña Drummond, L.M., Svaiter, B.F.: Newton’s method for multiobjective optimization. SIAM J. Optim. 20(2), 602–626 (2009)

Fliege, J., Svaiter, B.F.: Steepest descent methods for multicriteria optimization. Math. Methods of Oper. Res. 51(3), 479–494 (2000)

Fukuda, E.H., Graña Drummond, L.M.: On the convergence of the projected gradient method for vector optimization. Optimization 60(8–9), 1009–1021 (2011)

Fukuda, E.H., Graña Drummond, L.M.: Inexact projected gradient method for vector optimization. Comput. Optim. Appl. 54(3), 473–493 (2013)

Goldfarb, D.: A family of variable-metric methods derived by variational means. Math. Comput. 24, 23–26 (1970)

Gonçalves, M., Lima, F., Prudente, L.: A study of Liu-Storey conjugate gradient methods for vector optimization. Appl. Math. Comput. 425, 127099 (2022)

Gonçalves, M.L.N., Lima, F.S., Prudente, L.F.: Globally convergent Newton-type methods for multiobjective optimization. Comput. Optim. Appl. 83(2), 403–434 (2022)

Gonçalves, M.L.N., Prudente, L.F.: On the extension of the Hager-Zhang conjugate gradient method for vector optimization. Comput. Optim. Appl. 76(3), 889–916 (2020)

Graña Drummond, L.M., Iusem, A.N.: A Projected Gradient Method for Vector Optimization Problems. Comput. Optim. Appl. 28(1), 5–29 (2004)

Graña Drummond, L.M., Raupp, F.M.P., Svaiter, B.F.: A quadratically convergent Newton method for vector optimization. Optimization 63(5), 661–677 (2014)

Graña Drummond, L.M., Svaiter, B.F.: A steepest descent method for vector optimization. J. Comput. Appl. Math. 175(2), 395–414 (2005)

Lai, K.K., Mishra, S.K., Ram, B.: On q-Quasi-Newton’s Method for Unconstrained Multiobjective Optimization Problems. Mathematics 8(4), 616 (2020)

Lapucci, M., Mansueto, P.: A limited memory quasi-Newton approach for multi-objective optimization. Comput. Optim. Appl. 85(1), 33–73 (2023)

Li, D.-H., Fukushima, M.: A modified BFGS method and its global convergence in nonconvex minimization. J. Comput. Appl. Math. 129(1), 15–35 (2001)

Li, D.-H., Fukushima, M.: On the global convergence of the BFGS method for nonconvex unconstrained optimization problems. SIAM J. Optim. 11(4), 1054–1064 (2001)

Lucambio Pérez, L.R., Prudente, L.F.: Nonlinear conjugate gradient methods for vector optimization. SIAM J. Optim. 28(3), 2690–2720 (2018)

Lucambio Pérez, L.R., Prudente, L.F.: A Wolfe line search algorithm for vector optimization. ACM Trans. Math. Softw. 45(4), 23 (2019)

Mahdavi-Amiri, N., Sadaghiani, F.S.: A superlinearly convergent nonmonotone quasi-newton method for unconstrained multiobjective optimization. Optim. Methods Softw. 35(6), 1223–1247 (2020)

Mascarenhas, W.F.: The BFGS method with exact line searches fails for non-convex objective functions. Math. Program. 99(1), 49–61 (2004)

Miettinen, K.: Nonlinear multiobjective optimization, vol. 12. Springer Science & Business Media, Berlin (1999)

Morovati, V., Basirzadeh, H., Pourkarimi, L.: Quasi-Newton methods for multiobjective optimization problems. 4OR-Q J Oper Res 16(3), 261–294 (2017)

Nocedal, J., Wright, S.J.: Numerical Optimization, 2nd edn. Springer, New York, NY, USA (2006)

Povalej, Z.: Quasi-Newton’s method for multiobjective optimization. J. Comput. Appl. Math. 255, 765–777 (2014)

Powell, M.J.D.: Some global convergence properties of a variable metric algorithm for minimization without exact line searches. Nonlinear Programming, SIAM-AMS Proceedings 4, 53–72 (1976)

Prudente, L.F., Souza, D.R.: A quasi-Newton method with Wolfe line searches for multiobjective optimization. J. Optim. Theory Appl. 194, 1107–1140 (2022)

Qu, S., Goh, M., Chan, F.T.: Quasi-Newton methods for solving multiobjective optimization. Oper. Res. Lett. 39(5), 397–399 (2011)

Qu, S., Liu, C., Goh, M., Li, Y., Ji, Y.: Nonsmooth multiobjective programming with quasi-Newton methods. Eur. J. Oper. Res. 235(3), 503–510 (2014)

Shanno, D.F.: Conditioning of quasi-Newton methods for function minimization. Math. Comput. 24, 647–656 (1970)

Svaiter, B.F.: The multiobjective steepest descent direction is not Lipschitz continuous, but is Hölder continuous. Oper. Res. Lett. 46(4), 430–433 (2018)

Wang, J., Hu, Y., Wai Yu, C.K., Li, C., Yang, X.: Extended Newton methods for multiobjective optimization: majorizing function technique and convergence analysis. SIAM J. Optim. 29(3), 2388–2421 (2019)

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflicts of interest

The authors declare that they have no Conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This work was funded by CAPES and CNPq (Grants 309628/2020-2, 405349/2021-1).

Appendix

Appendix

In the main body of the article, we have chosen to exclude proofs that can be readily derived from existing sources in order to enhance the overall readability of the text. However, in this appendix, we provide these proofs to ensure self-contained completeness.

Notation. The cardinality of a set C is denoted by |C|. The ceiling and floor functions are denoted by \(\lceil \cdot \rceil \) and \(\lfloor \cdot \rfloor \), respectively; i.e., if \(x\in \mathbb {R}\), then \(\lceil x \rceil \) is the least integer greater than or equal to x and \(\lfloor x \rfloor \) is the greatest integer less than or equal to x. The notation \(\varphi (t):=o(t)\) for \(t>0\) means that \(\lim _{t\rightarrow 0}\varphi (t)/t=0\).

1.1 Proofs of Sect. 3

Throughout this section, we assume that Assumption 3.1 holds.

Proof of Proposition 3.2

It follows from (18), the definition of \(\mathcal {D}(\cdot ,\cdot )\), the Cauchy-Schwarz inequality, and Assumption 3.1(iii) that

where the second inequality follows from the fact that, for any \(u,v\in \mathbb {R}^m\), we have \(\max _j(u_j - v_j) \ge \max _ju_j - \max _jv_j\). Hence,

Now, since \(\{x^k\}\subset \mathcal{{L}}\), Assumption 3.1(i)–(ii) implies the existence of \(\mathcal{F}\in \mathbb {R}\) such that \(F_j(x^k)\ge \mathcal{F}\) for all \(k\ge 0\) and \(j=1,\ldots ,m\). Therefore, by (17), we have

Some algebraic manipulations yields

Therefore,

which together with (45) gives (26). \(\square \)

To prove Proposition 3.3, we will make use of function (35). Let us define

Thus, from the same arguments that led to (36), we obtain

where

Note from Lemma 2.4(i) that \(\xi _j^k\ge 0\).

Proof of Proposition 3.3

Let \(k\ge 1\) and \(p \in (0,1)\) be given and set \(\varepsilon {:}{=}1-p\) and \(\bar{p}{:}{=}1-\varepsilon /m\). Let \(j\in \{1,\ldots , m\}\) be an arbitrary index. From (46) and (27), we have

Therefore, since \(\psi (B_j^{k+1})>0\), we obtain

Let \(\mathcal{J}_j^{k}\) be the set consisting of the \(\lceil \bar{p}(k+1) \rceil \) indices corresponding to the \(\lceil \bar{p}(k+1) \rceil \) smallest values of \(\xi _j^\ell \), for \(\ell \le k\), and define \(\bar{\xi }_j^{k}:=\max _{\ell \in \mathcal{J}_j^{k}}\xi _j^\ell \). Then,

where the last inequality is due to \(\lceil \bar{p}(k+1) \rceil \le \bar{p}(k+1)+1.\) By combining the above two inequalities, we get, for all \(\ell \in \mathcal{J}_j^{k}\),

Therefore, by the definition of \(\xi _j^\ell \), we obtain, for all \(\ell \in \mathcal{J}_j^{k}\),

and hence

This means that \(\cos \beta _j^{\ell } \ge \delta _j\) for at least \(\lceil \bar{p}(k+1) \rceil \) values of \(\ell \in \{0,1,\ldots ,k\}\).

Now, let us define \(\delta {:}{=}\min _{j=1,\ldots ,m}\delta _j\) and, for all \(j=1,\ldots ,m\),

It is easy to see that \(\mathcal{J}_j^{k}\subset \mathcal{G}_j^k\), \(\mathcal{G}_j^k\cap \mathcal{B}_j^k=\emptyset \) and \(|\mathcal{G}_j^k|+|\mathcal{B}_j^k|=k+1\). Therefore, by the definition of \(\bar{p}\) and using some properties of the ceiling and floor functions, we have, for all \(j=1,\ldots ,m\),

and hence \(|\mathcal{B}_j^k|\le \lfloor \frac{\varepsilon }{m}(k+1)\rfloor \). Thus,

As consequence, since we also have \(|\mathop \cap _{j=1}^m \mathcal{G}_j^k|\ + |\mathop \cup _{j=1}^m \mathcal{B}_j^k|=k+1\), by using the definition of \(\varepsilon \), it follows that

which concludes the proof. \(\square \)

1.2 Proofs of Sect. 4

In this section, we make use of Assumption 4.1. In particular, and without loss of generality, we assume that \(\{x^k\}\subset U\), where U is a neighborhood of \(x^{*}\) such that (30) and (31) hold.

1.2.1 Proof of Proposition 4.1

We start with some auxiliary technical results.

Lemma A.1

Suppose that Assumption 4.1 holds. Let \(\beta _j^k\) be the angle between the vectors \(s^k\) and \(B_j^ks^k\), for all \(k\ge 0\) and \(j=1,\ldots ,m\). Then, for all \(k\ge 0\),

where \(\delta _k {:}{=}\min _{j =1,\dots ,m } \cos \beta _j^k\).

Proof

For a given \(k\ge 0\), by using the definitions of \(\delta _k\), \(\cos \beta _j^k\), and \(s^k\), we obtain

Therefore, from Lemma 2.2(ii) and (13), we have

Applying the triangle inequality, together with (11), (12), and Lemma 2.3(iv), we obtain:

\(\square \)

Lemma A.2

Suppose that Assumption 4.1 holds. Then, for all \(k\ge 0\), we have:

-

(i)

\(\Vert x^k-x^{*}\Vert \le \dfrac{2}{\underline{L}}\Vert d_{SD}(x^k)\Vert \);

-

(ii)

\(\Vert s^k\Vert \ge \dfrac{(1-\sigma )}{2\,L}\delta _k\Vert d_{SD}(x^k)\Vert \), where \(\delta _k\) is given as in Lemma A.1;

-

(iii)

\(\dfrac{(\gamma _j^{k})^\top s^k}{\Vert s^k\Vert ^2}\ge \underline{L}\), for all \(j=1,\ldots ,m\);

-

(iv)

\(\dfrac{\Vert \gamma _j^{k}\Vert ^2}{(\gamma _j^{k})^\top s^k}\le \dfrac{(2\,L+ \bar{\vartheta }\bar{c})^2}{\underline{L}}\), for all \(j=1,\ldots ,m\) and some constant \(\bar{c}>0.\)

Proof

Consider part (i). For a given value of \(k\ge 0\), consider \(\lambda ^{SD}(x^k)\in \mathbb {R}^m\) as in (15)–(16), and define the scalar-valued function \(F_{SD}:\mathbb {R}^n\rightarrow \mathbb {R}\) as follows:

Therefore, by taking \(z{:}{=}x^{*}-x^k\), it follows from (15) and (31) that

Evaluating this integral (which can be done by integration by parts), and considering that \(d_{SD}(x^k)=-\nabla F_{SD}(x^k)\), we obtain

Given that \(F_j(x^{*})\le F_j(x^k)\) for all \(j=1,\ldots ,m\), we have \(F_{SD}(x^{*})-F_{SD}(x^k)\le 0\) and thus

which proves part (i).

Consider part (ii). By using (18) and the definitions of \(\mathcal{D}(\cdot ,\cdot )\) and \(y_j^k\), we obtain

which, together with (33), yields

where the latter inequality comes from (31). Therefore, taking into account that \(\sigma <1\), by Lemma A.1, we obtain

which gives the desired inequality.

Part (iii) is a direct consequence of (34). Finally, consider part (iv). From (19) and (31), we have

Furthermore, since \(\{x^k\}\subset U\), by (20) and using continuity arguments, there exists a constant \(\bar{c}>0\) such that

and hence, by (21),

Therefore, using the inequality in part (iii), we obtain

concluding the proof. \(\square \)

We are now able to prove Proposition 4.1

Proof of Proposition 4.1

Let \(\lambda ^{SD}(x^{*})\in \mathbb {R}^m\) be a steepest descent multiplier associated with \(x^{*}\) as in (15)–(16), and define the scalar-valued function \(F_{*}:\mathbb {R}^n\rightarrow \mathbb {R}\) as follows:

Note that

where the last equality comes from Lemma 2.3(i). Now, by using (31), we obtain

for all \(j=1,\ldots ,m\) and for all \(k\ge 0\). By multiplying this expression by \(\lambda _j^{SD}(x^{*})\), summing over all indices \(j=1,\ldots ,m\), and taking into account (15) and (47), we obtain

From the right hand side of (48) and Lemma A.2(i), we obtain

On the other hand, (17) gives

Therefore, from Lemma A.1 and Lemma A.2(ii), we have

Hence, by subtracting the term \(F_{*}(x^{*})\) in both sides of the latter inequality, and using (49), we obtain

For each \(k\ge 0\), define \(\bar{r}_k{:}{=}1-\rho (1-\sigma )\underline{L}^2\delta _k^2/(8\,L^2)\). It is easy to see that \(\bar{r}_k\in (0,1]\), for all \(k\ge 0\).

Now, given \(p\in (0,1)\), we can invoke Lemma A.2(iii)–(iv) to apply Proposition 3.3. This implies that there exists a constant \(\delta > 0\) such that, for any \(k\ge 1\), the number of elements \(\ell \in \{0,1,\ldots ,k\}\) for which \(\delta _{\ell } \ge \delta \) is at least \(\lceil p(k+1) \rceil \). By defining \(\mathcal{G}_k{:}{=}\{\ell \in \{0,1,\ldots ,k\}\mid \delta _{\ell } \ge \delta \}\), we have \(|\mathcal{G}_k|\ge \lceil p(k+1) \rceil \) and

Thus, from (50) and considering that \(F_{*}(x^0) - F_{*}(x^{*}) >0\), we obtain, for all \(k\ge 1\),

where the second inequality follows from the fact that \({\bar{r}}_{\ell }\le 1\) for all \(\ell \notin \mathcal{G}_k\). Therefore, by taking \(r:={\bar{r}}^p\), we obtain

Combining this with the left hand side of (48), we find

Finally, by summing this expression and taking into account that \(r<1\), we conclude that (32) holds. \(\square \)

1.2.2 Proof of Theorem 4.3

We start by introducing an auxiliary result.

Lemma A.3

Suppose that Assumptions 4.1 and 4.2 hold. Then, there exists \(\bar{a}>0\) such that

for all k sufficiently large. Moreover,

Proof

By choosing \(\gamma \in (0,1)\) and recalling that \(s^k=\alpha _k d^k\), it follows from (37) that

for all k sufficiently large. Thus, by (31), we obtain

for all k sufficiently large. Therefore, using (12) and (13), we have

for all k sufficiently large. Defining \(\bar{a}{:}{=}\underline{L}(1- \gamma )/2\), we establish (51). Finally, by combining (51), Lemma 2.2(ii), and Proposition 3.2, we obtain

which concludes the proof. \(\square \)

Recalling that \(\lambda ^k\in \mathbb {R}^m\) is the Lagrange multiplier associated to \(x^k\) of problem (7) fulfilling (11)–(12), let us define

Next, we show that the sequence of functions \(\{F_{\lambda }^k(x)\}_{k\ge 0}\) fulfills a Dennis–Moré-type condition.

Theorem A.4

Suppose that Assumptions 4.1 and 4.2 hold. For each \(k\ge 0\), consider \(F_{\lambda }^k:\mathbb {R}^n\rightarrow \mathbb {R}\) and \(B_{\lambda }^k\) as in (53). Then,

or, equivalently,

Proof

By (53) and taking into account (12), we have

which, combined with (38), yields (54). We proceed to show that (54) implies (55). Firstly, considering (11), since \(B_{\lambda }^kd^k=-\nabla F_{\lambda }^k(x^k) \), it follows that (55) is equivalent to

Note that

and, by using continuity arguments,

Therefore, combining the two latter inequalities, we obtain (56). The proof that (55) implies (54) can be obtained similarly. \(\square \)

The following result shows that the unit step size eventually satisfies the Wolfe conditions (17)–(18).

Theorem A.5

Suppose that Assumptions 4.1 and 4.2 hold. Then, the step size \(\alpha _k =1\) is admissible for all k sufficiently large.

Proof

Let \(j\in \{1,\ldots ,m\}\) be an arbitrary index. It is easy to see that (38) is equivalent to

Thus, by Taylor’s theorem, it follows that

Therefore, by using (6) and setting \(t{:}{=}2\rho <1\), we have

Consequently, according to (51), for sufficiently large k,

As the term in square brackets is negative for k large enough, we conclude that

On the other hand, combining (11)–(13), we find

Hence, from the last two inequalities and the definition of t, we obtain

for all k sufficiently large. Given the arbitrary choice of \(j\in \{1,\ldots ,m\}\), we conclude that the step size \(\alpha _k =1\) satisfies (17) for all sufficiently large k.

Consider the curvature condition (18). From the definition of \(F_{\lambda }^k\) in (53), we have

Thus, by (12), (31), and (55), we obtain

Hence, taking into account (52) and (57), for k sufficiently large, it follows that

On the other hand, applying the Mean Value Theorem to the scalar function \(\nabla F_{\lambda }^k(\cdot )^\top d^k\), there exists \(v^k{:}{=}x^k+t_kd^k\) for some \(t_k\in (0,1)\) such that

Therefore,

Now, by the definitions of \(F_{\lambda }^k\) and \(v^k\), and considering (12) and (52), we obtain

Thus, combining the latter two inequalities with (55), we have

Hence, for k large enough, we have

which, together with (58), yields

Therefore, by the definition of \(\mathcal {D}(\cdot ,\cdot )\), (12), and Lemma 2.2(ii), we obtain

for all k sufficiently large, concluding the proof. \(\square \)

We require an additional auxiliary result.

Lemma A.6

Suppose that Assumption 4.1 holds. Then,

where \(\varepsilon _k:=\max \{\Vert x^{k+1}-x^{*}\Vert ^{\nu },\Vert x^{k}-x^{*}\Vert ^{\nu }\}\).

Proof

By the definition of \(F_{\lambda }^k\) in (53) and taking into account (12), we obtain

On the other hand, for each \(j\in \{1,\ldots ,m\}\), using (33) and (30), we have

By combining the last two inequalities, we obtain the desired result. \(\square \)

Now, we can establish the superlinear convergence of Algorithm 1.

Theorem A.7

Suppose that Assumptions 4.1 and 4.2 hold. Then, \(\{x^k\}\) converges to \(x^{*}\) superlinearly.

Proof

According to Theorem A.5, \(d^k=x^{k+1}-x^k\) for all k sufficiently large. Consequently, \(B_{\lambda }^k (x^{k+1} - x^k) = - \nabla F_{\lambda }^k(x^k)\) (see (11)), and hence

for all k sufficiently large. Therefore,

for all k sufficiently large. Taking limits on both sides of the latter inequality, using (54) and Lemma A.6, we get

On the other hand, considering the definition of \(F_{\lambda }^k\) in (53), Lemma 2.3(iv), and Lemma A.2(i), we find that

Therefore, by using (59), we conclude that

which completes the proof. \(\square \)

Proof of Theorem 4.3

The proof follows straightforwardly from Theorems A.5 and A.7. \(\square \)

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Prudente, L.F., Souza, D.R. Global convergence of a BFGS-type algorithm for nonconvex multiobjective optimization problems. Comput Optim Appl 88, 719–757 (2024). https://doi.org/10.1007/s10589-024-00571-x

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10589-024-00571-x

Keywords

- Multiobjective optimization

- Pareto optimality

- Quasi-Newton methods

- BFGS

- Wolfe line search

- Global convergence

- Rate of convergence