Abstract

This paper considers the problem of minimizing the summation of a differentiable function and a nonsmooth function on a Riemannian manifold. In recent years, proximal gradient method and its variants have been generalized to the Riemannian setting for solving such problems. Different approaches to generalize the proximal mapping to the Riemannian setting lead different versions of Riemannian proximal gradient methods. However, their convergence analyses all rely on solving their Riemannian proximal mapping exactly, which is either too expensive or impracticable. In this paper, we study the convergence of an inexact Riemannian proximal gradient method. It is proven that if the proximal mapping is solved sufficiently accurately, then the global convergence and local convergence rate based on the Riemannian Kurdyka–Łojasiewicz property can be guaranteed. Moreover, practical conditions on the accuracy for solving the Riemannian proximal mapping are provided. As a byproduct, the proximal gradient method on the Stiefel manifold proposed in Chen et al. [SIAM J Optim 30(1):210–239, 2020] can be viewed as the inexact Riemannian proximal gradient method provided the proximal mapping is solved to certain accuracy. Finally, numerical experiments on sparse principal component analysis are conducted to test the proposed practical conditions.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Proximal gradient method and its variants are family of efficient algorithms for composite optimization problems of the form

where f is differentiable, and g is continuous but could be nonsmooth. In the simplest form, the method updates the iterate viaFootnote 1

where \({\left\langle u,v \right\rangle _{{{\,\mathrm{\mathrm {F}}\,}}}} = u^T v\) and \(\Vert u\Vert _{{{\,\mathrm{\mathrm {F}}\,}}}^2 = {\left\langle u,u \right\rangle _{{{\,\mathrm{\mathrm {F}}\,}}}}\). The idea is to simplify the objective function in each iteration by replacing the differentiable term f with its first order approximation around the current iterate. In many applications, the proximal mapping has a closed-form solution or can be computed efficiently. Thus, the algorithm has low per iteration cost and is applicable for large-scale problems. For convergence analysis of proximal gradient methods, we refer the interested readers to [1,2,3,4,5,6,7] and references therein.

This paper considers a problem similar to (1) but with a manifold constraint,

where \(\mathcal {M}\) is a finite dimensional Riemannian manifold. Such optimization problem is of interest due to many important applications including but not limit to compressed models [8], sparse principal component analysis [9, 10], sparse variable principal component analysis [11,12,13], discriminative k-means [14], texture and imaging inpainting [15], co-sparse factor regression [16], and low-rank sparse coding [17, 18].

In the presence of the manifold constraints, developing Riemannian proximal gradient methods is more difficult due to nonlinearity of the domain. The update formula in (2) can be generalized to the Riemannian setting using a standard technique, i.e., via the notion of retraction. However, generalizing the proximal mapping to the Riemannian setting is not straightforward and different versions have been proposed. In [19], a proximal gradient method on the Stiefel manifold called ManPG, is proposed and analyzed by generalizing the proximal mapping (2) to

via the restriction of the search direction \(\eta\) onto the tangent space at \(x_k\). It is shown that such proximal mapping can be solved efficiently by a semi-smooth Newton method when the manifold \(\mathcal {M}\) is the Stiefel manifold. In [10], a diagonal weighted proximal mapping is defined by replacing \(\Vert \eta \Vert _F^2\)in (4) with \({\left\langle \eta ,W \eta \right\rangle _{{{\,\mathrm{\mathrm {F}}\,}}}}\), where the diagonal weighted linear operator W is carefully selected. Moreover, the Nesterov momentum acceleration technique is further introduced to accelerate the algorithm, yielding an algorithm called AManPG. Note that the Riemannian proximal mappings (4) involves the calculation of the addition, i.e., \(x_k + \eta\), which cannot be defined on a generic manifold. In [20], a Riemannian proximal gradient method, called RPG, is proposed by replacing the addition \(x_k + p\) with a retraction \(R_{x_k}(\eta )\), so that it is well-defined for generic manifolds. In addition, the Riemannian metric \({\left\langle , \right\rangle _{x}}\) is further used instead of the Euclidean inner product \({\left\langle , \right\rangle _{{{\,\mathrm{\mathrm {F}}\,}}}}\), and a stationary point is used instead of a minimizer. More precisely, letting

the Riemannian proximal mapping in RPG is given by

Unlike ManPG and AManPG that only guarantee global convergence, the local convergence rate of RPG has also been established in terms of Riemannian KL property.

The convergence analyses of Riemannian proximal gradient methods in [10, 19, 20] all rely on solving proximal mappings (4) and (5) exactly. Unlike the Euclidean cases, the existing Riemannian proximal mappings rarely yield a closed-form solution. When the manifold \(\mathcal {M}\) has a linear ambient space, (4) and (5) can be solved based on the semi-smooth Newton method described in [19, 20]. These methods solve a semi-smooth nonlinear systems with dimension equal to the dimension of the normal space. If the dimension of the normal space is low, such as the Stiefel manifold \({{\,\mathrm{\mathrm {St}}\,}}(p, n)\) with \(p \ll n\), then these semi-smooth Newton based methods are shown to be efficient. Otherwise, these methods can be inefficient. Such manifolds and applications include the manifold of fixed rank matrices for texture completion in [15], the manifold of fixed tucker rank tensors for genomic data analysis in [21], and the manifold of symmetric positive definite matrices for sparse inverse covariance matrix estimation in [22]. Therefore, finding an exact or high accurate solution is either not practicable due to numerical errors or may take too much computational time. Meanwhile, it is crucial to study the convergence of the inexact Riemannian proximal gradient method (i.e., the method without solving the proximal mapping (5) exactly), which is essentially the goal of this paper. The main contributions of this paper can be summaried as follows:

-

A general framework of the inexact RPG method is presented in Section 3. The global convergence as well as the local convergence rate of the method are respectively studied in Sects. 3.1 and 3.2 based on different theoretical conditions. The local convergence analysis is based on the Riemannian KL property.

-

It is shown in Sect. 4.1 that if we solve (4) to certain accuracy, the global convergence of the inexact RPG can be guaranteed. As a result, ManPG in [19] can be viewed as the inexact RPG method with the proximal mapping (5), and it is not necessary to solve (4) exactly for ManPG to enjoy global convergence.

-

Under the assumption g is retraction convex, a practical condition which meets the requirement for the local convergence rate analysis is provided in Sect. 4.2. The condition is derived based on the notion of error bound.

Inexact proximal gradient methods have been investigated in the Euclidean setting, see e.g., [23,24,25,26,27]. Multiple practical termination criteria for the inexact proximal mapping have been given such that the global convergence and local convergence rate are preserved. However, these criteria, the corresponding theoretical results, and the algorithm design all rely on the convexity of the function in the proximal mapping. Therefore, these methods can not be trivially generalized to the Riemannian setting since the objective function in the Riemannian proximal mapping (5) may not be convex due to the existence of a retraction. Note that for the inexact Riemannian proximal gradient method, the global and local convergence analyses and the condition that guarantees global convergence all do not assume convexity of the Riemannian proximal mapping. The convexity assumption is only made for the algorithm design that guarantees local convergence rate.

The rest of this paper is organized as follows. Notation and preliminaries about manifolds are given in Sect. 2. The inexact Riemannian proximal gradient method is presented in Sect. 3, followed by the convergence analysis. Section 4 gives practical conditions on the accuracy for solving the inexact Riemannian proximal mapping when the manifold has a linear ambient space. Numerical experiments are presented in Sect. 5 to test the practical conditions.

2 Notation and preliminaries on manifolds

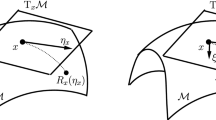

The Riemannian concepts of this paper follow from the standard literature, e.g., [28, 29] and the related notation follows from [29]. A Riemannian manifold \(\mathcal {M}\) is a manifold endowed with a Riemannian metric \((\eta _x, \xi _x) \mapsto {\left\langle \eta _x, \xi _x \right\rangle _{x}} \in \mathbb {R}\), where \(\eta _x\) and \(\xi _x\) are tangent vectors in the tangent space of \(\mathcal {M}\) at x. The induced norm in the tangent space at x is denoted by \(\Vert \cdot \Vert _x\) or \(\Vert \cdot \Vert\) when the subscript is clear from the context. The tangent space of the manifold \(\mathcal {M}\) at x is denoted by \(\mathrm {T}_x \mathcal {M}\), and the tangent bundle, which is the set of all tangent vectors, is denoted by \(\mathrm {T}\mathcal {M}\). A vector field is a function from the manifold to its tangent bundle, i.e., \(\eta :\mathcal {M} \rightarrow \mathrm {T}\mathcal {M}: x\mapsto \eta _x\). An open ball on a tangent space is denoted by \(\mathcal {B}(\eta _x, r) = \{\xi _x \in \mathrm {T}_{x} \mathcal {M} \mid \Vert \xi _x - \eta _x\Vert _x < r\}\). An open ball on the manifold is denoted by \(\mathbb {B}(x, r) = \{ y \in \mathcal {M} \mid {{\,\mathrm{\mathrm {dist}}\,}}(y, x) < r \}\), where \({{\,\mathrm{\mathrm {dist}}\,}}(x, y)\) denotes the distance between x and y on \(\mathcal {M}\).

A retraction is a smooth (\(C^\infty\)) mapping from the tangent bundle to the manifold such that (i) \(R(0_x) = x\) for all \(x \in \mathcal {M}\), where \(0_x\) denotes the origin of \(\mathrm {T}_x \mathcal {M}\), and (ii) \(\frac{d}{d t} R(t \eta _x) \vert _{t = 0} = \eta _x\) for all \(\eta _x \in \mathrm {T}_x \mathcal {M}\). The domain of R does not need to be the entire tangent bundle. However, it is usually the case in practice, and in this paper we assume R is always well-defined. Moreover, \(R_x\) denotes the restriction of R to \(\mathrm {T}_x \mathcal {M}\). For any \(x \in \mathcal {M}\), there always exists a neighborhood of \(0_x\) such that the mapping \(R_x\) is a diffeomorphism in the neighborhood. An important retraction is the exponential mapping, denoted by \({\mathrm {Exp}}\), satisfying \({\mathrm {Exp}}_x(\eta _x) = \gamma (1)\), where \(\gamma (0) = x\), \(\gamma '(0) = \eta _x\), and \(\gamma\) is the geodesic passing through x. In a Euclidean space, the most common retraction is the exponential mapping given by addition \({{\,\mathrm{\mathrm {Exp}}\,}}_x(\eta _x) = x + \eta _x\). If the ambient space of the manifold \(\mathcal {M}\) is a finite dimensional linear space, i.e., \(\mathcal {M}\) is an embedded submanifold of \(\mathbb {R}^n\) or a quotient manifold whose total space is an embedded submanifold of \(\mathbb {R}^n\), then there exist two constants \(\varkappa _1\) and \(\varkappa _2\) such that the inequalities

hold for any \(x \in \mathcal {N}\) and \(R_x(\eta _x) \in \mathcal {N}\), where \(\mathcal {N}\) is a compact subset of \(\mathcal {M}\).

A vector transport \(\mathcal {T}: \mathrm {T}\mathcal {M} \oplus \mathrm {T}\mathcal {M} \rightarrow \mathrm {T}\mathcal {M}: (\eta _x, \xi _x) \mapsto \mathcal {T}_{\eta _x} \xi _x\) associated with a retraction R is a smooth (\(C^\infty\)) mapping such that, for all \((x, \eta _x)\) in the domain of R and all \(\xi _x \in \mathrm {T}_x \mathcal {M}\), it holds that (i) \(\mathcal {T}_{\eta _x} \xi _x \in \mathrm {T}_{R(\eta _x)} \mathcal {M}\) (ii) \(\mathcal {T}_{0_x} \xi _x = \xi _x\), and (iii) \(\mathcal {T}_{\eta _x}\) is a linear map. An important vector transport is the parallel translation, denoted \(\mathcal {P}\). The basic idea behind the parallel translation is to move a tangent vector along a given curve on a manifold “parallelly”. We refer to [29] for its rigorous definition. The vector transport by differential retraction \(\mathcal {T}_R\) is defined by \(\mathcal {T}_{R_{\eta _x}} \xi _x = \frac{d}{d t} R_{x}(\eta _x + t \xi _x) \vert _{t = 0}\). The adjoint operator of a vector transport \(\mathcal {T}\), denoted by \(\mathcal {T}^\sharp\), is a vector transport satisfying \({\left\langle \xi _y,\mathcal {T}_{\eta _x} \zeta _x \right\rangle _{y}} = {\left\langle \mathcal {T}_{\eta _x}^\sharp \xi _y,\zeta _x \right\rangle _{x}}\) for all \(\eta _x, \zeta _x \in \mathrm {T}_x \mathcal {M}\) and \(\xi _y \in \mathrm {T}_y \mathcal {M}\), where \(y = R_x(\eta _x)\). In the Euclidean setting, a vector transport \(\mathcal {T}_{\eta _x}\) for any \(\eta _x \in \mathrm {T}_x \mathcal {M}\) can be represented by a matrix (the commonly-used vector transport is the identity matrix). Then the adjoint operators of a vector transport are given by the transpose of the corresponding matrix.

The Riemannian gradient of a function \(h:\mathcal {M} \rightarrow \mathbb {R}\), denote \({{\,\mathrm{\mathrm {grad}}\,}}h(x)\), is the unique tangent vector satisfying:

where \({{\,\mathrm{\mathrm {D}}\,}}h(x) [\eta _x]\) denotes the directional derivative along the direction \(\eta _x\).

A function \(h:\mathcal {M} \rightarrow \mathbb {R}\) is called locally Lipschitz continuous with respect to a retraction R if for any compact subset \(\mathcal {N}\) of \(\mathcal {M}\), there exists a constant \(L_h\) such that for any \(x \in \mathcal {N}\) and \(\xi _x, \eta _x \in \mathrm {T}_x \mathcal {M}\) satisfying \(R_x(\xi _x) \in \mathcal {N}\) and \(R_x(\eta _x) \in \mathcal {N}\), it holds that \(\vert h \circ R (\xi _x) - h \circ R(\eta _x) \vert \le L_{h} \Vert \xi _x - \eta _x\Vert\). If h is Lipschitz continuous but not differentiable, then the Riemannian version of generalized subdifferential defined in [30] is used. Specifically, since \({\hat{h}}_x = h \circ R_x\) is a Lipschitz continuous function defined on a Hilbert space \(\mathrm {T}_x \mathcal {M}\), the Clarke generalized directional derivative at \(\eta _x \in \mathrm {T}_x \mathcal {M}\), denoted by \({\hat{h}}_x^\circ (\eta _x; v)\), is defined by \({\hat{h}}_x^\circ (\eta _x; v) = \lim _{\xi _x \rightarrow \eta _x} \sup _{t \downarrow 0} \frac{{\hat{h}}_x(\xi _x + t v) - {\hat{h}}_x(\xi _x)}{t}\), where \(v \in \mathrm {T}_x \mathcal {M}\). The generalized subdifferential of \({\hat{h}}_x\) at \(\eta _x\), denoted \(\partial {\hat{h}}_x(\eta _x)\), is defined by \(\partial {\hat{h}}_x(\eta _x) = \{\eta _x \in \mathrm {T}_x \mathcal {M} \mid {\left\langle \eta _x,v \right\rangle _{x}} \le {\hat{h}}_x^\circ (\eta _x; v)\;\hbox {for all}\; v \in \mathrm {T}_x \mathcal {M}\}\). The Riemannian version of the Clarke generalized direction derivative of h at x in the direction \(\eta _x \in \mathrm {T}_x \mathcal {M}\), denoted \(h^\circ (x; \eta _x)\), is defined by \(h^\circ (x; \eta _x) = {\hat{h}}_x^\circ (0_x; \eta _x)\). The generalized subdifferential of h at x, denoted \(\partial h(x)\), is defined as \(\partial h(x) = \partial {\hat{h}}_x(0_x)\). Any tangent vector \(\xi _x \in \partial h(x)\) is called a Riemannian subgradient of h at x.

A vector field \(\eta\) is called Lipschitz continuous if there exist a positive injectivity radius \(i(\mathcal {M})\) and a positive constant \(L_v\) such that for all \(x, y \in \mathcal {M}\) with \({{\,\mathrm{\mathrm {dist}}\,}}(x, y) < i(\mathcal {M})\), it holds that

where \(\gamma\) is a geodesic with \(\gamma (0) = x\) and \(\gamma (1) = y\), the injectivity radius \(i(\mathcal {M})\) is defined by \(i(\mathcal {M}) = \inf _{x \in \mathcal {M}} i_x\) and \(i_x = \sup \{\epsilon > 0 \mid {{\,\mathrm{\mathrm {Exp}}\,}}_x\vert _{\mathbb {B}(x, \epsilon )}\;\hbox {is a diffeomorphism}\}\). Note that for any compact manifold, the injectivity radius is positive [31, Lemma 6.16]. A vector field \(\eta\) is called locally Lipschitz continuous if for any compact subset \({\bar{\Omega }}\) of \(\mathcal {M}\), there exists a positive constant \(L_v\) such that for all \(x, y \in {\bar{\Omega }}\) with \({{\,\mathrm{\mathrm {dist}}\,}}(x, y) < i({{\bar{\Omega }}})\), inequality (8) holds. A function on \(\mathcal {M}\) is called (locally) Lipschitz continuous differentiable if the vector field of its gradient is (locally) Lipschitz continuous.

Let \(\tilde{\Omega }\) be a subset of \(\mathcal {M}\). If there exists a positive constant \(\varrho\) such that, for all \(y \in \tilde{\Omega }, \tilde{\Omega } \subset R_y(\mathcal {B}(0_y, \varrho ))\) and \(R_y\) is a diffeomorphism on \(\mathcal {B}(0_y, \varrho )\), then we call \(\tilde{\Omega }\) a totally retractive set with respect to \(\varrho\). The existence of \(\tilde{\Omega }\) can be shown along the lines of [32, Theorem 3.7], i.e., given any \(x \in \mathcal {M}\), there exists a neighborhood of x which is a totally retractive set.

In a Euclidean space, the Euclidean metric is denoted by \({\left\langle \eta _x, \xi _x \right\rangle _{{{\,\mathrm{\mathrm {F}}\,}}}}\), where \({\left\langle \eta _x, \xi _x \right\rangle _{{{\,\mathrm{\mathrm {F}}\,}}}}\) is equal to the summation of the entry-wise products of \(\eta _x\) and \(\xi _x\), such as \(\eta _x^T \xi _x\) for vectors and \({{\,\mathrm{\mathrm {trace}}\,}}(\eta _x^T \xi _x)\) for matrices. The induced Euclidean norm is denoted by \(\Vert \cdot \Vert _{\mathrm {F}}\). For any matrix M, the spectral norm is denoted by \(\Vert M\Vert _2\). For any vector \(v \in \mathbb {R}^n\), the p-norm, denoted \(\Vert v\Vert _p\), is equal to \(\left( \sum _{i = 1}^n {}{\vert v_i \vert }^p \right) ^{\frac{1}{p}}\). In this paper, \(\mathbb {R}^n\) does not only refer to a vector space, but also can refer to a matrix space or a tensor space.

3 An inexact Riemannian proximal gradient method

The proposed inexact Riemannian proximal gradient method (IRPG) is stated in Algorithm 1. The search direction \(\hat{\eta }_{x_k}\) at the k-th iteration solves the proximal mapping

approximately in the sense that its distance to a stationary point \(\eta _{x_k}^*\), \(\Vert \hat{\eta }_{x_k} - \eta _{x_k}^*\Vert\), is controlled from above by a continuous function q of (\(\varepsilon _k\), \(\Vert \hat{\eta }_{x_k}\Vert\)) and the function value of \(\ell _{x_k}\) satisfies \(\ell _{x_k}(0) \ge \ell _{x_k}(\hat{\eta }_{x_k})\). To the best of our knowledge, this is not Riemannian generalization of any existing Euclidean inexact proximal gradient methods. Specifically, given the exact Euclidean proximal mapping defined by \({{\,\mathrm{\mathrm {Prox}}\,}}_{\lambda g}(y) = \mathop {\mathrm {arg\,min}}\limits _{x} \Phi _{\lambda }(x) := \lambda g(x) + \frac{1}{2} \Vert x - y\Vert _{{{\,\mathrm{\mathrm {F}}\,}}}^2\), letting \(z = {{\,\mathrm{\mathrm {Prox}}\,}}_{\lambda g}(y)\), it follows that \((y - z) / \lambda \in \partial ^E g(z)\) and \({{\,\mathrm{\mathrm {dist}}\,}}(0, \partial ^E \Phi _{\lambda }(z)) = 0\), where \(\partial ^E\) denotes the Euclidean subdifferential. Based on these observations, the inexact Euclidean proximal mappings proposed in [25,26,27, 33] only require z to satisfy any one of the following conditions:

where \(\partial _{\epsilon }^E\) denotes the Euclidean \(\epsilon\)-subdifferential. The corresponding analyses and algorithms rely on the properties of \(\epsilon\)-subdifferential of convex functions. However, since the function \(\ell _{x_k}(\eta )\) is not necessarily convex, these techniques cannot be applied. Note that if g is convex and \(\mathcal {M}\) is a Euclidean space, then the function \(\Phi\) is strongly convex. Therefore, the solutions of the inexact Euclidean proximal mappings in (10) all satisfy (11) with certain q by choosing an appropriate choice of \(\varepsilon\).

In Algorithm 1, q controls the accuracy for solving the proximal mapping and different accuracies lead to different convergence results. Here we give four choices of q:

-

(1)

\(q(\varepsilon _k, \Vert \hat{\eta }_{x_k}\Vert ) = \varepsilon _k\) with \(\varepsilon _k \rightarrow 0\);

-

(2)

\(q(\varepsilon _k, \Vert \hat{\eta }_{x_k}\Vert ) = \tilde{q}(\Vert \hat{\eta }_{x_k}\Vert )\) with \(\tilde{q}: \mathbb {R} \rightarrow [0, \infty )\) a continuous function satisfying \(\tilde{q}(0) = 0\);

-

(3)

\(q(\varepsilon _k, \Vert \hat{\eta }_{x_k}\Vert ) = \varepsilon _k^2\), with \(\sum _{k = 0}^{\infty } \varepsilon _k < \infty\); and

-

(4)

\(q(\varepsilon _k, \Vert \hat{\eta }_{x_k}\Vert ) = \min (\varepsilon _k^2, \delta _q \Vert \hat{\eta }_{x_k}\Vert ^2)\) with a constant \(\delta _q > 0\) and \(\sum _{k = 0}^{\infty } \varepsilon _k < \infty\).

The four choices all satisfy the requirement for the global convergence in Theorem 4, with the first one being the weakest. A practical scheme discussed in Sect. 4.1 can yield a \(\hat{\eta }_{x_k}\) that satisfies the second choice. The third q guarantees that the accumulation point is unique as shown in Theorem 7. The last q allows us to establish convergence rate analysis of Algorithm 1 based on the Riemannian KL property, as shown in Theorem 8. The practical scheme for generating \(\hat{\eta }_{x_k}\) that satisfies the third and fourth choices is discussed in Sect. 4.2.

3.1 Global convergence analysis

The global convergence analysis is over similar to that in [20] and relies on Assumptions 1 and 2 below. Assumption 1 is mild in the sense that it holds if the manifold \(\mathcal {M}\) is compact and the function F is continuous.

Assumption 1

The function F is bounded from below and the sublevel set \(\Omega _{x_0} = \{x \in \mathcal {M} \mid F(x) \le F(x_0)\}\) is compact, where \(x_0\) is the initiate iterate of Algorithm 1.

Definition 1 has been used in [20, 34]. It generalizes the L-smoothness from the Euclidean setting to the Riemannian setting. It says that if the composition \(h \circ R\) satisfies the Euclidean version of L-smoothness, then h is called a L-retraction-smooth function.

Definition 1

Given \(L > 0\), a function \(h:\mathcal {M} \rightarrow \mathbb {R}\) is called L-retraction-smooth with respect to a retraction R in a subset \(\mathcal {N}\) of the manifold \(\mathcal {M}\), i.e., \(\mathcal {N} \subseteq \mathcal {M}\), if for any \(x \in \mathcal {N}\) and any \(\mathcal {S}_x \subseteq \mathrm {T}_x \mathcal {M}\) such that \(R_x(\mathcal {S}_x)\subseteq \mathcal {N}\), we have that

In Assumption 2, we assume that the function f is L-retraction-smooth in the sublevel set \(\Omega _{x_0}\). This is also mild and practical methods to verify this assumption have been given in [34, Lemma 2.7].

Assumption 2

The function f is L-retraction-smooth with respect to the retraction R in the sublevel set \(\Omega _{x_0}\).

Lemma 3 shows that IRPG is a descent algorithm. The short proof is the same as that for [20, Lemma 1], but included for completeness.

Lemma 3

Suppose Assumption 2holds and \(\tilde{L} > L\). Then the sequence \(\{x_k\}\) generated by Algorithm 1 satisfies

where \(\beta = (\tilde{L} - L) / 2\).

Proof

By the definition of \(\hat{\eta }_{x_k}\) and the L-retraction-smooth of f, we have

which completes the first result. \(\square\)

Now, we are ready to give a global convergence analysis of IRPG.

Theorem 4

Suppose that Assumptions 1and 2hold, that \(\tilde{L} > L\), and that \(\lim _{k \rightarrow \infty } q(\varepsilon _k, \Vert \hat{\eta }_{x_k}\Vert ) = 0\). Then the sequence \(\{x_k\}\) has at least one accumulation point. Let \(x_*\) be any accumulation point of the sequence \(\{x_k\}\). Then \(x_*\) is a stationary point. Furthermore, Algorithm 1 returns \(x_k\) satisfying \(\Vert \hat{\eta }_{x_k}\Vert \le \epsilon\) in at most \((F(x_0) - F(x_*)) / (\beta \epsilon ^2)\) iterations.

Proof

The proof mainly follows the proof in [20, Theorem 1]. Here, we only highlight the differences. The existence of an accumulation point follows immediately from Assumption 1 and Lemma 3.

By Lemma 3, we have that \(F(x_0) - F(\tilde{x}) \ge \beta \sum _{i = 0}^\infty \Vert \hat{\eta }_{x_k}\Vert ^2\), where \(\tilde{x}\) denotes a global minimizer of F. Therefore,

By \(\lim _{k \rightarrow \infty } q(\varepsilon _k, \Vert \hat{\eta }_{x_k}\Vert ) = 0\), we have

The remaining of the proof follows [20, Theorem 1]. \(\square\)

3.2 Local convergence rate analysis using Riemannian Kurdyka–Łojasiewicz property

The KL property has been widely used for the convergence analysis of various convex and nonconvex algorithms in the Euclidean case, see e.g., [6, 35,36,37]. In this section we will study the convergence of RPG base on the Riemannian Kurdyka–Łojasiewicz (KL) property, introduced in [38] for the analytic setting and in [39] for the nonsmooth setting. Note that a convergence analysis based on KL property for a Euclidean inexact proximal gradient has been given in [27]. As we pointed out before, the convergence analysis and algorithm design therein rely on the convexity of the objective in the proximal mapping.

Definition 2

A continuous function \(f: \mathcal {M} \rightarrow \mathbb {R}\) is said to have the Riemannian KL property at \(x \in \mathcal {M}\) if and only if there exists \(\varepsilon \in (0, \infty ]\), a neighborhood \(U \subset \mathcal {M}\) of x, and a continuous concave function \(\varsigma : [0, \varepsilon ] \rightarrow [0, \infty )\) such that

-

\(\varsigma (0) = 0\),

-

\(\varsigma\) is \(C^1\) on \((0, \varepsilon )\),

-

\(\varsigma ' > 0\) on \((0, \varepsilon )\),

-

For every \(y \in U\) with \(f(x)< f(y) < f(x) + \varepsilon\), we have

$$\begin{aligned} \varsigma '(f(y) - f(x)) {{\,\mathrm{\mathrm {dist}}\,}}(0, \partial f(y)) \ge 1, \end{aligned}$$where \({{\,\mathrm{\mathrm {dist}}\,}}(0, \partial f(y)) = \inf \{ \Vert v\Vert _y : v \in \partial f(y) \}\) and \(\partial\) denotes the Riemannian generalized subdifferential. The function \(\varsigma\) is called the desingularising function.

Note that the definition of the Riemannian KL property is overall similar to the KL property in the Euclidean setting, except that related notions including U, \(\partial f(y)\) and \({{\,\mathrm{\mathrm {dist}}\,}}(0, \partial f(y))\) are all defined on a manifold. In [20, 39, 40], sufficient conditions to verify if a function satisfies the Riemannian KL condition are given. Specifically, in [20, Section 3.4], it is shown that any restriction of a semialgebraic function onto the Stiefel manifold satisfies the Riemannian KL property. In [40], Lemma 4 therein gives a necessary and sufficient condition to verify if a function defined on an embedded submanifold satisfies the Riemannian KL property by verifying whether its extension satisfies the Euclidean KL property.

Assumptions 5 and 6 are used for the convergence analysis in this section. Assumption 5 is a standard assumption and has been made in e.g., [6], when the manifold \(\mathcal {M}\) is the Euclidean space.

Assumption 5

The function \(f:\mathcal {M} \rightarrow \mathbb {R}\) is locally Lipschitz continuously differentiable.

Assumption 6

The function F is locally Lipschitz continuous with respect to the retraction R.

In order to guarantee the uniqueness of accumulation points, the Riemannian proximal mapping needs to be solved more accurately than (11), as shown in Theorem 7.

Theorem 7

Let \(\{x_k\}\) denote the sequence generated by Algorithm 1 and \(\mathcal {S}\) denote the set of all accumulation points. Suppose Assumptions 1, 2, 5and 6hold. We further assume that \(F = f + g\) satisfies the Riemannian KL property at every point in \(\mathcal {S}\). If the Riemannian proximal mapping (5) is solved such that for all k,

that is, \(q(\varepsilon _k, \Vert \hat{\eta }_{x_k}\Vert ) = \varepsilon _k^2\) and \(\sum _{k=1}^{\infty } \varepsilon _k < \infty\). Then,

It follows that \(\mathcal {S}\) only contains a single point.

Proof

First note that the global convergence result in Theorem 4 implies that every point in \(\mathcal {S}\) is a stationary point. Since \(\lim _{k \rightarrow \infty } \Vert \hat{\eta }_{x_k}\Vert = 0\), there exists a \(\delta _T>0\) such that \(\Vert \hat{\eta }_{x_k}\Vert \le \delta _T\) for all k. Thus, the application of [20, Lemma 6] implies that

Then by [37, Remark 5], we know that \(\mathcal {S}\) is a compact set. Moreover, since \(F(x_k)\) is nonincreasing and F is continuous, F has the same value at all the points in \(\mathcal {S}\). Therefore, by [20, Lemma 5], there exists a single desingularising function, denoted \(\varsigma\), for the Riemannian KL property of F to hold at all the points in \(\mathcal {S}\).

Let \(x_*\) be a point in \(\mathcal {S}\). Assume there exists \({\bar{k}}\) such that \(x_{{\bar{k}}}=x_*\). Since \(F(x_k)\) is non-increasing, it must hold \(F(x_{{\bar{k}}})=F(x_{{{\bar{k}}}+1})\). By Lemma 3, we have \(\eta _{x_{{\bar{k}}}}^*=0\), \(x_{{\bar{k}}}=x_{{{\bar{k}}}+1}\), (16) holds evidently.

In the case when \(F(x_k)>F(x_*)\) for all k, Since \(\eta _{x_k}^* \rightarrow 0\), we have \(F(R_{x_k}(\eta _{x_k}^*)) \rightarrow F(x_*)\), \({{\,\mathrm{\mathrm {dist}}\,}}(R_{x_k}(\eta _{x_k}^*), \mathcal {S}) \rightarrow 0\). By the Riemannian KL property of F on \(\mathcal {S}\), there exists an \(l>0\) such that

It follows that

Since \(\lim _{k \rightarrow \infty } \Vert \eta _{x_{k}}^*\Vert = 0\), there exists a constant \(k_0 > 0\) such that \(\Vert \eta _{x_{k}}^*\Vert < \mu\) for all \(k > k_0\), where \(\mu\) is defined in [20, Lemma 7]. By Assumption 5 and [20, Lemma 7], we have

for all \(k \ge k_0\), where \(L_c\) is a constant. By the definition of \(\eta _{x_{k}}^*\), there exists \(\zeta _{x_{k}} \in \partial g( R_{x_{k}} (\eta _{x_{k}}^*) )\) such that

It follows that

Therefore, (19) and (21) yield

for all \(k > k_0\). Inserting this into (18) gives

Define \(\Delta _{p, q} = \varsigma (F(x_{p})-F(x_*))-\varsigma (F(x_{q})-F(x_*))\). We next show that for sufficiently large k,

where \(b_0 = \frac{L_c}{\beta }\), \(b_1 = \frac{L_F}{\beta }\), and \(L_F\) is the Lipschitz constant of F. To the end, we consider two cases:

-

Case 1: \(F(x_k) = F(R_{x_{k-1}}(\hat{\eta }_{x_{k-1}})) \le F(R_{x_{k-1}}(\eta _{x_{k-1}}^*))\).

We have

$$\begin{aligned}&\varsigma (F(x_{k})-F(x_*))-\varsigma (F(x_{k+1})-F(x_*)) \\&\quad \ge \varsigma '(F(x_{k})-F(x_*))(F(x_{k})-F(x_{k+1})) \\&\quad \ge \varsigma '(F(R_{x_{k-1}}(\eta _{x_{k-1}}^*))-F(x_*))(F(x_{k})-F(x_{k+1}))\\&\quad \ge L_c^{-1}\beta \frac{\Vert \hat{\eta }_{x_{k}}\Vert ^2}{\Vert \eta _{x_{k-1}}^*\Vert } \\&\quad \ge \frac{\beta }{L_c} \frac{\Vert \hat{\eta }_{x_{k}}\Vert ^2}{{}{(\Vert \hat{\eta }_{x_{k-1}}\Vert + \varepsilon _{k-1}^2)}} \quad \text{ for } \text{ all }\quad k>{\hat{l}}:=\max (k_0,l), \end{aligned}$$where the first and the second inequalities are from the concavity of \(\varsigma\), the third inequality is from (23) and (13), and the last inequality is from (15). It follows that

$$\begin{aligned} \Vert \hat{\eta }_{x_{k}}\Vert ^2 \le \frac{L_c}{\beta } \Delta _{k, k+1} (\Vert \hat{\eta }_{x_{k-1}}\Vert + \varepsilon _{k-1}^2), \end{aligned}$$which implies that (24) holds.

-

Case 2: \(F(x_k) = F(R_{x_{k-1}}(\hat{\eta }_{x_{k-1}})) > F(R_{x_{k-1}}(\eta _{x_{k-1}}^*))\).

We have

$$\begin{aligned}&\varsigma (F(x_{k})-F(x_*))-\varsigma (F(x_{k+1})-F(x_*)) \nonumber \\&\quad \ge \varsigma (F(R_{x_{k-1}}(\eta _{x_{k-1}}^*))-F(x_*))-\varsigma (F(x_{k+1})-F(x_*)) \nonumber \\&\quad \ge \varsigma '(F(R_{x_{k-1}}(\eta _{x_{k-1}}^*)) - F(x_*))(F(R_{x_{k-1}}(\eta _{x_{k-1}}^*))-F(x_{k+1})) \nonumber \\&\quad = \varsigma '(F(R_{x_{k-1}}(\eta _{x_{k-1}}^*))-F(x_*)) \Big (F(R_{x_{k-1}}(\eta _{x_{k-1}}^*)) \nonumber \\&\qquad - F(R_{x_{k-1}}(\hat{\eta }_{x_{k-1}})) + F(x_k) -F(x_{k+1}) \Big ) \nonumber \\&\quad \ge \frac{\beta \Vert \hat{\eta }_{x_{k}}\Vert ^2 - L_F \Vert {\eta }_{x_{k-1}}^* - \hat{\eta }_{x_{k-1}}\Vert }{L_c\Vert \eta _{x_{k-1}}^*\Vert } \quad \text{ for } \text{ all }\quad k>{\hat{l}}:=\max (k_0,l) \nonumber \\&\quad \ge \frac{\beta \Vert \hat{\eta }_{x_{k}}\Vert ^2 - L_F \varepsilon _{k-1}^2}{L_c(\Vert \hat{\eta }_{x_{k-1}}\Vert + \varepsilon _{k-1}^2)}, \end{aligned}$$(25)where the third inequality is from Assumption 6 with Lipschitz constant denoted by \(L_F\) and the last inequality is from (15). Together with (15), inequality (25) yields that for all \(k > \hat{\ell }\),

$$\begin{aligned} \beta \Vert \hat{\eta }_{x_{k}}\Vert ^2 \le L_c \Delta _{k, k+1} (\Vert \hat{\eta }_{x_{k-1}}\Vert + \varepsilon _{k-1}^2) + L_F \varepsilon _{k-1}^2, \end{aligned}$$(26)which gives

$$\begin{aligned} \Vert \hat{\eta }_{x_{k}}\Vert ^2 \le \frac{L_c}{\beta } \Delta _{k, k+1} (\Vert \hat{\eta }_{x_{k-1}}\Vert + \varepsilon _{k-1}^2) + \frac{L_F}{\beta } \varepsilon _{k-1}^2. \end{aligned}$$(27)which implies that (24) holds.

Once (24) has been established, by \(\sqrt{a^2 + b^2} \le a + b\) and \(2 \sqrt{a b} \le a + b\), we have

For any \(p > {\hat{l}}\), taking summation of (28) from p to s yields

which implies

Taking s to \(\infty\) yields

It follows that \(\sum _{k=0}^\infty \Vert \hat{\eta }_{x_{k}}\Vert <\infty\), which yields (16) due to (17). \(\square\)

Theorem 8 gives the local convergence rate based on the Riemannian KL property. Note that the local convergence rate requires an even more accurate solution than that in Theorem 7.

Theorem 8

Let \(\{x_k\}\) denote the sequence generated by Algorithm 1 and \(\mathcal {S}\) denote the set of all accumulation points. Suppose Assumptions 1, 2, 5, and 6hold. We further assume that \(F = f + g\) satisfies the Riemannian KL property at every point in \(\mathcal {S}\) with the desingularising function having the form of \(\varsigma (t) = \frac{C}{\theta } t^{\theta }\) for some \(C > 0\), \(\theta \in (0, 1]\). The accumulation point, denoted \(x_*\), is unique by Theorem 7. If the Riemannian proximal mapping (5) is solved such that for all k,

that is, \(q(\varepsilon _k, \Vert \hat{\eta }_{x_k}\Vert ) = \min \left( \varepsilon _k^2, \frac{\beta }{2 L_F} \Vert \hat{\eta }_{x_k}\Vert ^2 \right)\) and \(\sum _{k=1}^{\infty } \varepsilon _k < \infty\). Then

-

If \(\theta = 1\), then there exists \(k_1\) such that \(x_k = x_*\) for all \(k > k_1\).

-

if \(\theta \in [\frac{1}{2}, 1)\), then there exist constants \(C_r > 0\) and \(d \in (0, 1)\) such that for all k

$$\begin{aligned} {{\,\mathrm{\mathrm {dist}}\,}}(x_k, x_*) < C_r d^{k}; \end{aligned}$$ -

if \(\theta \in (0, \frac{1}{2})\), then there exists a positive constant \(\tilde{C}_r\) such that for all k

$$\begin{aligned} {{\,\mathrm{\mathrm {dist}}\,}}(x_k, x_*) < \tilde{C}_r k^{\frac{-1}{1 - 2 \theta }}. \end{aligned}$$

Proof

In the case of \(\theta = 1\), suppose \(F(x_k) > F(x_*)\). It follows from (18) and (22) that

Therefore, we have \(\Vert \eta _{x_k}^*\Vert \ge C / L_c \quad \hbox {for all } k > \max (k_0, l)\). By (11), there exists \(k_2>0\) and \({\hat{C}} > 0\) such that \(\Vert \hat{\eta }_{x_k}\Vert \ge {\hat{C}} \Vert \eta _{x_k}^*\Vert\) for all \(k \ge k_2\). It follows that

Due to the descent property in (13), there must exist \(k_1\) such that \(x_k = x_*\) for all \(k > k_1\).

Next, we consider \(\theta \in (0, 1)\). By the same derivation as the proof in Theorem 7 and noting the difference between (30) and (15), we obtain from (24) that

by replacing \(\varepsilon _{k-1}\) with \(\frac{\beta }{2 L_F} \Vert \hat{\eta }_{x_k}\Vert\). Since \(\Vert \hat{\eta }_{x_k}\Vert \rightarrow 0\), for any \(\delta > 0\), there exists \(k_2 > 0\) such that for all \(k> k_2\), it holds that \(1 + \beta / (2 L_F) \Vert \hat{\eta }_{x_k}\Vert < \delta\). Therefore, we have

By \(2\sqrt{a b} \le a + b\), we have

where \(\tilde{b}_0 = 2 b_0 (1 + \delta )\). It follows that

Substituting \(\varsigma (t) = \frac{C}{\theta } t^{\theta }\) into (31) yields

By Assumption 6 and (30), we have

Combining (32) and (33) yields

By (18), we have \(\frac{1}{C} (F( R_{x_{p-1}} ( {\eta }_{x_{p-1}}^* ) ) - F(x_*))^{1 - \theta } \le {{\,\mathrm{\mathrm {dist}}\,}}(0, \partial F( R_{x_{p-1}} ( {\eta }_{x_{p-1}}^* ) )\). Combining this inequality with (22) yields

It follows from (34) and (35) that

Since \(\lim _{k\rightarrow \infty } \Vert \hat{\eta }_{x_k}\Vert = 0\), there exists \({\hat{p}} > 0\) such that \(\Vert \hat{\eta }_{x_k}\Vert < 1\) for all \(k > {\hat{p}}\). Therefore, for all \(p > {\hat{p}}\), it holds that

which combining with (36) yields

Note that if \(\theta \ge 0.5\), then \(1\le 2 \theta \le \theta / (1 - \theta )\). Thus \(\min \left( 2 \theta , \frac{\theta }{1 - \theta } \right) \ge 1\). If \(\theta \in (0, 0.5)\), then \(\min \left( 2 \theta , \frac{\theta }{1 - \theta } \right) = \theta / (1 - \theta ) <1\). The remaining part of the proof follow the same derivation as those in [41, Appendix B] and [5, Theorem 2]. \(\square\)

4 Conditions for solving Riemannian proximal mapping

In the general framework of the inexact RPG method (i.e., Algorithm 1), the required accuracy for solving the Riemannian proximal mapping involves the unknown exact solution \(\eta _{x_k}^*\). In this section, we study two conditions that can generate search directions satisfying (11) for different forms of g when the manifold \(\mathcal {M}\) has a linear ambient space, or equivalently, \(\mathcal {M}\) is an embedded submanifold of \(\mathbb {R}^n\) or a quotient manifold whose total space is an embedded submanifold of \(\mathbb {R}^n\). Throughout this section, the Riemannian metric is fixed to be the Euclidean metric \({\left\langle , \right\rangle _{{{\,\mathrm{\mathrm {F}}\,}}}}\). We describe the algorithms for embedded submanifolds and point out here that in the case of a quotient manifold, the derivations still hold by replacing the tangent space \(\mathrm {T}_x \mathcal {M}\) with the notion of horizontal space \(\mathcal {H}_x\).

4.1 Condition that ensures global convergence

We first show that an approximate solution to the Riemannian proximal mapping in [19] satisfies the condition that is needed to establish the global convergence of IRPG. Recall that the Riemannian proximal mapping therein is

Since \(\mathcal {M}\) has a linear ambient space \(\mathbb {R}^n\), its tangent space can be characterized by

where \(B_x^T: \mathbb {R}^n \rightarrow \mathbb {R}^{n-d}: v \mapsto ( {\left\langle b_1,v \right\rangle _{{{\,\mathrm{\mathrm {F}}\,}}}}, {\left\langle b_2,v \right\rangle _{{{\,\mathrm{\mathrm {F}}\,}}}}, \ldots , {\left\langle b_{n-d},v \right\rangle _{{{\,\mathrm{\mathrm {F}}\,}}}} )^T\) is a linear operator, d is the dimension of the manifold \(\mathcal {M}\), and \(\{b_1, b_2, \ldots , b_{n-d}\}\) forms an orthonormal basis of the normal space of \(\mathrm {T}_x \mathcal {M}\). Concrete expressions of \(B_x^T\) for various manifolds will be given later in Appendix 1. Based on \(B_x^T\), Problem (37) can be written as

Semi-smooth Newton method can be used to solve (39). Specifically, the KKT condition of (39) is given by

where \(\mathcal {L}(\eta , \Lambda )\) is the Lagrangian function defined by

Equation (40) yields

where

denotes the Euclidean proximal mapping. Substituting (42) into (41) yields that

which is a system of nonlinear equations with respect to \(\Lambda\). Therefore, to solve (7), one can first find the root of (44) and substitute it back to (42) to obtain \(\tilde{\eta }_x\). Moreover, the semi-smooth Newton method can be used to solve (44), which updates the iterate \(\Lambda _k\) by \(\Lambda _{k+1} = \Lambda _k + d_k\), where \(d_k\) is a solution of

and \(J_{\Psi }(\Lambda _k)\) is a generalized Jacobian of \(\Psi\).

To solve the proximal mapping (37) approximately, we consider an algorithm that can solve (44) globally, e.g., the regularized semi-smooth Newton algorithm from [42,43,44]. Given an approximate solution \(\hat{\Lambda }\) to (44), defineFootnote 2

where \(v(\cdot )\) is defined in (42). We will show later, in order for \(\hat{\eta }_x\) to satisfy (11), it suffices to require \(\hat{\Lambda }\) to satisfy

where \(\ell _x(\cdot )\) the Riemannian proximal mapping function used in Algorithm 1, \(\phi :\mathbb {R} \rightarrow \mathbb {R}\) satisfies \(\phi (0)=0\) and nondecreasing. Moreover, a globally convergent algorithm will terminate properly under these two stopping conditions. The analyses rely on Assumption 9.

Assumption 9

The function g is convex and Lipschitz continuous with constant \(L_g\), where the convexity and Lipschitz continuity are in the Euclidean sense.

Note that if g is given by the one-norm regularization, then Assumption 9 holds.

It is evident that, in order to show that \(\hat{\eta }_x\) satisfies (11), we only need to show that there is a function \(\tilde{q}(t)\) such that \(\Vert \hat{\eta }_{x} - {\eta }_{x}^*\Vert \le \tilde{q}(\Vert \hat{\eta }_{x}\Vert )\) holds if \(\hat{\eta }_x\) satisfies (46) and (47). Therefore, the function q(s, t) in (11) can be defined by \(q(s, t) = \tilde{q}(t)\).

Theorem 10

Suppose there exists a constant \(\rho > 0\) such that for any \(x \in \Omega _{x_0}\) it holds that \(\Omega _{x_0} \subseteq R_x(\mathcal {B}(0_x, \rho ))\). If \(\tilde{L}\) is sufficiently large and the search direction \(\hat{\eta }_x\) define in (45) satisfies (46), then we have

where

and \(\phi (t)\) is defined in (46).

Proof

For ease of notation, let \(\epsilon =\Psi (\hat{\Lambda }) = B_x^T v (\hat{\Lambda })\). Consider the optimization problem

Its KKT condition is given by

which is satisfied by \((\hat{\Lambda },v(\hat{\Lambda }))\) Therefore, \(v(\hat{\Lambda })\) is the minimizer of \(\tilde{\ell }_x(\eta )\) over the set \(\mathcal {S} = \{v : B_x^T v = \epsilon \}\), i.e.,

Define \(\hat{\ell }_x(\eta _x) = \tilde{\ell }_x(\eta + B_x \epsilon )\). Further by the definition of \(\hat{\eta }_x\), i.e., \(\hat{\eta }_x = P_{\mathrm {T}_x \mathcal {M}} v(\hat{\Lambda })\), it is not hard to see that

By the \(\tilde{L}\)-strongly convexity of \(\hat{\ell }_x\) and the definition of \(\hat{\eta }_x\), it holds that

By definition of \(\hat{\eta }_x\), we have

Since \(\Omega _{x_0}\) is compact, there exists a constant \(U_f\) such that \(\Vert {{\,\mathrm{\mathrm {grad}}\,}}f(x)\Vert < U_f\) for all \(x \in \Omega _{x_0}\). By [45], if a function is Lipschitz continuous, then the norm of any subgradient is smaller than its Lipschitz constant. Therefore, by Assumption 9, it holds that \(\Vert \zeta \Vert \le L_g\) for any \(\zeta \in \partial ^E g(x + \eta )\). It follows from (52) and (46) that

Define \(\mathcal {U} = \{R_x(\eta _x) : x \in \Omega _{x_0}, \Vert \eta _x\Vert \le \rho \}\). Therefore, \(\mathcal {U}\) is compact. Moreover, since \(\Omega _{x_0} \subseteq R_x(\mathcal {B}(0_x, \rho ))\) for any \(x\in \Omega _{x_0}\), we have \(\Omega _{x_0}\subset \mathcal {U}\). It follows from (7) that there exists \(\varkappa _2\) such that

holds for any \(x \in \Omega _{x_0}\) and \(\Vert \eta _x\Vert \le \rho\). By Assumption 9 and (54), we have

Moreover, by the definition of \(\hat{\ell }_x(\eta _x)\), we have for any \(x\in \Omega _{x_0}\) and \(\Vert \eta _x\Vert \le \rho\),

where the third line has used the fact \(\Vert B_x\epsilon \Vert \le \Vert \epsilon \Vert \le 1/2\) (see (46)). Together with (55), and (51), it holds that for any \(x \in \Omega _{x_0}, \eta _x \in \mathrm {T}_x \mathcal {M}\), and \(\Vert \eta _x\Vert \le \rho\),

Define

It is easy to verify that

which yields

Noting the expression of \(\vartheta _2\), when \(\tilde{L}\rightarrow \infty\), the righthand side in the above inequality goes to \(\sqrt{4(\rho +1)}\). Thus, for sufficiently large \(\tilde{L}\) and \(\rho\), we have

For any \(\eta _x \in \mathcal {W}\) but not in \(\hat{\Omega }\), it follows from (58) that

Therefore, there exists a global minimizer of \(\ell _x\) in the set \(\hat{\Omega }\), we denote it by \(\eta _x^*\). It follows from \(\eta _x^* \in \hat{\Omega }\), and thus \({\Vert \hat{\eta }_{x} - {\eta }_{x}^*\Vert \le \tilde{q}(\Vert \hat{\eta }_{x}\Vert )}\) for \(\tilde{q}(t)\) given in the theorem. \(\square\)

Theorem 10 ensures that the search direction given by (45) is desirable for IRPG to have global convergence. There are several implications of this theorem. First, the global convergence of ManPG in [19] follows and the step size one is always acceptable. This can be seen by noting that if \(\phi (t) \equiv 0\), then the direction \({\hat{\eta }}\) with \(\Lambda\) satisfying (46) is the search direction used in [19]. Secondly, one can relax the accuracy of the solution in ManPG and still guarantees its global convergence. However, it should be pointed out that Theorem 10 does not implies that \(\hat{\eta }_x\) satisfies (15) or (30). Therefore, the uniqueness of accumulation points and the convergence rate based on the Riemannian KL property are not guaranteed.

Lemma 11 shows that a globally convergent algorithm for solving (44) can terminate properly in the sense that it satisfies (46) and (47) under the assumption that \(\tilde{L}\) is sufficiently large.

Lemma 11

Suppose there exists a constant \(\rho > 0\) such that for any \(x \in \Omega _{x_0}\) it holds that \(\Omega _{x_0} \subseteq R_x(\mathcal {B}(0_x, \rho ))\). If \(\tilde{L}\) is sufficiently large and an algorithm that converges globally is used for (44), then inequalities (46) and (47) are satisfied by all but finitely many iterates from the algorithm.

Proof

If \(\epsilon = 0\), then \(\hat{\eta }_x = \tilde{\eta }_x\) and the above derivations for \(\hat{\eta }_x\) also hold for \(\tilde{\eta }_x\). Therefore, \(\ell _x(0_x) > \ell _x(\tilde{\eta }_x)\) follows from (59) by noting \(0_x \in \mathcal {W}\) and \(0_x \notin \hat{\Omega }\) when \(\tilde{L}\) is sufficiently large. Finally, by strong convexity of \(\tilde{\ell }_x\) and the convergence of the algorithm, we have that \(\hat{\eta }_x \rightarrow \tilde{\eta }_x\) and \(\Vert \Psi (\Lambda )\Vert \rightarrow 0\). Therefore, inequalities (46) and (47) are satisfied by all but finitely many iterates from the algorithm. \(\square\)

4.2 Condition that ensures local convergence rate

In this section, we directly consider the solution of the Riemanniann proximal mapping (9) and provide a condition that meets the requirement for the local convergence rate analysis. First note that the Riemnnaian proximal mapping (9) is equivalent to

which is an optimization problem on a Euclidean space, where the subscript k is omitted for simplicity, d is the dimension of \(\mathcal {M}\) and \(Q_x\) forms an orthonormal space of \(\mathrm {T}_x \mathcal {M}\).

The analysis in this section relies on the notion of error bound (see its definition in e.g., [46, (35)], [47]), whose discussion relies on the convexity of the objective function. Therefore, we will make Assumption 12 which uses Definition 3. It follows that \(J_x(c)\) is convex. Note that Definition 3 has also been used in [20, 48]

Definition 3

A function \(h:\mathcal {M} \rightarrow \mathbb {R}\) is called retraction-convex with respect to a retraction R in \(\mathcal {N} \subseteq \mathcal {M}\) if for any \(x \in \mathcal {N}\) and any \(\mathcal {S}_x \subseteq \mathrm {T}_x \mathcal {M}\) such that \(R_x(\mathcal {S}_x)\subseteq \mathcal {N}\), there exists a tangent vector \(\zeta \in \mathrm {T}_x \mathcal {M}\) such that \(p_x = h \circ R_x\) satisfies

Note that \(\zeta = {{\,\mathrm{\mathrm {grad}}\,}}p_x(\xi )\) if h is differentiable; otherwise, \(\zeta\) is any Riemannian subgradient of \(p_x\) at \(\xi\).

Assumption 12

The function g is retraction-convex on \(\mathcal {M}\).

In the typical error bound analysis, the residual map plays a key role which controls the distance of a point to the optimal solution set. For our purpose, the residual map for (60) is defined as follows:

It is not hard to see that

Note the residual map defined here is slightly different from the one defined in [46], where the coefficient in front \(\Vert v\Vert ^2\) is 1/2 instead of \(\tilde{L}/2\). However, the error bound can be established in exactly the same way. To keep the presentation self-contained, details of the proof are provided below. It is worth pointing out that the family of Problems (60) parameterized by x possesses an error bound property with the coefficient independent of x.

Lemma 13

Suppose that Assumption 12holds. Then it holds that

where \(c_x^*\) is the minimizer of \(J_x(c)\).

Proof

Let \(\tilde{f}_x(c)\) denote \({{\,\mathrm{\mathrm {grad}}\,}}f(x)^T Q_{x} c + \frac{\tilde{L}}{2} \Vert c\Vert ^2\) and \(\tilde{g}_{x}(c)\) denote \(g(R_{x}(Q_{x}(c)))\). It follows that \(J_x(c) = \tilde{f}_x(c) + \tilde{g}_x(c)\) and

Therefore, we have \(0 \in \nabla \tilde{f}_x(c) + \tilde{L} r_{x}(c) + \partial ^E \tilde{g}_x(c + r_{x}(c)),\) which implies

It follows that

Since \(0 \in \nabla \tilde{f}_x(c_x^*) + \partial ^E \tilde{g}_x(c_x^*)\), we have \(c_x^* = \mathop {\mathrm {arg\,min}}\limits _{v \in \mathbb {R}^n} \nabla \tilde{f}_x(c_x^*)^T v + \tilde{g}_x(v).\) Therefore,

By definition of \(\tilde{f}_x\), we have that \(\tilde{f}_x\) is \(\tilde{L}\)-strongly convex and Lipschitz continuously differentiable with constant \(\tilde{L}\). Therefore, (66) yields

which implies \(\Vert c - c_x^*\Vert \le 2 \Vert r_{x}(c)\Vert .\) \(\square\)

Computing the residual map (62) is usually impractical due to the existence of the retraction R in g. Therefore, we use the same technique in [20, Section 3.5] to linearize \(R_x(Q_x(c + v))\) by \(R_x(Q_x c) + \mathcal {T}_{R_{Q_{x} c}} Q_{x} v\), and define a new residual map \(\tilde{r}_{x}(c)\) that can be used to upper bound \(r_x(c)\),

where \(y = R_x(Q_x c)\). A simple calculation can still show that

Moreover, minimizing \(\tilde{w}\) is the same as Problem (37) and therefore can be solved by the techniques in Sect. 4.1.

Lemma 14

Let \(\mathcal {G} \subset \mathcal {M}\) be a compact set. Suppose that Assumptions 9and12hold, and that there exists a parallelizable set \(\mathcal {U}\) such that \(\mathcal {G} \subset \mathcal {U}\), where a set is called parallelizable if \(Q_x\) as a function of x is smooth in \(\mathcal {U}\).Footnote 3If \(\tilde{L}\) is sufficient large, then there exist two constants \(b>0\) and \(\delta > 0\) such that

for all \(x \in \mathcal {G}\) and \(\Vert c\Vert < \delta\).

Proof

Since \(Q_x\) is smooth in \(\mathcal {U}\) and \(\mathcal {T}_R\) is smooth, we have that the function \(z:\mathcal {U} \times \mathbb {R}^d \rightarrow \mathbb {R}^{d \times d}: (x, c) \mapsto Q_y^T \mathcal {T}_{R_{Q_x c}} Q_x\) is a smooth function, where \(y = R_x(Q_x c)\). Furthermore, since \(\mathcal {T}_{R_{0_x}}\) is an identity for any \(x \in \mathcal {M}\), we have \(z(x, 0) = I_d\) for any \(x \in \mathcal {M}\). It follows that

where \(L_J = \max _{x \in \mathcal {G}, \Vert c\Vert \le \delta } \Vert J_z(x, Q_x c)\Vert\). Since the set of \(\{(x, c) : x \in \mathcal {G}, \Vert c\Vert \le \delta \}\) is compact and the Jacobi \(J_z\) is continuous by smoothness of z, we have \(L_J < \infty\).

Using (69) and noting \(\Vert \mathcal {T}_{R_{Q_x c}}\Vert = \Vert Q_y^T \mathcal {T}_{R_{Q_x c}} Q_x\Vert\) yields

and

which gives

Therefore, by choosing \(\delta < \min (\sqrt{3/2}-1, 1 - 1/\sqrt{2}) / L_J\), we have

It follows that

which yields

By the compactness of \(\mathcal {G}\), there exists a constant \(\tilde{\chi }_2\) such that

for all \(x, R_x(Q_x c), R_x(Q_x (c+v)) \in \mathcal {G}\).

Therefore, we have

where \(C_R = \tilde{L} / 4 + 2 L_g \tilde{\chi }_2\), the second inequality follows from (71) and Assumption 9, and the last inequality follows from (70).

Since \(w_{x, c}\) and \(\tilde{w}_{x, c}\) are both strongly convex, their minimizers \(r_{x}(c) \in \mathbb {R}^d\) and \(\tilde{r}_{x}(c) \in \mathrm {T}_y \mathcal {M}\) are unique. By the same derivation in Theorem 10, we have that

which implies

By assuming \(\tilde{L} > 8 L_g \tilde{\chi }_2\), we have that (68) holds with \(b = \frac{\sqrt{\tilde{L}} + \sqrt{2 C_R}}{\sqrt{\tilde{L}} - \sqrt{2 C_R}}\). \(\square\)

The main result is stated in Theorem 15, which follows from Lemmas 13 and 14 . It shows that if the Riemannian proximal mapping is solved sufficiently accurate such that the computable \(\tilde{r}_{x_k}(\bar{c}_k)\) satisfies (72), then the difference \(\Vert \bar{\eta }_{x_k} - \eta _{x_k}^*\Vert\) is controlled from above by the prescribed function \(\psi\). An algorithm that achieves (72) can be found in [20, Algorithm 2] by adjusting its stopping criterion to (72).

Theorem 15

Let \(\mathcal {S}\) denote the set of all accumulation points of \(\{x_k\}\). Suppose that there exists a neighborhood of \(\mathcal {S}\), denoted by \(\mathcal {U}\), such that \(\mathcal {U}\) is a parallelizable set, that Assumptions 1, 2, 9and 12hold, that \(\tilde{L}\) is sufficiently large, and that an algorithm is used to solve

such that the output \(\bar{c}_k \in \mathbb {R}^d\) of the algorithm satisfies

where \(x_k\) is the k -th iterate of Algorithm 1 and \(\psi\) is a function from \(\mathbb {R}^3\) to \(\mathbb {R}\). Then there exists a constant \(\tilde{a} > 0\) and an integer \(\tilde{K} > 0\) such that for all \(k > \tilde{K}\), it holds that

where \(\bar{\eta }_{x_k} = Q_{x_k} \bar{c}_k\). Moreover, if \(\psi (\varepsilon _k, \varrho , t) = \varepsilon _k^2\), then inequality (15) holds; if \(\psi (\varepsilon _k, \varrho , t) = \min (\varepsilon _k^2, \varrho t^2)\) with \(\varrho < \frac{\beta }{2 L_F a}\), then inequality (30) holds.

Proof

By (17) and [37, Remark 5], we have that \(\mathcal {S}\) is a compact set. Therefore, there exists a compact set \(\mathcal {G}\) and an integer \(\tilde{K} > 0\) such that \(\mathcal {S} \subset \mathcal {G} \subset \mathcal {U}\) and it holds that \(x_k \subset \mathcal {G}\) for all \(k > \tilde{K}\). By Lemma 14, there exists two constants \(b> 0\) and \(\delta > 0\) such that \(\Vert r_{x}(c)\Vert \le b \Vert \tilde{r}_{x}(c)\Vert\) for all \(x \in \mathcal {G}\) and \(\Vert c\Vert < \delta\). In addition, it follows from (14) that there exists a constant \(\tilde{K}_+ > 0\) such that for all \(k > \tilde{K}_+\) it holds that \(\Vert \hat{\eta }_{x_k}\Vert < \delta\). Therefore, for all \(k > \max (\tilde{K}, \tilde{K}_+)\), we have

The result (73) follows from (72) and (74). \(\square\)

For simplicity, we define \(\tilde{r}_{x_k}(c)\) as the minimizer of \(\tilde{w}_{x_k, c}(v)\). Indeed, we can show that it is not necessary to optimize \(\tilde{w}_{x_k, c}(v)\) exactly. Suppose that the minimizer \(c_{x_k}^*\) of \(J_{x_k}(c)\) is nonzero, that a converging algorithm is used to optimize \(J_{x_k}(c)\) and let \(\{c_i\}\) denote the generated sequence, and that \(\tilde{w}_{x_k, c}(v)\) is only solved approximately such that the approximated solution, denoted by \(\tilde{\tilde{r}}_{x_k}(c_i)\), satisfies \(\Vert \tilde{\tilde{r}}_{x_k}(c_i) - \tilde{r}_{x_k}(c_i)\Vert \le \delta _r \Vert \tilde{\tilde{r}}_{x_k}(c_i)\Vert\), where \(\delta _r \in (0, 1)\) is a constant.Footnote 4 Then we have

It follows that if

then (72) holds. Since a converging algorithm is used, we have \(c_i\) goes to \(c_{x_k}^*\) and \(\tilde{r}_{x_k}(c_i)\) goes to zero. It follows that \(\psi (\varepsilon _k, \varrho , \Vert c_i\Vert )\) is greater than a positive constant for all i and \(\tilde{\tilde{r}}_{x_k}(c_i)\) goes to zero by (75). Therefore, an iterate \(c_i\), denoted by \(\bar{c}_k\), satisfying inequality (76) can be found.

5 Numerical experiments

In this section, we use the sparse principle component analysis (SPCA) problem to test the proposed practical conditions on the accuracy for solving the Riemannian proximal mapping (9).

5.1 Experimental settings

Since practically a sufficiently large \(\tilde{L}\) is unknown, we dynamically increase its value by \(\tilde{L} \leftarrow 1.5 \tilde{L}\) if the search direction is not descent in the sense that back tracking algorithm \(\alpha ^{(i+1)} = 0.5 \alpha ^{(i)}\) with \(\alpha ^{(0)} = 1\) for finding a step size fails for 5 iterations. In addition, the initial value of \(\tilde{L}\) at \(k+1\)-th iteration, denoted by \(\tilde{L}_{k+1}\), is given by the Barzilar-Borwein step size with safeguard:

where \(\tilde{L}_{\min } > 0\), \(\tilde{L}_{\max } > 0\), \(y_k = P_{\mathrm {T}_{x_{k}} \mathcal {M}} {{\,\mathrm{\mathrm {grad}}\,}}f(x_{k+1}) - {{\,\mathrm{\mathrm {grad}}\,}}f(x_k)\) and \(s_k = \alpha \eta _{x_k}\). The value of \(\tilde{L}_{0}\) is problem dependent and will be specified later. The parameters are given by \(\tilde{L}_{\min } = 10^{-3}\), \(\tilde{L}_{\max } = \tilde{L}_0\), \(\phi :\mathbb {R} \rightarrow \mathbb {R}: t \mapsto \sqrt{t}\), \(\varepsilon _k = \frac{500}{(1+k)^{1.01}}\), and \(\varrho = 100\).

Let IRPG-G, IRPG-U, and IRPG-L respectively denote Algorithm 1 with the subproblem solved accurately enough in the sense that (46) and (47) hold, (72) holds with \(\psi (\varepsilon _k, \rho , \Vert \eta \Vert ) = \varepsilon _k^2\), and (72) holds with \(\psi (\varepsilon _k, \rho , \Vert \eta \Vert ) = \min (\varepsilon _k^2, \varrho \Vert \eta \Vert ^2)\). Unless otherwise indicated, IRPG-G stops if the value of \((\Vert \eta _{x_k}\Vert \tilde{L}_k)\) reduces at least by a factor of \(10^{-3}\). IRPG-U and IRPG-L stop if their objective function values are smaller than the function value of the last iterate given by IRPG-G.

All the tested algorithms are implemented in the ROPTLIB package [51] using C++, with a MATLAB interface. The experiments are performed in Matlab R2018b on a 64 bit Ubuntu platform with 3.5GHz CPU (Intel Core i7-7800X).

5.2 SPCA test

An optimization model for the sparse principle component analysis is given by

where \(A \in \mathbb {R}^{m \times n}\) is the data matrix. This model is a penalized version of the ScoTLASS model introduced in [52] and it has been used in [10, 19].

Basic settings A matrix \(\tilde{A} \in \mathbb {R}^{m \times n}\) is first generated such that its entries are drawn from the standard normal distribution. Then the matrix A is created by shifting and normalized columns of \(\tilde{A}\) such that the columns have mean zero and standard deviation one. The parameter \(\tilde{L}_0\) is \(2 \lambda _{\max }(A)^2\), where \(\lambda _{\max }(A)\) denotes the largest singular value of A. The initial iterate is the leading r right singular vectors of the matrix A. The Riemannian optimization tools including the Riemannian gradient, the retraction by polar decomposition can be found in [20].

Empirical observations Figure 1 shows the performance of IRPG-G, IRPG-U, and IRPG-L with multiple values of n, p, and \(\lambda\). Since IRPG-G, IRPG-U, and IRPG-L solve the Riemannian proximal mapping up to different accuracy, we find that IRPG-G takes notably more iterations than IRPG-U, and IRPG-U takes slightly more iterations than IRPG-L, which coincides with our theoretical results. Though IRPG-U and IRPG-L take fewer iterations, their computational times are still larger than that of IRPG-G due to the excessive cost on improving the accuracy of the Riemannian proximal mapping.

Average results of 10 random runs for SPCA. The same random seed is used when comparing the three algorithms. We choose the runs where the three algorithms find the same minimizer in the sense that the norm of the difference between the solutions is smaller than \(10^{-2}\). “time” denotes the computational time in seconds. “iter” denotes the number of iterations. Top: multiple values \(n = \{256, 512, 1024, 2048\}\) with \(p = 4\), \(m = 20\), and \(\lambda = 2\); Middle: multiple values \(p = \{1, 2, 4, 8\}\) with \(n = 1024\), \(m = 20\), and \(\lambda = 2\); Bottom: Multiple values \(\lambda = \{0.5, 1, 2, 4\}\) with \(n = 1024\), \(p = 4\), and \(m = 20\).

Data availability

The data that support the findings of this study are available from the corresponding author upon request.

Notes

The commonly-used update expression is \(x_{k+1}=\arg \min _x\langle \nabla f(x_k),x-x_k\rangle _2+\frac{L}{2}\Vert x-x_k\Vert _2^2+g(x)\). We reformulate it equivalently for the convenience of the Riemannian formulation given later.

Note that if \(\Psi (\Lambda ) \ne 0\), then \(\eta\) defined by (42) may be not in \(\mathrm {T}_x \mathcal {M}\). Therefore, we add an orthogonal projection.

References

Beck, A., Teboulle, M.: A fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM J. Imaging Sci. 2(1), 183–202 (2009). https://doi.org/10.1137/080716542

Beck, A.: First-Order Methods in Optimization. Society for Industrial and Applied Mathematics, Philadelphia, PA (2017). https://doi.org/10.1137/1.9781611974997

Darzentas, J.: Problem Complexity and Method Efficiency in Optimization (1983)

Nesterov, Y.E.: A method for solving the convex programming problem with convergence rate \(O(1/k^{2})\). Dokl. Akas. Nauk SSSR (In Russian) 269, 543–547 (1983)

Attouch, H., Bolte, J.: On the convergence of the proximal algorithm for nonsmooth functions involving analytic features. Math. Program. 116, 5–16 (2009)

Li, H., Lin, Z.: Accelerated proximal gradient methods for nonconvex programming. In: International Conference on Neural Information Processing Systems (2015)

Ghadimi, S., Lan, G.: Accelerated gradient methods for nonconvex nonlinear and stochastic programming. Math. Program. 59–99 (2016)

Ozolinš, V., Lai, R., Caflisch, R., Osher, S.: Compressed modes for variational problems in mathematics and physics. Proc. Natl. Acad. Sci. 110(46), 18368–18373 (2013). https://doi.org/10.1073/pnas.1318679110

Zou, H., Hastie, T., Tibshirani, R.: Sparse principal component analysis. J. Comput. Graph. Stat. 15(2), 265–286 (2006)

Huang, W., Wei, K.: An extension of fast iterative shrinkage-thresholding algorithm to Riemannian optimization for sparse principal component analysis. Numer. Linear Algebra Appl. (2021). https://doi.org/10.1002/nla.2409

Ulfarsson, M.O., Solo, V.: Sparse variable PCA using geodesic steepest descent. IEEE Trans. Signal Process. 56(12), 5823–5832 (2008). https://doi.org/10.1109/TSP.2008.2006587

Cai, T.T., Ma, Z., Wu, Y.: Sparse PCA: optimal rates and adaptive estimation. Ann. Stat. 41(6), 3074–3110 (2013). https://doi.org/10.1214/13-AOS1178

Xiao, N., Liu, X., Yuan, Y.: Exact penalty function for l2, 1 norm minimization over the Stiefel manifold. Optmization online (2020)

Ye, J., Zhao, Z., Wu, M.: Discriminative k-means for clustering. In: Platt, J., Koller, D., Singer, Y., Roweis, S. (eds.) Advances in Neural Information Processing Systems, vol. 20. Curran Associates, Inc. (2008). https://proceedings.neurips.cc/paper/2007/file/a5cdd4aa0048b187f7182f1b9ce7a6a7-Paper.pdf

Liang, X., Ren, X., Zhang, Z., Ma, Y.: Repairing sparse low-rank texture. In: Fitzgibbon, A., Lazebnik, S., Perona, P., Sato, Y., Schmid, C. (eds.) Computer Vision—ECCV 2012, pp. 482–495. Springer, Berlin (2012)

Mishra, A., Dey, D.K., Chen, K.: Sequential co-sparse factor regression. J. Comput. Graph. Stat. 26(4), 814–825 (2017)

Zhang, T., Ghanem, B., Liu, S., Xu, C., Ahuja, N.: Low-rank sparse coding for image classification. In: 2013 IEEE International Conference on Computer Vision, pp. 281–288 (2013)

Shi, J., Qi, C.: Low-rank sparse representation for single image super-resolution via self-similarity learning. In: 2016 IEEE International Conference on Image Processing (ICIP), pp. 1424–1428 (2016). https://doi.org/10.1109/ICIP.2016.7532593

Chen, S., Ma, S., So, A.M.-C., Zhang, T.: Proximal gradient method for nonsmooth optimization over the Stiefel manifold. SIAM J. Optim. 30(1), 210–239 (2020). https://doi.org/10.1137/18M122457X

Huang, W., Wei, K.: Riemannian proximal gradient methods. Math. Program. (2021). https://doi.org/10.1007/s10107-021-01632-3. Published online https://doi.org/10.1007/s10107-021-01632-3

Le, O.Y., Zhang, X.F., Yan, H.: Sparse regularized low-rank tensor regression with applications in genomic data analysis. Pattern Recogn. 107(502), 107516 (2020)

Hsieh, C.-J., Sustik, M., Dhillon, I., Ravikumar, P.: QUIC: quadratic approximation for sparse inverse covariance estimation. J. Mach. Learn. Res. 15, 2911–2947 (2014)

Combettes, P.L.: Solving monotone inclusions via compositions of nonexpansive averaged operators. Optimization 53(5–6), 475–504 (2004). https://doi.org/10.1080/02331930412331327157

Fadili, J.M., Peyré, G.: Total variation projection with first order schemes. IEEE Trans. Image Process. 20(3), 657–669 (2011). https://doi.org/10.1109/TIP.2010.2072512

Schmidt, M., Roux, N., Bach, F.: Convergence rates of inexact proximal-gradient methods for convex optimization. In: Shawe-Taylor, J., Zemel, R., Bartlett, P., Pereira, F., Weinberger, K.Q. (eds.) Advances in Neural Information Processing Systems, vol. 24. Curran Associates, Inc. (2011). https://proceedings.neurips.cc/paper/2011/file/8f7d807e1f53eff5f9efbe5cb81090fb-Paper.pdf

Villa, S., Salzo, S., Baldassarre, L., Verri, A.: Accelerated and inexact forward-backward algorithms. SIAM J. Optim. 23(3), 1607–1633 (2013). https://doi.org/10.1137/110844805

Bonettini, S., Prato, M., Rebegoldi, S.: Convergence of inexact forward-backward algorithms using the forward-backward envelope. SIAM J. Optim. 30(4), 3069–3097 (2020). https://doi.org/10.1137/19M1254155

Boothby, W.M.: An Introduction to Differentiable Manifolds and Riemannian Geometry, 2nd edn. Academic Press, Cambridge (1986)

Absil, P.-A., Mahony, R., Sepulchre, R.: Optimization Algorithms on Matrix Manifolds. Princeton University Press, Princeton (2008)

Hosseini, S., Huang, W., Yousefpour, R.: Line search algorithms for locally Lipschitz functions on Riemannian manifolds. SIAM J. Optim. 28(1), 596–619 (2018)

Lee, J.M.: Introduction to Riemannian Manifolds. Graduate Texts in Mathematics, vol. 176, 2nd edn. Springer, Berlin (2018)

do Carmo, M.P.: Riemannian Geometry. Mathematics: Theory & Applications (1992)

Rockafellar, R.T.: Monotone operators and the proximal point algorithm. SIAM J. Control. Optim. 14(5), 877–898 (1976). https://doi.org/10.1137/0314056

Boumal, N., Absil, P.-A., Cartis, C.: Global rates of convergence for nonconvex optimization on manifolds. IMA J. Numer. Anal. 39(1), 1–33 (2018). https://doi.org/10.1093/imanum/drx080

Attouch, H., Bolte, J., Redont, P., Soubeyran, A.: Proximal alternating minimization and projection methods for nonconvex problems: An approach based on the Kurdyka–Łojasiewicz inequality. Math. Oper. Res. 35(2), 438–457 (2010). https://doi.org/10.1287/moor.1100.0449

Attouch, H., Bolte, J., Svaiter, B.: Convergence of descent methods for semi-algebraic and tame problems: proximal algorithms, forward-backward splitting, and regularized Gauss–Seidel methods. Math. Program. 137, 91–129 (2013)

Bolte, J., Sabach, S., Teboulle, M.: Proximal alternating linearized minimization for nonconvex and nonsmooth problems. Math. Program. 146(1–2), 459–494 (2014)

Kurdyka, K., Mostowski, T., Adam, P.: Proof of the gradient conjecture of r. thom. Ann. Math. 152, 763–792 (2000)

Bento, G.C., de Cruz Neto, J.X., Oliveira, P.R.: Convergence of inexact descent methods for nonconvex optimization on Riemannian manifold. arXiv preprint arXiv:1103.4828 (2011)

Qian, Y., Pan, S., Xiao, L.: Error bound and exact penalty method for optimization problems with nonnegative orthogonal constraint (2022)

Huang, W., Wei, K.: Riemannian Proximal Gradient Methods (extended version). arXiv:1909.06065 (2019)

Qi, H., Sun, D.: A quadratically convergent newton method for computing the nearest correlation matrix. SIAM J. Matrix Anal. Appl. 28(2), 360–385 (2006). https://doi.org/10.1137/050624509

Zhao, X.-Y., Sun, D., Toh, K.-C.: A newton-cg augmented Lagrangian method for semidefinite programming. SIAM J. Optim. 20(4), 1737–1765 (2010). https://doi.org/10.1137/080718206

Xiao, X., Li, Y., Wen, Z., Zhang, L.: A regularized semi-smooth Newton method with projection steps for composite convex programs. J. Sci. Comput. 76(1), 364–389 (2018). https://doi.org/10.1007/s10915-017-0624-3

Clarke, F.H.: Optimization and Nonsmooth Analysis. Classics in Applied Mathematics of SIAM (1990)

Tseng, P., Yun, S.: A coordinate gradient descent method for nonsmooth separable minimization. Math. Program. 117, 387–423 (2009). https://doi.org/10.1007/s10107-007-0170-0

Zhou, Z., So, M.C.: A unified approach to error bounds for structured convex optimization problems. Math. Program. 165(2), 689–728 (2017)

Huang, W., Gallivan, K.A., Absil, P.-A.: A Broyden class of quasi-Newton methods for Riemannian optimization. SIAM J. Optim. 25(3), 1660–1685 (2015). https://doi.org/10.1137/140955483

Huang, W., Absil, P.-A., Gallivan, K.A.: A Riemannian symmetric rank-one trust-region method. Math. Program. 150(2), 179–216 (2015)

Boumal, N.: An Introduction to Optimization on Smooth Manifolds. Available online http://www.nicolasboumal.net/book (2020)

Huang, W., Absil, P.-A., Gallivan, K.A., Hand, P.: ROPTLIB: an object-oriented C++ library for optimization on Riemannian manifolds. ACM Trans. Math. Softw. 4(44), 43–14321 (2018)

Jolliffe, I.T., Trendafilov, N.T., Uddin, M.: A modified principal component technique based on the Lasso. J. Comput. Graph. Stat. 12(3), 531–547 (2003)

Huang, W., Absil, P.-A., Gallivan, K.A.: Intrinsic representation of tangent vectors and vector transport on matrix manifolds. Numer. Math. 136(2), 523–543 (2016). https://doi.org/10.1007/s00211-016-0848-4

Acknowledgements

The authors would like to thank Liwei Zhang for discussions on perturbation analysis for optimization problems. Wen Huang was partially supported by National Natural Science Foundation of China (NO. 12001455) and the Fundamental Research Funds for the Central Universities (NO. 20720190060). Ke Wei was partially supported by the NSFC Grant 11801088 and the Shanghai Sailing Program 18YF1401600.

Author information

Authors and Affiliations

Corresponding authors

Ethics declarations

Conflicts of interest

The authors declare that there is no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix: Implementations of \(B_x^T\) and \(B_x\)

Appendix: Implementations of \(B_x^T\) and \(B_x\)

In this section, the implementations of the functions \(B_x^T: \mathbb {R}^n \rightarrow \mathbb {R}^{n - d}\) and \(B_x: \mathbb {R}^{n - d} \rightarrow \mathbb {R}^n\) are given for Grassmann manifold, manifold of fixed-rank matrices, manifold of symmetric positive definite matrices, and products of manifolds. Note that the Riemannian metric is chosen to be the Euclidean metric in this section.

Grassmann manifold We consider the representation of Grassmann manifold by

where \([X] = \{X O : O^T O = I_p\}\). The ambient space of \({{\,\mathrm{\mathrm {Gr}}\,}}(p, n)\) is \(\mathbb {R}^{n \times p}\) and the orthogonal complement space of the horizontal space \(\mathcal {H}_X\) at \(X \in {{\,\mathrm{\mathrm {St}}\,}}(p, n)\) is given by

Therefore, we have

Manifold of fixed-rank matrices The manifold is given by

The ambient space is therefore \(\mathbb {R}^{m \times n}\). Given \(X \in \mathbb {R}_r^{m \times n}\), let \(X = U S V\) be a thin singular value decomposition. The normal space at X is given by

where \(U_{\perp } \in \mathbb {R}^{m \times (m - r)}\) forms an orthonormal basis of the perpendicular space of \(\mathrm {span}(U)\) and \(V_{\perp } \in \mathbb {R}^{n \times (n - r)}\) forms an orthonormal basis of the perpendicular space of \(\mathrm {span}(V)\). It follows that

Note that it is not necessary to form the matrices \(U_{\perp }\) and \(V_{\perp }\). One can use [53, Algorithms 4 and 5] to implement the actions of \(U_{\perp }\), \(U_{\perp }^T\), \(V_{\perp }\), and \(V_{\perp }^T\).

Manifold of symmetric positive semi-definite matrices The manifold is

The ambient space is \(\mathbb {R}^{n \times n}\). Given \(X \in \mathbb {S}_r^{n \times n}\), let \(X = H H^T\), where \(H \in \mathbb {R}^{n \times r}\) is full rank. The normal space at X is

where \(H_{\perp } \in \mathbb {R}^{n \times (n - r)}\) forms an orthonormal basis of the perpendicular space of \(\mathrm {span}(H)\). Therefore, we have

where \(\mathrm {vec}(M) = (M_{11}, M_{22}, \ldots , M_{ss}, \sqrt{2} M_{12}, \sqrt{2} M_{13}, \sqrt{2} M_{1s}, \ldots , \sqrt{2} M_{(s - 1) s})^T\) for \(M \in \mathbb {R}^{s \times s}\) being a symmetric matrix, and \(\mathrm {vec}^{-1}\) is the inverse function of \(\mathrm {vec}\).

Product of manifolds Let the product manifold \(\mathcal {M}\) be denoted by \(\mathcal {M}_1 \times \mathcal {M}_2 \times \ldots \times \mathcal {M}_t\). Let the ambient space of \(\mathcal {M}_i\) be \(\mathbb {R}^{n_i}\) and the dimension of \(\mathcal {M}_i\) be \(d_i\). For any \(X = (X_1, X_2, \ldots , X_t) \in \mathcal {M}\), the mappings \(B_X^T\) and \(B_X\) are given by

where \(B_{X_i}^T\) and \(B_{X_i}\) denote the mappings for manifold \(\mathcal {M}_i\) at \(X_i\), and \(v_i \in \mathbb {R}^{n_i - d_i}\), \(i = 1, \ldots , t\).

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Huang, W., Wei, K. An inexact Riemannian proximal gradient method. Comput Optim Appl 85, 1–32 (2023). https://doi.org/10.1007/s10589-023-00451-w

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10589-023-00451-w