Abstract

This paper studies tensor Z-eigenvalue complementarity problems. We formulate the tensor Z-eigenvalue complementarity problem as constrained polynomial optimization, and propose a semidefinite relaxation algorithm for solving the complementarity Z-eigenvalues of tensors. For every tensor that has finitely many complementarity Z-eigenvalues, we can compute all of them and show that our algorithm has the asymptotic and finite convergence. Numerical experiments indicate the efficiency of the proposed method.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Let \(\mathbb {R,C}\) respectively be the sets of real and complex numbers. Let \(T^m(\mathbb {R}^n)\) denote the space of all real m-order n-dimensional tensors, \(\mathbb {R}^{n\times n}\) be the space of all real n-by-n matrices. A tensor \(\mathcal {A} \in T^m(\mathbb {R}^n)\) is a multi-array indexed as

The tensor \(\mathcal {A}\) is called symmetric if the value of \(a_{i_1, \ldots , i_m}\) is invariant under any permutation of its index \(\{i_1, \ldots , i_m\}\). Let \(S^m(\mathbb {R}^n)\) be the space of all symmetric tensors in \(T^m(\mathbb {R}^n)\).

\(\mathcal {A}x^m\) is a homogeneous polynomial of degree m, defined by

where \(x \in \mathbb {R}^n\), and \(\mathcal {A}x^{m-1}\) is a vector in \(\mathbb {R}^n\), which is defined by

The matrix eigenvalue complementarity problem (MEiCP) is that: for given two matrices \(A,B \in \mathbb {R}^{n\times n}\), we find a number \(\lambda \in \mathbb {R}\) and a nonzero vector \(x\in \mathbb {R}^n\) such that

In the above, \(a\perp b\) means that the two vectors a, b are perpendicular to each other. For \((\lambda ,x)\) satisfying (1.1), \(\lambda\) is called a complementary eigenvalue of (A, B) and x is called the associated complementary eigenvector. MEiCPs have wide applications, such as static equilibrium states of mechanical systems with unilateral friction [24], the dynamic analysis of structural mechanical systems [17] and the contact problem in mechanics [18].

Tensor eigenvalue complementarity problems (TEiCPs) have received much attention lately. It is a generalization of the matrix eigenvalue complementarity problem, which has a broad range of interesting applications. A tensor eigenvalue complementarity problem can be formulated: for two nonzero tensors \(\mathcal {A,B} \in T^m(\mathbb {R}^n)\), if a pair \((\lambda , x) \in \mathbb {R}\times (\mathbb {R}^n\setminus \{0\})\) satisfies the equation

then \(\lambda\) is called a complementarity eigenvalue of \((\mathcal {A},\mathcal {B})\) and x is the associated complementarity eigenvector. Such \((\lambda , x)\) is called a C-eigenpair. Chen et al. [3] have further work on tensor eigenvalue complementarity problems. When the tensors are symmetric, they reformulated the problem as nonlinear optimization and proposed a shifted projected power method. Chen and Qi [2] reformulated the TEiCP as a system of nonlinear equations and proposed a damped semi-smooth Newton method for solving it. Fan, Nie and Zhou [6] proposed a semidefinite relaxation method for computing all the complementarity eigenvalues. In this paper, we study tensor Z-eigenvalue complementarity problems.

Lim [14] and Qi [25] introduced the definition of tensor eigenvalues. There is more than one possible definition for tensor eigenvalue in [25, 28]. In this paper, we specifically use the following definitions.

Definition 1.1

Let \(\mathcal {A} \in T^m(\mathbb {R}^n)\). The pair \((\lambda , x) \in \mathbb {C}\times \mathbb {C}^n\) is called E-eigenpair, and \(\lambda\) is called E-eigenvalue and x is the corresponding E-eigenvector of \(\mathcal {A}\) if they satisfy the equations

We call \((\lambda , x)\) a Z-eigenpair if they are both real.

In the following, we define complementarity Z-eigenvalues of tensors.

Definition 1.2

For \(\mathcal {A} \in T^m(\mathbb {R}^n)\), if a pair \((\lambda , x) \in \mathbb {R}\times \mathbb {R}^n\) satisfies the equations

then \(\lambda\) is called a complementarity Z-eigenvalue of \(\mathcal {A}\) and x is the associated complementarity Z-eigenvector. Such \((\lambda , x)\) is called a complementarity Z-eigenpair. For convenience, complementary Z-eigenvalues and Z-eigenvectors are respectively called CZ-eigenvalues and CZ-eigenvectors. The above \((\lambda , x)\) is called a CZ-eigenpair.

For \(x\ge 0\) and \(\lambda x-\mathcal {A}x^{m-1}\ge 0\), \(x\perp (\lambda x-\mathcal {A}x^{m-1})\) holds if and only if

where \(\circ\) denotes the Hadamard product defined as in Sect. 2.

Z-eigenvalues have important applications in numerical multilinear algebra [23], image processing [27, 29], higher order Markov chains [15, 16], and spectral hypergraph theory [9], etc. For symmetric tensors, some methods for computing Z-eigenvalues are proposed. Kolda and Mayo [10] proposed a shifted power method. Cui, Dai and Nie [4] proposed a semidefinite relaxation approach for computing all the real eigenvalues. For nonsymmetric tensors, Nie and Zhang [22] proposed a semidefinite relaxation method for computing all the real eigenvalues. A tensor may not have the Z-eigenvalues, but has the CZ-eigenvalues (see Example 1.1). Under some generic conditions, \(\mathcal {A}\) has finitely many CZ-eigenvalues. Tensor Z-eigenvalue complementarity problems make some practical problems have more natural and precise mathematical descriptions. Its applications need further research.

Example 1.1

Consider the tensor \(\mathcal {A} \in T^4(\mathbb {R}^2)\) such that \(a_{ijkl}=0\) except

By the above definitions and analysis, \((\lambda ,x)\) is a Z-eigenpair if and only if

\((\lambda ,x)\) is a CZ-eigenpair if and only if

\(\mathcal {A}\) has no Z-eigenvalues and neither E-eigenvalues [22], but \(\mathcal {A}\) has one CZ-eigenvalue \(\lambda =0\) with the associated CZ-eigenvector (1, 0).

It is possible that a tensor has infinitely many Z-eigenvalues and CZ-eigenvalues.

Example 1.2

Consider the tensor \(\mathcal {A} \in T^3(\mathbb {R}^2)\) such that \(a_{ijk}=0\) except

By the equations

one can check that every real number \(\lambda \in [0,1]\) is a Z-eigenvalue of \(\mathcal {A}\), associated with Z-eigenvectors \((\lambda , \pm \sqrt{1-\lambda ^2})\) [26]. By the equations

one can check that every real number \(\lambda \in [0,1]\) is a CZ-eigenvalue of \(\mathcal {A}\), associated with the CZ-eigenvector \((\lambda , \sqrt{1-\lambda ^2})\).

In this paper, we study to how to solve all the CZ-eigenvalues of \(\mathcal {A}\), when \(\mathcal {A}\) has finitely many CZ-eigenvalues.

The organization of this paper is as follows. Section 2 reviews some basics in polynomial optimization. We propose the semidefinite relaxation algorithm for computing all the CZ-eigenvalues for every tensor that has finitely many CZ-eigenvalues, and prove its asymptotic and finite convergence in Sect. 3. Section 4 demonstrates the numerical experiments. Conclusions are drawn in Sect. 5.

2 Preliminaries

This section reviews some basics in polynomial optimization. We refer to [11,12,13, 21] for surveys in the area.

Let \(\mathbb {N}\) be the set of nonnegative integer numbers. For two vectors \(a,b \in \mathbb {R}^n\), \(a\circ b\) denotes the Hadamard product of a and b, i.e. the product is defined componentwise. The symbol \(\mathbb {R}[x]:=\mathbb {R}[x_1,x_2,\ldots ,x_n]\) denotes the polynomial ring in \(x=(x_1,x_2,\ldots ,x_n)\) with real coefficients. For the vector \(\alpha =(\alpha _1,\ldots ,\alpha _n)\), denote \(\mathbb {N}_d^n:=\{\alpha \in \mathbb {N}^n||\alpha |:=\alpha _1+\alpha _2+\cdots +\alpha _n \le d\}\). The symbol deg(p) denotes the degree of polynomial p. For \(t\in \mathbb {R}\), \(\lceil t \rceil\) denotes the smallest integer \(\ge t\). For \(x=(x_1,x_2,\ldots ,x_n)\) and \(\alpha = (\alpha _1,\ldots ,\alpha _n)\), denote

The superscript T denotes the transpose of a matrix/vector. By writing \(X\succeq 0\) (resp., \(X\succ 0\)), we mean that X is a symmetric positive semidefinite (resp., positive definite) matrix. For matrices \(X_1,\ldots ,X_r\), \(diag(X_1,\ldots ,X_r)\) denotes the block diagonal matrix whose diagonal blocks are \(X_1,\ldots ,X_r\). For a vector x, \(\Vert x\Vert\) denotes its standard Euclidean norm.

An ideal I in \(\mathbb {R}[x]\) is a subset such that \(I\cdot \mathbb {R}[x]\subseteq I, I+I\subseteq I\). For a tuple \(h=(h_1,\ldots ,h_m)\) in \(\mathbb {R}[x]\), I(h) denotes the smallest ideal containing all \(h_i\), i.e. \(I(h):=h_1\cdot \mathbb {R}[x]+\cdots +h_m\cdot \mathbb {R}[x]\). The kth truncation of the ideal I(h) is denoted as \(I_k(h)\), which is the set

Clearly, \(I(h)=\cup _{k \in \mathbb {N}}I_k(h)\).

A polynomial \(\sigma\) is called a sum of squares (SOS) if \(\sigma =p_1^2+\cdots +p_k^2\) for some polynomials \(p_1,\ldots ,p_k\in \mathbb {R}[x]\). \(\Sigma [x]\) denotes the set of all SOS polynomials in x. For a degree m, \(\Sigma [x]_m\) denotes the truncation \(\Sigma [x]\cap R[x]_m\). For a tuple \(g=(g_1,\ldots ,g_t)\), its quadratic module is the set

The kth truncation of Q(g) is the set

Note that \(Q(g)=\cup _{k \in \mathbb {N}}Q_k(g)\). If the tuple g is empty, then \(Q(g)=\Sigma [x], Q_{k}(g)=\Sigma [x]_{2k}\).

Let Pr(g) be the quadratic module generated by the set of all possible cross products:

The set \(Pr_k(g)\) is the kth truncated preordering generated by \(g=(g_1,\ldots ,g_t)\).

The set \(I(h)+Q(g)\) is called archimedean if there exists some real number \(R>0\) such that \(R-\Vert x\Vert ^2 \in I(h)+Q(g)\). If there exists \(p\in I(h)+Q(g)\) such that \(p(x)\ge 0\) defines a compact set in \(\mathbb {R}^n\), then \(I(h)+Q(g)\) is archimedean. For the tuples h and g as above, denote

Clearly, if \(I(h)+Q(g)\) is archimedean, then K must be a compact set.

Let \(\mathbb {R}^{\mathbb {N}_d^n}\) be the space of real sequences indexed by \(\alpha \in \mathbb {N}_d^n\). A vector y in \(\mathbb {R}^{\mathbb {N}_d^n}\) is called a truncated moment sequences (tms) of degree d, i.e.

A tms \(y\in \mathbb {R}^{\mathbb {N}_d^n}\) defines a Riesz function \(\mathscr {L}\) on \(\mathbb {R}[x]_d\) as

For convenience, we denote \(\langle p,y \rangle :=\mathscr {L}_y(p)\). For an integer \(t\le d\) and \(y \in \mathbb {R}^{\mathbb {N}_d^n}\), denote the tth truncation of y as

Let \(q\in \mathbb {R}[x]_{2k}\). The kth localizing matrix of q, generated by \(y\in \mathbb {R}^{\mathbb {N}_{2k}^n}\), is the symmetric matrix \(L_q^{(k)}(y)\) such that

for all \(p_1,p_2 \in R[x]_{k-\lceil deg(q)/2\rceil }\). In the above, \(vec(p_i)\) denotes the coefficient vector of the polynomial \(p_i\). For example, \(n=2, k=2, q=1-x_1^2-x_2^2\), it follows

When \(q=(q_1,\ldots ,q_r)\) is a tuple of polynomials, we define

a block diagonal matrix. When \(q=1, L_1^{(k)}(y)\) is called the kth moment matrix generated by y, denoted as \(M_k(y)\). For instance, \(n=2, k=2\),

An important question is whether or not a tms \(y\in \mathbb {R}^{\mathbb {N}_{2k}^n}\) admits a representing measure whose support is contained in K. For this to be true, a necessary condition [5, 7] is that

However, the above is typically not sufficient. Let \(d'=\max \{1,\lceil deg(h)/2\rceil ,\lceil deg(g)/2\rceil \}\). y admits a measure supported in K if y also satisfies the rank condition [5]

In such case, y admits a unique finitely atomic measure on K. We call that y is flat with respect to \(h=0\) and \(g\ge 0\) if both Problems (2.1) and (2.2) are satisfied.

3 Computing all the CZ-eigenvalues

Suppose that the tensor \(\mathcal {A}\) has finite CZ-eigenvalues, we discuss how to compute all of them.

Recall that \((\lambda ,x)\) is a CZ-eigenpair of \(\mathcal {A} \in T^m(\mathbb {R}^n)\), if \(\lambda \in \mathbb {R}\) and \(x \in \mathbb {R}^n\) satisfies

Then,

Thus \(\lambda =\mathcal {A}x^m\).

x is a CZ-eigenvector of \(\mathcal {A}\) if and only if

where \(\circ\) denotes the Hadamard product of two vectors, and the associated CZ-eigenvalue is \(\mathcal {A}x^m\). Since the tensor \(\mathcal {A}\) has finite CZ-eigenvalues, we suppose that the CZ-eigenvalues are \(\lambda _1,\lambda _2,\cdots ,\lambda _L\), where L is the total number of distinct CZ-eigenvalues. They can be ordered monotonically as

In the following subsections, we give the semidefinite relaxation method for computing all the CZ-eigenvalues of \(\mathcal {A}\).

3.1 The smallest CZ-eigenvalue

Let \(f(x):=\mathcal {A}x^m\). The smallest CZ-eigenvalue \(\lambda _1\) equals the optimal value of the optimization problem

where h, g are as in (3.2). Let K be its feasible set. For a tms \(y\in \mathbb {R}^{\mathbb {N}_{2k}^n}\) with degree \(2k\ge m\), denote

We apply Lasserre’s semidefinite relaxations [11] to solve (3.3). For the orders \(k=k_0,k_0+1,\ldots\), the kth Lasserre relaxation is

The dual problem of (3.4) is

Under the weak duality, we have \(\eta _k^{(1)}\le \rho _k^{(1)}\le \lambda _1\) for all k and the sequences \(\{\rho _k^{(1)}\}\) and \(\{\eta _k^{(1)}\}\) are monotonically increasing (cf. [11]).

3.2 The other CZ-eigenvalues

We discuss how to compute \(\lambda _i\) for \(i=2,\cdots ,L\). Suppose \(\lambda _{i-1}\) is already computed, we need to determine the next CZ-eigenvalue \(\lambda _i\). Consider the optimization problem

The optimal value of (3.6) is equal to \(\lambda _i\) if

Similarly, Lasserre’s semidefinite relaxations can be applied to solve (3.6). For the orders \(k=k_0,k_0+1,\cdots\), the k-Lasserre relaxation is

The dual problem of (3.8) is

In practice, we usually do not know whether \(\lambda _i\) exists or not. If it exists, how to choose \(\delta\) to satisfy (3.7). The existence of \(\lambda _i\) and the relation (3.7) can be checked by solving the optimization problem

Its optimal value can be computed by semidefinite relaxations like (3.8, 3.9).

Proposition 3.1

Let \(\mathcal {A} \in T^m(\mathbb {R}^n)\). Let \(\lambda _{i-1}\) be the \((i-1)\)-th smallest CZ-eigenvalue of \(\mathcal {A}\). For all \(\delta >0\), we have the following properties:

-

(i)

The relaxation (3.8) is infeasible for some k if and only if \(\lambda _{i-1}+\delta > \lambda _{max}\).

-

(ii)

If \(\chi _i=\lambda _{i-1}\) and \(\lambda _i\) exists, then \(\delta\) satisfies (3.7).

-

(iii)

If \(\chi _i=\lambda _{i-1}\) and (3.8) is infeasible for some k, then \(\lambda _i\) does not exist and \(\lambda _{max}=\lambda _{i-1}\).

Proof

-

(i)

Necessity: Note that every CZ-eigenpair \((\lambda ,\mu )\) of \(\mathcal {A}\) with \(\lambda \ge \lambda _{i-1}+\delta\), the tms \([u]_{2k}\) is always feasible for (3.8). If the relaxation (3.8) is infeasible for some k, then \(\mathcal {A}\) clearly has no CZ-eigenvalues \(\ge \lambda _{i-1}+\delta\). Therefore, \(\lambda _{i-1}+\delta > \lambda _{max}\).

Sufficiency is obvious.

-

(ii)

If \(\chi _i=\lambda _{i-1}\) and \(\lambda _i\) exists, then \(\lambda _{i-1}+\delta <\lambda _i\). Therefore, \(\delta\) satisfies (3.7).

-

(iii)

From (i), we know \(\lambda _{i-1}\le \lambda _{max}<\lambda _{i-1}+\delta\). Owing to \(\chi _i=\lambda _{i-1}\), we get \(\lambda _{max}=\lambda _{i-1}\), otherwise, \(\chi _i=\lambda _{max}>\lambda _{i-1}\). This is a contradiction to \(\chi _i=\lambda _{i-1}\).

\(\square\)

3.3 The semidefinite relaxation algorithm

Let \(Z(\mathcal {A})\) be the set of all the CZ-eigenvalues of \(\mathcal {A}\). If \(Z(\mathcal {A})\) is nonempty and finite, we can compute all the CZ-eigenvalues sequentially as follows. First, we compute the smallest one \(\lambda _1\) by solving the hierarchy of semidefinite relaxations (3.4, 3.5). As shown in Theorem 3.1, this hierarchy converges in finitely many steps. After getting \(\lambda _1\), we solve the hierarchy of (3.8–3.10) for \(i=2\). If \(\chi _2=\lambda _1\) and (3.8) is infeasible for some order k, then \(\lambda _1\) is also the largest eigenvalue. If \(\chi _2=\lambda _1\) and (3.8) is feasible for k big enough, then \(\lambda _2\) is the 2-th smallest CZ-eigenvalue of \(\mathcal {A}\). Otherwise, decrease the value \(\delta\) as \(\delta :=\frac{\delta }{5}\) and solve (3.6 and 3.10) again. After repeating this process for several times, we can always get \(\chi _2=\lambda _{1}\), and the resulting \(\delta\) satisfies (3.7). Repeating this procedure, we can get \(\lambda _3,\lambda _4,\cdots\), if they exist, or we get the largest eigenvalue and stop.

Algorithm 3.1

Step 0. Choose a small positive value \(\delta\) (e.g., 0.05). Set \(i:=1\).

Step 1. Solve the hierarchy (3.4) and get the smallest CZ-eigenvalue \(\lambda _1\).

Step 2. Set \(i:=i+1\) and solve the hierarchy of (3.10). If \(\chi _i=\lambda _{i-1}\), then go to Step 3; If \(\chi _i>\lambda _{i-1}\), let \(\delta :=\frac{\delta }{5}\) and compute (3.10). Repeat this process until (3.7) holds.

Step 3. Solve the hierarchy (3.8). If (3.8) is infeasible for some k, then \(\lambda _{i-1}\) is the largest eigenvalue and stop. Otherwise, we can get the next smallest eigenvalue \(\lambda _{i}\).

Step 4. Go to Step 2.

In the following, we show the asymptotic and finite convergence of Algorithm 3.1.

Theorem 3.1

Let \(\mathcal {A} \in T^m(\mathbb {R}^n)\) and \(Z(\mathcal {A})\) be the set of its CZ-eigenvalues. Then,

-

(i)

The set \(Z(\mathcal {A})=\emptyset\) if and only if the semidefinite relaxation (3.4) is infeasible for some k.

-

(ii)

If the set \(Z(\mathcal {A})\ne \emptyset\), then the smallest CZ-eigenvalue \(\lambda _1\) always exists and

$$\begin{aligned} \lim _{k\rightarrow \infty }\eta _k^{(1)}=\lim _{k\rightarrow \infty }\rho _k^{(1)}=\lambda _1. \end{aligned}$$(3.11)In addition, if \(Z(\mathcal {A})\) is finite, then for all k sufficiently large,

$$\begin{aligned} \eta _k^{(1)}=\rho _k^{(1)}= \lambda _1. \end{aligned}$$(3.12)Suppose \(y^*\) is an optimal solution of (3.4). If there exists \(t \in [k_0,k]\), such that

$$\begin{aligned} rank M_{t-k_0}(y^*)=rank M_t(y^*), \end{aligned}$$(3.13)then there are \(r:= rank M_t(y^*)\) distinct CZ-eigenvectors associated with \(\lambda _1\).

-

(iii)

For \(i\ge 2\), suppose that \(\lambda _i\) exists and \(0<\delta <\lambda _i-\lambda _{i-1}\), then

$$\begin{aligned} \lim _{k\rightarrow \infty }\eta _k^{(i)}=\lim _{k\rightarrow \infty }\rho _k^{(i)}=\lambda _i. \end{aligned}$$(3.14)If the set \(Z(\mathcal {A})\) is finite, then for all k sufficiently large,

$$\begin{aligned} \eta _k^{(i)}=\rho _k^{(i)}= \lambda _i. \end{aligned}$$Suppose \(y^*\) is an optimal solution of (3.8). If there exists \(t \in [k_0,k]\), such that (3.13) holds, then there are \(r:= rank M_t(y^*)\) distinct CZ-eigenvectors associated with \(\lambda _i\).

Proof

-

(i)

Necessity: If \(Z(\mathcal {A})=\emptyset\), then the feasible set K is empty. By Positivstellensatz [1], \(-1 \in I(h)+Pr(g)\). So, when k is big enough, \(-1 \in I_{2k}(h)+Pr_k(g)\), and then the optimization (3.5) is unbounded from above. By duality theory, (3.4) must be infeasible, for all k big enough.

Sufficiency: Assume (3.4) is infeasible for some k. Then \(\mathcal {A}\) has no CZ-eigenpairs. Otherwise, suppose \((\lambda , u)\) is such one CZ-eigenpair. Then the tms \([u]_{2k}\) [see the notation in Sect. 2] is always feasible for (3.4), which is a contradiction. So \(Z(\mathcal {A})=\emptyset\).

-

(ii)

Firstly, we prove the asymptotic convergence. Since \(Z(\mathcal {A})\) is nonempty, then \(\mathcal {A}\) has at least one CZ-eigenvalue. So \(\lambda _1\) always exists. Note that \(x^Tx-1\) is a polynomial in the tuple h, then \(-(x^Tx-1)^2\in I(h)\) and the set \(-(x^Tx-1)^2\ge 0\) is compact. The ideal I(h) is archimedean, which implies that \(I(h)+Q(g)\) is also archimedean. So K is compact, then \(\{\eta _k^{(1)}\}\) asymptotically converges to \(\lambda _1\) (cf. [11]). Therefore, the asymptotic convergence (3.11) is obtained.

Next, we prove the finite convergence. Since \(Z(\mathcal {A})\) is finite, let \(Z(\mathcal {A})=\{\lambda _1,\lambda _2,\cdots ,\lambda _L\}\) and \(\lambda _1<\lambda _2<\cdots <\lambda _L\). Let \(\varphi _1,\varphi _2,\cdots ,\varphi _L\) be the univariate real polynomials in t such that \(\varphi _i(\lambda _j)=0\) when \(i\ne j\) and \(\varphi _i(\lambda _j)=1\) when \(i= j\). For \(i=1,2,\cdots ,L\), let

$$\begin{aligned} a_i:=(\lambda _i-\lambda _1)(\varphi _i(f(x)))^2. \end{aligned}$$Let \(a:=a_1+\cdots +a_L\), then \(a\in \Sigma [x]\). The polynomial

$$\begin{aligned} \hat{f}:=f-\lambda _1-a \end{aligned}$$vanishes identically on K. By Positivstellensatz (cf. [1], Corollary 4.4.3), there exists integers \(\ell >0\) and \(N_1>0\) such that

$$\begin{aligned} q\in Pr_{N_1}(g), \hat{f}^{2\ell }+q\in I_{N_1}(h), \end{aligned}$$where \(Pr_{N_1}(g)\) denotes the \(N_1\)-th truncated preordering generated by the tuple g (cf. [1]). For all \(\varepsilon >0\) and \(c>0\), we can write \(\hat{f}+\varepsilon =\phi _\varepsilon +\theta _\varepsilon\), where

$$\begin{aligned}&\phi _\varepsilon =-c\varepsilon ^{1-2\ell }(\hat{f}^{2\ell }+q), \\&\theta _\varepsilon =\varepsilon (1+\hat{f}/\varepsilon +c(\hat{f}/\varepsilon )^{2\ell })+c\varepsilon ^{1-2\ell }q. \end{aligned}$$By Lemma 2.1 of [19], when \(c\ge \frac{1}{2\ell }\), there exists \(N\ge N_1\) such that for all \(\varepsilon >0\),

$$\begin{aligned} \phi _\varepsilon \in I_{2N}(h), \theta _\varepsilon \in Pr_N(g). \end{aligned}$$Therefore, we have

$$\begin{aligned} f-(\lambda _1-\varepsilon )=\phi _\varepsilon +\sigma _\varepsilon , \end{aligned}$$where \(\sigma _\varepsilon =\theta _\varepsilon +a \in Pr_N(g)\) for all \(\varepsilon >0\). This implies that for all \(\varepsilon >0\), \(\gamma =\lambda _1-\varepsilon\) is feasible in (3.5) for the order N. Thus, we get \(\eta _N^{(1)}\ge \lambda _1\). Note that \(\eta _k^{(1)}\le \rho _k^{(1)} \le \lambda _1\) for all k and the sequence \(\{\eta _k^{(1)}\}\) is monotonically increasing. So, (3.12) must be true for all \(k\ge N\).

Note that \(L_h^{(t)}(y^*)=0, M_t(y^*)\succeq 0, L_{g}^{(t)}(y^*)\succeq 0~ (t\le k)\). When (3.13) is satisfied, there exist \(r:= rank M_t(y^*)\) distinct vectors \(u_1,\cdots ,u_r \in K\) and scalars \(c_1,\cdots ,c_r\) [20] such that

$$\begin{aligned}&y^*|_{2t}=c_1[u_1]_{2t}+\cdots +c_r[u_r]_{2t}, \\&\quad c_1>0,\cdots ,c_r>0. \end{aligned}$$The constraint \(\langle 1,y^*\rangle =1\) implies \(c_1+\cdots +c_r=1\). We have

$$\begin{aligned} c_1f(u_1)+\cdots +c_rf(u_r)=\langle f,y^*|_{2t}\rangle =\langle f,y^*\rangle =\rho _k^{(1)}\le \lambda _1. \end{aligned}$$Since every \(f(u_i)\ge \lambda _1\), then we have

$$\begin{aligned} f(u_i)=\lambda _1,i=1,\cdots ,r. \end{aligned}$$So each \(u_i\) is a CZ-eigenvector associated to \(\rho _k^{(1)}=f(u_1)=\cdots =f(u_r)=\lambda _1\).

-

(iii)

For \(i\ge 2\), if \(0<\delta <\lambda _i-\lambda _{i-1}\) holds, the optimal value of (3.6) is equal to \(\lambda _i\). The rest of the proof is the similar to that of Theorem 3.1(ii).

\(\square\)

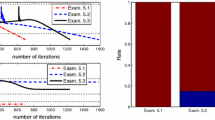

4 Numerical experiments

In this section, we present the numerical experiments to show how to compute all the CZ-eigenvalues of tensors. The Lasserre’s semidefinite relaxations are solved by the software GloptiPoly 3 [8] and SeDuMi [30]. The program is coded in MATLAB (2016a). The experiments are implemented on a personal PC with 2.5 GHz and 2.7 GHz, 8.0 GB RAM, using Windows 10 operation system.

Example 4.1

( [31]). A tensor \(\mathcal {A} \in T^3(\mathbb {R}^3)\) is defined by

We apply Algorithm 3.1 and get four CZ-eigenvalues and the associated CZ-eigenvectors:

The computation takes about 3 s.

Example 4.2

Consider the tensor \(\mathcal {A} \in T^3(\mathbb {R}^n)\) such that

For \(n=3\), we apply Algorithm 3.1 and get seven CZ-eigenvalues and the corresponding CZ-eigenvectors:

The computation takes about 5 s.

For \(n=4\), we apply Algorithm 3.1 and get eight CZ-eigenvalues and the corresponding CZ-eigenvectors:

The computation takes about 10 s.

Example 4.3

Consider the diagonal tensor \(\mathcal {A} \in S^4(\mathbb {R}^3)\) such that

We apply Algorithm 3.1 and get seven CZ-eigenvalues and the associated CZ-eigenvectors:

The computation takes about 5 s.

Example 4.4

Consider the tensor \(\mathcal {A} \in T^3(\mathbb {R}^3)\) such that

We apply Algorithm 3.1 and get one CZ-eigenvalue and the corresponding CZ-eigenvector:

The computation takes about 1 s.

Example 4.5

Consider the tensor \(\mathcal {A} \in T^3(\mathbb {R}^3)\) such that

Using Algorithm 3.1, we get one CZ-eigenvalue and the corresponding CZ-eigenvector:

The computation takes about 1 s.

5 Conclusions

In this paper, we propose the semidefinite relaxation algorithm for computing all the CZ-eigenpairs of tensor that has finitely many CZ-eigenvalues, and prove its asymptotic and finite convergence. Numerical experiments demonstrate the efficiency of the proposed algorithm.

References

Bochnak, J., Coste, M., Roy, M.-F.: Real Algebraic Geometry. Springer, New York (1998)

Chen, Z., Qi, L.: A semismooth Newton method for tensor eigenvalue complementarity problem. Comput. Opt. Appl. 65(1), 109–126 (2016)

Chen, Z., Yang, Q., Ye, L.: Generalized eigenvalue complementarity problem for tensors. Mathematics 63(1), 1–26 (2015)

Cui, C., Dai, Y., Nie, J.: All real eigenvalues of symmetric tensors. SIAM J. Matrix Anal. Appl. 35, 1582–1601 (2014)

Curto, R.E., Fialkow, L.A.: Truncated K-moment problems in several variables. J. Oper. Theory 54(1), 189–226 (2005)

Fan, J., Nie, J., Zhou, A.: Tensor eigenvalue complementarity problems. Math. Program Ser. A. 170, 507–539 (2018)

Helton, J.W., Nie, J.: A semidefinite approach for truncated K-moment problems. Found. Comput. Math. 12(6), 851–881 (2012)

Henrion, D., Lasserre, J., Loefberg, J.: GloptiPoly 3: moments, optimization and semidefinite programming. Optim. Methods Softw. 24, 761–779 (2009)

Hu, S., Qi, L.: Algebraic connectivity of an even uniform hypergraph. J. Combinatorial Optim. 24(4), 564–579 (2012)

Kolda, T.G., Mayo, J.R.: Shifted power method for computing tensor eigenpairs. SIAM J. Matrix Anal. Appl. 32(4), 1095–1124 (2011)

Lasserre, J.B.: Global optimization with polynomials and the problem of moments. SIAM J. Optim. 11(3), 796–817 (2001)

Lasserre, J.B.: Moments. Positive Polynomials and Their Applications. Imperial College Press, London (2009)

Laurent, M.: Sums of squares moment matrices and optimization over polynomials. In: Putinar, M., Sullivant, S. (eds.) Emerging Applications of Algebraic Geometry, IMA Volumes in Mathematics and its Applications, pp. 157–270. Springer, Berlin (2009)

Lim, L.H.: Singular values and eigenvalues of tensors: a variational approach. In: Proceedings of the IEEE International Workshop on Computational Advances in Multi-Sensor Addaptive Processing. CAMSAP05, IEEE Computer Society Press, Piscataway, pp 129–132. (2005)

Li, W., Ng, M.: On the limiting probability distribution of a transition probability tensor. Linear Multilinear Algebra 62(3), 362–385 (2014)

Li, X., Ng, M., Ye, Y.: Finding stationary probability vector of a transition probability tensor arising from a higher-orderMarkov chain. Technical Report, Department of Mathematics, Hong Kong Baptist University (2011). http://www.math.hkbu.edu.hk/~mng/tensor-research/report1.pdf

Martins, J.A.C., Pinto da Costa, A.: Stability of finite-dimensional nonlinear elastic systems with unilateral contact and friction. Int. J. Solids Struct. 37(18), 2519–2564 (2000)

Martins, J.A.C., Pinto da Costa, A.: Bifurcations and instabilities in frictional contact problems: theoretical relations, computational methods and numerical results. In: European Congress on Computational Methods in Applied Sciences and Engineering: ECCOMAS (2004)

Nie, J.: Polynomial optimization with real varieties. SIAM J. Opt. 23(3), 1634–1646 (2013)

Nie, J.: Certifying convergence of Lasserres hierarchy via flat truncation. Math. Program. 142, 485–510 (2013)

Nie, J.: Optimality conditions and finite convergence of Lasserre’s hierarchy. Mathematical Program. 146(1–2), 97–121 (2014)

Nie, J., Zhang, X.: Real eigenvalues of nonsymmetric tensors. Comput. Opt. Appl. 70, 1–32 (2018)

Ni, Q., Qi, L., Wang, F.: An eigenvalue method for the positive definiteness identification problem. IEEE Trans. Autom. Control 53, 1096–1107 (2008)

Pinto da Costa, A., Figueiredo, I.N., Martins, J.A.C.: A complementarity eigen problem in the stability analysis of finite dimensional elastic systems with frictional contact. In: Ferris, M., Pang, J.S., Mangasarian, O. (eds.) Complementarity Applications Algorithms and Extensions, pp. 67–83. Kluwer Academic, New York (2001)

Qi, L.: Eigenvalues of a real supersymmetric tensor. J. Symb. Comput. 40(6), 1302–1324 (2005)

Qi, L.: Eigenvalues and invariants of tensors. J Math Anal Appl 325(2), 1363–1377 (2007)

Qi, L., Teo, K.L.: Multivariate polynomial minimization and its application in signal processing. J. Global Optim. 26(4), 419–433 (2003)

Qi, L., Wang, F., Wang, Y.: Z-eigenvalue methods for a global polynomial optimization problem. Math. Program. 118(2), 301–316 (2009)

Qi, L., Yu, G., Wu, E.X.: Higher order positive semi-definite diffusion tensor imaging. SIAM J. Imaging Sci. 3(3), 416–433 (2010)

Sturm, J., SeDuMi, F.: A MATLAB toolbox for optimization over symmetric cones. Optim. Methods Softw. 11–12, 625–65 (1999)

Zhou, G.L., Qi, L., Wu, S.Y.: Efficient algorithms for computing the largest eigenvalue of a nonnegative tensor. Frontiners of Mathematics in China, SP Higher Education Press 8(1), 155–168 (2013)

Acknowledgements

The author is very grateful to School of Mathematical Sciences, Shanghai Jiao Tong University for its support and help. The author would like to thank the associate editor and two anonymous referees for their constructive comments and suggestions.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This work was supported by the Science and Technology Foundation of the Department of Education of Hubei Province (B2020151) and the University-Industry Collaborative Education Program (201901032002).

Rights and permissions

About this article

Cite this article

Zeng, M. Tensor Z-eigenvalue complementarity problems. Comput Optim Appl 78, 559–573 (2021). https://doi.org/10.1007/s10589-020-00248-1

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10589-020-00248-1