Abstract

The exterior Bernoulli problem is rephrased into a shape optimization problem using a new type of objective function called the Dirichlet-data-gap cost function which measures the \(L^2\)-distance between the Dirichlet data of two state functions. The first-order shape derivative of the cost function is explicitly determined via the chain rule approach. Using the same technique, the second-order shape derivative of the cost function at the solution of the free boundary problem is also computed. The gradient and Hessian information is then used to formulate an efficient second-order gradient-based descent algorithm to numerically solve the minimization problem. The feasibility of the proposed method is illustrated through various numerical examples.

1 Introduction

In this note, we are interested in the so-called Bernoulli’s free boundary problem (FBP). The problem, which is considered the prototype of a stationary FBP and is referred to in some of the literature as the Alt–Caffarelli problem (see [1]), finds its origin in the description of free surfaces for ideal fluids [37]. There are, however, numerous other applications leading to similar formulations, for instance, in the context of optimal design, electrochemistry and electrostatics (see [36] and also [35] for further industrial applications).

The Bernoulli problem can be classified into two cases, namely, the exterior Bernoulli FBP and the interior Bernoulli FBP. Here, we focus our attention on the former case. In the exterior problem, a bounded and connected domain \(A \subset {\mathbb {R}}^2\) with a fixed boundary \(\Gamma := \partial A\) and a constant \(\lambda < 0\) are given. The task is to find a bounded connected domain \(B \subset {\mathbb {R}}^2\) with a free boundary \(\Sigma := \partial B\), such that B contains the closure of A, and an associated state function \(u:=u(\Omega )\), where \(\Omega = B\setminus {\bar{A}}\), such that the following overdetermined system of partial differential equations (PDEs) is satisfied:

$$\begin{aligned} -\Delta u = 0 \ {\mathrm{in}} \ \Omega , \quad u = 1 \ {\mathrm{on}} \ \Gamma , \quad u = 0 \ \ {\mathrm{and}} \ \ \partial _{{\mathbf {n}}}{u} = \lambda \ {\mathrm{on}} \ \Sigma . \end{aligned}$$(1)

Here, \(\partial _{{\mathbf {n}}}{u}:=\nabla u \cdot {\mathbf {n}}\) denotes the normal derivative of u and \({\mathbf {n}}\) represents the outward unit normal vector to \(\Sigma\).

The presence of two boundary conditions imposed on the exterior boundary \(\Sigma\) makes the problem difficult to solve. Nevertheless, it is known that (1) admits a classical solution for simply connected bounded domain \(\Omega\), for any given constant \(\lambda < 0\). In addition, the shape solution \(\Omega ^*\) is unique for bounded convex domains A [36] and the free boundary \(\Sigma ^*\) is \(C^{2,\alpha }\) regular (see [47, Theorem 1.1]).

Our main intent in this work is to numerically solve (1) by performing a novel iterative second-order gradient-based optimization procedure. Our approach relies on the method known as shape optimization (see, e.g., [22, 46, 71]) which is already an established tool for solving such free boundary problems. The main idea of this technique is to reformulate the original problem as an optimization problem of the form

$$\begin{aligned} \min _{\Omega } J_0(\Omega , u(\Omega )) \quad \text {subject to} \quad e(u(\Omega )) = 0, \end{aligned}$$(2)

where \(J_0\) denotes a suitable objective functional that depends on a domain \(\Omega\) as well as on a function \(u(\Omega )\), which is the solution of a partial differential equation \(e(u(\Omega)) = 0\) posed on \(\Omega\).

There are different ways to write (1) in the form of (2). A typical approach is to choose one of the boundary conditions on the free boundary to obtain a well-posed state equation, and then track the remaining boundary data in a least-squares sense. Such a formulation has been carried out in several previous investigations; see, for instance, [31, 32, 41, 44, 50, 65, 66]. Alternatively, one can consider an energy-gap type cost function which consists of two auxiliary states: one that is a solution of a pure Dirichlet problem and one that satisfies a mixed Dirichlet–Neumann problem (see, e.g., [9,10,11,12, 33]). The objective function used in such a formulation is sometimes called the Kohn–Vogelius cost functional since Kohn and Vogelius [53] were among the first to use such a functional in the context of inverse problems. Mathematically, these aforementioned formulations are given as follows:

- Dirichlet-data-tracking approach:

$$\begin{aligned} \min _{\Omega } J_1(\Sigma ) \equiv \min _{\Omega } \frac{1}{2} \int _{\Sigma }{u_{\mathrm{N}}^2}{\, \mathrm{d} \sigma } \end{aligned}$$

where the state function \(u_{\mathrm{N}}:=u_{\mathrm{N}}(\Omega )\) is the solution to the mixed Dirichlet–Neumann problem

$$\begin{aligned} -\Delta u_{\mathrm{N}}= 0 \ {\mathrm{in}} \ \Omega , \quad u_{\mathrm{N}}= 1 \ {\mathrm{on}} \ \Gamma , \quad \partial _{{\mathbf {n}}}{u_{\mathrm{N}}} = \lambda \ {\mathrm{on}} \ \Sigma ; \end{aligned}$$(3)

- Neumann-data-tracking approach:

$$\begin{aligned} \min _{\Omega } J_2(\Sigma ) \equiv \min _{\Omega } \frac{1}{2} \int _{\Sigma }{\left( \frac{\partial u_{\mathrm{D}}}{\partial {\mathbf {n}}} - \lambda \right) ^2}{\, \mathrm{d} \sigma } \end{aligned}$$

where the state function \(u_{\mathrm{D}}:=u_{\mathrm{D}}(\Omega )\) is the solution to the pure Dirichlet problem

$$\begin{aligned} -\Delta u_{\mathrm{D}}= 0 \ {\mathrm{in}} \ \Omega , \quad u_{\mathrm{D}}= 1 \ {\mathrm{on}} \ \Gamma , \quad u_{\mathrm{D}}= 0 \ {\mathrm{on}} \ \Sigma ; \end{aligned}$$(4)

- Energy-gap type cost functional approach:

$$\begin{aligned} \min _{\Omega } J_3(\Omega ) \equiv \min _{\Omega } \frac{1}{2} \int _{\Omega }{\left| \nabla \left( u_{\mathrm{N}}- u_{\mathrm{D}}\right) \right| ^2}{\, \mathrm{d} x} \end{aligned}$$

where the state functions \(u_{\mathrm{N}}\) and \(u_{\mathrm{D}}\) satisfy systems (3) and (4), respectively.

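In this study, one of our main objectives is to introduce yet another shape optimization reformulation of (1) which, to the best of our knowledge, has not been studied in any previous investigation. Similar to the cost functional \(J_3\), we make use of a cost function consisting of two auxiliary states \(u_{\mathrm{N}}\) and \(u_{\mathrm{R}}\):

$$\begin{aligned} \min _{\Omega } J(\Sigma ) \equiv \min _{\Omega } \frac{1}{2} \int _{\Sigma }{\left( u_{\mathrm{N}}- u_{\mathrm{R}}\right) ^2}{\, \mathrm{d} \sigma } \end{aligned}$$(5)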

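where the state function \(u_{\mathrm{N}}\) is the solution of (3) and \(u_{\mathrm{R}}:=u_{\mathrm{R}}(\Omega )\) satisfies, for a given strictly positive (constant) \(\beta\), the following equivalent form of (1) with a Robin boundary condition:

$$\begin{aligned} -\Delta u_{\mathrm{R}}= 0 \ {\mathrm{in}} \ \Omega , \quad u_{\mathrm{R}}= 1 \ {\mathrm{on}} \ \Gamma , \quad \partial _{{\mathbf {n}}}{u_{\mathrm{R}}} + \beta u_{\mathrm{R}}= \lambda \ {\mathrm{on}} \ \Sigma . \end{aligned}$$(6)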

Clearly, if \((u,\Omega )\) is a solution of (1), then \(u_{\mathrm{N}}=u_{\mathrm{R}}=u\); therefore, \(J(\Sigma ) = 0\). Conversely, if \(J(\Sigma ) = 0\), then \(u_{\mathrm{N}}= u_{\mathrm{R}}\) on \(\Sigma\). Since \(u_{\mathrm{N}}- u_{\mathrm{R}}\) is harmonic in \(\Omega\) and vanishes on both \(\Gamma\) and \(\Sigma\), it follows that \(u_{\mathrm{N}}= u_{\mathrm{R}}\) in \(\Omega\). Hence, the equation \(\partial _{{\mathbf {n}}}{(u_{\mathrm{N}}- u_{\mathrm{R}})} = \beta u_{\mathrm{R}}= 0\) on \(\Sigma\) and the assumption \(\beta > 0\) imply that \(u_{\mathrm{R}}= u_{\mathrm{N}}= 0\) on \(\Sigma\). Consequently, \(u = u_{\mathrm{N}}= u_{\mathrm{R}}\) is a solution of problem (1). We remark that, in the limiting case as \(\beta\) goes to infinity, the PDE system (6) transforms into the pure Dirichlet problem (4) (this means that \(u_{\mathrm{R}}= 0\) on \(\Sigma\)), leading us to recover from (5) the classical Dirichlet-data-tracking formulation of the FBP (1).
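The equivalence just described can be illustrated in a geometry where both states are explicit. The following is a minimal sketch, not taken from the source: it assumes that \(\Gamma\) and \(\Sigma\) are concentric circles of radii \(r < R\), that the Robin condition reads \(\partial _{{\mathbf {n}}}{u_{\mathrm{R}}} + \beta u_{\mathrm{R}}= \lambda\) on \(\Sigma\), and that \(J(\Sigma ) = \frac{1}{2}\Vert u_{\mathrm{N}}- u_{\mathrm{R}}\Vert ^2_{L^2(\Sigma )}\). Under these assumptions both states are of the form \(1 + b \log (\rho /r)\), and all function names below are hypothetical.

```python
import math

def u_N_trace(R, r, lam):
    """Value on |x| = R of the radial solution of (3): u_N(rho) = 1 + lam*R*log(rho/r)."""
    return 1.0 + lam * R * math.log(R / r)

def u_R_trace(R, r, lam, beta):
    """Value on |x| = R of the radial solution of the Dirichlet-Robin problem.

    Assumes the Robin condition d_n u_R + beta*u_R = lam on the outer circle.
    """
    L = math.log(R / r)
    b = (lam - beta) / (1.0 / R + beta * L)  # coefficient of log(rho/r)
    return 1.0 + b * L

def J_gap(R, r, lam, beta):
    """Dirichlet-data-gap cost: 0.5 * (circumference) * (u_N - u_R)^2 on |x| = R."""
    w = u_N_trace(R, r, lam) - u_R_trace(R, r, lam, beta)
    return 0.5 * 2.0 * math.pi * R * w * w

def J_tracking(R, r, lam):
    """Dirichlet-data-tracking cost J_1: 0.5 * (circumference) * u_N^2 on |x| = R."""
    return 0.5 * 2.0 * math.pi * R * u_N_trace(R, r, lam) ** 2

def free_boundary_radius(r, lam, iterations=200):
    """Bisection for the radius R > r at which u_N vanishes on the outer circle (lam < 0)."""
    lo, hi = r, 2.0 * r
    while u_N_trace(hi, r, lam) > 0.0:   # enlarge the bracket until the sign changes
        hi *= 2.0
    for _ in range(iterations):
        mid = 0.5 * (lo + hi)
        if u_N_trace(mid, r, lam) > 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

if __name__ == "__main__":
    r, lam = 1.0, -1.0
    R_star = free_boundary_radius(r, lam)
    print("free boundary radius:", R_star)
    for beta in (0.5, 1.0, 10.0):
        print("beta =", beta, " J at R*:", J_gap(R_star, r, lam, beta))
    print("J (beta = 1e8) vs J_1 at R = 2:", J_gap(2.0, r, lam, 1e8), J_tracking(2.0, r, lam))
```

In this radial setting the gap cost vanishes at the free boundary radius for every \(\beta > 0\), and for very large \(\beta\) it is numerically indistinguishable from the tracking cost \(J_1\), in line with the limiting behaviour noted above.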

We stress that the formulations presented above can also be applied to Poisson problems with overdetermined non-homogeneous (sufficiently smooth) boundary conditions. Here, however, we only inspect the free boundary problem (1) in order to simplify the discussion.

Motivation Our reason for considering the new cost functional \(J(\Sigma )\) stems from several previous related works. In the study carried out in [67], we considered the cost functional \(J_2\) with a different state constraint problem. More precisely, we replaced the state variable \(u_{\mathrm{D}}\) with \(u_{\mathrm{R}}\) which is the solution of the mixed Dirichlet–Robin problem (6). We found that such a modification of the problem setup actually yields more regularity in the solution of the associated adjoint state problem. In fact, the adjoint state associated to the shape optimization problem “\(\min _{\Omega } \frac{1}{2} \Vert \partial _{{\mathbf {n}}}{u_{\mathrm{R}}} - \lambda \Vert ^2_{L^2(\Sigma )}\) subject to (6)” enjoys the same degree of regularity (depending of course on the regularity of \(\Omega\)) as that of \(u_{\mathrm{R}}\). Also, we observed, through various numerical examples, that this new state constraint yields faster and more stable convergence of the approximate solution to the exact solution (both in the case of the exterior and the interior Bernoulli FBP) than the classical setting “\(\min _{\Omega } \frac{1}{2} \Vert \partial _{{\mathbf {n}}}{u_{\mathrm{D}}} - \lambda \Vert ^2_{L^2(\Sigma )}\) subject to (4).” On the other hand, in [68], we proposed a modification of the energy-gap cost functional approach for the exterior Bernoulli FBP (1). The optimization problem we put forward in [68] utilizes a similar functional to \(J_3\), but, instead of (4), we took \(u_{\mathrm{R}}\) as one of the state constraints. More precisely, we considered the problem “\(\min _{\Omega } J_4(\Omega ) \equiv \min _{\Omega } \frac{1}{2} |u_{\mathrm{R}}- u_{\mathrm{N}}|^2_{H^1(\Omega )}\), subject to (3) and (6)” (where \(|\cdot |_{H^1(\Omega )}\) denotes the \(H^1(\Omega )\)-seminorm; that is, \(|\cdot |_{H^1(\Omega )} := \Vert \nabla (\cdot )\Vert _{L^2(\Omega )}\)) as a shape optimization reformulation of (1). 
We emphasize that under this formulation, and assuming appropriate conditions on the Robin coefficient \(\beta\) as well as on the exterior boundary \(\Sigma\), we were able to express the first-order shape derivative of \(J_4\) at \(\Omega\) along a given deformation field in terms of just the state constraint \(u_{\mathrm{N}}\). This in turn allowed us to also reduce the number of PDE constraints to be solved when applying a second-order method to numerically resolve the free boundary problem (1) (see Proposition 1 and Corollary 2 in [68]). We stress that such a reduction in the number of constraints in the optimization setup is certainly advantageous from a numerical standpoint. Indeed, the numerical results presented in [68] show that the proposed modification requires less computing time per iteration to numerically solve (1) than the classical formulation “\(\min _{\Omega } \frac{1}{2} |u_{\mathrm{D}}- u_{\mathrm{N}}|^2_{H^1(\Omega )}\) subject to (3) and (4)” (as expected). Meanwhile, in a related problem, Laurain and Privat [55] examined a shape optimization formulation of a Bernoulli-type problem with geometric constraints. In their work, the domain \(\Omega\), which is simply connected, is constrained to lie in the half space determined by \(x_1 \geqslant 0\). The boundary of the solution domain is also forced to contain a segment of the hyperplane \(\{x_1 = 0\}\) where a non-homogeneous Dirichlet condition is imposed. Then, the authors seek the solution of a partial differential equation satisfying a Dirichlet and a Neumann boundary condition simultaneously on the free boundary. The cost function examined by the authors in [55] has the form \(J_5(\Omega ) := \Vert u_{2,\varepsilon } - u_1\Vert _{L^2(\Omega )}^2\), where \(u_{2,\varepsilon }\) satisfies a mixed Dirichlet–Robin boundary problem while \(u_1\) is a solution of a pure Dirichlet problem. 
Here, \(u_{2,\varepsilon }\) has the property that “\(u_{2,\varepsilon } \rightarrow u_2\) as \(\varepsilon \rightarrow 0\),” where \(u_2\) is the unique (weak) solution of a mixed Dirichlet–Neumann problem. We point out here that, as opposed to the formulation minimizing \(J_4\) whose first-order shape derivative only depends on \(u_{\mathrm{N}}\) (under appropriate conditions on \(\beta\) and the exterior boundary \(\Sigma\)), the cost function \(J_5\) actually has a first-order shape derivative that depends on the solutions of four PDEs (two state problems and two adjoint state problems).

Besides the above statements, we mention that minimizing \(J_4(\Omega )\) over the set of admissible domains \({\mathcal {O}}_\mathrm{ad}\) (see Sect. 4) of \(\Omega\) is, to some extent, equivalent to finding the optimal shape solution to the optimization problem “\(\min _{\Omega } J(\Sigma )\) subject to (3) and (6),” and we explained it as follows. Firstly, for convenience, let us introduce the notation “\(\lesssim\)”. This means that if \(P \lesssim Q\), then we can find some constant \(c > 0\) such that \(P \leqslant c Q\) (obviously, \(Q \gtrsim P\) is defined as \(P \lesssim Q\)). Then, for an open bounded domain \(\Omega \subset {\mathbb {R}}^2\) with Lipschitz boundary (in this study, we shall in fact assume that \(\Omega\) is \(C^{2,1}\) regular), the inequality \(\Vert v\Vert _{L^2(\partial \Omega )} \lesssim \Vert v \Vert _{H^1(\Omega )}\) holds, for all \(v \in H^1(\Omega )\). We note that this bound clearly exhibits the compact embedding of \(H^1(\Omega )\) in \(L^2(\partial \Omega )\) (see [56, p. 159]) and it actually follows from the well-known trace theorem (see, e.g., [57, Theorem 3.3.7, p. 102], [59, Theorem 5.5, p. 95]) coupled with the compact embedding of \(H^{1/2}(\partial \Omega )\) in \(L^2(\partial \Omega )\) (cf. [69, Theorem, 2.5.5, p. 61]). Moreover, it is not hard to see from this result that we also have the relation \(\Vert v\Vert _{L^2(\Gamma )} \lesssim \Vert v \Vert _{H^1(\Omega )}.\) This inequality shows that the set \(H^1_{\Gamma ,0}(\Omega ) = \{v \in H^1(\Omega ) : v=0 \text { on }\Gamma \}\) is strongly closed in \(H^1(\Omega )\) and, in addition, a convex set. From [19, p. 54], for instance, we know that strongly closed convex sets are also weakly closed (see also [17, Lemma 3.1.15, p. 119]). Hence, the weak convergence \(v_{n_k} \rightharpoonup v\) implies that v is in fact in the same set \(H^1_{\Gamma ,0}(\Omega )\). Furthermore, we note that we may actually prove (following the proof of [43, Lemma 2.19, p. 
62]) that \(|v|_{H^1(\Omega )} = \Vert \nabla v\Vert _{L^2(\Omega )} \gtrsim \Vert v \Vert _{H^1(\Omega )},\) for all \(v \in H^1_{\Gamma ,0}(\Omega )\). We note that this bound in fact shows that the \(H^1(\Omega )\)-seminorm \(|\cdot |_{H^1(\Omega )}\) is actually equivalent to the \(H^1(\Omega )\)-norm on \(H^1_{\Gamma ,0}(\Omega )\). Lastly, we mention that we can also verify, possibly by way of contradiction, that the norm

on the other hand, is equivalent to the usual Sobolev \(H^1(\Omega )\)-norm. Thus, by these results, taking \(v = u_{\mathrm{N}}- u_{\mathrm{R}}\in H^1_{\Gamma ,0}(\Omega )\), we can deduce the sequence of inequalities

It should also be recognized that the above relation is a mere consequence of the inequality \(\Vert u_{\mathrm{N}}- u_{\mathrm{R}}\Vert _{L^2(\Sigma )}^2 \lesssim \Vert u_{\mathrm{N}}- u_{\mathrm{R}}\Vert _{H^{1/2 + \varepsilon }(\Omega )}^2\) which holds true for any \(\varepsilon > 0\) due to the trace theorem. This observation further gives us the motivation to consider minimizing \(J(\Sigma )\), subject to (3) and (6), over the set of admissible domains for \(\Omega\) to numerically solve the free boundary problem (1).

The minimization problem (5) can be carried out numerically using different computational strategies [67]. Standard algorithms to minimize J utilize gradient information when using a first-order method and also use the Hessian when applying second-order methods. So, in order for us to accomplish our main objective, we first need to carry out the sensitivity analysis of the cost functional \(J(\Sigma )\) with respect to a local perturbation of the domain \(\Omega\). Accordingly, we derive the first- and the second-order shape derivatives of J through the chain rule approach. This requires, beforehand, the expressions for the shape derivatives of the states \(u_{\mathrm{N}}\) and \(u_{\mathrm{R}}\). Of course, there are other ways to obtain the shape derivative of J, such as the technique used in [33]. However, the method employed by the authors in [33], which was inspired by [25, 26], restricts the results to starlike domains. Another method could be to use only the Eulerian derivatives [22] of the states and follow [12], or apply the so-called rearrangement method, first used in [51], to obtain the shape derivative of J. We emphasize that the former approach applies not only to starlike domains but also to more general \(C^{k,\alpha }\) domains. On the other hand, the rearrangement method provides a rigorous computation of the shape derivatives of cost functionals using only the Hölder continuity of the state variables, bypassing the computation of the material and shape derivatives of states (see, e.g., [10, 44, 50]). Further, this method requires less regularity of the domain than the classical chain rule approach. Here, we opted to apply the chain rule approach since the shape derivatives of \(u_{\mathrm{N}}\) and \(u_{\mathrm{R}}\) are already available in the literature (see, e.g., [11] and [72], respectively). 
In addition to these previously mentioned techniques, we remark that the shape gradient of J can also be computed using the well-known minimax formulation developed in [20]. Similar to the rearrangement method, this strategy in computing shape derivatives of cost functionals does not require the knowledge of the shape derivative of the states as it naturally introduces the use of adjoint states to derive the expression for the shape derivative of the cost; see, for instance, [65, 66].
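The difference between using only gradient information and also using the Hessian can be seen in a one-parameter analogue of the shape problem. The sketch below is an illustration under stated assumptions and is not the algorithm of this paper: \(\Gamma\) and \(\Sigma\) are taken to be concentric circles of radii \(r = 1\) and \(R\), the cost is assumed to be \(J(R) = \pi R\,(u_{\mathrm{N}}- u_{\mathrm{R}})^2\) with the closed-form radial traces of the two states (with the Robin condition \(\partial _{{\mathbf {n}}}{u_{\mathrm{R}}} + \beta u_{\mathrm{R}}= \lambda\)), and central finite differences stand in for the shape gradient and shape Hessian. All names and parameter values are hypothetical.

```python
import math

R_IN, LAM, BETA = 1.0, -1.0, 1.0  # hypothetical data: inner radius r, lambda < 0, beta > 0

def gap_cost(R):
    """J(R) = pi * R * (u_N - u_R)^2 on |x| = R, using closed-form radial traces."""
    L = math.log(R / R_IN)
    u_n = 1.0 + LAM * R * L             # trace of u_N on the outer circle
    u_r = u_n / (1.0 + BETA * R * L)    # trace of u_R on the outer circle
    return math.pi * R * (u_n - u_r) ** 2

def d1(f, x, h=1e-4):
    """Central-difference approximation of f'(x) (stand-in for the shape gradient)."""
    return (f(x + h) - f(x - h)) / (2.0 * h)

def d2(f, x, h=1e-4):
    """Central-difference approximation of f''(x) (stand-in for the shape Hessian)."""
    return (f(x + h) - 2.0 * f(x) + f(x - h)) / (h * h)

def gradient_descent(R0, step=0.05, tol=1e-10, max_iter=1000):
    """First-order iteration R <- R - step * J'(R); returns (R, iterations used)."""
    R = R0
    for k in range(1, max_iter + 1):
        delta = step * d1(gap_cost, R)
        R -= delta
        if abs(delta) < tol:
            return R, k
    return R, max_iter

def newton(R0, tol=1e-10, max_iter=50):
    """Second-order iteration R <- R - J'(R) / J''(R); returns (R, iterations used)."""
    R = R0
    for k in range(1, max_iter + 1):
        delta = d1(gap_cost, R) / d2(gap_cost, R)
        R -= delta
        if abs(delta) < tol:
            return R, k
    return R, max_iter

if __name__ == "__main__":
    print("gradient descent:", gradient_descent(2.0))
    print("Newton          :", newton(2.0))
```

Both iterations start from \(R = 2\) and approach the same radius; the Newton-type update needs noticeably fewer steps in this toy setting, at the price of evaluating second-order information in every step.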

The plan of the paper is as follows. In Sect. 2, we describe the weak formulations of the state equations and briefly discuss the existence, uniqueness and regularity of their solutions. In Sect. 3, we recall a few basic concepts from shape calculus and give the shape derivatives of the states. Then, we compute the first-order shape derivative of the cost J through the chain rule approach, followed by the computation of its corresponding second-order shape derivative at the solution of the free boundary problem (1). Also, we briefly discuss the ill-posedness of the proposed shape optimization formulation by inspecting the shape Hessian form at a critical shape. Meanwhile, in Sect. 4, we examine the existence of an optimal solution to the minimization problem under consideration. After that, in Sect. 5, we describe how the gradient and Hessian information can be utilized in formulating an efficient boundary variation algorithm to numerically solve the present optimization problem. Finally, we demonstrate the feasibility of the newly proposed shape optimization approach by solving some concrete problems. Also, to illustrate the efficiency of the proposed method, we compare our numerical results with the results obtained by the classical Dirichlet-data-tracking cost functional approach. We end the paper with a brief conclusion given in Sect. 6.

2 Preliminaries

We first review an essential quality of the state solutions which is vital in guaranteeing the existence of their shape derivatives.

2.1 Weak formulation of the state equations

The respective variational formulations of the state problems (3) and (6) are stated as follows.

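Find \(u_{\mathrm{N}}\in H^1(\Omega )\), with \(u_{\mathrm{N}}= 1\) on \(\Gamma\), such that

$$\begin{aligned} \int _{\Omega }{\nabla u_{\mathrm{N}}\cdot \nabla \varphi }{\, \mathrm{d} x} = \lambda \int _{\Sigma }{\varphi }{\, \mathrm{d} \sigma }, \quad \forall \varphi \in H^1_{\Gamma ,0}(\Omega ); \end{aligned}$$(7)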

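Find \(u_{\mathrm{R}}\in H^1(\Omega )\), with \(u_{\mathrm{R}}= 1\) on \(\Gamma\), such that

$$\begin{aligned} \int _{\Omega }{\nabla u_{\mathrm{R}}\cdot \nabla \varphi }{\, \mathrm{d} x} + \int _{\Sigma }{\beta u_{\mathrm{R}}\varphi }{\, \mathrm{d} \sigma } = \lambda \int _{\Sigma }{\varphi }{\, \mathrm{d} \sigma }, \quad \forall \varphi \in H^1_{\Gamma ,0}(\Omega ), \end{aligned}$$(8)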

where \(H^1_{\Gamma ,0}(\Omega )\) is the space of test functions defined in the introduction. It is well known that the variational equation (7) admits a unique solution in \(H^1(\Omega )\), while it can easily be verified (for instance, by means of the Lax–Milgram theorem) that (8) also has a unique solution in \(H^1(\Omega )\) (see [39, 58]).

Remark 1

We emphasize that, since \(\beta > 0\), uniqueness of the weak solution \(u_{\mathrm{R}}\in H^1(\Omega )\) is guaranteed for the mixed Robin-Dirichlet problem (6). Moreover, we note that we may actually consider \(\beta\) to be a function on \(\Sigma\) instead of just a positive constant. In this case, however, we require \(\beta :=\beta (x)\) to be at least an \(L^{\infty }\) function on \(\Sigma\) (i.e., \(\beta \in L^{\infty }(\Sigma )\)) and to be positive almost everywhere on the free boundary to ensure uniqueness of the weak solution to (6) (cf., e.g., [58, Lemma 7.36.3, p. 617]). In this regard, we mention here in advance that in Section 3, we will in fact consider the mean curvature of the free boundary \(\Sigma\) as the function \(\beta\). Evidently, \(\beta = \kappa\) belongs to \(L^{\infty }(\Sigma )\) because of Rademacher’s theorem (recall that \(\Omega\), by assumption, is \(C^{2,1}\) regular). Hence, the first requirement for the existence of a unique weak solution to (6) is satisfied. However, the condition that \(\kappa (x) \geqslant 0\) on \(\Sigma\) only holds for convex domains. Nevertheless, this is not an issue when the domain A (whose boundary is represented by \(\Gamma\)) is convex because, according to [48, Theorem 2.1] (and the references therein), when A is convex, then so is the unique solution domain \(\Omega ^*\) to the free boundary problem (1).

2.2 Higher regularity of the state solutions

The unique solution \(u_{\mathrm{N}}\) of the PDE system (3) actually possesses higher regularity if \(\Omega\) is assumed to be at least \(C^{1,1}\) regular. In fact, the solution is also in \(H^2(\Omega )\) in this case, and in general, if \(\Omega\) is of class \(C^{k+1,1}\), where k is a non-negative integer, then \(u_{\mathrm{N}}\) is \(H^{k+2}\) regular. This claim can easily be verified since the fixed boundary \(\Gamma\) and the free boundary \(\Sigma\) are disjoint (see, e.g., [10, Theorem 29]). Analogously, the unique solution \(u_{\mathrm{R}}\in H^1(\Omega )\) of (6) also has higher regularity depending on the degree of smoothness of \(\Omega\). More precisely, if \(\Omega\) is of class \(C^{k+1,1}\) (again k is a non-negative integer), then \(u_{\mathrm{R}}\) is also an element of \(H^{k+2}(\Omega )\) (see, e.g., [52, Remark 3.5]). For more details about the existence and uniqueness of solutions to mixed Robin-Dirichlet problems in \(W^{s,2}\) for bounded domains in \({\mathbb {R}}^{{\mathrm{d}}}\), we refer the reader to [58, Section 7.36].

3 Shape sensitivity analysis of the states and cost function

Let us consider a bounded and connected domain \(U \supset {\overline{\Omega }}\) and a family of deformation fields

Clearly, every \({\mathbf {V}}\in \Theta\) forces \(\Gamma\) to remain invariant after a deformation since \({\mathbf {V}}\) vanishes on \(\Gamma\). Hence, \(\Gamma\) is a component of the boundary of any perturbation of \(\Omega\). In this work, every admissible perturbation of the reference domain \(\Omega\) is described as follows. Given an element of \(\Theta\), we perturb \(\Omega\) by means of the so-called perturbation of the identity operator (see, e.g., [22, Section 2.5.2, p. 147] or [10]):

$$\begin{aligned} T_t(x) = x + t {\mathbf {V}}(x). \end{aligned}$$

For sufficiently small t and for each \({\mathbf {V}}\in \Theta\), the operator \(T_t\) can be shown to be a \(C^{2,1}\) diffeomorphism from \(\Omega\) onto its image (cf. [71]).

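With the above definition of \(\Omega _t:=T_t(\Omega )\), the state solutions \(u_{\mathrm{N}t}\) and \(u_{\mathrm{R}t}\) satisfy

$$\begin{aligned} -\Delta u_{\mathrm{N}t}= 0 \ {\mathrm{in}} \ \Omega _t, \quad u_{\mathrm{N}t}= 1 \ {\mathrm{on}} \ \Gamma _t, \quad \partial _{{\mathbf {n}}_t}{u_{\mathrm{N}t}} = \lambda \ {\mathrm{on}} \ \Sigma _t \end{aligned}$$

and

$$\begin{aligned} -\Delta u_{\mathrm{R}t}= 0 \ {\mathrm{in}} \ \Omega _t, \quad u_{\mathrm{R}t}= 1 \ {\mathrm{on}} \ \Gamma _t, \quad \partial _{{\mathbf {n}}_t}{u_{\mathrm{R}t}} + \beta u_{\mathrm{R}t}= \lambda \ {\mathrm{on}} \ \Sigma _t, \end{aligned}$$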

respectively, where \({\mathbf {n}}_t\) is the unit outward normal to \(\Sigma _t\). Here, we can actually drop t in \(\Gamma _t\) because \(\Gamma _t = \Gamma\) for all t. Note that for \(t=0\), we recover the reference domain \(\Omega := \Omega _0\), with fixed boundary \(\Gamma := \Gamma _0\) and free boundary \(\Sigma := \Sigma _0\).

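Next, let us recall some key definitions from shape calculus. We say that the function \(u(\Omega )\) has a material derivative \({\dot{u}}\) and a shape derivative \(u'\) at zero in the direction \({\mathbf {V}}\) if the limits

$$\begin{aligned} {\dot{u}} = \lim _{t \searrow 0} \frac{u(\Omega _t) \circ T_t - u(\Omega )}{t}, \qquad u' = \lim _{t \searrow 0} \frac{u(\Omega _t) - u(\Omega )}{t} \end{aligned}$$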

exist, respectively, where \((u(\Omega _t) \circ T_t)(x) = u(\Omega _t)(T_t(x))\). These expressions are related by

$$\begin{aligned} u' = {\dot{u}} - \nabla u \cdot {\mathbf {V}}, \end{aligned}$$

provided that \(\nabla u \cdot {\mathbf {V}}\) exists in some appropriate function space [22, 71]. In general, if \({\dot{u}}\) and \(\nabla u \cdot {\mathbf {V}}\) both exist in the Sobolev space \(W^{m,p}(\Omega )\), then \(u'\) also exists in that space.
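The relation between the material and the shape derivative, namely \(u' = {\dot{u}} - \nabla u \cdot {\mathbf {V}}\), can be checked numerically in a case where everything is explicit. The sketch below rests on assumptions that are not part of the source: \(\Gamma\) and \(\Sigma\) are concentric circles of radii \(r\) and \(R\), the perturbation is \(T_t(x) = x + t{\mathbf {V}}(x)\) with the radial field \({\mathbf {V}}(x) = v(|x|)\, x/|x|\), \(v(\rho ) = (\rho - r)/(R - r)\), so that \(\Gamma\) stays fixed and the outer radius becomes \(R + t\), and the state is the radial solution \(u_{\mathrm{N}}(\rho ) = 1 + \lambda R \log (\rho /r)\) of (3). Central differences in \(t\) replace the limits; all names and parameter values are hypothetical.

```python
import math

R_IN, R_OUT, LAM = 1.0, 2.0, -1.0  # hypothetical data: radii r < R and lambda < 0

def v(rho):
    """Radial speed of the deformation field: 0 on Gamma (rho = r), 1 on Sigma (rho = R)."""
    return (rho - R_IN) / (R_OUT - R_IN)

def u_N(rho, outer_radius):
    """Radial solution of (3) on the annulus r < |x| < outer_radius."""
    return 1.0 + LAM * outer_radius * math.log(rho / R_IN)

def shape_derivative_fd(rho, t=1e-6):
    """Difference quotient of u(Omega_t) at a fixed point: approximates u'."""
    return (u_N(rho, R_OUT + t) - u_N(rho, R_OUT - t)) / (2.0 * t)

def material_derivative_fd(rho, t=1e-6):
    """Difference quotient of u(Omega_t) o T_t at a fixed point: approximates u-dot."""
    plus = u_N(rho + t * v(rho), R_OUT + t)
    minus = u_N(rho - t * v(rho), R_OUT - t)
    return (plus - minus) / (2.0 * t)

def transport_term(rho):
    """grad(u_N) . V at radius rho, using du_N/drho = lam * R / rho."""
    return (LAM * R_OUT / rho) * v(rho)

if __name__ == "__main__":
    for rho in (1.2, 1.5, 1.9):
        lhs = shape_derivative_fd(rho)
        rhs = material_derivative_fd(rho) - transport_term(rho)
        print(rho, lhs, rhs, LAM * math.log(rho / R_IN))
```

In this setting the shape derivative is \(\lambda \log (\rho /r)\), and the three printed values in each row should agree up to discretization error.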

3.1 Shape derivative of the states

To establish the existence of the shape derivative of J, one needs to show that the material and shape derivatives of the states \(u_{\mathrm{N}}\) and \(u_{\mathrm{R}}\) exist and then apply the chain rule. In fact, the shape derivatives of \(u_{\mathrm{N}}\) and \(u_{\mathrm{R}}\) were already obtained in [9, 72], respectively. Their existence can be guaranteed if \(\Omega\) is assumed to be at least \(C^{2,1}\) regular.

Lemma 1

[9] Let \(\Omega\) be a bounded \(C^{2,1}\) domain. Then, \(u_{\mathrm{N}}\in H^3(\Omega )\) is shape differentiable with respect to the domain, and its shape derivative \(u_{\mathrm{N}}' \in H^1(\Omega )\) is the unique solution of the mixed Dirichlet–Neumann problem

where \(\kappa\) denotes the mean curvature of \(\Sigma\).

Lemma 2

[72] Let \(\Omega\) be a bounded \(C^{2,1}\) domain. Then, \(u_{\mathrm{R}}\in H^3(\Omega )\) is shape differentiable with respect to the domain, and its shape derivative \(u_{\mathrm{R}}' \in H^1(\Omega )\) is the unique solution of the mixed Robin-Neumann problem

If \(\beta = \kappa\), then for the shape derivative \(u_{\mathrm{R}}'\) of the solution of (6), it holds that \(u_{\mathrm{R}}'\equiv 0\) when \(\Sigma\) is the free boundary.

3.2 First-order shape derivative of the cost function

Our objective here is to derive the shape derivative of the cost function J in the direction of a deformation field \({\mathbf {V}}\in \Theta\). We recall that, for a given functional \(J : \Omega \rightarrow {\mathbb {R}}\), its directional Eulerian derivative at \(\Omega\) in the direction \({\mathbf {V}}\), if it exists, is defined as the limit

$$\begin{aligned} {\mathrm{d}}J(\Omega )[{\mathbf {V}}] = \lim _{t \searrow 0} \frac{J(\Omega _t) - J(\Omega )}{t}. \end{aligned}$$

In addition, if the derivative \({\mathrm{d}}J(\Omega )[{\mathbf {V}}]\) exists for all \({\mathbf {V}}\) and the map \({\mathbf {V}}\mapsto {\mathrm{d}}J(\Omega )[{\mathbf {V}}]\) is linear and continuous, then J is shape differentiable at \(\Omega\), and this mapping will be referred to as the shape gradient of J at \(\Omega\). According to the well-known Hadamard–Zolésio structure theorem (see, e.g., [21, Theorem 3.2 and Remark 3.1, Corollary 1]), the shape gradient of J depends only on the normal component of \({\mathbf {V}}\) on the boundary of \(\Omega\) when the domain is regular enough.

For our proposed cost function \(J(\Sigma )\) given in (5), the shape derivative under the assumption that

is given in the following proposition.

Proposition 1

Let \(\Omega\) be of class \(C^{2,1}\) and \({\mathbf {V}}\in \Theta\). Also, let us assume that condition (A) holds true. Then, the Dirichlet-data-gap cost functional J is shape differentiable with

where \(p_{{\mathrm{N}}}\) denotes the adjoint state which is the unique solution to the PDE system

\(\kappa\) denotes the mean curvature of \(\Sigma\) and the tangential gradient \(\nabla _{\Sigma }\) is given by

Proof

We use the chain rule approach coupled with the adjoint method to obtain the shape derivative of J given by (15). Let \(\Omega\) be of class \(C^{2,1}\) and \({\mathbf {V}}\in \Theta\). Since the state variables \(u_{\mathrm{N}}\) and \(u_{\mathrm{R}}\) are sufficiently regular, we can apply Hadamard’s boundary differentiation formula (cf. [22, Theorem 4.3, p. 486] or [46, 71]):

where \(f \in C([0,\varepsilon ],W^{2,p}(U))\), \(p > 1\), and \(\frac{{\mathrm{d}}}{{\mathrm{d}}t}f(0)\) exists in \(W^{1,p}(U)\), to obtain

Here, of course, \(u_{\mathrm{N}}'\) and \(u_{\mathrm{R}}'\) satisfy (13) and (14), respectively. If \(u_{\mathrm{R}}'\) is the shape derivative of the solution of (6) where \(\Sigma\) is the free boundary and \(\beta = \kappa\), then by Lemma 2, \(u_{\mathrm{R}}' \equiv 0\) in \({\overline{\Omega }}\). The expression for \(\mathrm{d}J(\Sigma )[{\mathbf {V}}]\) given by (18) then simplifies to

where we put the subscript “\(\cdot _{{\mathrm{A}}}\)” to emphasize that condition (A) was imposed in the computation of the shape gradient (see also comment on notation below).

We stress that the representation (19) of the shape derivative of J in the direction of \({\mathbf {V}}\) at \(\Omega\) is actually not useful for practical applications, especially in the numerical realization of the minimization problem (5), because it would require the solution of (13) for each velocity field \({\mathbf {V}}\). This issue can be resolved using the adjoint method, particularly by introducing the adjoint system (16). Using (13) and (16), we observe, via Green’s second identity, that

At this point, it is useful to recall the so-called tangential Green’s formula (see, e.g., [22, Eq. 5.27, p. 498]): let U be a bounded domain of class \(C^{1,1}\) and \(\Omega \subset U\) with boundary \(\Gamma\). For \({\mathbf {V}}\in C^{1,1}({\overline{U}}, {\mathbb {R}}^2)\) and \(f \in W^{2,p}(U)\), \(p > 1\), we have

where \(\kappa\) is the mean curvature of \(\Gamma\). In addition, when \({\mathbf {V}}\cdot {\mathbf {n}}= 0\), we obviously have

Now, note that \({\mathbf {V}}\cdot {\mathbf {n}}\nabla _{\Sigma } u_{\mathrm{N}}\cdot {\mathbf {n}}= 0\). Hence, by the above identity, we have that

Combining Eqs. (19), (20) and (22), we get the desired result. \(\square\)

Remark 2

We recall from [50, Theorem 4.1] (with \(g = {\mathrm{const.}} = \lambda\) and \(f \equiv 0\)) (see also [32, Lemma 2.1]) that the shape gradient of \(J_1\) is given by

Although it is not obvious at first sight, the kernel G given in (15) only differs by \(\frac{\partial }{\partial {\mathbf {n}}}\left( \frac{1}{2} u_{\mathrm{N}}^2\right)\) from \(G_1\). This becomes clearer if we apply the identity

to (15). Thus, in addition, we can actually write the shape gradient of J equivalently as follows

Notation Throughout the rest of the discussion, we shall denote the shape gradient of J in the direction of \({\mathbf {V}}\) at \(\Omega\) obtained under condition (A) as \(\mathrm{d}J_{{\mathrm{A}}}\) and its corresponding kernel by \(G_{{\mathrm{A}}}\); i.e.,

(cf. Proposition 1). Meanwhile, the expression \({\mathrm{d}}{{J}}\) simply refers to the shape gradient of J obtained without imposing assumption (A). More precisely, the expression for \({\mathrm{d}}{{J}}\) is given by equation (18):

where we use the notation \(w = u_{\mathrm{N}}- u_{\mathrm{R}}\) and \(w' = u_{\mathrm{N}}' - u_{\mathrm{R}}'\) for simplicity.

Before going to the next subsection, let us also express \(\mathrm{d}{{J}}(\Sigma )[{\mathbf {V}}]\) in another form through the adjoint method. For this purpose, let us consider two harmonic functions \(\Xi _{\mathrm{N}}\) and \(\Xi _{\mathrm{R}}\) that both vanish on \(\Gamma\), and such that \(\partial _{{\mathbf {n}}}{\Xi _{\mathrm{N}}} = w\) and \(\partial _{{\mathbf {n}}}{\Xi _{\mathrm{R}}} + \beta \Xi _{\mathrm{R}}= w\) on \(\Sigma\). Then, by Green’s second identity together with Eqs. (13) and (14), we have

Note that the integral \(\int _{\Sigma }{v\, {\mathrm{div}}_{\Sigma } ({\mathbf {V}}\cdot {\mathbf {n}}\nabla _{\Sigma } u)}{\, \mathrm{d} \sigma }\), for any \(u,v \in H^3(\Omega )\), can be expressed as \(\int _{\Sigma }{v\, {\mathrm{div}}_{\Sigma } ({\mathbf {V}}\cdot {\mathbf {n}}\nabla _{\Sigma } u) }{\, \mathrm{d} \sigma } = - \int _{\Sigma }{ (\nabla _{\Sigma } u \cdot \nabla _{\Sigma } v) {\mathbf {V}}\cdot {\mathbf {n}}}{\, \mathrm{d} \sigma } = \int _{\Sigma }{ ( \partial _{{\mathbf {n}}}{u}\partial _{{\mathbf {n}}}{v} - \nabla u \cdot \nabla v) {\mathbf {V}}\cdot {\mathbf {n}}}{\, \mathrm{d} \sigma }\) via (21) and because \({\mathbf {V}}\cdot {\mathbf {n}}\nabla _{\Sigma } u \cdot {\mathbf {n}}= 0\). Hence, we have

Inserting the above expression to (26), we arrive at the following result.

Proposition 2

Let \(\Omega\) be of class \(C^{2,1}\) and \({\mathbf {V}}\in \Theta\). Then, J is shape differentiable with \(\mathrm{d}{{J}}(\Sigma )[{\mathbf {V}}] = \int _{\Sigma }{ G{\mathbf {n}}\cdot {\mathbf {V}}}{\, \mathrm{d} \sigma }\) where

and the quantities \(\Xi _{\mathrm{N}}\) and \(\Xi _{\mathrm{R}}\) are the respective solutions to the following adjoint systems

Remark 3

Again, similar to what has been pointed out in the proof of Proposition 1, we remark that the main reason for rewriting the shape gradient \({\mathrm{d}}{{J}}(\Sigma )[{\mathbf {V}}]\) given in (26) into \({\mathrm{d}}{{J}}(\Sigma )[{\mathbf {V}}] = \int _{\Sigma }{ G{\mathbf {n}}\cdot {\mathbf {V}}}{\, \mathrm{d} \sigma }\) is to avoid solving the boundary value problems (13) and (14) for each velocity field \({\mathbf {V}}\), which is impractical in an iterative procedure.

As an immediate consequence of Proposition 2, we have the following optimality result.

Corollary 1

Let the domain \(\Omega ^*\) be such that \(u=u(\Omega ^*)\) satisfies the overdetermined boundary value problem (1); i.e., it holds that

Then, the domain \(\Omega ^*\) fulfils the necessary optimality condition

In addition, of course, it also holds that \({\mathrm{d}}J_\mathrm{A}(\Sigma ^*)[{\mathbf {V}}] = 0\) for all \({\mathbf {V}}\in \Theta\).

Proof

At the shape solution \(\Omega = \Omega ^*\) of the Bernoulli problem (1), \(u_{\mathrm{N}}= 0\) on \(\Sigma ^*\). Hence, \(\nabla u_{\mathrm{N}}= (\partial _{{\mathbf {n}}}{u_{\mathrm{N}}}){\mathbf {n}}\) on \(\Sigma ^*\) and it follows that \(\nabla u_{\mathrm{N}}\cdot \tau = 0\) on \(\Sigma ^*\). Moreover, we see that \(\Xi _{\mathrm{N}}\equiv 0\) and \(\Xi _{\mathrm{R}}\equiv 0\) (and also \(p_{{\mathrm{N}}}\equiv 0\)) in \({\overline{\Omega }}^*\). Thus, G given by (27) is zero (so is \(G_{{\mathrm{A}}}\) given by (25)), which implies the assertion. \(\square\)
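As a sanity check of Corollary 1, the following minimal sketch evaluates the cost on concentric circles, where every quantity is explicit. It assumes (these are not restated in this section) that \(\Gamma\) is the circle of radius `r0`, that \(\Sigma\) is the circle of radius `R`, that \(u_{\mathrm{N}}\) is harmonic with \(u_{\mathrm{N}}= 1\) on \(\Gamma\) and \(\partial _{{\mathbf {n}}}{u_{\mathrm{N}}} = \lambda\) on \(\Sigma\), that \(u_{\mathrm{R}}\) is harmonic with \(u_{\mathrm{R}}= 1\) on \(\Gamma\) and \(\partial _{{\mathbf {n}}}{u_{\mathrm{R}}} + \beta u_{\mathrm{R}}= \lambda\) on \(\Sigma\), and that \(\beta = \kappa = 1/R\). The function names are hypothetical.

```python
import math


def dirichlet_gap_cost(R, r0=1.0, lam=-1.0):
    """J = 1/2 * int_Sigma |u_N - u_R|^2 on the circle of radius R (assumed setting)."""
    log_ratio = math.log(R / r0)
    beta = 1.0 / R  # assumed Robin coefficient: curvature of the circle
    # Radial harmonic functions equal to 1 on Gamma have the form u = 1 + c * log(r / r0).
    c_neumann = lam * R  # from u'(R) = lam
    c_robin = (lam - beta) / (1.0 / R + beta * log_ratio)  # from u'(R) + beta * u(R) = lam
    w = (c_neumann - c_robin) * log_ratio  # u_N - u_R on Sigma (a constant)
    return 0.5 * (2.0 * math.pi * R) * w ** 2


def bernoulli_radius(r0=1.0, lam=-1.0, tol=1e-14):
    """Radius with u_N = 0 on Sigma, i.e. 1 + lam * R * log(R / r0) = 0, found by bisection."""

    def f(R):
        return 1.0 + lam * R * math.log(R / r0)

    lo, hi = r0, 2.0 * r0
    while f(hi) > 0.0:  # f decreases in R because lam < 0
        hi *= 2.0
    while hi - lo > tol * hi:
        mid = 0.5 * (lo + hi)
        if f(mid) > 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)
```

Under these assumptions, `dirichlet_gap_cost(bernoulli_radius())` vanishes up to rounding, while the cost is strictly positive for nearby radii, in line with the optimality condition above.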

In the next section, we shall compute the second-order shape derivative of J at \(\Omega\) in the direction of two vector fields from \(\Theta\). We first treat the case when condition (A) is imposed during the calculation of the shape derivative followed by the case when it is disregarded (see Sect. 3.4).

3.3 Second-order shape derivative of the cost function

Let us now compute the shape Hessian of J at \(\Omega\) in the direction of two vector fields \({\mathbf {V}}, {\mathbf {W}}\in \Theta\). Due to standard regularity theory for elliptic equations, we know that the \(H^3(\Omega )\) regularity of \(u_{\mathrm{N}}\) provides the same regularity \(H^3(\Omega )\) to \(p_{{\mathrm{N}}}\). Hence, for sufficiently small s, it is clear that the derivative \({\mathrm{d}}J_{{\mathrm{A}}}(\Omega _s({\mathbf {W}}))[{\mathbf {V}}]\) of J (under assumption (A)) at \(\Omega _s({\mathbf {W}}) \subset U\) is well-defined. Our next goal is to find an expression for the limit

where

Here, \(\Sigma _s:=\Sigma _s({\mathbf {W}})\) denotes the free boundary of the perturbed domain \(\Omega _s:=\Omega _s({\mathbf {W}})\) obtained via the deformation field \({\mathbf {W}}\in \Theta\) and \(u_{{\mathrm{N}}s} \in H^3(\Omega _s)\) is the unique (weak) solution of the state system (3) on \({\bar{\Omega }}={\bar{\Omega }}_s\). On the other hand, \(\kappa _s ={\mathrm{div}}_{\Sigma _s} {\mathbf {n}}_s\), and \({\mathbf {n}}_s\) and \(\tau _s\) respectively denote the unit outward normal and unit tangent vectors on \(\Sigma _s\).

Accordingly, if, for all \({\mathbf {V}}\) and \({\mathbf {W}}\) in \(\Theta\), \(\mathrm{d}^2J(\Sigma )[{\mathbf {V}},{\mathbf {W}}]\) exists and is bilinear and continuous with respect to \({\mathbf {V}}\) and \({\mathbf {W}}\), then J is said to be twice shape differentiable at \(\Omega\). In this case, the map \(({\mathbf {V}},{\mathbf {W}}) \mapsto {\mathrm{d}}^2J(\Sigma )[{\mathbf {V}},{\mathbf {W}}]\) is called the shape Hessian of J at \(\Omega\) in the directions \({\mathbf {V}}\) and \({\mathbf {W}}\). For an admissible domain \(\Omega\), it can be shown that the shape Hessian has its support on \(\partial \Omega\) and is independent of the tangential component of \({\mathbf {W}}\) on the boundary. However, the exact expression for the shape Hessian, in general, involves the tangential component of \({\mathbf {V}}\). This means, basically, that the shape Hessian is generally not symmetric (see, e.g., [22, Chapter 9, Section 6]). Even so, at the optimal shape solution \(\Omega ^*\) of J, it can be proved that only the normal components of \({\mathbf {V}}\) and \({\mathbf {W}}\) contribute to the shape Hessian. Here, we focus our attention on this situation since we are only interested in the expression for the shape Hessian of J at the solution \(\Omega ^*\) of the exterior Bernoulli free boundary problem (1).

Proposition 3

Let \(\Omega\) be of class \(C^{2,1}\), let \({\mathbf {V}},{\mathbf {W}}\in \Theta\), and let \(\beta\) be the mean curvature of \(\Sigma\). Then, the shape Hessian of J at \(\Omega ^*\) is given by

where \(p_{ {\mathrm{N}} W}'\) denotes the shape derivative of the adjoint state \(p_{{\mathrm{N}}}\) in the direction of \({\mathbf {W}}\) satisfying the PDE system

where \(u_{ {\mathrm{N}} W}'\) denotes the shape derivative of \(u_{\mathrm{N}}\) in the direction of \({\mathbf {W}}\).

Proof

In the proof, we denote the shape derivative of \(\varphi\) in the direction \({\mathbf {W}}\) by \(\varphi '\) (i.e., \(\varphi ' = \varphi '_W\)) for simplicity. Let \({\mathbf {N}}_s= {\mathbf {N}}_s({\mathbf {W}})\) be a smooth extension of \({\mathbf {n}}_s\) (see, e.g., [22, Equation (4.37), p. 491]). Using (17) with \(f(s,\sigma ) = G_{{\mathrm{A}}s}{\mathbf {n}}_s\cdot {\mathbf {V}}= G_{{\mathrm{A}}s}{\mathbf {N}}_s\cdot {\mathbf {V}}\) (\(G_{\mathrm{A}s}\) is given by (30)), and \({\mathbf {V}}\) replaced by \({\mathbf {W}}\), we get

By Corollary 1, we know that \(G_{{\mathrm{A}}} = 0\) on \(\Sigma ^*\). Hence, noting that \({\mathbf {N}}|_{\Sigma } = {\mathbf {n}}\), we obtain

Here, because \(p_{{\mathrm{N}}}\equiv 0\), and \(u_{\mathrm{N}}= 0\) and \(\partial _{{\mathbf {n}}}{u_{\mathrm{N}}} = \lambda\) on \(\Sigma ^*\), \(G'|_{\Sigma ^*}\) is given by

On the other hand, we note that, for \(\varphi , \psi \in H^3(\Omega )\), \(\nabla (\nabla \varphi \cdot \nabla \psi ) \cdot {\mathbf {n}}= (\nabla ^2 \varphi \nabla \psi + \nabla ^2\psi \nabla \varphi ) \cdot {\mathbf {n}}\). This identity holds true because the Hessian \(\nabla ^2\varphi\) of \(\varphi\) is symmetric. Hence, the term \(\partial _{{\mathbf {n}}}{G_{{\mathrm{A}}}}\) vanishes on \(\Sigma ^*\) because

Thus, we have

where \(p_{ {\mathrm{N}} W}'\) satisfies the PDE system (32), proving the proposition. \(\square\)
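The product-rule identity invoked in the proof can be checked componentwise. The short derivation below is a sketch in index notation; summation over repeated indices is assumed, and the indices \(i, j\) are introduced here only for illustration.

```latex
\begin{aligned}
\partial_i\left(\partial_j\varphi\,\partial_j\psi\right)
  &= (\partial_i\partial_j\varphi)\,\partial_j\psi
   + (\partial_i\partial_j\psi)\,\partial_j\varphi
   = \left(\nabla^2\varphi\,\nabla\psi + \nabla^2\psi\,\nabla\varphi\right)_i, \\
\nabla(\nabla\varphi\cdot\nabla\psi)\cdot\mathbf{n}
  &= \left(\nabla^2\varphi\,\nabla\psi + \nabla^2\psi\,\nabla\varphi\right)\cdot\mathbf{n}.
\end{aligned}
```

The symmetry of the Hessians enters in the first line, where \((\nabla^2\varphi)_{ij} = \partial_i\partial_j\varphi = \partial_j\partial_i\varphi\) allows the contraction to be read as a matrix-vector product regardless of the row or column convention.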

In view of the previous proposition, we see that in order to evaluate the shape Hessian of J, we first need to compute the solution \(p'_{{\mathrm{N}}W}\) of (32), which depends on \(u_{ {\mathrm{N}} W}'\) and hence on the perturbation field \({\mathbf {W}}\). (Although the derivation of this set of equations follows standard techniques used, for example, in [71], we provide it in the appendix for the sake of completeness; see Proposition A.1.) From a numerical standpoint, this step is problematic to implement in an iterative procedure because it would require the solution of (32) for each deformation field \({\mathbf {W}}\) at every iteration. To resolve the issue, we can again apply the adjoint method (see Remark 4 in Sect. 3.4) as done in the proof of Proposition 1. Before we do this, let us first examine the symmetry of the shape Hessian \({\mathrm{d}}^2{{J}}(\Sigma ^*)\) of J with respect to the velocity fields \({\mathbf {V}}\) and \({\mathbf {W}}\).

3.4 Symmetricity of the shape Hessian at a critical shape

Here, let us derive the shape Hessian \(\mathrm{d}^2{{J}}(\Sigma ^*)[{\mathbf {V}},{\mathbf {W}}]\), but in a slightly different fashion, of J without imposing assumption (A) in expressing its shape gradient (see expression (26)). We will show that, in this case, the corresponding expression for the shape Hessian is symmetric with respect to \({\mathbf {V}}\) and \({\mathbf {W}}\). Again, we denote \(w = u_{\mathrm{N}}- u_{\mathrm{R}}\) and let \({\mathbf {N}}_s= {\mathbf {N}}_s({\mathbf {W}})\) again be a smooth extension of \({\mathbf {n}}_s\). Then, \(J(\Sigma ) = \frac{1}{2} \int _{\Sigma }{|w|^2}{\, \mathrm{d} \sigma }\) and from (17), we obtain

where \(g = w \nabla w \cdot {\mathbf {n}}+ \frac{1}{2} \kappa w^2\). Furthermore, we get

where \(w''_{VW}\) denotes the shape derivative of w along the directions of \({\mathbf {V}}\) and \({\mathbf {W}}\) (applied consecutively) and \(g'_W = w'_W \nabla w \cdot {\mathbf {N}}+ w \nabla w'_W \cdot {\mathbf {N}}+ w \nabla w \cdot {\mathbf {N}}'_W + \frac{1}{2} \kappa '_W w^2 + \kappa w w'_W\). Now, according to Corollary 1, we have \(w \equiv 0\) and \(g \equiv 0\) at \(\Sigma = \Sigma ^*\) which also gives us \(g'_W \equiv 0\) on \(\Sigma ^*\). Therefore, \(\mathrm{d}^2{{J}}(\Sigma ^*)[{\mathbf {V}},{\mathbf {W}}] = \int _{\Sigma ^*}{ w'_V w'_W }{\, \mathrm{d} \sigma }\). Meanwhile, for \(\beta = \kappa\), we know that \(u'_R \equiv 0\) on \({\bar{\Omega }}^*\) by Lemma 2. Thus, we obtain

which clearly shows the symmetry (with respect to the deformation fields \({\mathbf {V}}\) and \({\mathbf {W}}\)) of the shape Hessian at a critical shape.

Let us now write (36) in its equivalent form using the adjoint method. For this purpose, we will denote the corresponding adjoint of \(u_{{\mathrm{N}}V}'\) and \(u_{\mathrm{N}W}'\) by \(\Phi _W\) and \(\Phi _V\), respectively. (The choice of subscripts for these adjoints will be made clear below.)

Clearly, both \(\Phi _W\) and \(\Phi _V\) are harmonic functions and both vanish on \(\Gamma\). Meanwhile, on \(\Sigma ^*\), we take \(\partial _{{\mathbf {n}}}{\Phi _W} = u_{{\mathrm{N}}W}'\) and \(\partial _{{\mathbf {n}}}{\Phi _V} = u_{{\mathrm{N}}V}'\), so that (via Green’s second identity) we obtain the following equalities

Consequently, the adjoint states \(\Phi _W\) and \(\Phi _V\) satisfy the PDE systems

respectively. Hence, we conclude that (36) can also be expressed as

where \(\Phi _W\) and \(\Phi _V\) satisfy (37) and (38), respectively. Evidently, this shows that, at the optimal shape solution \(\Omega ^*\) of J, only the normal components of \({\mathbf {V}}\) and \({\mathbf {W}}\) contribute to the shape Hessian.

Remark 4

We emphasize that the shape Hessian \({\mathrm{d}}^2J_\mathrm{A}(\Sigma ^*)[{\mathbf {V}},{\mathbf {W}}] = \int _{\Sigma ^*}{ \lambda \kappa p_{ {\mathrm{N}} W}' {\mathbf {n}}\cdot {\mathbf {V}}}{\, \mathrm{d} \sigma }\) given in Proposition 3 is also impractical to use in numerical calculation because an appropriate choice for the deformation field \({\mathbf {W}}\) is difficult to determine directly from the given boundary integral (see Sect. 5). To circumvent this difficulty, we again apply the adjoint method. First, we let \(\Psi\) be harmonic on \(\Omega\) such that it vanishes on \(\Gamma\). Letting \(\partial _{{\mathbf {n}}}{\Psi } = \lambda \kappa {\mathbf {V}}\cdot {\mathbf {n}}\) on \(\Sigma\), we get (via Green’s second identity and Eq. (32)) the following equalities \(\int _{\Sigma }{ \lambda \kappa p_{ {\mathrm{N}} W}' {\mathbf {n}}\cdot {\mathbf {V}}}{\, \mathrm{d} \sigma } = \int _{\Sigma }{ p_{ {\mathrm{N}} W}' \partial _{{\mathbf {n}}}{\Psi }}{\, \mathrm{d} \sigma } = \int _{\Sigma }{ \Psi \partial _{{\mathbf {n}}}{p_{ {\mathrm{N}} W}'}}{\, \mathrm{d} \sigma } = \int _{\Sigma }{ (\Psi u_{ {\mathrm{N}} W}' + \lambda \Psi ) {\mathbf {n}}\cdot {\mathbf {W}}}{\, \mathrm{d} \sigma }.\) Next, we let another function \(\Pi\) be harmonic on \(\Omega\) such that \(\Pi = 0\) on \(\Gamma\). Also, we let \(\partial _{{\mathbf {n}}}{\Pi } = \Psi\), so that (via Green’s second identity) we have \(\int _{\Sigma }{ \Psi u_{ {\mathrm{N}} W}' }{\, \mathrm{d} \sigma } = \int _{\Sigma }{ \partial _{{\mathbf {n}}}{\Pi } u_{ {\mathrm{N}} W}' }{\, \mathrm{d} \sigma } = \int _{\Sigma }{ \Pi \partial _{{\mathbf {n}}}{u_{ {\mathrm{N}} W}'} }{\, \mathrm{d} \sigma } = \int _{\Sigma }{ \lambda \kappa \Pi {\mathbf {n}}\cdot {\mathbf {W}}}{\, \mathrm{d} \sigma }.\) Summarizing these results, we can therefore write the shape Hessian \({\mathrm{d}}^2J_{{\mathrm{A}}}(\Sigma ^*)[{\mathbf {V}},{\mathbf {W}}]\) as

where \(\Psi\) and \(\Pi\) satisfy the following PDE systems

respectively. Here, we notice that \(\Psi \equiv u_{ {\mathrm{N}} V}'\) on \({\bar{\Omega }}^*\).

Hence, looking back to Eq. (38), we find that \(\Phi _V\) is exactly equal to \(\Pi\) satisfying (42) which means that we may actually write the shape Hessian \({\mathrm{d}}^2{{J}}(\Sigma ^*)[{\mathbf {V}},{\mathbf {W}}]\) given in (39) as

Remark 5

In (35), we notice the dependence of the shape Hessian \({\mathrm{d}}^2J(\Sigma )[{\mathbf {V}},{\mathbf {W}}]\) (for \(\Omega\) different from the optimal domain \(\Omega ^*\)) on the shape derivative \(\kappa '_{W}\) of the mean curvature \(\kappa\) along \({\mathbf {W}}\in \Theta\) appearing in \(g'_W\). The explicit form of \(\kappa '_{W}\) can be shown to be given by (see [22, 71])

Clearly, this expression consists of a second-order tangential derivative of the perturbation field \({\mathbf {W}}\), and this derivative actually exists due to our assumption that \(\Omega\) is of class \(C^{2,1}\) [22, 71]. From this observation, we deduce that the shape Hessian defines a continuous bilinear form

that is, \(|{\mathrm{d}}^2J(\Sigma )[{\mathbf {V}},{\mathbf {W}}]| \lesssim \Vert {\mathbf {V}}\Vert _{{\mathbf {H}}^1(\Sigma )} \Vert {\mathbf {W}}\Vert _{{\mathbf {H}}^1(\Sigma )}\). Here, the notation \({\mathbf {H}}^1(\cdot )\) denotes the Sobolev space \({\mathbf {H}}^1(\cdot ):=\{{\mathbf {u}}:=(u_1,u_2) : u_1, u_2 \in H^1(\cdot )\}\), equipped with the norm \(\Vert {\mathbf {u}}\Vert ^2_{{\mathbf {H}}^1(\cdot )} = \Vert u_1\Vert ^2_{H^1(\cdot )} + \Vert u_2\Vert ^2_{H^1(\cdot )}\). A similar definition is given for the \({\mathbf {H}}^1_{\Gamma , 0}(\cdot )\)-space.

In view of the previous remark, it is natural to ask whether it is true that \({\mathrm{d}}^2J(\Sigma ^*)[{\mathbf {V}},{\mathbf {V}}] \gtrsim \Vert {\mathbf {V}}\Vert ^2_{{\mathbf {H}}^1(\Sigma ^*)}\). This question actually refers to the stability of a local minimizer \(\Omega ^*\) of J. In relation to this, we recall from [23, 24] (a result regarding sufficient second-order conditions) that a local minimizer \(\Omega ^*\) is stable if and only if the shape Hessian \({\mathrm{d}}^2J(\Sigma ^*)\) is strictly coercive in its corresponding energy space \({\mathbf {H}}^1(\Sigma ^*)\). Unfortunately, this kind of strict coercivity cannot be established for the shape Hessian \({\mathrm{d}}^2J(\Sigma ^*)\) of J. Nevertheless, we shall show in the next subsection that a sufficient condition can be derived to obtain strict coercivity in a weaker space. We note that the derived coercivity criterion is exactly the same as in the case of the shape Hessian \({\mathrm{d}}^2J_i\) of the cost functional \(J_i\), \(i=1,2,3,4\), as shown in [31,32,33, 68], respectively. It is worth remarking that, among these cost functions, only the shape Hessian \({\mathrm{d}}^2J_2(\Sigma ^*)\) of \(J_2\) is \({\mathbf {H}}^1(\Sigma ^*)\)-coercive under the derived coercivity criterion (see [31, Proposition 2.12]). For the sake of comparison, let us also compute the shape Hessian of the cost functional \(J_1(\Sigma )\) at \(\Sigma = \Sigma ^*\). From Remark 2, we know that the gradient of \(J_1(\Sigma )\) only differs by the addition of the integral \(\int _{\Sigma }{(u_{\mathrm{N}}\nabla u_{\mathrm{N}}\cdot {\mathbf {n}}){\mathbf {n}}\cdot {\mathbf {V}}}{\, \mathrm{d} \sigma } =: \int _{\Sigma }{g_1{\mathbf {n}}\cdot {\mathbf {V}}}{\, \mathrm{d} \sigma }\) from the shape gradient of \(J(\Sigma )\).
Computing the shape derivative of \(g_1\) at \(\Omega = \Omega ^*\) along the deformation field \({\mathbf {W}}\), we get \(g'_{1W}|_{\Sigma ^*} = u'_{{\mathrm{N}}W} (\nabla u_{\mathrm{N}}\cdot {\mathbf {N}}) + u_{\mathrm{N}}(\nabla u'_{{\mathrm{N}}W} \cdot {\mathbf {N}}+ \nabla u_{\mathrm{N}}\cdot {\mathbf {N}}'_W)|_{\Sigma ^*} = \lambda u'_{{\mathrm{N}}W}.\) Meanwhile, we have \(\nabla {(u_{\mathrm{N}}\nabla u_{\mathrm{N}}\cdot {\mathbf {n}})}\cdot {\mathbf {n}}= (\nabla u_{\mathrm{N}}\cdot {\mathbf {n}})^2 + u_{\mathrm{N}}[(\nabla ^2u_{\mathrm{N}}){\mathbf {n}}] \cdot {\mathbf {n}}= \lambda ^2\) on \(\Sigma ^*\). Hence, from (34) with G replaced by \(g_1\), together with Eq. (31) in Proposition 3, we get the final expression for the shape Hessian of \(J_1\) at \(\Omega = \Omega ^*\) (cf. [32, Equation (21)]):

Proposition 4

Let \(\Omega\) be of class \(C^{2,1}\) and \({\mathbf {V}},{\mathbf {W}}\in \Theta\). Then, the shape Hessian of \(J_1\) at \(\Omega ^*\) is given by

Here, we mention that the above expression was also computed in [32], but through shape calculus for star-shaped domains; we refer the reader to that reference for comparison.

Meanwhile, following Remark 4, we can also write \(\mathrm{d}^2J_1(\Sigma ^*)[{\mathbf {V}},{\mathbf {W}}]\) in terms of appropriate adjoint states. To do this, we let \(\Upsilon\) be harmonic in \(\Omega\) and be zero on \(\Gamma\). Moreover, we let \(\partial _{{\mathbf {n}}}{\Upsilon } = \lambda {\mathbf {V}}\cdot {\mathbf {n}}\) on \(\Sigma\), so that by Green’s second identity we have, \(\int _{\Sigma }{u'_{{\mathrm{N}}W} (\lambda {\mathbf {n}}\cdot {\mathbf {V}}) }{\, \mathrm{d} \sigma } = \int _{\Sigma }{u'_{\mathrm{N}W} \partial _{{\mathbf {n}}}{\Upsilon } }{\, \mathrm{d} \sigma } = \int _{\Sigma }{\Upsilon \partial _{{\mathbf {n}}}{u'_{{\mathrm{N}}W}} }{\, \mathrm{d} \sigma } = \int _{\Sigma }{\lambda \kappa \Upsilon {\mathbf {n}}\cdot {\mathbf {W}}}{\, \mathrm{d} \sigma }\). Hence, using the results from Remark 4, we therefore have the following equivalent expression for \(\mathrm{d}^2J_1(\Sigma ^*)[{\mathbf {V}},{\mathbf {W}}]\):

where the adjoint states \(\Psi\) and \(\Pi\) satisfy the boundary value problems (41) and (42), respectively, while \(\Upsilon\) is the unique solution to the PDE system

Here, it is worth stressing that the shape Hessian \(\mathrm{d}^2J_1(\Sigma ^*)[{\mathbf {V}},{\mathbf {W}}]\) depends on the solutions of three boundary value problems, as opposed to the case of \({\mathrm{d}}^2J_\mathrm{A}(\Sigma ^*)[{\mathbf {V}},{\mathbf {W}}]\), which depends only on the solutions of two PDE systems. Numerically, this means that we need to solve an additional variational problem in order to evaluate the descent direction for a gradient-based descent algorithm.

3.5 Coercivity of the shape Hessian at its optimal solution

Let us now determine in which space weaker than \({\mathbf {H}}^1(\Sigma ^*)\) the shape Hessian \(\mathrm{d}^2J(\Sigma ^*)\) is strictly coercive. To do this, we use the method already used in [28] (see also [31,32,33, 68]). We start by introducing the following operators, which are linear and continuous as multipliers by a smooth function (see [68, Section 3.4]):

Here, \(V_n := {\mathbf {V}}\cdot {\mathbf {n}}\) and \(\kappa\) is, of course, the mean curvature of \(\Sigma ^*\). The continuity of these operators follows from the following result.

Lemma 3

Let \(\Omega \subset {\mathbb {R}}^2\) be a bounded Lipschitz domain with boundary \(\Gamma := \partial \Omega\). Then, the map \(v \mapsto \phi v\) is continuous in \(H^{1/2}(\Gamma )\) for any \(v \in H^{1/2}(\Gamma )\) and \(\phi \in C^{0,1}(\Gamma )\).

Proof

Recall that the fractional Sobolev space \(H^{1/2}(\Gamma )\) (the trace space for \(H^1(\Omega )\)) is equipped with the norm

Let \(\phi\) be a Lipschitz function. Then, we have the inequality

Hence, \(|\phi v|_{1/2,2,\Gamma }\) can be estimated as follows

Because \(\Vert \phi v\Vert _{L^2(\Gamma )} \leqslant \Vert \phi \Vert _{\infty } \Vert v\Vert _{L^2(\Gamma )}\), the assertion is proved. \(\square\)
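The estimate behind this proof can be sketched as follows, assuming that \(|\cdot |_{1/2,2,\Gamma }\) is the Gagliardo seminorm on the one-dimensional boundary \(\Gamma\). The symbols \(L_\phi\) (a Lipschitz constant of \(\phi\)) and \(|\Gamma |\) (the length of \(\Gamma\)) are introduced here only for illustration.

```latex
\begin{aligned}
|v|_{1/2,2,\Gamma}^2
  &:= \int_\Gamma\int_\Gamma \frac{|v(x)-v(y)|^2}{|x-y|^2}\,d\sigma_x\,d\sigma_y, \\
|\phi(x)v(x)-\phi(y)v(y)|
  &\leqslant |\phi(x)|\,|v(x)-v(y)| + |v(y)|\,|\phi(x)-\phi(y)|, \\
|\phi v|_{1/2,2,\Gamma}^2
  &\leqslant 2\|\phi\|_\infty^2\,|v|_{1/2,2,\Gamma}^2
   + 2L_\phi^2\int_\Gamma\int_\Gamma |v(y)|^2\,d\sigma_x\,d\sigma_y \\
  &= 2\|\phi\|_\infty^2\,|v|_{1/2,2,\Gamma}^2
   + 2L_\phi^2\,|\Gamma|\,\|v\|_{L^2(\Gamma)}^2.
\end{aligned}
```

The third line uses \((a+b)^2 \leqslant 2a^2 + 2b^2\) together with \(|\phi (x)-\phi (y)| \leqslant L_\phi |x-y|\), which cancels the singular kernel in the second term.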

In addition to the operators introduced above, let us also define the map \({\mathcal {S}}\) as the Steklov-Poincaré operator on \(\Sigma ^*\) which is defined by (see [72])

where \(\Psi \in H^1(\Omega ^*)\) satisfies

The operator \({\mathcal {S}}\), also called the Dirichlet-to-Neumann map, is \(H^{1/2}(\Sigma ^*)\)-coercive (cf. [33, Lemma 2]). Its inverse \({\mathcal {R}}\) called the Neumann-to-Dirichlet map is defined by

where \(\Phi \in H^1(\Omega ^*)\) satisfies

Now, using the operators \({\mathcal {L}}\), \({\mathcal {M}}\), \({\mathcal {R}}\), and denoting the \(L^2(\Sigma ^*)\)-inner product by \((\cdot ,\cdot )_{L^2(\Sigma ^*)}\), we can write (31) as

By the continuity of the maps \({\mathcal {L}}\) and \({\mathcal {M}}\), and the bijectivity of \({\mathcal {R}}\), we deduce that the shape Hessian \({\mathrm{d}}^2 J_{{\mathrm{A}}}\) at \(\Omega ^*\) is \({\mathbf {L}}^2(\Sigma ^*)\)-coercive (whenever \(\kappa\) is non-negative) and we state this result formally as follows.

Proposition 5

For \(\Sigma ^*\) with non-negative mean curvature \(\kappa\), the shape Hessian \({\mathrm{d}}^2 J_{{\mathrm{A}}}\) at \(\Omega ^*\) is \({\mathbf {L}}^2(\Sigma ^*)\)-coercive; i.e.,

The above result also means that the minimization problem “\(\min _{\Omega } J(\Sigma )\) subject to (3) and (6)” [with condition (A) imposed in computing the gradient] is (algebraically) ill-posed. We further discuss this notion of ill-posedness (in the case of the present shape optimization formulation) briefly as follows. As already mentioned in the previous subsection, the shape optimization problem is well-posed if its local minimum is stable; that is, if the shape Hessian \({\mathrm{d}}^2J_{{\mathrm{A}}}(\Sigma ^*)\) is strictly coercive in its energy space \({\mathbf {H}}^1(\Sigma ^*)\) (i.e., \({\mathrm{d}}^2J_{{\mathrm{A}}}(\Sigma ^*)[{\mathbf {V}},{\mathbf {V}}] \gtrsim \Vert {\mathbf {V}}\Vert ^2_{{\mathbf {H}}^1(\Sigma ^*)}\)). If, on the other hand, the positivity of the shape Hessian at \(\Sigma ^*\) only holds on a weaker (Sobolev) space, then the shape optimization problem is said to be (algebraically) ill-posed (cf. [30, 32]). This means, in particular, that tracking the Dirichlet data in the \(L^2\)-norm is not sufficient, and as strongly assumed by the authors in [34], they have to be tracked relative to \(H^1\). This aforementioned lack of coercivity is known from other PDE-constrained optimal control problems as the so-called two-norm discrepancy (see, e.g., [30] and the references therein) and this concept of norm discrepancy under shape optimization framework was first observed in [23,24,25, 27], among others.

Remark 6

In case of the cost functional \(J_4(\Omega ) = \frac{1}{2} |u_{\mathrm{N}}- u_{\mathrm{R}}|^2_{H^1(\Omega )}\) examined in [68], the shape Hessian is likewise a continuous bilinear form, i.e., \(\mathrm{d}^2J_4(\Omega ) : {\mathbf {H}}^1(\Sigma ) \times {\mathbf {H}}^1(\Sigma ) \rightarrow {\mathbb {R}}\). This result is primarily due to the fact that the computed expression for \({\mathrm{d}}^2J_4(\Omega )\) also consists of the shape derivative \(\kappa '\) of the mean curvature \(\kappa\). Also, using the operators introduced above, the shape Hessian \({\mathrm{d}}^2 J_4\) at \(\Omega ^*\) was shown to be expressible as

which is \({\mathbf {H}}^{1/2}(\Sigma ^*)\)-coercive provided that \(\Sigma ^*\) has non-negative mean curvature \(\kappa\).

Remark 7

Similarly, we have that \({\mathrm{d}}^2J_1(\Omega ) : {\mathbf {H}}^1(\Sigma ) \times {\mathbf {H}}^1(\Sigma ) \rightarrow {\mathbb {R}}\) and using the operators introduced above, we may write the shape Hessian of \(J_1\) at \(\Omega = \Omega ^*\) given in Proposition 4 as follows:

This expression is also \({\mathbf {H}}^{1/2}(\Sigma ^*)\)-coercive (i.e., \({\mathrm{d}}^2J_1(\Sigma ^*)[{\mathbf {V}},{\mathbf {V}}] \gtrsim \Vert {\mathbf {V}}\Vert ^2_{{\mathbf {H}}^{1/2}(\Sigma ^*)}\)) provided that \(\Sigma ^*\) has non-negative mean curvature \(\kappa\).

On the other hand, in case of the shape Hessian \(\mathrm{d}^2{{J}}(\Sigma ^*)[{\mathbf {V}},{\mathbf {W}}]\), we deduce (via the continuity of the maps \({\mathcal {L}}\) and \({\mathcal {M}}\), and the bijectivity of \({\mathcal {R}}\)) that

whenever \(\kappa\) is non-negative. Here, the notation “\(P \sim Q\)” means that “\(P \lesssim Q\) and \(P \gtrsim Q\).” Hence, the positivity of \({\mathrm{d}}^2{{J}}(\Sigma ^*)\) holds only in the weaker space \({\mathbf {L}}^2(\Sigma ^*)\).
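To make the operators \({\mathcal {S}}\) and \({\mathcal {R}}\) used in this subsection concrete, consider the hypothetical case where \(\Omega ^*\) is the annulus \(r_0< r < R\). Assuming that \({\mathcal {S}}\) maps Dirichlet data v on \(\Sigma ^*\) to \(\partial _{{\mathbf {n}}}{\Psi }\), where \(\Psi\) is harmonic in \(\Omega ^*\), vanishes on \(\Gamma\) and equals v on \(\Sigma ^*\), each Fourier mode \(\cos (k\theta )\) is an eigenfunction and the eigenvalues are available in closed form. The sketch below (function names are illustrative) evaluates them, together with those of the inverse map.

```python
import math


def dtn_eigenvalue(k, R=2.0, r0=1.0):
    """Eigenvalue of the Dirichlet-to-Neumann map on r = R for the mode cos(k * theta)."""
    if k == 0:
        # Psi = log(r / r0) / log(R / r0), so dPsi/dr at r = R is 1 / (R * log(R / r0)).
        return 1.0 / (R * math.log(R / r0))
    # Psi = (r^k - r0^(2k) r^(-k)) / (R^k - r0^(2k) R^(-k)) * cos(k * theta)
    q = (r0 / R) ** (2 * k)
    return (k / R) * (1.0 + q) / (1.0 - q)


def ntd_eigenvalue(k, R=2.0, r0=1.0):
    """Eigenvalue of the inverse (Neumann-to-Dirichlet) map for the same mode."""
    return 1.0 / dtn_eigenvalue(k, R, r0)
```

In this model case every eigenvalue of the Dirichlet-to-Neumann map is positive and grows like \(k/R\), while the eigenvalues of its inverse decay like \(R/k\); this is consistent with \({\mathcal {R}}\) gaining one order of regularity on the boundary.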

4 Existence of optimal domains of the shape optimization problem

Before going to the numerical treatment of the proposed shape optimization reformulation “\(\min _{\Omega } J(\Sigma )\) subject to (3) and (6)” [or equivalently, “\(\min _{\Omega } J(\Sigma )\) subject to (7) and (8)”] of (1) and for completeness, let us first address the question of existence of an optimal solution to the said problem. As regards the existence of a solution to the exterior Bernoulli FBP (1), we refer the reader to [1].

To carry out our present task, we use the results established in [68] regarding the continuity of the state problems with respect to domain. We begin by rewriting the weak formulations (7) and (8) of (3) and (6), respectively, as follows:

Find \(z_{\mathrm{N}}=u_{\mathrm{N}}- u_{{\mathrm{N}}0} \in H^1_{\Gamma ,0}(\Omega )\) such that

find \(z_{\mathrm{R}}= u_{\mathrm{R}}- u_{{\mathrm{R}}0} \in H^1_{\Gamma ,0}(\Omega )\) such that

In the above equations, \(u_{{\mathrm{N}}0}\) and \(u_{{\mathrm{R}}0}\) are two fixed functions in \(H^1(U)\) such that \(u_{{\mathrm{N}}0} = u_{{\mathrm{R}}0} = 1\) on \(\Gamma\). Given the unique solvability of (46) and (47) in \(H^1(\Omega )\), we define the map \(\Omega \mapsto (z_{\mathrm{N}},z_{\mathrm{R}}) = (z_{\mathrm{N}}(\Omega ),z_{\mathrm{R}}(\Omega ))\) and denote its graph by

Hence, the problem “\(\min _{\Omega } J(\Sigma )\) subject to (7) and (8)” is equivalent to the problem of finding a solution \((\Omega ,z_{\mathrm{N}}(\Omega ),z_{\mathrm{R}}(\Omega ))\) that minimizes \(J(\Omega ) = J(\Omega ,z_{\mathrm{N}}(\Omega ),z_{\mathrm{R}}(\Omega ))\) on \({\mathscr {F}}\). Such a minimization problem is usually solved by endowing the set \({\mathscr {F}}\) with a topology for which \({\mathscr {F}}\) is compact and J is lower semi-continuous. For this purpose, we follow the ideas developed in [43] and the ones furnished in [13, 42].

Let us now characterize the set of admissible domains \({\mathcal {O}}_{{\mathrm{ad}}}\) and then give an appropriate topology on it. In the previous section, we assumed \(C^{2,1}\) regularity for the domain \(\Omega\) to guarantee the existence of the shape derivatives of the states and to establish the shape Hessian of J. However, for the existence proof of an optimal solution to the problem

it is enough to assume that \(\Omega\) has a \(C^{1,1}\) smooth free boundary \(\Sigma\) (cf. [68]). Hence, we let \(\Sigma\) be parametrized by a vector function \(\phi \in C^{1,1}({\mathbb {R}},{\mathbb {R}}^2)\) (i.e., \(\Sigma = \Sigma (\phi ) = \{\phi = (\phi _1(t),\phi _2(t)):t\in (0,1]\}\)) where, in addition, \(\phi\) is assumed to possess the following properties:

- \(({\mathrm{P}}_1)\): \(\phi\) is injective on (0, 1] and is 1-periodic;

- \(({\mathrm{P}}_2)\): there exist positive constants \(c_0, c_1, c_2\) and \(c_3\) such that

$$\begin{aligned} |\phi (t)| \leqslant c_0, \quad c_1 \leqslant |\phi '(t)| \leqslant c_2, \quad |\phi ''(t)| \leqslant c_3, \ \hbox { a.e. in}\ (0,1); \end{aligned}$$

- \(({\mathrm{P}}_3)\): \({\overline{\Omega }} = {\overline{\Omega }}(\phi ) \subset U\), where U is a fixed, connected, bounded open subset of \({\mathbb {R}}^2\);

- \(({\mathrm{P}}_4)\): there is a positive constant \(\gamma\) such that \({\mathrm{dist}}(\Gamma ,\Sigma (\phi )) \geqslant \gamma\).
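As an illustration of \(({\mathrm{P}}_1)\) to \(({\mathrm{P}}_4)\), the sketch below samples a hypothetical ellipse parametrization \(\phi (t) = (a\cos 2\pi t, b\sin 2\pi t)\), which is 1-periodic and injective on (0, 1] by construction, and estimates the constants \(c_0, c_1, c_2, c_3\) and \(\gamma\). It assumes, for concreteness only, that \(\Gamma\) is the unit circle centred at the origin and that \(a, b > 1\); the function name and its parameters are not from the source.

```python
import math


def ellipse_constants(a=2.0, b=1.5, n=400):
    """Sampled estimates of c0, c1, c2, c3 and gamma for phi(t) = (a cos 2*pi*t, b sin 2*pi*t)."""
    w = 2.0 * math.pi
    norms, speeds, accels = [], [], []
    for k in range(n):
        t = k / n
        c, s = math.cos(w * t), math.sin(w * t)
        norms.append(math.hypot(a * c, b * s))           # |phi(t)|
        speeds.append(w * math.hypot(a * s, b * c))      # |phi'(t)|
        accels.append(w * w * math.hypot(a * c, b * s))  # |phi''(t)|
    return {
        "c0": max(norms),
        "c1": min(speeds),
        "c2": max(speeds),
        "c3": max(accels),
        # Distance from the ellipse to the unit circle (the assumed Gamma).
        "gamma": min(norms) - 1.0,
    }
```

For the default values the sampled constants agree with the closed-form bounds \(c_0 = \max (a,b)\), \(c_1 = 2\pi \min (a,b)\), \(c_2 = 2\pi \max (a,b)\), \(c_3 = 4\pi ^2\max (a,b)\) and \(\gamma = \min (a,b) - 1\), so this curve would qualify as admissible provided the hold-all set U in \(({\mathrm{P}}_3)\) contains it.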

If \(\phi\) satisfies the above conditions, then we say that \(\phi\) is in \({\mathcal {U}}_{{\mathrm{ad}}}\). The set of admissible domains \({\mathcal {O}}_{{\mathrm{ad}}}\) we consider here is now given as follows

The set U in assumption \(({\mathrm{P}}_3)\) and the one introduced in Sect. 3 are not necessarily the same set. However, we point out that in \(({\mathrm{P}}_3)\), we are assuming that all admissible domains \(\Omega (\phi )\) are contained in the hold-all domain U in the same manner that the universal set U in Eq. (9) holds all the possible deformations of the reference domain \(\Omega\). Also, we assume that U is large enough that it contains the optimal domain \(\Omega ^*\) that solves the exterior Bernoulli FBP (1). Here, we are in fact requiring that \({\mathrm{dist}}(\Sigma (\phi ), \partial U)>0\) for all \(\phi \in {\mathcal {U}}_{{\mathrm{ad}}}\) and \({\mathrm{dist}}(\Sigma ^*, \partial U)>0\). In this way, we can say that the shape optimization problem “\(\min _{\Omega } J(\Sigma )\) subject to (7) and (8)” is indeed equivalent to the free boundary problem (1). Meanwhile, in view of (49), we see that every admissible domain \(\Omega (\phi )\) is a uniformly open set in \({\mathbb {R}}^2\) and therefore satisfies the well-known uniform cone property (cf. [46]). Moreover, as a consequence, these admissible domains satisfy a very important extension property. More precisely, for every \(k \geqslant 1\), \(p > 1\) and domain \(\Omega \in {\mathcal {O}}_{{\mathrm{ad}}}\), there exists an extension operator

such that \(\Vert E_{\Omega }u\Vert _{W^{k,p}(U)} \leqslant C\Vert u\Vert _{W^{k,p}(\Omega )}\), where C is a positive constant independent of the domain \(\Omega\) (see [18]). Using these properties, we can ensure a uniform extension \({\tilde{u}} \in H^1(U)\) from \(\Omega\) to U of every function \(u \in H^1(\Omega )\). In the discussion that follows, we will use this result to finally define the topology we shall work with.

Let us first define the convergence of a sequence \(\{\phi _n\} \subset {\mathcal {U}}_{{\mathrm{ad}}}\) by

i.e., if and only if \(\phi _n \rightarrow \phi\) in the \(C^1\)-topology. We can then define the convergence of a sequence of domains \(\{\Omega _n\}:=\{\Omega (\phi _n)\} \subset {\mathcal {O}}_{{\mathrm{ad}}}\) by

Meanwhile, we define the convergence of a sequence \(\{z_{\mathrm{N}n}\}\) of solutions of (46) on \(\Omega _n\) to the solution of (46) on \(\Omega\) as follows

Similarly, the convergence of a sequence \(\{z_{\mathrm{R}n}\}\) of solutions of (47) on \(\Omega _n\) to the solution of (47) on \(\Omega\) is defined by

In (53) and (54), the extensions \({\tilde{z}}_i,{\tilde{z}}_{in}\), \(i = {\mathrm{N,R}}\), are defined as \(E_{\Omega }{z}_{i}, E_{\Omega }{z}_{in}\), \(i = {\mathrm{N,R}}\), respectively, where \(E_{\Omega }\) is of course the extension operator (50).

Finally, the topology we introduce on \({\mathscr {F}}\) is the one induced by the convergence defined by

We now state the main result of this section.

Theorem 1

The minimization problem (48) admits a solution in \({\mathscr {F}}\).

As stated before, the existence proof is reduced to proving the compactness of \({\mathscr {F}}\) and the lower semi-continuity of J. Regarding the former problem, we note that the convergence \(\phi _n \rightarrow \phi\) follows immediately from the compactness of \({\mathcal {U}}_{{\mathrm{ad}}}\) and the Arzelà-Ascoli theorem, hence, the compactness of \({\mathscr {F}}\) with respect to the convergence (55) is already guaranteed. This means that we only need to show the continuity of the state problems (3) and (6) with respect to the domain in order to complete the proof of compactness of \({\mathscr {F}}\). The proof of this continuity is not straightforward but has already been done in [68] using the tools established in [14, 15], so we simply state the result as follows.

Proposition 6

[68] With the convergence of a sequence of domains given in (52), we let \(\{(\phi _n,z_{\mathrm{N}n},z_{\mathrm{R}n})\}\) be a sequence in \({\mathscr {F}}\) where \(z_{\mathrm{N}n}:=z_{\mathrm{N}}(\phi _n)\) and \(z_{\mathrm{R}n}:=z_{\mathrm{R}}(\phi _n)\) are the weak solutions of (46) and (47) on \(\Omega _n:=\Omega (\phi _n)\), respectively. Then, there exists a subsequence \(\{(\phi _k,z_{\mathrm{N}k},z_{\mathrm{R}k})\}\) and elements \(\phi \in {\mathcal {U}}_{{\mathrm{ad}}}\) and \(z_{\mathrm{N}}, z_{\mathrm{R}}\in H^1(U)\) such that

where \(z_{\mathrm{N}}=z_{\mathrm{N}}(\phi )={\tilde{z}}_{{\mathrm{N}}}|_{\Omega (\phi )}\) and \(z_{\mathrm{R}}=z_{\mathrm{R}}(\phi )={\tilde{z}}_{{\mathrm{R}}}|_{\Omega (\phi )}\) are the unique solutions of equations (46) and (47) on \(\Omega :=\Omega (\phi )\), respectively.

In the proof of the above proposition, three essential estimates were utilized. The first is the uniform Poincaré inequality proved in [15] (see, in particular, Corollary 3(ii)). The second concerns the uniform continuity of the trace operator with respect to the domain (see [13, Theorem 4]), and the last is a uniform extension of the state variables from \(\Omega _n\) to U such that their respective \({H^1(U)}\)-norms are bounded above by a positive constant (see the first part of the proof of Proposition 6 given in [68]). For completeness, we recall them as follows:

Lemma 4

Let \(\phi _n,\phi \in {\mathcal {U}}_{{\mathrm{ad}}}\) and \(\Omega (\phi _n), \Omega (\phi ) \in {\mathcal {O}}_{{\mathrm{ad}}}\). Then, the following results hold.

(i) For every \(u \in H^1_{\Gamma ,0}(\Omega )\), we have the estimate \(\Vert u\Vert _{L^2(\Omega )} \lesssim |u|_{H^1(\Omega )}\).

(ii) For every real number q such that \(\frac{1}{2} < q \leqslant 1\) and every function \(u \in H^1(U)\), we have

$$\begin{aligned} \Vert u\Vert _{L^2(\Sigma (\phi ))}\lesssim \Vert u\Vert _{H^q(U)}, \end{aligned}$$

where \(\Vert \cdot \Vert _{H^q(U)}\) denotes the \(H^q(U)\)-norm.

(iii) There exists a uniform extension \({\tilde{z}}_{{\mathrm{R}}n}\) (respectively \({\tilde{z}}_{{\mathrm{N}}n}\)) of \(z_{{\mathrm{R}}n}\) (respectively \(z_{{\mathrm{N}}n}\)) from \(\Omega _n\) to U and a constant \(C_{{\mathrm{R}}}>0\) independent of n such that \(\Vert {\tilde{z}}_{\mathrm{R}n}\Vert _{H^1(U)} \leqslant C_{{\mathrm{R}}}\) (respectively \(\Vert {\tilde{z}}_{{\mathrm{N}}n}\Vert _{H^1(U)} \leqslant C_{{\mathrm{N}}}\), where \(C_{{\mathrm{N}}} > 0\) is a constant).

In relation to the second statement of the above lemma, we note that due to assumption \(({\mathrm{P}}_3)\) and the uniform cone property of the domain \(\Omega (\phi ) \in {\mathcal {O}}_{{\mathrm{ad}}}\), the norm of the trace map \({\mathrm{tr}}:H^1_0(U) \rightarrow L^2(\Sigma (\phi ))\) can actually be bounded uniformly with respect to \(\Omega (\phi ) \in {\mathcal {O}}_{{\mathrm{ad}}}\); see [59]. On the other hand, we mention that the proof of Lemma 4(iii) given in [68] uses the first two estimates (i) and (ii). Note that the third part of the lemma already guarantees the existence of a subsequence of \(\{ {\tilde{z}}_{{\mathrm{R}}n} \}\) (respectively \(\{ {\tilde{z}}_{{\mathrm{N}}n} \}\)) which converges weakly in \(H^1(U)\) to a limit denoted by \({\tilde{z}}_{{\mathrm{R}}}\) (respectively \({\tilde{z}}_{{\mathrm{N}}}\)). Hence, the proof of Proposition 6 is completed by showing that the restriction of \({\tilde{z}}_{{\mathrm{R}}}\) (respectively \({\tilde{z}}_{{\mathrm{N}}}\)) to \(\Omega (\phi )\) coincides with the unique solution of (47) (respectively (46)). Because of the basic role Lemma 4(iii) plays in the proof of the lower semi-continuity of J, we provide its proof below.

Proof of Lemma 4(iii)

Throughout the proof we use the notation \((\cdot )_n:=(\cdot )(\phi _n)\). From a famous paper of Chenais [18], we know that the solution \(z_{{\mathrm{R}}n}\) of (47) on \(\Omega _n\) admits an extension \({\tilde{z}}_{{\mathrm{R}}n}\) in \(H^1(U)\) such that

So, to establish our desired result, we need to prove that \(\Vert z_{{\mathrm{R}}n}\Vert _{H^1(\Omega _n)}\) is bounded with respect to n. In view of (8), taking \(\varphi = z_{{\mathrm{R}}n} \in H^1_{\Gamma ,0}(\Omega _n)\), we have

This yields the estimate

Next, we show that \(\Vert z_{{\mathrm{R}}n} \Vert _{L^2(\Sigma _n)}\) can be bounded by \(|z_{{\mathrm{R}}n} |_{H^1(\Omega _n)}\). This is where we apply the first two parts of the lemma (i.e., Lemma 4(i) and (ii)) to obtain

Going back to (56), we get

Applying Lemma 4(i) once more, we obtain

which establishes the boundedness of \(\{\Vert {\tilde{z}}_{{\mathrm{R}}n} \Vert _{H^1(U)} \}\). The same line of argument can be used to prove that there exists a uniform extension \({\tilde{z}}_{{\mathrm{N}}n}\) of \(z_{\mathrm{N}n}\) from \(\Omega _n\) to U and a constant \(C_{{\mathrm{N}}}>0\) independent of n such that \(\Vert {\tilde{z}}_{{\mathrm{N}}n}\Vert _{H^1(U)} \leqslant C_{{\mathrm{N}}}\). (In fact, taking \(\beta = 0\) in the above proof easily verifies this statement.) \(\square\)

Having recalled the above results, we now proceed to the second part of the proof of Theorem 1 by proving the next result.

Proposition 7

The cost functional

is lower semi-continuous on \({\mathscr {F}}\) in the topology induced by (55).

To prove the above proposition, we will exploit the parametrization \(\phi\) of \(\Sigma\). Also, its properties stated in assumption \((\mathrm{P}_3)\) will be used implicitly many times in the proof. The following result, which is a consequence of Lemma 4(ii) (see [13, Corollary 2], and also [14, Corollary 1]), will also be central to the proof of Proposition 7 given below.

Lemma 5

[13, 14] Let \(\phi _n,\phi \in {\mathcal {U}}_{{\mathrm{ad}}}\) be such that \(\phi _n \rightarrow \phi\) in the \(C^1([0,1],{\mathbb {R}}^2)\)-norm. Then, for any \(u \in H^1(U)\), we have \(\lim _{n\rightarrow \infty } u \circ \phi _n = u \circ \phi\) in \(L^2([0,1])\).
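As an informal numerical illustration of Lemma 5 (it plays no role in the analysis), the following Python sketch evaluates \(\Vert u \circ \phi _n - u \circ \phi \Vert _{L^2([0,1])}\) for a sequence of parametrizations converging in the \(C^1\)-norm. The concentric circles of radius \(1 + 1/n\), the smooth test function \(u(x,y) = x^2 + y\), and the helper names `u`, `phi` and `l2_gap` are illustrative assumptions and do not come from the paper; a smooth \(u\) is of course a much easier case than a general \(u \in H^1(U)\).

```python
import numpy as np


def u(x, y):
    # Smooth test function on the hold-all domain (hypothetical choice).
    return x**2 + y


def phi(t, radius=1.0):
    # C^1 parametrization of a circle over t in [0, 1] (hypothetical choice).
    angle = 2.0 * np.pi * t
    return radius * np.cos(angle), radius * np.sin(angle)


def l2_gap(n, samples=2000):
    # L^2([0,1]) norm of u(phi_n) - u(phi), using the periodic rectangle rule.
    t = np.arange(samples) / samples
    xn, yn = phi(t, radius=1.0 + 1.0 / n)  # phi_n -> phi in C^1 as n grows
    x, y = phi(t)
    diff = u(xn, yn) - u(x, y)
    return float(np.sqrt(np.mean(diff**2)))


errors = [l2_gap(n) for n in (1, 10, 100)]
print(errors)
```

The printed gaps shrink as \(n\) increases, which is the behaviour that Lemma 5 asserts for the composed traces.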

Proof of Proposition 7

Let \(\{ (\Omega _n, u_{{\mathrm{N}}n}, u_{{\mathrm{R}}n}) \}\) be a sequence in \({\mathscr {F}}\), \(\Omega _n := \Omega (\phi _n)\), and assume that

\((\Omega _n, u_{{\mathrm{N}}n}, u_{{\mathrm{R}}n}) \rightarrow (\Omega ,u_{\mathrm{N}},u_{\mathrm{R}})\) as \(n \rightarrow \infty\),

where \(\Omega :=\Omega (\phi )\) and the triple \((\Omega ,u_{\mathrm{N}},u_{\mathrm{R}})\) is in \({\mathscr {F}}\). For convenience, we let \(w_n = u_{{\mathrm{N}}n} - u_{\mathrm{R}n}\) (recalling that \(w = u_{\mathrm{N}}- u_{\mathrm{R}}\)) and denote their extensions in \(H^1(U)\) by \({\tilde{w}}_n\) and \({\tilde{w}}\), respectively. Here, we emphasize that \(w = {\tilde{w}}|_{\Omega }\) is in \(H^1_{\Gamma ,0}(\Omega )\), which is essentially due to the boundedness of the trace operator. Moreover, for any \(u \in H^1_{\Gamma ,0}(U)\), the restriction \(u|_{\Omega _n}\) is in \(H^1_{\Gamma ,0}(\Omega _n)\). We have

We first look for an estimate for the second integral \({\mathbb {I}}_2\). For this purpose, we apply the estimates in Lemma 4 and the compactness of the injection of \(H^1(U)\) into \(H^q(U)\) for \(\frac{1}{2}< q < 1\), to obtain

Clearly, using the convergence \(\phi _n' \rightarrow \phi '\) in the \(C^1([0,1],{\mathbb {R}}^2)\)-norm (see (51)), we get the limit \(\lim _{n\rightarrow \infty } {\mathbb {I}}_2 = 0\).

On the other hand, to get an estimate for the first integral \({\mathbb {I}}_1\), we first apply the identity \(a^2 - b^2 = (a - b)^2 + 2b(a-b)\) to obtain

For \({\mathbb {I}}_{12}\), we have the estimate

On the other hand, for \({\mathbb {I}}_{11}\), we have

The above estimates were obtained using the inequalities in Lemma 4. Combining them, we arrive at

Applying Lemma 5, and again using the compactness of the injection of \(H^1(U)\) into \(H^q(U)\) for \(\frac{1}{2}< q < 1\), the weak convergence \({\tilde{w}}_n \rightharpoonup {\tilde{w}}\) in \(H^1(U)\) and the convergence \(\phi _n' \rightarrow \phi '\) in the \(C^1([0,1],{\mathbb {R}}^2)\)-norm (see Proposition 6), we obtain \(\lim _{n\rightarrow \infty } {\mathbb {I}}_1 = 0\). Thus, \(\lim _{n\rightarrow \infty } |J(\Sigma (\phi _n)) - J(\Sigma (\phi ))| = 0\). Consequently, we find that \(\lim _{n\rightarrow \infty }J(\Omega _n, u_{{\mathrm{N}}n}, u_{{\mathrm{R}}n}) = J(\Omega ,u_{\mathrm{N}},u_{\mathrm{R}})\); that is, J is continuous, and in particular, lower semi-continuous. \(\square\)

To conclude this section, let us formally provide the proof of Theorem 1 using Proposition 6 and Proposition 7.

Proof of Theorem 1

Let \((\Omega _n,z_{{\mathrm{N}}n},z_{{\mathrm{R}}n})\), \(\Omega _n=\Omega (\phi _n)\), be a minimizing sequence for the cost function J; that is, \((\Omega _n,z_{{\mathrm{N}}n},z_{{\mathrm{R}}n})\) is such that

From Proposition 6, there exists a subsequence \((\Omega _k,z_{{\mathrm{N}}k},z_{{\mathrm{R}}k})\) and an element \(\Omega =\Omega (\phi ) \in {\mathcal {O}}_{{\mathrm{ad}}}\) such that \(\Omega _k \rightarrow \Omega\) (i.e., \(\phi _k \rightarrow \phi\) uniformly in the \(C^1\) topology), \({\tilde{z}}_{{\mathrm{N}}k} \rightharpoonup {\tilde{z}}_{{\mathrm{N}}}\), \({\tilde{z}}_{{\mathrm{R}}k} \rightharpoonup {\tilde{z}}_{{\mathrm{R}}}\) in \(H^1(U)\), and the functions \({\tilde{z}}_{{\mathrm{N}}}|_{\Omega }\) and \({\tilde{z}}_{{\mathrm{R}}}|_{\Omega }\) are the unique weak solutions to (46) and (47) in \(\Omega\), respectively. Using these, together with the continuity of J proved in Proposition 7, we conclude that (by virtue of [43, Theorem 2.10])

\(\square\)

Remark 8

It is worth remarking that in [32], the authors did not tackle the question of existence of an optimal solution of the shape optimization problem examined in their paper, which is the Poisson case of (1) with regular Dirichlet and Neumann data on the fixed boundary and free boundary, respectively. Nevertheless, the authors tacitly supposed the existence of optimal domains and assumed that they are sufficiently regular to accomplish their objectives. We mention that, with appropriate modifications to the proof of Theorem 1, the existence analysis for the shape optimization problem studied in [32] can be carried out in a similar fashion (see [13]).

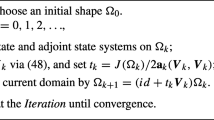

5 Numerical algorithm and examples

Here, using the gradient and Hessian information, we formulate a boundary variation algorithm to numerically solve the minimization problem (5). We use a Lagrangian-like method to carry out the numerical realization of the problem, in contrast to the one applied in [12, 44, 50], which is an Eulerian-like method known as the level-set method (see [64]). Our approach also differs from that of [31,32,33], which employs boundary integral equations that are then solved by boundary element methods. Furthermore, another numerical method, recently developed by Haddar and Kress in [40], employs conformal mappings to solve the FBP (1) and treats the Bernoulli problem in the context of inverse problems. More recently, Kress introduced another method in [54] in an attempt to improve the use of boundary integral equations for numerically solving the Bernoulli problem. In terms of numerical performance, he demonstrated that this method, inspired by Trefftz’ integral equation method [73], is more robust and more widely applicable than that of [40]. We mention here that Trefftz’ approach can, in principle, be considered a so-called trial method (see, e.g., [72]), which is also a prominent numerical method for solving free boundary value problems such as the Bernoulli problem.

5.1 Numerical algorithm

In the following discussion, we give the details of the numerical algorithm we use to solve some concrete numerical examples of (5).

5.1.1 The Sobolev gradient method

Let us denote by \(\Omega _k\) the shape of the domain at the \(k\hbox {th}\) iteration. Then, at the \((k+1)\hbox {th}\) iteration, the shape \(\Omega\) can be updated as \(\Omega _{k+1} := \Omega _{t_{k+1}} = ({\mathbf {I}}_2+t_k{\mathbf {V}})\Omega\), where \(t_k \geqslant 0\) is some small step size parameter and \({\mathbf {V}}\) represents the descent deformation field \({\mathbf {V}}_k\) at the \(k\hbox {th}\) iterate. In perturbing the domain \(\Omega\), we may take \({\mathbf {V}}|_{\Sigma } = -{G}{\mathbf {n}}\) as the descent direction. However, this choice of the descent direction may cause undesirable oscillations on the free boundary of the shape solution \(\Omega ^*\). To avoid such phenomena, we compute the descent direction via the so-called \(H^1\) gradient method [6]; that is, we take \({\mathbf {V}}\) as the unique solution in \({\mathbf {H}}^1_{\Gamma ,0}(\Omega )\) of the variational problem

In this sense, the deformation field \({\mathbf {V}}\), also called a Sobolev gradient in some literature (see, e.g., [60]), provides a smooth extension of \({G}{\mathbf {n}}\) over the entire domain \(\Omega\), which not only smooths the boundary [5] but also provides a preconditioning of the descent direction. The method of regularizing the descent direction using (57) is similar to the idea behind the so-called traction method introduced and popularized in [2,3,4,5].
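The smoothing and preconditioning effect of an \(H^1\)-type gradient can be illustrated on a much simpler model than the volumetric problem (57). The Python sketch below is a one-dimensional analogue posed on a closed curve of length \(2\pi\): it solves \((I - \alpha \, \partial _{ss}) v = -g\) with periodic finite differences, where \(g\) stands in for the kernel \(G\). The sample kernel `g`, the weight `alpha`, the grid size, and the helper names `h1_smooth_periodic` and `roughness` are all hypothetical choices made for illustration; this is not the authors' finite element implementation.

```python
import numpy as np


def h1_smooth_periodic(g, length, alpha=1.0):
    # Solve (I - alpha * d^2/ds^2) v = -g on a closed curve of given length,
    # using second-order periodic finite differences (illustrative model only).
    m = g.size
    h = length / m
    lap = -2.0 * np.eye(m) + np.eye(m, k=1) + np.eye(m, k=-1)
    lap[0, -1] = 1.0  # periodic wrap-around entries
    lap[-1, 0] = 1.0
    lap /= h**2
    system = np.eye(m) - alpha * lap  # symmetric positive definite
    return np.linalg.solve(system, -g)


def roughness(v):
    # Sum of squared jumps between neighbouring nodes on the closed curve.
    return float(np.sum((np.roll(v, -1) - v) ** 2))


m = 400
length = 2.0 * np.pi
s = np.arange(m) * (length / m)
# Hypothetical kernel: a smooth part plus a high-frequency oscillation.
g = np.cos(s) + 0.5 * np.sin(40.0 * s)

v_raw = -g                                  # L^2 (unsmoothed) descent direction
v_h1 = h1_smooth_periodic(g, length)        # H^1-smoothed descent direction

descent = float((length / m) * np.sum(g * v_h1))  # discrete L^2 pairing <g, v>
print(descent, roughness(v_raw), roughness(v_h1))
```

Because the system matrix is symmetric positive definite, the pairing \(\langle g, v\rangle\) stays negative, so the smoothed field is still a descent direction, while the oscillatory component of \(g\) is strongly damped.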

On the other hand, we note that the kernel G given in (15) depends on the mean curvature of \(\Sigma\). This means that we first need to calculate \(\kappa\) in order to determine \({\mathbf {V}}\). In this investigation, we evaluate this expression by first creating a smooth extension of \({\mathbf {n}}\) using the idea of the \(H^1\) gradient method and then calculate \(\kappa\) as the divergence of that smooth extension. This technique is possible because, by Proposition 5.4.8 of [46, p. 218] (see also [38, Lemma 16.1, p. 390]), we know that, for a domain \(\Omega\) of class \(C^2\), there exists a unitary \(C^1\) extension \({\tilde{{\mathbf {n}}}}\) of \({\mathbf {n}}\) such that the mean curvature may be defined as

Hence, based on this idea, we may numerically compute \(\kappa\) via the equation \(\kappa = {\mathrm{div\,}}{{\mathbf {N}}}\), where \({\mathbf {N}}\) is the smoothed extension of \({\mathbf {n}}\) satisfying the equation
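The relation \(\kappa = {\mathrm{div\,}}{\tilde{{\mathbf {n}}}}\) can be checked on a simple shape. The following Python sketch assumes a circle of radius \(R\) and uses the explicit unitary extension \({\tilde{{\mathbf {n}}}}(x) = x/|x|\) in place of the PDE-based smoothed extension \({\mathbf {N}}\); the divergence is approximated by central differences. The radius, the step `h`, and the helper names `unit_normal_extension` and `divergence_fd` are assumptions made for illustration only.

```python
import numpy as np


def unit_normal_extension(x, y):
    # Unitary extension of the outward unit normal of a circle centred at the
    # origin: n(x) = x / |x| (explicit formula, hypothetical test case).
    r = np.hypot(x, y)
    return x / r, y / r


def divergence_fd(field, x, y, h=1e-5):
    # Central-difference approximation of div(field) at the points (x, y).
    nx_plus, _ = field(x + h, y)
    nx_minus, _ = field(x - h, y)
    _, ny_plus = field(x, y + h)
    _, ny_minus = field(x, y - h)
    return (nx_plus - nx_minus) / (2.0 * h) + (ny_plus - ny_minus) / (2.0 * h)


radius = 2.0
theta = np.linspace(0.0, 2.0 * np.pi, 16, endpoint=False)
x_sigma = radius * np.cos(theta)
y_sigma = radius * np.sin(theta)

# Curvature on the circle, computed as the divergence of the extended normal.
kappa = divergence_fd(unit_normal_extension, x_sigma, y_sigma)
print(kappa)
```

For this test case the computed values agree with the exact curvature \(1/R\) of the circle up to discretization error, since \({\mathrm{div\,}}(x/|x|) = 1/|x|\) in two dimensions.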

5.1.2 Step size

Let us now turn our attention to the computation of the step size to be used in our algorithm. It is worth mentioning that the choice for \(t_k\) can be decided in many ways. Here, we shall update \(t_k \in (0, \varepsilon ]\) (where \(\varepsilon >0\) is some sufficiently small real number) by following a heuristic approach inspired by the Armijo–Goldstein line search strategy similar to the one offered in [50], but for level-set methods. Given the choice of descent direction \({\mathbf {V}}|_{\Sigma } = -{G}{\mathbf {n}}\) (this means, basically, that \(a(\cdot ,\cdot )\) in equation (60) below is the usual inner product in \(L^2(\Sigma )\)) and the definition of the domain \(\Omega _{\varepsilon }\), we know that