Abstract

We propose a new iterative search procedure for the numerical treatment of unconstrained multi-objective optimization problems (MOPs) which steers the search along a predefined direction given in objective space. Based on this idea we will present two methods: directed search (DS) descent which seeks for improvements of the given model, and a novel continuation method (DS continuation) which allows to search along the Pareto set of a given MOP. One advantage of both methods is that they can be realized with and without gradient information, and if neighborhood information is available the computation of the search direction comes even for free. The latter makes our algorithms interesting candidates for local search engines within memetic strategies. Further, the approach can be used to gain some interesting insights into the nature of multi-objective stochastic local search which may explain one facet of the success of multi-objective evolutionary algorithms (MOEAs). Finally, we demonstrate the strength of the method both as standalone algorithm and as local search engine within a MOEA.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In many applications one is faced with the problem that several objectives have to be optimized concurrently leading to a multi-objective optimization problem (MOP). Multi-objective evolutionary algorithms (MOEAs) have proven to be very effective on the treatment of such problems. Reasons for this include that algorithms of this kind are very robust, do not require hard assumptions on the model, and allow to compute a finite size representation of the solution set, the Pareto set, in one single run [1–3]. On the other hand, it is known that MOEAs need quite a few function evaluations in order to evolve to a suitable approximation of the set of interest. As one way out, researchers have proposed memetic algorithms that hybridize local search techniques with MOEAs in order to obtain fast and reliable global search procedures. (e.g., [4–8]).

In this paper, we propose a new point-wise iterative search procedure that allows to steer the search into any direction d given in objective space. Since there is no restriction on d, the Directed Search (DS) method can be used to steer the search both toward and along the Pareto set of a given MOP. Further, the method can be used with or without gradient information. To realize the gradient free DS, the neighborhood information of the point designated for local search can be exploited so that the related search direction can ideally be computed for free in terms of additional function evaluations. The latter makes the DS an interesting candidate for integration into set based heuristics such as MOEAs since in that case the neighbors of a given individual may be utilized. In this paper, we make a first attempt to demonstrate the strength of DS within a memetic algorithm. As a by-product, we gain some insights into the behavior of multi-objective stochastic local search (MOSLS) by using the DS approach which yields some interesting insights and may explain one facet of the huge success of evolutionary algorithms for the treatment of MOPs.

The idea to steer the search by a direction given in objective space is not new but appears in the literature in several variations (e.g., [9–13]). Most remarkably, the DS Descent Method shares some characteristics with NBI [11] and the method presented in [13]. The DS, however, has a broader applicability. In particular the possibility to move along the Pareto set and the gradient free realization present novelties that are beneficial for hybridization with set based heuristics. Preliminary studies of the DS can be found in [14–16]. In [14] the general idea of the DS is presented, in [15] some details of the DS Descent method are provided, and in [16] the gradient free DS Descent method is proposed together with a first memetic algorithm.

The remainder of this paper is organized as follows: in Sect. 2, we will present the background required for the understanding of the sequel. In Sect. 3, we present the basic idea of the DS as well as the gradient based descent and continuation methods. In Sect. 4, we explain some behavior of MOSLS using DS. In Sect. 5, we present the idea of the gradient free DS and present further on a gradient free continuation method. In Sect. 6, we present some numerical results of the DS both as standalone algorithm and as local searcher within a MOEA. Finally, we draw our conclusions and give paths for future research in Sect. 7.

2 Notations and background

In the following we consider unconstrained continuous MOPs

where F is defined as the vector of the objective functions \(F:\mathbbm {R}^n\rightarrow \mathbbm {R}^k\), \(F(x) = (f_1(x),\ldots ,f_k(x))^T,\) and where each objective \(f_i:\mathbbm {R}^n\rightarrow \mathbbm {R}\) is (for simplicity) smooth. The optimality of a MOP is defined by the concept of dominance [17]: a vector \(y\in \mathbbm {R}^n\) is dominated by a vector \(x\in \mathbbm {R}^n\) (\(x\prec y\)) with respect to (MOP) if \(f_i(x)\le f_i(y)\), \(i=1,\ldots ,k\), and there exists an index j such that \(f_j(x)<f_j(y)\), else y is non-dominated by x. A point \(x\in \mathbbm {R}^n\) is called (Pareto) optimal or a Pareto point if there is no \(y\in \mathbbm {R}^n\) which dominates x. The set of all Pareto optimal solutions is called the Pareto set, and is denoted by \(\mathcal {P}\). The image \(F(\mathcal {P})\) of the Pareto set is called the Pareto front. Both sets typically form a (\(k-1\))-dimensional object [18].

The Jacobian of F at a point x is given by

where \(\nabla f_i(x)\) denotes the gradient of objective \(f_i\). If all the objectives of the MOP are differentiable, the following famous theorem of Kuhn and Tucker [19] states a necessary condition for the Pareto optimality of unconstrained MOPs.

Theorem 1

Let \(x^*\) be a Pareto point of (MOP), then there exists a vector \(\alpha \in \mathbbm {R}^k\) with \(\alpha _i \ge 0, i=1,\ldots ,k\), and \(\sum _{i=1}^k\alpha _i = 1\) such that

Points satisfying (2) are called Karush–Kuhn–Tucker (KKT) points.

3 The directed search method

Here we first describe the idea of the Directed Search method and will further on derive a descent and a continuation method out of it.

3.1 Central idea

Assume a point \(x_0\in \mathbbm {R}^n\) with \(\mathrm {rank}(J(x_0))=k\) is given and a vector \(d\in \mathbbm {R}^k\) representing a desired search direction in objective space. Then, a search direction \(\nu \in \mathbbm {R}^n\) in decision space is sought such that for \(y_0 := x_0 + h\nu \), where \(h\in \mathbbm {R}_+\) is the step size (i.e., \(y_0\) represents a movement from \(x_0\) in direction \(\nu \)), it holds:

if \(\Vert \nu \Vert =1\). Throughout this paper, \(\Vert \cdot \Vert \) denotes the 2-norm unless specified otherwise. Using the Jacobian of F, Eq. (3) can be stated in matrix vector notation as

Hence, such a search direction \(\nu \) can be computed by solving a system of linear equations. Since typically the number of decision variables is (much) higher than the number of objectives for a given MOP, i.e., \(n\gg k\), the system (4) is (probably highly) underdetermined which implies that its solution is not unique. One possible choice is to take

where \(J(x_0)^+\in \mathbbm {R}^{n\times k}\) denotes the pseudo inverseFootnote 1 of \(J(x_0)\) as the following discussion shows (see Sect. 3.2 for further insights): given a candidate solution \(x_0\), a new solution is obtained via \(x_1 = x_0 + h\nu \), where \(\nu \in \mathbbm {R}^n\) is a vector that satisfies (4). Among the solutions of (4), \(\nu _+\) is the one with the smallest Euclidean norm. Hence, given h, one expects for a step in direction \(\nu _+\) (in decision space) the largest progress in direction d (in objective space).

3.2 The DS descent method

Assume we are given a direction \(d\in \mathbbm {R}^k\backslash \{0\}\) with \(d_i\le 0\), \(i=1,\ldots ,k\). Further, we assume that we are given a point \(x_0\in \mathbbm {R}^n\) with \(\mathrm {rank}(J(x_0))=k\) and that the image of F is bounded from below. A greedy search in d-direction using Eq. (5) leads to the (numerical) solution of the following initial value problem:

where t denotes the time. In the following we investigate solutions of (\(\mathrm{DS}(x_0,d)\)) qualitatively. Let \(\gamma :[0,t_f]\rightarrow \mathbbm {R}^n\) be such a solution, where \(t_f\) is the final value, and let \(t_c\) be the smallest value of \(t\ge 0\) such that

We will call \(t_c\) the critical value and \(\gamma (t_c)\) the critical point of (\(\mathrm{DS}(x_0,d)\)). \(\gamma \) can be divided into two parts (compare to Fig. 1): \(\gamma ([0,t_c])\) and \(\gamma ([t_c,t_f])\). In the first part, \(F(\gamma (t))\) yields the desired decay in d-direction. From the critical point \(\gamma (t_c)\) on a ‘best fit’ is computed (which follows directly by the properties of the pseudo inverse [20]), i.e.,

For the end point \(\gamma (t_f)\) it holds \(J(\gamma (t_f))^+d = 0\). On the one hand, such end points are of particular interest since they are KKT points with associated convex weight \(\alpha = -d/\Vert d\Vert _1\). To see this, let \(J(\gamma (t_f)) = U\Sigma V^T\) be a singular value decomposition of \(J(\gamma (t_f))\). Since \(J(\gamma (t_f))^+d = 0\), where \(J(\gamma (t_f))^+ = V\Sigma ^+ U^T\), it is also \(J(\gamma (t_f))^Td = V\Sigma U^T d = 0\), i.e., it holds

On the other hand, the computation of \(\gamma \) in \([t_c,t_f]\) might get computationally expensive since (\(\mathrm{DS}(x_0,d)\)) is stiff in the second part. Further, the computation of \(\gamma ([t_c,t_f])\) does not fit to the original idea of the directed search. Hence, we will restrict ourselves here to the detection of \(\gamma (t_c)\).

As seen above, one cannot expect to get KKT points with associated weight \(\alpha \) when computing \(\gamma (t_c)\). However, the following result shows a relation to the well-known NBI method. The NBI subproblem can be stated as followsFootnote 2

Proposition 1

Let \(x^*\) be the critical point of (\( DS(x_0,d)\)), then it is a local solution of \( (NBI(x_0,d))\).

Proof

Let \(g(x,l) := l\) and \(h_i(x,l):= f_i(x_0)+ld_i-f_i(x)\). Assume \(x^*\) is not a local solution of \(\mathrm{NBI}(x_0,d)\). Then there exist \(\nu =(\tilde{\nu },\nu _{n+1})\in \mathbbm {R}^{n+1}\), \(\tilde{\nu }\in \mathbbm {R}^n\), and \(l^*\in \mathbbm {R}\) such that

By (8) it follows that \(\nu _{n+1}\ne 0\), and by (9) that

Hence, it is \(\frac{1}{\nu _{n+1}} J(x^*)\tilde{\nu }=d\) which contradicts that \(x^*\) is a critical point. \(\square \)

In turn, local solutions of \(\mathrm{NBI}(x_0,d)\) are also potential critical points of (\(\mathrm{DS}(x_0,d)\)): let \(x^{**}\) be a solution of \(\mathrm{NBI}(x_0,d)\) . Assume that there exists a \(\nu \in \mathbbm {R}^n\) such that \(J(x^{**})\nu = d\). Then, \(\tilde{\nu } = (\nu ,1)\in \mathbbm {R}^{n+1}\) satisfies (8) and (9) which is in contradiction to the assumption of \(x^{**}\).

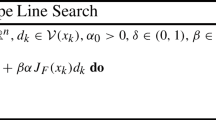

Numerical treatment of (\(\mathrm{DS}(x_0,d)\)) Since we are only interested in the detection of critical points the numerical treatment of (\(\mathrm{DS}(x_0,d)\)) gets significantly simplified. In the following we describe the steps for a particular realization.

To trace the solution curve of (\(\mathrm{DS}(x_0,d)\)), one can e.g. choose well-established numerical discretization methods (e.g., [21]). As these methods do not allow to perform a correction back to the solution curve, computed errors can not be corrected. In the following, we present one possible way to compute the solution curve numerically by a specialized predictor corrector (PC) method (e.g., [22]), i.e., with a procedure which allows for such a correction: recall that for every point x on the solution curve it holds

where \(\lambda _y\in \mathbbm {R}\). Hence, the curve is contained in the zero set of

To comply with the needs of PC methods we introduce an additional parameter \(\lambda _x\) into the solution curve (which is defined in decision space):

So far, we are not able to apply PC methods since the parameters \(\lambda _x\) and \(\lambda _y\) parametrize different curves. The following little consideration, however, argues that it is reasonable to match the two parameters: let \(x_0\) be given and \(\lambda _{x,0}=0\), and let \((x_1,\lambda _{x,1})\) be an Euler step of (13) with a small step size \(\Delta \lambda _x\), i.e., \(x_1 = x_0 + \Delta \lambda _x \nu _+(x_0)\) and \(\lambda _{x,1} = \Delta \lambda _x\). By construction of \(\nu _+(x_0)\) and since \(\Delta \lambda _x\) is small we have

This implies that \(F(x_1)-F(x_0)\approx \Delta \lambda _x d \Vert \nu _+(x_0)\Vert \), and hence, \(H(x_1,\lambda _{x,1}) / \Vert \nu _+(x_0)\Vert \approx 0\). Using this, (13) and (12) can now be used to perform classical PC methods in order to trace the solution curve: starting with the point \((x_0,\lambda _{x,0})\) one can integrate (13) numerically for a small time step, e.g. via the Euler method as described above leading to a predictor solution \((\tilde{x}_1,\tilde{\lambda }_{x,1})\). In a next step, this solution can be corrected to the desired curve. That is, starting with \((\tilde{x}_1,\tilde{\lambda }_{x,1})\) and using a root finding method applied on (12) one can seek for a solution \((x_1,\lambda _{x,1})\) with \(H(x_1,\lambda _{x,1})/ \Vert J(x_0)^+d \Vert \approx 0\), and so on. For details such as step size control we refer to a preliminary study of the DS in [15].

To define a stopping criterion we can utilize the facts that \(\mathrm {rank}(J(x_0))=k\) (by assumption) and that \(\mathrm {rank}(J(x^*))<k\) (by definition of the critical point \(x^*\)). The rank of a matrix can of course not be used to detect the critical point numerically, but instead the condition number \(\kappa _2\) of J(x) can be used: one can e.g. compute

where \(\sigma _1\) and \(\sigma _k\) are the largest and smallest singular values of J(x), respectively, and stop the process if \(\kappa _2(J(x_i)) \ge tol\), where \(tol\in \mathbbm {R}_+\) is a given (large) threshold. This can be done since by the above discussion \(\kappa _2(J(x(t)))\rightarrow \infty \) for \(x(t)\rightarrow x^*\). Alternatively, one can stop the search if the difference between two consecutive solutions is below a given threshold.

This discussion shows one potential drawback of the approach, namely that the determination of the search direction by solving (4) gets inaccurate for points near the Pareto set due to the high condition number of J(x). However, our experience has shown that state-of-the-art numerical tools allow to come ‘near enough’ to the Pareto set even for larger problems (see also the results in Sect. 6). However, large condition numbers (say, \(tol>1000\)) should be avoided as they might lead to inaccuracies and higher computational times when solving (4) numerically.

Crucial for the above descent method is of course the proper choice of d to steer the process. This is in general not an easy task since this is highly problem dependent. In the next subsection, one particular choice of d is discussed for the realization of the continuation method. Some other choices are used in Sect. 6 where the DS is integrated into a MOEA.

3.3 The DS continuation method

In this section we propose a new PC method for the continuation along (local) Pareto sets of a given MOP. The central difference to a classical method is that we suggest a new predictor direction which is based on the geometry of the Pareto front and is realized by DS. Interesting is the fact that this new method does not require to compute the Hessians of the objectives. In the following we present the alternative predictor direction and propose then a ‘complete’ Hessian free PC method (and the variant in Sect. 5 is even gradient free).

Predictor Direction Assume we are given a (local) Pareto point x and the associated convex weight \(\alpha \), i.e., such that Eq. (2) is satisfied. Further, we assume that \(\mathrm {rank} (J(x)) = k-1\). It is known (e.g., [18]) that in this case \(\alpha \) is orthogonal to the linearized Pareto front at F(x). Thus, a search orthogonal to \(\alpha \) (in objective space) could be promising to obtain new predictor points. To use DS, for instance a QR-factorization of \(\alpha \) can be computed, i.e.,

where \(Q=(q_1,\ldots ,q_k)\in \mathbbm {R}^{k\times k}\) is orthogonal and \(q_i,\;i=1,\ldots ,k\), are its column vectors, and \(R=(r_{11},0,\ldots ,0)^T\in \mathbbm {R}^{k\times 1}\) with \(r_{11}\in \mathbbm {R}\backslash \{0\}\). Since by (16) \(\alpha = r_{11}q_1\), i.e., \(\alpha \in span \{q_1\}\), and Q orthogonal, it follows that the column vectors \(q_2,\ldots ,q_k\) build an orthonormal basis of the hyperplane which is orthogonal to \(\alpha \). Thus, a promising well-spread set of search directions \(\nu _i\) may be the ones which satisfy

Since \(\alpha \) is not in the image of J(x) (otherwise x would not be a Pareto point) and by assumption on the rank of J(x) it follows that the vectors \(q_2,\ldots ,q_k\) are in the image of J(x), i.e., Eq. (17) can be solved for each \(i\in \{2,\ldots ,k\}\).

We stress that for the case \(k=2\) the predictor direction (17) coincides—apart from its length—with one of the gradients \(\nabla f_i(x)\), \(i=1,2\), which has already been successfully used in [23, 24] for bi-objective continuation. Further, we note that such predictor directions \(\nu _p=J(x)^+q\) do not have to be tangent to the Pareto set. Instead, \(J(x)\nu _p\) points along the linearized Pareto front. Since as for (5) \(\nu _p\) is the most greedy solution (compare also to Sect. 4) we can expect that the image F(p), where p is the chosen predictor, is also close to the Pareto front, and thus, that only few iteration steps are required to correct back to this set.

PC Method Based on the above discussion we derive in the following a new PC method.

Predictor Assume we are given a Pareto point \(x_0\) with associated weight \(\alpha _0\). The predictor direction can—except for its signum—be chosen as described above, i.e., one of the normalized vectors \(\nu := \pm \nu _2/\Vert \nu _2\Vert \), where \(\nu _2\) satisfies (4) as described above for \(d=q_2\). To orientate the curve (i.e., to determine the signum of \(\nu \)) one can simply use the change of one of the objective values. For this, the signum of the according entry of the direction vector \(q_2\) can be taken. If, for instance, an improvement according to \(f_2\) is sought, then

can be chosen as predictor, where \(q_{2,2}\) denotes the 2nd entry of \(q_2\), and h is the desired step size.

To get the value of h we proceed as follows: assume we are given \(x_0\) and the direction \(\nu \), \(\Vert \nu \Vert =1\), associated to the direction q in objective space for the predictor \(p=x_0+h\nu \). To obtain an adequate spread of the solutions the function values \(f_j(x)\) and \(f_j(p)\) of at least one objective differ ideally by a (problem dependent) value \(\epsilon \) while the difference for all other objectives does not exceed this threshold. Since this value can differ for each objective we obtain for the demand on the spread

where \(d_w(x,y)=\max _{i=1,\ldots ,k} (w_i|x_i-y_i|)\) is the weighted maximum norm distance (used to weight the objective space). Assuming that all \(f_i\)’s are Lipschitz continuous and that the step size \(h_i\) for the i-th objective is sufficiently small we obtain

Since \(L_{i,x}\) can be approximated by the norm of the directional derivative we obtain for each objective the control

and hence for the entire MOP

Corrector Given p, the subsequent solution along the curve can be computed by solving numerically (\(\mathrm{DS}(x_0,d)\)), using p as initial value and choosing \(d:=-\alpha _0\), i.e., the negative of the weight from the previous solution \(x_0\) leading to a new solution \(x_1\). The new associated weight \(\alpha _1\) can be updated as follows ([25]):

Algorithm 1 shows a possible realization of the continuation method for bi-objective problems (BOPs). There are only two choices possible for a continuation along the Pareto front: ‘right down’ or ‘left up’. Algorithm 1 shows the ‘right down’ movement starting with one initial solution, the respective realization for a ‘left up’ movement is analog. The consideration of MOPs with \(k>2\) requires an additional data structure for the efficient representation of the approximation. See e.g. [26–28] for further details.

4 On multi-objective stochastic local search

In the following we discuss the behavior of multi-objective stochastic local search (MOSLS) which can to a certain extent be explained using the approach of the DS. In particular, it may explain one facet of the huge success of global multi-objective stochastic search algorithms such as MOEAs.

To examine the behavior of MOSLS we investigate the relation of search directions \(\nu \in \mathbbm {R}^n\) in decision space and the movement performed in objective space at a given point \(x\in \mathbbm {R}^n\). The latter can be expressed by \(J(x)\nu \): if a line search along \(\nu \) is performed, then the new iterate is given by \(x_{new} = x + t\nu \), where t is a (small) step size. If on the other hand \(x_{new}\) is chosen from a small neighborhood N(x) of x (e.g., via mutation), then we are in the same setting. To see this, define \(\nu := (x_{new} - x)/\Vert x_{new} - x\Vert \), and we have \(x_{new} = x + \Vert x_{new} - x\Vert \nu \).

In the following we will first consider the extreme cases—x is either far away or very close to the Pareto set—and based on this we will derive conclusions of MOSLS within global search heuristics.

x far away from the Pareto set In [29], it has been observed that the objectives’ gradients typically point nearly in the same direction if x is far from the Pareto set. For MOPs with this property, the gradients point in the extreme case into the same direction, and we have for \(g:=\nabla f_1(x)/\Vert \nabla f_1(x)\Vert \)

where each \(\mu _i>0\). Then, we have for a search direction \(\nu \in \mathbbm {R}^n\) that

That is, it is either (a) \(J(x)\nu =0\) or (b) a search in direction \(\nu \in \mathbbm {R}^n\) leads to a movement in direction \(d= \pm (\mu _1,\ldots ,\mu _k)^T\) in objective space. Since the dimension of the kernel of J(x) is \(n-1\), this means that for a randomly chosen search direction \(\nu \in \mathbbm {R}^n\) the probability is zero that \(J(x)\nu =0\). Hence, if we choose a point \(x_{new}\) randomly from a small neighborhood N(x) we can expect that the difference \(F(y)-F(x)\) is basically a multiple of d. If \(F(x_{new})-F(x)> 0\), where ‘\(>\)’ is considered component-wise, (i.e., \(x\prec x_{new}\)), one can simply flip the search and use \(\tilde{x}_{new}:= x-\nu \) where \(\nu = x_{new}-x\) since by the above considerations one can expect that \(F(x)-F(x-\nu ) \approx -(F(x)-F(x+\nu )) < 0\) (i.e., \(\tilde{x}_{new}\prec x\)). Note, however, that the largest improvement along direction d can be obtained for \(\nu = \pm g\) since in that case \(\Vert J(x)\nu \Vert \) is maximized.

As an example we consider the bi-objective problem [30]

Figure 2a shows an example for \(n=2\) where 100 points were randomly sampled around \(x_0=(30,-30)^T\). In objective space, a clear movement along \(d=\pm (1,1)^T\) can be observed which is (by construction) not the case in decision space.

x near to the Pareto set We again consider the extreme situation, namely now that x is a Pareto point. That is, there exists a convex weight \(\alpha \in \mathbbm {R}^k\) such that \(J(x)^T\alpha =0\). Further, let \(\mathrm {rank}(J(x))=k-1\). Then it holds for any \(\nu \in \mathbbm {R}^n\):

Hence, we have that either (a) \(J(x)\nu = 0\) or (b) that a movement along \(\nu \) leads to a movement along the Pareto front since \(J(x)\nu \ne 0\) is orthogonal to \(\alpha \) (compare to Sect. 3.3). Since the dimension of the kernel of J(x) is \(n-k+1\), the probability for event (a) is zero for a chosen direction \(\nu \) (or a randomly chosen point \(x_{new}\in N(x)\), where N(x) is a small neighborbood of x). Note that the movement along the Pareto front is regardless of the choice of \(\nu \), i.e., regardless if the search in decision space is performed along the Pareto set or not.

Let us consider the important special case \(k=2\). Without loss of generality assume that \(\nabla f_1(x)\ne 0\) and \(\nabla f_2(x)\ne 0\). Then there exist \(\alpha _1\ne 0\) and \(\alpha _2\ne 0\) such that \(\alpha _1 \nabla f_1(x) + \alpha _2 \nabla f_2(x)=0\). Defining \(w = -\alpha _1/\alpha _2\) we can write

We see that \(J(x)\nu =0\) iff \(\nu \) is orthogonal to \(\nabla f_1(x)\). Further, \(\nu \in \{\pm \nabla f_1(x)\}\) is the greedy direction since in that case \(\Vert J(x)\nu \Vert \) is maximized.

Figure 2 shows 100 randomly sampled points in a neighborhood of \(x_0=(0,0)^T\). Again, by construction, no pattern is visible in decision space, but in objective space a clear movement along the Pareto front can be observed.

Contribution of MOSLS within global heuristics Based on the observations made above we make a first attempt to explain the contribution of MOSLS within global search heuristic such as MOEAs. The above discussion shows mathematically and empirically that two important features are inherent in MOSLS, namely a pressure both toward and along the Pareto front. The above considerations hold for extreme cases. Apparently, candidate solutions do not have to be near nor far away from the Pareto set. For points x that are ‘in between’ the search can be steered into any direction. Figure 2b shows the result of 100 randomly chosen points around \(x_0 = (1,-1)^T\). This ‘opening’ allows set based stochastic search methods such as MOEAs in principle to find all regions of the Pareto set/front. The latter of course with all the restrictions that hold for local seach methods.

To examine the behavior of MOSLS within the entire search process empirically we consider a simple set based neighborhood search (SNS, see Algorithm 2). In this method, for entry a of a given archive \(A_i\) a solution \(b\in N(a)\) is chosen in a small neighborhood leading to the new set of candidate solutions \(B_i\). The next archive \(A_{i+1}\) consists of the non-dominated solutions of \(A_i\) and \(B_i\).

Figure 3 shows some numerical results for problem (26). Figure 3a shows a result of SNS where \(A_0\) has been built by 10 randomly chosen points within the domain \(D=[-10,10]^2\). In this figure, every second generation is plotted. As neighborhood in step i we have chosen the maximum norm with radius \(\delta _i = 5/i\). The result indicates that for this model SNS has no problem to converge. The final archive is indeed very close to the true Pareto front. Since a random search along the Pareto set leads to many non-dominated solutions leading to many entries in the archive we have for sake of a (very simple) comparison coupled SNS with the archiver ArchiveUpdateTight2 that allows to reduce the number of archive entries while preserving certain convergence properties [31]. See Fig. 3b for a result where the number of function evaluations were restricted to 500 (only the final archive is plotted). As comparison, Fig. 3c contains the non-dominated solutions from a simple global searcher (all points are chosen randomly from D) with a budget of 5000 function calls. This observation gets confirmed in Table 1, where GS and SNS are compared on 14 different benchmark functions with different characteristics such as modality and connectedness of the Pareto fronts. SNS wins in 13 out of 14 cases (and significant in 12 cases due to the Wilcoxon rank-sum test) and is only inferior (significantly) compared to GS on ZDT4 which is highly multi-modal as it contains \(21^9\) local Pareto fronts.

Though SNS does not make use of any gradient information, explicit steering, or any kind of population/swarm based intelligence we think that the results are already reasonable. Certainly, state-of-the-art MOEAs will outperform SNS (in particular when n or k are increased, and/or more complicated models are beeing considered) which results from the great importance of the interplay of the different elements of a search heuristic such as the balance of local and global search (e.g., [32]). However, our focus in this section is the behavior of stochastic local search which is used within every state-of-the-art heuristic. As the above discussion shows and the numerical results indicate, the two key features, pressure toward and along the Pareto front (which are closely related to the terms convergence and diversity) are already inherent in MOSLS. Hence, one can even say that the classical EMO task (i.e., to find a finite size approximation of the Pareto front) is a well-conditioned problem. This might be one facet that explains the huge success of global search heuristics such as MOEAs. We stress that the above considerations are not restricted to differentiable problems. Note that many maps are only non differentiable on a zero set. In other words, for such a map F and a randomly chosen feasible point x the probability is one that J(x) exists, and hence, that the above considerations hold.

5 Gradient free directed search

So far, DS requires the Jacobian if the method is realized via (4). Here, we present an alternative way to obtain such search directions \(\nu \) without explicitly computing or approximating the Jacobian. Instead, the information of function values of points near a given point is utilized for the approximation of \(\nu \). This method can be viewed as a particular finite difference (FD) method, however, it has two crucial advantages over the classical Jacobian approximation via FD: (i) fewer additional function evaluations are required to obtain a direction \(\nu \) such that Eq. (4) is satisfied, and (ii) existing neighborhood information can be utilized leading to a further reduction of the cost. The latter is in particular interesting in the context of set-based optimization strategies such as MOEAs. The general idea behind the method is as follows: given a point \(x_0\) that is designated for local search as well as another point \(x_i\) in the current population (i.e., the objective value is known) that is in the vicinity of \(x_0\), then the given information can be used to approximate the directional derivative in direction

without any additional cost (in terms of function evaluations). That is, it holds

where O denotes the Landau symbol. This can be seen by considering the forward difference quotient on the line search function \(f_{\nu _i}(h) = f(x_0+h\nu _i)\).

Now assume a candidate solution \(x_0\in \mathbb {R}^n\) is designated for local search and further r search directions \(\nu _i\in \mathbb {R}^n\), \(i=1,\ldots ,r\), are given. Then, the matrix \(\mathcal {F} := JV\in \mathbb {R}^{k\times r}\), where \(V=(\nu _1,\ldots ,\nu _r)\in \mathbbm {R}^{n\times r}\), is as follows:

Hence, every element \(m_{ij}\) of JV is defined by the value of the directional derivative of objective \(f_i\) in direction \(\nu _j\), and can be approximated as in Eq. (30). Given JV and a direction d, one can thus obtain a search direction via solving

and then setting

We stress that the above method is not a gradient approximation via sampling (as e.g. done in [33]) but instead a new way to get an approximation of \(\nu \) without explicitly computing the gradient.

Next we investigate the solvability and the condition of Eq. (32) which depends both on the choice of the value of r and the position of the search directions \(\nu _i\) to each other. In case \(\mathrm {rank}(J(x)) = k\) it is known that

If on the other hand x is a critical point (and hence, \(\mathrm {rank}(J(x))<k\)), then it follows by the properties of matrix multiplication that also \(\mathrm {rank}(JV)<k\) regardless of the choice of V (i.e., regardless of the number r and the choice of the search directions \(\nu _i\)). This indicates that the condition number of JV can be used to check numerically if a current iterate is already near to an end point of (\(\mathrm{DS}(x_0,d)\)). It seems to make sense to choose the search directions orthogonal to each other. This is motivated by the fact that \(\kappa _2(JV) \le \kappa _2(J(x))\) for the case that V is orthogonal which means that the condition number \(\kappa _2(JV)\) can indeed be used as a stopping criterion analog to the original DS method.

The above considerations show that already for \(r=k\) search directions \(\nu _i\), \(i=1,\ldots ,r\), one can find a descent direction \(\tilde{\nu }\) by solving Eq. (32) regardless of n. However, by construction it is \(\nu \in span\{\nu _1,\ldots ,\nu _r\}\) which means that only a r-dimensional subspace of the \(\mathbb {R}^n\) is explored in one step. One would expect that the more search directions \(\nu _i\) are taken into account, the better the choice of \(\tilde{\nu }\) is, which is indeed the case: for \(r>k\), we suggest to choose analog to (5)

In fact, \(\nu ^{(r)}_+\) comes closer to \(\nu _+ = J(x)^+d\) as r increases, and both vectors are equal for \(r=n\) in case the \(v_i\)’s are chosen orthogonal to each other. This is due to the fact that in this case \(VV^T\) (i.e., the orthogonal projection onto \(span\{v_1,\ldots ,v_r\}\)) converges to the identity matrix \(I_n\in \mathbb {R}^{n\times n}\).

In the following we investigate empirically the influence of r on the performance of the new gradient free DS descent method. For this reconsider MOP (26), this time for \(n=10\). Consider the point \(x_0 =(1,-1,\ldots ,1,-1)^T\in \mathbb {R}^{10}\) and direction \(d=(-1,-1)^T\). We obtain \(\nabla f_1(x_0) = (0, -4,\ldots ,0,-4)^T\), \(\nabla f_2(x_0) = (4,0,\ldots ,4,0)^T\), and \(\nu _+ = (-1,1,\ldots ,-1,1)^T\). Choose \(\nu _i = e_i\) as the canonical unit vectors and consider the search directions \(\nu _+^{(r)}\), \(r\in \{2,4,6,8,10\}\), where the first r search directions are taken. It follows that \(\nu _+^{(2)} = (-1,1,0,\ldots ,0)^T\), \(\nu _+^{(4)} = (-1,1,-1,1,0,\ldots ,0)^T\) etc., and \(\nu _+^{(10)} = \nu _+\). It is \(\Vert J(x_0)\nu _+\Vert = \sqrt{2} \cdot 20\) and \(\Vert J(x_0)\nu _+^{(r)}\Vert = \sqrt{2}\cdot 2r\). Figure 4 shows the Pareto front together with the images \(y_r = F(x_0 + t \nu _+^{(r)})\), \(r\in \{2,4,\ldots ,10\}\), for the step size \(t=1\). Apparently, the larger the value of r, the more the improvement in d-direction for the same step size. This shows—as anticipated—the trade off between cost and performance of one iteration step depending on r. Crucial for the approximation of \(\mathcal {F}\) is next to r the choice of the test points \(x_i\). If the function values of points in a neighborhood \(N(x_0)\) are already known, it seems to be wise to include them to build the matrix. Nevertheless, it might be that further test points have to be sampled to obtain a better search direction which is motivated by the above discussion. As a rule of thumb, we have observed in our computations that we present in the next section that \(r\approx 0.4n\) leads to good results for the use within MOEAs.

Assume that we are given \(x_0\in \mathbb {R}^n\) as well as l neighboring solutions \(x_1,\ldots ,x_l\in N(x_0)\). According to the discussion above, it is desired that all further search directions are both orthogonal to each other and orthogonal to the previous ones. In order to compute the new search directions \(\nu _{l+1},\ldots ,\nu _r\), \(r>l\), one can proceed as follows: compute \(V = QR = (q_1,\ldots ,q_l,q_{l+1},\ldots ,q_n)R\). Then it is by construction \(\nu _i\in span\{q_1,\ldots ,q_i\}\) for \(i=1,\ldots ,l\), and hence

One can thus e.g. set

Since the cost for the QR-factorization is \(O(n^3)\) in terms of flops, one may alternatively use the Gram–Schmidt procedure (e.g., [20]) to obtain the remaining sample points (e.g., if \(r-l\) is small and n is large). This leads to a cost of \(O((r-l)^2n)\) flops. For the special case that \(\nu _1 = x_1-x_0\) and \(\tilde{\nu }_2 = \tilde{x}_2-x_0\) are given such that \(\{\nu _1,\tilde{\nu }_2\}\) are linearly independent the second search vector \(\nu _2\) can be computed by

Having stated the basic idea of the gradient free DS (called DDS for discrete Directed Search) we are now in the position to perform a movement both toward and along the Pareto front as for the classical DS. For the DDS Descent Method as standalone algorithm we refer to some studies made in [34]. More promising is the use of DDS within set based algorithms such as MOEAs since then the search direction can be computed for free which we will address in Sect. 6. In the following we will consider modifications required to make the above continuation method gradient free which makes it applicable to a broader class of problems.

5.1 DDS continuation

A gradient free realization of the DS Continuation is mainly possible due to the idea in (32) and (33) and the observation made in Sect. 4, namely that a local search along \(x_0\) leads with probability one to a movement from \(F(x_0)\) along the Pareto front. In the following we describe the details about several tasks that have to be performed to realize the continuation and will then present the algorithm.

Task 1: steering the search (a) Predictor: in the DS method, a set of predictors is generated by computing a QR-factorization of the KKT weight \(\alpha \) at the given point \(x_0\). This approach can apparently not be chosen here since the computation of \(\alpha \) via (23) requires gradient information. Instead, we utilize the observation that \(J(x_0)\nu \) points along the Pareto front for almost all vectors \(\nu \in \mathbb {R}^n\): let \(\nu _1,\ldots ,\nu _{k-1}\in \mathbb {R}^n\) such that \(J(x_0)\nu _1,\ldots , J(x_0)\nu _{k-1}\) are linearly independent. Consider the QR-factorization

Then, \(\{q_1,\ldots ,q_{k-1}\}\) is such a desired orthonormal basis, i.e., one can e.g. set \(d_p = q_i\), \(i\in \{1,\ldots ,k-1\}\), as possible predictor direction. Also the KKT weight \(\alpha \) can be gained by this factorization: since \(q_k\) is orthogonal to each \(J(x_0)\nu _i\), \(i=1,\ldots ,k-1\), and either \(q_k < 0\) or \(q_k> 0\), it is

Hence, by choosing test points \(x_i\) in the neighborhood \(N(x_0)\) of \(x_0\) and setting \(\nu _i:= x_i-x_0\), one obtains a gradient free way to get both tangent vectors and KKT weights if \(J(x_0)\nu _i\) is approximated via (30). The cost for this is ideally given by k-1 evaluations of F, further evaluations may occur if \(J(x_0)\nu _1,\ldots , J(x_0)\nu _{k-1}\) are not linearly independent (the probability for this event, however, is zero if the \(x_i\)’s are chosen uniformly at random). For the special case \(k=2\) we assume w.l.o.g. that an improvement according to \(f_1\) is sought for (i.e., a ‘left up’ movement along the Pareto front). Then, compute \(x_1\in N(x_0)\) and evaluate \(F(x_1)\). The desired direction given in objective space is

if \(f_1(x_1)<f_1(x_0)\), else set \(d_p := -\tilde{d}\).

(b) Corrector: since the KKT weight \(\alpha \) of the previous Pareto point is known (see Eq. (40)), one can proceed as in the gradient based DS continuation method, i.e., to set \(d_c = -\alpha \). For \(k=2\), this can be expressed analytically: if \(d_p = (a,b)^T\), then the corrector direction is given by

Task 2: step size control (a) Predictor: the directional derivative used in the step size control (21) can be replaced by the finite difference leading to

where \(x_1\in N(x_0)\) and \(\nu := x_1-x_0\). Note that if \(f_i(x_1)\) is close to \(f_i(x_0)\), then the step size can get large. Hence, it is advisable to bound the overall step size by a maximal value \(t_{max}\).

In case Eq. (35) is used to compute the search direction \(\nu \) the above step size control cannot be used since for \(\nu \) there does not exist a function value in that direction (since it is only known that \(\nu \in span(\nu _1,\ldots ,\nu _r)\)). Instead, the step size in Eq. (21) can be chosen using the following estimation of the directional derivative: given \(x_0\) and directions \(\nu _i\), \(i=1,\ldots ,r\), and \(\nu = V(JV)^+d\), then

where the \(m_{j,i}\)’s are the entries of \(\mathcal {F}\).

(b) Corrector: for the corrector steps, we follow the suggestions made in [15].

Now we are in the position to explain the gradient free PC method. Starting from an (approximate) local solution \(x_i\), the next (approximate) solution is obtained as follows (compare to Algorithm 3). First, a predictor \(p_i\) is selected by performing a step in direction \(d_p\) that points along the linearized Pareto front at \(F(x_i)\) (lines 1–4). This point is only optimal if the Pareto set \(\mathcal {P}\) is not bended around \(x_i\) but still near to \(\mathcal {P}\) if the step size was chosen sufficiently small. Hence, in the second step, \(p_i\) is corrected back to the Pareto set (lines 5–8).

It is important to note that the continuation method has to be started with a (approximate) solution and is of local nature. That is, there is no guarantee that the method detects several connected components starting from one single solution, however, this is in certain cases possible since the movement is in fact performed along the boundary of the image which yields the possibility to reach other parts of the Pareto front. See Sect. 6.1 for such an example.

Note further that the overall cost of the search process can be reduced significantly by utilizing existing information. If, for instance, the predictor \(p_i\) is known and the corrector has to be performed via DDS, one can (among others) use the direction \(v_i = (x_i-p_i)/\Vert x_i-p_i\Vert \). Since the value \(F(x_i)\) of the previous candidate solution \(x_i\) is already known, the incorporation of \(\nu _i\) comes for free in the context of DDS. Similar for all other points in the neighborhood of \(p_i\) those images are known.

As a first example, consider the problem [27]:

where \(a^1 = (1,1)^T\) and \(a^2 = -a^1\). Figure 5 shows one result of the gradient free continuation method for \(\epsilon =0.08\). We started the search with \(x_0 = a^1\) and used the maximum norm with radius \(\delta =0.3\) as neighborhood. A total of 32 solutions (i.e., correctors) were obtained where 95 function evaluations were needed. Here, a satisfying approximation of the Pareto front was obtained for a reasonable amount of function calls spent.

Application of the DDS continuation method on MOP (45) for \(n=2\) and \(\epsilon =0.08\)

6 Numerical results

Here we present some numerical results of the DS as standalone algorithm and as local searcher within the state-of-the-art algorithm MOEA/D [3].

6.1 (D)DS continuation

First we briefly investigate the potential of the DS Continuation Method (DS-C) as standalone algorithm. For this, we first consider the three-objective problem DTLZ2 [35] for \(n=12\) those Pareto front is equal to the first quadrant of the unit sphere. Figure 6 shows the numerical results obtained by NBI as well as from a coupling of DS-C and the recover algorithm [27] in order to obtain a ‘global’ view on the part of the solution set that is already computed: first a partition of the domain is generated via (small) boxes. Then, for every newly generated candidate solution x we checked if the box that contains x is already associated to a solution found by DS-C. If so, we have discarded x. Else, we have added it to the archive and have associated this vector to the respective box. As stopping criterion for the corrector we have chosen \(\Vert x_{i+1}-x_i\Vert \le 1e-6\). For NBI, we have used the quasi-normal \(\hat{n} = (-1,-1,-1)^T\) as proposed in [11]. Apparently, NBI misses some regions of the front (the region which is outside the ‘\(\hat{n}\)-shadow’ of the convex hull of individual minima) which is not the case for the recover algorithm which captures all regions of the front. The crucial difference is that the recover algorithm determines the search direction from a given solution which allows in this case to find points at the boundary of the Pareto set. Given the strong relation of DS and NBI, the recover method can thus be seen as a possible remedy for the ‘\(\hat{n}\)-shadow problem’. Another possible remedy is e.g. proposed in [36]. We also compared the recover method that used DS (called R-DS) with its original variant that uses the continuation method proposed in [18] (R-H) on the same example. While the approximations qualities were the same—a Hausdorff distance of the image of the solution set to the Pareto front \(d_H=0.03423\) for R-DS and \(d_H=0.03545\) for R-H—this does not hold for the computational cost: R-DS used a budget of 3696 function and 935 Jacobian evaluation while R-H spent 4786 function, 4479 Jacobian, and 13,437 Hessian evaluations.

Despite its local nature, DS-C is not necessarily restricted to the connected components of the Pareto sets: recall that the search is performed along the boundary of the image which yields the possibility that along it further connected components of the Pareto front can be found. Figure 7 shows a numerical result of DDS-C on ZDT3 [37] for \(n=30\) starting with the minimizer of the first objective and using the radius \(\delta =0.1\) for the neighborhood search. By performing a movement along the boundary of the domain, all connected components of the Pareto front could be found. Shown is also the result of the Zigzag Method [24] (shifted in \(f_2\)-space for sake of a better comparison) which also incorporates a movement along the boundary of the domain. The approximation qualities obtained by both methods are very similar while the costs differ: DDS Continuation used 2036 function evaluations while the Zigzag Method needed 36,290 function evaluations plus another 36,290 Jacobian calls. Note that a budget of 2036 function evaluations is equivalent to about 70 Jacobian calls if the Jacobians are approximated by finite differences. Thus, better results by gradient based continuation-like methods can hardly be expected.

6.2 DS coupled with MOEA/D

MOEA/D employs a decomposition approach to convert the problem of approximating the Pareto front into a certain number of scalar optimization subproblems. There is a weight vector \(\lambda _i\) associated to each scalar subproblem i. From a practical point of view, this weight can be used to steer the local search over this direction. Algorithm 4 describes the coupling of DS (or DDS) and MOEA/D. The notation regarding MOEA/D procedures and parameters is taken from [3]. There exist more sophisticated approaches to control the application of the local search for this (for example [38–40]); most of them have been proposed for discrete domains and are valuable to explore for future work.

For the sake of comparison we have used the ZDT problems [35] and tested over the functions ZDT1 to ZDT4 (using the modifications presented in [41] in order to make them unconstrained and differentiable over the entire domain). The parameter setting used for this benchmark was: population \(\hbox {size} = 50\), neighborhood size \(T=10\), function evaluations for ZDT 1 to \(3 = 5000\), function evaluations for ZDT \(4 = 10,000\), initial step \(\hbox {size} = 1\), \(h_{ls}= 1\), \(k_{ls}= 20\), \(Start_{ls}= 20\), r for \(\hbox {DDS} = 7\), \(D_{ls}=3\), \(T_{ls}\) for ZDT 1 to \(3= 500\), \(T_{ls}\) for ZDT \(4= 1000\), where \(T_{ls}\) denotes the number of function evaluations after which the local search is not applied any more. Further, we have used the UF functions of the CEC09-competition problem suite [42]. The code for this specific version of MOEA/D was taken from [43]. The parameters were: population \(\hbox {size} = 120\), neighborhood size \(T=60\), function \(\hbox {evaluations} = 50,000\), replaced neighbors \(T_{r}=6\), initial step \(\hbox {size}=1\), \(D_{ls}=3\), F (for Differential \(\hbox {Evolution} (\hbox {DE})) = 0.5\), \(CR (\hbox {for DE}) = 1\), start of LS \(\hbox {application} =\hbox { generation}\) 10, r for \(\hbox {DDS} = 7\), \(h_{ls}= 1\), \(k_{ls}= 10\), \(Start_{ls}= 20\), \(T_{ls}=1500\). Different coding for the basis MOEA/D is justified from a practical point of view, since each particular algorithm is known to have the best performance for that corresponding benchmark. Since it is accepted that the Tchebycheff approach is the most efficient scalarization function for MOEA/D in terms of function evaluations, we have chosen this variant for comparison for both test suites. The performance indicators used were the averaged Hausdorff distance \(\Delta _2\) [44] and the Hypervolume indicator [45]. Table 2 compiles the average over 30 independent runs. We can observe that MOEA/D/DS and MOEA/D/DDS have the best values in most of the cases. Some plots of the output population are shown in Fig. 8.

7 Conclusions and future work

We have presented the DS method for unconstrained MOPs which allows to steer the search into any given direction d in objective space. Based on this idea we have presented a class of descent methods and a novel continuation procedure. Both methods are applicable with and without gradient information. For the latter, neighborhood information can be expoited which makes the DS an interesting candidate as local searcher within memetic algorithms. We have further on illustrated both standalone algorithms on some numerical examples and have shown the potential of DS within MOEA/D.

As a by-product we were able to explain the behavior of multi-objective stochastic local search using the DS approach. We could show that both movement toward and along the Pareto front—which are closely related to the terms spread and convergence—are inherent features in MOSLS which explains a facet of the huge success of global multi-objective stochastic search algorithms such as specialized evolutionary algorithms. We conjecture that this new insight might be interesting for the design of future memetic strategies.

For future work, we intend to adapt DS to constrained models which is not done yet but needs careful considerations. Further, the improvement of the hybrids of DS and global search methods will be an important task. In this paper, we have considered a general purpose MOEA. In order to tap the full potential of the DS, however, we will have to specialize on particular performance indicators. The reason for this is that such indicators implicitly transform the MOP into a scalar optimization problem, and hence, a greedy search direction for every point is well-defined, and this direction is even defined in objective space in case of Pareto front approximations. Hence, the DS comes as a natural choice. Finally, an application to many objective problems (i.e., \(k>3\)) by means of the hybrid MOEA would an interesting task which is, however, strongly related to the previous problem as the ’optimal’ distribution of the solutions along the Pareto set/front is not accepted yet.

Notes

If the rank of \(J:= J(x_0)\) is k (i.e., maximal) the pseudo inverse is given by \(J^+ = J^T(JJ^T)^{-1}\).

We note that the original idea of NBI is not to maximize the distance from \(F(x_0)\) for a given point \(x_0\), but this is a straightforward adaption to the current context.

References

Deb, K.: Multi-objective Optimization Using Evolutionary Algorithms. Wiley, Chichester (2001)

Beume, N., Naujoks, B., Emmerich, M.: SMS-EMOA: multiobjective selection based on dominated hypervolume. Eur. J. Oper. Res. 181(3), 1653–1669 (2007)

Zhang, Q., Li, H.: MOEA/D: a multi-objective evolutionary algorithm based on decomposition. IEEE Trans. Evol. Comput. 11(6), 712–731 (2007)

Knowles, J., Corne, D.: M-PAES: a memetic algorithm for multiobjective optimization. In: Proceedings of the IEEE Congress on Evolutionary Computation, pp. 325–332. Piscataway, NJ (2000)

Ishibuchi, H., Yoshida, T., Murata, T.: Balance between genetic search and local search in memetic algorithms for multiobjective permutation flowshop scheduling. IEEE Trans. Evol. Comput. 7(2), 204–223 (2003)

Lara, A., Sanchez, G., Coello, C.A.C., Schütze, O.: HCS: a new local search strategy for memetic multiobjective evolutionary algorithms. IEEE Trans. Evol. Comput. 14(1), 112–132 (2010)

Vasile, M., Zuiani, F.: Multi-agent collaborative search: an agent-based memetic multi-objective optimization algorithm applied to space trajectory design. Proc. Inst. Mech. Eng. G 225(11), 1211–1227 (2011)

Zuiani, F., Vasile, M.: Multi agent collaborative search based on tchebycheff decomposition. Comput. Optim. Appl. 56(1), 189–208 (2013)

Roy, B.: Problems and methods with multiple objective functions. Math. Program. 1, 239–266 (1971)

Gembicki, F.W., Haimes, Y.Y.: Approach to performance and multiobjective sensivity optimization: the goal attainment method. IEEE Trans. Autom. Control 20, 769–771 (1975)

Das, I., Dennis, J.: Normal-boundary intersection: a new method for generating the Pareto surface in nonlinear multicriteria optimization problems. SIAM J. Optim. 8, 631–657 (1998)

Potschka, A., Logist, F., Impe, J.F.V., Bock, H.G.: Tracing the Pareto frontier in bi-objective optimization problems by ODE techniques. Num. Algorithms 57(2), 217–233 (2011)

Bosman, P.A.N.: On gradients and hybrid evolutionary algorithms for real-valued multiobjective optimization. IEEE Trans. Evol. Comput. 16(1), 51–69 (2012)

Schütze, O., Lara, A., Coello, C.A.C.: The directed search method for unconstrained multi-objective optimization problems. In: Proceedings of the EVOLVE—a bridge between probability, set oriented numerics, and evolutionary computation, pp. 1–4 (2011)

Mejia, E., Schütze, O.: A predictor corrector method for the computation of boundary points of a multi-objective optimization problem. In: CCE 2010, pp. 395–399 (2010)

Lara, A., Alvarado, S., Salomon, S., Avigad, G., Coello, C.A.C., Schütze, O.: The gradient free directed search method as local search within multi-objective evolutionary algorithms. In: EVOLVE II, pp. 153–168 (2013)

Pareto, V.: Manual of Political Economy. The MacMillan Press, London (1971). Original edition in French in 1927

Hillermeier, C.: Nonlinear Multiobjective Optimization—A Generalized Homotopy Approach. Birkhäuser, Basel (2001)

Kuhn, H., Tucker, A.: Nonlinear programming. In: Neumann, J. (ed.) Proceeding of the 2nd Berkeley symposium on mathematical statistics and probability, pp. 481–492 (1951)

Nocedal, J., Wright, S.: Numerical Optimization. Springer Series in Operations Research and Financial Engineering. Springer, New York (2006)

Deuflhard, P., Borneman, F.: Scientific Computing with Ordinary Differential Equations. Texts in Applied Mathematics 42. Springer, New York (2002)

Allgower, E.L., Georg, K.: Numerical Continuation Methods. Springer, New York (1990)

Harada, K., Sakuma, J., Kobayashi, S., Ono, I.: Uniform sampling of local Pareto-optimal solution curves by Pareto path following and its applications in multi-objective GA. In: GECCO (2007)

Wang, H.: Zigzag search for continuous multiobjective optimization. Inf. J. Comput. 25(4), 654–665 (2013)

Schäffler, S., Schultz, R., Weinzierl, K.: A stochastic method for the solution of unconstrained vector optimization problems. J. Optim. Theory Appl. 114(1), 209–222 (2002)

Henderson, M.E.: Multiple parameter continuation: computing implicitly defined k-manifolds, I. J. Bifurc. Chaos 12(3), 451–476 (2003)

Schütze, O., Dell’Aere, A., Dellnitz, M.: On continuation methods for the numerical treatment of multi-objective optimization problems. In: Branke, J. et al. (ed.) Practical Approaches to Multi-objective Optimization, no. 04461 in Dagstuhl Seminar Proceedings (2005)

Boissonnat, J.-D., Ghosh, A.: Triangulating smooth submanifolds with light scaffolding. Math. Comput. Sci. 4(4), 431–461 (2010)

Brown, M., Smith, R.E.: Directed multi-objective optimisation. Int. J. Comput. Syst. Signals 6(1), 3–17 (2005)

Köppen, M., Yoshida, K.: Many-objective particle swarm optimization by gradual leader selection. In: ICANNGA 2007, pp. 323–331. Springer, Berlin (2007)

Schütze, O., Laumanns, M., Tantar, E., Coello, C.A.C., Talbi, E.-G.: Convergence of stochastic search algorithms to gap-free Pareto front approximations. In: GECCO-2007, pp. 892–901 (2007)

Ishibuchi, H., Murata, T.: Multi-objective genetic local search algorithm. In: Proceedings of 3rd IEEE International Conference on Evolutionary Computation, Nagoya, Japan, pp. 119–124 (1996)

Domínguez, I.S., Aguirre, A.H., Valdez, S.I.: A new EDA by a gradient-driven density. In: Parallel Problem Solving from Nature—PPSN XIII—13th International Conference, pp. 352–361 (2014)

Alvarado, S.: El punto a punto de las técnicas de búsquedas local para algoritmos de optimización multiobjetivo. MSc Thesis, Cinvestav-IPN (2012)

Deb, K., Thiele, L., Laumanns, M., Zitzler, E.: Scalable test problems for evolutionary multiobjective optimization. In: Abraham, A., Jain, L., Goldberg, R. (eds.) Evolutionary Multiobjective Optimization. Theoretical Advances and Applications, pp. 105–145. Springer, London (2005)

Motta, R., Silvana, S.A.M., Lyra, P.: A modified NBI and NC method for the solution of n-multiobjective optimization problems. Struct. Multidiscip. Optim. 46(2), 239–259 (2012)

Zitzler, E., Deb, K., Thiele, L.: Comparison of multiobjective evolutionary algorithms: empirical results. Evol. Comput. 8(2), 173–195 (2000)

Caponio, A., Neri, F.: Integrating Cross-Dominance Adaptation in Multi-objective Memetic Algorithms. Springer, Berlin (2009)

Lin, J.-Y., Chen, Y.-P.: Analysis on the collaboration between global search and local search in memetic computation. IEEE Trans. Evol. Comput. 15(5), 608–623 (2011)

Knowles, J., Corne, D.: Memetic algorithms for multiobjective optimization: issues, methods and prospects. Recent Advances in Memetic Algorithms, pp. 313–352. Springer, Berlin (2005)

Shukla, P.: On gradient based local search methods in unconstrained evolutionary multi-objective mptimization. In: Obayashi, S., et al. (eds.) EMO, pp. 96–110. Springer, Heidelberg (2007)

Zhang, Q., Zhou, A., Zhao, S., Suganthan, P. N., Liu, W., Tiwari, S.: Multi-objective optimisation test instances for the CEC 2009 special session and competition, Working Report CES-887, School of Computer Science and Electrical Engineering, University of Essex, revised on 20/04/2009 (2008)

Zhang, Q., Liu, W., Li, H.: The performance of a new version of MOEA/D on CEC09 unconstrained MOP test instances. In: IEEE Congress on Evolutionary Computation, 2009 (CEC’09), pp. 203–208. IEEE (2009)

Schütze, O., Esquivel, X., Lara, A., Coello, C.A.C.: Using the averaged Hausdorff distance as a performance measure in evolutionary multi-objective optimization. IEEE Trans. Evol. Comput. 16(4), 504–522 (2012)

Zitzler, E.: Evolutionary Algorithms for Multiobjective Optimization: Methods and Applications. Ph.D. Thesis, ETH Zurich, Switzerland (1999)

Acknowledgments

The first author acknowledges support from Conacyt Project No. 128554.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Schütze, O., Martín, A., Lara, A. et al. The directed search method for multi-objective memetic algorithms. Comput Optim Appl 63, 305–332 (2016). https://doi.org/10.1007/s10589-015-9774-0

Received:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10589-015-9774-0