Abstract

The update of the rigid body transformation in the iterative closest point (ICP) algorithm is considered. The ICP algorithm is used to solve surface registration problems where a rigid body transformation is to be found for fitting a set of data points to a given surface. Two regions for constraining the update of the rigid body transformation in its parameter space to make it reliable are introduced. One of these regions gives a monotone convergence with respect to the value of the mean square error and the other region gives an upper bound for this value. Point-to-plane distance minimization is then used to obtain the update of the transformation such that it satisfies the used constraint.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The surface registration problem is to find a rigid body transformation such that a set of data points fits in some sense to a given surface under the transformation. The problem occurs in many applications, for example in workpiece localization and shape verification of produced objects [7, 8, 15]. In shape verification, deviations between a produced object and its CAD-model, describing the ideal shape, is to be found. Before the true deviations can be found a surface registration problem has to be solved to align the representation of the measured shape to the CAD-model.

An algorithm that can be used to solve the surface registration problem is the iterative closest point (ICP) algorithm [3, 4, 11]. In general there are two common types of ICP algorithms. These two are based on point-to-point distance minimization [3], and point-to-plane distance minimization [4]. The planes are the tangent planes at the closest surface points to the data points. The convergence for some different registration algorithms are discussed in [9]. The algorithms based on the point-to-point distances minimization converges to a local minimum and the sequence of values of the objective function over the iterations is monotone decreasing. Unfortunately, the asymptotic convergence is linear and in general very slow. Minimization of point-to-plane distances using a Newton step allows flat regions to slide along each other and gives a quadratic asymptotic convergence which is much faster. Unfortunately, monotone convergence can not be ensured without any step size control method like for example line search, see e.g. [5], where a number of objective function evaluations are performed to find an appropriate step. If the objective function is expensive to evaluate, which is the case in surface registration problems, commonly used step size control methods will then also be expensive.

We are describing how a reliable update of the transformation can be obtained giving better possibilities of the convergence than using point-to-point distance minimization but still have the value of the mean square error under control. It is done by constraining the transformation in the parameter space to a well-defined region, which is derived from expressions of point-to-point distance minimization. Point-to-plane distance minimization is then used to obtain the update of the transformation such that it satisfies the used constraint.

2 The ICP algorithm

The surface registration problem is to find a rigid body transformation, consisting of a rotation matrix \(\mathbf {R} \in \{ \mathbb {R} ^{3 \times 3} \, | \, \mathbf {R}^{\mathrm {T}} \mathbf {R} = {\mathbf {I}} ,\det \left( {\mathbf {R}} \right) = + 1 \}\) and a translation vector \(\mathbf {t}\in \mathbb {R}^3\), such that a set of N given data points, \(\{ \mathbf {p}_i \}_{i=1}^N\), fits in some sense to a set of surface points, S, under the rigid body transformation. If least squares distance minimization is considered, the surface registration problem can be written as

where

is the objective function and

is the distance between an arbitrary point \(\mathbf {p}\) to the points in S.

The ICP algorithm can be used to solve (1). It operates in two principal stages in each iteration. These stages are; finding closest surface points to the data points, and finding a rigid body transformation such that the data points are fitted to the surface at the closest surface points. An initial rigid body transformation, \((\mathbf {R}^{(0)},\mathbf {t}^{(0)})\), is required and using \(\mathbf {R}^{(0)}=\mathbf {I}\) and \(\mathbf {t}^{(0)}=\mathbf {0}\) is appropriate if we do not know anything about the expected transformation. The ICP algorithm is presented by Algorithm 1, where \(\mathcal {C}\) denotes a closest surface point operator. That is \(\mathbf {y}_i^{(k-1)}=\mathcal {C}(\mathbf {p}_i^{(k-1)},S)\) is a closest point in S to a transformed data point \( \mathbf {p}_i^{(k-1)}=\mathbf {R}^{(k-1)}\mathbf {p}_i+\mathbf {t}^{(k-1)}\,. \) If the closest surface point is not unique \(\mathbf {y}_i^{(k-1)}\) can be arbitrarily chosen from the set of closest surface points \(\{ \mathbf {y} \in S \, | \, \Vert \mathbf {p}_i^{(k-1)} - \mathbf {y} \Vert _2 = \hbox {d}(\mathbf {p}_i^{(k-1)},S) \}\). Methods for finding the closest point on a surface to a given point are considered in e.g. [2, 14].

To make the notation simpler we will drop the superscript with the iteration index where it is convenient. If point-to-point distance minimization is used, see e.g. [3], no surface normals are required and the update of the transformation in Algorithm 1 is obtained by solving

which is a rigid body transformation problem considered in e.g. [1, 6, 12, 13]. The update \((\mathbf {R}^{\dagger },\mathbf {t}^{\dagger } )=( \mathbf {R}^{*},\mathbf {t}^{*} )\) is then obtained from

where \(\bar{\mathbf {p}}\) and \(\bar{\mathbf {y}}\) are the arithmetical mean values of \(\mathbf {p}_i\) and \(\mathbf {y}_i\).

The asymptotic convergence is linear using point-to-point distance minimization and in general very slow. That is why other methods for finding an appropriate updating rigid body transformation are needed.

3 A parametrization of the transformation

A rotation matrix \(\mathbf {R}\) can be parameterized as

where \(\mathbf {z}=[z_1,z_2,z_3,z_4,z_5,z_6]^{\mathrm {T}},\) and \(\mathrm {s}\) and \(\mathrm {c}\) with index 1, 2, 3 are abbreviations for \(\sin \) and \(\cos \) of angles \(z_1,z_2,z_3\) respectively. This parametrization of \(\mathbf {R}\) rotates points in \(\mathbb {R}^{3}\) through the angle \(z_1\) about the x-axis, through the angle \(z_2\) about the y-axis, and through the angle \(z_3\) about the z-axis. All rotation matrices in \(\mathbb {R}^{3 \times 3}\) can be represented by \(-\pi < z_1,z_3 \le \pi \) and \(-\pi /2 \le z_2 \le \pi /2\). A translation vector \(\mathbf {t}\) can be parameterized as

where \(z_4\), \(z_5\), and \(z_6\) are the translations in x, y, and z directions. Therefore, an arbitrary rigid body transformation \((\mathbf {R},\mathbf {t})\) can be parameterized by six parameters given by a vector \(\mathbf {z}\), where \(-\pi < z_1,z_3 \le \pi \) and \(-\pi /2 \le z_2 \le \pi /2\).

Let \(\varphi \) be an operator for obtaining the rigid body transformation \((\mathbf {R},\mathbf {t})=(\mathbf {R}(\mathbf {z}),\mathbf {t}(\mathbf {z}))\) from a given point \(\mathbf {z}\) in its parameter space and we write it as \( [\mathbf {R},\mathbf {t}]=\varphi (\mathbf {z}) . \) We also introduce an operator \(\vartheta \) for extracting a point \(\mathbf {z}\) in the parameter space from an arbitrary rigid body transformation \((\mathbf {R},\mathbf {t})\) such that

if \(-\pi < z_1,z_3 \le \pi \) and \(-\pi /2 < z_2 < \pi /2\).

4 Regions of reliable updates

Consider the minimization problem in (3) where a rigid body transformation \((\mathbf {R},\mathbf {t})\) is to be found. This problem is discussed in e.g. [1, 6, 12, 13]. We replace \(\mathbf {t}\) with a new independent vector \(\mathbf {u}\) by the change of variables \(\mathbf {t}=-\mathbf {R}\bar{\mathbf {p}}+\bar{\mathbf {y}}+\mathbf {u}\). The objective function in (3) in terms of \(\mathbf {R}\) and \(\mathbf {u}\) is

where

Since both the sum of all \((\mathbf {p}_i-\bar{\mathbf {p}})\) and the sum of all \((\mathbf {y}_i-\bar{\mathbf {y}})\) are equal to the zero vector their scalar product with \(\mathbf {u}\) vanish in (7). We define \(\tilde{p}\) and \(\tilde{y}\) such that

and we replace \(\mathbf {u}\) from the change of variables with \(\mathbf {t}+\mathbf {R}\bar{\mathbf {p}}-\bar{\mathbf {y}}\). The objective function in (3) can then be written as

Only the last two terms are dependent of \(\mathbf {R}\) and \(\mathbf {t}\). The rotation matrix in the rigid body transformation \(( \mathbf {R}^{*},\mathbf {t}^{*} )\) that gives the least value of (8) is usually found from a singular value decomposition of \(\mathbf {C}\), \(\mathbf {C}=\mathbf {U} {\Sigma } \mathbf {V}^{\mathrm {T}}\), from which we form \(\mathbf {R}^{*} = \mathbf {V}\mathbf {U}^{\mathrm {T}}\). It might happen that this produces a reflection matrix where \(\det (\mathbf {VU}^{\mathrm {T}})=-1\). In such case the rotation matrix that gives the least value of (8) is \(\mathbf {R}^{*} = \mathbf {V}\,\mathrm {diag}(1,1,-1)\,\mathbf {U}^{\mathrm {T}}\). Details about how to find \(\mathbf {R}^{*}\) are given in [1, 6, 12, 13]. Using \(\mathbf {R}^{*}\) the translation vector \(\mathbf {t}^{*}\) is obtained from \(\mathbf {t}^{*} = \bar{\mathbf {y}}-\mathbf {R}^{*}\bar{\mathbf {p}}\).

We have that \(\bar{\mathbf {p}}^{(k-1)}=\mathbf {R}^{(k-1)}\bar{\mathbf {p}}+\mathbf {t}^{(k-1)}\), where \(\bar{\mathbf {p}}^{(k-1)}\) is the arithmetical mean value of all transformed data points \(\mathbf {p}_i^{(k-1)}\) from iteration \(k-1\), which gives

Hence, the value of \(\tilde{p}\) do not change in the iterations \(k=1,2,\ldots \;\). However, the value of \(\tilde{y}\) changes in the iterations.

For given \(\bar{\mathbf {p}}\), \(\bar{\mathbf {y}}\), and \(\mathbf {C}\), we define a function \(g=g(\mathbf {z})\) as

where \(\mathbf {R}=\mathbf {R}(\mathbf {z})\) and \(\mathbf {t}=\mathbf {t}(\mathbf {z})\) given by (5) and (6). Note that it consists of the terms in (8) that depend on \(\mathbf {R}\) and \(\mathbf {t}\) but without the constant factor N. The rigid body transformation \(( \mathbf {R}^{*},\mathbf {t}^{*} )\) from (4) gives the minimum value of g. We write \(g^{*}=g(\mathbf {z}^{*})\), where \(\mathbf {z}^{*}=\vartheta (\mathbf {R}^{*}, \mathbf {t}^{*})\), and we write \(g^{\circ }=g(\mathbf {0})\) which is the value of g at the zero transformation.

Let \({\Omega }_{\alpha } \subset \mathbb {R}^6\) be a region such that

where \(\alpha \) is satisfying \(0<\alpha \le 1\). If \(\alpha = 1\) we have that \({\Omega }_{\alpha }=\{ \mathbf {z}^{*} \}\). According to the definition of \({\Omega }_{\alpha }\), its boundary \(\partial {\Omega }_{\alpha }\) is a level surface in the parameter space of the function g where \(g(\mathbf {z})=\alpha g^{*} + (1-\alpha )g^{\circ }\). If \(0<\alpha < 1\) and \(g^{*} < g^{\circ }\) tangent planes to \(\partial {\Omega }_{\alpha }\) have a normal given by the gradient \(\nabla g(\mathbf {z})\). Further, if \(\alpha '' \ge \alpha '\) we have \({\Omega }_{\alpha ''} \subseteq {\Omega }_{\alpha '}\).

Theorem 1

All types of ICP algorithms, as given by Algorithm 1, do always converge monotonic to a local minimum or saddle point of the mean square distance objective function f in (2) if the update of the transformation \((\mathbf {R}^{\dagger },\mathbf {t}^{\dagger })\) satisfies \(\vartheta (\mathbf {R}^{\dagger },\mathbf {t}^{\dagger }) \in {\Omega }_{\alpha }\), where \(0<\alpha \le 1\).

Proof

The convergence is ensured for \(\alpha =1\) according to the proof of convergence in [3] since in that case \((\mathbf {R}^{\dagger },\mathbf {t}^{\dagger })=( \mathbf {R}^{*},\mathbf {t}^{*} )\). It remains to proof convergence for \(0<\alpha <1\). For all quantities with index i we let \(i = 1,\ldots ,N\) without writing it explicitly. The parameter values of the update of the transformation is written as \(\mathbf {z}^{\dagger }\), i.e. \(\mathbf {z}^{\dagger }=\vartheta (\mathbf {R}^{\dagger },\mathbf {t}^{\dagger })\).

In iteration k the set of data points \(\{\mathbf {p}_i^{(k-1)}\}\) from iteration \(k-1\) is given and from these points the set of corresponding closest surface points \(\mathbf {y}_i^{(k-1)}=\mathcal {C}(\mathbf {p}_i^{(k-1)},S)\) are found. For these point pairs the value \(f_{k-1}\) of the objective function f is

A rigid body transformation \((\mathbf {R}^{\dagger },\mathbf {t}^{\dagger })\) satisfying \(\vartheta (\mathbf {R}^{\dagger },\mathbf {t}^{\dagger }) \in {\Omega }_{\alpha }\) is applied to \(\mathbf {p}_i^{(k-1)}\) giving the update of the data points, \(\mathbf {p}_i^{(k)}=\mathbf {R}^{\dagger } \mathbf {p}_i^{(k-1)}+\mathbf {t}^{\dagger }\). For these point pairs the mean squared distance is

Since \(f_{k-1} = \tilde{p}+\tilde{y}+g^{\circ }\) and \(g(\mathbf {z}^{\dagger }) \le \alpha g^{*} + (1-\alpha )g^{\circ } \le g^{\circ }\) is fulfilled we have that \(e_{k} \le f_{k-1}\). The equality holds if and only if \(g^{*}=g^{\circ }\) since \(g(\mathbf {z}^{\dagger })=g^{\circ }\) if and only if \(g^{*}=g^{\circ }\).

In the next iteration, i.e. iteration \(k+1\), the closest surface points \(\mathbf {y}_i^{(k)}=\mathcal {C}(\mathbf {p}_i^{(k)},S)\) to the data points \(\mathbf {p}_i^{(k)}\) are computed. For these point pairs the value \(f_{k}\) of the objective function f is

The points \(\mathbf {y}_i^{(k)}\) are the closest surface points to \(\mathbf {p}_i^{(k)}\) so it is clear that

holds for all i and therefore, \(f_{k} \le e_{k}\). The equality is satisfied if and only if \(\Vert \mathbf {p}_i^{(k)} - \mathbf {y}_i^{(k-1)} \Vert _2=\hbox {d}(\mathbf {p}_i^{(k)},S)\) for all i, i.e. the points \(\mathbf {y}_i^{(k-1)}\) are closest surface points to the data points \(\mathbf {p}_i^{(k)}\).

To summarize we can conclude that

is fulfilled. The lower bound is zero since a sum of squared distances cannot be negative. It gives that the sequences of \(f_{k}\) and \(e_{k}\) are monotone decreasing and bounded below. Then they will converge to a real value, which is the same value for both sequences. Since \(0<\alpha \le 1\) the value of \(g^{\circ }\) will approach to the value of \(g^{*}\) and hence, the function g will eventually be minimized. The sequence of \(e_{k}\) will therefore converge to the least value of (8). Suppose that the sequence of \(f_{k}\) will converge to a value that is larger than the value of the objective function f at the local extremum. Then this would contradict the operation of the closest point operator \(\mathcal {C}\).

We can do the conclusion that all types of ICP algorithms, as given by Algorithm 1, do always converge monotonic to a local minimum or saddle point of the mean square distance objective function f in (2) if the update of the transformation \((\mathbf {R}^{\dagger },\mathbf {t}^{\dagger })\) satisfies \(\vartheta (\mathbf {R}^{\dagger },\mathbf {t}^{\dagger }) \in {\Omega }_{\alpha }\). \(\square \)

Theorem 1 is similar to the convergence theorem given in [3] but it results in better possibilities to find good updates than using the ICP algorithm based on point-to-point distance minimization, (4), which results in a slow linear convergence, see e.g. [9]. The described method to constrain the update \((\mathbf {R}^{\dagger },\mathbf {t}^{\dagger })\) such that \(\vartheta (\mathbf {R}^{\dagger },\mathbf {t}^{\dagger }) \in {\Omega }_{\alpha }\) results in better possibilities to achieve faster convergence. If \(\alpha \) is close to 1 the convergence of the ICP algorithm will behave just like when using point-to-point distance minimization (3). If \(\alpha \) is close to 0 the updating is more aggressive but we will still have monotone convergence of the value of the objective function.

An alternative to using the region \({\Omega }_{\alpha }\) is to consider several iterations. We introduce the function

where \(\tilde{y}\), \(\bar{\mathbf {p}}\), \(\bar{\mathbf {y}}\), and \(\mathbf {C}\) in iteration k are given, and \(\mathbf {R}=\mathbf {R}(\mathbf {z})\) and \(\mathbf {t}=\mathbf {t}(\mathbf {z})\). The value of \(h_{1}(\mathbf {0})\) is denoted \(h^{\circ }\) and in each iteration k we let \(h_{k}^{*}=h_{k}(\mathbf {z}^{*})\). Let \({\Phi }_{\beta } \subset \mathbb {R}^6\) be a region such that

where \(\beta \) is satisfying \(0 \le \beta \le 1\). If \(\beta = 1\) we have that \({\Phi }_{\beta }=\{ \mathbf {z}^{*} \}\). According to the definition of \({\Phi }_{\beta }\), its boundary \(\partial {\Phi }_{\beta }\) is a level surface in the parameter space of the function \(h_{k}\) where \(h_{k}(\mathbf {z})=\beta h_{k}^{*} + (1-\beta )h^{\circ }\). If \(0 \le \beta < 1\) and \(h_{k}^{*} < h^{\circ }\) tangent planes to \(\partial {\Phi }_{\beta }\) have a normal given by the gradient \(\nabla h_{k}(\mathbf {z})\). Further, if \(\beta '' \ge \beta '\) in iteration k we have \({\Phi }_{\beta ''} \subseteq {\Phi }_{\beta '}\). The only thing that differs between the functions g and \(h_{k}\) is \(\tilde{y}\), which is constant with respect to \(\mathbf {z}\) so that \(\nabla h_{k}(\mathbf {z})=\nabla g(\mathbf {z})\).

Theorem 2

For all types of ICP algorithms, as given by Algorithm 1, the value \(f_{k}\) of the mean square distance objective function f in iteration k is bounded above according to

if the update of the transformation \((\mathbf {R}^{\dagger },\mathbf {t}^{\dagger })\) satisfies \(\vartheta (\mathbf {R}^{\dagger },\mathbf {t}^{\dagger }) \in {\Phi }_{\beta }\), where \(0 \le \beta \le 1\).

Proof

For all quantities with index i we let \(i = 1,\ldots ,N\) without writing it explicitly. The set of data points \(\{\mathbf {p}_i^{(k-1)}\}\) from iteration \(k-1\) is given.

By induction we will first prove that if \(\vartheta (\mathbf {R}^{\dagger },\mathbf {t}^{\dagger }) \in {\Phi }_{0}\), i.e. \(\beta =0\), then \({\Phi }_{0}\) will not be empty in any iteration and we have that

where \(\mathbf {y}_i^{(k-1)}=\mathcal {C}(\mathbf {p}_i^{(k-1)},S)\), in all iterations \(k = 1,2,\ldots \;\).

In the first iteration compute the set of closest surface points \(\mathbf {y}_i^{(0)}=\mathcal {C}(\mathbf {p}_i^{(0)},S)\). Since \(h^{\circ }=h_{1}(\mathbf {0}) \ge h_{1}(\mathbf {z}^{*})\) \({\Phi }_{0}\) is not empty there must exist a transformation \((\mathbf {R}^{\dagger },\mathbf {t}^{\dagger })\) satisfying \(\vartheta (\mathbf {R}^{\dagger },\mathbf {t}^{\dagger }) \in {\Phi }_{0}\). The rigid body transformation \((\mathbf {R}^{\dagger },\mathbf {t}^{\dagger })\) is applied to \(\mathbf {p}_i^{(0)}\) giving the update of the data points, \(\mathbf {p}_i^{(1)}=\mathbf {R}^{\dagger } \mathbf {p}_i^{(0)}+\mathbf {t}^{\dagger }\), and the mean squared distance is

The last equality follows directly from the definition of \(h_{k}\). Since \(h_{1}(\mathbf {z}^{\dagger }) \le h^{\circ }\) is fulfilled we have

Thus, the inequality (9) is satisfied in iteration \(k=1\).

Our induction hypothesis is that (9) is true in iteration \(k=\kappa \). That is, we assume that

is fulfilled. Using this hypothesis we will show that (9) is true in iteration \(k=\kappa +1\).

In iteration \(k=\kappa +1\) compute the set of closest surface points, \(\mathbf {y}_i^{(\kappa )}=\mathcal {C}(\mathbf {p}_i^{(\kappa )},S)\). The value \(f_{\kappa }\) of the objective function f is

The last equality follows from (8) and the definition of \(h_{k}\). Since \(\Vert \mathbf {p}_i^{(\kappa )} - \mathbf {y}_i^{(\kappa )} \Vert _2 \le \Vert \mathbf {p}_i^{(\kappa )} - \mathbf {y}_i^{(\kappa -1)} \Vert _2\) for all i we have \(f_{\kappa } \le e_{\kappa }\) resulting in

From the relation above it follows that \(h_{\kappa +1}(\mathbf {0}) \le h^{\circ }\) so \({\Phi }_{0}\) can not be empty and there must exist a least function value \(h_{\kappa +1}^{*} \le h^{\circ }\). The rigid body transformation \((\mathbf {R}^{\dagger },\mathbf {t}^{\dagger })\) satisfying \(\vartheta (\mathbf {R}^{\dagger },\mathbf {t}^{\dagger }) \in {\Phi }_{0}\) is applied to \(\mathbf {p}_i^{(\kappa )}\) giving the update of the data points, \(\mathbf {p}_i^{(\kappa +1)}=\mathbf {R}^{\dagger } \mathbf {p}_i^{(\kappa )}+\mathbf {t}^{\dagger }\), and the mean squared distance is

It follows directly from the definition of the function \(h_{k}\) that

Since \(h_{\kappa +1}(\mathbf {z}^{\dagger }) \le h^{\circ }\) we have

By mathematical induction we have proved that \({\Phi }_{0}\) is not empty in any iteration and that (9) is satisfied for all iterations \(k = 1,2,\ldots \;\).

Now we know that \({\Phi }_{0}\) is not empty in iteration k when using the update of the rigid body transformations \((\mathbf {R}^{\dagger },\mathbf {t}^{\dagger })\) such that \(\vartheta (\mathbf {R}^{\dagger },\mathbf {t}^{\dagger }) \in {\Phi }_{0}\). Therefore, there will always exist a point in \({\Phi }_{0}\). The value of \(h_{k}\) on the boundary of \({\Phi }_{0}\) is \(h^{\circ }\). The function \(h_{k}\) is continuous so there will always exist a least function value \(h_{k}^{*}=h_{k}(\mathbf {z}^{*}) \le h^{\circ }\). Let us now consider the case where \(\beta \in [0,1]\) and the updating transformation satisfy \(\vartheta (\mathbf {R}^{\dagger },\mathbf {t}^{\dagger }) \in {\Phi }_{\beta }\). Since \({\Phi }_{0}\) is not empty in any iteration where \(\vartheta (\mathbf {R}^{\dagger },\mathbf {t}^{\dagger })\) can be chosen arbitrarily in \({\Phi }_{0}\) the region \({\Phi }_{\beta }\) can not be empty in any iteration \(k=1,2,\ldots \) either. We can do that conclusion since if \(\vartheta (\mathbf {R}^{\dagger },\mathbf {t}^{\dagger })\) can be chosen arbitrarily in \({\Phi }_{0}\), it can also be chosen such that it satisfies \(\vartheta (\mathbf {R}^{\dagger },\mathbf {t}^{\dagger }) \in {\Phi }_{\beta }\). We have that \({\Phi }_{\beta } \subseteq {\Phi }_{0}\) and \(\vartheta ( \mathbf {R}^{*},\mathbf {t}^{*})\in {\Phi }_{\beta }\) for all \(\beta \in [0,1]\) and all iterations \(k = 1,2,\ldots \). Therefore, the region \({\Phi }_{\beta }\) can not be empty in any iteration \(k=1,2,\ldots \) either.

In an arbitrary iteration k, compute the set of closest surface points, \(\mathbf {y}_i^{(k-1)}=\mathcal {C}(\mathbf {p}_i^{(k-1)},S)\). The rigid body transformation \((\mathbf {R}^{\dagger },\mathbf {t}^{\dagger })\) satisfying \(\vartheta (\mathbf {R}^{\dagger },\mathbf {t}^{\dagger }) \in {\Phi }_{\beta }\), \(\beta \in [0,1]\), is applied to \(\mathbf {p}_i^{(k-1)}\) giving the update of the data points, \(\mathbf {p}_i^{(k)}=\mathbf {R}^{ \dagger }\mathbf {p}_i^{(k-1)}+\mathbf {t}^{\dagger }\), and the mean squared distance is

The last equality follows from the definition of \(h_{k}\). Since \(h_{k}(\mathbf {z}^{\dagger }) \le \beta h_{k}^{*} + (1-\beta )h^{\circ }\) we have

The closest surface points \(\mathbf {y}_i^{(k)}=\mathcal {C}(\mathbf {p}_i^{(k)},S)\) to the updated data points \(\mathbf {p}_i^{(k)}\) are found and for these point pairs the value \(f_{k}\) of the objective function f is

Since \(\Vert \mathbf {p}_i^{(k)} - \mathbf {y}_i^{(k)} \Vert _2 \le \Vert \mathbf {p}_i^{(k)} - \mathbf {y}_i^{(k-1)} \Vert _2\) holds for all i we have \(f_{k} \le e_{k}\) resulting in

We know that \(h_{k}^{*} \le h^{\circ }\), so \(\beta h_{k}^{*} + (1-\beta )h^{\circ } \le h^{\circ }\) and from (10) we get

Since (10) and (11) holds for all \(\beta \in [0,1]\) in an arbitrary iteration k for all \((\mathbf {R}^{\dagger },\mathbf {t}^{\dagger })\) satisfying \(\vartheta (\mathbf {R}^{\dagger },\mathbf {t}^{\dagger }) \in {\Phi }_{\beta }\) the statements in the theorem are true. \(\square \)

The conclusion we can do from Theorem 2 is that if the updating transformation \((\mathbf {R}^{\dagger },\mathbf {t}^{\dagger })\) satisfy \(\vartheta (\mathbf {R}^{\dagger },\mathbf {t}^{\dagger }) \in {\Phi }_{\beta }\), then the value of the objective function (2) is bounded above by \(h^{\circ }\).

Schematic illustrations of the regions \({\Omega }_{\alpha }\) and \({\Phi }_{\beta }\) are shown in Fig. 1. The advantage of using the region \({\Omega }_{\alpha }\) is that monotone convergence of the mean square error can always be ensured without any expensive line search method. Unfortunately there is a risk that the region \({\Omega }_{\alpha }\) will be quite small after some iterations and the possibilities in finding good values of \((\mathbf {R}^{\dagger },\mathbf {t}^{\dagger })\) are limited to the neighborhood of \(\mathbf {z}^{*}=\vartheta (\mathbf {R}^{*},\mathbf {t}^{*})\). Hence, using an updating transformation in \({\Omega }_{\alpha }\) might be quite pessimistic. The advantage of using the region \({\Phi }_{\beta }\) is that it gives much better possibilities to find good values of \((\mathbf {R}^{\dagger },\mathbf {t}^{\dagger })\) than \({\Omega }_{\alpha }\) does. This method is more aggressive but monotone convergence of the mean square error can not be ensured. The values of \(\tilde{y}\) must also be computed in the iterations.

5 Trust region step

Let \(v(\mathbf {z})\) be a function of point-to-plane distances in iteration k as

The planes with unit normal vector \(\mathbf {n}_i\) are tangent planes of the surface at the surface points \(\mathbf {y}_i^{(k-1)}\). We assume that our surface is \({\mathbf {G}}^1\)-continuous, i.e. tangentially continuous, so a surface normal exists for each point in S.

The update of the transformation can be found by minimize a quadratic approximation of \(v(\mathbf {z})\) such that \(\mathbf {z} \in {\Omega }_{\alpha }\) or \(\mathbf {z} \in {\Phi }_{\beta }\). That is, the update of the transformation can be obtained from

where \(\mathbf {a}\) is the gradient and \(\mathbf {H}\) is the Hessian of the function \(v(\mathbf {z})\) at \(\mathbf {z}=\mathbf {0}\).

The constrained minimization problem in (13) does not have to be solved with some high accuracy. There is no need to find a very accurate solution since it will just give an update of the parameters in an iterative process. Computations of \({\Omega }_{\alpha }\) or \({\Phi }_{\beta }\) can be done at a very low cost when \(\bar{\mathbf {p}}\), \(\bar{\mathbf {y}}\), \(\mathbf {C}\), and for \({\Phi }_{\beta }\) also \(\tilde{y}\), are given. The computation of these quantities is the principal additional computational cost when using the update from (13) instead of using a pure Newton-type iteration, where also an additional line search process must be performed to ensure convergence. The number of floating point operations to obtain \(\bar{\mathbf {p}}\), \(\bar{\mathbf {y}}\), \(\mathbf {C}\), and \(\tilde{y}\) is of order N, but this cost is small in comparison to the cost of finding closest surface points and obtaining the Hessian and the gradient. When solving the minimization problem in (13) having all necessary quantities there is no need to use the N data points nor the N associated closest surface points and surface normals.

6 Numerical experiment

A numerical experiment is presented in order to test the performance of the discussed methods for updating the rigid body transformation in the ICP-algorithm according to (13).

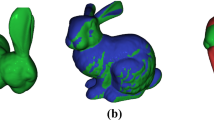

Six different \(\mathbf G ^1\)-continuous surfaces are used in the experiment, which are shown in Fig. 2. The first four surfaces might seem to have sharp edges and corners but they really are \(\mathbf G ^1\)-continuous so for each point on these surfaces there exists a surface normal vector. The last two surfaces are kinematic surfaces [10] which are invariant under some rigid body transformations. We are sampling \(1000000\) points from each of these six surfaces. In addition to the sampled surface points a local quadratic surface approximation is also used at each of these sampled points to represent the surface as the set of points S. We are also sampling a set of \(1000\) data points \({\mathbf {p}_i}\) from each surface and to these data points we are adding a noise from a normal distribution. The data points are then transformed by a rigid body transformation giving them a specific initial position and orientation. The sets of data points are also shown in Fig. 2. The problem is then to find a rigid body transformation such that the data points are fitted to the surface points in S, in least square sense, under the transformation.

We are using 30 iterations of the ICP-algorithm with the discussed methods for updating the transformation. Two different values, 0.1 and 0.9, of both \(\alpha \) and \(\beta \) are used. The difference between the observed values \(f_k\) of the objective function f given by (2) and a pre-computed absolute least value \(\hat{f}\) of f, is presented in Fig. 3 for all 30 iterations. Point-to-point distance minimization given by (4) is used as a comparison to the methods of \({\Omega }_{\alpha }\) and \({\Phi }_{\beta }\), where Newton’s method and point-to-plane distance minimization are utilized together with the step size control in (13).

Differences between observed values \(f_k\) of the objective function f, given by (2), in iteration \(k=0,1,\ldots ,30\) of the ICP-algorithm, given by Algorithm 1, and a computed least value \(\hat{f}\) of f using the surface a rounded tetrahedron, b rounded cube, c rounded cuboid, d rounded cuboid, e torus, f sphere. The function values marked “Point-to-point” are obtained by update the transformation according to (4). The function values marked “\({\Omega }_{\alpha }\)” and the function values marked “\({\Phi }_{\beta }\)” are obtained by update the transformation according to (13). Observations where \(f_k-\hat{f}=0\) are removed because of the logarithmic scale

The search for the closest point in S to an arbitrary data point, written as the operator \(\mathcal {C}\) in Algorithm 1, is done by first finding the closest point among all the \(1000000\) sampled surface points in S. The corresponding local quadratic surface approximation is then used to find an even better closest point. Required surface normals are obtained from the local surface approximations. When using an update given by (13) a minimization problem has to be solved. As a starting point we are using \(\mathbf {z}^{*}=\vartheta (\mathbf {R}^{*},\mathbf {t}^{*})\) which is known to be feasible. The constrained minimization problem in (13) is solved approximately by using a barrier method with a reciprocal barrier function. The computational time to solve this problem is about 20 microseconds which is far less than the computational time for the handling of all computations of the data points.

7 Conclusions

In Fig. 3 we can see that the constraining methods using \({\Omega }_{\alpha }\) and \({\Phi }_{\beta }\) works pretty well in our numerical experiment. It is clear that these methods give much faster convergence than minimizing point-to-point distances. The combination of using Newton’s method to minimize point-to-plane distances and the constraints of \({\Omega }_{\alpha }\) or \({\Phi }_{\beta }\) seems to be really successful when solving surface registration problems where a set of data points is to be fitted to a surface with known surface normals.

The constraint of \({\Phi }_{\beta }\) was sufficient when utilizing the Newton step \(\varvec{\delta }\) to find good updates of the transformation giving a fast convergence, except in the fourth test, Fig. 3d, where the convergence fails initially for \({\Phi }_{0.1}\). In the fifth test, Fig. 3e, the method of \({\Phi }_{0.1}\) shows a strange behaviour after convergence but we should have in mind that our set of surface points S is just sampled together with a quadratic surface approximation which result in a non-exact surface representation. A conclusion is that it might be a good idea to use \({\Omega }_{\alpha }\) or \({\Phi }_{\beta }\) to constrain the Newton step in some initial iterations before an undamped Newton method can be used.

We can also see in Fig. 3 that the observed convergence using \({\Omega }_{\alpha }\) are much slower than the observed convergence using \({\Phi }_{\beta }\). That is because the region \({\Omega }_{\alpha }\) is more constrained than the region \({\Phi }_{\beta }\), which provides less possibilities in finding good updating transformations. The more aggressive choice of \(\alpha \) in \({\Omega }_{\alpha }\), that is having \(\alpha =0.1\,\), gives better observed convergence for all six problems than using the more defensive value \(\alpha =0.9\,\). The same holds also for \({\Phi }_{\beta }\) in most cases.

References

Arun, K.S., Huang, T.S., Blostein, S.D.: Least-squares fitting of two 3-d point sets. IEEE Trans. Pattern Anal. Mach. Intell. 9(5), 698–700 (1987)

Bergström, P., Edlund, O., Söderkvist, I.: Repeated surface registration for on-line use. Int. J. Adv. Manuf. Technol. 54, 677–689 (2011). doi:10.1007/s00170-010-2950-6

Besl, P.J., McKay, N.D.: A method for registration of 3-d shapes. IEEE Trans. Pattern Anal. Mach. Intell. 14(2), 239–256 (1992). doi:10.1109/34.121791

Chen, Y., Medioni, G.: Object modeling by registration of multiple range images. Image Vis. Comput. 10(3), 145–155 (1992). doi:10.1016/0262-8856(92)90066-C

Fletcher, R.: Practical Methods of Optimization, 2nd edn. Wiley, New York (1987)

Hanson, R.J., Norris, M.J.: Analysis of measurements based on the singular value decomposition. SIAM J. Sci. Stat. Comput. 2(3), 363–373. doi:10.1137/0902029. http://link.aip.org/link/?SCE/2/363/1 (1981)

Li, Y., Gu, P.: Free-form surface inspection techniques state of the art review. Comput. Aided Des. 36(13), 1395–1417. doi:10.1016/j.cad.2004.02.009. http://www.sciencedirect.com/science/article/pii/S0010448504000430 (2004)

Li, Z., Gou, J., Chu, Y.: Geometric algorithms for workpiece localization. IEEE Trans. Robot. Autom. 14(6), 864–878 (1998). doi:10.1109/70.736771

Pottmann, H., Huang, Q.X., Yang, Y.L., Hu, S.M.: Geometry and convergence analysis of algorithms for registration of 3d shapes. Int. J. Comput. Vision 67(3), 277–296. doi:10.1007/s11263-006-5167-2. (2006)

Pottmann, H., Wallner, J.: Computational Line Geometry. Springer, Berlin (2010)

Rusinkiewicz, S., Levoy, M.: Efficient variants of the icp algorithm. In: Proceedings of the Third International Conference on 3D Digital Imaging and Modeling, pp. 145–152. http://citeseer.ist.psu.edu/rusinkiewicz01efficient.html (2001)

Söderkvist, I.: Perturbation analysis of the orthogonal procrustes problem. BIT 33, 687–694 (1993)

Söderkvist, I., Wedin, P.Å.: Determining the movements of the skeleton using well-configured markers. J. Biomech. 26(12), 1473–1477 (1993)

Sproull, R.F.: Refinements to nearest-neighbor searching in k-dimensional trees. Algorithmica 6(1), 579–589 (1991). doi:10.1007/BF01759061

Zhu, L., Barhak, J., Srivatsan, V., Katz, R.: Efficient registration for precision inspection of free-form surfaces. Int. J. Adv. Manuf. Technol. 32, 505–515 (2007). doi:10.1007/s00170-005-0370-9

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Bergström, P. Reliable updates of the transformation in the iterative closest point algorithm. Comput Optim Appl 63, 543–557 (2016). https://doi.org/10.1007/s10589-015-9771-3

Received:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10589-015-9771-3