Abstract

In recent years, serverless computing has received significant attention due to its innovative approach to cloud computing. In this novel approach, a new payment model is presented, and a microservice architecture is implemented to convert applications into functions. These characteristics make it an appropriate choice for topics related to the Internet of Things (IoT) devices at the network’s edge because they constantly suffer from a lack of resources, and the topic of optimal use of resources is significant for them. Scheduling algorithms are used in serverless computing to allocate resources, which is a mechanism for optimizing resource utilization. This process can be challenging due to a number of factors, including dynamic behavior, heterogeneous resources, workloads that vary in volume, and variations in number of requests. Therefore, these factors have caused the presentation of algorithms with different scheduling approaches in the literature. Despite many related serverless computing studies in the literature, to the best of the author’s knowledge, no systematic, comprehensive, and detailed survey has been published that focuses on scheduling algorithms in serverless computing. In this paper, we propose a survey on scheduling approaches in serverless computing across different computing environments, including cloud computing, edge computing, and fog computing, that are presented in a classical taxonomy. The proposed taxonomy is classified into six main approaches: Energy-aware, Data-aware, Deadline-aware, Package-aware, Resource-aware, and Hybrid. After that, open issues and inadequately investigated or new research challenges are discussed, and the survey is concluded.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Cloud computing has gained significant attention in recent years as an innovative and compelling method of deploying cloud applications. Serverless computing has been employed as a result of the recent evolution of enterprise application architectures into microservice-based architectures. Furthermore, with the help of this technology, developers will have access to a simplified programming model, simplifying the process of creating cloud applications and eliminating most—if not all—of the operational concerns associated with infrastructure configuration. Cloud-native code that responds to events can be deployed rapidly [1, 2]. The function being executed by serverless computing must be stateless and idempotent, which means that it may be re-executed without causing any harm if it fails. Therefore, the discussion of the system’s design is now shifting towards strategies for managing containers and developing software to maximize the system’s performance based on a function-centric infrastructure [3, 4]. Cloud providers can utilize serverless computing to manage the entire development process and reduce operational costs by optimizing and managing cloud resources efficiently from the cloud provider’s perspective [5, 6]. Serverless Computing enables application developers to break up large applications into smaller components, allowing these components to be scaled individually. However, this poses a new problem: managing many functions coherently and allocating resources for them [7, 8]. Depending on the required functionality, serverless architectures can be used interchangeably with traditional architectures. The decision to use serverless technology will likely depend on other non-functional considerations, including how operations are carried out, the cost, and the application workload characteristics [9, 10]. Serverless performance management practices can result in various issues, including inconsistent and inaccurate limitations, inefficient resource allocation, inadequate runtimes, mid-chain function drops, concurrency collapses, and undocumented function priorities from current practices. Therefore, to alleviate these problems and create an efficient resource management system, it is necessary to use a resource allocation scheduler suitable for serverless and chained functions. The scheduler is one of the main components of the system resources management, allowing its performance to be compared across the serverless resources management service providers. One purpose of this feature is to assist the user in selecting the appropriate scheduler for the environment in which it is an implementation system. As a result, identifying the purpose and environment of the scheduling implementation is crucial to ensure that it accomplishes its objectives in a system [11].

1.1 Research motivation and challenges

Serverless computing systems can utilize different schedules based on the type of workload and implementation objectives. Depending on the context and application, a scheduler can deliver the best performance, while if it is used in another context and application, it may deliver the worst results. Thus, selecting a specific scheduler based on the goals of each environment and program is more efficient than choosing a variety of schedulers. Due to the emerging nature of serverless technology, using of this will inevitably face many challenges, particularly when allocating resources. In contrast, to implement a successful and efficient resource allocation process, it is necessary to determine an appropriate schedule to ensure that resources are not used inefficiently during the implementation process. In the field of IoT, serverless computing has emerged as one of the most promising research areas [12]. In light of this, there is no doubt that selecting a suitable and efficient scheduler in serverless computing is a good fit for IoT applications, especially when it intersects with the discussion of edge computing and fog computing infrastructures [13]. Therefore, issues using a suitable scheduler to carry out the resource-allocating process effectively and as efficiently as possible to achieve maximum efficiency in this technology is critical. However, there has been little research conducted in the area of providing schedulers in serverless environments. However, authors have attempted to provide appropriate schedulers with the intended goals; they propose appropriate solutions for existing challenges. Despite the importance of this topic, to our knowledge, no comprehensive and detailed study has been explicitly published regarding the use of appropriate schedules in the context of serverless computing.

1.2 Our contribution

This research will systematically review existing studies for scheduling in the serverless computing field and evaluate various scheduling techniques to provide a comprehensive and systematic assessment of the mechanisms for selecting and implementing schedulers based on their characteristics and implementation. Several main contributions are presented in this review, which can be summarized as follows:

-

Reviewing published articles on serverless computing related to the scheduling approach provides insights into each study’s scheduling methodologies and strategies.

-

We are analyzing and evaluating the latest scheduling approaches and techniques in serverless computing.

-

We are examining current approaches and developing a classification based on these findings.

-

We are discussing any underexplored or underserved future research challenges that could be addressed to improve scheduling techniques for serverless computing environments.

1.3 Organization of the paper

This paper is organized as follows: Sect. 2 presents background on serverless computing scheduling and various features of scheduling applications; an overview of some related survey articles is presented in Sect. 3, along with a comparison of these articles. The research method used in this study is described in Sect. 4; Sect. 5 concludes with a classification of the techniques discussed, a summary description of the plans, and a comparison of these techniques. In Sect. 6, some discussions and comparisons are also presented. There are some open issues outlined in Sect. 7 that need to be addressed in the future. Finally, in Sect. 8 the conclusion is presented.

2 Background

In this section, we provide a brief overview of serverless computing and the scheduling process and will explain the most important parameters involved in the scheduling process.

2.1 Overview of serverless computing

The serverless computing model enables cloud providers to provide infrastructure management for customer resources based on their needs. Serverless applications still require servers, but developers are no longer required to manage them. Thus, serverless enables developers to concentrate on developing serverless applications by implementing auto-scaling based on resource consumption [14]. Hence, Providers of cloud services that advocate using function-as-a-service (FaaS) in an organization should be capable of assisting groups seeking to utilize FaaS. It is possible to execute small, modular fragments using a serverless architecture and a function-based model with the help of FaaS. So, Software developers can use these services to perform client-required functions. The serverless architecture eliminates the need for developers to maintain dedicated servers since applications are only activated when used, eliminating the need to support dedicated servers [15]. So, at the end of the function execution, once the function has been completed successfully, it can be terminated quickly to free up the same amount of computing resources for other functions. Using FaaS, it is possible to access applications on demand. Also, the applications can be executed by a platform that coordinates and manages the application resources so that they remain secure throughout the entire process of running the application. Thus, cloud service providers can simplify operations, reduce costs, and improve scalability by managing servers to implement applications [16]. This property permits them to concentrate more on developing application code than managing servers. Generally, a FaaS model is an excellent choice for simple, repetitive tasks, such as scheduling routine tasks, processing queued messages, or handling web requests regularly. Functions in an application are collections of tasks defined independently as code pieces and executed separately as individual tasks. The most efficient way to utilize resources is to scale a single function rather than an entire application because it does not require a whole micro-service or application [17]. Cloud computing is a method of federating many resources into a single machine, and it is similar to parallel computing in many ways, such as clustering and grid computing. Still, The main characteristic of cloud computing is, however, virtualization. Thus, computing resources can be scheduled as a service for clients in this approach. A significant development in computing is the availability of a wide range of highly flexible devices, known as nodes, which can be deployed anytime and anywhere; only a fee is incurred when they are employed. Hence, with traditional computing environments or data centers, achieving this goal would not be possible due to the limitations of the existing technology [18]. It is essential to note that unused, underused, and inactive resources significantly impact energy waste. Therefore, optimally scheduling cloud resources is one of a company’s most challenging tasks in managing cloud resources [19]. There are many factors to consider when allocating resources to cloud workloads, such as the applications hosted in the cloud and the energy consumption of computing resources. Appropriate techniques for allocating resources are lacking to address the challenges associated with uncertainty, dispersion, and heterogeneity within a cloud environment. Developing more appropriate strategies for giving resources within the cloud environment is necessary [20]. By using a cloud-based approach that automatically manages computing resources based on their consumption as an influencing factor of service quality, it is possible to resolve this issue. In addition to increased scalability and faster development, this approach can reduce costs [21].

2.2 Serverless computing features

A serverless computing approach will be able to respond to all these requests and provide excellent resource management conditions with these features. Several essential advantages of the serverless computing approach include scalability, security, visibility, faster development, and reduced costs.

2.2.1 Scalability

The high scalability offered by serverless computing technology means that developers will no longer have to worry about the impact of heavy traffic since the system is highly flexible and scalable. This property of serverless computing is one of its most attractive features. Compared to previous architectures, this architecture can address all the concerns about scalability more efficiently than previous architectures [22]. Scalable applications can handle the demands of many clients without experiencing a performance loss whenever the number of requests increases. As a result, auto-scaling instances must be designed in such a way that they can handle traffic fluctuations regardless of the number of users and requests. Consequently, using only resources essential to the project is more efficient than wasting unnecessary resources [23].

2.2.2 Visibility

It is essential to monitor a system to keep it healthy. Collecting metrics such as CPU utilization, error logs, and network traffic can use these data as inputs into incident alert tools to prevent system failures. Sending out alarms and notifications to staff can alert them to security, outages, and errors that have occurred [24]. Hence, regarding the visibility of systems and how they perform under varying circumstances, serverless computing achieves different goals than serverless testing and monitoring. As a result, serverless observability can provide insights into system efficiency under various circumstances. A serverless computing system allows for two types of visibility:

-

Testing: testing allows for identifying known problems.

-

Monitoring: monitoring allows for evaluating the system’s health according to available metrics.

So, Instrumentation can be used to gather as much information as possible from an application to identify and resolve unknown problems. Due to the disparate, isolated, and highly transient nature of event-driven functionality, maintaining visibility in serverless applications is essential and challenging [25].

2.2.3 Faster development

By utilizing serverless computing technology and approach, developers can focus more on developing code for the applications they are creating, increasing production rates as rapidly as possible. Finally, this property increases their efficiency while developing the software they are growing, resulting in more excellent performance [26]. By doing so, developers can spend less time on deployment and have a faster development turnaround, thus increasing their productivity. So, compared to traditional technologies, large backend systems can be constructed in less time by abstraction and reduction of complexity due to technology abstraction and reduction of complexity. As a result, the product development process is typically accelerated, resulting in shorter delivery times, faster deliveries, and more significant business growth. Also, the ability to rapidly update existing products with minimal friction and expense and to constantly experiment with new ideas [27].

2.2.4 Security

Security of the system has become more challenging in light of the rapid evolution of serverless applications and their changing structure. Since more information and resources are available in this approach, some novel challenges and complexities arise. Unlike its forerunners, serverless security appears inherently secure due to its characteristics, such as short function duration. In addition, due to its architecture structure, it may also inherit security features developed for other virtualization platforms [28]. Security challenges associated with serverless approaches can create new, distinctive security threats. Furthermore, the development of serverless applications will require a significant change in mindset on the part of developers, both in terms of how applications are developed and their protection against malicious attacks. As more resources equate to more permissions, a unique approach is required to introduce security policies into provider systems. Thus, an organization with more resources has more permissions to manage, making it more challenging to determine the permissions for each interaction [29]. It is essential to provide visibility into serverless applications because they use various cloud services across multiple versions and regions, making it difficult to find and solve a problem. Thus, the visibility feature can be utilized as a solution to security challenges if deployed in a secure environment. Hence, using the visibility feature, which combines two parts of tests with automatic monitoring, one can detect configuration risks and eliminate function permissions using automated methods [30].

2.2.5 Reduced costs

Clients can save their application’s life cycle costs using serverless computing. In addition to simplifying the development process, serverless computing also improves the development process’s efficiency by eliminating idle computing time. Also, clients can receive services at the lowest possible cost, which is both an easy process for them to follow and cost-effective [31]. The service can be scaled up to serve millions of clients simultaneously with no additional cost or effort. It is not necessary to provision, manage, or update server infrastructure during project maintenance, so clients will only pay the cost for what they are using. The cloud service provider handles all this, so clients don’t have to worry about it [32].

2.3 Serverless computing architecture

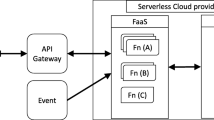

An approach known as serverless architecture is wherein a cloud provider runs code pieces and dynamically assigns resources to the customer’s requirements. Additionally, this approach can be applied to a wide range of scenarios, as functions can be developed that alter resource configurations to accomplish specific infrastructure management tasks that can be achieved by using the model in particular techniques. In other words, serverless computing utilizes the full potential of cloud computing because it allocates resources in real-time to meet the actual requirements of clients and scales up and down as required by the client in real-time. Therefore, the client only has to pay for those resources that are used and do not have to pay for those resources that are not necessary [33]. By using serverless computing, all resources will automatically be scaled back to zero when the application is inactive or when clients have no requests. In serverless architectures, a third-party cloud service provider provides computing services based on the functions of their cloud services while managing infrastructure surveillance as part of their cloud architecture. Ephemeral containers, typically composed of multiple components, are commonly used to achieve this functionality. In addition to database events, file uploads, and queues, they can also be triggered by a wide range of other events, including monitoring signals, cron jobs, and Hypertext Transfer Protocol (HTTP) requests [34]. The client will not have to worry about the server in a serverless computing model since the provider’s architecture abstracts away the server from the client’s point of view. The architecture of serverless computing is illustrated in Fig. 1.

-

Client Serverless functionality relies heavily on the client interface. In addition, interface designs must support extremely high or shallow volumes of data transfers. An effective interface should handle short bursts of stateless interactions [35].

-

Security Running a serverless system without a security service is impossible due to the need to ensure security for numerous requests simultaneously. On the other hand, it is impossible to keep track of previous interactions due to its stateless nature, so ensuring an authentication process has been implemented before returning a response is critical. Serverless systems typically provide clients with temporary credentials through the security service, including authentication and database [36].

-

API Gateway A function-as-a-service service and its client interface are linked using the API gateway. When the client triggers an event, the API gateway relays the information to the function-as-a-service service to trigger a function [37].

-

Functions as a service Function-as-a-service, the most important component of serverless computing, assigns resources to specific scenarios based on their needs. It is a serverless method of running a function in any cloud environment, allowing developers to focus on writing code rather than worrying about infrastructure requirements or building and maintaining it themselves [38].

-

Backend as a service A cloud-based service handles the backend of an application in the backend as a service model. By utilizing several critical backend features, clients can create an exceptionally robust backend application using this component, resulting in an unusually practical backend application that will be developed efficiently and effectively [39].

An event trigger mechanism is used in serverless computing. By implementing this approach, an application may need to fetch and transmit data in various situations to function at its optimum since it might require it to fetch and transmit data under different conditions. When a client creates an event trigger, it is not uncommon for the application to fetch and transmit a particular piece of data to create the event trigger inquiry [40]. Typically, this is called in the world of programming an event. When a client initiates an action to trigger an event, the application dispatches it to the cloud service provider in reaction to the client’s action when the application is launched. According to rules defined for the execution of a function, cloud services allocate dynamic resources for the execution of the function. Before proceeding with the process, the client must consider one point: once the function has been invoked, it will provide the result of its execution to the client. It is impossible to allocate resources without a request from the client, and it is impossible to store data without such a request. Consequently, this allows the application to run in real-time while reducing storage and cost requirements. This data may be presented to the client at any time to provide the client with the most current and updated data [41].

2.4 Scheduling in serverless computing

The execution pattern of a serverless system shifts the responsibility of managing application resources from clients to the cloud service provider as part of the serverless architecture model. Since serverless models use real-time allocation of resources, providers of serverless applications must be able to manage resource allocation autonomously during application execution [42]. When a serverless platform is in its initial stages, the management can be challenging due to the limited information available about the resources different functions need. Consequently, in this case, they may need help making informed resource allocation decisions, which, in turn, may result in them being unable to make informed resource allocation decisions. Serverless architecture analysis revealed that CPU utilization could often be a source of contention between applications, mainly if the applications are computationally intensive, resulting in high response latency [43]. Due to this, providers must be aware that arbitrarily determined resource allocation policies may result in a conflict of resources for applications during their runtime, thus violating the service level agreement signed by the client. Therefore, it is crucial to ensure that the provider’s resources are managed dynamically to ensure the smooth operation of the provision of services [44, 45]. Scheduling algorithms are vital to serverless computing because they can minimize response times and maximize resource utilization by minimizing response times. A scheduling algorithm allows computing resources to be allocated dynamically based on the consumption requirements according to a consumer’s requirements. Several strategies and methodologies have been developed to maximize resource scheduling efficiency and achieve the best results. Therefore, various scheduling strategies and approaches determine resource allocation. Scheduling mechanisms for serverless computing generally include two approaches: affinity and load-balancing scheduling. A load-balancing scheduling approach distributes the load equally among all the workers in the system in order to prevent worker overloading. In this approach, for each request that the system receives as a load, a function must be executed as a program within a container so that the system can respond to the request. There are various approaches to assigning load to workers using the scheduling method through load balancing. They are:

-

In the round-robin distributed approach, the scheduling mechanism ensures that the load is distributed evenly among the workers.

-

In the least load assignment approach, the scheduling mechanism ensures that the load is assigned to the employee who has the fewest requests.

-

In the random distribution approach, the scheduling mechanism ensures that all workers are assigned a random workload.

The selection of the suitable load-balancing approach is based on the system implementation goals. In the affinity-based approach, dispatches send the same kind of request to the same worker when the worker is not overloaded. These approaches can avoid overloading workers and efficiently reuse discontinued containers at times when workloads appear to be stable. It is presumed that requests can quickly retrieve available containers on selected workers [46]. In Fig. 2, the serverless computing scheduling mechanism is illustrated based on the execution of functions in containers on the workers.

According to Fig. 2, the requests are first sent to the scheduler. Serverless computing requires the execution of functions to respond to each of the sent requests. As the execution environment in serverless computing calculations is assumed to be a functions execution-based environment in the form of containers, each of these functions must be executed in a container in order to respond to requests. Hence, for the purpose of executing the functions, a container needs to be created in each worker and used as an environment to run the functions. The scheduler in this mechanism is responsible for distributing the requests. How loads are distributed or, in other words, how requests are distributed among workers depends on the scheduling mechanism’s approach to distributing requests. After determining the load distribution approach, the scheduler tries to distribute the requests among the workers based on the chosen approach. Scheduling algorithms in serverless computing ensure the system’s goals are achieved. Therefore, serverless computing service providers must choose a scheduler approach based on sufficient knowledge to ensure they can achieve a better and more effective result.

2.5 Scheduling metrics

Understanding the mechanisms of the algorithms, it is also possible to define a set of criteria for evaluating the performance of scheduling algorithms across a wide range of implementations [47]. In the following, some of the most critical factors that can be used to demonstrate the performance of a scheduling algorithm will be discussed.

2.5.1 Response time

During the implementation of scheduling algorithms, the response time factor of serverless computing is an essential factor. Various factors determine the response times, including the scheduler’s efficiency, the algorithms’ complexity, the serverless platform characteristics, the simultaneous nature of the application, and the communication delay. Therefore, to determine whether this factor is efficient, a sequence of sequential requests must be sent to the scheduling algorithm so that the scheduling algorithm evaluates by response time. When the workload contains heavy computing functions, the mean response time (application performance) decreases with the number of executors increases. The metric is calculated based on the Eq. (1) [48].

In this equation, \(\mu\) is the number of requests that have been processed for function execution, and \(Re_{a}\) is the response time of the function execution request.

2.5.2 Throughput

The performance throughput of scheduling algorithms can be evaluated based on the number of requests received for resource allocation for functions with different executors. Hence, this case can be suitable when evaluating factor throughput in scheduling algorithms for resource allocation for requested functions. Various implementations can show that schedulers can quickly become a bottleneck when request rates are high, making it difficult for executor resources to utilize fully. So, it’s crucial to note that selecting scheduling algorithms inappropriately by providers can limit scalability and result in low throughput due to high invocation overhead. The calculation of this metric is based on the Eq. (2) [49]. The underlying assumption for throughput is that for large enough, the average service time of terminated jobs in the same type of job (local \(a\) or offloaded \(\mu\).) is based on its mean value. Then, the system operates like a system with a predictable schedule for which the scheduling process is throughput. Take into account any arrival rate function execution \(\vartheta \epsilon {\Theta }\) in \(N = \left\{ {n \epsilon N} \right\}\) machines. Since \({\Theta }\) it is an open set, there exists \(\psi > 0\) such that \(\vartheta = \left( {1 + \psi } \right)\vartheta \epsilon {\Theta }\). Then

is a decomposition of \(\vartheta\), and for any \(n \epsilon {\text{N}}\).

In this equation \(\vartheta = \left( {\vartheta_{1} ,\vartheta_{2} ,\vartheta_{3} , \ldots ,\vartheta_{N + 1} } \right)\) be defined as

Then, \(\vartheta\) is a possible arrangement of arrival allocation for any function so that the average is the arrival rate \(H = \left\{ {\zeta \epsilon H} \right\}\) allocated function to the queue.

2.5.3 Latency

In a serverless computing platform, the latency factor is when a client requests a function when the scheduling algorithm attempts to allocate resources and when the function is executed. During the execution function, request time, request analytics time, and resource allocation are included in the duration. It is of particular importance for latency-sensitive applications. Ideally, the scheduling algorithm should be able to handle and manage all requests made from different locations and with varying amounts of resources in real-time if it receives a large number of requests. Thus, it means the scheduling algorithm should be capable of performing accurate predictions by obtaining current information and sufficient information about the execution environment. Hence, the scheduling algorithm can allocate resources appropriately, and it is possible to improve resource management to determine the most appropriate actions. Finally, reducing the server resource consumption can simultaneously reduce the server’s resource consumption and the job latency. It is calculated based on Eq. (5) [50].

where \(\nu_{\rho }^{\eta }\) is determine the processing delays of \(\eta\) represents the precedence constraint of the schedule of the jobs of \(N_{e}\) \(\eta\) on \(\vartheta\) that is one function from aggregate existing functions \(V = \left\{ {\vartheta \epsilon V} \right\}\). Also, in this equation makes sure that the total delay incurred by a job that is used for the execution of any function of \(N_{{e{ }}} \eta { }\epsilon { }\Lambda_{\vartheta }\) does not surpass its latency threshold.\({ }\lambda_{n} { }\epsilon {\text{ {\rm Z} }}\) to determine the time slot at which the job of \(N_{{e{ }}} \eta { }\epsilon { }\Lambda_{\vartheta }\) starts execution on function \(\vartheta\).

2.5.4 CPU usage

The CPU usage factor allows for deploying resource control mechanisms in serverless computing architecture that can be utilized to evaluate resource management in serverless architecture providers. Therefore, by using this factor, providers can control the amount of CPU consumed during the execution of functions in an application, thus allowing for the evaluation of resource utilization. In other words, this factor makes it possible to evaluate the performance of scheduling algorithms in serverless computing systems. By measuring the number of CPU resources consumed by the requested functions, this evaluation factor can be used to efficiently execute a system, resulting in a significant reduction of the number of CPU resources consumed and an improvement in the overall performance of the serverless computing system. This metric is calculated according to Eq. (6) [51].

In this equation, \({\mathcal{N}}\) nodes \(k_{b} \in K\) that are required be functions by microservices represent instances \(x_{a} \in X\). Hence, each node \(k_{b}\) is bounded by compute resources, specifically CPU limitation \(C_{b}\). On the other side, \(C_{a}\) is CPU usage and \(section\left( {R_{b}^{a} ,\Delta t} \right)\) provides the section of runtime \(R\) of the service \(x_{a}\) on the node \(k_{b}\) that is placed inside \(\Delta t\).

2.5.5 Energy consumption

It is important to consider this factor when evaluating the performance during the resource allocation process of a scheduling algorithm in a serverless environment. This factor can determine the amount of energy a scheduling algorithm consumes. By analyzing the structure of a serverless computing implementation environment carefully, it is possible to calculate the energy consumption of tasks in heterogeneous or heterogeneous environments according to the factor. By utilizing this factor, it will be possible to develop a solution that reduces the amount of energy consumed by serverless computing to the greatest extent possible [52]. Besides providing information regarding the proportions and amounts of resources used by a scheduling algorithm during the allocation process, this factor can also be used to demonstrate its effectiveness. The ideal solution is minimizing resource consumption when allocating resources in scheduling algorithms that would reduce the total cost. Using this factor, a serverless computing provider can select the appropriate algorithm to be implemented during the provision of services, as each application requires a unique approach or, in other words, a suitable algorithm. When providing efficient services, this factor is vital in reducing resource consumption as much as possible. In this case, the metric is calculated in accordance with Eq. (7) [53].

It is important to note that different functions consume varying amounts of energy based on their application. As a consequence, some functions require a processor to operate. In contrast, some applications require a disk for storing data, while others require the exchange of information, which may result in varying levels of energy consumption. According to this equation, \(Energy_{P} \times \left( {1 - \varphi_{P} } \right)\) is the energy used to execute a processor-based function, \(Energy_{R} \times \left( {1 - \varphi_{R} } \right)\) is the amount of energy used for transfer rates-based function, and \(Energy_{I} \times \left( {1 - \varphi_{I} } \right)\) is the amount of energy used for a disk IO-based function. Also, in this equation, the amount of processing energy consumed, data transfer rate, and disk IO rate are represented by \(\varphi_{P}\), \(\varphi_{R}\), and \(\varphi_{I}\), respectively. The variables \(C_{\rho }\), \(C_{R}\), and \(C_{I}\) indicate the amount of energy required by a machine based on the processing type, IO rate, and data transfer rate.

2.5.6 Cost savings

Platforms based on serverless computing offer a pay-per-use model in which users are billed according to the amount of computation memory and time their functions require. Despite some scheduling algorithms in serverless computing environments striving to maximize resource efficiency during function execution, algorithms may neglect to take application-specific factors into account. Consequently, they may violate service level agreements (SLAs), not maximize resource utilization, not achieve optimal resource utilization simultaneously, and cause increased costs. By defining policies in the format of scheduling algorithms, serverless service providers can minimize the cost of resource consumption while meeting the client’s application requirements, namely, the deadline and attention to specific details [54]. The proposed algorithms must be sensitive to deadlines, efficiently increase the provider’s resource utilization, and dynamically manage resources to improve response times to functions, thereby solving the increasing cost challenge [55]. Hence, optimizing resource costs for end clients by reducing providers’ time to respond to functions is possible. Thus, an ideal mechanism for the serverless service provider to choose a scheduling algorithm included placing functions and allocating adequate resources to the containerized function implementation to maintain the resource cost at an optimal level while meeting the desired client requirements. This metric is calculated using Eq. (8) [56]. In serverless computing, the utility of node \(n\) has been defined as the revenue derived from the execution of computation functions minus the processing costs and the penalty for overflow. In other words, the utility has been defined as:

where \(C_{m}^{q}\), \(C_{m}^{y}\) and \(C_{m}^{z}\) are factors affecting costs. \(C_{m}^{q} q_{m} \left( \beta \right)\) is the amount of revenue that the serverless computing node \(m\) receives from the service provider as a result of performing serverless tasks for the cloud center. \(q_{m} \left( \beta \right)\) is defined as the reduction in processing delay over the provider center.

2.6 A brief overview of virtualization and containerization technologies

The process of deploying and implementing applications is different in different environments. Hence, in this section, we discuss how to deploy and run applications using two approaches: virtualization and containerization. With these two approaches, as well as serverless computing, applications can be implemented and deployed across a variety of frameworks and environments.

2.6.1 Containerization approach

Due to the fact that containers present virtualization at the operating system (OS) level, they are more lightweight and agile than traditional virtual machines (VMs) [23]. The term container refers to an isolated, lightweight environment that packages an application along with its requirements in an organized manner. It is important to note that containers share the kernel of the host OS. However, each container has its own network connectivity, file system, and processes. It makes it simpler to deploy applications in a variety of environments when you use containers, as they provide a reproducible runtime and consistent environment. Compared with virtual machines, they are more lightweight, have a faster startup time, and consume fewer resources than VMs. Containers are often implemented in conjunction with container orchestration frameworks, such as Docker and Kubernetes [57].

2.6.2 Virtualization approach

An OS can be implemented as a virtual machine (VM) by using software simulation that plays a hypervisor role on a physical computer system. A VM consists of its virtual hardware, such as memory, storage, CPU, and network interface. A VM provides strong isolation among applications and can run multiple operating systems (OSs) or versions of the same OS simultaneously. VMs are flexible and portable, yet they can consume a lot of resources due to the requirement of running an entire OS on each VM [58].

Compared to VMs, containerized applications provide portability and lightweight isolation, and serverless offer cost efficiency and automatic scalability for event-driven applications. It is important to consider the specific needs of the application, the development mechanism, considerations regarding resource utilization, and the level of isolation required of the team when selecting between them. Comparison of serverless, container, and VMs is shown in Table 1.

2.7 Machine learning in serverless computing

Machine learning is one of the fields that have contributed to the improvement and efficiency of serverless computing systems. Combining these two technologies provides the foundation for the use of artificial intelligence algorithms in serverless computing systems. In addition to improving resource management and runtime optimization, this combination also enhances predictive capability and accuracy. Incorporating these two technologies allows systems to act as more intelligent units, take advantage of each other, and interact more effectively with their input environment. In addition to improving the development prospects for serverless computing systems, this approach is effective in a wide range of applications, such as image processing, language translation, and data prediction [59]. One of the fundamental challenges associated with serverless computing is scheduling, which is exacerbated by fluctuations caused by changes in requests and workloads. Using machine learning to optimize the scheduling process in serverless computing is an innovative and smart solution. By using this approach, resource managers are able to analyze execution patterns, predict resource requirements, and improve system performance. In serverless computing, machine learning can be used to improve the scheduling process in a number of ways, including:

-

Increasing the potential for response The use of machine learning in scheduling serverless computing also increases the responsiveness of the systems since they can more easily adapt to changes in requests and the environment and are better able to respond efficiently to user needs and system functions.

-

Improving resource demand prediction accuracy In serverless computing scheduling, machine learning improves the prediction of resource demand. An accurate analysis of data and resource requirements over time allows machine learning models to estimate better the amount of computing resources required at a given moment.

-

Optimizing smarter decisions The use of machine learning in serverless computing allows the systems to react in real-time to current information and environmental conditions and to make intelligent decisions based on needs and constraints.

-

Managing resources intelligently Machine learning technology can be integrated into serverless computing scheduling to facilitate intelligent resource management. By estimating resource needs accurately and implementing intelligent decisions, resources can be managed in the most optimal manner possible to avoid differences between system needs and resource allocations [60].

-

Enhancements to the estimation of runtimes In serverless computing scheduling, machine learning has a fundamental role to play in improving execution time estimates. Machine learning models are capable of identifying execution patterns and providing more accurate estimates of the time required to execute code by carefully analyzing past execution time data.

-

Predicting future needs Serverless computing can utilize machine learning as a powerful tool for predicting future needs in order to optimize the scheduling process. By analyzing past data and request change patterns, machine learning models can predict future needs in the best possible way in order to prepare the scheduling process in the system to deal with future challenges in the best possible way.

-

Enhancing credibility The use of machine learning in the scheduling of serverless computing can increase the system’s reliability. Users are assured of a high degree of accuracy in estimations, smarter decisions, and forecasting of resource needs, thus ensuring that the system can provide quality services in the shortest amount of time [61].

3 Related works

This section will analyze recent papers reviewed on scheduling in serverless computing. In the following, we will seek to give each survey article’s primary advantages and disadvantages. So, we will take an in-depth view of some articles in the literature. Not reviews work studies scheduling in Serverless computing. Therefore, it can be said that this work is one of the first studies in this domain. One of the notable scheduling studies on serverless computing was accomplished by Alqaryouti and Siyam [62]. Their survey considered various propositions incidental to scheduling tasks in clouds. These propositions were classified according to their target functions by minimizing execution time, execution cost, and multi-targets (time and cost). Applying a hybrid perspective to serverless computing alongside the IaaS approach in the form of one technique leads can significantly reduce issues arising from dependency because issues arising from dependency on the system are from the underutilization of resources. Therefore, their suggested algorithm compresses the schedule by re-ordering the tasks for maximal usage of the scheduled indolent slots related to the dependency restrictions. As a result, as a benefit of this study, solutions were proposed for the problem of scheduling aspects of workflows from the clouds focused professionally. Moreover, the article goes through some disadvantages as follows:

-

Inadequate coverage and weak of recently published papers at the time of publishing

-

Lack of presentment of an organized template for choosing articles.

-

Ignoring some paramount agents when presented with solutions proposed to the problem of scheduling, paramount agents like productivity boost, Cost-effective in Scheduling

-

Ignoring one of the essential features of scheduling on serverless computing in the name of effortless efficiency in reviewing the entire article and the proposed solutions.

-

Not to pay attention to the future path to study the situations and challenges ahead.

Kjorveziroski et al. [63], in their research, reviewing works disseminated between the years 2015 and 2021, presented a systematic survey study of the recent progressions they created In connection with the subject of serverless computing to the edge of the network. They could determine eight fields in which existent serverless edge research was concentrated. Hence, they used the obtained classifications to study the selected articles and, in the following, could present increased interest in using serverless computing at the network’s edge. One subject that is an occupied study, specifically in recent years, is the placement of a real edge-fog-continuum. However, many furtherance’s are required in intelligent scheduling algorithmic and efficiency improvements. Improvement of efficient scheduling algorithms that are skilled in managing vast content of function instantiations and eliminate in brief quantities of time amongst diverse infrastructures; as a producing an edge–cloud continuum, one of the open issues presented needs to be solved for serverless computing. The significant advantage of this research is the excellent constitution of the article, encasing all correlated works together. Regardless, this article toils from some drawbacks, as follows:

-

Lack of careful attention to any of the issues raised

-

Absence of regard and review for the influential factors in each of the issues raised

-

Instead of focusing on the central issues related to the article, it highlights trivial issues, such as how to access the articles.

-

Deficiency of examples of the application of issues raised in the actual environment

-

Absence of regard and review for the influential factors in each of the issues raised.

Saurav and Benedict [64] researched scheduling in scientific workflows. This study aims to present a workflow management system that applies a scheduling strategy for processes that require high scalability in the face of computational workloads and compact data. These computations are used in parallel and distributed systems. Because this system has a heterogeneous architecture, the discussion of awareness of the energy level is crucial. In this research, an attempt at the scientific workflow, along with the distinctive challenges in the path of energy-aware implementation, has been examined. On the other, they review the presented analysis of state-of-the-art workflow scheduling algorithmic regulation and the multi-target improvement issues. Moreover, they explained the importance of energy-aware runtime structures. And they finally suggested a reference architecture and runtime for energy-aware scientific workflows. Researching professionals, thorough scheduling, and reviewing corresponding main details are the influential results of this study. Regardless, the article suffers from some drawbacks, as follows:

-

Inadequate coverage and weak of recently published papers at the time of publishing

-

Not to pay enough attention to the future path to study the situations and challenges ahead.

-

This research focuses on scheduling algorithms in large-scale systems and, considering this issue does not examine the performance of algorithms in the scalability process and only expresses generalities.

-

There is no mention of how scheduling algorithms are implemented in bottlenecks. There will always be a node or nodes in the network that will act as a bottleneck. Therefore, these nodes, which are also heterogeneous, make the system service process difficult.

Shafiei et al. [65] for this survey showed a complete outline of the recent advances and the past developments in study fields associated with serverless computing. They first explored serverless applications and outlined the challenges they have fronted. Then, expansion applications in eight parts individually converse the targets and the viability of the serverless model in each of those parts. Moreover, they categorized those challenges into nine issues and explored the suggested solutions for each of them. Finally, they suggested the fields that require additional attention from the research society and determined available troubles. As a benefit, the paper is covered by many suitable, outstanding quality, recently published articles and excellent paper classification. Hence, they observed and outlined quality of experience facets that directly influence user satisfaction, which can take crucial scheduling metrics in serverless computation. Regardless, the article suffers from some drawbacks, as follows:

-

Loss to review tools and implementation environments related to the subjects presented

-

Despite the expression of the payment model function-as-a-service as one advantage of serverless computing, in no way has this payment model been compared to other payment models. There is no comparison between payment models in service delivery mechanisms such as infrastructure-as-a-service, platform-as-a-service, function-a-a-service, and software-as-a-service has not been made.

-

In this survey, this feature has not been considered despite the expression of auto-scaling as one feature advantage of serverless computing. There are several types of scaling, and there is no mention of what scaling process is used on serverless computing.

-

Given the nature of the performance and implementation of serverless computing, the expression of serverless best practices undertaking execution could have shown a better understanding of how to implement serverless computing.

Xie et al. [66], in their article, attempt attempted to present a comprehensive and organized outline of serverless edge computing networks in the aspect of networking. The first step is to study the design principles for combining a serverless model in edge computing networks. Then, the cooperation process and deployment and execution are shown in the next step. Finally, to entirely use the suggested serverless edge computing scheme, issues such as lifecycle control and service deployment, resource awareness, service scheduling, incentive instruments, exceptions, etc., are explored. Furthermore, some potential investigation subjects that should be regarded for future research are outlined. This article has many advantages, including a detailed study of the issues raised. In addition, it tries to introduce the topics that will be used as research topics in the future. Regardless, the article suffers from some drawbacks, as follows:

-

The reviewed articles bank is Inadequate.

-

The subjects are presented completely abstractly, and no details are noted.

-

Lack of quality study articles

Cassel et al. [67] presented a comprehensive and systematic study that gives us insight into how Entities called functions are off-loaded to various devices And how these entities can interact and collaborate. Moreover, they tried to review the crucial elements utilized to set and run functions, the principal challenges, and open inquiries for this issue. In the continuation of this review, programming languages, storage services, and protocols related to the solutions are also suggested. This article has many positive points, like demonstrating an example of using this technology in the real world, study opportunities, and newfound challenges in the reciprocal hybrid of serverless and IoT, furthermore emphasizing technologies that seem promising for the subsequent years. However, providing a complete and comprehensive classification can be called the most prominent feature of this article. Regardless, the writing suffers from some drawbacks, as follows:

-

Despite providing a robust classification, none of the items shown in the classification is described and presented only as a list.

-

Because orchestrators are used as a crucial part of the implementation process in a server-free computing environment, this article does not refer to implementers of this technology, such as Kubernetes.

-

Attention to issues such as the use of serverless computing, how to communicate across multiple domains in edge computing networks, and the challenges ahead can provide deeper insights into the capabilities of the serverless computing process. Unfortunately, this article ignores this issue.

In [68], a comprehensive study on the types of scheduling algorithms involved in serverless computing is conducted. Through examining various types of scheduling algorithms that are used in serverless computing, they attempted to provide a comprehensive overview of existing approaches and scheduling algorithms as well as their advantages and applications. Additionally, they examine various implementation frameworks that allow serverless computing to be used. There are many positive aspects in this article, such as providing a comprehensive understanding of how scheduling algorithms are applied in serverless computing. However, there are also some disadvantages:

-

A lack of a comprehensive and comprehensive classification of scheduling algorithms for serverless computing

-

An analysis of a limited number of articles related to scheduling algorithms

-

The investigation of a limited number of parameters and metrics used in the scheduling process

-

Lack of accurate and complete comparison of scheduling algorithms

According to the above articles in the field of scheduling in serverless computing, a side-by-side comparison in terms of the type of review, Objectives to be examined, Implementation layers, investigating the evaluation parameters used, and the year of publication of the reviewed articles is summarized in Table 2.

4 Research methodology

This section presented instructions for exploring relevant articles on scheduling in serverless computing. The primary segments for creating a knowledge-great review are searching, collecting, organizing, and analyzing related papers. Conducting a systematic technique that involves restricting the benchmarks of the issues and gathering and assessing those specific issues will be available to investigators. This plan describes the mechanism of discovering important issues in relevant fields.

4.1 Question formalization

The purpose of this research is to survey essential factors and techniques employed in studied articles at a particular time, along with the primary topics and challenges associated with scheduling on serverless computing. Since covering the complete examination of scheduling in serverless computing and showing related open issues is the primary purpose of the current survey, several related questions must be answered to focus on connected worries. Related research questions are shown in Table 3.

4.2 Data Analysis and papers choices

The systematic running structure of opting for and analyzing papers is outlined as follows:

-

Published papers associated with scheduling in serverless computing mechanisms between 2018 and 2023

-

To identify significant synonyms and keywords for scheduling in serverless computing, can apply the following considered string words are applied:

-

1.

(“Scheduling” OR “Energy-aware scheduling” OR “Resource-aware scheduling” OR “Package-aware scheduling” OR “Data-aware scheduling” OR “Deadline-aware scheduling” OR “Hybrid”) AND (“Serverless”) OR (“Serverless Computing”) OR (“Serverless Scheduling”) OR (“FaaS”) OR (“Function-as-a-Service”)).

-

1.

-

The search was done in October 2023, using restrictions on the time scope from 2018 to 2023. The final results showed 444 papers. In the following, by studying some crucial sections, like the Abstract, Goals, Contributions, and Conclusion, at the beginning of this process and as the first step, 180 articles unrelated to the research subject were identified and consequently eliminated. In the Next step, Because of worthless papers with inferior content, study System models, Approaches, Implementation mechanisms, Results of the research, and Solutions provided for the future in the remaining papers. In continuation of the review at the beginning, 131 papers were inscribed as unsuitable; six papers were surveyed, nine papers were repeated, and two books were left out of this process. Therefore, 148 papers have been deleted. Finally, 116 remaining journal papers were inscribed as marked relevant for the study specimen, in which 23 papers were irrigated to serverless computation and have been deleted. In selecting relevant articles, 39 articles were irrigated to scheduling and have been deleted. In the end, the remaining 54 papers related to scheduling in serverless computing approaches are included in the survey.

An illustration of the flowchart for the incorporation and elimination of options is shown in Fig. 3.

Appropriate papers associated with scheduling in serverless computing have been reviewed in reputable scientific databases, as shown in Table 4.

Also, the detailed distribution of these 54 chosen papers between 2018 and October 2023 is shown in Fig. 4. As depicted in the chart, most papers were published between 2021 and 2022 in the corresponding field with an accelerating interest by researchers. Over this period, 2021 has the highest percentage of published articles. In addition, as it is depicted in Fig. 5, the percentages of the mentioned papers were compared to the number of articles and publishers’ titles for each year. As shown, during 2018–2023, IEEE has taken the highest position among other publishers.

5 Scheduling mechanisms in the serverless computing

Serverless computing is an event-driven mechanism in which structures called applications are illustrated by the events that trigger them. Service provider frameworks, as serverless platform functions, tried displaying occurrences via straightforward function abstractions and making out even acts logic among their cloud environments. This structure permits developers to turn big applications into smallish functions, permitting application parts to scale separately [69]. However, this mechanism raises fresh trouble in the logical management of an extensive collection of functions, especially in optimizing resource allocation and managing resources distributed between functions. Serverless computing, usually because of the payment model it has provided for providing its services been raised as a cost-saving tool [70]. Serverless computing has been suggested as a cost-saving mechanism because of the payment model for providing its services. One of the essential ways to save costs is the proper and optimal allocation of resources to functions [71]. Therefore, it is necessary to use a mechanism called scheduling for the optimal allocation of resources. The scheduling process is a strategic-based framework for allocating and distributing resources to devices in a network [72]. This section categorizes and studies the scheduling in the serverless computation domain for chosen papers by the suggested taxonomy. Different classifications of scheduling on serverless computing have been proposed in the literature. In this survey paper, a scheduling mechanism is used to detect the main subjects of resource allocation in serverless computing. So, a subject discovery criterion is used, recognizing six principal classifications. This measure includes issues in diverse classes with various factors and restrictions that cannot be reasonably regarded as a single issue. All techniques present in the study have been categorized into one of these six principal classes, as shown in Fig. 6. As explained earlier, scheduling algorithms are organized into six classes: energy-aware, Data-aware, Deadline-aware, Package-aware, Resource-aware, and hybrid. In the ensuing, we demonstrate some explanations associated with the kinds of scheduling in serverless computing matching the suggested taxonomy, and relevant papers will be concisely studied for each method.

5.1 Energy-aware scheduling mechanisms

This part describes related features to the energy-aware method, which is considered a serverless computing scheduling strategy. Next, the studies papers on this domain are investigated. Scheduling resources efficiently on a provider using energy-aware scheduling is possible. So that the scheduler will be able to understand how the decisions they make will impact the amount of energy consumed, enabling the scheduler to make decisions based on the amount of power utilized and the quantity of energy available [73]. The central concept behind energy-aware scheduling in cloud environments involves the use of passive structures, such as containers or cold-state environments, for the systems to consume as little energy as possible. Employing this approach finally reduces the amount of energy consumed throughout the system [74].

5.1.1 Overview of energy-aware scheduling plans in serverless computing

In this part, the various plans will be studied and then recapitulated at the end of this section. A resource scheduling technique with low energy consumption was proposed by Kallam et al. [73]; the technique is developed based on detailed investigations of a MapReduce framework’s structure, operation, and energy consumption troubles associated with scheduling jobs in a heterogeneous environment. This procedure ensures that all storage nodes are efficiently checked to achieve energy conservation by developing a resource schedule for all the jobs. As a result of the proposed method, the average processing time was significantly reduced compared to the state-of-the-art technique.

Aslanpour et al. [74] analyzed case studies performed in the cloud with the idea that to eschew cold start functions, they should invoke functions by transmitting fake requests, which are considered warm functions. This study aims to ensure that the up-and-running approachability of edge nodes is as high as possible while ensuring that the change does not adversely affect data transmission quality or service quality.

Gunasegaram et al. [75] propose a solution to the problem of colossal container overprovisioning and microservice-agnostic scheduling, which results in poor resource usage, particularly during high workload fluctuations, thereby proposing Fifer as a solution to this issue. Moreover, Fifer minimizes overall latency by creating containers in advance, thus avoiding cold starts. In contrast to most serverless platforms, Fifer has state-of-the-art schedulers that help improve container utilization and reduce cluster-wide energy requirements.

Aslanpour et al. [76] tried to review the issue of efficiently managing energy consumption in very resource-limited edge nodes in their investigation. Their findings demonstrate that while the idle state and CPU consume the most energy in edge devices, the connection process also consumes a significant amount of energy.

Table 5 provides a comprehensive comparison of advantages, disadvantages, performance metrics, programming languages, implementation layers, and evaluation tools of the technical studies reviewed, with a focus on energy-aware scheduling algorithms and approaches.

5.2 Data-aware scheduling mechanisms

In this part, an overview of the data-aware scheduling strategy in serverless computing is provided. With its pliable and varied nature, both in terms of provisioning and allocating resources, the cloud environment poses great difficulties when managing the resources effectively, especially when dealing with data movement among multiple areas simultaneously. For cloud environments to be able to manage their resources effectively and efficiently, it is necessary to consider these points [77]. Thus, it can be concluded that by using a data-aware scheduling model (data affinity), applications can be scheduled intelligently according to the available information and using data-aware scheduling. Therefore, implementing data-aware scheduling as part of workflow applications can also result in significant performance improvements and efficient resource allocation based on the characteristics of variable workloads [78].

5.2.1 Overview of data-aware scheduling mechanisms

The various techniques are studied and summarized in this part at the end of this section.

Rausch et al. [77] propose a container scheduling mechanism enabling platforms to maximize edge infrastructure utilization. The study concluded that the trade-off between data and computational movement is crucial when implementing data-intensive functions in serverless edge computing.

Wu et al. [78] have proposed a method of on-the-fly computing that utilizes efficient multiplexing techniques to reduce the complexity of cloud programming and eliminate the complexity of data analysis by using on-the-fly data collection. Due to its on-demand execution capability, it can provide instant multitenancy and response. In addition to demonstrating the possibility of incorporating the on-the-fly computing method for processing remote sensing data into a serverless system with high reliability and performance, the authors also described how they could use the model to process data collected through sensor networks efficiently.

Yu et al. [79] propose a serverless platform of scalable and low latency called Pheromone. This platform advocates a data-centric orchestration approach in which the data flow triggers function invocations. Pheromone uses a two-level, shared-nothing scheduling hierarchy to schedule functions near the feed-in. Compared to open-source and commercial platforms, Pheromone significantly reduces latencies. Furthermore, Pheromone provides an easy way to implement many applications, including real-time querying, stream processing, and MapReduce sorting.

Das [80] demonstrated that combining Ant Colony Optimization (ACO) with a map-reduce application increases the serverless platform’s efficiency. The proposed model aims to employ MapReduce on Amazon Web Services serverless infrastructure by using a scheduling algorithm called Ant Colony Optimization (ACO). According to the paper, appropriate task scheduling may be able to address the current lack of support for big data applications on serverless platforms.

Seubring et al. [81] propose a data locality-aware scheduler method for serverless edge platforms. The proposed method uses the metadata available in the edge network to optimize the scheduling of functions and constrain execution to the local network. Finally, it shows that moving the execution closer to the data reduces the bandwidth (cost) and time spent moving the data.

Jindal et al. [82] present a Function-Delivery-as-a-Service (FDaaS) framework. A FDaaS allows functions to be delivered to the target platform in a way that fits the platform’s requirements. Moreover, FDaaS allows collaboration between multiple target platforms and data localization and reduces data access latency by transferring data nearer to the target platform. Finally, it shows that employing scheduling functions for an edge platform reduced overall energy consumption without violating SLO requirements.

Nestorov et al. [83] present a high-performance model capable of predicting the performance of inter-functional data exchanges using serverless workloads with a high degree of accuracy. As part of this model, parallelism, data locality, resource requirements, and scheduling policies are also considered to evaluate the performance of data-intensive workloads. The results show that deploying and scheduling workloads in this model can enhance performance.

Przybylski et al. [84] present scheduled individual calls of functions passed to a load balancer to optimize metrics related to response times. In addition, they demonstrated that employing data-driven scheduling strategies improved performance compared with the baseline FIFO or round-robin scheduling. In this way, they could adapt SEPT and SERPT strategies without experiencing any noticeable increase in computational or memory consumption.

García-López et al. [85] propos ServerMix system. The ServerMix system uses a combination of serverless and serverful components to accomplish an analytics task. In the first phase of the research, three fundamental trade-offs have been examined, including those that relate to the serverless computing model of today and their relationship with data analysis. Finally, this paper explores how ServerMix can improve overall computing performance by simplifying the effects of disaggregation, isolation, and scheduling.

HoseinyFarahabady et al. [86] demonstrate when a serverless platform is used to execute several data-intensive functional units, it face many challenges regarding workload consolidation. Finally, Performance evaluations are conducted using modern workloads and data analytic benchmark tools in their four-node platform, illustrating the efficiency of the proposed solution to mitigate the QoS violation rate for high-priority applications.

Tang and Yang [87] present a Lambdata framework for data-aware scheduling. Lambdata is a serverless computing system that allows developers to declare a cloud function’s data intents, including reading and writing data. It is demonstrated in Lambda that once data intents have been defined, the Lambdata mechanism performs a variety of optimizations to improve speed, such as caching data locally and scheduling functions based on the locality of the code and the data. In a Lambdata evaluation, turnaround time has been sped up by 1.51x, and monetary costs have been reduced.

Table 6 provides a comprehensive comparison of advantages, disadvantages, performance metrics, programming languages, implementation layers, and evaluation tools of the technical studies reviewed, with a focus on data-aware scheduling algorithms and approaches.

5.3 Deadline-aware scheduling mechanisms

In this part, an outline of the deadline-aware scheduling approach in serverless computing is discussed. The use of a serverless computing approach has been found to have many advantages. Aside from these advantages, they can also be used to schedule tasks ahead of deadlines. Hence, this helps resource allocation operations run more efficiently for all request tasks since many tasks can be completed by a specific date. On the other hand, they tried to present suitable resource allocation for all tasks with deadline execution. Therefore, to maintain the efficiency of resource allocation operations, it is possible to schedule tasks using deadlines so that a sufficient number of tasks can be completed by the deadline, ensuring that operations run as efficiently as possible [88]. The scheduling process will likely be biased toward task type selection with shorter expected execution times due to the operation mechanics of this strategy. Because shorter tasks are more susceptible to implementation in this strategy, they are estimated to take a shorter time to complete, increasing their chances of success [89]. This strategy is scheduled and implemented so that meeting the latency deadline is one of its priorities. It is necessary to address this issue to minimize the possibility of many deadlines falling by the wayside due to a lack of schedule for future events [90].

5.3.1 Overview of deadline-aware scheduling mechanisms

In this part, the various approaches are reviewed and then outlined after this section. Singhvi et al. [88] present Archipelago, a serverless architecture that supports multiple tenants and performs low-latency tasks via a DAG of functions, each with a deadline for latency. Finally, they demonstrated that with this framework, they were in a position to reduce overall latency by 36X compared to the existing serverless frameworks. And with these frameworks, they could satisfy the latency specifications for more than 99% of actual workloads for applications.

Mampage et al. [90] present a policy for dynamic resource management and function assignment in applications based on serverless computing from the viewpoint of both the client and the provider. Finally, they found that a dynamic CPU-share policy outperformed a static resource allocation policy by 25% in fulfilling deadlines for the needed functions compared to a static approach.

Wang et al. [91] propose employing a model-driven approach. Their work presents a LaSS serverless platform that runs latency-sensitive computations at the edge. Finally, shown by their simulations, LaSS can be programmed to re-provision container capabilities as rapidly as possible while preserving fair share distributions based on their simulations. A LaSS can also accurately predict the resources required for serverless functions when workloads are highly dynamic.

Rama Krishna et al. [92] suggested a SledgeEDF. They used the extension of real-time scheduling to a serverless runtime. For this reason, Sled EDF was suggested to pursue advanced research in this area. SledgeEDF improved the latency of the server-free function by introducing a scheduler for handling a large volume of mixed high-priority workloads simultaneously. As a result of implementing an admissions controller and fine-tuning module configurations, the runtime was able to satisfy 100% of the deadlines.

Pawel and Rzadca [93] present a framework algorithm that can be applied to cloud-based applications on the FaaS system. They also made some desirable modifications to the architecture. Finally, it provides successors for each task in the queue, enabling the scheduler to know which environments must be configured in advance. Based on the simulation results, it was evident that these methods can enhance the efficiency of FaaS. Also, scheduling considering constraints and startup times is more effective and efficient when the system is under heavy load.

Table 7 provides a comprehensive comparison of advantages, disadvantages, performance metrics, programming languages, implementation layers, and evaluation tools of the technical studies reviewed, with a focus on deadline -aware scheduling algorithms and approaches.

5.4 Package-aware scheduling mechanisms

This part provides an introduction to the package-aware scheduling strategy used in serverless computing. In serverless computing, functions are run in pre-allocated containers or VMs on pre-allocated servers. These applications can operate in pre-allocated containers, allowing them to begin operations quickly and efficiently. The functions in question require one or more separate packages to function correctly. These packages can be separated or related to ensure their proper functioning because function execution mechanisms depend entirely on an entity known as a package to function correctly. These functions can start a little slower when needed on a large package [94]. One of the computational nodes in serverless technologies is responsible for retrieving required packages and application libraries upon invocation requests. Finally, if the computation node receives a request for their invocation, a try is required, and the mentioned node will retrieve related library packages. On one or more worker nodes, there is a possibility of installing a preloaded package to make it possible to reuse the execution environment of one or more worker nodes over another worker node. By utilizing this opportunity, it has been possible to reduce the start-up time for the tasks and the turnaround time to complete them, thereby allowing them to be completed in a shorter amount of time and becoming more efficient. In addition to predicting future library requirements based on the libraries already available and installed, it is also possible to use this scheduling strategy to predict future library needs [95].

5.4.1 Overview of package-aware scheduling mechanisms