Abstract

The imbalanced datasets are common in real-world application and the problem of imbalanced dataset affect classification performance of many standard learning approaches. To address imbalanced datasets, a weighted extreme learning machine (WELM) solving the L\(_{2}\)-regularized weighted least squares problem is presented to avoid the generation of an over-fitting model and obtain better generalization ability compared with ELM. However, the weight generated according to class distribution of training data leads to lack of finding optimal weight with good generalization performance and the randomness of input weight and hidden biases of network makes the algorithm produce suboptimal classification model. In this paper, a weighted extreme learning machine based on hybrid artificial bee colony (HABC) is proposed to obtain better performance than WELM, in which input weights and hidden bias of WELM and the weight assigned to training samples are optimized by the hybrid artificial bee colony algorithm. HABC combines the diversities of the perturbed parameter vectors of differential evolution with the best solution information of the artificial bee colony effectively. In the empirical study, different class imbalance data handling methods including four WELM-based methods, weighted support vector machine, four ensemble methods which combine data sampling and the Bagging or Boosting are compared with our method. The experimental results on 15 imbalanced datasets show that the proposed method outperforms most methods, which indicates its superiority.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Extreme learning machine (ELM) [1, 2] is a single layer feed-forward neural network (SLFN) with important features such as elimination of the needed parameter tuning during the training phase, united solutions for regression, binary, and multi-class classification [3, 4]. The overall efficiency of ELM has been proved in many areas like specific proteins detection in bioinformatics [5,6,7,8], drug discovery problem [9], air pollutant detection [10], medical diagnosis[11, 12], corporate life cycle prediction [13], face recognition [14, 15], abnormal activity recognition [16], and so on. Although the ELM can classify balanced datasets effectively, it causes bias towards the majority classes and results in a lower sensitivity in detecting the minority class when dealing with imbalance class [17].

The class imbalance problem are common in real-word domains, such as detecting oil spills from satellite images, anomaly detection, image annotation, software defect prediction, spam filtering [10, 16, 17]. To deal with imbalance class, fuzzy extreme learning machine (FELM) and weighted extreme learning machine (WELM) have been proposed. According to the results of kernel-based possibilistic fuzzy c-Means clustering, FELM assigns different misclassification cost to the positive samples and negative samples for imbalanced datasets with noise and outliers [18]. WELM assigns different weight values for the training samples of different classes to reflect their importance in respective classes and solves the \(L^{2}-\)regularized weighted least squares problem to obtain better generalization performance compared with ELM [19] Similar to traditional ELM, WELM obtains its extremely fast learning speed due to random assignment of input weights and hidden neuron biases. However, this also causes the WELM to produce suboptimal classification model [20, 21]. The effect of random parameters in ELM imposed on the generalization performance can be quite significant, particularly for imbalanced data. In addition, the weight generated according to class distribution of training samples depends on input data, which leads to the lack of finding optimal weight with good generalization performance [22,23,24,25].

In order to reduce the effect of random parameters of ELM, evolutionary extreme learning machine (DE-ELM) has been proposed. DE-ELM adopt the differential evolutionary algorithm (DE) to select the input weights and ELM to calculate the output weight. Experimental results show that the proposed algorithm is able to achieve good generalization performance with much more compact networks [26]. However, this approach developed to reducing the effect of random parameters of the ELM is accuracy-oriented, this causes bias towards the majority classes and results in a lower sensitivity in detecting the minority class, whereas classifying the minority class accurately can be more important than classifying the majority ones accurately in some scenarios as the minority ones often represents the main class of interest. In addition, although the existing external approaches proposed to deal with imbalanced data classification [27,28,29,30,31,32,33] can diminish the effect caused by class imbalance by preprocessing the datasets, when it comes to some real applications such as in bioinformatics and medical diagnosis, it is significant that the predictor is evaluated on the distribution of natural samples instead of balanced distribution under artificial construction, since the external approaches artificially modify the background frequencies of different classes [34]. Therefore, it is meaningful to discuss the internal approaches of imbalance learning that can be applied for the WELM model [35].

Artificial bee colony (ABC) has been used increasingly for global optimization problems [36,37,38]. Compared with existing state-of-the-art population-based approaches, ABC has simple evolutionary operators and fewer parameters need to adjust. Moreover, ABC algorithm is applied to find optimal weight set of the neural network through minimizing output error In the literature [39]. However, Individual position is modified by moving towards a randomly selected solution, which is no guaranty that new candidate position will be better than the last one. So the solution search of ABC algorithm is good at exploration but poor at exploitation [37, 38]. It is observed that DE is good at exploring the search space and locating the region of global minimum but it is slow at exploitation of the solution [40]. Combining ABC with DE algorithm can improve the performance of basic ABC. Therefore, the hybrids of ABC and DE should be promising for training feed-forward neural networks [41, 42].

In this paper, an imbalance learning algorithm applied for the ELM model, named hybrid artificial bee colony optimization-based weighted extreme learning machine (HABC-WELM) is proposed, in which, input weights and hidden bias of network and the weight assigned to training samples are optimized by the hybrid artificial bee colony algorithm, whose exploitation and convergence rate is improved by combining DE operator with the ABC operation, and the network output weights are calculated using WELM. The hybrid ABC mainly focused on restricting the input weights and hidden biases to a reasonable range and assigning optimal weight to training data to achieve good generalization performance. In order to make results comparable, we implement five internal approaches WELM, ensemble weighted extreme learning machine (EN-WELM),evolutionary weighted extreme learning machine (DE-WELM), artificial bee colony optimization-based weighted extreme learning machine (ABC-WELM) and weighted support vector machine (WSVM) which is similar to WELM based on cost-sensitive learning [43]. In addition, we also implement four popular external approaches based ensemble methods RUSBoost, EsayEnsemble, OverBagging, UnderBagging and consider SVM as base classifier since it has been the powerful tools for supervised learning and widely used in classification in a variety of real-world problems. The classification performance and stabilization of the above nine algorithms is also analyzed. In this paper, we focus on imbalanced binary classification datasets, referring to the minority class with positive label and the majority class with negative label.

The rest of paper is organized as follows. In Sect. 2 we review the weighted extreme learning machine and the artificial bee colony algorithm. The details of the proposed HABC-WELM algorithm are described in Sect. 3. In Sect. 4, we carry out the comparison of our method with the state-of-the-art methods. The conclusion and future works are provided in Sect. 5.

2 Preliminaries

This section provides brief reviews of weighted ELM and artificial bee colony algorithm.

2.1 Weighted extreme learning machine

According to statistical learning theory [44], Deng et al proposed a regularized extreme learning machine (RELM) based on L\(_{2}\) penalty which incorporates the structural risk minimization theory and the weighted least squares method into the ELM [45].

Given N distinct samples \(\left( {x_i ,t_i } \right) \), where \(x_i =[x_{i,1} ,x_{i,2} ,\ldots ,x_{i,m} ]^{T}\in R^{m}\) and \(\hbox {t}_i =\big [ t_{i,1} ,t_{i,2} ,\ldots ,t_{i,c} \big ]^{T}\in R^{c}\). \(i=1,2,\ldots ,N\).\( j=1,2,\ldots ,L\). m represents the number of feature. c represents the number of class. The mathematical model of RELM with L hidden nodes and activation function \(\hbox {g}(x)\) can be expressed as

where \(\hbox {a}_j =\left[ {\hbox {a}_{j,1} ,\hbox {a}_{j,2} ,\ldots ,\hbox {a}_{j,m} } \right] \) is the weight vector between the j-th hidden node and the input nodes, \(\beta _j =\left[ {\beta _{j,1} ,\beta _{j,2} ,\ldots ,\beta _{j,c} } \right] ^{T}\)is the weight vector between the j-th hidden node and the output nodes, \(b_j \) is threshold of the j-th hidden nodes, \({\varepsilon }_i =[\varepsilon _{i,1} ,\ldots ,\varepsilon _{i,m} ]\) is the training error vector of the \(\hbox {m}\) output nodes with respect to the training sample \(x_i \), \({\gamma }\) represents the trade-off between the minimization of training errors and the maximization of the marginal distance, The parameters \(\left( {\hbox {a}_j ,b_j } \right) \) in the hidden layer nodes function \(\hbox {h}\left( {x_i } \right) =\hbox {g}(\hbox {a}_j ,b_j ,x_i )\) are randomly generated according to any continuous probability distribution.

The regularized extreme learning machine avoids the generation of an over-fitting model, provides more robust estimates and owns better generalization ability than ELM. But it can leave ELM algorithms with a performance bias, when using a dataset with imbalanced class distribution in the learning process. In order to alleviate the bias in performance caused by imbalanced class distribution, an extra weight \(\hbox {W}_{ii} =\frac{1}{\# (t_i )} \)or \(W_{ii} =\left\{ {{\begin{array}{l} {\frac{0.618}{\# (t_i )} ,if t_i >AVG(t_i ) } \\ {\frac{1}{\# t_i } , if t_i \le AVG(t_i ) } \\ \end{array} }} \right. \) is assigned to each sample to strengthen the impact of minority class while weaken the relative impact of majority class in literature [19], where \(\# (t_i )\) is the number of samples belonging to class \(c,c\in 1,2 ,\) So the weighted extreme learning machine(WELM) is mathematically modeled as

Substituting the constraints to the objective function yields, the Lagrangian for (2) can be written as follows

Where \(\alpha _i \in R(i=1,2,\ldots ,N)\) is the Lagrangian multiplier with the equality constraints of (2). Furthermore, by making the partial derivatives with respect to variables (\(\beta \),\(\varepsilon \),\(\alpha )\) all equal to zero, we can have the KKT corresponding optimality conditions as follows:

By substituting (4.1) and (4.2) into (4.3), we obtain the closed form solution to \(\beta \)

where I is identity matrix and \(\hbox {T}=[t_1 ,t_2 ,\ldots ,t_N ]\). L is the number of hidden nodes and N represents the number of features of input samples. For binary classification problems, WELM needs only one output node, and the decision function is

Similar to traditional ELM, the regularized weighted extreme learning machine produce suboptimal classification model due to random assignment of input weights and hidden neuron biases.

2.2 Artificial bee colony (ABC) algorithm

Artificial bee colony algorithm, proposed by Karaboga for numeric function optimization [36, 37], is inspired by the foraging behavior of bee swarms, which is a competitive and the latest swarm intelligence algorithm. Artificial bee colony consists of three groups: employed bees, onlooker bees and scout bees, in which the first two are of equal amount. To be specific, employed bees exploit the nectar sources in the hive and give information about the quality of the food source to the onlooker bees. Onlooker bees, according to the information shared by the employed bees, decide on a food source to exploit. Scout bees randomly search the environment in order to find a new food source when any food source is abandoned. After initialization phase, the search process of the employed bee phase, the onlooker bee phase and the scout bee phase is repeated until the goal is met or the iteration times are over maximum generation (MaxGen). In ABC algorithm, a possible solution to the optimization problem is expressed by the position of a food source.

ABC works by randomly initializing the positions of food sources searched by the employed bee or its corresponding onlooker bee. Each employed bee is associated with only one food source position. Food source \(x_i \) is generated using a uniform distribution as:

where \(i\in \left( {1,2\ldots ,SN} \right) and j\in (1,2,\ldots D)\). SN represents the number of food source and D represents the dimension of optimization parameters. \(x_j^{min} \) and \(x_j^{max} \) denote boundaries of jth dimension and rand(0,1) is a uniformly distributed random number in the range [0,1].

At first, all employed bees are set out to explore the food source positions. According to local information in its memory, an employed bee updates the position at \(v_i \) of a neighboring food source using following equation

\(k\in \{1,2\ldots ,SN\}\hbox { and }j\in \){\(1,2,\ldots D\}\) are randomly chosen index that has to be different from \(i. \emptyset _{i,j} \) is a uniformly distributed random number in the range [–1, 1].

Afterwards, a greedy selection strategy is applied. If the quality of the food source at \(v_i \) is superior to that of \(x_i \), the employed bee will memorize the new position \(v_i \) and abandon the old one \(x_i \), or the employed bee remains at the previous position. According to the information about the quality of the food source positions shared by the employed bees, onlooker bees either randomly decide on a food source to exploit or ignore according to probability(roulette selection strategy) defined as follows

where \(fitness_i \) represents the quality of the food source at positions \(v_i \) (the objective function of solution) and \(\hbox {fitness}_{best} \) represents the best quality of all food source. It is obvious that higher \(fitness_i \) enjoys higher probability of being selected by the corresponding onlooker bee.

These onlooker bees update the food source position around corresponding employed bees by Eq. (8) and randomly decide on a food source to exploit by Eq. (9). The number of inefficient searching iterations in the process of iterative optimization is written into the control parameter \(trial\left( i \right) \left\{ {i\in \left( {1,2\ldots ,SN} \right) } \right\} \) before better position of the each employed bee and corresponding onlooker bee is derived. If thei-th position of employed bee or onlooker bee is updated, \(trial\left( i \right) \) is set to zero; otherwise, it is added by one. The food source is abandoned and the corresponding bee becomes a scout bee if a food source is not updated for a fixed number limit. The abandoned food source is replaced by a randomly chosen food source by the Eq. (7).

3 Hybrid artificial bee colony optimization-based weighted extreme learning machine

An important paradigm of parameter optimizations for single layer feed-forward neural networks (SLFN) is evolutionary computation [46,47,48,49]. The representative methods using evolutionary computation to optimize ELM parameter is E-ELM [26], which adopts differential evolutionary method to select the hidden node parameters and uses ELM to calculate the output weights. However, E-ELM relies on the error function between the network approximate output and the expected output to evaluate all the populations. For balanced class distribution, these approaches work very well, but when faced with imbalanced data, error can cause the evolution of solutions biased toward the majority class. Yet, it is the performance on the minority class data that is usually of utmost importance. Moreover, while the WELM solves the L \(^{\wedge }\) 2-regularized weighted least squares problem and assigns more weight to minority class and less weight to majority to obtain better generalization performance, the randomly-chosen input weights and hidden neuron biases remain unchanged during the training phase and many non-optimal nodes may exist and contribute less to minimizing the cost function and the weight generated according to class distribution of training samples depends on input samples.

As mentioned above, combining ABC with DE algorithm can improve the performance of basic ABC. This paper aims to propose the HABC-WELM approach that can: (1) use HABC to train WELM to find optimal input weight, hidden bias and the weight assigned to training samples. (2) evolve classifiers in an imbalanced environment without relying on error (the hybrid artificial bee colony is driven by maximizing AUC rather than minimizing overall classification error rate during the training phase), (3) obtain better classification performance than WELM and (4) based on commonly used classification benchmark datasets, present a quantitative performance comparison with WELM, EN-WELM, DE-WELM, ABC-WELM, WSVM, RUSBoost, EsayEnsemble, OverBagging and UnderBagging.

DE algorithm relies on mutation operation constructing better solutions. After generating all the mutant vectors, a crossover operator is used to increase the diversities of the perturbed parameter vectors. The mutation operation of DE is based on the weighted difference of randomly sampled pairs of solutions in the population, and DE algorithm might lead to better perturbation than the strategies with only one weighted difference vector when two weighted difference vectors are added to the base vector. In addition, it is observed that the exploitation capability of ABC can be improved by compelling less fit solutions to follow the best ever found solution [47, 48]. We get the solution search equation of hybrid artificial bee colony (HABC) by combining crossover operation of DE with the best ever found solution of ABC.

where i, k, m is distinct integers randomly selected from the range [1, SN].\( x_{best,j} \) is the best solution in the current swarm. F is positive amplification factor and CR is the crossover rate. \(j_{rand} \) is a randomly chosen integer from [1,D].

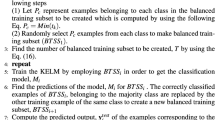

The hybrid artificial bee colony(HABC) described above is now applied to optimize input weights, hidden bias of network and the weight assigned to training samples in WELM. HABC is directly adapted as a training method for feed-forward networks where input weights, hidden bias of network and the weight assigned to training samples are encoded into position vector of food source. The detailed steps of the proposed method are as follow:

Firstly, food sources are randomly generated and composed of a set of NP vectors:

where \(\hbox {a}_j \) and \(b (j=1,\ldots ,L)\) are input weights vector and biases vector generated randomly from the uniform distribution within the range of [–1, 1] and [0, 1] respectively.\( w_{ii} (i=1,\ldots ,N)\) are the diagonal element of weight matrix generated according to class distribution of training samples. L denotes the number of hidden neurons and N denotes the number of samples, q is integer within the range of [1, NP].

Secondly, for each food source, the corresponding output weights of SLFN are computed according to Eq. (5). All employed bees are set out to explore the food source positions. According to local information in its memory, an employed bee updates the position at \(v_i \) of a neighboring food source according to Eq. (10). The quality of the food source is evaluated by a greedy selection strategy. In order to evaluate the fitness of each food source, it requires to select an appropriate evaluation metric as fitness function. G-mean is a measure of the ability of a classifier to balance sensitivity and specificity. Due to this symmetric nature of the distribution of the G-man over sensitivity and specificity, it’s hard to contrast different models in accordance with their precision on each class [49]. F-measure is the weighted harmonic mean of the sensitivity and precision. So the G-mean and F-measure criterion can lead to the selection of sub-optimal models. AUC is defined as a plot of a model’s true positive rate on the y-axis against its false positive rate on the x-axis, offering an overall measure of model performance, regardless of the different thresholds used. When class distribution is imbalanced, the area under the curve (AUC) is the better metric to measure performance of fitness function [50], So the AUC on the validation set is used as the fitness function to evaluate all the population and then WELM is used to tune better the candidate parameters obtained by HABC and move forward to the optimum.

Thirdly, with the fitness value of the i-th food source, the fitness of new position \(v_{i,j}^{\prime } \) corresponding to the current position \(x_{i,j} \) was computed according to Eq. (10). Based on the knowledge that smaller norm of output weights could lead to better generalization ability for ELMs [21, 51], the norm of output weights along with the AUC on the validation set are used as one more criterion evaluating food source. The work mainly focused on restricting the input weights and hidden biases to a reasonable range and assigning optimal weight to training data to improve the classification performance of WELM, thus the greedy selection operation can be expressed as Eq. (12).

where \(f\left( {v_{i,j}^{\prime } } \right) \) and \(f\left( {x_{i,j} } \right) \) are the corresponding fitness values for new position \(v_{i,j}^{\prime } \) and the current position \(x_{i,j } \) respectively. \(\Vert \beta _{v_{i,j}^{\prime } } \Vert \) and \(\Vert \beta _{x_{i,j} }\Vert \) are the norm of the corresponding output weights obtained by ABC-WELM when the input weights are set as the i-th food source, \(\in >0\) is a tolerance rate.

Finally, the above optimization process is repeated and parameters are progressively updated until the convergence criterion is reached or the maximum learning iterations are completed. Thus, the WELM network with the optimal input weights, hidden biases and the weight assigned to each class sample are obtained, and then the optimal WELM is applied to the test data.

4 Experiments

In this section, we observe the variance of AUC and norm of output weights during the training phase of proposed HABC-WELM approach. Afterwards, we present details of the comparison with WELM, EN-WELM, DE-WELM, ABC-WELM, WSVM, RUSBoost, EsayEnsemble, OverBagging and UnderBagging for 15 imbalanced datasets.

4.1 AUC and norm of output weights during the training process

In this subsection, different from the evaluation approaches adopted by most methods, where random permutation is used for each run, we use the same training, testing and validation data set in order to solely analyze the norm of output weights and AUC of four approaches where AUC is used as the fitness function in this subsection to better observe the variance of AUC and norm of output weights. 15 benchmark datasets [52,53,54] used in imbalanced problem are selected. Table 1 gives the characteristics of these datasets. The imbalance ratio(IR, defined as the number of minority class examples divided by the number of majority class examples) of datasets varies from 0.0077 to 0.7409. 10 runs are done to study the stability of three approaches with data unchanged, thus eliminating the performance fluctuation caused by different data partition.

All simulations are carried out by Matlab R2013b on a windows 10 with an Intel Core i7 CPU 4510U @ 2.80GHz an 8G RAM. The performance of the proposed HABC-WELM is evaluated in details with comparison to three WELM-based methods, namely the WELM, ABC-WELM and DE-WELM. The input weights, hidden bias and the weight assigned to training samples of DE-WELM are optimized by differential evolution algorithm. The input weights, hidden bias and the weight assigned to training samples of ABC-WELM are optimized by basic artificial bee colony algorithm. All WELM-based approaches were implemented with sigmoid activation function. There are two parameters to tune for WELM-based approaches, the trade-off constant \({\gamma }\) and the number of hidden nodes \(\hbox {L}\). \({\gamma }\) and \(\hbox {L}\) are searched in a wide range \(\left\{ {2^{-50},2^{-11}\cdots ,2^{50}} \right\} \) and \(\left\{ {10,20,\cdots ,500} \right\} \) respectively in seek of the optimal result. For DE-WELM, F(stepsize) and CR(crossover probability) are set to 1 and 0.8. Size of population is 50, and the maximum number of cycles is 100. For ABC-WELM and HABC-WELM, SN(the number of food sources) is 20, limit is 100 and the maximum number of cycles is 100.

We use G-mean as the evaluation metric to compare models in our case because of its balanced nature and usage in previous works [18]. In addition, G-mean is the trade-off between sensitivity and specificity, whereas the minority ones often represent the main class of interest in some scenarios. Therefore, to evaluate the effectiveness of the competing methods, we also give the results of sensitivity and specificity. The best average classification results in terms of G-mean and corresponding AUC, SN, SP are given in Table 2. For each evaluation metric, the standard deviation is also given after the ± sign.

Average AUC during the fittest evolved solution with DE-WELM (magenta line), ABC-WELM (blue line) and HABC-WELM (cyan line) over 100 independent runs. a Glass0, b Glass2, c Glass6, d Ionosphere, e Ecoli0, f Ecoli1, g Ecoli3, h Vehicle0, i Wisconsin, j Yeast1, k Yeast3, l Pima, m Abalone9vs18, n Abalone19, o Pageblock1 (Color figure online)

Average norm of output weights with DE-WELM (magenta line), ABC-WELM (blue line) and HABC-WELM (cyan line) over 100 independent runs. a Glass0, b Glass2, c Glass6, d Ionosphere, e Ecoli0, f Ecoli1, g Ecoli3, h Vehicle0, i Wisconsin, j Yeast1, k Yeast3, l Pima, m Abalone9vs18, n Abalone19, o Pageblock1 (Color figure online)

Figures 1 and 2 shows the effectiveness of the evaluation metric (AUC) and the variance of norm in DE-WELM, ABC-WELM and HABC-WELM during the training process. As the differential evolution algorithm, artificial bee colony algorithm and hybrid artificial bee colony algorithm are stochastic learning algorithms, each experiment is repeated ten runs using a different random seed. Therefore, Figs. 1 and 2 report the average AUC and norm of the fittest solution in the population over the 100 generations. The best results in terms of the G-mean, and corresponding AUC, SN, SP and standard deviation (STD) respectively.

Figure 1 shows that the AUC of three approaches increase smoothly over all datasets except for Ecoli0, which suggests that training is not an issue for most datasets. As can been seen from Fig. 1 and Table 2, the G-mean of these three methods are higher than those of WELM despite the fact that there is no variance for AUC of Ecoli0 over 100 generations in HABC-WELM, ABC-WELM and DE-WELM, which illustrates that the evaluation metric (AUC) evolves classifiers with best solution at the first generation. Although there are some fluctuations, a trend can still be observed from the curves in Fig 2, i.e., the norm of the output weights of the SLFN are decreasing in HABC-WELM and ABC-WELM for all datasets as well as in DE-WELM for seven datasets during the training process, which is consistent with the theory that the neural networks tend to have a better generalization performance with smaller norm of output weights. Besides, the norm of the output weights of the SLFN are increasing over 8 datasets in DE-WELM, but the testing G-mean are higher than those of the WELM over all datasets from Table 2, which suggests that the optimization of the network hidden node parameters is determined not only by the area under curve(AUC) but also by the norm of the output weights.

From Table 2, the HABC-WELM shows the best performance in terms of G-mean over 15 datasets. the testing G-mean and SN of the DE-WELM are higher than those of the ABC-WELM over glass0, yeast1, pageblock1. However, the testing G-mean and SN of the HABC-WELM are higher than those of the ABC-WELM and DE-WELM over all data sets. It can be seen that the HABC-WELM offers very competitive results compared with the original WELM, DE-WELM and ABC-WELM in terms of producing higher testing AUC, G-mean and SN as well as lower STD over most datasets. Particularly, the HABC-WELM outperforms the ABC-WELM in all tests.

According to the experimental results on all data sets, there is the same value of \(\gamma \) and no much difference in the value of L when WELM, DE-WELM and HABC-WELM obtain the best results in term of G-mean. Therefore, one can fix \(\gamma \) as the best value and tune L when selecting the parameter of model, thus the time for parameter selection has been largely shortened.

Since this simulation is solely aimed to analyze the variance of norm and AUC of three approaches in which AUC is used as the fitness function, only one training, testing and validation datasets are randomly generated at each trial of simulations according to Table 1. Therefore, the value in Table 2 may not be a true reflection of performance. More thorough tests are done in the subsection simulation later.

4.2 Performance evaluation of proposed HABC-WELM

Hereafter, once we have shown and selected our better proposal, we analyze whether the classification performance of our proposed method is better than that of previous methods. To thoroughly evaluate the performance of HABC-WELM, four WELM-based methods, WSVM and four popular ensemble learning based on SVM base classifier, such as RUSBoost, EsayEnsemble, overBagging, underBagging are tested synchronously. We use 15 data sets in Table 1. For above nine methods, the evaluation procedure consists of two cycles in order to avoid over-fitting for final generated classifier. In the inner cycle, the five-fold cross-validation approach(80% samples are extracted into original training set and the rest ones are used for testing each time. The original training set is randomly divided into two groups: training set(2/3) and validation set(1/3)) is used. Each algorithm based on WELM run 10 times considering that the sampling of subsets and input parameters of hidden nodes introduces randomness. The result is averaged over the five-fold. The outer cycle consists of 10 runs and the final result is averaged over 10 runs. In this way, the performance variance of all tests caused by different initial parameters and random partition of datasets can be both analyzed and the number of hidden neurons L and the trade-off constant \(\gamma \) in the WELM-based are chosen to ensure the use of optimal models. When gradually increasing the number of hidden nodes in preset region, the one with the highest test G-mean is selected as the suitable number of hidden nodes.The number of base classifier of EN-WELM is 10. SVM is implemented in LIBSVM. Resampling rate is set 5% in RUSBoost and EsayEnsemble. The number of iteration is set 10 in overBagging, underBagging, RUSBoost and EsayEnsemble. All other parameters for SVM is selected from the set \(\left\{ {10^{-10},\cdots ,10^{10}} \right\} \). The best average classification results in terms of G-mean and corresponding AUC, SN, SP are given in Table 3. For each evaluation metric the standard deviation is also given after the ± sign. In this table, the best results on each data set are emphasized in bold.

From Table 3, some conclusion can be drawn as follows:

Firstly, the results show that the performance of the proposed method is the best on 13 of 15 datasets for G-mean, and 8 of 15 datasets for SN. Secondly, compared with other WELM-based, the proposed method obtains the highest testing G-mean and SN except for EN-WELM over ionosphere. Apart from Glass2, Ecoli0, Ecoli3, Wisconsin and Yeast1, the SP of HABC-WELM increases significantly compared to WELM, which indicates that the proposed method can achieve better classification performance of both minority class and majority class on most datasets. Besides the fitness function (AUC) evolves classifiers with good performance on both the minority and majority class compared with WELM. In comparison with WELM, the STD of G-mean and SN caused by the random generation of input parameters in the proposed method has been reduced, which indicates that the proposed method is more stable than WELM. It can be seen that the HABC-WELM outperforms the original ABC-WELM. As what has been pointed out by many researchers, the solution search of ABC algorithm is good at exploration but poor at exploitation, which may result in premature convergence or stagnation. Therefore, incorporating the information of global best solution of ABC into the solution search equation to guide the search of new candidate solutions and exploiting the diversities of the perturbed parameter vectors of differential evolution may lead to better network generalization performances. Thirdly, compared with overBagging, underBagging, RUSBoost and EsayEnsemble, the proposed method achieves the best G-mean over 14 datasets and the best SN over 10 datasets.

We analyze whether the proposed method performs better than other methods. To do so, we apply Friedman test that ranks the algorithm in term of testing G-mean for each data set separately. The significance level is taken as 0.05. The null hypothesis is that all the algorithms are equivalent and so their average ranks should be equal. P-value is the probability of observing Friedman test statistic as extreme as, or more extreme than, the observed value under the null hypothesis. Small p-values casts doubt on the validity of the null hypothesis. Average ranks computed for Friedman test is shown in Table 4. The test outputs a p-value of 1.31e–14, which indicates that the hypothesis of equivalence can be rejected with high confidence. Hence, we continue a post-hoc test using Nemenyi test to identify which method significantly perform best, as suggested by Demasar [35, 55].

Figure 3 plots the ten methods against average performance ranks, where all methods are sorted according to their ranks. The ‘*’ denotes the respective average rank of each method and the line segment to the right of each method represents its critical difference, which means the methods whose ‘*’ on the right end of the line are outperformed significantly. The ‘\(\varvec{\downarrow }\)’ with vertical dotted line highlight the critical difference. The left vertical line and right vertical line is respectively associated with the best and the worst method. All methods right to this line perform significantly worse than this method and all methods left to this line perform significantly better than it. From Fig. 3, the proposed method is significantly better than another.

5 Conclusions

In this paper, a heuristic weighted extreme learning machine method named as HABC-WELM for imbalanced data classification was proposed, in which a hybrid ABC was used to optimize the input weights, hidden biases of WELM and the weight assigned to training sample. In the process of selecting the input weights and hidden biases, the metric applied to evaluate all populations is designed to AUC rather than the error between the network approximate output and the expected output. By 15 datasets, it has been demonstrated that the AUC metric is effective, and that the proposed method has better classification performance than the WELM, DE-WELM and EN-WELM, ABC-WELM, WSVM, RUSBoost, EsayEnsemble, overBagging and underBagging methods over most datasets. The future research works will include how to apply the proposed method to real-world imbalanced data mining applications such as identification of protein functional sites. Moreover, it is also interesting to extend current HABC-WELM to multi-class datasets and some real world applications.

References

Huang, G.B., Zhu, Q.Y., & Siew, C.K.: Extreme learning machine: a new learning scheme of feedforward neural networks. In: Proceedings of 2004 IEEE International Joint Conference on Neural Networks, Vol. 2, pp. 985–990. IEEE (2004)

Huang, G.B., Zhu, Q.Y., Siew, C.K.: Extreme learning machine: theory and applications. Neurocomputing 70(1), 489–501 (2006)

Huang, G.B., Zhou, H., Ding, X., Zhang, R.: Extreme learning machine for regression and multiclass classification. IEEE Trans. Syst. Man Cybern. Part B 42(2), 513–529 (2012)

Huang, G., Huang, G.B., Song, S., You, K.: Trends in extreme learning machines: a review. Neural Netw. 61, 32–48 (2015)

Savojardo, C., Fariselli, P., Casadio, R.: BETAWARE: a machine-learning tool to detect and predict transmembrane beta-barrel proteins in prokaryotes. Bioinformatics 29(4), 504–505 (2013)

Savojardo, C., Fariselli, P., Casadio, R.: Improving the detection of transmembrane \(\beta \)-barrel chains with N-to-1 extreme learning machines. Bioinformatics 27(22), 3123–3128 (2011)

Fan, Y.X., Shen, H.B.: Predicting pupylation sites in prokaryotic proteins using pseudo-amino acid composition and extreme learning machine. Neurocomputing 128, 267–272 (2014)

Lan, Y., Soh, Y.C., & Huang, G.B.: Extreme learning machine based bacterial protein subcellular localization prediction. In: IJCNN 2008, IEEE International Joint Conference on Neural Networks (IEEE World Congress on Computational Intelligence), pp. 1859–1863. IEEE (2008)

Czarnecki, W.M.: Weighted tanimoto extreme learning machine with case study in drug discovery. IEEE Comput. Intell. Mag. 10(3), 19–29 (2015)

Vong, C.M., Ip, W.F., Wong, P.K., Chiu, C.C.: Predicting minority class for suspended particulate matters level by extreme learning machine. Neurocomputing 128, 136–144 (2014)

Saraswathi, S., Sundaram, S., Sundararajan, N., Zimmermann, M., Nilsen-Hamilton, M.: ICGA-PSO-ELM approach for accurate multiclass cancer classification resulting in reduced gene sets in which genes encoding secreted proteins are highly represented. IEEE/ACM Trans. Comput. Biol. Bioinf. 8(2), 452–463 (2011)

Li, L.N., Ouyang, J.H., Chen, H.L., Liu, D.Y.: A computer aided diagnosis system for thyroid disease using extreme learning machine. J. Med. Syst. 36(5), 3327–3337 (2012)

Lin, S.J., Chang, C., Hsu, M.F.: Multiple extreme learning machines for a two-class imbalance corporate life cycle prediction. Knowl.-Based Syst. 39, 214–223 (2013)

Baradarani, A., Wu, Q.J., Ahmadi, M.: An efficient illumination invariant face recognition framework via illumination enhancement and DD-DTCWT filtering. Pattern Recognit. 46(1), 57–72 (2013)

Mohammed, A.A., Minhas, R., Wu, Q.J., Sid-Ahmed, M.A.: Human face recognition based on multidimensional PCA and extreme learning machine. Pattern Recognit. 44(10), 2588–2597 (2011)

Gao, X., Chen, Z., Tang, S., Zhang, Y., Li, J.: Adaptive weighted imbalance learning with application to abnormal activity recognition. Neurocomputing 173, 1927–1935 (2016)

Mirza, B., Lin, Z., Liu, N.: Ensemble of subset online sequential extreme learning machine for class imbalance and concept drift. Neurocomputing 149, 316–329 (2015)

Xia, S.X., Meng, F.R., Liu, B., Zhou, Y.: A kernel clustering-based possibilistic fuzzy extreme learning machine for class imbalance learning. Cognit. Comput. 7(1), 74–85 (2015)

Zong, W., Huang, G.B., Chen, Y.: Weighted extreme learning machine for imbalance learning. Neurocomputing 101, 229–242 (2013)

Liu, N., Wang, H.: Ensemble based extreme learning machine. IEEE Signal Process. Lett. 17(8), 754–757 (2010)

Cao, J., Lin, Z., Huang, G.B., Liu, N.: Voting based extreme learning machine. Inf. Sci. 185(1), 66–77 (2012)

Sharma, R., Bist, A.S.: Genetic algorithm based weighted extreme learning machine for binary imbalance learning. In: 2015 International Conference on Cognitive Computing and Information Processing (CCIP), pp. 1–6. IEEE (2015)

Dudek, G.: Extreme learning machine for function approximation-interval problem of input weights and biases. In: 2015 IEEE 2nd International Conference on Cybernetics (CYBCONF), pp. 62–67. IEEE (2015)

Zhang, N., Qu, Y., Deng, A.: Evolutionary extreme learning machine based weighted nearest-neighbor equality classification. In: 2015 7th International Conference on Intelligent Human-Machine Systems and Cybernetics (IHMSC), vol. 2, pp. 274–279. IEEE (2015)

Hu, K., Zhou, Z., Weng, L., Liu, J., Wang, L., Su, Y., Yang, Y.: An optimization strategy for weighted extreme learning machine based on PSO. Int. J. Pattern Recognit. Artif. Intell. 31(01), 1751001 (2017)

Zhu, Q.Y., Qin, A.K., Suganthan, P.N., Huang, G.B.: Evolutionary extreme learning machine. Pattern Recognit. 38(10), 1759–1763 (2005)

Batista, G.E., Prati, R.C., Monard, M.C.: A study of the behavior of several methods for balancing machine learning training data. ACM Sigkdd Explor. Newsl. 6(1), 20–29 (2004)

Estabrooks, A., Jo, T., Japkowicz, N.: A multiple resampling method for learning from imbalanced data sets. Comput. Intell. 20(1), 18–36 (2004)

Mazurowski, M.A., Habas, P.A., Zurada, J.M., Lo, J.Y., Baker, J.A., Tourassi, G.D.: Training neural network classifiers for medical decision making: the effects of imbalanced datasets on classification performance. Neural Netw. 21(2), 427–436 (2008)

Seiffert, C., Khoshgoftaar, T.M., Van Hulse, J., Napolitano, A.: RUSBoost: a hybrid approach to alleviating class imbalance. IEEE Trans. Syst. Man Cybern.-Part A 40(1), 185–197 (2010)

Liu, X.Y., Wu, J., Zhou, Z.H.: Exploratory undersampling for class-imbalance learning. IEEE Trans. Syst. Man Cybern. Part B (Cybernetics) 39(2), 539–550 (2009)

Galar, M., Fernandez, A., Barrenechea, E., Bustince, H., Herrera, F.: A review on ensembles for the class imbalance problem: bagging-, boosting-, and hybrid-based approaches. IEEE Trans. Syst. Man Cybern. Part C (Appl. Rev.) 42(4), 463–484 (2012)

Barandela, R., Valdovinos, R.M., Sánchez, J.S.: New applications of ensembles of classifiers. Pattern Anal. Appl. 6(3), 245–256 (2003)

Walsh, I., Pollastri, G., Tosatto, S.C.: Correct machine learning on protein sequences: a peer-reviewing perspective. Briefings Bioinf. 17(5), 831–840 (2015)

Arunkumar, N., Ram Kumar, K., Venkataraman, V.: Automatic detection of epileptic seizures using permutation entropy, Tsallis entropy and Kolmogorov complexity. J. Med. Imaging Health Inf. 6(2), 526–531 (2016)

Basturk, B., Karaboga, D.: An artificial bee colony (ABC) algorithm for numeric function optimization. IEEE Swarm Intell. Symp. 8(1), 687–697 (2006)

Karaboga, D., Gorkemli, B., Ozturk, C., Karaboga, N.: A comprehensive survey: artificial bee colony (ABC) algorithm and applications. Artif. Intell. Rev. 42(1), 21–57 (2014)

Jadon, S.S., Bansal, J.C., Tiwari, R., Sharma, H.: Accelerating artificial bee colony algorithm with adaptive local search. Memet. Comput. 7(3), 215–230 (2015)

Ozturk, C., Karaboga, D.: Hybrid artificial bee colony algorithm for neural network training. In: 2011 IEEE Congress on Evolutionary Computation (CEC), pp. 84–88. IEEE (2011) [34] Differential evolution-a simple and efficient heuristic for global optimization over continuous spaces

Storn, R., Price, K.: Differential evolution-a simple and efficient heuristic for global optimization over continuous spaces. J. Glob. Optim. 11(4), 341–359 (1997)

Stephygraph, L.R., Arunkumar, N.: Brain-actuated wireless mobile robot control through an adaptive human-machine interface. Adv. Intell. Syst. Comput. 397, 537–549 (2016)

Fernandes, S.L., Gurupur, V.P., Sunder, N.R., Arunkumar, N., Kadry, S.: A novel nonintrusive decision support approach for heart rate measurement. Pattern Recognit. Lett. https://doi.org/10.1016/j.patrec.2017.07.002 (2017)

Yu, T., Jan, T., Simoff, S., Debenham, J.: A hierarchical VQSVM for imbalanced data sets. In: 2007 International Joint Conference on Neural Networks, pp. 518–523. IEEE (2007)

Zhang, Z., Ou, J., Li, D., Zhang, S.: Optimization design of coupling beam metal damper in shear wall structures. Appl. Sci. 7(2), 137 (2017)

Pan, W., Chen, S., Feng, Z.: Automatic clustering of social tag using community detection. Appl. Math. Inf. Sci. 7(2), 675–681 (2013)

Liu, C., Li, Y., Zhang, Y., Yang, C., Hongbin, W., Qin, J., Cao, Y.: Solution-processed. undoped, deep-blue organic light-emitting diodes based on starburst oligofluorenes with a planar triphenylamine core. Chem. A Eur. J. 18(22), 6928–6934 (2012)

Pacifico, L.D., Ludermir, T.B.: Evolutionary extreme learning machine based on particle swarm optimization and clustering strategies. In: The 2013 International Joint Conference on Neural Networks (IJCNN), pp. 1–6. IEEE (2013)

López, V., Fernández, A., García, S., Palade, V., Herrera, F.: An insight into classification with imbalanced data: empirical results and current trends on using data intrinsic characteristics. Inf. Sci. 250, 113–141 (2013)

Bhowan, U., Johnston, M., Zhang, M.: Developing new fitness functions in genetic programming for classification with unbalanced data. IEEE Trans. Syst. Man Cybern. Part B 42(2), 406–421 (2012)

Bartlett, P.L.: The sample complexity of pattern classification with neural networks: the size of the weights is more important than the size of the network. IEEE Trans. Inf. Theory 44(2), 525–536 (1998)

Barandela, R., Sánchez, J.S., Garcıa, V., Rangel, E.: Strategies for learning in class imbalance problems. Pattern Recognit. 36(3), 849–851 (2003)

Shao, Y.H., Chen, W.J., Zhang, J.J., Wang, Z., Deng, N.Y.: An efficient weighted Lagrangian twin support vector machine for imbalanced data classification. Pattern Recognit. 47(9), 3158–3167 (2014)

Frank, A., Asuncion, A.: UCI Machine Learning Repository http://archive.ics.uci.edu/ml. School of Information and Computer Science, p. 213. University of California, Irvine, CA (2010)

Demšar, J.: Statistical comparisons of classifiers over multiple data sets. J. Mach. Learn. Res. 7, 1–30 (2006)

Sun, Z., Song, Q., Zhu, X., Sun, H., Xu, B., Zhou, Y.: A novel ensemble method for classifying imbalanced data. Pattern Recognit. 48(5), 1623–1637 (2015)

Acknowledgements

This study was funded by National Key Technology Science and Technique Support Program (No. 2013BAH49f03).

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Research Involving Human Participants or Animals

This article does not contain any studies with human participants or animals performed by any of the authors.

Informed Consent

Informed consent was obtained from all individual participants included in the study.

Rights and permissions

About this article

Cite this article

Tang, X., Chen, L. Artificial bee colony optimization-based weighted extreme learning machine for imbalanced data learning. Cluster Comput 22 (Suppl 3), 6937–6952 (2019). https://doi.org/10.1007/s10586-018-1808-9

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10586-018-1808-9