Abstract

Many areas of the natural and social sciences involve complex systems that link together multiple sectors. Integrated assessment models (IAMs) are approaches that integrate knowledge from two or more domains into a single framework, and these are particularly important for climate change. One of the earliest IAMs for climate change was the DICE/RICE family of models, first published in Nordhaus (Science 258:1315–1319, 1992a), with the latest version in Nordhaus (2017, 2018). A difficulty in assessing IAMs is the inability to use standard statistical tests because of the lack of a probabilistic structure. In the absence of statistical tests, the present study examines the extent of revisions of the DICE model over its quarter-century history. The study finds that the major revisions have come primarily from the economic aspects of the model, whereas the environmental changes have been much smaller. In particular, sharp revisions have occurred for global output, damages, and the social cost of carbon. These results indicate that the economic projections are the least precise parts of IAMs and deserve much greater study than has been the case up to now, especially careful studies of long-run economic growth (to 2100 and beyond). Additionally, the approach developed here can serve as a useful template for IAMs to describe their salient characteristics and revisions for the broader community of analysts.

1 Introduction

Many areas of the natural and social sciences involve complex systems that link together multiple physical or economic sectors. This is particularly true for climate change, which has strong roots in the natural sciences and requires social and policy sciences to solve in an effective manner. As understanding progresses across the different fronts, it is increasingly necessary to link together the different areas to develop effective understanding and efficient policies. Integrated assessment analysis and models play a key role here. Integrated assessment models (IAMs) can be defined as approaches that integrate knowledge from two or more domains into a single framework. These are sometimes theoretical but increasingly involve computerized dynamic models of varying levels of complexity.

One of the earliest IAMs for climate change was the DICE/RICE family of models, developed starting in 1989 and published in Nordhaus (1992b, 1994). The DICE (Dynamic Integrated model of Climate and the Economy) and RICE (Regional Integrated model of Climate and the Economy) models have gone through several revisions since their first development. An intermediate version was Nordhaus (2008). The latest published version is DICE-2016R2 (Nordhaus 2017, 2018), with a complete description of the penultimate model in Nordhaus and Sztorc (2013). Since the earliest versions, the DICE model has been through many iterations, incorporating more recent economic and scientific findings and updated economic and environmental data.

One of the major shortcomings of IAMs is that their structure makes it extremely difficult to use standard econometric techniques to assess their reliability—a feature that is shared with earth systems models and other large simulation models. In the absence of statistical tests, the present study examines the extent and area of revisions of the DICE model from its earliest publication in 1992 to its latest version published in 2017 and 2018. This retrospective gives a flavor for changes in the underlying economic and earth sciences, data revisions, correction of mistakes, and the pure passage of time. Also, for those estimates that have included estimated errors in past studies, it is possible to compare the actual revisions with the estimated errors.

Additionally, the present study provides an example of how modelers might present the salient characteristics of their models. It might be useful to establish guidelines for analyses that IAMs should routinely present—such as the retrospective studies and salient assumptions and outputs presented below—along with a standardized format.

Before presenting the details, I will highlight the results. The major revisions in the modeling have come from the economic sectors, whereas the environmental changes have been much smaller. Regarding elementary inputs, future global output was revised upwards massively over the years. Estimates of 2100 global output have been revised up by a factor of 3½ since the 1990 period. The second major revision has been in the damage function, which has been revised upwards by 60%. None of the other major input variables or functions have had anywhere near those levels of revisions. (The reasons for the revisions will be discussed below.)

In terms of major output variables, the major revision occurred for the estimate of the social cost of carbon, SCC. (Note that the calculations of the SCC are always the marginal damage along the actual emissions and damage path, not the optimized path.) The estimate of the 2015 SCC increased from $5 to $31 per ton of CO2. As we will see below, this was the result of compounded revisions in driving variables. Most of the environmental variables had relatively small revisions and ones that were within the estimated error bounds. For example, 2100 temperature increase was originally 3.2 °C and was revised upwards to 4.3 °C in the latest version. Industrial emissions were revised downward over the years.

2 Methods and results

2.1 Methods

The approach to determining the impact of revisions was the following. I began with the 1992 GAMS version of the DICE model (“DICE.1.2.3”; see Note 1). Fortunately, the 1992 GAMS code was compatible with the 2017 software, so it could be run to duplicate the 1992 results. In the first estimates shown here, I will simply compare the estimates or projections in the two models. In the last section, I will show the sources of the changes (see Note 2).

It will be useful to review what is assumed in the baseline or uncontrolled runs. These are based on a Ramsey-Koopmans-Cass optimal growth model with limited carbon fuels and uninternalized climate damages. The trajectory of environmental and economic variables is calculated by optimizing the savings rate and the allocation of carbon fuels while excluding damages from output and utility. The damages are then subtracted after output and emissions are determined in the uninternalized path.

Note as well that virtually all studies reviewed here are deterministic models. Other approaches (such as “stochastic DICE” referred to later) look at the results with uncertain parameters. I note that stochastic DICE has some results that are substantially different from deterministic DICE.

2.2 Results for 2015

It is useful to examine the model projections for 2015. These are historical data in the latest version (subject of course to revisions) but are projections in the earliest version. The 1992 version used data from the mid-1980s, so the projections to 2015 can be seen as 30-year-ahead forecasts. Table 1 shows the projections and actual values for 2015. The errors were large in many areas. The first column shows the estimates for 2015 in the 1992 model, while the second column shows the estimates for 2015 from the 2017 model, which are actual data. The third numerical column shows the change from 1992 to 2017, which is the forecast error in the 1992 model.

Output and population were underestimated substantially. Emissions and other forcings were overestimated because the rate of decarbonization was underestimated, and in the case of forcings, concentrations of CFCs were vastly overestimated in the baseline estimates. CO2 concentrations were correctly projected, while temperature was overestimated (as with most earth system models). The largest error was the social cost of carbon (SCC), which was hugely underestimated because the different factors compounded. An interesting note is that the SCC was not calculated in the early version of the model and was first introduced in the 2008 version, so estimates of the SCC for early versions are retrospective estimates.

2.3 Projections for 2100 (see Note 3)

Table 1 provides a guide to the errors that arise in IAMs like the DICE model, and it also shows how model histories can track errors when models have a sufficiently long history. Table 2 shows the estimated total revisions between 1992 and 2017 for major variables.

The first three columns are similar to those in Table 1. The last two columns show estimated uncertainties (measured as standard deviations) from two studies of uncertainty (Nordhaus 2008, 2018). The first estimate used the 2008 DICE model and made estimates of uncertainty for several variables. These are shown in the uncertainty column labeled “2008.” The second study calculated the standard deviation of the variables using the DICE-2016R2 model and is shown as “2017.”

The pattern of revisions for 2100 shown in Table 2 is similar to the pattern of errors in Table 1 for 2015. The most striking revision in the driving variables is a massive upward revision in world GDP. A major part of this revision comes from moving from market exchange rates (common until about 2000) to purchasing power parity exchange rates (which should be, but are not, universal today). A second change is that early versions of the DICE model, as well as other energy-economy models, were based on estimates that had a strong stagnationist bias, with sharply falling productivity growth after 2025 (see Nordhaus and Yohe 1983, Table 2.7). Factors driving emissions and forcings, by contrast, were revised downward sharply.

If we look at the bottom group of variables in Table 2, we see an interesting pattern. Emissions, concentrations, and forcings were underestimated, but by a relatively small fraction. However, economic variables such as output, damages, and the SCC were massively underestimated. This finding, that the economic variables were the major sources of uncertainty, is one of the most striking results of the current retrospective. A further set of comparisons is presented in a section on model comparisons below.

2.4 Uncertainty estimates

Additionally, it is useful to determine whether estimates of the forecast errors were helpful in understanding the potential forecast errors. Systematic studies of forecast errors using Monte-Carlo-type techniques were undertaken for the 2008 model and the 2016R2 model. The latter set is more comprehensive but has the drawback of consisting of retrospective error estimates.

Table 3 shows the “error forecast ratio,” which is the ratio of the change in forecasts between 1992 and 2017 relative to the estimated forecast uncertainty (measured as the standard deviation from the Monte-Carlo estimates). Conceptually, these are similar to t-ratios although they do not have a formal probabilistic structure.

Here is a more precise description. Designate $x_i(T, t)$ as the observation or projection of variable $x_i$ for a future period $T$ when estimated at date $t$. Using this notation, consider the final prediction error at the end of the period, 2100. The prediction error in 2100 that is made by the 1992 model is $[x_i(2100, 2100) - x_i(2100, 1992)]$. We can decompose this as follows:

$$[x_i(2100, 2100) - x_i(2100, 1992)] = [x_i(2100, 2100) - x_i(2100, 2017)] + [x_i(2100, 2017) - x_i(2100, 1992)].$$

The first term cannot be determined until 2100, but the second term can be determined in 2017.

Table 3 shows the second of the two terms, the term that can be determined today. Because this calculation omits the first term, this implies that the ultimate forecast error may well be larger than the second term, so the ratio shown in Table 3 is likely to be an underestimate of the ultimate error forecast ratio.
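As a rough illustration of this bookkeeping, the following Python sketch computes the observable revision term and the corresponding error forecast ratio. The temperature figures (3.2 °C and 4.3 °C) are the ones quoted in the Introduction; the standard deviation of 1.0 °C is a placeholder assumption rather than a value from Table 3, and the function names are hypothetical.

```python
def observable_revision(x_latest: float, x_original: float) -> float:
    """Second term of the decomposition: x_i(T, 2017) - x_i(T, 1992)."""
    return x_latest - x_original


def error_forecast_ratio(x_latest: float, x_original: float, forecast_sd: float) -> float:
    """Revision divided by the estimated forecast standard deviation."""
    if forecast_sd <= 0:
        raise ValueError("forecast_sd must be positive")
    return observable_revision(x_latest, x_original) / forecast_sd


# 2100 temperature increase: 3.2 °C in the 1992 model, 4.3 °C in the latest version.
temp_1992, temp_2017 = 3.2, 4.3
assumed_sd = 1.0  # ASSUMPTION: illustrative only, not taken from Table 3

revision = observable_revision(temp_2017, temp_1992)
ratio = error_forecast_ratio(temp_2017, temp_1992, assumed_sd)
print(f"revision = {revision:.1f} °C, error forecast ratio = {ratio:.1f}")
```

Because the unobservable first term is left out, a ratio computed this way should be read as covering only the revision to date, in line with the caveat above.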

Examining the error ratios in Table 3, we see that most of the error ratios are less than 1. The largest error ratios are on the order of 1 and occur for temperature, per capita output, output, damages, and the SCC. These estimates indicate that while the projection errors are in some cases very large, large errors are expected because of the magnitude of the parametric uncertainty and the structure of the DICE model. In other words, given the large uncertainty about output growth, it is not surprising that there is a corresponding large uncertainty about emissions growth; the same is true of the SCC. We therefore should not be surprised that output and SCC estimates have been substantially revised in the last quarter-century, and we should anticipate major revisions in the future.

3 Decomposition of the changes

A next question is the source of the changes for the projections of different variables with respect to revisions in the model structure and the economic and environmental data. The approach is straightforward in principle but complicated in practice. It involves starting with the earliest version of 1992 and then introducing model and data differences between 1992 and 2017 models one step at a time. We then evaluate the impact on different variables at each step. We can thereby determine the size of the revisions for the important variables along with the sources of the revisions. There is, of course, some ambiguity in this approach to the extent that there are interdependencies among revisions. However, most of the step-by-step changes come in a natural order, so the results are likely to be insensitive to ordering.
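The step-by-step procedure can be sketched in a few lines of Python. The toy model below (output times emissions intensity) and its parameter values are purely hypothetical stand-ins, not DICE equations or DICE data; the sketch only shows how each step's contribution is recorded and how the attribution can shift with ordering when revisions interact.

```python
from typing import Callable, Dict, List, Tuple

Params = Dict[str, float]


def sequential_decomposition(
    model: Callable[[Params], float],
    old: Params,
    new: Params,
    order: List[str],
) -> List[Tuple[str, float]]:
    """Switch parameters from old to new one at a time, in the given order,
    and record the change in the model output caused by each step."""
    current = dict(old)
    previous_value = model(current)
    contributions = []
    for name in order:
        current[name] = new[name]
        value = model(current)
        contributions.append((name, value - previous_value))
        previous_value = value
    return contributions


def toy_emissions(p: Params) -> float:
    # HYPOTHETICAL toy model: emissions = output * emissions intensity.
    return p["output"] * p["sigma"]


old = {"output": 100.0, "sigma": 0.50}  # made-up "early vintage" inputs
new = {"output": 300.0, "sigma": 0.25}  # made-up "late vintage" inputs

forward = sequential_decomposition(toy_emissions, old, new, ["output", "sigma"])
reverse = sequential_decomposition(toy_emissions, old, new, ["sigma", "output"])
total = toy_emissions(new) - toy_emissions(old)
print(forward, reverse, total)
```

In both orderings the contributions sum to the same total change, but the amount attributed to each parameter differs. This is the ambiguity that arises when revisions are interdependent.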

Table 4 shows the adjustments made step by step. We label the changes as being different “versions” marked by vj. Some of the steps or versions are trivial or make checks and will not be included in the discussion below. It is important to note that the sequence is a logical progression and not a temporal set of steps. Some of the earliest steps (such as the change to 2010$) came at the end, while there were several changes in the carbon cycle modeling in the intervening years.

It will be useful to show two important examples. Table 5 shows the decomposition for the social cost of carbon for 2015. This, it will be recalled, has the largest single revision. The change comes from multiple variables. The largest contributor is the revision in the treatment of the carbon cycle, while the others are primarily economic variables such as the damage function and the utility function (see Note 4). Except for emissions intensity, all the revisions were upward.

Table 6 shows a similar calculation for 2100 temperature increase. The total change here is much smaller, with the largest contributor being the carbon cycle. Most of the other changes were modest and were both positive and negative. (The line “DICE-2016R2” refers to all other contributing factors that were not individually estimated.)

Tables 7 and 8 show the complete set of revision results for the outcomes in years 2015 and 2100. The first column is the replication of the results for DICE-1992. The second column (v6) updates the price level to that in the current study and is the version used for the endpoint comparisons in the prior sections.

The changes for selected variables and non-trivial changes are displayed in Figs. 1 and 2. Figure 1 shows the decomposition of the changes for variables in 2015, while Fig. 2 shows the same for variables in 2100.

Fig. 1 Changes in estimates for variables in 2015. The figure shows the level of the variable for each version. For example, moving from 1989 $ to 2010 $ did not affect emissions but doubled output in nominal dollars. Three changes included in Tables 7, 8, and 9 are omitted as they had virtually no impact on any of the graphed variables. Interpretation of legend on horizontal axis: 1965$ = DICE-1992 model (in 1989 $); 2010$ = DICE-1992 model (reflated to 2010$); Q, K = adjustment for estimated output, capital in 2015; E, M = adjustment for estimated emissions and concentrations in 2015; DamF = change to 2017 damage function; TFP = change to level and growth of TFP (productivity) in 2017 model; Sigma = change to level and growth of global CO2/output ratio in 2017 model; Ufn = change to 2017 utility function; CCyc = change to 2017 carbon cycle and parameters; D2016R2 = rest of changes to full DICE-2016R2 model

Fig. 2 Changes in estimates for variables in 2100. The figure shows the level of the variable for each version. For example, moving from 1989 $ to 2010 $ had no effect on emissions but doubled output. For the meaning of the legend on the horizontal axis, see Fig. 1

In examining the revisions shown in Tables 7 and 8, the sources of the changes differ by variable. Here are some of the highlights. In this discussion, I will ignore the first line in the table, which is simple price-level change. In each of these decompositions, I examine what changes in model design or data led to the major changes in the output variable from 1992 to 2017.

- If we look at the change in 2015 global output due to model changes, it is not surprising that most of the change came from the adjustment of the level of 2015 output. Other net changes for 2015 output were minor.

- The major environmental variables for 2100 were relatively stable. Emissions and concentrations wobbled around with revisions, but there were only minor net changes. These were stable in part because the mechanisms that drive these processes were relatively well understood in the 1990s and partially because there are no ambiguities in how to measure the variables.

- The huge increase in projected 2100 global output was partially because of the upward revision in the base 2015 output, but primarily because of a major change in projected productivity growth. For output, there are both measurement and technological issues. It is clear that the mechanism underlying productivity growth is non-stationary, which makes forecasting particularly difficult.

- Most changes in economic variables are driven by upward revisions in the measures of output and TFP (productivity) growth, as discussed above.

4 External validation of DICE model

One of the major concerns with DICE and other IAMs is the difficulty of statistical estimation and validation of the models. This has been a long-standing problem with IAMs as well as other large-scale models, such as earth-systems models and ecological models. I address this by examining potential statistical approaches and model comparisons.

4.1 Statistical approaches

One potential approach to validation is to use time-series methods to estimate IAMs and to test their forecast errors. This is the gold standard, for example, in economic models of business cycles and consumption behavior.

The statistical approach has not proven useful in IAMs and similar models. Because the models make projections into the deep future, it is not feasible to find a reliable approach to estimating the relationships from appropriate historical or cross-sectional data. Moreover, some elements, such as damages, have no useful historical observations because the future climate is projected to be far from the past. Additionally, some of the elements, such as the optimization structure, have no obvious empirical counterparts. Economy-climate models have a structure similar to that of earth systems models, which also have been unable to draw upon standard statistical techniques for estimation and validation.

Econometricians have explored the issues of statistical estimation of calibrated models. Most of the work applies to dynamic stochastic general equilibrium models. For a general treatment, see Hansen and Heckman (1996) or Fukač and Pagan (2010). There have been virtually no successful examples of the use of statistical calibration techniques for IAMs. One exception is the research of Dale Jorgenson and colleagues on their IGEM model. In their case, they have been able to estimate one part of the IGEM model (the demand structure) but not the other modules (see Goettle et al. 2007). Several studies have estimated the uncertainties associated with IAMs, such as Gillingham et al. (2018). However, these studies are designed to provide forecast errors of the projections and do not attempt to estimate the structure of the underlying model. The bottom line here is that, to date, researchers have not developed approaches to applying classical statistical time-series techniques to estimation and testing of IAMs.

4.2 Model comparisons as validation

An alternative approach is to examine the results of alternative models as a way of validating models. In contrast to statistical approaches, there is a long history of model comparisons for IAMs. One of the first, if not the first, model comparisons of energy models was undertaken in the late 1970s during the National Academy of Sciences’ CONAES (Committee on Nuclear and Alternative Energy Systems) project (National Research Council 1979). This project grew into the current Energy Modeling Forum at Stanford University, which has undertaken 34 projects, including several comparing energy models and IAMs.

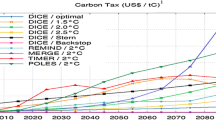

I have examined the results of the DICE-2016 model and compared that with other models that have been compiled in model-comparison studies. Table 9 shows the trajectories of four important environmental variables (emissions, concentrations, forcings, and temperature) over the long period for six different sets of projections. The first two lines, from around 1990, are the first IPCC Assessment and the first DICE model. The third line is the latest DICE model. The next three are three alternative recent projections.

More precisely, the first line of Table 9 was from the IPCC reports of approximately the same time as the first DICE model (IPCC 1990, 2014). The first IPCC report had a “business as usual” scenario that is comparable to the DICE model baseline. The fifth report (IPCC 2014) used alternative approaches. One approach was representative concentration pathways, for which the RCP 8.5 is the closest to a business-as-usual case. Another was the shared socioeconomic pathways (SSPs), which used different models. The estimate in Table 9 for the SSPs uses the average of the baseline calculations for the five SSPs. A final comparison in Table 9 is the five-model average from the MUP study from Gillingham et al. (2018), which was harmonized with the DICE model. Here are the major results looking at Table 9:

- The 1990–92 projections for 2015 are reasonably close to the mark. Actual CO2 concentrations in 2015 were 401 ppm, which is very close to the DICE-1992 estimate and lower than the IPCC estimate. The projected increase in global temperature was reasonably accurate, being 0.01 °C high for the IPCC and 0.06 °C high for DICE-1992.

- Two major errors stand out in the early studies. One projection error was 2015 CO2 emissions, which were substantially overestimated in the 1990–92 period. The other was a large overestimate of total radiative forcings because of the huge overestimate of the contribution of CFCs in the early estimates, before the Montreal Protocol had proven effective.

- We see that emissions projections for 2100 have largely been revised downward in later projections. This reflects primarily an underestimate of decarbonization in early studies. Temperature increase has generally been revised upward, reflecting an upward revision of the equilibrium temperature sensitivity from the earliest studies. The major downward revision in forcings from the 1990 IPCC study has been due to the phaseout of CFCs.

Table 10 shows another set of calculations, focusing on projections for 2100. This has only the most recent model calculations and is intended to determine whether the most recent DICE model is consistent with the projections of other IAMs. This table provides estimates from the deterministic DICE-2016, estimates from a stochastic version of DICE-2016 with five uncertain parameters (from Nordhaus 2018), the MUP study just discussed, the AMPERE survey of models described in Kriegler et al. (2015), the SSP baselines described in Table 9, and the Energy Modeling Forum project #27 summarized in Blanford et al. (2014), which was yet another multi-model comparison.

Table 10 shows two outcome variables for 2100 (concentrations and temperature) and two measures (mean and a measure of variability). The measure of variability is an “ensemble” variability, which is the range of the models, and a Monte-Carlo (5, 95) percentile range for the DICE-stochastic estimates. It should be noted that the other models are of different vintages (from 2010 to 2015), so the comparisons are not perfect.

It is useful to explain the different measures of variability in Table 10. The bracketed results for “DICE stochastic” are the 5th and 95th percentile of a Monte-Carlo simulation. For all others, the measure is the “ensemble range.” The ensemble measure of dispersion looks at the differences among the mean forecasts or models. Ensemble dispersion is the approach often taken by the IPCC and others in the absence of statistical measures. For example, the bracketed range of 2100 CO2 concentrations for the five SSP baseline scenarios is [606–1089] = 483 ppm. We can compare ensemble dispersion with standard statistical measures. If a variable is normally distributed, then the expected value of the ensemble range is 2.3 SD (standard deviations) for five observations and 3.1 SD for ten observations. The measure of variability for stochastic DICE (5–95 percentile) is 3.3 SD. This calculation suggests that the dispersion estimates in Table 10 correspond to roughly 2 to 3 standard deviations of the variable.
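The three figures quoted above (2.3, 3.1, and 3.3 SD) can be checked numerically. The sketch below is a minimal Python check under the same normality assumption made in the text; it uses the standard identity that the expected range of n independent draws equals the integral of 1 − F(x)^n − (1 − F(x))^n, and the function names are hypothetical.

```python
from statistics import NormalDist


def expected_range_in_sd(n: int, lo: float = -8.0, hi: float = 8.0, step: float = 0.001) -> float:
    """Expected (max - min) of n independent standard normal draws,
    from E[R_n] = integral of 1 - F(x)^n - (1 - F(x))^n dx (midpoint rule)."""
    cdf = NormalDist().cdf
    total = 0.0
    steps = int(round((hi - lo) / step))
    for k in range(steps):
        x = lo + (k + 0.5) * step
        f = cdf(x)
        total += (1.0 - f**n - (1.0 - f) ** n) * step
    return total


def percentile_band_in_sd(lower: float = 0.05, upper: float = 0.95) -> float:
    """Width of a percentile band of a normal variable, in SD units."""
    z = NormalDist().inv_cdf
    return z(upper) - z(lower)


print(round(expected_range_in_sd(5), 1))   # 2.3
print(round(expected_range_in_sd(10), 1))  # 3.1
print(round(percentile_band_in_sd(), 1))   # 3.3
```

Dividing a reported ensemble range by the matching factor gives a rough implied standard deviation, which is one way to put ensemble ranges and percentile bands on a common footing.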

Here are the major points contained in Table 10:

- For levels of CO2 concentrations, the baseline DICE-2016 is in the middle of the results of other IAMs. However, the mean estimated concentrations for stochastic DICE-2016 are considerably higher than other estimates because of the thick tail of output due to high uncertainty of output growth. This result is extensively discussed in Nordhaus (2018).

- For the dispersion of CO2 concentrations, stochastic DICE has much higher dispersion. The reason is that all other estimates use ensemble variation as a measure of dispersion, and this appears to be biased downward: Gillingham et al. (2018) showed that structural estimates (such as used for stochastic DICE) have much higher dispersion than ensemble estimates.

- For the level of temperature increase, DICE-2016 is again in the middle of the pack, with two of the other estimates above and two below. There is a relatively small 2100 temperature divergence between baseline DICE-2016 and stochastic DICE-2016. The small difference arises because of the non-linearity of the temperature-concentration function and the inertia of temperature behind concentrations.

- For the dispersion of temperature increase, stochastic DICE-2016 again shows a higher range than the ensemble variation. The range for stochastic DICE is 3.2 °C (for the 5–95 percentile range) while the ensemble ranges are from 0.6 to 2.1 °C.

Tables 9 and 10 suggest that the deterministic version of DICE-2016 shows no major differences from the center of gravity of other IAMs for major environmental variables (emissions, concentrations, forcings, and temperature). This pattern indicates modeling convergence as of the 2015–2017 period, with most of the major models having a similar trajectory. The major difference will appear in the uncertainty of the trajectories, for which there has been relatively little focus up to now.

5 Conclusions

This study analyzes the changes in the DICE model of the economics of climate change over the last quarter century. Over that period, the central analytical structure of the model has remained the same, while most of the components have been revised in small or large ways, and there have been major revisions and improvements in most of the underlying data.

Before summarizing the results, it may be useful to reflect on the usefulness of the methods developed in this study for the broader IAM community. As of today, there are dozens of IAMs around the world. It is extremely challenging for other modelers as well as for consumers of IAM studies to understand the history and characteristics of different IAMs. The present study might serve as a model for the IAM community to identify the salient characteristics of each IAM as well as to provide a diagnostic tool, particularly for long-lived IAMs, for examining its history and revisions.

For example, the kinds of statistics shown in Tables 1 and 2 should be routinely available for each IAM (modified and augmented appropriately). While such outputs are often uncovered in model comparisons, a better approach would be to have the salient model characteristics regularly provided in a standardized format. Additionally, a retrospective analysis of model changes over its history can help other modelers and the broader community understand how much models have changed and the sources of changes. While the present study is only a demonstration of what is possible, regular information on salient model characteristics and occasional retrospective analyses should be best practice for integrated assessment models.

Concerning substance, the major message of the study is simple. The projections of most environmental variables (such as emissions, concentrations, and temperature change) have seen relatively small revisions (with the emphasis here on relatively). However, there have been massive changes in the projections of the economic variables, including those that were forecast in 1992 and have now been realized in 2017. The stability of the environmental variables largely reflects the fact that these processes were relatively well-understood by the early 1990s, and, therefore, modeling of these components within IAMs could be based on a solid scientific foundation.

By contrast, the dominant underlying change in the results of this IAM has been in the economic sectors, particularly in the measurement or prospect of current and future global output per capita. A useful example is the revision in global output for 2015. The level of 2015 output (in 2010$) was revised upwards by 35% over the period. Most of this was conceptual, involving the change from market exchange rates to purchasing power parity. The major revision in the 2100 outlook for output was a change from the stagnationist view of global growth in the 1980s and 1990s to a view of continued rapid growth today. This change can be seen by comparing the survey in Nordhaus and Yohe (1983) with that of Christensen et al. (2018). As a result of these two changes, projected 2100 output per capita was revised upward by a factor of 3½ over the period. This major upward revision drove all economic variables, including damages and the social cost of carbon.

A further major revision has been in the damage function. There was no established aggregate damage function in the early 1990s, and this module of the DICE model was cobbled together based on very rudimentary primary information.

Another large change has been in the estimated rate of decarbonization, where the revisions have been to lower emissions per unit output over the period. This was largely due to the upward revision in the growth of output (which was not well measured) compared to a stable estimate of emissions (which was relatively well measured).

Perhaps the most dramatic revision has been the social cost of carbon (SCC). The SCC for 2015 has been revised upwards from $5 to $31 per ton of CO2 over the last quarter-century. This is the result of several different model changes as shown in Table 5. While this large a change is unsettling, it must be recognized that there is a large estimated error in the SCC. The estimated (5%, 95%) uncertainty band for the SCC in the 2016R2 model is $6 to $93 per ton of CO2. This wide band reflects the compounding uncertainties of the temperature sensitivity, output growth, damage function, and other factors. Moreover, it must be recognized that analyses of the social cost of carbon were not widespread until after 2000. Finally, estimates of the SCC are highly variable across models and specifications and have increased substantially over the last quarter-century. If we take early estimates of the SCC from two other well-known models (PAGE and FUND), these were close to estimates in the DICE-1992 model.

A final result concerns the estimated uncertainty of the estimates. Because IAMs have a non-statistical structure, it is difficult to estimate the uncertainties associated with their forecasts. While a complete comparison is not available, the actual errors to date (measured as forecast revisions) are reasonably within the estimated error bands. This suggests that studies of the uncertainties of IAM projections are an important companion to standard projections as a way of signaling the reliability of different projections (a recent multi-model study of uncertainty is in Gillingham et al. 2018). However, the standard approach of using ensemble uncertainty (uncertainty across models) as an estimate of forecast uncertainty is likely to be unreliable and may underestimate actual uncertainty dramatically.

Both earlier studies and the results of this retrospective indicate that the economic components and projections are the least precise and the most deserving of future study. This applies especially to studies of long-run economic growth (to 2100 and beyond). Aside from climate-change policies, uncertainties and revisions about economic growth are likely to be the major factors behind changing prospects for climate change in the years to come.

Notes

The “1” indicates that it is a one-region model; the “2” indicates that it is the second major version; and the “3” indicates that it uses the third-round estimate of the data. Documentation for this version is contained in Nordhaus (1992b).

It is instructive to note that the task of converting models is not always trivial. The earlier model was in 1989 US dollars at market exchange rates, while the latest model was in 2010 US international dollars. If we look at the US price index for GDP, the ratio of 2010 to 1989 prices is 1.57. However, this is not representative of the world because of the changing composition of output and growth rates of different countries. If we take the ratio of real to nominal GDP for the IMF data for market exchange rates, the ratio is 1.52 for 1985 (the last year with actual data for the 1992 model). The IMF’s calculation of the global price-level change from 1989 to 2010 is 2.02 for the PPP concept and 1.70 for the MER concept. We have taken a reflator of 2.0 to represent the PPP concept. This adjustment is only important for the first step in the process (v6). For the second step, which adjusts to 2015 levels of output, the reflator becomes irrelevant.
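
The sensitivity of the conversion to the choice of reflator can be illustrated with a minimal sketch. The price-level ratios are the ones quoted in this note; the function name `reflate`, the dictionary keys, and the base value of 100 are hypothetical and used only for illustration. This is not code from the DICE model.

```python
# Price-level ratios (2010 relative to 1989) quoted in the note above.
# The dictionary keys are illustrative labels, not names used in the DICE model.
REFLATORS_1989_TO_2010 = {
    "us_gdp_deflator": 1.57,  # US price index for GDP
    "imf_mer": 1.70,          # IMF global price level, market exchange rates
    "imf_ppp": 2.02,          # IMF global price level, purchasing power parity
    "adopted_ppp": 2.0,       # rounded reflator adopted to represent the PPP concept
}


def reflate(value_1989_usd: float, reflator: float) -> float:
    """Convert a value in 1989 dollars to 2010 dollars using a price-level ratio."""
    if reflator <= 0:
        raise ValueError("reflator must be positive")
    return value_1989_usd * reflator


# Hypothetical base value: 100 units expressed in 1989 dollars.
base_value = 100.0
converted = {name: reflate(base_value, r) for name, r in REFLATORS_1989_TO_2010.items()}

# Ratio between the largest and smallest converted values, showing how much
# the choice of price concept alone can move a converted figure.
spread = max(converted.values()) / min(converted.values())

for name, value in converted.items():
    print(f"{name}: {value:.1f}")
print(f"max/min spread: {spread:.2f}")
```

Under these ratios, the choice between the US deflator and the IMF PPP concept moves a converted value by more than a quarter, which is why the choice of reflator matters for the first step of the comparison.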

We have used the label “2100” in this study. Strictly speaking, the year was 2105 in the DICE model.

The change in the utility function involved both a change in the rate of time preference and a change in the elasticity of the marginal utility of consumption. These affect the real return and the impact of changes in productivity growth on different variables.

References

Blanford GJ, Kriegler E, Tavoni M (2014) Harmonization vs. fragmentation: overview of climate policy scenarios in EMF27. Clim Chang 123(3–4):383–396

Christensen P, Gillingham K, Nordhaus W (2018) Uncertainty in forecasts of long-run productivity growth. Proceedings National Academy of Sciences

Fukač M, Pagan A (2010) Limited information estimation and evaluation of DSGE models. J Appl Econ 25(1):55–70

Gillingham K, Nordhaus WD, Anthoff D, Blanford G, Bosetti V, Christensen P, McJeon H, Reilly J, Sztorc P (2018) Modeling uncertainty in climate change: a multi-model comparison. J Assoc Environ Resour Econ (forthcoming)

Goettle RJ, Ho MS, Slesnick DT, Wilcoxen PJ, Jorgenson DW (2007) IGEM, an Inter-Temporal General Equilibrium Model of the U.S. Economy with Emphasis on Growth, Energy, and the Environment. U.S. Environmental Protection Agency

Hansen LP, Heckman JJ (1996) The empirical foundations of calibration. J Econ Perspect 10(1):87–104

IPCC (1990) In: Houghton JT, Jenkins GJ, Ephraums JJ (eds) Climate change: the IPCC Scientific Assessment. Cambridge University Press, Cambridge

IPCC (2014) Thomas Stocker, ed., Climate change 2013: the physical science basis: Working Group I contribution to the Fifth assessment report of the Intergovernmental Panel on Climate Change. Cambridge University Press, New York

Kriegler E, Riahi K, Bauer N, Schwanitz VJ, Petermann N, Bosetti V, Marcucci A, Otto S, Paroussos L, Rao S, Currás TA (2015) Making or breaking climate targets: the AMPERE study on staged accession scenarios for climate policy. Technol Forecast Soc Chang 90:24–44

National Research Council (1979) Energy in transition, 1985–2010: final report of the committee on nuclear and alternative energy systems. National Research Council, National Academy of Sciences, WH Freeman & Company

Nordhaus WD (1992a) An optimal transition path for controlling greenhouse gases. Science 258:1315–1319

Nordhaus WD (1992b) The DICE model: background and structure, Cowles Foundation Discussion Paper 1009, February, available at http://cowles.yale.edu/publications/cfdp

Nordhaus WD (1994) Managing the global commons: the economics of climate change. MIT Press, Cambridge

Nordhaus W (2008) A question of balance: weighing the options on global warming policies. Yale University Press, New Haven

Nordhaus W (2017) The social cost of carbon: updated estimates, Proceedings of the U. S. National Academy of Sciences, January 31

Nordhaus W (2018) Projections and uncertainties about climate change in an era of minimal climate policies, forthcoming, American Economic Journal: Economic Policy

Nordhaus W, Sztorc P (2013) DICE 2013R: introduction and user’s manual, October 2013, available at http://www.econ.yale.edu/~nordhaus/homepage/documents/DICE_Manual_100413r1.pdf

Nordhaus W, Yohe G (1983) Future carbon dioxide emissions from fossil fuels, in National Research Council-National Academy of Sciences, Changing Climate, Washington, D.C., National Academy Press, 1983

Funding

The research reported here was supported by the US National Science Foundation Award GEO-1240507 and the US Department of Energy Award DE-SC0005171-001.

Ethics declarations

Conflict of interest

The author declares that he has no conflicts of interest.

Cite this article

Nordhaus, W. Evolution of modeling of the economics of global warming: changes in the DICE model, 1992–2017. Climatic Change 148, 623–640 (2018). https://doi.org/10.1007/s10584-018-2218-y
