Abstract

Cardiac CT, comprising non-enhanced coronary artery calcium scoring (CACS) and coronary CT angiography (cCTA), has been proven to provide excellent evaluation of coronary artery disease (CAD). By combining anatomical and morphological assessment of CAD, it supports cardiovascular risk stratification and therapeutic decision-making, and it provides prognostic value for the occurrence of adverse cardiac outcomes. In recent years, artificial intelligence (AI), and in particular the application of machine learning (ML) algorithms, has been promoted in cardiovascular CT imaging to improve decision pathways, risk stratification, and outcome prediction in a more objective, reproducible, and rational manner. AI draws on computer science and mathematics and relies on big data, high-performance computational infrastructure, and applied algorithms. The application of ML in routine clinical practice may improve imaging workflows and promote better outcome prediction and more effective decision-making in patient management. Cardiac CT, and CACS and cCTA in particular, represents a field in which ML may be especially useful. The purpose of this review is therefore to give a short overview of the contemporary state of ML-based algorithms in cardiac CT and to provide clinicians with currently available scientific data on the clinical validation and implementation of these algorithms for the prediction of ischemia-specific CAD and cardiovascular outcome.

Introduction

Cardiac computed tomography has emerged as a cornerstone in the non-invasive assessment of coronary artery disease (CAD) and now has a class I recommendation according to the current guidelines of the European Society of Cardiology [1]. Non-enhanced coronary artery calcium scoring (CACS) and coronary CT angiography (cCTA) have been proven to provide excellent evaluation of CAD, combining anatomical and morphological assessment, such as detailed plaque quantification and characterization, for cardiovascular risk stratification and therapeutic decision-making, in addition to providing prognostic value for the occurrence of adverse cardiac outcomes [2,3,4]. Technical advances in hardware and software have added functional analysis to the previously purely anatomical assessment of CAD. Specifically, cCTA-derived fractional flow reserve (CT-FFR) and CT myocardial perfusion (CTP) have been introduced for the detection of hemodynamically significant CAD. Whereas CT-FFR is based on post-processing of the cCTA images using dedicated software, CTP requires the administration of a pharmacological stress agent and an additional acquisition protocol. Both techniques have demonstrated incremental value for the prediction of lesion-specific ischemia and cardiovascular outcome [5,6,7,8]. Furthermore, automated plaque segmentation and plaque quantification using data-analytic techniques such as radiomics have attracted interest for improving diagnostic and prognostic accuracy [9].

Artificial intelligence (AI) is a field of mathematics and computer science concerned with performing tasks that are normally linked to human intelligence (e.g., pattern recognition and perception, translation of information, and decision-making). Most AI systems used in medical imaging are based on machine learning (ML) applications [10, 11]. ML comprises computer-based algorithms that learn from large training data sets and then apply what they have learned to make predictions and decisions for a specific task on new, previously unseen data. In the digital era, in which imagers face an ever-increasing amount of imaging data, ML algorithms running on high-performance computing and storage infrastructure can handle these large data volumes and can streamline workflows and save time through decision-making learned from the input data. The main goal of ML applications is thus to assist imagers with their daily tasks by increasing efficiency, reducing errors, and achieving objectives with minimal manual input.

In this review, we outline the contemporary state of ML-based algorithms in cardiac CT, focusing on the clinical validation and implementation of these algorithms in CACS, cCTA, CT-FFR, and CTP for the prediction of ischemia-specific CAD and cardiovascular outcome.

Artificial intelligence and machine learning applications in cardiac CT

Principles and technical background

ML is an analytic method in which computer algorithms learn from datasets without these functions being explicitly programmed [12]. Because performance improves as the algorithm is exposed to more data, the process is analogous to human learning. ML creates autonomous systems that detect patterns in, and gain knowledge from, large data sets without being explicitly programmed for a specific task. Some key requirements must be met for appropriate application of ML: the data set must be detailed and relevant enough for the desired task, the applied ML algorithm must be appropriate for the complexity, amount, and type of data used, and the ML-derived results have to be validated and must demonstrate usefulness in clinical practice [12] (Table 1).

In cardiac CT, ML algorithms can be broadly categorized as supervised or unsupervised. In unsupervised learning, only the input variables are given, without a target outcome. The model learns the structure of the data to identify consistent patterns within the data space, without learning an association with a target outcome. Examples include cluster analysis and principal component analysis; K-means clustering and generative adversarial networks can also be categorized as unsupervised ML models [12]. K-nearest neighbors, by contrast, is a supervised technique, because it requires labeled training examples: it classifies each new object by comparing it with its k nearest training examples and assigning it to the most frequent class among these neighbors. The method is based on the general assumption that closer cases are more strongly related.
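The k-nearest-neighbor rule described above can be sketched in a few lines of Python. This is a minimal illustration, not code from any cited study; the two features and their values are hypothetical toy data. Note that the classifier needs labeled training examples.

```python
import math
from collections import Counter


def knn_predict(train_points, train_labels, query, k=3):
    """Assign `query` to the most frequent label among its k nearest training points."""
    # Euclidean distance from the query to every labeled training example
    distances = [
        (math.dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    ]
    # Keep the k closest examples: closer cases are assumed to be more related
    nearest = sorted(distances, key=lambda pair: pair[0])[:k]
    # Majority vote among the labels of those neighbors
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical toy data: two normalized features per patient and a known label
points = [(0.1, 0.2), (0.2, 0.1), (0.15, 0.25), (0.8, 0.9), (0.9, 0.8), (0.85, 0.95)]
labels = ["low risk"] * 3 + ["high risk"] * 3

print(knn_predict(points, labels, (0.2, 0.2)))    # low risk
print(knn_predict(points, labels, (0.75, 0.85)))  # high risk
```

In practice, features are usually standardized first so that no single feature dominates the distance, and k is tuned on held-out data.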

Supervised models can be applied to both classification and regression problems. In supervised learning algorithms, the dataset is analyzed to select individual input features that are processed and weighted to identify the combination of features that best fits the outcome variable. Some of the most common algorithms are support vector machines, decision trees, random forests, artificial neural networks, and linear regression [12, 13]. Support vector machines use a kernel function to map the data into a higher-dimensional space, in which the groups are separated by a hyperplane. The name-giving support vectors are the training points closest to this hyperplane; they define the margin, and the hyperplane is chosen so that this margin, and thus the separation between the two classes, is maximal. Decision trees are among the simplest ML models; modeled on the human decision-making process, they repeatedly analyze the data and split it into two groups. They are also commonly used in flow diagrams and risk calculation charts, and their splits are selected on the basis of the information content of the data (e.g., information gain) rather than common knowledge. Decision trees can be combined in ensemble learning to create multilayer classifiers such as random forests [14]. A random forest consists of a large number of individual decision trees, each of which outputs a class prediction; the model's prediction is the class that receives the most votes. Combining many learning models in this way improves the overall result. Artificial neural networks, in contrast, are biologically inspired computational networks created to emulate the human brain. Their main use in medical imaging is in the form of convolutional neural networks, a type of deep neural network that can consist of up to hundreds of internal layers and is currently considered state-of-the-art for outcome prediction using imaging data. An example of a deep learning neural network with multiple layers is shown in Fig. 1.
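Two ingredients of the tree-based models described above can be sketched in Python: choosing a split by information gain (entropy reduction), as a decision tree does at each node, and aggregating tree outputs by majority vote, as a random forest does. This is a simplified sketch assuming a single numeric feature and hypothetical labels; a full random forest additionally grows many deeper trees on bootstrap samples with random feature subsets [14].

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def information_gain(values, labels, threshold):
    """Entropy reduction obtained by splitting the data at value <= threshold."""
    left = [y for x, y in zip(values, labels) if x <= threshold]
    right = [y for x, y in zip(values, labels) if x > threshold]
    n = len(labels)
    children = sum(len(part) / n * entropy(part) for part in (left, right) if part)
    return entropy(labels) - children


def best_split(values, labels):
    """Return the observed value that, used as a threshold, maximizes information gain."""
    return max(sorted(set(values)), key=lambda t: information_gain(values, labels, t))


def forest_vote(tree_predictions):
    """Random-forest aggregation: the class predicted by most trees wins."""
    return Counter(tree_predictions).most_common(1)[0][0]


# Hypothetical single feature and binary outcome
feature = [1, 2, 3, 10, 11, 12]
outcome = ["no", "no", "no", "yes", "yes", "yes"]
print(best_split(feature, outcome))       # 3
print(forest_vote(["yes", "no", "yes"]))  # yes
```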

The artificial layers are connected by weighted connections, analogous to synapses. Like neurons in the visual cortex, a convolutional neural network uses local receptive fields, so that each unit analyzes a small region of the image rather than being connected to every pixel. In successive layers, the detected structures range from simple lines to more complex patterns, up to the evaluation and even classification of whole images [15]. Finally, linear regression, as part of regression analysis, is a long-established standard method for determining the strength of predictors and for trend or effect prognostication.
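The local receptive field idea can be illustrated with a single convolution step. The sketch below (plain Python, a toy 4×4 image, a hand-chosen kernel) slides a 2×2 weight kernel over the image so that each output value depends only on a small neighborhood; as in most deep learning libraries, the operation is implemented as cross-correlation. In a trained network the kernel weights are learned rather than set by hand, and many such layers are stacked.

```python
def convolve2d(image, kernel):
    """Valid-mode 2D cross-correlation (no padding, stride 1), as in a CNN layer.

    Each output value depends only on a kernel-sized patch of the input
    (its receptive field), and the same weights are shared across positions.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum over the local receptive field at position (i, j)
            total = sum(
                kernel[a][b] * image[i + a][j + b]
                for a in range(kh)
                for b in range(kw)
            )
            row.append(total)
        output.append(row)
    return output


def relu(feature_map):
    """Element-wise rectified linear activation."""
    return [[max(0, v) for v in row] for row in feature_map]


# Toy 4x4 "image" with a vertical edge between columns 1 and 2
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hand-set vertical-edge kernel (a trained CNN would learn these weights)
kernel = [
    [-1, 1],
    [-1, 1],
]
print(relu(convolve2d(image, kernel)))  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The output responds only where the edge lies, which is the simple-line level of the feature hierarchy described above.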

A main challenge of ML is fitting decision boundaries that adequately describe the actual data distribution. Underfitting, which is mainly caused by an overly simple model or incorrect assumptions about the data, leads to results that represent the data poorly. Conversely, a model that is too complex for the amount of available data, a particular risk with small sample sizes, may lead to overfitting. Here, the ML algorithm captures not only the underlying data distribution but also noise and individual data points that are not representative of it. The model is therefore accurate for the analyzed dataset but may fail on new, unseen data. To obtain an optimally fitted model, a trade-off between model complexity and data representation is inevitable [9, 16].
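The trade-off between underfitting and overfitting can be demonstrated with k-nearest-neighbor regression, in which k controls model complexity. In this sketch on hypothetical, deterministic toy data (a sine curve with alternating ±0.3 noise added to the training targets), k = 1 memorizes the training set (zero training error, but the noise is reproduced on new points), k = 20 predicts a single constant (underfitting), and an intermediate k generalizes better. The data and the chosen k values are illustrative assumptions.

```python
import math


def knn_regress(xs, ys, x_query, k):
    """Predict y at x_query as the mean target of the k nearest training points (1-D)."""
    nearest = sorted(range(len(xs)), key=lambda i: abs(xs[i] - x_query))[:k]
    return sum(ys[i] for i in nearest) / k


def mean_squared_error(xs_train, ys_train, xs_eval, ys_eval, k):
    """MSE of the k-NN model fitted on the training data, evaluated on (xs_eval, ys_eval)."""
    preds = [knn_regress(xs_train, ys_train, x, k) for x in xs_eval]
    return sum((p - y) ** 2 for p, y in zip(preds, ys_eval)) / len(ys_eval)


# Hypothetical data: a smooth signal, with alternating "noise" on the training targets
train_x = [i / 10 for i in range(20)]
train_y = [math.sin(x) + (0.3 if i % 2 else -0.3) for i, x in enumerate(train_x)]
test_x = [i / 10 + 0.05 for i in range(19)]
test_y = [math.sin(x) for x in test_x]

for k in (1, 5, 20):
    train_err = mean_squared_error(train_x, train_y, train_x, train_y, k)
    test_err = mean_squared_error(train_x, train_y, test_x, test_y, k)
    print(f"k={k:2d}  train MSE={train_err:.3f}  test MSE={test_err:.3f}")
```

The lowest training error (k = 1) does not coincide with the lowest error on unseen points, which is why model selection relies on held-out data.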

Current evidence

Machine learning and coronary artery calcium scoring

Coronary artery calcium scoring (CACS) from non-contrast-enhanced images is a well-established tool for screening and risk stratification in patients at low-to-intermediate risk of CAD and a strong predictor of cardiovascular events [17, 18]. CACS is performed by image post-processing, in which the presence and extent of coronary calcium are assessed manually and quantified with the Agatston score. Because of the increasing number of CACS scans performed, automated segmentation and quantification of calcium using ML has gained interest, and most ML approaches introduced in CACS have therefore focused on automated calcium detection and scoring [19]. In 2015, Wolterink et al. introduced an ML approach that identified and quantified calcium automatically on non-contrast-enhanced scans using intensity-based thresholds, combined with additional features such as size, shape, and location in a decision-tree algorithm. They demonstrated strong agreement (κ = 0.94) between ML-based and manual calcium risk categorization, with a good sensitivity of 87% [20]. Subsequently, the same group investigated ML for CACS in 250 datasets using convolutional neural networks and reported a sensitivity of 0.71 and an agreement of 83% in risk classification compared with manual assessment by an expert reader [21]. In a recent study, Martin et al. evaluated a novel deep learning-based research software (Automated CaScoring, Siemens Healthineers) for CACS on non-contrast CT images [22] (Fig. 2). This approach is based on a convolutional neural network and was trained on 2000 annotated datasets. The ML software classified 93.2% of patients (476/511) into the same risk category as the human observers, and the authors reported a high Dice similarity coefficient of 0.95 for a CACS > 0. Likewise, van Velzen et al. [23] investigated the performance of a deep learning convolutional neural network for automatic CACS across a wide range of CT examination types. The algorithm yielded intraclass correlation coefficients of 0.79 to 0.97 for CACS in a large and diverse set of CT examinations. More importantly, CT protocol-specific training of the baseline algorithm improved risk category assessment. Yang et al. [24] and Shahzad et al. [25] assessed fully automatic calcium scoring methods on contrast- and non-contrast-enhanced CT images from different CT systems using a support vector machine classifier and reported sensitivities and specificities of 0.94 and 0.86, respectively. Moreover, recent studies have demonstrated the feasibility of CACS derived from non-contrast-enhanced low-dose chest CT [26]. Consequently, ML methods have been applied to imaging studies routinely used for lung cancer screening. In a dataset of 5973 non-contrast, non-ECG-gated chest CT scans, Cano-Espinosa et al. [27] used a deep convolutional neural network to extract Agatston scores directly from the images. The algorithm yielded a Pearson correlation coefficient of 0.93 and correctly stratified 73% of cases into the corresponding risk category. In summary, ML algorithms for CACS have demonstrated their feasibility, with overall good diagnostic accuracy and subsequent risk categorization. However, further efforts are warranted to improve the diagnostic performance of these fully automated applications, given that CACS is used as a screening test.
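For orientation, the Agatston calculation that these ML tools automate can be sketched as follows, together with risk categorization and Cohen's kappa for agreement between two readers (e.g., ML vs. manual). The sketch assumes that calcified lesions have already been detected (the step that ML automates) and are given as hypothetical (area, peak attenuation) pairs per slice. It uses the conventional 130 HU threshold with density weights 1 to 4, a minimum lesion area of 1 mm² (thresholds vary between implementations), and one common set of risk categories (0, 1 to 99, 100 to 399, ≥ 400). It computes unweighted kappa; the cited studies may have used other agreement statistics.

```python
from collections import Counter


def density_weight(peak_hu):
    """Agatston density factor from a lesion's peak attenuation in HU."""
    if peak_hu < 130:
        return 0  # below the calcium threshold: not counted
    if peak_hu < 200:
        return 1
    if peak_hu < 300:
        return 2
    if peak_hu < 400:
        return 3
    return 4


def agatston_score(lesions, min_area_mm2=1.0):
    """Sum of area (mm^2) x density factor over all lesions on all slices.

    `lesions` holds one (area_mm2, peak_hu) pair per calcified region per slice;
    detecting these regions is the step that ML software automates.
    """
    return sum(
        area * density_weight(peak)
        for area, peak in lesions
        if area >= min_area_mm2  # assumed minimum lesion area
    )


def risk_category(score):
    """One commonly used categorization (assumption; schemes differ)."""
    if score == 0:
        return "0"
    if score < 100:
        return "1-99"
    if score < 400:
        return "100-399"
    return ">=400"


def cohens_kappa(rater_a, rater_b):
    """Unweighted Cohen's kappa: observed agreement corrected for chance agreement."""
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    categories = set(rater_a) | set(rater_b)
    expected = sum(freq_a[c] * freq_b[c] for c in categories) / n**2
    return (observed - expected) / (1 - expected)


# Hypothetical lesions: (area in mm^2, peak HU)
lesions = [(4.0, 250), (2.5, 420), (0.5, 500), (3.0, 120)]
score = agatston_score(lesions)
print(score, risk_category(score))  # 18.0 1-99

# Hypothetical risk categories assigned by ML software and by a human reader
ml = ["0", "1-99", "100-399", ">=400", "0", "1-99"]
manual = ["0", "1-99", "100-399", ">=400", "0", "100-399"]
print(round(cohens_kappa(ml, manual), 3))  # 0.778
```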

Machine learning and coronary CT angiography

cCTA has emerged as a cornerstone in the non-invasive evaluation of patients with suspected CAD, ruling out the presence of atherosclerotic lesions with high diagnostic accuracy (Fig. 3). cCTA therefore serves as a reliable gatekeeper for invasive coronary angiography (ICA) [28, 29]. Moreover, cCTA has demonstrated prognostic value for the prediction of major adverse cardiac events (MACE) beyond traditional cardiovascular risk factors [3]. Recent technical advances in image post-processing and software have added detailed plaque quantification and, more importantly, functional evaluation of coronary artery flow and the resulting blood supply to cCTA. Automated plaque detection and quantification, ranging from the extent of plaque burden to a more detailed characterization of plaques (i.e., non-calcified, calcified, and mixed plaques) including the identification of so-called “high-risk” plaque features, is a coveted target for ML, as current measurements require time-consuming manual input and show wide intra- and interobserver variability [30, 31]. Technologies such as radiomics and texture analysis, which extract numerous quantitative parameters from CT images to describe the texture and spatial complexity of plaques, have shown promising results [32, 33].
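Texture analysis often starts from a gray-level co-occurrence matrix (GLCM), which counts how often pairs of gray levels occur next to each other. The minimal sketch below computes a symmetric, normalized GLCM for one pixel offset and two classic texture features (contrast and homogeneity). It assumes that the plaque has already been segmented and its attenuation values binned into a few integer gray levels; the toy patches are hypothetical, and the features used in the cited radiomics studies may differ.

```python
from collections import Counter


def glcm(image, dx=1, dy=0):
    """Symmetric, normalized gray-level co-occurrence matrix for one pixel offset.

    `image` must contain small non-negative integer gray levels (already binned).
    """
    levels = max(max(row) for row in image) + 1
    rows, cols = len(image), len(image[0])
    counts = Counter()
    for y in range(rows):
        for x in range(cols):
            ny, nx = y + dy, x + dx
            if 0 <= ny < rows and 0 <= nx < cols:
                i, j = image[y][x], image[ny][nx]
                counts[(i, j)] += 1
                counts[(j, i)] += 1  # count each pair in both directions
    total = sum(counts.values())
    return [[counts[(i, j)] / total for j in range(levels)] for i in range(levels)]


def contrast(p):
    """Large when neighboring gray levels differ strongly (heterogeneous texture)."""
    n = len(p)
    return sum(p[i][j] * (i - j) ** 2 for i in range(n) for j in range(n))


def homogeneity(p):
    """Large when neighboring gray levels are similar (smooth texture)."""
    n = len(p)
    return sum(p[i][j] / (1 + abs(i - j)) for i in range(n) for j in range(n))


# Hypothetical binned plaque patches
uniform = [[1, 1, 1], [1, 1, 1]]
checkerboard = [[0, 1, 0], [1, 0, 1]]
for name, patch in (("uniform", uniform), ("checkerboard", checkerboard)):
    p = glcm(patch)
    print(name, contrast(p), homogeneity(p))
```

Radiomics pipelines compute many such features over several offsets and gray-level discretizations, which is how parameter counts in the thousands arise.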

A major topic that has been extensively studied is the identification of hemodynamically significant CAD by cCTA, and CT-FFR and CTP have been of major interest for ML solutions. Whereas promising data are available for ML approaches using CT-FFR and plaque characteristics, only a few studies have investigated ML applications in CTP. In general, most studies using ML in cCTA have focused on improving automated segmentation, image pre- and post-processing, computer-aided diagnosis, and outcome prediction of CAD.

Machine learning and plaque quantification

ML algorithms that extract data from cCTA through automated plaque analysis have recently been investigated. Kang et al. [34] used a support vector machine for the automated detection of obstructive and non-obstructive CAD on cCTA, reporting a diagnostic accuracy of 94%. Using an automated algorithm (AUTOPLAQ) based on automated coronary segmentation and classification for volumetric plaque quantification, Dey et al. [35] added image features to an ML approach with automated feature selection and information gain ranking for the prediction of lesion-specific ischemia. The ML score yielded an AUC of 0.84, which was significantly higher than that of all individual CT measures (AUCs 0.63-0.76, p < 0.05). Zreik et al. [36] used a multi-task recurrent convolutional neural network for the automated cCTA-based detection and classification of coronary plaques and stenosis severity, with visual assessment as the reference. Their approach achieved accuracies of 0.77 for the detection and quantification of coronary plaques and 0.80 for the determination of their anatomical significance. Denzinger et al. [37] applied three different ML approaches (a convolutional neural network, a 2D multi-view ensemble approach for texture analysis, and a newly proposed 2.5D approach) for plaque analysis to identify hemodynamically significant CAD. All three methods performed well for the detection of lesion-specific ischemia (AUC 0.90 for all three). Studies by Jawaid et al. [38] and Wei et al. [39] used ML for automated centerline validation and extraction of vessel wall and plaque features, using a support vector machine and a linear classifier, respectively. They reported accuracies of 88% and 90% for automated detection compared with an expert reader. More recently, Kolossvary et al. assessed the potential of radiomics for identifying the napkin-ring sign, a so-called “high-risk” plaque feature. A total of 4440 radiomics parameters were calculated, and the best radiomics parameter performed significantly better than plaque attenuation in detecting the napkin-ring sign (AUC 0.89 vs. 0.75) [40].
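The AUC values quoted throughout this section can be read as the probability that a randomly chosen positive case (e.g., a vessel with lesion-specific ischemia) receives a higher score than a randomly chosen negative one. The sketch below computes the AUC in exactly this way (the normalized Mann-Whitney U statistic, with ties counted as one half) for two hypothetical scores; it does not implement the significance tests used to compare AUCs in the cited studies.

```python
def roc_auc(scores, labels):
    """AUC = P(score of a random positive > score of a random negative).

    This is the Mann-Whitney U statistic divided by (positives x negatives);
    ties count as one half. `labels` are 1 (e.g. ischemia) or 0 (no ischemia).
    """
    positives = [s for s, y in zip(scores, labels) if y == 1]
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    if not positives or not negatives:
        raise ValueError("AUC needs at least one positive and one negative case")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


# Hypothetical vessels: reference ischemia labels and two candidate scores
ischemia = [1, 1, 0, 1, 0, 0]
ml_score = [0.9, 0.8, 0.7, 0.4, 0.3, 0.2]
single_ct_measure = [0.6, 0.5, 0.6, 0.4, 0.5, 0.3]
print(round(roc_auc(ml_score, ischemia), 3))           # 0.889
print(round(roc_auc(single_ct_measure, ischemia), 3))  # 0.556
```

An AUC of 0.5 corresponds to chance-level discrimination and 1.0 to perfect separation.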

Machine learning and CT-derived fractional flow reserve

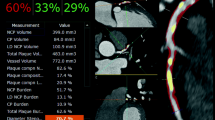

The application of ML to CT-FFR represents the most developed field in cardiac CT, as ML has proven to be a reasonable successor to prior computational fluid dynamics (CFD) algorithms [41, 42]. Until recently, ML-based CT-FFR was provided by only one vendor (Siemens Healthineers, Forchheim, Germany), whose application is intended for research purposes only. Meanwhile, other vendors offer similar, commercially available algorithms, such as the DeepVessel FFR application by Keya Medical (Beijing, China) [43]. ML-based CT-FFR employs a multi-layer neural network framework that is trained and validated offline against the former CFD approach to learn the complex relationship between the anatomy of the coronary tree and its corresponding hemodynamic parameters. In the case of the Siemens algorithm, training used a virtual dataset of 12,000 synthetic 3D coronary models [44]. The technical background of ML-based CT-FFR has been described in detail recently [45]. A case example of ML-based CT-FFR is shown in Fig. 4.

Fig. 4 Coronary CT angiography in a 56-year-old man. a Automatically generated curved multiplanar reformations demonstrate > 70% stenosis of the proximal LAD (arrow). b Three-dimensional color-coded mesh shows a CT-FFR value of 0.57, indicating lesion-specific ischemia (arrow). c Invasive coronary angiography confirms obstructive stenosis of the LAD (arrow) with an FFR of 0.56. d, e Color-coded automated plaque evaluation of the causative lesion by the analysis software quantifies the predominantly non-calcified composition of the underlying atheroma
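The offline-training idea behind ML-based CT-FFR (learning from many simulated geometries, then predicting quickly for a new patient) can be illustrated with a surrogate model. In this sketch, `toy_simulator` is a hypothetical, non-physiological formula standing in for an expensive CFD run, the two geometric features (stenosis fraction and lesion length) are assumptions, and k-nearest-neighbor regression is swapped in for the multi-layer neural network used by the actual products.

```python
import itertools
import math


def toy_simulator(stenosis, length_mm):
    """HYPOTHETICAL stand-in for an expensive CFD run; not a physiological model.

    Returns a pseudo-FFR that falls with stenosis fraction (0 to 1) and lesion length.
    """
    pressure_drop = 0.6 * stenosis**2 * (1 + length_mm / 40)
    return max(0.0, 1.0 - pressure_drop)


# Offline phase: "simulate" many synthetic geometries once
TRAINING_SET = [
    ((s / 20, float(length)), toy_simulator(s / 20, length))
    for s, length in itertools.product(range(19), range(5, 41, 5))
]


def surrogate_ffr(stenosis, length_mm, k=4):
    """Online phase: fast k-NN regression surrogate (stand-in for a neural network)."""

    def distance(features):
        s, length = features
        # Rescale length so that both features contribute on a similar scale
        return math.hypot(s - stenosis, (length - length_mm) / 40)

    nearest = sorted(TRAINING_SET, key=lambda item: distance(item[0]))[:k]
    return sum(value for _, value in nearest) / k


# A new, hypothetical lesion: 72% stenosis, 18 mm long
estimate = surrogate_ffr(0.72, 18)
print(round(estimate, 3), round(toy_simulator(0.72, 18), 3))
print("ischemic" if estimate <= 0.80 else "not ischemic")  # 0.80 cut-off assumed
```

The expensive step (simulation) happens once, offline; at prediction time only the fast learned mapping is evaluated, which is the property that makes ML-based CT-FFR practical on site.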

The diagnostic accuracy of ML-based CT-FFR for ischemia prediction, compared with cCTA and ICA, has been validated in one multicenter trial and several single-center studies. The MACHINE registry (Diagnostic Accuracy of a Machine-Learning Approach to Coronary Computed Tomographic Angiography-Based Fractional Flow Reserve: Result from the MACHINE Consortium) was the first investigation of ML-based CT-FFR, including 351 patients with 525 vessels from 5 sites in Europe, Asia, and the United States [46]. ML-based CT-FFR showed significantly higher diagnostic accuracy (78% vs. 58% for cCTA) and specificity (76% vs. 38%), while sensitivity did not change significantly (81% vs. 88%). ML-based CT-FFR also yielded a significantly higher AUC than cCTA alone for the detection of lesion-specific ischemia (0.84 vs. 0.69, p < 0.05). In line with the multicenter MACHINE registry, several single-center studies have confirmed the performance of ML-based CT-FFR. A recent investigation of 85 patients with 104 vessels demonstrated a per-lesion sensitivity and specificity of 79% and 94%, respectively. ML-based CT-FFR showed significantly higher diagnostic performance than cCTA alone on both a per-lesion and a per-patient level, with AUCs of 0.89 vs. 0.61 (p < 0.001) and 0.91 vs. 0.65 (p < 0.001), respectively [47]. Similar results were reported by von Knebel Doeberitz et al. [48] and Tang et al. [49], with sensitivities and specificities of 82% and 94%, and 85% and 94%, respectively. The impact of coronary calcification and gender on the diagnostic performance of ML-based CT-FFR has also been investigated in two sub-studies of the MACHINE registry. Tesche et al. [50] assessed the influence of calcification on performance characteristics in patients with a wide range of Agatston scores (0 to 3920). They reported high diagnostic performance both in vessels with high Agatston scores (CAC ≥ 400) and in vessels with low-to-intermediate scores (CAC > 0 to < 400), although the corresponding AUCs differed significantly (0.71 vs. 0.85, p = 0.04). Baumann et al. [51] evaluated the accuracy of ML-based CT-FFR in 398 vessels in men and 127 vessels in women. The authors found no significant difference in AUC between men and women (0.83 vs. 0.83, p = 0.89). However, ML-based CT-FFR was not superior to cCTA alone in women (AUC 0.83 vs. 0.74, p = 0.12), whereas in men it performed significantly better than cCTA alone (0.83 vs. 0.76, p = 0.007).
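The per-vessel metrics reported above follow from a 2×2 table of CT-FFR against the invasive reference. This sketch assumes a cut-off of 0.80 for both CT-FFR and invasive FFR and uses hypothetical paired values; it raises a ZeroDivisionError if the reference contains no positive or no negative vessels.

```python
def diagnostic_performance(ct_ffr, invasive_ffr, cutoff=0.80):
    """Per-vessel sensitivity, specificity and accuracy of CT-FFR vs invasive FFR.

    A vessel counts as ischemic when its value is <= cutoff (assumed 0.80 for both).
    """
    tp = fp = tn = fn = 0
    for predicted, reference in zip(ct_ffr, invasive_ffr):
        test_positive = predicted <= cutoff
        reference_positive = reference <= cutoff
        if test_positive and reference_positive:
            tp += 1
        elif test_positive:
            fp += 1  # CT-FFR flags ischemia that invasive FFR does not confirm
        elif reference_positive:
            fn += 1  # ischemia missed by CT-FFR
        else:
            tn += 1
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
    }


# Hypothetical paired per-vessel measurements
ct_values = [0.57, 0.75, 0.85, 0.90, 0.78, 0.82]
invasive_values = [0.56, 0.70, 0.83, 0.92, 0.85, 0.76]
print(diagnostic_performance(ct_values, invasive_values))
```

Because specificity depends only on the reference-negative vessels, a test can gain specificity (as ML-based CT-FFR did over cCTA in MACHINE) without a corresponding change in sensitivity.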

The impact of ML-based CT-FFR on therapeutic decision-making and adverse cardiac outcome was assessed in two small retrospective single-center studies [52, 53]. The choice of therapeutic strategy (optimal medical therapy alone vs. revascularization) was investigated in 74 patients with 220 vessels. ML-based CT-FFR correctly identified 35 of 36 patients (97%) with hemodynamically significant CAD on invasive assessment and all 38 patients with functionally non-significant CAD. Overall, the appropriate treatment decision was chosen in 73 of 74 patients (99%) with ML-based CT-FFR, corresponding to an accuracy, sensitivity, and specificity of 0.99, 0.97, and 1.0, respectively. Prediction of MACE by ML-based CT-FFR was assessed by von Knebel Doeberitz et al. [53] in 82 patients with a median follow-up of 18.5 months. In multivariable regression analysis, significant CAD defined by ML-based CT-FFR ≤ 0.80 was the strongest predictor of adverse cardiac outcome (odds ratio 7.78, p = 0.001).
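As a worked check, the reported figures follow from the patient counts given above, assuming that patients with hemodynamically significant CAD on invasive assessment are counted as positives (TP = 35, FN = 1, TN = 38, FP = 0):

$$
\begin{aligned}
\text{Sensitivity} &= \frac{TP}{TP + FN} = \frac{35}{35 + 1} \approx 0.97\\
\text{Specificity} &= \frac{TN}{TN + FP} = \frac{38}{38 + 0} = 1.0\\
\text{Accuracy} &= \frac{TP + TN}{TP + TN + FP + FN} = \frac{35 + 38}{74} = \frac{73}{74} \approx 0.99
\end{aligned}
$$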

These promising results support the use of ML in CT-FFR assessment. However, larger studies on the clinical applicability of ML-based CT-FFR for outcome prediction and diagnostic decision-making, and on its impact on healthcare economics, are warranted before ML-based CT-FFR can be integrated into routine clinical workflows.

Machine learning and CT perfusion

The impact of ML in CTP has been investigated in only a small number of studies. In general, the use of CTP in addition to cCTA is still limited by its novelty, and it has been assessed in only a few cardiovascular imaging centers. In a recent study, Xiong et al. [54] applied three different ML approaches (random forest, AdaBoost, and naive Bayes) for the automated segmentation and delineation of the left ventricle, using three myocardial features obtained from resting CTP images: normalized perfusion intensity, transmural perfusion ratio, and myocardial wall thickness. Compared with manual segmentation by an expert reader, the AdaBoost algorithm performed best, with an AUC of 0.73. In line with this investigation, Han et al. [55] used a supervised gradient boosting classifier to predict physiologically significant CAD on resting myocardial CTP in a dataset of 252 patients. For resting CTP combined with cCTA stenosis > 70%, the authors reported a diagnostic accuracy, sensitivity, and specificity of 68%, 53%, and 85%, respectively, for predicting ischemia. Adding resting CTP to cCTA increased the AUC for hemodynamically significant CAD from 0.68 to 0.75. A case example of CTP post-processing image analysis is shown in Fig. 5.
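As an illustration of boosting, the family to which AdaBoost and gradient boosting belong, the sketch below implements discrete AdaBoost with one-feature decision stumps. It is a generic textbook version, not the pipeline of Xiong et al. or Han et al.; the per-segment features (transmural perfusion ratio, wall thickness in mm) and labels (+1 perfusion defect, -1 normal) are hypothetical toy data. Gradient boosting, as used by Han et al., instead fits each new tree to the gradient of a loss function rather than reweighting samples.

```python
import math


def stump_predict(row, feature, threshold, polarity):
    """One-split decision stump returning +1 or -1."""
    return polarity if row[feature] > threshold else -polarity


def train_stump(X, y, weights):
    """Find the (feature, threshold, polarity) with the lowest weighted error."""
    best = None
    for feature in range(len(X[0])):
        for threshold in sorted({row[feature] for row in X}):
            for polarity in (1, -1):
                error = sum(
                    w for w, row, label in zip(weights, X, y)
                    if stump_predict(row, feature, threshold, polarity) != label
                )
                if best is None or error < best[0]:
                    best = (error, feature, threshold, polarity)
    return best


def adaboost(X, y, rounds=10):
    """Discrete AdaBoost with labels +1/-1; returns a list of (alpha, stump)."""
    n = len(X)
    weights = [1.0 / n] * n
    ensemble = []
    for _ in range(rounds):
        error, feature, threshold, polarity = train_stump(X, y, weights)
        error = min(max(error, 1e-10), 1 - 1e-10)  # avoid log(0) for a perfect stump
        alpha = 0.5 * math.log((1 - error) / error)
        stump = (feature, threshold, polarity)
        ensemble.append((alpha, stump))
        # Increase weights of misclassified samples, decrease the others, renormalize
        weights = [
            w * math.exp(-alpha * label * stump_predict(row, *stump))
            for w, row, label in zip(weights, X, y)
        ]
        total = sum(weights)
        weights = [w / total for w in weights]
    return ensemble


def adaboost_predict(ensemble, row):
    """Sign of the alpha-weighted vote of all stumps."""
    score = sum(alpha * stump_predict(row, *stump) for alpha, stump in ensemble)
    return 1 if score >= 0 else -1


# Hypothetical myocardial segments: (transmural perfusion ratio, wall thickness mm)
X = [(0.75, 7.0), (0.80, 9.0), (0.85, 6.0), (1.05, 8.0), (1.10, 6.5), (1.00, 9.5)]
y = [1, 1, 1, -1, -1, -1]  # +1 = perfusion defect, -1 = normal (toy labels)
model = adaboost(X, y, rounds=5)
print([adaboost_predict(model, row) for row in X])  # [1, 1, 1, -1, -1, -1]
```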

Overall, ML holds promise in CTP, especially for automated segmentation of the myocardium and automated detection of perfusion defects in both static and dynamic CTP, which would further reduce the human input required for image post-processing. Additionally, incorporating CTP into ML-based risk models for the prediction of ischemia will most likely improve diagnostic accuracy, in line with CT-FFR.

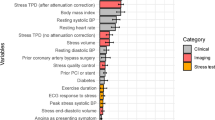

Machine learning and cardiovascular outcome

One of the first major studies applying ML to cCTA for outcome prediction was conducted by Motwani et al. [56] using a large dataset of 10,030 patients from the CONFIRM registry (Coronary CT Angiography Evaluation for Clinical Outcomes: An International Multicenter Registry). A total of 44 cCTA-derived parameters and 25 clinical parameters were entered into an ML algorithm for outcome prediction at 5-year follow-up. The ML approach involved automated feature selection by information gain ranking, followed by model building with LogitBoost and tenfold cross-validation. The ML score combining clinical and CT parameters exhibited a significantly higher AUC of 0.79 for the prediction of death than traditional risk factors or CT measures. Likewise, van Rosendael et al. [57] assessed the value of a gradient-boosting tree ensemble ML method using only imaging markers derived from cCTA for outcome prediction in 8844 patients. They demonstrated that a risk score created by an ML algorithm using standard 16-segment coronary analysis and plaque composition performed significantly better in the prediction of adverse events (AUC 0.77) than all other coronary CT angiography-derived scores (AUCs 0.69 to 0.70, p < 0.001). In line with these investigations, van Assen et al. [58] used commercially available software (vascuCAP, Elucid Bioimaging) for the automated model-based extraction of quantitative plaque features and assessed their prognostic value for MACE. Adding morphological plaque features to their prognostic model resulted in an accuracy of 77% and an AUC of 0.94 for MACE prediction. Johnson et al. [59] investigated the performance of four different ML models (logistic regression, K-nearest neighbors, bagged trees, and a classification neural network) using 64 CT-derived vessel features to discriminate between patients with and without subsequent death or cardiovascular events in a study cohort of 6892 patients.
The results demonstrated that the discriminatory power of the ML models in the identification of patients with MACE were superior to that of all evaluated traditional coronary CT angiography-derived scores (ML models AUC 0.77, CAD-RADS AUC 0.72, CT-Leaman-score AUC 0.74, segment plaque burden score AUC 0.76, all p < 0.001).
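The tenfold cross-validation used by Motwani et al. can be sketched generically: the patients are split into ten folds, and each fold is held out once for evaluation while the model is trained on the remaining nine. The `fit` and `evaluate` callables below are hypothetical placeholders (here a constant event-rate baseline scored with the Brier score), not the LogitBoost model of the study, and the folds are not stratified by outcome.

```python
import random


def k_fold_indices(n_samples, k=10, seed=0):
    """Shuffle sample indices and deal them into k disjoint folds."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    # Round-robin assignment keeps fold sizes within one sample of each other
    return [indices[i::k] for i in range(k)]


def cross_validate(n_samples, fit, evaluate, k=10, seed=0):
    """Train on k-1 folds, score on the held-out fold; return the k scores."""
    scores = []
    for held_out in k_fold_indices(n_samples, k, seed):
        held_out_set = set(held_out)
        train_idx = [i for i in range(n_samples) if i not in held_out_set]
        model = fit(train_idx)
        scores.append(evaluate(model, held_out))
    return scores


# Hypothetical binary outcomes (1 = event during follow-up)
outcomes = [1 if i % 4 == 0 else 0 for i in range(40)]


def fit(train_idx):
    """Placeholder model: the event rate observed in the training folds."""
    return sum(outcomes[i] for i in train_idx) / len(train_idx)


def evaluate(event_rate, test_idx):
    """Brier score of the constant predicted probability on the held-out fold."""
    return sum((event_rate - outcomes[i]) ** 2 for i in test_idx) / len(test_idx)


fold_scores = cross_validate(len(outcomes), fit, evaluate, k=10)
print(len(fold_scores), round(sum(fold_scores) / len(fold_scores), 3))
```

Averaging the ten held-out scores gives an estimate of performance on unseen patients, which guards against the overfitting discussed earlier; any feature selection should be repeated inside each training split to avoid leaking information from the held-out fold.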

Conclusion and future outlook

Cardiac CT has undergone remarkable advancements in the last few decades, both in CT systems and hardware and in software applications. Consequently, cardiac CT has emerged as a cornerstone in the non-invasive assessment of CAD with high diagnostic accuracy. The rapidly growing amount of imaging data has triggered interest in automated diagnostic tools. ML might be the most suitable solution yet to handle these increased data volumes, streamlining imaging workflows by providing pre-reading, quantification, and extraction of the necessary data from CT images to improve diagnostic accuracy and, ultimately, allowing outcome prediction from various input variables. Before ML applications can be integrated into clinical workflows, several fundamental questions need to be addressed. First, the safety and protection of patient data in computational storage and compliance with regulations must be guaranteed. Furthermore, access of different healthcare providers to these data has to be governed. So far, no study has shown that the integration of ML leads to better quality of care, improved patient outcome, or lower healthcare costs. If these challenges can be addressed and these obstacles overcome, ML offers a powerful platform to integrate clinical and imaging data for improved patient care.

Abbreviations

- AI: Artificial intelligence
- AUC: Area under the curve
- CACS: Coronary artery calcium scoring
- CAD: Coronary artery disease
- cCTA: Coronary CT angiography
- CFD: Computational fluid dynamics
- CT-FFR: CT-derived fractional flow reserve
- CTP: CT myocardial perfusion
- ICA: Invasive coronary angiography
- MACE: Major adverse cardiac events
- ML: Machine learning

References

Knuuti J, Wijns W, Saraste A, Capodanno D, Barbato E, Funck-Brentano C et al (2020) 2019 ESC Guidelines for the diagnosis and management of chronic coronary syndromes. Eur Heart J 41:407–477

Chow BJ, Small G, Yam Y, Chen L, Achenbach S, Al-Mallah M et al (2011) Incremental prognostic value of cardiac computed tomography in coronary artery disease using CONFIRM: COroNary computed tomography angiography evaluation for clinical outcomes: an InteRnational Multicenter registry. Circ Cardiovasc Imaging 4:463–472

Cho I, Al’Aref SJ, Berger A, Hartaigh OB, Gransar H, Valenti V et al (2018) Prognostic value of coronary computed tomographic angiography findings in asymptomatic individuals: a 6-year follow-up from the prospective multicentre international CONFIRM study. Eur Heart J 39:934–941

SCOT-HEART Investigators, Newby DE, Adamson PD, Berry C, Boon NA, Dweck MR et al (2018) Coronary CT angiography and 5-year risk of myocardial infarction. N Engl J Med 379:924–933

Patel MR, Norgaard BL, Fairbairn TA, Nieman K, Akasaka T, Berman DS et al (2019) 1-Year impact on medical practice and clinical outcomes of FFRCT: the ADVANCE Registry. JACC Cardiovasc Imaging 13:97–105

Tesche C, De Cecco CN, Albrecht MH, Duguay TM, Bayer RR 2nd, Litwin SE et al (2017) Coronary CT angiography-derived fractional flow reserve. Radiology 285:17–33

Pontone G, Baggiano A, Andreini D, Guaricci AI, Guglielmo M, Muscogiuri G et al (2019) Dynamic stress computed tomography perfusion with a whole-heart coverage scanner in addition to coronary computed tomography angiography and fractional flow reserve computed tomography derived. JACC Cardiovasc Imaging 12:2460–2471

van Assen M, De Cecco CN, Eid M, von Knebel Doeberitz P, Scarabello M, Lavra F et al (2019) Prognostic value of CT myocardial perfusion imaging and CT-derived fractional flow reserve for major adverse cardiac events in patients with coronary artery disease. J Cardiovasc Comput Tomogr 13:26–33

Kolossvary M, De Cecco CN, Feuchtner G, Maurovich-Horvat P (2019) Advanced atherosclerosis imaging by CT: radiomics, machine learning and deep learning. J Cardiovasc Comput Tomogr 13:274–280

van Assen M, Banerjee I, De Cecco CN (2020) Beyond the artificial intelligence hype: what lies behind the algorithms and what we can achieve. J Thorac Imaging 35:S3–S10

Monti CB, Codari M, van Assen M, De Cecco CN, Vliegenthart R (2020) Machine learning and deep neural networks applications in computed tomography for coronary artery disease and myocardial perfusion. J Thorac Imaging 35:S58–S65

Dey D, Slomka PJ, Leeson P, Comaniciu D, Shrestha S, Sengupta PP et al (2019) Artificial intelligence in cardiovascular imaging: JACC state-of-the-art review. J Am Coll Cardiol 73:1317–1335

Al’Aref SJ, Anchouche K, Singh G, Slomka PJ, Kolli KK, Kumar A et al (2019) Clinical applications of machine learning in cardiovascular disease and its relevance to cardiac imaging. Eur Heart J 40:1975–1986

Breiman L (2001) Random forests. Mach Learn 45:5–32

Hubel DH (1959) Single unit activity in striate cortex of unrestrained cats. J Physiol 147:226–238

Singh G, Al’Aref SJ, Van Assen M, Kim TS, van Rosendael A, Kolli KK et al (2018) Machine learning in cardiac CT: basic concepts and contemporary data. J Cardiovasc Comput Tomogr 12:192–201

McClelland RL, Chung H, Detrano R, Post W, Kronmal RA (2006) Distribution of coronary artery calcium by race, gender, and age: results from the Multi-Ethnic Study of Atherosclerosis (MESA). Circulation 113:30–37

Rozanski A, Gransar H, Shaw LJ, Kim J, Miranda-Peats L, Wong ND et al (2011) Impact of coronary artery calcium scanning on coronary risk factors and downstream testing: the EISNER (Early Identification of Subclinical Atherosclerosis by Noninvasive Imaging Research) prospective randomized trial. J Am Coll Cardiol 57:1622–1632

Fischer AM, Eid M, De Cecco CN, Gulsun MA, van Assen M, Nance JW et al (2020) Accuracy of an artificial intelligence deep learning algorithm implementing a recurrent neural network with long short-term memory for the automated detection of calcified plaques from coronary computed tomography angiography. J Thorac Imaging 35:S49–S57

Wolterink JM, Leiner T, Takx RAP, Viergever MA, Išgum I (2015) Automatic coronary calcium scoring in non-contrast-enhanced ECG-triggered cardiac CT with ambiguity detection. IEEE Trans Med Imaging 34:1867–1878

Wolterink JM, Leiner T, de Vos BD, van Hamersvelt RW, Viergever MA, Isgum I (2016) Automatic coronary artery calcium scoring in cardiac CT angiography using paired convolutional neural networks. Med Image Anal 34:123–136

Martin SS, van Assen M, Rapaka S, Hudson HT Jr, Fischer AM, Varga-Szemes A et al (2020) Evaluation of a deep learning-based automated CT coronary artery calcium scoring algorithm. JACC Cardiovasc Imaging 13:524–526

van Velzen SGM, Lessmann N, Velthuis BK, Bank IEM, van den Bongard D, Leiner T et al (2020) Deep learning for automatic calcium scoring in CT: validation using multiple cardiac CT and chest CT protocols. Radiology 295:66–79

Yang G, Chen Y, Ning X, Sun Q, Shu H, Coatrieux JL (2016) Automatic coronary calcium scoring using noncontrast and contrast CT images. Med Phys 43:2174

Shahzad R, van Walsum T, Schaap M, Rossi A, Klein S, Weustink AC et al (2013) Vessel specific coronary artery calcium scoring: an automatic system. Acad Radiol 20:1–9

Hecht HS, Cronin P, Blaha MJ, Budoff MJ, Kazerooni EA, Narula J et al (2017) 2016 SCCT/STR guidelines for coronary artery calcium scoring of noncontrast noncardiac chest CT scans: a report of the Society of Cardiovascular Computed Tomography and Society of Thoracic Radiology. J Thorac Imaging 32:W54–W66

Cano-Espinosa C, Gonzalez G, Washko GR, Cazorla M, Estepar RSJ (2018) Automated Agatston score computation in non-ECG gated CT scans using deep learning. Proc SPIE Int Soc Opt Eng 10574:105742K

Chang HJ, Lin FY, Gebow D, An HY, Andreini D, Bathina R et al (2019) Selective referral using CCTA versus direct referral for individuals referred to invasive coronary angiography for suspected CAD: a randomized, controlled, open-label trial. JACC Cardiovasc Imaging 12:1303–1312

Maroules CD, Rajiah P, Bhasin M, Abbara S (2019) Current evidence in cardiothoracic imaging: growing evidence for coronary computed tomography angiography as a first-line test in stable chest pain. J Thorac Imaging 34:4–11

Hoffmann H, Frieler K, Hamm B, Dewey M (2008) Intra- and interobserver variability in detection and assessment of calcified and noncalcified coronary artery plaques using 64-slice computed tomography: variability in coronary plaque measurement using MSCT. Int J Cardiovasc Imaging 24:735–742

Hell MM, Achenbach S, Shah PK, Berman DS, Dey D (2015) Noncalcified plaque in cardiac CT: quantification and clinical implications. Curr Cardiovasc Imaging Rep 8:27

Kolossvary M, Kellermayer M, Merkely B, Maurovich-Horvat P (2018) Cardiac computed tomography radiomics: a comprehensive review on radiomic techniques. J Thorac Imaging 33:26–34

Tejero-de-Pablos A, Huang K, Yamane H, Kurose Y, Mukuta Y, Iho J et al (2019) Texture-based classification of significant stenosis in CCTA multi-view images of coronary arteries. In: Shen D, Liu T, Peters TM, Staib LH, Essert C, Zhou S et al (eds) Medical image computing and computer assisted intervention—MICCAI 2019. Springer, Cham, pp 732–740

Kang D, Dey D, Slomka PJ, Arsanjani R, Nakazato R, Ko H et al (2015) Structured learning algorithm for detection of nonobstructive and obstructive coronary plaque lesions from computed tomography angiography. J Med Imaging 2:014003

Dey D, Gaur S, Ovrehus KA, Slomka PJ, Betancur J, Goeller M et al (2018) Integrated prediction of lesion-specific ischaemia from quantitative coronary CT angiography using machine learning: a multicentre study. Eur Radiol 28:2655–2664

Zreik M, van Hamersvelt RW, Wolterink JM, Leiner T, Viergever MA, Isgum I (2019) A recurrent CNN for automatic detection and classification of coronary artery plaque and stenosis in coronary CT angiography. IEEE Trans Med Imaging 38:1588–1598

Denzinger F et al (2020) Deep learning algorithms for coronary artery plaque characterisation from CCTA scans. In: Tolxdorff T, Deserno T, Handels H, Maier A, Maier-Hein K, Palm C (eds) Bildverarbeitung für die Medizin 2020. Informatik aktuell, Springer, Wiesbaden

Jawaid MM, Riaz A, Rajani R, Reyes-Aldasoro CC, Slabaugh G (2017) Framework for detection and localization of coronary non-calcified plaques in cardiac CTA using mean radial profiles. Comput Biol Med 89:84–95

Wei J, Zhou C, Chan HP, Chughtai A, Agarwal P, Kuriakose J et al (2014) Computerized detection of noncalcified plaques in coronary CT angiography: evaluation of topological soft gradient prescreening method and luminal analysis. Med Phys 41:081901

Kolossvary M, Karady J, Szilveszter B, Kitslaar P, Hoffmann U, Merkely B et al (2017) Radiomic features are superior to conventional quantitative computed tomographic metrics to identify coronary plaques with napkin-ring sign. Circ Cardiovasc Imaging 10:e006843

Benton SM Jr, Tesche C, De Cecco CN, Duguay TM, Schoepf UJ, Bayer RR 2nd (2018) Noninvasive derivation of fractional flow reserve from coronary computed tomographic angiography: a review. J Thorac Imaging 33:88–96

Schwartz FR, Koweek LM, Norgaard BL (2019) Current evidence in cardiothoracic imaging: computed tomography-derived fractional flow reserve in stable chest pain. J Thorac Imaging 34:12–17

Tang CX, Liu CY, Lu MJ, Schoepf UJ, Tesche C, Bayer RR 2nd et al (2019) CT FFR for ischemia-specific CAD with a new computational fluid dynamics algorithm: a Chinese multicenter study. JACC Cardiovasc Imaging 13(4):980–990

Itu L, Rapaka S, Passerini T, Georgescu B, Schwemmer C, Schoebinger M et al (2016) A machine-learning approach for computation of fractional flow reserve from coronary computed tomography. J Appl Physiol 121:42–52

Tesche C, Gray HN (2020) Machine learning and deep neural networks applications in coronary flow assessment: the case of computed tomography fractional flow reserve. J Thorac Imaging 35(Suppl 1):S66–S71

Coenen A, Kim YH, Kruk M, Tesche C, De Geer J, Kurata A et al (2018) Diagnostic accuracy of a machine-learning approach to coronary computed tomographic angiography-based fractional flow reserve: result from the MACHINE Consortium. Circ Cardiovasc Imaging 11:e007217

Tesche C, De Cecco CN, Baumann S, Renker M, McLaurin TW, Duguay TM et al (2018) Coronary CT angiography-derived fractional flow reserve: machine learning algorithm versus computational fluid dynamics modeling. Radiology 288:64

von Knebel Doeberitz PL, De Cecco CN, Schoepf UJ, Duguay TM, Albrecht MH, van Assen M et al (2018) Coronary CT angiography-derived plaque quantification with artificial intelligence CT fractional flow reserve for the identification of lesion-specific ischemia. Eur Radiol 29(5):2378–2387

Tang CX, Wang YN, Zhou F, Schoepf UJ, Assen MV, Stroud RE et al (2019) Diagnostic performance of fractional flow reserve derived from coronary CT angiography for detection of lesion-specific ischemia: a multi-center study and meta-analysis. Eur J Radiol 116:90–97

Tesche C, Otani K, De Cecco CN, Coenen A, De Geer J, Kruk M et al (2019) Influence of coronary calcium on diagnostic performance of machine learning CT-FFR: results from MACHINE Registry. JACC Cardiovasc Imaging 13(3):760–770

Baumann S, Renker M, Schoepf UJ, De Cecco CN, Coenen A, De Geer J et al (2019) Gender differences in the diagnostic performance of machine learning coronary CT angiography-derived fractional flow reserve—results from the MACHINE registry. Eur J Radiol 119:108657

Tesche C, Vliegenthart R, Duguay TM, De Cecco CN, Albrecht MH, De Santis D et al (2017) Coronary computed tomographic angiography-derived fractional flow reserve for therapeutic decision making. Am J Cardiol 120:2121–2127

von Knebel Doeberitz PL, De Cecco CN, Schoepf UJ, Albrecht MH, van Assen M, De Santis D et al (2019) Impact of coronary computerized tomography angiography-derived plaque quantification and machine-learning computerized tomography fractional flow reserve on adverse cardiac outcome. Am J Cardiol 124:1340–1348

Xiong G, Kola D, Heo R, Elmore K, Cho I, Min JK (2015) Myocardial perfusion analysis in cardiac computed tomography angiographic images at rest. Med Image Anal 24:77–89

Han D, Lee JH, Rizvi A, Gransar H, Baskaran L, Schulman-Marcus J et al (2018) Incremental role of resting myocardial computed tomography perfusion for predicting physiologically significant coronary artery disease: a machine learning approach. J Nucl Cardiol 25:223–233

Motwani M, Dey D, Berman DS, Germano G, Achenbach S, Al-Mallah MH et al (2016) Machine learning for prediction of all-cause mortality in patients with suspected coronary artery disease: a 5-year multicentre prospective registry analysis. Eur Heart J 38(7):500–507

van Rosendael AR, Maliakal G, Kolli KK, Beecy A, Al’Aref SJ, Dwivedi A et al (2018) Maximization of the usage of coronary CTA derived plaque information using a machine learning based algorithm to improve risk stratification; insights from the CONFIRM registry. J Cardiovasc Comput Tomogr 12:204–209

van Assen M, Varga-Szemes A, Schoepf UJ, Duguay TM, Hudson HT, Egorova S et al (2019) Automated plaque analysis for the prognostication of major adverse cardiac events. Eur J Radiol 116:76–83

Johnson KM, Johnson HE, Zhao Y, Dowe DA, Staib LH (2019) Scoring of coronary artery disease characteristics on coronary CT angiograms by using machine learning. Radiology 292:354–362

Nous FMA, Coenen A, Boersma E, Kim YH, Kruk MBP, Tesche C et al (2019) Comparison of the diagnostic performance of coronary computed tomography angiography-derived fractional flow reserve in patients with versus without diabetes mellitus (from the MACHINE Consortium). Am J Cardiol 123:537–543

Ethics declarations

Conflict of interest

U.J.S. and C.N.D.C. receive institutional research support and/or honoraria for speaking and consulting from Bayer, Bracco, Elucid BioImaging, Guerbet, HeartFlow Inc., and Siemens Healthineers. C.T. receives honoraria for speaking and consulting from HeartFlow Inc. and Siemens Healthineers.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Brandt, V., Emrich, T., Schoepf, U.J. et al. Ischemia and outcome prediction by cardiac CT based machine learning. Int J Cardiovasc Imaging 36, 2429–2439 (2020). https://doi.org/10.1007/s10554-020-01929-y
