Abstract

We study linear dissipative Hamiltonian (DH) systems with real constant coefficients that arise in energy-based modeling of dynamical systems. We analyze when such a system is on the boundary of the region of asymptotic stability, i.e., when it has purely imaginary eigenvalues, or how much the dissipation term has to be perturbed to be on this boundary. For unstructured systems the explicit construction of the real distance to instability (real stability radius) has been a challenging problem. We analyze this real distance under different structured perturbations to the dissipation term that preserve the DH structure and we derive explicit formulas for this distance in terms of low rank perturbations. We also show (via numerical examples) that under real structured perturbations to the dissipation the asymptotic stability of a DH system is much more robust than for unstructured perturbations.


1 Introduction

We study linear time-invariant systems with real coefficients. When a physical system is energy preserving, a mathematical model should reflect this property, which is characterized by the system being Hamiltonian. If energy dissipates from the system, then it becomes a dissipative Hamiltonian (DH) system, which in the linear time-invariant case can be expressed as

$$\begin{aligned} \dot{x}=(J-R)Qx, \end{aligned}$$ (1.1)

where the function \(x\mapsto x^T Qx\), with \(Q=Q^T\in {\mathbb {R}}^{n,n}\) positive definite, describes the energy of the system, \(J=-J^T \in {\mathbb {R}}^{n,n}\) is the structure matrix that describes the energy flux among energy storage elements, and \(R\in {\mathbb {R}}^{n,n}\) with \(R=R^T\) positive semidefinite is the dissipation matrix that describes energy dissipation in the system.

Dissipative Hamiltonian systems are special cases of port-Hamiltonian systems, which have recently received a lot of attention in energy-based modeling, see, e.g., [3, 8, 19, 21, 22, 26, 27, 28, 29, 30]. An important property of DH systems is that they are stable, which in the time-invariant case can be characterized by the property that all eigenvalues of \(A=(J-R)Q\) are contained in the closed left half complex plane and all eigenvalues on the imaginary axis are semisimple, see, e.g., [20] for a simple proof which immediately carries over to the real case discussed here.
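The stability property can be observed numerically. The following is a minimal sketch, assuming NumPy and a hypothetical \(4\times 4\) example (the matrices `J`, `R`, `Q` are illustration data and not taken from any application): it forms \(A=(J-R)Q\), checks the energy balance \(QA+A^TQ=-2QRQ\), and inspects the largest real part of the spectrum.

```python
import numpy as np

def dh_matrix(J, R, Q):
    """Return A = (J - R) Q for a dissipative Hamiltonian system."""
    return (J - R) @ Q

# Hypothetical example data: J skew-symmetric, R symmetric PSD, Q symmetric PD.
J = np.array([[0.0, 1.0, 0.0, 0.0],
              [-1.0, 0.0, 0.0, 0.0],
              [0.0, 0.0, 0.0, 2.0],
              [0.0, 0.0, -2.0, 0.0]])
R = np.diag([0.5, 0.0, 0.3, 0.0])
Q = np.diag([1.0, 2.0, 1.0, 0.5])

A = dh_matrix(J, R, Q)

# Energy balance: d/dt (x^T Q x) = x^T (Q A + A^T Q) x = -2 x^T Q R Q x <= 0.
energy_balance_ok = np.allclose(Q @ A + A.T @ Q, -2 * Q @ R @ Q)

# All eigenvalues lie in the closed left half plane.
max_real_part = np.linalg.eigvals(A).real.max()
print(energy_balance_ok, max_real_part)
```

In this particular example the dissipation reaches every oscillatory mode, so the largest real part is strictly negative and the system is even asymptotically stable.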

For general unstructured systems \(\dot{x}=Ax\), if A has purely imaginary eigenvalues, then arbitrarily small perturbations (arising e.g., from linearization errors, discretization errors, or data uncertainties) may move eigenvalues into the right half plane and thus make the system unstable. So stability of the system can only be guaranteed when the system has a reasonable distance to instability, see [2, 5, 11, 12, 14, 31], and the discussion below.

When the coefficients of the system are real and it is clear that the perturbations are real as well, then the complex distance to instability is not the right measure, since typically the distance to instability under real perturbations is larger than the complex distance. A formula for the real distance under general perturbations was derived in the ground-breaking paper [23]. The computation of this real distance to instability (or real stability radius) is an important topic in many applications, see e.g., [10, 17, 18, 24, 25, 32].

In this paper, we study the distance to instability under real (structure preserving) perturbations for DH systems and we restrict ourselves to the case that only the dissipation matrix R is perturbed, because this is the part of the model that is usually most uncertain, due to the fact that modeling damping or friction is extremely difficult, see [10] and Example 1.1 below. Furthermore, the analysis of the perturbations in the matrices Q, J is not completely clear at this stage.

Since DH systems are stable, it is clear that perturbations that preserve the DH structure also preserve the stability. However, DH systems may not be asymptotically stable, since they may have purely imaginary eigenvalues, which happens e.g., when the dissipation matrix R vanishes. So in the case of DH systems, we discuss when the system is robustly asymptotically stable, i.e., small real and structure-preserving perturbations \(\varDelta _R\) to the coefficient matrix R keep the system asymptotically stable, and we determine the smallest perturbations that move the system to the boundary of the set of asymptotically stable systems. Motivated by an application in the area of disk brake squeal, we consider restricted structure-preserving perturbations of the form \(\varDelta _R= B\varDelta B^T\), where \(B\in {\mathbb {R}}^{n,r}\) is a so-called restriction matrix of full column rank. This restriction allows the consideration of perturbations that only affect selected parts of the matrix R. (We mention that perturbations of the form \(B\varDelta B^T\) should be called structured perturbations by the convention following [13], but we prefer the term restricted here, because of the danger of confusion with the term structure-preserving for perturbations that preserve the DH structure.)

Example 1.1

Consider as motivating example the finite element analysis of disk brake squeal [10], which leads to large scale differential equations of the form

where \(M=M^T>0\) is the mass matrix, \(D=D^T\ge 0\) models material and friction induced damping, \(G=-G^T\) models gyroscopic effects, \(K=K^T>0\) models the stiffness, and N is a nonsymmetric matrix modeling circulatory effects. Here \(\dot{q}\) denotes the derivative with respect to time. An appropriate first order formulation is associated with the linear system \(\dot{z}= (J-R)Qz\), where

This system is in general not a DH system, since for \(N\ne 0\) the matrix R is indefinite. But since brake squeal is associated with the eigenvalues in the right half plane, it is an important question for which perturbations the system stays stable. This instability can be associated with a restricted indefinite perturbation

to the matrix that contains only the damping. Note that \(\varDelta R\) is subject to further restrictions, since only a small part of the finite elements is associated with the brake pad and thus the matrix N has only a small number of non-zeros associated with these finite elements and only these occur in the perturbation. If this perturbation is smaller than the smallest perturbation which makes the system have purely imaginary eigenvalues, then the system will stay stable. This example motivates our problem. A much harder problem, which we do not address, is a different restriction of the form \(B \varDelta _N C+ C^T \varDelta _N^T B^T\), which would be more appropriate for this example.

Consider a real linear time-invariant dissipative Hamiltonian (DH) system of the form (1.1). For perturbations in the dissipation matrix we define the following real distances to instability.

Definition 1.1

Consider a real DH system of the form (1.1) and let \(B\in {\mathbb {R}}^{n, r}\) and \(C \in {\mathbb {R}}^{q , n}\) be given restriction matrices. Then for \(p\in \{2,F\}\) we define the following stability radii.

(1) The stability radius \(r_{{{\mathbb {R}}},p}(R;B,C)\) of system (1.1) with respect to real general perturbations to R under the restriction (B, C) is defined by

$$\begin{aligned} r_{{{\mathbb {R}}},p}(R;B,C)\!:=\!\inf \left\{ {\Vert \varDelta \Vert _p}\,\Big |\,\varDelta \!\in \! {\mathbb {R}}^{r , q} ,~ \Lambda \big ((J-R)Q-(B\varDelta C)Q\big )\!\cap \! i{\mathbb {R}}\!\ne \!\emptyset \right\} . \end{aligned}$$

(2) The stability radius \(r_{{{\mathbb {R}}},p}^{{\mathcal {S}}_d}(R;B)\) of system (1.1) with respect to real structure-preserving semidefinite perturbations from the set

$$\begin{aligned}&{\mathcal {S}}_d(R,B):=\left\{ \varDelta \in {\mathbb {R}}^{r , r}\,\big |\,\varDelta ^T=\varDelta \le 0~~\mathrm{and}~~(R+B\varDelta B^T)\ge 0\right\} \end{aligned}$$

under the restriction \((B,B^T)\) is defined by

$$\begin{aligned}&r_{{{\mathbb {R}}},p}^{{\mathcal {S}}_d}(R;B)\\&\quad :=\inf \left\{ {\Vert \varDelta \Vert }_p\,\Big |~\varDelta \in {\mathcal {S}}_d(R,B) ,\, \Lambda \left( (J-R)Q-(B\varDelta B^T)Q\right) \cap i{\mathbb {R}}\ne \emptyset \right\} . \end{aligned}$$

(3) The stability radius \(r_{{{\mathbb {R}}},p}^{{\mathcal {S}}_i}(R;B)\) of system (1.1) with respect to structure-preserving indefinite perturbations from the set

$$\begin{aligned}&{\mathcal {S}}_i(R,B):=\left\{ \varDelta \in {\mathbb {R}}^{r , r}\,\big |\, \varDelta ^T=\varDelta ~~\mathrm{and}~~(R+B\varDelta B^T)\ge 0\right\} \end{aligned}$$

under the restriction \((B,B^T)\) is defined by

$$\begin{aligned}&r_{{{\mathbb {R}}},p}^{{\mathcal {S}}_i}(R;B)\\&\quad :=\inf \left\{ {\Vert \varDelta \Vert }_p\,\Big |~\varDelta \in {\mathcal {S}}_i(R,B) ,\, \Lambda \left( (J-R)Q-(B\varDelta B^T)Q\right) \cap i{\mathbb {R}}\ne \emptyset \right\} . \end{aligned}$$
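The two conditions that enter these definitions can be tested for a given candidate \(\varDelta \). The following is a minimal sketch, assuming NumPy; the helper names `is_in_S_d` and `hits_imaginary_axis`, the tolerances, and the \(2\times 2\) example are hypothetical. It does not compute a stability radius; it only checks whether one perturbation is admissible and whether it moves an eigenvalue onto the imaginary axis.

```python
import numpy as np

def is_in_S_d(Delta, R, B, tol=1e-10):
    """Check Delta = Delta^T <= 0 and R + B Delta B^T >= 0 (up to tol)."""
    symmetric = np.allclose(Delta, Delta.T)
    neg_semidef = np.linalg.eigvalsh(Delta).max() <= tol
    pos_semidef = np.linalg.eigvalsh(R + B @ Delta @ B.T).min() >= -tol
    return bool(symmetric and neg_semidef and pos_semidef)

def hits_imaginary_axis(J, R, Q, B, Delta, tol=1e-8):
    """True if (J - R)Q - (B Delta B^T)Q has an eigenvalue with |Re| <= tol."""
    A_pert = (J - R) @ Q - (B @ Delta @ B.T) @ Q
    return bool(np.abs(np.linalg.eigvals(A_pert).real).min() <= tol)

# Hypothetical 2x2 example: damping acts only on the first state.
J = np.array([[0.0, 1.0], [-1.0, 0.0]])
R = np.diag([0.4, 0.0])
Q = np.eye(2)
B = np.array([[1.0], [0.0]])

Delta_small = np.array([[-0.2]])   # removes half of the damping
Delta_full = np.array([[-0.4]])    # removes all of the damping

print(is_in_S_d(Delta_full, R, B), hits_imaginary_axis(J, R, Q, B, Delta_full))
print(hits_imaginary_axis(J, R, Q, B, Delta_small))
```

In this toy example every admissible scalar \(\varDelta \in [-0.4,0]\) leaves some damping on the first state except \(\varDelta =-0.4\), so the norm \(0.4\) of `Delta_full` is an upper bound for \(r_{{\mathbb {R}},p}^{{\mathcal {S}}_d}(R;B)\) that no smaller admissible perturbation improves.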

To derive the real structured stability radii, we will follow the strategy in [20] for the complex case and reformulate the problem of computing \(r_{{{\mathbb {R}}},p}^{{\mathcal {S}}_d}(R;B)\) or \(r_{{{\mathbb {R}}},p}^{{\mathcal {S}}_i}(R;B)\) in terms of real structured mapping problems. The following lemma of [20] (expressed here for real matrices) gives a characterization when a DH system of the form (1.1) has eigenvalues on the imaginary axis.

Lemma 1.1

([20, Lemma 3.1]) Let \(J,R,Q \in {\mathbb {R}}^{n,n}\) be such that \(J^T=-J, R^T=R \) positive semidefinite and \(Q^T=Q \) positive definite. Then \((J-R)Q\) has an eigenvalue on the imaginary axis if and only if \(RQx=0\) for some eigenvector x of JQ. Furthermore, all purely imaginary eigenvalues of \((J-R)Q\) are semisimple.
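The equivalence in Lemma 1.1 can be illustrated numerically. The following is a minimal sketch, assuming NumPy; the function names, tolerances, and the \(4\times 4\) data are hypothetical. Because eigenvalues of JQ may be multiple, the check does not rely on the particular eigenvectors returned by a solver; instead it tests whether \(JQ-\lambda I\) and RQ share a nontrivial null vector.

```python
import numpy as np

def has_common_null_vector(J, R, Q, tol=1e-8):
    """Check whether R Q x = 0 for some eigenvector x of J Q.

    For an eigenvalue lam of J Q, such an x exists exactly when the stacked
    matrix [J Q - lam I; R Q] is rank deficient, i.e. when its smallest
    singular value vanishes.
    """
    n = J.shape[0]
    JQ, RQ = J @ Q, R @ Q
    for lam in np.linalg.eigvals(JQ):
        stacked = np.vstack([JQ - lam * np.eye(n), RQ])
        if np.linalg.svd(stacked, compute_uv=False).min() <= tol:
            return True
    return False

def has_imaginary_eigenvalue(J, R, Q, tol=1e-8):
    """True if (J - R) Q has an eigenvalue with |Re| <= tol."""
    eigs = np.linalg.eigvals((J - R) @ Q)
    return bool(np.abs(eigs.real).min() <= tol)

# Hypothetical data: two decoupled oscillators.
J2 = np.array([[0.0, 1.0], [-1.0, 0.0]])
J = np.block([[J2, np.zeros((2, 2))], [np.zeros((2, 2)), J2]])
Q = np.eye(4)
R_a = np.diag([1.0, 1.0, 0.0, 0.0])  # second oscillator is undamped
R_b = np.diag([1.0, 0.0, 1.0, 0.0])  # both oscillators are damped

for R in (R_a, R_b):
    print(has_common_null_vector(J, R, Q), has_imaginary_eigenvalue(J, R, Q))
```

For `R_a` both tests succeed (the undamped oscillator contributes the eigenvalues \(\pm i\)), while for `R_b` both fail, in agreement with the lemma.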

The new mapping theorems for real DH systems will be derived in Sect. 2. Based on these mapping theorems the stability radii for real DH systems under perturbations in the dissipation matrix are studied in Sect. 3 and the results are illustrated with numerical examples in Sect. 4.

In the following \(\Vert \cdot \Vert \) denotes the spectral norm of a vector or a matrix while \({\Vert \cdot \Vert }_F\) denotes the Frobenius norm of a matrix. By \(\Lambda (A)\) we denote the spectrum of a matrix \(A\in {\mathbb {R}}^{n , n}\), where \({\mathbb {R}}^{n , r}\) is the set of real \(n\times r\) matrices, with the special case \({\mathbb {R}}^{n}={\mathbb {R}}^{n , 1}\). For \(A=A^T\in {\mathbb {R}}^{n , n}\), we use the notation \(A\ge 0\) and \(A\le 0\) if A is positive or negative semidefinite, respectively, and \(A > 0\) if A is positive definite. The Moore-Penrose pseudoinverse of a matrix \(A\in {\mathbb {R}}^{n , r}\) is denoted by \(A^\dagger \), see e.g., [9].

2 Mapping theorems

As in the complex case that was treated in [20], the main tool in the computation of stability radii for real DH systems will be minimal norm solutions to structured mapping problems. To construct such mappings in the case of real matrices, and to deal with pairs of complex conjugate eigenvalues and their eigenvectors, we will need general mapping theorems for maps that map several real vectors \(x_1,\ldots ,x_m\) to vectors \(y_1,\ldots ,y_m\). We will first study the complex case and then use these results to construct corresponding mappings in the real case.

For given \(X \in {\mathbb {C}}^{m , p}, Y \in {\mathbb {C}}^{n , p}, Z \in {\mathbb {C}}^{n , k}\), and \(W \in {\mathbb {C}}^{m , k}\), the general mapping problem, see [16], asks under which conditions on X, Y, Z, and W the set

$$\begin{aligned} {\mathcal {S}}:=\left\{ A\in {\mathbb {C}}^{n , m}\,\big |\, AX=Y,~A^HZ=W\right\} \end{aligned}$$

is nonempty, and if it is, then to construct the minimal spectral or Frobenius norm elements from \({\mathcal {S}}\). To solve this mapping problem, we will need the following well-known result.

Lemma 2.1

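([4]) Let A, B, C be given complex matrices of appropriate dimensions. Then for any positive number \(\mu \) satisfying

$$\begin{aligned} \mu \ge \max \left\{ \left\| \begin{bmatrix} A \\ B \end{bmatrix} \right\| ,\, \left\| \begin{bmatrix} A&C \end{bmatrix} \right\| \right\} \end{aligned}$$

there exists a complex matrix D of appropriate dimensions such that

$$\begin{aligned} \left\| \begin{bmatrix} A&C \\ B&D \end{bmatrix} \right\| \le \mu . \end{aligned}$$ (2.1)

Furthermore, any matrix D satisfying (2.1) is of the form

$$\begin{aligned} D=-KA^HL+\mu {(I-KK^H)}^{\frac{1}{2}}Z{(I-L^HL)}^{\frac{1}{2}}, \end{aligned}$$

where \(K^H = (\mu ^2 I - A^HA)^{-1/2}B^H, L = (\mu ^2 I - AA^H)^{-1/2}C\), and Z is an arbitrary contraction, i.e., \(\Vert Z \Vert \le 1\).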

Using Lemma 2.1 we obtain the following solution to the general mapping problem.

Theorem 2.1

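Let \(X \in {\mathbb {C}}^{m , p}, Y \in {\mathbb {C}}^{n , p}, Z \in {\mathbb {C}}^{n , k}\), and \(W \in {\mathbb {C}}^{m , k}\). Then the set

$$\begin{aligned} {\mathcal {S}}:=\left\{ A\in {\mathbb {C}}^{n , m}\,\big |\, AX=Y,~A^HZ=W\right\} \end{aligned}$$

is nonempty if and only if \(X^HW=Y^HZ,\) \(YX^{\dagger }X = Y\), and \(WZ^{\dagger }Z = W\). If this is the case, then

$$\begin{aligned} {\mathcal {S}}=\left\{ YX^{\dagger }+{(WZ^\dagger )}^H- {(WZ^\dagger )}^H XX^{\dagger }+(I-ZZ^{\dagger })C(I-XX^{\dagger })\,\big |\, C\in {\mathbb {C}}^{n , m}\right\} . \end{aligned}$$ (2.2)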

Furthermore, we have the following minimal-norm solutions to the mapping problem \(AX=Y\) and \(A^HZ=W\):

(1) The matrix

$$\begin{aligned} G:=YX^{\dagger }+{(WZ^\dagger )}^H- {(WZ^\dagger )}^H XX^{\dagger } \end{aligned}$$

is the unique matrix from \({\mathcal {S}}\) of minimal Frobenius norm

$$\begin{aligned} \Vert G\Vert _F=\sqrt{ \Vert YX^{\dagger }\Vert _F^2+\Vert WZ^{\dagger }\Vert _F^2- {\text {trace}}((WZ^{\dagger })(WZ^{\dagger })^H X X^{\dagger })}=\inf _{A \in {\mathcal {S}}} \Vert A\Vert _F. \end{aligned}$$

(2) The minimal spectral norm of elements from \({\mathcal {S}}\) is given by

$$\begin{aligned} \mu :=\max \left\{ \Vert YX^{\dagger }\Vert ,\, \Vert WZ^{\dagger }\Vert \right\} =\inf _{A \in {\mathcal {S}}} {\Vert A\Vert }. \end{aligned}$$ (2.3)

Moreover, assume that \({\text {rank}}(X)=r_1\) and \({\text {rank}}(Z)=r_2\). If \(X=U \varSigma V^H\) and \(Z={\widehat{U}} {\widehat{\varSigma }} {{\widehat{V}}}^H\) are singular value decompositions of X and Z, respectively, where \(U=[U_1,\, U_2]\) with \(U_1 \in {\mathbb {C}}^{m , r_1}\) and \({\widehat{U}}=[{{\widehat{U}}}_1,\, {{\widehat{U}}}_2]\) with \({{\widehat{U}}}_1 \in {\mathbb {C}}^{n , r_2}\), then the infimum in (2.3) is attained by the matrix

$$\begin{aligned} F:=YX^{\dagger }+{(WZ^\dagger )}^H- {(WZ^\dagger )}^H XX^{\dagger }+ (I-ZZ^{\dagger }){{\widehat{U}}}_2 C U_2^H(I-XX^{\dagger }), \end{aligned}$$

where

$$\begin{aligned} C= & {} -K(U_1^H(YX^{\dagger }){{\widehat{U}}}_1) L+\mu {(I-KK^H)}^{\frac{1}{2}}P{(I-L^HL)}^{\frac{1}{2}},\\ K= & {} \left[ (\mu ^2 I - U_1^H (YX^{\dagger })^H ZZ^{\dagger }(YX^{\dagger })U_1)^{-\frac{1}{2}}({{\widehat{U}}}_2^H Y X^{\dagger }U_1 )\right] ^H,\\ L= & {} {\left( \mu ^2 I - {{\widehat{U}}}_1^H (YX^{\dagger }){(YX^{\dagger })}^H{{\widehat{U}}}_1\right) } ^{-\frac{1}{2}}\left( {{\widehat{U}}}_1^H {(WZ^{\dagger })}^HU_2\right) , \end{aligned}$$

and P is an arbitrary contraction.
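The Frobenius-norm part of Theorem 2.1 lends itself to a direct numerical check. The following is a minimal sketch, assuming NumPy and hypothetical random data: a matrix `A0` is drawn first and Y, W are generated from it, so that the solvability conditions hold by construction. The sketch evaluates G, the stated norm formula, and the spectral lower bound \(\mu \) from (2.3); the spectral-norm minimizer F is not constructed.

```python
import numpy as np

rng = np.random.default_rng(0)
n, m, p, k = 5, 4, 2, 2

# Hypothetical consistent data: Y = A0 X and W = A0^H Z, so S contains A0.
A0 = rng.standard_normal((n, m)) + 1j * rng.standard_normal((n, m))
X = rng.standard_normal((m, p)) + 1j * rng.standard_normal((m, p))
Z = rng.standard_normal((n, k)) + 1j * rng.standard_normal((n, k))
Y = A0 @ X
W = A0.conj().T @ Z

Xp = np.linalg.pinv(X)            # X^dagger, shape (p, m)
Zp = np.linalg.pinv(Z)            # Z^dagger, shape (k, n)
YX = Y @ Xp                       # Y X^dagger, shape (n, m)
WZ = W @ Zp                       # W Z^dagger, shape (m, n)

# Minimal Frobenius norm solution G of A X = Y, A^H Z = W.
G = YX + WZ.conj().T - WZ.conj().T @ X @ Xp

# Norm formula from item (1) and spectral lower bound mu from item (2).
fro_formula = np.sqrt(np.linalg.norm(YX, 'fro')**2
                      + np.linalg.norm(WZ, 'fro')**2
                      - np.trace(WZ @ WZ.conj().T @ X @ Xp).real)
mu = max(np.linalg.norm(YX, 2), np.linalg.norm(WZ, 2))

print(np.linalg.norm(G, 'fro'), fro_formula, np.linalg.norm(A0, 'fro'), mu)
```

Since `A0` itself belongs to \({\mathcal {S}}\), its Frobenius norm cannot be smaller than that of G and its spectral norm cannot be smaller than \(\mu \).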

Proof

Suppose that \({\mathcal {S}}\) is nonempty. Then there exists \(A \in {\mathbb {C}}^{n , m}\) such that \(A X = Y\) and \(A^HZ = W\). This implies that \(X^HW = Y^HZ\). Using the properties of the Moore-Penrose pseudoinverse, we have that \(YX^\dagger X=A X X^\dagger X=A X=Y\) and \(WZ^\dagger Z=A^H Z Z^\dagger Z=A^H Z=W\).

Conversely, suppose that \(X^HW = Y^HZ, YX^\dagger X = Y\), and \(WZ^\dagger Z = W\). Then \({\mathcal {S}}\) is nonempty, since for any \(C \in {\mathbb {C}}^{n , m}\) we have

In particular, this proves the inclusion “\(\supseteq \)” in (2.2). To prove the other direction “\(\subseteq \)”, let \(X=U \varSigma V^H\) and \(Z={\widehat{U}} {\widehat{\varSigma }} {{\widehat{V}}}^H\) be singular value decompositions of X and Z, respectively, partitioned as in (2), with \(\varSigma _1 \in {\mathbb {R}}^{r_1 , r_1}\) and \(\widehat{\varSigma }_1 \in {\mathbb {R}}^{r_2 , r_2}\) being the leading principal submatrices of \(\varSigma \) and \(\widehat{\varSigma }\), respectively. Let \(A \in {\mathcal {S}}\) and set

where \(A_{11} \in {\mathbb {C}}^{r_1 , r_2},\, A_{12} \in {\mathbb {C}}^{r_1 , m-r_2}, A_{21} \in {\mathbb {C}}^{n-r_1, r_2}\) and \(A_{22} \in {\mathbb {C}}^{n-r_1 , m - r_2}\). Then \({\Vert A\Vert }_{F}={\Vert \widehat{A}\Vert }_{F}\) and \({\Vert A\Vert }={\Vert {\widehat{A}}\Vert }\). Multiplying \({\widehat{U}} {\widehat{A}} U^HX=A X=Y\) by \({\widehat{U}}^H\) from the left, we obtain

which implies that

Analogously, multiplying \(U{\widehat{A}}^H{\widehat{U}}^HZ=A^HZ=W\) from the left by \(U^H\), we obtain that

This implies that

Equating the two expressions for \(A_{11}\) from (2.5) and (2.6), we obtain

which shows that \(X^HW=Y^HZ\). Furthermore, we get

Thus, using \(X^\dagger U_1U_1^H=X^\dagger , Z^\dagger {\widehat{U}}_1{\widehat{U}}_1^H=Z^\dagger \), and \(U_1U_1^H=XX^\dagger \), we obtain

(1) In view of (2.7) and using that \(X^{\dagger }U_2=0\) and \({\widehat{U}}_2^H(Z^{\dagger })^H=0\), we obtain that

Thus, by setting \(A_{22}=0\) in (2.8), we obtain a unique element of \({\mathcal {S}}\) which minimizes the Frobenius norm giving

(2) By definition of \(\mu \), we have

Then it follows that for any \(A \in {\mathcal {S}}\), we have \(\Vert A\Vert =\Vert {\widehat{A}}\Vert \ge \mu \) with \({\widehat{A}}\) as in (2.4). By Lemma 2.1 there exist matrices \(A\in {\mathcal {S}}\) with \(\Vert A\Vert \le \mu \), i.e., \(\inf _{A \in {\mathcal {S}}} \Vert A\Vert =\mu \). Furthermore, by Lemma 2.1 this infimum is attained with a matrix A as in (2.8), where

and P is an arbitrary contraction. Hence the assertion follows by setting \(C = A_{22}\). \(\square \)

The special case \(p=k=1\) of Theorem 2.1 was originally obtained in [15, Theorem 2]. The next result characterizes the Hermitian positive semidefinite matrices that map a given \(X\in {\mathbb {C}}^{n,m}\) to a given \(Y\in {\mathbb {C}}^{n,m}\), and we include the solutions that are minimal with respect to the spectral or Frobenius norm. This generalizes [20, Theorem 2.3] which only covers the case \(m=1\).

Theorem 2.2

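Let \(X,\,Y\in {\mathbb {C}}^{n,m}\) be such that \({\text {rank}}(Y)=m\). Define

$$\begin{aligned} {\mathcal {S}}:=\left\{ H\in {\mathbb {C}}^{n,n}\,\big |\, H^H=H\ge 0,~HX=Y\right\} . \end{aligned}$$

Then there exists \(H=H^H\ge 0\) such that \(HX=Y\) if and only if \(X^HY=Y^HX\) and \(X^HY > 0\). If this holds, then

$$\begin{aligned} {\widetilde{H}}:=Y{(X^HY)}^{-1}Y^H \end{aligned}$$ (2.9)

is well-defined and Hermitian positive-semidefinite, and

$$\begin{aligned} {\mathcal {S}}=\left\{ {\widetilde{H}}+(I-XX^\dagger )K^HK(I-XX^\dagger )\,\big |\, K\in {\mathbb {C}}^{n,n}\right\} . \end{aligned}$$ (2.10)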

Furthermore, we have the following minimal norm solutions to the mapping problem \(HX=Y\):

(1) The matrix \({\widetilde{H}}\) from (2.9) is the unique matrix from \({\mathcal {S}}\) with minimal Frobenius norm

$$\begin{aligned} \min \left\{ \,\Vert H\Vert _F\; \big |\; H\in {\mathcal {S}}\right\} =\Vert Y{(X^HY)}^{-1}Y^H\Vert _F. \end{aligned}$$

(2) The minimal spectral norm of elements from \({\mathcal {S}}\) is given by

$$\begin{aligned} \min \left\{ \,\Vert H\Vert \; \big |\; H\in {\mathcal {S}}\right\} =\Vert Y{(X^HY)}^{-1}Y^H\Vert \end{aligned}$$

and the minimum is attained for the matrix \({\widetilde{H}}\) from (2.9).
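The positive semidefinite mapping of Theorem 2.2 can be checked in the same spirit. The following is a minimal sketch, assuming NumPy and hypothetical random data: a Hermitian positive definite `H0` is drawn first and Y is generated as `H0 @ X`, so that the solvability conditions hold by construction. The matrix \(Y(X^HY)^{-1}Y^H\) is then compared with `H0` in both norms.

```python
import numpy as np

rng = np.random.default_rng(1)
n, m = 5, 2

# Hypothetical data: H0 Hermitian positive definite, Y = H0 X of full column rank.
M = rng.standard_normal((n, n)) + 1j * rng.standard_normal((n, n))
H0 = M.conj().T @ M
X = rng.standard_normal((n, m)) + 1j * rng.standard_normal((n, m))
Y = H0 @ X

# Solvability conditions: X^H Y Hermitian and positive definite.
XhY = X.conj().T @ Y
conditions_hold = (np.allclose(XhY, XhY.conj().T)
                   and np.linalg.eigvalsh(XhY).min() > 0)

# Minimal norm solution H_tilde = Y (X^H Y)^{-1} Y^H, formed via a linear solve.
H_tilde = Y @ np.linalg.solve(XhY, Y.conj().T)

print(conditions_hold)
print(np.linalg.norm(H_tilde, 2), np.linalg.norm(H0, 2))
print(np.linalg.norm(H_tilde, 'fro'), np.linalg.norm(H0, 'fro'))
```

The solution has rank m, whereas `H0` has full rank; both map X to Y, and the low rank solution has the smaller norm.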

Proof

If \(H \in {\mathcal {S}}\), then \(X^HY=X^HHX=(HX)^HX=Y^HX\) and \(X^HY=X^HHX \ge 0\), since \(H^H=H \ge 0\). If \(X^HHX\) were singular, then there would exist a vector \(v \in {\mathbb {C}}^m{\setminus }\{0\}\) such that \(v^HX^HHXv=0\) and, hence, \(Yv=H Xv=0\) (as \(H\ge 0\)) in contradiction to the assumption that Y is of full rank. Thus, \(X^HY > 0\).

Conversely, let \(X^HY=Y^HX> 0\) (which implies that also \((X^HY)^{-1}>0\)). Then \({\widetilde{H}}\) in (2.9) is well-defined. Clearly \({\widetilde{H}}X=Y\) and \({\widetilde{H}}=Y{(Y^HX)}^{-1}Y^H \ge 0\), which implies that \({\widetilde{H}} \in {\mathcal {S}}\). Let

be as in (2.10) for some \(K \in {\mathbb {C}}^{n,n}\). Then clearly \(H^H=H, HX=Y\) and also \(H \ge 0\), as it is the sum of two positive semidefinite matrices. This proves the inclusion “\(\supseteq \)” in (2.10). For the converse inclusion, let \(H \in {\mathcal {S}}\). Since \(H\ge 0\), we have that \(H=A^HA\) for some \(A \in {\mathbb {C}}^{n,n}\). Therefore, \(HX=Y\) implies that \((A^HA) X=Y\), and setting \(Z=A X\), we have \(AX=Z\) and \(A^H Z=Y\). Since \({\text {rank}}(Y)=m\), we necessarily also have that \({\text {rank}}(Z)=m\) and \({\text {rank}}(X)=m\). Therefore, by Theorem 2.1, A can be written as

for some \(C\in {\mathbb {C}}^{n,n}\). Note that

since \((ZZ^\dagger )^H=ZZ^\dagger \) and \(Y^HX=Z^HZ\). By inserting (2.12) in (2.11), we obtain that

and thus,

where the last equality follows since

Setting \(K=(I-ZZ^\dagger )C\) in (2.13) and using

proves the inclusion “\(\subseteq \)” in (2.10).

(1) If \(H \in {\mathcal {S}}\), then \(X^HY=Y^HX > 0\) and we obtain

for some \(K\in {\mathbb {C}}^{n,n}\). By using the formula \({\Vert BB^H+D^HD\Vert }_F^2={\Vert BB^H\Vert }_F^2+2{\Vert DB\Vert }_F^2+{\Vert D^HD\Vert }_F^2\) for \(B=Y(Y^HX)^{-1/2}\) and \(D=K(I-XX^\dagger )\), we obtain

Hence, setting \(K=0\), we obtain \( {\widetilde{H}} =Y(Y^HX)^{-1}Y^H\) as the unique minimal Frobenius norm solution.

(2) Let \(H \in {\mathcal {S}}\) be of the form (2.14) for some \(K \in {\mathbb {C}}^{n,n}\). Since \(Y(Y^HX)^{-1}Y^H\ge 0\) and \((I-XX^\dagger )K^HK(I-XX^\dagger )\ge 0\), we have

This implies that

One possible choice for obtaining equality in (2.15) is \(K=0\) which gives \({\widetilde{H}}=Y(Y^HX)^{-1}Y^H\in {\mathcal {S}}\) and \(\Vert {\widetilde{H}}\Vert =\Vert Y(Y^HX)^{-1}Y^H\Vert =\min \limits _{H\in {\mathcal {S}}} \Vert H\Vert \). \(\square \)

Although the complex versions of the mapping theorems seem to be of independent interest, we will only apply them in this paper to obtain corresponding results for the case of real perturbations. Here, “real” refers to the mappings, but not to the vectors that are mapped, because we need to apply the mapping theorems to eigenvectors which may be complex even if the matrix under consideration is real.

Remark 2.1

If \(X\in {\mathbb {C}}^{m,p}, Y\in {\mathbb {C}}^{n,p}, Z\in {\mathbb {C}}^{n,k}, W\in {\mathbb {C}}^{m,k}\) are such that \(\mathrm{rank}([X~{\bar{X}}])=2p\) and \(\mathrm{rank}([Z~{\bar{Z}}])=2k\) (or, equivalently, \(\mathrm{rank}([\mathop {\mathrm {Re}}X~\mathop {\mathrm {Im}}X])=2p\) and \(\mathrm{rank}([\mathop {\mathrm {Re}}Z~\mathop {\mathrm {Im}}Z])=2k\)), then minimal norm solutions to the mapping problem \(AX=Y\) and \(A^HZ=W\) with \(A\in {\mathbb {R}}^{n,m}\) can easily be obtained from Theorem 2.1. This follows from the observation that with \(AX=Y\) and \(A^HZ=W\) we also have \(A\overline{X}=\overline{Y}\) and \(A^H\overline{Z}=\overline{W}\) and thus

We can then apply Theorem 2.1 to the real matrices \({\mathcal {X}}=[\mathop {\mathrm {Re}}X~\mathop {\mathrm {Im}}X], {\mathcal {Y}}=[\mathop {\mathrm {Re}}Y~\mathop {\mathrm {Im}}Y], {\mathcal {Z}}=[\mathop {\mathrm {Re}}Z~\mathop {\mathrm {Im}}Z],\) and \({\mathcal {W}}=[\mathop {\mathrm {Re}}W~\mathop {\mathrm {Im}}W]\). Indeed, whenever there exists a complex matrix \(A\in {\mathbb {C}}^{n,m}\) satisfying \(A{\mathcal {X}}={\mathcal {Y}}\) and \(A^H{\mathcal {Z}}={\mathcal {W}}\), then there also exists a real one, because it is easily checked that the minimal norm solutions in Theorem 2.1 are real. (Here, we assume that for the case of the spectral norm the real singular value decompositions of \({\mathcal {X}}\) and \({\mathcal {Z}}\) are taken, and the contraction P is also chosen to be real.)

A similar observation holds for solutions of the real version of the Hermitian positive semidefinite mapping problem in Theorem 2.2.

Since the real version of Theorem 2.1 (and similarly of Theorem 2.2) is straightforward in view of Remark 2.1, we refrain from an explicit statement. The situation, however, changes considerably if the assumptions \(\mathrm{rank}([\mathop {\mathrm {Re}}X~\mathop {\mathrm {Im}}X])=2p\) and \(\mathrm{rank}([\mathop {\mathrm {Re}}Z~\mathop {\mathrm {Im}}Z])=2k\) as in Remark 2.1 are dropped. In this case, it seems that a full characterization of real solutions to the mapping problems is highly challenging and very complicated. Therefore, we only consider the generalization of Theorem 2.2 to real mappings for the special case \(m=1\) which is in fact the case needed for the computation of the stability radii. We obtain the following two results.

Theorem 2.3

Let \(x,\,y \in {\mathbb {C}}^{n}\) be such that \({\text {rank}}([y~{\bar{y}}])=2\). Then the set

is nonempty if and only if \(x^Hy > |x^Ty|\) (which includes the condition \(x^Hy\in {\mathbb {R}}\)). In this case let

Then the matrix

is well defined and real symmetric positive semidefinite, and

(1) The minimal Frobenius norm of an element in \({\mathcal {S}}\) is given by

$$\begin{aligned} \min \left\{ {\Vert H\Vert }_F\,\big |~ H \in {\mathcal {S}}\right\} ={\left\| Y\left( Y^TX\right) ^{-1}Y^T\right\| }_{F} \end{aligned}$$

and this minimal norm is uniquely attained by the matrix \({\widetilde{H}}\) in (2.16).

(2) The minimal spectral norm of an element in \({\mathcal {S}}\) is given by

$$\begin{aligned} \min \left\{ {\Vert H\Vert }\,\big |~ H \in {\mathcal {S}}\right\} =\left\| Y\left( Y^TX\right) ^{-1}Y^T\right\| \end{aligned}$$

and the matrix \({\widetilde{H}}\) in (2.16) is a matrix that attains this minimum.
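The real mapping of Theorem 2.3 can be illustrated as follows. This is a minimal sketch, assuming NumPy and hypothetical random data, with \(X=[\mathop {\mathrm {Re}}x~\mathop {\mathrm {Im}}x]\) and \(Y=[\mathop {\mathrm {Re}}y~\mathop {\mathrm {Im}}y]\) as used in the proof: a real symmetric positive definite `H0` maps a complex vector x to y, and the sketch checks the condition \(x^Hy>|x^Ty|\) and the real solution \(Y(Y^TX)^{-1}Y^T\).

```python
import numpy as np

rng = np.random.default_rng(2)
n = 4

# Hypothetical data: real symmetric positive definite H0 and a complex vector x.
M = rng.standard_normal((n, n))
H0 = M.T @ M
x = rng.standard_normal(n) + 1j * rng.standard_normal(n)
y = H0 @ x

# Realification of the vectors: a real H satisfies H x = y iff H X = Y.
X = np.column_stack([x.real, x.imag])
Y = np.column_stack([y.real, y.imag])

xHy = np.vdot(x, y)   # x^H y (np.vdot conjugates its first argument)
xTy = x @ y           # x^T y (no conjugation)

# Minimal norm real symmetric PSD solution.
YtX = Y.T @ X
H_tilde = Y @ np.linalg.solve(YtX, Y.T)

print(xHy, abs(xTy))
print(np.linalg.eigvalsh((YtX + YtX.T) / 2))
```

The two printed eigenvalues of \(Y^TX\) are positive exactly when \(x^Hy\) is real and exceeds \(|x^Ty|\), which is the solvability condition of the theorem.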

Proof

Let \(H \in {\mathcal {S}}\), i.e., \(H^T= H \ge 0\) and \(Hx=y\). Since H is real, we also have \(HX=Y\). Thus, by Theorem 2.2, X and Y satisfy \(X^HY=Y^HX\) and \(Y^HX> 0\). The first condition is equivalent to \((\mathop {\mathrm {Re}}x)^T\mathop {\mathrm {Im}}y=(\mathop {\mathrm {Re}}y)^T\mathop {\mathrm {Im}}x\) which in turn is equivalent to \(x^Hy\in {\mathbb {R}}\) and the second condition is easily seen to be equivalent to \(x^Hy>|x^Ty|\). Conversely, if \(x^Hy=y^Hx\) and \(x^Hy>|x^Ty|\), then \(Y^TX>0\) and hence

is positive semidefinite. Moreover, we obviously have \({\widetilde{H}}X=Y\) and thus \({\widetilde{H}}x=y\). This implies that \({\widetilde{H}} \in {{\mathcal {S}}}\).

The inclusion “\(\supseteq \)” in (2.17) is straightforward. For the other inclusion let \(H\in {\mathcal {S}}\), i.e., \(H^T=H\ge 0\) and \(Hx=y\), and thus \(HX=Y\). Then, by Theorem 2.2, there exists \(L\in {\mathbb {C}}^{n,n}\) such that

where for the last identity we made use of the fact that H is real. Thus, by setting \(K=(\mathop {\mathrm {Re}}({L}^H)\mathop {\mathrm {Re}}({L})+\mathop {\mathrm {Im}}({L}^H)\mathop {\mathrm {Im}}({L}))\), we get the inclusion “\(\subseteq \)” in (2.17). The norm minimality in (1) and (2) follows immediately from Theorem 2.2, because any real map \(H\in S\) also satisfies \(HX=Y\). \(\square \)

Theorem 2.3 does not consider the case that y and \({\bar{y}}\) are linearly dependent. In that case, we obtain the following result.

Theorem 2.4

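Let \(x,y\in {\mathbb {C}}^n, y\ne 0\) be such that \({\text {rank}}[y~{\bar{y}}]=1\). Then the set

$$\begin{aligned} {\mathcal {S}}:=\left\{ H\in {\mathbb {R}}^{n,n}\,\big |\, H^T=H\ge 0,~Hx=y\right\} \end{aligned}$$

is nonempty if and only if \(x^Hy>0\). In that case

$$\begin{aligned} {\widetilde{H}}:=\frac{yy^H}{x^Hy} \end{aligned}$$ (2.18)

is well-defined and real symmetric positive semidefinite. Furthermore, we have: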

(1) The minimal Frobenius norm of an element in \({\mathcal {S}}\) is given by

$$\begin{aligned} \min \left\{ {\Vert H\Vert }_F\,\big |~ H \in {\mathcal {S}}\right\} =\frac{\Vert y\Vert ^2}{x^Hy} \end{aligned}$$

and this minimal norm is uniquely attained by the matrix \({\widetilde{H}}\) in (2.18).

(2) The minimal spectral norm of an element in \({\mathcal {S}}\) is given by

$$\begin{aligned} \min \left\{ {\Vert H\Vert }\,\big |~ H \in {\mathcal {S}}\right\} =\frac{\Vert y\Vert ^2}{x^Hy} \end{aligned}$$

and the matrix \({\widetilde{H}}\) in (2.18) is a matrix that attains this minimum.

Proof

If \(H\in {\mathcal {S}}\), i.e., \(H^T=H\ge 0\) and \(Hx=y\), then by Theorem 2.2 (for the case \(m=1\)) we have that \(x^Hy>0\). Conversely, assume that x and y satisfy \(x^Hy>0\). Since y and \({\bar{y}}\) are linearly dependent, there exists a unimodular \(\alpha \in {\mathbb {C}}\) such that \(\alpha y\) is real. But then also \(yy^H=(\alpha y)(\alpha y)^H\) is real and hence the matrix \({\widetilde{H}}\) in (2.18) is well defined and real. By Theorem 2.2, it is the unique element from \({\mathcal {S}}\) with minimal Frobenius norm and also an element from \({\mathcal {S}}\) of minimal spectral norm. \(\square \)

Remark 2.2

Note that results similar to Theorems 2.3 and 2.4 can also be obtained for real negative semidefinite maps. Indeed, for \(x,y \in {\mathbb {C}}^{n}\) such that \({\text {rank}}[y~{\bar{y}}]=2\), there exists a real negative semidefinite matrix \(H\in {\mathbb {R}}^{n,n}\) such that \(Hx=y\) if and only if \(x^Hy=y^Hx\) and \(-x^Hy>|x^Ty|\). Furthermore, it follows immediately from Theorem 2.3 by replacing y with \(-y\) and H with \(-H\) that a minimal solution in spectral and Frobenius norm is given by \({\widetilde{H}}=[\mathop {\mathrm {Re}}y~\mathop {\mathrm {Im}}y]\left( [\mathop {\mathrm {Re}}y~\mathop {\mathrm {Im}}y]^H[\mathop {\mathrm {Re}}x~\mathop {\mathrm {Im}}x]\right) ^{-1}[\mathop {\mathrm {Re}}y~\mathop {\mathrm {Im}}y]^H\). An analogous argument holds for Theorem 2.4. Therefore, we will refer to Theorems 2.3 and 2.4 also in the case that we are seeking solutions for the negative semidefinite mapping problem.

The minimal norm solutions for the real symmetric mapping problem with respect to both the spectral norm and Frobenius norm are well known, see [1, Theorem 2.2.3]. We do not restate this result in its full generality, but in terms of the following two theorems that are formulated in such a way that they allow a direct application in the remainder of this paper.

Theorem 2.5

Let \(x,y \in {\mathbb {C}}^{n}{\setminus }\{0\}\) be such that \({\text {rank}}([x~{\bar{x}}])=2\). Then

is nonempty if and only if \(x^Hy=y^Hx\). Furthermore, define

(1) The minimal Frobenius norm of an element in \({\mathcal {S}}\) is given by

$$\begin{aligned} \min _{ H \in {\mathcal {S}}}{\Vert H\Vert }_F={\Vert {\widetilde{H}}\Vert }_F=\sqrt{2{\Vert YX^\dagger \Vert }_F^2- {\text {trace}}\left( YX^\dagger (YX^\dagger )^TXX^\dagger \right) } \end{aligned}$$

and the minimum is uniquely attained by \({\widetilde{H}}\) in (2.19).

(2) To characterize the minimal spectral norm, consider the singular value decomposition \(X=U\varSigma V^T\) and let \(U=[U_1~U_2]\) where \(U_1 \in {\mathbb {R}}^{n,2}\). Then

$$\begin{aligned} \min _{ H \in {\mathcal {S}}} {\Vert H\Vert }=\Vert YX^\dagger \Vert , \end{aligned}$$

and the minimum is attained by

$$\begin{aligned} {\widehat{H}}= {\widetilde{H}}-(I_n-XX^\dagger )KU_1^TYX^\dagger U_1K^T(I_n-XX^\dagger ), \end{aligned}$$ (2.20)

where \(K=YX^\dagger U_1(\mu ^2 I_2-U_1^TYX^\dagger YX^\dagger U_1)^{-1/2}\) and \(\mu :=\Vert YX^\dagger \Vert \).
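The Frobenius-norm statement of Theorem 2.5 can be checked numerically. The following is a minimal sketch, assuming NumPy and hypothetical random data, with \(X=[\mathop {\mathrm {Re}}x~\mathop {\mathrm {Im}}x]\) and \(Y=[\mathop {\mathrm {Re}}y~\mathop {\mathrm {Im}}y]\). As a labeled assumption, the minimal Frobenius norm solution is taken to be the matrix G of Theorem 2.1 with \(Z=X\) and \(W=Y\), i.e., \(YX^\dagger +(YX^\dagger )^T-(YX^\dagger )^TXX^\dagger \); this choice reproduces the norm formula in item (1). The spectral-norm minimizer (2.20) is not constructed.

```python
import numpy as np

rng = np.random.default_rng(3)
n = 5

# Hypothetical data: real symmetric (generally indefinite) H0, complex x.
M = rng.standard_normal((n, n))
H0 = M + M.T
x = rng.standard_normal(n) + 1j * rng.standard_normal(n)
y = H0 @ x

X = np.column_stack([x.real, x.imag])
Y = np.column_stack([y.real, y.imag])
Xp = np.linalg.pinv(X)
T = Y @ Xp                         # Y X^dagger

# Assumed form of the minimal Frobenius norm solution (G of Theorem 2.1
# with Z = X and W = Y).
H_tilde = T + T.T - T.T @ X @ Xp

# Norm formula from item (1) and minimal spectral norm from item (2).
fro_formula = np.sqrt(2 * np.linalg.norm(T, 'fro')**2
                      - np.trace(T @ T.T @ X @ Xp))
mu = np.linalg.norm(T, 2)

print(np.linalg.norm(H_tilde, 'fro'), fro_formula, mu)
```

Because `H0` is itself a real symmetric solution of \(Hx=y\), its Frobenius and spectral norms bound the two printed minimal values from above.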

Proof

Observe that for \(H^T=H\in {\mathbb {R}}^{n,n}\) the identity \(Hx=y\) is equivalent to \(HX=Y\). Thus, the result follows immediately from [1, Theorem 2.2.3] applied to the mapping problem \(HX=Y\). \(\square \)

Theorem 2.6

Let \(x,y \in {\mathbb {C}}^{n}{\setminus }\{0\}\) be such that \({\text {rank}}([x~{\bar{x}}])=1\). Then

is nonempty if and only if \(x^Hy=y^Hx\). Furthermore, we have:

(1) The minimal Frobenius norm of an element in \({\mathcal {S}}\) is given by

$$\begin{aligned} \min _{ H \in {\mathcal {S}}}{\Vert H\Vert }_F=\sqrt{\frac{2\Vert y\Vert ^2}{\Vert x\Vert ^2}-\frac{(x^Hy)^2}{\Vert x\Vert ^4}} \end{aligned}$$

and the minimum is uniquely attained by the real matrix

$$\begin{aligned} {\widetilde{H}} :=\frac{yx^H}{\Vert x\Vert ^2}+\frac{xy^H}{\Vert x\Vert ^2}-\frac{(x^Hy)xx^H}{\Vert x\Vert ^4}. \end{aligned}$$

(If x and y are linearly dependent, then \({\widetilde{H}}=\frac{yx^H}{x^Hx}\) and the minimal Frobenius norm reduces to \(\frac{\Vert y\Vert }{\Vert x\Vert }\).)

(2) The minimal spectral norm of an element in \({\mathcal {S}}\) is given by

$$\begin{aligned} \min _{ H \in {\mathcal {S}}} {\Vert H\Vert }=\frac{\Vert y\Vert }{\Vert x\Vert }, \end{aligned}$$

and the minimum is attained by the real matrix

$$\begin{aligned} {\widehat{H}}:=\frac{\Vert y\Vert }{\Vert x\Vert } \begin{bmatrix} \frac{y}{\Vert y\Vert }&\frac{x}{\Vert x\Vert } \end{bmatrix} \begin{bmatrix} \frac{y^Hx}{\Vert x\Vert \,\Vert y\Vert }&1 \\ 1&\frac{x^Hy}{\Vert x\Vert \,\Vert y\Vert } \end{bmatrix}^{-1} \begin{bmatrix} \frac{y}{\Vert y\Vert }&\frac{x}{\Vert x\Vert } \end{bmatrix}^H \end{aligned}$$

if x and y are linearly independent, and by \({\widehat{H}}:=\frac{yx^H}{x^Hx}\) otherwise.
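The two matrices of Theorem 2.6 are easy to form explicitly. The following is a minimal sketch, assuming NumPy and a hypothetical pair of real, linearly independent vectors x and y (the simplest instance of \({\text {rank}}([x~{\bar{x}}])=1\)); it builds \({\widetilde{H}}\) and \({\widehat{H}}\) and checks the mapping property and the spectral norm of \({\widehat{H}}\).

```python
import numpy as np

# Hypothetical real data, so that x^H y = y^H x holds trivially.
x = np.array([1.0, 2.0, 2.0])
y = np.array([2.0, -1.0, 0.5])
nx, ny = np.linalg.norm(x), np.linalg.norm(y)

# Frobenius-norm minimizer: (y x^T + x y^T)/|x|^2 - (x^T y) x x^T / |x|^4.
H_tilde = ((np.outer(y, x) + np.outer(x, y)) / nx**2
           - (x @ y) * np.outer(x, x) / nx**4)

# Spectral-norm minimizer for linearly independent x and y.
u, v = y / ny, x / nx
c = u @ v                                  # cosine of the angle between x and y
UV = np.column_stack([u, v])
core = np.array([[c, 1.0], [1.0, c]])      # invertible since |c| < 1
H_hat = (ny / nx) * UV @ np.linalg.solve(core, UV.T)

print(np.linalg.norm(H_hat, 2), ny / nx)
print(np.linalg.norm(H_tilde, 'fro'), np.linalg.norm(H_hat, 'fro'))
```

Both matrices are symmetric and map x to y; \({\widehat{H}}\) has the smaller spectral norm, while \({\widetilde{H}}\) has the smaller Frobenius norm.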

Proof

By [1, Theorem 2.2.3] (see also [20, Theorem 2.1] and [16]) the matrices \({\widetilde{H}}\) and \({\widehat{H}}\) are the minimal Frobenius resp. spectral norm solutions to the complex Hermitian mapping problem \(Hx=y\). Thus, it only remains to show that \({\widetilde{H}}\) and \({\widehat{H}}\) are real. Since x and \({\bar{x}}\) are linearly dependent, there exists a unimodular \(\alpha \in {\mathbb {C}}\) such that \(\alpha x\) is real. But then also \(\alpha y=H(\alpha x)\) and thus \(xx^H=(\alpha x)(\alpha x)^H\) and \(yx^H=(\alpha y)(\alpha x)^H\) are real which implies the realness of \({\widetilde{H}}\). Analogously, \({\widehat{H}}\) can be shown to be real. \(\square \)

Obviously, the minimal Frobenius or spectral norm solutions from Theorem 2.6 have either rank one or two. The following lemma characterizes the rank of the minimal Frobenius or spectral norm solutions from Theorem 2.5 as well as the number of their negative and their positive eigenvalues, respectively.

Lemma 2.2

Let \(x,y \in {\mathbb {C}}^{n}{\setminus }\{0\}\) be such that \(x^Hy=y^Hx\) and \({\text {rank}}([x~{\bar{x}}])=2\). If \({\widetilde{H}}\) and \({\widehat{H}}\) are defined as in (2.19) and (2.20), respectively, then \({\text {rank}}({\widetilde{H}}),{\text {rank}}({\widehat{H}}) \le 4\) and both \({\widetilde{H}}\) and \({\widehat{H}}\) have at most two negative eigenvalues and at most two positive eigenvalues.

Proof

Recall from Theorem 2.5 that \(X=[\mathop {\mathrm {Re}}x~\mathop {\mathrm {Im}}x], Y=[\mathop {\mathrm {Re}}y~\mathop {\mathrm {Im}}y]\) and consider the singular value decomposition

with \(U=[U_1~U_2] \in {\mathbb {R}}^{n,n}, U_1 \in {\mathbb {R}}^{n,2}, {\widetilde{\varSigma }}\in {\mathbb {R}}^{2,2}\), and \(V\in {\mathbb {R}}^{2,2}\). If we set

where \(Y_1 \in {\mathbb {R}}^{2,2}\) and \(Y_2 \in {\mathbb {R}}^{n-2,2}\), then

Thus, \({\widetilde{H}}\) is of rank at most four, and also \({\widetilde{H}}\) has an \((n-2)\)-dimensional neutral subspace, i.e., a subspace \({\mathcal {V}}\subseteq {\mathbb {R}}^n\) satisfying \(z^T{\widetilde{H}}{\widetilde{z}}=0\) for all \(z,{\widetilde{z}}\in {\mathcal {V}}\). This means that the restriction of \({\widetilde{H}}\) to its range still has a neutral subspace of dimension at least

Thus, it follows by applying [7, Theorem 2.3.4] that \({\widetilde{H}}\) has at most two negative and at most two positive eigenvalues. On the other hand, we have

where \(K=YX^\dagger U_1W, W:=(\mu ^2 I_2-U_1^TYX^\dagger YX^\dagger U_1)^{-1/2}\) and \(\mu :=\Vert YX^\dagger \Vert \). Then we obtain

Also,

Inserting (2.21) and (2.23) into (2.22), we get

where \(Z:={\widetilde{\varSigma }}^{-1}Y_1^T\) and \(L:=Y_2 {\widetilde{\varSigma }}^{-1}\). This implies that

and Z are real symmetric. Let \({\widehat{U}} \in {\mathbb {R}}^{n-2,n-2}\) be invertible such that

is in row echelon form, where \({\widehat{L}}= \left[ \begin{array}{cc}l_{11}&{} l_{12}\\ 0 &{} l_{22}\end{array}\right] \). Then

where

This implies that \({\text {rank}}({\widehat{H}})={\text {rank}}(Z_{11}) \le 4\). Furthermore, by Sylvester’s law of inertia, \({\widehat{H}}\) and \(Z_{11}\) have the same number of negative eigenvalues. Thus, the assertion follows if we show that \(Z_{11}\) has at most two negative eigenvalues. For this, first suppose that \({\widehat{L}}\) is singular, i.e., \(l_{22}=0\), which would imply that \({\text {rank}}(Z_{11}) \le 3\). If \({\text {rank}}(Z_{11}) < 3\), then clearly \(Z_{11}\) can have at most two negative eigenvalues and at most two positive eigenvalues. If \({\text {rank}}(Z_{11}) = 3\), then we have that \(Z_{11}\) is indefinite. Indeed, if Z is indefinite then so is \(Z_{11}\). If Z is positive (negative) semidefinite then \(-{\widehat{L}} W Z W^T {\widehat{L}}^T\) is negative (positive) semidefinite. In this case \(Z_{11}\) has two real symmetric matrices of opposite definiteness as block matrices on the diagonal. This shows that \(Z_{11}\) is indefinite and thus can have at most two negative eigenvalues and at most two positive eigenvalues.

If \({\widehat{L}}\) is invertible, then

is a real symmetric Hamiltonian matrix. Therefore, by using the Hamiltonian spectral symmetry with respect to the imaginary axis, it follows that \(Z_{11}\) has two positive and two negative eigenvalues. \(\square \)

In the next section we will discuss real stability radii under restricted perturbations, where the restrictions will be expressed with the help of a restriction matrix \(B\in {\mathbb {R}}^{n,r}\). To deal with those, we will need the following lemmas.

Lemma 2.3

([20]) Let \(B \in {\mathbb {R}}^{n,r}\) with \({\text {rank}}(B)=r\), let \(y \in {\mathbb {C}}^{r}{\setminus } \{0\}\), and let \(z \in {\mathbb {C}}^{n}{\setminus }\{0\}\). Then, for all \( A\in {\mathbb {R}}^{r,r}\) we have \(B A y=z\) if and only if \( A y=B^\dagger z\) and \(BB^\dagger z=z\).
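As a small illustration of Lemma 2.3, consider the following data with \(n=2\) and \(r=1\); these matrices are chosen here for illustration only and do not appear in the source:

$$\begin{aligned} B=\begin{bmatrix} 1\\ 0 \end{bmatrix},\quad B^\dagger =\begin{bmatrix} 1&0 \end{bmatrix},\quad BB^\dagger =\begin{bmatrix} 1&0\\ 0&0 \end{bmatrix},\quad y=1. \end{aligned}$$

For \(z=[2~0]^T\) we have \(BB^\dagger z=z\), and \(Ay=B^\dagger z=2\) is solved by \(A=2\), which indeed gives \(BAy=z\). For \(z=[2~1]^T\) we have \(BB^\dagger z=[2~0]^T\ne z\), so no \(A\) can satisfy \(BAy=z\): the second component of \(BAy\) is always zero. The condition \(BB^\dagger z=z\) thus expresses that z lies in the range of B.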

Lemma 2.4

Let \(B \in {\mathbb {R}}^{n,r}\) with \({\text {rank}}(B)=r\), let \(y \in {\mathbb {C}}^{r}{\setminus } \{0\}\) and \(z \in {\mathbb {C}}^{n}{\setminus }\{0\}\).

-

(1)

If \({\text {rank}}([z~{\bar{z}}])=1\), then there exists a positive semidefinite \(A= A^T \in {\mathbb {R}}^{r,r}\) satisfying \(B A y=z\) if and only if \(BB^\dagger z=z\) and \(y^HB^\dagger z>0\).

-

(2)

If \({\text {rank}}([z~{\bar{z}}])=2\), then there exists a positive semidefinite \(A= A^T \in {\mathbb {R}}^{r,r}\) satisfying \(B A y=z\) if and only if \(BB^\dagger z=z\) and \(y^HB^\dagger z > |y^TB^\dagger z|\).

Proof

Let \( A \in {\mathbb {R}}^{r,r}\), then by Lemma 2.3 we have that \(B A y=z\) if and only if

If \({\text {rank}}([z~{\bar{z}}])=1\), then by Theorem 2.4 the identity (2.24) is equivalent to \(BB^\dagger z=z\) and \(y^HB^\dagger z>0\). If \({\text {rank}}([z~{\bar{z}}])=2\) then (2.24) is equivalent to

because A is real. Note that \({\text {rank}}([B^\dagger z~B^\dagger {\bar{z}}])=2\), because otherwise there would exist \(\alpha \in {\mathbb {C}}^2{\setminus } \{0\}\) such that \([B^\dagger z~B^\dagger {\bar{z}}]\alpha =0\). But this implies that \([z~{\bar{z}}]\alpha =[BB^\dagger z~BB^\dagger {\bar{z}}]\alpha =0\) in contradiction to the fact that \({\text {rank}}([z~{\bar{z}}])=2\). Thus, by Theorem 2.3, there exists \( 0\le A \in {\mathbb {R}}^{r,r}\) satisfying (2.25) if and only if \(BB^\dagger z=z\) and \(y^HB^\dagger z > |y^TB^\dagger z|\). \(\square \)

The following is a version of Lemma 2.4 without semidefiniteness.

Lemma 2.5

Let \(B \in {\mathbb {R}}^{n,r}\) with \({\text {rank}}(B)=r\), let \(y \in {\mathbb {C}}^{r}{\setminus } \{0\}\) and \(z \in {\mathbb {C}}^{n}{\setminus }\{0\}\). Then there exists a real symmetric matrix \( A \in {\mathbb {R}}^{r,r}\) satisfying \(B A y=z\) if and only if \(BB^\dagger z=z\) and \(y^HB^\dagger z \in {{\mathbb {R}}}\).

Proof

The proof is analogous to that of Lemma 2.4, using Theorems 2.5 and 2.6 instead of Theorems 2.3 and 2.4. \(\square \)

In this section we have presented several mapping theorems, in particular for the real case. These will be used in the next section to determine formulas for the real stability radii of DH systems.

3 Real stability radii for DH systems

Consider a real linear time-invariant dissipative Hamiltonian (DH) system \({\dot{x}}=(J-R)Qx\) as in (1.1), i.e., with \(J,\,R,\,Q \in {\mathbb {R}}^{n , n}\) being such that \(J^T=-J, R^T=R \ge 0\) and \(Q^T=Q > 0\). Then the formula for the stability radius \(r_{{{\mathbb {R}}},2}(R;B,C)\) from Definition 1.1 for given restriction matrices \(B\in {\mathbb {R}}^{n,r}\) and \(C\in {\mathbb {R}}^{q,n}\) is a direct consequence of the following well-known result.

Theorem 3.1

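([23]) For a given \(M\in {\mathbb {C}}^{p,m}\), define

$$\begin{aligned} \mu _{{{\mathbb {R}}}}(M):=\left( \inf \left\{ \Vert \varDelta \Vert \,\big |\,\varDelta \in {\mathbb {R}}^{m,p},~\det (I_m-\varDelta M)=0\right\} \right) ^{-1}. \end{aligned}$$

Then

$$\begin{aligned} \mu _{{{\mathbb {R}}}}(M)=\inf _{\gamma \in (0,1]}\sigma _2\left( \begin{bmatrix} \mathop {\mathrm {Re}}M&-\gamma \mathop {\mathrm {Im}}M\\ \gamma ^{-1}\mathop {\mathrm {Im}}M&\mathop {\mathrm {Re}}M \end{bmatrix}\right) , \end{aligned}$$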

where \(\sigma _2(A)\) is the second largest singular value of a matrix A. Furthermore, an optimal \(\varDelta \) that attains the value of \(\mu _{{{\mathbb {R}}}}(M)\) can be chosen of rank at most two.

Applying this theorem to DH systems we obtain the following corollary.

Corollary 3.1

Consider an asymptotically stable DH system of the form (1.1) and let \(B \in {\mathbb {R}}^{n,r}\) and \(C\in {\mathbb {R}}^{q,n}\) be given restriction matrices. Then

and

where \(M(\omega ):=CQ\big ((J-R)Q-i\omega I_n\big )^{-1}B\).

Proof

By definition, we have

where the last equality follows, since \((J-R)Q\) is asymptotically stable so that the inverse of \((J-R)Q-i\omega I_n\) exists for all \(\omega \in {{\mathbb {R}}}\). Thus we have

where \(M(\omega ):=CQ((J-R)Q-i\omega I_n)^{-1}B\). Therefore, (3.1) follows from Theorem 3.1, and if \({\widehat{\varDelta }}\) is of rank at most two such that \(\Vert {\widehat{\varDelta }}\Vert =r_{{{\mathbb {R}}},2}(R;B,C)\), then (3.2) follows by using the definition of \(r_{{{\mathbb {R}}},F}(R;B,C)\) and by the fact that

\(\square \)

To derive the stability radii under structure-preserving perturbations, we will reformulate the problem of computing the radii in terms of real structured mapping problems and apply the results from Sect. 2. In Sect. 3.1 we first consider the radius \(r_{{{\mathbb {R}}},p}^{{\mathcal {S}}_d}(R;B)\) that is shown to correspond to minimal rank structure-preserving perturbations of minimal norm. Then the radius \(r_{{{\mathbb {R}}},p}^{{\mathcal {S}}_i}(R;B)\) corresponding to minimal norm structure-preserving perturbations is considered in Sect. 3.2.

3.1 The stability radius \(r_{{{\mathbb {R}}},p}^{{{\mathcal {S}}}_d}(R;B)\)

To derive formulas for the stability radius under real structure-preserving restricted and semidefinite perturbations we need the following two lemmas.

Lemma 3.1

Let \(H^T=H\in {\mathbb {R}}^{n,n}\) be positive semidefinite. Then \(x^HHx\ge \big |x^THx\big |\) for all \(x\in {\mathbb {C}}^n\), and equality holds if and only if Hx and \(H{\bar{x}}\) are linearly dependent.

Proof

Let \(S\in {\mathbb {R}}^{n,n}\) be a symmetric positive semidefinite square root of H, i.e., \(S^2=H\). Then, using the Cauchy-Schwarz inequality we obtain that

because \({\bar{x}}^HH{\bar{x}}=\overline{x^HHx}=x^HHx\) as H is real. In particular equality holds if and only if Sx and \(S{\bar{x}}\) are linearly dependent which is easily seen to be equivalent to the linear dependence of Hx and \(H{\bar{x}}\). \(\square \)
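The inequality of Lemma 3.1 and its equality case can be probed numerically. The sketch below uses only the Python standard library; the random test data, the seed and the helper name `quad_forms` are assumptions made for illustration. Note that it builds \(H=S^TS\) from an arbitrary real S, which is positive semidefinite by construction, rather than using the symmetric square root from the proof.

```python
import random


def quad_forms(h, x):
    """Return (x^H H x, x^T H x) for a real matrix h and a complex vector x."""
    n = len(x)
    hx = [sum(h[i][j] * x[j] for j in range(n)) for i in range(n)]
    herm = sum(x[i].conjugate() * hx[i] for i in range(n))
    bilin = sum(x[i] * hx[i] for i in range(n))
    return herm, bilin


random.seed(0)
n = 4
s = [[random.uniform(-1, 1) for _ in range(n)] for _ in range(n)]
# H = S^T S is real symmetric positive semidefinite by construction.
h = [[sum(s[k][i] * s[k][j] for k in range(n)) for j in range(n)]
     for i in range(n)]

# Generic complex x: expect x^H H x >= |x^T H x|.
x = [complex(random.uniform(-1, 1), random.uniform(-1, 1)) for _ in range(n)]
herm, bilin = quad_forms(h, x)
gap_complex = herm.real - abs(bilin)

# Real x: Hx and H conj(x) coincide, so equality is expected.
x_real = [complex(random.uniform(-1, 1), 0.0) for _ in range(n)]
herm_r, bilin_r = quad_forms(h, x_real)
gap_real = herm_r.real - abs(bilin_r)

print(gap_complex, gap_real)
```

The first gap should be nonnegative, and the second should vanish up to rounding, in line with the linear dependence criterion for equality.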

Lemma 3.2

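Let \(R,W \in {\mathbb {R}}^{n,n}\) be such that \(R^T=R \ge 0\) and \(W^T=W > 0\). If \(x \in {\mathbb {C}}^{n}\) is such that \({\text {rank}}([x~{\bar{x}}])=2\) and \(x^HW^TRWx > |x^TW^TRWx|\), set

$$\begin{aligned} \varDelta _R:=-RW[\mathop {\mathrm {Re}}x~\mathop {\mathrm {Im}}x]\left( [\mathop {\mathrm {Re}}x~\mathop {\mathrm {Im}}x]^HWRW[\mathop {\mathrm {Re}}x~\mathop {\mathrm {Im}}x]\right) ^{-1}[\mathop {\mathrm {Re}}x~\mathop {\mathrm {Im}}x]^HWR. \end{aligned}$$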

Then \(R+\varDelta _R\) is symmetric positive semidefinite.

Proof

Since R and W are real symmetric and \(x^HW^TRWx>|x^TW^TRWx|\), it follows that the matrix \([\mathop {\mathrm {Re}}x~\mathop {\mathrm {Im}}x]^HWRW[\mathop {\mathrm {Re}}x~\mathop {\mathrm {Im}}x]\) is real symmetric and positive definite and, therefore, \(\varDelta _R\) is well defined and we have \(\varDelta _R=\varDelta _R^T\le 0\). We prove that \(R+\varDelta _R\ge 0\) by showing that all its eigenvalues are nonnegative. Since W is nonsingular, we have that \(Wx\ne 0\). Also \(\varDelta _R\) is a real matrix of rank two satisfying \(\varDelta _RW[\mathop {\mathrm {Re}}x~\mathop {\mathrm {Im}}x]=-RW[\mathop {\mathrm {Re}}x~\mathop {\mathrm {Im}}x]\). This implies that

$$\begin{aligned} (R+\varDelta _R)W\mathop {\mathrm {Re}}x=0\quad \text {and}\quad (R+\varDelta _R)W\mathop {\mathrm {Im}}x=0. \end{aligned}\tag{3.3}$$

Since \({\text {rank}}[\mathop {\mathrm {Re}}x~\mathop {\mathrm {Im}}x]={\text {rank}}[x~{\bar{x}}]=2\) and since W is nonsingular, we have that \(W\mathop {\mathrm {Re}}x\) and \(W\mathop {\mathrm {Im}}x\) are linearly independent eigenvectors of \(R+\varDelta _R\) corresponding to the eigenvalue zero.

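Let \(\lambda _1,\ldots ,\lambda _n\) be the eigenvalues of R and let \(\eta _1,\ldots ,\eta _n\) be the eigenvalues of \(R+\varDelta _R\), where both lists are arranged in nondecreasing order, i.e.,

$$\begin{aligned} \lambda _1\le \lambda _2\le \cdots \le \lambda _n\quad \text {and}\quad \eta _1\le \eta _2\le \cdots \le \eta _n. \end{aligned}$$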

Since \(\varDelta _R\) is of rank two, by the Cauchy interlacing theorem [6],

This implies that \(0\le \eta _3 \le \cdots \le \eta _n\), and thus the assertion follows once we show that \(\eta _1=0\) and \(\eta _2=0\). If R is positive definite, then \(\lambda _1,\ldots ,\lambda _n\) satisfy \(0<\lambda _1\le \cdots \le \lambda _n\) and, therefore, \(0<\eta _3\le \cdots \le \eta _n\). Therefore we must have \(\eta _1=0\) and \(\eta _2=0\) by (3.3).

If R is positive semidefinite but singular, then let k be the dimension of the kernel of R. We then have \(k < n\), because \(R\ne 0\). Letting \(\ell \) be the dimension of the kernel of \(R+\varDelta _R\) and using (3.4), we have that

and we have \(\eta _1=0\) and \(\eta _2=0\) if we show that \(\ell =k+2\). Since W is nonsingular, the kernels of R and RW have the same dimension k. Let \(x_1,\ldots ,x_k\) be linearly independent eigenvectors of RW associated with the eigenvalue zero, i.e., we have \(RWx_i=0\) for all \(i=1,\ldots ,k\). Then \(\varDelta _R Wx_i=0\) for all \(i=1,\ldots ,k\), and hence \((R+\varDelta _R)Wx_i=0\) for all \(i=1,\ldots ,k\). The linear independence of \(x_1,\ldots ,x_k\) together with the nonsingularity of W implies that \(Wx_1,\ldots ,Wx_k\) are linearly independent. By (3.3) we have \((R+\varDelta _R)W\mathop {\mathrm {Re}}x=0\) and \((R+\varDelta _R)W\mathop {\mathrm {Im}}x=0\). Moreover, the vectors \(W\mathop {\mathrm {Re}}x,W\mathop {\mathrm {Im}}x, W x_1,\ldots ,Wx_k\) are linearly independent. Indeed, let \(\alpha , \beta ,\alpha _1,\ldots ,\alpha _k \in {\mathbb {R}}\) be such that

Then we have \(R( \alpha W\mathop {\mathrm {Re}}x + \beta W\mathop {\mathrm {Im}}x) =0\) as \(RWx_i=0\) for all \(i=1,\ldots ,k\). This implies that \(\alpha =0\) and \(\beta =0\), because \(RW\mathop {\mathrm {Re}}x\) and \(RW\mathop {\mathrm {Im}}x\) are linearly independent. The linear independence of \(Wx_1,\ldots ,Wx_k\) then implies that \(\alpha _i=0\) for all \(i=1,\ldots ,k\), and hence \(W\mathop {\mathrm {Re}}x,W\mathop {\mathrm {Im}}x,Wx_1,\ldots ,Wx_k\) are linearly independent eigenvectors of \(R+\varDelta _R\) corresponding to the eigenvalue zero. Thus, the dimension of the kernel of \(R+\varDelta _R\) is at least \(k+2\) and hence we must have \(\eta _1=0\) and \(\eta _2=0\). \(\square \)
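A small worked instance may help to see the mechanism of Lemma 3.2. The data below are chosen here for illustration and are not from the source; the example also assumes that \(\varDelta _R\) is the rank-two mapping \(-RWX(X^TWRWX)^{-1}X^TWR\) with \(X=[\mathop {\mathrm {Re}}x~\mathop {\mathrm {Im}}x]\), which is the form consistent with the identity \(\varDelta _RW[\mathop {\mathrm {Re}}x~\mathop {\mathrm {Im}}x]=-RW[\mathop {\mathrm {Re}}x~\mathop {\mathrm {Im}}x]\) used in the proof. Take \(n=3\), \(W=I_3\), \(R={\text {diag}}(1,2,3)\) and \(x=e_1+ie_2\). Then \(x^HRx=3>1=|x^TRx|\), and

$$\begin{aligned} X=\begin{bmatrix} 1&0\\ 0&1\\ 0&0 \end{bmatrix},\quad X^TRX=\begin{bmatrix} 1&0\\ 0&2 \end{bmatrix},\quad \varDelta _R=-RX(X^TRX)^{-1}X^TR=-{\text {diag}}(1,2,0),\quad R+\varDelta _R={\text {diag}}(0,0,3). \end{aligned}$$

Hence \(\varDelta _R\le 0\) has rank two, \(R+\varDelta _R\ge 0\), and the kernel of \(R+\varDelta _R\) is spanned by \(W\mathop {\mathrm {Re}}x=e_1\) and \(W\mathop {\mathrm {Im}}x=e_2\), so that \(\eta _1=\eta _2=0\) and \(\eta _3=3\).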

Using these lemmas, we obtain a formula for the structured real stability radius of DH systems.

Theorem 3.2

Consider an asymptotically stable DH system of the form (1.1). Let \(B \in {\mathbb {R}}^{n,r}\) with \({\text {rank}}(B)=r\), and let \(p \in \{2,F\}\). Furthermore, for \(j\in \{1,2\}\) let \(\varOmega _j\) denote the set of all eigenvectors x of JQ such that \((I_n-BB^\dagger )RQx=0\) and \({\text {rank}}\big (\left[ \begin{array}{cc}RQx&RQ{\bar{x}}\end{array}\right] \big )=j\), and let \(\varOmega :=\varOmega _1\cup \varOmega _2\). Then \(r_{{{\mathbb {R}}},p}^{{{\mathcal {S}}}_d}(R;B)\) is finite if and only if \(\varOmega \) is nonempty. If this is the case, then

where \(X=B^TQ[\mathop {\mathrm {Re}}x~\mathop {\mathrm {Im}}x]\) and \(Y=B^\dagger RQ[\mathop {\mathrm {Re}}x~\mathop {\mathrm {Im}}x]\).

Proof

By definition, we have

where \({\mathcal {S}}_d(R,B):=\left\{ \varDelta \in {\mathbb {R}}^{r , r}\,\big |\,\varDelta ^T=\varDelta \le 0~~\mathrm{and}~~(R+B\varDelta B^T)\ge 0\right\} \). By using Lemma 1.1, we obtain that

since by Lemma 2.3 we have \(B\varDelta B^T Qx=-RQx\) if and only if \(\varDelta B^TQx=-B^\dagger RQx\) and \(BB^\dagger RQx=RQx\), and thus \(x\in \varOmega \). From (3.5) and \({\mathcal {S}}_d(R,B) \subseteq \{\varDelta \in {\mathbb {R}}^{r,r}\,|\, \varDelta ^T=\varDelta \le 0\}\), we obtain that

The infimum on the right hand side of (3.6) is finite if and only if \(\varOmega \) is nonempty. The same will also hold for \(r_{{{\mathbb {R}}},p}^{{\mathcal {S}}_d}(R;B)\) if we show equality in (3.6). To this end we will use the abbreviations

for \(j\in \{1,2\}\), i.e., we have \(\varrho =\min \{\varrho _1,\varrho _2\}\), and we consider two cases.

Case (1): \(\varrho =\varrho _1\). If \(x\in \varOmega _1\), then \(BB^\dagger RQx=RQx\), and RQx and \(RQ{\bar{x}}\) are linearly dependent. But then also \(B^\dagger RQx\) and \(B^\dagger RQ{\bar{x}}\) are linearly dependent and hence, by Lemma 2.4, there exists \(\varDelta \in {\mathbb {R}}^{r,r}\) such that \(\varDelta \le 0\) and \(\varDelta B^TQx=-B^\dagger RQx\) (and thus \((-\varDelta )\ge 0\) and \((-\varDelta ) B^TQx=B^\dagger RQx\)) if and only if \(x^HQRQx>0\). This condition is satisfied for all \(x\in \varOmega _1\). Indeed, since R is positive semidefinite, we find that \(x^HQRQx\ge 0\), and \(x^HQRQx=0\) would imply \(RQx=0\) and thus \((J-R)Qx=JQx\), which means that x is an eigenvector of \((J-R)Q\) associated with an eigenvalue on the imaginary axis, in contradiction to the assumption that \((J-R)Q\) only has eigenvalues in the open left half plane.

Using minimal norm mappings from Theorem 2.4 we thus have

As the expression in (3.7) is invariant under scaling of x, it is sufficient to take the infimum over all \(x\in \varOmega _1\) of norm one. Then a compactness argument shows that the infimum is actually attained for some \({\widetilde{x}}\in \varOmega _1\) and by [20, Theorem 4.2], the matrix

is the unique (resp. a) complex matrix of minimal Frobenius (resp. spectral) norm satisfying \({\widehat{\varDelta }}^T={\widehat{\varDelta }}\le 0\) and \({\widehat{\varDelta }} B^TQx=-B^\dagger RQx\). Also, by Theorem 2.4 the matrix \({\widehat{\varDelta }}\) is real and by [20, Lemma 4.1] we have \(R+B{\widehat{\varDelta }} B^T \ge 0\). Thus \({\widehat{\varDelta }}\in {\mathcal {S}}_d(R,B)\). But this means that \(r_{{{\mathbb {R}}},p}^{{\mathcal {S}}_d}(R;B)=\varrho _1=\varrho \).

Case (2): \(\varrho =\varrho _2\). If \(x\in \varOmega _2\), then \(BB^\dagger RQx=RQx\), and \(\mathop {\mathrm {Re}}RQx\) and \(\mathop {\mathrm {Im}}RQx\) are linearly independent. But then, also \(\mathop {\mathrm {Re}}B^\dagger RQx\) and \(\mathop {\mathrm {Im}}B^\dagger RQx\) are linearly independent, because otherwise, also \(\mathop {\mathrm {Re}}RQx=\mathop {\mathrm {Re}}BB^\dagger RQx\) and \(\mathop {\mathrm {Im}}RQ{\bar{x}}=\mathop {\mathrm {Im}}BB^\dagger RQ{\bar{x}}\) would be linearly dependent. Thus, by Lemma 2.4 there exists \(\varDelta \in {\mathbb {R}}^{r,r}\) such that \(\varDelta \le 0\) and \(\varDelta B^TQx=-B^\dagger RQx\) if and only if \(x^HQRQx>|x^TQRQx|\). By Lemma 3.1 this condition is satisfied for all \(x\in \varOmega _2\), because R and thus QRQ is positive semidefinite.

Using minimal norm mappings from Theorem 2.3 we then obtain

for some \({\tilde{x}} \in \varOmega _2\), where \({\widetilde{X}}=B^TQ\,[\mathop {\mathrm {Re}}{\tilde{x}}~\mathop {\mathrm {Im}}{\tilde{x}}]\) and \({\widetilde{Y}}=B^\dagger RQ\,[\mathop {\mathrm {Re}}{\tilde{x}} ~\mathop {\mathrm {Im}}{{\tilde{x}}}]\). Indeed, the matrix \(Y(Y^HX)^{-1}Y^H\) is invariant under scaling of x, so that it is sufficient to take the infimum over all \(x\in \varOmega _2\) having norm one. A compactness argument shows that the infimum is actually a minimum and attained for some \({\tilde{x}}\in \varOmega _2\). Then setting

we have equality in (3.8) if we show that \(R+B{\tilde{\varDelta }}_R B^T\) is positive semidefinite, because this would imply that \({\tilde{\varDelta }}_R \in {\mathcal {S}}_d(R,B)\). But this follows from Lemma 3.2 by noting that \(BB^\dagger RQ\,[\mathop {\mathrm {Re}}{\tilde{x}}~\mathop {\mathrm {Im}}{{\tilde{x}}}]=RQ\,[\mathop {\mathrm {Re}}{{\tilde{x}}}~\mathop {\mathrm {Im}}{{\tilde{x}}}]\). Indeed, by the definition of \(\varOmega _2\), the vectors \(\mathop {\mathrm {Re}}RQ{\tilde{x}}\) and \(\mathop {\mathrm {Im}}RQ{{\tilde{x}}}\) are linearly independent, and

is positive semidefinite by Lemma 3.2. This proves that \(r_{{{\mathbb {R}}},p}^{{\mathcal {S}}_d}(R;B)=\varrho _2=\varrho .\) \(\square \)

Remark 3.1

In the case that \(R>0\) and J is invertible, the set \(\varOmega _1\) from Theorem 3.2 is empty and hence \(\varOmega =\varOmega _2\), because if \(R>0\) and if x is an eigenvector of JQ with \({\text {rank}}\big (\left[ \begin{array}{cc}RQx&RQ{\bar{x}}\end{array}\right] \big )=1\), then x is necessarily an eigenvector associated with the eigenvalue zero of JQ, which is excluded by the invertibility of J. Indeed, if RQx and \(RQ{\bar{x}}\) are linearly dependent, then x and \({\bar{x}}\) are linearly dependent, because RQ is nonsingular as \(R >0\) and \(Q>0\). This is only possible if x is associated with a real eigenvalue, and since the eigenvalues of JQ are on the imaginary axis, this eigenvalue must be zero.

Remark 3.2

We mention that in the case that R and J are invertible, \(r_{{{\mathbb {R}}},p}^{{\mathcal {S}}_d}(R;B)\) is also the minimal norm of a structure-preserving perturbation of rank at most two that moves an eigenvalue of \((J-R)Q\) to the imaginary axis. To be more precise, if

and

then we have \(r_{{{\mathbb {R}}},p}^{{\mathcal {S}}_2}(R;B)=r_{{{\mathbb {R}}},p}^{{\mathcal {S}}_d}(R;B)\). Indeed, assume that \(\varDelta \in {\mathcal {S}}_2(R,B)\) is such that \((J-R)Q-(B\varDelta B^T)Q\) has an eigenvalue on the imaginary axis. By Lemma 1.1 we then have \((R+B\varDelta B^T)Qx=0\) for some eigenvector x of JQ. Since R and J are invertible, it follows from Remark 3.1 that RQx and \(RQ{\bar{x}}\) are linearly independent. Since \(\varDelta \) has rank at most two, it follows that the kernel of \(\varDelta \) has dimension at least \(n-2\). Thus, let \(w_3,\dots ,w_n\) be linearly independent vectors from the kernel of \(\varDelta \). Then \(B^TQx,B^TQ{\bar{x}},w_3,\dots ,w_n\) is a basis of \({\mathbb {C}}^n\). Indeed, let \(\alpha _1,\dots ,\alpha _n\in {\mathbb {C}}\) be such that

Then multiplication with \(B\varDelta \) yields

and we obtain \(\alpha _1=\alpha _2=0\), because RQx and \(RQ{\bar{x}}\) are linearly independent. But then (3.9) and the linear independence of \(w_3,\dots ,w_n\) imply \(\alpha _3=\dots =\alpha _n=0\). Thus, setting \(T:=[B^TQx,B^TQ{\bar{x}},w_3,\dots ,w_n]\) we obtain that T is invertible and \(T^H\varDelta T={\text {diag}}(D,0)\), where

Since by Lemma 3.1 we have \(x^HQRQx>|x^TQRQx|\), it follows that D is positive definite which implies \(\varDelta \in {\mathcal {S}}_d(R;B)\) and hence \(r_{{{\mathbb {R}}},p}^{{\mathcal {S}}_2}(R;B)\ge r_{{{\mathbb {R}}},p}^{{\mathcal {S}}_d}(R;B)\). The inequality “\(\le \)” is trivial as minimal norm elements from \({\mathcal {S}}_d(R;B)\) have rank at most two.

In this subsection we have characterized the real structured restricted stability radius under negative semidefinite perturbations \(\varDelta \le 0\) to the dissipation matrix R. In the next subsection we extend these results to indefinite perturbations that keep the perturbed dissipation matrix positive semidefinite.

3.2 The stability radius \(r_{{\mathbb {R}},p}^{{{\mathcal {S}}}_i}(R;B)\)

If the perturbation matrices \(\varDelta _R\) are allowed to be indefinite then the perturbation analysis is more complicated. We start with the following lemma.

Lemma 3.3

Let \(0<R=R^T,\, \varDelta _R=\varDelta _R^T \in {\mathbb {R}}^{n,n}\) be such that \(\varDelta _R\) has at most two negative eigenvalues. If \({\text {dim}}\left( {\text {Ker}}(R+\varDelta _R)\right) = 2\), then \(R+\varDelta _R\ge 0\).

Proof

Let \(\lambda _1,\ldots ,\lambda _n\) be the eigenvalues of \(\varDelta _R\). As \(\varDelta _R\) has at most two negative eigenvalues we may assume that \(\lambda _3,\lambda _4,\ldots ,\lambda _n \ge 0\) and we have the spectral decomposition

with unit norm vectors \(u_1,\ldots ,u_n\in {\mathbb {R}}^n\). Since

and \(\lambda _1u_1u_1^H+\lambda _2 u_2u_2^H\) is of rank two, we can apply the Cauchy interlacing theorem and obtain that \(R+\varDelta _R={\widetilde{R}}+\lambda _1u_1u_1^H+\lambda _2 u_2u_2^H\) has at least \(n-2\) positive eigenvalues. But then using the fact that \({\text {dim}}({\text {Ker}}(R+\varDelta _R))=2\) we get \(R+\varDelta _R \ge 0\). \(\square \)

Using this lemma, we obtain the following results on the stability radius \(r_{{\mathbb {R}},p}^{{{\mathcal {S}}}_i}(R;B)\).

Theorem 3.3

Consider an asymptotically stable DH system of the form (1.1). Let \(B \in {\mathbb {R}}^{n,r}\) with \({\text {rank}}(B)=r\) and let \(p \in \{2,F\}\). Furthermore, for \(j\in \{1,2\}\) let \(\varOmega _j\) denote the set of all eigenvectors x of JQ such that \(BB^\dagger RQx=RQx\) and \({\text {rank}}\big (\left[ \begin{array}{cc} B^TQx&B^TQ{\bar{x}}\end{array}\right] \big )=j\), and let \(\varOmega =\varOmega _1\cup \varOmega _2\).

(1) If \(R > 0\), then \(r_{{{\mathbb {R}}},p}^{{{\mathcal {S}}}_i}(R;B)\) is finite if and only if \(\varOmega \) is nonempty. In that case we have

$$\begin{aligned} r_{{{\mathbb {R}}},2}^{{{\mathcal {S}}}_i}(R;B)=\min \left\{ \inf _{x\in \varOmega _1}\frac{\Vert B^\dagger RQx\Vert }{\Vert B^TQx\Vert },\, \inf _{x \in \varOmega _2}\Vert YX^\dagger \Vert \right\} \end{aligned}\tag{3.10}$$

and

$$\begin{aligned} r_{{{\mathbb {R}}},F}^{{{\mathcal {S}}}_i}(R;B)=\min \left\{ \inf _{x\in \varOmega _1}\frac{\Vert B^\dagger RQx\Vert }{\Vert B^TQx\Vert },\,\inf _{x \in \varOmega _2}\sqrt{ {\Vert YX^\dagger \Vert }_F^2-{\text {trace}}\left( YX^\dagger (YX^\dagger )^HXX^\dagger \right) }\right\} , \end{aligned}\tag{3.11}$$

where \(X=[\mathop {\mathrm {Re}}B^TQx~\mathop {\mathrm {Im}}B^TQx]\) and \(Y=[\mathop {\mathrm {Re}}B^\dagger RQx~\mathop {\mathrm {Im}}B^\dagger RQx]\) for \(x \in \varOmega _2\).

(2) If \(R \ge 0\) is singular and if \(r_{{{\mathbb {R}}},p}^{{{\mathcal {S}}}_i}(R;B)\) is finite, then \(\varOmega \) is nonempty and we have

$$\begin{aligned} r_{{{\mathbb {R}}},2}^{{{\mathcal {S}}}_i}(R;B)\ge \min \left\{ \inf _{x\in \varOmega _1}\frac{\Vert B^\dagger RQx\Vert }{\Vert B^TQx\Vert },\, \inf _{x \in \varOmega _2}\Vert YX^\dagger \Vert \right\} \end{aligned}\tag{3.12}$$

and

$$\begin{aligned} r_{{{\mathbb {R}}},F}^{{{\mathcal {S}}}_i}(R;B)\ge \min \left\{ \inf _{x\in \varOmega _1}\frac{\Vert B^\dagger RQx\Vert }{\Vert B^TQx\Vert },\,\inf _{x \in \varOmega _2}\sqrt{ {\Vert YX^\dagger \Vert }_F^2-{\text {trace}}\left( YX^\dagger (YX^\dagger )^HXX^\dagger \right) }\right\} , \end{aligned}\tag{3.13}$$

where \(X=[\mathop {\mathrm {Re}}B^TQx~\mathop {\mathrm {Im}}B^TQx]\) and \(Y=[\mathop {\mathrm {Re}}B^\dagger RQx~\mathop {\mathrm {Im}}B^\dagger RQx]\) for \(x \in \varOmega _2\).

Proof

By definition

where \({\mathcal {S}}_i(R,B):=\left\{ \varDelta =\varDelta ^T\in {\mathbb {R}}^{r,r}\,\big |\,(R+B\varDelta B^T)\ge 0\right\} \). Using Lemma 1.1 and Lemma 2.3 and following the lines of the proof of Theorem 3.2, we get

Since all elements of \({\mathcal {S}}_i(R,B)\) are real symmetric, we obtain that

The infimum on the right hand side of (3.14) is finite if \(\varOmega \) is nonempty, as by Lemma 2.5 for \(x\in \varOmega \) there exists \(\varDelta =\varDelta ^T\in {\mathbb {R}}^{r,r}\) such that \(\varDelta B^TQx=-B^\dagger RQx\) if and only if \(x^HQBB^\dagger RQx \in {{\mathbb {R}}}\). This condition is satisfied because \(BB^\dagger RQx=RQx\) and R is real symmetric. If \(r_{{{\mathbb {R}}},p}^{{\mathcal {S}}_i}(R;B)\) is finite, then \(\varOmega \) is nonempty because otherwise the right hand side of (3.14) would be infinite. To continue, we will use the abbreviations

for \(j\in \{1,2\}\), i.e., \(\varrho ^{(p)}=\min \{\varrho _1^{(p)},\varrho _2^{(p)}\}\), and we consider two cases.

Case (1): \(\varrho ^{(p)}=\varrho _1^{(p)}\). If \(x\in \varOmega _1\), then \(BB^\dagger RQx=RQx\) and \(B^TQx\) and \(B^TQ{\bar{x}}\) are linearly dependent. Then using mappings of minimal spectral resp. Frobenius norms (and again using compactness arguments), we obtain from Theorem 2.6 that

for some \({\tilde{x}}:={\widehat{x}} \in \varOmega _1\). This proves assertion (2) and “\(\ge \)” in (3.10) and (3.11) in the case \(\varrho ^{(p)}=\varrho _1^{(p)}\).

Case (2): \(\varrho ^{(p)}=\varrho _2^{(p)}\). If \(x\in \varOmega _2\), then \(BB^\dagger RQx=RQx\) and \(B^TQx\) and \(B^TQ{\bar{x}}\) are linearly independent. Using mappings of minimal spectral resp. Frobenius norms (and once more using compactness arguments), we obtain from Theorem 2.5 that

for some \({\widehat{x}} \in \varOmega _2\), where \({\widehat{X}}=[\mathop {\mathrm {Re}}{B^TQ{\widehat{x}}}~\mathop {\mathrm {Im}}{B^TQ{\widehat{x}}}]\) and \({\widehat{Y}}=[\mathop {\mathrm {Re}}{B^\dagger RQ{\widehat{x}}}~\mathop {\mathrm {Im}}{B^\dagger RQ{\widehat{x}}}]\), and

for some \({\tilde{x}} \in \varOmega _2\), where \({\widetilde{X}}=[\mathop {\mathrm {Re}}{B^TQ{\tilde{x}}}~\mathop {\mathrm {Im}}{B^TQ{\tilde{x}}}]\) and \({\widetilde{Y}}=[\mathop {\mathrm {Re}}{B^\dagger RQ{\tilde{x}}}~\mathop {\mathrm {Im}}{B^\dagger RQ{\tilde{x}}}]\). This proves assertion (2) and “\(\ge \)” in (3.10) and (3.11) in the case \(\varrho ^{(p)}=\varrho _2^{(p)}\).

In both cases (1) and (2), it remains to show that equality holds in (3.10) and (3.11) when \(R>0\). This would also prove that in the case \(R>0\) the non-emptiness of \(\varOmega \) implies the finiteness of \(r_{{{\mathbb {R}}},F}^{{\mathcal {S}}_i}(R;B)\).

Thus, assume that \(R>0\) and let \({\widehat{\varDelta }} ={\widehat{\varDelta }}^T\in {\mathbb {R}}^{r,r}\) and \({\widetilde{\varDelta }} ={\widetilde{\varDelta }}^T\in {\mathbb {R}}^{r,r}\) be such that they satisfy

and such that they are mappings of minimal spectral or Frobenius norm, respectively, as in Theorem 2.5 or Theorem 2.6, respectively. The proof is complete if we show that \((R+B{\widehat{\varDelta }} B^T)\ge 0\) and \((R+B{\widetilde{\varDelta }} B^T)\ge 0\), because this would imply that \({\widehat{\varDelta }}\) and \({\widetilde{\varDelta }}\) belong to the set \({\mathcal {S}}_i(R,B)\). In the case \(\varrho ^{(p)}=\varrho _1^{(p)}\) this follows exactly as in the proof of [20, Theorem 4.5] which is the corresponding result to Theorem 3.3 in the complex case. (In fact, in this case \({\widetilde{\varDelta }}\) and \({\widehat{\varDelta }}\) coincide with the corresponding complex mappings of minimal norm.)

In the case \(\varrho ^{(p)}=\varrho _2^{(p)}\), we obtain from Lemma 2.2 that the matrices \({\widehat{\varDelta }}\) and \({\widetilde{\varDelta }}\) are of rank at most four with at most two negative eigenvalues. This implies that also the matrices \(B{\widehat{\varDelta }} B^T\) and \(B{\widetilde{\varDelta }} B^T\) individually have at most two negative eigenvalues. Indeed, let \( B_1 \in {\mathbb {R}}^{n,n-r}\) be such that \([B~\, B_1] \in {\mathbb {R}}^{n,n}\) is invertible. Then we can write

and by Sylvester’s law of inertia also \(B {\widehat{\varDelta }} B^T\) has at most two negative eigenvalues. A similar argument proves the assertion for \(B{\widetilde{\varDelta }} B^T\).

Furthermore, using (3.15), we obtain

and

since \({\widehat{x}},\,{\tilde{x}} \in \varOmega \), i.e., \(BB^\dagger RQ{\widehat{x}}=RQ{\widehat{x}}\) and \(BB^\dagger RQ{\tilde{x}}=RQ{\tilde{x}}\). Also \({\text {rank}}[{\widehat{x}}~\bar{{\widehat{x}}}]=2\) and \({\text {rank}}[{\tilde{x}}~\bar{{\tilde{x}}}]=2\), respectively, imply that

Thus, Lemma 3.3 implies that \((R+B{\widehat{\varDelta }} B^T) \ge 0\) and \((R+B{\widetilde{\varDelta }} B^T)\ge 0\). \(\square \)

Remark 3.3

It follows from the proof of Theorem 3.3 that in the case \(R\ge 0\), the inequalities in (3.12) and (3.13) are actually equalities if the minimal norm mappings \({\widetilde{\varDelta }}\) and \({\widehat{\varDelta }}\) from (3.15) satisfy \((R+B{\widehat{\varDelta }} B^T) \ge 0\) and \((R+B{\widetilde{\varDelta }} B^T)\ge 0\), respectively. Thus, when a method for the computation of the stability radius is implemented following the ideas in the proof of Theorem 3.3, then one has to explicitly compute the mappings \({\widetilde{\varDelta }}\) and \({\widehat{\varDelta }}\) from (3.15) and hence it is easy to check if the conditions \((R+B{\widehat{\varDelta }} B^T) \ge 0\) and/or \((R+B{\widetilde{\varDelta }} B^T)\ge 0\) are satisfied. In our numerical experiments this was always the case, so that we conjecture that equality in (3.12) and (3.13) holds in general.
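The semidefiniteness check mentioned in Remark 3.3 could be sketched as follows. This is a minimal illustration in plain Python and not the authors' implementation (the experiments in the paper use MATLAB); the function names `is_psd` and `keeps_dissipation_psd`, the absolute tolerance and the test matrices are assumptions made here. The test is an outer-product Cholesky elimination that tolerates zero pivots; in practice one would more likely inspect the smallest eigenvalue computed by a numerical library.

```python
def matmul(a, b):
    """Product of two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def transpose(a):
    return [list(col) for col in zip(*a)]


def is_psd(a, tol=1e-10):
    """Return True if the real symmetric matrix a is PSD up to the absolute tolerance tol."""
    n = len(a)
    s = [list(row) for row in a]  # work on a copy
    for k in range(n):
        d = s[k][k]
        if d < -tol:
            return False
        if d <= tol:
            # Zero pivot: semidefiniteness forces the rest of this row to vanish.
            if any(abs(s[k][j]) > tol for j in range(k + 1, n)):
                return False
            continue
        # Schur complement update of the trailing block.
        for i in range(k + 1, n):
            f = s[i][k] / d
            for j in range(k + 1, n):
                s[i][j] -= f * s[k][j]
    return True


def keeps_dissipation_psd(r, b, delta, tol=1e-10):
    """Check whether R + B * Delta * B^T is positive semidefinite."""
    bdb = matmul(matmul(b, delta), transpose(b))
    pert = [[r[i][j] + bdb[i][j] for j in range(len(r))] for i in range(len(r))]
    return is_psd(pert, tol)


# Illustrative data (assumption): R = diag(1, 2, 3), B = I_3.
R = [[1.0, 0.0, 0.0], [0.0, 2.0, 0.0], [0.0, 0.0, 3.0]]
B = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
delta_ok = [[-1.0, 0.0, 0.0], [0.0, -2.0, 0.0], [0.0, 0.0, 0.0]]
delta_bad = [[-1.0, 0.0, 0.0], [0.0, -2.0, 0.0], [0.0, 0.0, -4.0]]

ok = keeps_dissipation_psd(R, B, delta_ok)    # R + Delta = diag(0, 0, 3)
bad = keeps_dissipation_psd(R, B, delta_bad)  # R + Delta = diag(0, 0, -1)
print(ok, bad)
```

If the check succeeds for the computed minimal norm mapping, the corresponding lower bound is attained; if it fails, only the inequality is guaranteed.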

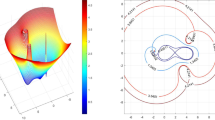

4 Numerical experiments

In this section, we present some numerical experiments to illustrate that the real structured stability radii are indeed larger than the real unstructured ones. To compute the distances, in all cases we used the function fminsearch in MATLAB Version No. 7.8.0 (R2009a) to solve the associated optimization problems.

We computed the real stability radii \(r_{{{\mathbb {R}}},2}(R;B,B^T), r_{{{\mathbb {R}}}, 2}^{{\mathcal {S}}_i}(R;B)\) and \(r_{{{\mathbb {R}}},2}^{{\mathcal {S}}_d}(R;B)\) with respect to real restricted perturbations to R, as obtained in Theorems 3.1–3.3 (taking into account Remark 3.3), respectively, and compared them to the corresponding complex distances \(r_{{{\mathbb {C}}},2}(R;B,B^T), r_{{{\mathbb {C}}}, 2}^{{\mathcal {S}}_i}(R;B)\) and \(r_{{{\mathbb {C}}},2}^{{\mathcal {S}}_d}(R;B)\) as obtained in [20, Theorem 3.3], [20, Theorem 4.5] and [20, Theorem 4.2], respectively.

We chose random matrices \(J,R,Q,B\in {\mathbb {R}}^{n,n}\) for different values of \(n\le 14\) with \(J^T=-J, R^T=R \ge 0\) and B of full rank, such that \((J-R)Q\) is asymptotically stable and all restricted stability radii were finite. The results in Table 1 illustrate that the stability radius \(r_{{{\mathbb {R}}},2}(R,B,B^T)\) obtained under general restricted perturbations is significantly smaller than the real stability radii obtained under structure-preserving restricted perturbations, and they also illustrate that the real stability radii may be significantly larger than the corresponding complex stability radii.
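One way to generate test data of this kind is sketched below. The construction is an assumption made for illustration (the text does not specify how the random matrices were drawn): the function name `random_dh`, the Gaussian sampling, the shift that makes \(Q\) positive definite, and the rank parameter for \(R\) are all hypothetical. The sketch only enforces the structural constraints and asymptotic stability. It does not check that the restricted stability radii are finite.

```python
import numpy as np

def random_dh(n, rank_R, rng, max_tries=100):
    """Draw J = -J^T, R = R^T >= 0, Q = Q^T > 0 and full-rank B with (J-R)Q asymptotically stable."""
    for _ in range(max_tries):
        S = rng.standard_normal((n, n))
        J = S - S.T                              # skew-symmetric structure matrix
        G = rng.standard_normal((n, rank_R))
        R = G @ G.T                              # symmetric positive semidefinite, rank <= rank_R
        L = rng.standard_normal((n, n))
        Q = L @ L.T + n * np.eye(n)              # symmetric positive definite
        B = rng.standard_normal((n, n))
        if np.linalg.matrix_rank(B) < n:
            continue                             # reject rank-deficient B
        if np.linalg.eigvals((J - R) @ Q).real.max() < 0:
            return J, R, Q, B                    # all eigenvalues in the open left half plane
    raise RuntimeError("no asymptotically stable sample found")

J, R, Q, B = random_dh(6, 3, np.random.default_rng(0))
print(np.linalg.eigvals((J - R) @ Q).real.max())
```

With a singular \(R\), asymptotic stability is not automatic, which is why the sketch tests the spectrum and redraws if necessary.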

Example 4.1

As a second example consider the lumped parameter mass-spring-damper dynamical system, see, e.g., [32], with two point masses \(m_1\) and \(m_2\), which are connected by a spring-damper pair with constants \(k_2\) and \(c_2\), respectively. Mass \(m_1\) is linked to the ground by another spring-damper pair with constants \(k_1\) and \(c_1\), respectively. The system has two degrees of freedom, namely the displacements \(u_1(t)\) and \(u_2(t)\) of the two masses measured from their static equilibrium positions. Known dynamic forces \(f_1(t)\) and \(f_2(t)\) act on the masses. The equations of motion can be written in matrix form as

$$M{\ddot{u}}+D{\dot{u}}+Ku=f,$$

where

$$M=\left[ \begin{array}{cc} m_1 & 0\\ 0 & m_2 \end{array}\right] ,\quad D=\left[ \begin{array}{cc} c_1+c_2 & -c_2\\ -c_2 & c_2 \end{array}\right] ,\quad K=\left[ \begin{array}{cc} k_1+k_2 & -k_2\\ -k_2 & k_2 \end{array}\right] ,\quad u=\left[ \begin{array}{c} u_1\\ u_2 \end{array}\right] ,\quad f=\left[ \begin{array}{c} f_1\\ f_2 \end{array}\right] .$$

Here the real symmetric matrices \(M,\,D\) and K denote the mass, damping and stiffness matrices, respectively, and \(f,u,{\dot{u}}\) and \({\ddot{u}}\) are the force, displacement, velocity, and acceleration vectors, respectively. With the values \(m_1=2,\,m_2=1,\,c_1=0.1,\,c_2=0.3,\,k_1=6\), and \(k_2=3\) we have \(M,D,K > 0\) and an appropriate first order formulation has the linear DH pencil \(\lambda I_4 -(J-R)Q\),

The eigenvalues of \((J-R)Q\) are \(-0.2168 \pm 2.4361i\) and \(-0.0332 \pm 1.2262i\) and thus the system is asymptotically stable. Setting \(B=C^T=\left[ \begin{array}{cc}e_1&e_2 \end{array}\right] \in {\mathbb {R}}^{4,2}\), we perturb only the damping matrix D. The corresponding real stability radii are as follows.

| \(r_{{{\mathbb {R}}},2}(R,B,B^T)\) | \(r_{{{\mathbb {R}}},2}^{{\mathcal {S}}_i}(R,B)\) | \(r_{{{\mathbb {R}}},2}^{{\mathcal {S}}_d}(R,B)\) |
|---|---|---|
| 0.0796 | 0.1612 | 0.3250 |

As long as the norm of the perturbation to the damping matrix D is less than the stability radius, the system remains asymptotically stable. We also see that the stability radii \(r_{{{\mathbb {R}}},2}^{{\mathcal {S}}_i}(R,B)\) and \(r_{{{\mathbb {R}}},2}^{{\mathcal {S}}_d}(R,B)\), which correspond to perturbations that preserve the semidefiniteness of R, are significantly larger than \(r_{{{\mathbb {R}}},2}(R,B,B^T)\).
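The spectrum quoted in this example can be checked with a short script. The sketch assumes the standard matrices of a two-mass chain, \(M={\text {diag}}(m_1,m_2)\), \(D\) with entries \(c_1+c_2,\,-c_2,\,-c_2,\,c_2\) and \(K\) with entries \(k_1+k_2,\,-k_2,\,-k_2,\,k_2\), together with one possible first order form with state \((M{\dot{u}},u)\). This choice of \(J\), \(R\) and \(Q\) is an assumption made here for illustration and need not coincide with the pencil used in the example. The eigenvalues, however, do not depend on which first order form is chosen.

```python
import numpy as np

# Parameter values from the example.
m1, m2 = 2.0, 1.0
c1, c2 = 0.1, 0.3
k1, k2 = 6.0, 3.0

# Assumed standard chain matrices (mass m1 attached to the ground, m2 attached to m1).
M = np.diag([m1, m2])
D = np.array([[c1 + c2, -c2], [-c2, c2]])
K = np.array([[k1 + k2, -k2], [-k2, k2]])

# Assumed first order form with state x = (M u', u): x' = (J - R) Q x.
Z, I2 = np.zeros((2, 2)), np.eye(2)
J = np.block([[Z, -I2], [I2, Z]])               # skew-symmetric
R = np.block([[D, Z], [Z, Z]])                  # damping in the leading block
Q = np.block([[np.linalg.inv(M), Z], [Z, K]])   # energy matrix, positive definite

eigs = np.linalg.eigvals((J - R) @ Q)
print(np.sort_complex(eigs))
```

With this ordering of the state, the damping matrix occupies the leading \(2\times 2\) block of \(R\), so a restriction matrix \(B=[e_1~e_2]\) confines the perturbation to \(D\).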

In Table 2, we list the values of various stability radii for mass-spring-damper systems [32] of increasing size. The corresponding masses, damping constants and spring constants were chosen from the top of the vectors

and

respectively, i.e., the four-dimensional (\(n=4\)) DH pencil in (4.1) corresponds to the first two entries of the mass vector m, the damping vector c and the spring vector k, \(n=6\) corresponds to the first three entries of m, c and k, and so on. The restriction matrices \(B=C^T=[e_1~\,e_2\,\cdots \, e_{n/2}]\in {\mathbb {R}}^{n,\frac{n}{2}}\) are such that only the damping matrix D in R is perturbed. In addition to the conclusions of Example 4.1, we found that, as expected, the stability radius with respect to general perturbations decreases as the system dimension increases, while this effect is much less pronounced for the stability radii with respect to structure-preserving perturbations.
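The effect of the restriction matrices can be illustrated with a small sketch. It assumes that the damping matrix \(D\) occupies the leading \(\frac{n}{2}\times \frac{n}{2}\) block of \(R\), as the text suggests. The function name `restriction_matrix`, the dimension and the random symmetric \(\varDelta \) are hypothetical choices for the example.

```python
import numpy as np

def restriction_matrix(n):
    """Return B = [e_1 ... e_{n/2}] in R^{n x n/2} for even n."""
    if n % 2 != 0:
        raise ValueError("n must be even")
    return np.eye(n)[:, : n // 2]

n = 6
h = n // 2
B = restriction_matrix(n)

# Hypothetical symmetric perturbation of size n/2.
rng = np.random.default_rng(1)
S = rng.standard_normal((h, h))
Delta = 0.5 * (S + S.T)

E = B @ Delta @ B.T     # the term added to R under the restricted perturbation
print(E)
```

The product \(B\varDelta B^T\) embeds \(\varDelta \) into the leading block and leaves all other entries zero, so only the block of \(R\) that holds \(D\) is changed.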

5 Conclusions

We have presented formulas for the stability radii under real restricted structure-preserving perturbations to the dissipation term R in dissipative Hamiltonian systems. The results and the numerical examples show that the system is much more robustly asymptotically stable under structure-preserving perturbations than when the structure is ignored. Open problems include the computation of the real stability radii when the energy functional Q or the structure matrix J, or all three matrices R, Q, and J are perturbed.

References

Adhikari, B.: Backward perturbation and sensitivity analysis of structured polynomial eigenvalue problem. PhD thesis, Dept. of Math., IIT Guwahati, Assam, India (2008)

Byers, R.: A bisection method for measuring the distance of a stable to unstable matrices. SIAM J. Sci. Stat. Comput. 9, 875–881 (1988)

Dalsmo, M., van der Schaft, A.J.: On representations and integrability of mathematical structures in energy-conserving physical systems. SIAM J. Control Optim. 37, 54–91 (1999)

Davis, C., Kahan, W., Weinberger, H.: Norm-preserving dilations and their applications to optimal error bounds. SIAM J. Numer. Anal. 19, 445–469 (1982)

Freitag, M.A., Spence, A.: A Newton-based method for the calculation of the distance to instability. Linear Algebra Appl. 435(12), 3189–3205 (2011)

Gantmacher, F.R.: Theory of Matrices, vol. 1. Chelsea, New York (1959)

Gohberg, I., Lancaster, P., Rodman, L.: Indefinite Linear Algebra and Applications. Birkhäuser, Basel (2006)

Golo, G., van der Schaft, A.J., Breedveld, P.C., Maschke, B.M.: Hamiltonian formulation of bond graphs. In: Rantzer, A., Johansson, R. (eds.) Nonlinear and Hybrid Systems in Automotive Control, pp. 351–372. Springer, Heidelberg (2003)

Golub, G.H., Van Loan, C.F.: Matrix Computations, 3rd edn. Johns Hopkins University Press, Baltimore (1996)

Gräbner, N., Mehrmann, V., Quraishi, S., Schröder, C., von Wagner, U.: Numerical methods for parametric model reduction in the simulation of disc brake squeal. Z. Angew. Math. Mech. 96, 1388–1405 (2016). doi:10.1002/zamm.201500217

He, C., Watson, G.A.: An algorithm for computing the distance to instability. SIAM J. Matrix Anal. Appl. 20(1), 101–116 (1998)

Hinrichsen, D., Pritchard, A.J.: Stability radii of linear systems. Syst. Control Lett. 7, 1–10 (1986)

Hinrichsen, D., Pritchard, A.J.: Stability radius for structured perturbations and the algebraic Riccati equation. Syst. Control Lett. 8, 105–113 (1986)

Hinrichsen, D., Pritchard, A.J.: Mathematical Systems Theory I. Modelling, State Space Analysis, Stability and Robustness. Springer, New York (2005)

Kahan, W., Parlett, B.N., Jiang, E.: Residual bounds on approximate eigensystems of nonnormal matrices. SIAM J. Numer. Anal. 19, 470–484 (1982)

Mackey, D.S., Mackey, N., Tisseur, F.: Structured mapping problems for matrices associated with scalar products. Part I: Lie and Jordan algebras. SIAM J. Matrix Anal. Appl. 29(4), 1389–1410 (2008)

Martins, N., Lima, L.: Determination of suitable locations for power system stabilizers and static var compensators for damping electromechanical oscillations in large scale power systems. IEEE Trans. Power Syst. 5, 1455–1469 (1990)

Martins, N., Pellanda, P.C., Rommes, J.: Computation of transfer function dominant zeros with applications to oscillation damping control of large power systems. IEEE Trans. Power Syst. 22, 1657–1664 (2007)

Maschke, B.M., van der Schaft, A.J., Breedveld, P.C.: An intrinsic Hamiltonian formulation of network dynamics: non-standard Poisson structures and gyrators. J. Frankl. Inst. 329, 923–966 (1992)

Mehl, C., Mehrmann, V., Sharma, P.: Stability radii for linear Hamiltonian systems with dissipation under structure-preserving perturbations. SIAM J. Matrix Anal. Appl. 37, 1625–1654 (2016)

Ortega, R., van der Schaft, A.J., Mareels, Y., Maschke, B.M.: Putting energy back in control. Control Syst. Mag. 21, 18–33 (2001)

Ortega, R., van der Schaft, A.J., Maschke, B.M., Escobar, G.: Interconnection and damping assignment passivity-based control of port-controlled Hamiltonian systems. Automatica 38, 585–596 (2002)

Qiu, L., Bernhardsson, B., Rantzer, A., Davison, E., Young, P., Doyle, J.: A formula for computation of the real stability radius. Automatica 31, 879–890 (1995)

Rommes, J., Martins, N.: Exploiting structure in large-scale electrical circuit and power system problems. Linear Algebra Appl. 431, 318–333 (2009)

Schiehlen, W.: Multibody Systems Handbook. Springer, Heidelberg (1990)

van der Schaft, A.J.: Port-Hamiltonian systems: an introductory survey. In: Varona, J.L., Sanz-Solé, M., Verdera, J. (eds.) Proceedings of the International Congress of Mathematicians, vol. III, Invited Lectures, pp. 1339–1365. Madrid, Spain (2006)

van der Schaft, A.J.: Port-Hamiltonian systems: network modeling and control of nonlinear physical systems. In: Advanced Dynamics and Control of Structures and Machines, CISM Courses and Lectures, vol. 444. Springer, New York (2004)

van der Schaft, A.J., Maschke, B.M.: The Hamiltonian formulation of energy conserving physical systems with external ports. Arch. Elektron. Übertragungstech. 45, 362–371 (1995)

van der Schaft, A.J., Maschke, B.M.: Hamiltonian formulation of distributed-parameter systems with boundary energy flow. J. Geom. Phys. 42, 166–194 (2002)

van der Schaft, A.J., Maschke, B.M.: Port-Hamiltonian systems on graphs. SIAM J. Control Optim. 51, 906–937 (2013)

Van Loan, C.F.: How near is a matrix to an unstable matrix? Contemp. Math. AMS 47, 465–479 (1984)

Veselić, K.: Damped Oscillations of Linear Systems: A Mathematical Introduction. Springer, Heidelberg (2011)

Additional information

Communicated by Daniel Kressner.

Supported by Einstein Stiftung Berlin through the Research Center Matheon Mathematics for key technologies in Berlin.

Cite this article

Mehl, C., Mehrmann, V. & Sharma, P. Stability radii for real linear Hamiltonian systems with perturbed dissipation. Bit Numer Math 57, 811–843 (2017). https://doi.org/10.1007/s10543-017-0654-0

DOI: https://doi.org/10.1007/s10543-017-0654-0

Keywords

- Dissipative Hamiltonian system

- Port-Hamiltonian system

- Real distance to instability

- Real structured distance to instability

- Restricted real distance to instability