Abstract

This paper discusses upwinded local discontinuous Galerkin methods for one-term and multi-term fractional ordinary differential equations (FODEs). The natural upwind choice of the numerical fluxes for the initial value problem for FODEs ensures stability of the methods. The solution can be computed element by element with optimal order of convergence \(k+1\) in the \(L^2\) norm and superconvergence of order \(k+1+\min \{k,\alpha \}\) at the downwind point of each element. Here \(k\) is the degree of the approximation polynomial used in an element and \(\alpha \) (\(\alpha \in (0,1]\)) represents the order of the one-term FODEs. A generalization includes problems with an additional classic term of order \(m\), yielding a superconvergence order at the downwind point of \(k+1+\min \{k,\max \{\alpha ,m\}\}\). The underlying mechanism of the superconvergence is discussed and the analysis is confirmed through examples, including a discussion of how to use the scheme as an efficient way to evaluate the generalized Mittag-Leffler function and solutions to more general FODEs.

1 Introduction

Fractional calculus is a generalization of classical calculus, with a history that runs parallel to it. It was studied by many of the same mathematicians who contributed significantly to the development of classical calculus. In recent years, it has seen growing use as an appropriate model for non-classical phenomena in physics, engineering, and biology [15].

To gain an understanding of the basic elements of fractional calculus, we recall

A slight rewriting yields

Clearly, Eq. (1.2) still formally makes sense if \(n\) is replaced by \(\alpha \) (\(\in {R}^{+}\)) leading to the definition of the fractional integral as

Here \(a \in {R}\). This definition of the fractional integral is natural and simple, and inherits many good mathematical properties, e.g., the semigroup property. Moreover \(-\alpha \) ‘connects’ \(-\infty \) to \(0\) in a natural way.
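A short worked example may make these properties concrete. It assumes the standard Riemann–Liouville form of the fractional integral with \(a=0\), written in the notation \({_0D_t^{-\alpha }}\) used in this section, applied to a power function \(t^p\) with \(p>-1\):

\[
{_0D_t^{-\alpha }}\,t^{p} = \frac{1}{\Gamma (\alpha )}\int _0^t (t-\tau )^{\alpha -1}\tau ^{p}\,d\tau = \frac{\Gamma (p+1)}{\Gamma (p+1+\alpha )}\,t^{p+\alpha }.
\]

Applying this rule twice gives

\[
{_0D_t^{-\beta }}\,{_0D_t^{-\alpha }}\,t^{p} = \frac{\Gamma (p+1)}{\Gamma (p+1+\alpha )}\cdot \frac{\Gamma (p+1+\alpha )}{\Gamma (p+1+\alpha +\beta )}\,t^{p+\alpha +\beta } = {_0D_t^{-(\alpha +\beta )}}\,t^{p},
\]

which is the semigroup property for this family of functions. For \(\alpha =1\) and \(p=1\) the rule returns \(t^2/2\), the classical integral of \(t\).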

With the derivative as the inverse operation of the integral, the definition of the fractional derivative naturally arises by combining the definitions of the classical derivative and the fractional integral. In this way, the fractional derivative operator \(D^\alpha \) can be defined as \(D^{\lceil \alpha \rceil }D^{-(\lceil \alpha \rceil -\alpha )}\) or \(D^{-(\lceil \alpha \rceil -\alpha )}D^{\lceil \alpha \rceil }\). Mathematically, the first one can be regarded as preferred since \(D^nD^{-n}=I\) while \(D^{-n}D^n=I+\cdots \), which involves additional information about the function at the left end point. When considering a fractional derivative in the temporal direction, the second formulation is more popular due to its convenience when specifying the initial condition in a classic sense. These two definitions of derivatives are referred to as the Riemann–Liouville derivative and Caputo derivative [18], respectively, with the Riemann–Liouville derivative being defined as [4, 18]

and the Caputo derivative recovered as

A third alternative definition is the Grünwald–Letnikov derivative, based on finite differences to generalize the classical derivative. This is equivalent to the Riemann–Liouville derivative if one ignores assumptions on the regularity of the functions.

For (1.4), the following holds [7]

and for (1.3), \(\lim \nolimits _{\alpha \rightarrow n}{_aD_t^{-\alpha }}x(t)={_aD_t^{-n}}x(t)\). Hence, the operator \({_aD_t^\alpha } \,(\alpha \in {R})\) makes sense and ‘connects’ the orders of the fractional derivative from \(-\infty \) to \(+\infty \). However, for (1.5), one recovers

The Riemann–Liouville derivative and the Caputo derivative are equivalent if \(x(t)\) is sufficiently smooth and satisfies \(x^{(j)}(a)=0,\,j=0,1,\ldots ,n-1.\)
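The role of the values at the left end point can be seen from a one-line example. Assuming the standard definitions for \(0<\alpha <1\), and writing \({_a^CD_t^{\alpha }}\) for the Caputo derivative (a notation introduced here only for this illustration), the two derivatives of a sufficiently smooth \(x(t)\) are related by

\[
{_aD_t^{\alpha }}x(t) = {_a^CD_t^{\alpha }}x(t) + \frac{x(a)}{\Gamma (1-\alpha )}\,(t-a)^{-\alpha }.
\]

For the constant function \(x\equiv 1\) the Caputo derivative vanishes, while the Riemann–Liouville derivative equals \((t-a)^{-\alpha }/\Gamma (1-\alpha )\); the two agree exactly when \(x(a)=0\).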

At times, (1.3) and (1.4) are referred to as the left Riemann–Liouville fractional integral and the left Riemann–Liouville fractional derivative, respectively. In some cases it is natural to consider the interval \([t, b]\) instead of \([a, t]\), leading to the right Riemann–Liouville fractional integral being defined as

where \(b\in R\) and \(b\) can be \(+\infty \).

In this work, we develop local discontinuous Galerkin (LDG) methods for the one-term and multi-term initial value problems for fractional ordinary differential equations (FODEs). For ease of presentation, we shall focus the discussion on the following two types of FODEs

and

where \(\alpha \in (0,1]\) and \(m\) is a positive integer; in the interest of generality, we also include examples with \(\alpha \in [1,2]\). For (1.6) and (1.7), the initial conditions can be specified exactly as for the classical ODEs, i.e., the values of \(x^{(j)}(a)\) must be given, where \(j=0,1,2,\ldots ,\lfloor \alpha \rfloor \) for (1.6) and \(j=0,1,2,\ldots ,\max \{\lfloor \alpha \rfloor ,m-1\}\) for (1.7).

It appears that the earliest numerical methods used in the engineering community for such problems are the predictor-corrector approach originally presented in [9], later slightly improved in [7], and a method that approximates the fractional derivative by a series of classical derivatives, realized through frequency domain techniques based on Bode diagrams [12]. For the second method, how to evaluate the time domain error introduced by the frequency domain approximations remains open.

Discontinuous Galerkin (DG) methods are well developed for classical differential equations [13]. They were initiated for classical ODEs [6] and followed by substantial later work, mostly on DG methods for the related Volterra integro-differential equations, including a priori analysis [19], \(hp\)-adaptive methods [3, 16] and recent work on superconvergence in the \(h\)-version [17]. The approach has been extended to approximate fractional spatial derivatives [8] in order to solve fractional diffusion equations, using the idea of local discontinuous Galerkin (LDG) methods [2, 5]. In this work, we discuss DG methods for the approximate solution of general FODEs. All the advantages and characteristics of the spatial DG methods carry over to this case, a central one being the ability to solve the equation interval by interval when the upwind flux, taking the value of \(x(t)\) at a discontinuity point \(t_j\) as \(x(t_j^-)\), is used. This is a natural choice, since for the initial value problems for the fractional (or classical) ODEs the information travels forward in time. It implies that we just invert a local low order matrix rather than a global full matrix. The LDG methods for the one-term FODEs (1.6) have optimal order of convergence \(k+1\) in the \(L^2\) norm and we observe superconvergence of order \(k+1+\min \{k,\alpha \}\) at the downwind point of each element. Here \(k\) is the degree of the approximation polynomial used in an element. For the two-term FODEs (1.7), the LDG methods retain the optimal convergence order in the \(L^2\) norm, with superconvergence of order \(k+1+\min \{k,\max \{\alpha ,m\}\}\) at the downwind point of each element. We shall discuss the underlying mechanism of this superconvergence and illustrate the results of the analysis through a number of examples, including some going beyond the theoretical developments presented here.

The remainder of the paper is organized as follows. In Sect. 2, we present the LDG schemes for the FODEs, discuss the numerical stability of the scheme for the linear case of (1.6) with \(\alpha \in [0,1]\), and uncover the mechanism of superconvergence. The analysis is supported by a number of computational experiments and we also illustrate how the proposed scheme can be applied to compute the generalized Mittag-Leffler functions. In Sect. 3 we discuss a number of generalizations of the scheme, illustrated by a selection of numerical examples, and Sect. 4 offers a few concluding remarks.

2 LDG schemes for the FODEs

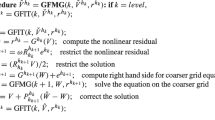

The basic idea in the design of the LDG schemes is to rewrite the FODEs as a system of first order classical ODEs and a fractional integral. Since the integral operator naturally connects the discontinuous function, we need not add a penalty term or introduce a numerical flux for the integral equation. However, for the first order ODEs, upwind fluxes are used. In this section, we present the LDG schemes, prove numerical stability and discuss the underlying mechanism of superconvergence.
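The splitting idea can be tried out on a known function before any Galerkin discretization enters. The sketch below assumes the standard Caputo definition for \(\alpha \in (0,1)\), i.e., a fractional integral of order \(1-\alpha \) applied to the first derivative, and checks it against the closed form for \(x(t)=t^5+1\). The function names, the substitution used to remove the kernel singularity, and the Simpson rule are illustrative choices and are not taken from the scheme itself.

```python
import math

def frac_integral(x, t, order, n=2000):
    """Riemann-Liouville fractional integral of x over [0, t], order in (0, 1].

    The substitution u = (t - tau)**order removes the weakly singular kernel:
    the integral becomes (1 / (order * Gamma(order))) times the integral of
    x(t - u**(1/order)) over [0, t**order], evaluated here with the composite
    Simpson rule (n must be even).
    """
    upper = t ** order
    h = upper / n

    def g(u):
        # max() guards against a tiny negative argument caused by rounding
        return x(max(0.0, t - u ** (1.0 / order)))

    total = g(0.0) + g(upper)
    for i in range(1, n):
        total += (4.0 if i % 2 else 2.0) * g(i * h)
    return (h / 3.0) * total / (order * math.gamma(order))

def caputo_via_splitting(dx, t, alpha):
    """Caputo derivative of order alpha in (0, 1): x_1 = dx/dt, then integrate."""
    return frac_integral(dx, t, 1.0 - alpha)

# Test function x(t) = t**5 + 1, so x_1(t) = 5 t**4
t, alpha = 0.8, 0.5
approx = caputo_via_splitting(lambda tau: 5.0 * tau ** 4, t, alpha)
exact = math.gamma(6) / math.gamma(6 - alpha) * t ** (5 - alpha)
print(approx, exact)
```

The quadrature is most accurate when \(1/(1-\alpha )\) is an integer, since the transformed integrand is then a polynomial for polynomial data; for other orders it still converges, only more slowly.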

2.1 LDG schemes

We consider (1.6) and rewrite it as

We consider a scheme for solving (2.1) in the interval \(\Omega =[0,T]\). Given the nodes \(0=t_0<t_1<\cdots <t_{n-1}<t_n=T\), we define the mesh \(\fancyscript{T}=\{I_j=(t_{j-1},t_j),\,j=1,2,\ldots ,n\}\) and set \(h_j:=|I_j|=t_j-t_{j-1}\) and \(h:=\max _{j=1}^n h_j\). Associated with the mesh \(\fancyscript{T}\), we define the broken Sobolev spaces

and

For a function \(v \in H^1(\Omega ,\fancyscript{T})\), we denote the one-sided limits at the nodes \(t_j\) by

Assume that the solutions belong to the corresponding spaces:

We further define \(X_i\) as the approximation functions of \(x_i\) respectively, in the finite dimensional subspace \(V \subset H^1(\Omega , \fancyscript{T})\); and choose \(V\) to be the space of discontinuous, piecewise polynomial functions

where \(\fancyscript{P}^k(I_j)\) denotes the set of all polynomials of degree less than or equal to \(k\) on \(I_j\). Using the upwind fluxes for the first order classical ODEs and discretizing the integral equation, we seek \(X_i \in V\) such that for all \(v_i \in V\), and \(j=1,2,\ldots , n\), the following holds

Remark When taking \(\alpha \in (0,1]\) and \(m=1\) in (1.7), its scheme is

The scheme is clearly consistent, i.e., the exact solutions of the corresponding models satisfy (2.2). Furthermore, since an upwind flux is used the solutions can be computed interval by interval and if \(f(x,t)\) is a linear function in \(x\), we just need to invert a small matrix in each interval to recover the solution.

2.2 Numerical stability

We consider the question of stability for the linear case of (2.2):

where \(A\) is a negative constant and \(B(t)\) is sufficiently regular to ensure existence and uniqueness. The numerical scheme of (2.3) is to find \(X_i \in V\) such that

holds for all \(v_i \in V\). We first state a lemma, which follows from the semigroup property of fractional integral operators, Property \(A.2\) of [10], and Lemma 2.6 of [8].

Lemma 2.1

For \(\beta >0\), \(\alpha \in (0,1)\),

Let \({\widetilde{X}}_i \in V \) be the approximate solution of \(X_i\) and denote \(e_{X_i}:={\widetilde{X}}_i-X_i\) as the numerical errors. Stability of (2.4) is established in the following theorem.

Theorem 2.1

\((L^\infty \) stability) Scheme (2.4) is \(L^\infty \) stable; and the numerical errors satisfy

where \(\delta e_{X_i}(t_{i-1})=e_{X_i}(t_{i-1}^+)-e_{X_i}(t_{i-1}^-)\).

Since \(A<0\), the scheme is dissipative.

Proof

From (2.4), we recover the error equation

for all \(v_i \in V\). Taking \(v_0=e_{X_0}\), \(v_1=e_{X_1}\), and adding the two equations, we obtain

Summing the equations for \(i=1,2,\ldots ,n\) leads to

and

Using (2.6) of Lemma 2.1 yields the desired result. \(\square \)

2.3 Mechanism of superconvergence

As we shall see shortly, the proposed LDG scheme is \(k+1\) optimally convergent in the \(L^2\)-norm but superconvergent at downwind points. This is also known for the classic case where the downwind convergence is \(2k+1\) [1, 6]. However, for the fractional case, the order of the superconvergence depends on the order of the Caputo fractional derivatives. To understand this, let us again focus on the linear case of (2.2).
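The classic statement can be checked with a few lines of code. The sketch below is restricted to the classical limit \(\alpha =1\), to the linear test problem \(x^\prime =\lambda x\), and to linear elements (\(k=1\)) with a hat-function basis; all of these are assumptions made for illustration, and the code does not implement the fractional scheme. Each element requires only a \(2\times 2\) solve, and the errors on the two sides of the last element are compared under mesh halving.

```python
import math

def dg1_sweep(lam, x0, T, n):
    """Upwind DG with linear elements (k = 1) for x' = lam * x on [0, T].

    Per element the unknowns are a = X(t_{j-1}^+) and b = X(t_j^-).
    Returns (b, a) for the last element.
    """
    h = T / n
    z = lam * h
    # Local 2x2 matrix obtained by testing with the hat functions 1 - s and s
    m11, m12 = 0.5 - z / 3.0, 0.5 - z / 6.0
    m21, m22 = -0.5 - z / 6.0, 0.5 - z / 3.0
    det = m11 * m22 - m12 * m21
    x_prev = x0                      # upwind value X(t_{j-1}^-)
    a = b = x0
    for _ in range(n):
        # Cramer's rule for the right-hand side (x_prev, 0)
        a = x_prev * m22 / det
        b = -x_prev * m21 / det
        x_prev = b                   # pass the downwind value to the next element
    return b, a

def last_element_errors(n, lam=-1.0, T=1.0):
    """Errors at the downwind point T and at the upwind side of the last element."""
    b, a = dg1_sweep(lam, 1.0, T, n)
    t_left = T - T / n
    return abs(b - math.exp(lam * T)), abs(a - math.exp(lam * t_left))

def observed_order(e_coarse, e_fine):
    return math.log2(e_coarse / e_fine)   # the mesh is halved between the two runs

down10, up10 = last_element_errors(10)
down20, up20 = last_element_errors(20)
print("downwind order:", observed_order(down10, down20))
print("upwind-side order:", observed_order(up10, up20))
```

Under these assumptions the downwind order should come out close to \(2k+1=3\), while the value on the upwind side of the element, which behaves like a generic point inside the element, should show only the optimal order \(k+1=2\).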

The error equation corresponding to (2.4) is

where \(E_{X_i}=x_i-X_i\). In (2.9), taking \(v_i\) to be continuous on the interval \([0,t_j]\) and summing the equations for \(i=1,2,\ldots ,n\) leads to

Following (2.5), we rewrite this as

Rearranging the terms of (2.11) results in

Solving \(\tilde{v}_0+\frac{1}{A}{_tD_{t_n}^{-(1-\alpha )}}\tilde{v}_1=0\) and \(\frac{d\tilde{v}_0}{dt}-\tilde{v}_1=0\) for \(t \in [0,t_n]\) with \(\tilde{v}_0(t_n)=E_{X_0}(t_n^-)\), we get

where \(E_{\alpha ,1}\) is the Mittag-Leffler function. Taking \(v_i\) as the \(L^2\) projection of \(\tilde{v}_i\) onto \(\fancyscript{P}^k\), we recover that if \(\alpha \) is an integer, there exists

due to the regularity of the Mittag-Leffler function [14]. For the fractional case, the approximation [11] yields

Combining with classic polynomial approximation results for \(E_{X_i}\) [6] yields an order of convergence at the downwind point of \(k+1+\min \{ k, \alpha \}\).

Remark 2.1

The superconvergence orders of the scheme (2.9) strongly depend on the regularity of the solution of \(\tilde{v}_0+\frac{1}{A}{_tD_{t_n}^{-(1-\alpha )}}\tilde{v}_1=0\) and \(\frac{d\tilde{v}_0}{dt}-\tilde{v}_1=0\) for \(t \in [0,t_n]\) with \(\tilde{v}_0(t_n)=E_{X_0}(t_n^-)\). We can arrive at similar conclusions for the other schemes discussed in this paper.

2.4 Numerical experiments

Let us consider a few numerical examples to validate the above analysis.

All results assume that the corresponding analytical solutions are sufficiently regular. For showing the effectiveness of the LDG schemes and further confirming the predicted convergence orders, both linear and nonlinear cases are considered. Finally, we shall also consider the computation of the generalized Mittag-Leffler functions using the LDG scheme.

We use Newton’s method to solve the nonlinear systems. The initial guess in the interval \(I_j\) (\(j \ge 2\)) is given as \(X_i(t_{j-1}^-)\) or by extrapolating forward to the interval \(I_j\). For the interval \(I_1\), we use \(X_0(t_0^-)\) \((=x_0)\) as initial guess.
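The element-wise Newton iteration with the previous downwind value as initial guess can be sketched in its simplest setting. The example below assumes the classical limit \(\alpha =1\), an autonomous right-hand side \(f(x)\), and piecewise constant elements (\(k=0\)), for which the local problem is a single scalar equation; the function name, tolerance, and test problem \(x^\prime =-x^2\) are hypothetical choices for illustration only.

```python
import math

def dg0_newton(f, dfdx, x0, T, n, tol=1e-13, max_iter=20):
    """Piecewise-constant upwind DG (k = 0) for x' = f(x), element by element.

    On each element the scalar equation X - X_prev - h * f(X) = 0 is solved
    by Newton's method, started from the value on the previous element.
    """
    h = T / n
    x_prev = x0
    for _ in range(n):
        x = x_prev                               # initial guess: upwind value
        for _it in range(max_iter):
            residual = x - x_prev - h * f(x)
            step = residual / (1.0 - h * dfdx(x))
            x -= step
            if abs(step) < tol:
                break
        x_prev = x
    return x_prev

# Test problem x' = -x**2, x(0) = 1, with exact solution 1 / (1 + t)
err100 = abs(dg0_newton(lambda x: -x * x, lambda x: -2.0 * x, 1.0, 1.0, 100) - 0.5)
err200 = abs(dg0_newton(lambda x: -x * x, lambda x: -2.0 * x, 1.0, 1.0, 200) - 0.5)
print(err100, err200, math.log2(err100 / err200))
```

For higher degrees the scalar equation becomes a small nonlinear system per element, but the structure of the sweep, including the choice of initial guess, stays the same.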

2.4.1 Numerical results for (1.6) with \(\alpha \in (0,1]\)

We first consider examples to confirm that the convergence order of (2.2) is \(k+1+\alpha \) at downwind points and \(k+1\) in the \(L^2\) sense, respectively, where \(k\) is the degree of the polynomial used in an element and \(\alpha \in [0,1)\). However, when \(\alpha =1\) the convergence order is \(2k+1\) at downwind point and still \(k+1\) in \(L^2\) sense in agreement with classic theory [6].

Example L1. On the computational domain \(t\in \Omega =(0,1)\), we consider

with the initial condition \(x(0)=1\) and the exact solution \(x(t)=t^5+1\). Note that when \(\alpha =0\) this condition is still required for the form of (2.1).

Figure 1 displays convergence at the downwind point as well as in \(L^2\) for \(k=1,2,3\), confirming optimal \(L^2\) convergence and an order of convergence of \(k+1+\alpha \) at the downwind point as predicted.
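Convergence orders of this kind are typically read off as slopes between consecutive refinements, assuming the error behaves like \(C n^{-\gamma }\) in the number of elements \(n\). A minimal helper is sketched below; the function name is hypothetical and the error values are synthetic, generated from an exact power law, not data from the experiments reported here.

```python
import math

def observed_orders(n_elements, errors):
    """Slopes gamma between consecutive runs, assuming error ~ C * n**(-gamma)."""
    return [
        math.log(errors[i] / errors[i + 1])
        / math.log(n_elements[i + 1] / n_elements[i])
        for i in range(len(errors) - 1)
    ]

# Synthetic errors decaying exactly like n**(-2.5) (illustration only)
ns = [10, 20, 40, 80]
errs = [3.0 * n ** (-2.5) for n in ns]
orders = observed_orders(ns, errs)
print(orders)
```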

Fig. 1 The convergence of (2.2) for \(k=1\) (top row), \(k=2\) (middle row) and \(k=3\) (bottom row) in (2.16). In the left column we show the convergence at the downwind points while the right column displays \(L^2\)-convergence. The solid line (blue online) without marker is the reference curve \(\sim C n^{-\gamma }\), where \(n\) is the number of elements and \(\gamma \) is an appropriate constant (color figure online)

Example N1. We consider the nonlinear FODE on the domain \(t \in \Omega =(0,0.5)\),

with the initial condition \(x(0)=1\), and the exact solution \(x(t)=t^5+1\).

The results in Fig. 2 confirm that the optimal \(L^2\) convergence and an order of convergence of \(k+1+\alpha \) at the downwind point carry over to the nonlinear case.

Fig. 2 The convergence of (2.2) for \(k=1\) (top row), \(k=2\) (middle row) and \(k=3\) (bottom row) in (2.17). In the left column we show the convergence at the downwind points while the right column displays \(L^2\)-convergence. The solid line (blue online) without marker is the reference curve \(\sim C n^{-\gamma }\), where \(n\) is the number of elements and \(\gamma \) is an appropriate constant (color figure online)

2.4.2 Calculating the generalized Mittag-Leffler function

As a more general example, let us use the scheme as an efficient and accurate solver for calculating the generalized Mittag-Leffler functions defined as
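For reference, the two-parameter function is commonly defined by the series \(E_{\alpha ,\beta }(z)=\sum _{k=0}^{\infty } z^k/\Gamma (\alpha k+\beta )\) with \(\alpha ,\beta >0\). Under that assumption, a direct summation gives an independent check for values computed by other means. The sketch below uses a fixed number of terms and is only meant for real arguments of moderate size; it is not the evaluation method proposed in this section.

```python
import math

def mittag_leffler(alpha, beta, z, n_terms=200):
    """Truncated series for E_{alpha,beta}(z), real z of moderate size.

    Each term is formed in logarithmic form so that Gamma(alpha*k + beta)
    never overflows; the sign of z**k is tracked separately.
    """
    if z == 0.0:
        return 1.0 / math.gamma(beta)
    log_abs_z = math.log(abs(z))
    total = 0.0
    for k in range(n_terms):
        log_term = k * log_abs_z - math.lgamma(alpha * k + beta)
        sign = -1.0 if (z < 0.0 and k % 2 == 1) else 1.0
        total += sign * math.exp(log_term)
    return total

# Known special cases: E_{1,1}(z) = exp(z) and E_{2,1}(-z**2) = cos(z)
print(mittag_leffler(1.0, 1.0, -1.0), math.exp(-1.0))
print(mittag_leffler(2.0, 1.0, -4.0), math.cos(2.0))
```

For large negative arguments the alternating series suffers from cancellation, which is one reason an ODE-based evaluation can be attractive.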

To build the relation between the Mittag-Leffler function and the FODE consider

Taking the Laplace transform on both sides of the above equation, we recover

where \(X(s)\) is the Laplace transform of \(x(t)\). From (2.20), we obtain

Using the inverse Laplace transform in (2.21) results in

Since

we recover

By solving (2.19) and (2.23) or just (2.19) when \(\beta =1\), we can efficiently calculate the generalized Mittag-Leffler function \(E_{\alpha ,\beta }(At^\alpha )\) as illustrated in Fig. 3.
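For \(\beta =1\) the chain of steps can be written out in full. The sketch assumes that (2.19) is the linear Caputo problem of order \(\alpha \in (0,1]\) with \(x(0)=1\); the notation \({_0^CD_t^{\alpha }}\) and this normalization are assumptions made for the illustration:

\[
{_0^CD_t^{\alpha }}x(t)=A\,x(t),\quad x(0)=1
\;\Longrightarrow \;
s^{\alpha }X(s)-s^{\alpha -1}=A\,X(s)
\;\Longrightarrow \;
X(s)=\frac{s^{\alpha -1}}{s^{\alpha }-A}.
\]

Together with the standard transform pair

\[
\mathcal {L}\{t^{\beta -1}E_{\alpha ,\beta }(At^{\alpha })\}(s)=\frac{s^{\alpha -\beta }}{s^{\alpha }-A},
\]

this gives \(x(t)=E_{\alpha ,1}(At^{\alpha })\), so that solving the FODE numerically evaluates the Mittag-Leffler function along \(t\).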

3 Generalizations

Let us now consider the generalized case, given as

where \(\alpha \) in general is real, and \(m\) is a positive integer. We shall follow the same approach as previously, and consider the system of equations

with appropriate initial conditions on \(x_i(0)\) given. Here \(p=\lceil \alpha \rceil \). For simplicity we assume \(x_i(t) \in H^1(\Omega ,\fancyscript{T})\) except \(x_m(t) \in L^2(\Omega ,\fancyscript{T})\). We will continue to use the upwind fluxes to seek \(X_i\), such that for all \(v_i \in V\), the following holds

subject to the appropriate initial conditions.

The analysis of this scheme is generally similar to that of the previous one and will not be discussed further, although, as we shall illustrate shortly, some details remain open. A main difference is that the order of superconvergence at the downwind point changes to \(k+1+\min \{k,\max \{\alpha ,m\}\}\); the impact of the fractional operator is thus eliminated by the linear classic operator as long as \(m\ge \alpha \). However, for the case where \( \lfloor \alpha \rfloor \ge k,m\), the situation is less clear.

Let us first consider a linear example to illustrate that the order of superconvergence \(2k+1\) at downwind points can also be obtained when \(\alpha \) equals \(\lfloor \alpha \rfloor \) or \(\lceil \alpha \rceil \), i.e., when \(\alpha \) is an integer, provided the initial condition is not overspecified. On the computational domain \(t\in \Omega =(0,1)\), we consider

with the initial condition \(x(0)=1\) and the exact solution \(x(t)=t^5+1\).

As expected, Fig. 4 confirms superconvergence of order \(k+1+\min \{k,\max \{\alpha ,m\}\}\) at the downwind point.

Fig. 4 The convergence of (3.4) for \(k=1\) (top row), \(k=2\) (middle row) and \(k=3\) (bottom row). In the left column we show the convergence at the downwind points while the right column displays \(L^2\)-convergence. The solid line (blue online) without marker is the reference curve \(\sim C n^{-\gamma }\), where \(n\) is the number of elements and \(\gamma \) is an appropriate constant (color figure online)

Let us consider a nonlinear problem, given for \(t\in \Omega =(0,1)\), as

with the initial condition \(x(0)=1\), \(x^\prime (0)=1\), \(x^{\prime \prime }(0)=1\) and the exact solution \(x(t)=t^5+\frac{1}{2}t^2+t+1\). We note that in this case \(\alpha \in [1,2]\) but \(m=3\), so that \(\max \{\alpha ,m\}=3\ge k\) for \(k=1,2,3\) and the predicted order \(k+1+\min \{k,\max \{\alpha ,m\}\}\) reduces to \(2k+1\). As shown in Fig. 5, superconvergence of order \(2k+1\) is indeed maintained.

Fig. 5 The convergence of (3.3) for \(k=1\) (top row), \(k=2\) (middle row) and \(k=3\) (bottom row) in (3.5). In the left column we show the convergence at the downwind points while the right column displays \(L^2\)-convergence. The solid line (blue online) without marker is the reference curve \(\sim C n^{-\gamma }\), where \(n\) is the number of elements and \(\gamma \) is an appropriate constant (color figure online)

However, if the assumption that \(m\ge \lceil \alpha \rceil \) is violated, an \(\alpha \)-dependent rate of convergence re-emerges as illustrated in the following example. Consider a nonlinear problem, given for \(t\in [0,1]\) as

with the initial condition \(x(0)=1\), \(x^\prime (0)=1\) and the exact solution \(x(t)=t^5+t+1\). Figure 6 shows superconvergence of order \(k+1+\min \{k,\alpha \}\) at the downwind point.

Fig. 6 The convergence of (3.3) for \(k=1\) (top row), \(k=2\) (middle row) and \(k=3\) (bottom row) in (3.6). In the left column we show the convergence at the downwind points while the right column displays \(L^2\)-convergence. The solid line (blue online) without marker is the reference curve \(\sim C n^{-\gamma }\), where \(n\) is the number of elements and \(\gamma \) is an appropriate constant (color figure online)

As a final example, let us consider

on the computational domain \(t\in \Omega =(0,1)\), with the initial condition \(x(0)=1\), \(x^\prime (0)=1\) and the exact solution \(x(t)=t^5+t+1\).

In Fig. 7 we show the results for \(k=1\). Following the previous analysis, we would expect an order of convergence of \(k+1+\min \{k,\alpha \}\), which in this case would be third order. However, the results in Fig. 7 highlight a reduction in the order of convergence at the endpoint as \(\alpha \) approaches the value one. The mechanism for this is not fully understood but is likely associated with an overspecification of the initial conditions in this singular limit. Increasing \(k\) recovers the expected convergence rate for all values of \(\alpha \).

Fig. 7 The convergence of (3.3) for \(k=1\) in (3.7). On the left we show the convergence at the downwind points while the right figure displays \(L^2\)-convergence. The solid line (blue online) without marker is the reference curve \(\sim C n^{-\gamma }\), where \(n\) is the number of elements and \(\gamma \) is an appropriate constant (color figure online)

Remark 3.1

From the simulation results, we can conclude that: (1) for all the schemes, the optimal \(L^2\) convergence rate \(k+1\) is obtained, with global truncation errors \(C(\alpha ) h^{k+1}\), and the value of \(C(\alpha )\) is not very sensitive to changes in \(\alpha \); (2) in the case \(m=3\) and \(\alpha \in [1,2]\) (Fig. 5), the superconvergence rate \(2k+1\) is obtained at the downwind point, with global truncation errors \(C(\alpha ) h^{2k+1}\), and \(C(\alpha )\) is again not sensitive to changes in \(\alpha \).

4 Concluding remarks

We introduce LDG schemes with upwind fluxes for general FODEs. The schemes enable an element by element solution, hence avoiding the need for a full global solve. Through analysis, we highlight that the scheme converges with the optimal order \(k+1\) in the \(L^2\) norm and shows superconvergence at the downwind point of each interval of order \(k+1+\min \{k,\alpha \}\), where \(\alpha \) refers to the order of the fractional derivative and \(k\) to the degree of the approximating polynomial. We discuss the mechanism for this superconvergence and extend the discussion to cases where classic ODE terms of order \(m\) are included in the equation. In this case, the order of the superconvergence becomes \(k+1+\min \{k,\max \{\alpha ,m\}\}\), i.e., the behavior of the classic derivative dominates that of the fractional operator provided \(m\ge \lceil \alpha \rceil \). This is confirmed through examples. A final case with \(k\le \lfloor \alpha \rfloor \) shows that the expected convergence order \(k+1+\min \{k,\alpha \}\) is violated as \(\alpha \) approaches one. The mechanism for this remains unknown and we hope to report on that in future work.

References

1. Adjerid, S., Devine, K.D., Flaherty, J.E., Krivodonova, L.: A posteriori error estimation for discontinuous Galerkin solutions of hyperbolic problems. Comput. Methods Appl. Mech. Eng. 191, 1097–1112 (2002)

2. Bassi, F., Rebay, S.: A high-order accurate discontinuous finite element method for the numerical solution of the compressible Navier-Stokes equations. J. Comput. Phys. 131, 267–279 (1997)

3. Brunner, H., Schötzau, D.: Hp-Discontinuous Galerkin time-stepping for Volterra integrodifferential equations. SIAM J. Numer. Anal. 44, 224–245 (2006)

4. Butzer, P.L., Westphal, U.: An introduction to fractional calculus. World Scientific, Singapore (2000)

5. Cockburn, B., Shu, C.-W.: The local discontinuous Galerkin method for time-dependent convection diffusion systems. SIAM J. Numer. Anal. 35, 2440–2463 (1998)

6. Delfour, M., Hager, W., Trochu, F.: Discontinuous Galerkin methods for ordinary differential equations. Math. Comput. 36, 455–473 (1981)

7. Deng, W.H.: Numerical algorithm for the time fractional Fokker-Planck equation. J. Comput. Phys. 227, 1510–1522 (2007)

8. Deng, W.H., Hesthaven, J.S.: Discontinuous Galerkin methods for fractional diffusion equations. ESAIM M2AN 47, 1845–1864 (2013)

9. Diethelm, K., Ford, N.J., Freed, A.D.: A predictor-corrector approach for the numerical solution of fractional differential equations. Nonlinear Dyn. 29, 3–22 (2002)

10. Ervin, V.J., Roop, J.P.: Variational formulation for the stationary fractional advection dispersion equation. Numer. Methods Partial Differ. Equ. 22, 558–576 (2005)

11. Guo, B.Q., Heuer, N.: The optimal convergence of the h-p version of the boundary element method with quasiuniform meshes for elliptic problems on polygonal domains. Adv. Comput. Math. 24, 353–374 (2006)

12. Hartley, T.T., Lorenzo, C.F., Qammer, H.K.: Chaos in a fractional order Chua’s system. IEEE Trans. Circuits Syst. I Fundam. Theory Appl. 42, 485–490 (1995)

13. Hesthaven, J.S., Warburton, T.: Nodal discontinuous Galerkin methods: algorithms, analysis, and applications. Springer, New York (2008)

14. Mainardi, F.: On some properties of the Mittag-Leffler function \(E_\alpha (-t^\alpha )\), completely monotone for \(t>0\) with \(0< \alpha <1\). Discret. Contin. Dyn. Syst. Ser. B (2014) (in press)

15. Metzler, R., Klafter, J.: The random walk’s guide to anomalous diffusion: a fractional dynamics approach. Phys. Rep. 339, 1–77 (2000)

16. Mustapha, K., Brunner, H., Mustapha, H., Schötzau, D.: An hp-version discontinuous Galerkin method for integro-differential equations of parabolic type. SIAM J. Numer. Anal. 49, 1369–1396 (2011)

17. Mustapha, K.A.: A Superconvergent discontinuous Galerkin method for Volterra integro-differential equations. Math. Comput. 82, 1987–2005 (2013)

18. Podlubny, I.: Fractional differential equations. Academic Press, New York (1999)

19. Schötzau, D., Schwab, C.: An hp a priori error analysis of the DG time-stepping method for initial value problems. Calcolo 37, 207–232 (2000)

Additional information

Communicated by Jan Nordström.

Supported by NSFC 11271173, NSF DMS-1115416, and OSD/AFOSR FA9550-09-1-0613.

Cite this article

Deng, W., Hesthaven, J.S. Local discontinuous Galerkin methods for fractional ordinary differential equations. Bit Numer Math 55, 967–985 (2015). https://doi.org/10.1007/s10543-014-0531-z

DOI: https://doi.org/10.1007/s10543-014-0531-z

Keywords

- Fractional ordinary differential equation

- Local discontinuous Galerkin methods

- Downwind points

- Superconvergence

- Generalized Mittag-Leffler function