Abstract

This paper analyzes three multiscale modeling techniques that are commonly used in biology and physics and uses those cases to construct a normative framework for tailoring multiscale modeling techniques to specific modeling contexts. I argue that the selection of a multiscale modeling technique ought to focus on degrees of relative autonomy between scales, the measurable macroscale parameters of interest, indirect scaling relationships mediated by mesoscale features, and the degree of heterogeneity of the system’s mesoscale structures. The unique role that these features play in multiscale modeling reveals several important methodological, epistemological, and metaphysical questions for future philosophical investigations into multiscale modeling.

Introduction

Several philosophers of science and practicing scientific modelers have begun to pay attention to multiscale modeling techniques (Batterman 2021; Batterman and Green 2021; Bokulich 2021; Bursten 2018; Dallon 2010; Deisboeck et al. 2011; Jhun 2021; Laughlin et al. 1999; Meier-Schellersheim et al. 2009; Pincock 2012; Rice 2021, 2022; Wilson 2017). What is more, the importance of mesoscale structures and correlations in biological systems makes the life sciences “a rich field well suited for the application of multiscale modeling” (Dallon 2010, 24). Indeed, living systems have spatial scales that range from habitats that are kilometers wide to cellular features that are on the scale of microns. Similarly, biological time scales can range from years for population growth to cell division processes that occur on the scale of a few seconds. In addition, each spatial and temporal scale of these biological systems “not only has its own characteristic types of data, but also typical modeling and simulation approaches associated with it” (Meier-Schellersheim et al. 2009, 4). To investigate these multiscale phenomena, many biological modelers have begun borrowing multiscale and mesoscale ‘middle-out’ modeling strategies from various areas of physics. As one article starkly puts it, “the miracles of nature revealed by modern molecular biology are no less astonishing than those found by physicists in macroscopic matter. Their existence leads one to question whether as-yet-undiscovered organizing principles might be at work at the mesoscopic scale, at least in living things. This is by any measure a central philosophical controversy of modern science” (Laughlin et al. 1999, 32).

Due to the diversity of multiscale modeling strategies available, a key challenge for practicing scientific modelers is to determine which multiscale modeling techniques are best suited to different modeling contexts. To help multiscale modelers tailor their techniques to specific modeling contexts, this paper investigates three case studies of multiscale modeling strategies that are widely used in biology (and physics) and analyzes the goals of the modelers, the assumptions of the modeling approaches, and the features of the real-world target system(s) that make those strategies more or less likely to be successful at accomplishing the modelers’ aims. Drawing on the distinctive features of these cases, I argue that the selection of a multiscale modeling technique needs to pay attention to the degree of relative autonomy between scales, the measurable macroscale parameters of interest, indirect scaling relationships mediated by mesoscale features, and the degree of heterogeneity of the system’s mesoscale structures. Identifying these features of the modeling context and the modeling decisions they influence enables the framework developed here to provide useful normative guidance for practicing multiscale modelers.

In addition, the distinctive roles these features play in multiscale modeling reveal several unique methodological, epistemological, and metaphysical questions surrounding multiscale modeling. Specifically, by examining the practical modeling decisions and epistemically limited contexts of scientific practice, the framework developed here identifies the following questions as essential to future philosophical research concerning multiscale modeling:

1. How should we measure degrees of relative autonomy?
2. How is multiscale modeling pragmatically constrained by the available models and data?
3. What does the success of a multiscale modeling strategy tell us about the metaphysical structure of reality?

While these questions are adjacent to traditional philosophy of science questions concerning relationships between features at different scales, they go well beyond traditional debates that have focused on identifying distinct ‘levels’ of reality, universally applicable hierarchical structures, or ‘in principle’ claims concerning reduction/emergence.

The paper will proceed as follows. In the following section, I briefly define multiscale modeling and mesoscale modeling before surveying the three case studies. I then use the features of those cases to construct a normative framework that identifies a range of conditions for success for various multiscale modeling techniques. Next, I use this framework to identify several lessons and novel questions that ought to guide future philosophical investigations into multiscale modeling.

Three case studies of biological multiscale modeling

At first glance, one might think that any time scientists construct multiple models for features at different scales, this qualifies as an instance of multiscale modeling. However, this definition is far too broad since it would include the widespread use of different models (or theories) to capture different phenomena or patterns that occur at different scales. What is distinctive about multiscale phenomena, and what makes them more challenging to model, is that the same phenomenon, or pattern, depends on features across a wide range of spatial/temporal scales. Moreover, multiscale modeling seeks to capture various kinds of mediating, feedback, and other complex relationships between features at different scales. Effectively modeling these inter-scale interactions is a key challenge for multiscale modelers. As some biological multiscale modelers put it, “defining the linkage between different scales poses a significant barrier to model development; in many cases, the link is bidirectional, meaning that higher- and lower-level variables, parameters, and functions characterizing the models are influenced by each other” (Deisboeck et al. 2011, 3). Therefore, while far from a complete definition, I propose that what makes a modeling approach genuinely multiscale is the use of one or more models to investigate a multiscale phenomenon, whose difference-making features range across several spatial/temporal scales, and that aims to capture certain important/relevant relationships between features at different scales.

Similarly, ‘mesoscale modeling’ might be thought to simply be any modeling approach that targets a scale(s) somewhere in between the smallest and largest scales of the system. But this definition won’t do for a number of reasons. For one thing, almost every instance of biological modeling would likely satisfy this definition since most biological models target living systems whose features occur in between the smallest physical scales and the scale of the overall system. More importantly, however, many modeling techniques target mesoscale features without considering any other scales of the system. While I suspect that a fair amount of mesoscale modeling is going on across the sciences, including all those cases would miss the interesting features that are distinctive of mesoscale modeling approaches. Specifically, while mesoscale modeling approaches start by identifying key mesoscale features (or parameters), representing those mesoscale features is typically a means to the end of mediating between features at smaller and larger scales of the system. Indeed, in contrast with top-down or bottom-up approaches, “Recently, there has been a growing interest in a ‘middle out’ approach, whereby the initial focus is on an intermediate scale that is gradually expanded to include both smaller and larger spatial scales” (Walker and Southgate 2009, 451). This is precisely why mesoscale modeling strategies are often referred to as ‘middle-out’ modeling strategies. Thus, in what follows, I use ‘mesoscale modeling’ and ‘middle-out modeling’ interchangeably to mean the use of modeling techniques that target mesoscale structures of the system and use those mesoscale features/parameters to mediate between features at larger (or slower) and smaller (or faster) scales of the system. With these rough characterizations of multiscale and mesoscale modeling in hand, we can now turn to some of the multiscale modeling strategies that are widely used in scientific practice.

Scale separation techniques: short-term and long-term responses to environmental CO2

While multiscale phenomena always involve some degree of interactions between features at different scales, often the patterns and processes that occur at particular scales are largely autonomous of what is occurring at other scales of the system. When this ‘separation of scales’ occurs, scientists can effectively model the multiscale phenomenon by using different modeling techniques designed for distinct scales (and types of processes) and keeping their scale-dependent models largely separate from one another. This can be done by running the models in parallel or at different time steps. This is particularly useful because most of the models that have been developed have a characteristic scale(s) for the processes they are intended to model (Meier-Schellersheim et al. 2009). By using different models for different scales, multiscale modelers can simply use the available stock of models to represent processes and features at their characteristic scales without having to worry too much about interactions with processes and features at other scales—though some minimal interactions between the models are sometimes still included.

As an example, Stanislaus Schymanski and colleagues (2015) use time-scale separation techniques to investigate the responses of vegetation to increased CO2 concentrations across multiple time scales. It is typically assumed that elevated atmospheric CO2 will produce reductions in plants’ stomatal conductance and thereby their leaf-scale water use—at least in the short term. However, this shorter timescale response often occurs in tandem with an increase in perennial vegetation cover that increases water use at longer timescales. In order to investigate these processes at different timescales, these modelers constructed a multiscale model that separated out medium-term and long-term processes and incorporated some minimal feedback loops between them. Specifically, these modelers tell us that “the present study investigates whether eCO2 might affect vegetation and the water balance differently in the medium and long term” (Schymanski et al. 2015, 2).

The multiscale model used in this study consists of a multilayer soil–water balance submodel that interacts with another submodel for root water uptake, which also interacts with a third submodel for tissue-water balance and leaf-gas exchange (Schymanski et al. 2015, 2). These submodels involve a variety of idealizing assumptions, e.g., within the soil–water balance submodel, the catchment is represented just as a block of soil because it makes parameterizing the model easier. Several additional idealizations are used in constructing the ‘overall’ multiscale model via combination of these submodels. For example, the canopy of vegetation is represented just as two ‘big leaves’: one of invariant size representing perennial vegetation and another of varying size representing seasonal vegetation. Moreover, these leaves are assumed to transmit no light, so no overlap between the two big leaves is allowed in the model. Finally, since it is a biological optimization model, it is also assumed that vegetation has co-evolved with its environment over a long period of time such that it is optimally adapted to its conditions. These modelers justify their idealizations by noting that these “simplifications were adopted to reduce computational burden in a model where optimal adaptation is computed using a large number of model runs ... we assume that the structure of the costs and benefits of the optimized vegetation properties is captured adequately despite the simplification” (Schymanski et al. 2015, 10). In other words, the idealizations greatly reduced the computational resources needed to run the multiscale model multiple times at different timescales.

In this model, optimization involves adapting numerous properties (or phenotypes) of the vegetation system at different time scales:

(1) Foliage projective cover and max. rooting depth of perennial plants (decades)

(2) Water-use strategies (decades)

(3) Foliage projective cover of seasonal plants (daily)

(4) Photosynthetic capacity and vertical fine root distributions (daily)

(5) Canopy conductance (hourly) (Schymanski et al. 2015, 3)

The model assumes that the vegetation system will optimize (or maximize) its ‘Net Carbon Profit’ (NCP) which is calculated as the net carbon acquired via photosynthesis minus the carbon spent on maintenance of the system. Consequently, the optimal strategy for the above suite of design variables is calculated by finding the set of properties that maximize the vegetation community’s NCP.

Medium-term adaptive responses were then calculated by holding fixed all parameters that changed on longer time scales and only allowing changes to the shorter (medium) time scale properties of the system. The long-term parameters held fixed in the medium-term optimization simulations are marked as ‘constant’ in Table 1. These results were then compared to the long-term optimization results, in which all the properties of the vegetation were allowed to vary over the 30-year simulation (Schymanski et al. 2015, 3).
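The two-step comparison just described (optimize with the slow properties frozen, then optimize with every property free) can be illustrated with a toy example. The sketch below is not Schymanski et al.'s model: the `net_carbon_profit` function, its coefficients, the two design variables, and the grid search are all hypothetical stand-ins chosen only to show the structure of the comparison.

```python
from itertools import product

def net_carbon_profit(cover, conductance, co2):
    """Toy NCP: carbon gain minus maintenance and water costs (illustrative only)."""
    gain = co2 * cover * conductance / (1.0 + conductance)
    maintenance = 0.3 * cover ** 2        # hypothetical cost of keeping foliage
    water_cost = 0.5 * cover * conductance  # hypothetical cost of water use
    return gain - maintenance - water_cost

# Candidate values 0.05, 0.10, ..., 1.00 for both design variables.
GRID = [i / 20 for i in range(1, 21)]

def optimize(co2, fixed_cover=None):
    """Grid search for the (cover, conductance) pair that maximizes NCP.

    If fixed_cover is given, the slow variable is frozen and only the
    fast variable (conductance) is allowed to adapt.
    """
    covers = [fixed_cover] if fixed_cover is not None else GRID
    return max(product(covers, GRID),
               key=lambda p: net_carbon_profit(p[0], p[1], co2))

# Vegetation assumed to be optimally adapted to baseline CO2.
baseline_cover, baseline_conductance = optimize(co2=1.0)

# Medium term: CO2 rises, slow property (cover) stays at its baseline value.
medium_term = optimize(co2=1.5, fixed_cover=baseline_cover)

# Long term: CO2 rises and every property is free to adapt.
long_term = optimize(co2=1.5)

print("medium-term (cover, conductance):", medium_term)
print("long-term   (cover, conductance):", long_term)
```

Because the long-term search ranges over a superset of the medium-term options, its optimum can never be worse. Whether the two optima differ in substance depends entirely on the cost structure, which is why the real model's cost and benefit assumptions matter so much.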

Comparing the results of these two sets of scale-separated simulations showed that medium-term and long-term adaptation differed with respect to drainage, total evapotranspiration, transpiration by perennial and seasonal vegetation, CO2 assimilation rate, foliage projective cover, and water-use strategy (Schymanski et al. 2015, 8). In short, different processes are involved at these different timescales and those processes give rise to different results concerning what is most adaptive for the vegetation system at those timescales. The multiscale modeling approach used here is to separate out those complex processes into distinct timescales and model them separately for purposes of comparing the outcomes of medium-term and long-term adaptation. As these modelers tell us, their multiscale modeling approach “separates responses to eCO2 likely occurring at different temporal scales” (Schymanski et al. 2015, 15).

This ‘scale-separation’ multiscale modeling strategy is successful in this context due to the system’s relatively high degree of separation of its features/processes into independent time scales; i.e. the processes occurring at different timescales of the system display a high degree of relative autonomy from each other. In addition, in this case the same (sub)models or averaged parameters (e.g., the overall averaged properties of the two big leaves) could be used for different (spatial) regions of the system because the scale-dependent features are relatively homogeneous throughout the system. That is, the features occurring at the separated time scales are relatively stable as we move to different spatial regions of the system. This homogeneity allows simple averaging (e.g. over the entire leaves) to capture most of the relevant (statistical) features of the system. Consequently, the success of scale-separation strategies depends on there being a relatively high degree of separation (or autonomy) between features at different scales and a relatively high degree of homogeneity with respect to the features at those separated scales.

A middle-out modeling strategy: representative volume elements for spider silk

While scale separation techniques are helpful when the system displays a relatively high degree of autonomy between scales and its scale-dependent features are largely homogeneous, in many systems there will be heterogeneous mesoscale structures that have important influences on what is happening at other scales. For example, in modeling cell–cell interactions, “the response of individual cells was influenced by the local microenvironment, leading to population heterogeneity. This heterogeneity was masked when data was averaged over the entire cell population” (Walker and Southgate 2009, 457). In this case, merely averaging over the entire cell population fails to capture the important heterogeneities in the cells’ microenvironments. This example illustrates two important lessons for multiscale modeling: (1) even when scales separate, there are often heterogeneous mesoscale structures that need to be accounted for and, (2) simple averaging often masks the influence of heterogeneous mesoscale structures on the overall behavior of the system (Batterman 2021). In contexts where the features at different scales are more heavily dependent on each other, or there are relevant heterogeneities (or variations) at the system’s mesoscales, “[An] emerging approach is the ‘middle out’ approach, which starts with an intermediate scale… that is gradually expanded to include both smaller and larger spatial scales.” (Dada and Mendes 2011, 87).

A commonly used middle-out modeling strategy is to develop an idealized representative volume element (RVE) of the system at some mesoscale that encodes information from smaller scales as well as constraints from the most macroscales of the system. For example, rather than modeling individual atoms, biologists often select a mesoscale collection of neighboring atoms as the representational target of their model: “The [quasi-continuum] method consists of coarse-graining an atomistic domain by selecting a small subset of the total number of atoms, called representative atoms.” (Dada and Mendes 2011, 88). These ‘representative atoms’ are not representations of the system’s actual atoms, but are instead a mesoscale coarse-grained statistical average of a small neighborhood of atoms and their key structural interactions. A similar approach has been adopted by some modelers of COVID-19: “The central idea behind our rapid modeling approach for mesoscale models is to… model structural characteristics on a small representative collection of structural elements, which are then assembled into the entire cellular or viral system” (Nguyen et al. 2021, 723, my emphasis).

As a more detailed example of this RVE approach, we can look at Gwonchan Yoon et al.’s (2008) modeling of spider silk. Spider silk possesses super-elasticity and yield strength comparable to high-tensile steel that enable the spider to turn the kinetic energy of flying prey into heat dissipation throughout the silk (Yoon et al. 2008, 873). Although bottom-up characterizations of these proteins have made some key advancements, “microscopic characterization such as protein unfolding mechanics may not be sufficient to understand the remarkable mechanical properties of biological materials” (Yoon et al. 2008, 874). One limitation of bottom-up methods has to do with the scale of the experimental data: “the time scale available for [molecular dynamic models] is not relevant to the time scale for [atomic force microscopy] experiments of protein unfolding mechanics” (Yoon et al. 2008, 874). Another issue is that the number of factors involved at the smallest scales of the system means that atomistic or molecular dynamic simulation is typically “computationally ineffective” (Yoon et al. 2008, 876). In short, modeling the system bottom-up from the most microscopic scale is computationally intractable and is difficult to compare with the measurable macroscale parameters.

Instead of modeling from the bottom-up, Yoon et al. use a mesoscale RVE for biological proteins by coarse-graining over a number of molecules and protein crystals (Fig. 1).

Schematic illustration of biological protein materials composed of protein crystals. a Computer illustration of a fiber, made of protein crystals, under mechanical loading. b Several protein crystal lattices constituting the biological fiber. c A unit cell (RVE) containing a protein crystal. (Yoon et al. 2008, 875)

Generally, this RVE method is one in which “the domain is subdivided in some manner to give an average response for the RVE. The average response is then used in a macroscopic model over the entire domain.” (Dallon 2010, 25). Yoon et al.’s stated goal is to use their RVE model of mesoscale structures of biological proteins to account for “macroscopic mechanical properties such as Young’s modulus” (Yoon et al. 2008, 874). To do this for spider silk and other biological proteins, they “consider a representative volume element (RVE) containing protein crystals in a given space group for computing the virial stress of RVE in response to applied macroscopic constant strain” (Yoon et al. 2008, 875).

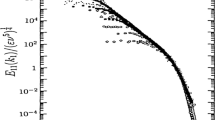

The mesoscale RVE model system is then applied (repeatedly) across the entire domain of the system; in this case, the entire length of the spider silk (Yoon et al. 2008, 875). The resulting model system is then displaced (or stretched) and allowed to return to equilibrium by minimizing energy within the system. The process is repeated until the material has been stretched to a prescribed strain/length. The relationship between the applied strains and the resulting stresses can then be plotted as stress–strain curves for different materials (Fig. 2) and used to calculate Young’s modulus for the material at the macroscopic scale. These simulated values for Young’s modulus can then be compared directly against the available macroscale experimental data/measurements.
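As a rough illustration of the last step, Young's modulus can be read off a stress–strain curve as the slope of its linear (elastic) portion. The sketch below fits that slope by ordinary least squares. The data points and the `youngs_modulus` helper are hypothetical and are not taken from Yoon et al.; the sketch also assumes that all supplied points lie in the linear regime.

```python
def youngs_modulus(strains, stresses):
    """Least-squares slope of stress against strain.

    Assumes every point lies in the linear elastic regime, so the
    slope of the fitted line estimates Young's modulus.
    """
    n = len(strains)
    mean_strain = sum(strains) / n
    mean_stress = sum(stresses) / n
    covariance = sum((e - mean_strain) * (s - mean_stress)
                     for e, s in zip(strains, stresses))
    variance = sum((e - mean_strain) ** 2 for e in strains)
    return covariance / variance

# Hypothetical simulated curve: strain is dimensionless, stress in GPa.
strains = [0.00, 0.01, 0.02, 0.03, 0.04]
stresses = [0.0, 0.1, 0.2, 0.3, 0.4]

modulus = youngs_modulus(strains, stresses)
print(f"Estimated Young's modulus: {modulus:.2f} GPa")
```

The resulting single number is what makes the mesoscale model directly comparable with bench measurements, since the same slope can be extracted from an experimental curve.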

Stress–strain curves computed from the mesoscopic RVE model for biological proteins. (Yoon et al. 2008, 875)

After validating their models against the available measurements, these modelers then used their RVE model(s) to investigate specific interactions between topological mesoscale structures and the measurable macroscopic material properties. To do so, they introduced a dimensionless quantity Q that represents the degree of folding topology for a protein (Yoon et al. 2008, 878). This degree of fold is calculated as the number of contacts within a specific cut-off distance divided by the maximum possible number of contacts. Their results showed that “the degree-of-fold, Q, is highly correlated with Young’s modulus” (Yoon et al. 2008, 878). In other words, this mesoscale topological property of the RVE (that involves relationships among a large group of molecules) directly influences measurable macroscopic (i.e. bulk) parameters of the biological protein. They also found that another mesoscale structure relevant to Young’s modulus is whether the bonds between atoms are configured in serial or parallel (Yoon et al. 2008, 879). In short, their RVE model allowed them “to further understand the structure–property relation for protein materials made of large protein crystal which may be computationally inaccessible with atomistic simulation” (Yoon et al. 2008, 880). Indeed, by investigating relevant mesoscale structures via their RVE model, these modelers were able to identify relationships between mesoscale structures and measurable macroscale properties of the system that would have been difficult, if not impossible, to identify/model from the most microscopic (or averaged macroscopic) scales of the system.
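The degree-of-fold calculation described above can be sketched in a few lines. Two choices in this sketch are assumptions rather than details from the source: the "maximum possible number of contacts" is taken to be the number of distinct pairs of sites, and every pair is eligible to count as a contact. The coordinates and the cut-off value are likewise hypothetical.

```python
from itertools import combinations
from math import dist

def degree_of_fold(coords, cutoff):
    """Fraction of site pairs that lie within `cutoff` of each other.

    Assumption: the maximum possible number of contacts is the number
    of distinct pairs, N * (N - 1) / 2.
    """
    pairs = list(combinations(coords, 2))
    contacts = sum(1 for a, b in pairs if dist(a, b) <= cutoff)
    return contacts / len(pairs)

# Hypothetical structure: four sites at the corners of a unit square.
square = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0), (0.0, 1.0, 0.0)]

# With a cut-off of 1.2, the four edges count as contacts but the two
# diagonals (length about 1.41) do not.
q = degree_of_fold(square, cutoff=1.2)
print(f"Q = {q:.3f}")
```

Because Q is a ratio, it is dimensionless and can be compared across proteins of different sizes, which is what allows it to be correlated with a bulk property such as Young's modulus.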

This RVE middle-out modeling strategy is particularly fruitful when there are relevant interactions between the mesoscale at which the RVE is constructed and measurable features (or parameters) at more macroscales. That is, this technique works well even when there is much less separation or autonomy between the scales of the system. However, as with scale-separation techniques, this approach’s frequent use of simple averaging (over the RVE) often relies on the assumption that the system’s features and interactions are relatively homogeneous across different subregions of the mesoscale RVE (and the overall system). When the system’s meso- or micro- scale structures and interactions are more heterogeneous, more sophisticated coarse-graining (or upscaling) techniques will be required that are sensitive to changes in those meso- and micro- structures. Finally, it is important to emphasize that the aim of these modelers is to recover measurable macroscopic properties/parameters of the system (e.g., Young’s modulus) and investigate their dependence on certain mesoscale features. Indeed, the RVE approach is particularly well-suited for such attempts to discover/recover measurable macroscale properties of the system and investigate their dependence on certain mesoscale features found at the scale of the RVE.

Modeling heterogeneous ecological landscapes with homogenization techniques

Finally, a common multiscale modeling strategy is to use homogenization techniques (Batterman 2021). In these cases, modelers typically begin by building a multiscale model that captures various structures that are heterogeneous at the mesoscales of the system. The modelers then use a homogenization transformation (or scaling functions) to construct an idealized model of the system at some larger scale that represents the system as if it were homogeneous across certain subregions of the system. This process is then repeated until a homogenized model of the overall system is constructed at the system’s most macroscale. This technique is not completely independent of the above RVE strategy since one essentially constructs an RVE at a mesoscale from which to begin the homogenization transformation. However, rather than using a single RVE at a particular mesoscale and directly scaling (all the way) up to macroscale properties, this middle-out modeling strategy recursively applies a homogenization transformation at progressively larger scales so as to: (1) capture the relevant structures (or correlations) across multiple mesoscales of the system and (2) eliminate most of the features that are irrelevant to the system’s macroscale behavior across those scales. Consequently, homogenization is particularly useful when scientific modelers do not know at which mesoscale the relevant (or dominant) structures occur or how to directly scale up from those mesoscale features to the system’s most macroscale. Therefore, what modelers know about which mesoscales are relevant and how features at that scale(s) relate to the macroscale features of interest will determine whether constructing a (single) RVE at a particular mesoscale or recursively applying a homogenization transformation across multiple mesoscales is more likely to be fruitful.
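The recursive structure of this strategy can be made concrete with a deliberately simple one-dimensional sketch. Everything in it is an assumption made for illustration: the coefficient values are invented, the blocks are pairs of neighboring cells, and the local transformation is a harmonic mean, which merely stands in for whatever homogenization transformation a given model calls for.

```python
def harmonic_mean(block):
    """Local transformation applied to one block (a placeholder choice)."""
    return len(block) / sum(1.0 / v for v in block)

def coarsen(values, block_size=2):
    """Replace each block of neighboring cells with one effective value."""
    return [harmonic_mean(values[i:i + block_size])
            for i in range(0, len(values), block_size)]

def homogenize(values, block_size=2):
    """Apply the local transformation at progressively larger scales.

    Returns every intermediate level, from the finest grid down to a
    single effective coefficient for the whole domain.
    """
    levels = [list(values)]
    while len(levels[-1]) > 1:
        levels.append(coarsen(levels[-1], block_size))
    return levels

# Hypothetical fine-scale coefficients on eight neighboring cells.
fine = [2.0, 0.5, 1.0, 1.0, 0.5, 0.5, 2.0, 2.0]

levels = homogenize(fine)
for depth, level in enumerate(levels):
    print(f"level {depth}: {[round(v, 4) for v in level]}")
```

Keeping the intermediate levels, rather than only the final value, reflects the point that the relevant structure may sit at any of several mesoscales, so a modeler can inspect each level before deciding how far to coarse-grain.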

As an example, Garlick et al. (2011) use homogenization techniques to model the spread of chronic wasting disease (CWD) in mule deer across heterogeneous landscapes in the La Sal Mountains of Utah. Rather than merely attempting to model the system at the most macroscale or a single mesoscale, a clearly stated goal for these modelers is to “determine the impact of small scale (10–100 m) habitat variability on large scale (10–100 km) movement…the procedure generates asymptotic equations for solutions on the large scale with parameters defined by small-scale variation” (Garlick et al. 2011, 2088). In other words, the goal is to generate equations for the macroscale dynamics of the system that show how they depend on certain structural variations at smaller scales. This requires much more sophisticated modeling techniques than merely averaging over the smaller scale details of the system. Indeed, “Despite numerous suggestions in the literature to the contrary, many forms of aggregation in spatio-temporal statistical modeling projects still rely on over-simplified and non-scientific spatial and/or temporal scaling methods (i.e., arithmetic averaging) without regard for the inherent properties or dynamic features of the process under study” (Hooten et al. 2013, 406). Instead of simple averaging, these cases call for a multiscale modeling approach that takes into account the relevant structural variations at smaller scales and appropriately scales them up into macroscale equations of the system. Homogenization techniques are designed to do precisely that.

Like the cases above, implementing this homogenization approach involves several idealizing assumptions. For example, the model assumes that deaths from causes other than CWD are balanced by births and ignores indirect ways that the disease might be spread besides direct contact between infected individuals and healthy individuals. As justification for these idealizing assumptions, these modelers tell us that they “will ignore other modeling considerations such as seasonal and sex differences in movement and age structure of the disease for purposes of developing the homogenization approach” (Garlick et al. 2011, 2092). In other words, these idealizations are introduced as a means to applying homogenization methods given the modelers’ aim of deriving tractable macroscale PDEs that incorporate variations in the heterogeneous structures and correlations at multiple mesoscales of the system.

When modeling the spread of disease, the most common approach has been to construct a diffusion model where there is some constant rate of movement or spread represented by a diffusion parameter. The general problem is that “most animals do not diffuse like particles” (Garlick et al. 2011, 2089). More specifically, in biological contexts, diffusion models almost always assume that the diffusion of organisms occurs over a homogeneous landscape to allow for simple averaging. However, in reality, “[Organisms] are greatly influenced by habitat type, moving slowly through landscapes that provide needed resources and more quickly through inhospitable regions and are therefore much more likely to be found some places than others.” (Garlick et al. 2011, 2089). Simply averaging over the system’s smaller scale details ignores these relevant heterogeneities at mesoscales. Therefore, the first step in capturing some of these mesoscale structures is to move to a model of ‘Ecological’ diffusion that replaces the constant diffusion coefficient with a motility parameter, μ, that can be different for different habitats and boundaries between them.

While this is a step in the right direction, diffusion models with varying coefficients are “daunting to implement in a model, particularly at large spatial scales” (Garlick et al. 2011, 2090). As a result, these modelers implement a homogenization technique that “allows one to approximate PDEs that have rapidly-varying coefficients with similar ‘homogenized’ PDEs having average coefficients. The primary advantage is that the ‘homogenized’ PDE is framed in the large scales in space and time, with the influence of the small scale variability in the averages” (Garlick et al. 2011, 2090). The key thing to notice is that this technique captures the influence of heterogeneous structures at smaller scales while also being computationally tractable at larger spatial scales. Indeed, the goal is to arrive at tractable PDEs at the most macroscale, “while preserving the effects of fine scale variability in the diffusion coefficients” (Garlick et al. 2011, 2091).

The next step is to divide the habitat into different habitat types and estimate a motility value for each type of habitat. In order to use the available experimental data from the U.S. Geological Survey Landcover Institute, these modelers divide the habitat up at two different scales: a smaller scale of 30 × 30 m blocks and a larger scale of 9 × 9 km blocks. This separation of scales allows for the application of homogenization by introducing an order parameter, \(\epsilon\), that represents the ratio between the smaller and larger scales of the system. For the above scales this means that \(\epsilon = 30/9000 = 1/300\). If we let x be the large spatial scale associated with the slow time scale, t, then the small spatial scale y is defined by the scaling equation \(y = x/\epsilon\). This smaller spatial scale is associated with a fast time scale via the scaling equation \(\tau = t/\epsilon^{2}\). The motility function then becomes a function of both spatial scales: μ = μ (x, y). Using the method of multiple scales and applying the scaling functions to each of the scales results in an infection equation that is a function of the large (and slow) and small (and fast) spatial and temporal scales of the system t, τ, x, and y. The terms of this equation are then averaged over a representative mesoscale cell (or area) for a particular type of landscape. Importantly, this is “not the average value of the function over the entire domain. It is a local average for the current position of interest, and the region of integration depends on the (changing) local cell size” (Garlick et al. 2011, 2095). Yet, even after this local homogenization procedure is recursively applied at progressively larger scales of the system, the smaller scale variability of habitats is preserved in the leading order solution:
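For readers who want the scalings in one place, they can be collected as follows. This is a sketch of the standard method-of-multiple-scales bookkeeping, not a reproduction of Garlick et al.'s derivation. It assumes the usual diffusive scaling in which the fast time is \(\tau = t/\epsilon^{2}\), and it treats the large and small variables as independent, so that derivatives split by the chain rule.

```latex
\[
\begin{aligned}
\epsilon &= \frac{30\ \text{m}}{9000\ \text{m}} = \frac{1}{300},
  \qquad y = \frac{x}{\epsilon},
  \qquad \tau = \frac{t}{\epsilon^{2}}, \\[4pt]
\mu &= \mu(x, y), \\[4pt]
\frac{\partial}{\partial x} &\;\longrightarrow\;
  \frac{\partial}{\partial x} + \frac{1}{\epsilon}\,\frac{\partial}{\partial y},
  \qquad
\frac{\partial}{\partial t} \;\longrightarrow\;
  \frac{\partial}{\partial t} + \frac{1}{\epsilon^{2}}\,\frac{\partial}{\partial \tau}.
\end{aligned}
\]
```

With \(\epsilon = 1/300\), the factors \(1/\epsilon = 300\) and \(1/\epsilon^{2} = 90{,}000\) make the small-scale and fast-time derivatives formally dominant. This is what allows the terms of the equation to be sorted by powers of \(\epsilon\) and the leading-order behavior to be isolated.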

Indeed, as Garlick et al. repeatedly remind us, “the homogenized solution reflects small scale variability” (2011, 2096). This allows the macroscale homogenized model to be responsive to variation in the various heterogeneous meso- and micro-scale structures and correlations of the system.
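The multiple-scales construction described above can be sketched in a few lines. What follows is a minimal one-dimensional illustration, assuming an ecological diffusion equation of the form \(\partial u/\partial t = \partial^{2}(\mu u)/\partial x^{2}\) with no growth or infection terms. The symbols \(u\), \(c\), and \(\bar{\mu}\) are introduced here for illustration only, and the full model in Garlick et al. (2011) may differ in its details. The scale ratio and the fast variables are

\[
\epsilon = \frac{30\ \text{m}}{9000\ \text{m}} = \frac{1}{300}, \qquad y = \frac{x}{\epsilon}, \qquad \tau = \frac{t}{\epsilon^{2}},
\]

so that derivatives split into slow and fast parts:

\[
\frac{\partial}{\partial x} \to \frac{\partial}{\partial x} + \frac{1}{\epsilon}\frac{\partial}{\partial y}, \qquad
\frac{\partial}{\partial t} \to \frac{\partial}{\partial t} + \frac{1}{\epsilon^{2}}\frac{\partial}{\partial \tau}.
\]

Writing \(u = u_{0} + \epsilon u_{1} + \epsilon^{2} u_{2} + \dots\) and collecting the terms of order \(\epsilon^{-2}\), once the fast transients in \(\tau\) have decayed, gives

\[
\frac{\partial^{2}}{\partial y^{2}}\bigl(\mu(x,y)\,u_{0}\bigr) = 0
\quad\Longrightarrow\quad
u_{0}(x,y,t) = \frac{c(x,t)}{\mu(x,y)} .
\]

Averaging the order \(\epsilon^{0}\) terms over one local cell in \(y\) (assuming the solution is periodic or bounded in \(y\)) then yields a large-scale equation for \(c\):

\[
\frac{\partial c}{\partial t} = \bar{\mu}(x)\,\frac{\partial^{2} c}{\partial x^{2}},
\qquad
\bar{\mu}(x) = \left\langle \frac{1}{\mu(x,y)} \right\rangle_{y}^{-1} .
\]

On this sketch, the small-scale variability survives in two places: in the factor \(1/\mu(x,y)\) of the leading order solution, and in the local harmonic average \(\bar{\mu}(x)\), which weights low-motility habitat more heavily than a simple arithmetic average would.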

The resulting homogenization model could then be applied to the specific case of CWD in mule deer by using motility coefficients for the different habitats empirically derived from the Utah Division of Wildlife Resources’ GPS movement data. This resulted in the following motility values assigned for different landscapes (Garlick et al. 2011, 2102):

| Land cover type | Estimated μ (km²/day) |
|---|---|
| Rock | 2.01 |
| Scrub | 0.97 |
| Conifer forest | 0.66 |
| Deciduous forest | 0.65 |
| Mixed forest | 0.52 |
| Pasture | 0.85 |
| Cultivated crops | 0.86 |
| Grassland | 0.51 |
| Developed (low intensity) | 0.29 |
| Developed (medium intensity) | 0.30 |
| Developed (open space) | 0.70 |
| Woody wetlands | 0.55 |
| Open water | 3.02 |
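To see why the choice of local average matters, the following sketch compares a simple arithmetic average with a harmonic average of the tabulated motility values for a single large-scale block. It is an illustration only: the use of the harmonic mean assumes a diffusion model of the kind that is commonly homogenized this way, and the land cover fractions for the example block are hypothetical, not data from Garlick et al. (2011).

```python
# Motility estimates in km^2/day, copied from the table above.
MOTILITY = {
    "Rock": 2.01,
    "Scrub": 0.97,
    "Conifer forest": 0.66,
    "Deciduous forest": 0.65,
    "Mixed forest": 0.52,
    "Pasture": 0.85,
    "Cultivated crops": 0.86,
    "Grassland": 0.51,
    "Developed (low intensity)": 0.29,
    "Developed (medium intensity)": 0.30,
    "Developed (open space)": 0.70,
    "Woody wetlands": 0.55,
    "Open water": 3.02,
}


def arithmetic_mean(values, weights):
    """Area-weighted simple average of the values."""
    total = sum(weights)
    return sum(v * w for v, w in zip(values, weights)) / total


def harmonic_mean(values, weights):
    """Area-weighted harmonic average, i.e. 1 / <1/value>."""
    total = sum(weights)
    return total / sum(w / v for v, w in zip(values, weights))


def block_averages(cover_fractions):
    """Return (arithmetic, harmonic) motility for one large-scale block."""
    names = list(cover_fractions)
    mus = [MOTILITY[name] for name in names]
    fractions = [cover_fractions[name] for name in names]
    return arithmetic_mean(mus, fractions), harmonic_mean(mus, fractions)


# Hypothetical composition of one 9 x 9 km block (assumed, not from the source).
example_block = {
    "Scrub": 0.5,
    "Open water": 0.2,
    "Developed (low intensity)": 0.3,
}

if __name__ == "__main__":
    simple, homogenized = block_averages(example_block)
    print(f"arithmetic mean: {simple:.3f} km^2/day")
    print(f"harmonic mean:   {homogenized:.3f} km^2/day")
```

For any mixed block the harmonic average is lower than the arithmetic one, because it is pulled toward the low-motility habitat. This is one simple way in which a local average can remain sensitive to mesoscale heterogeneity that simple averaging washes out.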

The homogenized model yielded results very similar to those obtained by solving the original heterogeneous model, in about 1/42000 of the time. So, not only is the homogenized model able to capture the relevant heterogeneous structures across multiple mesoscales of the system, but it is far more computationally efficient than a ‘bottom-up’ dynamical model that aims to represent processes at the smallest scales of the system (many of which are irrelevant to its macroscale behavior).

This type of homogenization approach is well-suited for situations in which the aim is to construct macroscale dynamical equations (e.g., PDEs) for the system that are sensitive to variations in heterogeneous structures across multiple meso- and micro-scales. This strategy is also most applicable when the macroscales of the system are somewhat autonomous of the smaller scale features of the system (i.e. not all of the smaller scale features are relevant), but the macroscale dynamics of the system still depend on some key features/parameters/structures at multiple meso- and micro-scales. In other words, because homogenization techniques are sensitive to variability in some key (but not all) smaller scale features, this multiscale modeling technique is effective in cases where the degree of dependence on those smaller scale structures renders scale-separation techniques ineffective. Moreover, rather than using simple averaging that would miss relevant variations at mesoscales, homogenization techniques typically use more nuanced coarse-graining and scaling transformations that can more systematically capture variation in features across multiple mesoscales of the system.

A framework for selecting effective multiscale modeling strategies

I now use these cases to extract several dimensions along which multiscale phenomena and the modeling techniques used to study them can differ. I focus on these particular features because they have direct implications for the justification for certain modeling decisions; i.e., these features have normative significance because they influence which modeling approach(es) is most likely to be successful in a given modeling context. Most of these features come in degrees and so these should not be seen as binary choices, but, rather, as features of the target system(s), model(s), and modeler’s aims that will impact the degree of justification (or validation) for using a particular multiscale modeling strategy. In addition, while these features are drawn from biological cases, these techniques can be found in numerous other scientific disciplines as well (e.g. see Batterman 2021 for some cases of RVE and homogenization in physics). As a result, the features/dimensions/questions identified by this framework can be generalized to guide the selection of multiscale modeling techniques in other areas of science.

Degrees of relative autonomy and scale separation

Most philosophical debates concerning reduction, emergence, and the relationships between models/theories at different levels/scales have been framed in terms of complete dependence or autonomy between two scales (or levels) of the system. For example, much ink has been spilt arguing that there is/is not a metaphysical dependence between levels/scales in which features at higher-levels are completely determined by features at lower-levels; e.g., via some kind of supervenience relationship. Alternatively, anti-reductionists have often argued that explanations that appeal to emergent features found at higher levels/scales are completely autonomous/independent of explanations (or features) at smaller levels/scales. In contrast with this all-or-nothing framing, one of the lessons illustrated by the above cases is that autonomy or dependence between features at different scales almost always comes in degrees. So instead of talking about complete separations of scales or complete dependence/independence of one scale with respect to another, we instead need to focus on the relative degree(s) of autonomy between features at different scales.

As we have seen, the target system’s degree of autonomy between features at different scales directly impacts which multiscale modeling techniques are most likely to be successful. Specifically, scale-separation techniques are most likely to be successful when there is a relatively high degree of autonomy between features at different scales. In contrast, in cases where the macroscale features of interest are autonomous of many features at other scales but are also dependent on a few key features/parameters at smaller scales, scale-separation techniques are less likely to succeed. Fortunately, when scale-separation techniques fail, often RVEs and homogenization will be better able to capture the relationships between the relevant mesoscale structures and more macroscale features of the system. In addition, if the macroscale features of the system depend primarily on features at a particular mesoscale (or a small range of mesoscales), RVE techniques that focus on that mesoscale will likely be very effective. When the macroscale phenomena of interest depend on meso- and micro-scale features across a wider range of scales, homogenization techniques that track features across recursively larger (or longer) scales will typically be more fruitful. In sum, what the above cases illustrate is that, rather than attempting to determine if some macroscale feature/process is completely ‘emergent’ or can be directly ‘reduced’ to features/processes at smaller scales, it is more beneficial for practicing multiscale modelers to determine which macroscale properties depend on features at more microscales, and in what ways, and which macroscale properties are stable/autonomous of changes at more microscales (Rice 2021). Consequently, the selection of a multiscale modeling technique needs to consider the degree of relative autonomy between various scales of the system.

Measurable macroscale parameters vs. macroscale dynamical equations

Another crucial difference between these cases is the type of representation they aim to produce and the modelers’ purposes for those representations. For example, although both the RVE and homogenization cases apply middle-out modeling strategies, the former case aims to construct a model that adequately captures certain bulk macroscopic properties/parameters of the materials that can be directly compared with the available experimental data, whereas the latter case aims to construct dynamical equations for the most macroscale evolution of the system. Due to this difference in modeling goals, in the RVE case, the modelers begin from a model at a particular mesoscale and directly ‘scale up’ to determine the measurable macroscale properties of the material. This technique is largely successful because these measurable properties at the macroscale (e.g., Young’s modulus) are assumed to be stable/static features of the system and are known to depend on features at a particular mesoscale(s) that is represented within the RVE. In contrast, in the homogenization case, the modelers begin with dynamical equations at a particular mesoscale and then repeatedly apply homogenization transformations at progressively larger scales that preserve the relevant mesoscale structures/variations at each scale and eliminate other irrelevant degrees of freedom. This allows for the construction of a dynamical model that describes the evolution of the system over time and is sensitive to changes at a wide range of meso- and micro-scales of the system. Thus, whether the aim is to recover measurable macroscale bulk properties or dynamically model the evolution of the system will impact which of these middle-out modeling strategies ought to be adopted.

Direct scaling and mediating mesoscales

Philosophical discussions of inter-theory or inter-level relations have primarily sought to discover direct relations via the taking of limits or scaling all the way up from the smallest to the largest scales of the system. However, the last two examples illustrate how indirect relationships that are mediated by mesoscale structures and properties are often the key to bridging between different scales of the system (Batterman 2021). Moreover, we have seen that the use of RVEs and homogenization techniques typically requires that the mesoscale structures of the system be incorporated into the model in the right way so as to capture the important interactions, correlations, and properties at those mesoscales.

For example, choosing the right mesoscale at which to construct an RVE and using appropriate scaling techniques is essential to the success of RVE approaches. Similarly, in the homogenization case, merely describing the system as homogeneous throughout (e.g. via simple averaging) would fail to capture the relevant variations in structures at mesoscales that impact the overall behavior of the system. Only by choosing the correct (or best) RVEs and homogenization transformations will the resulting multiscale model successfully capture the relevant mesoscale structures and the way they mediate relationships between features at larger and smaller scales. As a result, the selection of a multiscale modeling technique needs to consider what kinds of direct and indirect scaling relations need to be captured rather than assuming that the macroscale representation can be derived directly from the available microscale descriptions of the system.

Moreover, these questions about direct scaling versus the need for mediating mesoscale parameters can be recursively asked about any two distinct scales of the system. RVE techniques will often be most useful when we can identify a single best mesoscale with which to mediate between smaller and larger scales of the system. However, in other cases there will be parameters across multiple mesoscales of the system that are necessary to mediate the relationships between the most micro and macro scales of the system. In these cases, a more effective strategy is to use homogenization techniques that systematically incorporate relevant structures and correlations from numerous mesoscales of the system.

Homogeneous vs. heterogeneous mesoscale structures

Finally, as we saw in multiple examples, another crucial feature of the system that determines the success of the chosen multiscale modeling strategy is whether the system is relatively homogeneous or heterogeneous in its micro- and meso-scale properties and structures. When the system is relatively homogeneous, scale-separation techniques or simple averaging over an RVE are far more likely to be adequate. However, when there are relevant heterogeneities at the micro- and meso-scales of the system, multiscale modelers ought to make use of coarse-graining techniques or scaling functions that are sensitive to changes in those smaller scale structures. In particular, homogenization techniques are explicitly designed to incorporate relevant variations from across the system’s mesoscales into the parameters of the macroscale model. As a result, deciding which multiscale modeling techniques will be most fruitful requires more than just identifying which features across which scales are relevant for the phenomenon of interest. That is, successful multiscale modeling requires more than just identifying the difference-making features of the system and the scales at which they occur. In addition, the choice of multiscale modeling approach needs to be sensitive to the degree of variability of the relevant mesoscale features across space and time and the influence of that variability on the system’s macroscale features (or patterns).

Tailoring multiscale modeling techniques to specific contexts

I now combine these features into a normative framework that makes explicit how they ought to influence decisions about which multiscale modeling technique to use in different modeling contexts. Specifically, I argue that decisions about which multiscale modeling technique to employ in a particular context ought to be guided by the following questions:

(1) What degree of relative autonomy do the macroscale features of the system have from features at more microscales?

(2) Is the aim of the scientific modelers to recover (static) measurable macroscale parameters or to construct a dynamical model of the evolution of the system?

(3) Are there direct scaling relationships between the micro- and macro-scales of the system, and, if not, how many intermediate mesoscales are involved in mediating between the different scales of the system?

(4) Are the relevant mesoscale structures relatively homogeneous or is there considerable variability in those features across different regions or times?

When considered collectively and weighed against each other, these features/dimensions of the target system(s), modeling aims, and available modeling strategies can provide a good deal of normative (though certainly fallible) guidance for determining the degree of justification for using different multiscale modeling techniques in different modeling situations. Consequently, the above framework can help practicing multiscale modelers tailor their multiscale modeling techniques to specific modeling contexts.
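One way to make the four questions concrete is the toy sketch below, which encodes rough answers to them and returns a first-pass suggestion. Everything in it is an assumption made for illustration: the numeric 0-to-1 scales, the thresholds, and the names `ModelingContext` and `suggest_technique` are hypothetical. The framework itself treats these features as matters of degree to be weighed against each other, which a hard-coded rule can only approximate.

```python
from dataclasses import dataclass


@dataclass
class ModelingContext:
    """Rough answers to the four questions; all scales are hypothetical."""
    autonomy: float            # (1) 0 = fully dependent, 1 = fully autonomous
    wants_dynamics: bool       # (2) True: dynamical model; False: static parameters
    mediating_mesoscales: int  # (3) 0 means direct micro-to-macro scaling
    heterogeneity: float       # (4) 0 = homogeneous, 1 = highly variable


def suggest_technique(ctx, high=0.8, low=0.2):
    """Return a first-pass suggestion; the thresholds are arbitrary placeholders."""
    # High autonomy, direct scaling, and little heterogeneity favor scale separation.
    if (ctx.autonomy >= high
            and ctx.mediating_mesoscales == 0
            and ctx.heterogeneity <= low):
        return "scale separation"
    # Static macroscale parameters mediated by at most one mesoscale favor an RVE.
    if not ctx.wants_dynamics and ctx.mediating_mesoscales <= 1:
        return "RVE"
    # Dynamics, several mediating mesoscales, or strong heterogeneity favor homogenization.
    return "homogenization"


if __name__ == "__main__":
    # Hypothetical contexts, loosely modeled on the kinds of cases discussed above.
    bulk_material = ModelingContext(0.5, False, 1, 0.6)
    disease_spread = ModelingContext(0.5, True, 3, 0.8)
    print(suggest_technique(bulk_material))
    print(suggest_technique(disease_spread))
```

The ordering of the checks is itself a design choice: it treats scale separation as the default only when all of the relevant conditions hold, which reflects the point that fallible guidance of this kind should be revisited as more is learned about the system.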

Lessons and questions for future investigations into multiscale modeling

I now use the above framework to draw some more general philosophical lessons and show that multiscale modeling raises a number of interesting and novel methodological, metaphysical and epistemological questions that deserve further philosophical investigation.

Multiscale modeling is a diverse toolkit of strategies that require independent evaluation

One of the crucial lessons of the above discussion is that there is a wide range of different techniques under the umbrellas of ‘multiscale modeling’ and ‘mesoscale modeling’ that deserve separate evaluation and analysis. Moreover, the justification of those techniques must be evaluated with respect to particular modelers’ purposes/aims (Parker 2019; Weisberg 2013) and take into consideration various features of the model’s target system(s) (Batterman 2021). What we have seen is that multiscale modeling is a diverse toolkit of modeling strategies whose success/failure is not dictated merely by mapping one’s model onto some kind of ontological hierarchy or mereological structuring of reality; indeed, most of these techniques involve substantial idealization. Instead, these multiscale modeling techniques are justified by being pragmatically useful for specific modeling aims and responsive to the relevant features of the system(s) across multiple spatial/temporal scales.

What these cases also illustrate is that there are multiple kinds of inter-scale relationships found in complex systems with different modeling approaches being better able to capture different types of relationships. As a result, no single metaphysical construction of reality (e.g. the layer cake or hierarchical organization) nor modeling approach ought to be privileged across all contexts. Unfortunately, as Alisa Bokulich notes, “what has not been adequately appreciated is that there are many different types of multiscale behavior in nature—involving different spatial and temporal dependencies between scales—which require fundamentally different kinds of multiscale models” (2021, 14171). Thus, rather than just a negative thesis about failures of reductionism or hierarchical/mereological layers, detailed investigation of multiscale modeling reveals two positive theses about our world and scientific practice: (1) the types of relationships between scales are extremely diverse and (2) understanding them requires a plethora of different multiscale modeling techniques tailored to specific modeling contexts/purposes.

Investigating this plurality of scaling (or structural) relationships and multiscale modeling approaches will help move philosophical discussions away from sweeping generalizations about the ontological structure of reality or the viability of reductionism (across the board) towards a more nuanced understanding of the variety of inter-scale relationships informed by the various multiscale modeling techniques found in scientific practice.

Simple averaging often misses the relevant heterogeneous mesoscale structures

These cases also show that using simple arithmetic averaging to determine macroscale properties or parameters will often miss the key heterogeneities that occur at smaller scales. Robert Batterman (2021) has recently described a similar kind of case in materials science. In Batterman’s case, “upon performing the simple volume averaging we will conclude that the material is much less stiff than it actually is. This is because that averaging just takes the volume fraction into account and ignores the fact that topologically the inclusions are disconnected and the matrix is connected” (2021, 92). As Batterman argues, this example “demonstrates the failure of limiting volume averaging strategies for any heterogeneous mixture. Once one recognizes that most upscaling problems involve complex systems with heterogeneous structures at lower scales, it becomes clear that such a simple strategy is doomed to fail” (Batterman 2021, 97). This is precisely why multiscale modelers often turn to RVE or homogenization techniques that are sensitive to changes in mesoscale and microscale features of the system.

Assuming the system’s mesoscale structures are homogeneous when they are not is related to another issue: focusing only on difference-makers that are common across multiple systems will often miss the relevant heterogeneous features of the system. Unfortunately, many philosophical accounts of modeling have exclusively focused on the role of repeatable features in modeling and explaining patterns. But an important result of the above cases is that it is often the features of the system that are different and change across instances of the same pattern that are particularly of interest to multiscale modelers. In short, the things that vary in different spatial or temporal regions/scales of the system are often the relevant difference-making features in multiscale systems. As a result, multiscale modeling decisions are largely driven by the need to discover both stable and variable relationships of dependence between scales.

How should we measure degrees of relative autonomy?

As I discussed above, these cases also show that we need to move beyond philosophers’ questions concerning whether a given level or scale is completely determined by, or completely autonomous of, some other level or scale. Instead, relationships between scales come in varying degrees of relative autonomy; i.e., there is almost always some mixture of dependence and independence between features at different spatial and temporal scales. Furthermore, we have seen how these degrees of autonomy directly impact which multiscale modeling approaches are most likely to succeed. An important philosophical question is, then, how ought we (or can we) measure the degree of autonomy in a given case? One option would be to identify all the possible dependencies between two scales and determine how many of those dependencies are realized in a given system. But answering that kind of question is both philosophically and scientifically impossible. Instead, we might use the relationships of interest to scientists to narrow the range of possibilities and then ask what balance of dependence/independence we observe in those relationships. But this, too, won’t do for two reasons. First, if a dependence or independence exists between two scales of the system that is particularly important/relevant to the occurrence/stability/fragility of the target phenomenon, then whether scientists find that relationship of interest (ahead of time) is irrelevant to determining the kind of relative autonomy required for the selection of an effective multiscale modeling strategy. That is, the relative autonomy that influences the success of a multiscale modeling strategy is a feature of the real system(s), not of a subset of features of interest to the modelers. Second, multiscale modeling typically is applied in contexts in which scientists do not know the range of dependencies exhibited by the system until after they have built a multiscale model with which to investigate that phenomenon.
As a result, we require some way of approximating what the degree of relative autonomy between scales might be without requiring multiscale modelers to already know the full set of dependence and independence relationships in the system. Indeed, if they already knew the set of dependence and independence relationships that existed in the system (and their relationship to the phenomenon of interest) then there would be little need to construct a highly idealized multiscale model of the phenomenon. The methodology of multiscale modeling is about discovering the various kinds of dependencies and independencies between different scales of complex systems. This brief discussion yields two lessons. The first is that it would be extremely useful for scientific modelers to be able to develop rough estimates of the expected degree of autonomy between scales before selecting a multiscale modeling strategy. The second is that, once more is learned about a system via the investigation of a multiscale model, the interesting/calculable kind of relative autonomy will be the ratio of dependence and independence relationships between the features that are relevant to the phenomenon of interest. Consequently, asking whether an entire scale/level is completely dependent/autonomous of another entire scale/level (as philosophers have often done) completely misses the pragmatic situation confronting practicing scientists as well as the degree of autonomy that we might actually be able to epistemically access.

How is multiscale modeling pragmatically constrained by the available models and data?

The above framework also highlights how the modeling decisions that confront multiscale modelers are primarily driven by pragmatic constraints and challenges to building computationally tractable models of complex multiscale phenomena. Specifically, multiscale modeling decisions are typically motivated by the desire to (1) use existing modeling approaches, (2) apply them to similar kinds of phenomena across (sometimes very) different contexts, (3) bring those models into contact with the available data/measurements, and (4) use minimal computational resources. As a result, multiscale modeling is highly constrained by the available modeling resources, empirical data/measurements, and the computational complexity involved in modeling multiscale phenomena. Unsurprisingly, this means that practicing multiscale modelers are largely unconcerned with determining which features of their models would make for the best explanation of the phenomenon according to philosophical accounts of explanation (or reduction). They also are relatively unconcerned with accurately mirroring the ontological entities and interactions of the target system(s). Instead, multiscale modelers’ primary task is to merely construct a viable multiscale model, from the available conceptual, mathematical, and empirical resources, that provides the pieces of understanding or information they seek. In other words, rather than aiming at philosophical representational ideals (e.g. mirroring mechanistic components or interactions), scientists typically construct multiscale models that are merely the most viable/tractable model for their purposes that could be constructed from the existing modeling resources. Unfortunately, as Christopher Pincock notes, in most philosophical discussions, “Both reductionists and their critics underestimate the difficulty in developing a workable representation of a complex system” (2012, 119).
I suggest that rather than focusing our attention on whether instances of multiscale modeling are compatible/incompatible with general philosophical accounts of reduction/emergence, our philosophical energy would be better spent analyzing the various pragmatic features of scientific practice that constrain the construction of viable/workable models in particular contexts. Doing so will help philosophical analysis of these cases provide more useful normative guidance for practicing multiscale modelers whose modeling decisions are heavily constrained by the available modeling frameworks, accessible measurements/data, and computational resources/time.

What does the success of a multiscale modeling strategy tell us about the metaphysical structure of reality?

Given the above points about the interaction between various kinds of pragmatic constraints and the complexity of a model’s real-world target system(s), a final lesson is that we ought to be careful about reading the structure or ontology of reality off of our scientific theories and models (Pincock 2012; Potochnik 2017; Rice 2021). While these cases do show us that reality is often far more interwoven across spatial and temporal scales than is often assumed by philosophers’ proposals concerning ‘levels of reality’ (Potochnik 2009), we ought not follow Quine’s (1948) suggestion that we infer the ontological structure of reality by quantifying over the variables or properties present in our best scientific models and theories. Instead, what we find is that the variables, parameters, functions, and transformations that show up in multiscale models are often driven by the need to use the existing theoretical, modeling, experimental, or mathematical tools to solve practical problems. Moreover, bringing these tools into contact with scientists’ practical aims often requires a fair amount of idealization, parameter construction, and mathematical manipulation. As a result, while the success of multiscale models is certainly impacted by the features of real-world systems (Batterman 2021), the processes by which multiscale modeling techniques are selected, implemented, and applied are not driven by the desire to accurately describe the reality of complex systems (Rice 2021). Therefore, we should be cautious about the inference from the existence of a variable, parameter, or scaling relationship in one’s scale-separated, RVE, or homogenized model to the belief that there are real-world entities or interactions that correspond to those variables, parameters, or scaling functions.

Despite this caution, it is also important to note how multiscale modeling does enable us to better understand real features of complex systems. In other words, I don’t think we should adopt pure instrumentalism about multiscale models either. Fortunately, as Pincock notes, there is a third option between a robust metaphysical interpretation of these cases and instrumentalism. “This is to emphasize the epistemic benefits that multiscale representations afford the scientist. On this picture, a successful multiscale representation depends on genuine features of the system.” (Pincock 2012, 119, my emphasis). Indeed, although the system’s entities, features, and relationships may not be accurately reflected in the highly idealized multiscale models constructed by scientists, investigating multiscale models and their scaling relationships can reveal genuine features of a system that would otherwise be obscure or epistemically inaccessible to scientists (Batterman 2021; Pincock 2012; Rice 2021).

Several interesting philosophical questions arise from the tension between these two observations: What degree of accuracy (if any) is required for different multiscale modeling goals/aims/purposes? What conditions of the modeling context would warrant our drawing further metaphysical conclusions from the success of a multiscale modeling technique? What justifies the use of a multiscale modeling technique that is known to drastically misrepresent the relationships between the scales of the system? And, more generally, what more limited conclusions about the nature of reality can we glean from the widespread success of various multiscale modeling techniques? While some philosophers have started to address these kinds of questions (e.g. see Batterman 2021, Ch. 7), much more work needs to be done to develop more nuanced versions of naturalistic metaphysics than philosophers’ suggestions to completely trust or distrust the ontological hierarchies or mereological relationships described by our best scientific models. The above cases and framework show that more careful and context-specific engagement with scientific practice is required to answer these ontological questions.

Conclusion

Analysis of these case studies has revealed several dimensions of modeling contexts that ought to be taken into consideration when selecting a multiscale modeling strategy. By identifying these features and the modeling choices they influence, the framework provides practical normative guidance for scientific modelers interested in multiscale phenomena. Moreover, the framework has revealed numerous distinctive philosophical questions that arise from consideration of multiscale modeling techniques. These questions show that multiscale modeling is a rich landscape for philosophers of science to investigate further in the future. Going forward, philosophers of science ought to continue distinguishing different multiscale modeling strategies and the conditions/features that make different strategies likely to be successful in different scientific modeling contexts. Doing so will improve our accounts of the methodologies of science, the epistemic contributions of scientific models, and the ontological structures and relationships found in complex physical systems.

Notes

The use of the word ‘influence’ here might suggest that these interactions are causal. However, because the relationships are between variables, parameters, and functions, this description is compatible with merely passing information between the models that need not be interpreted causally.

References

Batterman RW (2021) A Middle Way: A Non-Fundamental Approach to Many-Body Physics. Oxford University Press, Oxford

Batterman R, Green S (2021) Steel and bone: Mesoscale modeling and middle-out strategies in physics and biology. Synthese 199:1159–1184

Bokulich A (2021) Taming the tyranny of scales: models and scale in the geosciences. Synthese 199:14167–14199

Bursten J (2018) Conceptual strategies and inter-theory relations: The case of nanoscale cracks. Stud Hist Philos Mod Phys 62:158–165

Dada JO, Mendes P (2011) Multi-scale modelling and simulation in systems biology. Integr Biol 3:86–96

Dallon JC (2010) Multiscale modeling of cellular systems in biology. Curr Opin Colloid Interface Sci 15:24–31

Deisboeck TS, Wang Z, Macklin P, Cristini V (2011) Multiscale cancer modeling. Annu Rev Biomed Eng 13:127–155. https://doi.org/10.1146/annurev-bioeng-071910-124729

Garlick MJ, Powell JA, Hooten MB, McFarlane LR (2011) Homogenization of large-scale movement models in ecology. Bull Math Biol 73:2088–2108

Hooten MB, Garlick MJ, Powell JA (2013) Computationally efficient statistical differential equation modeling using homogenization. J Agric Biol Environ Stat 18(3):405–428

Jhun J (2021) Economics, equilibrium methods, and multiscale modeling. Erkenntnis 86:457–472

Laughlin RB, Pines D, Schmalian J, Stojkovic BP, Wolynes P (1999) The middle way. PNAS 97(1):32–37

Meier-Schellersheim M, Fraser IDC, Klauschen F (2009) Multi-scale modeling in cell biology. Wiley Interdiscip Rev Syst Biol Med 1(1):4–14

Nguyen N, Strnad O, Klein T, Luo D, Alharbi R, Wonka P, Maritan M, Mindek P, Autin L, Goodsell DS, Viola I (2021) Modeling in the time of COVID-19: Statistical and rule-based mesoscale models. IEEE Trans Visual Comput Graph 27(2):722–732

Parker W (2019) Model evaluation: an adequacy for purpose view. Philos Sci 87:457–477

Pincock C (2012) Mathematics and Scientific Representation. Oxford University Press, Oxford

Potochnik A (2009) Levels of explanation reconceived. Philos Sci 77(1):59–72

Potochnik A (2017) Idealization and the Aims of Science. University of Chicago Press, Chicago

Quine WV (1948) On what there is. Rev Metaphys 2(5):21–38

Rice C (2021) Leveraging Distortions: Explanation, Idealization, and Universality in Science. MIT Press, Cambridge, MA

Rice C (2022) Modeling multiscale patterns: active matter, minimal models, and explanatory autonomy. Synthese 200(6):1–35

Schymanski S, Roderick M, Sivapalan M (2015) Using an optimality model to model medium and long-term responses of vegetation water use to elevated atmospheric CO2 concentrations. Annals of Botany: Plants

Walker DC, Southgate J (2009) The virtual cell—a candidate co-ordinator for ‘middle-out’ modelling of biological systems. Brief Bioinform 10(4):450–461

Weisberg M (2013) Simulation and Similarity: Using Models to Understand the World. Oxford University Press, Oxford

Wilson M (2017) Physics Avoidance. Oxford University Press, Oxford

Yoon G, Park H, Na S, Eom K (2008) Mesoscopic model for mechanical characterization of biological protein materials. J Comput Chem 30(6):873–880

Acknowledgements

Thanks to Robert Batterman, Julia Bursten, Jennifer Jhun, and the audience at our 2022 PSA symposium for helpful feedback and discussion of the ideas in this paper. Thanks also to Jeff Kasser for comments on an earlier draft and to the CSU Department of Cellular and Molecular Biology for helpful discussion.

About this article

Cite this article

Rice, C. Beyond reduction and emergence: a framework for tailoring multiscale modeling techniques to specific contexts. Biol Philos 39, 12 (2024). https://doi.org/10.1007/s10539-024-09949-x
