Abstract

This paper addresses a set of issues involved in modeling systems across many orders of magnitude in spatial and temporal scales. In particular, it focuses on the question of how one can explain and understand the relative autonomy and safety of models at continuum scales. The typical battle line between reductive “bottom-up” modeling and “top-down” modeling from phenomenological theories is shown to be overly simplistic. Multi-scale models are beginning to succeed in showing how to upscale from statistical atomistic/molecular models to continuum/hydrodynamic models. The consequences for our understanding of the debate between reductionism and emergence will be examined.

1 Introduction

One way of understanding the nature of emergence in physics is by contrasting it with the reductionist paradigm that is so prevalent in high energy or “fundamental” physics. This paradigm has been tremendously successful in explaining and describing various deep features of the universe. The goal is, ultimately, to search for the basic building blocks of the universe and then, having found them, to provide an account of the nonfundamental features of the world that we see at scales much larger than those investigated by particle accelerators. On this way of thinking, emergent phenomena, if there are any, are those that apparently are not reducible to, or explainable in terms of, the properties and behaviors of these fundamental building blocks. The very talk of “building blocks” and fundamental particles carries with it a particular and widespread view of how to understand emergence in contrast with reductionism: in particular, it strongly suggests a mereological or part/whole conception of the distinction.Footnote 1 Emergent phenomena, on this conception, are properties of systems that are novel, new, unexplainable, or unpredictable in terms of the components or parts out of which those systems are formed. Put crudely, but suggestively, emergent phenomena reflect the possibility that the whole can be greater (in some sense) than the sum of its parts.

While I believe that one can sometimes think of reduction in contrast to emergence in mereological terms, in many instances the part/whole conception misses what is actually most important. Often it is very difficult to identify what the fundamental parts are. Often it is even more difficult to see how the properties of those parts, should one be able to identify them, play a role in determining the behavior of systems at scales much larger than the length and energy scales characteristic of those parts. In fact, what is most often crucial to the investigation of the models and theories that characterize systems is the fact that there is an enormous separation of scales at which one wishes to model or understand the systems’ behaviors: scale often matters, parts not so much.Footnote 2

In this paper I am not going to be too concerned with mereology. There is another feature of emergent phenomena that is, to my mind, not given sufficient attention in the literature: emergent phenomena exhibit a particular kind of autonomy. The goal of this paper is to investigate this notion and, having done so, to draw some conclusions about the emergence/reduction debate. Ultimately, I will argue that the usual characterizations of emergence in physics are misguided because they focus on the wrong questions.

In the next section, I will discuss the nature of, and the evidence for, the relative autonomy of the behaviors of systems at continuum scales from the details of the systems at much lower scales. I will argue that materials display a kind of universality at macroscales and that this universal behavior is governed by laws of continuum mechanics of a fairly simple nature. I will also stress the fact (as I see it) that typical philosophical responses to what accounts for the autonomy and the universality, from both purely bottom-up and purely top-down perspectives, really miss the subtleties involved. Section 7.3 will discuss in some detail a particular strategy for bridging models of material behavior across scales. The idea is to replace a complicated problem that is heterogeneous at lower scales with an effective or homogeneous problem to which the continuum equations of mechanics can then be applied. Finally, in the conclusion I will draw some lessons from these discussions about fruitful and unfruitful ways of framing the debate between emergentists and reductionists.

2 Autonomy

Discussions of “emergence” in the contemporary physics literature and in popular science sometimes speak of “protected states of matter” or “protectorates.” For example, Laughlin and Pines (2007) use the term “protectorate” to describe domains of physics (states of matter) that are effectively independent of the microdetails of high energy/short distance scales. A (quantum) protectorate, according to Laughlin and Pines, is “a stable state of matter whose generic low-energy properties are determined by a higher organizing principle and nothing else” (Laughlin and Pines 2007, p. 261). Laughlin and Pines do not say much about what a “higher organizing principle” actually is, though they do refer to spontaneous symmetry breaking in this context. For instance, they consider the existence of sound in a solid as an emergent phenomenon independent of microscopic details:

It is rather obvious that one does not need to prove the existence of sound in a solid, for it follows from the existence of elastic moduli at long length scales, which in turn follows from the spontaneous breaking of translational and rotation symmetry characteristic of the crystalline state (Laughlin and Pines 2007, p. 261).

It is important to note that Laughlin and Pines do refer to features that exist at “long length scales.” Unfortunately, both the conception of a “higher organizing principle” and what they mean by “follows from” are left completely unanalyzed. Nevertheless, these authors do seem to recognize an important feature of emergent phenomena, namely, that they display a kind of autonomy. Details from higher energy (shorter length) scales appear to be largely irrelevant for the emergent behavior at lower energies (larger lengths).

2.1 Empirical Evidence

What evidence is there to support the claim that certain behaviors of a system at a given scale are relatively autonomous from details of that system at some other (typically smaller or shorter) scale? In engineering contexts, materials scientists often want to determine what kind of material is appropriate for building certain kinds of structures. Table 7.1 lists various classes of engineering materials. If we want to construct a column to support some sort of structure, then we will want to know how different materials will respond to compressive loads that involve only minimal shear forces. We might be concerned with trade-offs between weight (or bulkiness), price of materials, etc. We need a way of comparing possible materials to be used in the construction. There are several properties of materials to which we can appeal in making our decisions, most of which were discovered in the nineteenth century. Young’s modulus, for instance, tells us how much a material stretches or shortens under tensile or compressive loads. Poisson’s ratio would also be relevant to our column. It tells us how a material changes in directions perpendicular to the load. For instance, if we load our column, we will want to know how much it fattens under that squeezing. Another important quantity will be the density of the material. Wood, for example, is less dense than steel. Values for these and other parameters have been determined experimentally over many years. It is possible to classify groups of materials in terms of trade-offs between these various parameters.
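As a minimal sketch of how these two parameters enter the column problem, the following Python computes the axial shortening (from Young's modulus) and the lateral fattening (from Poisson's ratio) of a uniform column under a compressive load. The load, cross-section, length, and material values are illustrative assumptions rather than data from the text, and the function name `column_response` is hypothetical.

```python
# Rough, illustrative property values (assumptions for this sketch, not data from
# the chapter): Young's modulus E in pascals and Poisson's ratio nu.
MATERIALS = {
    "steel": {"E": 200e9, "nu": 0.30},
    "aluminum alloy": {"E": 70e9, "nu": 0.33},
}


def column_response(load_n, area_m2, length_m, E, nu):
    """Axial shortening and lateral strain of a column under a compressive load.

    Uses one-dimensional Hooke's law (stress = E * strain) along the axis and
    Poisson's ratio (lateral strain = -nu * axial strain) across it.
    Returns (change_in_length_m, lateral_strain).
    """
    stress = -load_n / area_m2            # compression is negative
    axial_strain = stress / E             # Young's modulus sets the axial response
    lateral_strain = -nu * axial_strain   # positive: the column fattens
    return axial_strain * length_m, lateral_strain


for name, m in MATERIALS.items():
    dL, lat = column_response(1e5, 0.01, 3.0, m["E"], m["nu"])
    print(f"{name}: shortens by {-dL * 1e3:.3f} mm, lateral strain {lat:.2e}")
```

The stiffer material shortens less for the same load, and a larger Poisson's ratio means more sideways bulging per unit of shortening.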

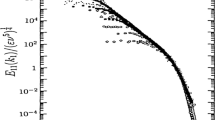

Michael Ashby has developed what he calls “Material Property Charts,” with which it is possible to plot these different properties against one another and exhibit quite perspicuously what range of values fit the various classes of materials exhibited in Table 7.1. An illustrative example is provided in Fig. 7.1. There are a number of things to note about this chart. First, it is plotted on logarithmic scales. This is for two reasons. On the one hand, it allows one to represent a very large range of values for material properties like density (\( \rho \)) and Young’s modulus (\( E \)). In a number of instances these values, for a given class of materials, can range over five decades. On the other hand, the log plot allows one to represent lines of constant longitudinal elastic wave velocity, that is, constant speed of sound in the solid, as straight lines. These are the parallel lines labeled in units of \( \left( E/\rho \right)^{1/2} \) (m/s). Clearly the wave velocity is greater in steel and other engineering alloys than it is in cork or in elastomers.
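A short sketch of why constant wave speed appears as straight lines: since \( v = (E/\rho)^{1/2} \), one has \( \log E = \log \rho + 2\log v \), so on log-log axes every material with the same \( v \) lies on a line of slope one. The helper names and the numerical values below are assumptions chosen for illustration only.

```python
import math


def wave_speed(E_pa, rho_kg_m3):
    """Longitudinal elastic wave speed estimate v = (E / rho) ** 0.5 in m/s."""
    return math.sqrt(E_pa / rho_kg_m3)


def modulus_on_speed_line(rho_kg_m3, v_m_s):
    """Young's modulus of a point lying on the constant-speed line v: E = rho * v**2."""
    return rho_kg_m3 * v_m_s ** 2


# On log-log axes, log10(E) = log10(rho) + 2 * log10(v): for a fixed v the points
# form a straight line of slope 1, shifted upward by 2 * log10(v).
v = 5000.0  # m/s, an arbitrary guideline value
offsets = [math.log10(modulus_on_speed_line(rho, v)) - math.log10(rho)
           for rho in (1e2, 1e3, 1e4)]
print(offsets, 2 * math.log10(v))

# Rough steel-like values (assumed), giving a speed of roughly 5 km/s.
print(round(wave_speed(200e9, 7850.0)))
```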

One can also plot the contours \( E/\rho \), \( E^{1/2} /\rho \), and \( E^{1/3} /\rho \) that respectively tell us how a tie rod, a column, and a plate or panel will deflect under loading. For example,

[t]he lightest tie rod which will carry a given tensile load without exceeding a given deflection is that with the greatest value of \( E/\rho \). The lightest column which will support a given compressive load without buckling is that with the greatest value of \( E^{1/2} /\rho \). The lightest panel which will support a given pressure with minimum deflection is that with the greatest value of \( E^{1/3} /\rho \).

On the chart these contours are marked as “Guide Lines For Minimum Weight Design.” To use them, one shifts a guideline parallel to itself toward the upper left-hand corner. The materials that the line intersects will support the same load equally well, though with different densities. To save weight, choose the material with the smallest density that intersects the line. Thus, woods loaded perpendicular to the grain will support a column as well as steel and its various alloys: they have approximately equal values of \( E^{1/2} /\rho \).
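The selection rule in the quotation can be sketched in a few lines of Python, assuming rough, textbook-style values of \( E \) (in GPa) and \( \rho \) (in Mg/m³) that are not read off Ashby's chart. With these assumed numbers, steel and wood loaded perpendicular to the grain come out with nearly the same column index \( E^{1/2}/\rho \), in line with the reading of the chart above; different assumed values would shift the ranking.

```python
import math

# Rough, illustrative property values (assumptions for this sketch, not read off
# Ashby's chart): Young's modulus E in GPa and density rho in Mg/m^3.
CANDIDATES = {
    "steel": (200.0, 7.85),
    "aluminum alloy": (70.0, 2.70),
    "wood, perpendicular to grain": (1.0, 0.55),
    "wood, parallel to grain": (12.0, 0.55),
}


def indices(E, rho):
    """Minimum-weight indices: a larger value means a lighter part for the same job."""
    return {
        "tie": E / rho,                  # tie rod in tension
        "column": math.sqrt(E) / rho,    # column against buckling
        "panel": E ** (1 / 3) / rho,     # panel in bending
    }


def rank(component):
    """Candidate names ordered from best (largest index) to worst."""
    scores = {name: indices(E, rho)[component] for name, (E, rho) in CANDIDATES.items()}
    return sorted(scores, key=scores.get, reverse=True)


for component in ("tie", "column", "panel"):
    print(component, rank(component))
```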

This chart, and others like it, provide a qualitative picture of both the mechanical and thermal properties of various engineering materials. For our purposes, the most interesting feature is how different materials can be grouped into classes. Within a given envelope defining an entire class of materials from Table 7.1 (bold lines in Fig. 7.1), one can also group individual instances into balloons (lighter lines) that represent particular types of materials within those general classes. The balloons collect materials that exhibit similar behaviors with respect to the parameters of interest. Ashby sums this up nicely:

The most striking feature of the charts is the way in which members of a material class cluster together. Despite the wide range of modulus and density associated with metals (as an example), they occupy a field which is distinct from that of polymers, or that of ceramics, or that of composites. The same is true of strength, toughness, thermal conductivity and the rest: the fields sometimes overlap, but they always have a characteristic place within the whole picture (Ashby 1989, p. 1292).

An immediate question concerns why the materials group into distinct envelopes in this way. The answer must have something to do with the atomic make-up of the materials. For example, a rough but informative answer to why materials have the densities they do appeals to the mean atomic weight of the atoms, the mean size of the atoms, and the geometry of how they are packed.

Metals are dense because they are made of heavy atoms, packed more or less closely; polymers have low densities because they are made of light atoms in a linear, 2 or 3-dimensional network. Ceramics, for the most part, have lower densities than metals because they contain light \( O \), \( N \) or \( C \) atoms….

The moduli of most materials depend on two factors: bond stiffness and the density of bonds per unit area. A bond is like a spring…. (Ashby 1989, pp. 1278–1279).

This provides a justification for the observed fact that distinct materials can be grouped into (largely non-overlapping) envelopes in the material property charts, but it does not fully account for the shapes of the envelopes. Why, for instance, are some envelopes elongated in one direction and not another? To answer questions of this sort, we need to pay attention to structures that exist at scales in between the atomic and the macroscopic. The importance of these “mesoscale” structures will be the primary concern of this paper.
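Ashby's rough account of density and stiffness can be turned into back-of-the-envelope numbers. The sketch below assumes cubic crystals and standard lattice constants for iron (body-centered cubic) and aluminum (face-centered cubic) to estimate density from atomic mass and packing. It also uses the standard spring-model estimate \( E \approx S/r_0 \), where the bond stiffness \( S \) and spacing \( r_0 \) are hypothetical values chosen only to show the order of magnitude. This is an illustration, not Ashby's own calculation.

```python
AVOGADRO = 6.02214076e23  # 1/mol


def crystal_density(atoms_per_cell, molar_mass_g, lattice_a_angstrom):
    """Density in g/cm^3 of a cubic crystal: mass of the atoms in one unit cell
    divided by the cell volume."""
    cell_mass_g = atoms_per_cell * molar_mass_g / AVOGADRO
    cell_volume_cm3 = (lattice_a_angstrom * 1e-8) ** 3
    return cell_mass_g / cell_volume_cm3


def spring_modulus(bond_stiffness_n_per_m, atom_spacing_m):
    """Young's modulus estimate E ~ S / r0 from the 'bond is a spring' picture.

    There are about 1 / r0**2 bonds per unit area, each stretched by strain * r0,
    so stress ~ (S * strain * r0) / r0**2 = (S / r0) * strain.
    """
    return bond_stiffness_n_per_m / atom_spacing_m


# Iron (bcc, 2 atoms per cell) and aluminum (fcc, 4 atoms per cell).
print(crystal_density(2, 55.845, 2.8665))   # about 7.87
print(crystal_density(4, 26.982, 4.0495))   # about 2.70
# Hypothetical bond stiffness (N/m) and spacing (m), for the order of magnitude only.
print(spring_modulus(20.0, 2.5e-10) / 1e9, "GPa")
```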

This section was meant simply to exhibit the fact that the behaviors of materials have been empirically determined to lie within certain classes that are defined in terms of material parameters whose importance was noted already in the nineteenth century. These so-called moduli are defined at the macroscale, where materials are represented as a continuum. That the materials group into envelopes in the property charts is an empirical finding that characterizes types of universal behavior for different engineering materials. That is to say, atomically and molecularly different materials within the same envelope behave, at the macroscale, in very similar ways. A question of interest is how this universal behavior can be understood. Put in a slightly different way: can we understand how different materials come to be grouped in these envelopes with their distinct shapes? There seem to be “organizing principles” at play here. Can we say anything about these macroscopic moduli beyond simply asserting that they exist?Footnote 3

2.2 The Philosophical Landscape

As we have just seen, the material property charts provide plenty of evidence of universal behavior at macroscales. This is also evidence that the behaviors of different materials at those scales are relatively autonomous from the details of the materials at atomic scales. Furthermore, the equations of continuum mechanics exhibit a kind of safety: They enable us to safely build bridges, boats, and airplanes.

The question of how this safety, autonomy, and universality are possible has received virtually no attention in the philosophical literature.Footnote 4 I think the little that has been said can be seen as arising from two camps. Roughly, these correspond to the emergentist and reductionist/fundamentalist poles in the debate. Emergentists might argue that the very simplicity of the continuum laws justifies treating the continuum principles as a kind of “special science,” independent of “lower-level” details. Recall Fodor’s essentially all-or-nothing understanding of the autonomy of the special sciences:

[T]here are special sciences not because of the nature of our epistemic relation to the world, but because of the way the world is put together: not all natural kinds (not all the classes of things and events about which there are important, counterfactual supporting generalizations to make) are, or correspond to, physical natural kinds…. Why, in short, should not the natural kind predicates of the special sciences cross-classify the physical natural kinds? (Fodor 1974, pp. 113–114)

I think this is much too crude. It is, in effect, a statement that the special sciences (in our case the continuum mechanics of materials) are completely autonomous from lower scale details. But we have already seen that the density of a material will depend in part on the packing of the atoms in a lattice. Furthermore, on the basis of continuum considerations alone, we cannot hope to understand which materials will actually be instantiated in nature. That is, we cannot hope to understand the groupings of materials into envelopes as in the property charts; nor can we hope to understand why there are regions within those envelopes where no materials exist. From a design and engineering point of view, these are, of course, key questions.

The shortcomings of a fully top-down approach may very well have led some fundamentalists or reductionists to orthogonal prescriptions about how to understand and explain the evidence of universality and relative autonomy at macroscales. They might argue as follows: “Note that there are roughly one hundred elements in the periodic table rather than the potential infinity of materials that are allowed by the continuum equations. So, work at the atomic level! Start with atomic models of the lattice structure of materials and determine, on the basis of these fundamental features, the macroscale properties of the materials for use in engineering contexts.”

I think this is also much too crude. The idea that one can, essentially ab initio, bridge across 10+ orders of magnitude is a reductionist pipe-dream. Consider the following passage from an NSF Blue Ribbon report on simulation based engineering science.Footnote 5

Virtually all simulation methods known at the beginning of the twenty-first century were valid only for limited ranges of spatial and temporal scales. Those conventional methods, however, cannot cope with physical phenomena operating across large ranges of scale—12 orders of magnitude in time scales, such as in the modeling of protein folding… or 10 orders of magnitude in spatial scales, such as in the design of advanced materials. At those ranges, the power of the tyranny of scales renders useless virtually all conventional methods…. Confounding matters further, the principal physics governing events often changes with scale, so that the models themselves must change in structure as the ramifications of events pass from one scale to another.

The tyranny of scales dominates simulation efforts not just at the atomistic or molecular levels, but wherever large disparities in spatial and temporal scales are encountered (Oden 2006, pp. 29–30).

While this passage refers to simulations, the problem of justifying simulation techniques across wide scale separations is intimately connected with the problem of interest here: justifying the validity of modeling equations at wide scale separations. The point is that more subtle methods are required to explain and understand the relative autonomy and universality observed in the behavior of materials at continuum scales. The next section discusses one kind of approach to bridging scales that involves both top-down and bottom-up modeling strategies.

3 Homogenization: A Means for Upscaling

Materials of various types appear to be homogeneous at large scales. For example, if we look at a steel beam with our eyes, it looks relatively uniform in structure. If we zoom in with low-powered microscopes, we see essentially the same uniform structure. (See Fig. 7.2.) The material parameters or moduli characterize the behavior of these homogeneous structures. Continuum mechanics describes the behaviors of materials at these large scales assuming that the material distribution, the strains, and the stresses can be treated as homogeneous or uniform. Material points and their neighborhoods are taken to be uniform with respect to these features. However, if we zoom in further, using x-ray diffraction techniques for example, we begin to see that the steel beam is actually quite heterogeneous in its makeup.

We want to be able to determine what are the values for the empirically discovered effective parameters that characterize the continuum behaviors of different materials. This is the domain of what is sometimes called “micromechanics.” A major goal of micromechanics is “to express in a systematic and rigorous manner the continuum quantities associated with an infinitesimal material neighborhood in terms of the parameters that characterize the microstructure and properties of the microconstituents of the material neighborhood” (Nemat-Nasser and Hori 1999, p. 11). One of the most important concepts employed in micromechanics is that of a representative volume element (RVE).

Fig. 7.2 Steel: widely separated scales

3.1 RVEs

The concept of an RVE has been around explicitly since the 1960s. It involves explicit reference to features or structures that are absent at continuum scales. An RVE for a continuum material point is defined to be

a material volume which is statistically representative of the infinitesimal material neighborhood of that material point. The continuum material point is called a macro-element. The corresponding microconstituents of the RVE are called the micro-elements. An RVE must include a very large number of micro-elements, and be statistically representative of the local continuum properties (Nemat-Nasser and Hori 1999, p. 11).

In Fig. 7.3 the point \( P \) is a material point surrounded by an infinitesimal material element. As noted, this is a macro-element. The inclusions, voids, cracks, and grain boundaries are to be thought of as the microstructure of the macro-element.

Fig. 7.3 RVE (Nemat-Nasser and Hori 1999, p. 12)

The characterization of an RVE requires (as is evident from the figure) the introduction of two length scales. There is the continuum or macroscale (\( D \)) by which one determines the infinitesimal neighborhood of the material point, and there is a microscale (\( d \)) representing the smallest microconstituents whose properties (normally shapes) are believed to directly affect the overall response and properties of the continuum infinitesimal material neighborhood. We can call these the “essential” microconstituents. These length scales must typically differ by orders of magnitude, so that \( d/D \ll 1 \). The requirement that this ratio be very small is independent of the nature of the distribution of the microconstituents. They may, for instance, be periodically or randomly distributed throughout the RVE. Surely, what this distribution is will affect the overall properties of the RVE.

The concept of an RVE is relative in the following sense. The actual characteristic lengths of the microconstituents can vary tremendously. As Nemat-Nasser and Hori note, in powder metallurgy a mass of compacted fine powder may have grains of micron size, so that a neighborhood with a characteristic dimension of 100 microns could well serve as an RVE for characterizing its overall properties, “[w]hereas in characterizing an earth dam as a continuum, with aggregates of many centimetres in size, the absolute dimension of an RVE would be of the order of tens of meters” (Nemat-Nasser and Hori 1999, p. 15). Therefore, the concept of an RVE involves relative rather than absolute dimensions.

Clearly it is also important to be able to identify the “essential” microconstituents. This is largely an art informed by the results of experiments. The identification is a key component in determining what an appropriate RVE is for any particular modeling problem. Again, here are Nemat-Nasser and Hori: “An optimum choice would be one that includes the most dominant features that have first-order influence on the overall properties of interest and, at the same time, yields the simplest model. This can only be done through a coordinated sequence of microscopic (small-scale) and macroscopic (continuum scale) observations, experimentation, and analysis” (Nemat-Nasser and Hori 1999, p. 15, my emphasis).

The goal is to employ the RVE concept to extract the macroscopic properties of such micro-heterogeneous materials. There are several possible approaches to this in the literature. One approach is explicitly statistical. It employs \( n \)-point correlation functions that characterize the probability, say, of finding heterogeneities of the same type at \( n \) different points in the RVE. The RVE is considered as a member of an ensemble of RVEs from which macroscopic properties such as moduli and material responses to deformations are determined by various kinds of averaging techniques. For this approach, see Torquato (2002). In this paper, however, I will discuss a nonstatistical, classical approach that is described as follows:

… the approach begins with a simple model, exploits fundamental principles of continuum mechanics, especially linear elasticity and the associated extremum principles, and, estimating local quantities in an RVE in terms of global boundary data, seeks to compute the overall properties and associated bounds (Nemat-Nasser and Hori 1999, p. 16).

This approach is, as the passage notes, fully grounded in continuum methods. Thus, it employs continuum mechanics to consider the heterogeneous grainy structures and their effects on macroscale properties of materials. As noted, one goal is to account for the shapes of the envelopes (the range of values of the moduli and density) appearing in Ashby’s material property charts. Thus, this approach is one in which top-down modeling is employed to set the stage for upscaling—for making (bottom-up) inferences.
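Although this chapter follows the continuum route, the basic object of the statistical alternative mentioned above is easy to sketch. Assume a two-dimensional, periodic, pixelated microstructure in which each pixel independently belongs to one phase with probability \( \phi \) (a deliberately simple, hypothetical medium). The two-point correlation function \( S_2(r) \) is then the probability that two points a distance \( r \) apart both lie in that phase, and averaging over several samples mirrors the ensemble-of-RVEs idea. For this medium \( S_2(0) = \phi \) and \( S_2(r) \approx \phi^2 \) for \( r > 0 \). Function names are illustrative.

```python
import numpy as np


def two_point_correlation(phase, max_lag):
    """S2(r) along the x direction for a periodic 2-D binary image: the
    probability that two points r pixels apart both lie in the phase marked True."""
    p = phase.astype(float)
    return np.array([np.mean(p * np.roll(p, r, axis=1)) for r in range(max_lag + 1)])


def ensemble_s2(n_samples, shape, fraction, max_lag, seed=0):
    """Average S2 over an ensemble of independent random microstructures in which
    each pixel belongs to the phase with probability `fraction` (hypothetical medium)."""
    rng = np.random.default_rng(seed)
    curves = [two_point_correlation(rng.random(shape) < fraction, max_lag)
              for _ in range(n_samples)]
    return np.mean(curves, axis=0)


s2 = ensemble_s2(n_samples=10, shape=(128, 128), fraction=0.3, max_lag=5)
print(s2)  # S2(0) is close to 0.3; S2(r > 0) is close to 0.3**2 = 0.09
```

A real microstructure with clustered grains would show \( S_2(r) \) decaying gradually from \( \phi \) to \( \phi^2 \), and the decay length carries information about the size of the heterogeneities.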

3.2 Determining Effective Moduli

Two of the most important properties of materials at continuum scales are strength and stiffness. We will examine these properties in the regime in which we take the materials to exhibit linear elastic behavior. All materials are heterogeneous at scales below the continuum scale, so we need to develop the notion of an effective or “equivalent” homogeneity. That is, we want to determine the effective material properties of an idealized homogeneous medium by taking into consideration the material properties of the individual phases (the various inclusions that are displayed in the RVE) and the geometries of those individual phases (Christensen 2005, pp. 33–34). (Recall that steel looks completely homogeneous when examined with our eyes and with low-powered magnifiers.)

We are primarily interested in determining the behavior of a material as it is stressed or undergoes deformation. In continuum mechanics this behavior is understood in terms of stress and strain tensors, which, in linear elastic theory, are related to one another by a generalization of Hooke’s law:

\[ \sigma_{ij} = C_{ijkl}\,\varepsilon_{kl} \tag{7.1} \]

Here \( \sigma_{ij} \) and \( \varepsilon_{kl} \) are, respectively, the linear stress and strain tensors, and \( C_{ijkl} \) is a fourth-order tensor, the stiffness tensor, that generalizes Young’s modulus (repeated indices are summed). The stiffness tensor is constitutive of the particular material under investigation. The infinitesimal strain tensor is defined from the displacement field \( u \) of the material under small loads as \( \varepsilon_{kl} = \tfrac{1}{2}\left( \partial u_{k}/\partial x_{l} + \partial u_{l}/\partial x_{k} \right) \).

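Assume that our material undergoes some deformation (a load is placed on a steel beam). Consider an RVE of volume \( V \). We can define the average strain and stress, respectively, as follows:

\[ \bar{\varepsilon}_{ij} = \frac{1}{V}\int_{V} \varepsilon_{ij}\,dV \tag{7.2} \]

\[ \bar{\sigma}_{ij} = \frac{1}{V}\int_{V} \sigma_{ij}\,dV \tag{7.3} \]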

These averages are defined quite generally: there are no restrictions on the boundaries between the different phases or inclusions in the RVE. Given these averages, the effective linear stiffness tensor is defined by the following relation:

\[ \bar{\sigma}_{ij} = C_{ijkl}^{\text{eff}}\,\bar{\varepsilon}_{kl} \tag{7.4} \]

So the problem is now “simple”: determine the tensor \( C_{ijkl}^{\text{eff}} \), having determined the averages (7.2) and (7.3). “Although this process sounds simple in outline, it is complicated in detail, and great care must be exercised in performing the operation indicated. To perform this operation rigorously, we need exact solutions for the stress and strain fields \( \sigma_{ij} \) and \( \varepsilon_{ij} \) in the heterogeneous media” (Christensen 2005, p. 35). Fortunately, it is possible to solve this problem theoretically (not empirically) with a minimum of approximations by attending to some idealized geometric models of the lower scale heterogeneities. It turns out that a few geometric configurations actually cover a wide range of types of materials: many materials can be treated as composed of a continuous phase, called the “matrix,” and a set of inclusions of a second phase that are ellipsoidal in shape. Limiting cases of the ellipsoids are spherical, cylindrical, and lamellar (thin disc) shapes. These limiting shapes can be used to approximate the shapes of cracks, voids, and grain boundaries.
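The recipe of (7.2) to (7.4), averaging exact local fields and taking their ratio, can be carried out by hand for the lamellar limiting case. The following sketch assumes a one-dimensional idealization of a two-phase laminate (it ignores Poisson coupling between layers), hypothetical moduli in GPa, and a hypothetical function name. Loading across the layers gives uniform stress; loading along them gives uniform strain.

```python
import numpy as np


def laminate_effective_modulus(E_layers, fractions, loading):
    """Effective modulus of a layered solid as <sigma> / <eps> from exact 1-D fields.

    loading="across": load normal to the layers, so every layer carries the same
    stress (layers in series). loading="along": load parallel to the layers, so
    every layer has the same strain (layers in parallel).
    """
    E = np.asarray(E_layers, dtype=float)
    f = np.asarray(fractions, dtype=float)   # volume fractions, summing to 1
    if loading == "across":
        sigma = np.ones_like(E)              # uniform stress (unit magnitude)
        eps = sigma / E                      # Hooke's law layer by layer
    elif loading == "along":
        eps = np.ones_like(E)                # uniform strain (unit magnitude)
        sigma = E * eps
    else:
        raise ValueError("loading must be 'across' or 'along'")
    avg_sigma = np.sum(f * sigma)            # volume average of stress, cf. (7.3)
    avg_eps = np.sum(f * eps)                # volume average of strain, cf. (7.2)
    return avg_sigma / avg_eps               # effective modulus, cf. (7.4)


E_pair, f_pair = [400.0, 70.0], [0.3, 0.7]   # hypothetical stiff phase in a soft matrix (GPa)
print(laminate_effective_modulus(E_pair, f_pair, "along"))
print(laminate_effective_modulus(E_pair, f_pair, "across"))
```

The two results coincide with the familiar Voigt (arithmetic-mean) and Reuss (harmonic-mean) estimates. This is one concrete sense in which the geometry of the phases, and not only their moduli, fixes the effective behavior.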

Let us consider a material composed of two materials or phases.Footnote 6 The first is the continuous matrix phase (the white region in Fig. 7.4) and the second is the discontinuous set of discrete inclusions. We will assume that both materials are themselves isotropic. The behavior of the matrix material is specified by the Navier-Cauchy stress-strain relations

\[ \sigma_{ij} = \lambda_{M}\,\varepsilon_{kk}\,\delta_{ij} + 2\mu_{M}\,\varepsilon_{ij} \tag{7.5} \]

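Similarly, the behavior of the inclusion phase is given by the following:

\[ \sigma_{ij} = \lambda_{I}\,\varepsilon_{kk}\,\delta_{ij} + 2\mu_{I}\,\varepsilon_{ij} \tag{7.6} \]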

The parameters \( \lambda \) and \( \mu \) are the Lamé parameters that characterize the elastic properties of the different materials (subscripts indicate the phase). The parameter \( \mu \) is the shear modulus, and together \( \lambda \) and \( \mu \) determine Young’s modulus and Poisson’s ratio.
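The isotropic relations can be checked numerically. The sketch below, with hypothetical function names and steel-like values assumed for illustration, converts between \( (E, \nu) \) and the Lamé parameters, builds the isotropic stiffness tensor \( C_{ijkl} = \lambda\delta_{ij}\delta_{kl} + \mu(\delta_{ik}\delta_{jl} + \delta_{il}\delta_{jk}) \), and confirms that contracting it with a symmetric strain reproduces the Navier-Cauchy form \( \lambda\,\varepsilon_{kk}\delta_{ij} + 2\mu\,\varepsilon_{ij} \).

```python
import numpy as np


def lame_from_engineering(E, nu):
    """Lamé parameters (lambda, mu) from Young's modulus E and Poisson's ratio nu."""
    lam = E * nu / ((1 + nu) * (1 - 2 * nu))
    mu = E / (2 * (1 + nu))  # mu is the shear modulus
    return lam, mu


def engineering_from_lame(lam, mu):
    """Inverse map: Young's modulus and Poisson's ratio from (lambda, mu)."""
    E = mu * (3 * lam + 2 * mu) / (lam + mu)
    nu = lam / (2 * (lam + mu))
    return E, nu


def isotropic_stiffness(lam, mu):
    """C_ijkl = lam d_ij d_kl + mu (d_ik d_jl + d_il d_jk) as a 3x3x3x3 array."""
    d = np.eye(3)
    return (lam * np.einsum("ij,kl->ijkl", d, d)
            + mu * (np.einsum("ik,jl->ijkl", d, d) + np.einsum("il,jk->ijkl", d, d)))


def stress(C, strain):
    """Generalized Hooke's law sigma_ij = C_ijkl eps_kl (summed over k and l)."""
    return np.einsum("ijkl,kl->ij", C, strain)


lam, mu = lame_from_engineering(200e9, 0.3)   # illustrative steel-like values (Pa)
eps = np.array([[1e-4, 2e-5, 0.0],
                [2e-5, -3e-5, 0.0],
                [0.0, 0.0, 0.0]])             # a symmetric small strain
sig = stress(isotropic_stiffness(lam, mu), eps)
print(np.allclose(sig, lam * np.trace(eps) * np.eye(3) + 2 * mu * eps))
```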

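We can now rewrite the average stress formula (7.3) as follows:

\[ \bar{\sigma}_{ij} = \frac{1}{V}\int_{V - \sum_{n=1}^{N} V_{n}} \sigma_{ij}\,dV + \frac{1}{V}\sum_{n=1}^{N}\int_{V_{n}} \sigma_{ij}\,dV \tag{7.7} \]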

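Here there are \( N \) inclusions with volumes \( V_{n} \), and \( V - \sum\nolimits_{n = 1}^{N} V_{n} \) represents the total volume of the matrix region. Now we use the Navier-Cauchy Eq. (7.5) to get the following expression for the average stress:

\[ \bar{\sigma}_{ij} = \frac{1}{V}\int_{V - \sum_{n=1}^{N} V_{n}} \left( \lambda_{M}\,\varepsilon_{kk}\,\delta_{ij} + 2\mu_{M}\,\varepsilon_{ij} \right) dV + \frac{1}{V}\sum_{n=1}^{N}\int_{V_{n}} \sigma_{ij}\,dV \tag{7.8} \]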

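The first integral here can be rewritten as two integrals (changing the domain of integration) to get:

\[ \bar{\sigma}_{ij} = \frac{1}{V}\int_{V} \left( \lambda_{M}\,\varepsilon_{kk}\,\delta_{ij} + 2\mu_{M}\,\varepsilon_{ij} \right) dV + \frac{1}{V}\sum_{n=1}^{N}\int_{V_{n}} \left[ \sigma_{ij} - \left( \lambda_{M}\,\varepsilon_{kk}\,\delta_{ij} + 2\mu_{M}\,\varepsilon_{ij} \right) \right] dV \tag{7.9} \]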

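Using Eq. (7.4) for the average stress on the left-hand side, and noting that by Eq. (7.2) the first integral in (7.9) depends only on the average strain, everything can be written in terms of average strains:

\[ C_{ijkl}^{\text{eff}}\,\bar{\varepsilon}_{kl} = \lambda_{M}\,\bar{\varepsilon}_{kk}\,\delta_{ij} + 2\mu_{M}\,\bar{\varepsilon}_{ij} + \frac{1}{V}\sum_{n=1}^{N}\int_{V_{n}} \left[ \sigma_{ij} - \left( \lambda_{M}\,\varepsilon_{kk}\,\delta_{ij} + 2\mu_{M}\,\varepsilon_{ij} \right) \right] dV \tag{7.10} \]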

So we have arrived at an effective stress/strain relation (Navier-Cauchy equation) in which “only the conditions within the inclusions are needed for the evaluation of the effective properties tensor [\( C_{ijkl}^{\text{eff}} \)]” (Christensen 2005, p. 37). This, together with the method to be discussed in the next section, will give us a means for finding homogeneous effective replacements for heterogeneous materials.
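The claim that only conditions inside the inclusions are needed can be checked in a one-dimensional caricature. The sketch assumes scalar moduli and layers stacked in series, so that the exact stress is uniform. It then computes the effective modulus once directly, as average stress over average strain, and once as the matrix law applied to the average strain plus a correction summed over the inclusion cells only. The function name and numbers are hypothetical.

```python
import numpy as np


def effective_modulus_two_ways(E_M, E_I, f_I, n_cells=1000, sigma0=1.0):
    """1-D series laminate: return the effective modulus computed
    (a) directly as <sigma> / <eps>, and
    (b) as [E_M * <eps> + (1/V) * sum over inclusion cells of (sigma - E_M * eps)] / <eps>.
    """
    is_inclusion = np.arange(n_cells) < round(f_I * n_cells)
    E = np.where(is_inclusion, E_I, E_M)
    sigma = np.full(n_cells, sigma0)   # series stacking: the exact stress is uniform
    eps = sigma / E                    # exact local strains, cell by cell
    avg_eps = eps.mean()
    direct = sigma.mean() / avg_eps
    # The correction term uses fields inside the inclusion cells only.
    correction = np.sum((sigma - E_M * eps)[is_inclusion]) / n_cells
    via_inclusions = (E_M * avg_eps + correction) / avg_eps
    return direct, via_inclusions


direct, via_inclusions = effective_modulus_two_ways(E_M=70.0, E_I=400.0, f_I=0.3)
print(direct, via_inclusions)  # the two agree up to rounding
```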

3.3 Eshelby’s Method

So our problem is one of trying to determine the stress, strain, and displacement fields for a material consisting of a matrix and a set of inclusions with possibly different elastic properties than those found in the matrix. The idea is that we can then model the large scale behavior as if it were homogeneous, having taken into consideration the microstructures in the RVE.

We first consider a linear elastic solid (the matrix) containing an inclusion of volume \( V_{0} \) with boundary \( S_{0} \). See Fig. 7.5. The inclusion is of the same material as the matrix and is assumed to have undergone a permanent deformation, such as a (martensitic) phase transition. In general the inclusion “undergoes a change of shape and size which, but for the constraint imposed by its surroundings (the ‘matrix’) would be an arbitrary homogeneous strain. What is the elastic state of the inclusion and matrix?” (Eshelby 1957, p. 376). Eshelby provided an ingenious answer to this question.

Begin by cutting out the inclusion \( V_{0} \) and removing it from the matrix. Upon removal, it experiences a uniform strain \( \varepsilon_{ij}^{T} \) with zero stress. Such a stress-free transformation strain is called an “eigenstrain,” and the material undergoes an unconstrained deformation. See Fig. 7.6. Next, apply surface tractions to \( S_{0} \) and restore the cut-out portion to its original shape. This yields a stress in the region \( V_{0} \), as exhibited by the smaller hatch lines, but there is now zero stress in the matrix. Return the volume to the matrix and remove the traction forces on the boundary by applying equal and opposite body forces. The system (matrix plus inclusion) then comes to equilibrium, with the inclusion exhibiting a constrained strain \( \varepsilon_{ij}^{C} \) relative to its initial shape prior to having been removed. Note: elastic strains in these figures are represented by changes in the grid shapes. Within the inclusions, the stresses and strains are uniform.

By Hooke’s law (Eq. (7.1)), the stress in the inclusion, \( \sigma_{I} \), can be written as follows:Footnote 7

\[ \sigma_{I} = C_{M}\left( \varepsilon^{C} - \varepsilon^{T} \right) \tag{7.11} \]

Eshelby’s main discovery is that if the inclusion is an ellipsoid, the stress and strain fields within the inclusion after these manipulations are uniform (Eshelby 1957, p. 377). Given this, the problem of determining the elastic strain everywhere is reasonably tractable. One can write the constrained strain, \( \varepsilon^{C} \), in terms of the stress-free eigenstrain \( \varepsilon^{T} \) by introducing the so-called “Eshelby tensor,” \( S_{ijkl} \):

\[ \varepsilon_{ij}^{C} = S_{ijkl}\,\varepsilon_{kl}^{T} \tag{7.12} \]

The Eshelby tensor “relates the final constrained inclusion shape [and inclusion stress] to the original shape mismatch between the matrix and the inclusion’’ (Withers et al. 1989, p. 3062). The derivation of the Eshelby tensor is complicated, but it is tractable when the inclusion is an ellipsoid.
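For a general ellipsoid the components of \( S_{ijkl} \) involve elliptic integrals, but for the special case of a sphere in an isotropic matrix they reduce to a well-known closed form. Writing \( \mathbb{J} \) and \( \mathbb{K} \) for the projectors onto the volumetric and deviatoric parts of a symmetric tensor (\( \mathbb{J}_{ijkl} = \delta_{ij}\delta_{kl}/3 \), \( \mathbb{K} = \mathbf{I} - \mathbb{J} \)), the sphere’s Eshelby tensor is \( S = \alpha\mathbb{J} + \beta\mathbb{K} \) with \( \alpha = (1+\nu)/(3(1-\nu)) \) and \( \beta = 2(4-5\nu)/(15(1-\nu)) \), where \( \nu \) is the matrix Poisson ratio. The sketch below assumes that special case; the moduli and helper names are hypothetical. It computes \( \varepsilon^{C} = S\varepsilon^{T} \) and then \( \sigma_{I} = C_{M}(\varepsilon^{C} - \varepsilon^{T}) \).

```python
# A minimal sketch, assuming a SPHERICAL inclusion in an ISOTROPIC matrix.
# An isotropic fourth-order tensor is stored as a pair (a, b) meaning a*J + b*K,
# where J picks out the volumetric part of a symmetric tensor and K the deviatoric part.

def apply_iso(t, m):
    """Apply the isotropic tensor t = (a, b) to a symmetric 3x3 tensor m."""
    a, b = t
    mean = (m[0][0] + m[1][1] + m[2][2]) / 3.0
    return [[(a * mean if i == j else 0.0) + b * (m[i][j] - (mean if i == j else 0.0))
             for j in range(3)] for i in range(3)]


def stiffness(bulk, shear):
    """Isotropic stiffness C = 3*bulk*J + 2*shear*K."""
    return (3.0 * bulk, 2.0 * shear)


def eshelby_sphere(nu):
    """Eshelby tensor of a sphere, S = alpha*J + beta*K, for a matrix with Poisson ratio nu."""
    alpha = (1.0 + nu) / (3.0 * (1.0 - nu))
    beta = 2.0 * (4.0 - 5.0 * nu) / (15.0 * (1.0 - nu))
    return (alpha, beta)


K_M, G_M = 160.0, 80.0                                  # hypothetical moduli (GPa)
nu_M = (3.0 * K_M - 2.0 * G_M) / (2.0 * (3.0 * K_M + G_M))
S = eshelby_sphere(nu_M)
C_M = stiffness(K_M, G_M)

eps_T = [[0.01 if i == j else 0.0 for j in range(3)] for i in range(3)]
eps_C = apply_iso(S, eps_T)                             # eps_C = S eps_T
elastic = [[eps_C[i][j] - eps_T[i][j] for j in range(3)] for i in range(3)]
sigma_I = apply_iso(C_M, elastic)                       # sigma_I = C_M (eps_C - eps_T)

print(S, eps_C[0][0], sigma_I[0][0])
```

With these hypothetical moduli, a 1% dilatational eigenstrain yields a constrained strain of 0.6% and a uniform hydrostatic compression of about −1.92 GPa inside the sphere. Carrying out the products by hand gives the same value in closed form, \( \sigma_{I} = -12K_{M}G_{M}\varepsilon/(3K_{M}+4G_{M}) \).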

The discussion so far has examined an inclusion within a much larger matrix, where the inclusion is of the same material as the matrix. A further amazing feature of Eshelby’s work is that the solution to this problem also solves much more complicated problems in which, for example, the inclusion is of a different material and thereby possesses different material properties (different elastic constants). These are the standard cases of concern. We would like to be able to determine the large scale behavior of such heterogeneous materials. The Eshelby method extends directly to these cases, allowing us to find an effective homogeneous (fictitious) material with the same elastic properties as the actual heterogeneous system. (In other words, we can employ this homogenized solution as a stand-in for the actual material in our design projects, that is, in applications of the Navier-Cauchy equations.) In this context the internal region with different elastic constants will be called the “inhomogeneity’’ rather than the “inclusion.’’Footnote 8

I will briefly describe how this works. Imagine an inhomogeneous composite with an inhomogeneity that is stiffer than the surrounding matrix. Imagine also that the inhomogeneity has a different coefficient of thermal expansion than the matrix. Upon heating the composite, we can then expect the inhomogeneity (or inclusion) to be in a state of internal stress. As above, the stiffness tensors of the matrix and the inhomogeneity are denoted by \( C_{M} \) and \( C_{I} \). We now perform the same cutting and welding operations we did for the last problem. The end result will appear as in the bottom half of Fig. 7.7. The important thing to note is that the matrix strains in Figs. 7.6 and 7.7 are the same.

Fig. 7.7 Eshelby’s method: inhomogeneity (Clyne and Withers 1993, p. 50)

The stress in the inhomogeneity is

\[ \sigma_{I} = C_{I}\left(\varepsilon^{C} - \varepsilon^{T*}\right) \]

The expansion of the inhomogeneity upon being cut out will in general be different from that of the inclusion in the earlier problem (their resulting expanded shapes will be different). This is because the eigenstrains are different, \( \varepsilon^{T*} \ne \varepsilon^{T} \), where \( \varepsilon^{T*} \) is the eigenstrain experienced by the inhomogeneity. Nevertheless, after the appropriate traction forces are superimposed, the shapes can be made to return to the original size of the inclusions with their internal strains equal to zero. This means that the stress in the inclusion (the first problem) must be identical to that in the inhomogeneity (the second problem). This is to say that the right hand sides of Eqs. (7.11) and (7.12) are identical, i.e.,

\[ C_{M}\left(\varepsilon^{C} - \varepsilon^{T}\right) = C_{I}\left(\varepsilon^{C} - \varepsilon^{T*}\right) \]

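Recall that for the original problem \( C_{I} = C_{M} \). Using the Eshelby tensor \( S \), we now have (from (7.11) and (7.12))

\[ C_{I}\left(S\varepsilon^{T} - \varepsilon^{T*}\right) = C_{M}\left(S - \mathbf{I}\right)\varepsilon^{T} \]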

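where \( {\mathbf{I}} \) is the identity tensor. So now it is possible to express the original homogeneous eigenstrain, \( \varepsilon^{T} \), in terms of the eigenstrain in the inhomogeneity, \( \varepsilon^{T*} \), which is just the relevant difference between the matrix and the inhomogeneity. Collecting the terms in \( \varepsilon^{T} \) gives

\[ \varepsilon^{T} = \left[\left(C_{I} - C_{M}\right)S + C_{M}\right]^{-1} C_{I}\,\varepsilon^{T*} \]

This, finally, yields the equation for the stress in the inclusion as a result of the differential expansion due to heating (a function of \( \varepsilon^{T*} \) alone; the corresponding strain follows from \( \varepsilon^{C} = S\varepsilon^{T} \)):

\[ \sigma_{I} = C_{M}\left(S - \mathbf{I}\right)\left[\left(C_{I} - C_{M}\right)S + C_{M}\right]^{-1} C_{I}\,\varepsilon^{T*} \]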
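This chain of reasoning can be checked numerically in the special case of a spherical inhomogeneity with isotropic phases, both assumptions not made in the text. Taking the misfit eigenstrain to be the inhomogeneity’s free thermal strain relative to the matrix, \( \varepsilon^{T*}_{ij} = (\alpha_{I} - \alpha_{M})\Delta T\,\delta_{ij} \), where \( \alpha_{I} \) and \( \alpha_{M} \) are hypothetical expansion coefficients, the sketch solves \( [(C_{I} - C_{M})S + C_{M}]\varepsilon^{T} = C_{I}\varepsilon^{T*} \) for \( \varepsilon^{T} \) and forms \( \sigma_{I} = C_{M}(S - \mathbf{I})\varepsilon^{T} \). The equivalence condition behind Eqs. (7.11) and (7.12) can then be confirmed by checking that \( C_{I}(S\varepsilon^{T} - \varepsilon^{T*}) \) returns the same stress. All moduli, expansion coefficients, and function names are illustrative.

```python
# A minimal sketch of the equivalent-inclusion step, assuming a SPHERICAL
# inhomogeneity, ISOTROPIC phases, and a purely thermal misfit. Isotropic
# fourth-order tensors are pairs (a, b) = a*J + b*K; because J and K are
# orthogonal projectors, sums, products and inverses act component-wise.

def add(t, u):
    return (t[0] + u[0], t[1] + u[1])


def sub(t, u):
    return (t[0] - u[0], t[1] - u[1])


def mul(t, u):
    return (t[0] * u[0], t[1] * u[1])


def inv(t):
    return (1.0 / t[0], 1.0 / t[1])


IDENTITY = (1.0, 1.0)


def apply_iso(t, m):
    """Apply the isotropic tensor t = (a, b) to a symmetric 3x3 tensor m."""
    a, b = t
    mean = (m[0][0] + m[1][1] + m[2][2]) / 3.0
    return [[(a * mean if i == j else 0.0) + b * (m[i][j] - (mean if i == j else 0.0))
             for j in range(3)] for i in range(3)]


def stiffness(bulk, shear):
    return (3.0 * bulk, 2.0 * shear)


def eshelby_sphere(bulk_m, shear_m):
    """Sphere Eshelby tensor written with the matrix moduli instead of nu."""
    return (3.0 * bulk_m / (3.0 * bulk_m + 4.0 * shear_m),
            6.0 * (bulk_m + 2.0 * shear_m) / (5.0 * (3.0 * bulk_m + 4.0 * shear_m)))


def solve_inhomogeneity(C_M, C_I, S, eps_T_star):
    """Return (eps_T, sigma_I) for the equivalent homogeneous inclusion."""
    # eps_T = [(C_I - C_M) S + C_M]^-1 C_I eps_T*
    eps_T = apply_iso(mul(inv(add(mul(sub(C_I, C_M), S), C_M)), C_I), eps_T_star)
    # sigma_I = C_M (S - I) eps_T
    sigma_I = apply_iso(mul(C_M, sub(S, IDENTITY)), eps_T)
    return eps_T, sigma_I


# Hypothetical inputs: moduli in GPa, expansion coefficients in 1/K, heating in K.
K_M, G_M, K_I, G_I = 70.0, 26.0, 220.0, 190.0
cte_M, cte_I, dT = 23e-6, 4e-6, 100.0

C_M, C_I = stiffness(K_M, G_M), stiffness(K_I, G_I)
S = eshelby_sphere(K_M, G_M)
# Misfit eigenstrain: the inhomogeneity's free thermal strain relative to the matrix.
eps_T_star = [[(cte_I - cte_M) * dT if i == j else 0.0 for j in range(3)] for i in range(3)]

eps_T, sigma_I = solve_inhomogeneity(C_M, C_I, S, eps_T_star)
print(sigma_I[0][0])
```

With these hypothetical values (a stiff, low-expansion inhomogeneity in a more compliant, higher-expansion matrix, heated by 100 K) the misfit is negative, and the inhomogeneity ends up in hydrostatic tension of roughly 0.17 GPa. Passing \( C_{M} \) in place of \( C_{I} \) recovers \( \varepsilon^{T} = \varepsilon^{T*} \), consistent with \( C_{I} = C_{M} \) in the original problem.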

We have now solved our problem. The homogenization technique just described allows us to determine the elastic properties of a material such as our steel beam by paying attention to the continuum properties of inhomogeneities at lower scales. Eshelby’s method operates completely within the domain of continuum micromechanics. It employs both top-down and bottom-up strategies: We learn from experiments about the material properties of both the matrix and the inhomogeneities. We then examine the material at smaller scales to determine the nature (typically the geometries) of the structures inside the appropriate RVE. Furthermore, in complex materials such as steel, we need to repeat this process at a number of different RVE scales. Eshelby’s method then allows us to find effective elastic moduli for the fictitious homogenized material. Finally, we employ the continuum equations to build our bridges, buildings, and airplanes. Contrary to the fundamentalists or reductionists, who say we should start ab initio with the atomic lattice structure, the homogenization scheme is almost entirely insensitive to such lowest scale structure. Instead, Eshelby’s method focuses largely on geometric shapes and virtually ignores all other aspects of the heterogeneous (meso)scale structures except for their elastic moduli. But the latter are determined by continuum scale measurements of the sort that figure in determining the material property charts.
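To illustrate the step of finding effective moduli for the fictitious homogenized material, the sketch below uses the Mori–Tanaka scheme, a widely used homogenization method built on the Eshelby tensor. It is a swapped-in illustration rather than the specific procedure the text has in mind, and it assumes spherical, perfectly bonded inhomogeneities in an isotropic matrix; the moduli, the 20% volume fraction, and the function names are hypothetical.

```python
# A hedged sketch of Eshelby-based homogenization via the Mori-Tanaka scheme,
# assuming SPHERICAL, perfectly bonded inhomogeneities (volume fraction f) in
# an ISOTROPIC matrix. Isotropic tensors are pairs (a, b) = a*J + b*K, so the
# tensor algebra reduces to component-wise arithmetic.

def add(t, u):
    return (t[0] + u[0], t[1] + u[1])


def sub(t, u):
    return (t[0] - u[0], t[1] - u[1])


def mul(t, u):
    return (t[0] * u[0], t[1] * u[1])


def inv(t):
    return (1.0 / t[0], 1.0 / t[1])


def scale(c, t):
    return (c * t[0], c * t[1])


IDENTITY = (1.0, 1.0)


def eshelby_sphere(bulk_m, shear_m):
    return (3.0 * bulk_m / (3.0 * bulk_m + 4.0 * shear_m),
            6.0 * (bulk_m + 2.0 * shear_m) / (5.0 * (3.0 * bulk_m + 4.0 * shear_m)))


def mori_tanaka(bulk_m, shear_m, bulk_i, shear_i, f):
    """Return (effective bulk modulus, effective shear modulus)."""
    C_M = (3.0 * bulk_m, 2.0 * shear_m)
    C_I = (3.0 * bulk_i, 2.0 * shear_i)
    S = eshelby_sphere(bulk_m, shear_m)
    # Dilute (single-inhomogeneity) strain concentration: [I + S C_M^-1 (C_I - C_M)]^-1
    A_dil = inv(add(IDENTITY, mul(S, mul(inv(C_M), sub(C_I, C_M)))))
    # Mori-Tanaka concentration accounting for finite f: A_dil [(1 - f) I + f A_dil]^-1
    A_mt = mul(A_dil, inv(add(scale(1.0 - f, IDENTITY), scale(f, A_dil))))
    # Effective stiffness of the fictitious homogeneous material.
    C_eff = add(C_M, scale(f, mul(sub(C_I, C_M), A_mt)))
    return C_eff[0] / 3.0, C_eff[1] / 2.0


# Hypothetical moduli (GPa) for a stiff-particle composite with 20% particles.
print(mori_tanaka(70.0, 26.0, 220.0, 190.0, 0.2))
# Empty pores: set the inhomogeneity moduli to zero.
print(mori_tanaka(70.0, 26.0, 0.0, 0.0, 0.2))
```

At \( f = 0 \) the function returns the matrix moduli and at \( f = 1 \) the inhomogeneity moduli. For spheres the Mori–Tanaka bulk modulus coincides with the closed form \( K_{M} + f(K_{I} - K_{M})(3K_{M} + 4G_{M})/[3K_{M} + 4G_{M} + 3(1 - f)(K_{I} - K_{M})] \). Setting the inhomogeneity moduli to zero treats the inhomogeneities as empty pores, the porous-material case mentioned in the notes.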

4 Philosophical Implications

The debate between emergentists and reductionists continues in the philosophical literature. Largely, it is framed as a contrast between extremes. Very few participants in the discussion focus on the nature or the degree of autonomy supposedly displayed by emergent phenomena. A few, as we have seen, talk of “protected’’ regions or states of matter, where this is understood in terms of an insensitivity to microscopic details. However, we have seen that to characterize the autonomy of material behaviors at continuum scales in terms of a complete insensitivity to microscopic details is grossly oversimplified. Too much attention, I believe, has been paid to a set of ontological questions: “What is the correct ontology of a steel beam? Isn’t it just completely false to speak of it as a continuum?’’ Of course, the steel beam consists of atoms arranged on a lattice in a very specific way. But most of the details of that arrangement are irrelevant. Instead of focusing on questions of correct ontology, I suggest a more fruitful approach is to focus on questions of proper modeling technique. Clearly models at microscales are important, but so are models at continuum scales. And, as I have been trying to argue, models at intermediate scales are probably the most important of all. Importantly, none of these models are completely independent. One needs to understand the way models at different scales can be bridged. We need to understand how to pass information both upward from small scales to inform effective models at higher scales and downward to better model small scale behaviors. Despite this dependence, and also because of it, one can begin to understand the relative autonomy of large scale modeling from lower scale modeling. One can understand why the continuum equations work and are safe while ignoring most details at lower scales.
In the context of the discussion of Eshelby’s method, this is evident because we have justified the use of an effective homogenized continuum description of our (actually heterogeneous at lower scales) material.

As an aside, there has been a lot of talk about idealization and its role in the emergence/reduction debate, most of which, I believe, has muddied the waters.Footnote 9 Instead of invoking idealizations, we should think, for example, of continuum mechanics as it was originally developed by Navier, Green, Cauchy, Stokes, and others. Nowhere did any of these authors speak of the equations as being idealized and, hence, false for that reason. The notion of idealization is, in this context, a relative one. It is only after we came to realize that atoms and small scale molecular structures exist that we began to talk as though the continuum equations must be false. It is only then that we feel we ought to be able to do everything with the “theory’’ that gets the ontology right. But this is simply a reductionist bias. In fact, the continuum equations do get the ontology right at the scale at which they are designed to operate. Dismissing such models as “mere idealization’’ represents a serious philosophical mistake. In the reduction/emergence debate, there has been too much focus on what is the actual fundamental level and whether, if there is a fundamental level, non-fundamental (idealized) models are dispensable. I am arguing here that the focus on the “fundamental’’ is just misguided.

So, the reduction/emergence debate has become mired in a pursuit of questions that, to my mind, are most unhelpful. Instead of mereology and idealization, we should be focusing on proper modeling that bridges across scales. The following quote from a primer on continuum micromechanics supports this different point of view.

The “bridging of length scales’’, which constitutes the central issue of continuum micromechanics, involves two main tasks. On the one hand, the behavior at some larger length scale (the macroscale) must be estimated or bounded by using information from a smaller length scale (the microscale), i.e., homogenization or upscaling problems must be solved. The most important applications of homogenization are materials characterization, i.e., simulating the overall material response under simple loading conditions such as uniaxial tensile tests, and constitutive modeling, where the responses to general loads, load paths and loading sequences must be described. Homogenization (or coarse graining) may be interpreted as describing the behavior of a material that is inhomogeneous at some lower length scale in terms of a (fictitious) energetically equivalent, homogeneous reference material at some higher length scale. On the other hand, the local responses at the smaller length scale may be deduced from the loading conditions (and, where appropriate, from the load histories) on the larger length scale. This task, which corresponds to zooming in on the local fields in an inhomogeneous material, is referred to as localization, downscaling or fine graining. In either case the main inputs are the geometrical arrangement and the material behaviors of the constituents at the microscale (Böhm 2013, pp. 3–4).

It is clear from this passage and from the discussion of Eshelby’s method of homogenization that the standard dialectic should be jettisoned in favor of a much more subtle discussion of actual methods for understanding the relative autonomy characteristic of the behavior of systems at large scales. This paper can be seen as a plea for more philosophical attention to be paid to these truly interesting methods.

Notes

- 1.

Without doing a literature survey, as it is well-trodden territory, one can simply note that virtually every view of emergent properties canvassed in O'Connor's and Wong's Stanford Encyclopedia article reflects some conception of a hierarchy of levels characterized by aggregation of parts to form new wholes organized out of those parts (O'Connor and Wong 2012).

- 2.

Some examples, particularly from theories of optics, where one can speak of relations between theories and models where no part/whole relations seem to be relevant can be found in Batterman (2002). Furthermore, it is worth mentioning that there are different kinds of models that may apply at the same scale from which one might learn very different things about the system.

- 3.

See (Laughlin and Pines 2007, p. 261), quoted above.

- 4.

Exceptions appear in some discussions of universality in terms of the renormalization group theory of critical phenomena and in quantum field theory. However, universality, etc., is ubiquitous in nature and more attention needs to be paid to our understanding of it.

- 5.

See Batterman (2013) for further discussion.

- 6.

This discussion follows (Christensen 2005, pp. 36–37).

- 7.

This is because the inclusion is of the same material as the matrix.

- 8.

Note that if the inclusion is empty, we have an instance of a porous material. Thus, these methods can be used to understand large scale behaviors of fluids in the ground. (Think of hydraulic fracturing, or fracking.)

- 9.

I too have been somewhat guilty of contributing to what I now believe is basically a host of confusions.

References

Ashby, M.F.: On the engineering properties of materials. Acta Metall. 37(5), 1273–1293 (1989)

Batterman, R.W.: The Devil in the Details: Asymptotic Reasoning in Explanation, Reduction, and Emergence. Oxford Studies in Philosophy of Science. Oxford University Press, Oxford (2002)

Batterman, R.W.: The tyranny of scales. In: The Oxford Handbook of Philosophy of Physics. Oxford University Press, Oxford (2013)

Böhm, H.J.: A short introduction to basic aspects of continuum micromechanics. URL http://www.ilsb.tuwien.ac.at/links/downloads/ilsbrep206.pdf (May 2013)

Oden, J.T. (Chair): Simulation based engineering science: an NSF Blue Ribbon Report. URL www.nsf.gov/pubs/reports/sbes_final_report.pdf (2006)

Christensen, R.M.: Mechanics of Composite Materials. Dover, Mineola, New York (2005)

Clyne, T.W., Withers, P.J.: An Introduction to Metal Matrix Composites. Cambridge Solid State Series. Cambridge University Press, Cambridge (1993)

Eshelby, J.D.: The determination of the elastic field of an ellipsoidal inclusion, and related problems. Proc. Roy. Soc. Lond. A 241(1226), 376–396 (1957)

Fodor, J.: Special sciences, or the disunity of sciences as a working hypothesis. Synthese 28, 97–115 (1974)

Laughlin, R.B., Pines, D.: The theory of everything. In: Bedau, M.A., Humphreys, P. (eds.) Emergence: Contemporary Readings in Philosophy and Science, pp. 259–268. The MIT Press, Cambridge (2007)

Nemat-Nasser, S., Hori, M.: Micromechanics: Overall Properties of Heterogeneous Materials, 2nd edn. Elsevier, North-Holland, Amsterdam (1999)

O’Connor, T., Wong, H.Y.: Emergent properties. In: Zalta, E.N. (ed.) The Stanford Encyclopedia of Philosophy, Spring 2012 edn. (2012). URL http://plato.stanford.edu/archives/spr2012/entries/properties-emergent/

Torquato, S.: Random Heterogeneous Materials: Microstructure and Macroscopic Properties. Springer, New York (2002)

Withers, P.J., Stobbs, W.M., Pedersen, O.B.: The application of the Eshelby method of internal stress determination to short fibre metal matrix composites. Acta Metall. 37(11), 3061–3084 (1989)

Copyright information

© 2015 Springer-Verlag Berlin Heidelberg

About this chapter

Cite this chapter

Batterman, R. (2015). Autonomy and Scales. In: Falkenburg, B., Morrison, M. (eds) Why More Is Different. The Frontiers Collection. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-662-43911-1_7

DOI: https://doi.org/10.1007/978-3-662-43911-1_7

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-662-43910-4

Online ISBN: 978-3-662-43911-1

eBook Packages: Physics and Astronomy (R0)