Abstract

This paper reviews the evolution of Seismology and Engineering Construction from the mid-1700s to the mid-1900s to understand the main accomplishments achieved. We then put into perspective the new advancements toward future mitigation of earthquake impacts, with a clear proposal for sustainability and the ecological challenge. We concentrate on the period 1755–1950 because this exciting period has received too little attention recently. Next, we jump to the present day to look into a few significant problems that require the involvement of the scientific, technical and political communities. In particular: (i) We look into the developments Intensity Scales should pursue to reduce uncertainties, since more than 20 years have passed since the last upgrade and the information from new events is now much more extensive and reliable than in the past. Several examples illustrate how the frequency of motion should be included in the main characteristics/categories (Building typologies and Vulnerabilities; Damage Grade; Quantity definition) and how it could be beneficial to add a few more descriptors to the Scale, namely shaking of objects and sloshing of water in containers. (ii) We analyse the lines of development to mitigate earthquake impacts and respond to present and future needs, concentrating on the new scientific results that are changing seismology from a “back-analyst” science (with an indirect contribution to earthquake engineering) into a more proactive one with a direct impact on risk reduction, such as Earthquake Early Warning Systems (EEWS) and low-cost instrumentation. Earthquake engineering, in turn, gains revolutionary health monitoring, both as a precautionary indicator of structural malfunction and as a rapid system for post-earthquake evaluation, complemented by citizen science. Finally, all these ingredients need to be merged into simple recommendations, and only data mining will be able to extract new reliable information from them.

Five highlights for the future:

- MEMS
- EEWS
- Performance-based design
- Field trips
- Citizen Science

All these new developments fall under the umbrella of Machine Learning.


1 Introduction

1.1 A Tribute to Nicholas Ambraseys

Nikos Ambraseys left the Seismological and Engineering community with a vast legacy in Engineering Seismology and Earthquake Engineering. The present work has many connections to some of his contributions. To start, I want to mention just a few of the most important contributions he made:

- The role of Nikos Ambraseys in Engineering Seismology.
- The contributions to Historical Seismicity in many regions of the World, particularly from Central Europe to the Middle East and the Far East.
- The countless field missions from which he could learn and interpret observations. As cited in Douglas et al. (2014), Ambraseys summarised the importance of field missions: “The site of a damaging earthquake is a full-scale laboratory from which significant discoveries may be made, by seismologists, geologists, engineers, sociologists, or economists, not to mention politicians”.
- The first steps to organise a worldwide Archive for Strong Motion Data. This was the first initiative to bring together data spread across various countries and entities.
- His post at Imperial College and the school of thought created there. It gathered brain power with the launch of Master Courses on Earthquake Engineering and Engineering Seismology.
- The Attenuation Curves: his several contributions at different times were essential, and his name is among the best known worldwide in this field.
- The sea-bed tsunami interpretation. This topic deals with the influence of large tsunamis on ships near the epicentral area, which can suffer from the direct acoustic P-wave. The subject has not yet been treated in detail.

The present review paper is organised into seven parts as follows:

1. Introduction
2. Earthquakes continue to devastate Humanity
3. Evolution of Seismology and Engineering Construction since mid-1700 until the mid-1900s
4. Main achievements in recent periods
5. Intensity Scales: how can we upgrade them
6. Changes in paradigm in Seismology and Earthquake Engineering & New lines for future developments
7. Final Considerations

After this Introduction, which starts with a tribute to N. Ambraseys, we look at the evolution of Seismology and Engineering Construction from the early 1700s until 1950 to understand the significant developments in those two fields of knowledge. We then put into perspective the new advancements towards future mitigation of earthquake impacts and look to society with a clear proposal in the direction of sustainability and the ecological challenge of the twenty-first century. We compare the evolution in time of Seismology and Construction against the occurrence of earthquakes, examine how they paved the way to the present, and consider the needs of future decades. We spend most of our time analysing the period 1755–1950 because this exciting period has not received enough attention. We then jump to the present day to look into a few significant problems that require the involvement of the scientific, technical and political communities to mitigate earthquake impacts in the real world. Finally, we speculate on which initiatives need to be addressed in the future. In particular, (i) we look into the developments Intensity Scales should pursue to reduce uncertainties. More than 20 years have passed since the last upgrade, and today the information is much more extensive and reliable than in the past. Several examples illustrate how the “frequency of motion” should be included in the main categories of the Scale and how it could be beneficial to add a few more descriptors to the Scale, namely shaking of objects and sloshing of water in containers. (ii) We analyse the lines of development to mitigate earthquake impacts and respond to present and future needs, concentrating on the new scientific developments that are changing Seismology and Earthquake Engineering into a more proactive science, such as:

- EEWS (Earthquake Early Warning Systems) and health monitoring, driven by powerful low-cost instrumentation (MEMS, Micro-Electromechanical Systems) and complemented by citizen science. The changes in Earthquake Engineering brought by revolutionary health monitoring, as a precautionary indicator of structural malfunction and as a rapid system for post-earthquake evaluation, will also be discussed.
- Performance-based design is a breakthrough in Earthquake Engineering, acting as a response to the significant uncertainties in all design processes, especially for new structures.
- Field missions, despite all new technological tools, remain an indispensable means to better understand the performance of structures, infrastructures, and mitigation practices.
- Finally, “Machine Learning” will extract new reliable information from all the advancements, merging all the ingredients into simple recommendations.

Because the text is long, each Section begins with a summary of the topics under discussion and ends with some of the main achievements, to ease reading and keep the goals in view. This causes repetitions here and there, but we think they are essential to convey the ideas. Critical comments or Notes are highlighted in Boxes.

This review paper, which delves into the history of earthquake science, is supported by many references. They were selected as necessary for the discussion and for the proposals made in the text, and as general references to complete the material covered. A few two-author references that became milestones are cited with both names. Many references are books that represent landmarks for the period examined in this work. The references not cited in the text are organised in "Appendix 1". A collection of images captured by a few video cameras is also mentioned, as such images are an essential tool for supporting some of the facts presented and discussed.

To reduce the size of the written material, some of it is simply enumerated and placed in Tables or Boxes with simplified accompanying text. We often transcribe the authors' text directly, in whole or in part, so as not to alter the meaning of the message.

1.2 Point summary of main items

1.2.1 Seismology

- Tentative explanation of the mechanisms of earthquakes from Chinese Times to mid-nineties.
- The Great Disasters of history. Statistics since 1700 in the face of population growth.
- The nineteenth century of field observations and interpretations. First instrumentation.
- The early twentieth century on Intensity scales.
- The significant advances in early 1950. The first World Networks of Seismological Stations.
- The digital era: 1980.
- Massive digital instrumentation of high quality.
- The strong motion networking.
- EEWS. Problems and future.
- MEMS and Citizen Science.
- Arrays and data mining.

1.2.2 Construction and earthquakes

- The first treatises of Vitruvius and Roman Construction.
- The new technologies in the Reconstruction of Lisbon after 1755.
- The Casa Baraccata.
- The Construction Encyclopaedia of Gwilt.
- The introduction of Steel in the nineteenth century.
- The outstanding construction accomplishments.
- The Introduction of Reinforced Concrete in the early twentieth century.
- Lessons from Great Disasters till mid 1950.
- The codes of practice.
- The Strong motion advancements.
- The Material Science.
- Base Isolation (rubber bearings & magnetic fluctuation).
- Conflicts of structural safety with patrimonial values, aesthetics, and comfort necessities.
- Health Monitoring.
- The use of Machine Learning and Data Mining.

1.3 A few needs for acting

- Unification of Scales → shaking, tsunami, acoustics; introduce frequency and amplitude as added parameters to get Intensities.
- Qualify and better quantify the terms of the descriptors, especially up to Intensity VI, to reduce uncertainties.
- Introduction of structural dynamics knowledge before assigning Intensities above VI.
- Use DYFI (Did You Feel It) enquiries as single vibratory data points. Use video cameras as an added value to describe the wave field.
- Reduction of uncertainties in several items: conversion of Intensities into strong-motion IMs.
- Codes and quality control. Dignify the Profession of Engineer.
- Policies for massive retrofitting of non-conforming construction.
- Education. Public perception.
- Reverse the paradigm of earthquakes as disasters into earthquakes as a sustained challenge.

2 Disasters keep on causing massive destruction

Summary

Unlike other natural events, earthquakes do not increase their pace, but in some regions energy dissipation takes a very long time to occur.

Different catalogues show similar results in terms of inter-arrival times. Economic losses, however, have become available only recently, and even nowadays they are very difficult to assess accurately, especially when indirect losses are included.

The number of victims per million inhabitants has fallen significantly in the last decades, while the economic impact normalised by population and GNP is almost constant.

There are various indicators to introduce a metric in impacts. We discuss this topic, including multiple entries and using the concept of multi-hazard associated with earthquake occurrences.

Earthquakes belong to the class of natural hazards tied to recent (on the scale of millions of years!) geological evolution. They are probably the oldest of these hazards, contemporary only with meteorites or temperature.

They are a phenomenon of the release of energy accumulated inside the Earth's Crust. Tectonic plate motion has caused this accumulation for many millions of years and will continue to do so as long as geological processes remain as they were in recent geological epochs. Earthquakes are part of the Earth’s evolution, and there is no way to stop their occurrence for millions of years to come. Human generations have recorded their effects ever since they could transmit this information to younger generations. Earthquakes are marked in the first objects humans produced, from tombs to ceramic vases used to keep food, or ornaments left as human traces in cemeteries. But large earthquakes also marked the landscape before humankind, and these marks can be read with some accuracy with the help of Archaeo-seismology. This modern science can analyse periods longer than those covered by historical human witnesses, observing the movements of objects or “strange deposits” caused by tsunamis that left their “signature” in nature, and linking these phenomena to earthquakes/tsunamis. Nowadays, science can use many tools to obtain information for periods with poor oral or written communication (historical seismicity) or before humankind. Archaeo-seismology has opened great avenues to look into the past, which are very useful for anticipating the future. In fact, in many places, significant events occur with return periods longer than several civilisations, and humanity loses the memory of them. Science has come to understand these rare, high-impact events better in the last two centuries. Even descriptions of recent earthquakes are doubted by many scientists, who do not believe descriptions without an associated number. The 1755 Lisbon earthquake is one of the events with which science started changing its attitude towards historical information, no longer rejecting entirely what was said in previous epochs.
This earthquake is full of new indications which no one can now ignore: liquefaction a few hundred km away, rivers spilling over their banks, seiches observed in lakes at great distances, and chandeliers oscillating thousands of km away. Even nowadays, some phenomena beyond what instruments can record are neglected. In an enquiry among senior people who remember well the effects of the 1969 San Vicent M7.9 earthquake in SW Iberia, respondents state explicitly that the waves were accompanied by strong noises coming from underneath them and that the sky suddenly became illuminated. Another example: after the Sumatra 2004 event, the rescue teams knew when the next aftershock was arriving because they felt nauseated a few moments before the shaking arrived. This is to say that scientists should be humble enough to use all signals presented as observations, some already reported in ancient treatises, to understand the complex process of occurrence, wave propagation and the corresponding effects on our environment.

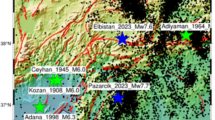

To keep the reader attentive to the real world of the earthquake “drama”, we show in Fig. 1 a collection of 6 moments of great importance that illustrate the various ways in which earthquakes express their effects: (a) The effect of fault rupture right over its trace, and the consequences for four similar buildings located around the fault trace. In terms of simple physics, two of them collapsed in opposite directions, consistent with the right-lateral fault motion, and a third building, sitting on the fault trace, was cut vertically. (b) The damage to a late 19th-century bridge, reported very well by J. Milne. (c) A landslide, showing how shaking can have “indirect” tragic consequences due to poor or non-existent land-use policies. (d) Non-structural elements (in-fills), which can be highly disruptive if not adequately treated. (e) Fire, another “indirect” effect that has accompanied shaking for many centuries and may cause more impact than the shaking itself. (f) Tsunami, which cannot be treated separately from shaking; only very recently has the “multi-hazard” concept been considered in this analysis. (g) We left for the end the images of the recent Haiti earthquake of August 15, 2021, to show how earthquake occurrence persists, with two different memories. One is the unique correlation of events occurring in different segments of the same fault, separated by a period of 11 years: the August 15, 2021, Haiti M7.2 event, a repetition of the 2010 Haiti earthquake that caused 200,000 victims and great destruction, added up to 2500 victims, 12,000 injured, and many more homeless. The second memory, attesting that we cannot eradicate the problem, is that we see the same type of damage in both events, denoting that rehabilitation should take place even in developing countries to avoid similar consequences.

2.1 Earthquakes as part of the Recent World Disaster Panorama

It is well known that several practicalities accelerate the poor behaviour of certain types of construction. To mention cases where “doubts” are not present, we describe a few accounts with varied performances:

- In masonry structures up to 3–4 storeys high, walls tend to fail through an “out-of-plane” mechanism, causing sizeable economic impact but a reduced number of victims inside.
- In reinforced concrete structures with soft storeys, the tendency is for the 1st floor to collapse, probably leading to a “pancake mechanism” with significant impact in human and economic terms.

Furthermore, it is well settled that:

- Construction that followed codes of practice and was subjected to good inspection tends to perform much better than construction built without any attention to construction rules.
- Earthquakes keep on causing significant impacts (human and economic) in societies not prepared to deal with them.
- In the case of mega-quakes, even societies that are more resilient to earthquakes are heavily impacted, especially by indirect and cascade effects.

These examples are good indicators that science and technology know much about earthquakes and how to mitigate their effects.

However, the whole panorama of catastrophes is quite different. “The world lost as much as $232bn US due to natural disasters in 2019, with India leading in casualties with 1,750 deaths, said a recent report by AON (https://www.aon.com/risk-services/professional-services/default.jsp). According to the report Weather, Climate Catastrophe Insight for 2019, Cyclone Fani was one of the top 10 disasters of 2019, affecting Andhra Pradesh and Odisha, apart from Bangladesh.”

Similar news appears every year as a result of natural phenomena worldwide that negatively affect human life and result in disasters; it is reported by the various entities that nowadays run disaster databases. We can cite reinsurance companies like Munich RE, Swiss RE and MAPFRE RE, or entities interested in studying the epidemiology of worldwide events, such as UNDRR, USGS or EMDAT, where the CATDAT damaging-earthquake data resides. GEM is also a partner that has developed many tasks in this area.

Re-insurance companies like those referred to above are very interested in impact data from natural and technological (NaTech) events because of their obligation to reimburse in case of damage. They can only survive because they deal with a variety of events and their clients are spread across all continents. This diverse portfolio lets them transfer debts from one place to another and honour their contracts. A single country with non-stationary occurrences could never play this role. However, not all assets are covered by insurance. According to Munich RE (2021), total losses resulting from natural events since 1980 sum up to $5,200 bn US; more than 70% of this total was not insured. The 2011 Tohoku earthquake in Japan was the largest ($210 bn US). Hurricane Katrina, which hit New Orleans in August 2005, was the costliest insured event, with a total of $60.5bn US (original values).

Other data banks have been gathering data continuously since 1970, and their information is already critical because it covers the last 50 years. Catalogues assembled per event for periods before 1970 will be analysed later on. These catalogues are of the utmost importance for understanding the historical evolution of earthquake events and are an excellent base for extrapolating to the future. Of course, historical catalogues cannot provide information as reliable as the new databases, but they are essential because they cover much longer periods.

We are interested in understanding if earthquake events in the World are keeping their stationarity or if there is any incremental modification as has been happening in climate-change-related events. “Globally, a slightly increasing trend in economic damage due to earthquakes is not consistent with the great increase of exposure”. Why is that?

Extending the period of analysis back to 1900, the most significant economic impact is that of the 1923 Great Kanto (Japan) earthquake ($214 bn US), of the same order as the 2011 Tohoku (Japan) earthquake (> $300 bn US) (both values adjusted to 2011 $US market values). The other important events are shown in Fig. 2.

This Figure gives us several indications on how to present the impact of earthquakes by showing the magnitudes, the number of victims and the losses inflicted, and their significant variations from event to event. This raises the question of which indicators are most adequate for measuring the impact of earthquakes. Are world policies and the various international initiatives helping in Losing Less? Or, on the contrary, is the problem a random lottery that no one can predict?

This section will look into the problem of occurrence from a Worldwide and European perspective and look for indicators to predict the impact of earthquakes.

Before advancing, we should have a word on Impact Indexes.

Several indexes can be related to disaster activity. Some are local, others regional or global. The most important is the impact on humans (victims, heavy injuries, homeless), followed by the impact on the economy and the social fabric (number of property losses, indirect losses, intangible impacts). The latter are very difficult to measure; only a qualitative analysis can be made. For almost all of them, the epoch of the event is the crucial parameter for judging the accuracy of the estimated values. In modern times, all numbers are available, even though uncertainties may be larger in certain countries or regions, depending on the state of development of their societies. The number of casualties is the most accessible parameter, unless the affected region is subject to mass movements of people due to social conflicts (wars, migrations, refugees, etc.). The economic parameters are always more challenging to obtain because the value of a currency changes over time.

Market values change over the years due to inflation and other factors. For comparisons among regions and epochs, besides total values, it is essential to normalise the results by the population and the GNP (at the time) of the geographical unit under analysis. Normalising an impact over an entire country or region is not the same as normalising over only the affected area. As mentioned before, the normalisation should use values from the same time, preferably an epoch not too distant from the occurrence. The economic market changes considerably over time, so presenting ratios (Costs/GNP) computed with values from different times is not correct. If we want to accumulate economic values, we always have to correct for inflation before adding them. As general information, only after 1900 can loss accounts be considered quantitative, and only after 1950 did these numbers become more accurate. Before 1900, with a few exceptions, only vague descriptions of economic impacts are available.
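The normalisation rules in the paragraph above can be sketched in a few lines of Python. This is only an illustrative sketch: the `EventLoss` record, the function names, the price index and all numbers are hypothetical and are not taken from any catalogue discussed in this paper. It shows the two points made in the text: ratios use the GNP and population of the event year, and losses are added only after conversion to a common price level.

```python
from dataclasses import dataclass


@dataclass
class EventLoss:
    name: str
    year: int
    loss: float        # direct loss, in currency units of the event year
    gnp: float         # GNP of the chosen geographical unit, same year and currency
    population: float  # inhabitants of the same geographical unit, same year


def loss_to_gnp(ev: EventLoss) -> float:
    """Loss/GNP ratio, with both values taken at the time of the event."""
    return ev.loss / ev.gnp


def loss_per_million(ev: EventLoss) -> float:
    """Loss per million inhabitants at the time of the event."""
    return ev.loss / (ev.population / 1e6)


def to_reference_year(amount: float, year: int, ref_year: int, price_index: dict) -> float:
    """Rescale a nominal amount to ref_year prices using a price index."""
    return amount * price_index[ref_year] / price_index[year]


def cumulative_loss(events, ref_year: int, price_index: dict) -> float:
    """Add losses only after converting each one to ref_year prices."""
    return sum(to_reference_year(e.loss, e.year, ref_year, price_index) for e in events)


# Hypothetical numbers, for illustration only.
price_index = {1980: 50.0, 2011: 100.0}
event_a = EventLoss("event A", 1980, loss=10.0, gnp=500.0, population=50e6)
event_b = EventLoss("event B", 2011, loss=200.0, gnp=5000.0, population=100e6)

for ev in (event_a, event_b):
    print(ev.name, loss_to_gnp(ev), loss_per_million(ev))
print("cumulative, 2011 prices:", cumulative_loss([event_a, event_b], 2011, price_index))
```

Whether the geographical unit is a whole country or only the affected area is a choice made when filling in `gnp` and `population`; the sketch does not settle it.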

Besides the total and normalised values, a few other indexes are appropriate to mention.

The “vulnerability ratio” used to make the distinction between “developed” and “developing” countries can be defined as the number of fatalities (× 10⁶) divided by the $US damage cost (Vranes, personal communication 2009). The vulnerability ratio for developed countries lies in the range 0.01/$−0.03/$, while for the developing countries, the ratio is three orders of magnitude worse, in the range 2/$−27/$ (Bilham 2009).
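As a worked illustration of this definition, the short sketch below computes the ratio as fatalities multiplied by 10⁶ and divided by the damage cost in $US, and compares it with the two ranges quoted above. The function names and the two example cases are hypothetical; treating the quoted ranges as closed intervals is an assumption made only for the sketch.

```python
def vulnerability_ratio(fatalities: float, damage_usd: float) -> float:
    """Fatalities (x 10^6) divided by the damage cost in $US."""
    if damage_usd <= 0:
        raise ValueError("damage_usd must be positive")
    return fatalities * 1e6 / damage_usd


def quoted_range(ratio: float) -> str:
    """Compare a ratio with the ranges quoted in the text (assumed closed intervals)."""
    if 0.01 <= ratio <= 0.03:
        return "developed"
    if 2.0 <= ratio <= 27.0:
        return "developing"
    return "outside quoted ranges"


# Hypothetical cases, for illustration only: (fatalities, damage in $US).
cases = {"case 1": (20, 1e9), "case 2": (5000, 5e8)}
for label, (fatalities, damage) in cases.items():
    ratio = vulnerability_ratio(fatalities, damage)
    print(label, ratio, quoted_range(ratio))
```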

In recent times, a new composite index was proposed to evaluate the status of a region, the so-called HDI (Human Development Index), considered the leading indicator for the vision of “development as freedom”; it is a Composite Index, based on three dimensions (income, health, and education) (Mariano et al. 2021).

In a brief account of the parameters available through the ages, from ancient times to the present day, the first observations of earthquake impacts come from archaeo-seismology, with sedimentary evidence of tsunamis dating back a few thousand years BC. This information is precious but only gives us, with a significant level of uncertainty, the possible time of the event and how large it might have been. The errors in the sources are enormous. The first Historical Catalogues are more accurate on time and less so on impact information. After the 1755 Lisbon earthquake, data were transmitted better and with less uncertainty. It is curious to note that for that event the uncertainty in human losses is still significant, between 8000 and 20,000 according to the most recent studies, reaching a maximum of 10% of the Lisbon population at the time. In contrast, the attributed economic impact is in the range of 30–50% of the GNP (at the time). By the end of the nineteenth century, the information became more precise, as the detail of observations attests: the October 28, 1891, Japan earthquake caused 10,000 deaths, 20,000 injured and 130,000 houses destroyed, as Milne (1911a) corroborates.

As catalogues became more complete, other parameters came into play over the last 120 years. Since the end of the twentieth century, with the creation of customised data banks, initially pushed by the re-insurance companies and then by agencies dedicated to disaster studies, the information has become more complete in terms of human and economic impacts.

Looking now at the world’s natural disasters from 1970 to 2020 (Fig. 3), we see that earthquakes are not among the most frequent natural events compared to “climate-change” events. Still, they cause more deaths (58%) over a given period of time (Fig. 4). Comparing two periods of 20 years, 1980–1999 and 2000–2019, we also see that the total number of global events increased (74%), the number of deaths remained almost constant, the affected population increased (23%), and the economic losses increased (82%).

Natural disasters in the last 50 years (by type) (EMDAT 2020)

a Number of deaths per type of disaster for the period 2000–2019; b Comparison of impacts in the two sequential periods, 1980–1999 and 2000–2019 (From CRED/UNDRR 2020)

Returning to Fig. 3, floods and extreme weather are the most common events; other climate-change events are responsible for 80% of total events. Earthquakes take only a 10% share but with significant impacts. After a peak in 2002, the number of events dropped.

In Fig. 5, we can see how the number of damaging earthquakes has related to HDI since 1900. Whereas in the early twentieth century most damage occurred in low-HDI countries, towards the end of the century destructive earthquakes affected countries with moderate-high HDI and higher (Daniell et al. 2011). Analysis of CATDAT, a catalogue free of bugs prepared by Daniell et al. (2012), shows that damaging earthquakes and their secondary effects (tsunamis, fire, landslides, liquefaction and fault rupture) account for a significant share of the total damage inflicted worldwide since the mid-1900s.

Damage inflicted by earthquake activity since 1900 (HDI- Human Development index) https://gfzpublic.gfz-potsdam.de/pubman/item/item_245308

Analysing the statistics of the last 120 years on the number of victims and economic losses (Fig. 6), we observe that the number of victims per decade per million inhabitants is steadily decreasing. In contrast, financial losses increase exponentially due to increasing exposure and market value. However, if we normalise by GNP, the situation is better, and even better if we also normalise by population. If we look in more detail, we see that the 120 years should be separated into two periods, 1900–1970 and 1970–2020. Over the last 50 years, the normalised economic losses have been almost constant, probably meaning that mitigation policies are producing some results.

It is also interesting to verify an almost perfect correlation between Economic Losses/GNP and Human Losses/Inhabitants (Fig. 6d).

In a recent study, Dollet et al. (2021) proposed that the number of victims and total losses inflicted by earthquakes be normalised by the region's total population and GNP enclosed in the isoseismal V (EMS-98). For the last 50 years, the authors conclude that the evolution of the numbers suggests a steady decrease with time. These values agree with the global world numbers of Fig. 6 for the victims but not for the economic losses, which still show a plateau in the last 50 years.

The answer to the question “is the worldwide seismicity stationary in time?” is yes. Based on the excellent data from the last 50 years shown in Fig. 7, the number of events per year over the period 1970–2020, disaggregated by magnitude range (M > 8; 7.9 > M > 7.0; … 4.9 > M > 4.0), is very stable. The graph (M, λ) shows that the fitting curves are almost horizontal and vertically offset from one another by about a factor of 10. The shorter records for the lower magnitudes, which cover only the more recent epochs, are a consequence of the gradual development of world seismographic networks and of the earlier lack of worldwide detection capability for those magnitudes.

World Seismicity 1970–2021 disaggregated by magnitude range (https://en.wikipedia.org/wiki/List_of_earthquakes_in_2021)

Data in this figure can be extended to older epochs, and in that case, the class M > 8 can be disaggregated to other narrower classes like 8.9 > M > 8.0 or even more subdivided.
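One simple way to check the stability of the annual counts per magnitude class described above is to fit a straight line to log10(count) against year for each class: a slope close to zero indicates a stationary rate, and the difference between the intercepts of adjacent classes gives their vertical offset in orders of magnitude. The sketch below is only one possible check, not necessarily the fitting used for Fig. 7, and the yearly counts are synthetic values invented for the example.

```python
import math


def linear_fit(xs, ys):
    """Ordinary least-squares line y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def log_rate_trend(years, counts):
    """Fit log10(annual count) versus year, skipping years with no events."""
    points = [(y, math.log10(c)) for y, c in zip(years, counts) if c > 0]
    if len(points) < 2:
        raise ValueError("need at least two years with non-zero counts")
    xs, ys = zip(*points)
    return linear_fit(xs, ys)


# Synthetic annual counts for two magnitude classes (illustration only).
years = list(range(1970, 1976))
counts_by_class = {
    "7.0-7.9": [14, 16, 15, 13, 17, 15],
    "6.0-6.9": [140, 155, 150, 135, 160, 150],
}

for label, counts in counts_by_class.items():
    slope, intercept = log_rate_trend(years, counts)
    mean_log = sum(math.log10(c) for c in counts) / len(counts)
    print(label, "slope per year:", slope, "mean log10 rate:", mean_log)
```

A slope expressed in log10 units per year is easy to read: a value of 0.01, for example, would correspond to an increase of roughly 2.3% per year in the annual count.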

Before finishing this topic, we present the more significant events in West-Central Europe for the last 60 years, with an average interval of 6 years per event (Table 1). In earlier periods the geographic pattern is different, indicating that short periods cannot represent the seismicity of a region.

Italian earthquakes dominated the last 60 years of earthquake activity in Europe, even though magnitudes did not surpass M7.0. To appreciate the difficulty of assessing an earthquake’s economic losses, compare Fig. 8 (Dolce and Bucci 2017; Dolce et al. 2021) with Table 1 (author compilation). The same difficulty applies to significant World earthquakes (Tables 1 and 2). Differences of a factor of 3 or more are present (see Irpinia 1980), so only tendencies can be considered when analysing economic impacts.

Economic impact of the last 50 years in Italy (Dolce et al. 2021)

Keeping in mind all these limitations, losses as a percentage of GNP range from 0.3% to 20%, but in most cases the value does not surpass 2%. Note that the low values assigned to the earthquakes in the former Yugoslavia derive from the fact that we took the GNP of united Yugoslavia.

In summary, among all natural hazards occurring in the European region, “earthquakes lead to the highest number of fatalities and, after severe storms, cause the second-highest annual economic losses. From 2006 to 2015, Europe experienced 21 earthquake-related disasters that resulted in 1049 fatalities, more than 18 billion Euros in economic losses, and affected 284,000 people. Fortunately, in the last years, risk awareness and perception towards seismic threats have increased among the public and policymakers in a few European countries”. However, much more should be done. If citizens become more educated and aware of what natural hazards they are likely to face in their communities, they can press authorities to implement preventive and protective measures.

In 1972–1990, worldwide financial losses went up to 40% of GNP (Coburn and Spence 2002), showing how vital this impact may be to a country’s economy. Again, the numbers are approximate (Table 2) due to the difficulty in simultaneously assessing the losses and GNP.

2.2 Historical seismicity before 1900

In contrast to the difficulty in advancing explanations of the origin of earthquakes, earthquake catalogues recording the date and the size of the events have been common practice since ancient times. Many experts have dedicated their lives to looking for old events and organising them in a sequential form.

2.2.1 Differences in the syllabus

The nomenclature for “earthquake” is quite varied: seisms, quakes, “terramotos”, “terremotos”, “tremblement de terre”, séisme, “terræ motu”, “tremor”, “tremuoti”, sismo, “tranblemann tè” and earthshaking. Except for “tremor”, all are synonymous; however, some are used more than others depending on the context.

-

The word earthquake (sismo) comes from the Greek (Σεισμός) and is the most used word in Science. Sometimes the term “seismic event” is associated with it. “Terramoto” is used more in Social Communication. “Terremoto” is identical but used in Spanish, Italian or Brazilian Portuguese. It was also the form in Old Portuguese. “Terræ motu” is the Latin expression used in Hungary when the first isoseismal was presented. There are also dialects or translations in various languages.

-

“tremor de terra” is an earthquake that has the most significant impact on society, as it causes victims and damage to the built stock.

-

Tremor is used in conjunction with “seismic” or earthquake shaking.

Other expressions have been created recently, such as “mega-quakes” (high-impact earthquakes) or “seismic swarm” (a massive series of small earthquakes occurring in a localised area, related to volcanic activity). We could still add the "micro-earthquakes", which are "quakes" of very small magnitude that may precede a larger earthquake.

Earthquakes are linked to tectonic, volcanic, or "induced seismicity". The latter comes from anthropogenic loading of some expression of the Earth's Crust, as derived from filling reservoirs or opening underground galleries. An example of this case is the earthquakes in recent years in Groningen, Holland, due to the settlement in the underground gallery system constructed as a defence during the 2nd World War.

Other vibrations caused by explosions, bursts, major collapses of structures, etc., are not called earthquakes but seismic noise or "cultural noise", always present on the Earth's surface and captured by very sensitive stations. It comes from urban traffic, wind, waves hitting coastal areas or even temporary anthropogenic activities.

The word tsunami comes from the Japanese 津波; a tsunami is also named “maremoto”, harbour wave or tidal wave. Several causes other than fault rupture in the ocean can produce a tsunami. In southwest Asia, there are several local expressions to designate the tsunami.

2.2.2 Catalogues and their importance

Fortunately, a few Earthquake Catalogues exist for Historical times. They should be carefully analysed, using modern advancements to allow cross-correlation of information. This information has been gathered thanks to the efforts of many experts in seismology, history, geology, etc., and has served to determine the main characteristics of ancient events.

Historical catalogues have been produced by individuals, namely Aristotle, who became one of the first to launch a world catalogue. In China, catalogues of the region had been prepared by the first or second century AD, and the first seismoscope was designed in 132 AD. World catalogues appeared in the eighteenth century. Figure 9 shows one published in 1722 by the Portuguese Engineer Manuel de Azevedo Fortes (Repositório 1722). Later, right after the Lisbon earthquake of 1755, the Universal History of Earthquakes was published in 1758 (Moreira de Mendonça 1758) (Fig. 10).

In the nineteenth century, several new compilations of World earthquakes were made, namely by R. Mallet and his son J.W. Mallet (1858). Mallet estimated that 13 M people were killed by earthquakes over the 4000 years until 1900, leading to 0.35 M casualties/century, whereas in the twentieth century there were 1.5 M casualties/century. To put these numbers in perspective, note that from 2000 BC to the present the World population increased from \(30 \times 10^{6}\) to \(7 \times 10^{9}\).

From 1885 to 1907, Montessus de Ballore (1911) compiled 170,000 earthquakes, giving an average of about 0.77 M events/century (170,000 events in 22 years). According to our 2021 Catalogues, this means that he was capturing M > 4.5 events Worldwide.

A considerable number of Catalogues emerged in the late twentieth and twenty-first centuries, concentrated in some regions or made country by country. To name a few sources, for Europe, we should refer to Karnik (1971), who published the Earthquake Catalogue 1801–1900, van Gils and Leydecker (1991), Stucchi et al. (2017) in the framework of EU Programs NERIES, REAKT, SERIES, NERA and SERA, accessed in SHEEC Catalogue (Stucchi et al. 2013), and the compilations made in the framework of GEM for the World (Pagani et al. 2015), the ISC-GEM and the USGS (2021). Catalogues and statistics are also summarised in https://en.wikipedia.org/wiki/List_of_historical_earthquakes. A database of damaging small-to-medium magnitude earthquakes (Nievas et al. 2020) with data since 1900 has been compiled to analyse frequent events responsible for non-negligible impacts. The authors describe all problems in getting accurate data, especially when one wants to disaggregate the information into many categories.

Some statistics

For Europe

Citing Karnik (1971) from the Earthquake Catalogue 1801–1900 complemented to 1900–2020, the following statistics can be observed:

-

Last 60 yr: 55 events with I0 > VII (Europe), about one event per year.

-

In 1900–2000, 27 tsunamis were of some importance: an inter-arrival time of 3.7 years per event, or 0.27 events per year. In 2000–2020 there were 21, about 1 per year. There has been quite an increase in events in the twenty-first relative to the twentieth century.
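The rates and inter-arrival times quoted in this list follow from dividing an observation span by an event count. A minimal sketch of that arithmetic, using only the counts and spans stated above (the function name `occurrence_stats` is ours, introduced for illustration):

```python
def occurrence_stats(n_events, span_years):
    """Return (events per year, mean inter-arrival time in years)."""
    if n_events <= 0 or span_years <= 0:
        raise ValueError("n_events and span_years must be positive")
    rate = n_events / span_years
    return rate, 1.0 / rate


# Counts and spans as quoted in the text
cases = {
    "Europe, I0 > VII, last 60 yr": (55, 60),
    "Tsunamis, 1900-2000": (27, 100),
    "Tsunamis, 2000-2020": (21, 20),
}

for label, (n, span) in cases.items():
    rate, inter_arrival = occurrence_stats(n, span)
    print(f"{label}: {rate:.2f} events/yr, one every {inter_arrival:.1f} yr")
```

Such averages say nothing about clustering in time; they only summarise the counts over the chosen window, which is why the choice of window matters so much in the comparisons above.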

From other Catalogues, it is possible to observe various numbers of importance: The Euro-Mediterranean Earthquake Catalogue for the last millennium (EMEC) (Grünthal et al. 2012) (Fig. 11), shows that the Gutenberg–Richter (1954) law of occurrences (G–R), with a slight downward curvature, predicts for Europe one earthquake for M > 8.5 and 20,000 for M > 4 in a 1000 year period.

The most important earthquakes in Europe in the twentieth century, Mw > 7.7 (ISC-GEM Global Catalogue: 1904–2017; in Marreiros et al. 2021)

And for the last 115 years (Fig. 11), we only see 6 events with Mw > 7.7 in the southern part of Europe. Maximum intensities vary dramatically for similar magnitudes due to the very different epicentral distances involved.

For the World

As already mentioned, there are a few World-catalogues.

Table 3 presents the most significant earthquakes of the last millennium, those with more than 50,000 victims.

-

37 earthquakes M8.5 + since 1500—Inter-arrival of 13.5 years, or 0.074 events per year for MegaEarthquakes (see Table 3)

For Mega Earthquakes

-

In the twentieth century, one M8+ earthquake per year.

-

24 earthquakes since 1000 AD with more than 50,000 deaths each.

To refer to the initiatives with the most significant impact, we can mention the efforts made in the late 1900s by Gere (1983) for the World and separately for the American areas, Japan, China and Taiwan. An excellent example of studying historical seismicity is Guidoboni and Ebel (2009). One of the most exciting compilations made for the World, covering the last 4000 years, was published by Dumbar et al. (1992) and treated in detail by Bilham (2004, 2009). Among many other statistics that Bilham (2009) presents on earthquake events and population evolution, we show in Fig. 12a) the number of fatalities per event in the period 1500–2004. For this period, we can conclude that an event with almost 1,000,000 victims has occurred only once, events with 100,000 victims 35 times and events with 10,000 victims 300 times. This figure has some similarities to the G–R plot if we replace fatalities by magnitude (see Fig. 12b).
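The similarity to a G–R plot can be checked roughly by fitting a straight line, in log-log space, to the three counts quoted above. The sketch below is an illustration only: it uses just those three points (not Bilham's full data set), and the ordinary least-squares fit is our choice, not a method taken from the source.

```python
import math

# (fatalities per event, number of occurrences in 1500-2004), as quoted in the text
points = [(10_000, 300), (100_000, 35), (1_000_000, 1)]

xs = [math.log10(f) for f, _ in points]
ys = [math.log10(n) for _, n in points]

# Ordinary least squares for log10(N) = intercept + slope * log10(F)
n = len(points)
mean_x = sum(xs) / n
mean_y = sum(ys) / n
sxx = sum((x - mean_x) ** 2 for x in xs)
sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
slope = sxy / sxx
intercept = mean_y - slope * mean_x

print(f"log10(N) = {intercept:.2f} + ({slope:.2f}) * log10(F)")
```

A negative slope of order one, with the count falling by roughly a decade for each decade of fatalities, is the same kind of power-law decay that the G–R law expresses for magnitudes. With only three points the fitted value is indicative at best.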

Figure 13 presents the World statistics of events with M > 8.2 for the last 115 years. It becomes clear that only one event with M > 9.4 has occurred, and the other bins fit the G–R law.

Distribution of World magnitudes in the last century, per classes (data from Table in Hayes et al. 2020)

From all data presented for the last 120 years, it is possible to prepare Table 4 with the World record of occurrences in terms of the number of events/yr or the inter-arrival time. The World rate of occurrence for M < 8.0 is widely known, with information disaggregated by integer magnitude values. With the above numbers we can also disaggregate for M > 8, namely M > 8.3, M > 8.7, and M > 9.4. The estimation of the last numbers was only possible with the help of the Catalogues of more significant earthquakes since 1000 AD, because from Fig. 12a) the prevalence of large magnitudes is not stationary in 1900–2020. It is interesting to note that events like the 1960 Valdivia, Chile, earthquake (M9.4+) occur once every century.

The values of Table 4 can be predicted from equation \({T}={10}^{-b({M}_{0}-M)}{T}_{0}\), where the parameters are taken from G–R law (Chang 2021).
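As an illustration of how this relation scales inter-arrival times, the sketch below evaluates \(T = 10^{-b(M_0 - M)} T_0\) for the magnitude classes named above. The values b = 1.0, M0 = 8.0 and T0 = 1 yr are assumptions chosen for illustration (T0 loosely reflects the roughly one M8+ event per year quoted earlier); they are not the parameters behind Table 4, and the function name is hypothetical.

```python
def inter_arrival_time(m, m0, t0, b):
    """Inter-arrival time T for magnitude threshold m, from T = 10**(-b*(m0 - m)) * t0."""
    return 10.0 ** (-b * (m0 - m)) * t0


# Assumed illustrative parameters (not taken from Table 4)
B_VALUE = 1.0   # G-R slope
M0 = 8.0        # reference magnitude threshold
T0 = 1.0        # inter-arrival time at M0, in years

for m in (8.0, 8.3, 8.7, 9.4):
    t = inter_arrival_time(m, M0, T0, B_VALUE)
    print(f"M > {m:.1f}: one event every {t:.1f} yr")
```

Because the relation is exponential in magnitude, a small change in b or in the reference point changes the result for the largest classes considerably, so the output should be read as a demonstration of the scaling only.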

The numbers presented in Table 4 are different from the ones discussed by Hough (2013), who, based on the NGDC Catalog (1994), refers to the incompleteness of the more significant events for periods before 1900 (Fig. 14). Missing events, which other techniques (including the study of large tsunamis) might identify, are critical to hazard studies and may reduce the odds for occurrences.

Timeline of Mw > 8.5 for the World seismicity in the period 1700–2012 and running average (top) with moving window of 20 years (after Hough 2013)

2.2.3 The role of palaeo-seismology and archaeo-seismology in extending the historical information to older periods

As already mentioned, palaeo-seismology and archaeo-seismology can extend the period of historical information for ancient earthquakes, essentially obtained from tsunami sedimentation, back to 6000 or more years BC with the presently available technology. Silva et al. (2015), studying the ancient earthquakes occurring in coastal areas of the South and SE of the Iberian Peninsula, found three possible tsunamis (218 BC, AD 40–60 and AD 1048) and made use of “seismic palaeo-geography” to confirm the approximate dates of those events. Another example is Baptista and Miranda (2009), who estimated tsunami events for the SW of the Iberian Peninsula back to more than 8000 years ago, leading to a time interval of 400 years between large events (Table 5).

Archaeomagnetic data recovered from the study of Celtiberian remains from Central Spain help analyse the fidelity of palaeo-intensity data on ceramic pottery (Gomez-Capera et al. 2016) through some information on the reconstruction (orientation) of the magnetic field in the first millennium BC. This may be a good signal for dating archaeo-seismological sites. The above values can constrain the time intervals for the huge events in the SW of Continental Portugal.

In a recent study, Salazar et al. (2022) found geoarcheological evidence of a tsunamigenic earthquake ≈3800 years ago with origin in northern Chile. They assigned it Mw 9.5 because of the enormous perception area. The source area corresponds to one of the major gaps of the planet, emphasising the need to account for long time scales.

Other relevant information may come from dendro-hydrological analyses of tree rings, which may give clues to earthquake events due to changes in water content after shaking. Trees ranging in age from 300 to 500 years grow in many places and can identify previously unknown seismic disturbances or better define partially known events (Jacoby 1997). A good case study is related to the 1812 New Madrid events.

2.2.4 Resolving Uncertainties on the location of ancient ocean events with Tsunamis modelling

Back-analysis studies have been of great importance in determining the epicentre of offshore earthquakes that trigger tsunamis. This applies to historical earthquakes for which intensities alone rarely produce good results. From the beginning of the eighteenth century, historical records are clear enough to assess the times of arrival of tsunami waves and the approximate amplitude of inundations. In addition, information on the polarity (run-up and rundown) is available in many coastal regions. Back analysis has been performed by Backward Ray Tracing, Forward simulations or Inversion of tsunami waveforms. Results are promising in approximating the location of the epicentre, with errors of at most about 0.5°, depending on how significant the event is and on the number of data points in the coastal regions. These methods give much better-constrained solutions than working with inland intensity points far from the epicentral area and, most of the time, covering a short azimuthal aperture. This is the case for SW Iberia, a region with large seismic activity and a few tsunamis since 1700. Using this technique it is possible to locate the more significant offshore events with great precision. We should recall 1722, 1755, and 1761 as significant successes (Baptista 2020, Fig. 15).

Overview of the study area. Beach balls represent the focal mechanisms of the instrumental events—1941, 1969 and 1975; orange stars represent the presumed epicentres of the historical events 1722, 1755 and 1761. White dashed lines follow main geological lineaments. GS Gibraltar Strait, CP Coral Patch Seamount, GB Gorringe Bank

2.3 Risk matrices

Citing Aven et al. (2017), risk problems are often complicated and multi-faceted, requiring simplifications on how risks are described and used in communication and decision-making processes. The tools used to do this include different risk matrices, ranking, rating and scoring metrics. Risk scoring and ranking systems range from simple risk indicators to more complex characterisations that consider other relevant aspects necessary for decision making, including costs and ethical concerns.

Depending on the objectives, risk matrices can be presented in different formats, as in Fig. 16a), where a two-entry table (likelihood or probability) vs. (severity or impact) leads to a number/colour that expresses the Risk. The higher the number, the higher the risk. Figure 16b), which corresponds to a case of floods and landslides (Quaresma and Zêzere 2012), adds the levels of acceptance of risks in the face of probabilities of occurrence. There are several limitations and methods to design risk matrices, and caution should be exercised in their applicability (Cox 2008; Bao et al. 2017).

a The type of a Risk Matrix; b F-N Curves (frequency consequences) with levels of acceptance of risks (Quaresma and Zêzere 2012)
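A two-entry matrix of this kind can be sketched in a few lines. The example below is a generic illustration, not the matrix of Fig. 16a: the five-level scales, the product rule (score = likelihood rank × severity rank) and the colour thresholds are all assumptions made here.

```python
LIKELIHOOD = ["rare", "unlikely", "possible", "likely", "almost certain"]
SEVERITY = ["negligible", "minor", "moderate", "major", "catastrophic"]


def risk_score(likelihood, severity):
    """Score = (likelihood rank) x (severity rank), ranks counted from 1."""
    return (LIKELIHOOD.index(likelihood) + 1) * (SEVERITY.index(severity) + 1)


def risk_colour(score):
    """Map a score to a colour band; thresholds are illustrative assumptions."""
    if score <= 4:
        return "green"
    if score <= 12:
        return "yellow"
    return "red"


# Print the full matrix, one row per likelihood level
for lik in LIKELIHOOD:
    row = [risk_score(lik, sev) for sev in SEVERITY]
    print(f"{lik:>15}: " + " ".join(f"{s:2d}" for s in row))

example = risk_score("likely", "major")
print(example, risk_colour(example))
```

Multiplying ordinal ranks is a common convention, but it is exactly the kind of simplification that the cautions cited above address: two cells with the same score need not represent the same quantitative risk.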

If the phenomenon under analysis depends on more than a single variable, y = f(x), risk matrices are more complex because both “input” and “output” may be multi-hazard and multi-impact, and the problem easily becomes multi-dimensional.

Figure 17a gives us a Risk Matrix for a single hazard, with two entries, for the case of a tsunami impact measured by the height of inundation and velocity of water flow (Boschetti and Ioualalen 2021). Here again, the higher the number, the higher the risk. Figure 17b, “SIRIUS”, as proposed by Mota de Sá et al. (2012), presents a Risk Matrix for a region or an urban block to measure the impact of future events, considering the average deficit of resistance of the existing stock of buildings in fulfilling a prescribed code, and the concentration of population. Probability is associated with the abscissa.

Risk Matrix for single hazard, two entries; a tsunami impact measured by the height of inundation and velocity of water flow (Boschetti and Ioualalen 2021); b shaking impact measured by the deficit of resistance and concentration of population affected (SIRIUS)

Population density is also used to link fatalities to magnitudes and reduce dispersion, as shown in Fig. 18a) with data since 1900. A few other Indicators have been presented for the multi-hazard phenomenon, as is the situation of Shaking plus Tsunami (Fig. 18b). To each number, a descriptor is associated.

a Relation between earthquake magnitude and numbers of fatalities for all earthquakes since 1900 (Hough et al. 2006) with lines adapted from Samardjieva et al. (2002) (D is population density per km²); b Multi-hazard Shaking plus Tsunami: increase of impact due to tsunami after damaging shaking: a sketch; after Bonacho et al. (2018)

Another way to analyse risk is proposed by Platt (2017). He measures the resilience of societies by studying the factors affecting the speed and quality of post-earthquake recovery. Based on ten significant events of the twenty-first century and on the time to recover in each one (data from Kates and Pijawka 1977), he gets a “good” correlation between disaster management quality and speed and quality of recovery. The better the management, the lower the rate and the larger the quality of recovery.

Finally, from the concept of “vulnerability ratio” and “Size of Earthquake”, we can construct a Risk Matrix (Fig. 19a) as follows:

-

“developed countries” in general have few victims and huge losses (due to inter-dependence and non-structural losses);

-

“developing countries” have many victims but smaller economic losses (because they are already poor and reconstruction is made through solidarity funds);

-

“Mega earthquakes” (M > 8 or area of perception > 500 km) are rare events that produce huge impacts over a large area of perception;

-

“Local earthquakes” (M < 8) are more specific events; if the epicentre occurs near urban areas, the impact is localised, and developed countries can take care of that.

Local and Mega earthquakes can somehow be associated with a given probability of occurrence. But severity is still missing!

Another way is using the concept of “Resilience” (the capacity to recover) and again “Size of Earthquake”, but in this case measured by PGA (peak ground acceleration) (Fig. 19b).

From all the risk matrices presented, SIRIUS (Fig. 17b) seems to be one of the best forms of communicating risk to the population.

2.4 Points to retain

The most important points to retain from this Section are:

-

Catalogues are essential tools for understanding the past and anticipating the future. As we tried to explain, the world’s seismicity is not increasing in time, unlike other natural events related to climate change. But earthquakes are not easy to handle because so far we have not been able to predict their occurrence in time, even though all indications point to the repetition of similar events in the same places, at a pace sometimes not compatible with human generations. Periodicity might be so long that communities forget the perils of unexpected events that have already happened. Palaeo- and archaeo-seismology extend our knowledge into the past and signal those periodicities for the “black swan” events. It is a serious error to extrapolate modern seismicity data of excellent quality to periods longer than the observed ones if the period under analysis is not long enough to represent the stationarity of these stochastic series. Citing N. Ambraseys (2009): “Historical earthquake information is invaluable not only in the study of earthquakes per si but also for climate and weather, and can guide the engineers to design structures to resist the forces of nature without being taken by surprise by an anticipated event”.

-

Why are earthquakes still shocking when they occur? Occurrence is not increasing over time; however, exposure is increasing, driven by exponential population growth, and so is vulnerability. These two elements of the Risk equation (hazard/occurrence, exposure, vulnerability) depend essentially on the cultural level of the society and on the community perception of perils associated with the frequency of events.

-

Engineering and science know how to prepare a more resilient society. The first signs show that current policies to mitigate earthquake impacts are working if measures are taken.

-

In this section, we also observed the problem of earthquakes from a Worldwide and European perspective and looked for indicators and risk matrices to predict their impact.

Other points should be brought to the discussion on the persisting causes of the high vulnerability of world construction to earthquakes. Contrary to what has happened in the automobile industry, aeronautics, medicine or other sectors, which need more and more high-tech knowledge, the construction industry has not gone through any comparable revolution. Nowadays, to maintain your car, you no longer look for backyard garages; you have to take the vehicle to a specialised workshop. The construction industry, by contrast, is often in the hands of people with little training and poor technical knowledge, working in a social-economic setting that seeks to recover the investment as soon as possible. Masonry construction is the paradigm of heavy physical labour, even though much knowledge lies behind old traditions. Steel or concrete structures require much more expertise, and new materials that appear nowadays (FRP, Fibre-Reinforced Polymers; CLT, Cross-Laminated Timber) involve other skills. Rehabilitation is another new requirement that experts and societies demand, and that public opinion and mass media call for.

In construction, especially in single homes or dwellings, each owner acts by himself, being architect and engineer and solving all problems without calling the help of a professional. Of course, things worldwide differ much from region to region, from culture to culture. However, there is an epistemic uncertainty behind the whole process of the construction industry, which we need to reduce dramatically. This concern is briefly stated in the Class Notes of Geography (Hommel and Parry 2015).

Negligence and corruption have been referred to by a few researchers (e.g. Hough 2020; Bilham 2009) as the most important cause of this alarming situation, and there are indeed many conflicting interests throughout the World around the paired issue of construction industry and urban planning. Politicians do not care for long-term decisions because their term is very short, and their common position is silence or negligence, as has happened with climate change. These social-political reasons are responsible for the very poor performance of several typologies in recent events. Science, both Seismology and Engineering Construction, has a share of these responsibilities, either for requiring too much or for not denouncing the too low, as will be described in the following sections.

3 Evolution of Seismology and Engineering Construction from the mid-1700s until the mid-1900s

Summary

-

Before 1900, the science of seismology and earthquakes was not significantly different from other sciences: it was very rich in theoretical terms, like mathematical physics, a topic still taught nowadays. But the concepts of building safe structures against earthquakes were very rudimentary. Construction kept good traditions from ancient times based on empirical knowledge transmitted from generation to generation.

-

We look in parallel to the developments of these fields anchored into other sciences like construction and architecture that are as old as humanity.

-

For each new invention or explanation, we intend to look ahead to see the follow-ups in the twentieth century and how technology has evolved in the 20 years since the turn of the millennium.

-

As earthquakes/tsunamis occur in a stationary way when speaking of the entire world, there is always something happening and new visions can support new ideas.

-

Seismic-resistant construction was born with great success after the 1755 Lisbon earthquake.

3.1 A first view of the problem

The Lisbon earthquake of 1755 was the first event that awakened science to the earthquake phenomenon. Before that, many advances were made toward understanding motion and how to design structures to survive, but no one got close to the causes of shaking. The first reports on the perception of earthquakes came from China a long time ago, where the first seismoscope was developed to analyse the direction of motion and intensity of first waves.

Developments in the design of structures were connected to vertical loads. Buttresses (flying buttresses) or ties in churches are ancient techniques used in the Middle Ages, when influential and notable structures were built. In Arab and Muslim times, we also see slender structures, namely mosques and minarets, that somehow tried to survive earthquake shaking, challenging mother nature.

Earthquakes are described in ancient times as terrible events that occur from time to time; other natural occurrences were not so critical, except plagues. As medicine was not like today, contagious diseases such as the plague (Black Death) were even more catastrophic in terms of human impacts. According to Cirillo and Taleb (2020), in the last 2500 years, 72 events caused significant mortality worldwide.

The use of very particular techniques helped fight earthquake threats, as in the case of timber crossing used in housing in a few locations in Europe and Asia, especially in Japan. Other areas with plenty of seismic activity, like the Americas, were not much inhabited until the fifteenth century. With the arrival of more developed cultures, new information started being collected. But, in many cases, seismic-resistant construction was absent. Even though concerns existed, construction unable to survive even moderate shaking was built over the centuries. Shaking loads are different from vertical loads, and the material used did not help increase lateral resistance. But human ambition was high, and tall construction without robustness was seen in many locations, even in areas experiencing seismic activity.

In 1755, for the first time, it was understood that waves generated at some point arrive at different locations with different severity. This was observed with the help of an enquiry made in Portugal and Spain to check the violence of shaking through the behaviour of structures and the response of nature (liquefaction, water flow in rivers, etc.). The reconstruction of Downtown Lisbon observed several requirements; the most obvious was the widespread use of the Pombaline cage, a timber frame with diagonals embedded in the interior of masonry walls. The engineers at the time learned that the flexibility of elements and connectivity would help buildings survive future events. This knowledge came directly from the wooden naval technology in Lisbon shipyards, where ocean ships (caravels) were provided with enough resistance to survive strong sea waves.

All advancements until early-1800 were very empirical, but the tradition of construction passed from civilisation to civilisation.

The first steps toward a better description of the damage and the first attempts to measure seismic action started with damaging earthquakes in Japan and Southern Europe. The advancements in physics and the strength of materials made it possible to theorise on a few essential topics. Statics, but not dynamics, was already known in the construction of spectacular monuments, which helped make constructions more resistant.

Japan, where earthquakes occur with great frequency, attracted the attention of several physicists, namely John Milne, a scientist from Cambridge University who spent a significant part of his life studying earthquakes. The same happened with Robert Mallet, a member of several English Royal Societies, who visited various sites in Italy struck by earthquakes.

Looking into history, it is evident that significant development in the science of earthquakes took place in the regions where two requirements held: (1) frequent impact of earthquakes and (2) existence of a solid culture in physics. This is very clear in "Appendix 2", where we can understand the epochs and the context of the main contributions to science. For example, the west coast of the Americas, where seismic activity is significant from Southern Chile to Vancouver, even though with very different mechanisms of fault rupture, developed excellent knowledge in Seismology and Earthquake Engineering (SEE), but at a very late epoch in comparison with Asia (Japan) or Southern Europe, especially Italy, due to the late cultural evolution of those western lands.

The first attempts to understand earthquakes, their origin and their impact are observed in the mid-1800s with the Treatises of The Science of Seismology. From the observed damage, physicists tried to establish a direction to the epicentre and, using trigonometry and ballistics, they obtained the possible distance to the origin of shaking. Intensity scales came at a later stage.

It is exciting to observe the development of Seismology and Earthquake Construction, the two fields of knowledge behind the earthquake problem at the time. For many years they were together, but at a certain point, they followed different paths. Military Engineering was probably the science that led important constructions, also seeking expertise on earthquakes. Architecture also played an important role, with its initial pioneering work by Vitruvius in the first century BC. Seismology became more independent with the development of physics, while construction was not progressing much. Only when new materials like iron became available by the end of the nineteenth century did the construction practice experience a great impulse.

Masonry, together with timber, geological materials like lime, and a mixture of earth with some cement, dominated all construction materials from early history (Roman Times & before) until the end of the nineteenth century. Other materials (steel and reinforced concrete) progressively replaced the masonry construction during the first half of the twentieth century. Only the recent movements towards the rehabilitation of older construction, in a signal of cultural development, brought back the interest in old materials.

Intensity scales were a new significant advancement in earthquake science. For the first time, it became possible to measure the impact of an earthquake in different locations, an outstanding achievement. The first scale goes back to the mid-1800s. With more refined descriptors, intensity scales are still essential nowadays to relate to strong-motion parameter values and to re-analyse old events for which only descriptions of effects and impacts are available.

Intensity is a simple measure of a complex phenomenon that has become challenging to analyse nowadays. To understand the various inter-dependences, we must disaggregate information into more specific elements.

Remarkable Seismology advancements were possible with the advent of recording instrumentation. This evolution progressed rapidly, in “quantum” jumps, accompanying other technological advances.

Several sciences accompanied the development of earthquake knowledge along the times, particularly in Seismology, Construction and Architecture. We will try to compare the critical points that most contributed to the development of earthquake knowledge. But many other sciences, besides the ones above referred, are connected to these developments. Mathematics with Geometry and Calculus, etc., then Physics with Statics and Materials would complement the experimental observations made locally (Field Trips) to essay explanations on the effect of earthquakes.

Science and technology progress is generally achieved by small increments and contributions. It takes time for a new idea to become adopted as the state of the art in the field. In the meantime, discussions and doubts are raised among experts working in the same area. Finally, one person or a team gets to the point, wrapping up all the knowledge and publicising an invention. This happened throughout ancient times, when communication was difficult and subject to long delays. But even now, with all the efficiency in communication, sometimes the same happens. Frequently, two groups arrive almost simultaneously at the same theory, algorithm or explanation. Of course, there are exceptions; probably the most peculiar one was Einstein’s new Theory of Relativity (1905), which had no one doing the same and was so disruptive that it took years to be accepted. In SEE, the same has taken place. Sometimes only the name of the person who finalises the invention or the solution to a given problem appears. The other names, deserving a reference, are kept in the dark.

We will touch on the following topics, in no particular order:

- Military Engineers (theory and practice): military engineers were the great “technicians” of large constructions, acting partly as architects and partly as civil engineers.

- Earth Scientists: mathematicians and physicists who tried to understand the physical laws of planet Earth.

- Construction Practitioners (Architects: geometry; materials): together with engineers, they were the first to erect the housing and significant monuments of their epoch. Geometry, and trigonometry in particular, was probably, together with materials, the prerequisite knowledge for erecting constructions, the most critical asset for the populations.

- Material science (earth + masonry + timber + limestone): the knowledge of the properties of existing natural materials, the sites to obtain them (Geology) as close as possible to where the building would take place, and their size, strength, portability, etc., were among the most critical aspects for the type of construction that we see growing at a particular place.

- Observation Seismology upon Construction behaviour (Field Trips): external threats were present in many locations, and shaking and tsunamis were probably the most demanding loads to consider. The continuous repetition of disasters always drew the attention of the population and the elites to the fact that not all territories were the same as far as shaking was concerned. But it took much time to understand the phenomenon and to become accustomed to living with it, and this remains true even now.

- Mathematical modelling (Theoreticians): the development of theoretical modelling always helped in looking ahead and enabled the search for better solutions. Experience from past events would be the primary key to understanding the problems.

- Instrumental Seismology (Physics): without instrumentation, it was hard to understand the physics of the problem, the origins, the propagation of waves and the interaction with construction. Together with mathematical modelling, instrumentation brought significant steps towards what we now understand.

- Manuals for construction (Codes): when human development turned into exponential growth, construction needs exploded, and there was no way to control the quality of construction other than introducing codes. These codes would reflect the best knowledge of science and practice to mitigate earthquake impacts.

Landmarks in the evolution up to the 18th Century.

- The Chinese (several centuries BC) already dealt with earthquakes. They were the first communities to compile catalogues of earthquakes, with the first mention in the twenty-third century BC. The first collection of earthquake records appeared in 977 AD. There were 45 earthquake items between the eleventh century BC and 618 AD (Wang 2004). The invention of the first seismoscope will be mentioned in Sect. 3.1.

- Roman Architecture and the Vitruvius Treatises, set out in 10 Books during the 1st century BC: this was probably the first compendium of architecture. Vitruvio (Marcus Vitruvius Pollio) (Edition 1486), the father of modern architecture and construction, wrote in simple language and with illustrations, many of which were lost over the centuries. He gave a lot of attention to building construction and to time and the movement of the stars in order to understand insolation and mechanics, and he designed a great deal of equipment for erecting buildings and for protection during wars. He based the principles of architecture on “firmitas, utilitas, and venustas” ("strength", "utility", and "beauty") and respected the proportions of the human body.

- The Materials for construction (blocks, rubble masonry, limestone, timber, etc.): there was not much choice of materials other than those Mother Nature directly provided. Vitruvius already knew the main properties of those materials and the geometric characteristics needed to design arches, roads, bridges, monuments, religious temples, castles, etc. These are essential assets among the Greek, Roman and Arabian civilisations (Fig. 20).

- The disappearance of large metropolises near the shoreline is also a problem linked to earthquake events. The discovery of “pozzolana”, a material extracted in Pozzuoli near Vesuvius, made it possible for cementitious materials to harden under water. Several massive constructions were built with this material, reclaiming land near ports and water inlets. Alexandria, the Colossus of Rhodes and the urban harbour of Caesarea Maritima (Palestine) were among them. They disappeared suddenly in the event of an earthquake: liquefaction seems the most obvious explanation for the disappearance of those metropolises. The Colossus of Rhodes, which took 12 years to build (c. 294–282 BC), was toppled by an earthquake about 225/226 BC. The fallen Colossus was left in place until 654 AD, when Arabian forces raided Rhodes, had the statue broken up and sold the bronze for scrap. The explanations for the fall of Caesarea are still under study. The sudden disappearance of Heraklion, or Thonis, in the Nile Delta, Egypt, ten centuries BC is probably another case of liquefaction following a large earthquake event.

- The great monuments of the Middle Ages. These are the times of massive cathedrals, bridges, palaces, castles, etc. They already benefited from interesting solutions for carrying the dead loads, which were also good for absorbing lateral shaking. This coincidence was very successful. Figure 21 shows sketches of buttresses in Vitruvius intended to resist the lateral thrust caused by the upper elements. We understand now that this recommendation, widely used in Middle Ages cathedrals, was very helpful in resisting lateral shaking.

Arches, roads, monuments, and castles of the Greek and Roman epochs (photos taken from Branco et al. 2017)

The attempt at a trial shake-table. The use of a “stage” moving back and forth, with a construction built on top of it that included some earthquake provisions, was the first attempt to analyse the effect of earthquakes and the usefulness of earthquake provisions. This procedure was described as a proof of the efficiency of the “gaiola technique” (see Sect. 3.2.3) during the reconstruction of Lisbon after the 1755 earthquake.

3.2 Briefing the developments of Seismology and Earthquake Engineering (SEE)

Before entering the eighteenth century, when scientific developments were of great importance, we recall that the first known explanations for the origin of earthquakes go back to the Chinese culture two centuries BC, with the idea of a catfish pulling the Earth and provoking shaking and tsunami waves (Fig. 22). The Great Wave off Kanagawa (Katsushika Hokusai 1830) (Fig. 21b) was not made to depict the giant waves of a tsunami. Still, after its significant impact on Japanese culture, it was adopted as a reference.

Chinese Culture: Cat-fish and tsunami wave—Catfish, 16th Century BC (https://www.iccrom.org/news/earthquake-heritage-examples-japan)

The first instrument to measure the shaking, intensity and direction of motion was developed in 132 AD by Zhang Heng (Chang Hêng), an astronomer and mathematician (Dewey and Byerly 1969). Figure 23 shows an attempted replica of that seismoscope, which was characterised by “dragons' mouths” holding “balls” and by “toads” that would receive the balls when they fell. Needham (1959) and Sleeswyk and Sivin (1983) described this first seismological instrument in detail.

Until the mid-eighteenth century, the main theories on the causes of earthquakes were the collapse of underground caves excavated by the ocean and the volcanic theories. Aristotle, Pliny, the Chinese, and Shakespeare, among others, thought the Earth's inner fire played a central role in producing seismic vibrations. Aristotle developed an elaborate version of the volcanism model, in which underground vapours were circulated by the combined action of interior fire and solar radiation. Occasionally, the 'winds' produced would escape to the outside of the Earth, creating earthquakes. The Aristotelian theory of earthquakes was widely accepted throughout the Middle Ages. Its influence can still be found in Immanuel Kant’s essays of 1756, written in the aftermath of the Lisbon earthquake. But the theories of punishment for the “bad behaviour” of populations were in vogue until the 1755 Lisbon earthquake. Rev. Malagrida was a great defender of the theory of God’s punishment, advocating the capital penalty in public places. Pombal, the prime minister after the 1755 Lisbon earthquake, opposed that theory and acted right after the event, calling for “treating the injuries and burying the dead”. He initiated the reconstruction of Lisbon, creating a team of Engineers and Urban Planners. The opponents of his views were left in jail.

3.2.1 Main seismological achievements in 1800–1900

As mentioned before, Seismology and Earthquake Engineering started as a single science in the mid-18th century. Both sought explanations for the strange behaviour of Earth shaking and tried to build according to Newton’s laws of gravity.

A few strong events, mainly in Italy, central Europe and Japan, forced people to think about the causes of earthquakes and ways to mitigate their disastrous effects. The first ideas came with the 1755 Lisbon earthquake, which initiated the scientific approach to wave propagation with the theories of John Michell (1760). In the middle of the eighteenth century, the first theories of a source of energy radiating to many surrounding points emerged at the same time as the first instrumentation. In the seventeenth and eighteenth centuries, the early explanations came from Physics, Mathematics, and Earth and Planetary observation. But only after the development of the theory of elasticity was it possible to explain seismic phenomena satisfactorily through the laws of Physics. The mathematical formalism of elasticity was developed in the first half of the nineteenth century. It was applied to the study of earthquakes by Robert Mallet. He published in 1848 a pioneering treatise entitled “On the Dynamics of Earthquakes”, an attempt to reduce their observed Phenomena to the known Laws of Wave Motion in Solids and Fluids.

The following Box lists some of the most exciting “movements” that contributed to the development of these sciences.

Box—Comparisons in the evolution of Seismology and Earthquake Engineering.

A comparative view of Seismology and earthquake construction and the cross-analysis of both fields of knowledge.

• The first Earthquake Catalogue of Modern Ages, by Moreira de Mendonça.

• Mallet and the great Neapolitan Earthquake of 1857. Mechanical explanations to derive epicentre and hypocentre.

• Milne and the first instrumentation. The first notion of SM record.

• Field Missions to significant events.

• The need to measure earthquakes’ effects initiated the first intensity scales. Intensity Scales and the first map of isoseismals are also contemporary with these significant achievements.

• The turn of 1900 and the first seismographic stations.

• Great advancements in understanding the origin of earthquakes, with Wegener's theory and plate theory at the turn of the twentieth century. Plate theory came later, after the 1906 San Francisco earthquake. The rupture slip along the fault trace was evident, even though the first signs of fault rupture had already been observed after the Owens Valley (1872) and Nobi (1891) earthquakes. Following the observations of the 1906 earthquake, Reid (1910) proposed the model of elastic rebound. According to Reid, these ruptures release in a few seconds or minutes the tensions accumulated over centuries or millennia by slow processes of deformation of the crust, the Earth’s outermost layer. This model for the generation of earthquakes is still essential for understanding the phenomenon today. In 2008, critical studies based on worldwide GPS confirmed the plate movements (Müller et al., 2008).

• After the 2nd World War, the launch of the World-Wide Standardized Seismograph Network (WWSSN) to monitor nuclear explosions was another essential landmark.

• Great disasters are always the origin of new avenues and science opportunities.

The first explanation of the origin of earthquakes and the concepts of epicentral location, focus, magnitude, etc., came at a later stage, when American experts entered the seismological process after the 1906 San Francisco earthquake. Also, the South American areas of Chile, the Central American countries, etc., that is, all the eastern subduction side of the Pacific plate, relied on the expertise of a few people, namely Montessus de Ballore (1911) and the Spanish military officer Cerero y Sáenz (1890), who was dispatched to remote areas of the old colonies in the Andes and the Far East.