Abstract

The quantitative assessment of natural risks offers a rational strategy to protect communities, undertake cost-effective mitigation and plan the organic and sustainable development of urban systems. For cascade events such as earthquake-induced liquefaction, the assessment requires characterizing and reconstructing the areal distribution of seismic hazard, subsoil susceptibility, physical vulnerability, and economic and social relevance of structures, and combining all factors in a unitary predictive model. Since aleatory variability and epistemic uncertainty affect the characteristic variables and their mutual correlation, their influence on the prediction must also be quantified. Within this framework, a vulnerability model is proposed to comprehensively assess the physical damage of buildings in an urban system. A chain method is formulated combining calculation schemes recently introduced in the literature with ad hoc numerical analyses. The effectiveness of the method is tested by comparing predictions with the effects observed in the city of Christchurch during the 22nd February 2011 earthquake. The unprecedented documentation available after this earthquake makes it possible to validate different components of the model and reveal the importance of factors that may have been disregarded. A geostatistical methodology is proposed throughout the paper to process data and to quantify and govern the different uncertainty factors.

1 Introduction

The periodic occurrence of earthquakes and the increasing annual pace of the connected losses constantly recall the great influence of seismicity on cities and communities (Guha-Sapir and Vos 2011). Among the possible scenarios, liquefaction often plays a non-negligible role. An estimate of the economic impact of earthquakes (Daniell et al. 2012), obtained by disaggregating primary (shaking) and secondary causes (tsunami, fire, landslides, liquefaction, fault rupture and other types of loss) in a dataset of 7103 events that occurred worldwide in the period 1900–2012, attributes to liquefaction about 2.2% of the direct economic losses, i.e. about 2.24 trillion US dollars. The share rises to 3.6% when total losses, i.e. direct plus indirect, are considered. These relatively small numbers could misleadingly suggest that the phenomenon is not particularly relevant. However, in cities or industrial districts the prolonged unserviceability of infrastructures triggers an overwhelming chain of delays and losses on the interconnected systems, capable of undermining the recovery of normal living conditions (Macaulay 2009; CSAPEISLA 2016). During the 1906 earthquake in San Francisco, failures attributed to liquefaction occurred in many locations within a 560 km long zone along the coast and as far as 64 km inland (Youd and Hoose 1976). The city of Kobe, Japan, and its harbor underwent a long economic recovery process from liquefaction-related damage caused by the 1995 Hyogo-ken Nanbu earthquake. Despite the relatively modest magnitude of the event (Mw = 6.2), the liquefaction that occurred in 2011 at Christchurch, New Zealand, forced about 15,000 families to leave their homes and seriously damaged hundreds of buildings in the Central Business District, CBD (Cubrinovski et al. 2011a, b, c).

Knowing in advance the zones potentially affected by liquefaction and predicting the effects on the most relevant territorial assets helps to identify risk and enables stakeholders (urban planners, public administrators, private investors, managers of services and lifelines, emergency departments, insurance companies etc.) to undertake mitigation actions, inform the population and make communities more resilient. The importance of these studies is reflected in the practice of the countries most exposed to seismic hazard, where territorial planning prescribes the assessment of risk (e.g. NZGS 2016a,b; DPC 2008, 2017).

The mechanisms of liquefaction, i.e. the deformation of cyclically loaded sand (Modoni et al. 2018; Salvatore et al. 2016, 2018; Wiebicke et al. 2017), pore pressure onset (e.g. Boulanger and Idriss 2014; Cubrinovski and van Ballegooy 2017), ground settlements (e.g. Iwasaki et al. 1978; Zhang et al. 2002, 2004; van Ballegooy et al. 2014) and effects on various types of structure (e.g. Bird et al. 2006; Cubrinovski et al. 2014, 2017, 2018) are the subject of different conceptual models that capture the influence of the most fundamental variables. Encompassing these studies in the estimate of damage and risk at the territorial scale is the scope of the present study, with a focus on residential buildings. The methodology adopts a cascade logic where seismic hazard, soil susceptibility and structural fragility of buildings are individually characterized, mapped and cross-correlated in a GIS platform. One main challenge of these studies consists in defining models able to reach a sufficient level of accuracy starting from rough, sometimes incomplete, information typical of the large-scale assessment. This goal requires addressing the epistemic and aleatory uncertainties connected with:

- the schematization of phenomena;
- the quality and inhomogeneous spatial distribution of information.

A fundamental issue for buildings founded on loose saturated sands is that they may experience significant settlements even before liquefaction takes place. The complex soil-building response, with the coupled volume-shear deformation of soil, the development of pore pressures and the interaction with buildings, has been experimentally investigated and interpreted by various authors (e.g. Liu and Dobry 1997; Dashti et al. 2010a, b). The results of numerous parametric calculations reproducing the above phenomena have been fitted with different formulas that allow settlements to be computed in a relatively simple and fast way, comprehensively taking into account the characteristics of earthquake, subsoil and structure (e.g. Karamitros et al. 2013; Bray and Macedo 2017; Bullock et al. 2018). On the other hand, the generic definition of building structures in territorial risk assessment requires damage to be estimated on a probabilistic basis, i.e. adopting fragility curves that classify buildings taxonomically into homogeneous classes and rank damage by severity as a function of an engineering demand parameter. Fragility curves may be derived empirically, i.e. from the back analysis of observed events (e.g. Maurer et al. 2017), or analytically, by performing a large number of numerical simulations where undefined input quantities are parametrically varied (Fotopoulou et al. 2018). The present study adopts the analytical curves recently introduced by Fotopoulou et al. (2018), which express damage as a function of the differential foundation settlement. A statistical function derived with a parametric numerical calculation relates this quantity to the absolute settlement, computed considering the consolidation (Zhang et al. 2002) and distortional (Bray and Macedo 2017) strain components triggered by liquefaction.

This analytical scheme forms a novel chain methodology to assess the liquefaction risk on buildings. The validity of the prediction is tested against the effects of the February 22nd 2011 earthquake (Mw = 6.2) at Christchurch, adopting as evaluation metric a binary method recently proposed by Kongar et al. (2015).

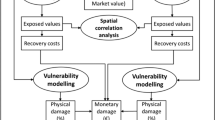

2 Scheme of the liquefaction risk assessment

Seismic liquefaction is the effect of a cascade phenomenon that starts with the release of energy from active faults, the propagation of motion through layers of different properties and the generation of excess pore pressure in the shallower subsoil portions. Severity of damage for buildings depends on the intensity of the shaking, susceptibility of the soil to undergo liquefaction, structural characteristics and socio-economic relevance of the involved system. All these aspects form the logical scheme depicted in Fig. 1, whose elements act in a way that the output of the lower level forms the input of the upper level. This chain scheme may be applied from bottom to top to compute the holistic risk or interrupted at intermediate levels to assess risk on specific sub-systems (ground, physical asset, service, community). In any case, the response of each element must be characterized, defining a correlation between input and output variables, individually or in conjunction with the closer elements depending on the relevance of the interaction between the two systems.

Cascade scheme implemented for liquefaction risk assessment (modified from Liquefact D.7.1 2019). IM: Intensity Measure; EDP: Engineering Demand Parameter; DM: Physical Damage; DV: Estimated Loss

Once mechanisms are characterized with appropriate variables and relations, the assessment of risk over a given territory requires quantifying the concomitance of the risk factors (hazard, vulnerability and exposure) in each location. The analysis takes considerable advantage of Geographical Information Systems (GIS), which refer data to their geographical position, allow scripts to be added to correlate variables and represent the output in the form of maps.

A relevant issue for this methodology is the management of the uncertainty stemming from the concurrence of several factors, i.e. the stochastic occurrence of seismic events, the quality and spatial resolution of the available information and the precision of the inferred relations. This aspect is addressed by the Performance-Based Earthquake Assessment (PBEA) approach (Cornell and Krawinkler 2000), which proposes to compute risk with the following Eq. (1), accounting for uncertainties with a sequence of conditional probabilities:
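In the form commonly used for this framing equation, and consistently with the four terms defined next, Eq. (1) can be written as follows. The left-hand symbol P(DV), standing for the probability of the decision variable, and the order of integration are notation assumed here rather than taken from the source:

$$P\left( DV \right) = \iiint P\left( DV|DM \right) \cdot p\left( DM|EDP \right) \cdot p\left( EDP|IM \right) \cdot p\left( IM \right) \, dIM \, dEDP \, dDM \tag{1}$$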

Here p(IM) is the probability density that a seismic event of intensity measure (IM) occurs during the lifecycle of the studied system; p(EDP|IM) is the probability density of the engineering demand parameter (EDP) conditioned on IM; p(DM|EDP) is the probability that a physical damage occurs on the structural component of the system conditioned on EDP; P(DV|DM) is the cumulative probability of the assumed evaluator of the system performance conditioned on damage DM. The application of Eq. (1) requires that variables and probability functions be specified with a mechanical characterization of the problem. For buildings subjected to liquefaction, the sequence depicted in the right part of Fig. 1 is presented in the following sections, computing physical damage with fragility curves and adopting settlements of the building as EDP.

2.1 Subsoil data management

A major difficulty of risk assessment for systems spread over large territories is the evaluation of variables (e.g. subsoil properties) from the outcomes of investigations performed at a discrete number of positions. To this aim, geostatistical methods (Matheron 1965; Chilès and Delfiner 2012) offer several advantages, as demonstrated by different applications (e.g. Spacagna et al. 2013; Modoni et al. 2013; Spacagna et al. 2017; Saroli et al. 2020). A fundamental ingredient of these methods is the fitting of a mathematical function, the theoretical variogram, to the differences between the values of the selected variable expressed as a function of the mutual distance between sampling points (experimental variogram). The function serves to compute the weighting factors of a linear interpolator that relates the estimate at each position to the values measured at the sampling points (kriging). Compared with other common interpolators (e.g. polynomial functions or inverse distance weighting) that quantify the considered variable deterministically, geostatistical methods provide estimates in the form of statistical populations, i.e. with the mean value representing the most probable occurrence and the standard deviation giving an immediate perception of the reliability of the estimate. A direct advantage of this methodology is that the effects of uncertainty can be incorporated in the risk assessment process, contributing to define the probabilistic terms of Eq. (1). Furthermore, since uncertainty is tightly connected with the density of investigations near the considered position, predictions of too poor reliability can be discarded, and the zones where additional investigation is most needed can be identified.
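The way a variogram model turns into kriging weights, an estimate and an estimation variance can be illustrated with a minimal ordinary kriging sketch. The exponential variogram, its sill and range, the function names and the sample coordinates and values are all assumptions made for illustration; they are not the models or data of the study.

```python
import numpy as np

def exp_variogram(h, sill=1.0, corr_range=50.0, nugget=0.0):
    """Exponential variogram model gamma(h), with gamma(0) = 0 (assumed model)."""
    h = np.asarray(h, dtype=float)
    return np.where(h > 0.0, nugget + sill * (1.0 - np.exp(-h / corr_range)), 0.0)

def ordinary_kriging(xy, z, target, variogram=exp_variogram):
    """Ordinary kriging estimate and variance at one target position."""
    xy = np.asarray(xy, dtype=float)
    z = np.asarray(z, dtype=float)
    target = np.asarray(target, dtype=float)
    n = len(z)
    # Variogram values between all pairs of sampling points.
    d = np.linalg.norm(xy[:, None, :] - xy[None, :, :], axis=2)
    a = np.ones((n + 1, n + 1))
    a[:n, :n] = variogram(d)
    a[n, n] = 0.0  # last row/column enforce that the weights sum to one
    b = np.ones(n + 1)
    b[:n] = variogram(np.linalg.norm(xy - target, axis=1))
    sol = np.linalg.solve(a, b)
    weights, mu = sol[:n], sol[n]  # mu is the Lagrange multiplier
    estimate = weights @ z
    variance = weights @ b[:n] + mu
    return float(estimate), float(max(variance, 0.0))

# Hypothetical sampling points (m) and values of a generic subsoil variable.
xy = np.array([[0.0, 0.0], [10.0, 0.0], [0.0, 10.0], [10.0, 10.0]])
z = np.array([4.0, 5.5, 3.8, 6.1])
est_near, var_near = ordinary_kriging(xy, z, [5.0, 5.0])
est_far, var_far = ordinary_kriging(xy, z, [500.0, 500.0])
```

The estimation variance grows as the target moves away from the sampling points, which is the property used in the text to discard poorly supported predictions.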

In territorial studies of liquefaction, geostatistical tools have been adopted for instance by Baker and Faber (2008) to compute the distribution of a liquefaction potential indicator in the city of Adapazari (Turkey), by Pokhrel et al. (2015) to map the Liquefaction Potential Index (Iwasaki et al. 1978) from SPT results in the city of Urayasu (Japan) and by Zou et al. (2017) to quantify the probability of liquefaction based on CPTU data. In the present study, geostatistical tools have been used repeatedly to assess the quality of the available information and filter out low-quality data. In fact, an issue related to the collection of subsoil information over large areas is that investigations are rarely performed to the same standard and data are not always consistent with each other. This inconsistency frequently stems from different execution, reporting and interpretation standards, or from other sources such as mispositioning of the investigation sites on the map. Geostatistics-based tests like the boxplot (Montgomery et al. 2012) make it possible to evaluate the quality of each datum from its continuity with the information estimated from nearby investigations. This criterion has been adopted here as the least arbitrary, since data can be analyzed and managed in a fully automatic way, i.e. without the need for the operator to pick data individually and drive the outcomes. Once problematic data are identified, it is possible to verify the origin of the inconsistency, correct errors or, if necessary, discard the datum. The beneficial effects can then be measured as a reduction of the standard error of the estimate.
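A boxplot-type screening of this kind can be sketched as follows, assuming that each datum is compared with the value estimated from its neighbours (for instance by leave-one-out kriging) and that the resulting residuals are tested against the usual whisker rule. The function name, the factor 1.5 and the residual values are illustrative assumptions, not the settings of the study.

```python
import numpy as np

def flag_inconsistent(residuals, k=1.5):
    """Boolean mask of residuals lying outside the boxplot whiskers.

    residuals: measured value minus the value estimated from nearby
    investigations (assumed to be available from a cross-validation).
    k: whisker length in units of interquartile range (1.5 is the common choice).
    """
    r = np.asarray(residuals, dtype=float)
    q1, q3 = np.percentile(r, [25, 75])
    iqr = q3 - q1
    return (r < q1 - k * iqr) | (r > q3 + k * iqr)

# Hypothetical cross-validation residuals; the last one is clearly anomalous.
residuals = [0.10, -0.20, 0.00, 0.15, -0.10, 5.00]
mask = flag_inconsistent(residuals)
```

Flagged data are candidates for checking and correction rather than automatic removal, in line with the procedure described above.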

2.2 Computation of losses

The computation of losses associated with damage (DV|DM) is the last step of the assessment procedure depicted in Fig. 1. Several studies have proposed damage/loss relations based on socio-economic considerations (e.g. Lee and Mosalam 2006; Moehle 2003; Porter 2003; Comerio 2005; Krawinkler 2005; Mitrani-Reiser et al. 2006). Since this issue is outside the scope of the present paper, and focusing for simplicity on repair losses, i.e. neglecting other indirect costs, the deterministic evaluation scheme defined in Hazus (FEMA 2003) is adopted in the following. This scheme introduces four damage limit states (Slight, Moderate, Extensive and Complete) and associates with each of them a loss cost (rci) expressed as a percentage of the total demolition and reconstruction cost (RCi). The ratios (rc/RC)i are defined in the code for 33 different building categories. Table 1 shows an example for residential buildings, named RES1.

Combining the loss factors with the probability of reaching each of the four defined damage states (Pdsi) makes it possible to compute the Mean Damage Rate (MDR) as follows:
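Written with the symbols introduced above, and assuming that the probabilities Pdsi are weighted by the corresponding loss ratios and summed over the four damage states, the relation referred to as Eq. (2) takes the form:

$$MDR = \sum\limits_{i = 1}^{4} P_{ds_{i}} \cdot \left( \frac{rc}{RC} \right)_{i} \tag{2}$$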

3 Vulnerability of buildings

A fundamental issue for the application of Eq. (1) is the definition of vulnerability, which requires identifying a representative parameter, EDP in the equation, and expressing the p(EDP|IM) and p(DM|EDP) functions. The physical damage induced by liquefaction on buildings depends on the intensity of the ground shaking and pore pressure build-up, coupled with the capability of structures to absorb absolute and differential movements. As a preliminary step of the analysis, it should be considered that, prior to experiencing settlements, buildings affected by earthquakes undergo shaking that may produce additional damage. The coupling between ground shaking and liquefaction effects on buildings forms the subject of previous studies carried out on an empirical or theoretical basis. Some events, like the one that occurred in Adapazari, Turkey, after the 1999 Kocaeli earthquake (Bakir 2002), showed that the buildings damaged by liquefaction suffered limited effects of shaking and vice versa. This evidence suggests that the liquefied soil may act as a sort of natural seismic isolator for the shaken buildings, even raising the extreme idea of inducing liquefaction in selected soil layers to reduce the more severe damage of shaking (e.g. Mousavi et al. 2016). This conceptual scheme implies that shaking prevails in the initial phases of the earthquake whereas liquefaction develops in a second phase, so that the whole earthquake damage is given by a combination of both. However, while liquefaction reduces the effects of shaking, the consequences in the opposite direction are less evident, so that in practice the estimate of liquefaction damage can be performed independently of shaking. Concerning the definition of EDP, Bird et al. (2006) theoretically and van Ballegooy et al. (2014) empirically identify differential settlements as the major cause of damage.
This choice closely matches the definition of damage given by Boscardin and Cording (1989) and adopted in design standards, e.g. the Eurocode PrEN 1997-1 (CEN 2008), which express the serviceability performance of buildings in terms of distortion. A recent study by Fotopoulou et al. (2018) uses differential settlement as EDP in the definition of four liquefaction fragility curves for buildings, each referred to one of the damage limit states (Table 1). Fragility curves are statistical tools that quantify the probability that a system reaches a given performance state (e.g. damage level) as a function of the engineering demand parameter. A fundamental requirement for their definition is the taxonomic classification of buildings into classes that group homogeneous elements, i.e. buildings having similar typology, extension, structural stiffness and weakness (e.g. Brzev et al. 2013). In choosing the building classification, a compromise must unavoidably be sought between level of detail and available knowledge. In fact, a more detailed classification would give more reliable predictions but would require a deeper knowledge of the building characteristics, rarely available in territorial analyses. The fragility criterion defined by Fotopoulou et al. (2018), chosen for the present study, represents a compromise between ease of use and accuracy. It refers to low-code reinforced concrete buildings, i.e. designed without specific seismic regulation, resting on shallow isolated footings. The curves have been obtained from statistical analyses of the results of non-linear numerical calculations considering two possible failure mechanisms, flexural damage of beams and shear failure of columns, induced by random differential displacements applied at the foundation. An example of fragility curves for two-storey buildings is given in Fig. 2, while the parameters (median A and dispersion β) of the log-normal distribution for 2-, 4- and 9-storey buildings are reported in Table 2.

Example of fragility curves for a two-storey low-code framed building (adapted from Fotopoulou et al. 2018).
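How log-normal fragility curves translate a differential settlement into damage-state probabilities and a Mean Damage Rate can be sketched as below. The medians, dispersions and loss ratios in the example are hypothetical placeholders standing in for the values of Table 2 and Table 1, and the function names are introduced here only for illustration.

```python
from math import erf, log, sqrt

def lognormal_cdf(x, median, beta):
    """Probability of reaching a damage state for demand x (log-normal curve)."""
    if x <= 0.0:
        return 0.0
    return 0.5 * (1.0 + erf(log(x / median) / (beta * sqrt(2.0))))

def damage_state_probabilities(edp, medians, betas):
    """Probability of being in each damage state, from exceedance curves.

    medians must be sorted from the lightest to the most severe state.
    """
    exceed = [lognormal_cdf(edp, m, b) for m, b in zip(medians, betas)]
    exceed.append(0.0)  # nothing lies beyond the most severe state
    # Differences of successive exceedance curves; clipped in case curves cross.
    return [max(exceed[i] - exceed[i + 1], 0.0) for i in range(len(medians))]

def mean_damage_rate(state_probs, loss_ratios):
    """Sum of state probabilities weighted by the loss ratios (rc/RC)."""
    return sum(p * r for p, r in zip(state_probs, loss_ratios))

# Hypothetical placeholders, NOT the values of Table 1 or Table 2.
medians = [0.05, 0.10, 0.20, 0.40]      # differential settlement (m)
betas = [0.5, 0.5, 0.5, 0.5]            # log-normal dispersions
loss_ratios = [0.02, 0.10, 0.50, 1.00]  # Slight, Moderate, Extensive, Complete

probs = damage_state_probabilities(0.15, medians, betas)
mdr = mean_damage_rate(probs, loss_ratios)
```

The sketch takes Pdsi as the probability of being in state i, obtained by differencing the exceedance curves; this reading is an assumption about how the curves and the loss ratios are combined.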

Damage evaluation with the above curves requires estimating the differential settlements of buildings subjected to earthquake loading. This evaluation is normally affected by significant uncertainty, being dictated by the nonhomogeneous subsoil conditions (Ishihara and Yoshimine 1992), the distribution of loads and the structural properties of the buildings. Knowing these factors in detail is difficult, even for the study of a single building under static conditions, and thus alternative simplified approaches are pursued that base the estimate of differential settlements on the values of absolute settlements (e.g. Grant et al. 1974; Viggiani et al. 2012). The estimate of differential settlements is even more difficult in large-scale analyses, where information becomes vaguer. However, data on age and structural typology, plan dimensions, height and number of storeys of buildings obtained from databases can be exploited to perform a preliminary risk assessment. With this aim, a two-step calculation is proposed in the present study, extending to liquefaction assessment the procedure recalled above for static conditions: first, absolute settlements are quantified with a simplified formula that includes the dependency on seismic input, subsoil characteristics and simple building properties; then a relation between differential and absolute settlements is inferred from the results of parametric numerical calculations that account for the coupling between heterogeneous liquefiable subsoil and structures of variable flexural stiffness.

3.1 Estimate of absolute settlements

Although some popular empirical procedures (e.g. Tokimatsu and Seed 1987; Zhang et al. 2002, 2004) focus on the free-field response of liquefied soils, centrifuge studies (e.g. Dobry and Liu 1994; Dashti et al. 2010b; Bertalot and Brennan 2015) have highlighted that the presence of loaded footings near the ground level significantly influences the pore pressure build-up and alters the mechanisms that govern deformation and settlements. In detail, it is commonly acknowledged that a significant share of the settlement is induced by the distortional strains generated close to the foundation toe. Starting from this idea, Bray and Dashti (2014) proposed to express the total settlement (wmax) as the sum of three contributions, shear-induced (ws), volume-induced (wv) and ejecta-induced (we):
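Using the symbols just introduced, the decomposition referred to as Eq. (3) can be written as:

$$w_{max} = w_{s} + w_{v} + w_{e} \tag{3}$$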

In a subsequent study, Bray and Macedo (2017) performed a large number of parametric numerical analyses and fitted analytical formulas to the calculation results. In particular, they suggest computing the different terms as follows:

- integrate with depth the volumetric strain computed with the procedure suggested by Zhang et al. (2002) to compute wv;
- estimate the settlement due to sand ejecta (we) with an empirical function, built from case histories, of liquefaction indicators like the Liquefaction Severity Number LSN (van Ballegooy et al. 2014), the Liquefaction Potential Index LPI defined by Iwasaki et al. (1978) or its modified version defined by Ishihara (1985);
- compute the shear-induced settlement using the following equation (Bray and Macedo 2017):

$$\begin{aligned} \ln\left( w_{s} \right) & = c_{1} + 4.59 \cdot \ln\left( Q \right) - 0.42 \cdot \ln\left( Q \right)^{2} + c_{2} \cdot LBS + 0.58 \cdot \ln\left( \tanh\left( H_{L}/6 \right) \right) \\ & \quad - 0.02 \cdot B + 0.84 \cdot \ln\left( CAV_{dp} \right) + 0.41 \cdot \ln\left( S_{a1} \right) + \varepsilon \end{aligned} \tag{4}$$

where ws is expressed in mm, Q is the unit contact pressure on the foundation (kPa), HL (m) is the thickness of the liquefiable layer and B (m) is the smaller plan dimension of the building footprint. CAVdp (g*s) is the cumulative absolute velocity (Campbell and Bozorgnia 2011; EPRI 1988) and Sa1 is the spectral acceleration at T = 1.0 s (g). The use of CAV to characterize the seismic signal reflects the concept that liquefaction is dictated more by the energy released by the earthquake than by its peak intensity.

Particular relevance is assumed by the index \(LBS = \smallint \frac{{\varepsilon_{shear} }}{z}dz\), computed integrating with depth the shear deformation εshear (Zhang et al. 2002) below the foundation plane. It also dictates the two coefficients c1 and c2, equal respectively to −8.35 and 0.072 for LBS ≤ 16, and to −7.48 and 0.014 otherwise.

The term computed by Eq. (4) represents the median of the results of the numerical analyses. In fact, the authors suggest quantifying the uncertainty connected with the use of a simplified formulation by treating ε as a normal random variable with zero mean and standard deviation 0.5 in natural logarithmic units.
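The evaluation of Eq. (4) and of the scatter around its median can be sketched as follows. The function names and the input values of the example are illustrative assumptions; the coefficients are those quoted in the text.

```python
import math

def shear_settlement_median(Q, LBS, HL, B, CAVdp, Sa1):
    """Median shear-induced settlement ws (mm) from Eq. (4), with epsilon = 0.

    Q: contact pressure (kPa); LBS: liquefaction-induced shear strain index;
    HL: thickness of the liquefiable layer (m); B: smaller plan dimension (m);
    CAVdp: cumulative absolute velocity (g*s); Sa1: spectral acceleration at 1 s (g).
    """
    c1, c2 = (-8.35, 0.072) if LBS <= 16 else (-7.48, 0.014)
    ln_q = math.log(Q)
    ln_ws = (c1 + 4.59 * ln_q - 0.42 * ln_q ** 2 + c2 * LBS
             + 0.58 * math.log(math.tanh(HL / 6.0)) - 0.02 * B
             + 0.84 * math.log(CAVdp) + 0.41 * math.log(Sa1))
    return math.exp(ln_ws)

def settlement_at_sigma(median, n_sigma, sigma_ln=0.5):
    """Settlement at n_sigma standard deviations (ln units) from the median."""
    return median * math.exp(n_sigma * sigma_ln)

# Hypothetical inputs for a single building, chosen only to exercise the formula.
ws = shear_settlement_median(Q=50.0, LBS=10.0, HL=5.0, B=10.0, CAVdp=0.5, Sa1=0.3)
ws_minus = settlement_at_sigma(ws, -1.0)  # about the 16th percentile
ws_plus = settlement_at_sigma(ws, +1.0)   # about the 84th percentile
```

With a standard deviation of 0.5 in logarithmic units, the band of one standard deviation spans a factor of about 1.65 on either side of the median.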

3.2 Differential versus absolute settlements

The factors influencing differential settlements of buildings, recalled in Eurocode 7 (EN 1997-1 2004), include:

- occurrence and rate of settlements;
- variation of ground properties;
- loading distribution;
- construction method and sequence of loading;
- stiffness of structures.

Bray and Macedo (2017) and Bullock et al. (2019) provide probabilistic estimates of the differential settlements of buildings subjected to liquefaction. In the present study, the role of the above factors on the differential settlements is quantified with a specific calculation. Neglecting the effects of construction sequence, which is not related to liquefaction, and considering for simplicity a uniform load distribution on building foundations, the effects of settlement rate, variation of subsoil properties and stiffness of the structure have been evaluated by performing a parametric numerical analysis (ITASCA V2D 2016). The analysis shows that, while the settlement distribution depends on different factors such as seismic motion, subsoil layering and properties, foundation width, soil-foundation contact and building inertia (e.g. Karimi et al. 2018; Ramirez 2019; Tokimatsu et al. 2019), the ratio between distortion and absolute settlement depends mainly on the stiffness of the structure-foundation system and on the variability of subsoil properties.

The studied subsoil scheme consists of three strata (Fig. 3): an intermediate liquefiable layer, whose stress–strain response has been simulated with a critical state-based plasticity model (PM4Sand; Ziotopoulou and Boulanger 2013), and a lower base and an upper crust, whose stress–strain response has been simulated with hysteretic models coupled with the Mohr–Coulomb (MC) failure criterion. For the sake of validation, the model has been inspired by the case study of a building in Terre del Reno (Emilia-Romagna, Italy) struck by the earthquake of May 20th 2012 (Mw 6.1). Figure 3 summarizes the model characteristics, geometrical mesh and constitutive parameters. Further details can be found in Modoni et al. (2019).

Numerical model implemented to study the absolute versus differential settlement relation. ρ: soil density; K: bulk modulus; G: shear modulus; n: porosity; k: soil permeability; ϕ: friction angle; c: cohesion; L1 and L2: soil damping parameters; Dr: relative density; G0: small-strain shear stiffness; hpo: plastic modulus calibration parameter; nd: dilatancy surface calibration coefficient; nb: bounding surface calibration coefficient; Ad0: dilatancy calibration coefficient

In a first series of analyses, the role of the flexural stiffness of the building-foundation system is examined. The studied scheme, depicted in Fig. 4, includes a 10 m long plate of variable flexural stiffness EI (from 0 to 260 MN*m), carrying a uniform load (50 kPa). To estimate the influence of the seismic input, the calculation is performed for three different acceleration time histories: the one recorded during the May 20th earthquake in Emilia-Romagna and two others obtained by scaling it by factors of 0.7 and 1.6, respectively. The plot of Fig. 4b, reporting the angular distortion β (defined in Fig. 4a) as a function of the maximum settlement wmax, shows a clear correlation between the two variables. Both depend significantly on the seismic input, but the angular distortion can be significantly restrained by increasing the slab stiffness. It is also worth noting that the dots representing EI = 0 closely match the curve proposed by Grant et al. (1974), which represents the upper bound of observations under static conditions. Multiple analyses, performed with different sets of characteristics (e.g. soil relative density, layer thicknesses, foundation width), have shown a limited influence of these factors on the β-wmax relation.

The second considered factor is the variability of subsoil properties, indicated by Ishihara and Yoshimine (1992) as one of the main causes of differential settlements. This issue has been investigated by introducing in the above numerical model the spatial variability of relative density in the liquefiable soil layer and performing random field analyses (Fenton and Griffiths 2000). The definition of spatial variability requires an autocorrelation function, which reproduces the spatial dependency within relatively short distances, and a stochastic function describing the spatially uncorrelated variability, i.e. at larger distances. These functions have been derived from the investigation carried out in Terre del Reno (Italy). The spatially correlated variability has been modelled with an anisotropic exponential function having a vertical autocorrelation distance of 0.77 m, found with a maximum likelihood criterion (Honjo and Kazumba 2002) on the profiles of CPT tests (Fig. 5a); the horizontal autocorrelation distance has been assigned following literature indications (Stuedlein et al. 2012), which suggest values between 2 and 5 m depending on the depositional history of the deposit. In the present case, the lower value (2.0 m) has been assumed to maximize subsoil heterogeneity and the distortional effects on the foundation. The random variability of relative density has been modelled with a lognormal probability function with mean equal to 0.36, based on the interpretation of CPT tests (Robertson and Wride 1998; Idriss and Boulanger 2008) (Fig. 5a). The standard deviation found in this specific case is 0.07, but this value has been parametrically varied in the subsequent analyses for the sake of generality. Fields of relative density like the one depicted in Fig. 5b can be randomly generated with the local average subdivision method (Fenton and Griffiths 2000) and used as input for the calculation of settlements.
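A spatially correlated lognormal field with the statistics quoted above can be generated, for illustration, with the sketch below. It uses a Cholesky factorization of the correlation matrix rather than the local average subdivision method adopted in the study, and it assumes the correlation form exp(−lag) with lags scaled by the two autocorrelation distances. The grid size, grid spacing and function name are also assumptions.

```python
import numpy as np

def lognormal_random_field(nx, nz, dx, dz, mean, std, theta_h, theta_v, rng):
    """One realization of a 2D lognormal field on a regular grid, shape (nz, nx)."""
    # Parameters of the underlying normal variable ln(Dr).
    sigma_ln = np.sqrt(np.log(1.0 + (std / mean) ** 2))
    mu_ln = np.log(mean) - 0.5 * sigma_ln ** 2
    x = np.arange(nx) * dx
    z = np.arange(nz) * dz
    gx, gz = np.meshgrid(x, z)
    px, pz = gx.ravel(), gz.ravel()
    # Anisotropic exponential correlation (assumed form): lags are scaled
    # by the horizontal and vertical autocorrelation distances.
    lag = np.sqrt(((px[:, None] - px[None, :]) / theta_h) ** 2
                  + ((pz[:, None] - pz[None, :]) / theta_v) ** 2)
    corr = np.exp(-lag)
    # A small jitter keeps the factorization numerically stable.
    chol = np.linalg.cholesky(corr + 1e-10 * np.eye(corr.shape[0]))
    gauss = chol @ rng.standard_normal(corr.shape[0])
    return np.exp(mu_ln + sigma_ln * gauss).reshape(nz, nx)

rng = np.random.default_rng(0)
field = lognormal_random_field(nx=20, nz=8, dx=0.5, dz=0.25, mean=0.36,
                               std=0.07, theta_h=2.0, theta_v=0.77, rng=rng)
```

The Cholesky approach is practical only for small grids, since the correlation matrix grows with the square of the number of cells; it is used here because it makes the role of the autocorrelation distances explicit.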

The numerical analyses have been performed by assigning random fields of relative density to the liquefiable layer in the scheme of Fig. 4a, i.e. a 10 m wide slab with uniform unit load q = 50 kPa. Zero flexural stiffness (EI = 0) has been assigned to the slab to reproduce the most conservative condition according to Fig. 4b, i.e. the one that amplifies differential settlements. As before, the seismic input has been assigned by scaling the acceleration time history of the May 20th event in Terre del Reno by factors of 0.7, 1.0 and 1.6. The variability of subsoil properties has been parametrically varied by assigning different coefficients of variation to the log-normal random distribution (CV = 0.1, 0.2 and 0.3). These quantities, together with the low assumed values of autocorrelation distance, should provide conservative estimates of the differential settlement (see Stuedlein et al. 2012).

For each combination of factors, twenty random fields of relative density have been generated and subjected to the numerical calculation; this number has been chosen considering the time necessary for one calculation. Absolute and differential settlements have been estimated from the deformation of the slab as shown in the sketch of Fig. 6a. The plot in the same figure readily shows that, in spite of the large variation of settlements generated by seismic inputs of largely different intensity, a direct proportionality can be inferred between differential (δmax) and absolute (wmax) values.

a Differential (δmax) versus absolute (wmax) settlements from the parametric random field analysis; and b statistical distribution of the coefficient α defined in Eq. (5)

Based on this observation, the following linear relation is adopted between these two variables:
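Given the proportionality noted above and the coefficient α named in the caption of Fig. 6, the relation referred to as Eq. (5) presumably takes the form:

$$\delta_{max} = \alpha \cdot w_{max} \tag{5}$$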

Figure 6b shows the statistical distribution of the coefficient α, from which a normal distribution having mean value equal to 0.53 and standard deviation equal to 0.08 is inferred.

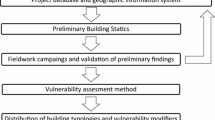

4 Methodology

The above relations form the sequence of steps summarized in the flow chart of Fig. 7, conceived to estimate losses on a portfolio of buildings. The procedure requires identifying and characterizing each building with reference to the implemented calculation, i.e. determining the geographical coordinates of its centroid, its structural typology, extension and number of storeys, the latter being necessary to identify the appropriate fragility function and estimate the unit load. The subsoil is characterized by the depth, width and relative density of the liquefiable layer, which requires processing all available investigations (boreholes, CPT profiles) as described in Sect. 5.1.2. Combining this information with the base seismic input, the local seismic response can be analyzed at the building position to determine the parameters necessary for the further steps of the analysis. These consist in estimating the maximum absolute settlement of the building with Eq. (3), transforming it into a differential settlement with Eq. (5), and computing the probability associated with each damage level with the procedure described in Fig. 2 and Table 2. Finally, the estimate of losses is obtained by multiplying each of these probabilities by the cost associated with the corresponding damage level (Eq. 2). The damage computed on each building is a fraction MDR of the total demolition/reconstruction cost.
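The chain for a single building can be sketched with a simple Monte Carlo propagation of the two uncertainties quantified earlier: the log-normal scatter of the settlement estimate (standard deviation 0.5 in logarithmic units) and the normal distribution of α (mean 0.53, standard deviation 0.08). Applying the scatter to the whole absolute settlement, the fragility medians and dispersions, the loss ratios and all function names are assumptions made for illustration.

```python
import math
import numpy as np

_erf = np.vectorize(math.erf)

def exceedance_probability(edp, median, beta):
    """Log-normal fragility evaluated elementwise on an array of demands."""
    return 0.5 * (1.0 + _erf(np.log(edp / median) / (beta * math.sqrt(2.0))))

def expected_mdr(w_median, medians, betas, loss_ratios, n=20000, seed=0):
    """Mean Damage Rate of one building for a median absolute settlement (m)."""
    rng = np.random.default_rng(seed)
    # Assumption: the ln-scatter of Eq. (4) is applied to the total settlement.
    w = w_median * np.exp(rng.normal(0.0, 0.5, n))
    # Ratio between differential and absolute settlement, kept positive.
    alpha = np.clip(rng.normal(0.53, 0.08, n), 1e-6, None)
    delta = alpha * w
    exceed = [exceedance_probability(delta, m, b).mean()
              for m, b in zip(medians, betas)]
    exceed.append(0.0)
    probs = [max(exceed[i] - exceed[i + 1], 0.0) for i in range(len(medians))]
    return sum(p * r for p, r in zip(probs, loss_ratios))

# Hypothetical placeholders, NOT the values of Table 1 or Table 2.
medians = [0.05, 0.10, 0.20, 0.40]      # differential settlement (m)
betas = [0.5, 0.5, 0.5, 0.5]
loss_ratios = [0.02, 0.10, 0.50, 1.00]

mdr_low = expected_mdr(0.03, medians, betas, loss_ratios)
mdr_high = expected_mdr(0.30, medians, betas, loss_ratios)
```

Repeating the calculation for every building of the portfolio and multiplying each MDR by the corresponding reconstruction cost gives the loss map for the selected scenario.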

The calculation can be performed for a selected seismic scenario, assigning the corresponding seismic input, or for the entire lifecycle of the building. In this second case, the seismic input must be defined in probabilistic terms, considering the seismic hazard of the area.

5 Case study validation

The city of Christchurch, located in the Canterbury Region of the South Island of New Zealand, was repeatedly struck by earthquakes during the seismic sequence that occurred in 2010–2011 (Cubrinovski 2013). At that time the city had a population of about 375,000 inhabitants and was the second largest city of the country. The geological setting consists of recent alluvial deposits laid down by the Waimakariri River and fine marine sediments deposited on the coastal margin of the floodplain and in estuaries and lagoons. The Canterbury sequence (Christophersen et al. 2013) generated thousands of earthquakes, about six hundred of them with moment magnitude Mw > 4, and two most noticeable events: the strongest one (Mw 7.1) occurred on September 4th 2010 in Darfield, about forty kilometres from the Christchurch CBD, and a Mw 6.2 aftershock occurred on February 22nd 2011 just below the city. Due to this proximity, the consequences of the second event were particularly severe, with 185 fatalities and widespread devastation of dwellings and infrastructure. Liquefaction played the major role, causing the displacement of about 15,000 families, the temporary abandonment of nearly 20,000, the demolition of 8000 buildings (including 70% of the buildings in the CBD) and the removal of 900,000 tons of liquefied soil (Tonkin & Taylor 2013). In an area just outside the CBD, named “The Red Zone” after the earthquake, all buildings were completely damaged and subsequently demolished (Fig. 8). Widespread losses have involved the community in a slow, tiring and still ongoing reconstruction process. Although not the only event of such a size, the case of Christchurch is probably the most impressive example of liquefaction-induced damage in an urban environment. The present study focuses on the evidence of liquefaction caused by the February 22nd 2011 earthquake in the central part of the city. This area, highlighted in Fig. 8, includes the Central Business District (CBD) and many reinforced concrete buildings that suffered damage of different severity.

5.1 Creation of databases

The proposed methodology requires collecting and processing data of different nature, referring them to their geographical position, cross-referencing the information and computing the different terms of damage and risk. Building typology and characteristics, together with subsoil composition and properties for the city of Christchurch, have thus been combined in a GIS platform to predict damage with the scheme of Fig. 7 and compare the estimates with the post-earthquake survey of buildings.

5.1.1 Typology, characteristics and damage of buildings

The data on buildings have been recovered from the Canterbury Earthquake Building Assessment (CEBA) database (Lin et al. 2014, 2016). This database was developed from post-earthquake data collected by Christchurch City Council (CCC), the Canterbury Earthquake Recovery Authority (CERA), and Tonkin and Taylor Limited (Lin et al. 2016) to quickly assess the health state of buildings and identify possible dangers to public safety. It provides information on damaged buildings, including addresses, year of construction, structural attributes such as typology, systems and construction material, current state of conservation, number of storeys and fundamental period (Ty), peak ground and spectral acceleration (PGA, Sa(Ty)), plus other information (Fikri et al. 2018). A total of 10,777 damaged buildings were documented in the CEBA database following the Canterbury earthquakes, of which 6062 were classified as residential (56%) and 3528 (33%) as commercial (Lin et al. 2016). The distribution of buildings over the territory (Fig. 9a, b) shows that the largest part consists of relatively light wooden structures (61%) with one or two storeys, a lower percentage (16%) is made of heavier masonry walls, while the remaining part consists of reinforced concrete (21%) or steel frames (2%). While wooden buildings are distributed all over the city area, the reinforced concrete buildings are mainly concentrated in the central part of the city, around the Christchurch CBD (Fig. 9c).

After each major earthquake event, a detailed survey of the damage to land and dwellings was undertaken by teams of geotechnical engineers coordinated by the agencies of the NZ Government. Maps of liquefaction-induced damage were produced to assess the extent and severity of the surface effects. With regard to buildings, different types of damage were observed, strictly dependent on the structural typology: the damage to wooden buildings was mainly due to ground cracks and sand ejecta, with a negligible role of the building due to its limited weight and weak foundation (Fig. 10a); the damage to taller reinforced concrete buildings was due to differential deformation at the foundation level, more heavily affected by the presence of the building (Fig. 10b).

a Type of damage on wooden; and b reinforced concrete buildings (from Cubrinovski et al. 2011c) (the yellow arrows in figure b represent the settlement distribution along the building)

In the former case, field observations were categorized into three main classes according to the quantity of ejected material observed on the ground surface and to the presence or absence of cracks and lateral spreading. The map of land damage for the event of February 2011 (Fig. 11a) shows the areas where no liquefaction was observed in blue and green, the areas characterized by minor to moderate sand ejecta and cracks in yellow, and the areas affected by severe liquefaction, major cracks and lateral spreading in red. For completeness, the same classification after the September 2010 event is reported in Fig. 11b. Comparing these two plots, it readily emerges that the Central Business District, which hosts the reinforced concrete buildings analyzed in the present study (see also Fig. 8), was not affected by the September 2010 earthquake, so an interaction between the two events seems unlikely. On the contrary, some overlapping of liquefied zones in the Red Zone may suggest that the previous event increased the liquefaction susceptibility of the soil shaken by the second event. In fact, the effects of multiple earthquakes on the liquefaction susceptibility of soil are controversial and largely debated in the literature (Cubrinovski et al. 2011d; van Ballegooy et al. 2014). Considering the different distribution of evidence shown by Fig. 8 and the relatively limited subsidence recorded after the September 2010 event (https://www.nzgd.org.nz/), this influence has been neglected herein, and the prediction for the earthquake of February 22nd 2011 has been performed independently of the past event.

Liquefaction and Lateral Spreading Observations from Canterbury Geotechnical Database (2013). Map Layer CGD0300—retrieved on 22nd September 2016 from https://canterburygeotechnicaldatabase.projectorbit.com/

Subsequently, another survey named Detailed Engineering Evaluation (DEE) was completed for business and multi-storey residential buildings (Lin et al. 2014). Based on these reports, damage induced by liquefaction was classified as minor, moderate or major according to the criterion introduced by van Ballegooy et al. (2014), which defines severity classes (Level: #1 minor, #2 moderate and #3 major) for different types of foundation damage (Fig. 12a). The map with the classification of damage for the buildings of Christchurch is plotted in Fig. 12b.

a Damage survey criterion from van Ballegooy et al. (2014); and b mapping of the observed liquefaction-induced building damage

5.1.2 Subsoil composition and properties

The subsoil data used in the present analysis have been extracted from the New Zealand Geotechnical Database (NZGD, https://www.nzgd.org.nz/). This database, initially known as the “Canterbury Geotechnical Database”, was first promoted by the New Zealand Government through the Ministry of Business, Innovation and Employment (MBIE) and the Earthquake Commission (EQC) to assist the rebuild of Christchurch, and was interconnected with CEBA to encourage data sharing among public and private stakeholders. Its success among the scientific community and private companies then led to its transformation into the New Zealand Geotechnical Database (NZGD), aimed at increasing resilience against natural disasters throughout New Zealand. As of May 2019, the NZGD contained over 35,800 cone penetration tests, 18,700 boreholes, 1000 piezometers with accompanying groundwater monitoring records and 6000 laboratory test records (plus other data and maps), and it is constantly updated. In the present study, about 9000 Cone Penetration Test (CPT) profiles extending below 10 m depth from the ground surface, uniformly distributed over the studied territory, have been considered. Such an unprecedented density of subsoil data (Fig. 13a) makes it possible to reconstruct with sufficient accuracy the distribution of the different variables over the territory. The creation and processing of the database has consisted of the following steps:

- individual scrutiny of each CPT profile and homogenization to a standardized digital format, in order to enable automatic processing and restitution of the analysis results on maps;

- filtering of inconsistent data from the considered dataset with the boxplot test (Montgomery et al. 2012) explained in Sect. 2.1. The sample test shown in Fig. 13b implies a direct comparison between measured and interpolated values of the representative variable, and the definition of a threshold for the exclusion of inconsistent data; in the present case, the tolerability criterion has been fixed considering the 25th and 75th percentiles of the error distribution (Q1 and Q3) and the interquartile range (IQR = Q3 − Q1), and discarding the data falling outside the interval [Q1 − 1.5·IQR, Q3 + 1.5·IQR]; this process led to discarding about 400 samples from the total number of CPT profiles;

- estimate of the spatial distribution of variables in terms of mean values and standard errors. As an example, Fig. 13c shows the distribution of mean values and standard errors of the Liquefaction Severity Number LSN (van Ballegooy et al. 2014) computed for the February 22nd, 2011 earthquake in the central area of the city.
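The boxplot filter of the second step can be sketched as follows. This is only a minimal illustration, assuming that the error is the difference between measured and interpolated values at each CPT location; the function name and the sample numbers are hypothetical and are not taken from the study.

```python
import numpy as np

def iqr_outlier_mask(measured, interpolated, k=1.5):
    """Return a boolean mask that is True for the data kept by the boxplot test.

    The error is assumed to be measured minus interpolated value (an assumption
    of this sketch); data are kept when the error lies in
    [Q1 - k*IQR, Q3 + k*IQR].
    """
    errors = np.asarray(measured, dtype=float) - np.asarray(interpolated, dtype=float)
    q1, q3 = np.percentile(errors, [25, 75])
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    return (errors >= lower) & (errors <= upper)

# Hypothetical values of a representative variable (e.g. a layer thickness in m)
measured = np.array([10.0, 11.0, 9.5, 10.5, 30.0, 10.2])
interpolated = np.array([10.1, 10.8, 9.9, 10.4, 10.3, 10.0])
keep = iqr_outlier_mask(measured, interpolated)
print(keep)  # the fifth profile is flagged as inconsistent
```

In a real workflow the interpolated values would come from a cross-validation of the kriging estimate, so that each measurement is compared with a prediction that does not include it.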

The above database also makes it possible to verify the applicability of the models and to identify possible limitations of the performed analysis. In this specific case, the models adopted to evaluate absolute and differential settlements [e.g. Eqs. (3) and (5)], but also the empirical indicators foreseeing the effects of liquefaction (e.g. LSN), postulate a relatively simple mechanism where liquefaction occurs in a single layer underlying a non-liquefiable crust. More complex effects, like those envisaged by Cubrinovski and van Ballegooy (2017), must on the contrary be expected for alternations of susceptible and non-susceptible layers. Therefore, the application of the above models to such situations could be inappropriate and lead to significant errors. To exclude this issue, the equivalence of the subsoil profile derived from each CPT to a three-layer model is verified with the criterion defined by Millen et al. (2019). This criterion identifies 22 equivalent soil profile (ESP) classes of different weakness, each identified by a combination of thickness of the liquefiable layer, thickness of the crust and average cyclic resistance ratio (CRR; Boulanger and Idriss 2014), and measures the compliance of the subsoil profile with these classes by computing a normed error. The application of this test to the total number of 8300 CPT profiles gives intolerable normed errors (with an assumed threshold equal to 0.15), i.e. the schematization with a three-layer model is unacceptable, only for a limited number of cases (106 profiles, about 1.2% of the total). These profiles are mostly distributed in the southern part of the city, with just a few isolated exceptions in the other parts. This result suggests that the subsoil of the Christchurch CBD can reasonably be assumed to conform to the three-layer model (see Fig. 13a) and authorizes the application of this schematization with sufficient confidence.

Additionally, the analysis of outliers shows that the information from the CPT profiles is largely consistent all over the territory, apart from a limited fraction of data reported with red dots in Fig. 13a. This inconsistency stems from small variations of the test results which, coupled with the implemented automatic processing, lead to the misclassification of some soil layers and to thicknesses of crust and liquefiable layers inconsistent with the local spatial distribution computed with kriging. This error could be corrected by scrutinizing these CPT profiles individually but, considering the very large number of CPT profiles available in the present study, all inconsistent profiles have been removed. In general, this measure has improved the estimate, as certified by a lower standard error (Fig. 13c).

5.2 Estimate of damage on reinforced concrete buildings

With the above databases, the procedure illustrated in Fig. 7 has been implemented as follows to estimate the damage induced on the reinforced concrete buildings by liquefaction during the February 22nd 2011 earthquake:

- selection of the reinforced concrete buildings resting on subsoil that can be outlined with a three-layer model;

- evaluation of the absolute settlement wmax of each building with Eq. (3); the unit load Q is estimated by multiplying the number of storeys by a load per unit area equal to 10 kPa (this value is assumed as a summary of the different loading contributions); Sa1 and CAVdp have been estimated considering the ground motion recordings within the Christchurch CBD (Bray and Macedo 2017);

- evaluation of the differential settlement as a function of the absolute settlement with Eq. (5), where α is assumed equal to the mean value (0.53) of the observed distribution (see Fig. 6);

- computation of the probability associated with each damage level with the functions given in Fig. 2 and Table 2;

- computation of the Mean Damage Rate (MDR) with the loss factors for each damage level given in Table 1.
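The last three steps of the list above can be sketched as follows for a single building, taking the absolute settlement wmax as already computed with Eq. (3). This is a minimal illustration under stated assumptions: the fragility functions are assumed to be lognormal, and the medians, dispersions and loss factors below are hypothetical placeholders standing in for the values of Table 2 and Table 1, which are not reproduced here. Only α = 0.53 and the 10 kPa load per storey come from the text.

```python
import math

ALPHA_MEAN = 0.53            # value of alpha reported in the text
LOAD_PER_STOREY_KPA = 10.0   # load per unit area and per storey assumed in the text

# HYPOTHETICAL placeholders (not the values of Table 2 and Table 1):
# damage state -> (median differential settlement [m], lognormal dispersion)
FRAGILITY = {
    "slight": (0.05, 0.5),
    "moderate": (0.10, 0.5),
    "extensive": (0.20, 0.5),
    "complete": (0.40, 0.5),
}
LOSS_FACTOR = {"slight": 0.1, "moderate": 0.3, "extensive": 0.6, "complete": 1.0}

def unit_load(n_storeys):
    """Unit load Q [kPa] transferred to the foundation."""
    return n_storeys * LOAD_PER_STOREY_KPA

def differential_settlement(w_max, alpha=ALPHA_MEAN):
    """Differential settlement from the linear relation delta_max = alpha * w_max."""
    return alpha * w_max

def lognormal_cdf(x, median, beta):
    """Probability of exceeding a damage state (lognormal form assumed here)."""
    if x <= 0.0:
        return 0.0
    return 0.5 * (1.0 + math.erf(math.log(x / median) / (beta * math.sqrt(2.0))))

def damage_state_probabilities(delta_max):
    """Probability of being in each damage state, from differences of exceedance."""
    states = list(FRAGILITY)
    exceed = [lognormal_cdf(delta_max, *FRAGILITY[s]) for s in states]
    probs = {}
    for i, state in enumerate(states):
        next_exceed = exceed[i + 1] if i + 1 < len(states) else 0.0
        probs[state] = exceed[i] - next_exceed
    return probs

def mean_damage_rate(w_max):
    """MDR as the sum of damage-state probabilities times loss factors."""
    probs = damage_state_probabilities(differential_settlement(w_max))
    return sum(probs[s] * LOSS_FACTOR[s] for s in probs)

print(unit_load(5), round(mean_damage_rate(0.20), 3))
```

With the actual parameters of Table 2, the fragility set would also depend on the number of storeys, which is how taller buildings end up with a higher vulnerability in the procedure.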

Despite the numerous assumptions and approximations inherent in its definition, MDR has been adopted here as the indicator of damage, considering that it incorporates multiple factors such as the released seismic energy and the subsoil and building characteristics. Looking at the global map of Fig. 14a, the buildings located in the western part of Christchurch generally assume low MDR values (< 0.15), consistent with the limited observed effects of liquefaction (Fig. 11). These values increase in the southern and eastern parts of the CBD, where the subsoil becomes more susceptible. The information given by the map of Fig. 14b overlaps quite closely with the distribution of LSN plotted in Fig. 13c. However, largely different values (< 0.15 and > 0.60) also occur for buildings located at relatively small distances, i.e. in similar subsoil conditions. This result is dictated by the number of storeys, as taller buildings transfer higher loads to the foundation and are characterized by a higher vulnerability, in accordance with the lower median of the fragility function defined in Table 2.

5.3 Validation

The effectiveness of prediction is assessed by comparing the estimated MDR (Fig. 14) with the damage observed after the February 22nd, 2011 event (Fig. 12) and evaluating the performance of the method with a quantitative indicator. The method based on the Receiver Operating Characteristic (ROC) curve (Kongar et al. 2015) is adopted with this aim. It compares prediction and observation in 2 × 2 contingency tables, classifying each occurrence as true positive (TP), true negative (TN), false positive (FP) or false negative (FN). Then, considering that the prediction outcomes (positive or negative) depend on the threshold assumed for the characteristic variable, the method computes the true positive ratio (TPR, i.e. the fraction of positive events predicted as positive) and the false positive ratio (FPR, i.e. the fraction of negative events predicted as positive) for increasing threshold values, and plots all values in the FPR-TPR plane as in Fig. 15. For a zero threshold, TPR and FPR are both equal to 1 (all events, positive or negative, are predicted as positive); then both variables tend to zero for increasing thresholds (at the maximum threshold all events, positive or negative, are predicted as negative). If the predictive method is valid and a well-defined threshold can be set, the curve passes near the upper-left corner of the plot (FPR = 0, TPR = 1), which represents the ideal condition (all negative events are predicted as negative, all positive events are predicted as positive). On the contrary, in the case of poor prediction, the curve runs along the 1:1 line bisecting the plane, which means that positive and negative events are randomly predicted as positive or negative. With this representation, the Area Under the Curve (AUC) can be assumed as a proxy for the quality of prediction (1 means good, 0.5 poor). The optimal threshold, i.e. the decision value of the variable that defines the best separation between negative and positive events, can be computed as the one giving the maximum Matthews Correlation Coefficient (Matthews 1975):

$$MCC = \frac{TP \cdot TN - FP \cdot FN}{\sqrt{(TP + FP)(TP + FN)(TN + FP)(TN + FN)}} \qquad (6)$$

where TP, TN, FP and FN are the numbers of true positive, true negative, false positive and false negative occurrences, respectively. From a statistical viewpoint, MCC is related to the chi-squared statistic for the 2 × 2 contingency table (|MCC| = √(χ²/n), with n the total number of observations) and its interpretation is like that of Pearson’s correlation coefficient, so it can be treated as a measure of the goodness-of-fit of a binary classification model (Powers 2011). It is noted that MCC considers success and failure of prediction (for both positive and negative occurrences) in the same way, i.e. without distinguishing the consequences of misprediction. To account for this issue, Maurer et al. (2015) propose to set the optimal decision thresholds by evaluating the economic consequences of misprediction, i.e. multiplying the terms of Eq. (6) by weighting factors. Although this issue becomes relevant when risk assessment is preparatory to the remediation of buildings, this logic has not been introduced in the present methodological analysis, i.e. the same weight has been given to all terms, to avoid subjective considerations on the cost of repair and remediation. In the same spirit, the overall success and failure rates (OSR and OFR), defined respectively as the percentages of successful and unsuccessful predictions, have been computed here to evaluate the prediction performance.
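The validation procedure described above can be sketched in a few lines. This is a minimal illustration with hypothetical data: the scores stand for a predictive variable such as MDR, the boolean flags for the observed damage, and an event is predicted as positive when its score equals or exceeds the threshold (a convention assumed here). MCC is computed with its standard definition.

```python
import math

def confusion_counts(scores, observed, threshold):
    """Count TP, FP, TN, FN, predicting positive when score >= threshold."""
    tp = fp = tn = fn = 0
    for score, is_damaged in zip(scores, observed):
        predicted = score >= threshold
        if predicted and is_damaged:
            tp += 1
        elif predicted and not is_damaged:
            fp += 1
        elif not predicted and is_damaged:
            fn += 1
        else:
            tn += 1
    return tp, fp, tn, fn

def mcc(tp, fp, tn, fn):
    """Matthews Correlation Coefficient; returns 0 when the denominator vanishes."""
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return (tp * tn - fp * fn) / denom if denom > 0 else 0.0

def roc_analysis(scores, observed):
    """Return AUC, optimal threshold (maximum MCC), its MCC and the OSR.

    Requires at least one positive and one negative observation.
    """
    n_pos = sum(1 for o in observed if o)
    n_neg = len(observed) - n_pos
    points = []
    best_t, best_mcc = None, -math.inf
    for t in sorted(set(scores)) + [math.inf]:
        tp, fp, tn, fn = confusion_counts(scores, observed, t)
        points.append((fp / n_neg, tp / n_pos))   # (FPR, TPR)
        m = mcc(tp, fp, tn, fn)
        if m > best_mcc:
            best_t, best_mcc = t, m
    points.sort()
    # Trapezoidal integration of TPR over FPR
    auc = sum((x1 - x0) * (y0 + y1) / 2.0
              for (x0, y0), (x1, y1) in zip(points, points[1:]))
    tp, fp, tn, fn = confusion_counts(scores, observed, best_t)
    osr = (tp + tn) / len(observed)               # overall success rate
    return auc, best_t, best_mcc, osr

# Hypothetical predicted values and observed damage flags
scores = [0.05, 0.10, 0.20, 0.35, 0.50, 0.70]
observed = [False, False, True, False, True, True]
auc, best_t, best_mcc, osr = roc_analysis(scores, observed)
print(round(auc, 3), best_t, round(best_mcc, 3), round(osr, 3))
# prints 0.889 0.2 0.707 0.833
```

When two thresholds give the same MCC, as happens in this small example, the sketch keeps the lower one; a cost-weighted criterion such as that of Maurer et al. (2015) would replace the `mcc` function.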

The validation method has thus been applied to the reinforced concrete buildings of Christchurch, considering separately minor, moderate and severe damage as the discriminating occurrence, in accordance with the post-seismic survey of buildings (Fig. 12). Observers may argue that a mismatch exists between the classification of damage used for prediction and that used in the damage survey. For prediction, Fotopoulou et al. (2018) define four classes of damage (Slight, Moderate, Extensive and Complete) in accordance with the criterion defined by Hazus (FEMA 2003). In the post-earthquake survey of damage at Christchurch, van Ballegooy et al. (2014) distinguished three classes (“Minor”, “Moderate”, “Severe”). An option to eliminate this mismatch would be the removal of a damage class in prediction. To avoid such a subjective choice, prediction and validation have been performed separately, adopting MDR as the predictive variable (it accounts for all four damage classes in a probabilistic way) and relating MDR to the observed damage.

For each condition, the area under the curve AUC (Fig. 15) and the values indicating the performance of prediction are reported in Table 3. These results indicate a variable capability of the proposed method to capture observation: the ROC curves lie above the 1:1 line, but at variable distances from it. The prediction is not particularly satisfactory for the minor damage level (Fig. 15a), possibly because the recognition of damage to buildings is subjective and not clearly and uniquely identifiable, which blurs the border between positive and negative occurrences. However, when looking at the overall success of prediction, i.e. the percentage of correctly predicted cases, an OSR value equal to 68.5% is obtained even for this class. The quality of prediction increases noticeably with the damage level, which means that a stronger relation exists between MDR and damage, as confirmed by the increasingly higher values of the decision threshold and of the Overall Success Rate.

5.3.1 Estimate of damage and validation for wooden buildings

As shown in Fig. 10a, the vulnerability of the light wooden dwellings of Christchurch is not much affected by the structural characteristics but mainly depends on the subsoil response. Damage to this building category can thus be strictly associated with the phenomena induced by liquefaction at the ground level, i.e. sand ejecta and lateral spreading. Given this premise, the validation test of Kongar et al. (2015) has also been applied to the wooden buildings, assuming the empirical indicator of land damage LSN defined by van Ballegooy et al. (2014) as the engineering demand parameter and deriving the damage level of each building from the classification of Fig. 11. The results summarized in Fig. 16 and Table 4 show a fairly good correlation between LSN and damage. The overall success rate of this prediction oscillates around 70% for all damage levels (basically two-thirds of the cases), in accordance with the outcomes reported by other authors (Kongar et al. 2015). The reason for misprediction could be sought in other factors not captured by the considered indicator, for instance the occurrence of two-dimensional conditions (e.g. local slope) that may aggravate damage (e.g. lateral spreading). However, it is important to observe that the decision thresholds, i.e. the values of LSN that maximize MCC, increase with the damage level and, for minor damage, assume a value similar to the threshold (= 10) suggested by van Ballegooy et al. (2014).
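For reference, the indicator used above is commonly written as LSN = 1000 ∫ (εv / z) dz, where εv is the post-liquefaction volumetric strain (as a decimal) and z the depth in metres below the ground surface. The following minimal sketch integrates this expression with the trapezoidal rule; the strain profile is hypothetical, and the way εv is obtained from a CPT (a separate triggering and strain procedure) is not covered here.

```python
def liquefaction_severity_number(depths_m, vol_strains):
    """LSN = 1000 * integral of eps_v / z over depth (trapezoidal rule).

    depths_m    : increasing depths below ground surface [m], all > 0
    vol_strains : volumetric strain at each depth, as a decimal (0.02 = 2%)
    """
    if any(z <= 0 for z in depths_m):
        raise ValueError("depths must be strictly positive")
    pairs = list(zip(depths_m, vol_strains))
    total = 0.0
    for (z0, e0), (z1, e1) in zip(pairs, pairs[1:]):
        total += 0.5 * (e0 / z0 + e1 / z1) * (z1 - z0)
    return 1000.0 * total

# Hypothetical profile: 2% strain between 2 m and 3 m, none above or below
depths = [1.0, 2.0, 3.0, 4.0]
strains = [0.0, 0.02, 0.02, 0.0]
lsn = liquefaction_severity_number(depths, strains)
print(round(lsn, 2))  # 16.67
```

The 1/z weighting gives more importance to shallow liquefiable layers, which is consistent with the use of LSN as an indicator of surface manifestations such as sand ejecta.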

6 Conclusion

The quantification of vulnerability is one of the most important yet delicate phases of the risk assessment process. For earthquake-induced liquefaction, vulnerability models must embrace seismic input and mechanical properties of subsoil and structure in a single scheme that captures their mutual interaction. Numerical tools coupled with recent constitutive models make it possible to simulate very accurately the role of each element concurring in the dynamic response of buildings founded on liquefiable soils. However, when dealing with territorial risk assessment, these models are hardly applicable, basically due to the lack of information, and thus a simpler characterization of factors becomes necessary. The present paper has explored the applicability of a vulnerability scheme to the assessment of reinforced concrete buildings at the urban scale. In the attempt to trade off simplicity and accuracy, recent solutions offered in the literature have been combined in a sequential procedure articulated in the following steps: (1) absolute settlements of buildings are estimated with a recent formula that incorporates the paramount factors, inferring their role from a large number of numerical analyses; (2) absolute settlements are then transformed into differential settlements, more indicative of damage, with a relation stemming from a parametric numerical calculation where the spatial variability of subsoil properties is probabilistically reproduced; and (3) differential settlements are finally used as engineering demand parameters of fragility functions quantifying the probability of damage for reinforced concrete buildings not specifically designed to resist seismic actions.

With awareness of the simplifications introduced and without pretending to be exhaustive, the proposed methodology introduces a robust logical structure to account for the most relevant factors contributing to building damage. Obviously, it is open to improvement. For instance, the fragility curves of Table 2 are derived for buildings with a fixed, although typical, array of columns and plan dimensions, while they have been applied to other building geometries. Additionally, the relation between differential and absolute settlements has been derived under simplified yet conservative assumptions, e.g. focusing on distortion and neglecting other possible causes of damage (e.g. tilting). Refinement should consist in the introduction of more pertinent schemes, e.g. a more detailed classification and characterization of buildings and more accurate calculation schemes for absolute and differential settlements.

Nevertheless, the validity of the method has been tested on the case study of the February 22nd 2011 earthquake of Christchurch (New Zealand), exploiting a rich catalogue of buildings, the post-earthquake survey of damage and a very dense database of geotechnical investigations. A geostatistical methodology has been proposed to manage the uncertainty connected with the determination of subsoil properties, filtering inconsistent data and quantifying the variance inherent in the estimate. The validation of the model, performed with a quantitative criterion, has revealed that a correlation exists between prediction and evidence, although with a variable degree of satisfaction. The Overall Success Rate, i.e. the fraction of successful predictions (damaged or undamaged), is about two-thirds for the lower damage level and increases to more than 90% for the higher classes.

A similar analysis has been performed for the light wooden buildings present in the city, considering damage associated to subsoil failure only, i.e. without considering the structural characteristics, and adopting the liquefaction severity number LSN (van Ballegooy et al. 2014) as engineering demand parameter. The success rate of prediction in this case has been equal to about two-thirds for all considered damage levels.

In conclusion, the proposed vulnerability scheme addresses an important component of the holistic risk assessment procedure outlined in Fig. 1. One of the most immediate outcomes of this scheme is the cost–benefit analysis anticipating possible mitigation strategies, which can be accomplished by coupling economic to physical damage and comparing the cost of mitigation on an annual basis (e.g. Hazus, FEMA 1998). The tools introduced in the analysis, e.g. the geostatistical assessment of subsoil properties, the fragility curves adopted to compute damage on buildings and the relation between differential and absolute settlements, incorporate a probabilistic quantification of the terms of Eq. (1). This development has not been exploited in the present study, as further consideration must be given to the uncertainty connected with the different factors that rule the absolute settlements of buildings. However, a procedure to estimate uncertainty and combine all factors is a future goal of the present research.

References

Baker JW, Faber MH (2008) Liquefaction risk assessment using geostatistics to account for soil spatial variability. J Geotech Geoenviron Eng 134:14–23

Bakir BS, Sucuoğlu H, Yilmaz T (2002) An overview of local site effects and the associated building damage in Adapazari during the 17 August 1999 Izmit earthquake. Bull Seismol Soc Am 92(1):509–526

Bertalot D, Brennan AJ (2015) Influence of initial stress distribution on liquefaction-induced settlement of shallow foundations. Geotechnique 65(5):418–428. https://doi.org/10.1680/geot.SIP.15.P.002

Bird J, Bommer J, Crowley H, Pinho R (2006) Modelling liquefaction-induced building damage in earthquake loss estimation. Soil Dyn Earthq Eng 26(2006):15–30

Boscardin MD, Cording EJ (1989) Building response to excavation-induced settlement. J Geotech Eng 115(1):1–21

Boulanger RW, Idriss IM (2014) CPT and SPT based liquefaction triggering procedures. Department of Civil and Environmental engineering, University of California at Davis, Davis

Bray JD, Dashti S (2014) Liquefaction-induced building movements. Bull Earthq Eng 12(3):1129–1156. https://doi.org/10.1007/s10518-014-9619-8

Bray JD, Macedo J (2017) 6th Ishihara lecture: simplified procedure for estimating liquefaction induced building settlement. Soil Dyn Earthq Eng 102:215–231. https://doi.org/10.1016/j.soildyn.2017.08.026

Brzev S, Scawthorn C, Charleson AW, Allen L, Greene M, Jaiswal K, Silva V (2013) GEM Building Taxonomy 2.0. GEM Technical Report 2013-02 V1.0.0

Bullock Z, Karimi Z, Dashti S, Porter K, Liel AB, Franke KW (2018) A physics-informed semi-empirical probabilistic model for the settlement of shallow-founded structures on liquefiable ground. Géotechnique. https://doi.org/10.1680/jgeot.17.P.174

Bullock Z, Dashti S, Karimi Z, Liel A, Porter K, Franke K (2019) Probabilistic models for residual and peak transient tilt of mat-founded structures on liquefiable soils. J Geotech Geoenviron Eng 145(2):04018108

Campbell KW, Bozorgnia Y (2011) Predictive equations for the horizontal component of standardized cumulative absolute velocity as adapted for use in the shutdown of U.S. nuclear power plants. Nucl Eng Des 241:2558–2569

CEN (2008) PrEN 1997-1. Geotechnical design-general rules. European Commission for Standardization, Brussels

Chilès JP, Delfiner P (2012) Geostatistics: modeling spatial uncertainty, 2nd edn. Wiley, Hoboken, p 726. ISBN 978-0-470-18315-1

Christophersen A, Rhoades DA, Hainzl S, Smith EGC, Gerstenberger MC (2013) The Canterbury sequence in the context of global earthquake statistics. GNS Science Consultancy Report 2013/196, December 2013

Comerio MC (ed) (2005) PEER testbed study on a laboratory building: exercising seismic performance assessment. PEER Report. PEER 2005/12

Cornell CA, Krawinkler H (2000) Progress and challenges in seismic performance assessment. PEER Center News 3:1–3

CSAPEISLA (2016) State of the Art and Practice in the Assessment of Earthquake-Induced Soil Liquefaction and Its Consequences, Report of the Committee on State of the Art and Practice in Earthquake Induced Soil Liquefaction Assessment; Board on Earth Sciences and Resources; Division on Earth and Life Studies; National Academies of Sciences, Engineering, and Medicine, ISBN: 978-0-309-44027-1

Cubrinovski M (2013) Liquefaction-induced damage in the 2010–2011 Christchurch (New Zealand) Earthquakes. In: Proceedings of the 7th international conference on case histories in geotechnical engineering, 29 Apr–4 May, Chicago, Illinois

Cubrinovski M, van Ballegooy S (2017) System response of liquefiable deposits. In: 3rd International conference on performance based design in earthquake geotechnical engineering

Cubrinovski M, Hughes M, Bradley B, McCahon I, McDonald Y, Simpson H, Cameron R, Christison M, Henderson B, Orense R, O’Rourke T (2011a) Liquefaction Impacts on pipe networks. Short Term Recovery Project No. 6 Natural Hazards Research Platform. University of Canterbury

Cubrinovski M, Bradley B, Wotherspoon L, Green R, Bray J, Wood C, Pender M, Allen J, Bradshaw A, Rix G, Taylor M, Robinson K, Henderson D, Giorgini S, Ma K, Winkley A, Zupan J, O’Rourke T, DePascale G, Wells D (2011b) Geotechnical aspects of the 22 February 2011 Christchurch earthquake. Bull N Z Soc Earthq Eng 44(4):205–226

Cubrinovski M, Bray J, Taylor M, Giorgini S, Bradley B, Wotherspoon L, Zupan J (2011c) Soil liquefaction effects in the central business district during the February 2011 Christchurch earthquake. Seismol Res Lett 82:893–904. https://doi.org/10.1785/gssrl.82.6.893

Cubrinovski M, Henderson D, Bradley B (2011d) Liquefaction impacts in residential areas in the 2010–2011 Christchurch earthquakes. In: Proceedings of the international symposium on engineering lessons learned from the 2011 Great East Japan Earthquake, March 1–4, 2012, Tokyo, Japan

Cubrinovski M, Hughes M, Bradley B, Noonan J, McNeill S, English G, Hopkins R (2014) Performance of horizontal infrastructure in Christchurch City through the 2010–2011 Canterbury earthquake sequence. Civil & Natural Resources Engineering, University of Canterbury, Christchurch

Cubrinovski M, Bray JD, de la Torre C, Olsen MJ, Bradley BA, Chiaro G, Stocks E, Wotherspoon L (2017) Liquefaction effects and associated damages observed at the Wellington CentrePort from the 2016 Kaikoura Earthquake. Bull N Z Soc Earthq Eng 50(2):152–173

Cubrinovski M, Bray JD, de la Torre C, Olsen MJ, Bradley BA, Chiaro G, Stocks E, Wotherspoon L, Krall T (2018) Liquefaction-induced damage and CPT characterization of the reclamations at CentrePort, Wellington. Bull Seismol Soc Am 108(3B):1695–1708

Daniell JE, Wenzel F, Khazai B, Vervaeck A (2012) The worldwide economic impact of historic earthquakes. In: 15th WCEE conference proceedings, Lisboa. Paper No. 2038

Dashti S, Bray JD, Pestana JM, Riemer M, Wilson D (2010a) Mechanisms of seismically induced settlement of buildings with shallow foundations on liquefiable soil. J Geotech Geoenviron Eng 136(1):151–164. https://doi.org/10.1061/(ASCE)GT.1943-5606.0000179

Dashti S, Bray JD, Pestana JM, Riemer M, Wilson D (2010b) Centrifuge testing to evaluate and mitigate liquefaction-induced building settlement mechanisms. J Geotech Geoenviron Eng 136(7):918–929

Dipartimento della Protezione Civile, DPC (2008) Indirizzi e criteri per la microzonazione sismica, Conferenza delle Regioni e delle Province autonome - Gruppo di lavoro MS, Dipartimento della protezione civile, Roma

Dipartimento della Protezione Civile, DPC (2017) Linee guida per la gestione del territorio in aree interessate da liquefazioni (LQ). Versione 1.0. Roma 2017

Dobry R, Liu L (1994) Centrifuge modeling of soil liquefaction. In: 10th World conference of earthquake engineering

EN1997-1 (2004) Eurocode 7: geotechnical design. authority: the European Union per regulation 305/2011, Directive 98/34/EC, Directive 2004/18/EC

EPRI (1988) A criterion for determining exceedance of the operating basis earthquake. Electrical Power Research Institute, Palo Alto, CA, EPRI NP-5930

FEMA/NIBS (1998) HAZUS - earthquake loss estimation methodology, vol 1

FEMA/NIBS (2003) HAZUS-earthquake—technical manual. Federal Emergency

Fenton GA, Griffiths DV (2000) Rbear2d. RFEM

Fikri R, Dizhur D, Quinn Walsh K, Ingham J (2018) Seismic performance of Reinforced Concrete Frame with Masonry Infill buildings in the 2010/2011 Canterbury, New Zealand earthquakes. Bull Earthq Eng. https://doi.org/10.1007/s10518-018-0476-8

Fotopoulou S, Karafagka S, Pitilakis K (2018) Vulnerability assessment of low-code reinforced concrete frame buildings subjected to liquefaction-induced differential displacements. Soil Dyn Earthq Eng 110:173–184

Grant R, Christian JT, Vanmarcke EH (1974) Differential settlement of buildings. J Geotech Eng Div ASCE 100(9):973–991

Guha-Sapir D, Vos F (2011) Earthquakes, an epidemiological perspective on patterns and trends. Adv Nat Technol Hazards Res. https://doi.org/10.1007/978-90-481-9455-1_2

Honjo Y, Kazumba S (2002) Estimation of autocorrelation distance for modeling spatial variability of soil properties by Random Field theory. In: The 47th geotechnical engineering symposium, JGS, pp 279–286

Idriss IM, Boulanger RW (2008) Soil liquefaction during earthquakes. Monograph MNO-12, Earthquake Engineering Research Institute, Oakland, CA, 261 pp

Ishihara K (1985) Stability of natural deposits during earthquakes. In: Proceedings of the 11th international conference on soil mechanics and foundation engineering. San Francisco, vol 1. pp 321–376

Ishihara K, Yoshimine M (1992) Evaluation of settlements in sand deposits following liquefaction during earthquakes. Soils Found 32(1):173–188

Itasca Consulting Group Inc. (2016) FLAC—Fast Lagrangian Analysis of Continua, Ver. 8.0. Minneapolis: Itasca

Iwasaki T, Tatsuoka F, Tokida K, Yasuda S (1978) A practical method for assessing soil liquefaction potential based on case studies at various sites in Japan. In: 2nd International conference on microzonation, pp 885–896

Karamitros DK, Bouckovalas GD, Chaloulos YK (2013) Seismic settlements of shallow foundations on liquefiable soil with a clay crust. Soil Dyn Earthq Eng 46:64–76

Karimi Z, Dashti S, Bullock Z, Porter K, Liel A (2018) Key predictors of structure settlement on liquefiable ground: a numerical parametric study. Soil Dyn Earthq Eng 113:286–308

Kongar I, Rossetto T, Giovinazzi S (2015) Evaluating desktop methods for assessing liquefaction-induced damage to infrastructure for the insurance sector. In: 12th International conference on applications of statistics and probability in civil engineering, ICASP12 Vancouver, Canada, July 12–15, 2015

Krawinkler H (ed) (2005) Van Nuys hotel building testbed report: exercising seismic performance assessment. PEER Report. PEER 2005/11

Lee TH, Mosalam KM (2006) Probabilistic seismic evaluation of reinforced concrete structural components and systems. PEER Report. PEER 2006/04

Lin SL, Uma SR, Nayerloo M, Buxton R, King A (2014) Engineering characterisation of building performance with detailed engineering evaluation (DEE) data from the Canterbury earthquake sequence. In: ASEC 2014 conference: structural engineering in Australasia, Auckland, New Zealand

Lin SL, Uma SR, King AB, Buxton R, Horspool NA (2016) A compiled and extensible database for building damage from the 2010–2011 earthquake sequence in Canterbury, New Zealand. GNS Science Report, Lower Hutt, Wellington

Liquefact, D7.1 (2019) Deliverable 7.1, Manual for the assessment of liquefaction risk, defining the procedures to create the database, collect, define, symbolize and store information in the Georeferenced Information System and to perform and represent the risk analysis, www.liquefact.eu

Liu L, Dobry R (1997) Seismic response of shallow foundation on liquefiable sand. J Geotech Geoenviron Eng 123(6):557–566

Macaulay T (2009) Critical infrastructures. Taylor & Francis, Abingdon

Matheron G (1965) Les variables régionalisées et leur estimation: une application de la théorie des fonctions aléatoires aux sciences de la nature. Masson, Paris

Matthews BW (1975) Comparison of the predicted and observed secondary structure of T4 phage lysozyme. Biochimica et Biophysica Acta Protein Structure 405(2):442–451

Maurer BW, Green RA, Cubrinovski M, Bradley BA (2015) Calibrating the liquefaction severity number (LSN) for varying misprediction economies: a case study in Christchurch, New Zealand. In: 6th International conference on earthquake geotechnical engineering, 1–4 November 2015, Christchurch, New Zealand

Maurer BW, van Ballegooy S, Bradley BA (2017) Fragility functions for performance-based ground failure due to soil liquefaction. In: PBDIII conference in Vancouver, BC in July 2017

Millen M, Ferreira C, Quintero J, Gerace A, Viana da Fonseca A (2019) Simplified equivalent soil profiles based on liquefaction performance. In: 7th International conference on earthquake geotechnical engineering. Rome, Italy

Mitrani-Reiser J, Haselton CB, Goulet C, Porter KA, Beck J, Deierlein GG (2006) Evaluation of the seismic performance of a code-conforming reinforced-concrete frame building—part II: loss estimation. In: 8th NCEE, San Francisco, California, April 18–22, 10 pp

Modoni G, Darini G, Spacagna RL, Saroli M, Russo G, Croce P (2013) Spatial-temporal analysis of the subsidence in the city of Bologna. In: Geotechnical engineering for the preservation of monuments and historic sites, 30–31 May 2013, Università degli Studi di Napoli Federico II. CRC Press, pp 565–572. ISBN: 9781138000551

Modoni G, Albano M, Salvatore E, Koseki J (2018) Effects of compaction on the seismic performance of embankments built with gravel. Soil Dyn Earthq Eng 106:231–242

Modoni G, Spacagna RL, Paolella L, Salvatore E, Rasulo A, Martelli L (2019) Liquefaction risk assessment: lesson learned from a case study. In: Proceedings of the 7th international conference on earthquake geotechnical engineering ICEGE, 2019, pp 761–773

Moehle JP (2003) A framework for performance-based earthquake engineering. In: Proceedings of ATC-15-9 workshop on the improvement of building structural design and construction practices, Maui, HI, June

Montgomery DC, Runger GC, Hubele NF (2012) Statistica per ingegneria. Seconda Edizione. Copyright 2012, EGEA S.p.A

Mousavi SA, Bastami M, Zahrai SM (2016) Large-scale seismic isolation through regulated liquefaction: a feasibility study. Earthq Eng Eng Vib 15(4):579–595. https://doi.org/10.1007/s11803-016-0350-0

NZGS (2016a) Earthquake geotechnical engineering practice, Module 1-6, New Zealand Geotechnical Society and Ministry of Business Innovation & Employment (MBIE) Earthquake Geotechnical Engineering Practice in New Zealand

NZGS (2016b) New Zealand Geotechnical Society, Earthquake Geotechnical Engineering Practice, Module 3: identification, assessment and mitigation of liquefaction hazards, New Zealand Geotechnical Society and Ministry of Business Innovation & Employment (MBIE) Earthquake Geotechnical Engineering Practice in New Zealand

Pokhrel RM, Kiyota T, Kajihara K (2015) Contribution of geostatistical technique to investigate the spatial variation of liquefaction potential in Urayasu City, Japan. In: Proceedings of the 6th international conference on earthquake geotechnical engineering, 1–4 November 2015, Christchurch, New Zealand

Porter KA (2003) An overview of PEER’s performance-based earthquake engineering methodology. In: ICASP9, civil engineering risk and reliability association (CERRA), San Francisco, CA, July 6–9

Powers DMW (2011) Evaluation: from precision, recall and F-measure to ROC, informedness, markedness and correlation. J Mach Learn Tech 2(1):37–63

Ramirez J (2019) Performance of inelastic structures on mitigated and unmitigated liquefiable soils: evaluation of numerical simulations with centrifuge tests. Doctoral dissertation. University of Colorado at Boulder

Robertson PK, Wride CE (1998) Evaluating cyclic liquefaction potential using the cone penetration test. Can Geotech J 35:442–459

Salvatore E, Andò E, Modoni G, Viggiani G (2016) Micromechanical study of cyclically loaded sands with X-ray microtomography and digital image correlation. Procedia Eng 158:92–97

Salvatore E, Andò E, Proia R, Modoni G, Viggiani G (2018) Effect of strain localization on the response of granular materials subjected to monotonic and cyclic triaxial tests. Rivista Italiana di Geotecnica 52(2):30–43

Saroli M, Albano M, Modoni G, Moro M, Milana G, Spacagna RL, Falcucci E, Gori S, Scarascia Mugnozza G (2020) Insights into bedrock paleomorphology and dynamic soil properties of the Cassino intermontane basin (Central Italy). Eng Geol 264:105333. https://doi.org/10.1016/j.enggeo.2019.105333

Spacagna R-L, De Fouquet C, Russo G (2013) Geostatistical analysis of groundwater nitrates distribution in the Plaine d’Alsace. Coupled Phenomena in Environmental Geotechnics, CRC Press (Taylor & Francis Group) (GBR). In: International Symposium on Coupled Phenomena in Environmental Geotechnics (CPEG), Torino 1–3 July 2013, ISBN: 9781138000605

Spacagna RL, Rasulo A, Modoni G (2017) Geostatistical analysis of settlements induced by groundwater extraction. In: ICCSA 2017 17th international conference computational science and its applications, Trieste, Italy, July 3–6, 2017, Proceedings, Part IV, LNCS 10407, pp 350–364. https://doi.org/10.1007/978-3-319-62401-3_26

Stuedlein A, Kramer S, Arduino P, Holtz R (2012) Geotechnical characterization and random field modeling of desiccated clay. J Geotech Geoenviron Eng 138(11):1301–1313

Tokimatsu K, Seed HB (1987) Evaluation of settlements in sands due to earthquake shaking. ASCE J Geotech Geoenviron Eng 113(8):861–878

Tokimatsu K, Hino K, Suzuki H, Ohno K, Tamura S, Suzuki Y (2019) Liquefaction-induced settlement and tilting of buildings with shallow foundations based on field and laboratory observation. Soil Dyn Earthq Eng 124:268–279

Tonkin & Taylor, Ltd. (2013) Liquefaction Vulnerability Study. Report to Earthquake Commission. T&T ref. 52020.0200/v1.0, prepared by S. van Ballegooy and P. Malan. https://canterburygeotechnicaldatabase.projectorbit.com

van Ballegooy S, Malan P, Lacrosse V, Jacka ME, Cubrinovski M, Bray JD, O’Rourke TD, Crawford SA, Cowan H (2014) Assessment of liquefaction-induced land damage for residential Christchurch. Earthq Spectra 30(1):31–55

Viggiani C, Mandolini A, Russo G (2012) Piles and piled foundations. Taylor & Francis group, Abingdon

Wiebicke M, Andò E, Salvatore E, Viggiani G, Herle I (2017) Experimental measurement of granular fabric and its evolution under shearing. In: The European Physical Journal conferences EPJ web of conferences, vol 140. p 02020

Youd TL, Hoose S (1976) Liquefaction during 1906 San Francisco earthquakes. J Geotech Eng Div 102(GT5):425–439

Zhang G, Robertson PK, Brachman RWI (2002) Estimating liquefaction-induced ground settlements from CPT for level ground. Can Geotech J 39:1168–1180

Zhang G, Robertson PK, Brachman RWI (2004) Estimating liquefaction-induced lateral displacements from CPT for level ground. J Geotech Geoenviron Eng 130(8):861–871