Abstract

The Artificial Bee Colony (ABC) algorithm is a swarm intelligence algorithm inspired by the food-foraging behavior of honeybees and is used as an optimization model for NP-hard problems. ABC is a widely used optimization algorithm that searches for the best solution among the feasible solutions in the search space, and it is competitive with other population-based algorithms. However, ABC performs well in diversification but is weak in intensification, and its search equations slow its convergence towards an optimal solution. In this work, the authors propose an improved solution search strategy for the employed bee and onlooker bee phases that exploits the local-best, neighbor-best, and iteration-best solutions. The resulting candidate solutions lie closer to the best solution because directional information is supplied to the ABC algorithm. The search radius for new candidate solutions is adjusted in the scout bee phase, which helps the search move towards global convergence. In this way, diversification and intensification are balanced. To assess the performance of the proposed algorithm, 20 numerical benchmark functions are used. To show the significance of the proposed methodology, it is also tested on the Combined Heat and Power Economic Dispatch (CHPED) problem. The empirical results show that the proposed algorithm provides higher-quality solutions and outperforms the original ABC algorithm on numerical optimization problems.

1 Introduction

Optimization problems are frequently encountered in engineering design (Rao et al. 2008; Pawar et al. 2008), computer science and information theory (Karaboga and Ozturk 2011; Özbakir et al. 2010; Kaveh and Talatahari 2009), and statistical physics and economics (Pan et al. 2011; Coelho and Mariani 2009). Swarm intelligence (SI) is a popular meta-heuristic approach and a sub-domain of artificial intelligence that is employed to solve various engineering and optimization problems. It is inspired by the collective behavior of flocks of birds, social insects and schools of fish, which together are capable of solving complex tasks. Problems that are difficult to solve with standard mathematical methods can often be handled by swarm intelligence. Swarm intelligence offers advantages such as modularity, parallel processing, autonomy, fault tolerance and scalability (Kassabalidis et al. 2001). Optimization is the process of searching for an optimal solution in a large search space, which is hard and computationally expensive.

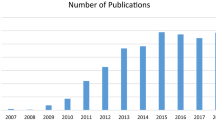

Population-based optimization (PBO) algorithms are designed to find near-optimal solutions to global numerical or engineering problems by evaluating fitness and moving the population towards the best region of the solution space. PBO algorithms are classified into two major groups: SI-based algorithms and evolution-based algorithms (Passino 2002). In the last decade, research on optimization algorithms has increased, especially in the field of biologically inspired optimization. Examples include particle swarm optimization (PSO), based on the collective behavior of bird flocking and fish schooling (Liu et al. 2005; Xiang et al. 2007); ant colony optimization (ACO), inspired by the behavior of ant colonies (Dorigo et al. 1996); the genetic algorithm (GA), based on the Darwinian principle of survival and the reproduction of living species (Goldberg 1989); and ABC, inspired by the natural behavior of honey bee swarms (Karaboga 2005).

The ABC algorithm is a biologically inspired optimization algorithm proposed by Karaboga that is used to find near-optimal solutions in numerical optimization or engineering problems. ABC is motivated by the foraging behavior of a honey bee swarm seeking food sources. The performance of ABC depends on how exploration and exploitation are combined to yield consistent and feasible solutions. Exploration is an independent search for the optimal solution, whereas exploitation uses knowledge of the previous best solution to guide the search. Many researchers have developed algorithms that strengthen exploration and exploitation for numerical optimization problems. To evaluate the performance of the ABC algorithm, many unimodal and multimodal benchmark functions are employed. ABC has been compared with well-known optimization algorithms such as PSO, GA, and ACO, and the reported results show that ABC produces better solutions than these competing methods (Singh 2009; Karaboga 2009; Kang et al. 2009).

Researchers have found that the ABC search equation is good at exploration but poor at exploitation (Zhu and Kwong 2010). To achieve a balance between exploration and exploitation, the local search in ABC must be improved (Karaboga and Akay 2009). Avoiding local optima and accelerating convergence are the primary objectives of work on ABC. Many modified and improved algorithms (Akay and Karaboga 2012) have been proposed in recent years, such as best-so-far ABC (Banharnsakun et al. 2011), Bee Swarm Optimization (BSO) (Akbari et al. 2010), Gbest-guided ABC (GABC) (Zhu and Kwong 2010) and I-ABC (Gao and Liu 2011), and these algorithms show better performance than the original ABC. ABC is chosen over other evolutionary algorithms as the basis for improvement because it has already been used in a large number of applications. If ABC can be improved, those applications benefit directly.

First, a modified search equation is presented for the employed bee phase, in which information about the global best, neighbor best and iteration best solutions is embedded to balance diversification and intensification. Then, the search radius is adjusted in the scout bee phase to move towards global convergence, and the perturbation is regulated dynamically at each iteration. An opposition-based learning concept is imposed on ABC to reduce the deviation of the search during exploitation. In this work, directional information about the food source is incorporated, and the algorithm is evaluated on numerical benchmark optimization problems. The proposed model is also used to solve the CHPED problem and provides significant results.

This paper is structured as follows: the literature survey on the ABC algorithm is presented in Sect. 2. Section 3 gives an overview of the ABC algorithm. The proposed algorithm is presented in Sect. 4. Section 5 describes the simulation and experimental results for the formulated problem, and Sect. 6 concludes the research work.

1.1 Literature review

The standard ABC has advantages such as local search, solution update, and memory, and it has produced good results (Basturk and Karaboga 2006). Various modifications and improvements of the algorithm have been evaluated on numerical optimization problems (Karaboga 2005). Several hybridizations of the ABC algorithm are reviewed in Karaboga et al. (2014). However, ABC can become trapped in a local optimum, which degrades convergence and makes the results uncertain (Luo et al. 2013). Akay et al. (2012) introduced two control parameters for real-parameter optimization with ABC, the modification rate (MR) and the scaling factor (SF), to increase the convergence rate and control the perturbation. Dong Li et al. (2011) improved the performance of ABC with three modifications, in which the solution is updated through a neighborhood search and a new search equation is proposed. Various other changes have been made to the standard ABC to balance exploitation and exploration.

Gao et al. (2012) proposed a modified ABC algorithm (MABC), inspired by differential evolution (DE), that updates the solution search equation. To strengthen exploitation and intensify the search around the best solution, the best solution of the previous iteration is used. A selective probability is introduced to balance exploration and exploitation in the new search equation. In addition, chaotic and opposition-based learning are incorporated to improve global convergence. The MABC algorithm prevents the bees from becoming trapped in a local minimum, which improves global convergence and search ability.

Anuar et al. (2016) studied the behavior of the scout bee and modified it to improve exploration. The proposed algorithm is named ABC rate of change (ABC-ROC) because the scout bee phase is driven by the rate of change of performance. Whereas the limit parameter controls exploration in the scout bee phase of ABC, the authors calculate the slope of the performance graph, and the rate of change of this graph decides when exploration takes place. Ozturk et al. (2015) proposed a genetic-operator-based method for discrete ABC built on the Jaccard coefficient. In this discrete ABC, solution generation is improved by genetically inspired components that rely on similarity measures, and the method is applied to dynamic clustering. Karaboga et al. (2014) proposed the quick ABC algorithm (qABC), which remodels the onlooker bee phase to obtain more accurate exploitation. In the qABC algorithm, the best neighbor solution is incorporated into the candidate solution equation of the onlooker bee phase. To determine the neighborhood, the mean Euclidean distance between a solution and the rest of the solutions is calculated.

Yan et al. (2012) proposed a hybrid ABC algorithm (HABC) for data clustering. The authors incorporated the crossover operator of genetic algorithms into ABC to increase the exchange of information among the bees. The crossover operator is applied in the onlooker bee phase to strengthen the optimization ability of the algorithm. Maeda and Tsuda (2015) proposed a reduction-based ABC for global optimization. In the initialization, the number of bees and their positions are updated to avoid trapping in local minima. The bees are then reduced sequentially until their number reaches a predetermined value, according to the objective function values obtained. Stanarevic et al. (2010) introduced an ABC algorithm for constrained problems. To improve the search equation, a Smart Bee (SB) is introduced that uses a historical memory of locations to search for better food sources. Constrained problems are handled with Deb's rules instead of greedy selection.

Aderhold et al. (2010) introduced two modifications that use the global best solution in addition to the random reference solution in the employed bee phase. The distance to the location of the global best solution is considered, and a potential reference solution is selected by a probability calculation. Yang et al. (2005) developed the virtual bee algorithm (VBA) to solve engineering problems with two parameters. In this algorithm, a swarm of virtual bees is generated and moves randomly in the search space; to find the target food source, the virtual bees interact with each other based on the encoded values obtained from the function. The intensity of these interactions is then used to solve the optimization problem. Chen et al. (2014) proposed an enhanced ABC (EABC) that uses a self-adaptive search strategy to avoid trapping in local minima. To balance exploitation and exploration, an artificial immune network (ai-Net) operator is introduced. This operator accelerates global convergence in the search selection process, and a search strategy is introduced in the initialization process to obtain a new generation. Sun et al. (2020) proposed the surrogate-assisted multi-swarm artificial bee colony (SAMSABC) with an improved exploitation model based on an orthogonal method. Experimental results on 20 complex numerical optimization problems show its significance. Xiao et al. (2021) proposed ABC with adaptive neighborhood search and Gaussian perturbation (ABCNG). In this model, the initial population is generated dynamically using a neighborhood selection model. In the scout bee phase, Gaussian perturbation is then used to refine solutions that have stopped improving.

Besides global numerical optimization, ABC is also used to solve engineering optimization problems and large-scale problems. Kang et al. (2009) developed a hybrid ABC algorithm that uses the Nelder-Mead (NM) simplex method for local search and applied it to structural inverse analysis. This hybrid algorithm outperforms other heuristic algorithms. Samanta et al. (2011) used ABC to search for the optimal parameter combinations of non-traditional machining (NTM) processes, covering both single- and multi-objective optimization problems. Yi and He (2014) developed a novel ABC algorithm (NABC) for numerical optimization that enhances exploitation by integrating the current best solution into the search equation. NABC has been applied to optimization problems such as flow shop scheduling, vehicle routing and data mining.

Yurtkuran et al. (2016) introduced an enhanced ABC with a solution acceptance rule and multi-search (ABC-SA). Greedy selection is replaced by an acceptance rule under which the acceptance of worse solutions decreases non-linearly during the search. Probabilistic multi-search strategies balance exploration and exploitation. Wang et al. (2014) developed a multi-strategy ABC in which several distinct search strategies coexist and produce offspring throughout the search. Rajasekhar et al. (2011) proposed an improved version of ABC with mutation based on the Levy and Sobol probability distributions. A comprehensive survey of ABC variants can be found in Karaboga and Akay (2009).

2 ABC algorithm

ABC is an optimization algorithm inspired by the natural behavior of honey bees and was proposed by Karaboga in 2005 (Karaboga 2005). The global optimum or a near-optimum solution is identified by simulating the intelligent behavior of honeybees. In ABC, each food source represents a solution to the problem. The algorithm searches for the global optimum among the food sources by using a fitness function that measures the nectar quality of each source. The algorithm is straightforward, population-based, and robust for solving engineering and global numerical problems. The colony of artificial bees is divided into three groups: employed bees, onlooker bees, and scout bees. Half of the colony consists of employed bees, and the other half consists of onlooker bees. The number of food sources is equal to the number of employed bees, which is also the number of onlooker bees. The employed bees search for new food sources and share the information gathered about them to recruit onlooker bees. The onlooker bees select higher-quality food sources in preference to lower-quality ones based on this probability information. Employed bees whose food sources are of low quality abandon them and become scout bees, which search for new food sources. The ABC algorithm repeats these three phases iteratively. In the first phase, employed bees search for food sources and update their positions based on the quality of the food source. In the second phase, onlooker bees choose food sources with higher probability values based on fitness. In the third phase, low-quality food sources are abandoned and replaced with new food sources by scout bees. Figure 1 shows the flow of the ABC algorithm. These phases are summarized as follows:

2.1 Population initialization

The initial population is a randomly distributed collection of FP solutions, where FP denotes the number of employed or onlooker bees. Each food source in the population is represented by \(X_{i} = \left\{ {x_{i,1} ,x_{i,2} , \ldots , x_{i,S} } \right\}\), and each component \(x_{i,j}\) is generated as in the standard ABC:

\[x_{i,j} = x_{min,j} + rand\left( {0,1} \right)\left( {x_{max,j} - x_{min,j} } \right) \quad (1)\]

where i = 1,2,…,FP; j = 1,2,…,S indexes the variables of the solution, S being the dimension of the problem; and \(x_{min,j}\) and \(x_{max,j}\) are the lower and upper bounds of the jth variable.

The food sources are assigned to the employed bees at random, and their fitness values are evaluated. \(rand\left( {0,1} \right)\) is a uniformly distributed random number in the range [0,1].
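The initialization step can be sketched in a few lines of Python. This is a minimal illustration, assuming each variable is sampled uniformly between its lower and upper bound as in the standard ABC of Karaboga (2005); the function name `init_population` and the example bounds are hypothetical and not taken from the paper.

```python
import random

def init_population(fp, lower, upper, rng=random):
    """Return fp food sources, each sampled uniformly inside [lower[j], upper[j]]."""
    return [
        [lo + rng.random() * (hi - lo) for lo, hi in zip(lower, upper)]
        for _ in range(fp)
    ]

# Hypothetical example: FP = 5 food sources in a 3-dimensional search space.
random.seed(0)
foods = init_population(fp=5, lower=[-5.0, -5.0, 0.0], upper=[5.0, 5.0, 1.0])
```

Passing the random generator as an argument keeps the sketch reproducible and easy to test.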

2.2 Employed Bee phase

Every employed bee modifies the position of its food source \(X_{i}\). If the fitness value of the new position \(v_{i,j}\) is better than that of the existing one, the bee memorizes the new food source position and discards the existing one. The candidate position \(v_{i,j}\) is generated from the existing position \(x_{i,j}\) in memory, as in the standard ABC:

\[v_{i,j} = x_{i,j} + \emptyset_{i,j} \left( {x_{i,j} - x_{k,j} } \right) \quad (2)\]

where j = 1,2,…,S,

k = 1,2,…,FP, with k chosen such that k is not equal to i,

\(\emptyset_{i,j}\) is a random value in the range [− 1,1].
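As a hedged illustration of the employed bee step, the sketch below perturbs one randomly chosen dimension of a food source relative to a random neighbor k ≠ i and then applies greedy selection. Changing a single dimension per candidate follows the standard ABC and is an assumption here; the helper names and the sphere objective are hypothetical and used only for illustration.

```python
import random

def sphere(x):
    """Hypothetical test objective (to be minimized)."""
    return sum(v * v for v in x)

def employed_candidate(foods, i, rng=random):
    """Return (candidate, j): a copy of foods[i] perturbed in one random dimension j."""
    j = rng.randrange(len(foods[i]))
    # Pick a neighbor k that is different from i.
    k = rng.choice([idx for idx in range(len(foods)) if idx != i])
    phi = rng.uniform(-1.0, 1.0)
    candidate = list(foods[i])
    candidate[j] = foods[i][j] + phi * (foods[i][j] - foods[k][j])
    return candidate, j

def greedy_select(current, candidate, objective):
    """Keep the candidate only if it has a strictly lower objective value."""
    return candidate if objective(candidate) < objective(current) else current

random.seed(1)
population = [[1.0, 2.0], [0.5, -1.0], [3.0, 0.0]]
new_position, changed_dim = employed_candidate(population, 0)
population[0] = greedy_select(population[0], new_position, sphere)
```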

2.2.1 Onlooker Bee phase

In this phase, the employed bees share information about their food sources \(X_{i}\), in the form of fitness values, with the onlooker bees. Each food source is assigned a probability \(P_{i}\), and each onlooker bee selects a food source \(X_{i}\) according to it. The selection probability of a food source is calculated using Eq. (3), given here in its standard ABC form:

\[P_{i} = \frac{{fit_{i} }}{{\sum\nolimits_{n = 1}^{FP} {fit_{n} } }} \quad (3)\]

where \(fit_{i}\) is the fitness value of the solution i. Through this, the employed bees exchange information about the food sources with the onlooker bees. If the candidate food source \(v_{i}\) has better fitness than \(X_{i}\), then \(X_{i}\) is replaced by \(v_{i}\) in the population.
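The fitness-proportional choice made by an onlooker bee can be sketched as a roulette wheel. This is a minimal illustration, assuming the selection probability of a source is its fitness divided by the sum of all fitness values, as in the standard ABC; the function names are hypothetical.

```python
import random

def selection_probabilities(fitness):
    """Normalize fitness values so that they sum to 1."""
    total = sum(fitness)
    return [f / total for f in fitness]

def roulette_pick(probabilities, rng=random):
    """Return the index of the food source chosen by one onlooker bee."""
    r = rng.random()
    cumulative = 0.0
    for index, p in enumerate(probabilities):
        cumulative += p
        if r < cumulative:
            return index
    return len(probabilities) - 1  # guard against floating-point round-off

probs = selection_probabilities([1.0, 3.0])  # second source is three times fitter
chosen = roulette_pick(probs)
```

Sources with higher fitness occupy a larger share of the wheel and are therefore visited by more onlooker bees.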

2.2.2 Scout Bee phase

When the position of a food source \(X_{i}\) cannot be improved within a certain number of iterations, that food source is abandoned. This number of iterations, the limit, is an important control parameter of the ABC algorithm.
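The abandonment rule can be sketched with a per-source trial counter. This is an illustrative sketch, assuming the counter is incremented elsewhere whenever a source fails to improve and that an abandoned source is re-sampled uniformly within the bounds; the names `scout_phase`, `trials` and `limit` are hypothetical, and many ABC implementations replace at most one source per cycle rather than all exhausted ones.

```python
import random

def scout_phase(foods, trials, limit, lower, upper, rng=random):
    """Re-sample every food source whose trial counter exceeds `limit`.

    Returns the list of indices that were replaced.
    """
    replaced = []
    for i, count in enumerate(trials):
        if count > limit:
            foods[i] = [lo + rng.random() * (hi - lo) for lo, hi in zip(lower, upper)]
            trials[i] = 0  # the new source starts with a fresh counter
            replaced.append(i)
    return replaced

random.seed(2)
sources = [[0.0, 0.0], [1.0, 1.0]]
counters = [3, 11]
abandoned = scout_phase(sources, counters, limit=10, lower=[-1.0, -1.0], upper=[1.0, 1.0])
```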

2.2.2.1 Revamped directed ABC algorithm (RDABC)

ABC is used to solve global numerical optimization problems and engineering problems. It belongs to the family of bio-inspired algorithms that are based on the behavior of ants, bees, fish, etc. The ABC algorithm has an advantage over other optimization algorithms in the richness and robustness of its solutions. It is flexible enough to be extended into new algorithms, and its complexity is low because it requires few control parameters. To enhance intensification and diversification, the authors make three major changes to the standard ABC algorithm. The pseudo code of the proposed algorithm for global numerical optimization problems is given in Algorithm 2.

First, ABC generates a randomly distributed population of food sources as solutions, where FP is the size of the population. In the initialization process, each solution is generated randomly between the lower and upper bounds so that it is feasible. In the exploration phase, a 20% neighborhood search is carried out to explore the search space based on Eq. 4. The initialization process is explained in Algorithm 3.

After initialization, the employed bee phase produces a new food source from the extracted local information and evaluates its fitness value. The search ability and exploration of the solution are enhanced under the guidance of three search mechanisms. The first finds the best solution in the neighborhood using \(x_{i,j}\) and \(x_{k,j}\), and the perturbation on \(x_{i,j}\) is decreased. The second obtains the global best \(x_{g,j}\) from all employed bees, evaluates the fitness of the best solution, \(fit_{best }\), and compares it with that of the other solutions. If \(fit_{best }\) is higher than that of the other solution, the memory is not updated; otherwise it is updated. The third computes the iteration best \(x_{t,j}\), the best food source of the current iteration in dimension j, and evaluates its fitness \(fit_{iter\_best }\). To increase the convergence rate, the food source position is directed using the random numbers \(\xi_{i,j}\) and \(\vartheta_{i,j}\) together with the direction information \(\rho_{i,j}\) of the ith food source in the jth dimension. Initially \(\rho_{i,j}\) is set to 0; if the new candidate solution is higher than the old solution, \(\rho_{i,j}\) is updated to 1, and if it is lower, \(\rho_{i,j}\) is updated to − 1, as shown in Eq. 5. The direction information thus improves both the local search ability and the convergence rate. The employed bee phase is illustrated in Algorithm 4.
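The bookkeeping of the direction information can be sketched as follows. Eq. 5 is not reproduced in this text, so the sketch rests on an assumption: "higher" and "lower" are read as a comparison of the jth coordinate of the candidate with that of the old solution. The function names are hypothetical.

```python
def new_direction_table(fp, s):
    """Create an FP x S table of direction values, all initialized to 0."""
    return [[0] * s for _ in range(fp)]

def update_direction(rho, i, j, old_value, new_value):
    """Set rho[i][j] to 1 if the coordinate increased, -1 if it decreased.

    Assumption: the comparison is made on coordinate values; an unchanged
    coordinate leaves the stored direction as it is.
    """
    if new_value > old_value:
        rho[i][j] = 1
    elif new_value < old_value:
        rho[i][j] = -1
    return rho

rho = new_direction_table(fp=2, s=2)
update_direction(rho, 0, 1, old_value=1.0, new_value=2.0)
update_direction(rho, 1, 0, old_value=1.0, new_value=0.5)
```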

Employed bees share information about the food source positions with the onlooker bees in the hive. Onlooker bees select food sources according to the probability \(Pro_{i}\) given by Eq. 6. The probability of selecting a food source depends on the fitness \({ }fit_{i}\) of the solution. The fitness \({ }fit_{i}\) of the solution \({ }X_{i}\) is calculated using Eq. 7.
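Eq. 7 is not reproduced in this text. A mapping commonly used in the ABC literature, assumed here purely for illustration, converts an objective value f (to be minimized) into a positive fitness: 1 / (1 + f) when f is non-negative and 1 + |f| otherwise. The function name is hypothetical.

```python
def fitness_from_objective(objective_value):
    """Map a minimization objective value to a positive fitness (assumed form)."""
    if objective_value >= 0:
        return 1.0 / (1.0 + objective_value)
    return 1.0 + abs(objective_value)

# Lower objective values give higher fitness.
example_fitness = [fitness_from_objective(f) for f in (0.0, 1.0, -2.0)]
```

Because the result is always positive, it can be normalized directly into selection probabilities.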

After the probability value \(Pro_{i}\) is calculated, each onlooker bee chooses a food source from those of the employed bees at random according to its probability value. The probability value of a food source is compared with a random value in (0, 1). If the food source has a higher probability value, it is accepted and a candidate solution is calculated using Eq. 8. As in the employed bee phase, greedy selection is applied between \(X_{i}\) and \(v_{i}\). Food sources that are not worth exploiting are identified and abandoned through the onlooker bee phase. The onlooker bee phase is described in Algorithm 6.

Although the proposed algorithm strengthens exploitation by improving the employed and onlooker bee phases relative to the original ABC algorithm, the solution can still become trapped in a local optimum. To avoid this, the global search ability of the scout bee phase is used. The search radius is adjusted by the sigmoid function (Malik et al. 2007) in Eq. 9. The candidate solution \(x_{i,j}\) of the scout bee is derived from the abandoned old solution \(X_{i}\). \(\psi_{initial}\) and \(\psi_{end}\) denote the initial and final inertia weights, with values 0.9 and 0.4, respectively. These inertia values are fixed and chosen by the experimenter. In the proposed approach, the scout bee's search radius decreases from 90 to 40% following the sigmoid function at each round. At every iteration, the solution converges towards the optimal solution and the position of the scout bee is adjusted in the search space. The scout bee process is described in Algorithm 7.
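Eq. 9 is not reproduced in this text, so the following is only one plausible sigmoid schedule and should not be read as the authors' formula. It assumes a logistic curve centered at the midpoint of the run that falls from roughly 0.9 to roughly 0.4; the `steepness` parameter and the function name are hypothetical.

```python
import math

def search_radius(t, max_iter, psi_initial=0.9, psi_end=0.4, steepness=10.0):
    """Sigmoid-shaped radius that decreases from about psi_initial to about psi_end."""
    progress = t / max_iter  # 0 at the first round, 1 at the last round
    gate = 1.0 / (1.0 + math.exp(steepness * (progress - 0.5)))
    return psi_end + (psi_initial - psi_end) * gate

# Radius at the start, middle and end of a hypothetical 100-iteration run.
schedule = [search_radius(t, 100) for t in (0, 50, 100)]
```

A large radius early in the run favors exploration, while the smaller radius near the end keeps scout bees closer to promising regions.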

Thus, the proposed algorithm maintains diversity when generating new solutions, and the convergence speed is increased by enhancing both exploitation and exploration. With the proposed algorithm, the solutions are closer to the global optimum than those of the standard ABC algorithm. The authors make three modifications. First, a 20% neighborhood search is carried out in the initialization process to enhance exploitation. Second, the directed search strategy is improved by considering the neighbor best, global best and iteration best solutions to produce new candidate solutions, which enhances exploitation and increases the convergence speed. Finally, to avoid entrapment in local optima, the exploration process is revamped by decreasing the search radius from 90 to 40% using the sigmoid function. Combining two different models, namely ABC and opposition-based learning, increases the computation time of every iteration. However, by fusing these two models, the proposed model achieves better results within the stipulated number of generations. Hence, although the computational time of each iteration increases, the overall number of iterations is reduced.

3 Experimental studies

3.1 Environmental setup and benchmark functions

The performance of RDABC is investigated by minimizing a set of 20 scalable benchmark functions, which include unimodal and multimodal as well as separable and non-separable functions. The benchmark functions are used with dimensions of 30, 60 and 100. In the first experiment, the set of 20 benchmark functions is used to assess the performance of the RDABC algorithm. In the second experiment, the performance of the proposed algorithm is investigated on a real-world engineering design optimization problem.

3.1.1 Mathematical Benchmark functions and parameter settings

The performance of the proposed RDABC algorithm is compared with the ABC algorithm and its variants SAMSABC (Sun et al. 2020) and ABCNG (Xiao et al. 2021). RDABC is coded in MATLAB 12.0 under Windows on an Intel 2 GHz Core 2 Quad processor with 2 GB of RAM. The proposed algorithm minimizes 20 benchmark functions with dimensions D of 30, 60 and 100. For a fair comparison, the maximum number of evaluations for all algorithms is 100000 * D. RDABC is run 50 times on each benchmark function. The algorithm terminates when the maximum number of evaluations is reached or when the corresponding global minimum is reached. Table 1 summarizes the 20 scalable benchmark functions with their characteristics and ranges. The results obtained by RDABC are compared with those of SAMSABC, ABCNG, and ABC in terms of the best solution, mean, median and standard deviation. The benchmark functions \(f_{1} - f_{4}\), \(f_{7}\), \(f_{9}\) and \(f_{10}\) are unimodal functions, \(f_{5}\), \(f_{6}\), \(f_{8}\), \(f_{11} - f_{20}\) are multimodal functions, \(f_{15}\) and \(f_{16}\) are penalized functions, \(f_{1} - f_{15}\) and \(f_{18} - f_{20}\) are separable functions, and \(f_{16}\) and \(f_{17}\) are non-separable functions. See Table 2.
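The reporting protocol (a budget of 100000 * D evaluations and best, mean, median and standard deviation over repeated runs) can be sketched as follows. Whether the paper reports the sample or the population standard deviation is not stated; the sample version is an assumption, and the function names and the example values are hypothetical.

```python
import statistics

def evaluation_budget(dimension, per_dimension=100000):
    """Maximum number of function evaluations for a D-dimensional problem."""
    return per_dimension * dimension

def summarize_runs(final_values):
    """Summarize the final objective values of independent runs (minimization)."""
    return {
        "best": min(final_values),
        "mean": statistics.mean(final_values),
        "median": statistics.median(final_values),
        "sd": statistics.stdev(final_values),  # sample standard deviation (assumed)
    }

# Hypothetical final values from three runs, for illustration only.
summary = summarize_runs([1.0, 2.0, 3.0])
budget_30d = evaluation_budget(30)
```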

Compared with SAMSABC, ABCNG and the conventional ABC, the proposed RDABC achieves the best results. The mean results of the proposed model are close to its best values, which indicates that most runs end at or near the optimal solution. The SD reflects the exploration capability of the algorithms. In terms of computational time, as mentioned in Sect. 4, the model uses fewer iterations, and its computational time is therefore lower than that of ABC, SAMSABC and ABCNG. See Table 3.

3.1.2 Statistical test

The Wilcoxon signed-rank nonparametric statistical test is used to detect significant differences in the behavior of the compared methods. The test measures the statistical performance of the algorithms pair-wise. The test results are shown in Tables 4, 5 and 6, and the test is performed at significance level \(\alpha = 0.05\). The null hypothesis is that there is no difference between the median of the first algorithm and that of the second algorithm on the same function. The Wilcoxon signed-rank test provides the rank sums \(T + , T -\), which indicate the better of the two algorithms (Civicioglu 2013). The first column of each table lists the benchmark functions used to evaluate the methods. The second column gives the p-value. The third and fourth columns give the rank sums of the algorithm pair. The sign \(+\) indicates that the null hypothesis is rejected and that the proposed RDABC is superior. The sign \({-}\) indicates that the null hypothesis is rejected and that the proposed RDABC is inferior. The sign \(=\) shows no significant difference between the two algorithms. The Wilcoxon signed-rank test is carried out against the comparative algorithms for small, medium and large dimensions.
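The rank sums \(T+\) and \(T-\) used by the test can be computed as in the sketch below. It assumes the usual conventions of dropping zero differences and assigning average ranks to tied absolute differences; the function name and the sample data are hypothetical. The p-value itself is not computed here; in practice a library routine such as SciPy's `scipy.stats.wilcoxon` would typically be used for that.

```python
def signed_rank_sums(a, b):
    """Return (T_plus, T_minus) for the paired samples a and b."""
    diffs = [x - y for x, y in zip(a, b) if x != y]  # zero differences are dropped
    ordered = sorted(range(len(diffs)), key=lambda idx: abs(diffs[idx]))
    ranks = [0.0] * len(diffs)
    pos = 0
    while pos < len(ordered):
        end = pos
        # Extend the group while the next absolute difference is tied.
        while (end + 1 < len(ordered)
               and abs(diffs[ordered[end + 1]]) == abs(diffs[ordered[pos]])):
            end += 1
        average_rank = (pos + end) / 2.0 + 1.0  # ranks are 1-based
        for idx in ordered[pos:end + 1]:
            ranks[idx] = average_rank
        pos = end + 1
    t_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    t_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    return t_plus, t_minus

# Hypothetical paired results of two algorithms on four problems.
t_plus, t_minus = signed_rank_sums([1, 2, 3, 4], [2, 2, 1, 8])
```

The two sums always add up to n(n + 1)/2, where n is the number of non-zero differences, which gives a quick sanity check on the ranking.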

Tables 4, 5, and 6 show the statistical results of the Wilcoxon signed-rank test comparing RDABC with SAMSABC, ABCNG, and ABC for small, medium, and large dimensions over 50 runs of each benchmark function. Table 7 shows the counts of statistically significant outcomes of RDABC against the other algorithms.

Table 7 compares the proposed algorithm with SAMSABC, ABCNG and ABC for small, medium, and large dimensions. In the pair-wise comparison of RDABC with SAMSABC on the 30-dimensional benchmark functions, RDABC is superior on 60% of the functions, shows no significant difference on 15%, and is inferior on 25%. For the 60-dimensional benchmark problems, the proportions are the same as for the 30-dimensional functions. For the 100-dimensional problems, RDABC is superior on 75% of the functions, shows no significant difference on 10%, and is inferior on 15%.

In the pair-wise comparison of RDABC with ABCNG, the null hypothesis is rejected for every function at all dimensions, so no function shows a non-significant difference. For the 30-dimensional problems, RDABC is superior on 85% of the functions and inferior on 15%. For the 60-dimensional problems, RDABC is superior on all functions. For the 100-dimensional problems, RDABC is superior on 95% of the functions and inferior on 5%. In the pair-wise comparison with the standard ABC algorithm, the null hypothesis is rejected for most functions. For the 30-dimensional problems, RDABC is superior on 95% of the benchmark functions, shows no significant difference on 5%, and is inferior on none. For the 60-dimensional problems, RDABC is superior on 85% of the functions, shows no significant difference on 5%, and is inferior on 10%. For the 100-dimensional problems, RDABC is superior on 90% of the functions, inferior on 5%, and shows no significant difference on 5%.

3.2 CHPED

The CHPED problem is to minimize the production cost of the system while satisfying the heat and power demands and the operating constraints. It determines the power generation and heat production of each unit. The objective function, which minimizes the cost, can be represented mathematically by,

Subject to.

Equality constraints

\(F\left( {p,h} \right)\) is the total cost of heat and power production, which is to be minimized. \(P\) is the unit power generation, \(h\) is the unit heat production; \(n_{p}\), \(n_{c}\) and \(n_{h}\) are the numbers of conventional power units, co-generation units and heat-only units, respectively. \(C_{i} \left( {P_{i} } \right), C_{j} \left( {P_{j} ,H_{j} } \right)\) and \(C_{k} \left( {H_{k} } \right)\) are the cost functions of the \(i\) th conventional power unit, \(j\) th co-generation unit and \(k\) th heat-only unit. \(P^{min} ,\) \(P^{max} ,\) \(H^{min}\) and \(H^{max}\) are the limits of the unit power capacity and unit heat capacity. The active power transmission loss \(P_{L}\) can be calculated using:

where \(PC_{i,j}\) is the loss coefficient between units \(i\) and \(j\). In the CHPED problem, the power generations \(P_{i}\), \(P_{j}\), and heat productions \(H_{j}\), \(H_{k}\) are taken as the decision variables. The cost function of the power generation is represented by a quadratic function.
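The ingredients named above can be illustrated with a short sketch. The loss expression is not reproduced in this text, so the quadratic form P_L = Σ_i Σ_j P_i · PC_{i,j} · P_j is an assumption based on the usual loss-coefficient formulation, and the coefficient layout a + b·P + c·P² of the quadratic cost, the function names and all numbers are hypothetical.

```python
def quadratic_cost(p, a, b, c):
    """Quadratic generation cost a + b*p + c*p^2 for one power-only unit."""
    return a + b * p + c * p * p

def transmission_loss(power, loss_coeff):
    """Assumed loss form: sum over i, j of P_i * PC[i][j] * P_j."""
    n = len(power)
    return sum(power[i] * loss_coeff[i][j] * power[j]
               for i in range(n) for j in range(n))

def power_balance_residual(power, demand, loss_coeff):
    """Generated power minus demand and losses; zero when the balance holds."""
    return sum(power) - demand - transmission_loss(power, loss_coeff)

# Hypothetical two-unit example, for illustration only.
unit_power = [10.0, 20.0]
coeff = [[0.01, 0.0], [0.0, 0.02]]
loss = transmission_loss(unit_power, coeff)
residual = power_balance_residual(unit_power, demand=20.0, loss_coeff=coeff)
```

In a population-based solver, a non-zero residual would typically be penalized or repaired before the cost of a candidate dispatch is compared with others.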

Cost function of each unit

Power-only units

where \(10 \le P_{1} \le 75\)

where \(20 \le P_{2} \le 125\)

where \(30 \le P_{3} \le 175\)

where \(40 \le P_{4} \le 250\).

Cogeneration units

Heat-only unit

where \(0 \le H_{7} \le 2695.2\)
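The box limits listed above can be enforced on a candidate solution with a simple clamping step. The sketch below is only one common repair strategy and is not claimed to be the authors' constraint handling; the names `BOUNDS`, `clip_to_bounds` and `violations` are hypothetical. The co-generation units are left out because their feasible operating regions are given in the Appendix and are not reproduced in this section.

```python
# Limits quoted in the text for the power-only units P1..P4 and the
# heat-only unit H7. Co-generation units are omitted (assumption: their
# feasible regions would be handled separately).
BOUNDS = {
    "P1": (10.0, 75.0),
    "P2": (20.0, 125.0),
    "P3": (30.0, 175.0),
    "P4": (40.0, 250.0),
    "H7": (0.0, 2695.2),
}


def clip_to_bounds(candidate, bounds=BOUNDS):
    """Return a copy of candidate with each bounded variable clamped to its range."""
    repaired = dict(candidate)
    for name, (low, high) in bounds.items():
        if name in repaired:
            repaired[name] = min(max(repaired[name], low), high)
    return repaired


def violations(candidate, bounds=BOUNDS):
    """List the bounded variables in candidate that lie outside their range."""
    return [
        name
        for name, (low, high) in bounds.items()
        if name in candidate and not (low <= candidate[name] <= high)
    ]
```

For instance, a candidate with `P1` below 10 or `P2` above 125 would be reported by `violations` and pulled back to the nearest limit by `clip_to_bounds`, while variables already inside their range are left unchanged.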

Table 8 reports the results for the combined heat and power dispatch problem alongside recent algorithms that have been used to solve it. The table shows that the proposed RDABC outperforms the other existing algorithms in terms of overall cost within the given heat and power constraints. However, when the obtained transmission loss is compared, the advantage of RDABC is less pronounced. The network loss coefficients and the feasible region are given in the Appendix.

4 Conclusion

In this paper, the Revamped Directed ABC algorithm is proposed for non-linear continuous problems, with an improved search strategy in the exploration and exploitation phases. The proposed algorithm has been tested on two kinds of problems: (1) standard mathematical benchmark instances and (2) the CHPED problem. These test beds were chosen to demonstrate the significance of the algorithm on both unconstrained and constrained optimization problems. On the benchmark instances, the proposed algorithm converges towards the optimal solution with less computational time. The Wilcoxon signed-rank test also indicates that the proposed method wins the pairwise comparison on most of the benchmark instances. On the constrained CHPED problem, it chooses five power values and three heat values efficiently, reducing the overall cost without violating the heat demand constraint. The proposed algorithm was compared with both recent and conventional algorithms, and the results show its significance. Future work is to solve more engineering problems with constrained non-linear search spaces.

References

Aderhold, A., Diwold, K., Scheidler, A., Middendorf, M.: Artificial bee colony optimization: a new selection scheme and its performance. In: Nature Inspired Cooperative Strategies for Optimization (NICSO 2010), pp. 283–294. Springer, Berlin, Heidelberg (2010)

Akay, B., Karaboga, D.: A modified artificial bee colony algorithm for real-parameter optimization. Inform Sci 192, 120–142 (2012)

Akbari, R., Mohammadi, A., Ziarati, K.: A novel bee swarm optimization algorithm for numerical function optimization. Commun. Nonlinear Sci. Num. Simul. 15(10), 3142–3155 (2010)

Anuar, S., Selamat, A., Sallehuddin, R.: A modified scout bee for artificial bee colony algorithm and its performance on optimization problems. J. King Saud Univ-Comput. Inform. Sci. 28(4), 395–406 (2016)

Banharnsakun, A., Achalakul, T., Sirinaovakul, B.: The best-so-far selection in artificial bee colony algorithm. Appl. Soft. Comput. 11(2), 2888–2901 (2011)

Basturk, B., Karaboga, D.: An artificial bee colony (ABC) algorithm for numeric function optimization. In: IEEE Swarm Intelligence Symposium, Indianapolis, Indiana, USA (2006)

Basu, M.: Combined heat and power economic dispatch by using differential evolution. Electr. Power Compon. Syst. 38, 996–1004 (2010)

Basu, M.: Bee colony optimization for combined heat and power economic dispatch. Expert Syst. Appl. 38, 13527–13531 (2011)

Beigvand, S.D., Abdi, H., La Scala, M.: Combined heat and power economic dispatch problem using gravitational search algorithm. Electr. Power Syst. Res. 133, 160–172 (2016)

Chen, T., Xiao, R.: Enhancing artificial bee colony algorithm with self-adaptive searching strategy and artificial immune network operators for global optimization. Sci. World J. 2014 (2014)

Civicioglu, P.: Backtracking search optimization algorithm for numerical optimization problems. Appl. Math. Comput. 219(15), 8121–8144 (2013)

Dorigo, M., Maniezzo, V., Colorni, A.: Ant system: optimization by a colony of cooperating agents. IEEE Trans Syst, Man, Cybern, Part B Cybern 26(1), 29–41 (1996)

Gao, W., Liu, S.: Improved artificial bee colony algorithm for global optimization. Inf. Process. Lett. 111(17), 871–882 (2011)

Gao, W.-F., Liu, S.-Y.: A modified artificial bee colony algorithm. Comput. Oper. Res. 39(3), 687–697 (2012)

Goldberg, D.E.: Genetic algorithms in search, optimization, and machine learning. Addison-Wesley (1989)

Kang, F., Li, J., Xu, Q.: Structural inverse analysis by hybrid simplex artificial bee colony algorithms. Comput. Struct. 87(13), 861–870 (2009)

Karaboga, N.: A new design method based on artificial bee colony algorithm for digital IIR filters. J. Franklin Inst. 346(4), 328–348 (2009)

Karaboga, D., Akay, B.: A survey: algorithms simulating bee swarm intelligence. Artif. Intell. Rev. 31(1–4), 61–85 (2009)

Karaboga, D., Akay, B.: A comparative study of artificial bee colony algorithm. Appl Math Comput 214(1), 108–132 (2009)

Karaboga, D., Gorkemli, B.: A quick artificial bee colony (qABC) algorithm and its performance on optimization problems. Appl. Soft Comput. 23, 227–238 (2014)

Karaboga, D., Gorkemli, B., Ozturk, C., Karaboga, N.: A comprehensive survey: artificial bee colony (ABC) algorithm and applications. Artif. Intell. Rev. 42(1), 21–57 (2014)

Karaboga, D., Ozturk, C.: A novel clustering approach: artificial bee colony (ABC) algorithm. Appl Soft Comput 11(1), 652–657 (2011)

Karaboga, D.: An idea based on honey bee swarm for numerical optimization. Technical report TR06, Erciyes University, Engineering Faculty, Computer Engineering Department (2005)

Kassabalidis, I., El-Sharkawi, M.A., Marks, R.J., Arabshahi, P., Gray, A.A.: Swarm intelligence for routing in communication networks. In: IEEE Global Telecommunications Conference (GLOBECOM'01), vol. 6, pp. 3613–3617 (2001)

Kaveh, A., Talatahari, S.: Size optimization of space trusses using Big Bang-Big Crunch algorithm. Comput. Struct. 87(17), 1129–1140 (2009)

Liu, B., Wang, L., Jin, Y.H., Tang, F., Huang, D.X.: Improved particle swarm optimization combined with chaos. Chaos, Solitons Fractals 25(5), 1261–1271 (2005)

Luo, J., Wang, Q., Xiao, X.: A modified artificial bee colony algorithm based on converge-onlookers approach for global optimization. Appl. Math. Comput. 219(20), 10253–10262 (2013)

Maeda, M., Tsuda, S.: Reduction of artificial bee colony algorithm for global optimization. Neurocomput 148, 70–74 (2015)

Malik, R.F., Rahman, T.A., Hashim, S.Z., Ngah, R.: New particle swarm optimizer with sigmoid increasing inertia weight. Int J Comput Sci Security 1(2), 35–44 (2007)

Mohammadi-Ivatloo, B., Moradi-Dalvand, M., Rabiee, A.: Combined heat and power economic dispatch problem solution using particle swarm optimization with time varying acceleration coefficients. Electr. Power Syst. Res 95, 9–18 (2013)

Neyestani, M., Hatami, M., Hesari, S.: Combined heat and power economic dispatch problem using advanced modified particle swarm optimization. J. Renew. Sustain. Energy. 11(1), 015302 (2019)

Ozturk, C., Hancer, E., Karaboga, D.: Dynamic clustering with improved binary artificial bee colony algorithm. Appl. Soft Comput. 28, 69–80 (2015)

Pawar, P., Rao, R., Davim, J.: Optimization of process parameters of milling process using particle swarm optimization and artificial bee colony algorithm. In: International Conference on Advances in Mechanical Engineering (2018)

Pan, Q.K., Tasgetiren, M.F., Suganthan, P.N., Chua, T.J.: A discrete artificial bee colony algorithm for the lot-streaming flow shop scheduling problem. Inform. Sci. 181(12), 2455–2468 (2011)

Passino, K.M.: Biomimicry of bacterial foraging for distributed optimization and control. IEEE Control. Syst. 22(3), 52–67 (2002)

Rajasekhar, A., Abraham, A., Pant, M.: Levy mutated artificial bee colony algorithm for global optimization. In: 2011 IEEE International Conference on Systems, Man, and Cybernetics (SMC). IEEE (2011)

Rao, R.S., Narasimham, S.V., Ramalingaraju, M.: Optimization of distribution network configuration for loss reduction using artificial bee colony algorithm. Int J Electr Power Energy Syst Eng 1(2), 116–122 (2008)

Samanta, S., Chakraborty, S.: Parametric optimization of some non-traditional machining processes using artificial bee colony algorithm. Eng. Appl. Artif. Intell. 24(6), 946–957 (2011)

dos Santos, C.L., Mariani, V.C.: A novel chaotic particle swarm optimization approach using Hénon map and implicit filtering local search for economic load dispatch. Chaos, Solitons Fractals 39(2), 510–518 (2009)

Singh, A.: An artificial bee colony algorithm for the leaf-constrained minimum spanning tree problem. Appl. Soft Comput. 9(2), 625–631 (2009)

Stanarevic, N., Tuba, M., Bacanin, N.: Enhanced artificial bee colony algorithm performance. In: Proceedings of the 14th WSEAS International Conference on Computers: part of the 14th WSEAS CSCC Multiconference, vol. 2, pp. 440–445 (2010)

Sun, L., Sun, W., Liang, X., He, M., Chen, H.: A modified surrogate-assisted multi-swarm artificial bee colony for complex numerical optimization problems. Microprocess Microsyst 76, 103050 (2020)

Wang, H., et al.: Multi-strategy ensemble artificial bee colony algorithm. Inform Sci 279, 587–603 (2014)

Xiang, T., Liao, X., Wong, K.-W.: An improved particle swarm optimization algorithm combined with piecewise linear chaotic map. Appl. Math. Comput. 190(2), 1637–1645 (2007)

Xiao, S., Wang, H., Wang, W., Huang, Z., Zhou, X., Xu, M.: Artificial bee colony algorithm based on adaptive neighborhood search and Gaussian perturbation. Appl. Soft Comput. 100, 106955 (2021)

Yan, X., et al.: A new approach for data clustering using hybrid artificial bee colony algorithm. Neurocomput 97, 241–250 (2012)

Yang, X.-S.: Engineering optimizations via nature-inspired virtual bee algorithms. In: International Work-Conference on the Interplay between Natural and Artificial Computation. Springer, Berlin, Heidelberg (2005)

Yi, Y., He, R.: A novel artificial bee colony algorithm. In: 2014 Sixth International Conference on Intelligent Human-Machine Systems and Cybernetics (IHMSC), vol. 1. IEEE (2014)

Yurtkuran, A., Emel, E.: An enhanced artificial bee colony algorithm with solution acceptance rule and probabilistic multisearch. Comput. Intell. Neurosci. 2016, 41 (2016)

Zhang, D., Guan, X., Tang, Y., Tang, Y.: Modified artificial bee colony algorithms for numerical optimization. In: Proc. of 3rd International Workshop on Intelligent Systems and Applications (2011)

Zhu, G., Kwong, S.: Gbest-guided artificial bee colony algorithm for numerical function optimization. Appl. Math. Comput. 217(7), 3166–3173 (2010)

Özbakir, L., Baykasoğlu, A., Tapkan, P.: Bees algorithm for generalized assignment problem. Appl Math Comput 215(11), 3782–3795 (2010)

Acknowledgements

This work was supported by the National Research Foundation of Korea (NRF) grant funded by the Korea government (MSIT) (No. 2019R1G1A110034111).

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Appendix

Network Loss coefficients

Cite this article

Thirugnanasambandam, K., Rajeswari, M., Bhattacharyya, D. et al. Directed Artificial Bee Colony algorithm with revamped search strategy to solve global numerical optimization problems. Autom Softw Eng 29, 13 (2022). https://doi.org/10.1007/s10515-021-00306-w
