Abstract

Advances in AI techniques have fueled research on using EEG data for psychiatric disorder diagnosis. Although EEG is cost-effective and has high temporal resolution, its low signal-to-noise ratio (SNR) hampers the extraction of critical markers and the improvement of models, while denoising techniques can themselves remove effective information from the EEG. This study aims to use AI methods to process raw EEG data with two primary objectives: first, to acquire more reliable markers for schizophrenia, and second, to construct a better automatic classifier for schizophrenia. To remove noise while retaining as much task-related (classification-related) effective information as possible, we introduce an Effective Information Estimation Framework (EIEF) based on three key principles: a task-centered approach, the use of 1D-CNN test metrics to gauge the proportion of effective information, and feedback. We provide a theoretical foundation by integrating these principles into a mathematical model of EIEF. In experiments, we established a pool of 66 denoising paradigms, from which EIEF identified the optimal paradigm for restoring effective information on each of two datasets. Using the processed datasets, we trained a 3D-CNN for automatic schizophrenia diagnosis, achieving test accuracies of 99.94\(\%\) on dataset 1 and 98.02\(\%\) on dataset 2 in subject-dependent evaluations, and accuracies of 89.85\(\%\) on dataset 1 and 98.02\(\%\) on dataset 2 in subject-independent evaluations. Additionally, we extracted 38 features from each channel of both the processed and raw datasets, and found that 20.86\(\%\) (dataset 1) of the feature distribution differences between patients and healthy controls changed significantly after applying the optimal paradigm. The framework thus improves model performance and yields more reliable electrobiological markers. These findings have promising implications for the clinical diagnosis and pathological analysis of schizophrenia.

1 Introduction

Schizophrenia (SZ) is a chronic and intricate neuropsychiatric disorder characterized by symptoms such as blunted affect, hallucinations, and delusions. These debilitating symptoms lead to significant cognitive and, ultimately, social deficits [1], often resulting in individuals spending a substantial portion of their lives in psychiatric care facilities. The precise etiology of schizophrenia remains unclear, but it is believed to be influenced by a complex interplay of genetic, environmental, and psychosocial factors [2].

The electroencephalogram (EEG) is a biological signal characterized by high temporal resolution and low acquisition cost, allowing changes in brain states to be detected [3]. Consequently, EEG has been widely used in AI research to identify various human mental states, such as drunkenness [4, 5], depression [6], and various emotions [7]. The diagnosis of schizophrenia using EEG, the primary focus of this paper, is likewise an active area of research [8,9,10].

In the above context, when developing an automatic system for the diagnosis of SZ, we have several primary objectives. Firstly, our main goal is to create a system that can assist clinicians in diagnosing SZ effectively. Secondly, we aim to identify and validate interpretable features that have the potential to classify SZ patients accurately. Lastly, we endeavor to establish electrobiological markers, which are essentially features, for SZ using AI techniques. This paper will address all three of these objectives, striving to provide solutions and insights into the diagnosis of SZ.

Regrettably, the inherently low SNR of EEG data [11] poses a challenge to achieving these objectives. First, noise in EEG data dilutes the relevant information and thereby degrades the performance of trained classifiers. In addition, owing to the limitations of acquisition equipment, EEG datasets are generally small. As a result, the noise and redundant components can easily follow different distributions in the two sub-datasets belonging to healthy individuals and patients. For example, Fig. 1 shows boxplots of the bubble entropy [12] of all disjoint 4-second segments in dataset 1 (described below), with separate boxplots for the SZ segments and the healthy control (HC) segments. In the raw data, the mean and median entropy values for SZ patients are higher than those for healthy individuals; after noise removal, however, these metrics for SZ patients drop below those for healthy individuals. Such a reversal hinders the identification of reliable features.

Moreover, training an automatic classification system on such datasets may mislead it into extracting noise as hidden-layer features, ultimately reducing its generalization ability.

Because redundant EEG signals have yet to be clearly defined, removing them is currently impossible. As for noise, although more and more denoising approaches for EEG signals have been proposed in recent years [11, 13,14,15], the issues above have not been completely resolved. Worse still, signal preprocessing operations can significantly influence the outcomes of specific classification tasks when applied to task-related datasets, potentially causing the loss of essential task-related information [16, 17].

To overcome these limitations, this paper introduces an EEG effective information estimation framework (EIEF). EIEF is directly aligned with the classification task at hand and tailored to a specific EEG dataset. Its core mechanism is to use the test metrics of a trained end-to-end DNN to feed back the amount and proportion of effective information to the selection of the denoising approach. With a test metric as the objective function, finding the optimal denoising paradigm for a specific EEG dataset, together with the corresponding optimal estimate of the SZ-related effective information in that dataset, becomes an optimization problem. Ideally, the optimal paradigm removes much of the noise while retaining the effective information, thereby enhancing both the reliability of SZ electrobiological marker discovery and the generalization capacity of SZ classification systems trained on the optimally processed dataset.
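The feedback mechanism described above can be sketched as a search over a finite pool of paradigms. This is only a minimal illustration, not the implementation used in this paper: the names `select_paradigm`, `pool` and `evaluate` are hypothetical, and `evaluate` stands in for training and testing an end-to-end classifier and returning a test metric such as accuracy.

```python
from typing import Callable, Dict, List, Sequence, Tuple

Segment = List[List[float]]            # channels x samples
Paradigm = Callable[[Segment], Segment]
Dataset = Sequence[Tuple[Segment, int]]


def select_paradigm(
    pool: Dict[str, Paradigm],
    dataset: Dataset,
    evaluate: Callable[[Dataset], float],
) -> Tuple[str, float]:
    """Return the name and score of the paradigm with the highest test metric."""
    if not pool:
        raise ValueError("the paradigm pool must not be empty")
    best_name, best_score = "", float("-inf")
    for name, paradigm in pool.items():
        # Apply the candidate denoising paradigm to every labeled segment.
        processed = [(paradigm(x), y) for x, y in dataset]
        # Hypothetical stand-in: train and test a classifier, return the metric.
        score = evaluate(processed)
        if score > best_score:
            best_name, best_score = name, score
    return best_name, best_score
```

The test metric plays the role of the objective function, and the pool defines the feasible set, so the loop is an exhaustive search over that set.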

In this paper, the framework uses the 1D-CNN introduced in [18] as the core component of the DNN. However, when applying the framework’s result to construct an automatic SZ diagnosis system, we opt for the larger and deeper 3D-CNN proposed in [19] to enhance system performance.

To sum up, this paper focuses on the establishment and validation of EIEF, the assessment of changes in EEG feature disparities between patients and healthy individuals before and after applying the optimal denoising paradigm, and the construction of an automated SZ diagnosis system using EIEF. The paper is structured into three main parts: theory and methodology, experiments, and verifications. In the theory and methodology part, we first discuss the properties of the EEG effective information of classification tasks and then, building on the estimation of that information, propose EIEF, comprising the objective function, constraints and solution methods. Regarding the method, following the conditions and solution of EIEF, we specify the two EEG datasets used, create a paradigm pool consisting of 66 denoising paradigms, and describe the two CNNs used. In the experiments part, for each dataset processed by each paradigm, we input segments of the processed dataset into the 1D-CNN for training and testing, using subject-independent cross-validation. We aim to find the optimal paradigm for each of the two datasets within the pool, with test accuracy as the optimization objective. Subsequently, using the datasets processed by the optimal paradigms, we develop an automatic SZ diagnostic system based on the 3D-CNN and compare it with the state of the art. In the verifications part, we replace the 1D-CNN with the 3D-CNN and run another search to verify the stability of the optimal paradigm and the advantage of using the 1D-CNN within the framework. Furthermore, we evaluate the change in feature disparities between the two groups of individuals before and after applying the optimal paradigm to elucidate the significance of the denoising paradigm.
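Subject-independent cross-validation requires that segments from one subject never appear in both the training and the test set. The following sketch shows one way to build such folds; the round-robin assignment of subjects to folds and the name `subject_independent_folds` are illustrative assumptions, not the exact protocol of this paper.

```python
from typing import Dict, List, Sequence, Tuple


def subject_independent_folds(
    subject_ids: Sequence[str], n_folds: int
) -> List[Tuple[List[int], List[int]]]:
    """Split segment indices so that no subject is shared by train and test.

    subject_ids[i] is the subject that segment i was recorded from.
    """
    subjects = sorted(set(subject_ids))
    if not 2 <= n_folds <= len(subjects):
        raise ValueError("n_folds must be between 2 and the number of subjects")
    # Assumption: subjects are assigned to folds in round-robin order.
    fold_of: Dict[str, int] = {s: k % n_folds for k, s in enumerate(subjects)}
    folds = []
    for fold in range(n_folds):
        test = [i for i, s in enumerate(subject_ids) if fold_of[s] == fold]
        train = [i for i, s in enumerate(subject_ids) if fold_of[s] != fold]
        folds.append((train, test))
    return folds
```

In a subject-dependent evaluation, by contrast, segments are shuffled without regard to their subject, so the same subject may contribute to both sets.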

2 Related Work

2.1 EEG noise removal techniques

EEG has high temporal resolution, but its signals are prone to noise contamination, resulting in various artifacts [20]. The main physiological artifacts in EEG signals arise from eye movement, blinking, heart activity, and muscle activity. There are also many extrinsic artifacts, such as line noise and volume conduction artifacts [11]. Traditional artifact removal techniques include regression methods, wavelet transforms, blind source separation (BSS) methods, and filtering methods, among others. In recent years, several new approaches, such as AI-based methods and hybrid methods, have emerged, demonstrating improved performance and reduced computational demands. Sweeney, Ward, and McLoone assumed that each channel was the sum of pure EEG data and a certain proportion of artifacts; the estimated artifacts were then subtracted from the EEG [21]. Gianluca Di Flumeri proposed the regression-based eye correction algorithm (REBLINCA), which better preserves the EEG signal in segments without eye movement. Unlike other regression-based methods, it does not require an EOG channel, and compared with ICA-based algorithms it requires fewer channels and less computation [14]. Bigdely-Shamlo et al. introduced a robust referencing algorithm that attempts to estimate the true average of the EEG channels after removing contamination from bad channels. Their aim was to develop a standardized early-stage preprocessing pipeline (the PREP pipeline) that detects and removes certain experimentally generated artifacts, such as eye blinks or muscle activations [13]. Banghua Yang proposed a novel blind source separation method called CCA-EEMD to remove EOG artifacts automatically while preserving more valuable information from the raw EEG. A distinctive aspect of this method is that the identified EOG component is not removed directly but is used to extract neural EEG data, which retains more effective information [15]. Sadiq et al. used multiscale principal component analysis to decompose EEG signals and employed the Kaiser rule to select the principal components for noise removal [22]. Morteza Zangeneh Soroush introduced a novel method to detect artifactual components estimated by second-order blind identification (SOBI). Artifacts are detected using a mixture of well-established conventional classifiers and removed using the stationary wavelet transform (SWT) to preserve neural information. This method combines signal processing techniques and machine learning algorithms, yielding significant results across various scenarios [23]. However, all the above methods are general-purpose rather than task-centered. In other words, they are open-loop methods without feedback.
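To make the regression idea concrete, the sketch below removes from one channel the least-squares projection of a single reference signal. It is a generic textbook illustration under the additive assumption (channel = neural signal + weight × artifact) and is not the specific algorithm of [21] or REBLINCA; the availability of a reference artifact signal and the name `regress_out` are assumptions made for this example.

```python
from typing import List


def regress_out(channel: List[float], reference: List[float]) -> List[float]:
    """Subtract the least-squares projection of `reference` from `channel`."""
    if len(channel) != len(reference):
        raise ValueError("channel and reference must have the same length")
    energy = sum(r * r for r in reference)
    if energy == 0.0:
        # A silent reference carries no artifact estimate; return a copy.
        return list(channel)
    # Least-squares propagation weight of the reference into this channel.
    weight = sum(x * r for x, r in zip(channel, reference)) / energy
    return [x - weight * r for x, r in zip(channel, reference)]
```

Like the methods reviewed above, this procedure is open-loop: the weight is fitted to the signal alone and receives no feedback from a downstream classification task.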

2.2 EEG-based SZ classification

From the raw EEG data to the final classification results, the processing performed by researchers can be broadly divided into the following steps. First, data extraction is conducted; the EEG signals commonly used for disease classification include resting-state signals and task-related signals. Next, data denoising is performed, as discussed in the preceding section. Then, signal analysis is carried out, such as feature extraction, nonlinear signal decomposition, and spectral analysis. Finally, classifiers are trained on the analyzed data. In this subsection, we therefore emphasize how signal analysis and classifier design are conducted in prominent prior work.

More than a decade ago, Sabeti et al. [24] extracted Shannon entropy, spectral entropy, approximate entropy, Lempel-Ziv complexity and Higuchi fractal dimension from an EEG dataset (recorded in the resting state with eyes open), and achieved classification accuracies of 86\(\%\) and 90\(\%\) with LDA and Adaboost, respectively. Parvinnia et al. [25] also used resting-state EEG signals recorded with eyes open. After extracting fractal dimension, band power and autoregressive (AR) model features, they applied weighted distance nearest neighbor (WDNN) for classification and obtained an accuracy of 95.3\(\%\). Murphy et al. [26] collected a task-related dataset from duration-deviant MMN tasks and found, through statistical analysis, that adolescents with psychotic symptoms were characterised by a reduction in MMN amplitude at frontal and temporal regions compared to controls. During that period, researchers extracted a few features directly from raw data and then used them to perform simple statistical analysis or to train classical machine learning classifiers.

As research has progressed, researchers have increasingly incorporated new technologies into the feature extraction process. For example, several dozen different types of features can be extracted at once, after which feature selection techniques are used to select a suitable subset for training a classifier. Jahmunah et al. [27] extracted a total of 157 features from the dataset and selected 14 of them using Student’s t-test. Based on these features, they performed classification with various ML classifiers: DT, LD, KNN, PNN, and SVM with various kernels. The average performance value was 92.91\(\%\). Prabhakar et al. [28] first extracted 9 nonlinear features and then optimized the feature selection using Artificial Flora (AF) optimization, Glowworm Search (GS) optimization, Black Hole (BH) optimization, and Monkey Search (MS) optimization. They also trained several classifiers on the optimized features and found that SVM-RBF achieved the best performance of 97.54\(\%\) (for normal cases) and 92.17\(\%\) (for schizophrenia cases).

In recent years, various signal decomposition techniques have been widely employed for EEG-based state recognition. Sadiq et al. achieved a sensitivity, specificity and classification accuracy of 93\(\%\), 92.1\(\%\) and 91.4\(\%\), respectively, on a motor imagery dataset by using a robust and simple automated multivariate empirical wavelet transform (MEWT) to obtain joint instantaneous amplitude and frequency components [29]. They also achieved an average classification accuracy of 99.8\(\%\) by employing a multivariate variational mode decomposition (MVMD) method to obtain joint modes in frequency scale across all channels [30]. In the realm of schizophrenia recognition, researchers have also begun innovating in feature engineering from the perspective of signal decomposition. Krishnan et al. [31] used Multivariate Empirical Mode Decomposition (MEMD) to decompose the EEG data into Intrinsic Mode Function (IMF) signals. Five entropy measures were then computed from the IMF signals, and a subset of features was selected by Recursive Feature Elimination. Using an SVM with a radial basis function kernel (SVM-RBF), they achieved the highest accuracy and F1-score of 93\(\%\) with 95 features and obtained an AUC of 0.9831. Baygin [32] extracted features from 19-channel EEG signals of healthy and schizophrenia classes, using the Tunable Q-Factor Wavelet Transform (TQWT) and statistical moment methods, and selected a feature subset with the ReliefF method. With KNN as the classifier, he achieved an accuracy of 99.12\(\%\). Khare et al. [33] used the Fisher score method to select the most discriminant channel and then used the flexible tunable Q wavelet transform (F-TQWT) to decompose the EEG signal. After the decomposition, similarly to the studies above, they extracted five features and employed the Kruskal-Wallis test to select a subset of features. Subsequently, this subset was fed into a flexible least square support vector machine (F-LSSVM) classifier. A more innovative aspect of their paper was the use of the grey wolf optimization algorithm to incorporate feedback from the SVM results into the selection of Q-wavelets. They achieved an accuracy of 91.39\(\%\), with sensitivity, specificity, precision, F-1 measure, false positive rate and error of 92.65\(\%\), 93.22\(\%\), 95.57\(\%\), 0.9306, 6.78\(\%\) and 8.61\(\%\), respectively.
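For reference, the metrics quoted in this subsection follow from the four counts of a binary confusion matrix. The sketch below uses the standard definitions; the function name `binary_metrics` is hypothetical, and it assumes that every denominator is non-zero.

```python
from typing import Dict


def binary_metrics(tp: int, fp: int, tn: int, fn: int) -> Dict[str, float]:
    """Standard binary classification metrics from confusion-matrix counts."""
    total = tp + fp + tn + fn
    sensitivity = tp / (tp + fn)      # true positive rate (recall)
    specificity = tn / (tn + fp)      # true negative rate
    precision = tp / (tp + fp)
    return {
        "accuracy": (tp + tn) / total,
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        "f1": 2 * precision * sensitivity / (precision + sensitivity),
        "false_positive_rate": fp / (fp + tn),   # equals 1 - specificity
        "error": (fp + fn) / total,              # equals 1 - accuracy
    }
```

The two complement relations are visible in the figures reported for [33]: a false positive rate of 6.78\(\%\) is the complement of the 93.22\(\%\) specificity, and an error of 8.61\(\%\) is the complement of the 91.39\(\%\) accuracy.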

In addition to intensive research in feature engineering, continuing breakthroughs in deep learning have led researchers to use various types of DNNs for schizophrenia recognition. One category of research feeds continuous or segmented EEG signals directly into the network. For example, Oh et al. [18] introduced a 1D-CNN model designed to analyze signals, automatically extract salient features, and perform classification. This model achieved a classification accuracy of 98.07\(\%\) for subject-dependent (SD) evaluation and 81.26\(\%\) for subject-independent (SI) evaluation. Sharma et al. [34] proposed a schizophrenia hybrid neural network (SzHNN), which combines convolutional neural networks (CNNs) and long short-term memory (LSTM). They divided the original data of two EEG datasets into non-overlapping segments and used these segments to train the SzHNN, achieving an accuracy of 99.9\(\%\) on dataset 1 and 99.5\(\%\) on dataset 2. Another category of research fuses feature engineering with DNNs. Leveraging the scale of DNNs, such papers often produce large feature sets in their feature engineering, such as visualized images of EEG signals. Shen et al. [35] developed an image feature, the functional brain network, using a multivariate autoregressive model and a coherence connectivity algorithm, and used a 3D-CNN to classify SZ patients. The proposed 3D-CNN method achieved 98.47 ± 1.47\(\%\) accuracy, 99.26 ± 1.07\(\%\) sensitivity, and 97.23 ± 3.76\(\%\) specificity. Similarly, Khare et al. [36] captured the instantaneous information of EEG signals in the time-frequency domain using MH-TFD, converted the information to two-dimensional plots, and fed the plots to their CNN model, SchizoNET. The proposed model achieved accuracies of 97.4\(\%\), 99.74\(\%\), and 96.35\(\%\) on the three datasets, respectively. Zülfikar et al. [37] integrated Empirical Mode Decomposition (EMD) with the pre-trained VGG16 CNN. Hilbert Spectrum (HS) images of the first four Intrinsic Mode Function (IMF) components, obtained by applying EMD to the EEG signals, were fed into several well-known CNNs. Using the VGG16 network, they obtained classification performance of 98.2\(\%\) for Dataset I and 96.02\(\%\) for Dataset II.

The innovation in the aforementioned articles includes the introduction of new features and new methods of feature acquisition (such as signal decomposition), the introduction of new feature subset selection methods, the introduction of new classifiers, etc. However, none of these articles focus on improving the reliability of existing features, which is the focal point of our research.

3 Theory and method

The primary objective of this chapter is to introduce, in mathematical terms, an effective information estimation framework that can find the optimum among a given series of denoising paradigms, based on any end-to-end classification model and a given dataset. Then, following the conditions of EIEF and after specifying the EEG datasets used, we create a paradigm pool consisting of 66 denoising paradigms for the subsequent grid search for the optimum. Finally, the two classifiers, one inside EIEF and one outside it, are detailed: the classifier inside serves as the foundation for assessing the metrics used in the paradigm search, while the classifier outside is used to construct the automated SZ diagnosis system.

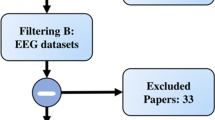

The workflow, from EIEF’s operation to the construction and use of the diagnosis system, is illustrated in Fig. 2. Table 1 lists the symbols used in the theory part.

3.1 Hypotheses on property of effective information

Let \(\varvec{Z \in \mathbb {R}^{c \times t}}\) denote the \(\varvec{c \times t}\)-dimensional matrix variable, and \(\varvec{X \in \Theta \subset \mathbb {R}^{c \times t}}\) denote the observed EEG signal sample, where \(\varvec{c}\) is the number of recorded channels, \(\varvec{t}\) is the sample size and \(\varvec{\Theta }\) is the sample space. The purpose of this subsection is to describe mathematically a common property of EEG effective information for any classification task. To denote the effective information, we first explain and denote the objective components of an EEG signal.

Here, we denote an EEG signal objective component as an \(\varvec{i \in I_s}\) which must satisfy the condition: \(\varvec{\forall X, \exists S^i \in \Theta ^ i }\) \(\varvec{\subset \mathbb {R}^{c \times t}}\), where \(\varvec{S^i}\) can be called the sample of component \(\varvec{i}\) (or sample of \(\varvec{i}\)), \(\varvec{I_s}\) is the tag set, \(\varvec{\Theta ^ i}\) is the component space generated by \(\varvec{\Theta }\). For now, the elements that are objective components inside \(\varvec{I_s}\) are not all clear, but it is clear that \(\varvec{I_s}\) is a finite set. Then denote a sample of the real signal under any reference \(\varvec{\lambda }\) as \(\varvec{S^{r} \in \Theta ^{r}}\), and a sample of the real noise is \(\varvec{S^{rn} \in \Theta ^{rn}}\), plus \(\varvec{\forall X, \exists S^{r},S^{rn}: X = S^{r} + S^{rn}}\). When the classification task is \(\varvec{T \in \Gamma }\) (\(\varvec{\Gamma }\) is EEG classification task set), a sample of the effective information of \(\varvec{T}\) is \(\varvec{S^{i^T}}\), the redundant information \(\varvec{S^{i^Tm}}\), plus \(\varvec{\forall S^{r}, \exists S^{i^T}, S^{i^Tm}: S^{r} = }\) \(\varvec{S^{i^T} + S^{i^Tm}}\).

Note that \(\varvec{\forall i,\exists X}\) that can correspond to different \(\varvec{S^i}\), but in any one real sampling there is only one \(\varvec{S^i}\) for any sampled \(\varvec{X}\). This is because the prior information available during sampling, which lies outside \(\varvec{\Theta }\), determines the unique \(\varvec{S^i}\). Conversely, given enough prior information, we can construct an \(\varvec{X}\) from \(\varvec{\forall S^i}\). Here, a mathematical model is proposed to represent the prior information and to achieve this construction.

Definition 1

\(\varvec{\forall i}\), define a mapping and matrix function

\(\varvec{g_i: \mathbb {R}^{c \times t} \rightarrow \mathbb {R}^{c \times t},\quad C \mapsto g_i(C),\quad g_i \in G_i \subset G}\)

as a constructed function based on \(\varvec{i}\) if \(\varvec{\forall X, \exists S^i,C:X=S^i+g_i(C)}\) and \(\varvec{\forall S^i, \exists X,C:X=S^i+g_i(C)}\), where \(\varvec{C}\) is called the parameter matrix of the prior information of \(\varvec{X}\) except \(\varvec{S^i}\), \(\varvec{G_i}\) is the function set containing all qualified constructed functions based on \(\varvec{i}\), and \(\varvec{G}\) is the function set containing all \(\varvec{g}\). Typically, \(\varvec{\forall i}\), the identity mapping must be a constructed function. In this case, since \(\varvec{\forall X, \exists S^i}\), \(\varvec{C}\) shall be \(\varvec{X-S^i}\). Any constructed function can readily be used to define a new sample space.

Definition 2

\(\varvec{\forall i,\forall g_i}\), the space \(\varvec{\bar{\Theta }_{g_i} \subset (\mathbb {R}^{c \times t},\mathbb {R}^{c \times t})}\) is the complete observed sample space based on \(\varvec{g_i}\) if \(\varvec{ \forall (Z_1,Z_2) \in }\) \(\varvec{\bar{\Theta }_{g_i}: Z_1 \in \Theta }\) and \(\varvec{ \forall (X,C) \in \bar{\Theta }_{g_i},\exists ! S^i:S^i}\) \(\varvec{=X-g_i(C)}\). Note that \(\varvec{\bar{\Theta }_{g_i} \in \bar{\Theta }}\), where \(\varvec{\bar{\Theta }}\) is the family of all \(\varvec{\bar{\Theta }_{g_i}}\).

Through complete observed sample spaces, getting the effective information of \(\varvec{\forall T}\) will be implemented by a mapping.

Definition 3

\(\varvec{\forall i,\forall g_i}\), define the mapping

\(\varvec{f_{g_i}: \bar{\Theta }_{g_i} \rightarrow \Theta ^i,\quad (X,C) \mapsto S^i = X-g_i(C),\quad f_{g_i} \in \digamma ^i \subset \digamma }\)

as the \(\varvec{g_i}\)-based restoration function for \(\varvec{i}\), where \(\varvec{\digamma ^i}\) is the function set containing all \(\varvec{f_{g_i}}\) for component \(\varvec{i}\) based on all possible \(\varvec{g_i}\), and \(\varvec{\digamma }\) is the set of all \(\varvec{f}\) for all components. Obviously, \(\varvec{\forall T}\), \(\varvec{f_{g_{i^T}}}\) is the restoration function for \(\varvec{i^T}\). So far, \(\varvec{\forall T}\), any \(\varvec{S^{i^T}}\) can be obtained from \(\varvec{\Theta }\) through \(\varvec{f_{g_{i^T}}}\). However, the property we aim to describe is related to classifiers, which first requires a definition of classifiers.
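The relation between construction, \(\varvec{X=S^i+g_i(C)}\), and restoration, \(\varvec{S^i=X-g_i(C)}\), can be checked numerically with a small sketch. It uses the identity mapping as the constructed function, which Definition 1 singles out as the typical case; the helper names `construct`, `restore` and `identity` are hypothetical and chosen only for this illustration.

```python
from typing import Callable, List

Matrix = List[List[float]]           # channels x samples


def identity(c: Matrix) -> Matrix:
    """The identity mapping used as the constructed function g_i."""
    return c


def construct(s_i: Matrix, c: Matrix, g: Callable[[Matrix], Matrix]) -> Matrix:
    """Build an observed sample X = S^i + g(C)."""
    g_c = g(c)
    return [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(s_i, g_c)]


def restore(x: Matrix, c: Matrix, g: Callable[[Matrix], Matrix]) -> Matrix:
    """Recover the component sample S^i = X - g(C)."""
    g_c = g(c)
    return [[a - b for a, b in zip(ra, rb)] for ra, rb in zip(x, g_c)]
```

With the identity mapping, the parameter matrix is simply \(\varvec{C = X - S^i}\), so restoration subtracts exactly what construction added.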

Definition 4

In this paper, a classifier for EEG is

\(\varvec{h: \mathbb {R}^{c \times \bar{t}} \rightarrow \mathbb {R}^{cl},\quad \bar{Z} \mapsto \hat{y},\quad h \in H}\)

where \(\varvec{H}\) is the hypothesis space, \(\varvec{\bar{Z} \in \mathbb {R}^{c \times \bar{t}}}\) is a block of the corresponding \(\varvec{Z}\), or a subsample of the corresponding \(\varvec{X}\), \(\varvec{\bar{t}}\) is the sample size of the subsample (\(\varvec{\bar{t} \le t}\)), \(\varvec{cl}\) is the number of classes determined by task \(\varvec{T}\), and \(\varvec{\hat{y}}\) is the output vector describing the probabilities that \(\varvec{\bar{Z}}\) belongs to each class. Note that \(\varvec{h}\) takes segmented EEG epochs as input, so the classifiers considered here are not traditional classification models but also cover EEG signal processing, feature extraction and selection, and feature dimensionality transformation. Thus, \(\varvec{H_{re}}\) is the hypothesis set containing all realizable hypotheses, and these hypotheses must come from models in which all of the above procedures are learnable (end-to-end models). For instance, an SVM classifier based on manual feature extraction does not belong to \(\varvec{H_{re}}\), whereas a CNN classifier belongs to \(\varvec{H_{re}}\) if it takes the segmented data as input and produces the probability vector as output. Such a definition avoids, as far as possible, building a priori hypotheses into the classifier, since such hypotheses would influence the classifier’s sensitivity to changes in the components of an EEG training set.

Before stating the hypothesis, some final notation is needed. Let a classifier that can be represented by any model \(\varvec{b \in B_j \subset B}\) be \(\varvec{h_j(;\theta _j) \in H_j \subset H_{re}}\) (\(\varvec{j \in I_b}\) and \(\varvec{\forall j \in I_b: ((\exists ! B_j \subset B) \wedge (\exists !H_j \subset H_{re}))}\)), where \(\varvec{B_j}\) is the set containing all the models of a specific model category (such as a 3D-CNN with fixed hyper-parameters), \(\varvec{B}\) is the set containing all end-to-end models for classification, \(\varvec{H_j}\) is the set of classifiers corresponding to \(\varvec{B_j}\), and \(\varvec{\theta _j \in \mathbb {R}^{1 \times \omega _j}}\) is the parameter vector of the models belonging to \(\varvec{B_j}\), where \(\varvec{\omega _j}\) is the number of parameters.

\(\varvec{\forall T \in \Gamma }\), \(\varvec{\forall g_{i^T} \in G_{i^T}}\), let \(\varvec{\bar{\Theta }_{g_{i^T}} \times Y^T}\) represent the complete observed example space based on \(\varvec{g_{i^T}}\), where \(\varvec{Y^T \subset \mathbb {R}^{cl}}\) is the label space of \(\varvec{T}\), and let \(\varvec{\forall ((X,C),y) \in \bar{\Theta }_{g_{i^T}} \times Y^T}\) represent an observed example and a random variable from the space, where \(\varvec{y}\) is the real label of the sample. Thus, we can write \(\varvec{\forall ((X,C),y):((X,C),y) \sim D^g }\) to indicate that \(\varvec{\bar{\Theta }_{g_{i^T}} \times Y^T}\) follows an unknown prior distribution. In addition, \(\varvec{\forall i \in I_s}\), there is a component \(\varvec{i}\) example space \(\varvec{\Theta ^i \times Y^T}\), and \(\varvec{\forall (S^i,y):(S^i,y) \sim D_i^T}\).

Then, let \(\varvec{\{I_{r},I_{rn},I_{else}\}}\) denote a partition of \(\varvec{I_s}\), in which \(\varvec{\forall i \in I_{r}}\) must represent an objective component of the real signal and \(\varvec{\forall i \in I_{rn}}\) must represent an objective component of the real noise. We can now present the hypothesis.

Hypothesis 1

\(\varvec{\forall T}\),the \(\varvec{i^T}\) named effective information for \(\varvec{T}\) has a property that \(\varvec{\forall j \in I_b}\),

where \(\varvec{\bar{\Theta }_{g_i}}\) is a complete observed example space based on \(\varvec{\forall g_{i} \in G_{i}}\), \(\varvec{[f_{g_i}(X,C)]_k}\) is the \(\varvec{k}\)-th block of \(\varvec{f_{g_i}(X,C)}\) (blocks are taken from left to right; if the last block does not belong to \(\varvec{\mathbb {R}^{c \times \bar{t}}}\), it is discarded), \(\varvec{n}\) is the number of blocks in \(\varvec{f_{g_i}(X,C)}\), \(\varvec{L}\) is the loss function, and \(\varvec{\mathcal {P}((X,C),y)}\) is the probability of sampling \(\varvec{((X,C),y)}\) from \(\varvec{D^g}\). Equation (4) is essentially a variant of the formula used to calculate the generalization error of a classification model [38]. This hypothesis means that, among the classifiers with the best generalization abilities found on the basis of all EEG components belonging to \(\varvec{I_{r}}\), the best classifier based on the effective information for \(\varvec{T}\) must be the best of the best, no matter which kind of model \(\varvec{H_j}\) with fixed hyper-parameters is chosen for classification.
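The block convention can be illustrated with a short sketch. It assumes that the per-sample loss is averaged over the \(\varvec{n}\) complete blocks and uses cross-entropy as a stand-in for \(\varvec{L}\); both choices, as well as the names `split_blocks` and `mean_block_loss`, are illustrative assumptions rather than part of the hypothesis.

```python
import math
from typing import Callable, List, Sequence

Matrix = List[List[float]]           # channels x samples


def split_blocks(x: Matrix, block_len: int) -> List[Matrix]:
    """Cut x into blocks of block_len samples, left to right.

    A trailing block shorter than block_len is discarded.
    """
    if block_len <= 0:
        raise ValueError("block_len must be positive")
    n = len(x[0]) // block_len
    return [
        [row[k * block_len:(k + 1) * block_len] for row in x]
        for k in range(n)
    ]


def cross_entropy(probs: Sequence[float], label: int) -> float:
    """Negative log-probability assigned to the true class."""
    return -math.log(probs[label])


def mean_block_loss(
    x: Matrix,
    label: int,
    classifier: Callable[[Matrix], Sequence[float]],
    block_len: int,
) -> float:
    """Average the loss of one restored sample over its complete blocks."""
    blocks = split_blocks(x, block_len)
    if not blocks:
        raise ValueError("sample is shorter than one block")
    return sum(cross_entropy(classifier(b), label) for b in blocks) / len(blocks)
```

Weighting such per-sample losses by the sampling probability and summing over the example space then gives a generalization-error estimate of the kind the hypothesis compares across components.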

To extend Hypothesis 1 to \(\varvec{\forall i \in I_s}\), some further work is required. We begin with another hypothesis.

Hypothesis 2

There is an intuitive and important view that \(\varvec{\forall i \in I_{else}}\) shall be composed by an \(\varvec{i_1 \in I_{r}}\) and an \(\varvec{i_2 \in I_{rn}}\) at least.

Next, \(\varvec{\forall T \in \Gamma }\), \(\varvec{\forall g_{i^T} \in G_{i^T}}\), when the label of \(\varvec{\bar{\Theta }_{g_{i^T}} \times Y^T}\) is fixed to a specific label, the complete observed example space degenerates to the complete observed example subspace, denoted as \(\varvec{\bar{\Theta }_{g_{i^T}} \times \{y_\nu \}}\) (\(\varvec{\nu \in \{1,2,\ldots ,cl\}}\)), and \(\varvec{\forall \nu : \forall ((X,C),y_\nu ) \in \bar{\Theta }_{g_{i^T}} \times \{y_\nu \}}\). Similarly, we write \(\varvec{((X,C),y_\nu ) \sim D^g(|y=y_\nu )}\) to indicate that \(\varvec{\bar{\Theta }_{g_{i^T}} \times \{y_\nu \}}\) follows an unknown prior distribution, and \(\varvec{\forall (S^i,y_\nu ):(S^i,y_\nu ) \sim D_i^T(|y=y_\nu )}\) for the same reason.

Finally, based on the two hypotheses, a corollary is proposed to show that, under certain conditions, the restriction in Hypothesis 1 that \(\varvec{i^T}\) is the best only among \(\varvec{I_r}\) can be removed.

Corollary 1

\(\varvec{\forall T \in \Gamma , i \in I_{rn},\alpha ,\beta \in \{1,2,..,cl\}}\), if the following condition holds:

then for \(\varvec{\forall j \in I_b}\), the following formula holds:

where \(\varvec{\bar{\Theta }_{g}}\) is the complete observed example space based on the unique \(\varvec{g}\) corresponding to the \(\varvec{f}\). Since the paper is mainly focused on the application, here we just give the idea of the proof of the corollary.

Idea of proof: First, the generalization error of \(\varvec{f \in \digamma ^{i}}\) cannot be the minimum if \(\varvec{i \in I_{rn}}\), because a classifier has no way to distinguish the classes of data that follow the same distribution in the different example subspaces. Second, if \(\varvec{i \in I_{else}}\), assume \(\varvec{\exists i}\) for which \(\varvec{f \in \digamma ^{i}}\) reaches the minimum. Let \(\varvec{\bar{i}}\) denote the component that belongs to \(\varvec{I_r}\) and satisfies \(\varvec{\exists i^{rn} \in I_{rn}, \forall X \in \Theta : f_i(X) = f_{\bar{i}}(X) + f_{i^{rn}}(X)}\); Hypothesis 2 ensures the existence of \(\varvec{\bar{i}}\). Then \(\varvec{f_{\bar{i}} \in \digamma ^{\bar{i}}}\) will reach that minimum or a lower generalization error, which means that \(\varvec{i}\) can reach the minimum only together with its \(\varvec{\bar{i}}\). It is also clear that the generalization error of \(\varvec{f_{\bar{i}}}\) will not be less than that of \(\varvec{f_{i^T}}\). Finally, according to Hypothesis 1, if \(\varvec{i \in I_{r}}\), the minimum is obtained when \(\varvec{f \in \digamma ^{i^T}}\) (\(\varvec{f = f_{i^T}}\)).

3.2 EEG effective information estimation framework

From the above deduction, it is assumed that, \(\varvec{\forall T}\), \(\varvec{i^T}\) is the component that gives every trained end-to-end classifier the strongest generalization ability. Therefore, for a specific task and a specific dataset, if we find an EEG processing method that leads an end-to-end classifier to the optimal test result, we consider that method the optimal estimation of \(\varvec{f_{i^T}}\), and the corresponding processed dataset the optimal estimation of the effective information for that task. Based on the above, we propose the Effective Information Estimation Framework (EIEF).

We first make some necessary preparations.

Definition 5

Define a mapping and matrix function

as a processing method of EEG data, where \(\varvec{Z_p}\) is the image of \(\varvec{Z}\) under \(\varvec{p}\) and \(\varvec{P}\) is the function space. Obviously, different processing methods can be composed, and the composition is denoted as \(\varvec{p^*= p_1 \circ p_2}\).
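The composition in Definition 5 can be illustrated with a minimal sketch. The type aliases `Signal` and `Method` and the two toy methods `remove_mean` and `double` are hypothetical stand-ins introduced only for illustration; they are not processing methods used in this paper. The sketch follows the usual convention that in \(p_1 \circ p_2\) the right-most method is applied first.

```python
from functools import reduce
from typing import Callable, List

Signal = List[List[float]]           # channels x samples, a stand-in for the matrix Z
Method = Callable[[Signal], Signal]  # a processing method p in P


def compose(*methods: Method) -> Method:
    """Return p_1 o p_2 o ... o p_k; the right-most method is applied first."""
    def composed(z: Signal) -> Signal:
        return reduce(lambda acc, p: p(acc), reversed(methods), z)
    return composed


# Two hypothetical toy methods, used only to show the order of application.
def remove_mean(z: Signal) -> Signal:
    return [[v - sum(row) / len(row) for v in row] for row in z]


def double(z: Signal) -> Signal:
    return [[2.0 * v for v in row] for row in z]


p_star = compose(double, remove_mean)  # p* = double o remove_mean
result = p_star([[1.0, 2.0, 3.0]])     # mean removal first, then doubling
```

Here `remove_mean` runs before `double`, mirroring how the inner-most method of a composite processing method acts on the raw data first.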

Assuming an algorithm that can be applied to estimating the restoration function for \(\varvec{\forall i}\) (\(\varvec{\forall f}\)) is \(\varvec{a \in A^i}\), there is a true proposition denoted as \(\varvec{\forall i \in I_s: ((\exists ! \Theta ^i) \wedge (\exists ! \digamma ^i \subset \digamma ) \wedge (\exists ! A^i \subset A)\wedge (\exists ! P ^i \subset P))}\), where \(\varvec{A}\) is the set composed of all the algorithms which can be applied to processing EEG signals, \(\varvec{A^i}\) is the set composed of all the algorithms which can be applied to estimating \(\varvec{f_i}\), and \(\varvec{P^i}\) is the function set corresponding to \(\varvec{A^i}\). Next, for \(\varvec{\forall i}\), let \(\varvec{p^i_n(;\theta _n) \in P ^i_n, n \in I^i_a}\) denote a processing method that can be represented by any \(\varvec{a \in A^i_n}\), where \(\varvec{A^i_n}\) is the set containing all the algorithms of a certain algorithm category (such as re-referencing), \(\varvec{I^i_a}\) is the index set such that \(\varvec{\forall n \in I^i_a: ((\exists ! A^i_n \subset A^i) \wedge (\exists !P ^i_n \subset P ^i))}\), \(\varvec{\theta _n \in \mathbb {R}^{1 \times \omega _n}}\) is the parameter vector of the algorithms belonging to \(\varvec{A^i_n}\), where \(\varvec{\omega _n}\) represents the number of parameters, and \(\varvec{P ^i_n}\) is the set of processing methods corresponding to \(\varvec{A^i_n}\) (each \(\varvec{a \in A^i_n}\) has its paired \(\theta _n\)).

For an EEG-based study, suppose the following conditions hold:

1. The study contains a classification task \(\varvec{T \in \Gamma }\).

2. The study has a specific and finite observed sample set \(\varvec{\Theta ^*\subset \Theta }\) and a label multiset \(\varvec{Y^{T*}}\) corresponding to \(\varvec{\Theta ^*}\) (\(\varvec{\forall y \in Y^{T*} : y \in Y^T}\)), which together compose an example set \(\varvec{E^*\subset \Theta \times Y^T}\). The data processing of this study aims to draw \(\varvec{S^{i^T} \in \Theta ^{i^T*}}\) for \(\varvec{\forall X \in \Theta ^*}\).

3. The number of kinds of the selected algorithms (\(\varvec{A^{i^T}_n}\)) and the composition order of the processing methods corresponding to the selected algorithms are clear, which means the composite processing method is denoted as

$$\begin{aligned} \varvec{p(;\theta _p) = p^{i^T}_{n_q}(;\theta _{n_q}) \circ p^{i^T}_{n_{q-1}}(;\theta _{n_{q-1}}) \circ \ldots \circ p^{i^T}_{n_1}(;\theta _{n_{1}})}, \end{aligned}$$ (8)

where \(\varvec{p^{i^T}_{n_m}(;\theta _{n_m})}\) is a processing method that is uniquely represented by an \(\varvec{a}\) with a specific \(\varvec{\theta _{n_m}}\), and \(\varvec{\theta _p = (\theta _{n_{1}}, \theta _{n_{2}}, \ldots , \theta _{n_q}) \in \mathbb {R}^{1 \times \sum \limits ^q_{m = 1} \omega _{n_m}}}\), where \(\varvec{q}\) is the total number of kinds of the selected algorithms.

4. The selected model category \(\varvec{B_j \subset B}\) (and its paired \(\varvec{h_j(;\theta _j)}\)) for classification is clear, and is denoted by \(\varvec{B_{j^*}}\) (\(\varvec{h_{j^*}(;\theta _{j^*})}\)).

5. As far as possible, all kinds of noise (\(\varvec{i \in I_{rn}}\)) have the same distribution across the different example subsets. If not, the distribution difference of any noise must not cause the classifier trained on the dataset consisting of that noise to perform better than the classifier trained on the dataset consisting of \(\varvec{S^{i^T}}\). An example subset is denoted by \(\varvec{E^*_\nu }\) (\(\varvec{\nu \in \{1,2,\ldots ,cl\}}\) and \(\varvec{(\forall \nu , \forall (X_1,y_1), (X_2,y_2) \in E^*_\nu ) : y_1 = y_2}\)).

6. The testset \(\varvec{E^*_{test}}\) and the trainset \(\varvec{E^*_{train}}\) are split based on the SI strategy.

Then an objective function could be proposed to estimate \(\varvec{f_{i^T}}\):

Note that \(\varvec{\mathscr {M}}\) is the metric function, which is determined by the metric we emphasize, and the selected metric must relate to the loss (like accuracy, recall, etc.). For example, if the selected metric function is the loss function, its value is calculated with the formula in the constraint (s.t.) above, but on the testset. Besides, the constraint is designed to simulate the training process. \(\varvec{\delta }\) reflects the training termination condition. \(\varvec{\theta ^*_{j^*}}\) denotes the optimal parameter vector of \(\varvec{h_{j^*}(;\theta _{j^*})}\), and \(\varvec{\theta _{\hat{f}_{i^T}}}\) is the optimum of \(\varvec{p(;\theta _p)}\). Let \(\varvec{\hat{f}_{i^T}}\) represent the optimal estimation of \(\varvec{f_{i^T}}\); then, through (9), it is obvious that \(\varvec{\hat{f}_{i^T} = p(;\theta _{\hat{f}_{i^T}})}\).

So, (9) is the objective function of EIEF, and its preconditions are the preconditions of EIEF. Note that the first 5 conditions are either obvious or based on the statements of the last subsection; only condition 6 has not been mentioned before, and it will be explained below.

In our paper, the solution method of EIEF (the solution method of (9)) is the following: at a chosen resolution ratio, we perform grid processing on the domain of \(\varvec{p(;\theta _p)}\). In each grid, the problem degenerates into a classifier training problem, which can be solved by the standard model training process and provides a best metric function value. By comparing the best metric function values among different grids, the best grid corresponding to \(\varvec{\hat{f}_{i^T}}\) is easily found. Note that, from Corollary 1, we can demonstrate that under ideal conditions the optimal processing method found through all DNNs will remain consistent. However, in real experiments, achieving ideal conditions is often challenging due to various constraints, which will be discussed in the analysis of the experimental results.

Accordingly, the remainder of this chapter describes the research preconditions demanded by EIEF: the datasets, the denoising approaches considered, the composition order in which the approaches form the paradigms, and the classification model.
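The grid-based solution described above can be sketched as a simple selection loop. This is only a minimal illustration, not the authors' implementation: the function name `select_paradigm`, the `evaluate` callback, and the toy scores are all hypothetical. In practice `evaluate` would process the data with the given paradigm, train the classifier under the SI split, and return the chosen test metric (here assumed to be one where higher is better, such as accuracy).

```python
from typing import Callable, Dict, Hashable, Tuple


def select_paradigm(
    paradigms: Dict[Hashable, Callable],
    evaluate: Callable[[Hashable, Callable], float],
) -> Tuple[Hashable, float]:
    """Return the grid (paradigm code) with the best test metric and that metric."""
    scores = {code: evaluate(code, p) for code, p in paradigms.items()}
    best_code = max(scores, key=scores.get)
    return best_code, scores[best_code]


# Toy stand-ins: identity "paradigms" and made-up metric values for illustration.
toy_scores = {1: 0.70, 2: 0.85, 3: 0.80}
toy_paradigms = {code: (lambda z: z) for code in toy_scores}

best_code, best_score = select_paradigm(
    toy_paradigms, lambda code, p: toy_scores[code]
)
```

Each call to `evaluate` corresponds to solving the degenerate classifier training problem inside one grid; the outer `max` is the comparison among grids.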

3.3 Datasets

3.3.1 Dataset 1

The raw dataset [39] used in this experiment comprises 14 patients with paranoid SZ (7 males and 7 females), who were recruited from the Institute of Psychiatry and Neurology in Warsaw, Poland. Healthy subjects matched in age and sex ratio were recruited from the same institute. Each participant provided informed consent to participate in the study upon receiving the study protocol. The participants remained relaxed with their eyes closed during EEG collection, and the signals were recorded for 15 minutes at a sampling rate of 250 Hz. Data were collected via the standard International 10-20 System to obtain 19 channels. The electrodes used were Fp1, Fp2, F7, F3, Fz, F4, F8, T3, C3, Cz, C4, T4, T5, P3, Pz, P4, T6, O1, O2.

3.3.2 Dataset 2

To demonstrate that the proposed method has good generality on both ample and small datasets, it is insufficient to validate using only one dataset. So, the second dataset selected should ideally encompass a larger number of individuals [40].

The dataset comprising 45 SZ individuals and 39 healthy individuals was collected and established by Moscow University [41]. The Mental Health Research Center (MHRC) confirmed the diagnoses of all patients, which involved 45 boys with schizophrenic disorders (infant schizophrenia and schizotypical and schizoaffective disorders (F20, F21, and F25 according to the ICD-10)) with similar symptoms. During the examination at the MHRC, none of the enrolled patients received chemotherapy. The patients’ ages varied from 10 years and 8 months to 14 years. The control group consisted of 39 healthy schoolboys aged from 11 years to 13 years and 9 months, with a mean age of 12 years and 3 months in both groups.

EEG recordings were obtained from 16 electrodes placed according to the international 10-20 system at O1, O2, P3, P4, Pz, T5, T6, C3, C4, Cz, T3, T4, F3, F4, F7, and F8, and monopolarly referenced to coupled ear electrodes, in wakeful relaxed adolescents with closed eyes. The sampling rate is 128 Hz, and the time of one trial is 1 minute.

3.4 Denoising paradigms

A total of 66 paradigms, categorized into five denoising approach categories, which can be considered as 66 grids to deal with the training problem, are implemented on the raw data. This section will provide a detailed specification of these paradigms.

The first category which is also the initial step in the EEG processing pipeline is the bad channels process. In this step, three approaches are available: bad channel retention, bad channel interpolation, and bad channel removal. This approach category is founded on a bad channel detection algorithm, specifically, an iterative detection method proposed by [13]. The removal involves replacing the columns of observed samples identified as bad channels with zero vectors, while the interpolation refers to spherical interpolation. Note that the three choices reflect considerations regarding the resolution ratio mentioned in the previous chapter.

The second procedure is the re-reference process, which is still based on the methodology outlined in [13]. Two options are presented here: performing robust re-referencing or retaining the original reference. Note that performing robust re-reference is available only when bad channel retention hasn’t been carried out, because the re-reference is dependent on the preceding bad channel interpolation step.

Next, we move on to the third procedure: the filtering process. By default, line noise removal (LNR) is carried out using the EEGLAB plugin CleanLine in MATLAB [42, Chapter 7.3.4]. This choice is made because CleanLine is a robust plugin that minimizes the risk of losing genuine EEG signals. Moreover, there are two further options in this procedure: LNR + 1 Hz high-pass filter, and LNR + 1-50 Hz band-pass filter. These filters are FIR filters constructed by the EEGLAB tool "basic FIR filter", with the default parameters (but with bad channel removal unchecked).

The following step is the bad epoch process. Depending on the choice made, certain blocks of observed samples may be replaced by zero blocks (if bad epoch removal is selected), or these blocks will remain unchanged (if bad epoch retention is selected). The bad epoch (block) detection is implemented using the EEGLAB plugin-clean raw data, which is based on Artifact Subspace Reconstruction [43]. The plugin is used with its default settings.

In the final category, we address the decomposition process, presenting three distinct options. If ICA with artifactual components removal is selected, fast ICA will be performed on the dataset that has previously undergone processing through the four procedures [44, 45]. Then, with the assistance of the EEGLAB plugin "classify components", the components identified as non-brain will be systematically removed. Alternatively, if MSPCA with Kaiser-rule-based principal components removal (level 5, wavelet “sym4”) is selected, the dataset will undergo Discrete Wavelet Transform (DWT) and principal component analysis (PCA) for decomposition, and the principal components with eigenvalues lower than the average eigenvalue will be removed [22]. Note that, in order to reduce the computational complexity, this option is only available when the reference retention and the bad channel retention are selected in advance. Finally, if retention is selected, no data will be changed in this procedure.
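The Kaiser-rule selection mentioned above (removing principal components whose eigenvalues are lower than the average eigenvalue) can be illustrated with a minimal sketch. The function name `kaiser_keep` and the toy eigenvalues are hypothetical; this shows only the selection rule, not the full multiscale PCA procedure or the MATLAB routine used in the experiments.

```python
from typing import List


def kaiser_keep(eigenvalues: List[float]) -> List[int]:
    """Indices of components kept: eigenvalue not lower than the mean eigenvalue."""
    mean_ev = sum(eigenvalues) / len(eigenvalues)
    return [i for i, ev in enumerate(eigenvalues) if ev >= mean_ev]


# Toy eigenvalues for illustration only; their mean is 2.0.
kept = kaiser_keep([4.0, 2.0, 1.0, 1.0])
```

With these toy values the last two components fall below the mean and are removed, while the first two are retained.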

After the specification, let’s provide a straightforward rationale for the selection and arrangement of the preprocessing steps.

Generally, the preprocess of raw EEG can be broken down into several essential procedures, including filtering, re-referencing, resampling, processing bad channels, removing bad data epochs, performing ICA with bad artifactual components removal, segmentation, baseline correction, etc. The order of these procedures can vary depending on the specific analysis.

Because baseline correction is used specifically for analyzing event-related potentials (ERPs), it is not involved in our study. Meanwhile, our segmentation is unrelated to ERPs; instead, it serves as a means to prepare EEG signals for input into the neural network. Besides, to avoid sample size reduction, resampling is not considered.

Concerning the remaining five procedures, our choices are well-grounded and have been recommended or introduced by the EEGLAB documentation. Our re-reference and bad channel processes both follow [13], which emphasizes that its pipeline represents an early-stage operation and requires the bad channel interpolation to be performed first to ensure the robustness of the average re-reference. The filter we implement is a standard FIR filter (the LNR has been introduced above). Here, the high-pass filter is configured with a cut-off frequency of 1 Hz to effectively remove linear trends, while the 50 Hz cut-off frequency is set to eliminate high-frequency noise, such as muscle artifacts, while retaining the primary EEG frequency bands (\(\alpha \), \(\beta \), \(\theta \), and \(\delta \) waves). The choice to place the filtering operation third in the sequence is intended to reduce computational demands for subsequent steps. Next, it is desirable to perform the bad epoch process if a considerable amount of data suffers from high-amplitude noise, and ASR is used since it is a mature plugin. Lastly, fast ICA is a common and effective method for addressing various types of EEG noise at little computational cost. Regarding MSPCA, compared to fast ICA, it offers faster signal processing, and denoising based on MSPCA does not require the signal source classification performed by the neural network. However, this method’s operational process may be less straightforward than that of the ICA-based method. Because the two methods are general-purpose, we place them as the final step so that the specific noises are left to the corresponding specialized tools.

3.5 Model inside EIEF–1D-CNN

Although Corollary 1 indicates that every end-to-end classifier is qualified to seek out the optimal denoising paradigm, many classifiers are still not reliable for this purpose. Some classifiers may lack sensitivity to noise variations, making the task of distinguishing the optimal paradigm challenging. Others might require excessive training time, potentially compromising the practicality of the EIEF. To avoid these obstacles, the 1D-CNN proposed by [18] is selected as the classifier inside EIEF. This selection is based on several key advantages, including the network’s compact size and minimal trainable parameters. These characteristics endow the 1D-CNN with heightened sensitivity while keeping training times relatively short, ensuring both the reliability and practicality of the EIEF. Table 2 details the layers of the Net. For dataset 1, single trials recorded EEG signals for 15 minutes at a rate of 250 Hz, while for dataset 2, single trials recorded EEG signals for 1 minute at a rate of 128 Hz. To balance the sample sizes, all 67 datasets corresponding to dataset 1 are divided into irrelevant epochs with the size of \(\varvec{19 \times 6250}\) each, whereas the datasets corresponding to dataset 2 are divided into irrelevant epochs with the size of \(\varvec{19 \times 512}\) each. Since dataset 2 has three fewer channels than dataset 1, the remaining \(\varvec{3 \times 512}\) entries in each dataset 2 epoch are padded with zeros. Consequently, the processed datasets for dataset 1 comprise roughly 1131 samples each, while those for dataset 2 comprise roughly 1260 samples each (the quantity may fluctuate due to the bad epoch removal). This approach ensures that dataset 2’s segmented samples are not disproportionately fewer.
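The segmentation arithmetic above can be checked with a short sketch. It assumes that the epochs are non-overlapping and that incomplete trailing segments are discarded, which the text does not state explicitly; the helper names `epochs_per_recording` and `pad_channels` are hypothetical.

```python
from typing import List


def epochs_per_recording(duration_s: float, fs: int, epoch_len: int) -> int:
    """Number of whole, non-overlapping epochs in one recording (assumption)."""
    return int(duration_s * fs) // epoch_len


def pad_channels(epoch: List[List[float]], n_channels: int) -> List[List[float]]:
    """Append all-zero channels until the epoch has n_channels rows."""
    n_samples = len(epoch[0])
    missing = n_channels - len(epoch)
    return epoch + [[0.0] * n_samples for _ in range(missing)]


# Dataset 2: 45 + 39 = 84 subjects, 1-minute trials at 128 Hz, 512-sample epochs.
per_subject_ds2 = epochs_per_recording(60, 128, 512)  # 7680 // 512
total_ds2 = 84 * per_subject_ds2

# Dataset 1: a 6250-sample epoch at 250 Hz spans 25 seconds.
epoch_seconds_ds1 = 6250 / 250

# A 16-channel dataset 2 epoch padded to the 19-channel input size.
padded = pad_channels([[1.0] * 512 for _ in range(16)], 19)
```

Under these assumptions dataset 2 yields 15 epochs per subject, which agrees with the roughly 1260 samples quoted above.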

3.6 Model for diagnosis system–3D-CNN

The properties of the 1D-CNN make it a reasonable choice for EIEF, but they also cause low stability and generalization. Therefore, when pursuing the first objective outlined in the introduction, namely constructing an automatic SZ diagnosis system, we should select a larger-scale DNN if we intend to apply the framework’s result to the construction, so that the system performs better. Meanwhile, Corollary 1 tells us that the optimal dataset determined using the 1D-CNN also holds for other end-to-end classifiers.

Here, that end-to-end classifier is the 3D-CNN proposed by [19], which operates on three-dimensional input data. Its input combines a two-dimensional spatial topological structure with the temporal dimension. In the initial few layers, three-dimensional convolution is used to simultaneously extract spatio-temporal features. Subsequent layers involve spatial fusion to amalgamate high-level spatial features, with only temporal features being output. The following convolutional layers primarily focus on central time feature extraction, followed by intensive prediction embedding. Finally, a softmax layer is employed to produce classification results.

Table 3 details the layers of the Net. Notably, slight adjustments to the Net structure are necessary due to differences in channel numbers between our dataset and the dataset referenced in [19], as well as disparities in the classification tasks. The changed input format of the Net is illustrated in Fig. 3. Besides, we alter the channel numbers of the Net to (20,30,40,50,60) as a hyper-parameter adjustment, and keep the time segmentation unchanged, which means the data in the 2 (origin datasets) \(\times \) 67 datasets will be segmented into irrelevant epochs with the size of \(\varvec{7 \times 5 \times 512}\) before being input. Note that the raw EEG of any subject corresponds to an \(\varvec{X}\), and any data segment after the segmentation corresponds to a \(\varvec{\bar{Z}}\). Besides, for dataset 2 in particular, the non-existent channels in Fig. 3 will be padded with zeros.

4 Experiment and verification

4.1 Details of experiments and hyper-parameters adjustment

To begin this chapter, it is essential to provide clarity on the implementation procedures and the specifics of the upcoming experiments.

First, a unit experiment is defined as follows: based on K-fold cross-validation, a series of Nets are trained on K trainsets from a dataset processed by a particular paradigm, then tested on K testsets from the same dataset. If a unit experiment is based on a subject-independent dataset split, it is referred to as an SI unit experiment (SIUE); otherwise, it is a subject-dependent unit experiment (SDUE). For datasets originating from dataset 1, which comprises 14 HCs and 14 SZs, each SIUE implements 14-fold cross-validation, in which the k-th fold takes the samples from the k-th healthy control and the k-th schizophrenia patient as the testset, and the samples from the rest of the subjects as the trainset. For datasets originating from dataset 2, each SIUE implements 10-fold cross-validation, and the split groups are described in Table 4. In an SDUE, regardless of which dataset it is based on, 10-fold random cross-validation is adopted.

Next, it is crucial to select the hyperparameters for the CNN structures and the training strategies for the two CNNs. Notably, the selection of hyperparameters in this paper differs from that in typical papers, where the goal is to achieve the best classification performance. Here, the purpose of the selection is to highlight the influences of denoising approaches on the classification results. Hence, if the hyperparameters lead to low fitting capability, low stability across training runs, or a slow learning speed on any of the datasets, they will significantly constrain the aforementioned influences, indicating an incorrect selection. Three hyperparameters require adjustment: the CNN’s channel number, the learning rate, and the learning rate scheduler (the others are specified directly). We only roughly describe the adjustment strategy, which is not the key point of the paper: to carry out a grid search, some alternative values of the three parameters were listed first. Then, using these values, a series of SIUEs were executed. After assessing the CNNs trained in the SIUEs on the aforementioned three aspects, the optimal values for the parameters were determined.

The results of the adjustment are listed here. The hyperparameters for the 1D-CNN are the same among all unit experiments: the LR is 0.001, the batch size is 128, the maximum number of training epochs is 150, the loss is cross-entropy, the Adam optimizer is applied, the LR scheduler is ReduceLROnPlateau for all epochs, and the channel numbers of the Net stay original.

The hyperparameters for the 3D-CNN are also the same among all unit experiments: the LR is 0.006, the batch size is 128, the maximum number of training epochs is 150, the loss is cross-entropy, the Adam optimizer is applied, the LR scheduler is warm-up in the first 20 epochs and ReduceLROnPlateau in the remaining epochs, and the channel numbers of the Net are (20,30,40,50,60).
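The subject-independent split for dataset 1 can be sketched as follows. This is a minimal illustration of the fold construction described above, in which the k-th fold holds out the k-th healthy control and the k-th patient; the function name `si_folds` and the subject labels are hypothetical.

```python
from typing import Iterator, List, Tuple


def si_folds(
    hc_ids: List[str], sz_ids: List[str]
) -> Iterator[Tuple[List[str], List[str]]]:
    """Yield (train_subjects, test_subjects) with the k-th HC and k-th SZ held out."""
    if len(hc_ids) != len(sz_ids):
        raise ValueError("this pairing scheme needs equally sized groups")
    everyone = list(hc_ids) + list(sz_ids)
    for hc, sz in zip(hc_ids, sz_ids):
        test = [hc, sz]
        train = [s for s in everyone if s not in test]
        yield train, test


# Hypothetical subject labels for the 14 HCs and 14 SZs of dataset 1.
hc = [f"HC-{k}" for k in range(1, 15)]
sz = [f"SZ-{k}" for k in range(1, 15)]
folds = list(si_folds(hc, sz))
```

Because the split is made over subjects rather than over segments, no segment of a test subject can appear in the corresponding trainset.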

4.2 Platforms and software

The following Table 5 lists all the software packages used, along with their versions and purposes (excluding common ones like NumPy). Note that fast ICA can be directly executed by EEGLAB, and MSPCA with signal denoising can be executed by MATLAB function “wmspca”.

As for hardware, all code written in MATLAB is run on a PC with an i7-6800k (3.4GHz) and 16GB (3000MHz) RAM, and all code written in Python is run on a server with an E5-2698 (2.2GHz), 503GB RAM, and Tesla V100-SXM2-32GB \(\times \) 8.

4.3 Implementation of EIEF

This section focuses on SIUEs taking the 1D-CNN as the classifier. To ensure consistency and minimize unforeseen variations, each dataset is subjected to 8 parallel SIUEs, resulting in a total of 2\(\times \)67\(\times \)8 SIUEs within this section.

To effectively visualize the impact of different denoising paradigms, the 66 paradigms are represented by unique integer codes ranging from 1 to 66. The encoding rules are as follows. Code 1 signifies the paradigm that sequentially employs robust re-referencing, bad channel removal, line noise removal, bad epoch retention, and decomposition retention. Each time the code increases by 1, the approach within one of the denoising approach categories changes. The change priority of the approach categories is: the filter first, the bad epoch second, then the decomposition, the re-reference, and the bad channel. The bad channel changes from removal to interpolation to retention. The re-reference changes from robust re-referencing to retention. The filter changes from line noise removal (LNR) to LNR + 1 Hz high-pass filter to LNR + 1-50 Hz band-pass filter. The bad epoch changes from retention to removal, and the decomposition changes from retention to ICA to MSPCA. Following this encoding scheme, the impacts of different paradigms are visually represented using line graphs. Unless otherwise specified, in the following sections, all mentioned metrics for evaluating each paradigm are calculated on the respective testsets and averaged across the relevant parallel SIUEs.
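The paradigm pool and its integer codes can be sketched by enumerating the five approach categories under the two availability constraints of Section 3.4. This is a hedged illustration: the option labels are shorthand, and the way unavailable combinations are skipped in the numbering is an assumption, so the codes produced here need not coincide with the paper's codes (for example, those listed in Table 6).

```python
BAD_CHANNEL = ["removal", "interpolation", "retention"]
REREFERENCE = ["robust", "retention"]
DECOMPOSITION = ["retention", "ICA", "MSPCA"]
BAD_EPOCH = ["retention", "removal"]
FILTER = ["LNR", "LNR+1Hz high-pass", "LNR+1-50Hz band-pass"]


def is_valid(bad_channel: str, reref: str, decomposition: str) -> bool:
    # Robust re-referencing is unavailable when bad channels are retained.
    if reref == "robust" and bad_channel == "retention":
        return False
    # MSPCA is offered only with reference retention and bad channel retention.
    if decomposition == "MSPCA" and (
        reref != "retention" or bad_channel != "retention"
    ):
        return False
    return True


def enumerate_paradigms() -> dict:
    """Map codes to paradigms; the filter varies fastest, the bad channel slowest."""
    codes = {}
    for bc in BAD_CHANNEL:
        for rr in REREFERENCE:
            for dec in DECOMPOSITION:
                for be in BAD_EPOCH:
                    for flt in FILTER:
                        if is_valid(bc, rr, dec):
                            codes[len(codes) + 1] = (bc, rr, flt, be, dec)
    return codes


paradigms = enumerate_paradigms()
```

The enumeration gives 4 x 12 + 18 = 66 paradigms: four bad channel and re-reference combinations without MSPCA (3 filters x 2 bad epoch options x 2 decompositions each), plus the retention and retention combination with all three decompositions.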

As illustrated in Fig. 4, the influence of denoising approaches on diagnostic mean-accuracies (mean accuracy across parallel SIUEs, across cross-validation folds, and across epochs 101-150) is shown by the two lines marked “Para”. For dataset 1, the maximum accuracy difference among paradigms is up to 24.83\(\%\) and the STD of the 66 accuracies is 5.99\(\%\). For dataset 2, the two metrics are 13.63\(\%\) and 3.55\(\%\). This clearly demonstrates that denoising approaches exert a substantial influence on classification outcomes, affirming the experimental feasibility of EIEF.

Compared with the processed datasets, the two baselines (marked “origin”) show a relatively high level of performance. Only 17 paradigms (dataset 1) and 18 paradigms (dataset 2) yield higher accuracies than the baselines, with the highest achieving increases of 6.39\(\%\) (dataset 1) and 3.95\(\%\) (dataset 2), while the lowest record decreases of 18.44\(\%\) (dataset 1) and 9.67\(\%\) (dataset 2). Admittedly, such a result has been influenced by the selection of the paradigm pool. Yet, if the selection is representative to some extent, we can infer that an inadequate denoising paradigm, which is easy to choose, will severely harm the EEG effective information for SZ classification. Besides, the harm exerts a more pronounced negative influence on the diagnosis performance than the positive influence caused by an excellent paradigm.

Besides mean-accuracy, the influences of denoising approaches on the classification’s mean-loss, mean-recall, mean-precision, and mean-specificity are illustrated in Fig. 5. Similar inferences to the above can be drawn from a thorough inspection of the 8 figures. Except for the mean-recalls of dataset 1 and the mean-specificities of dataset 2, the harms from poor paradigms are more significant than the gains from excellent paradigms. Calculating the Pearson correlation coefficient (CC) between each of the two mean-loss lines and each of the other metric lines separately, we can assert that the paradigms’ influences on these five metrics are fundamentally similar, as evidenced by CC values of -0.89, -0.87, -0.40, and -0.85 (dataset 1) and of -0.73, -0.86, -0.65, and -0.29 (dataset 2), respectively.

After this discussion, let us return to the core of EIEF’s functionality. According to (9), the optimal estimation depends on the selection of the metric function. Although five function candidates are plausible, loss is not the most intuitive, and recall, precision, and specificity are not unequivocally guided by loss. Therefore, we have opted for accuracy as the metric function. Consequently, for dataset 1, the paradigm that yields the highest accuracy is considered the optimal estimation of the restoration function of effective information, and it is paradigm 65. For dataset 2, the optimal estimation is paradigm 13. Table 6 details the denoising approaches inside the two optimal paradigms.

Fig. 5 caption: (1) mean-loss versus denoising paradigm; (2) mean-recall versus denoising paradigm; (3) mean-precision versus denoising paradigm; (4) mean-specificity versus denoising paradigm. (The meaning of the legend is the same as that in Fig. 4)
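The correlation analysis above relies on the standard Pearson coefficient between two metric curves indexed by paradigm code. A minimal sketch is given below; the function name `pearson` is hypothetical and the two short curves are toy values chosen only for illustration, not experimental results.

```python
import math
from typing import Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equally long curves."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    norm_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    norm_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (norm_x * norm_y)


# Toy curves over four paradigms: accuracy falls linearly as loss rises.
toy_loss = [0.9, 0.7, 0.5, 0.6]
toy_accuracy = [0.60, 0.70, 0.80, 0.75]
r = pearson(toy_loss, toy_accuracy)
```

A strongly negative coefficient, as in this toy case, means that paradigms which lower the loss tend to raise the other metric.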

4.4 Construction of SZ diagnosis system

This section primarily focuses on the development of an SZ diagnosis system characterized by high stability and generalization. The key objectives here involve inputting the two datasets processed by the two optimal paradigms into the 3D-CNN for training, resulting in the establishment of an SZ Diagnosis system based on these training iterations. Furthermore, a comparative analysis is conducted between this system and the system trained using the original datasets. Additionally, our results on two datasets are compared with state-of-the-art (SOTA) results obtained by other methods that also utilized the same raw datasets. Both SIUEs and SDUEs will be implemented.

Table 7 lists the best performances obtained through multiple training runs of the network on two original datasets and their respective optimal datasets, categorized into SIUE and SDUE. First, let us delineate the two primary applications of the diagnostic system. Suppose clinicians aim to classify individuals visiting the hospital for the first time, who are potential patients. In this scenario, they should input pre-collected EEG data from both patients and healthy subjects into the optimal paradigm determined by EIEF. Subsequently, this processed and segmented data should be employed to pre-train a 3D-CNN. After collecting EEG data from a new potential patient and applying the same denoising paradigm, the pre-trained CNN can provide clinicians with an estimate of the likelihood that the individual has SZ, as long as they divide the segment number with positive outputs by the total number. According to the 2 lines of “Op + SIUE”, the diagnosis system based on dataset 1 recognizes an SZ patient with the possibility of 92.29\(\%\), and a healthy man with the possibility of 86.37\(\%\). And for the system based on dataset 2, the 2 indicators are 83.63\(\%\) and 91.67\(\%\). Otherwise, Suppose clinicians expect to monitor one patient’s or potential patient’s progress, they simply need to ensure that the pre-collected EEG data of that person exists in the dataset inputted into the EIEF. And the subsequent procedures remain consistent with the prior usage. With the 99.94\(\%\) accuracy on dataset 1 and the 98.02\(\%\) accuracy on dataset 2, patient’s progress could be traced precisely. Note that since the dataset accumulates EEG data from more subjects over time, all subjects’ EEG data must be collected under the same conditions, which avoids the situation where the optimal paradigm may not be suitable for certain subjects.
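The subject-level estimate described above (the number of segments with positive outputs divided by the total number of segments) can be written as a short sketch. The function name `sz_likelihood`, the encoding of a positive output as 1, and the example predictions are hypothetical.

```python
from typing import Sequence


def sz_likelihood(segment_predictions: Sequence[int]) -> float:
    """Fraction of a subject's segments that the classifier labels as SZ (1)."""
    if not segment_predictions:
        raise ValueError("at least one segment is required")
    positives = sum(1 for p in segment_predictions if p == 1)
    return positives / len(segment_predictions)


# Toy per-segment outputs for one new subject: 8 of 10 segments are positive.
toy_predictions = [1, 1, 0, 1, 1, 1, 0, 1, 1, 1]
likelihood = sz_likelihood(toy_predictions)
```

The resulting fraction is what the clinician would read as the estimated likelihood for that subject.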

After that, let’s focus on the performance changes before and after implementing the optimal paradigm. Whether trained in SIUEs or SDUEs, the classifiers trained on the optimal datasets both achieve better accuracies. Let’s focus on dataset 1 first. In the SIUEs, it can be observed that the optimal denoising paradigm led to an overall accuracy improvement of 0.94\(\%\). Additionally, precision and specificity increase by 3.93\(\%\) and 6.61\(\%\), respectively, while recall decrease by 3.20\(\%\). This indicates that the system, while slightly sacrificing the possibility of correctly identifying patients, better reduces the likelihood of misclassifying healthy individuals as patients. In the SDUEs, the diagnostic system’s capability is significantly enhanced to a level that is almost impossible to surpass. Now turning to Dataset 2, it is noteworthy that the gains achieved by EIEF on Dataset 2 are more remarkable, as reflected in the 5.07\(\%\) (SI) and 5.16\(\%\) (SD) accuracy improvements. Regardless of the Split way, the gains obtained by the system are mainly manifested in a significant increase in correctly identifying patients (recall), albeit at the cost of slightly increasing the probability of misclassifying healthy individuals. These findings collectively underscore the positive impact of EIEF on classifier performance.

Last, the comparison between our work and the other popular methods comes. The methods and results are in Tables 8 and 9. In the comparison with works conducted on dataset 1, our results are competitive in all SD researches. In SI researches, since the experimental results of Lillo et al. were obtained by excluding the subject HC-12, our results still stand out compared to all other works. In the comparison to works conducted on dataset 2, our results can be considered excellent in all SD researches, as we are only slightly behind the best-performing research by 1\(\%\). In SI researches, the work of Shen et al. stands out as exceptionally outstanding, but our results are not too far behind.

Figure caption: (1) mean accuracy versus denoising paradigm; (2) mean loss versus denoising paradigm; (3) mean recall versus denoising paradigm; (4) mean precision versus denoising paradigm; (5) mean specificity versus denoising paradigm. SI: performance change line based on SIUEs; SD: performance change line based on SDUEs. The remaining legends are the same as those in Fig. 4.

4.5 Verification for EIEF mechanism

We validate the mechanism of EIEF in two ways, both based on replacing the 1D-CNN with the 3D-CNN and running another grid search. The first uses SI dataset splitting before training, to confirm that the optimal denoising paradigms identified through different DNNs are similar. The second uses SD splitting and is aimed at justifying the SI-splitting condition within EIEF (Condition 6).

As before, to reduce the influence of chance, 4 parallel unit experiments are run for each dataset and each split method, so this section involves 67 \(\times \) 4 \(\times \) 2 SIUEs and 67 \(\times \) 4 \(\times \) 2 SDUEs. Note that the following 5 metrics are all measured on the test sets and averaged across parallel unit experiments, cross-validation folds, and epochs 101-150, as in Section 4.3.

The lines marked “SI” in Fig. 6 show the influence of the denoising approaches on diagnostic performance. They confirm that denoising still has a very large influence. The optima found by the two networks do differ, because each is only an optimal estimate of the effective information rather than the information itself. The difference is therefore caused by the difference between the classifiers and should not be attributed to hypothesis 1. From Table 10, both the 1D-CNN and the 3D-CNN, when used in the grid search, yield optimal denoising paradigms that improve classifier performance. The one exception is dataset 2, where the optimal paradigm obtained with the 3D-CNN does not improve the performance of the 1D-CNN classifier, although it does not degrade it either. This suggests that EIEF is robust: it consistently identifies denoising paradigms that increase the proportion of effective information, and this increase does not depend on the network used by the diagnostic system.

For the second verification, a statistical analysis of the disparity between the “SD” and “SI” lines yields Table 11, which shows that SD partitioning gives higher average performance with minimal fluctuation. These indicators lead us to conclude that an advanced CNN (such as our 3D-CNN) can memorize each subject’s EEG pattern and reach high test performance in the SD evaluation regardless of the denoising approach applied. This characteristic runs counter to the core objective of EIEF, which is to identify the optimal metric value. The dataset partitioning required by EIEF should therefore be the SI split.
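The difference between the two partitioning schemes can be illustrated with a minimal sketch. It assumes that each EEG segment is tagged with its subject identifier; the function names, the toy data, and the single hold-out split are hypothetical and do not reproduce our cross-validation procedure.

```python
import random


def sd_split(segments, test_fraction, seed=0):
    """Subject-dependent split: shuffle all segments, ignoring subject identity."""
    rng = random.Random(seed)
    shuffled = segments[:]
    rng.shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


def si_split(segments, test_subjects):
    """Subject-independent split: hold out every segment of the test subjects."""
    held_out = set(test_subjects)
    train = [s for s in segments if s[0] not in held_out]
    test = [s for s in segments if s[0] in held_out]
    return train, test


def shared_subjects(train, test):
    """Subjects that contribute segments to both the training and the test set."""
    return {s[0] for s in train} & {s[0] for s in test}


# Hypothetical toy data: (subject_id, segment_index), 4 subjects x 10 segments.
segments = [(subj, k) for subj in ("s1", "s2", "s3", "s4") for k in range(10)]

sd_train, sd_test = sd_split(segments, test_fraction=0.2)
si_train, si_test = si_split(segments, test_subjects=["s4"])

print("SD shared subjects:", sorted(shared_subjects(sd_train, sd_test)))
print("SI shared subjects:", sorted(shared_subjects(si_train, si_test)))
```

Under the SD split, every test subject also appears in the training set, so a sufficiently large network can rely on subject-specific patterns; under the SI split, no subject is shared.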

So far, the grid searches based on the 1D-CNN with SI split, the 3D-CNN with SD split, and the 3D-CNN with SI split have all been completed. We now conduct a more detailed robustness analysis. For each of the three grid searches, we use T-tests to analyze how changing the approach selected within each denoising approach category affects network performance. We then compare the results between the 1D-CNN and the 3D-CNN, and between the 3D-CNN with SI and SD splits, to assess whether changing the network or the data split method alters the impact of the denoising approaches on performance. For the two SI-based grid searches, the data split is fixed and non-random, so paired T-tests are used. For the SD-based grid search, the data are randomly shuffled and grouped, so two-sample T-tests are used. In each test, one metric value (such as the accuracy corresponding to paradigm 43) is the average across multiple parallel unit experiments.
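The distinction between the two tests can be sketched as follows, using only the Python standard library. The sketch computes the test statistics and degrees of freedom; p-values would then be read from the Student t distribution (for example with `scipy.stats.ttest_rel` and `scipy.stats.ttest_ind`). The pooled-variance form of the two-sample test and the sample values are assumptions made for illustration.

```python
import math
import statistics


def paired_t(a, b):
    """Paired t statistic and degrees of freedom for matched samples a and b."""
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    t = statistics.mean(diffs) / (statistics.stdev(diffs) / math.sqrt(n))
    return t, n - 1


def two_sample_t(a, b):
    """Pooled-variance two-sample t statistic and degrees of freedom."""
    na, nb = len(a), len(b)
    pooled_var = (
        (na - 1) * statistics.variance(a) + (nb - 1) * statistics.variance(b)
    ) / (na + nb - 2)
    se = math.sqrt(pooled_var * (1 / na + 1 / nb))
    return (statistics.mean(a) - statistics.mean(b)) / se, na + nb - 2


# Hypothetical mean accuracies of paradigms that differ in one approach choice.
with_approach_a = [0.80, 0.82, 0.85, 0.79]
with_approach_b = [0.78, 0.81, 0.82, 0.78]

print("paired:", paired_t(with_approach_a, with_approach_b))
print("two-sample:", two_sample_t(with_approach_a, with_approach_b))
```

The paired form uses the differences between matched observations, which is appropriate when the split is fixed, whereas the two-sample form treats the two groups as independent.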

Consider Table 12 first. For dataset 1, 9 pairs of means (20.45\(\%\)) differ in sign. Among the 13 pairs of means with p-values below 5\(\%\) for both networks, only 1 pair (7.69\(\%\)) differs in sign. When only accuracies are considered, no pair of means differs in sign. For dataset 2, 16 pairs of means (36.36\(\%\)) differ in sign. When p-values are considered, 5 out of 15 pairs (33.33\(\%\)) differ, and when only accuracies are considered, 3 out of 11 pairs (27.27\(\%\)) differ. These data suggest that, of the 44 effects resulting from 11 types of change in the denoising approaches, most keep the same direction regardless of the network used inside EIEF. Now consider Table 13. For dataset 1, 15 pairs of means (34\(\%\)) differ in sign. When p-values are considered, 4 out of 21 pairs (19.05\(\%\)) differ, and when only accuracies are considered, 3 out of 11 pairs (27.27\(\%\)) differ. For dataset 2, 5 pairs of means (11.36\(\%\)) differ in sign. When p-values are considered, 2 out of 23 pairs (8.70\(\%\)) differ, and when only accuracies are considered, 2 out of 11 pairs (18.18\(\%\)) differ. The analysis suggests that the direction of most effects does not change with the data split strategy used during network training.
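The counting behind these percentages can be sketched as below. The function name, the effect values, and the p-values are hypothetical; the sketch only illustrates how pairs of mean effects from two grid searches are compared in sign, optionally keeping only the pairs that are significant in both searches.

```python
def sign_disagreement(effects_a, effects_b, p_a=None, p_b=None, alpha=0.05):
    """Count pairs of mean effects that have opposite signs.

    If p-values are supplied, only pairs with p < alpha in both searches
    are considered. Returns (disagreeing pairs, pairs considered).
    """
    considered = 0
    disagree = 0
    for i, (a, b) in enumerate(zip(effects_a, effects_b)):
        if p_a is not None and p_b is not None:
            if p_a[i] >= alpha or p_b[i] >= alpha:
                continue
        considered += 1
        if a * b < 0:
            disagree += 1
    return disagree, considered


# Hypothetical mean effects of the same approach changes under two networks.
effects_1d = [0.10, -0.20, 0.30]
effects_3d = [0.20, 0.10, 0.40]
p_1d = [0.01, 0.20, 0.03]
p_3d = [0.04, 0.01, 0.01]

print(sign_disagreement(effects_1d, effects_3d))
print(sign_disagreement(effects_1d, effects_3d, p_1d, p_3d))

# The reported proportions follow from the counts, e.g. 9 of 44 pairs.
print(f"{100 * 9 / 44:.2f}%")
```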

In summary, variations in the network used by EIEF, the data split method, and other unmentioned hyperparameters may somewhat hinder our ability to assess the proportion of effective information in a dataset from network performance metrics. Overall, however, EIEF is sufficiently robust to remain practically meaningful.

4.6 Comparison between two classifiers

Figs. 4, 5, 6 suggest that, whether the 1D-CNN or the 3D-CNN is used for the grid search, a reasonable optimal paradigm is obtained. To clarify why the 1D-CNN is included within EIEF while the 3D-CNN is not, we compare the searches conducted by the two classifiers. The 60 \(\times \) 4 \(\times \) 2 SIUEs performed by the 3D-CNN and the 60 \(\times \) 8 \(\times \) 2 SIUEs performed by the 1D-CNN are analyzed again (unit experiments corresponding to the original dataset are not involved) in terms of the mean and maximum accuracy, the transverse accuracy STD, and the training time.

As listed in Table 14, 3D-CNN-based classifiers exhibit higher mean accuracies but lower extreme accuracies than 1D-CNN-based classifiers. Judged by peak accuracy alone, the 1D-CNN might appear more suitable for constructing the diagnosis system. However, if the system must be re-trained frequently because of a growing number of patient visits, the higher STD across repeated trainings makes the scalability of a 1D-CNN-based system questionable. Moreover, the 3D-CNN, with its larger size and greater convolutional depth, has the potential for better generalization. Taken together, this evidence indicates that the 3D-CNN offers greater stability and is the preferred choice for system construction. EIEF, in contrast, assesses paradigms with a metric averaged across several epoch-fixed SIUEs, which dilutes the influence of the 1D-CNN’s instability. In addition, the 1D-CNN trains significantly faster than the 3D-CNN, so EIEF can be run faster. We therefore incorporate the 1D-CNN into EIEF.

4.7 Confirmation of improved electrobiological markers

In this section, we show experimentally that the dataset processed through our framework is more suitable for the pathological analysis of schizophrenia, making analysis on this dataset more reliable.

As an overview, the data STDs of all channels of the original and optimal datasets are shown in Fig. 7. Fig. 7(a) shows that, for dataset 1, the STD of each channel is higher in the original data than in the optimal data, which indicates that the optimal paradigm found by EIEF removes a large number of EEG components from the original data. Since the performance metrics show that this removal has not caused a loss of the information effective for SZ classification, EIEF evidently increases the proportion of that information within the EEG dataset. This, in turn, makes the extraction of SZ electrobiological markers more reliable. For dataset 2, however, the STDs of the original and optimal data are very close in value. This is because the optimal paradigm for dataset 2 consists of linear noise removal and bad channel removal. Dataset 2 does not exhibit significant power-line interference, and channel removal only discards several channels from the EEG data of some of the 84 subjects, without removing any components of these channels as a whole. Therefore, even if the proportion of effective information does increase, the improvement is not reflected in the STDs. The next task is thus to determine what influence EIEF has on electrobiological markers.

4.7.1 Features extraction and analysis method

Here, we take the difference between the two time-domain feature distributions, one in the 14 SZ patients and one in the 14 healthy subjects, as an electrobiological marker. Table 15 lists the 38 features selected.

Because EEG signals are complex in composition and their periodicity is difficult to discern, extracting structural features and normal dynamic characteristics from EEG is almost meaningless. However, the complex nature of the brain’s electrical activity and its non-linear dynamic characteristics result in diverse EEG patterns. Breaking the signal down into smaller subsystems can potentially modify the irregular patterns and dynamic attributes of the signal [61]. The complexity analysis of EEG signals mainly involves three aspects of feature analysis: 1. Nonlinear features, which examine whether signals exhibit nonlinear dynamical characteristics such as chaotic behavior, phase transitions, and fractal structures. Nonlinear features reflect the complex dynamics and nonlinear interactions of signals. 2. Information features, which evaluate the amount and structure of the information carried by signals, using measures such as information entropy, sample entropy, permutation entropy, and SVD entropy. Information features can reveal how signals organize and encode information. 3. Stability features, which investigate the stability and predictability of signals under different conditions, including stability analysis, long-term dependence, and self-similarity. Stability features reflect the stability and predictability of signals. Most of the features we extracted quantify one or more of these aspects of complexity.

In addition to classical complexity analysis, we also use a newer set of features for analyzing potential patterns in EEG, namely graph features. These features are essentially geometric characteristics of a visualization graph, and their extraction relies primarily on the two-dimensional visualization of EEG signals. Based on the Poincaré pattern of the DWT coefficients of EEG signals, Akbari et al. proposed new geometrical features: standard descriptors of 2-D projection (STD), summation of triangle area using consecutive points (STA), summation of shortest distance from each point relative to the 45-degree line (SSHD), and summation of distance from each point relative to the coordinate center (SDTC) [62]. We use these features in the following analysis.
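As an illustration of the geometry involved, the following minimal sketch computes three of these quantities (SSHD, SDTC, and STA) from a sequence of coefficients. It assumes a lag-1 Poincaré plot, i.e. points (x[n], x[n+1]), and triangles formed by each run of three consecutive points; the exact definitions in [62] may differ, and the input values are hypothetical. STD is omitted because its definition is not restated here.

```python
import math


def poincare_points(x):
    """Pair each sample with its successor: (x[n], x[n+1]) (lag 1, assumed)."""
    return list(zip(x[:-1], x[1:]))


def sshd(points):
    """Sum of shortest distances from each point to the 45-degree line y = x."""
    return sum(abs(px - py) / math.sqrt(2) for px, py in points)


def sdtc(points):
    """Sum of distances from each point to the coordinate center (origin)."""
    return sum(math.hypot(px, py) for px, py in points)


def sta(points):
    """Sum of areas of triangles formed by each three consecutive points."""
    total = 0.0
    for (x1, y1), (x2, y2), (x3, y3) in zip(points, points[1:], points[2:]):
        total += 0.5 * abs(x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2))
    return total


# Hypothetical coefficient sequence, for illustration only.
coeffs = [0.0, 3.0, 0.0, 4.0]
pts = poincare_points(coeffs)

print("points:", pts)
print("SSHD:", sshd(pts))
print("SDTC:", sdtc(pts))
print("STA:", sta(pts))
```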

Specifically, for each dataset and each subject, the multi-channel data are split into non-overlapping 4-second segments of size 19\(\times \)1000 (dataset 1) or 16\(\times \)512 (dataset 2); a final segment shorter than 4 seconds is discarded. For each segment and each feature, we extract 19 (16) feature values, one for each of the 19 (16) channels (all feature parameters are the defaults set by the Python package “NeuroKit2”). For each feature from the Poincaré plot, since the signals are decomposed into 5 components by a level-4 DWT, we extract 5 feature values per channel. The dimension of a feature vector is therefore 1026 (19\(\times \)34 + 19\(\times \)5\(\times \)4) for dataset 1 or 864 (16\(\times \)34 + 16\(\times \)5\(\times \)4) for dataset 2. Denote the original SZ feature matrix, which consists of all feature vectors extracted from the SZ subjects of the original dataset, as \(\varvec{M^{sz} \in \mathbb {R}^{n_{sz} \times L_d}}\), where \(\varvec{n_{sz}}\) is the number of segments from the SZ subjects and \(\varvec{L_d}\) takes the value 1026 for dataset 1 and 864 for dataset 2. Denote the \(\varvec{i}\)-th column of \(\varvec{M^{sz}}\) as \(\varvec{m^{sz}_i}\), which represents the values of one feature on one channel. Likewise, let \(\varvec{M^{hc} \in \mathbb {R}^{n_{hc} \times L_d}}\) denote the original HC feature matrix and let \(\varvec{m^{hc}_i}\) denote its \(\varvec{i}\)-th column. Similarly, for the optimal dataset, we obtain \(\varvec{M^{*sz}, m^{*sz}_i, M^{*hc}}\) and \(\varvec{m^{*hc}_i}\). With these symbols defined, we present the analysis method in Algorithm 1.
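The segmentation rule and the feature-vector dimension can be checked with a short sketch. The function names and the example recording length are hypothetical; the counts (34 per-channel features, 4 Poincaré features, 5 DWT components) are those stated above.

```python
def segment_bounds(n_samples, segment_len):
    """Start/stop indices of non-overlapping segments; a short tail is dropped."""
    n_segments = n_samples // segment_len
    return [(k * segment_len, (k + 1) * segment_len) for k in range(n_segments)]


def feature_dim(n_channels, n_plain=34, n_poincare=4, n_components=5):
    """Length of one feature vector.

    Each channel contributes one value per plain feature and one value per
    DWT component for each Poincare-plot feature.
    """
    return n_channels * n_plain + n_channels * n_components * n_poincare


# Hypothetical recording of 2500 samples cut into 1000-sample (4-second) segments.
print(segment_bounds(2500, 1000))

print("dataset 1:", feature_dim(19))
print("dataset 2:", feature_dim(16))
```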

4.7.2 Analysis result