Abstract

Blasting is the primary excavation method in ultra-deep roadway engineering, which faces the challenge of low footage caused by unsatisfactory blasting effects. Among the evaluation indicators, the blast-hole utilization rate is the most important measure of the blasting effect, so accurately predicting it is essential for improving roadway excavation efficiency. In recent decades, artificial intelligence (AI) has become the prime method for such field applications, yet data loss and large errors in large-scale data processing remain unresolved. In this study, a novel Structured Nonlinear Support Vector Machine (SNSVM) is introduced as the primary research tool. To improve prediction performance and accuracy, the Genetic Algorithm (GA), Particle Swarm Optimization (PSO) and the Sparrow Search Algorithm (SSA) are used to optimize the hyperparameters of SNSVM. The prediction models take fourteen influencing factors as inputs and together form an AI-based comprehensive blasting effect prediction system. Model performance is assessed mainly by the error correlation coefficients (Root Mean Square Error (RMSE), Mean Absolute Percentage Error (MAPE) and R-Square (R2)), the Receiver Operating Characteristic (ROC) curve, and the standard deviation rate (γ). Among the models considered, SSA-SNSVM performed best with a swarm size of 90. Its RMSE, MAPE and R2 values are 0.0070, 15.54% and 0.9295 on the training datasets and 0.0086, 16.37% and 0.9490 on the testing datasets, respectively. Furthermore, SSA-SNSVM attains the minimum standard deviation rate, a key index of accuracy, with a value of 0.11%. Sensitivity analysis further indicates that the properties of the surrounding rock itself are the most sensitive factor for the blast-hole utilization rate.
Comprehensive evaluation of the blasting effect is important for dynamically adjusting on-site blasting schemes in roadway excavation, shaft excavation and pressure-relief engineering.


1 Introduction

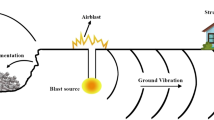

Blasting is widely employed in large-scale civil and mining engineering, such as tunnel excavation [1], open-pit mining [2,3,4,5], shaft development [6] and roadway excavation [7]. In ultra-deep roadway excavation, provided that production safety is ensured, the prime objectives of optimizing and adjusting the blasting scheme are to increase the single-cycle footage, optimize the number of blast holes in the cross-section, and reduce material loss as much as possible [8, 9]. The ultimate goal of all these measures is to improve the blast-hole utilization rate, thereby achieving the best blasting effect and enhancing the efficiency of the blasting operation [2,3,4,5].

To address the problem of low blast-hole utilization rate, domestic and international scholars have conducted a series of theoretical analyses, numerical simulations and model experiments [10]; nevertheless, the complex environment of “three high and one disturbance” in ultra-deep roadways continues to limit excavation efficiency [11]. Moreover, because only one blasting scheme is generally adopted on site, or only slight adjustments are made to the original scheme [12], the single-cycle performance of roadway excavation is not optimal, and the blast-hole utilization rate is only 50%–70% [13]. In addition, fine-tuning blasting parameters is widely acknowledged to be laborious, as it requires adjusting multiple parameters simultaneously, which inevitably leads to numerous cross-experiments [14]. Consequently, improving the blast-hole utilization rate has consistently been a focus of research on ultra-deep roadway excavation.

In blasting engineering, previous empirical formulas or models contain only five to six parameters [15,16,17], whereas the blasting effect is governed by numerous factors, including the excavation site, geological conditions and the explosive itself [16,17,18]. Developing a comprehensive equation that considers multiple factors simultaneously is therefore challenging [19]. The introduction of artificial intelligence (AI) has provided a way to model complex relationships between multiple inputs and outputs [20], and the past 20 years have witnessed rapid development in the application of various AI tools in civil and mining engineering, with encouraging results [21].

Table 1 lists AI tools that have been applied to engineering challenges associated with coal mine blasting. Support Vector Machine (SVM) is clearly an indispensable application or comparison tool in previous AI-based studies of deep roadway excavation and blasting engineering. However, studies using SVM still leave several problems unaddressed: (1) Most research focuses on improving the pre-processing algorithms for the standard SVM model [18], while data loss resulting from errors in large-scale data processing remains unresolved [2,3,4,5]. (2) The influencing factors at the input stage are selected solely on the basis of experience or prior research, without accounting for engineering geological and field conditions or other additional factors, which causes significant deviation from actual site conditions [22, 23]. (3) The methods used to compare models built with different algorithms are relatively simple [24], and the models are evaluated with only a few indicators, such as RMSE, R2 and VAF [25, 26]. This approach does not allow a comprehensive assessment of model diversity, and the reliability and authenticity of the results remain uncertain.

This study is organized as follows. Section 2 introduces the test site, describes how the influencing factors were selected based on an analysis of the blasting scheme design, and presents the collected data. Section 3 presents the primary research tool (SNSVM) and three optimization algorithms (GA, PSO and SSA). Section 4 describes the evaluation indices of the models. Section 5 reports the experimental results of the SVM and SNSVM models, including the error correlation coefficients and ROC curves. Section 6 analyzes each model in depth; SSA-SNSVM achieves the best performance with a swarm size of 90. Section 7 demonstrates, through sensitivity analysis, the impact of the multiple input factors on the blast-hole utilization rate. Section 8 discusses the findings, and Section 9 addresses the limitations of this study. The workflow of this study is illustrated in Fig. 1.

2 Data description

2.1 Testing location

In this study, the blasting site is located in Fengtai County, Huainan City, Anhui Province (Fig. 2(a)), which is part of the Huaihe mining area. As the primary coal-producing region, the Huaihe mining area is rich in coal resources, supplies coal to southeast China and occupies a pivotal strategic position. Fengtai County is a hub of the coal seams in this region, with a high concentration of coal mines that collectively produce over one million tons of coal annually. However, most of these mines operate ultra-deep roadways buried more than 600 m deep. Because of the hard rock lithology and complex geological conditions, the vast majority still use the traditional blasting excavation method, as illustrated in Fig. 2(b) and Fig. 2(c).

The rock strata in the Fengtai mine group are predominantly sandstone, distributed throughout the upper and lower mining areas (Fig. 2(d)). The mining area contains few weak aquifers, each rock stratum is relatively stable, the surrounding rock is well controlled, and rock strength is generally high. Under these conditions, the traditional blasting method used in this region faces several problems, including large blocks of gangue, low footage, heavy material loss and the lack of obvious half-hole marks. These problems impair the efficiency of excavation and production, as illustrated in Fig. 3.

Based on the field investigation, the main reasons for the low footage are as follows:

- The surrounding rock has sound lithology and integrity, so breaking the bonds within the rock requires more energy;

- The in-situ stress is high, with a lateral pressure coefficient of 1.35, so the rock resists crushing by the shock wave and wedging by the explosive gas, and restrains the radial fissures generated during failure;

- Groundwater conditions are favorable, with few weak aquifers or roof leaks;

- During drilling or charging, structural planes and faults cause holes to collapse, which degrades the blasting effect;

- Large-section roadways make charge control and scheme design difficult, particularly in the core area, where straight cut-hole patterns are still common.

2.2 Influencing factors

Data collected after blasting are the foundation for evaluating the overall blasting effect. The blast-hole utilization rate reflects two aspects: primarily, the excavation depth achieved by cutting-hole blasting; secondarily, the roadway contour formed by periphery-hole blasting. Hong et al. [31] introduced the blast-hole utilization rate (η) to evaluate the blasting effect quantitatively from the data; it is defined as the ratio of the footage of a single blasting cycle to the average blast-hole depth (Eq. (1)). This measure reflects both the depth of the cavity created by the cutting holes and the influence of the surrounding holes on the roadway contour.

\(\eta = S/L\)    (1)

In the formula: S is the footage of a single cycle of operation; L is the average blast-hole depth, L = (L1 + L2 + L3)/3, where L1, L2 and L3 are the depths of the cutting hole, the auxiliary hole and the periphery hole, respectively.
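As a quick check of the definition η = S/L, the ratio can be computed directly from the footage and the three hole depths. This is a minimal sketch assuming all lengths are in metres; the function name and the cycle values are hypothetical, chosen only for illustration.

```python
def blast_hole_utilization(footage, cut_depth, aux_depth, peri_depth):
    """Return eta = S / L, where L is the mean of the three hole-type depths (m)."""
    depths = (cut_depth, aux_depth, peri_depth)
    if any(d <= 0 for d in depths):
        raise ValueError("hole depths must be positive")
    mean_depth = sum(depths) / 3.0  # L = (L1 + L2 + L3) / 3
    return footage / mean_depth     # eta = S / L


# Hypothetical cycle: 1.6 m footage, 2.4 m cutting holes, 2.2 m auxiliary and periphery holes
eta = blast_hole_utilization(1.6, 2.4, 2.2, 2.2)
print(f"eta = {eta:.3f}")  # prints eta = 0.706
```

Because L averages the three hole types, a deep cutting hole that breaks poorly lowers η even when the periphery holes perform well, which is why η captures both the cavity depth and the contour.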

The factors influencing the blasting effect in roadway excavation are selected from two aspects, geological conditions and the blasting scheme, taking into account both the specific construction site and the blasting scheme design.

(1) Geological conditions

In geotechnical and mining engineering, uniaxial compressive strength (UCS) is often the preferred parameter in prediction and evaluation studies. In predicting the blast-hole utilization rate, the structural planes inside the rock must also be considered; based on the results of on-site coring, the rock quality designation (RQD) is selected as another key initial rock property. The geological conditions at the site must then be assessed objectively, with particular attention to the “three high and one disturbance” phenomenon in ultra-deep roadway engineering, which encompasses groundwater, ground temperature, in-situ stress and blasting disturbance.

To quantify these factors, groundwater erosion is characterized by the softening coefficient K [2,3,4,5], calculated as in Eq. (2). Blasting disturbance is assessed by the damage degree, obtained by comparing CT scans of core rock samples taken before and after blasting [32], as shown in Eq. (3).

\(K = f/F\)    (2)

In this formula: f is the UCS of rock samples in the water-saturated state, and F is the UCS of rock samples in the dry state.

In this formula: Dt is the fractal dimension of the internal damage area after the explosion; D0 is the fractal dimension of the initial damage area; and \({D}_{t}^{\mathrm{max}}\) is the fractal dimension of the maximum damage area inside the rock sample.
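Both geological indices can be sketched in a few lines. The softening coefficient is the ratio of saturated to dry UCS, as defined above. Eq. (3) itself is not reproduced in this version, so `damage_degree` assumes the common normalized form D = (Dt - D0)/(Dt^max - D0), clipped to [0, 1]; that form is an assumption, not necessarily the authors' equation, and the sample values are hypothetical.

```python
def softening_coefficient(ucs_saturated, ucs_dry):
    """K = f / F: UCS in the water-saturated state divided by UCS in the dry state."""
    if ucs_dry <= 0:
        raise ValueError("dry UCS must be positive")
    return ucs_saturated / ucs_dry


def damage_degree(d_t, d_0, d_t_max):
    """ASSUMED normalized damage D = (Dt - D0) / (Dt_max - D0), clipped to [0, 1].

    d_t:     fractal dimension of the damage area after blasting
    d_0:     fractal dimension of the initial damage area
    d_t_max: fractal dimension of the maximum damage area
    """
    span = d_t_max - d_0
    if span <= 0:
        raise ValueError("Dt_max must exceed D0")
    return min(max((d_t - d_0) / span, 0.0), 1.0)


# Hypothetical sample: 80 MPa saturated, 100 MPa dry; fractal dimensions from CT scans
print(softening_coefficient(80.0, 100.0))  # 0.8
print(damage_degree(2.25, 2.10, 2.40))
```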

(2) Blasting scheme

To predict the blast-hole utilization rate, the influence of different types of blast holes on the entire roadway face must be analyzed. The on-site blasting scheme (Fig. 4) used for the “-648 m horizontal cooling chamber” in Gubei Coal Mine shows the region affected by each type of blast hole. The roadway section is divided into three regions: region 1 contains the cutting holes, region 2 the auxiliary holes, and region 3 the periphery holes. Region 1 forms the cutting cavity, which is the core of the entire section; region 3 forms the roadway outline and supplements region 1; and region 2 assists the blasting of regions 1 and 3.

With the function of each region identified, the elements that affect the blast-hole utilization rate can be specified. For a given charging method, the factors in the blasting design that influence the blast-hole utilization rate are the cutting-hole depth, number, arrangement form and average charge; the cycle number of the auxiliary holes; and the periphery-hole depth, number and average charge. In particular, the arrangement of the cutting holes in region 1 is roughly categorized into four types [19]: triangle, rectangle, arch and inverted trapezoid, as illustrated in Fig. 5.
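Together with the six geological parameters (UCS, RQD, groundwater, ground temperature, in-situ stress and blasting disturbance), the eight blasting-scheme factors above give the fourteen model inputs. The record below is a minimal sketch of one sample. The field names, the units in the comments and the integer codes for the categorical arrangement form are all hypothetical, since the text does not state how that factor was encoded.

```python
from dataclasses import astuple, dataclass, fields

# Hypothetical integer codes for the four cutting-hole arrangement forms (Fig. 5)
ARRANGEMENT_CODES = {"triangle": 1, "rectangle": 2, "arch": 3, "inverted trapezoid": 4}


@dataclass
class BlastingSample:
    # Geological conditions (6 factors); units are assumptions
    ucs: float                 # uniaxial compressive strength, MPa
    rqd: float                 # rock quality designation, %
    softening_k: float         # groundwater: softening coefficient K
    ground_temperature: float  # deg C
    in_situ_stress: float      # MPa
    damage_degree: float       # blasting disturbance D
    # Cutting holes, region 1 (4 factors)
    cut_depth: float
    cut_number: int
    cut_arrangement: str
    cut_avg_charge: float
    # Auxiliary holes, region 2 (1 factor)
    aux_cycles: int
    # Periphery holes, region 3 (3 factors)
    peri_depth: float
    peri_number: int
    peri_avg_charge: float

    def to_features(self):
        """Return the 14 inputs as floats, with the arrangement form encoded."""
        names = [f.name for f in fields(self)]
        values = list(astuple(self))
        k = names.index("cut_arrangement")
        values[k] = ARRANGEMENT_CODES[values[k]]
        return [float(v) for v in values]


sample = BlastingSample(80.0, 75.0, 0.8, 35.0, 25.0, 0.3,
                        2.4, 8, "triangle", 1.2, 2, 2.2, 30, 0.6)
print(len(sample.to_features()))  # 14
```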

2.3 Data collection

In this study, 118 datasets were collected from 12 roadways in the Gubei, Guqiao and Dingji Coal Mines. The datasets, obtained from field investigations and quantitative laboratory analyses, are used to investigate the factors that influence the blast-hole utilization rate. Fig. 6(a) presents the geological condition parameters, Fig. 6(b) the influencing factors of the cutting holes, and Fig. 6(c) those of the auxiliary and periphery holes. The cycle number of the auxiliary holes varies with the roadway cross-sectional area, but it takes only a few fixed values, with no special cases.

3 Methodology

3.1 SNSVM

The traditional SVM is widely used in statistical classification and regression analysis [25, 26] and is mature for prediction and evaluation in field engineering. It has the following advantages: (1) it can eliminate a large number of redundant samples and has good robustness and generalization ability [33]; (2) the computational complexity depends on the number of support vectors, which effectively avoids the curse of dimensionality [34]; (3) with the help of the kernel function, mapping to a high-dimensional space can be realized, which enables it to solve nonlinear problems [35].

However, because it cannot address data loss during large-scale data processing, SVM proves inefficient for training and testing with more than 40 samples, which leads to considerable errors and reduced accuracy [36]. Its use in geotechnical engineering and mining therefore remains problematic. A new Structured Nonlinear Support Vector Machine (SNSVM), based on SVM and SSVM (Structured Support Vector Machine), is created by expanding the Gaussian kernel function to address nonlinear problems (Fig. 7). SNSVM is constructed as follows:
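The Gaussian kernel on which SNSVM builds can be sketched as below. The parameterization k(x, z) = exp(-g·‖x − z‖²), with g as the width parameter later tuned by the optimizers, is an assumption borrowed from common SVM libraries; the SNSVM-specific expansion of the kernel is not reproduced here.

```python
import math


def gaussian_kernel(x, z, g):
    """RBF kernel exp(-g * ||x - z||^2); larger g gives a narrower kernel."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, z))
    return math.exp(-g * sq_dist)


def gram_matrix(samples, g):
    """Symmetric kernel (Gram) matrix with K[i][j] = k(x_i, x_j)."""
    n = len(samples)
    K = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i, n):
            K[i][j] = K[j][i] = gaussian_kernel(samples[i], samples[j], g)
    return K


# Three hypothetical 2-D (already normalized) samples
print(gram_matrix([[0.0, 0.0], [1.0, 1.0], [2.0, 0.0]], g=0.5))
```

The kernel implicitly maps inputs to a high-dimensional space, which is the mechanism behind advantage (3) above; only pairwise kernel values, never explicit feature vectors, are needed.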

A real-valued function is defined for each class label, \(g(x, y; w) = w \cdot \xi_v(x, y)\), where \(\xi_v: X \times Y \to \mathbb{R}^{*}\) is a feature function; the decision function can then be expressed as:

Suppose that m sample points are given, in which all sample points (xi, yi) belong to class label y (yi = y) and the sample points (xj, yj) do not. The loss function C is then divided into an intra-class loss C1 and an inter-class loss C2.

Intra-class loss C1: the loss among the sample points (xi, yi) within class label y is recorded as the intra-class loss and expressed as:

Inter-class loss C2: the loss between the sample points (xi, yi) and the class label y is recorded as the inter-class loss and expressed as:

Then the loss function of SNSVM is redefined as:

With the reconstructed loss function, the primal problem of SNSVM is transformed into:
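The decision function and losses are not reproduced in this version. In the standard structured-SVM setting on which SNSVM builds, prediction picks the label with the highest score, f(x) = argmax over y of g(x, y; w). The sketch below illustrates only that decision rule under an assumed, hypothetical joint feature map that copies x into a block reserved for label y; it does not implement the SNSVM intra-class and inter-class losses.

```python
def joint_feature_map(x, y, num_classes):
    """Hypothetical xi(x, y): copy x into the block for label y, zeros elsewhere."""
    d = len(x)
    phi = [0.0] * (d * num_classes)
    phi[y * d:(y + 1) * d] = list(x)
    return phi


def score(w, x, y, num_classes):
    """g(x, y; w) = w . xi(x, y)."""
    return sum(wi * pi for wi, pi in zip(w, joint_feature_map(x, y, num_classes)))


def decide(w, x, num_classes):
    """Structured-SVM decision rule: the label with the highest score."""
    return max(range(num_classes), key=lambda y: score(w, x, y, num_classes))


# Two labels, 2-D inputs: label 0 scores x[0], label 1 scores x[1]
w = [1.0, 0.0, 0.0, 1.0]
print(decide(w, [2.0, 1.0], 2))  # 0
```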

Because the penalty factors C1 and C2 and the kernel parameter g would otherwise be determined manually, which cannot guarantee the accuracy of SNSVM, they are optimized by algorithms [37]. Swarm bionic optimization algorithms play a significant role among optimization methods [38]; for example, Artificial Bee Colony [39], Ant Colony Optimization [40], Moth-Flame Optimization [41], Grey Wolf Optimization [42] and the Whale Optimization Algorithm (Zhou et al., 2021) have been widely used. In this study, three algorithms are used for parameter optimization: the Genetic Algorithm (GA), Particle Swarm Optimization (PSO) and the Sparrow Search Algorithm (SSA). Each is coupled with SNSVM to develop a prediction model.

3.2 GA

The Genetic Algorithm (GA) is an AI-based approach that searches for the optimal solution by simulating biological evolution [43]. It expresses the evolution of natural populations mathematically: in a computer simulation, candidate solutions of the problem are encoded as chromosomes, whose genes undergo selection, crossover and mutation, mimicking biological evolution. At each iteration, individuals are gradually updated to become better adapted to the environment, ultimately yielding the optimal solution to the problem.

As depicted in Fig. 8, GA includes three basic operations: selection, crossover and mutation:

(1) Selection: individuals are selected from the old population into the new population with a probability related to the fitness function; the higher the fitness value, the greater the probability of selection;

(2) Crossover: partial gene codes of two different chromosomes in the population are exchanged and combined to form new individuals;

(3) Mutation: an individual is randomly selected from the population and a randomly chosen gene on its chromosome is mutated, which may produce a better individual.
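The three operations can be combined into a minimal real-coded GA. The specific choices below (roulette-wheel selection with fitness 1/(1 + cost), arithmetic crossover, Gaussian mutation, and elitism) are illustrative assumptions, not the settings in Table 2. `objective` stands in for the quantity the optimizer minimizes, for example a validation RMSE of SNSVM for a candidate (C1, C2, g), and must be non-negative.

```python
import random


def genetic_algorithm(objective, bounds, pop_size=30, generations=100,
                      crossover_rate=0.8, mutation_rate=0.1, seed=0):
    """Minimize a non-negative `objective` over the box `bounds` with a simple GA."""
    rng = random.Random(seed)
    dim = len(bounds)
    pop = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(pop_size)]
    best = min(pop, key=objective)[:]
    best_f = objective(best)
    history = []
    for _ in range(generations):
        # Selection: roulette wheel, probability proportional to fitness 1 / (1 + cost)
        fitness = [1.0 / (1.0 + objective(ind)) for ind in pop]
        parents = rng.choices(pop, weights=fitness, k=pop_size)
        children = []
        for i in range(0, pop_size, 2):
            a, b = parents[i], parents[(i + 1) % pop_size]
            if rng.random() < crossover_rate:
                # Crossover: arithmetic blend of two parent chromosomes
                t = rng.random()
                c1 = [t * x + (1 - t) * y for x, y in zip(a, b)]
                c2 = [(1 - t) * x + t * y for x, y in zip(a, b)]
            else:
                c1, c2 = a[:], b[:]
            children.extend([c1, c2])
        for child in children:
            for k in range(dim):
                # Mutation: Gaussian perturbation of one gene, clipped to its bounds
                if rng.random() < mutation_rate:
                    lo, hi = bounds[k]
                    child[k] = min(max(child[k] + rng.gauss(0.0, 0.1 * (hi - lo)), lo), hi)
        pop = children[:pop_size]
        pop[0] = best[:]  # elitism: keep the best individual found so far
        for ind in pop:
            f = objective(ind)
            if f < best_f:
                best, best_f = ind[:], f
        history.append(best_f)
    return best, best_f, history


def sphere(p):
    """Toy stand-in objective with its minimum 0 at the origin."""
    return sum(v * v for v in p)


best, best_f, history = genetic_algorithm(sphere, [(-5.0, 5.0)] * 2)
print(best, best_f)
```

Elitism makes the best-so-far cost non-increasing, which mirrors the monotone RMSE-versus-iteration curves reported for the optimized models.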

3.3 PSO

Particle Swarm Optimization (PSO) is a swarm intelligence algorithm [44] proposed by Eberhart and Kennedy in 1995. Each particle in the N-dimensional space acts independently, moving selectively as it evaluates the best positions, and the optimal solution is reached through the movements of individual particles and of the swarm as a whole. In the N-dimensional space, the swarm contains n particles; the position of each particle is \(x_{\mathrm{is}}\), its velocity is \({v}_{\mathrm{is}}^{h}\), the best position found by the individual particle is \({P}_{{\mathrm{is}},{\mathrm{pbest}}}^{h}\), and the best position found by the swarm is \({\overline{P} }_{{\mathrm{is}},{\mathrm{gbest}}}^{h}\). The trajectory of each particle therefore combines its own motion and the motion of the swarm, as expressed in Eq. (9) and Fig. 9.

In the formula: R1 and R2 are random numbers in (0,1) following the logistic function distribution; h is the number of iterations; C1 and C2 are the acceleration coefficients.
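Eq. (9) is not reproduced in this version. The sketch below assumes the common update v ← ωv + c1·R1·(pbest − x) + c2·R2·(gbest − x) followed by x ← x + v. The inertia weight ω and the velocity clamp are assumptions (pass `inertia=1.0` to drop ω), and R1, R2 are drawn uniformly rather than from the logistic distribution mentioned above. The acceleration coefficients c1, c2 here are unrelated to the SNSVM penalty factors.

```python
import random


def pso(objective, bounds, n_particles=30, iterations=100,
        c1=1.5, c2=1.5, inertia=0.7, seed=0):
    """Minimize `objective` over the box `bounds` with a basic global-best PSO."""
    rng = random.Random(seed)
    dim = len(bounds)
    vmax = [0.2 * (hi - lo) for lo, hi in bounds]  # velocity clamp (assumption)
    x = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_particles)]
    v = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in x]
    pbest_f = [objective(p) for p in x]
    g = min(range(n_particles), key=lambda i: pbest_f[i])
    gbest, gbest_f = pbest[g][:], pbest_f[g]
    history = []
    for _ in range(iterations):
        for i in range(n_particles):
            for k in range(dim):
                r1, r2 = rng.random(), rng.random()
                v[i][k] = (inertia * v[i][k]
                           + c1 * r1 * (pbest[i][k] - x[i][k])   # particle's own experience
                           + c2 * r2 * (gbest[k] - x[i][k]))     # swarm's experience
                v[i][k] = max(-vmax[k], min(vmax[k], v[i][k]))
                lo, hi = bounds[k]
                x[i][k] = min(max(x[i][k] + v[i][k], lo), hi)
            f = objective(x[i])
            if f < pbest_f[i]:
                pbest[i], pbest_f[i] = x[i][:], f
                if f < gbest_f:
                    gbest, gbest_f = x[i][:], f
        history.append(gbest_f)
    return gbest, gbest_f, history


def sphere(p):
    """Toy stand-in objective with its minimum 0 at the origin."""
    return sum(v * v for v in p)


gbest, gbest_f, history = pso(sphere, [(-5.0, 5.0)] * 2)
print(gbest, gbest_f)
```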

3.4 SSA

The Sparrow Search Algorithm (SSA) is also a swarm bionic optimization algorithm, in the same category as GA and PSO (Li et al., 2022). It simulates the foraging and anti-predation behavior of sparrow groups. During foraging, sparrows are divided into explorers and followers; these roles are not fixed and can change at any time. In nature, to improve their foraging success, followers frequently compete for food with companions that have high intake. While foraging, all individuals remain vigilant to their surroundings to guard against natural enemies. The optimization process is shown in Fig. 10.

SSA consists of four main steps: initializing the parameters and calculating fitness, updating the explorer positions, updating the follower positions, and updating the positions of the danger-aware sparrows. The initial swarm defines the number and positions of the sparrows, and the best food source is determined from the fitness values. Assuming that there are n sparrows, each with D-dimensional characteristics, the swarm and fitness matrices are expressed as:

The explorers first provide guidance for the followers, and their positions are updated as:

In the formula: i is the current number of iterations and imax is the maximum number of iterations; \(u_i^{t}\) and \(u_i^{t+1}\) are the positions at times t and t + 1; α is a random number in [0,1]; A is a random number obeying the normal distribution; S is a 1 × d matrix whose elements are all 1; Q ∈ [0,1] is the warning value and R ∈ [0.5,1] is the safety value.

When Q > R, some sparrows have detected predators and the swarm must take action; when Q < R, no natural enemies are present, and the explorers can perform a global search to guide the followers. The followers' positions are updated as:

In the formula: \(u_{\mathrm{worst}}\) is the worst position in the global search; \(u_{i,\mathrm{op}}\) is the optimal position occupied by the explorers; B is a 1 × d matrix whose elements are randomly assigned -1 or 1.

Among the followers, those with poorer fitness are in a poor state and must seek a new foraging location. Assuming that 20% of the sparrows are aware of danger and are selected at random, their positions are updated as:

In the formula: \(u_{\mathrm{best}}^{t}\) is the current global optimal position; β is the step-size control parameter, which obeys the normal distribution; C is a random number in [-1,1]; D is a small constant that prevents division by zero; fi is the fitness value of the current sparrow, fb is the current optimal fitness value, and fw is the current worst fitness value.
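The four SSA steps can be combined into a compact sketch following the widely used form of the algorithm. Explorers contract by exp(-i/(α·imax)) when Q < R and take a normal random step otherwise. Poorly ranked followers fly off, while the rest move next to the best explorer using the ±1 vector B. A random 20% of sparrows then react to danger. Several details are assumptions: the explorer share (20%), the safety value R = 0.8, and taking i in the contraction term as the sparrow's rank, as common implementations do, whereas the text above describes it as the iteration count. All names are hypothetical.

```python
import math
import random


def ssa(objective, bounds, n=30, iterations=100, pd=0.2, sd=0.2, st=0.8, seed=0):
    """Minimize `objective` over the box `bounds` with a basic Sparrow Search Algorithm."""
    rng = random.Random(seed)
    dim = len(bounds)
    eps = 1e-50  # small constant keeping denominators non-zero

    def clip(p):
        return [min(max(v, lo), hi) for v, (lo, hi) in zip(p, bounds)]

    X = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n)]
    fit = [objective(p) for p in X]
    b = min(range(n), key=lambda i: fit[i])
    best, best_f = X[b][:], fit[b]
    n_exp = max(1, int(pd * n))  # number of explorers
    history = []
    for _ in range(iterations):
        order = sorted(range(n), key=lambda i: fit[i])  # rank by fitness, best first
        X = [X[i] for i in order]
        x_worst = X[-1][:]
        q = rng.random()  # warning value Q
        for i in range(n_exp):  # explorers
            if q < st:  # safe: contract the current position
                alpha = 1.0 - rng.random()  # alpha in (0, 1]
                X[i] = [v * math.exp(-(i + 1) / (alpha * iterations)) for v in X[i]]
            else:  # danger: normal random step A times the all-ones row S
                step = rng.gauss(0.0, 1.0)
                X[i] = [v + step for v in X[i]]
            X[i] = clip(X[i])
        x_op = min(X[:n_exp], key=objective)  # best explorer position
        for i in range(n_exp, n):  # followers
            if i + 1 > n / 2:  # poorly ranked followers fly elsewhere
                a = rng.gauss(0.0, 1.0)
                X[i] = [a * math.exp((w - v) / (i + 1) ** 2) for v, w in zip(X[i], x_worst)]
            else:  # move next to the best explorer; B has entries -1 or 1
                bvec = [rng.choice((-1.0, 1.0)) for _ in range(dim)]
                step = sum(abs(v - p) * s for v, p, s in zip(X[i], x_op, bvec)) / dim
                X[i] = [p + step for p in x_op]
            X[i] = clip(X[i])
        fit = [objective(p) for p in X]
        f_best, f_worst = min(fit), max(fit)
        x_best = X[fit.index(f_best)][:]
        x_worst = X[fit.index(f_worst)][:]
        for i in rng.sample(range(n), max(1, int(sd * n))):  # danger-aware sparrows
            if fit[i] > f_best:
                beta = rng.gauss(0.0, 1.0)
                X[i] = [bv + beta * abs(v - bv) for v, bv in zip(X[i], x_best)]
            else:
                k = rng.uniform(-1.0, 1.0)
                X[i] = [v + k * abs(v - w) / (fit[i] - f_worst + eps)
                        for v, w in zip(X[i], x_worst)]
            X[i] = clip(X[i])
            fit[i] = objective(X[i])
        i_min = min(range(n), key=lambda i: fit[i])
        if fit[i_min] < best_f:
            best, best_f = X[i_min][:], fit[i_min]
        history.append(best_f)
    return best, best_f, history


def sphere(p):
    """Toy stand-in objective with its minimum 0 at the origin."""
    return sum(v * v for v in p)


best, best_f, history = ssa(sphere, [(-5.0, 5.0)] * 2)
print(best, best_f)
```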

4 Evaluation indicators

To comprehensively assess the predictive capacity of the GA-, PSO- and SSA-based SNSVM models, three error correlation coefficients are computed for the experimental process and results [45]: the root mean square error (RMSE), the mean absolute percentage error (MAPE) and R-Square (R2), defined in Eq. (14) to Eq. (16).

In the formula: n is the number of samples; \({y}_{i}\) is the true value, \({\widehat{y}}_{i}\) is the predicted value, and \({\overline{y} }_{i}\) is the average of the true values.
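Eqs. (14)-(16) are not reproduced in this version. The sketch below assumes the usual definitions: RMSE, MAPE expressed in percent, and R2 = 1 − SS_res/SS_tot, using the symbols defined above. The sample values are hypothetical.

```python
import math


def rmse(y_true, y_pred):
    """Root mean square error."""
    n = len(y_true)
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / n)


def mape(y_true, y_pred):
    """Mean absolute percentage error in %; undefined if any true value is 0."""
    n = len(y_true)
    return 100.0 / n * sum(abs((t - p) / t) for t, p in zip(y_true, y_pred))


def r2(y_true, y_pred):
    """Coefficient of determination 1 - SS_res / SS_tot."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


# Hypothetical blast-hole utilization rates (true vs predicted)
y_true, y_pred = [0.60, 0.70, 0.80], [0.62, 0.68, 0.80]
print(f"RMSE={rmse(y_true, y_pred):.4f}  MAPE={mape(y_true, y_pred):.2f}%  R2={r2(y_true, y_pred):.4f}")
```

Because MAPE divides by each true value, it is scale-free, whereas RMSE stays in the units of η; the two can therefore rank models differently, which is one reason to report several indices together.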

5 Results

To avoid local minima, three distinct swarm bionic algorithms (GA, PSO and SSA) are used for global search, and three prediction models of the blast-hole utilization rate (GA-SNSVM, PSO-SNSVM and SSA-SNSVM) are established, extending the SNSVM. The fourteen factors described above are the input parameters, and the blast-hole utilization rate is the output parameter. Because the final test outcomes depend on multiple parameters of the three algorithms, the most representative ones, the swarm size and the number of iterations, were selected for the primary analysis; the remaining parameters are set as listed in Table 2.

5.1 GA-SNSVM

To achieve optimal results, the parameters with the greatest impact on GA performance, namely the swarm size and the number of iterations, must be selected. In general, as the number of iterations increases, performance stabilizes but the program's running time increases. This section demonstrates the optimization performance of the models through ROC curves and RMSE values.

In the GA-SNSVM pre-tests, the model's prediction performance stabilized once the number of iterations reached 400, so the maximum number of iterations was set to 400. The swarm sizes tested were 50, 60, 70, 80, 90 and 100. As shown in Fig. 11 and Fig. 12, although the optimization processes differ between swarm sizes, the ROC curves and RMSE trends of the models are consistent. The RMSE decreases as the number of iterations increases, and the minimum RMSE of the GA-SNSVM models ultimately stabilizes at 0.013–0.014.

To assess the performance of GA-SNSVM models with different swarm sizes, RMSE, MAPE and R2 are combined into a comprehensive evaluation system. The comprehensive scores for the various swarm sizes are presented in Table 3, and the highest-scoring configuration is taken as the optimal parameter combination for GA-SNSVM.
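The text does not spell out how the comprehensive scores are computed. One common scheme in comparable studies is rank-based: for each index, the configurations are ranked from worst (rank 1) to best (rank equal to the number of configurations), and the ranks are summed. The sketch below implements that scheme as an assumption, not as the authors' method; the metric values are hypothetical and ties are not handled specially.

```python
def rank_scores(results, lower_is_better):
    """Sum per-metric ranks for each configuration (higher total = better).

    results: {config: {metric: value}}
    lower_is_better: {metric: True if smaller values are better}
    """
    configs = list(results)
    scores = {c: 0 for c in configs}
    for metric, lower in lower_is_better.items():
        # Order from worst to best so the best configuration receives the top rank
        ordered = sorted(configs, key=lambda c: results[c][metric], reverse=lower)
        for rank, c in enumerate(ordered, start=1):
            scores[c] += rank
    return scores


# Hypothetical metric values for three swarm sizes
results = {50: {"rmse": 0.020, "r2": 0.80},
           60: {"rmse": 0.010, "r2": 0.90},
           70: {"rmse": 0.015, "r2": 0.85}}
print(rank_scores(results, {"rmse": True, "r2": False}))  # {50: 2, 60: 6, 70: 4}
```

Under this kind of scheme a configuration can win overall without being best on every index, which matches the observation that follows for a swarm size of 90.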

With a swarm size of 90, the model achieves the highest score of 25 and thus the best prediction performance. The RMSE, MAPE and R2 of the training samples are 0.0115, 17.21% and 0.8601, and those of the testing samples are 0.0144, 16.88% and 0.8673, respectively. Although not every evaluation index is best at a swarm size of 90, the comprehensive performance is superior to that of the other swarm sizes. At a swarm size of 80, the training and testing samples perform very differently: the model performs remarkably well on the training samples, but this is not reflected in the testing samples.

5.2 PSO-SNSVM

The parameter control of PSO works in concert with the reconstructed loss function of SNSVM: the individual-particle and swarm-level optimization correspond to the division into intra-class and inter-class losses. As with GA-SNSVM, the number of iterations and the swarm size are discussed in the test results. The number of iterations is kept at 400, while the swarm size is set to 50, 60, 70, 80, 90 and 100 for comparison. The ROC and RMSE curves for the different swarm sizes are displayed in Fig. 13 and Fig. 14. When the number of iterations reaches 250, the PSO-SNSVM models achieve their minimum RMSE, which finally stabilizes at 0.010–0.011.

The evaluation metrics of the PSO-SNSVM models are listed in Table 4. The model performs best with a swarm size of 100, where its comprehensive performance exceeds that of the other swarm sizes and achieves the highest score of 22. The training samples yield RMSE, MAPE and R2 values of 0.0089, 18.53% and 0.8905, and the testing samples yield 0.0107, 18.47% and 0.9082, respectively. However, at swarm sizes of 50 and 70, the good performance on the training samples does not carry over to the testing samples, similar to GA-SNSVM.

5.3 SSA-SNSVM

The Sparrow Search Algorithm (SSA) is a recently proposed swarm bionic optimization algorithm that has shown superiority and stability in parameter optimization. For a controlled comparison, the same number of iterations is used, with swarm sizes of 50, 60, 70, 80, 90 and 100. The ROC and RMSE curves for the different swarm sizes are shown in Fig. 15 and Fig. 16. When the number of iterations reaches 50, the SSA-SNSVM models obtain their minimum RMSE, which ultimately stabilizes at 0.008–0.009. SSA-SNSVM therefore converges rapidly, indicating strong optimization capability.

The evaluation indices of the SSA-SNSVM models are presented in Table 5. The model performs best with a swarm size of 90, with a score of 24. The RMSE, MAPE and R2 of the training samples are 0.0070, 15.54% and 0.9295, and those of the testing samples are 0.0086, 16.37% and 0.9490, respectively. The model performs well across all evaluation metrics, with no significant discrepancy between the training and testing samples.

6 Discussion

The Area Under the Curve (AUC) is a metric for comparing the strengths and weaknesses of models. It is commonly used to assess model viability and authenticity and is computed as the area between the ROC curve and the horizontal axis, ranging from 0.5 to 1.0. A value of 0.5 indicates that the model has no discriminative ability and is therefore unusable, while values closer to 1.0 indicate greater authenticity of the detection method. In addition, Std (standard deviation) measures the degree of data dispersion. The AUC and Std values of the SNSVM models with various swarm sizes are listed in Table 6, together with the running times.
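Given the points of a ROC curve, the AUC can be computed with the trapezoidal rule. This minimal sketch assumes the (false-positive rate, true-positive rate) points are already available; how the continuous utilization rate is thresholded to produce them is not specified in the text.

```python
def auc_trapezoid(fpr, tpr):
    """Area under a ROC curve from matching FPR/TPR points, via the trapezoidal rule."""
    pts = sorted(zip(fpr, tpr))  # order the points along the FPR axis
    area = 0.0
    for (x0, y0), (x1, y1) in zip(pts, pts[1:]):
        area += (x1 - x0) * (y0 + y1) / 2.0
    return area


print(auc_trapezoid([0.0, 1.0], [0.0, 1.0]))                  # chance level: 0.5
print(round(auc_trapezoid([0.0, 0.5, 1.0], [0.0, 0.8, 1.0]), 4))  # 0.65
```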

In addition to the error correlation coefficients, the standard deviation rate γ, expressed by Eq. (17), is introduced to evaluate the operational precision of the SNSVM models, and its variation with swarm size is recorded.

As depicted in Fig. 17, the performance of the three types of SNSVM model differs distinctly across swarm sizes. At a swarm size of 90, GA-SNSVM reaches its minimum standard deviation rate of 0.26%, and SSA-SNSVM performs even better, with a value of 0.11%. By contrast, PSO-SNSVM performs poorest at this swarm size, with a value of 0.9%; its minimum standard deviation rate, 0.19%, occurs at a swarm size of 100. SSA-SNSVM yields the lowest value of the three models at every swarm size. A comprehensive comparison of the trends in the stable region gives the following ranking of operational accuracy: SSA-SNSVM > PSO-SNSVM > GA-SNSVM.

Finally, the predicted and actual values of the blast-hole utilization rate for GA-SNSVM, PSO-SNSVM and SSA-SNSVM are presented in Fig. 18(a), (b) and (c), respectively, and the accuracy of the three models on the training and testing datasets is shown in Fig. 18(d). Compared with the other two models, SSA-SNSVM achieves the closest agreement between predicted and actual values and the smallest test error. These findings agree with the earlier RMSE and error analysis, which indicate that SSA-SNSVM performs best with a swarm size of 90.

Furthermore, the SVM models (SVM, GA-SVM, PSO-SVM, SSA-SVM) and SNSVM models (SNSVM, GA-SNSVM, PSO-SNSVM, SSA-SNSVM) can be compared in terms of accuracy and efficiency, as illustrated in Figs. 19 and 20. Compared with the SVM models, the SNSVM models are significantly more accurate: their accuracy is 17.95% higher on average. Notably, SSA-SNSVM achieves an accuracy of up to 95.08%, an increase of 19.07%. The reconstructed loss function enables precise control of the data, while the dual kernel function enables accurate data classification, thus resolving the issue of data loss in large-scale data applications.

As Fig. 20 shows, the operational efficiency of the SVM and SNSVM models varies considerably: the average running times of SVM, GA-SVM, PSO-SVM, SSA-SVM, SNSVM, GA-SNSVM, PSO-SNSVM and SSA-SNSVM are 7.5589 s, 15.1321 s, 12.6127 s, 13.1340 s, 8.4629 s, 17.8541 s, 14.6597 s and 11.2657 s, respectively. The dual kernel function and the reconstructed loss function both add computational burden, so SNSVM runs 11.96% longer than SVM; this nevertheless remains within a tolerable range. Among the SNSVM models, SSA brings the greatest improvement in learning efficiency, whereas GA-SNSVM and PSO-SNSVM run slower than their SVM counterparts. SSA-SNSVM requires 14.23% less running time than SSA-SVM. Considering the error correlation coefficients, ROC curves, standard deviation rate, accuracy and running time together, SNSVM shows a significant improvement in data processing and learning capability over SVM.
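The two running-time percentages can be reproduced from the reported averages, assuming they are relative differences between the plain SVM and SNSVM and between SSA-SVM and SSA-SNSVM. The second evaluates to about 14.22%, which matches the reported 14.23% up to rounding of the published times.

```python
# Average running times (s) reported in the text
svm = {"SVM": 7.5589, "GA-SVM": 15.1321, "PSO-SVM": 12.6127, "SSA-SVM": 13.1340}
snsvm = {"SNSVM": 8.4629, "GA-SNSVM": 17.8541, "PSO-SNSVM": 14.6597, "SSA-SNSVM": 11.2657}

# Extra running time of the plain SNSVM relative to the plain SVM
prolongation = (snsvm["SNSVM"] - svm["SVM"]) / svm["SVM"] * 100
# Running time saved by SSA-SNSVM relative to SSA-SVM
saving = (svm["SSA-SVM"] - snsvm["SSA-SNSVM"]) / svm["SSA-SVM"] * 100

print(f"SNSVM vs SVM: +{prolongation:.2f}%")          # SNSVM vs SVM: +11.96%
print(f"SSA-SNSVM vs SSA-SVM: -{saving:.2f}%")        # SSA-SNSVM vs SSA-SVM: -14.22%
```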

7 Sensitivity analysis

To achieve the research objectives of this paper, prediction models for the blast-hole utilization rate in deep rock excavation engineering are proposed. These models were developed from a combination of site surveys, analysis of the geological conditions, and blasting scheme analysis. In addition, the sensitivity-cosine analysis method (cosine amplitude method) is employed to handle the intricate interactions among the data while keeping the computation of the AI-based models efficient [46]. This method identifies and compares the sensitivity of the blast-hole utilization rate to each influencing factor, thereby assessing the significance of the input variables.

The input and output variables are organized as column vectors, one per variable, each with one entry per sample; the fourteen influencing factors and the blast-hole utilization rate thus give fifteen column vectors. Eq. (18) is then used to analyze the sensitivity of the blast-hole utilization rate to each influencing factor.

In Eq. (18), \(m\) is the total number of input and output variables and \(n\) is the total number of data samples to be processed; \(x_{an}\) is the input variable and \(x_{bn}\) is the output variable, where \(a\) is the index of the input variable and \(b\) is the index of the output variable; \(S_{mn}^{i}\) is the sensitivity of model \(i\), where \(i\) denotes the model type.
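Assuming Eq. (18) takes the standard cosine amplitude form, the sensitivity of an output \(y\) to an input \(x\) over \(n\) samples is \(r = \sum_{k=1}^{n} x_k y_k \big/ \sqrt{\sum_{k=1}^{n} x_k^2 \sum_{k=1}^{n} y_k^2}\). For non-negative data such as UCS, RQD or charge mass, this value lies between 0 and 1, and values closer to 1 indicate a stronger relationship. The minimal sketch below implements that assumed form; the function names are hypothetical, and the code is an illustration rather than the authors' implementation.

```python
import math


def cosine_amplitude(x, y):
    """Cosine amplitude between two equally long columns (assumed standard form)."""
    if len(x) != len(y) or len(x) == 0:
        raise ValueError("x and y must be non-empty and of equal length")
    numerator = sum(a * b for a, b in zip(x, y))
    denominator = math.sqrt(sum(a * a for a in x) * sum(b * b for b in y))
    if denominator == 0:
        raise ValueError("a column of all zeros has no defined cosine amplitude")
    return numerator / denominator


def rank_factors(inputs, output):
    """Return (factor name, sensitivity) pairs sorted from most to least sensitive.

    `inputs` maps each factor name to its column of samples; `output` is the
    column of blast-hole utilization rates for the same samples.
    """
    scores = {name: cosine_amplitude(column, output) for name, column in inputs.items()}
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    # Hypothetical toy columns (not the paper's data), three samples each.
    factors = {"UCS": [60.0, 75.0, 90.0], "hole depth": [2.2, 2.0, 2.4]}
    utilization = [0.80, 0.86, 0.91]
    for name, score in rank_factors(factors, utilization):
        print(f"{name}: {score:.3f}")
```

Because each factor is scored independently against the output, the ranking step reduces to sorting the fourteen scores.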

Fig. 21 shows the sensitivity of the blast-hole utilization rate to the different factors. The properties of the surrounding rock, namely UCS and RQD, are the most sensitive factors. This finding agrees with the fundamental theory of blasting: the strength and integrity of the rock resist the dynamic stress generated by the explosion shock wave and detonation gas during blasting. Consequently, both UCS and RQD have a strong influence on the blast-hole utilization rate.

Second, regarding the blasting scheme factors: in region 1 (cutting holes, Fig. 4), when the number of blast holes is fixed, choosing a triangular or rectangular arrangement has little effect on the blast-hole utilization rate. In region 3 (periphery holes), once the contour range is determined, increasing or decreasing the number of holes has little impact on the formation of the roadway contour. In contrast, hole depth and average charge are the primary factors in predicting the blast-hole utilization rate for cutting, periphery and auxiliary holes alike.

Moreover, every factor has a sensitivity of at least 0.8, indicating that all the selected factors contribute significantly to the blast-hole utilization rate. Based on the experiments and collected datasets, the factors ranked from most to least sensitive are: RQD, UCS, average charge of cutting hole, groundwater erosion, cutting hole arrangement form, cycle number of auxiliary hole, depth of cutting hole, depth of auxiliary and periphery holes, in-situ stress, geo-temperature, number of cutting holes, average charge of single hole, blasting disturbance and number of periphery holes. Different sample data may lead to different rankings, but these findings can serve as a guide for blasting design under similar working conditions.

8 Conclusion

In this study, we propose a structured nonlinear support vector machine (SNSVM) model for handling large-scale datasets and combine it with the GA, PSO and SSA algorithms to establish the GA-SNSVM, PSO-SNSVM and SSA-SNSVM models. These models are then used to predict the blast-hole utilization rate in ultra-deep roadway engineering. The SNSVM model divides the loss function into intra-class and inter-class terms to reduce data dispersion while preserving high sensitivity, which addresses the large errors that can arise when processing large datasets. In addition, bio-inspired optimization algorithms are employed to optimize the hyper-parameters, enhancing the accuracy and stability of the SNSVM models.
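The hyper-parameter optimization step can be illustrated with a generic global-best particle swarm optimizer. This minimal sketch makes several assumptions. It uses PSO rather than SSA, which performed best in this study, because the PSO update rule is short and standard. The inertia and acceleration coefficients are common textbook values, not the paper's settings. The objective stands in for whatever validation error one would compute for an SNSVM with the given hyper-parameters; here a hypothetical quadratic surface over \((\log_{10} C, \log_{10} \gamma)\) plays that role.

```python
import random


def pso_minimize(objective, bounds, swarm_size=30, iterations=100,
                 w=0.7, c1=1.5, c2=1.5, seed=0):
    """Global-best PSO over a box; returns (best_position, best_value)."""
    rng = random.Random(seed)
    dim = len(bounds)
    # Random initial positions inside the bounds, zero initial velocities.
    pos = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(swarm_size)]
    vel = [[0.0] * dim for _ in range(swarm_size)]
    pbest = [p[:] for p in pos]
    pbest_val = [objective(p) for p in pos]
    g = min(range(swarm_size), key=lambda i: pbest_val[i])
    gbest, gbest_val = pbest[g][:], pbest_val[g]
    for _ in range(iterations):
        for i in range(swarm_size):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # Inertia + pull toward personal best + pull toward global best.
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])
                             + c2 * r2 * (gbest[d] - pos[i][d]))
                lo, hi = bounds[d]
                pos[i][d] = min(max(pos[i][d] + vel[i][d], lo), hi)  # clamp to box
            val = objective(pos[i])
            if val < pbest_val[i]:
                pbest[i], pbest_val[i] = pos[i][:], val
                if val < gbest_val:
                    gbest, gbest_val = pos[i][:], val
    return gbest, gbest_val


def toy_validation_error(params):
    """Hypothetical stand-in for a cross-validation error; minimum at (1, -2)."""
    log_c, log_gamma = params
    return (log_c - 1.0) ** 2 + (log_gamma + 2.0) ** 2


if __name__ == "__main__":
    best, err = pso_minimize(toy_validation_error, [(-2.0, 3.0), (-4.0, 1.0)])
    print(f"log10 C = {best[0]:.3f}, log10 gamma = {best[1]:.3f}, error = {err:.2e}")
```

Searching over log-scaled hyper-parameters is a common choice because C and the kernel width typically vary over several orders of magnitude. In a real setting, each objective call would train and validate a model, so the swarm size and iteration count dominate the running time.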

Five performance measures are used to compare the SVM and SNSVM models: error correlation coefficients, ROC curves, standard deviation rates, accuracy and running time. On average, the accuracy of the SNSVM models is 17.95% higher than that of the SVM models. Among the models, SSA-SNSVM demonstrates the best overall performance, with a swarm size of 90. Its RMSE, MAPE and R2 values are 0.0070, 15.54% and 0.9295 for the training datasets and 0.0086, 16.37% and 0.9490 for the testing datasets, respectively. SSA-SNSVM also obtains the lowest standard deviation rate, 0.11%, with an AUC ± Std value of 0.9485 ± 0.0011. Furthermore, the model achieves a high accuracy of 95.08%, and its running time is 14.23% shorter than that of the SSA-SVM model.

Based on the sensitivity analysis, RQD and UCS exert the greatest influence on the prediction outcomes. Compared with AI-based studies of surface or shallow blasting, this research incorporates the more intricate factors of ultra-deep blasting excavation engineering, so the results can provide practical recommendations for blasting scheme design. More broadly, this study demonstrates a viable approach to employing AI tools in blasting engineering. It offers a novel means of improving the efficiency of blasting excavation and accelerating the intelligent development of ultra-deep mineral resources.

9 Limitations

The SNSVM models have been applied with notable success to forecasting the blast-hole utilization rate in ultra-deep roadway excavation engineering. However, several limitations remain to be addressed in subsequent studies. First, the sample dataset is relatively small, comprising only 118 samples, all collected in the Huainan mining area; data mining is more reliable when performed on large datasets collected from diverse conditions, strata, landscapes or regions. Second, the mechanism of blasting excavation in ultra-deep roadways is more complex than that in open-pit and shallow excavations, so it is crucial to analyze the mechanism of deep blasting in depth and to identify further factors related to the blast-hole utilization rate. Finally, other optimization algorithms suited to SNSVM could be explored to improve prediction accuracy further. More importantly, a range of other machine learning models could be employed for investigation and comparison, including the Adaptive-Network-Based Fuzzy Inference System (ANFIS), Gradient Boosting Machine (GBM), Convolutional Neural Networks (CNNs), Random Forest (RF) and Bayesian methods (BN).

Data availability

The authors do not have permission to share data.

References

Huo XF, Shi XZ, Qiu XY, Zhou J, Guo YG, Yu Z, Ke WY (2020) Rock damage control for large-diameter-hole lateral blasting excavation based on charge structure optimization. Tunn Undergr Space Technol 106:103569. https://doi.org/10.1016/j.tust.2020.103569

Li QQ, Peng X, Wang J, Cheng YY, Zhang K, Dai WW, Ju CG, Zhang XY, Wu Y (2023) Non-sintered wrap-shell lightweight aggregates from dredged soils: study of softening coefficients and water absorption-desorption behavior. Constr Build Mater 374:130871. https://doi.org/10.1016/j.conbuildmat.2023.130871

Li RR, Xu S, Li ZC, Suorineni FT, Zhu GJ (2023) Development and testing of self-swelling cartridge for use as stemming material in open-pit blasting —A quarry case study. Int J Rock Mech Min 170:105503. https://doi.org/10.1016/j.ijrmms.2023.105503

Li CX, Yang RS, Wang YB, Kang YQ, Zhang YT (2023) Theory and numerical simulation of deep hole cut blasting based on dispersed charge and staged detonation. Int J Rock Mech Min 169:105453. https://doi.org/10.1016/j.ijrmms.2023.105453

Li QL, Wang Y, Shao YD, Li L, Hao H (2023) A comparative study on the most effective machine learning model for blast loading prediction: From GBDT to Transformer. Eng Struct 276:115310. https://doi.org/10.1016/j.engstruct.2022.115310

Hao YM, Wei XD, Li Q, Zhao GF (2024) A feasible approach for engineering-scale 3D blasting numerical modelling incorporating explosive charges and layout design. Comput Geotech 170:106253. https://doi.org/10.1016/j.compgeo.2024.106253

Lu A, Yan P, Lu WB, Li XF, Liu X, Luo S, Huang SL, Grasselli G (2024) Crack propagation mechanism of smooth blasting holes for tunnel excavation under high in-situ stress. Eng Fract Mech 304:110144. https://doi.org/10.1016/j.engfracmech.2024.110144

Huo XF, Shi XZ, Qiu XY, Zhou J, Guo YG, Yu Z, Zhang SZ (2022) A study on raise blasting and blast-induced vibrations in highly stressed rock masses. Tunn Undergr Space Technol 123:104407. https://doi.org/10.1016/j.tust.2022.104407

Xu JH, Kang Y, Wang XC, Feng G, Wang ZF (2019) Dynamic characteristics and safety criterion of deep rock mine opening under blast loading. Int J Rock Mech Min 119:156–167. https://doi.org/10.1016/j.ijrmms.2019.04.015

Fan JS, Yuan Q, Chen J, Ren YW, Zhang DD, Yao H, Hu B, Qu YH (2024) Investigation of surrounding rock stability during proximal coal seams mining process and feasibility of ground control technology. Process Saf Environ Prot 186:1447. https://doi.org/10.1016/j.psep.2024.04.091

Singh SK, Banerjee BP, Raval S (2023) A review of laser scanning for geological and geotechnical applications in underground mining. Int J Min Sci Technol 33(2):133–154. https://doi.org/10.1016/j.ijmst.2022.09.022

Duan BF, Xia HL, Yang XX (2018) Impacts of bench blasting vibration on the stability of the surrounding rock masses of roadways. Tunn Undergr Space Technol 71:605–622. https://doi.org/10.1016/j.tust.2017.10.012

Ma XM, Chen ZY, Chen P, Zheng HZ, Gao XY, Xiang JJ, Chen LY, Huang YP (2023) Predicting the utilization factor of blasthole in rock roadways by random forest. Undergr Space 11:232–245. https://doi.org/10.1016/j.undsp.2023.01.006

Luo Y, Xu K, Huang JH, Li XP, Liu TT, Qu DX, Chen PP (2021) Impact analysis of pressure-relief blasting on roadway stability in a deep mining area under high stress. Tunn Undergr Space Technol 110:103781. https://doi.org/10.1016/j.tust.2020.103781

Kucewicz M, Baranowski P, Mazurkiewicz L, Małachowski J (2023) Comparison of selected blasting constitutive models for reproducing the dynamic fragmentation of rock. Int J Impact Eng 173:104484. https://doi.org/10.1016/j.ijimpeng.2022.104484

Li EM, Yang FH, Ren MH, Zhang XL, Zhou J, Khandelwal M (2021) Prediction of blasting mean fragment size using support vector regression combined with five optimization algorithms. J Rock Mech Geotech Eng 13:1380–1397. https://doi.org/10.1016/j.jrmge.2021.07.013

Li XH, Zhu ZM, Wang M, Wan DY, Zhou L, Liu RF (2021) Numerical study on the behavior of blasting in deep rock masses. Tunn Undergr Space Technol 113:103968. https://doi.org/10.1016/j.tust.2021.103968

Wojtecki L, Iwaszenko L, Apel DB, Bukowska M, Makówka J (2022) Use of machine learning algorithms to assess the state of rock-burst hazard in underground coal mine openings. J Rock Mech Geotech Eng 14:703–713. https://doi.org/10.1016/j.jrmge.2021.10.011

Yang RS, Li CX, Chen J, Zou FY, Wang YB, Xiao CL, Zhang ZR (2023) Development history and new technology research progress of rock roadway blasting excavation in coal mines in China. Coal Sci Technol 51(1):224–241. https://doi.org/10.13199/j.cnki.cst.2022-1804 (in Chinese)

Yüksel N, Börklü HR, Sezer HK, Canyurt OE (2023) Review of artificial intelligence applications in engineering design perspective. Eng Appl Artif Intell 118:105697. https://doi.org/10.1016/j.engappai.2022.105697

Arthur CK, Onifade RM, Mohamad ET, Sabri MMS, Bohra M, Khandelwal M, Kwon S (2022) Prediction of blast-induced ground vibration at a limestone quarry: an artificial intelligence approach. Appl Sci-Basel 12(18):9189. https://doi.org/10.3390/app12189189

Lawal AI, Kwon S (2021) Application of artificial intelligence to rock mechanics: An overview. J Rock Mech Geotech Eng 13:248–266. https://doi.org/10.1016/j.jrmge.2020.05.010

Noriega R, Pourrahimian Y (2022) A systematic review of artificial intelligence and data-driven approaches in strategic open-pit mine planning. Resour Policy 77:102727. https://doi.org/10.1016/j.resourpol.2022.102727

Yu Z, Shi XZ, Miao XH, Zhou J, Khandelwal M, Chen X, Qiu YG (2021) Intelligent modeling of blast-induced rock movement prediction using dimensional analysis and optimized artificial neural network technique. Int J Rock Mech Min 143:104794. https://doi.org/10.1016/j.ijrmms.2021.104794

Yu BB, Li Q, Zhao TD (2024) Deformation extent prediction of roadway roof during non-support period using support vector regression combined with swarm intelligent bionic optimization algorithms. Tunn Undergr Space Technol 145:105585. https://doi.org/10.1016/j.tust.2024.105585

Yu ZS, Yuan Y, Tian PJ (2024) An efficient trust region algorithm with bounded iteration sequence for unconstrained optimization and its application in support vector machine. J Comput Appl Math. https://doi.org/10.1016/j.cam.2024.115956

Murlidhar BR, Nguyen H, Rostami J, Bui XN, Armaghani DJ, Ragam P, Mohamad ET (2021) Prediction of flyrock distance induced by mine blasting using a novel Harris Hawks optimization-based multi-layer perceptron neural network. J Rock Mech Geotech Eng 13:1413–1427. https://doi.org/10.1016/j.jrmge.2021.08.005

Zhang Q, He MC, Wang J, Guo S, Zhu C, Tao ZG, Wang C (2022) Investigation of a non-explosive directional roof cutting technology for self-formed roadway. Int J Min Sci Technol 32:997–1008. https://doi.org/10.1016/j.ijmst.2022.07.006

Zhang RX, Li YF, Gui YL, Zhou J (2022) Prediction of blasting induced air-overpressure using a radial basis function network with an additional hidden layer. Appl Soft Comput 127:109343. https://doi.org/10.1016/j.asoc.2022.109343

Hosseini S, Poormirzaee R, Hajihassani M (2022) An uncertainty hybrid model for risk assessment and prediction of blast-induced rock mass fragmentation. Int J Rock Mech Min 160:105250. https://doi.org/10.1016/j.ijrmms.2022.105250

Hong Y, Ma HH, Shen ZW, Ren LJ, Cui Y, Zhao K (2018) Research and application on efficient rock blasting based on circular free surface. Explosion Shock Waves 38(1):99–105. https://doi.org/10.11883/bzycj-2016-0176 (in Chinese)

Wang YB, Wen ZJ, Liu GQ, Wang JG, Zhou QB, Lu KQ, Wang DC, Wang BZ (2020) Explosion propagation and characteristics of rock damage in decoupled charge blasting based on computed tomography scanning. Int J Rock Mech Min 136:104540. https://doi.org/10.1016/j.ijrmms.2020.104540

Masurkar A, Daruwala R, Mohite A (2024) Performance analysis of SAR filtering techniques using SVM and Wishart Classifier. Remote Sens Appl Soc Environ 34:101189. https://doi.org/10.1016/j.rsase.2024.101189

Wang C, Bai D, Li YB, Zhang Q, Ma X, Tian DL, Shan MM (2024) Establishment of critical non-depositing velocity prediction model for sediment in drip irrigation laterals based on PSO-SVM. J Clean Prod 457:142488. https://doi.org/10.1016/j.jclepro.2024.142488

Hou ZG, Wang HW, Yue YB, Xiong ML, Zhang WX (2024) A novel framework based on two-stage multi-view feature optimization and improved support vector data description for aeroengine bearing early fault detection. Reliab Eng Syst Saf 245:110027. https://doi.org/10.1016/j.ress.2024.110027

Huang K, Wang XG (2023) CCR-GSVM: A boundary data generation algorithm for support vector machine in imbalanced majority noise problem. Appl Intell 53(1):1192–1204. https://doi.org/10.1007/s10489-022-03408-4

Santos CE, Sampaio C, Coelho LS, Bestard GA, Llanos CH (2021) Multi-objective adaptive differential evolution for SVM/SVR hyperparameters selection. Pattern Recognit 110:107649. https://doi.org/10.1016/j.patcog.2020.107649

Wang JL, Gong B, Liu H, Li SH (2022) An algorithm with harmonious blending of distributed swarm intelligence and geometric Brownian motion for greener heterogeneous scheduling. Appl Intell 52(15):18210–18225. https://doi.org/10.1007/s10489-021-03074-y

Karaman A, Karaboga D, Pacal I, Akay B, Basturk A, Nalbantoglu U, Coskun S, Sahin O (2023) Hyper-parameter optimization of deep learning architectures using artificial bee colony (ABC) algorithm for high performance real-time automatic colorectal cancer (CRC) polyp detection. Appl Intell 53(12):15603–15620. https://doi.org/10.1007/s10489-022-04299-1

Sun B (2023) A dimensionless model and ant colony optimization fusion temperature prediction in tunnel fires. Appl Soft Comput 145:110564. https://doi.org/10.1016/j.asoc.2023.110564

Nguyen H, Bui XN, Topal E (2023) Reliability and availability artificial intelligence models for predicting blast-induced ground vibration intensity in open-pit mines to ensure the safety of the surroundings. Reliab Eng Syst Saf 231:109032. https://doi.org/10.1016/j.ress.2022.109032

Li JN, Luo WG, Bai MS, Song MK (2024) Fault diagnosis of high-speed rolling bearing in the whole life cycle based on improved grey wolf optimizer-least squares support vector machines. Digit Signal Process 145:104345. https://doi.org/10.1016/j.dsp.2023.104345

Truong VT, Anand N (2023) System performance and optimization in NOMA mobile edge computing surveillance network using GA and PSO. Comput Netw 223:109575. https://doi.org/10.1016/j.comnet.2023.109575

Kuo BJ, Chiu TH (2024) Hybrid of jellyfish and particle swarm optimization algorithm-based support vector machine for stock market trend prediction. Appl Soft Comput 154:111394. https://doi.org/10.1016/j.asoc.2024.111394

Wang R, Chen SJ, Li XL, Tian G, Zhao TB (2023) AdaBoost-driven multi-parameter real-time warning of rock burst risk in coal mines. Eng Appl Artif Intell 125:106591. https://doi.org/10.1016/j.engappai.2023.106591

Yang Y, Zhang Q (1997) A hierarchical analysis for rock engineering using artificial neural networks. Rock Mech Rock Eng 30(4):207–222. https://doi.org/10.1007/BF01045717

Author information


Contributions

Bingbing Yu: Investigation, Methodology, Writing—original draft, Writing—review & editing. Bo Wang: Investigation, Resources, Supervision. Yi Li: Project administration, Conceptualization. Yuantong Zhang: Methodology, Validation, Writing—review & editing. Guohao Wang: Investigation, Supervision.


Ethics declarations

Human participants

This study does not contain any studies with human participants or animals performed by any of the authors.

Conflict of interests

The authors declare that they have no conflict of interests.


Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Yu, B., Wang, B., Li, Y. et al. Prediction of blast-hole utilization rate using structured nonlinear support vector machine combined with optimization algorithms. Appl Intell 54, 9136–9157 (2024). https://doi.org/10.1007/s10489-024-05614-8


DOI: https://doi.org/10.1007/s10489-024-05614-8