Abstract

The Graph Attention Network (GAT) is a type of graph neural network (GNN) that uses attention mechanisms to weigh the importance of nodes’ neighbors, demonstrating flexibility and power in representation learning. However, GAT and its variants still face common challenges in GNNs, such as over-smoothing and over-squashing. To address this, we propose Multi-Head Multi-Order Graph Attention Networks (MHMOGAT) as an enhanced GAT layer. MHMOGAT is built based on multi-head attention and adjacency matrices of different orders, aiming to expand the receptive field of GAT to effectively capture long-distance dependencies. Moreover, Bayesian optimization is employed to determine optimal hyperparameter combinations for different datasets. Experimental results on six prevailing datasets demonstrate that MHMOGAT improves GAT accuracy by approximately 2-5% across various datasets with different label rates, indicating its effectiveness. Additionally, MHMOGAT exhibits potential in handling large and complex graphs with low label rates.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Graph data [1] is a natural representation of many complex systems in the real world, such as social networks [2], recommendation systems, biological networks, transportation networks, and more [3,4,5,6]. However, in many applications of graph data, label information about nodes is often scarce [7, 8], which limits the applicability and effectiveness of traditional supervised learning approaches. As such, semi-supervised learning methods [9,10,11] have emerged as powerful tools for these scenarios by leveraging information from unlabeled nodes to enhance model performance. Semi-supervised learning is a machine learning paradigm [12, 13] and there are various methods, including the use of generative models [14, 15], semi-supervised support vector machines [16, 17], graph models [18, 19], and so on. Through these methods, semi-supervised learning can better cope with the incomplete data in the real world and improve the generalization ability of the model.

On the other hand, graph convolutional networks (GCNs) [18] have achieved significant advancements in semi-super-vised learning. These networks facilitate information transmission based on the topology between nodes, effectively capturing relational data within graph structures. GCNs demonstrated their potency across various downstream tasks [20,21,22]. The message passing mechanism in GCNs, while useful for utilizing graph structure information by adding more layers to have an extensive receptive field, can lead to the over-smoothing problem when combined with increased depth [23, 24].

Although GCNs have significant potential for modeling graph-structured data, it has been revealed that they encounter the issues of over-smoothing and over-squashing [25, 26]. over-smoothing occurs as node features become increasingly similar with the increase in convolutional layers, while over-squashing arises from significant information compression via bottleneck edges. Various methods have been proposed to tackle these challenges [25,26,27,28,29]. Specifically, Graph Attention Networks [27] utilize attention mechanisms to learn discriminative features, thus reducing the over-smoothing problem. Building upon this, [28] further developed a high-order graph attention network that effectively integrates multi-hop neighbor information for node representation. Moreover, [29] developed DropEdge, which uniformly drops out a certain number of edges during model training to relieve the over-smoothing problem. However, these methods cannot effectively address the over-squashing problem, especially for graphs with large diameters and long-range dependencies between nodes. [30] pointed out that GNNs struggle to transmit messages effectively from distant nodes and demonstrate degraded performance when tasked with predictions relying on interactions spanning long distances. They formally introduced the over-squashing phenomenon of GNNs but did not present a new architecture to solve the problem. Later, [31] employed the Stochastic Jost and Liu Curvature Rewiring algorithm to perform edge addition and removal during GNN training, achieving a suitable compromise between over-smoothing and over-squashing.

To mitigate the over-smoothing and over-squashing problems and increase GCNs’ expressiveness, this paper proposes a method called multi-head multi-order graph attention networks(MHMOGAT). We integrated multi-head attention with varying neighborhood orders, resulting in a model that incorporates both multi-order and cross-order multi-head attentions. In the multi-head attention model, each head focus on learning information from different neighborhood orders. This allows for learning at an expanded perception field and capturing relationships within the graph more accurately. In our cross-order setup, we use multiple channels with various neighborhood orders to achieve a cross-order effects. This approach enhances generalization ability of the model by capturing multiple hop’s information. Allowing the model to solve different challenging classification tasks. Our model defines a novel self-attention mechanism that evaluates the influence of both immediate neighbors and long-distance nodes, while assigning heterogeneous multi-head attention to enhance feature extraction across different layers. In addition, Bayesian optimization is integrated into the model for hyperparameter optimization.

The main contributions of this paper can be summarized as follows:

-

Develop new multi-head multi-order GCN layers which improve GAT performance significantly on various semi-supervised classification datasets.

-

Tackle the limitation of GAT in capturing multi-hop information and expand its perception field.

-

Show potential in dealing with large and complex graphs with very low label rates.

The remaining structure of this article is as follows: Section 2 covers the related work in this field. In Section 3, we provide an overview of the network architectures of GAT and MOGCN, along with highlighting some of the challenges they face. Our proposed model MHMOGAT is introduced, and its two implementation approaches are described in Section 4. In Section 5, our model will be compared with some other state-of-the-art models to demonstrate its effectiveness. Finally, Section 6 presents the conclusions of this study and discusses future work.

2 Related work

2.1 High-Order Graph Convolutional Networks

Recent works have focused on encoding high-order neighborhood information from target nodes to improve performance. MOGCN [32] constructs multiple simple GCN learners with multi-order adjacency matrices to capture high-order connectivity among the nodes. MixHop [33] mixes powers of the adjacency matrix, which can mix feature representations of neighbors at various distances to learn neighborhood mixing relationships. HCNP [34] can simultaneously aggregate information from various neighborhoods by constructing high-order convolutions. Additionally, similar to our model, both HGRN [28] and MulStepNET [35] combine the attention mechanism with high-order neighborhoods to improve the model’s learning ability. However, HGRN and MulStepNET simply combine the attention mechanism with higher-order information without considering the effective combination of multi-head and multi-order information to make the model more flexible and efficient.

2.2 Over-smoothing and over-squashing

In the realm of GNNs, over-smoothing and over-squashing stand out as significant limitations. To address these challenges, numerous strategies have been devised. For instance, Huang et al. [29] proposed a methodology centered on the removal of graph edges during GNN training, while Zhao et al. [36] and Zhou et al. [37] introduced novel normalization techniques tailored to directly counteract over-smoothing effects. Additionally, Chien et al. [38] developed a novel graph convolutional filter aimed at circumventing over-smoothing phenomena. In tackling the over-squashing issue, Topping et al. [31] employed the Stochastic Jost and Liu Curvature Rewiring algorithm to facilitate edge addition and removal during GNN training. Moreover, recent advancements have seen the utilization of state-of-the-art large language models in addressing graph-related tasks, exemplified by the Graphllm [39] and Graphgpt [40] models, offering an alternative approach to mitigating both over-smoothing and over-squashing concerns.

2.3 Attention mechanism

Graph Attention Networks (GAT) [27] utilize self-attention mechanisms to compute the contribution weights of each neighboring node to update the central node’s features, followed by a weighted summation to obtain new node features. GAT also uses multi-head attention, allowing the model to focus on the features of different neighbor nodes in parallel to better capture relationships and features in the graph data. HAN [41] introduces multiple layers of attention mechanisms, enabling the model to assign weights differently based on node types and relationships, facilitating better capturing of associations between nodes in heterogeneous graphs. In addition, other models such as GGAN-DGC [42], GA-GNN [43], SGATs [44] also incorporate attention mechanisms into their architectures, enhancing the model’s capabilities. HGRN [28] incorporates multi-hop neighbor information to enhance the node representation ability of the original GAT, effectively addressing the over-smoothing problem. However, none of the previous research combined multi-head and multi-order information. Additionally, few studies focused on evaluating model performance at scarce label rates for semi-classification tasks.

Overall, the original GAT model may encounter the over-squashing issue in certain datasets. HGRN addresses this problem by incorporating high-order augmentation graphs. However, this approach might lead to an over-smoothing issue by introducing a large number of new edges. This concern forms the primary motivation for our work: we aim to combine multi-order and multi-head approaches to add heterogeneous paths for different-order augmented graphs, thereby solving both over-smoothing and over-squashing issues. In addition, considering the increase of the dimension of hyperparameter space, a more advanced hyperparameter optimization method should be investigated.

3 Preliminary

3.1 Graph attention networks(GAT)

A Graph Attention Network (GAT) is a type of graph neural network that uses an attention mechanism to capture the most important relationships between nodes in the graphs to enhance prediction or classification performances. The weights of edges can be calculated by the following formula:

where \( \alpha _{ij}\) is the weight between the i-th and j-th nodes, \(\textbf{W}\) is a trainable weight matrix for node feature transformation, \(\textbf{h}_ i \) and \(\textbf{h}_j\) are the feature vectors of the i-th node and the j-th node, \(\mathbf {\textbf{a}}\) is a vector of weights, \(^T\) represents transposition, \(\mathcal {N}_i\) is the immediate neighborhood of the i-th node in the graph. The feature information is processed by combining with the weighted neighbor information from the attention adjacency matrix and then transformed through a nonlinear function, which is described by

where \( K \) is the number of multi-heads. \(\sigma \) denotes the final nonlinear function (usually a softmax). Finally, a prediction is to be made using the output of the final layer.

3.2 Mixed-order graph convolutional networks(MOGCN)

Mixed-order graph convolutional networks (MOGCN) [32] is a novel end-to-end ensemble framework. MOGCN combines the results of multiple GCN learners that are trained on adjacency matrices of various orders to boost the performance of semi-supervised node classification tasks. MOGCN can directly capture the dependencies between nodes at different distances, which enhances its information acquisition capability. Firstly, it constructs several similar GCN learners and assigns them adjacency matrices of different order in order to learn the information relationship between neighbors of different orders. Let’s denote a finite set of multi-order adjacency matrices as \(\left\{ \textbf{A}^{1 },\cdots , \textbf{A}^{k} \right\} \). Then, we can formally define the GCN learners with respect to this set as follows,

where \(\tilde{\textbf{A}} ^{k}_{s} ={\tilde{\textbf{D}} ^{k-\frac{1}{2}}}\tilde{\textbf{A}}^{k}{\tilde{\textbf{D}} ^{k-\frac{1}{2}}}\), and \(\tilde{\textbf{A}} ^{ k } = \textbf{A}^{ k } + \textbf{I}\), and the associated degree matrix is \(\tilde{\textbf{D}} ^{\left( k \right) } = \textbf{D}^{\left( k \right) } + \textbf{I}\), \(\textbf{I}\) is a diagonal matrix, \(\textbf{X}\) is the node feature matrix, \(\textbf{W}_{0}^{k}\) and \( \textbf{W}_{1}^{k}\) are the trainable weight matrices in the k-th learner \(f_{k}\).

For the obtained k learners \(\left\{ f_{1},\cdots , f_{k} \right\} \) finally combined through an ensemble module [45]:

In Eq. (4), the outputs of \(f_{ens}\) are the predicting label matrix based on the k-th learner.

Despite both GAT and MOGCN achieve state-of-the-art performance on various datasets, there still exist limitations. GAT can only transfer and aggregate information in the first-order neighborhood of the target node, and it cannot take into account nodes that are far away, thus ignoring the important information implied in them. While MOGCN can consider nodes in multi-order neighborhoods, it is unable to adaptively assign weights to individual neighbors, treating all nodes as having the same importance. Based on these problems, we propose a new model that can combine multi-order neighborhood information and adaptively assign weights to neighbor nodes.

4 The proposed method

In this section, we detail our method in two parts: the individual MHMOGAT layer and two distinct architectures. Our approach leverages a mix of multi-head attention and adjacency matrices of different orders, building upon the foundation established by HGRN [28]. HGRN incorporates multi-hop neighbor information to enhance node representation, addressing the over-smoothing problem. Extending upon this framework, our proposed model integrates multi-head and multi-order information, enhancing adaptability and efficiency across various tasks. Additionally, we employ Bayesian optimization to determine optimal hyperparameter combinations for different datasets. We propose two specific implementation methods through a reasonable combination of multi-head attention and adjacency matrices of different orders.

4.1 Attention layer

We start with the individual graph attention layer of MHMOGAT. The input to the attention layer of each graph is a set of node features, which we represent as a set of vectors:

where \(\textbf{h}_{i}\in \mathbb {R}^{F} \), F is the number of features for each node, and Z is the number of nodes. The layer produces a new set of node features (of potentially different cardinality \(F'\)), \(\textbf{h}'=\left\{ \mathbf {h'} _{1},\mathbf {h'} _{2},\dots ,\mathbf {h'} _{Z} \right\} , \mathbf {h'}_{i}\in \mathbb {R}^{F'} \), as its output. Let’s denote a finite set of multi-order adjacency matrices as \(\left\{ \textbf{A}^{1 },\cdots , \textbf{A}^{n} \right\} \), where

In order to convert the primary features into a more advanced feature representation and improve the capabilities of the model, we perform the following processing on the data:

where \(\textbf{W}\) is a trainable weight matrix and a is a shared attention mechanism, \(e_{ij}\) represents the importance of the j-th node to the i-th node.

In the initial processing step, a linear transformation is applied on the nodes like original GAT model to learn the structures and patterns of data. Subsequently, the proposed model defines a novel self-attention mechanism that evaluates the influence of both immediate neighbors and long-distance nodes, while assigning heterogeneous multi-head attention to enhance feature extraction across different layers. This multi-level feature extraction facilitates the model’s ability to handle complex features and manage long-distance dependencies effectively.

The modified attention coefficient is defined as follows:

where \( \alpha _{ij}^{k}\) represents the weight between i-th and j-th nodes in the k-th attention head, \(\textbf{W}_k\) is a trainable weight matrix, \(\textbf{h}_ i \) and \(\textbf{h}_j^{n}\) represent the features of the i-th node and n-th order neighbor node j of i-th node, \(\mathbf {\textbf{a}}\in \mathbb {R}^{2F'}\) is a vector of weights, \(\mathcal {N}_i\) is the n-th order neighborhood of the i-th node in the graph. After calculating the attention coefficient according to Eq.(9), it is used to compute a linear combination of the features corresponding to them, to serve as the final output features for every node.

where \(\alpha _{ij}^{k}\) is the attention coefficient calculated by the k-th attention head, \(\mathbf {h'}_i\) is the new node representation aggregated by multiple different order attention heads.

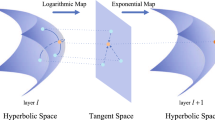

The message aggregation in the Multi-Head Multi-Order Graph Attention Network (MHMOGAT) framework is illustrated. The red node represents an arbitrary central node, while the other nodes represent the neighbors of the central node at different orders. Initially, the attention coefficients are computed based on current node representations. This is followed by executing a multi-head different orders attention graph convolution on multi-hop nodes (where blue nodes represent 1-hop neighbors, green for 2-hop neighbors and yellow for 3-hop neighbors). The three differently colored arrows signify various levels of attention heads. Ultimately, to obtain \(\mathbf {h'}_{1}\), the embedding from these heads are averaged

4.2 General framework

In this subsection, we describe the implementation details of the two distinct architectures of MHMOGAT: the multi-head different orders attention architecture and the cross-order multi-head attention architecture.

4.2.1 MHMOGAT with multi-head different orders attention

In this architecture, in order to improve the performance capability of the model and to enable the model to learn the weights in neighbor relationships at different scales, we use adjacency matrices of different orders in each attention head for information aggregation.

Firstly, we assign different order adjacency matrices to each attention head based on Eq.(7)-(9) to obtain the k-th attention head as follows:(Figs. 1 and 2 )

The message aggregation in the Multi-Head Multi-Order Graph Attention Network (MHMOGAT) framework is illustrated. The red node represents an arbitrary central node, while the other nodes represent the neighbors of the central node at different orders. Initially, the attention coefficients are computed based on current node representations. This is followed by executing across-order multi-head attention graph convolution on multi-hop nodes. In channel 1, the current node executes across-order multi-head attention graph convolution on 1-hop neighbors; in channel 2, the node executes across-order multi-head attention graph convolution on 2-hop neighbors. The process continues until it reaches the predefined channel number N. Ultimately, the \(\mathbf {h'}_i^{1}\),...,\(\mathbf {h'}_i^{n}\) are averaged to generate the final node embedding

After obtaining the attention coefficients of different orders of each head, we design an information aggregation method as follows. The K attention heads execute the transformation of Eq.(10), and then their features are concatenated, resulting in the following output feature representation.

where \(||\) represents concatenation, K is the number of attention heads, \(\alpha _{ij}^{k}\) is the attention coefficient calculated by the k-th attention head, \(\textbf{W}_{k}\) is a trainable weight matrix, and \(\mathbf {h'}_i\) is a new node representation aggregated by multiple different order attention heads.

Specifically, if we perform multi-head attention on the final layer of the network, for efficient implementation of multi-head attention, we replace concatenation with averaging and apply a nonlinear activation function to generate the high-level node embedding.

Overall, the implementation of the MHMOGAT with multi-head different orders attention is described in Algorithm 1.

4.2.2 MHMOGAT with cross-order multi-head attention

In this section, we introduce another specific implementation method with cross-order multi-head attention.

Different from the first way, the MHMOGAT with cross-order multi-head attention introduces multiple attention heads across different orders. Therefore each attention head can aggregate information from different orders of adjacency matrix.

Firstly, several channels are established, each containing K attention heads. For the n-th channel, the n-th order adjacency matrix is applied to K attention heads following Eq. (7)-(9):

where \(\alpha _{ij}^{k}({n})\) is the attention coefficient calculated by the k-th attention head in the n-th channel. Eq.(14) allows for the calculation of the attention coefficient, as determined by K attention heads for each channel.

Subsequently, these K attention coefficients per channel are aggregated as follows:

Specially, when multi-head attention is implemented on the final layer of the network, the tensor has been aggregated along the n-th channel, which can be expressed as,

where \(\mathbf {h'}_i^{n}\) is the new node representation formed by cross-order aggregation of K attention heads in the n-th channel.

Finally, we integrate the results obtained by N channels through an integration module, which is defined as follows:

Overall, this approach incorporates multiple attention heads across various orders, enabling each head to learn and balance information propagation at different scales: from first-order neighbors to higher-order ones. Consequently, each attention head can identify useful features within different orders of information and subsequently integrate these features. The implementation details of this method are shown in Algorithm 2.

5 Experiments

In this section, the proposed model is compared with several prevailing GCNs across six general semi-supervised datasets.

5.1 Datasets

Table 1 presents the datasets used in the experiment, namely Cora, Citeseer, Pubmed, ACM, Chameleon and Actor. Cora, Citeseer and Pubmed are three citation networks, the nodes represent publications, the edges represent citation links, the feature of each node is the bag-of-word representation of the corresponding publication, and the class is the number of clusters. ACM is a paper network, and the nodes represent papers. If two papers are written by the same author, then there is an edge between the two papers. The feature is the bag-of-words representation of keywords. Papers are divided into three categories according to research fields. Chameleon is a web page dataset where nodes are web pages on a specific topic and edges are hyperlinks between them. Actor is a dataset where nodes are actors and edges mean two actors co-occurring on the same Wikipedia page.

5.2 Comparison with state-of-the-art methods

5.2.1 Baseline methods

To evaluate the performance of the proposed MHMOGAT, we compare it with the following methods:

1. GCN [18]: GCN is a graph convolutional network used to learn the representation of nodes in graph data. It updates the representation of a node by aggregating information from each node’s neighbor nodes.

2. GAT [27]: GAT is an improved graph convolutional network that introduces an attention mechanism to dynamically adjust the weights of neighbor nodes. GAT allows nodes to assign different weights to their neighbor nodes, which makes the model more flexible to learn the relationship between nodes.

3. MOGCN [32]: a graph convolutional neural network improved on the basis of GCN. It is finally integrated by applying a hybrid high-order adjacency matrix to multiple GCN modules.

4. GCNII [46]: a simple and deep graph convolutional network model, which effectively solves the problem of over-smoothing through initial residuals and unit mappings.

5. KGCN [47]: KGCN is a graph convolutional network specifically designed for knowledge graphs. KGCN is used to learn embeddings between entities and relationships to support various reasoning and prediction tasks on knowledge graphs.

6. SGC [48]: SGC is a simplified graph convolutional network that reduces the complexity of the model by subjecting the features of all neighbor nodes to the same linear transformation.

7. GFNN [49]: a simple and deep graph convolutional network model, which effectively solves the problem of over-smoothing through initial residuals and unit mappings.

8. SJLR [31]: SJLR introduces the Stochastic Jost and Liu Curvature Rewiring (SJLR) algorithm, which adjusts edges during training to effectively solve over-smoothing and over-squeezing issues while maintaining efficiency and reliability.

9. DropEdge (DE) [29]: DE is an operation-based approach that developed a method for dropping edges to overcome the over-smoothing issue in deep GCNs.

10. GRAND [50]: GRAND approaches deep learning on graphs as a continuous diffusion process and treats Graph Neural Networks (GNNs) as discretizations of an underlying PDE.

5.2.2 Parameter settings

All experiments are conducted in a semi-supervised setting. The maximum iteration number (\(max\_iter\)) is set to 500, and the method utilizes a hidden layer with 8 neurons. We train our model by using the full batch in each training epoch and implement our algorithm in Pytorch [51], and we optimize it with the Adam [52] algorithm. Additionally, learning rate and dropout rate are set at 0.005 and 0.6 respectively. Following [53, 54], we conduct experiments on the following label rates: 0.5\(\%\), 1\(\%\), 2\(\%\), 3\(\%\), 4\(\%\) and 5\(\%\) on Citeseer and Cora datasets, and 0.03\(\%\), 0.05\(\%\), and 0.1\(\%\) on the Pubmed dataset, and 0.5\(\%\), 1\(\%\), 2\(\%\) and 3\(\%\) on Actor dataset. Furthermore, we set the label rates to 0.1%, 0.2%, 0.3%, 0.4% and 0.5% on the ACM dataset and 0.5%, 5%, 10% and 15% on the Chameleon dataset and then conduct experiments. The mean classification accuracy (ACC) on test nodes is reported after running our method over specified data splits twenty times. The number of attention heads is set to 8. In MHMOGAT based on different orders of multi-head attention, the number of different order n is selected among \(\left\{ 1,2,3 \right\} \). In MHMOGAT based on cross-order multi-head attention, the number of different order n is selected among \(\left\{ 1,2,...,6 \right\} \).

5.2.3 Results

The semi-supervised node classification results of the baseline methods and two variations of MHMOGAT under optimal hyperparameters n are reported in Tables 2, 3, 4 and 5. Specifically, MHMOGAT-1 indicates MHMOGAT with cross-order multi-head attention and MHMOGAT-2 indicates MHMOGAT with multi-head different orders attention. We obtain the following observations:

In comparison to all the baseline methods, MHMOGAT-1 and MHMOGAT-2 achieve superior performance across various label rates on the six datasets. The GRAND model outperforms our model at most label rates on Citeseer and Actor datasets, but underperforms on Cora, Pubmed and Chameleon datasets. Furthermore, our model’s performance is almost in the top two at each label rate across all datasets. The GCN model shows low accuracy because it only employs a uniform neighbor aggregation strategy without an attention mechanism. Although GAT incorporates an attention mechanism, its performance is limited as it only captures information from first-order neighbors and is thus potentially missing important information from distant nodes. In contrast, through unique combinations of multi-head attention and varying order adjacency matrices, our models exhibit excellent node classification capabilities.

The proposed method shows excellent performance even at low label rates. For example, at a label rate of 0.5%, the classification accuracy of GCN and GCNII on the Cora dataset is only about 48% and 50%, but the accuracy of MHMOGAT-1 reaches 61%. The accuracy of MHMOGAT-2 is approximately 60%, exceeding the performance of other methods. Despite the extremely low label rate of 0.03% on the Pubmed dataset, both MHMOGAT-1 and MHMOGAT-2 still achieve classification accuracy of 65% and 64%, respectively, surpassing other methods.

Figure 3 illustrates the impact of high-order GATs and MHMO on a graph with bottleneck issues. Initially, there were only two paths between the left and right clusters, leading to an over-squashing problem. High-order GAT [28] introduces high-order information, which increases paths between clusters homogeneously and effectively curbs the over-squashing issue. In contrast, the proposed MHMO framework adds heterogeneous paths (2 attention heads for \(1^{\text {st}}\) order and 1 attention head for \(2^{\text {nd}}\) order) and designs a novel attention mechanism to assign weights to each path, thereby enhancing the model’s expressiveness and reducing potential over-smoothing issues.

5.3 Ablation study

To understand the impacts of the components of MHMOGAT on its performance, we conduct an ablation study in this section. Here, we consider two variants of our model:

-

(1) MHMOGAT-1-SO: MHMOGAT-1 uses a single order adjacency matrix (\(\left\{ 1,2,...,6 \right\} \)) for training, instead of different order matrices. The best result among the single order setups is reported.

-

(2) MHMOGAT-2-SO: MHMOGAT-2 uses a single order adjacency matrix (\(\left\{ 1,2,...,6 \right\} \)) for training, instead of different order matrices. The best result among the single order setups is reported.

Figure 4 illustrates the comparison results of MHMOGAT and its variants. MHMOGAT-1-SO and MHMOGAT-2-SO all perform worse than the proposed MHMOGAT-1 and MHMOGAT-2 models on the six datasets. This is because the two single-order variants cannot capture the rich relation information in the graph, while MHMOGAT can capture multi-order neighbor information using multi-order adjacency matrices and thus achieve significant performance. In addition, by utilizing multi-order adjacency matrices, hierarchical feature representation of nodes is achieved, which adapts to more complex graph structures and integrates information at multiple levels, thereby enhancing the model’s performance.

5.4 Parameter sensitivity

In MHMOGAT, the hyperparameter n, which determines the number of adjacency matrices of different orders, can influence the model performance significantly. Therefore, we conducted an analysis to examine its sensitivity.

The 2nd to the 6th high-order matrices are considered in MHMOGAT-1 and the results are presented in Table 3. We found that MHMOGAT-1 with 2nd and 3rd-order matrices provides comparably satisfactory performance on Cora, Citeseer, Pubmed, ACM and Chameleon. This means that it is suitable to incorporate low-order relations to enhance the effectiveness of GAT on these datasets. In contrast, on the Actors dataset, MHMOGAT-1 with 3rd-order and 4th-order performs better. This indicates that high-order information is crucial on the Actors dataset. However, it is found that further increasing the order of matrices results in the decline of classification performance.

In MHMOGAT-2, the number of different orders, denoted as n, is selected from the set \(\left\{ 1,2,3 \right\} \). We combine these three adjacency matrices and then place the resulting eight combinations into the eight attention heads sequentially. The combined results are shown in Table 4.

Specifically, ’adj’, ’adj2’, and ’adj3’ indicate the first-order, second-order, and third-order adjacency matrices, respectively. For example, combination 1 consists of 4 first-order adjacency matrices and 4 second-order adjacency matrices. Then, we conduct experiments on six datasets, and the results are as shown in Table 5.

The performance of MHMOGAT-2 is more complex, which is related to the combination of two hyperparameters as shown in Table 4. It is found that MHMOGAT-2 with hyperparameters of combination 1 and combination 2 performs well on the Cora, Citeseer, Pubmed, ACM, and Chameleon datasets. This is because under these settings, the model can effectively learn at various scales and capture relationships within the graph more accurately. On the Actors dataset, using hyperparameters of combination 5 performs better because the nodes in the Actors dataset are more sensitive to high-order information.

6 Conclusions and future work

In the realm of Graph Neural Networks (GNNs), significant limitations such as over-smoothing and over-squashing have been observed. To address these deficiencies, this work introduces the Multi-Head Multi-Order Graph Attention Network (MHMOGAT) architecture. By leveraging multi-head attention mechanisms, over-smoothing is mitigated, while the incorporation of multi-order adjacency matrices tackles over-squashing arising from bottlenecks in the graph data. Furthermore, Bayesian optimization is employed to identify optimal hyperparameter combinations tailored to different datasets. The proposed MHMOGAT framework offers two distinct implementations, each combining multi-head attention with adjacency matrices of varying orders. Through extensive experimentation across six datasets, our approach demonstrates state-of-the-art performance and shows effectiveness in handling large and complex graphs with low label rates.

In future studies we plan to improve this work in three ways: 1) validate our model using more diverse datasets; 2) compare it against state-of-the-art deep GCN models; and finally 3) test the effectiveness of our model when applied to regression problems.

Data Availability

The authors do not have permission to share data.

References

Kang Z, Peng C, Cheng Q, Liu X, Peng X, Xu Z, Tian L (2021) Structured graph learning for clustering and semi-supervised classification. Pattern Recognit 110:107627

Maltseva D, Batagelj V (2021) Journals publishing social network analysis. Scientometrics 126(4):3593–3620

Sun Q, Wei X, Yang X (2024) Graphsage with deep reinforcement learning for financial portfolio optimization. Expert Syst Appl 238:122027122027

Wang X, Yang X, Wang P, Yu H, Xu T (2023) Ssgcn: a sampling sequential guided graph convolutional network. Int J Mach Learn Cybern 1–16

Guo Q, Yang X, Zhang F, Xu T (2024) Perturbation-augmented graph convolutional networks: A graph contrastive learning architecture for effective node classification tasks. Eng Appl Artif Intell 129:107616

Sun Q, Wei X, Yang X (2024) Graphsage with deep reinforcement learning for financial portfolio optimization. Expert Syst Appl 238:122027

Zhou Z-H, Zhan D-C, Yang Q (2007) Semi-supervised learning with very few labeled training examples. In: AAAI, vol 7, pp 675–680

Li Y, Yin J, Chen L (2023) Informative pseudo-labeling for graph neural networks with few labels. Data Min Knowl Discov 37(1):228–254

Van Engelen JE, Hoos HH (2020) A survey on semi-supervised learning. Mach Learn 109(2):373–440

Gao C, Zhou J, Miao D, Wen J, Yue X (2021) Three-way decision with co-training for partially labeled data. Inf Sci 544:500–518

Kim D, Seo D, Cho S, Kang P (2019) Multi-co-training for document classification using various document representations: Tf-idf, lda, and doc2vec. Inf Sci 477:15–29

Jordan MI, Mitchell TM (2015) Machine learning: Trends, perspectives, and prospects. Science 349(6245):255–260

Cozman FG, Cohen I, Cirelo M (2002) Unlabeled data can degrade classification performance of generative classifiers. In: Flairs conference, pp 327–331

Kingma DP, Mohamed S, Jimenez Rezende D, Welling M (2014) Semi-supervised learning with deep generative models. Adv Neural Inf Process Syst 27

Goodfellow I, Pouget-Abadie J, Mirza M, Xu B, Warde-Farley D, Ozair S, Courville A, Bengio Y (2020) Generative adversarial networks. Commun ACM 63(11):139–144

Dong H, Yang L, Wang X (2021) Robust semi-supervised support vector machines with laplace kernel-induced correntropy loss functions. Appl Intell 51:819–833

Calma A, Reitmaier T, Sick B (2018) Semi-supervised active learning for support vector machines: A novel approach that exploits structure information in data. Inf Sci 456:13–33

Kipf TN, Welling M (2016) Semi-supervised classification with graph convolutional networks. arXiv:1609.02907

Saluja A, Awadalla HH, Toutanova K, Quirk C (2014) Graph-based semi-supervised learning of translation models from monolingual data. In: Proceedings of the 52nd annual meeting of the association for computational linguistics (Volume 1: Long Papers), pp 676–686

Wang J, Chen Q, Gong H (2020) Stmag: A spatial-temporal mixed attention graph-based convolution model for multi-data flow safety prediction. Inf Sci 525:16–36

Wang B, Sun Y, Chu Y, Min C, Yang Z, Lin H (2023) Local discriminative graph convolutional networks for text classification. Multimed Syst 1–11

Huang H, Song Y, Wu Y, Shi J, Xie X, Jin H (2020) Multitask representation learning with multiview graph convolutional networks. IEEE Trans Neural Netw Learn Syst 33(3):983–995

Dai M, Guo W, Feng X (2020) Over-smoothing algorithm and its application to gcn semi-supervised classification. In: Data science: 6th international conference of pioneering computer scientists, engineers and educators, ICPCSEE 2020, Taiyuan, China, September 18-21, 2020, Proceedings, Part II 6, pp 197–215. Springer

Yang R, Dai W, Li C, Zou J, Xiong H (2023) Tackling over-smoothing in graph convolutional networks with em-based joint topology optimization and node classification. IEEE Trans Signal Inf Process Netw 9:123–139

Oono K, Suzuki T (2020) Graph neural networks exponentially lose expressive power for node classification. In: International conference on learning representations

Topping J, Di Giovanni F, Chamberlain BP, Dong X, Bronstein MM (2022) Understanding over-squashing and bottlenecks on graphs via curvature. In: International conference on learning representations

Velickovic P, Cucurull G, Casanova A, Romero A, Lio P, Bengio Y et al (2017) Graph attention networks. Stat 1050(20):10–48550

He L, Bai L, Yang X, Du H, Liang J (2023) High-order graph attention network. Inf Sci 630:222–234

Rong Y, Huang W, Xu T, Huang J (2020) Dropedge: Towards deep graph convolutional networks on node classification. In: International conference on learning representations

Alon U, Yahav E (2021) On the bottleneck of graph neural networks and its practical implications. In: International conference on learning representations

Giraldo JH, Skianis K, Bouwmans T, Malliaros FD (2023) On the trade-off between over-smoothing and over-squashing in deep graph neural networks. In: Proceedings of the 32nd ACM international conference on information and knowledge management, pp 566–576

Wang J, Liang J, Cui J, Liang J (2021) Semi-supervised learning with mixed-order graph convolutional networks. Inf Sci 573:171–181

Abu-El-Haija S, Perozzi B, Kapoor A, Alipourfard N, Lerman K, Harutyunyan H, Ver Steeg G, Galstyan A (2019) Mixhop: Higher-order graph convolutional architectures via sparsified neighborhood mixing. In: International Conference on Machine Learning, pp 21–29. PMLR

Liu X, Xia G, Lei F, Zhang Y, Chang S (2021) Higher-order graph convolutional networks with multi-scale neighborhood pooling for semi-supervised node classification. IEEE Access 9:31268–31275

Liu X, Lei F, Xia G (2023) Mulstepnet: stronger multi-step graph convolutional networks via multi-power adjacency matrix combination. J Ambient Intell Humaniz Comput 14(2):1017–1026

Zhao L, Akoglu L (2020) Pairnorm: Tackling oversmoothing in gnns. In: International conference on learning representations

Zhou K, Huang X, Li Y, Zha D, Chen R, Hu X (2020) Towards deeper graph neural networks with differentiable group normalization. Adv Neural Inf Process Syst 33:4917–4928

Chien E, Peng J, Li P, Milenkovic O (2021) Adaptive universal generalized pagerank graph neural network. In: International conference on learning representations

Chai Z, Zhang T, Wu L, Han K, Hu X, Huang X, Yang Y (2023) Graphllm: Boosting graph reasoning ability of large language model. arXiv:2310.05845

Tang J, Yang Y, Wei W, Shi L, Su L, Cheng S, Yin D, Huang C (2023) Graphgpt: Graph instruction tuning for large language models. arXiv:2310.13023

Wang X, Ji H, Shi C, Wang B, Ye Y, Cui P, Yu PS (2019) Heterogeneous graph attention network. In: The world wide web conference, pp 2022–2032

Yu R, Wang L, Xin Y, Qian J, Dong, Y (2023) A gated graph attention network based on dual graph convolution for node embedding. Appl Intell, 1–14

Chen J, Fang C, Zhang X (2023) Global attention-based graph neural networks for node classification. Neural Process Lett 55(4):4127–4150

Ye Y, Ji S (2021) Sparse graph attention networks. IEEE Trans Knowl Data Eng 35(1):905–916

Krogh A, Vedelsby J (1994) Neural network ensembles, cross validation, and active learning. Adv Neural Inf Process Syst 7

Chen M, Wei Z, Huang Z, Ding B, Li Y (2020) Simple and deep graph convolutional networks. In: International conference on machine learning, pp 1725–1735. PMLR

Wang H, Zhao M, Xie X, Li W, Guo M (2019) Knowledge graph convolutional networks for recommender systems. In: The world wide web conference, pp 3307–3313

Wu F, Souza A, Zhang T, Fifty C, Yu T, Weinberger K (2019) Simplifying graph convolutional networks. In: International conference on machine learning, pp 6861–6871. PMLR

Nt H, Maehara T (2019) Revisiting graph neural networks: All we have is low-pass filters. arXiv:1905.09550

Chamberlain B, Rowbottom J, Gorinova MI, Bronstein M, Webb S, Rossi E (2021) Grand: Graph neural diffusion. In: International conference on machine learning, pp 1407–1418. PMLR

Paszke A, Gross S, Massa F, Lerer A, Bradbury J, Chanan G, Killeen T, Lin Z, Gimelshein N, Antiga L et al (2019) Pytorch: An imperative style, high-performance deep learning library. Adv Neural Inf Process Syst 32

Kingma DP, Ba J (2014) Adam: A method for stochastic optimization. arXiv:1412.6980

Li Q, Han Z, Wu X-M (2018) Deeper insights into graph convolutional networks for semi-supervised learning. In: Proceedings of the AAAI conference on artificial intelligence, vol 32

Sun K, Lin Z, Zhu Z (2020) Multi-stage self-supervised learning for graph convolutional networks on graphs with few labeled nodes. In: Proceedings of the AAAI conference on artificial intelligence, vol 34, pp 5892–5899

Acknowledgements

This work is supported by National Natural Science Foundation of China (Nos. 62076111, 62006099).

Funding

This work is supported by National Natural Science Foundation of China (Nos. 62076111, 62006099).

Author information

Authors and Affiliations

Contributions

Jie Ben: Investigation,Resources,DataCuration,Writing - Review& Editing. Qiguo Sun: Conceptualization, Methodology, Formal analysis. Keyu Liu: Investigation,Writing - Original Draft,Writing - Review& Editing. Xibei Yang: Investigation, Project administration, Resources. Fengjun Zhang: Data Curation.

Corresponding author

Ethics declarations

Conflicts of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Ethical and informed consent

The data used in this study were sourced from public databases, which are openly accessible and do not contain any personally identifiable information. Therefore, the issue of informed consent does not arise. All data handling procedures adhered to ethical guidelines for research.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Ben, J., Sun, Q., Liu, K. et al. Multi-head multi-order graph attention networks. Appl Intell 54, 8092–8107 (2024). https://doi.org/10.1007/s10489-024-05601-z

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10489-024-05601-z