Abstract

The opposition-based differential evolution (ODE) cannot adaptively adjust the number of individuals partake opposition-based learning, which makes it difficult to solve complex optimization problems. In this manuscript, we present an innovative approach for the treatment of variable population ODE (SASODE) by leveraging on adaptive parameters. The core idea of SASODE is to assign a jumping rate to each individual in the population, which is the key parameter that determines whether an individual enters a subpopulation or not. The initial rate assignment relies on the empirical mean of a normal distribution. During the iterative process, the mean is adjusted adaptively by taking into account the historical information of the individuals retained from the preceding generation. At the same time, the variation of this mean directly lead to changing the jumping rate of individuals and thus to adjusting the subpopulation size. In addition, the constant c and the Lehmer mean together maintain a balance between exploration and exploitation of SASODE. Experimental results show that the algorithm ranks first in the Wilcoxon test on 61 benchmarks and three optimization problems in three dimensions. Then, we confirm that SASODE can achieve an accuracy of 96% or even higher on the feature selection problem. Therefore, SASODE outperforms the other state-of-the-art algorithms compared in terms of convergence rate and accuracy.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Nowadays, as the complexity of actual optimization problem increases, efficient and easy implementation algorithms are essential in solving these problems. Inspired by the various principles of nature, metaheuristic algorithms are widely used in various fields such as scheduling problems [1, 2], routing problems [3], data mining [4], feature selection [5, 6] and deep learning [7]. Based on different principles, the existing metaheuristic algorithms are divided into four categories [8,9,10]: Evolutionary computation, Swarm intelligence, Human-based algorithms and Physics-based algorithms. Swarm intelligence algorithms inspired by swarm behavior, and the collaborative effect of a population is greater than the sum of the effect of individuals. Evolutionary computation is derived from Darwinism, where algorithms are designed from an evolutionary perspective and perform genetic operators (crossover, mutation and selection) on the population to find global optimal. Evolutionary computation mainly include Genetic Algorithm (GA) [11] and Differential Evolution (DE) [12]. Algorithm such as Brain Storm Optimization (BSO) [13] is inspired by human social behavior. Sine Cosine Algorithm (SCA) [14] and Equilibrium Optimizer (EO) [15] are proposed based on the laws of natural physics.

DE is a classical evolutionary algorithm, it was proposed by Storn [12] and was inspired by the GA. The Differential Evolution (DE) algorithm is characterized by three fundamental parameters, namely the population size NP, the scaling factor F, and the crossover rate CR. The effectiveness of DE is contingent upon the utilization of three genetic operators and three crucial parameters. In [16], Li et al. introduced a variant of DE that integrates an elite preservation and mutation strategy. The utilization of elite preservation strengthens the exploitation capability while mutation strategies were employed to maintain a balance between exploration and exploitation (EE) [17]. Zeng et al. [18] introduced a selection operator in DE with a novel approach that intended to minimize the effect of stagnation. The three candidate vectors may survive to the next generation if the algorithm is in a state of stagnation. Rosic et al. [19] proposed a hybrid firefly algorithm for DE (AHFADE). The AHFADE has the capability of adaptively adjusting parameter settings in order to choose a suitable mutation operator that can ensure a stable balance between the processes of diversification and intensification. According to Deng et al. [20], an adaptive mechanism for dimensional adjustment based on DE was incorporated to address the issue of premature convergence or stagnation, this mechanism greatly reduces the impact of dimensionality on algorithm performance.

Opposition-based learning (OBL) was proposed by Tizhoosh to improve the probability of identifying superior individuals in a population [21, 22]. In 2008, Rahnamayan et al. [23] proposed a new OBL-based DE variant, ODE, which for the first time used OBL in the DE initialization phase to accelerate the convergence of DE. Choi et al. [24] proposed a fast and efficient stochastic OBL (BetaCOBL) to control the degree of OBL solution, but the excessive computational complexity made it difficult to solve cost-sensitive optimization problems, so a second generation method iBetaCOBL was generated based on this method, using a linear diversity time metric to reduce the computational cost, and experimental results showed that the complexity of the algorithm can be reduced from \(O(NP^{2} \cdot D)\) to \(O(NP \cdot D)\). iBetaCOBL-eig [25], a new version of iBetaCOBL, was developed in 2023, which improves DE from a dimensionality point of view by using multiple crossover operators based on eigenvectors, and similarly proved that the advantage of the algorithm performance. Of course, in addition to the combination with DE, OBL is now integrated with multiple approaches. Mohapatra and Mohapatra [26] combined OBL and random OBL (ROBL) with the Golden Jackal optimization algorithm, and statistical tests showed that the algorithm was optimal on benchmark functions and engineering optimization problems. Wang et al. [27] combined both OBL and the Q-learning with the heuristic algorithm, which not only improved the neighbourhood search capability of the algorithm, but also increased the probability of finding the optimal and greatly reduced the time cost.

However, research into OBL at this stage has shortcomings. First, the direct manipulation of the population in initialization phase or during iteration can ignore the diversity of individuals in the population. Second, as the algorithm iterates, the individuals that can produce the optimal solution will become more and more concentrated, and the population size for performing OBL operations theoretically needs to become smaller and smaller, which requires providing an adaptive subpopulation strategy to ensure the equilibrium of EE and find the optimal of the algorithm faster.

In the age of information, there has been a significant surge in the volume of data available for analysis, posing a formidable challenge for classification tasks due to the substantial increase in samples and features. Redundant features in the samples not only reduce the classification accuracy, but also increase the training time and computational complexity of the classification model [28], and the process of selecting a specific number of features for classification training is called data dimensionality reduction. There are two ways of data dimensionality reduction, one is feature extraction and the other is feature selection. The former is spatially scoped to produce a new dimensional mapping of features, and the latter is a reduction in the number of features to obtain a new feature subset [29]. As the complexity of the problem and the quantity of data dimensions augment, the number of features within the search space will exponentially expand, which makes it a challenging optimization problem. To solve this problem, most of the early studies used traditional greedy algorithms such as SFS and SBS to solve the problem, but as the difficulty of the problem increases, these methods tend to fall into local optimal and fail to find an effective and efficient solution. In recent years, heuristic algorithms have been widely used to solve it due to their powerful global search capability and robustness [30, 31]. There is also a lot of work on combining OBL with heuristic algorithms applied to feature selection [32, 33], but there is still a lot of room for development in the field of combining DE with OBL variants to jointly solve the feature selection problem. Therefore, in this paper, we improve the ODE and validate it on the feature selection problem.

Although there are many performance investigations for ODE, there are still some drawbacks in practice. According to our research, the traditional ODE may not obtain better performance because it ignores the individual differences.This paper presents an approach aimed at overcoming the constraints that characterize traditional OBL. The proposed strategy seeks to enhance OBL by shifting the focus from population-based changes to individual-based changes. The main principal contributions of this paper can be summarized as:

-

1.

A strategic proposal is being presented to enhance the utilization of the OBL population to their utmost potential by emphasizing the strengths of each individual member of the population.

-

2.

Adaptive parameter control is introduced in ODE to decide the size of the subpopulation according to the jumping rate of individuals and accelerate the convergence speed of evolution.

-

3.

The concept of subpopulation survived individuals and historical information is proposed to guide the direction of SASODE optimization search.

-

4.

Validation on several benchmarks and feature selection optimization problem and comparison with several competing algorithms.

The present article is organized as follows: The preliminary review of the DE and OBL is provided in Section 2. Section 3 presents the proposed SASODE. The experimental verification is discussed in detail in Section 4. The application of feature selection is demonstrated in Section 5. Lastly, the conclusion is drawn in Section 6.

2 Fundamentals

2.1 Differential evolution

As a population-based algorithm, the population undergoes three genetic operators (mutation, crossover and selection) in each iteration [12]. Here, the population is initialized as follow:

where \(x_{\min }^{j}\) is the lower boundary. \(x_{\max }^{j}\) is the upper boundary.

Then, DE employ mutation operator on the target vector. In SASODE, we use the most popular mutation DE/rand/1, it is defined as:

where the indices \(r_1\), \(r_2\), \(r_3\) are numbers randomly selected from [1, NP]. F is a positive parameter and scales the difference vector to control the size of search step.

After mutation, DE will perform the cross operator. CR is the crossover probability and the vectors \({\textbf {v}}_i\) and \({\textbf {x}}_i\) are crossed based on CR. The detail is outlined as follows:

Where the variable \(j_{rand}\) denotes an integer that is randomly chosen from the interval 1 to D, D is the dimension size.

The last step in each iteration of the DE is selection. A one-to-one selection between \({\textbf {x}}_i\) and \({\textbf {u}}_i\) takes place, guided by the fitness values. The selection approach can be elucidated as follows:

where fitness(x) denotes the fitness function.

2.2 Opposition-based learning

Assume the set \(P=\{x(1),x(2),...,x(D)\}\), where \(x(j)\in [a(j),b(j)]\), where \(j=1,2,...,D\). This set contains all points in a D-dimensional space. The set of opposite points can be defined as \(\breve{P}=\{\breve{x}(1),\breve{x}(2),...,\breve{x}(D)\}\). The specific formula is as follows.

3 Proposed algorithm

Based on the characteristics of OBL and the evolutionary process of ODE, the advantages of individuals in the population are maximized in the proposed SASODE. Furthermore, the NFL demonstrates that no single algorithm can solve all problems [34], various problems necessitate distinct parameter configurations, which can be effectively resolved through the utilization of a self-adaptive approach to parameter setting in the iterative process of the algorithm.

3.1 Motivations

In ODE, the population jump in each generation is contingent upon the jumping rate, and provided that the requisite jumping condition is fulfilled, all members of the population produce their opposite individuals. This is a population-based strategy, however its utilization for the opposite population shows weakness during the later stages of evolution. Therefore, how to use the characteristics of individual information plays a key role in enhancing algorithm performance.

The research of meta-heuristic algorithms places significant emphasis on the aspect of parameter control [35], which has been discussed in Section 1. The primary parameter in ODE is the jumping rate. To regulate the number of individuals participating in OBL, subpopulation and adaptive parameter control mechanisms have been proposed for the attainment of SASODE via data exchange.

3.2 Subpopulation-based strategy

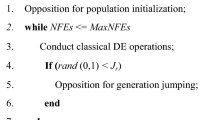

The most important difference between population-based and subpopulation-based is the number of opposite individuals in each generation. The detailed description is shown on Algorithm 1. From line 2 to line 5, we employ (1) to produce an initialized population that improves the diversity. From line 8 to line 10, the entire population participates in the opposite operation if a random number is less than Jr (Jr is generally taken as 0.3). Assume population size is 50, iteration is 100, the total number of individuals required to perform the opposition operation is approximately 0.3\(\times \)1000\(\times \)50. From line 16 to line 22, the subpopulation is crated by using the probability. Specifically, each individual is accompanied by a jumping rate and the assignment of jumping rate follows a Gaussian distribution which the mean is \(\mu _J\) and the variance is 0.1 (\(\mu _J\) is generally taken as 0.3). The condition for an individual to enter a subpopulation is whether its jumping rate is greater than a random number. In each iteration, if the population size is 50, approximately 0.3\(\times \)50 individuals perform the opposite operation. In this way, when the iteration reaches 1000, the total number of individuals involved in OBL is approximately 0.3\(\times \)50\(\times \)1000. Obviously, population-based and subpopulation-based are very different in the methods of selecting the opposite individuals, but the number of times the opposite individuals are calculated is approximately the same. It indicates that the proposed subpopulation strategy leads to no extra computational effort.

The approach of subpopulation is motivated by the dependence mechanism of individuals, which manages the mutation operators and parameters in accordance with the fitness value of each individual, with the ultimate objective of enhancing the convergence and accuracy of DE [36]. The purpose of this paper is to solve complex single-objective optimization problems, and how to select suitable individuals to create subpopulations is the most important concern at present. In [37], subpopulations are created according to the size of fitness value. In [38], NBC uses a neighborhood subpopulation strategy to obtain clustering centers. To embed parameter control into the subpopulation strategy, SASODE creates subpopulations based on the size of individual jumping rate. The outlined steps are specified as follows:

-

1.

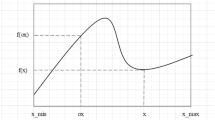

\(P=\{{\textbf {x}}_i|i=1,2,...,NP\}\) is the initialized population. The jumping rate corresponding to each individual \( {\textbf {Jr}}=\{j_1, j_2,...,j_{NP}\}\) is generated according to the Gaussian distribution. More detailed description of this step is shown in Fig. 1.

-

2.

A random number \(r_{i}\) between [0, 1] for each individual is generated according to the uniform distribution, and each random number with the jumping rate \(j_{i}\) is compared. If the former is smaller than the latter, the individual \({\textbf {x}}_i\) is a member of the subpopulation SP. The individuals of the subpopulation are represented by different colored symbols in Fig. 2.

-

3.

Create the opposite subpopulation OSP according to (5), combine the original population P with OSP. The surviving individuals are recorded as green yellow circles in Fig. 3, and select the top NP individuals with the best fitness into the next generation. \(S_{J}\) represents the jumping rate of individuals retained in the OSP and serves as a crucial parameter for regulating subpopulation size. \(S_{J}\) is a significant parameter that enables the adjustment of subpopulation size, and its values can be modified based on historical data from the preceding generation, it details in Section 3.3.

3.3 Self-adaptive parameter control

The jump rate of OBL is a random number between 0 and 1, but the jumping rate based on subpopulation is a vector. The vector \( {\textbf {Jr}}=\{j_1, j_2,...,j_{NP}\}\) is generated by a Gaussian distribution, and the mean value of \(\mu _J\) determines the location of the distribution of the jumping rate \(j_{i}\) for each individual. The larger the \(\mu _J\), the larger the value of \(j_{i}\), and the greater the probability that an individual corresponding to \(j_{i}\) will enter the subpopulation at that time. It can be seen that \(\mu _J\) can control the size of the subpopulation. In the late evolutionary stage, the parameter control mechanism determines the balance of algorithm about EE.

According to the previous analysis, it is clear that there is a corresponding relationship between the size of subpopulations and \(\mu _J\). The literature [39] found that an increase in convergence rate was associated with a reduction in population diversity. To attain a more equitable equilibrium concerning diversity and convergence rate, Zhang et al. augmented the arithmetic mean employed for adaptive parameter computation by integrating the Lehmer mean paradigm [40]. The difference between the two is that the calculated value of the Lehmer is larger than the value of the arithmetic mean when the variables have the same value. The SASODE algorithm also extends this method. The specific calculation formula is as follows:

where n represents the size of \(S_{J}\), \(x_{i},i=1,2,...,n\) are all the elements of \(S_{J}\) in each generation.

During the iteration, the size of \(j_{i}\) directly determines whether the individual performs the opposite operation or not. As mentioned before, the jumping rate \(j_{i}\) of an individual is generated by Gaussian random numbers, and a Gaussian distribution with mean \(\mu _{J}\) and variance 0.1 can generate the jumping rate randomly and without outliers according to the location parameter \(\mu _{J}\), as shown in the formula for (7). The same operation as SASODE can also be found in the algorithms JADE [40], SHADE [41], LSHADE [42] and so on.

In the above formula, the initial \(\mu _{J}\) is 0.3, which is updated in the evolutionary process using the following formula [23]:

where \(S_{J}\) indicates the set of individual jumping rate \(j_{i}\) of surviving individuals in the opposite subpopulation OSP. c is a positive number between 0 and 1 that makes a linear combination of \(\mu _{J}\) and \(\textrm{Lehmer}(S_{J})\) to reach an equilibrium state.

The (8) consists of two parts, \(\mu _{J}^{G}\) and \(\textrm{Lehmer}(S_{J})\). The former is the historical information left by the previous iterations, which represents the global information in the previous generations. The latter is the surviving individuals in the opposite population, and these surviving individuals represent the local information that survived the current generation to the next iteration. In order to avoid that \(\mu _{J}^{G+1}\)can have a violent oscillation, which causes the individuals performing the backward learning to lose control, making \(\mu _{J}^{G+1}\) infinitely larger or smaller affecting the performance of the algorithm. The introduction of a constant c in this formula and assigning computational weights to the two components enables a stable state to be reached for both global and local information. Algorithm 2 is the pseudo code of SASODE.

4 Experimental verification

To further validate SASODE’s performance, we compared different test functions, unimodal and multimodal functions, verified the validation algorithm on the CEC2017 optimization problem, and tested three engineering problems.

4.1 Experimental on unimodal and multimodal test functions

To validate the effectiveness of SASODE, a total of 32 widely-recognized benchmark functions have been meticulously chosen for the purpose of conducting a comprehensive numerical experiment. Specifically, 15 unimodal functions and 17 multimodal functions pertaining to the optimization of real-valued data sets have been carefully selected, as referenced in works by Yao et al. [43] and Askari et al. [44]. It is noteworthy that the aforementioned benchmark functions, i.e., \(f_{1}-f_{15}\) and \(f_{16}-f_{32}\) respectively represent unimodal and multimodal functions, the specific details regarding the aforementioned benchmark functions are presented in Table 1.

4.1.1 Parameter setting

This paper aims to compare SASODE with six swarm intelligence algorithms, namely GWO [45], WOA [46], MFO [47], SCA [14], SSA [48], and HBO [44], for the aforementioned functions. To ensure the reliability of the algorithmic results, the population size and maximum number of iterations of the comparison algorithm have been fixed at 50 and 1000, respectively. However, the rest of the parameter settings have been retained from its original research. All experiments were done on the Windows 10 operating system, MATLAB R2018b.

4.1.2 Comparison metrics

The Wilcoxon test for pairwise comparison was utilized to compare the performance of SASODE and the comparison algorithm. This statistical analysis was conducted with a significant level of \(\alpha =0.05\) according to [49]. To denote the correlation between the algorithm under consideration and the comparative algorithms, we employed the symbols \(+\), −, and \(=\). A detailed explanation of the specific application of these symbols is provided below.

-

1.

\(+\): The solutions of SASODE performs better than the comparison algorithm.

-

2.

\(=\): The solutions of SASODE performs approximately than the comparison algorithm.

-

3.

−: The solutions of SASODE performs worse than the comparison algorithm.

4.1.3 The results of unimodal functions

This paper sets four dimensions of 10, 30, 50 and 100 for experimental comparison, and the 30D results are displayed visually by iteration curves.

From the results of testing functions f1-f15 in Tables 2, 3, and 4, SASODE has the best performance on 100D, especially on functions f4, f5, f6, f8, f9, f11, f12, f13, and f14, with better results for both mean and standard deviation than other algorithms. The SASODE algorithm may have certain advantages in solving real-world high-dimensional parameter optimization problems, industrial engineering and manufacturing optimization problems, and the algorithm is relatively stable. Making full use of the characteristics and advantages of each individual in the optimization process and deciding the surviving individuals based on the size of their jumping rate is a gap area in the existing ODE research.

In the results of 10D and 50D, the comparison algorithms perform best on f6, f8, f9, f12, and f4, f5, f6, f8, f13, respectively. The SSA, SCA, and MFO algorithms do not have an advantage in unimodal functions, except for the algorithm WOA, which is competitive with SASODE. The analysis depicted in Fig. 4 indicates that SASODE exhibits a superior convergence speed and accuracy relative to the other algorithms assessed over a period of 1000 iterations. For f12, although the convergence accuracy is slightly worse than WOA, it has a significant advantage compared with the accuracy of the remaining five algorithms. Based on the characteristics of the unimodal function, we conclude that the SASODE algorithm exhibits remarkable exploitation capabilities, enhances convergence speed, and demonstrates robustness as evidenced by the values of the standard deviation.

4.1.4 The results of multimodal functions

Multimodal functions possess multiple local optima, which distinguishes them from unimodal functions. Moreover, the count of local optima rises exponentially as the dimensionality increases. The resulting multimodal function serves as a reliable metric to judge the exploration capacity of the algorithm. The results of SASODE on the multimodal functions f16-f32 are given in Tables 2, 3, and 4.

Based on the results, it is evident that SASODE exhibits superior performance in multimodal functions as compared to unimodal ones. Out of the 17 functions, the algorithm has secured the first position with regard to both the mean and standard deviation values for f16, f28, f23, f24, f26, and f27. In addition, the SASODE function performs better on more than half of the functions, and in the 10D, the algorithm performs best on f18 and f32 in terms of standard deviation, ranking first on nine functions. In the 50D results, the algorithm has the best standard deviation on f25 and the best on 11 functions. And the 100D results conclude that SASODE performs better on f27, ranking highest on 10 functions. All the above experimental results can demonstrate the advantages of the adaptive parameter mechanism proposed in SASODE. As the iteration proceeds, the jumping rate of each individual in the subpopulation is linearly assigned according to the jumping rate of the surviving individuals in the previous generation and the size of the mean of the Gaussian distribution. This allows historical information to be used directly to guide the search direction, and also allows the algorithm to jump out of the local optimum to search in the global domain as the jumping rate changes. As the complexity of the optimization problem increases, this algorithm based on the adaptive mechanism can be applied to as many different industrial and engineering optimization problems as possible, such as the rocket engine design problem [50], which also shows that SASODE has the ideal exploratory ability and advantages in multimodal problems.

4.1.5 Statistical analysis

This section presents the outcomes of the pair-wise Wilcoxon test and multiple-wise Friedman test conducted on SASODE and other algorithms. SASODE is selected as the control algorithm. The significance outcomes of various algorithms on distinct benchmark functions are illustrated in Table 5, which showcases the results of the Wilcoxon test.

-

1.

For unimodal functions f1-f15, SASODE clearly finds better solutions on more than half of the functions, and the algorithm in this paper is significant on all 15 functions compared to SSA and SCA. Compared with HBO and MFO, SASODE has a greater advantage on f11 and f13, respectively. Compared with WOA and GWO, f5 and f7 are dominant, respectively. In other words, SASODE performs much better on single-peaked functions than all other algorithms.

-

2.

For multimodal functions f16-f32, SASODE finds better solutions compared to all six comparison algorithms. For example, compared to WOA, GWO, SSA, SCA and MFO, the results are significant on f12, f15, f17 and f16, respectively. Although, SASODE does not perform significantly on six functions compared to HBO, the algorithm employed in this paper continues to exhibit exceptional performance when compared to other algorithms. Hence, SASODE surpasses other algorithms on multimodal functions.

The comparison of the convergence curves of SASODE with benchmark functions on 30D has been conducted in conjunction with WOA, GWO, HBO, SSA, SCA, and MFO, as illustrated in Fig. 4. The horizontal axis of Fig. 4 represents the number of iterations, while the vertical axis represents the fitness. Each algorithm was independently run 30 times with a fixed number of iterations of 1000. In Fig. 4, (a)-(f) denotes the convergence curves of unimodal functions, and (g)-(l) denotes the convergence curves of multimodal functions. Based on the information presented in Fig. 4, it can be inferred that the following conclusions can be drawn:

-

1.

Based on the iteration curves of the unimodal functions, it can be observed that the convergence accuracy of f1, f4, f8, and f14 is the best among the six algorithms, but on the f12, the accuracy of the SASODE algorithm is slightly worse than that of WOA. On f10, despite having equivalent convergence speed to GWO and WOA, the SASODE outperforms both in terms of convergence precision.

-

2.

Based on the iteration curves of the multimodal functions, the algorithm is optimal on f16, f18, f21, f22 and f30, both in terms of convergence speed and convergence accuracy. For f23, SASODE did not converge during the first 1000 iterations, but the convergence accuracy was significantly better than the remaining five comparison algorithms.

-

3.

To conclude, SASODE exhibits commendable performance on unimodal and multimodal functions for a dimensionality of 30. The outcomes of multimodal functions are superior to those of unimodal, as demonstrated by the numerical outcomes pertaining to residual dimensions.

4.1.6 Scalability analysis

To examine the impact of dimensionality on the performance of the algorithm, this section conducts experiments on dimensions of 10, 30, 50, and 100 respectively, and subsequently compares the algorithms applied to all test functions. The Wilcoxon test and Friedman test results are included in Table 6 and Fig. 5 for analysis. Based on these findings, it can be concluded that:

-

1.

From the findings presented in Table 6, it is evident that SASODE ranks first in essentially all dimensions, but the different dimensions can be divided in detail.

-

2.

Analyzing the whole experimental results vertically, in the case of 10D, WOA and HBO are the second echelon except the SASODE algorithm, SCA and MFO are ranked after the above two algorithms, and SSA is the worst performing algorithm. In the 30D, 50D and 100D cases, WOA is one of the best competitive algorithms for SASODE, except for GWO and HBO, followed by SSA and MFO. SCA is the worst algorithm in all three cases.

-

3.

The results of the horizontal comparison show that the performance of the algorithm gradually improves as the dimensionality increases and the difficulty in finding the optimal function increases. From this, we can infer that SASODE ranks first among the six algorithms in terms of the overall level of the optimization function in high dimensions.

-

4.

The results of the Friedman rank on various dimensions demonstrate that SASODE has a slight edge over HBO, and outperforms SSA, SCA, and MFO in 10D. Moreover, the same situation can be observed in 30D and 50D. The biggest difference is that other algorithms are slightly inferior to SASODE. However, SASODE exhibits more statistical significance than other algorithms in 100D, except for the WOA. It indicates that the performance gains of SASODE are heightened as the dimensionality increases.

4.2 Experiments on CEC 2017

To understand the performance of SASODE on different benchmark test suites. The recently proposed CEC 2017 test suite includes unimodal, multimodal, hybrid, and composite functions that are tested in this section [51]. The present SASODE algorithm is evaluated against various leading algorithms in the field including WOA, GWO, HBO, SSA, SCA, MFO, and HHO. These algorithms are run independently with a maximum iteration count of 1000, and the process is repeated 30 times. In order to ensure equitable comparison, fitness evaluations for each algorithm are capped at 50000, and population size is fixed at 50.

The outcomes pertaining to our study are exhibited in Table 7. As delineated in the table, SASODE manifests superior performance for unimodal functions (f1, f3). Furthermore, with respect to most multimodal functions (f4-f10), SASODE performs better compared to other algorithms, except for f5, f7 and f8. It should be noted that the good exploration behavior of SASODE on multimodal functions is due to the Lehmer mean and variable subpopulation size strategies. For the mixed functions (f11-f20), SASODE ranks first among all tested functions. The aforementioned outcomes suggest that SASODE attains equilibrium between EE. In terms of the amalgamated functions (f21-f30), SASODE demonstrated superior outcomes on more than fifty percent of the functions as compared to its counterparts. To sum up, SASODE demonstrated superior performance relative to its rivals in CEC 2017.

4.3 The application in engineering optimization problems

The effectiveness of the proposed algorithm is verified by three real-world optimization problems such as Parameter estimation for frequency-modulated sound waves (FM), Lennard-Jones Potential Problem (LJ) and Spread Spectrum Radar Polly Phase Code Design (SPRP) [52]. The detailed definitions and mathematical models of these problems can be found in Appendix A. Moreover, several state-of-the-art metaheuristics are utilized as the comparison algorithms, such as EO, MFO, RUN [53], GWO, HBO, HHO, LFD [54], SCA, SSA and WOA. For the specific parameter settings of each algorithm, see the corresponding original work. The comparison algorithms have undergone fitness evaluations totaling 150000, and each algorithm’s population size is set at 50. This results in 3000 iterations for these algorithms. It is worth noting that the effectiveness of the mutation operator in DE is highly sensitive to the population size. Therefore, SASODE utilizes a population size of 150. To maintain fairness, the iteration about SASODE is limited to 1000 despite undergoing the same number of fitness evaluations as the comparison algorithm. To ensure optimal readability of the table, the results will be presented across two tables, namely Tables 8 and 9.

Tables 8 and 9 show the experimental results of FM. The results depict that SASODE surpasses over fifty percent of the evaluated algorithms. The statistical values of median and mean illustrate that SASODE accomplishes the optimal value more frequently compared to the other algorithms. The reason is that the adaptive parameter strategy can better adapt to the evolutionary process of this complex problem and make the algorithm reach the optimum more smoothly.

As shown in Tables 8 and 9, SASODE and some other algorithms, such as EO, SSA, HBO, HHO and GWO, rank first in the design of the SPRP. The results indicate that the problem is relatively easy to solve. There are many algorithms that can be optimal, but some are still not stable enough (such as LFD, MFO, RUN, SCA, and WOA). In general, SASODE is able to maintain good stability on this problem.

5 Feature selection optimization

To further corroborate the efficacy of SASODE in addressing practical issues, we have applied the SASODE towards feature selection problem, which has been proven to belong to an expensive optimization problem class. As a result, we will begin with introducing the datasets employed by the algorithm, followed by a parametric analysis of the comparison algorithm, and conclude by analyzing experimental results.

5.1 Datasets

The datasets utilized in this stage of the experiment are displayed in Table 10 and encompass critical information like dataset name, number of attributes, and samples. It is worth mentioning that this paper selects eight real datasets sourced from the UCI Machine Learning Laboratory [55] in addition to the Permission dataset for Android malicious application classification extracted from the literature [56]. From Table 10 we can see that the number of attributes in the datasets are all below five hundred, but the number of samples varies from several hundred to several thousand, and the attributes are not proportional to the number of samples, and these characteristics make the datasets a challenging task in classification.

5.2 Experimental settings

Due to the unbalanced number of samples in the above datasets,the experimental process employs K-fold cross-validation methodology. Specifically, the value of k is established as 10, and dividing the dataset into ten copies of the same size, nine of which are used for training data in the feature selection process and one for testing data. The experimental classifier is KNN classifier.

5.3 Comparison of algorithm parameter settings and evaluation criteria

The comparison algorithms used in this section include the differential evolution algorithm with opposition-based learning ODE, and variants of ODE, CODE [57], COODE [58], and QRODE [59], as well as the emerging algorithms HHO, JAYA [60], and WOA algorithms proposed in recent years for solving feature selection. To ensure fairer experimental results, we used the control variables method, and all algorithms were iterated 100 times and run 20 times independently. Table 11 shows the parameter settings of the algorithm.

Feature selection is a class of discrete optimization problems, the optimization goal is to improve the accuracy of the classifier to reduce the number of features selected [61]. Give a dataset with N features, each individual in the population is a binary vector\( X =\left( x_1,x_2,....x_N \right) \), each bit \(x_d\in \left\{ 0,1 \right\} \), \(d=1,2,.....,N\), where 1 denotes the dth feature is selected,and 0 denotes it is not selected. In this paper, two different evaluation functions are used. One is the classifier accuracy rate and the other is the classifier error rate. Equations (9) and (10) are the calculation formula.

5.4 Comparison of experimental results

The data displayed in Table 12 represents the mean standard deviation computations using (9) as the evaluation metric. The average ranking is presented in the last row of the table, while the best experimental results are highlighted in bold in the table. In the analysis of the experimental results, this paper divides the competing algorithms into two categories. The first part is the analysis and comparison between SASODE and different variants of differential evolution algorithms, and the second part is the comparison between SASODE and other algorithms.

5.4.1 Comparison with ODE and its variant

By analyzing the data in Table 12, we can understand that SASODE has the highest accuracy on Ionosphere, Credit6000, Permission, and Spambase, which is because the algorithm can effectively avoid the best individuals in the population from performing OBL. The traditional OBL ordinary differential equation algorithm is OBL with less than jumping probability for all individuals in the population, which is tantamount to destabilizing the population, making the local best individuals in the population eliminated with a certain probability of being the individuals with poor fitness values. We can see that SASODE has a lower standard deviation than the other improved DE algorithms on the remaining eight datasets, except for ODE. According to the iteration step of the algorithm, it is easy to find that SASODE selects individuals to join the subpopulation by comparing each individual with a Gaussian random number and that the algorithm is significantly more random compared to the traditional ODE, so the algorithm is more stable during the iteration. The range of the difference was found to be 0.001, and since the algorithms are all randomized, the small differences in stability between the algorithms are negligible while ensuring accuracy. In addition to the comparison of mean variances between algorithms, the ranking analysis shows that among all algorithms, SASODE ranks first, followed by ODE, CODE, QRODE tied for third, and COODE ranked fourth.

5.4.2 Comparison with other algorithms

In addition to the comparison with ODE variants, SASODE has more obvious advantages in accuracy compared with HHO, JAYA and WOA algorithms, especially WOA algorithm. The results of the Wilcoxon rank sum test are shown in Table 13, from the table it can be inferred that SASODE displays superior performance in comparison to WOA across all nine data sets. Even though the WOA algorithm has a superior standard deviation on Ionosphere and Credit6000 compared to SASODE, its accuracy has been underwhelming when compared to the algorithm introduced in this paper. Secondly, according to the results of the rank sum test, it can be inferred that JAYA and SASODE algorithms are similar. However, when considering both the mean and standard deviation, JAYA algorithm was found to be notably inferior to SASODE algorithm. This indicates that SASODE outperforms HHO, JAYA, and WOA algorithms.

5.4.3 Convergence behavior

In addition to comparing the results of different algorithms on different datasets according to (10), we visualize the algorithm iteration 100 with the evaluation function and the visualization results as in Fig. 6. It is easy to see by the iterative curves that the blue curve performs well on most of the datasets. SASODE converges the least on the Ionosphere, Sonar, Credit6000, Spambase, Waveform, and WDBC datasets, which means that the algorithm has the highest accuracy and the lowest error rate. However, a closer look also shows that SASODE can converge to better values, but not as fast as the other algorithms on the Sonar, Permission and Waveform datasets. This is due to the larger number of features in the Sonar dataset, the larger number of samples in the Permission and Waveform datasets, and SASODE focuses on the jumping rate of each individual in the population, which prevents it from simultaneously considering both the effectiveness and efficiency of the optimization in the early stage. Therefore, the performance of SASODE may not be very good in dealing with high-dimensional large-sample problems. But in the later stage, the update of the jumping rate is based on historical information and there is no need to find the optimal search direction, so as the number of iterations increases (after 60 generations), the algorithm gradually finds the optimal solution and converges.

5.4.4 Number of features and running time analysis

Table 14 displays the mean quantity of features that were chosen by various algorithms across a range of datasets, as well as the mean time taken by each algorithm to make these selections. It is evident from Table 14 that SASODE does not exhibit significant benefits with regard to the quantity of selected features and the execution time. This is because the algorithm assigns a jumping rate to each individual in the population during the iteration process, which theoretically increases the time cost and leads to a slowdown in the pre-optimization process, but this operation helps the algorithm to jump out of the local optimum to increase the probability of finding the globally optimal solution, which explains the fact that SASODE selects the smallest number of features on the waveform, but takes a much longer time to find the optimal solution than JAYA.

Combined with the in-depth analysis of the numerical results in Table 12, it is apparent that SASODE exhibits the most superior classification accuracy when the quantity of features is not considered. The features selected by WOA are few, but have the lowest accuracy rate. In other words, the features selected by WOA are not representative. Similarly, the same is true for JAYA. Upon further examination of the experimental results, it has been deduced that the variances between the quantities of features chosen by SASODE, WOA, and JAYA do not exceed three, the time taken for feature selection does not surpass 50%. Consequently, in instances where high accuracy is ensured, the difference between these two indicators may be disregarded. We can even boldly infer that the improved algorithm is applicable to smaller data sets and can guarantee high accuracy without consuming too much time. In conclusion, SASODE outperforms other comparison algorithms in terms of comprehensive performance.

6 Conclusions

In this paper, a novel evolutionary algorithm, termed SASODE, is proposed specifically for solving optimization problems. To distinguish population-based OBL in ODE, first, SASODE proposes opposite operation based on subpopulation, an idea that fully mobilizes the advantages of individuals and maximizes the utilization of individuals in OBL population. Second, an adaptive parameter control strategy is introduced in SASODE to determine the number of individuals eligible to perform OBL and to adjust the size of the subpopulation. Finally, the concept of surviving individuals in subpopulations based on historical information is proposed to guide the algorithm search in a more favourable direction for convergence. Experimental results show that SASODE outperforms state-of-the-art algorithms in recent years on different types of optimization problems, confirming the effectiveness and robustness of the new mechanism in SASODE.

In future research work, ODE still remains much room for further improvements, and several research directions can be recommended. The performance impact of subpopulation strategies in different opposition-based learning. The combination of different adaptive strategies and subpopulation strategies in DE can be further considered. SASODE is currently only used in single-objective optimization problems, and the performance on multi-objective optimization problems may be an exciting research work.

Availability of data and materials

Written informed consent for publication of this paper was obtained from all authors.

References

Gao D, Wang G-G, Pedrycz W (2020) Solving fuzzy job-shop scheduling problem using DE algorithm improved by a selection mechanism. IEEE Trans Fuzzy Syst 28(12):3265–3275. https://doi.org/10.1109/tfuzz.2020.3003506

Tirkolaee EB, Goli A, Weber G-W (2020) Fuzzy mathematical programming and self-adaptive artificial fish swarm algorithm for just-in-time energy-aware flow shop scheduling problem with outsourcing option. IEEE Trans Fuzzy Syst 28(11):2772–2783

Tirkolaee EB, Alinaghian M, Hosseinabadi AAR, Sasi MB, Sangaiah AK (2019) An improved ant colony optimization for the multi-trip capacitated arc routing problem. Comput Electr Eng 77:457–470

Alswaitti M, Albughdadi M, Isa NAM (2019) Variance-based differential evolution algorithm with an optional crossover for data clustering. Appl Soft Comput 80:1–17. https://doi.org/10.1016/j.asoc.2019.03.013

Zhang Y, Gong D-w, Gao X-z, Tian T, Sun X-y (2020) Binary differential evolution with self-learning for multi-objective feature selection. Inf Sci 507:67–85. https://doi.org/10.1016/j.ins.2019.08.040

Sharafi Y, Teshnehlab M (2021) Opposition-based binary competitive optimization algorithm using time-varying v-shape transfer function for feature selection. Neural Comput & Applic 33(24):17497–17533. https://doi.org/10.1007/s00521-021-06340-9

Elaziz MA, Dahou A, Abualigah L, Yu L, Alshinwan M, Khasawneh AM, Lu S (2021) Advanced metaheuristic optimization techniques in applications of deep neural networks: a review. Neural Comput & Applic 33(21):14079–14099. https://doi.org/10.1007/s00521-021-05960-5

Abualigah L, Yousri D, Elaziz MA, Ewees AA, Al-qaness MAA, Gandomi AH (2021) Aquila optimizer: a novel meta-heuristic optimization algorithm. Comput Ind Eng 157:107250. https://doi.org/10.1016/j.cie.2021.107250

Fausto F, Reyna-Orta A, Cuevas E, Andrade ÁG, Perez-Cisneros M (2019) From ants to whales: metaheuristics for all tastes. Artif Intell Rev 53(1):753–810. https://doi.org/10.1007/s10462-018-09676-2

Ser JD, Osaba E, Molina D, Yang X-S, Salcedo-Sanz S, Camacho D, Das S, Suganthan PN, Coello CAC, Herrera F (2019) Bio-inspired computation: where we stand and what’s next. Swarm Evol Comput 48:220–250. https://doi.org/10.1016/j.swevo.2019.04.008

Holland J (1975) Adaptation in natural and artificial systems: an introductory analysis with application to biology. Control Artif Intell

Storn R, Price K (1997) Differential evolution - a simple and efficient heuristic for global optimization over continuous spaces. J Glob Optim 11(4):341–359. https://doi.org/10.1023/a:1008202821328

Shi Y (2011) Brain storm optimization algorithm. In: Advances in swarm intelligence: second international conference, ICSI 2011, Chongqing, China, June 12-15, 2011, Proceedings, Part I 2, pp 303–309. Springer

Mirjalili S (2016) SCA: A sine cosine algorithm for solving optimization problems. Knowl-Based Syst 96:120–133. https://doi.org/10.1016/j.knosys.2015.12.022

Faramarzi A, Heidarinejad M, Stephens B, Mirjalili S (2020) Equilibrium optimizer: a novel optimization algorithm. Knowl-Based Syst 191:105190. https://doi.org/10.1016/j.knosys.2019.105190

Li Y, Wang S (2019) Differential evolution algorithm with elite archive and mutation strategies collaboration. Artif Intell Rev 53(6):4005–4050. https://doi.org/10.1007/s10462-019-09786-5

Vasant P, Zelinka I, Weber G-W (2019) Intelligent Computing & Optimization. Springer, ???

Zeng Z, Zhang M, Chen T, Hong Z (2021) A new selection operator for differential evolution algorithm. Knowl-Based Syst 226:107150. https://doi.org/10.1016/j.knosys.2021.107150

Rosić MB, Simić MI, Pejović PV (2021) An improved adaptive hybrid firefly differential evolution algorithm for passive target localization. Soft Comput 25(7):5559–5585. https://doi.org/10.1007/s00500-020-05554-8

Deng L-B, Li C-L, Sun G-J (2020) An adaptive dimension level adjustment framework for differential evolution. Knowl-Based Syst 206:106388. https://doi.org/10.1016/j.knosys.2020.106388

Tizhoosh HR (2005) Opposition-based learning: a new scheme for machine intelligence. In: International conference on computational intelligence for modelling, control and automation and international conference on intelligent agents, web technologies and internet commerce (CIMCA-IAWTIC’06). IEEE, vol 1, pp 695–701

Mahdavi S, Rahnamayan S, Deb K (2018) Opposition based learning: a literature review. Swarm Evol Comput 39:1–23. https://doi.org/10.1016/j.swevo.2017.09.010

Rahnamayan S, Tizhoosh HR, Salama MMA (2008) Opposition-based differential evolution. IEEE Trans Evol Comput 12(1):64–79. https://doi.org/10.1109/tevc.2007.894200

Choi TJ, Togelius J, Cheong Y-G (2021) A fast and efficient stochastic opposition-based learning for differential evolution in numerical optimization. Swarm Evol Comput 60:100768

Choi TJ (2023) A rotationally invariant stochastic opposition-based learning using a beta distribution in differential evolution. Expert Syst Appl 120658

Mohapatra S, Mohapatra P (2023) Fast random opposition-based learning golden jackal optimization algorithm. Knowl-Based Syst 110679

Wang Z, Huang L, Yang S, Li D, He D, Chan S (2023) A quasi-oppositional learning of updating quantum state and q-learning based on the dung beetle algorithm for global optimization. Alexandria Eng J 81:469–488

Li AD, Xue B, Zhang M (2021) Improved binary particle swarm optimization for feature selection with new initialization and search space reduction strategies. Appl Soft Comput 106(Mar.):107302. https://doi.org/10.1016/j.asoc.2021.107302

Zhang Y, Wang S, Phillips P, Ji G (2014) Binary pso with mutation operator for feature selection using decision tree applied to spam detection. Knowl Based Syst 64(jul.):22–31. https://doi.org/10.1016/j.knosys.2014.03.015

Nssibi M, Manita G, Korbaa O (2023) Advances in nature-inspired metaheuristic optimization for feature selection problem: a comprehensive survey. Comput Sci Rev 49:100559

Wang Y, Ran S, Wang G-G (2023) Role-oriented binary grey wolf optimizer using foraging-following and lévy flight for feature selection. Appl Math Model

Tubishat M, Idris N, Shuib L, Abushariah MA, Mirjalili S (2020) Improved salp swarm algorithm based on opposition based learning and novel local search algorithm for feature selection. Expert Syst Appl 145:113122. https://doi.org/10.1016/j.eswa.2019.113122

Hamidzadeh J et al (2021) Feature selection by using chaotic cuckoo optimization algorithm with levy flight, opposition-based learning and disruption operator. Soft Comput 25(4):2911–2933

Wolpert DH, Macready WG (1997) No free lunch theorems for optimization. IEEE Trans Evol Comput 1(1):67–82. https://doi.org/10.1109/4235.585893

Lacerda MGP, Araujo Pessoa LF, Lima Neto FB, Ludermir TB, Kuchen H (2021) A systematic literature review on general parameter control for evolutionary and swarm-based algorithms. Swarm Evol Comput 60:100777. https://doi.org/10.1016/j.swevo.2020.100777

Tang L, Dong Y, Liu J (2015) Differential evolution with an individual-dependent mechanism. IEEE Trans Evol Comput 19(4):560–574. https://doi.org/10.1109/tevc.2014.2360890

Cui L, Li G, Lin Q, Chen J, Lu N (2016) Adaptive differential evolution algorithm with novel mutation strategies in multiple sub-populations. Comput Oper Res 67:155–173. https://doi.org/10.1016/j.cor.2015.09.006

Lin X, Luo W, Xu P (2021) Differential evolution for multimodal optimization with species by nearest-better clustering. IEEE Trans Cybern 51(2):970–983. https://doi.org/10.1109/tcyb.2019.2907657

Črepinšek M, Liu S-H, Mernik M (2013) Exploration and exploitation in evolutionary algorithms. ACM Comput Surv 45(3):1–33. https://doi.org/10.1145/2480741.2480752

Zhang J, Sanderson AC (2009) JADE: Adaptive differential evolution with optional external archive. IEEE Trans Evol Comput 13(5):945–958. https://doi.org/10.1109/tevc.2009.2014613

Tanabe R, Fukunaga A (2013) Success-history based parameter adaptation for differential evolution. In: 2013 IEEE Congress on Evolutionary Computation, pp 71–78. IEEE

Tanabe R, Fukunaga AS (2014) Improving the search performance of SHADE using linear population size reduction. In: 2014 IEEE Congress on Evolutionary Computation (CEC). IEEE, ???. https://doi.org/10.1109/cec.2014.6900380

Yao X, Liu Y, Lin G (1999) Evolutionary programming made faster. IEEE Trans Evol Comput 3(2):82–102. https://doi.org/10.1109/4235.771163

Askari Q, Saeed M, Younas I (2020) Heap-based optimizer inspired by corporate rank hierarchy for global optimization. Expert Syst Appl 161:113702. https://doi.org/10.1016/j.eswa.2020.113702

Mirjalili S, Mirjalili SM, Lewis A (2014) Grey wolf optimizer. Adv Eng Softw 69:46–61. https://doi.org/10.1016/j.advengsoft.2013.12.007

Mirjalili S, Lewis A (2016) The whale optimization algorithm. Adv Eng Softw 95:51–67. https://doi.org/10.1016/j.advengsoft.2016.01.008

Mirjalili S (2015) Moth-flame optimization algorithm: a novel nature-inspired heuristic paradigm. Knowl-Based Syst 89:228–249. https://doi.org/10.1016/j.knosys.2015.07.006

Mirjalili S, Gandomi AH, Mirjalili SZ, Saremi S, Faris H, Mirjalili SM (2017) Salp swarm algorithm: A bio-inspired optimizer for engineering design problems. Adv Eng Softw 114:163–191. https://doi.org/10.1016/j.advengsoft.2017.07.002

Derrac J, García S, Molina D, Herrera F (2011) A practical tutorial on the use of nonparametric statistical tests as a methodology for comparing evolutionary and swarm intelligence algorithms. Swarm Evol Comput 1(1):3–18. https://doi.org/10.1016/j.swevo.2011.02.002

Kudo F, Yoshikawa T, Furuhashi T (2011) A study on analysis of design variables in pareto solutions for conceptual design optimization problem of hybrid rocket engine. In: 2011 IEEE Congress of evolutionary computation (CEC), pp 2558–2562. IEEE

Awad N, Ali M, Liang J, Qu B, Suganthan P (2016) Problem definitions and evaluation criteria for the cec 2017 special session and competition on single objective bound constrained real-parameter numerical optimization. In: Technical report, pp 1–34. Nanyang Technological University Singapore, ???

Das S, Suganthan PN (2010) Problem definitions and evaluation criteria for cec 2011 competition on testing evolutionary algorithms on real world optimization problems. Jadavpur University, Nanyang Technological University, Kolkata, pp 341–359

Ahmadianfar I, Heidari AA, Gandomi AH, Chu X, Chen H (2021) RUN beyond the metaphor: an efficient optimization algorithm based on runge kutta method. Expert Syst Appl 181:115079. https://doi.org/10.1016/j.eswa.2021.115079

Houssein EH, Saad MR, Hashim FA, Shaban H, Hassaballah M (2020) Lévy flight distribution: a new metaheuristic algorithm for solving engineering optimization problems. Eng Appl Artif Intell 94:103731. https://doi.org/10.1016/j.engappai.2020.103731

Dua D, Graff C (2017) UCI Machine Learning Repository. http://archive.ics.uci.edu/ml

Wang L, Gao Y, Gao S, Yong X (2021) A new feature selection method based on a self-variant genetic algorithm applied to android malware detection. Symmetry 13(7):1290. https://doi.org/10.3390/sym13071290

Rahnamayan S, Jesuthasan J, Bourennani F, Salehinejad H, Naterer GF (2014) Computing opposition by involving entire population. In: 2014 IEEE Congress on evolutionary computation (CEC), pp 1800–1807. IEEE

Xu Wang L, He B, Wang N (2011) Modified opposition-based differential evolution for function optimization. J Comput Inf Syst 7(5):1582–1591

Ergezer M, Simon D, Du D (2009) Oppositional biogeography-based optimization. In: 2009 IEEE International conference on systems, man and cybernetics, pp 1009–1014. IEEE

Rao RV (2016) Jaya: a simple and new optimization algorithm for solving constrained and unconstrained optimization problems. Int J Ind Eng Comput 19–34. https://doi.org/10.5267/j.ijiec.2015.8.004

Bing X, Zhang M, Browne WN, Xin Y (2016) A survey on evolutionary computation approaches to feature selection. IEEE Trans Evol Comput 20(4):606–626. https://doi.org/10.26686/wgtn.14214497.v1

Mladenović N, Petrović J, Kovačević-Vujčić V, Čangalović M (2003) Solving spread spectrum radar polyphase code design problem by tabu search and variable neighbourhood search. Eur J Oper Res 151(2):389–399. https://doi.org/10.1016/s0377-2217(02)00833-0

Funding

The authors are grateful for the support of National key research and development program of China (2020YFA0908300), and National Natural Science Foundation of China (21878081).

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Competing interests

The author(s) declared no potential conflicts of interest with respect to the research, author- ship, and/or publication of this article.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix A: Engineering optimization problem model

Appendix A: Engineering optimization problem model

1.1 A.1 Parameter estimation for frequency-modulated sound waves (FM)

The first problem is Frequency modulation sound wave synthesis (FM) . This problem has six variables, namely, \(\vec {X}=\{a_1,\omega _1,a_2,\omega _2,a_3,\omega _3 \}\) are called estimated parameters. Additional details regarding this function have been included below:

where \(\theta =2\pi /100\), the range of the FM function lies between [-6.4,6.35].

1.2 A.2 Lennard-Jones potential problem (LJ)

The objective of the following multimodal optimization problem is to minimize the energy of a pure Lennard-Jones (LJ) cluster. A large number of local optima exist in this problem [52]. Given M atoms and their Cartesian coordinates \(\vec {a}_i = \{\vec {x}_i,\vec {y}_i,\vec {z}_i\}\), where i ranges from 1 to M, the objective function can be expressed as follows:

where \(r_{i j}={||\vec {a}_i-\vec {a}_j||}_2\) with gradient

The three variables take values in the range \(x_1\in [0,4],x_2 \in [0,4],x_3 \in [0,\pi ].\) The coordinates \(x_i\) for other atom is given to be bound in the range \(\left[ -4-\frac{1}{4}\left\lfloor \frac{i-4}{3}\right\rfloor , 4+\frac{1}{4}\left\lfloor \frac{i-4}{3}\right\rfloor \right] \).

1.3 A.3 Spread spectrum radar polly phase code design (SPRP)

The objective function of the SPRP problem is a nonlinear and non-convex function. The mathematical model of the problem is shown below [62].

Figure 7 shows the objective function at \(D=2\). Since the problem is NP-hard and the problem is segmented and smoothed, it cannot be solved efficiently using traditional optimization algorithms.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Wang, L., Li, J. & Yan, X. A variable population size opposition-based learning for differential evolution algorithm and its applications on feature selection. Appl Intell 54, 959–984 (2024). https://doi.org/10.1007/s10489-023-05179-y

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10489-023-05179-y