Abstract

Cameras are integrated into various underwater vision systems for tasks such as underwater object detection and marine biological monitoring. However, underwater images captured by cameras rarely achieve the desired visual quality, which may limit their use in downstream applications. Over the past few decades, various underwater vision enhancement technologies have been proposed to improve the visual quality of underwater images, and these technologies are the focus of this paper. Specifically, we review the theory of underwater image degradation and the underwater image formation models. We also summarize various underwater vision enhancement technologies and report the existing underwater image datasets. Further, we conduct extensive and systematic experiments to explore the strengths and limitations of various underwater vision enhancement methods. Finally, the recent trends and challenges of underwater vision enhancement are discussed. We hope this paper can serve as a reference for future studies and promote the development of this research field.


1 Introduction

In recent years, underwater images have played an essential role in marine archaeology, pipeline inspection, and marine biology research. As the source of underwater images, cameras not only assist Autonomous Underwater Vehicles (AUVs) in completing a series of complex tasks but also constitute an essential element of underwater imaging systems [1,2,3]. Figures 1, 2, and 3 give a statistical analysis of the literature on underwater image processing. Figure 1 counts the number of underwater vision papers indexed in the Web of Science from 2013 to 2020, and Fig. 2 shows the proportion of each research hotspot in the field of underwater vision in 2020. The total number of underwater image papers has increased year by year, and work related to underwater image enhancement and restoration has become the most active direction. Figure 3 shows the number of learning-based underwater vision enhancement papers published each year. Learning-based methods are a growing trend in the field of underwater vision enhancement, although they are not yet the mainstream approach.

Unlike terrestrial images, images captured by underwater sensors are generally degraded by the underwater environment. As shown in Fig. 4, the light received by a camera can be represented as a linear combination of three components, i.e., the direct component, the backscatter component, and the forward-scattered component. According to Fig. 4, the reasons for underwater image degradation can be summarized as follows. First, light traveling underwater decays exponentially with distance, reducing the brightness of underwater images. Second, the selective absorption of light of different wavelengths by water results in color distortion in underwater images. Third, the use of artificial light to expand the imaging range leads to halos. Finally, blur is mainly introduced by light scattered from suspended particles [4]. As a result, designing effective underwater vision enhancement technologies is meaningful for numerous engineering and research tasks.

This review classifies and summarizes the underwater vision enhancement methods of the past two decades. Different from the previous reviews [4,5,6,7], this paper provides a more comprehensive and structured review of recent advances in underwater vision enhancement technologies. For example, compared with [5], this review summarizes a greater variety of underwater vision enhancement methods, such as hardware-based restoration methods, and therefore uses clearer taxonomies to categorize existing methods. Furthermore, we introduce eight public underwater datasets in detail and provide their links in a table for the convenience of readers, which most reviews omit. Finally, extensive experiments (including the accuracy of background light and transmission map estimation, performance on various underwater scenes, and a time cost test) are designed to verify the performance of different types of methods, and the experimental results are analyzed in depth. The main contributions of this review are shown in Fig. 5 and introduced as follows.

(1) We summarize the reasons for underwater image degradation with graphics and words.

(2) We classify current underwater vision enhancement technologies and introduce working principles, representative methods, advantages, and limitations of each type in various forms. Additionally, we introduce commonly-used public underwater image datasets, including sources, example images, and characteristics, which meet the diverse requirements of researchers.

(3) We conduct extensive and systematic experiments on representative underwater vision enhancement methods and analyze the performance of these methods both objectively and subjectively. Based on the experimental results, we analyze the limitations of the existing objective underwater image evaluation metrics.

(4) We discuss several unsolved open problems and the possible research directions in the field of underwater vision enhancement.

2 Underwater image restoration methods

This section focuses on underwater image restoration methods and introduces a widely-used underwater image formation model in detail. Furthermore, we present several state-of-the-art restoration methods and summarize their advantages and disadvantages.

2.1 Hardware-based methods

Hardware-based underwater imaging methods reduce scattering and obtain clear underwater images mainly by improving imaging sensors or systems. Methods of this kind include the use of polarizers [8,9,10,11,12,13], lasers [14], deep-sea underwater cameras [15], stereo cameras [16,17,18,19,20], etc. In what follows, we introduce the representative hardware-based methods.

2.1.1 Stereo imaging system

Stereo imaging technology imitates the human visual system. Early stereo imaging systems used a traditional camera to shoot the same target from different perspectives and then calculated the scene depth from these stereo photos. With the advent of CCD digital cameras, a typical stereo imaging system consists of a binocular vision device, making it possible to obtain depth information via stereo processing. A higher refresh rate and resolution at lower cost make the stereo imaging system popular for AUVs [2].

The ideal mechanism of the stereo imaging system is depicted in Fig. 6. OR and OL are the center points of the right and left image sensors, respectively. The line segment connecting OR and OL, named the baseline B, is perpendicular to the optical axes. PR and PL are the projections of the same target point P onto the two CCDs. OR, OL, and P together constitute the epipolar plane in three-dimensional (3D) space. The epipolar plane intersects the two images in two straight lines, called epipolar lines. PR and PL lie on a line parallel to the baseline. The distance Z between the CCDs and the target point P can be acquired through triangulation, namely the formulas in Eqs. (1) and (2):

where (X, Y, Z) is the spatial coordinate of the target point P; XR and XL denote the horizontal positions of PR and PL, the projections of P, in the right and left images; B and f are the baseline and focal length, which can be obtained from prior information or camera calibration; and d is the disparity.
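As a minimal sketch of the triangulation step, the snippet below assumes a rectified stereo pair, for which depth follows the standard relation Z = fB/d with disparity d = XL − XR. The function name and the numeric values are illustrative assumptions and are not taken from the cited systems.

```python
def depth_from_disparity(x_left, x_right, focal_px, baseline_m):
    """Depth Z (metres) of a point imaged at columns x_left / x_right (pixels).

    Assumes a rectified pair: both projections lie on the same image row.
    """
    disparity = x_left - x_right  # d = XL - XR, in pixels
    if disparity <= 0:
        raise ValueError("disparity must be positive for a point in front of the rig")
    return focal_px * baseline_m / disparity  # Z = f * B / d


# Hypothetical rig: f = 1200 px, B = 0.12 m, disparity = 48 px
z = depth_from_disparity(660.0, 612.0, focal_px=1200.0, baseline_m=0.12)
print(f"estimated depth: {z:.2f} m")
```

Because Z is inversely proportional to d, a fixed matching error of one pixel causes a larger depth error for distant targets than for near ones, which is one reason the baseline and focal length matter in rig design.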

Bruno et al. studied structured light in a stereo imaging system under different water turbidity conditions [17]. Their experiment employed two Nikon D200 cameras with CCD sensors (23.6 × 15.8 mm in size, with a resolution of 3872 × 2592 pixels) for stereo vision and a commercial projector as the structured light source in a water tank, showing that the quality of the result remains acceptable at high turbidity levels. Roser et al. proposed an approach to improve the visibility of stereo images for AUVs [18]. A rough three-dimensional image is estimated from the degraded stereo image, followed by a light attenuation model to calculate the visibility distance. Finally, 3D scene reconstruction is carried out to obtain a color-corrected underwater stereo image. Lin et al. constructed a vision enhancement system for AUVs based on the stereo imaging system and object recognition [19]. A Harris corner detector is applied to detect corner points of interest and match the features in images captured from the left and right perspectives of the stereo camera. In addition, histogram equalization is used to strengthen the corners in the image. To improve the hardware of stereo imaging in the deep sea, Luczynski et al. proposed a stereo imaging system that serves as the primary sensor for deep-sea operations [20]. Numerous factors are considered carefully in this stereo vision system. Specifically, they discuss the image sensor for the camera, optimize the camera housings for the deep sea, analyze the suitable lens in terms of the size of the imager, and verify its effect on AUVs.

2.1.2 Range-gated imaging system

As illustrated in Fig. 7, a range-gated imaging system contains a pulsed laser, a timing control unit (TCU), and a high-speed camera with a Charge-Coupled Device (CCD) image sensor. A range-gated imaging system controls the camera gate so that it receives the directly reflected light and blocks the backscatter from reaching the sensor. Note that the switching time of the camera gate is based on prior information from a manual decision, a laser range finder, or other sensors. The range-gated imaging system effectively blocks backscatter outside the gate time, but the noise inside the sampling volume of interest (VOI) still contributes to the image.
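The gate timing can be illustrated with a small sketch. It assumes that the gate delay equals the round-trip travel time of the pulse, 2Rn/c, for a target at range R in water of refractive index n, and it ignores pulse width and electronics latency. The refractive index value and the function names are assumptions chosen for illustration.

```python
C_VACUUM = 299_792_458.0  # speed of light in vacuum, m/s
N_WATER = 1.33            # assumed refractive index of water


def gate_delay_s(target_range_m, n=N_WATER):
    """Round-trip travel time of the laser pulse to a target and back."""
    return 2.0 * target_range_m * n / C_VACUUM


def gate_window(near_m, far_m, n=N_WATER):
    """(open, close) times in seconds that admit returns from [near_m, far_m]."""
    if not 0 <= near_m < far_m:
        raise ValueError("expected 0 <= near_m < far_m")
    return gate_delay_s(near_m, n), gate_delay_s(far_m, n)


# Hypothetical volume of interest between 9 m and 11 m from the camera
open_t, close_t = gate_window(9.0, 11.0)
print(f"open gate after {open_t * 1e9:.1f} ns, close after {close_t * 1e9:.1f} ns")
```

Light scattered by particles closer than the near limit arrives before the gate opens and is rejected, whereas scatter originating inside the window is still recorded, which matches the residual noise noted above.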

Tan et al. improved the range-gated imaging system for Underwater Remotely Operated Vehicles (ROVs) in highly turbid media through both hardware optimization and image enhancement [21]. Specifically, tail-gating is designed to shift the gate opening time towards the tail of the Reflected Image Temporal Profile (RITP) to obtain the highest image quality. Then, Contrast Limited Adaptive Histogram Equalization (CLAHE) is adopted to further improve the contrast of range-gated images (RGI). To reduce speckle noise in imaging, Li et al. analyzed its causes and conducted a comparative analysis of speckle noise with objects of different materials and with different lasers [22]. Liu et al. used a self-built range-gated imaging system to construct a scattering model and proposed an optimal pulse and gate control coordination strategy [23]. Wang et al. proposed a 3D dehazing-gated range-intensity correlation imaging approach to remove scatter in 3D RGI [24]. The method offers high practicality, a significant dehazing effect, and an extended imaging range. However, more images have to be collected to reduce scatter. To decrease the number of input images and improve the visibility of two-dimensional (2D) RGI, Wang et al. further proposed a dehazing approach that takes only a single gated image as input [25]. The method is based on the prior that the intensity of the target is distributed according to the range intensity profile (RIP) in RGI. The depth-noise map and transmission map are computed from a smoothed target intensity map and the RIP for image dehazing.

2.1.3 Polarization imaging system

Polarization is one of the inherent properties of light. Restoration based on polarization is an effective way to obtain high-contrast underwater images and is widely used in many fields (such as underwater video shooting [26]). This method usually collects several polarized images of the same scene and separates background light and scattered light to estimate the light intensity and the transmission, thereby inverting the degradation process and obtaining the restored results [27].

Some studies assumed that the backscattered component is unpolarized, while the directly reflected component is polarized [28]. Other studies assumed that the backscattered component is polarized and that the polarization of the directly reflected component can be ignored, and used this as a basis to restore underwater images [8, 9]. Additionally, some studies considered both light components to be partially polarized [10,11,12]. Schechner and Karpel proposed an algorithm based on an optical model to invert the degradation process [8]. Original images taken through a polarizing filter are used to estimate scene depth. The algorithm improves the visibility of degraded images to a certain extent, but still has limitations, and later researchers made improvements based on it. The premise of [8] is that the illumination is uniform, which is unsuitable for images with artificial illumination. Therefore, Treibitz and Schechner improved the method proposed in [8] with a non-scanning restoration method based on active polarization imaging [10]. It allows partial polarization of both backscattered light and directly reflected light and offers better applicability, simple hardware, and easy operation. Nevertheless, due to the influence of noise and the attenuation of illumination, this method is only effective within a specific range.
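The following is a simplified sketch of polarization-based descattering in the spirit of the passive approach of [8]. It assumes that the direct signal carries negligible polarization while the backscatter is partially polarized with a known degree of polarization. Both that degree (`p_scat`) and the veiling light (`a_inf`) are taken as given inputs here, and the function name and the lower bound `t_min` are illustrative choices rather than part of any cited method.

```python
import numpy as np


def polarization_descatter(i_max, i_min, p_scat, a_inf, t_min=0.1):
    """Recover scene radiance from two images taken through a polarizer.

    i_max / i_min: images at the polarizer orientations with the most / least
    backscatter. p_scat: degree of polarization of the backscatter, in (0, 1].
    a_inf: veiling light (backscatter at infinite distance).
    """
    i_max = np.asarray(i_max, dtype=float)
    i_min = np.asarray(i_min, dtype=float)
    total = i_max + i_min                                   # total intensity
    backscatter = np.clip((i_max - i_min) / p_scat, 0.0, a_inf)
    transmission = np.clip(1.0 - backscatter / a_inf, t_min, 1.0)
    radiance = (total - backscatter) / transmission         # invert the model
    return radiance, transmission, backscatter


# Synthetic check: J = 0.8, t = 0.5, A_inf = 1.0, p = 0.6
# direct = J * t = 0.4 (split evenly), backscatter = 0.5 (split 0.4 / 0.1)
j, t, b = polarization_descatter(np.array([[0.6]]), np.array([[0.3]]), 0.6, 1.0)
print(j, t, b)
```

If the object itself reflects polarized light, the difference `i_max - i_min` no longer isolates the backscatter, which is the failure case that the methods allowing partial polarization of both components aim to handle.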

For objects with a high degree of polarization (e.g., smooth metal surfaces), the transmission map is underestimated if the polarization of the light reflected by the object is not taken into account. Huang et al. presented a method that uses curve fitting to estimate the transmission map and background light [13]. Objects with both high and low polarization can be recovered by this method. However, many parameters have to be optimized because of the non-linear calculations introduced, resulting in high time complexity. Hu et al. presented a transmission map correction approach that uses a simple polynomial fit to correct the transmission map of objects with a high degree of polarization [11]. It restores objects with different degrees of polarization and is faster than [13]. Because circularly polarized light maintains its polarization well in turbid media, Hu et al. illuminated the scene with circularly polarized light and proposed a polarization-based method for highly turbid media [12]. The technique adapts to underwater images in high-density turbid media and restores images by combining circular polarization information with linear polarization information.

In summary, since most experiments are conducted only in water tanks, the main challenge for hardware-based methods is their application in real-world underwater environments. Specifically, methods based on stereo imaging systems rarely consider the influence of scattering, making them unusable in the presence of high turbidity. Methods based on range-gated imaging systems have the inherent limitation that scatter cannot be wholly removed even under ideal conditions. Therefore, several methods have been proposed to further remove backscatter from underwater range-gated images. Methods based on underwater polarization imaging have a significant dehazing effect, but their robustness is poor. Future research may focus on combining polarization-based algorithms with other types of methods to improve real-time performance and expand practical application.

2.2 Prior-based methods

The software-based restoration methods aim to construct an imaging model and accurately estimate the parameters of that model. The Jaffe-McGlamery physical imaging model [29, 30] is a commonly-used image formation model for underwater image restoration. The light received by the camera ET is linearly composed of the following three parts:

\[ E_T = E_d + E_f + E_b \quad (3) \]

where Ed is the direct component, representing the unscattered part of the directly reflected light that reaches the camera. Ef is the forward-scattered component, representing the scattered part of the directly reflected light. Eb is the backscattered component, representing the veiling light scattered by floating particles in water. When the medium is uniformly distributed, the direct component Ed can be defined as Eq. (4):

\[ E_d(x,y) = J(x,y)\, e^{-\rho_c d(x,y)} \quad (4) \]

where J(x,y) and d(x,y) represent the scene radiance and the distance to the camera at the pixel (x,y), respectively, c ∈ {R, G, B} is the color channel index, and ρc is the beam attenuation coefficient, which can be expressed as Eq. (5):

\[ \rho_c = a_c + b_c \quad (5) \]

where ρc is the sum of the beam scattering coefficient ac and the beam absorption coefficient bc. The value of ρc depends on the wavelength of the light. Within the visible range, red light (the longest wavelength and lowest frequency) is attenuated most strongly, whereas green and blue light, which have higher frequencies, penetrate farther. As a result, underwater images mostly appear blue-green.
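To make the color cast concrete, the sketch below applies the exponential attenuation of the direct component to a white surface at several distances. The attenuation coefficients are made-up illustrative values, since real coefficients depend on the water type, and the function name is hypothetical.

```python
import math

# Illustrative attenuation coefficients in 1/m (assumed, not measured values)
RHO = {"R": 0.60, "G": 0.12, "B": 0.08}


def direct_component(radiance, distance_m, rho=RHO):
    """Per-channel direct component J_c * exp(-rho_c * d)."""
    return {c: radiance[c] * math.exp(-rho[c] * distance_m) for c in rho}


white = {"R": 1.0, "G": 1.0, "B": 1.0}
for d in (1.0, 5.0, 10.0):
    e = direct_component(white, d)
    print(d, {c: round(v, 3) for c, v in e.items()})
```

With these assumed coefficients the red channel has almost vanished at 10 m while green and blue remain clearly measurable, which is the blue-green appearance described above.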

The transmission map t(x, y) is defined as Eq. (6):

\[ t(x,y) = e^{-\rho_c d(x,y)} \quad (6) \]

Ef is one of the causes of image blurring. In the case of small-angle scattering of reflected light, Ef can be expressed as:

where g(x, y) is the point spread function (PSF) of the pixel (x, y), J(x, y) represents the scene radiance at the pixel (x, y), and ⊗ is the convolution operation.

Eb is the main reason for the decrease in image contrast and can be expressed as:

\[ E_b(x,y) = A_\infty \left( 1 - t(x,y) \right) \quad (8) \]

where A∞ is the veiling light. The impact of the forward-scattered component Ef on image degradation is small and is usually ignored. Therefore, as in the image formation model for the atmospheric environment, the model widely used to restore an underwater image is shown in Eq. (9):

\[ I(x,y) = J(x,y)\, t(x,y) + A_\infty \left( 1 - t(x,y) \right) \quad (9) \]

The process of the underwater image restoration is to estimate the veiling light A∞ and the transmission map t(x, y) from the observed image I(x, y), and restore the scene radiance J(x, y) from the image formation model.
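Once A∞ and t(x, y) have been estimated, the last step is a per-pixel inversion of the simplified model I = J·t + A∞·(1 − t). The sketch below shows that inversion under the assumption that the image is scaled to [0, 1]. The lower bound `t_min` on the transmission, which avoids amplifying noise where t is close to zero, is a common practical choice and is assumed here rather than prescribed by the model.

```python
import numpy as np


def restore_scene_radiance(image, veiling_light, transmission, t_min=0.1):
    """Invert I = J*t + A*(1 - t) for J, given A and t."""
    image = np.asarray(image, dtype=float)        # H x W x 3, values in [0, 1]
    a = np.asarray(veiling_light, dtype=float)    # one value per color channel
    t = np.clip(np.asarray(transmission, dtype=float), t_min, 1.0)
    if t.ndim == 2:                               # one map shared by all channels
        t = t[..., None]
    radiance = (image - a) / t + a                # J = (I - A) / t + A
    return np.clip(radiance, 0.0, 1.0)


# Synthetic round trip: degrade a random scene, then restore it
rng = np.random.default_rng(0)
scene = rng.uniform(0.0, 1.0, size=(4, 5, 3))
a_inf = np.array([0.1, 0.5, 0.7])
t_map = np.full((4, 5), 0.6)
observed = scene * t_map[..., None] + a_inf * (1.0 - t_map[..., None])
restored = restore_scene_radiance(observed, a_inf, t_map)
print(np.abs(restored - scene).max())
```

The round trip only succeeds because the true A∞ and t are supplied. In practice the quality of the result is bounded by how well these two quantities are estimated, which is what the methods in the following subsections address.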

We will introduce the representative underwater image restoration methods and underwater video restoration methods as follows.

2.2.1 Underwater image restoration

In the past ten years, research on image dehazing has achieved remarkable development [31,32,33,34]. He et al. designed a haze removal algorithm based on the dark channel prior (DCP) [34]. Specifically, DCP is based on statistics of a large number of outdoor haze-free images. It observes that, in most non-sky regions of haze-free images, at least one color channel has pixels with very low intensity. To formally describe this observation, the concept of a dark channel Jdark is given by Eq. (10). For an arbitrary image J(x, y), its dark channel Jdark is:

\[ J^{dark}(x) = \min_{y \in \Omega(x)} \left( \min_{c \in \{R,G,B\}} J^{c}(y) \right) \quad (10) \]

where x indicates the coordinate of each pixel, Ω(x) indicates a local patch centered on x, and Jc(y) is the value of color channel c at a pixel y within Ω(x). According to the concept of DCP, if J is an outdoor non-sky haze-free image, the intensity of its dark channel is close to zero:

\[ J^{dark}(x) \to 0 \quad (11) \]

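If the background light A is given, the image formation model in Eq. (9) can be normalized by Ac:

\[ \frac{I^{c}(x)}{A^{c}} = t^{c}(x)\, \frac{J^{c}(x)}{A^{c}} + 1 - t^{c}(x) \quad (12) \]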

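Further, assume that the transmission tc(x) within Ω(x) is constant, denote it by \( \overset{\sim }{t}(x) \), and take the minimum over the patch and over the color channels on both sides of Eq. (12):

\[ \min_{y \in \Omega(x)} \left( \min_{c} \frac{I^{c}(y)}{A^{c}} \right) = \overset{\sim }{t}(x) \min_{y \in \Omega(x)} \left( \min_{c} \frac{J^{c}(y)}{A^{c}} \right) + 1 - \overset{\sim }{t}(x) \quad (13) \]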

According to DCP, the dark channel of an arbitrary haze-free image J is close to zero. Noting that Ac is always positive, we have

\[ \min_{y \in \Omega(x)} \left( \min_{c} \frac{J^{c}(y)}{A^{c}} \right) = 0 \quad (14) \]

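Substituting Eq. (14) into Eq. (13), \( \overset{\sim }{t}(x) \) can be obtained simply by:

\[ \overset{\sim }{t}(x) = 1 - \min_{y \in \Omega(x)} \left( \min_{c} \frac{I^{c}(y)}{A^{c}} \right) \quad (15) \]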
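The derivation above can be sketched in a few lines of NumPy: normalize the image by the background light, take the per-pixel minimum over the color channels, take the minimum over a square patch, and subtract from one. The patch size, the edge padding, and the function names are illustrative assumptions, and the background light is taken as given rather than estimated.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def dark_channel(image, patch=15, channels=(0, 1, 2)):
    """Minimum over the chosen channels, then over a patch x patch window."""
    if patch % 2 == 0:
        raise ValueError("patch must be odd so the window is centered")
    per_pixel_min = np.asarray(image, dtype=float)[..., list(channels)].min(axis=2)
    pad = patch // 2
    padded = np.pad(per_pixel_min, pad, mode="edge")   # replicate border pixels
    windows = sliding_window_view(padded, (patch, patch))
    return windows.min(axis=(2, 3))                    # H x W local minimum


def dcp_transmission(image, background_light, patch=15):
    """Transmission estimate: 1 - dark channel of the A-normalized image."""
    normalized = np.asarray(image, dtype=float) / np.asarray(background_light, dtype=float)
    return 1.0 - dark_channel(normalized, patch)


# Synthetic check: a black scene (J = 0) seen through t = 0.4 gives I = A * 0.6
a = np.array([0.8, 0.9, 1.0])
hazy = np.ones((20, 24, 3)) * a * 0.6
t_est = dcp_transmission(hazy, a, patch=5)
print(t_est.min(), t_est.max())
```

The patch minimum is what makes the estimate blocky near depth edges, which is why later methods refine the transmission map before using it for restoration.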

Unlike hazy images, underwater images are degraded by more complex factors. Therefore, if a dehazing algorithm is applied directly to underwater images, it is effective only on specific images. Some researchers improved dehazing methods to cope with underwater images. Chiang et al. presented a restoration approach based on DCP (wavelength compensation and image dehazing, WCID) [35]. DCP is used to obtain the distance between the objects and the camera (i.e., the depth map) to determine whether the effect of artificial light needs to be removed. Once the effect of artificial light is compensated, the water depth is estimated from the residual energy ratios of the RGB channels in the background light. Based on the attenuation of each channel, color compensation is conducted according to the water depth. Because the method uses DCP unchanged, it inherits the drawbacks of DCP. Wen et al. [36] and Drews et al. [37] improved DCP, providing new ideas for the application of DCP in the field of underwater image restoration. Wen et al. presented an effective algorithm to estimate the transmission map by using the information of the green and blue channels [36]. Similarly, Drews et al. considered only the impact of the blue and green channels on underwater images and presented an underwater DCP algorithm (UDCP) [37]. The dark channel based on the blue and green channels Judark is given in Eq. (16):

\[ J^{udark}(x) = \min_{y \in \Omega(x)} \left( \min_{c \in \{G,B\}} J^{c}(y) \right) \quad (16) \]

where y denotes the pixel coordinates, and Jc(y) is the undegraded underwater image.

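The estimation of the transmission map is shown in Eq. (17):

\[ \overset{\sim }{t}(x) = 1 - \omega \min_{y \in \Omega(x)} \left( \min_{c \in \{G,B\}} \frac{I^{c}(y)}{A^{c}} \right) \quad (17) \]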

where \( \overset{\sim }{t}(x) \) is the estimate of transmission map (\( 0\le \overset{\sim }{t}(x)\le 1 \)), Ic(y) is the image captured by the camera, Ac is the background light, Ω(x) is the selected area, ω is a constant (0 < ω ≤ 1) and ω = 0.95 in most cases.
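A minimal sketch of the UDCP transmission estimate follows. It differs from the standard DCP only in restricting the channel minimum to green and blue and in the factor ω. An RGB channel order, edge padding, and the function name are assumptions made for illustration. The synthetic example uses an image whose red channel is fully attenuated, a case in which a dark channel that included red would be zero everywhere and would report a transmission of one.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def udcp_transmission(image, background_light, patch=15, omega=0.95):
    """Transmission from the green/blue dark channel of the A-normalized image."""
    if patch % 2 == 0:
        raise ValueError("patch must be odd so the window is centered")
    img = np.asarray(image, dtype=float)            # H x W x 3, RGB order assumed
    a = np.asarray(background_light, dtype=float)   # per-channel background light
    gb_min = (img[..., 1:3] / a[1:3]).min(axis=2)   # ignore the red channel
    pad = patch // 2
    padded = np.pad(gb_min, pad, mode="edge")
    local_min = sliding_window_view(padded, (patch, patch)).min(axis=(2, 3))
    return np.clip(1.0 - omega * local_min, 0.0, 1.0)


# Red is fully absorbed; green and blue are at half the background light
a = np.array([0.3, 0.8, 0.9])
img = np.zeros((12, 16, 3))
img[..., 1] = 0.5 * a[1]
img[..., 2] = 0.5 * a[2]
t_udcp = udcp_transmission(img, a, patch=5)
print(t_udcp[0, 0])
```

Keeping ω slightly below one leaves a small amount of haze in distant regions, so the restored image does not look unnaturally flat.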

The models proposed by Wen et al. and Drews et al. consider the propagation characteristics of light underwater and handle some deep-sea images well. However, these models do not use the information in the red channel. The transmission map will be underestimated when they are applied to images in which the red light has not been wholly attenuated, making the restoration results unreliable. Galdran et al. proposed a red channel restoration approach based on DCP (RDCP) [38], which considers the impact of both natural light and artificial light. It remedies the defect that DCP is not suitable for images illuminated by artificial light. In addition, the method effectively recovers light with shorter wavelengths. Nevertheless, it is likely to overcompensate the red channel when coping with deep-sea images, resulting in a reddish cast. Peng et al. presented a restoration approach based on a generalized dark channel prior (GDCP) [39]. The method adds an adaptive color correction to the image restoration process to ensure better color restoration. Zhou et al. presented an approach that uses the guided filter to refine the transmission map [40]. This approach achieves high visibility and better real-time performance. To minimize the impact of underwater red artifacts on the restoration results, Lee et al. presented an approach based on continuous color correction and superpixel DCP [41]. Owing to the continuous color correction, the method produces minimal red artifacts. Besides, the superpixel DCP algorithm reduces the block effect of DCP. The advantages and disadvantages of the DCP-based techniques for underwater vision enhancement are summarized in Table 1.

Since DCP has inherent limitations, researchers have proposed methods that do not use DCP. Carlevaris-Bianco et al. proposed an approach to eliminate the influence of scattering [42]. It uses the different attenuation of light of different wavelengths underwater to estimate the scene depth and remove the scattering effect. Because DCP-based restoration approaches may fail when the scene contains bright objects, Peng et al. improved on [43] and estimated the scene depth from image blurriness, which increases with distance [44]. Compared with DCP, this method estimates the depth map more accurately when objects are brighter than the background, but it is less effective in regions with little texture. Similarly, Emberton et al. introduced an approach with novel veiling light and transmission estimation to address this disadvantage [45], but the whole process is slow. Berman et al. presented an approach to restore the color of the image [46]. This method introduces two global parameters to simplify the image restoration process, estimates these parameters for different existing water types, and applies each set to the same image. Finally, the best restoration result is selected automatically according to the color distribution of the image.

Studies have found that impressive results can be obtained by applying suitable image enhancement algorithms [47]. Therefore, researchers have used color correction algorithms extensively to compensate for uneven color distribution. Peng et al. estimated the transmission map by calculating the difference between the observed intensities of scene points and the background light, and used the physical model to restore the degraded image [47]. On this basis, an adaptive color correction algorithm was used to restore the color of the degraded image. Ancuti et al. presented an approach that estimates backscatter locally by using pixel neighborhoods [48]. Li et al. presented a systematic algorithm based on the principle of minimum information loss and a histogram distribution prior for image enhancement [49]. This method produces two versions of the output. One version, with realistic colors and a natural appearance, is suitable for display. The other version, with high contrast and high brightness, can be used to extract more valuable information and reveal more details. Ancuti et al. presented a fusion-based approach that combines color transfer with DCP to address the problem of monotonous image color [50]. However, the method is not robust, especially when it is applied to images taken in turbid underwater scenes. Dai et al. presented an approach that integrates image restoration with a color balance algorithm [51].

Traditional dehazing methods have low computational efficiency and are therefore not suitable for real-time underwater processing equipment. To address this, Cho et al. proposed an efficient approach that uses sparse depth priors and an incremental Gaussian Process (iGP) to estimate depth and perform dehazing for underwater robots [52]. Yang et al. designed a restoration model using a DCP-based decomposition of reflected light and local estimation of backscattered light for the turbid ocean environment [53]. Instead of estimating the background light as a fixed value, Yang et al. used the locally estimated background light to better restore edge details. Compared with the method of Peng and Cosman [54], this method has simpler calculation steps and is suitable for fast underwater processing. The advantages and disadvantages of underwater restoration methods without using DCP are summarized in Table 2.

2.2.2 Underwater video restoration

Underwater robots replace humans in a series of underwater operations and play a key role in exploring the ocean in the extremely challenging underwater environment. A vision-driven ROV mainly relies on the feature information of underwater video [54], and good visual perception ensures its safety and efficiency. Accordingly, video restoration is an essential but complicated task. On the one hand, underwater videos face the same problems as underwater images. On the other hand, changes in the estimated parameters between adjacent frames lead to temporal artifacts and noise, so the results may be inconsistent and incoherent across neighboring frames. Some scholars have put forward solutions to these challenges.

Drews et al. presented an approach that estimates the parameters using a new optical flow approach combined with structure-from-motion techniques [54]. The optical flow is calculated by estimating the scene depth. Then, structure-from-motion based on the optical flow is used to estimate the scene depth. Finally, the restoration results are improved by iterating the above process. Li et al. proposed an underwater video dehazing algorithm that uses stereo matching [55]. This method combines the stereo depth of the image with the haze density to estimate the scene depth and calculate the transmission of each pixel. In addition, the method adds an extinction Laplacian constraint, which helps to capture the details in the depth map. Emberton et al. presented a novel underwater video dehazing approach [56]. This method classifies images into three water color categories and selects the most suitable white balance algorithm for each category. By detecting and segmenting the regions that contain only water, they estimated the background light and improved the visibility of images and videos. Gaussian normalization was introduced to ensure the consistency of the segmentation between adjacent video frames. The advantages and disadvantages of underwater video restoration methods are summarized in Table 3.

Prior-based underwater restoration methods achieve outstanding results in both color cast elimination and haze removal. However, these methods may fail when the target scene does not match the prior. In addition, the accuracy of the imaging model directly influences the performance of the algorithms.

2.3 Learning-based methods

Owing to its robust feature learning ability, deep learning has made significant progress in vision tasks [57,58,59,60]. Naturally, more and more researchers are turning to deep learning to restore underwater images. These methods can be classified into CNN-based methods and GAN-based methods.

2.3.1 CNN-based methods

Methods based on convolutional neural networks (CNNs) commonly design a CNN and train it on a large dataset. The trained model outputs estimates of the veiling light and transmission map from the input image. Finally, the physical model is used to restore the original image [61]. Figure 8 illustrates the flowchart of commonly used CNN-based underwater image restoration methods.

Because most restoration methods estimate the background light inaccurately from the value of a single pixel, Shin et al. presented a general network structure that estimates the parameters and restores images based on the underwater imaging model [62]. Wang et al. designed a CNN-based network (UIE-Net) containing two subnets used for color correction and image dehazing, respectively [63]. To achieve cross-scene applicability, it uses underwater imaging models [64] to synthesize 200,000 underwater images for training. The resulting trained model has good generalization ability. Li et al. designed an end-to-end CNN-based model to directly reconstruct clear underwater images (UWCNN) [65]. They synthesized ten ocean image datasets and trained ten UWCNN models corresponding to the optical properties of different water types. Lu et al. presented an approach to address degraded underwater imaging in low-light environments [66]. This method uses a CNN to estimate the image depth and uses color correction to restore the image color. Compared with traditional methods, it obtains more accurate depth information, but errors remain. Wang et al. presented an underwater image restoration approach that uses parallel CNNs [67]. The network includes two parallel branches, the T network and the A network, which simultaneously estimate the transmission map and the veiling light, respectively. The T network is inspired by the multi-scale network fusion method of Ren et al. [68], which uses cross-layer connections and multi-scale estimation to avoid artifacts and retain more details. Finally, the underwater image is restored using the underwater optical imaging model and the estimates generated by the two branches. Li et al. proposed an underwater image enhancement network (Water-Net) trained on a dataset that they constructed, called UIEBD [69].
Fu and Cao argued that deep learning-based methods have fixed parameters after training and therefore lack flexibility in dealing with changeable underwater environments [60]. Therefore, they combined deep learning with classical histogram equalization and proposed a novel model. The two branches of the model deal with color cast and low contrast, respectively. Then, classical histogram equalization is used to obtain better visual effects without introducing artifacts. Table 4 summarizes the training datasets, advantages, and disadvantages of the above-mentioned CNN-based restoration methods.

2.3.2 GAN-based methods

Generative Adversarial Networks (GAN) [70] are trained to map images from a data set X to a reference data set Y. Suppose X and Y are the distorted images and the corresponding undistorted images, respectively. The restoration process can then be regarded as transforming X to Y using adversarial learning. Additionally, GAN can also be used to generate underwater degraded images [71]. Figure 9 briefly shows the flowchart of a typical GAN-based model.

Since obtaining paired datasets is expensive and complicated, researchers have developed unsupervised learning-based methods to get paired datasets [72,73,74]. Zhu et al. proposed CycleGAN, which transforms images from a source dataset to a target dataset without paired data [74]. Fabbri et al. used a CycleGAN-based approach [74] to generate paired datasets and proposed a GAN method to improve image quality, called UGAN [72]. It is partly similar to WaterGAN presented by Li et al. [73]. The difference is that UGAN does not need to estimate the scene depth, which is difficult to obtain. Chen et al. presented an underwater image restoration method based on GAN [71]. A multi-branch discriminator is designed to preserve features and remove underwater noise, and a DCP loss and a multi-level loss strategy are formulated to ensure the effectiveness of training. Table 5 summarizes the training datasets, advantages, and disadvantages of the above-mentioned GAN-based restoration methods.

With the development of deep learning techniques, learning-based methods have become a trend in recent years. However, learning-based methods still have a long way to go. First, datasets including real-world underwater images and the corresponding reference images are limited in quality and quantity. Second, synthetic underwater images are no substitute for real-world images. Furthermore, most methods use existing network architectures with minor modifications rather than designing networks or loss functions based on underwater image characteristics. In short, learning-based methods still have great potential.

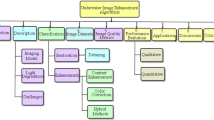

3 Underwater image enhancement methods

This section focuses on the classical underwater image enhancement methods and introduces several common ones. The main idea of underwater image enhancement methods is to construct functions that adjust the color and contrast of the whole image so that the enhanced result conforms well to the human visual system. The conventional underwater image enhancement methods comprise histogram-based methods, fusion-based methods, Retinex-based methods, and frequency-domain methods.

3.1 Histogram-based methods

The histogram-based method is a classical image enhancement method. It is fast and straightforward: it transforms the image histogram from a narrow unimodal histogram into a balanced distribution. As shown in Fig. 10, the histogram distribution of each color channel of the processed image is uniform, occupying the whole gray level space. The brightness and contrast of the scene are significantly improved in Fig. 10b.

The underwater images and their histograms of three color channels. Top row: raw image and its histogram of RGB channels. Bottom row: image processed by histogram equalization algorithm and its histogram of RGB channels. The horizontal and vertical coordinates are the pixel value and the number of pixels, respectively

However, the histogram equalization algorithm struggles to improve the local contrast of the image, resulting in excessive enhancement of local areas and loss of detailed information. To solve these problems, Pizer et al. proposed an Adaptive Histogram Equalization (AHE) method that improves the contrast by calculating the local histogram of the image [75]. Zuiderveld proposed Contrast Limited Adaptive Histogram Equalization (CLAHE) to overcome the limitation of AHE [76]. Based on CLAHE, Hitam et al. proposed a mixture CLAHE method that successfully suppresses image noise and color distortion [77]. Luo et al. proposed an approach based on a histogram stretching algorithm to compensate for the value of the red channel [78].
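As a minimal sketch of the global histogram equalization discussed in this subsection, the following Python code equalizes each RGB channel independently, as in the bottom row of Fig. 10. It assumes 8-bit images stored as NumPy arrays; the function names `equalize_channel` and `equalize_rgb` are illustrative and do not come from any of the cited works. AHE and CLAHE differ in that they apply the same idea to local tiles rather than to the whole image.

```python
import numpy as np

def equalize_channel(channel):
    """Global histogram equalization of one 8-bit channel (uint8 array)."""
    hist = np.bincount(channel.ravel(), minlength=256)
    cdf = hist.cumsum()                      # cumulative pixel count per gray level
    cdf_min = cdf[cdf > 0][0]                # count at the lowest occupied gray level
    total = cdf[-1]
    if total == cdf_min:                     # constant image: nothing to stretch
        return channel.copy()
    # Map each gray level so that the cumulative distribution becomes linear.
    lut = np.round((cdf - cdf_min) / (total - cdf_min) * 255.0)
    lut = np.clip(lut, 0, 255).astype(np.uint8)
    return lut[channel]

def equalize_rgb(img):
    """Equalize the R, G, and B channels of an H x W x 3 uint8 image separately."""
    return np.stack([equalize_channel(img[..., c]) for c in range(3)], axis=2)
```

Equalizing the channels separately is the simplest choice and matches the per-channel histograms of Fig. 10, but it can shift colors, which is one reason the adaptive and contrast-limited variants above are preferred in practice.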

3.2 Fusion-based methods

Underwater enhancement methods based on fusion intend to enhance a single input image with different algorithms and obtain multiple enhanced underwater images. Then, the fusion weight of each enhanced image is calculated, and the final enhanced image is obtained by image fusion.
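The fusion step described above can be sketched as a per-pixel weighted average with normalized weight maps. This is a simplified, single-scale illustration and not the implementation of any cited method; published approaches may blend at multiple scales. The function name `fuse` and the small constant `eps` are assumptions introduced here.

```python
import numpy as np

def fuse(inputs, weights, eps=1e-6):
    """Blend K enhanced versions of one image with per-pixel weight maps.

    inputs:  list of K arrays of shape (H, W, 3)
    weights: list of K arrays of shape (H, W), non-negative
    """
    stack = np.stack(inputs).astype(np.float64)           # (K, H, W, 3)
    w = np.stack(weights).astype(np.float64) + eps        # (K, H, W); eps avoids 0/0
    w /= w.sum(axis=0, keepdims=True)                     # weights sum to 1 at each pixel
    return (w[..., None] * stack).sum(axis=0)             # (H, W, 3)
```

In a fusion-based pipeline, `inputs` would hold, for example, a color-corrected version and a contrast-enhanced version of the same raw image, and `weights` the corresponding weight maps.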

Ancuti et al. designed a fusion-based dehazing strategy for underwater images and videos [79]. The approach takes a color-corrected version and a contrast-enhanced version of the image as inputs. Four weights are defined to improve the visibility of the degraded target scene, and edge-preserving noise reduction strategies ensure the consistency between adjacent frames of underwater videos. Based on [79], Ancuti et al. proposed a novel white balance strategy as a preprocessing step to handle severe light attenuation underwater [80]. Additionally, they simplified the weight maps and the input versions. Gao et al. presented a method based on red channel compensation, local contrast correction, and multi-scale fusion to remove the backscatter and color cast in underwater images [81]. To improve the weight map calculation, Song et al. presented an updated approach that combines spatial and contrast cues to obtain a high-contrast fusion result [82].

3.3 Retinex-based methods

Based on observations of the human visual system, Land et al. proposed the theory of color constancy called Retinex, which tries to restore the actual color of the scene under arbitrary illumination. The Retinex model can be described as:

\[ S(x, y) = R(x, y) \cdot L(x, y) \]

where S(x, y) is the image captured by the camera, R(x, y) is the reflection component, and L(x, y) is the illumination component. The schematic diagram of the Retinex model is shown in Fig. 11. The purpose of Retinex is to eliminate the influence of the illumination component L(x, y) and obtain the actual appearance R(x, y) of the object.

Based on the theory of Retinex, Jobson et al. proposed Multi-Scale Retinex (MSR) [83] and Multi-Scale Retinex with Color Restoration (MSRCR) [84], which use the Gaussian blur of the original image as the estimate of the illumination component L(x, y). Retinex was mainly used for foggy image dehazing in the early years, and many researchers have since improved Retinex and applied it to underwater image enhancement. Zhang et al. presented a method that combines filtering with an improved MSR [85]. Tang et al. used MSR as a pre-correction step to improve the contrast and equalize the color distribution of the image [86].
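The following Python code is a hedged sketch of the multi-scale Retinex idea for a single channel: the illumination is approximated by Gaussian-blurred versions of the image at several scales, and the reflectance is recovered in the log domain, where the product S = R · L becomes a difference. The function names, the equal scale weights, and the default scales (15, 80, 250) are assumptions chosen for illustration; they are not taken from [83] or [84].

```python
import numpy as np

def gaussian_kernel(sigma):
    """1-D normalized Gaussian kernel truncated at about 3 sigma."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1, dtype=np.float64)
    k = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    return k / k.sum()

def gaussian_blur(img, sigma):
    """Separable Gaussian blur of a 2-D array with edge replication."""
    k = gaussian_kernel(sigma)
    r = len(k) // 2
    padded = np.pad(img, r, mode="edge")
    rows = np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 0, rows)

def multi_scale_retinex(channel, sigmas=(15, 80, 250), eps=1.0):
    """Average of log(S) - log(blurred S) over several scales (equal weights)."""
    s = channel.astype(np.float64) + eps        # eps keeps the logarithm finite
    out = np.zeros_like(s)
    for sigma in sigmas:
        out += np.log(s) - np.log(gaussian_blur(s, sigma))
    return out / len(sigmas)
```

The output is a log-reflectance map that still has to be rescaled to the display range, and MSRCR additionally applies a color restoration step that is not shown here.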

In summary, hardware-based methods obtain higher-definition underwater images by upgrading hardware. Underwater vehicles such as AUVs can benefit significantly from these hardware devices. However, most hardware-based methods perform poorly in dehazing and color correction. One meaningful research direction is to combine hardware-based technologies with software-based technologies to obtain high-quality underwater images.

Software-based methods mainly focus on dehazing and color cast elimination to generate results that coincide with human visual perception. Software-based restoration methods are built on the image formation model, so the estimation of the background light and the transmission map is the crucial step in the restoration process. Prior-based restoration methods achieve outstanding dehazing effects when the target scene accords with the prior. However, the robustness of these methods and the accuracy of the established imaging model can be further improved. Currently, a growing number of learning-based approaches have been proposed to improve the robustness and practicability of underwater vision enhancement. However, the performance of these methods lags behind state-of-the-art conventional methods in most cases. This is mainly because most network structures and loss functions are not specially designed according to the characteristics of underwater images. Additionally, limited datasets also affect the generalization capacity of deep learning models. Thus, there is still ample room for improvement in learning-based methods.

Underwater image enhancement methods mainly focus on the color and saturation of the whole image. Both dehazing and the elimination of color distortion are greatly improved by the enhancement algorithms. Although appealing results are obtained, these methods are designed without considering the underwater optical imaging model and therefore ignore the relationship between degradation and scene depth. As a result, the actual color characteristics of underwater images cannot be reconstructed by these methods.

4 Underwater image datasets

Underwater image datasets play a vital role in training data-driven networks and fairly evaluating the performance of enhancement and restoration methods. This part introduces current underwater image datasets which have been commonly used for training and testing. We provide the download link of each dataset in Table 6, which is presented in chronological order.

4.1 ImageNet dataset

Deng et al. introduce a dataset aiming to contain 50 million labeled images with high quality, called ImageNet [87]. ImageNet is large in scale and diversity, providing images for object recognition tasks and image classification tasks. It organizes various classes, including images of vehicles, mammals, birds, etc. Fabbri et al. [72] select underwater images and in-air images from ImageNet and use CycleGAN to generate underwater images in pairs. Figure 12 shows four images in ImageNet.

Example images from ImageNet [87]

4.2 Port Royal Dataset

Li et al. establish a large RGBD image dataset synthesized from in-air RGBD images, called Port Royal Dataset [73]. It contains 15 thousand synthetic underwater images, which can be used for network training and underwater image enhancement. Figure 13 shows four in-air images and the corresponding synthetic underwater images in Port Royal Dataset.

Example images from Port Royal [73]. Top row: in-air images; Bottom row: corresponding synthetic underwater images

4.3 SQUID dataset

Berman et al. collect underwater images captured in different water types, depths, and seasons, called SQUID [46]. The dataset provides 57 stereo pairs, including raw images and corresponding camera calibration files, TIF files, and depth maps. All scenes are illuminated by natural light only and contain color charts for evaluating the accuracy of color correction. Figure 14 shows four real-world underwater images and the corresponding true distance map in SQUID.

Example images from SQUID [46]. Top row: real-world underwater images taken in different locations; Bottom row: the corresponding true distance map based on the stereo

4.4 UIEBD dataset

Li et al. construct a dataset including 950 real-world underwater images, of which 890 have corresponding reference images. The rest are challenging images for which ideal enhanced results could not be obtained [69]. The original underwater images in UIEBD have various color casts and qualities, while the corresponding reference images have better contrast and higher quality. Therefore, UIEBD can be widely used for evaluating underwater image enhancement methods and for end-to-end network training. Figure 15 shows four images and the corresponding reference images in UIEBD.

Example images from UIEBD [69]. Top row: raw images taken in diverse underwater scenes; Bottom row: the corresponding reference images

4.5 RUIE dataset

Liu et al. construct a dataset including more than 4,000 images, called RUIE [88]. RUIE is divided into three subsets aiming to evaluate the effectiveness of enhancement approaches from three aspects. Specifically, the first subset contains underwater images with five different qualities from high to low. The second subset includes 300 images with 100 each for green images, green-blue images, and blue images. The third subset can be used to evaluate the effects of different algorithms on high-level tasks (such as classification and detection). It contains 300 images, covering a variety of marine organisms. Additionally, the locations and types of scallops, sea cucumbers, and sea urchins are labeled manually. Figure 16 shows four images with different qualities in RUIE.

Example images from RUIE [88]. All the images shown are from the second subset. The image quality decreases from left to right

4.6 RGBD underwater dataset

Akkaynak and Treibitz introduce an underwater dataset including multiple raw images with a color chart and corresponding depth maps [89]. The images are divided into five subsets: images captured in different depths, water types, backscatter, and angles. More information can be obtained from the website given in Table 6. Figure 17 shows four real underwater images and the corresponding SFM-based range maps in RGBD underwater dataset.

Example images from the RGBD dataset [89]. Top row: real-world underwater images taken in different scenes; Bottom row: the corresponding SFM-based range map based on the stereo

4.7 EUVP dataset

Islam et al. construct a large-scale dataset containing more than 20,000 paired images and over 10,000 unpaired images carefully selected to facilitate supervised training of underwater image enhancement networks, called EUVP [90]. Images in EUVP come from 7 different camera shots, including different visibility and locations. Figure 18 shows four real underwater images and the corresponding ground truth in EUVP.

Example images from EUVP [90]. Top row: distorted underwater images taken in different scenes; Bottom row: the corresponding ground truth images

4.8 MABLS dataset

Song et al. established a dataset composed of 500 underwater images manually annotated with background light, called MABLs [91]. It is the first public dataset for underwater image background light estimation. The dataset contains various underwater scenes (such as single fish, school of fish, coral, diving, etc.) and different degrees of distortion (such as deep water, low visibility, high scatter, color distortion scenes, etc.). Figure 19 shows four images in MABLS Dataset.

Example images from MABLS Dataset [91]

5 Experiment and analysis

This section first evaluates the results of typical underwater vision enhancement methods from a subjective perspective. Then, three commonly used evaluation metrics proposed for underwater images are introduced, and a quantitative comparison is conducted based on these metrics. Last, the results are analyzed and discussed from both the subjective and objective aspects.

5.1 Experimental setting

To conduct extensive and systematic experiments, underwater enhancement and restoration methods based on different techniques are tested on various scenes from both subjective and objective perspectives. The methods include enhancement methods (Fusion [79], Two-step [92], RBE [93]), prior-based methods (DCP [34], UDCP [37], DHDP [49], IBLA [44], Haze-Lines [47], GDCP [39]), and learning-based methods (UWCNN [65], Water-Net [69]). Underwater images with various color distortions and qualities are selected from UIEBD [69] and RUIE [88]. Since natural light cannot reach the deep sea, artificial light sources commonly serve as illumination for deep-sea vision systems. Thus, underwater images with artificial light sources are also necessary for this experiment.

To ensure the fairness of qualitative and quantitative evaluations, all the experiments except the computation complexity experiment are implemented on a Windows 10 PC with Intel(R) Core (TM) i5-5200U CPU @ 2.20GHz, 8.00GB 1600MHz DDR3L memory, running on Matlab2018 and Python 3.6.

5.2 Subjective comparison

Images are selected from UIEBD, RUIE, and deep-sea datasets for a color cast removal comparison, a dehazing comparison, a transmission map (TM) and background light (BL) accuracy comparison, and an artificially illuminated image restoration comparison. The results generated by the above-mentioned methods are shown in Figs. 20, 21, 22, 23, 24, 25.

Subjective evaluation on different color distortion. From top to bottom are greenish images, blue-green images, and the blueish image selected from RUIE [88]. From left to right are raw images and the results generated by Fusion [79], Two-step [92], DCP [34], UDCP [37], DHDP [49], IBLA [44], Haze-Lines [46], UWCNN [65], and Water-Net [69]

Subjective evaluation on high backscatter scenes. All the selected images are real-world underwater images from UIEBD [69]. From left to right are raw images and the results generated by Fusion [79], Two-step [92], DCP [34], UDCP [37], DHDP [49], IBLA [44], Haze-Lines [46], UWCNN [65], and Water-Net [69]

For images with different color casts shown in Fig. 20, the white balance step of Fusion [79] effectively removes the color cast. Two-step [92] uses a piecewise linear function to remove the color cast. However, this simple linear transformation cannot deal with various water types, so Two-step has only a slight effect on color correction. DCP [34] and IBLA [44] produce little visible change, and Haze-Lines [46] only improves some of the images. This is because of the mismatch between the priors and the scenes. The overestimation of TM makes the restored images generated by UDCP [37] too dark. DHDP [49] applies the histogram prior and introduces undesirable red artifacts. As for learning-based methods, UWCNN [65] has only a slight effect, while Water-Net [69] produces pleasing restoration results due to the perceptual optimization and the powerful learning ability of the model.

For images with high backscatter shown in Fig. 21, Fusion [79] can preserve details and has outstanding dehazing effects, because it uses the output of CLAHE as the input of the Fusion [79]. Two-step [92] also applies CLAHE to obtain the reference image, but it fails to find the desired balance between the raw image and the reference image. The methods of DCP [34], UDCP [37], and IBLA [44] also show poor performance on dehazing due to the inaccurate model and assumptions. DHDP [49] effectively reduces backscatter but easily introduces artifacts. Haze-Lines [46] remains unstable in different scenes because of the wrong estimation of the water types. UWCNN [65] uses synthetic underwater images for training, which leads to the limited generalization capacity of the trained model. Thus, it produces unpleasing results on real-world images. Finally, Water-Net [69] has a dehazing effect on each testing image, but the result is not as obvious as conventional methods, such as Fusion [79], DHDP [49], and Haze-Lines [46].

Combining the two comparisons above, most underwater restoration methods perform dehazing well, while their color correction is unsatisfactory. Colorful and brighter images can be obtained when enhancement methods are applied as post-processing. However, red artifacts and halos may be introduced at the same time. One valuable direction is to effectively combine the underwater image restoration method and the underwater image enhancement method. Furthermore, researchers have found that the commonly used image formation model is partly to blame for the unstable results of current model-based methods [89]. Methods based on a more accurate model are needed for correcting complex underwater scenes.

Since the estimation of BL and TM plays a decisive role in the restoration process, Figs. 22, 23, and 24 show the restored result, the BL estimation, and the TM estimation of each model-based method, respectively, to explain the results generated by these model-based methods. The images are selected from UIEBD and cover various scenes.

By analyzing the subjective results in Figs. 22, 23, 24, it can be found that DCP [34] has little effect on underwater image restoration. This is because the model used by DCP fails to consider the selective attenuation of light underwater, leading to the same value of BL in three channels and the overestimation of TM. In addition, brighter objects in the foreground also lead to the wrong estimation of TM. To solve the problem in DCP, UDCP [37] estimates TM by blue and green channels, ignoring the red channel, which may lead to the underestimation of TM. Distinct dehazing results can be obtained by UDCP [37] for blue-green and blueish images. However, UDCP [37] aggravates the effect of the color cast because it fails to consider wavelength-dependent compensation and has a wrong estimation of BL in most cases, shown in Fig. 23. DHDP [49] shows a good performance on color correction and contrast improvement due to the enhancement algorithm (Histogram prior, HP) applied after image restoration. Unfortunately, the application of HP may result in severe red artifacts in images with strong red light attenuation, as shown in Fig. 22e. Considering selective attenuation of light underwater, the TM estimated by IBLA [44] is different in three channels. For easy observation, Fig. 24 shows the normalization of the blue channel in TM estimated by IBLA [44]. IBLA [44] has a less positive effect on dehazing and contrast improvement, especially for images with large non-textured areas, which violates the basic assumption of IBLA [44]. Haze-Lines [46] shows a good performance in some cases. As shown in Fig. 24, the TM estimated by Haze-Lines [46] is wrong and contains a lot of noise, which causes over-dark areas in Fig. 22 a, b, d. GDCP [39] is a variant of DCP. It considers that the low estimation of BL in underwater images with low brightness leads to the failure of image restoration. 
Therefore, GDCP improved the estimation formula of TM to make sure that both the numerator and denominator are greater than 0. However, this improvement leads to the over-brightness of restoration results, as shown in Fig. 22a–c. Moreover, the restoration fails when the color of the object is close to the estimated BL. It can be seen that the color of an object in Fig. 22d is different from the estimated BL in Fig. 23d. As a result, GDCP performs best on Fig. 22d, but not well on other images.
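To make the difference between the DCP and UDCP transmission estimates discussed above concrete, the following sketch computes a dark-channel-based TM from an RGB image and a given BL, using either all three channels (DCP-style) or only the green and blue channels (UDCP-style). It is an illustration under stated assumptions rather than the implementation of [34] or [37]: the function names are hypothetical, the image is assumed to be a float array in [0, 1] with RGB channel order, and the patch size of 15 and the factor `omega = 0.95` are assumed typical values.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def dark_channel(img, patch=15, channels=(0, 1, 2)):
    """Minimum over the selected channels, then over a patch x patch window."""
    per_pixel_min = img[..., list(channels)].min(axis=2)
    r = patch // 2                                   # patch is assumed to be odd
    padded = np.pad(per_pixel_min, r, mode="edge")
    windows = sliding_window_view(padded, (patch, patch))
    return windows.min(axis=(2, 3))

def estimate_transmission(img, background_light, patch=15,
                          channels=(0, 1, 2), omega=0.95):
    """t = 1 - omega * dark_channel(I / B), with B given per channel."""
    normalized = img / np.maximum(background_light, 1e-6)
    return 1.0 - omega * dark_channel(normalized, patch, channels)
```

Calling `estimate_transmission(img, bl)` uses all channels, while `estimate_transmission(img, bl, channels=(1, 2))` ignores the red channel. In a region where the red channel is almost fully attenuated, the three-channel dark channel is close to zero and the estimated TM is close to 1, which illustrates the overestimation attributed to DCP above.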

To sum up, model-based methods usually have poor robustness and show less effect on color correction. The main reason is that the priors are not always effective, and the image formation model cannot represent the complex imaging environment underwater. Underwater images with various scenes are challenging for all kinds of underwater vision enhancement methods.

Since natural light cannot reach the deep sea, artificial light sources are added to the device to expand the imaging area, which is accompanied by the problems of light spots and non-uniform light distribution. Due to the wide application of artificial light sources, underwater images with artificial light sources are also necessary for this experiment. For images with artificial light sources in Fig. 25, the over-bright areas tend to become reddish to different degrees after enhancement by the various methods.

Although Fusion [79], Two-step [92], and DHDP [49] have the problem of reddish and over-saturated areas, these methods obtain pleasing results in general. DCP [34] and UDCP [37] show poor performance because the light-colored pixels in the foreground violate the prior used by these methods, resulting in the incorrect estimation of TM. GDCP [39] can improve the brightness of the processed images but has the problem of over-exposure when the brightness of the images is moderate. IBLA [44] uses blurriness for estimation to ensure that BL and TM are correctly estimated when the foreground of the image contains a light-colored region. Therefore, it handles the light spots relatively well. Water-Net [69] has no obvious effect, because it was not trained with artificially illuminated underwater images. None of the results shown in Fig. 25 is satisfying. Processing underwater images with artificial light sources remains an open problem.

5.3 Objective comparison

A complete set of underwater image quality evaluation standards is essential to promote underwater vision enhancement technologies. However, none of the conventional color image quality evaluation methods is reliable for quality evaluation of the results generated by underwater vision enhancement algorithms. In recent years, with the high demand for automated underwater image quality evaluation, several evaluation methods have been proposed for underwater images. The commonly-used metrics include underwater image quality measures (UIQM) [94], underwater color image quality evaluation (UCIQE) [95], and patch-based contrast quality index (PCQI) [96].

Panetta et al. presented a method (UIQM) to evaluate the performance of the algorithm without a reference image [94]. It effectively measures the quality of the image through a linear combination of image characteristics. The expression of UIQM is shown in formula (19):

\[ \mathrm{UIQM} = c_1 \times \mathrm{UICM} + c_2 \times \mathrm{UISM} + c_3 \times \mathrm{UIConM} \tag{19} \]

where UICM, UISM, and UIConM represent the colorfulness, sharpness, and contrast measures, respectively, and c1, c2, and c3 are weighting factors, with c1 = 0.0282, c2 = 0.2953, and c3 = 3.5753.

Yang and Sowmya presented UCIQE in the CIELab color space, which correlates with human subjective perception [95]. UCIQE is a weighted combination of chroma, saturation, and contrast that quantifies the quality of restored underwater images. The expression of UCIQE is shown in formula (20):

\[ \mathrm{UCIQE} = c_1 \times \sigma_c + c_2 \times con_l + c_3 \times \mu_s \tag{20} \]

where σc is the standard deviation of chroma, conl is the contrast of brightness, μs is the average saturation, and c1, c2, and c3 are weighting factors, with c1 = 0.4680, c2 = 0.2745, and c3 = 0.2576.
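As a small worked illustration of the two weighted sums above, the following Python functions combine already-computed component measures with the weighting factors quoted in the text. The function names are hypothetical, and the computation of the components themselves (UICM, UISM, UIConM, σc, conl, μs) is deliberately left out because it is defined in [94] and [95].

```python
def uiqm(uicm, uism, uiconm, c1=0.0282, c2=0.2953, c3=3.5753):
    """Linear combination of colorfulness, sharpness, and contrast measures."""
    return c1 * uicm + c2 * uism + c3 * uiconm

def uciqe(sigma_c, con_l, mu_s, c1=0.4680, c2=0.2745, c3=0.2576):
    """Linear combination of chroma std, brightness contrast, and mean saturation."""
    return c1 * sigma_c + c2 * con_l + c3 * mu_s
```

Because c3 is two orders of magnitude larger than c1 in UIQM, the score is far more sensitive to a unit change in the contrast term than in the colorfulness term, which is worth keeping in mind when interpreting the tables in this section.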

Wang et al. proposed PCQI based on patch-structure [96]. This method evaluates the image quality by calculating the average value, signal intensity, and signal structure of each local patch, and comparing the contrast difference between the images.

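In addition to the above commonly used metrics, researchers also use the following metrics to evaluate the quality of underwater images. Colorfulness contrast fog density index (CCF) [97] is a linear combination of colorfulness, contrast, and fog density:

\[ \mathrm{CCF} = \omega_1 \times \mathrm{Colorfulness} + \omega_2 \times \mathrm{Contrast} + \omega_3 \times \mathrm{Fogdensity} \]

where ω1, ω2, and ω3 are weighting coefficients.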

Image information entropy H(ξ) is used to measure the amount of information in an image, which is represented by:

\[ H(\xi) = -\sum_{i=0}^{255} P_i \log_2 P_i \]

where i indicates the gray level of the pixel, ranging from 0 to 255, and Pi is the proportion of pixels whose gray level is i.

Structural similarity index measure (SSIM) [98] measures the similarity between the enhanced image x and the reference image y from three aspects: brightness, contrast, and structure:

\[ \mathrm{SSIM}(x, y) = \frac{(2\mu_x \mu_y + c_1)(2\sigma_{xy} + c_2)}{(\mu_x^2 + \mu_y^2 + c_1)(\sigma_x^2 + \sigma_y^2 + c_2)} \]

where μx and μy are the average values of x and y, \( {\sigma}_x^2 \) and \( {\sigma}_y^2 \) are the variances of x and y, σxy is the covariance of x and y, \( c_1 = (k_1 L)^2 \) and \( c_2 = (k_2 L)^2 \) are constants for stability, L is the range of pixel values, k1 = 0.01, and k2 = 0.03.

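Mean square error (MSE) computes the average squared error between the enhanced image x and the reference image y:

\[ \mathrm{MSE} = \frac{1}{M \times N} \sum_{i=1}^{M} \sum_{j=1}^{N} \left( x(i, j) - y(i, j) \right)^2 \]

where M×N is the image size.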
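The entropy, SSIM, and MSE definitions above can be sketched in a few lines of NumPy. The code below assumes 8-bit grayscale images, and the function names are illustrative. Note that `global_ssim` evaluates the SSIM expression once over the whole image, which is a simplification made here for brevity; practical implementations usually compute it over local windows and average the result.

```python
import numpy as np

def entropy(gray):
    """Shannon entropy (bits) of an 8-bit grayscale image (uint8 array)."""
    hist = np.bincount(gray.ravel(), minlength=256).astype(np.float64)
    p = hist / hist.sum()
    p = p[p > 0]                                   # 0 * log(0) is treated as 0
    return float(-(p * np.log2(p)).sum())

def mse(x, y):
    """Mean squared error between two images of equal size."""
    x = x.astype(np.float64)
    y = y.astype(np.float64)
    return float(np.mean((x - y) ** 2))

def global_ssim(x, y, L=255.0, k1=0.01, k2=0.03):
    """SSIM expression evaluated once over the whole image (no local windows)."""
    x = x.astype(np.float64)
    y = y.astype(np.float64)
    c1, c2 = (k1 * L) ** 2, (k2 * L) ** 2
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = ((x - mu_x) * (y - mu_y)).mean()
    num = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    den = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return float(num / den)
```

Entropy is a no-reference measure computed on the enhanced image alone, whereas SSIM and MSE require a reference image and are therefore only applicable to datasets such as UIEBD or EUVP that provide one.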

Table 7 summarizes the above-mentioned metrics. Note that ↑ or ↓ in the last row represents that the image quality is better when the metric is higher or lower.

To obtain a comprehensive objective assessment, the average values of different methods are assessed in terms of UIQM, UCIQE, and PCQI on UIEBD, which is composed of 890 original underwater images. Table 8 reports the results with the highest values shown in bold. Note that UDCP [37] gets the highest score on both UIQM and PCQI, which suggests that UDCP produces prominent sharpness and color. However, the inaccurate TM estimation of UDCP aggravates the color deviation, which makes the results completely different from the raw images and leads to higher UIQM and PCQI. DHDP [49] and Fusion [79] achieve relatively high scores on all indicators, verifying that both methods provide colorfulness correction and remarkable contrast enhancement. This is because the white balance algorithm of Fusion [79] and the histogram-based algorithm of DHDP contribute the most to obtaining rich colors. By comparison, the objective values of learning-based methods (UWCNN [65] and Water-Net [69]) are unsatisfactory. These methods are stable but have limited restoration ability.

To further illustrate the difference between subjective and objective evaluation, we perform an extensive evaluation based on eight images with different color shifts, as shown in Fig. 26. UIQM, UCIQE, and PCQI are used to evaluate the quality of each result in Fig. 26. Tables 9, 10, and 11 give the values of UIQM, UCIQE, and PCQI, respectively, and the highest score is shown in bold. It is evident that DCP [34] and UDCP [37] get the lowest score in most cases, which is consistent with the subjective evaluation. For blue tone images (f) and (g), UDCP [37] gets the best score of UCIQE because of the high contrast it produces. Water-Net obtains the median score. However, not all objective assessments accord with the subjective assessment. For example, the average scores of DHDP [49] are the highest on all three metrics, while DHDP introduces annoying red artifacts in blue tone images. The same situation occurs in the UIQM and UCIQE values of IBLA [44] in Fig. 26a. This is because underwater image quality evaluation metrics focus excessively on contrast, saturation, and chroma, but ignore the high-level semantics of human perception. This problem may result in abnormal assessments in some cases.

In summary, underwater image enhancement methods usually operate on the whole image, which produces over-enhanced regions. For example, Fusion [79] excessively increases the value of the red channel in Fig. 26f–h, which leads to unpleasing red artifacts. Nevertheless, Fusion [79] robustly improves the colors and contrast of images where the light has not been strongly attenuated. This is due to the fusion of the high-contrast input and the color-corrected input, which is simple but effective. Although the model-based methods obtain pleasing results in some cases, these methods have poor robustness due to the inaccurate image formation model and the easily violated priors. Specifically, UDCP [37] has a distinct dehazing effect on images with strong red light attenuation, but fails to consider color correction. The results of DHDP [49] preserve details and have vivid color due to the enhancement algorithm added after image restoration. However, the enhancement algorithm easily introduces reddish deviation when the red light is strongly attenuated, which is the same problem as the enhancement methods. IBLA [44] has little effect on backscatter removal and color correction, especially in foreground regions with less texture. However, IBLA [44] has a positive effect on images with artificial light sources. This is because it uses blurriness for BL and TM estimation, which is unaffected by light-colored regions in the foreground. As for learning-based methods, they cannot outperform conventional methods in most cases due to their poor generalization ability. To be specific, Water-Net [69] has a moderate performance on the testing images. It alleviates color cast and improves contrast to some extent, but less distinctly than Fusion [79]. One of the reasons is the lack of a well-designed network aimed at underwater images. UWCNN [65] performs poorly on almost every image because synthetic data is used for training. Additionally, getting the highest score on objective evaluation metrics may not be consistent with the corresponding subjective evaluation in some cases. This is partly because the commonly used no-reference metrics do not take color cast and artifacts into account.

5.4 Computation complexity comparison

To evaluate the computation complexity of each algorithm, we construct an underwater image dataset containing 200 images with the size 640×640. The experiment is implemented on a Windows 10 PC with Intel(R) Xeon(R) Silver 4414 CPU @ 2.20GHz, 32.0GB RAM, and Tesla V100-PCIE-32GB using Matlab2018 and Python 3.6. The average running time of each algorithm is shown in Table 12.

From Table 12, we notice that Two-step runs the fastest among all the compared methods. This is because Two-step only contains simple linear transformations, which sacrifices accuracy for time efficiency. Additionally, enhancement methods have a low complexity, while most restoration methods require more time to produce their results because they spend more time on TM estimation. Haze-Lines and IBLA have the highest computation complexity. Specifically, Haze-Lines repeats its estimation ten times over different water types to choose the most appropriate one, and IBLA spends more time on the blurriness estimation used for TM estimation. As for learning-based methods, although their runtime is shorter than that of restoration methods, they require an extremely long training time.
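A timing protocol of this kind can be sketched as follows. This is a minimal illustration and not the script used to produce Table 12: the function name `average_runtime`, the `method` callable, and the warm-up count are assumptions introduced here.

```python
import time
from statistics import mean


def average_runtime(method, images, warmup=1):
    """Return the mean wall-clock seconds `method` needs per image.

    `method` is any callable that takes one image; `images` is a sequence of
    images. Both are placeholders for a real enhancement method and dataset.
    """
    if not images:
        raise ValueError("images must not be empty")
    # Warm-up calls keep one-off costs (caching, lazy imports, model loading)
    # out of the measurement.
    for image in images[:warmup]:
        method(image)
    timings = []
    for image in images:
        start = time.perf_counter()
        method(image)
        timings.append(time.perf_counter() - start)
    return mean(timings)


if __name__ == "__main__":
    # Tiny stand-in dataset: three 4x4 "images" of grey values.
    dummy_images = [[[0.5] * 4 for _ in range(4)] for _ in range(3)]

    def invert(img):
        return [[1.0 - v for v in row] for row in img]

    print(f"{average_runtime(invert, dummy_images):.6f} s per image")
```

When a method runs on a GPU, execution is typically asynchronous, so the device has to be synchronised before the clock is read; otherwise the measured time may cover only the launch of the computation.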

6 Challenges and future trends

Underwater vision enhancement technologies have been developed over many years, but limitations and challenges remain. In this section, we discuss the challenges of this field and recommend potential directions, hoping to provide relevant cues for future work.

6.1 Image formation model and model-based methods

The current underwater image formation model (IFM) introduced in Section II has been used in almost every underwater image restoration method. Unfortunately, the experiments conducted in Section IV have shown that these state-of-the-art model-based methods are particularly susceptible to various color casts and produce inconsistent results. This is partly due to the inaccurate IFM, which was originally derived for imaging in haze. It has been shown that the complex interaction of water type, season, location, illumination, and other factors affects the formation of underwater images [99], while the IFM does not take these factors into account. Recently, revisions of the underwater image formation model have been proposed [99, 100] to support robust model-based methods. Nevertheless, the revised model introduces more dependencies that require external hardware for measurement. The technique called Sea-thru [89], which is based on the revised model, has achieved great success in underwater image restoration and has inspired further research on color reconstruction in underwater images. However, Sea-thru ignores part of the dependencies in the revised model and requires a depth map for parameter estimation. With only a single underwater image as input, restoring images based on the revised model seems impossible.

To solve these problems, researchers are expected to develop novel methods based on the revised model that depend less on hardware. Benefitting from the powerful learning ability of deep nets, accurately estimating the parameters of the revised model with well-designed networks is also a promising direction. Moreover, reasonably simplifying the revised model, or proposing a simple but effective underwater image formation model, would also be a meaningful study.
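To make the sensitivity of model-based restoration concrete, the sketch below assumes the commonly used simplified form of the IFM, I = J·t + B·(1 − t), applied to one pixel of one color channel, where I is the observed intensity, J the scene radiance, t the transmission, and B the background light. The function names and the lower bound `t_min` are hypothetical and introduced only for illustration; they are not taken from any of the reviewed methods.

```python
def degrade(j, t, b):
    """Forward model for one channel value: I = J*t + B*(1 - t)."""
    return j * t + b * (1.0 - t)


def restore(i, t, b, t_min=0.1):
    """Invert the model for the scene radiance J, given estimates of t and B.

    t_min is a hypothetical lower bound that stops noise from being amplified
    without limit where the transmission estimate is close to zero.
    """
    t = max(t, t_min)
    j = (i - b) / t + b
    return min(max(j, 0.0), 1.0)  # clip to the valid intensity range [0, 1]


if __name__ == "__main__":
    observed = degrade(0.8, 0.5, 0.2)       # true J = 0.8, t = 0.5, B = 0.2
    print(restore(observed, 0.5, 0.2))      # inversion with the correct t
    print(restore(observed, 0.3, 0.2))      # inversion with an underestimated t
```

With the correct transmission the inversion returns the original radiance, whereas an underestimated transmission pushes the result past the valid range so that it is clipped. Errors in the estimated TM or BL therefore translate directly into over-corrected colors, which is one way the inconsistency described above can arise.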

6.2 Robustness, computation efficiency, and practicality

Conventional model-based methods, which are sensitive to the water type, have low efficiency when the priors or assumptions are complicated (e.g., Haze-Lines [46], IBLA [44]). As for learning-based methods, well-trained models have a good generalization capacity, but require a long training time because of their large number of parameters. In contrast, most enhancement methods have high computation efficiency, but easily suffer from under- or over-enhancement. This is because these methods apply one algorithm to the whole image without considering local features. This may amplify noise, which is harmful to some high-level vision tasks, such as sea cucumber detection. There seems to be no method that is both robust and computationally efficient.

Although designing a method that copes with every type of water has so far proved out of reach, the powerful representational ability of deep nets gives more possibilities for robustly reconstructing the colors of underwater images. A well-designed model with less computation is expected to solve this problem. Additionally, advances in the underwater image formation model would also greatly help to improve the robustness of model-based methods.

6.3 Objective assessment metrics

Evaluation metrics designed specifically for underwater images provide realistic feedback on image quality. However, few metrics have been proposed for underwater images. Furthermore, as we point out in Section IV, these metrics only focus on the color and sharpness of the image. Enhancement methods, which pay more attention to the global color and contrast of the image, are therefore able to obtain higher scores on these metrics. As a result, current metrics cannot produce a reliable objective evaluation of underwater image quality.

In our view, in addition to the chroma, saturation, and contrast, a comprehensive underwater image quality evaluation metric should also take the following factors into account.

a. Red artifacts and over/under-enhanced areas. Due to the attenuation of red light underwater, underwater vision enhancement methods easily produce annoying red artifacts, especially on deep-sea images. Metrics should be able to detect the areas affected by these artifacts.

b. Unnatural color. Colorful but unnatural results may acquire higher scores according to the current metrics, while higher scores should be obtained by reconstructing the natural color of underwater images.

c. Underwater image formation properties. Considering underwater imaging properties may require estimating the optical parameters of the image, which is also a challenge faced by restoration methods.

d. High-level vision task orientation. It is widely known that underwater high-level vision tasks require a large number of high-quality underwater images to ensure accuracy. However, current underwater image assessment metrics only focus on color and contrast, so images with high objective scores may still fail to provide effective information for these tasks. Recently, several works aimed at identifying high-quality pest images for high-level vision tasks have been published [101,102,103]. Herein, we call for underwater image assessment metrics that address different features, in order to evaluate the practical value of these images for high-level vision tasks.
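As an illustration of factor (a), the following sketch measures the fraction of an image occupied by strongly red-dominant pixels. It is a hypothetical indicator introduced here for illustration only, not an established or validated metric; the function name `red_artifact_ratio` and the threshold `margin` are assumptions, and plain Python lists are used instead of an array library to keep the example self-contained.

```python
def red_artifact_ratio(image, margin=0.3):
    """Fraction of pixels whose red value exceeds both green and blue by `margin`.

    `image` is a list of rows, each row a list of (r, g, b) tuples in [0, 1].
    `margin` is a hypothetical threshold that would need tuning on real data.
    """
    total = 0
    flagged = 0
    for row in image:
        for r, g, b in row:
            total += 1
            if r - max(g, b) > margin:
                flagged += 1
    return flagged / total if total else 0.0


if __name__ == "__main__":
    enhanced = [
        [(0.9, 0.2, 0.1), (0.3, 0.5, 0.6)],
        [(0.5, 0.4, 0.4), (1.0, 0.1, 0.6)],
    ]
    print(red_artifact_ratio(enhanced))
```

Such a simple rule would also flag objects that are naturally red, so a practical metric would have to separate artifacts from genuine scene content, for example by comparing the enhanced image with its input.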

6.4 Underwater image benchmark datasets

A real-world underwater image dataset with a wide range of diversity serves as a benchmark for evaluating the performance of vision enhancement technologies and is the foundation of learning-based methods. An increasing number of underwater image datasets have been constructed recently, as introduced in Section III, but most of them are monotonous. Moreover, few of them can be used directly for training learning-based methods, because the number of underwater images with corresponding reference images is limited. Learning-based underwater vision enhancement methods therefore usually employ synthetic images, which have corresponding ground-truth images. However, synthetic images still differ sharply from real-world images because of the complex underwater imaging environment. As a result, models trained on synthetic datasets may have a limited generalization capability and produce unpleasing results on real-world images.

To address this issue, unsupervised learning techniques make it possible to train deep nets without ground truth. Similarly, realistic datasets can also be generated by these unsupervised learning techniques. Once realistic underwater datasets can be synthesized successfully, almost all the possible directions will benefit. However, since none of the underwater image restoration methods based on unsupervised learning has achieved satisfactory results, unsupervised learning still needs further study.

6.5 Challenging underwater scenes

Almost every method performs well on underwater images with low backscatter and attenuation, but fails to restore challenging images. Therefore, future work is expected to cope better with challenging underwater scenes. Herein, we list some notoriously challenging scenes.

a. Artificial light sources and marine snow. Artificial light sources are used to improve the visibility of the underwater scene. However, both marine snow and the introduction of artificial light cause an uneven light distribution and bright specks, which are difficult to deal with.

b. High attenuation. Due to the severe attenuation of red light underwater, little information exists in the red channel of deep-sea images. Few methods have been designed to handle this type of image [104]. Methods are expected to recover the red channel information in deep-sea images, and images containing color charts (e.g., SQUID [46], RGBD Dataset [89]) may offer a reference for color reconstruction.

c. High backscatter. Backscatter, which grows with distance, is hard to remove completely from the whole image. Removing backscatter by using an accurate depth map may be a reasonable approach.
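The idea in item (c) can be sketched as follows, assuming the widely used exponential form in which backscatter rises from zero towards a saturation value with range, B(z) = B∞ · (1 − exp(−β·z)). The parameters `b_inf` and `beta` are assumed to be known here, although estimating them is itself part of the problem; the function names are hypothetical, and the example handles a single channel with plain Python lists.

```python
import math


def backscatter(z, b_inf, beta):
    """Backscatter at range z: rises from 0 towards the saturation value b_inf."""
    return b_inf * (1.0 - math.exp(-beta * z))


def remove_backscatter(image, depth, b_inf, beta):
    """Subtract range-dependent backscatter from a single-channel image.

    `image` and `depth` are equally sized lists of rows; `depth` holds the
    camera-to-scene range for every pixel. Results are clipped at zero.
    """
    return [
        [max(i - backscatter(z, b_inf, beta), 0.0) for i, z in zip(img_row, z_row)]
        for img_row, z_row in zip(image, depth)
    ]


if __name__ == "__main__":
    channel = [[0.5, 0.5, 0.5]]
    ranges = [[0.0, 2.0, 100.0]]  # near, mid-range, and far pixels
    print(remove_backscatter(channel, ranges, b_inf=0.4, beta=0.5))
```

Because the subtracted term depends on the range of each pixel, an inaccurate depth map removes too little backscatter in some regions and too much in others, which is why the accuracy of the depth map matters. The remaining direct signal would still need attenuation compensation to recover the scene colors.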

6.6 Hardware optimization

Merely using software-based technologies has a limited effect on underwater image restoration. Despite its high cost, optimized hardware can capture high-quality underwater images and provide accurate physical imaging parameters, such as the point spread function (PSF). In addition, hardware such as stereo cameras can reconstruct the 3D structure of the underwater scene much more precisely than software-based estimation. Thus, the information provided by hardware plays an irreplaceable role in underwater image restoration. Underwater image restoration methods that combine software and hardware technology will be another promising direction for improving the quality of degraded images.

7 Conclusion

This review provides a comprehensive summary of underwater vision enhancement technology which has received much attention in recent years. To analyze the main reasons for underwater image degradation, the optical image formation model and its components are introduced in detail. Then, we give a taxonomy of the existing underwater vision enhancement methods, and the advantages and disadvantages of various typical methods are summarized in tables. Moreover, the widely-used underwater image quality evaluation metrics are introduced, and typical methods are assessed from subjective and objective aspects. Finally, we suggest open challenges and potential directions. We hope that all efforts in this review may provide researchers with the essential background to face the challenges and opportunities in underwater vision enhancement.

References

Ren R, Zhang L, Liu L, Yuan Y (2021) Two AUVs guidance method for self-reconfiguration mission based on monocular vision. IEEE Sens J 21(8):10082–10090

Kim B, Kim J, Cho H, Kim J, Yu S (2020) AUV-based multi-view scanning method for 3-D reconstruction of underwater object using forward scan sonar. IEEE Sens J 20(3):1592–1606

Li C, Guo J, Guo C (2018) Emerging from water: underwater image color correction based on weakly supervised color transfer. IEEE Signal Process Lett 25(3):323–327

Zhou J, Zhang D, Zhang W (2020) The classical and state-of-the-art approaches for underwater image defogging: a comprehensive survey. Front Inform Technol Elect Eng 21(12):1745–1769

Han M, Lyu Z, Qiu T, Xu M (2020) A review on intelligence dehazing and color restoration for underwater images. IEEE Trans Syst Man Cybern Syst 50(5):1820–1832

Lu H, Li Y, Zhang Y, Chen M, Serikawa S, Kim H (2017) Underwater optical vision enhancement: a comprehensive review. Mobile Netw Appl 22(6):1204–1211

Wang Y, Song W, Fortino G, Qi L-Z, Zhang W, Liotta A (2019) An experimental-based review of image enhancement and image restoration methods for underwater imaging. IEEE Access 7:140233–140251

Schechner YY, Karpel N (2005) Recovery of underwater visibility and structure by polarization analysis. IEEE J Ocean Eng 30(3):570–587

Treibitz T, Schechner YY (2006) Instant 3Descatter. In: Proc. CVPR, New York, USA, pp 1861–1868

Treibitz T, Schechner YY (2009) Active polarization descattering. IEEE Trans Pattern Anal Mach Intell 31(3):385–399

Hu H, Zhao L, Huang B, Li X, Wang H, Liu T (2017) Enhancing visibility of polarimetric underwater image by transmittance correction. IEEE Photonics J 9(3):1–10

Hu H, Zhao L, Li X, Wang H, Yang J, Li K, Liu T (2018) Polarimetric image recovery in turbid media employing circularly polarized light. Opt Express 26(19):25047–25059

Huang BJ, Liu T, Hu H, Han JH, Yu MX (2016) Underwater image recovery considering polarization effects of objects. Opt Express 24(9):9826–9988

Wu HD, Zhao M, Xu WH (2020) Underwater de-scattering imaging by laser field synchronous scanning. Opt Lasers Eng 126:1–8

Ishibashi S (2011) The study of the underwater camera model. In: Proc. OCEANS, Santander, Spain, pp 1–6

Pizer SM, Amburn EP, Austin JD et al (1987) Adaptive histogram equalization and its variations. Comput Gr Image Process 39(3):355–368

Bruno F, Bianco G, Muzzupappa M, Barone S, Razionale AV (2011) Experimentation of structured light and stereo vision for underwater 3D reconstruction. ISPRS-J Photogramm Remote Sens 66(4):508–510

Roser M, Dunbabin M, Geiger A (2014) Simultaneous underwater visibility assessment, enhancement and improved stereo. In: Proc. ICRA, Hong Kong, China, pp 3840–3847

Lin Y, Chen S, Tsou C (2019) Development of an vision enhancement module for autonomous underwater vehicles through integration of visual recognition with stereoscopic image reconstruction. J Mar Sci Eng 7(4):1–42

Luczynski T, Luczynski P, Pehle L, Wirsum M, Birk A (2019) Model based design of a stereo vision system for intelligent deep-sea operations. Measurement 144:298–310

Tan CS, Sluzek A, Seet G, Jiang TY (2006) Range gated imaging system for underwater robotic vehicle. In: Proc. OCEANS, Singapore, pp 1–6

Li H, Wang X, Bai T, Jin W, Ding K (2009) Speckle noise suppression of range gated underwater imaging system. In: Proc. SPIE, California, USA, pp 1–8

Liu W, Li Q, Hao G, Wu G, Lv P (2018) Experimental study on underwater range-gated imaging system pulse and gate control coordination strategy. In: Proc. SPIE, Beijing, China

Wang M, Wang X, Sun L, Yang Y, Zhou Y (2020) Underwater 3D deblurring-gated range-intensity correlation imaging. Opt Lett 45(6):1455–1458

Wang M, Wang X, Zhang Y, Sun L, Lei P, Yang Y, Chen J, He J, Zhou Y (2021) Range-intensity-profile prior dehazing method for underwater range-gated imaging. Opt Express 29(5):7630–7640

Han P, Liu F, Wei Y, Shao X (2020) Optical correlation assists to enhance underwater polarization imaging performance. Opt Lasers Eng 134:1–6