Abstract

Underwater optical images are crucial carriers and representations of ocean information and play a vital role in marine exploration. However, owing to the complex underwater environment, images captured by underwater cameras often fall short of the expected quality. This limitation significantly hampers the application and advancement of intelligent underwater image processing systems. Consequently, underwater image enhancement and restoration have attracted extensive research effort. In this paper, we review the degradation mechanisms and imaging models of underwater images and summarize the challenges associated with underwater image enhancement and restoration. We also provide a comprehensive overview of research progress in underwater optical image enhancement and restoration, and introduce publicly available underwater image datasets and commonly used quality evaluation metrics. Through extensive and systematic experiments, we further examine the strengths and limitations of underwater image enhancement and restoration methods. Finally, this review discusses open issues in the field and outlines future research directions. We hope this paper will provide a valuable reference for future studies and contribute to the advancement of research in this domain.



Keywords

- Underwater image enhancement

- Underwater image restoration

- Image quality assessment

- Underwater optical imaging

- Object detection

1 Introduction

In recent years, rapid economic development has placed increasing pressure on the exploitation and utilization of terrestrial space and resources. Consequently, a growing number of researchers have turned their attention to the underwater world. Underwater image processing plays a pivotal role in a wide range of domains, including ocean resource development, environmental monitoring, and security and defense. Nevertheless, underwater imagery exhibits characteristics quite different from those of in-air imagery. Owing to the unique physical and chemical properties of the underwater environment, underwater images frequently suffer from blurred details, reduced contrast, and color distortion. Because the progress of underwater computer vision applications depends on the quality of the acquired images, it is crucial to design algorithms that can enhance and restore underwater images in a variety of complex environments.

Researchers worldwide have recently conducted extensive investigations into underwater image processing and vision technologies, and several scholars have reviewed the field's progress. Han et al. [1] provided an overview of underwater image dehazing and color restoration algorithms but did not introduce the relevant datasets and quality evaluation metrics. Wang et al. [2] discussed traditional methods for underwater image enhancement and restoration but did not comprehensively review data-driven approaches. In contrast to these studies, this paper makes the following contributions. First, we comprehensively examine the challenges and recent advances in underwater image enhancement and restoration. Second, we organize the publicly available underwater image datasets and commonly used quality evaluation metrics, and we conduct quantitative and qualitative experimental analyses of classical algorithms. Third, we explore the interplay between low-level underwater image enhancement and downstream high-level vision tasks. Finally, we offer a forward-looking perspective on future trends in underwater image enhancement and restoration techniques.

2 Underwater Image Enhancement and Restoration

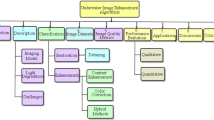

Underwater image enhancement and restoration techniques play a crucial role in improving the overall quality of underwater images. This section introduces the underwater imaging model and the various types of image quality degradation, and then uses a clear taxonomy to systematically review classical algorithms for underwater image enhancement and restoration. Figure 1 illustrates the classification of underwater image enhancement and restoration schemes.

2.1 Challenges of Underwater Imaging

Underwater imagery is a vital source of optical information about the marine realm. Nevertheless, underwater imaging faces a range of severe challenges owing to the inherent complexity of the underwater environment. A thorough understanding of underwater optical imaging models is the foundation for designing robust and effective strategies to improve underwater image quality. Most underwater image restoration methods are built upon the classical Jaffe-McGlamery model or its variants. Figure 2 presents the underwater optical imaging process and the wavelength-dependent attenuation of light as it propagates through water. The main degradation factors in underwater imaging can be summarized as follows:

1) Light of different wavelengths is absorbed and scattered to different degrees in water, and red light is attenuated fastest. As a result, underwater images commonly exhibit a pronounced color cast, primarily a blue-green tone.

2) Light scattering is common in underwater environments and causes image degradation such as blurring, a fog-like haze, and reduced contrast.

3) Natural light intensity attenuates significantly with increasing depth, so artificial light sources are often used for illumination in deep-sea photography. However, this practice introduces noticeably non-uniform brightness within underwater images.

To sum up, underwater optical images encounter a series of complex degradation factors, leading to impaired visual quality and restricting their usability in subsequent machine vision tasks.
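To make the degradation factors above concrete, the sketch below simulates the simplified formation model that many restoration methods derive from the Jaffe-McGlamery model when forward scattering is neglected: I_c(x) = J_c(x) t_c(x) + B_c (1 - t_c(x)), with transmission t_c(x) = exp(-β_c d(x)), where J is the scene radiance, d(x) the camera-to-scene distance, β_c the per-channel attenuation coefficient, and B_c the background light. The function name and the coefficient values in the usage lines are illustrative assumptions, not measured water properties, and artificial lighting (factor 3) is not modelled.

```python
import numpy as np

def synthesize_underwater(clear, depth, beta, background):
    """Degrade a clear RGB image with the simplified underwater formation model.

    clear:      H x W x 3 array in [0, 1], the scene radiance J
    depth:      H x W array of camera-to-scene distances d(x), in metres
    beta:       per-channel attenuation coefficients (R, G, B), in 1/m
    background: per-channel background (veiling) light B, in [0, 1]
    """
    clear = np.asarray(clear, dtype=np.float64)
    beta = np.asarray(beta, dtype=np.float64).reshape(1, 1, 3)
    background = np.asarray(background, dtype=np.float64).reshape(1, 1, 3)
    depth = np.asarray(depth, dtype=np.float64)[..., None]
    # Transmission t_c(x) = exp(-beta_c * d(x)); the channel with the largest beta fades fastest
    t = np.exp(-beta * depth)
    # Attenuated direct signal plus backscattered background light
    degraded = clear * t + background * (1.0 - t)
    return degraded, t

# Hypothetical coefficients: red is absorbed far faster than green and blue
clear = np.ones((2, 2, 3))          # a white patch
depth = np.full((2, 2), 5.0)        # 5 m from the camera
degraded, t = synthesize_underwater(clear, depth, beta=(0.6, 0.1, 0.05),
                                    background=(0.05, 0.4, 0.5))
print(degraded[0, 0].round(2))
```

With these illustrative coefficients, the white patch becomes roughly (0.10, 0.76, 0.89) in (R, G, B), reproducing the blue-green cast of factor 1; restoration methods attempt to invert this model by estimating t and B.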

2.2 Underwater Image Enhancement

Underwater image enhancement techniques typically do not rely on a physical imaging model; instead, they improve the visual quality of low-quality images by directly manipulating pixel values. These methods generally ignore prior knowledge of in-water optical imaging parameters and concentrate on improving contrast, sharpness, and color rendition so that the enhanced images better conform to human visual perception. Underwater image enhancement methods are commonly classified into two categories: hardware-based methods and software-based methods.

2.2.1 Hardware-Based Approaches

Early investigations of underwater image quality improvement concentrated mainly on upgrading hardware-based imaging devices. Schechner et al. [3] proposed a polarization-based algorithm that removed the effects of light scattering to a certain extent; however, it is unsuitable for images acquired underwater under artificial illumination. Treibitz et al. [4] developed a non-scanning recovery method based on active polarization imaging, which significantly improves the visibility of degraded images and works with simple, compact hardware. Liu et al. [5] used a self-built range-gated imaging system to construct a scattering model and proposed an optimal pulse and gate control coordination strategy.

However, these hardware-dependent approaches commonly exhibit limitations such as low efficiency and high costs associated with frequent updates, impeding their capacity to address the multifaceted challenges inherent in diverse underwater environments.

2.2.2 Software-Based Approaches

With the maturation and progression of image processing and computer vision technologies, researchers have increasingly redirected their focus towards software-based methodologies. Underwater image enhancement and restoration algorithms are employed to ameliorate the quality of underwater images, thus enabling the resultant processed images to align with human visual perception characteristics and meet the demands of high-level machine vision tasks.

Spatial-Domain Image Enhancement.

Spatial-domain image enhancement operates directly on image pixels, adjusting the intensity values of the red, green, and blue channels or applying grayscale mappings to redistribute the tonal levels of the image. The widely used spatial-domain techniques can be broadly categorized into contrast enhancement methods and color correction methods.

High-contrast images tend to have rich grayscale detail and a high dynamic range. Zuiderveld [6] proposed Contrast Limited Adaptive Histogram Equalization (CLAHE), which equalizes the histograms of local image tiles while clipping each histogram at a preset limit, so that local contrast is enhanced while noise amplification is restrained. Huang et al. [7] presented a relative global histogram stretching (RGHS) method for shallow-water image enhancement. Although histogram equalization and its variants generally achieve good results on ordinary images, they are not fully suited to underwater scenes. Because these algorithms do not adequately account for the characteristics of the underwater environment, applying them to underwater images can produce significant artifacts and amplified noise; under low-light conditions in particular, the results are also prone to color distortion.
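The clip-limit idea behind CLAHE can be illustrated with a single global tile. This is a simplified sketch, not the full algorithm: CLAHE additionally splits the image into tiles, equalizes each one, and bilinearly interpolates between tile mappings (OpenCV's `cv2.createCLAHE(clipLimit=..., tileGridSize=...)` provides the complete method). The function name and the clip-limit convention (maximum fraction of all pixels per bin) are assumptions of this sketch, and a single redistribution pass can leave some bins slightly above the limit, which full implementations handle iteratively.

```python
import numpy as np

def clipped_hist_equalize(gray, clip_limit=0.01, n_bins=256):
    """Global histogram equalization with a CLAHE-style clip limit.

    gray:       2-D uint8 image
    clip_limit: maximum fraction of all pixels allowed in a single bin
    """
    gray = np.asarray(gray, dtype=np.uint8)
    hist = np.bincount(gray.ravel(), minlength=n_bins).astype(np.float64)
    limit = clip_limit * gray.size
    # Clip over-full bins and spread the excess evenly over all bins; this caps
    # the slope of the mapping, i.e. how strongly contrast (and noise) is amplified
    excess = np.maximum(hist - limit, 0.0).sum()
    hist = np.minimum(hist, limit) + excess / n_bins
    # The normalized cumulative histogram becomes the intensity look-up table
    cdf = np.cumsum(hist)
    span = max(cdf[-1] - cdf[0], 1e-12)
    lut = np.round((cdf - cdf[0]) / span * (n_bins - 1)).astype(np.uint8)
    return lut[gray]

# A low-contrast ramp occupying only the intensities 100..131
low_contrast = np.tile(np.arange(100, 132, dtype=np.uint8), (32, 1))
stretched = clipped_hist_equalize(low_contrast)
```

Here the mapping stretches the 100 to 131 input range to roughly 70 to 171; a smaller clip limit gives a gentler stretch, which is exactly the trade-off between contrast gain and noise amplification described above.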

Because light is attenuated selectively by wavelength in water, underwater images frequently suffer from significant color deviation. To tackle this problem, Henke et al. [8] proposed a feature-based color constancy hypothesis and, based on it, an algorithm for removing the color cast of underwater images. Inspired by the Multi-Scale Retinex with Color Restoration (MSRCR) framework proposed in [9], Liu et al. [10] presented a two-stage method: MSRCR combined with guided filtering was first applied for dehazing, and white balance fusion with global guided image filtering (G-GIF) was then used to enhance edge details and correct the color of underwater images.
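For readers new to color correction, the sketch below shows gray-world white balance, a classical color-constancy baseline chosen here purely for illustration; it is neither Henke et al.'s feature-based hypothesis [8] nor the MSRCR/G-GIF pipeline of [10]. It assumes the average scene color is achromatic and rescales each channel so that its mean matches the mean of all three channels. The function name is hypothetical.

```python
import numpy as np

def gray_world(img, eps=1e-6):
    """Gray-world white balance for an H x W x 3 RGB image in [0, 1]."""
    img = np.asarray(img, dtype=np.float64)
    channel_means = img.reshape(-1, 3).mean(axis=0)
    # Gain per channel pulls every channel mean to the common (gray) mean
    gains = channel_means.mean() / np.maximum(channel_means, eps)
    return np.clip(img * gains, 0.0, 1.0)

# A uniform blue-green cast: R = 0.1, G = 0.5, B = 0.6
cast = np.ones((4, 4, 3)) * np.array([0.1, 0.5, 0.6])
balanced = gray_world(cast)
```

Under strong red absorption the red mean is close to zero, so the red gain becomes very large and amplifies noise, which is one reason underwater-specific color correction methods were developed.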

Transform-Domain Image Enhancement.

Unlike spatial-domain approaches, transform-domain enhancement methods map the image from the spatial domain into another domain for processing, using the Fourier transform, Laplacian pyramid, wavelet transform, or other techniques [11]. In the frequency domain, the low-frequency components of an image mainly represent smooth background regions, whereas the high-frequency components correspond to edges and fine details, where pixel values change sharply. Image quality can therefore be improved effectively by amplifying the frequency components of interest while suppressing unwanted ones.

The wavelet transform is commonly employed in underwater image enhancement. Srikanth et al. [12] formulated energy functionals for the approximation and detail coefficients of the underwater image, and carefully modified the approximation and detail coefficients of the RGB components to adjust contrast and correct the color cast. Iqbal et al. [13] introduced an underwater image enhancement approach based on Laplacian decomposition. The image was divided into low-frequency and high-frequency sub-bands; the low-frequency sub-band was dehazed and normalized for white balancing, while the high-frequency sub-band was amplified to preserve edge details. The enhanced image was then obtained by fusing the two processed sub-bands.
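The low/high frequency split described above can be sketched with a two-band decomposition: a box-filtered (low-pass) image plus the residual detail band. This is a simplified stand-in for the Laplacian decomposition of [13], not its implementation. The low band is only min-max stretched per channel in place of the dehazing and white-balance normalization, the high band is scaled by an assumed gain, and the bands are recombined by addition. All function names and parameter values are illustrative.

```python
import numpy as np

def box_blur(channel, radius):
    """Mean filter over a (2r+1) x (2r+1) window via an integral image (edge padding)."""
    k = 2 * radius + 1
    padded = np.pad(np.asarray(channel, dtype=np.float64), radius, mode="edge")
    # Integral image with a leading row/column of zeros
    integral = np.pad(padded, ((1, 0), (1, 0))).cumsum(axis=0).cumsum(axis=1)
    window_sum = (integral[k:, k:] - integral[:-k, k:]
                  - integral[k:, :-k] + integral[:-k, :-k])
    return window_sum / (k * k)

def two_band_enhance(img, radius=7, detail_gain=1.5):
    """Split an RGB image in [0, 1] into low/high bands, process each, recombine."""
    img = np.asarray(img, dtype=np.float64)
    low = np.stack([box_blur(img[..., c], radius) for c in range(3)], axis=-1)
    high = img - low  # detail band: edges and fine texture
    # Stand-in for "dehaze + white balance" on the low band: per-channel min-max stretch
    lo_min = low.min(axis=(0, 1), keepdims=True)
    lo_max = low.max(axis=(0, 1), keepdims=True)
    low = (low - lo_min) / np.maximum(lo_max - lo_min, 1e-6)
    # Amplify the detail band to preserve edges, then fuse by addition
    return np.clip(low + detail_gain * high, 0.0, 1.0)
```

Because low + high reproduces the input exactly before processing, each band can be modified independently without losing information, which is the property that makes such decompositions attractive for enhancement.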

Fusion-Based Image Enhancement.

Because a method designed around a single feature may not fully exploit the complementary strengths of different features, fusion-based algorithms have been extensively studied and applied to underwater image enhancement. Fused images can carry complementary details from multiple source images, giving a comprehensive representation of the scene that meets the requirements of both human observers and computer vision systems.

Ancuti et al. [14] developed a multi-scale fusion strategy to improve the visual quality of underwater images and videos. The procedure can be summarized as follows. First, a color-corrected version and a contrast-enhanced version of the original underwater image or video frame were taken as inputs. Then, four fusion weights were defined from the contrast, saliency, and exposedness of the two inputs to improve the visibility of the degraded scene. Finally, multi-scale fusion was employed to obtain the enhanced result, avoiding the artifacts and undesirable halos introduced by naive linear fusion. Similarly, Muniraj et al. [15] proposed a perceptual enhancement method for underwater images that combines a color constancy framework with dehazing. Chang et al. [16] introduced a color-contrast complementary framework consisting of two steps: adaptive color perception balance and attentive weighted fusion.
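The core of weight-based fusion, computing per-pixel weight maps for each input, normalizing them, and blending, can be sketched as follows. This is a deliberately simplified single-scale version using only two weights (Laplacian contrast and exposedness, with an assumed σ = 0.25); it omits the saliency and local-contrast weights and, crucially, the multi-scale step in which [14] blends Laplacian pyramids of the inputs with Gaussian pyramids of the weights. Exactly because it is single-scale, it can produce the halos that the multi-scale scheme avoids. Function names are hypothetical.

```python
import numpy as np

def laplacian_contrast(lum):
    """Absolute response of a 4-neighbour Laplacian (edge-padded)."""
    p = np.pad(lum, 1, mode="edge")
    lap = p[:-2, 1:-1] + p[2:, 1:-1] + p[1:-1, :-2] + p[1:-1, 2:] - 4.0 * lum
    return np.abs(lap)

def exposedness(lum, sigma=0.25):
    """Gaussian weight favouring luminance values near mid-grey (0.5)."""
    return np.exp(-((lum - 0.5) ** 2) / (2.0 * sigma ** 2))

def fuse_single_scale(inputs, eps=1e-12):
    """Per-pixel weighted average of K RGB inputs in [0, 1] (each H x W x 3)."""
    stacked = np.stack([np.asarray(im, dtype=np.float64) for im in inputs])  # K x H x W x 3
    lum = stacked.mean(axis=-1)                                               # K x H x W
    weights = np.stack([laplacian_contrast(y) + exposedness(y) for y in lum])
    # Normalize so that the K weights sum to 1 at every pixel
    weights = weights / (weights.sum(axis=0, keepdims=True) + eps)
    return (weights[..., None] * stacked).sum(axis=0)
```

In use, `inputs` would hold, for example, a white-balanced version and a contrast-enhanced version of the same frame, mirroring the two derived inputs described above.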

Deep Learning-Based Image Enhancement.

With the substantial advances in deep learning, a variety of learning-based methods have been broadly exploited in vision tasks and demonstrated excellent performance.

Because convolutional neural networks (CNNs) can learn robust image representations across various vision tasks, numerous CNN-based algorithms have been developed for underwater image enhancement. Perez et al. [17] made a pioneering attempt in this direction. In the same year, Wang et al. [18] presented an end-to-end CNN-based framework (UIE-Net) containing two subnetworks for color correction and haze removal, which was applicable across scenes. Li et al. [19] established a large-scale underwater image enhancement dataset with pseudo-reference images, referred to as UIEBD, and, motivated by the multi-scale fusion strategy, designed a gated fusion network trained on it. Wang et al. [20] proposed UIEC^2-Net, which incorporated both the HSV and RGB color spaces in a single CNN framework and has guided subsequent investigations of underwater image color correction. Moreover, the coarse-to-fine strategy has recently drawn considerable attention in low-level vision tasks and attained impressive performance. Inspired by it, Cai et al. [21] designed a coarse-to-fine scheme that uses three cascaded subnetworks to progressively restore degraded underwater images.

Generative adversarial networks (GANs) are a class of networks that generate the desired outputs through adversarial learning between a generator and a discriminator. Their data-driven training is particularly well suited to underwater image degradation caused by multiple interacting factors, and GAN models have consequently gained significant traction in underwater image enhancement and restoration. Fabbri et al. [22] applied CycleGAN [23] to synthesize diverse paired training data and then used these pairs to correct color deviation in a supervised manner. All of the aforementioned algorithms are trained on large amounts of synthetic data and achieve decent performance. However, they generally do not account for the significant domain gap between synthetic and real-world data, which leads to undesirable artifacts and color distortion on diverse real-world underwater images. To address this issue, a two-stage domain adaptation network for underwater image enhancement was developed in [24], improving both the robustness and the generalization of the network.
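For reference, the adversarial objective behind these models is shown below in the standard form of the original GAN formulation, written for an image-to-image setting where x is a degraded underwater image, y a clear image, G the generator, and D the discriminator. The second line is the cycle-consistency loss that CycleGAN [23] adds, alongside an adversarial loss for each of its two generators G: X → Y and F: Y → X, so that unpaired degraded and clear images can be used. Individual methods cited above modify these objectives (for instance with additional content or perceptual terms); the block is a generic illustration, not the loss of any specific method.

```latex
\min_{G}\max_{D}\;
  \mathbb{E}_{y}\!\left[\log D(y)\right]
  + \mathbb{E}_{x}\!\left[\log\!\left(1 - D(G(x))\right)\right]

\mathcal{L}_{\mathrm{cyc}}(G, F) =
  \mathbb{E}_{x}\!\left[\lVert F(G(x)) - x \rVert_{1}\right]
  + \mathbb{E}_{y}\!\left[\lVert G(F(y)) - y \rVert_{1}\right]
```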

Deep learning has clearly demonstrated its value in underwater image enhancement. However, most mainstream algorithms are computationally expensive and memory intensive, which hinders their deployment in practical applications. To overcome this challenge, Li et al. [25] proposed a lightweight CNN-based model that directly reconstructs clear underwater images instead of estimating the parameters of an underwater imaging model; the method extends efficiently to frame-by-frame enhancement of underwater video. Similarly, Naik et al. [26] introduced Shallow-UWnet, a shallow neural network that requires less memory and computation while achieving performance comparable to state-of-the-art algorithms. Islam et al. [27] presented a fully convolutional GAN-based model that meets the demand for real-time underwater image enhancement. Jiang et al. [28] introduced a recursive strategy for model and parameter reuse, enabling the design of a lightweight model based on Laplacian image pyramids.

2.3 Underwater Image Restoration

The restoration of underwater images can be regarded as an inverse process of underwater imaging, which typically employs prior knowledge and optical properties of underwater imaging to implement degraded image reconstruction. Currently, underwater image restoration methods primarily encompass prior-based approaches and deep learning-based approaches. In this section, we conduct a systematic review of classical restoration schemes.

2.3.1 Prior-Based Image Restoration

Prior-based underwater image restoration methods typically rely on various prior knowledge or assumptions to deduce the crucial parameters of the established degradation model, facilitating the restoration of underwater imagery.

In 2011, He et al. [29] proposed the dark channel prior (DCP), and DCP-based strategies have since been widely applied to efficiently remove haze from single atmospheric images [30]. Because underwater images are commonly affected by particles suspended in the water body, much like the effect of thick fog in the atmosphere, the standard DCP scheme has also been adopted to restore degraded underwater images. Chao et al. [31] directly employed DCP for underwater image dehazing. To refine DCP-based parameter estimation, Akkaynak et al. [32] designed the Sea-thru framework, which combines a revised image formation model (IFM) with a transmission map and obtains distance-dependent attenuation coefficients by estimating the spatially varying illuminant. However, the DCP-based schemes above cannot handle underwater images with multiple types of distortion and show no significant advantage, because they do not adequately account for the differences between in-air and underwater imaging, especially the depth-dependent and wavelength-dependent attenuation of light. To address these issues, a series of priors specifically targeting the optical properties of underwater scenes has been developed.
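The DCP restoration steps can be sketched as follows, assuming an RGB image scaled to [0, 1]. Following He et al., the background light A is taken from the brightest dark-channel pixels, the transmission is estimated as t(x) = 1 - ω min over the patch Ω(x) of min_c I_c(y)/A_c, and the scene radiance is recovered as J = (I - A) / max(t, t0) + A. The defaults (radius 7, i.e. a 15x15 patch, ω = 0.95, t0 = 0.1, top 0.1% of pixels) follow commonly used values; averaging the selected pixels to obtain A and skipping transmission refinement (soft matting or guided filtering) are simplifications of this sketch. Applied unchanged to underwater images, this corresponds to the direct use of DCP described above. Function names are hypothetical.

```python
import numpy as np

def min_filter(channel, radius):
    """Local minimum over a (2r+1) x (2r+1) window, edge-padded."""
    k = 2 * radius + 1
    padded = np.pad(channel, radius, mode="edge")
    h, w = channel.shape
    out = np.full((h, w), np.inf)
    for dy in range(k):
        for dx in range(k):
            out = np.minimum(out, padded[dy:dy + h, dx:dx + w])
    return out

def dark_channel(img, radius=7):
    # Per-pixel minimum over the colour channels, then a local minimum filter
    return min_filter(img.min(axis=-1), radius)

def dcp_restore(img, radius=7, omega=0.95, t0=0.1, top_frac=0.001):
    img = np.asarray(img, dtype=np.float64)
    dark = dark_channel(img, radius)
    # Background light A: mean colour of the brightest top_frac dark-channel pixels
    n = max(1, int(dark.size * top_frac))
    idx = np.argsort(dark.ravel())[-n:]
    A = img.reshape(-1, 3)[idx].mean(axis=0)
    # Transmission: t = 1 - omega * dark_channel(I / A), bounded below by t0
    t = 1.0 - omega * dark_channel(img / np.maximum(A, 1e-6), radius)
    t = np.maximum(t, t0)[..., None]
    # Invert I = J t + A (1 - t) for the scene radiance J
    J = (img - A) / t + A
    return np.clip(J, 0.0, 1.0), t[..., 0], A
```

The lower bound t0 keeps the division stable in dense haze, at the cost of leaving some haze in the farthest regions.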

Based on the view that the blue and green channels are the principal source of underwater visual information, an underwater DCP (UDCP) restoration algorithm was proposed in [33], which applies the DCP only to the blue and green channels to recover the color properties of underwater images. Despite its improved transmission map estimation, the restored images still fall short of the desired quality because UDCP ignores the red channel in all circumstances. Galdran et al. [34] designed a simple yet robust red channel prior-based approach (RDCP) to recover lost visibility and correct color distortion. Using a hierarchical search technique and a new scoring formula, a generalized UDCP was proposed in [35] to obtain more robust estimates of back-scattered light and transmission. However, owing to the inherent limitations of DCP, some images recovered by these methods still exhibit incorrect or unrealistic colors.
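The difference between DCP, UDCP, and the red channel prior lies in which channels enter the local minimum: DCP uses min(R, G, B), UDCP drops red and uses min(G, B), and the red channel prior reverses red and uses min(1 - R, G, B). A minimal sketch, assuming RGB input in [0, 1]; the helper names are hypothetical, and `sliding_window_view` requires NumPy 1.20 or later.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def local_min(channel, radius):
    """Minimum over a (2r+1) x (2r+1) neighbourhood with edge padding."""
    k = 2 * radius + 1
    padded = np.pad(channel, radius, mode="edge")
    return sliding_window_view(padded, (k, k)).min(axis=(-2, -1))

def prior_maps(img, radius=7):
    img = np.asarray(img, dtype=np.float64)
    r, g, b = img[..., 0], img[..., 1], img[..., 2]
    return {
        # DCP: darkest of R, G, B in the patch
        "dcp": local_min(np.minimum(np.minimum(r, g), b), radius),
        # UDCP: red is ignored; only G and B enter the prior
        "udcp": local_min(np.minimum(g, b), radius),
        # Red channel prior: red is reversed (1 - R) before taking the minimum
        "red_channel": local_min(np.minimum(np.minimum(1.0 - r, g), b), radius),
    }

# Red almost fully absorbed, green and blue moderately bright
underwater = np.zeros((8, 8, 3))
underwater[..., 1:] = 0.5
maps = prior_maps(underwater, radius=1)
```

When red is almost fully absorbed, the DCP map is zero everywhere, so t = 1 - ω·0 = 1 and no haze is removed; the UDCP and red channel maps stay informative (0.5 here), which is why these underwater-specific variants were introduced.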

Carlevaris-Bianco et al. [36] presented the maximum intensity prior (MIP), which exploits the strong difference in attenuation among the color channels of underwater images to estimate the transmission map. The MIP was likewise adopted in [37] to estimate the background light. Considering the varied spectral profiles of different water bodies, Berman et al. [38] proposed a scheme to handle wavelength-dependent attenuation, reducing image restoration to single-image dehazing by estimating the attenuation ratios of the blue-green and blue-red channels. Because DCP- or MIP-based methods are easily invalidated by the variable lighting conditions of underwater images, Peng et al. [39] exploited both light absorption and image blurriness to estimate scene depth, background light, and the transmission map more accurately. Song et al. [40] proposed an effective scene depth estimation framework based on the underwater light attenuation prior (ULAP), from which the background light and transmission maps can be estimated to restore the true scene radiance.
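A sketch of the MIP transmission estimate, written from its commonly cited form D(x) = max over Ω(x) of I_R minus max over Ω(x) of max(I_G, I_B), with t(x) = D(x) + (1 - max_x D(x)). The intuition is that where red survives relative to green and blue, the scene is close to the camera, so the pixel with the largest D is assigned t = 1. The final clipping to [0, 1] is an added safeguard rather than part of the prior, and the function names are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def local_max(channel, radius):
    """Maximum over a (2r+1) x (2r+1) neighbourhood with edge padding."""
    k = 2 * radius + 1
    padded = np.pad(channel, radius, mode="edge")
    return sliding_window_view(padded, (k, k)).max(axis=(-2, -1))

def mip_transmission(img, radius=7):
    img = np.asarray(img, dtype=np.float64)
    red = local_max(img[..., 0], radius)
    green_blue = np.maximum(local_max(img[..., 1], radius),
                            local_max(img[..., 2], radius))
    d = red - green_blue               # large where red survives, i.e. close to the camera
    t = d + (1.0 - d.max())            # shift so the closest point gets t = 1
    return np.clip(t, 0.0, 1.0)        # clipping is an added safeguard (assumption)

# Left half: near object (red retained); right half: distant water (red lost)
scene = np.zeros((4, 16, 3))
scene[:, :8] = [0.8, 0.3, 0.3]
scene[:, 8:] = [0.1, 0.5, 0.5]
t_map = mip_transmission(scene, radius=1)
```

Away from the boundary, the near half receives t = 1 and the distant half t = 0.1, matching the intuition behind the prior.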

2.3.2 Deep Learning-Based Image Restoration

Owing to the rapid development of artificial intelligence and computer vision technology, deep learning has made remarkable progress in modeling complex nonlinear systems. Consequently, combining underwater physical models with deep learning techniques has received growing research attention in recent years. Numerous studies have shown that deep neural network-based underwater image restoration schemes can estimate the background light and transmission map accurately.

Building on knowledge of underwater imaging, Li et al. [41] designed an unsupervised GAN that synthesizes realistic underwater-like images from in-air images and depth maps, and then employed a color calibration network trained on these generated data to correct color deviation. Li et al. [42] proposed Ucolor, a deep underwater image restoration framework consisting roughly of two subnetworks: a multi-color space encoder with channel attention and a medium transmission-guided decoder. Specifically, the encoder enriches feature representations with multiple color spaces and adaptively highlights the most discriminative information, while the decoder strengthens the network's response to quality-degraded regions. Based on a physical model and causal intervention, a two-stage GAN-based framework was investigated in [43] for restoring low-quality underwater images in real time. Notably, most existing deep learning-based algorithms rely on synthetic paired data to train a deep neural network for underwater image recovery, which makes them susceptible to domain shift. In view of this challenge, Fu et al. [44] offered a new perspective on underwater image restoration by designing an effective self-supervised framework with a homology constraint.

Overcoming the limited applicability of deep learning-based restoration methods is therefore of great significance. Combining deep learning with suitable underwater physical models or traditional restoration methods is a promising way to achieve underwater image restoration that generalizes across different underwater environments.

3 Underwater Image Datasets and Quality Metrics

Underwater image datasets play a crucial role in data-driven visual tasks, providing valuable references and support for objective quality assessment and visual enhancement of underwater images. Furthermore, establishing a comprehensive underwater image quality evaluation framework will strongly promote innovative advancements in underwater image enhancement and restoration algorithms.

However, underwater image acquisition is challenging and costly because of environmental and equipment constraints, resulting in a scarcity of comprehensive, large-scale underwater image datasets. Existing datasets suffer from limited scene diversity, few category groupings, and a lack of extensive subjective experiments. This section summarizes publicly available datasets used in underwater image enhancement and restoration research, as presented in Table 1. Moreover, existing image quality assessment (IQA) methods are tailored primarily to atmospheric color images and are unreliable for assessing the quality of enhanced underwater images. To fill this gap, several objective quality evaluation metrics have been developed for underwater images, as listed in Table 2. Three of them are widely used in the field. UCIQE [48] is a no-reference metric based on the chroma, contrast, and saturation of underwater images in the CIELab color space. UIQM [49], inspired by the human visual system, combines the underwater image colorfulness measure (UICM), sharpness measure (UISM), and contrast measure (UIConM) to evaluate different attributes of underwater images. The CCF metric [50], inspired by the principles of underwater imaging, uses a weighted fusion of colorfulness, contrast, and fog density measures to provide a comprehensive quality evaluation.
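Both UCIQE and UIQM are linear combinations of their component measures. The weights below are those reported in [48] and [49] and widely reproduced in public implementations; here σ_c is the standard deviation of chroma, con_l the contrast of luminance, and μ_s the average saturation. Implementations differ in details such as how saturation and luminance contrast are computed, so absolute scores are not always comparable across codebases.

```latex
\mathrm{UCIQE} = c_1\,\sigma_c + c_2\,\mathrm{con}_l + c_3\,\mu_s,
  \qquad (c_1, c_2, c_3) = (0.4680,\ 0.2745,\ 0.2576)

\mathrm{UIQM} = c_1\,\mathrm{UICM} + c_2\,\mathrm{UISM} + c_3\,\mathrm{UIConM},
  \qquad (c_1, c_2, c_3) = (0.0282,\ 0.2953,\ 3.5753)
```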

4 Experiments and Analysis

The performance of varying algorithms is discussed from both subjective and objective perspectives in this section, followed by an analysis and outlook on the semantic-aware ability of these enhancement and restoration algorithms in further high-level vision tasks.

Dataset and Implementation Details.

To perform comprehensive and systematic experiments, we select a variety of underwater enhancement and restoration methods for assessment, including traditional enhancement schemes (CLAHE [6], Fusion [14], RGHS [7]), traditional restoration methods (UDCP [33], ULAP [40], IBLA [39]), and data-driven approaches (UWCNN [25], FUnIE-GAN [27], Shallow-UWnet [26], USUIR [44]). The UIEBD dataset comprises 890 real underwater images that represent the diversity of real underwater scenes, and it is commonly employed to evaluate underwater image enhancement and restoration algorithms. To ensure test-set diversity, we select 60 challenging images from UIEBD (denoted Test U-C60) covering distortion types such as blue-green color cast, reduced contrast, non-uniform illumination, and turbidity, with different distortion levels for each type. In addition, the challenging underwater object detection dataset UDD is used to explore the relationship between underwater image quality improvement and high-level vision tasks.

For a fair comparison in both qualitative and quantitative evaluations, all training and testing are carried out on a Windows 11 PC with an NVIDIA RTX 3060 GPU, using Python 3.7 and MATLAB R2021b.

Results on Qualitative and Quantitative Evaluations.

Visual comparisons of the various algorithms on the Test U-C60 set are shown in Fig. 3. The USUIR algorithm achieves satisfactory visual results and copes effectively with the diversity of degraded underwater images. Among the traditional algorithms, Fusion and RGHS show relatively strong generality and adapt flexibly to different types of underwater images, whereas UDCP and IBLA tend to cause over-enhancement and over-saturation. Moreover, almost all algorithms still have limited effect on turbid images.

Because the Test U-C60 set lacks reference images, the algorithms are assessed with the no-reference metrics UCIQE, UIQM, and CCF. The results are summarized in Table 3, where the highest score is shown in bold and the second-highest is underlined. Not all objective results agree with subjective human perception. Specifically, UDCP achieves the highest UCIQE and CCF scores, yet subjective assessment reveals recurrent excessive color enhancement and blurring in the images it produces. This can be attributed to the fact that UCIQE and CCF primarily emphasize the chromaticity and saturation of the enhanced images, whereas UIQM additionally considers sharpness. This difference in evaluation criteria is a key factor behind UDCP's lowest score on the UIQM metric.

Application to High-Level Vision Tasks.

As depicted in Fig. 4, the IQA metric scores are clearly not linearly correlated with object detection accuracy. Compared with the original degraded images, the enhanced images score higher on the quality metrics to some extent, but this does not translate into higher detection accuracy. The practical effect of underwater image enhancement and restoration on object detection and other computer vision applications therefore deserves further in-depth research, and it is important to select and design enhancement and restoration methods according to the specific requirements of the high-level vision task at hand.
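The observation that IQA scores and detection accuracy are not linearly correlated can be quantified across methods with the Pearson (linear) and Spearman (rank) correlation coefficients. The sketch below computes both with NumPy; it reproduces no numbers from Fig. 4, and its inputs are assumed to be whatever per-method values one has measured, for example one UIQM score and one detection mAP on UDD per enhancement method. `scipy.stats.pearsonr` and `scipy.stats.spearmanr` offer equivalent, better-tested functions. Constant input vectors make both coefficients undefined (NaN).

```python
import numpy as np

def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    x = np.asarray(x, dtype=np.float64)
    y = np.asarray(y, dtype=np.float64)
    xc, yc = x - x.mean(), y - y.mean()
    return float((xc @ yc) / np.sqrt((xc @ xc) * (yc @ yc)))

def ranks(values):
    """1-based ranks, with tied values sharing their average rank."""
    v = np.asarray(values, dtype=np.float64)
    r = np.empty(len(v))
    r[np.argsort(v, kind="mergesort")] = np.arange(1, len(v) + 1)
    for val in np.unique(v):
        tied = v == val
        r[tied] = r[tied].mean()
    return r

def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))
```

A Spearman value near 1 with a lower Pearson value would indicate a monotonic but nonlinear relationship, whereas values near 0 would indicate that higher quality scores do not predict better detection.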

5 Opportunities and Future Trends

For future research endeavors in the field of underwater image enhancement and restoration, improvements and innovations are needed in several aspects:

1) Existing approaches tend to consume substantial computational resources and are difficult to deploy on detection devices with limited processing capacity. It is therefore imperative to design lightweight networks for resource-limited scenarios.

2) There is an urgent need for algorithms that adapt to diverse underwater environments and adjust dynamically to different degradation types of underwater images. Further exploration of self-supervised and unsupervised strategies for improving algorithm adaptability is also warranted.

3) Data-driven algorithms often overlook the gap between real and synthetic data, which hinders their generalization to real-world underwater applications. Domain adaptation techniques offer a promising solution to this challenge and a new direction for improving underwater image quality.

4) Existing objective quality metrics are not linearly correlated with underwater object detection accuracy and thus fail to provide effective guidance for downstream high-level tasks. Further research into utility-oriented quality assessment methods is therefore highly warranted.

5) Existing methods mostly focus on improving the perceptual quality of underwater images and neglect the underlying connection between enhancement and high-level vision tasks. Using multitask learning paradigms to integrate underwater image enhancement and restoration with high-level vision tasks is a research avenue worth exploring.

Addressing these aspects should advance underwater image enhancement and restoration and lead to more effective and practical solutions in this field.

6 Conclusion

This review summarizes the current state of research on underwater optical image enhancement and restoration. We first briefly present the underwater optical imaging model to clarify the diverse causes of underwater image degradation. We then systematically classify and discuss existing methods, and briefly introduce publicly available underwater image datasets and quality evaluation approaches. Furthermore, we comprehensively evaluate and compare classical methods, and outline opportunities and future trends. We hope this survey of state-of-the-art methods provides guidance for future research and serves as a useful starting point for researchers new to the field.

References

Han, M., Lyu, Z., Qiu, T., et al.: A review on intelligence dehazing and color restoration for underwater images. IEEE Trans. Syst. Man Cybern. Syst. 50(5), 1820–1832 (2020)

Wang, Y., Song, W., Fortino, G., et al.: An experimental-based review of image enhancement and image restoration methods for underwater imaging. IEEE Access 7, 140233–140251 (2019)

Schechner, Y.Y., Karpel, N.: Recovery of underwater visibility and structure by polarization analysis. IEEE J. Ocean. Eng. 30(3), 570–587 (2005)

Treibitz, T., Schechner, Y.Y.: Active polarization descattering. IEEE Trans. Pattern Anal. Mach. Intell. 31(3), 385–399 (2009)

Liu, W., Li, Q., Hao, G., et al.: Experimental study on underwater range-gated imaging system pulse and gate control coordination strategy. In: Proceedings of the SPIE, Beijing, China (2018)

Zuiderveld, K.: Contrast limited adaptive histogram equalization. In: Graphics Gems IV, pp. 474–485. Academic Press (1994)

Huang, D., Wang, Y., Song, W., Sequeira, J., Mavromatis, S.: Shallow-water image enhancement using relative global histogram stretching based on adaptive parameter acquisition. In: Schoeffmann, K., et al. (eds.) MMM 2018. LNCS, vol. 10704, pp. 453–465. Springer, Cham (2018). https://doi.org/10.1007/978-3-319-73603-7_37

Henke, B., Vahl, M., Zhou, Z.: Removing color cast of underwater images through non-constant color constancy hypothesis. In: 8th International Symposium on Image and Signal Processing and Analysis (ISPA), Trieste, Italy, pp. 20–24 (2013)

Jobson, D.J., Rahman, Z., Woodell, G.A.: A multiscale retinex for bridging the gap between color images and the human observation of scenes. IEEE Trans. Image Process. 6(7), 965–976 (1997)

Liu, K., Li, X.: De-hazing and enhancement method for underwater and low-light images. Multimed. Tools Appl. 80(13), 19421–19439 (2021)

Agaian, S.S., Panetta, K., Grigoryan, A.M.: Transform-based image enhancement algorithms with performance measure. IEEE Trans. Image Process. 10(3), 367–382 (2001)

Vasamsetti, S., Mittal, N., Neelapu, B.C., et al.: Wavelet based perspective on variational enhancement technique for underwater imagery. Ocean Eng. 141, 88–100 (2017)

Iqbal, M., Riaz, M.M., Sohaib Ali, S., et al.: Underwater image enhancement using Laplace decomposition. IEEE Geosci. Remote Sens. Lett. 19, 1–5 (2022)

Ancuti, C., Ancuti, C.O., Haber, T., et al.: Enhancing underwater images and videos by fusion. In: 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, pp. 81–88 (2012)

Muniraj, M., Dhandapani, V.: Underwater image enhancement by combining color constancy and dehazing based on depth estimation. Neurocomputing 460, 211–230 (2021)

Chang, L., Song, H., Li, M., et al.: UIDEF: a real-world underwater image dataset and a color-contrast complementary image enhancement framework. ISPRS J. Photogramm. Remote Sens. 196, 415 (2023)

Perez, J., Attanasio, A.C., Nechyporenko, N., Sanz, P.J.: A deep learning approach for underwater image enhancement. In: Ferrández Vicente, J.M., Álvarez-Sánchez, J.R., de la Paz López, F., Toledo Moreo, J., Adeli, H. (eds.) IWINAC 2017. LNCS, vol. 10338, pp. 183–192. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-59773-7_19

Wang, Y., Cao, J., Wang, Z.: A deep CNN method for underwater image enhancement. In: 2017 IEEE International Conference on Image Processing (ICIP), Beijing, China, pp. 1382–1386 (2017)

Li, C., Guo, C., Ren, W., et al.: An underwater image enhancement benchmark dataset and beyond. IEEE Trans. Image Process. 29, 4376–4389 (2020)

Wang, Y., Guo, J., Gao, H., et al.: UIEC^2-Net: CNN-based underwater image enhancement using two color space. Signal Process.: Image Commun. 96, Art. no. 116250 (2021)

Cai, X., Jiang, N., Chen, W., et al.: CURE-Net: a cascaded deep network for underwater image enhancement. IEEE J. Ocean. Eng. (2023). https://doi.org/10.1109/JOE.2023.3245760

Fabbri, C.M., Islam, J., Sattar, J.: Enhancing underwater imagery using generative adversarial networks. In: 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, QLD, Australia, pp. 7159–7165 (2018)

Zhu, J.-Y., Park, T., Isola, P., et al.: Unpaired image-to-image translation using cycle-consistent adversarial networks. In: 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, pp. 2242–2251 (2017)

Wang, Z., Shen, L., Xu, M., et al.: Domain adaptation for underwater image enhancement. IEEE Trans. Image Process. 32, 1442–1457 (2023)

Li, C., Anwar, S., Porikli, F.: Underwater scene prior inspired deep underwater image and video enhancement. Pattern Recognit. 98, Art. no. 107038 (2020)

Naik, A., Swarnakar, A., Mittal, K.: Shallow-UWnet: compressed model for underwater image enhancement. arXiv preprint arXiv:2101.02073 (2021)

Islam, M.J., Xia, Y., Sattar, J.: Fast underwater image enhancement for improved visual perception. IEEE Robot. Autom. Lett. 5(2), 3227–3234 (2020)

Jiang, N., Chen, W., Lin, Y., et al.: Underwater image enhancement with lightweight cascaded network. IEEE Trans. Multimed. 24, 4301–4313 (2022)

He, K., Sun, J., Tang, X.: Single image haze removal using dark channel prior. IEEE Trans. Pattern Anal. Mach. Intell. 33(12), 2341–2353 (2011)

Parihar, A.S., Gupta, Y.K., Singodia, Y., et al.: A comparative study of image dehazing algorithms. In: International Conference on Communication and Electronics Systems, Coimbatore, India, pp. 766–771 (2020)

Chao, L., Wang, M.: Removal of water scattering. In: 2nd International Conference on Computer Engineering and Technology, Chengdu, China, pp. V2-35–V2-39 (2010)

Akkaynak, D., Treibitz, T.: Sea-Thru: a method for removing water from underwater images. In: 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, pp. 1682–1691 (2019)

Drews, P., Nascimento, E., Moraes, F., et al.: Transmission estimation in underwater single images. In: Proceedings of the IEEE International Conference on Computer Vision, Sydney, Australia, pp. 825–830 (2013)

Galdran, A., Pardo, D., Picón, A., et al.: Automatic red channel underwater image restoration. J. Vis. Commun. Image Represent. 26, 132–145 (2015)

Liang, Z., Ding, X., Wang, Y., et al.: GUDCP: generalization of underwater dark channel prior for underwater image restoration. IEEE Trans. Circuits Syst. Video Technol. 32(7), 4879–4884 (2022)

Carlevaris-Bianco, N., Mohan, A., Eustice, R.M.: Initial results in underwater single image dehazing. In: OCEANS 2010 MTS/IEEE Seattle, Seattle, WA, USA, pp. 1–8 (2010)

Zhao, X., Jin, T., Qu, S.: Deriving inherent optical properties from background color and underwater image enhancement. Ocean Eng. 94, 163–172 (2015)

Berman, D., Levy, D., Avidan, S., et al.: Underwater single image color restoration using haze-lines and a new quantitative dataset. IEEE Trans. Pattern Anal. Mach. Intell. 43, 2822–2837 (2021)

Peng, Y.-T., Cosman, P.C.: Underwater image restoration based on image blurriness and light absorption. IEEE Trans. Image Process. 26(4), 1579–1594 (2017)

Song, W., Wang, Y., Huang, D., Tjondronegoro, D.: A rapid scene depth estimation model based on underwater light attenuation prior for underwater image restoration. In: Hong, R., Cheng, W.-H., Yamasaki, T., Wang, M., Ngo, C.-W. (eds.) PCM 2018. LNCS, vol. 11164, pp. 678–688. Springer, Cham (2018). https://doi.org/10.1007/978-3-030-00776-8_62

Li, J., Skinner, K.A., Eustice, R.M.: WaterGAN: unsupervised generative network to enable real-time color correction of monocular underwater images. IEEE Robot. Autom. Lett. 3(1), 387–394 (2018)

Li, C., Anwar, S., Hou, J., et al.: Underwater image enhancement via medium transmission-guided multi-color space embedding. IEEE Trans. Image Process. 30, 4985–5000 (2021)

Hao, J., Yang, H., Hou, X., et al.: Two-stage underwater image restoration algorithm based on physical model and causal intervention. IEEE Signal Process. Lett. 30, 120–124 (2023)

Fu, Z., Lin, H., Yang, Y., et al.: Unsupervised underwater image restoration: from a homology perspective. In: Proceedings of the AAAI Conference on Artificial Intelligence, vol. 36, no. 1, pp. 643–651 (2022)

Liu, R., Fan, X., Zhu, M.: Real-world underwater enhancement: challenges, benchmarks, and solutions under natural light. IEEE Trans. Circuits Syst. Video Technol. 30(12), 4861–4875 (2020)

Islam, M.J., Luo, P., Sattar, J.: Simultaneous enhancement and super-resolution of underwater imagery for improved visual perception. arXiv preprint arXiv:2002.01155 (2020)

Liu, C., Li, H., Wang, S., et al.: A dataset and benchmark of underwater object detection for robot picking. In: 2021 IEEE International Conference on Multimedia & Expo Workshops (ICMEW), pp. 1–6 (2021)

Yang, M., Sowmya, A.: An underwater color image quality evaluation metric. IEEE Trans. Image Process. 24(12), 6062–6071 (2015)

Panetta, K., Gao, C., Agaian, S.: Human-visual-system-inspired underwater image quality measures. IEEE J. Ocean. Eng. 41(3), 541–551 (2016)

Wang, Y., Li, N., Li, Z., et al.: An imaging-inspired no-reference underwater color image quality assessment metric. Comput. Electr. Eng. 70, 904–913 (2018)

Zheng, Y., Chen, W., Lin, R., et al.: UIF: an objective quality assessment for underwater image enhancement. IEEE Trans. Image Process. 31, 5456–5468 (2022)

Jiang, Q., Gu, Y., Li, C., et al.: Underwater image enhancement quality evaluation: benchmark dataset and objective metric. IEEE Trans. Circuits Syst. Video Technol. 32(9), 5959–5974 (2022)

Acknowledgments

This work was supported by the Natural Science Foundation of Fujian Province under Grant 2022J05117.

© 2023 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Wang, M., Lan, F., Su, Z., Chen, W. (2023). Underwater Image Enhancement and Restoration Techniques: A Comprehensive Review, Challenges, and Future Trends. In: Yongtian, W., Lifang, W. (eds) Image and Graphics Technologies and Applications. IGTA 2023. Communications in Computer and Information Science, vol 1910. Springer, Singapore. https://doi.org/10.1007/978-981-99-7549-5_1

DOI: https://doi.org/10.1007/978-981-99-7549-5_1

Publisher Name: Springer, Singapore

Print ISBN: 978-981-99-7548-8

Online ISBN: 978-981-99-7549-5

eBook Packages: Computer Science, Computer Science (R0)