Abstract

Detection of changes in streaming data is an important mining task, with a wide range of real-life applications. Numerous algorithms have been proposed to efficiently detect changes in streaming data. However, the limitation of existing algorithms is that they assume that data are generated independently. In particular, temporal dependencies of data in a stream are still not thoroughly studied. Motivated by this, in this work we propose a new efficient method to detect changes in streaming data by exploring the temporal dependencies of data in the stream. As part of this, we introduce a new statistical model called the Candidate Change Point (CCP) model, with which the main idea is to compute the probabilities of finding change points in the stream. The computed probabilities are used to generate a distribution, which is, in turn, used in statistical hypothesis tests to determine the candidate changes. We use the CCP model to develop a new algorithm called Candidate Change Point Detector (CCPD), which detects change points in linear time, and is thus applicable for real-time applications. Our extensive experimental evaluation demonstrates the efficiency and the feasibility of our approach.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

The availability of modern technology and the proliferation of mobile devices and sensors have resulted in a tremendous amount of streaming data. Due to its broad real-life applications, including consumption data (electricity, food, oil), finance and stock exchanges, healthcare, and intrusion/fraud detection, detection of changes in streaming data is an important data mining task. A main challenge with mining streaming data is that data in a stream is inherently dynamic, and its underlying distribution can change and evolve over time, leading to what is referred to as concept drift [40]. As an example, in consumption data, we might have data about customer purchasing behavior over time that could be influenced by the strength of the economy. In this case, the concept is the strength of the economy, which can drift. A concept drift can be either a real or virtual concept drift, where changes in the distribution can, in turn, have four different forms [21] namely abrupt, reoccurring, incremental, and gradual, as illustrated in Fig. 1. In addition, we can have mixtures of these forms in streaming data.

Illustration of the four different types of concept drift [21]

What can be inferred from this is that a method developed for mining changes in streaming data has to take into account the different characteristics of concept drifts. In particular, the learning model has to be trained and adapted to the changes, and model parameters should be able to adapt themselves following the changes in the streams. Numerous algorithms have been proposed to detect changes in streaming data [19, 20]. Still, most existing algorithms have been built based on the assumption that streaming data have stable flows, and that they are arriving in the same distribution. In addition, they assume the data to be identically and independently distributed (i.i.d.). However, such an assumption hardly holds in real-life streaming environments.

Focusing on non-stationary environments, several learning algorithms have been proposed to overcome the limitation of the i.i.d. assumption [27, 29]. The majority of existing approaches have, however, assumed that data in a stream are independently but not identically distributed [10, 45]. Adaptive estimation is among the proposed techniques for handling temporal dependencies of data. For example, in [3, 11, 32, 37], the approaches employed adaptive estimation methodology by considering a so-called forgetting factor. Here, according to a decay function of the forgetting factor, the underlying assumption is that the importance of a data in a stream is inversely proportional to its age. As part of this, cumulative measures of the underlying distribution and estimators are maintained and monitored to detect changes, while new data continuously arrive.

Despite their efficiency with respect to detecting changes in data streams, the underlying assumption of the above methods is that data are generated independent of other data in the same stream. Meanwhile, several empirical experiments, e.g., [6, 45], have shown that there are important temporal dependencies among data points in a stream of data, thus making it crucial for further studies, especially focusing on change detection. Motivated by this, the main goal of this work is to investigate the temporal dependencies of data points in a data stream, and use this to develop a novel method that enables monitoring changes in the stream while continuously estimating the underlying data distribution.

To summarize the principle behind the proposed method, Fig. 2 shows a block diagram of the important steps of the method. As shown in this figure, our approach takes a stream of data as input, and the main output is the list of candidate change points. In order to generate this list, the stream is processed in three main steps. The first step is to extract the distribution and determine the dependency information. To do this, we use the Euclidean projection method to generate projections of given data points in a stream onto k previously seen/processed data points. This produces a set of (probabilistic) paths between pairs of observed data points, called trails. In the second step, the mean values of the trails are estimated and used as inputs for the third and final step, in which the change detection is carried out through statistical hypothesis tests. Note that due to the dynamic nature of streaming data, each of the steps has to be efficient and be able to adapt to changes in the stream.

In this work, we make the following main contributions:

-

1.

We introduce a new model named Candidate Change Point (CCP) that we use to model high-order temporal dependencies and data distribution in streaming data.

-

2.

We develop a new concept called CCP trail, which is the path from a given observed data to another specific data in the observation history. Our approach uses the mean value of the CCP trail as a measure of data distribution, and to capture the temporal dependencies among observed data in the stream.

-

3.

We develop a method that is able to handle the fact that data arrives in a high-velocity stream by providing continuously updating estimation factors as part of the CCP model.

-

4.

We propose an efficient real-time algorithm, based on pivotal statistic tests for change detection named Candidate Change Point Detector (CCPD).

-

5.

In order to demonstrate the feasibility, efficiency, and generality of our method, we conduct a thorough evaluation based on several real-life datasets and comparison with related approaches.

The remainder of this paper is organized as follows. Section 2 briefly reviews the related work. Section 3 introduces the problem of change detection. Section 4 presents the proposed model and the adaptive estimation method for detecting changes in evolving streaming data. Section 5 describes and discusses the results from our experimental evaluation. Finally, Section 6 concludes the paper and outlines possible topics for future work.

2 Related work

Change detection in streaming data has a wide-range of applications in many domains and has attracted lots of interests from the research community. To tackle the problem of change detection in streaming data, numerous methods and algorithms have been proposed [1, 2, 20, 22, 23]. In the context of event detection in streaming data, due to the large volume of data, and that the data is dynamic and continuously arrives at a high speed, online learning approaches are necessary to be able to meet the induced challenges. As a result, several online learning algorithms have been proposed [17, 18]. However, an important requirement of online methods is to minimize the computational cost, and that approaches for online event/change detection must have constant time and space computational complexity for them to be feasible.

The algorithms for concept drift detection can be categorized into four main categories [21] (see also Table 1 for a summary). The first category consists of detectors that are based on sequential analysis. Cumulative Sum (CUSUM) and its variant, Page-Hinkley (PH) [32], are the representative algorithms in this category. Their main idea is to estimate the probability distribution value and update the value when new data comes. A concept drift occurs if there is significant change in the value of the estimated parameters. The main advantage with sequential analysis-based algorithms is that they generally have low memory consumption. One of the disadvantages, on the other hand, is that the performance depends on the choice of parameters. The second category includes detectors that are based on statistical process control, with which the idea is to estimate statistical information (such as error and standard deviation). Monitoring this error rate, the detectors determine a concept drift if the error increases above a pre-specified threshold value. Examples of methods in this group are DDM [19], EDDM [4], ECDD [39], AFF [11], and RDDM [5]. The main advantage with the methods in this category is that in addition of being efficient and having low memory consumption, they work generally well on streams with abrupt changes. However, slow gradual change detection is a one of the main weaknesses of the algorithms in this category. The third category comprises detectors that monitor distributions on two different time windows, i.e., applying statistical tests on the distributions of two windows in a stream. Here, the first window is a fixed reference window used to summarize the information from past data; whereas the second window is a sliding window summarizing the information of the most recent data. Examples of representative algorithms in this category are ADWIN [7], HDDM [18], SEQDRIFT2 [33], and MDDM [34]. The main advantage with the window-based methods are that they are able to provide more precise localization of change points. The disadvantages are the high memory cost and the space requirements for processing the aforementioned two windows. The fourth and final category consists of detectors that are contextual-based. The approaches in this category keep the balance of incremental learning and forgetting in detecting a concept drift with respect to the time of an estimated window. An example of contextual approaches is SPLICE [24]. The main advantage of methods in this category is that they can detect gradual and abrupt drifts, in addition to the ability to control the number of errors. However, contextual-based methods are generally complex and have long execution time, thus making them less suitable for mining of streaming data.

Hoeffding’s Inequality [25] is one of the most well-known inequalities. It has been used to design several upper bounds for drift detection [18]. These upper bounds have been used in algorithms such as the Drift Detection Method Based on Hoeffding’s Bounds (HDDM) [18], the Fast Hoeffding Drift Detection Method for Evolving Data Streams (FHDDM) [36], Hoeffding Adaptive Tree (HAT) [8], and the HAT + DDM + ADWIN [2] algorithm which extends the ADaptive sliding WINdow (ADWIN) algorithm [7], the Drift Detection Method (DDM) [19], and the Reactive Drift Detection Method (RDDM) [5], which is a recent drift detection algorithm based on DDM. Nevertheless, Hoeffding inequalities have the disadvantage of dropping the dependence on the underlying distribution [43]. Although these algorithms are useful to detect changes in streaming data, most of them assume that data is identically and independently distributed. However, as mentioned earlier, in real-life streaming data, data is inherently dynamic, and is not identically and independently distributed [10, 45]. Also, temporal dependencies are very common in data streams [45]. Thus, to address this issue, temporal dependencies in the streams should be considered.

Regarding temporal dependencies in data streams, adaptive models for detecting changes in the underlying data distribution have been proposed and extensively studied. The main idea of these approaches has been to estimate, maintain some interesting targets in the stream, and then compute the data distributions. The main method used has been based on statistical hypothesis tests, where the hypothesis \(H_{1}\) means change has been discovered, whereas the null hypothesis \(H_{0}\) means no change. An example of such an approach has been suggested by Bodenham et al. [11]. They adopted a so-called forgetting factor, originally suggested by Anagnostopoulos et al. [3], to develop a new approach for continuously monitoring changes in a data stream, using adaptive estimation. They proposed an exponential forgetting factor method to decrease the importance of data according to a decay function, which is inversely proportional to the age of the observed data.

Although Bodenham’s approach enables monitoring changes in data streams by applying so-called Adaptive Forgetting Factor (AFF) [11], it does not address the challenges with temporal dependencies of data. Further, while AFF enables detecting multiple data point changes with adaptive estimation, the approach presented in this paper focuses on temporal dependencies of data, in addition to supporting online change detection in a data stream.

In conclusion, different from previous approaches, the proposed method in this paper generalizes the concept of temporal dependency in streaming data, by supporting both the first-order and the higher-order of dependencies. To achieve this, as discussed later in this paper, we propose a probabilistic method that can be used to analyze the differences between a data point at a given observation point and k previously observed points in the stream.

3 Problem definition

Formally, we define the problem of change detection in streaming data by the following definition. Let there be a data stream S, which is an open-ended sequence of values \(\{v_{1}, v_{2}, \dots , v_{i}, \dots \}\). Assume that our observation \(V_{i}\) of \(v_{i}\) in the data stream is drawn from a Gaussian model with an unknown distribution, \(V_{i} \sim \mathcal {N}(\mu , \sigma ^{2}I)\), where \(\mu \) is the (unknown) mean and is the interest measure in our change detection method, and \(\sigma ^{2}\) is the error variance. At each observation time i, the observed mean is \(\mu _{i}\). Hence, for a data stream S, we have a sequence of observed mean \(\mu _{1}\), \(\mu _{2}\), …, \(\mu _{i}\), … corresponding to observation times 1, 2, …, i, …. Our change detection method considers the difference between adjacent means \(\mu _{i}\) and \(\mu _{i + 1}\), where not all adjacent means are not necessarily equal. The change detection method is designed to detect all change points i between two observed adjacent means \(\mu _{i}\) and \(\mu _{i + 1}\), for any i and \(\mu _{i} \neq \mu _{i + 1}\). Assume that \(t_{1}, t_{2}, {\dots } t_{j}, \dots \) are the true change points in a distribution of means. Then, the change detector verifies a change point \(t_{j}\) by testing the following statistical hypotheses:

against

] Given a significance confidence \(\rho \), the rule to accept the \(H_{1}\) hypothesis is if an objective interest measure of mean, i.e., \(f(.)\), satisfies:

where \(v_{\frac {\rho }{2}}\) and \(v_{1- \frac {\rho }{2}}\) are values such that \(Pr(f(\mu _{t_{j}}) < v_{\frac {\rho }{2}}) = \frac {\rho }{2} \) and \(Pr(f(\mu _{t_{j}}) > v_{1- \frac {\rho }{2}}) = 1 - \frac {\rho }{2} \) , and f(.) is an objective function. A change point is said to occur at an observation time \(t_{j}\) if \(H_{0}\) is rejected. This means that the task of detecting change points is to find all the observation times where there are significant differences in the data distribution for a given measure.

To illustrate, consider a stream of data shown in Fig. 3, which can be modeled using a piece-wise function with different values depending on the time intervals, \([1, 50]\), \([51, 80]\) and after 81. The estimated mean values are controlled/computed by the function to be 50 in the first interval, 100 in the second and then 80. In conclusion, as also can be observed, significant changes in the stream appear at timestamps 51 and 81.

To automatically detect change points, we propose an approach called Candidate Change Point (CCP) detection, which can be summarized as follows. First, we investigate the temporal dependence of each data to its k previous data points. We project a vector of that k data onto a \(\ell _{1}\) constraints to optimize the minimum difference between k previous observations and the current observation. The noisy or decay factor that weighs the importance of previous data on the current observation is inversely proportional to its age. Moreover, our approach employs truncated form of the geometric distribution to simulate the decaying probability of looking back into the history. Second, an adaptive estimator on CCP trail and CCP propagation is maintained online during the monitoring process of the stream (to be described in more detail in Section 4.2). Finally, our approach uses a statistic test on interest measure of mean values to determine if there is a change point at a given observation time. Specifically, a p-value of a mean and a pivotal statistic function are used to map the problem into a uniform Gaussian model on the interval [0, 1]. Then we use this to perform the truncated Gaussian statistic to test the hypotheses in order to decide change point occurrences in real-time.

4 The candidate change point (detection) approach

In this section, we propose a new model to represent the temporal dependency of the current observation to its history in the data stream to detect changes. As mentioned in Section 1, most existing work has assumed the streaming data to be identically and independently distributed (i.i.d.). However, focusing on real-life applications, this assumption is too restrictive. In fact, the probability of the current observation is largely dependent on previous data and the history of the stream. With this in mind, we consider Markov chain [31] as the natural choice for modelling streaming data, since a Markov process can be used to represent the probability of transitions between states of data in a stream. However, note that the space requirement for maintaining parameters with a higher-order Markov process grows exponentially as functions of the number of parameters. Thus, approaches employing higher-order Markov processes have a cubic space complexity, and are for this reason inefficient. To cope with this issue, more efficient parameterization approaches, such as the Linear Additive Markov Process (LAMP [28]) and the Retrospective Higher-Order Markov Process (RHOMP [44]), have been proposed. In contrast to the higher-order Markov process, the number of maintained parameters in the LAMP model and the RHOMP model grow linearly, which makes them more suitable for streaming data.

In this work, we build on the ideas of these linear Markov process models to develop an efficient model for temporal dependency in evolving data streams, and use the developed model for detecting concept drifts. We do this based on the fact that the probability of a data to appear in a stream at a specific time is not dependent on the first-order data only. This means that the appearance of data in specific observations can be assumed to be a cause of k previous observations. To be more specific, we propose a novel model, called the candidate change point (CCP) model, for detecting concept drifts. This is done by using the temporal dependency information from previously observed data in the current observation to compute the probability of finding changes in the given observation. What this implies is that with a first-order CCP model, any data that is observed at a specific time has only temporal dependency from the most recent previously observed data in the stream. On the other hand, if the current data point depends on its k previous data points and is a mix of these k data points, then it has a CCP from k-order ancestors. In such a case, the model that we apply is the k-order Candidate Change Point (CCP) model, defined as follows.

Definition 1 (k-order candidate change point)

Given a stream of observations with an open end \(x_{1}\), \(x_{2}\), \(\dots \), \(x_{n}\), \(\dots \), the k-order Candidate Change Point is denoted as \(CCP_{k}\), and at observation time t, the CCP model is presented as \(CCP_{k}(x_{t})\) = (C1, \(C_{2}\), \(\dots \), \(C_{k}\)), where \(0 \leq C_{i} \leq 1\) for \(i = 1, 2, \dots , k\), \({\sum }_{i = 1}^{k}C_{i} = 1\). We call \(C_{i}\) is the probability proportion of CCP in current descendant obtained from i-th order in its k ancestors.

Definition 2 (k-order fading candidate change point)

Given a stream of observations with an open end \(x_{1}\), \(x_{2}\), \(\dots \), \(x_{n}\), \(\dots \), the k-order Fading Candidate Change Point is the k-order Candidate Change Point considering forgetting factor \(\alpha \) of ancestors by order denoted as \(CCP_{k,\alpha }\). At observation time t, the CCP model is presented as \(CCP_{k,\alpha }(x_{t})\) = (α1 × C1, \(\alpha _{2}\times C_{2}\), \(\dots \), \(\alpha _{k}\times C_{k}\)), where \(0 \leq \alpha _{i} \leq 1\) for \(i = 1, 2, \dots , k\), \({\sum }_{i = 1}^{k}\alpha _{i} = 1\), \(0 \leq C_{i} \leq 1\) for \(i = 1, 2, \dots , k\), \({\sum }_{i = 1}^{k}C_{i} = 1\).

We call \(\alpha _{i}\) a decaying probability of CCP in current descendant from i-th ancestor in its k ancestors into the history context. Given a scalar x, we denote \(\overrightarrow {x_{CCP_{k}}}\) as a vector of x projected on its k-order ancestors. We have \(\overrightarrow {x_{CCP_{k}}} = (C_{1}, C_{2}, \dots , C_{k})\), it is the k-order Candidate Change Point of the scalar. In the rest of the paper, we use a bold face letters (x) to present the vector for short (\(\overrightarrow {x_{CCP_{k}}}\)). Suppose \(\mathcal {L}\) is a cost function, the value and option of cost function \(\mathcal {L}\) will be discussed in the next section. In this paper, our purpose is to solve the minimum of cost function subject to conditions \(\ell _{1}\)-norm constraint on k-order Candidate Change Point. The problem is described as:

subject to \(0 \leq C_{i} \leq 1, \forall i = 1, 2, \dots , k, {\sum }_{i = 1}^{k}C_{i} = 1\).

Projected (sub)gradient methods minimize an objective function \(f(x)\) subject to the constraint that x belongs to a convex set \(\mathcal {C}\mathcal {S}\). The constrained convex optimization problem is minimizef(x) subject to \(x \in \mathcal {CS}\).

The projected (sub)gradient method is given by generating the sequence \({x^{(t)}}\) via:

where \({\prod }_{\mathcal {CS}}\) is a projection on \(\mathcal {CS}\), \(\gamma _{t}\) is step size, \(x^{(t)}\) is the t-th iteration, and \(g^{(t)}\) is any (sub)gradient of f at \(x^{(t)}\), and will be denoted as \(\partial f(x)/\partial x^{(t)}\).

4.1 Evolving data and CCP parameter selection

Given a data stream where data evolves over time, i.e., its population distribution or its structure changes over time, the goal is to maximize the probability proportion of CCP for current observation in the set of the k last/previously observed data by solving an optimal problem, using the projected (sub)gradient method with an optimal function to minimize the divergence of the currently observed data when it is projected onto \(\ell _{1}\)-norm constraints of k previous ancestors. Here, the cost function \(f(x)\) on Euclidean projections can be chosen as Euclidean norm (ℓ2 norm) \(\mathcal {L}(x) = \frac {1}{2}\parallel x-v \parallel ^{2}\).

The Euclidean projection in this paper is to project a streaming data \(x^{t}\) onto a set of k previously observed data, defined as:

subject to \( \parallel \textbf {x} \parallel _{1} = {\sum }_{i = 1}^{k}C_{i} = 1\). The optimal solution for this problem can be solved in linear time as in [14, 16, 30]. The Lagrangian of Eq. 4 with Karush Kuhn Tucker (KKT) multiplier \(\zeta \) and \(\mu \) is:

Derivation of Lagrangian function at \(x_{i}\) with optimal solution \(x^{*}\) by dimension i is \( \frac {\partial \mathcal {L} }{\partial x_{i}}(x^{*}) = (x^{*}_{i} - {x^{t}_{i}}) + \zeta - \mu _{i}\). Because \(x^{*}\) is an optimal point then \( \frac {\partial \mathcal {L} }{\partial x_{i}}(x^{*}) = 0 \rightarrow x^{*}_{i} = {x^{t}_{i}} - \zeta + \mu _{i}\). The KKT inequalities in the problem is that xi ≥ 0, then by the complementary slackness, we have \(\mu _{i} = 0\), hence \(x^{*}_{i} = \textit {max }({x^{t}_{i}} - \zeta ,0)\). Moreover, we project our vector using a fast and linear method as in [14]. In particular, we consider a vector of the last k data points as the vector of current observation with k elements. This k elements vector will be projected onto \(\ell _{1}\) ball constraints by using (sub)gradient method.

Definition 3 (CCP heritage)

Given a k-order Candidate Change Point with forgetting factor \(\alpha \), the CCP model is \(CCP_{k,\alpha }(x_{t})\) = (α1 × C1, \(\alpha _{2}\times C_{2}\), \(\dots \), \(\alpha _{k}\times C_{k}\)), where \(0 \leq \alpha _{i} \leq 1\) for \(i = 1, 2, \dots , k\), \({\sum }_{i = 1}^{k}\alpha _{i} = 1\), \(0 \leq C_{i} \leq 1\) for \(i = 1, 2, \dots , k\), and \({\sum }_{i = 1}^{k}C_{i} = 1\). The CCP Heritage of the model at time t is denoted as \(CH_{k,\alpha }(x_{t})\) and computed as \(CH_{k,\alpha }(x_{t})\) = \({\sum }_{i = 1}^{k} \alpha _{i}\times C_{i}\). This value of the scalar can be used to present the temporal dependency proportion of the scalar to history. For the sake of brevity, the notation \(ch_{t}\) will be used to refer to the CCP heritage value at observation time t in the rest of this paper. The sequence of CCP heritage of the stream is denoted \(ch_{1}, ch_{2}, \dots , ch_{t}, \dots \).

In this work, the key idea is to investigate how to exploit the temporal dependencies of data for detection of changes in a stream. The method we propose is inspired by the ideas behind linear Markov process models with which the main principle is to estimate the probability of transition between states, i.e., to determine the likelihood of each state transition. Nevertheless, the main difference between our approach and previous Markov-based approaches is that our approach considers the temporal dependencies based on several prior observations. In addition, the proposed model enables estimating the dependency measures in a data stream in an online fashion, thus making it possible to detect changes in the stream in real time.

4.2 Adaptive estimation of data in streaming

Adaptive estimation approaches were previously proposed to handle issues with the uncertainty of data. In streaming data, the importance of historical data is weighted using a forgetting function with a decay factor [3, 11, 41]. This means that, in the stream, the most recent data is more important than the older ones. A decay function is also useful to flush any noise when detecting concept drifts. There are several approaches to select the decay factor \(\alpha \). Once the value can be set to constant, then we can conduct trial experiments and obtain the optimal value for individual application. The factor can be any function of exponential family of distribution. In [11], the authors proposed an adaptive forgetting factor to weigh the importance of historical data by solving an optimal problem in movement of mean. The estimator is continuously monitored when new data arrives to detect changes in the data stream. The principle behind building a forgetting factor is to build an exponential decay function of observation time such that the importance of a data in a stream is inversely proportional to its age, and the temporal dependencies can be seen is just 1-order dependency, which is a special case, with k= 1 and \(\alpha \) = 1. In the proposed method, we introduce an adaptive estimation for detecting changes, we use CCP heritage of the CCP model to estimate the CCP mean distribution of streaming data. This method can be compared to the linear high order of states based on Markov chain [28, 44].

To adapt the evolving factor in streaming data, we introduce CCP trail to denote the CCP path from a given observation to another previous observation in the streaming data. Hence, a CCP trail can be considered as the probability of finding the heritage from a data stream. This is formally defined as follows.

Definition 4 (CCP Trail)

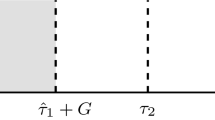

Given two different observation times \(t_{1}\) and \(t_{2}\), \(0 < t_{1} \leq t_{2}\), of a streaming data S, which is an open-ended sequence of values {v1, \(v_{2}\), \(\dots \), \(v_{i}\), \(\dots \)}. The CCP trail between two given observations is the probability of finding the heritage of the data at observation time \(t_{1}\) in the data at observation time \(t_{2}\). A CCP trail is denoted as \(ct(t_{2}, t_{1})\), and is formally defined as:

Property 1

The CCP trail can be computed by as the product of CCP heritages for all \(t_{1} \leq t_{2}\) as follows:

where \(1_{\{x\}}\) is binary indicator function. In other words, \(1_{\{x\}}\) is equal to 1 if x is TRUE, otherwise \(1_{\{x\}}\) is equal to 0.

Proof

The detailed proof is provided in Appendix A.1. □

CCP trail mean at observation time t in the data stream is then defined by:

where \(cp(t) = {\sum }_{i = 1}^{t} v_{i} \times ct(t,i)\), \(ctsum(t) = {\sum }_{i = 1}^{t}ct (t,i)\).

cp(t) is the CCP propagation at observation time t looking back into its history in the data stream. \(ctsum(t)\) is the coefficient presenting sum of the CCP trail at time t.

Proposition 1

Given a data stream, \(ctsum(t)\) can be computed by the following equation:

Proof

The detailed proof is provided in Appendix A.2□

Proposition 2

Similar to the coefficient estimation, the CCP propagation can be estimated by the following sequential updating:

Proof

The detailed proof is provided in Appendix A.3□

Equations 10–11 show that we can sequentially update the CCP model, CCP coefficient, and CCP propagation in the stream at each observation time, while new data arrives. Hence, the CCP trail mean at the next observation, \(\overline {CCP(t + 1)}\), is easily estimated in linear time by Eq. 9.

4.3 Change detection

Change point detection relies on the null hypothesis \(H_{0}\) and the alternative hypothesis \(H_{1}\). The null hypothesis \(H_{0}\) is a hypothesis that assumes the population means are drawn from the same distribution while the alternative hypothesis \(H_{1}\) supposes the observations are from the different distribution. The change detector defines a rule to accept \(H_{1}\) and reject \(H_{0}\). When \(H_{0}\) is rejected, it means that there is a significant movement in underlying distribution of data, and change point occurs. In our approach, the detector monitors the movement in CCP trail mean in an online manner.

Given a random variable v, we consider the Gaussian model with mean \(\theta \), variance \(\sigma ^{2}\), and assume that \(v \sim \mathcal {N}(\theta , \tau ^{2}=\sigma ^{2}I)\). The cumulative distribution function [42] for a linear contrast \(p^{T}\theta \) of mean \(\theta \) is as follows:

where \([v_{1}, v_{2}]\) is the boundary interval of the Gaussian model and \(\mathcal {D}\) is pivotal statistic function. The pivotal statistic function \(\mathcal {D}\) is defined and computed as:

where CDF(.) is a standard normal cumulative distribution function, and in our proposed method we use the standard normal right tail probabilities as in [12] due to its simple form, and it has a very small error. We use the truncated Gaussian pivot [42] to test hypothesis \(H_{0}\) with an assumption that the population distribution is equal to zero, \(H_{0}: p^{T}\theta = 0\). The alternative positive hypothesis is \(H_{1+}: p^{T}\theta > 0\). The truncated Gaussian statistic then is computed by: \(\mathcal {T} = 1- \mathcal {D}_{0, \tau ^{2}}^{[v_{1}, v_{2}]}(p^{T}v)\). Given a confidence value \(0 \leq \rho \leq 1\), in our change detector, we find the \(v_{\rho }\) satisfying \(1- \mathcal {D}_{v_{\rho }, \tau ^{2}}^{[v_{1}, v_{2}]}(p^{T}v) = \rho \rightarrow \mathbb {P}(p^{T}\theta \geq v_{\rho }) = 1-\rho \). Similar to the two-sided test, we compute the confidence interval \([v_{\frac {\rho }{2}}, v_{1-\frac {\rho }{2}}]\). \(v_{\frac {\rho }{2}}\) and \(v_{1-\frac {\rho }{2}}\) are computed based on conditions such that:

Then we have \(\mathbb {P}(v_{\frac {\rho }{2}} \leq p^{T}\theta \leq v_{1-\frac {\rho }{2}}) = 1- \rho \). Change is identified with a confidence \(\rho \) if the pivotal population mean does not lie in the interval \([v_{\frac {\rho }{2}}, v_{1-\frac {\rho }{2}}]\).

4.4 Choosing decay factor

In our method, we consider forgetting factor \(\alpha \) of k previous ancestors by order to the current model. The meaning of \(\alpha \) is similar to the decaying probability of looking back into the history in [44]. To select the parameter \(\alpha \), we use truncated form of the geometric distribution [13]. The truncated form of the geometric distribution is presented by parameter \(\eta \), subject to \(0 < \eta \leq 1\), and k terms (ancestors). The probability density function at term \(i, 1\leq i \leq k\), is defined by \(P(i) = \frac {\eta (1-\eta )^{i-1}}{1-(1-\eta )^{k}} \). We set this probability density at term i as our forgetting factor \(\alpha _{i}\) of the i-th ancestor,

Observe that the truncated form of the geometric distribution subjects to the condition that sum of probability of k terms equals to 1. In other words, it subjects to \({\sum }_{i = 1}^{k} \alpha _{i} = 1\).

Furthermore, the condition \(0 \leq \alpha _{i} \leq 1\) is also satisfied. The value of \(\eta \) is application-specific and can be chosen either by a procedure of polynomial interpolation or using a heuristic optimal as in [44].

4.5 The online change detection algorithm

Figure 4 gives an overview of the CCPD algorithm, and our method for change detection is presented in Algorithm 1. The input of the algorithm is a sequence of open end values \(v_{1}\), \(v_{2}\), \(\dots \), \(v_{i}\), \(\dots \), and a confidence value \(\rho \). When a new value \(v_{i}\) is read from the stream, a linear projection onto \(\ell _{1}\) ball constraints is performed to project a vector of the k last data values to get \(CCP_{k}\) of the current observation in line 3 (see [14] for more detailed information about the projection method). Line 4 computes \(ch_{i}\) using the truncated form of the geometric distribution. Line 5 is executed to estimate \(ctsum(i)\) and \(cp(i)\). Then CCP trail mean \(\overline {CCP(i)}\) is computed by line 6. In line 7, the boundary interval with confidence \(\rho \) is calculated using the pivotal statistic function. The pivotal truncated Gaussian value of the current data is specified in line 8. A check is performed in line 9 to determine if there is a change or not. If a change occurs, the estimators are reset and the algorithm outputs that change.

An illustrative example

Let us consider a sample synthetic data stream presented in Fig. 3. The stream contains 100 elements with significant changes appearing at timestamps 51 and 81. Assume our parameter k = 3. In this example, we include the last data in the projection. Considering the observation at timestamp 50, the values of estimated parameters at timestamp 50 are as follows: ch= 0.487, ct(ctsum)= 1.493, cp= 75.372, and \(\overline {CCP}\) is computed as \(\frac {cp}{ct} = \frac {75.372}{1.493} = 50.484\). Assume that the incoming element in the stream at timestamp 50 is 50.45 and the three latest elements in the stream are sequentially 50.45, 50.66, and 50.08. The processing steps of the proposed method are the following:

- Step 1::

-

Project a vector of the last 3 elements (50.45, 50.66, 50.08) on \(\ell _{1}\) constraints.

The result of this projection is (0.39, 0.59, 0.02).

- Step 2::

-

Calculate the value of CCP Heritage, ch.

Assume the parameter \(\eta \) is set to 0.98, the decay factors \(\alpha _{i}, i = 1,\ldots , 3\), are computed to be (0.98, 0.0196, 0.0004), and the new value of ch is \(0.39 * 0.98 + 0.59 * 0.0196 + 0.02 * 0.0004 = 0.394\).

- Step 3::

-

Calculate values of estimators ct and cp.

$$\begin{array}{@{}rcl@{}} cp = 75.372 * 0.394 + 50.45 = 80.147,\\ ct = 1.493 * 0.394 + 1 = 1.588. \end{array} $$ - Step 4::

-

Update mean \(\overline {CCP} = \frac {cp}{ct} = \frac {80.147}{1.588} = 50.470\).

- Step 5::

-

Compute the pivotal truncated Gaussian of the new mean, and the confidence interval of the old mean with a confidence \(\rho \) = 0.01.

We have \(pval(\overline {CCP})\) = 1.0, and \(tailarea = [v_{\frac {\rho }{2}}, v_{1-\frac {\rho }{2}}]\) = [0.0, 1.0].

- Step 6::

-

Check condition \(pval(i) \notin tailarea\).

Because 1.0 \(\in \) [0.0, 1.0], the detector determines that there is no change at timestamp 50.

- Step 7::

-

A new data in the stream at timestamp 51 can now be processed. At the timestamp the data value is 100.56, so the last 3 elements in the stream are (100.56, 50.45, 50.66). Repeat Step 1 to Step 6 to process the rest of the stream.

When new data comes in the stream, if a change is detected, the values of the estimators are reset to default. In our example, with the sample synthetic dataset, the CCPD detects two change points at timestamps 52 and 82 (1 delay in comparison with two true change points at timestamps 51 and 81).

Figures 5 and 6 depict the visualization of the real values of the stream and the estimated mean values by the CCPD method, running on the sample synthetic streaming data shown in Fig. 3. In particular, Fig. 5 plots the real values and the estimated mean values computed using the CCPD method with two true change points at timestamps 51 and 81, and two detected change points at timestamps 52 and 82. Figure 6 shows the estimated mean values while we vary the value of \(\eta \) in the decay factor. The importance of the degree of the current data is the combination of the projection vector (on k latest data) and the decay factors, which are, in turn, affected by the choice of \(\eta \). Because we chose decay factors in a form of geometric distribution, the most effect on the importance of current data can be derived from the most recent data in the stream. We observe that when the stream is stable, the value of the estimator is stable with different values of the decay factor. However, the obtained estimator significantly changes around the change point.

Complexity

The procedure of processing each arrival stream data point has three main parts. The first part is the Euclidean projection of the k last data points on ℓ1 ball constraints. This process is a fast projection [14] and has complexity \(\mathcal {O}(k)\). Normally, k is small, thus the process can be performed in constant time. The second part is the process of calculating values of CCP, CCP mean, and updating estimators. The complexity of this process is constant \(\mathcal {O}(1)\). The last part is a process which we calculate tail area and pivotal Gaussian value. We use a fixed number (100) of steps to search for the boundary of the tail. Hence, this process also has constant complexity \(\mathcal {O}(1)\). In summary, the complexity of our method is \(\mathcal {O}(k) + \mathcal {O}(1) + \mathcal {O}(1) = \mathcal {O}(k)\), thus the proposed algorithm CCPD has a constant complexity with constant k, \(\mathcal {O}(k) \).

5 Evaluation

We have performed thorough experiments to evaluate the performance of our method and compare it to the state-of-the-art algorithms.

To make our experiments as real and generic as possible, we performed our evaluation on several different real-world datasets, with various characteristics. Besides that, an extensive experiment was conducted to evaluate the flow rates of the stream including detection delay, true positive, true negative, false negative, and accuracy on several artificial datasets with known ground-truth. We carried out the experiments on a computer running the Windows 10 operating system, having a 64 bit Intel i7 2.6 GHz processor, and 16 GB of RAM. The proposed algorithm was implemented in Java. All evaluations are performed using the MOA framework [9]Footnote 1, with our algorithm integrated. In the experimental results below (Tables 2–7), the bold value is to indicate the highest accuracy and standard deviation per dataset.

5.1 Real-world datasets

For our experiments, we used eight real-world datasets, that are widely-used as benchmark datasets for change-detection methods [7, 18, 36, 45], consisting of Electricity, Poker (Hand), Forest Covertype, Spam, Usenet1, Usenet2, Nursery, and EEG Eye State.

Electricity

is an electricity consumption dataset collected from the Australian New South Wales Electricity Market. The dataset has 45,312 instances which contains electricity prices from 7 May 1996 to 5 December 1998. The instances were recorded by an interval every 30 minutes.

Poker-Hand

is data of a hand consisting of five playing cards drawn from a standard deck of 52. The dataset contains 829,201 instances.

Forest Covertype

is forest cover type for a given observation of 30 x 30 meter cells. The dataset was obtained from US Forest Service (USFS) Region 2 Resource Information System (RIS) data. The dataset is recorded in the Roosevelt National Forest of northern Colorado, US. Forest Covertype and contains 581,012 instances.

Spam

is a dataset based on the Spam Assassin collection and contains both spam and legitimate messages. The Spam dataset has 9,324 instances.

Usenet1

and Usenet2 are based on the twenty newsgroups data set, which consists of 20,000 messages taken from twenty newsgroups. Each of Usenet1 and Usenet2 contains 1,500 instances obtained from twenty newsgroups data set to present a stream of messages of a user.

Nursery

was derived from a hierarchical decision model originally developed to rank applications for nursery schools in Ljubljana, Slovenia. The dataset contains 12,960 instances.

EEG Eye State

was originally used to predict eye states by measuring brain waves with an electroencephalographic (EEG), i.e., finding the correlation between eye states and brain activities [38]. It consists of 14,980 instances with 14 EEG values, where each value indicates the eye state.

Electricity, Forest Covertype and Poker-Hand

were obtained from the most popular open source framework for data stream mining MOAFootnote 2, while the remaining datasets were obtained from the UCI Machine Learning RepositoryFootnote 3 (University of California, Irvine)

5.2 Performance

This section presents the experimental results of the proposed method compared to the state-of-the-art algorithms. We performed empirical experiments to evaluate our proposed algorithm and compared it against HDDMW, HDDMA [18], DDM [19], EDDM [4], SeqDrift2 [33], FHDDM [36]Footnote 4, and RDDMFootnote 5 [5]. These algorithms were chosen because they are the state-of-the art algorithms in drift detection (see also Section 2). Implementation of the algorithms are provided by the MOA framework. In all our experiments we used Naïve Bayes (NB) and Hoeffding Tree (HT) classifiers as the base learners, which are frequently used in the literature [7, 18, 33, 36]. In addition, we performed comparison with a baseline method, called “No Change Detection”, that detects changes with base learners only. The confidence value is set to 0.001, \(\eta \) is set to 0.99. The \(\lambda \) in HDDMW is set to 0.05 as recommended by the authors. The sliding window size is set to 25 in FHDDM. According to the authors, it is the optimal value providing the best classification accuracy. With the other algorithms, in all the tests we use the default parameters and configuration values as recommended by the authors of the original work.

Accuracy evaluator is computed base on a window with size 100. Frequency of sampling process is every 100 samples. Furthermore, we prepare two versions, named \(CCPD_{k = 5}\) and \(CCPD_{k = 3}\). \(CCPD_{k = 5}\) is our proposed method running with a projection on the last five data points in the stream, while \(CCPD_{k = 3}\) runs with a projection on the last three data points in the stream.

Tables 2–3 show the average (\(\overline {a}\)) and standard deviation (std) accuracy of the change detection algorithms with NB and HT classifiers as base learner, respectively. In case of same accuracy result, we consider the algorithm with the lowest standard deviation to be the best. The results show that CCPD wins 5 times on total 8 datasets with NB classifiers and 4 times on total 8 datasets with HT classifiers. Further, Table 4 shows that our proposed method obtains the best accuracy scores with the majority of the datasets (five out of eight datasets), including Electricity, Spam, Usenet1, Covertype, and Poker datasets; while on EEG Eye, Nursery, and Usenet2, the accuracy ranks are 2, 4, and 6.5 (the same rank with FHDDM), respectively. Another observation from the experiments is that, while varying the order of temporal dependency k, the accuracy scores on Nursery and Usernet2 change abruptly. This can be explained as follows. On the Usernet2 dataset, the reason is that the concept drift is moderate and the topic shift on the dataset is blunt and blurred. Moreover, the number of samples in the dataset is small for the training. On Nursery, on the other hand, the change behavior may be due to the large number of distinct values of attributes in the dataset. Thus, the temporal dependency is loose, which has in turn an impact on detecting the changes. Nevertheless, the difference from the best accuracy is not significant. Overall, the empirical results show that CCPD has the best performance in all cases of combinations with NB and HT classifier.

To further evaluate the performance of the proposed method, and in order to get a fair comparison among the algorithms, we performed statistical significance tests based on the average rank of accuracy of the algorithms [15]. Firstly, we select the best accuracy of the algorithms with NB classifier and HT classifier and then report rank of accuracy of the algorithms. Secondly, we use Friedman test because it is a nonparametric analogue of the parametric two-way analysis of variance by ranks. Table 4 shows statistical test results using the methodology proposed in [15]. The number in parentheses at each cell is the rank of accuracy. The bold values indicate the best results per dataset. Based on the methodology, we reject the null-hypothesis because \(F_{F} > \) Critical F-value. This means that there are significant differences between the algorithms. Finally, we use the Nemenyi post-hoc test to present these differences. We set the significance level at 5%. The critical value for 9 algorithms is 3.10. The critical difference at level 5% is \(CD= 4.24\). Figure 7 shows the results of the Nemenyi test of the data from Table 4. On the figure, the methods on the right side have lower average rank of accuracy and are better than the methods on the left.

5.3 Impact of the k-order

In this section, we run tests to evaluate the dependencies of data points in the streams in the Electricity, Poker, Forest Covertype, Spam, Usenet1, Usenet2, Nursery, and EEG Eye State datasets. We record average accuracy and standard deviation of the CCPD on the datasets with NB classifier while varying the parameter k from 2 to 7.

Table 5 shows the average accuracy and standard deviation of the CCPD method while varying k from 2 to 7. From the results, we can observe that the different values of k will affect to the accuracy of the algorithm. The best accuracy is almost obtained at order \(k = 2\) or \(k = 3\). On Electricity, Usenet2, and Covertype datasets, the best performance is obtained when we project on \(k = 2\) data, while on the remaining datasets, the best performance is at \(k = 3\). On the EEG Eye State dataset, when the value of k increases from 2 to 7, the accuracy also slightly increases. For the sake of comparison, the table only shows k ∈ [2,7]. Since we observed that the values were still increasing, we decided to vary k, until \(k = 20\) to find the optimal value. The optimal accuracy of 99.55% was found at \(k = 13\). The best accuracy at different order k also depends on the characteristics of the input dataset. Furthermore, the results show that change in accuracy, abrupt or slight, is dataset-dependent. On Electricity, Spam, Covertype, and Poker datasets, when we vary value of order k, the accuracy changes slightly. In contrast, the accuracy varies abruptly on Usenet1, Usenet2, and Nursery datasets.

As shown in the results in Table 5, the best results are usually in 2 or 3 orders of dependency. The reason can be explained as that the proposed method uses the fixed length for projection of temporal dependencies. In real life datasets, the order of dependencies may not be fixed length, which means that each data point in a stream may have temporal dependencies of a different number from its previous data points. For this reason, our further research will include studying higher-order temporal dependencies.

5.4 True change point and delay

One of the disadvantages of some real-world datasets in change detection problem is that the ground truth of the datasets is unknown. On the other hand, the performance of a detection method is evaluated base on the accuracy and delay of detected change points to the real change points in the data. In the above section, we have presented the efficiency of our proposed method with high accuracy detection. In this section, for a further evaluation of the algorithm, we describe the tests we performed with CCPD on a real-world dataset for which its ground truth has been known. This is the Electricity dataset, which is also widely-used in many methods [10, 45] for change detection. In this dataset, data are heavily autocorrelated with frequency peaks in every 48 instances [45].

In this experiment, we performed tests on the first 1,000 instances of the dataset. We then record change points detected by CCPD and other state-of-the-art algorithms with respect to true change points that are known as ground truth. Specifically, we did experiments employing CCPD, HDDMW, HDDMA, CUSUM, and PAGE-HINKLEY algorithms. CCPD, HDDMW, and HDDMA are online detection algorithms. PAGE-HINKLEY is a concept drift detection based on the Page Hinkley Test, while CUSUM is a drift detection method based on cumulative sum. For the best competitive comparison, CUSUM and PAGE-HINKLEY are executed in an online manner at every data point in the stream. Here, we set sample frequency to 1. We adjust the minimal number of instance to 1, and set all the other parameters to default values as in MOA framework according to prior works.

Table 6 shows the change points detected by the algorithms on Electricity dataset. The CCPD detects online and at exact change points. CUSUM and PAGE-HINKLEY have the same result with a short delay detection of change, which is 1 data point. HDDMA−test produces the largest delay with 15 data points in delay. And the last algorithm HDDMW−test detects change with 4 data points in delay.

5.5 Experiments on synthetic datasets

This subsection presents experiments to evaluate detection delay, true positive (TP), true negative (TN), false negative (FN), and accuracy of our proposed method. We compared the results with several state-of-the-art drift detectors, including EDDM [4], ECDD [39], SeqDrift2 [33], and RDDM [5], on four widely-used synthetic data streams in the literature [18, 36]: Mixed, Sine, Circles, and LED. Each dataset contains 100,000 instances, and 10% noise in class of instances. In brief, the characteristics of these datasets are as follows:

-

Mixed: This dataset contains four attributes, including two Boolean attributes and two numeric attributes in the [0, 1] interval. The Mixed dataset contains abrupt concept drifts. The drifts occur at every 20,000 instances with a transition length \(\xi = 50\).

-

Sine1: In this dataset there are two attributes that are uniformly distributed in the [0, 1] interval. The Sine1 dataset contains abrupt concept drifts. The drifts occur at every 20,000 instances with a transition length \(\xi \) = 50.

-

Circles: This dataset uses four circles to simulate concept drifts. Each instance has two numeric attributes on the [0, 1] interval. The Circles dataset contains gradual concept drifts. The drifts occur at every 25,000 instances with a transition length \(\xi \) = 500.

-

LED: This dataset is a seven-segment display of digit dataset. The LED dataset contains gradual concept drifts. The drifts occur at every 25,000 instances with a transition length \(\xi \) = 500.

All experiments on the synthetic datasets were performed using the MOA framework with parameters set to the optimal values for all the compared algorithms as recommended in the original papers. We adopted the acceptable delay length metric [36] to evaluate the performance of the algorithms. Given a threshold, if a detector can detect a change within a threshold delay from the true change point, it is considered as a true positive. In the experiments on the Mixed and Sine1 datasets, the level of temporal dependency k is set to 3 and the acceptable delay is set to 250. While on the Circles and LED datasets, k is empirically set to 5 and the acceptable delay is set to 1,000.

Table 7 shows the average and standard deviation of classification results for the CCPD, EDDM, ECDD, SeqDrift2, and RDDM running on 100 samples of datasets. The results show that, in most cases, ECDD and EDDM are the worst detectors. On the Circles dataset, SeqDrift2 has the best performance, while SeqDrift2 has the worst performance on the LED dataset. This can be explained as follows. SeqDrift2 maintains a fixed size reservoir sampling for concept drift detection. The reservoir sampling contains 200 instances, and this size is suitable for the Circles dataset since it contains gradual concept drifts with a transition length of 500. The low accuracy of SeqDrift2 on LED is a result of its very high false positive. On the Mixed and Sine1 datasets, we observe that, the shortest delay is obtained by ECDD. The reason is that the number of instances in the estimated window in ECDD is small. However, with ECDD, both the TP rate and the FP rate are high, thus resulting in a low accuracy. In almost all cases, CCPD and RDDM have the best accuracy and very good flow rates of detection delay, TP, FP, and FN. The CCPD has the most accurate and very low FP, FN rates on the Mixed and Sine1 datasets; while RDDM has good performance on the LED dataset. This is because RDDM discards old instances from the stream, while in CCPD, we weigh the current instance based on a projection on k latest instances in the stream. Therefore, any concept drifts can be quickly detected on abrupt concept drift datasets like Mixed and Sine1. Overall, the CCPD and RDDM have the same rank (1.75).

5.6 Runtime performance

In terms of runtime performance, we performed experiments to evaluate the classification time and the streaming processing speed of our proposed method. Table 8 presents the evaluations of the method in terms of running time in streaming on Electricity, Poker, Forest Covertype, Spam, Usenet1, Usenet2, Nursery, and EEG Eye datasets. It shows a number of learning evaluations, average total running time in seconds, average time using for each learning process and number of learning that can be processed per second. From this table, we can observe that our detector is capable of processing a streaming at high velocity, up to 204.5 process per second. Hence, it is feasible to detect changes in an online setting as in streaming manner.

6 Conclusion

In this paper, we presented a new approach for detecting changes in an open-ended data stream. We proposed a k-order Candidate Change Point (CCP) Model that builds on linear higher order Markov processes, in order to exploit the temporal dependency among data in a stream. The main idea with the model is to compute the probability of finding change points in a given observation time window, using the temporal dependency information or factors between different observed data points in a stream. To cope with the dynamic nature of the stream, we proposed an approach that can continuously optimize the temporal dependency factors by using an Euclidean projection on \(\ell _1\) ball constraints. In addition, we introduced a concept called CCP trail, which refers to the probabilistic path from a specific observed data point to another previously observed data point. Our approach adapts the probability of finding change points to continuously estimate the CCP trail means in streaming data. Using CCP trail mean values, we applied statistical tests to detect the change points. To evaluate our approach, we performed extensive experiments using several datasets and compared our algorithm to the state-of-the-art algorithms. Our evaluation showed that our k-order Candidate Change Point Model is effective, and that the Candidate Change Point Detector (CCPD) algorithm outperforms the state-of-the-art algorithms on most of the datasets. In addition, our method has a linear time performance, which enables it to be deployed online in real-world stream applications.

There are several directions to extend this work. First, it is worth investigating how the number of different CCPs in different data points affects the dependency model.

Second, in data stream, a large volume of data arrives at a high speed. Therefore, it is infeasible to maintain information of all data. Developing sketching algorithms that combine temporal dependency for detecting drifts and outliers is an area for further study.

Notes

Version 4.0.0, June 2017.

Source code of the FHDDM is provided by the authors of the algorithm

Source code of the RDDM is obtained from the authors personal website

References

Adä I, Berthold MR (2013) EVE: a framework for event detection. Evolving Systems 4(1):61–70

Adhikari U, Morris T, Pan S (2017) Applying Hoeffding adaptive trees for real-time cyber-power event and intrusion classification. IEEE Transactions on Smart Grid PP(99):1–12

Anagnostopoulos C, Tasoulis DK, Adams NM, Pavlidis NG, Hand DJ (2012) Online linear and quadratic discriminant analysis with adaptive forgetting for streaming classification. Statistical Analysis and Data Mining 5(2):139–166

Baena-García M, del Campo-Ȧvila J, Fidalgo R, Bifet A, Gavaldȧ R, Morales-Bueno R (2006) Early drift detection method. In: The 4th international workshop on knowledge discovery from data streams

Barros RS, Cabral DR, Gonçalves PM, Santos SG (2017) RDDM: Reactive drift detection method. Expert Syst Appl 90(Supplement C):344–355

Bifet A (2017) Classifier concept drift detection and the illusion of progress. In: Artificial intelligence and soft computing. Springer International Publishing, Cham, pp 715–725

Bifet A, Gavaldà R (2007) Learning from time-changing data with adaptive windowing. In: Proceedings of the 2007 SIAM international conference on data mining, pp 443–448

Bifet A, Gavaldà R (2009) Adaptive learning from evolving data streams. In: Proceedings of the 8th international symposium on intelligent data analysis, pp 249–260

Bifet A, Holmes G, Kirkby R, Pfahringer B (2010) MOA: Massive online analysis. J Mach Learn Res 11:1601–1604

Bifet A, Read J, žliobaitė I, Pfahringer B, Holmes G (2013) Pitfalls in benchmarking data stream classification and how to avoid them. In: Proceedings of the european conference on machine learning and knowledge discovery in databases, ECML PKDD, pp 465–479

Bodenham DA, Adams NM (2017) Continuous monitoring for changepoints in data streams using adaptive estimation. Stat Comput 27(5):1257–1270

Bryc W (2002) A uniform approximation to the right normal tail integral. Appl Math Comput 127(2):365–374

Chattopadhyay S, Murthy C, Pal SK (2014) Fitting truncated geometric distributions in large scale real world networks. Theor Comput Sci 551:22–38

Condat L (2016) Fast projection onto the simplex and the and the \(\ell _{1}\) ball. Math Program 158(1):575–585

Demšar J (2006) Statistical Comparisons of Classifiers over Multiple Data Sets. J Mach Learn Res 7:1–30

Duchi J, Shalev-Shwartz S, Singer Y, Chandra T (2008) Efficient projections onto the \(\ell _{1}\)-ball for learning in high dimensions. In: Proceedings of the 25th international conference on machine learning, ICML, pp 272–279

Frías-Blanco II, del Campo-Ávila J, Ramos-Jiménez G, Carvalho ACPLF, Díaz AAO, Morales-Bueno R (2016) Online adaptive decision trees based on concentration inequalities. Knowl-Based Syst 104:179–194

Frías-Blanco II, del Campo-Ávila J, Ramos-Jiménez G, Morales-Bueno R, Ortiz-Díaz AA, Caballero-Mota Y (2015) Online and non-parametric drift detection methods based on Hoeffding’s bounds. IEEE Trans Knowl Data Eng 27(3):810–823

Gama J, Medas P, Castillo G, Rodrigues PP (2004) Learning with drift detection. In: Proceedings of brazilian symposium on artificial intelligence, pp 286–295

Gama J, Sebastião R, Rodrigues PP (2013) On evaluating stream learning algorithms. Mach Learn 90 (3):317–346

Gama JA, žliobaitė I, Bifet A, Pechenizkiy M, Bouchachia A (2014) A survey on concept drift adaptation. ACM Comput Surv 46(4):1–37

Gomes HM, Barddal JP, Enembreck F, Bifet A (2017) A survey on ensemble learning for data stream classification. ACM Computing Surveys 50(2):23:1–23:36

Gomes HM, Bifet A, Read J, Barddal JP, Enembreck F, Pfharinger B, Holmes G, Abdessalem T (2017) Adaptive random forests for evolving data stream classification. Mach Learn 106(9):1469–1495

Harries MB, Sammut C, Horn K (1998) Extracting hidden context. Mach Learn 32(2):101–126

Hoeffding W (1963) Probability inequalities for sums of bounded random variables. J Am Stat Assoc 58 (301):13–30

Kifer D, Ben-David S, Gehrke J (2004) Detecting change in data streams

Kolter JZ, Maloof MA (2007) Dynamic weighted majority: an ensemble method for drifting concepts. J Mach Learn Res 8:2755–2790

Kumar R, Raghu M, Sarlós T, Tomkins A (2017) Linear additive markov processes. In: Proceedings of the 26th international conference on World Wide Web, pp 411–419

Li P, Wu X, Hu X (2010) Mining recurring concept drifts with limited labeled streaming data. In: Proceedings of the 2nd Asian conference on machine learning. PMLR, vol 13, pp 241–252

Liu J, Ye J (2009) Efficient euclidean projections in linear time. In: Proceedings of the 26th annual international conference on machine learning. ICML, pp 657–664

Markov A (1971) Extension of the Limit Theorems of Probability Theory to a Sum of Variables Connected in a Chain. In: Appendix B, dynamic probabilistic systems (Volume I: Markov models), pp 552–577

Page ES (1954) Continuous Inspection Schemes. Biometrika 41(1/2):100–115

Pears R, Sakthithasan S, Koh YS (2014) Detecting concept change in dynamic data streams. Mach Learn 97(3):259–293

Pesaranghader A, Viktor H, Paquet E (2017) McDiarmid drift detection methods for evolving data streams. CoRR arXiv:1710.02030

Pesaranghader A, Viktor H, Paquet E (2017) Reservoir of diverse adaptive learners and stacking fast Hoeffding drift detection methods for evolving data streams. CoRR arXiv:1709.02457

Pesaranghader A, Viktor HL (2016) Fast Hoeffding Drift Detection Method for Evolving Data Streams. In: Proceedings of the 2016 machine learning and knowledge discovery in databases. ECML PKDD, pp 96–111

Roberts SW (1959) Control chart tests based on geometric moving averages. Technometrics 1(3):239–250

Rösler O, Suendermann D (2013) A first step towards eye state prediction using EEG. In: Proceedings of the international conference on applied informatics for health and life sciences (AIHLS 2013)

Ross GJ, Adams NM, Tasoulis DK, Hand DJ (2012) Exponentially weighted moving average charts for detecting concept drift. Pattern Recogn Lett 33(2):191–198

Schlimmer JC, Granger RH (1986) Incremental learning from noisy data. Mach Learn 1(3):317–354

Sebastião R, Gama J, Mendonça T (2017) Fading histograms in detecting distribution and concept changes. International Journal of Data Science and Analytics 3(3):183–212

Tibshirani RJ, Taylor J, Lockhart R, Tibshirani R (2016) Exact Post-Selection inference for sequential regression procedures. J Am Stat Assoc 111(514):600–620

Weissman T, Ordentlich E, Seroussi G, Verdu S, Weinberger MJ (2003) Inequalities for the \(\ell _{1}\) Deviation of the Empirical Distribution. Technical report, Hewlett-Packard Labs

Wu T, Gleich DF (2017) Retrospective higher-order markov processes for user trails. In: Proceedings of the 23rd ACM SIGKDD international conference on knowledge discovery and data mining. KDD, pp 1185–1194

žliobaitė I, Bifet A, Read J, Pfahringer B, Holmes G (2015) Evaluation methods and decision theory for classification of streaming data with temporal dependence. Mach Learn 98(3):455–482

Acknowledgements

This research is funded by the Norwegian University of Science and Technology (NTNU) through the MUSED project.

Author information

Authors and Affiliations

Corresponding author

Appendix: Proof

Appendix: Proof

1.1 A.1 Proof of property 1

Proof

Equation 7 can be easily proved by induction. When \(t_2 = t_1\), we have:

When \(t_2 = t_1 + 1 \), we have:

Assume that Eq. 7 is satisfied when \(t_2 = t_1 + m\), with \(m \in \mathbb {N}, m > 0\). We prove that Eq. 7 is also satisfied with \(t_2 = t_1 + m + 1\). We have:

□

1.2 A.2 Proof of proposition 1

Proof

We have:

□

1.3 A.3 Proof of proposition 2

Proof

We have:

□

Rights and permissions

About this article

Cite this article

Duong, QH., Ramampiaro, H. & Nørvåg, K. Applying temporal dependence to detect changes in streaming data. Appl Intell 48, 4805–4823 (2018). https://doi.org/10.1007/s10489-018-1254-7

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10489-018-1254-7