Abstract

The Artificial Bee Colony (ABC) algorithm is considered as one of the swarm intelligence optimization algorithms. It has been extensively used for the applications of static type. Many practical and real-world applications, nevertheless, are of dynamic type. Thus, it is needed to employ some optimization algorithms that could solve this group of the problems that are of dynamic type. Dynamic optimization problems in which change(s) may occur through the time are tougher to face than static optimization problems. In this paper, an approach based on the ABC algorithm enriched with explicit memory and population clustering scheme, for solving dynamic optimization problems is proposed. The proposed algorithm uses the explicit memory to store the aging best solutions and employs clustering for preserving diversity in the population. Using the aging best solutions and keeping the diversity in population of the candidate solutions in the environment help speed-up the convergence of the algorithm. The proposed approach has been tested on Moving Peaks Benchmark. The Moving Peaks Benchmark is a suitable function for testing optimization algorithms and it is considered as one of the best representative of dynamic environments. The experimental study on the Moving Peaks Benchmark shows that the proposed approach is superior to several other well-known and state-of-the-art algorithms in dynamic environments.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

In recent years, evolutionary algorithms have attracted much interest among researchers for solving dynamic optimization problems [52,53,54]. An evolutionary algorithm suitable for dynamic optimization problems should not only be able to locate the optimum, as it does in the static optimization problems, but also be capable of detecting the time when the changes in the positions of optima occur and also tracking the newly relocated optima. In the static environments for the reason that optima are not moved in the environment and each of them remains in a fixed position passing the time, it is easy for evolutionary algorithms to find the global optimum, but in an environment that the optima are subject to change it is not an easy task to find the global optimum following every change that occurs in the environment. Thus strong heuristic mechanisms are needed to solve dynamic optimization problems. One of the weaknesses in evolutionary algorithms for solving dynamic optimization problems is that they can’t single-handedly solve them. Thus, they should employ a number of appropriate strategies that make them able to manage dynamic optimization problems. One proper strategy for dynamic environments is to use a combination of some auxiliary elements; for example, using of the memory element in evolutionary algorithms can be very useful. Some of these auxiliary elements are presented in [1,2,3]. The main weakness of using Standard Evolutionary Algorithms in a dynamic environment is that, once the algorithm starts to converge around some optimal or near optimal solution, it will likely lose its ability to continue the search for new optima, when the environment changes. Thus, one key point in optima tracking approaches is the need for increasing or maintaining diversity among the individuals in the population, so that the algorithm keeps its ability to explore the new optima when the environments change, even after the population has incompletely converged to an optimum, i.e. the candidate solutions have become close to the optimal solution. This paper involves a comprehensive experimental study based on the Moving Peaks Benchmark (MPB) problem [25]. In order to show whether the proposed technique effects on increasing convergence speed, the examinations will be conducted on MPB. We compare the performance of the proposed approach with other well-known and state-of-the-art approaches in dynamic environments. Other algorithms selected to be compared with the proposed approach are the state-of-the-art algorithms that most of the researchers use as their competent methods.

The contributions of the paper are as follows:

-

1.

Introducing an explicit memory based artificial bee colony algorithm for dynamic optimization,

-

2.

Introducing a new mechanism suitable for diversity preserving in the proposed dynamic optimization algorithm based on an innovative updating-retrieving mechanism using clustering of the population,

-

3.

Huge experimental study on tuning the proposed dynamic optimization algorithm’s parameters.

The second one is a vital contribution. Indeed, through it we help memory usage be more goal-oriented. One of the most important goals of memory usage has been injecting diversity in the population after convergence of the algorithm. Through the second contribution, this is maximally satisfied. It is due to the clustering concept. Indeed, through clustering of population and letting only exchange occurrence in a cluster guarantees the diversity in the population; while through clustering of memory and letting only exchange occurrence in a cluster guarantees the diversity in the memory.

This paper is organized as follows. Section 1 is introduction. Section 2 is dedicated to memory definition and its strategies for updating and retrieving in the optimization algorithms. Section 3 includes backgrounds, i.e. the ABC algorithm and paper definitions. Section 4 presents related work. The proposed method is presented in sufficient detail in Section 5 along with its algorithmic Pseudo code. Section 6 first presents MPB problem. Then experimental results and their analysis have been presented in the rest of Section 6. Finally, the paper is concluded in Section 7 with a discussion on the possible future work of the research.

2 Memory types in dynamic environments

In dynamic environments, memory is applied to save outstanding past solutions with the assumption that the optimum may return to its previous point. When certain aspects of the problem present some variety of periodic behavior, old solutions might be used to bias the search in their vicinity and consequently the computational time is reduced. The use of memory is beneficial on those types of environments. Memory based approaches can be divided in two categories. The first one is implicit memory and the second one is explicit memory.

2.1 Implicit memory

The usage of redundant representations is the main characteristic of the implicit memory methods. Memory implicit are divided into two groups: (a) double memory and (b) diploid memory. Diploid memory representations have been first used by Goldberg et al. [10]. The diploid implicit memory functions have been described in detail at [11]. Other works using implicit memory based on diploid representations and dominance mechanisms can be found in [12,13,14,15,16].

2.2 Explicit memory

Using explicit memory for the storage of useful environmental information is in contrast to using implicit memory that even additional information is also reserved. Here only useful information is to be reserved (Fig. 1). Yang [17] has divided explicit memory approaches into two main categories: (a) direct memory and [18, 19] (b) associative memory [20, 21].

2.3 Updating memory

To replace the members of population in the memory, different strategies have been proposed, some of which that are discussed in [22] are presented here:

- Strategy 1: :

-

In this strategy, two individuals in memory with the least distance (the closest similarity) are selected and among the two, the individual with lower efficiency becomes the candidate for replacement. For example, if fit(i) is the efficiency of the ith individual and fit(j) is the efficiency of the jth individual, if fit(i) < fit(j), then the ith individual in memory is replaced by the best individual in the population and vice versa.

- Strategy 2: :

-

If \(fit(j)\times \frac {d_{ij}}{d_{max}} \le fit(new)\), then the jth individual in the memory will be replaced by the current individual. In this equation fit(j) is the individual efficiency of the jth person in the memory and dij is the distance between two individuals i and j and dmax is the maximum distance between any arbitrary pair of individuals.

- Strategy 3: :

-

This strategy is known as similarity strategy in which the most similar individual is replaced by the best available individual in the population in the memory, provided that this individual has more efficiency than the individual in memory. In order to measure the similarity, Euclidean distance can be employed. Euclidean distance between ith and jth individuals, denoted by d(i,j), is calculated according to equation \(\sqrt {{\sum }_{d = 1}^{D} ({{x}_{i}^{d}} - {{x}_{j}^{d}})^{2}}\). In this strategy, the best individual of the population, denoted by βpop, is nominated for being placed into the memory. Then the most similar individual in the memory with that βpop, denoted by \(\alpha _{\beta _{pop}}\), is replaced by βpop if \(f(\alpha _{\beta _{pop}} )\le f(\beta _{pop})\).

2.4 Memory retrieval

The information stored in memory should be used for tracking of the optimum when optima are relocated. So the best time to retrieve data from memory is the moment when the environment is changed. Several strategies can be adopted to retrieve information from memory. One of the methods of memory information retrieval is to select the best individual in the memory then to replace it with the worst individual in the population [23].

3 Backgrounds

3.1 Artificial bee colony

In recent years a growing interest has been created in the field of swarm intelligence in dynamic optimization problems according to their importance in the real world. The collective intelligence is an almost new field of research focused on studying and modeling the behavior of social insects such as bees. Artificial bee colony algorithm [24] simulates an inquisitive and intelligent behavior of a set of bees. Artificial bee algorithm consists of six essential steps. These steps are briefly described in the following subsection.

3.1.1 ABC steps

- Step (1) :

-

Initialization of the artificial bee colony algorithm parameters and the optimization problem parameters.

In general, optimization problem can be formulated based on (1)

In (1), f(x) is the objective function that should be minimized. Each x is a set of decision variables (a bee) that {xi ∈ Xi|i = 1,…,N}. Xi is the possible range for i th decision variable. It means that X = {X1,X2,…,XN} and Xi ∈ (LBi,UBi) where LBi and UBi are the lower bound and upper bound of the i th decision variable Xi. N is the number of decision variables (the number of features) and g(x) and h(x) are the equality and inequality relations respectively. Artificial bee colony algorithm includes three other parameters as well. These parameters include:

-

(A):

Parameter SN, which is the number of food sources (solutions) in the population. SN is equal to the number of employee bees.

-

(B):

The maximum cycle parameter for algorithm is denoted by MCN, which stands for the maximum number of generations that algorithm is permitted to proceed.

-

(C):

Limit parameter is the frequency of motion of a bee to a position without improvement. This parameter is used to explore the food sources (solutions). If the frequency of motion of a bee to a position without improvement is more than the limit parameter, the bee considers that position as a deserted position.

- Step (2): :

-

Creation of food source memory.

Food Source Memory (FSM) consists of a SN × N matrix. In each row of FSM, location of a food source is assumed. The FSM is created based on the (2). Vectors are arranged according to the adjacent cost equation in an upside trend.

Generally any xj(i) (the position of jth bee in ith dimension) is generated as (3).

where, r is a random number generated from uniform distribution in the closed range from 0 to 1 (r ∈ [0,1]). f(xi) indicates the fitness of xi; i.e. i th bee.

- Step (3): :

-

Allocation of employee bees to food sources.

At this stage, any employee bee moves into the food sources randomly and calculates the suitability of a position. Each bee chooses a neighbor randomly and moves toward it. The employee bee modifies its new position based on (4).

In (4), xj(i) is the position of jth bee in the ith dimension and xk(i) stands for a neighbor bee position. Parameter r is a random number generated from uniform distribution in the closed range from − 1 to 1 (r ∈ [− 1,1]). The pseudo code of the employee bee is presented in Algorithm 1.

- Step (4): :

-

Sending the onlooker bees.

Onlooker bee phase includes three following phases.

-

1)

Assigning a probability to each employee bee indicating probability of its selection (for exploring the environment around it) according to (5).

$$ P_{j} = \frac{f(x_{j})}{{\sum}_{k = 1}^{SN}f(x_{k})} $$(5) -

2)

Employing the probability that an employee bee is selected by an onlooker bee is based on (5). This is done in fact by a roulette wheel; i.e. the higher probability of a position leads to the higher possibility of being selected.

- Step (5): :

-

Sending the scout bees to search for new food sources.

-

3)

Every onlooker bee randomly selects an employee bee and moves toward it. So each bee finds a new position after a move toward a randomly selected employee bee. If the efficiency of the new position is higher than the efficiency of its previous position, the bee selects to stay at the new position and resets its non-improvement counter; otherwise it returns to the previous position and adds one unit to its non-improvement counter. Non-improvement counter shows how many successive trials have been failed to find a better position by an onlooker bee. In other words, the non-improvement counter counts the number of consecutive movements of the bee with no improvement. The bee modifies its new position based on (4). If non-improvement counter of an onlooker bee exceeds a specified limit right after the onlooker bee fails to improve its position; it means that the food source has no nectar and that position should be abandoned. Pseudo code of the onlooker bee is based on Algorithm 2. In this pseudo code sum_prob is the cumulative probability of the employee bees being selected by onlooker bees.

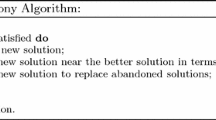

The bee that is responsible to find new solutions in the search environment is the scout bee. This bee moves in search space randomly to explore new areas. These bees must replace the found abandoned food sources with new food sources with random search according to (3). Pseudo code related to scout bee phase is presented in Algorithm 3.

- Step (6): :

-

Save the best food source.

In this step the best food source position denoted by bestx is stored in memory of food sources. The pseudo code related to artificial bee colony algorithm is based on Algorithm 4.

3.2 Dynamic and static environment definitions

A dynamic environment is the one that changes over time. We follow this subsection by presenting the definitions of some keywords.

Dynamic environments are the ones that change continuously or discretely over time. These changes can be in large scale. Among the cases that may happen, the change in parameter values over time is considered. Consequently, after each environmental change, the problem optima have probably been subject to change. One of the most complete simulations of the dynamic environments is Moving Peaks Benchmark that possesses almost all features of a real-world dynamic environment. Indeed, a problem is considered as a dynamic one if its objective function is subject to change over time.

If the objective function is not a function of time, then it can be considered as a static objective function. For example, the problem of finding the shortest path in a graph is a static problem, while by adding the online traffic of paths to the problem, it will be a dynamic one.

Moment of environment change

The moment in which the environment changes (optimization function changes) is called the moment of environment change (or the cycle of environment change) and is denoted by MEC.

One of the challenges in dynamic environments is to detect the moment of change. We need to identify the moment of change in the environment after it is occurred, because after a change in the environment a re-evaluation for all individuals (artificial bees) is needed. To detect an environment change, the algorithm computes the average fitness values of all artificial bees in a generation is more than the average fitness values of all artificial bees in the previous generation.

This strategy is used to identify the moment of change. Equation (6) presents a condition when it holds, then we can assume the change has been occurred. If \(f({x}_{j}^{new})\) presents the efficiency of the jth bee in current generation (after the change) and \(f({x}_{j}^{old} )\) presents the efficiency of jth bee in previous generation (before the change), then (6) indicates the condition in where environment change has been occurred.

Colony

error The error of a colony xt (xt shows colony at the t th function evaluation), denoted by \(E(x^{t},f_{t} (\vec {p}))\), is defined in (7) by fitness function \(f_{t} (\vec {p})\) where \(\vec {p}\) is a position in the landscape of fitness function ft. The fitness function ft is a function that is defined at t th function evaluation (it is very important to note that except in the environment change moment, τ, fτ≢fτ− 1. In other words, fτ ≡ fτ− 1 is always a valid sentence provided that τ isn’t environment change moment).

where \({p}_{t}^{\ast }\) is the optimal position in the landscape of fitness function \(f_{t}(\vec {p})\) (which is not available).

OfflineError

To measure the effectiveness of an evolutionary algorithm in a dynamic environment, we use a measure called offline error denoted by OffLineError. OffLineError is obtained by (8).

where, π equals the number of fitness evaluations that have been completed.

4 Related work

In recent years, several methods have been expanded for solving dynamic optimization problems with the aim of increasing and maintaining diversity as the generations go forward during the entire run [4,5,6]. Ramsey and Grefenstette [7] have introduced a case-based method for initializing the genetic algorithm when a change is detected. Louis and Xu [8] have applied the same idea to the open shop scheduling problem. They have used an Evolutionary Algorithm combined with case-based reasoning. Yang et al. [9] have used a hybrid immigrant scheme. This method has combined the concepts of elitism, dualism and random immigrants. The best individual from the previous generation and its dual individual are retrieved in order to create immigrants via mutation. These elitism-based and dualism based immigrants together with some random immigrants were substituted into the current population, replacing the worst individuals in the population.

A cellular automata-based artificial immune system optimization algorithm has been proposed for dealing with dynamic environments [47]. The cellular automaton has been used as a diversity generator in population.

Xin et al. have used a self-adaptive mechanism for the determination of transfer rate in using immigrants in population to increase the diversity in the population. By the mentioned strategy, they have proposed a dynamic optimization algorithm [48].

In another work, a hybrid optimization algorithm has been proposed based on combining the artificial bee colony optimization algorithm and the particle swarm optimization algorithm. The main foundation of the algorithm is the artificial bee colony optimization algorithm where their bees have been improved by the particle swarm optimization algorithm. A cellular automaton has been employed to increase the diversity in the population [49].

A method called DMGA is an evolutionary algorithm that uses an explicit memory as a diversity generator in population [50]. The memory size is m = 0.1 × n where it is randomly initiated. In updating memory step, the memory individual that is the most similar to the best individual in the population is replaced the best individual in the population. The updating times are random. In DMGA, the fitness function values of all memory individuals should be reevaluated after each environmental change. If the change occurs, then from all population and memory individuals, a subset of 0.9 × n individuals should be selected as the new population, but the memory should not be changed.

The artificial fish swarm algorithm has been modified by Yazdani et al. to become suitable for solving dynamic environment optimization problems [51]. The Dynamic Modified Artificial Fish Swarm Algorithm (DMAFSA) is modified parameters, behaviors and procedure of the standard AFSA so as to solve dynamic optimization problems.

Mohamadpour et al. [46] have proposed a memory-based approach for solving dynamic environment optimization problems. In their method, the chaotic genetic algorithm based on explicit memory has been employed. They also have proposed a suitable approach for memory updating.

5 Proposed algorithm

The most important challenge for a dynamic method in dealing with dynamic environments is how the method accomplishes the self-adaptation to the new (changed) environment. Therefore, it should track all local optima in its evolution so as to find them as soon as possible if the environment changes. To deal with the mentioned challenge, researchers have tried to improve the evolutionary and swarm intelligence algorithms by adding some special mechanisms. From another perspective, the dynamic algorithm should be (a) fast and (b) accurate. The artificial bee colony optimization algorithm is one of the swarm intelligence algorithms that has both high convergence rate and high capability of local search. The ABC optimization algorithm has been effectively used for solving the static optimization problems.

In this paper, a new method based on the ABC optimization algorithm has been proposed for solving dynamic optimization problems. It has been proved that the simple ABC optimization algorithm is unable to solve these problems [46, 49]. Therefore, the proposed ABC optimization algorithm has been enriched by a memory mechanism and an updating strategy. One important feature of all optimization algorithms is they can converge to the optimal solution after some iterations and consequently they lose their diversity. Therefore, these methods without some improvements are unable to re-search the problem space after an environmental change in order to find the new optimal solution. Therefore, it is safe to acclaim that they are unable to appropriately solve the dynamic optimization problems. Therefore, the proposed method, as it is presented in the flowchart of Fig. 2, uses some new improvements in the ABC optimization algorithm.

5.1 Artificial bee colony algorithm based on clustering and memory

In this article we have proposed an Artificial Bee Colony algorithm based on Clustering and Memory (ABCCM) for dynamic environments. In this method, we employ an explicit memory to store the previously found optimum solutions with the aim of benefiting from them in the new environment at the change moment. Because in a dynamic environment there are cyclic behaviors, it is probable for a previous optimum which has been appeared in a past generation, to be a good solution in current generation.

Employment of a memory raises seven problems/questions (including four challenging problems and three approximately easy problems) to be managed. The three approximately easy problems are: (Q1) “When should we update the memory?”, (Q2) “How many individuals (bees) should be swapped between population (colony) and memory when it is decided to update population (colony) and memory? (either from population to memory or vice versa)”, and (Q3) “When should we update population (colony) from the memory?”. The four challenging problems are: (Q4) “Which individual(s) (bee(s)) of population (colony) should be selected to be placed in memory when it is decided to update the memory?”, (Q5) “Which element(s) of memory should be removed when it is decided to update the memory?”, (Q6) “Which element(s) of memory should be selected to be placed in population (colony) when it is decided to update the population (colony) from the memory?”, and finally (Q7) “Which individual(s) (bee(s)) of population (colony) should be removed when it is decided to update population (colony) from the memory?”.

So employment of a memory can be useful. Before we go forward, we define some keywords.

Memory updating time

The moment that memory is updated from population is named Memory Updating Time (MUT) throughout all this paper.

Population element to be saved in memory

A population (colony) element that is needed to be saved in memory at MUT is named a Population Element to be saved in Memory (PEM) throughout all this paper. It is very important to note that it is possible that there is more than one individual that is of type PEM. If k th individual is of type PEM, we will show it by xk ∈ PEM where xk indicates k th population individual.

Element to be deleted from memory

An element that is needed to be deleted from memory at MUT is named an Element to be deleted from Memory (EM) throughout all this paper. It is very important to note that it is possible that there is more than one memory individual that is of type EM. If k th memory individual is of type EM, we will show it by memk ∈ EM where memk indicates k th memory individual.

Number of individuals transferred from population to memory

The number of individuals (bees) that are needed to be transferred from population (colony) into memory at MUT is named Number of individuals transferred from Population to Memory (NPM) throughout all this paper.

Updated size

The NPM is also named Updated Size (UdS) throughout all this paper.

Population updating time

The moment that population (colony) is updated from memory is named Population Updating Time (PUT) throughout all this paper.

Number of individuals transferred from memory to population

The number of individuals (bees) that are needed to be transferred from memory into population (colony) at PUT is named Number of individuals transferred from Memory to Population (NMP) throughout all this paper.

Updating size

The NMP is also named Updating Size (UgS) throughout all this paper.

Memory element to be saved in population

A memory element that is needed to be saved in population (colony) at PUT is named Memory Element to be saved in Population (MEP) throughout all this paper. It is very important to note that it is possible that there is more than one individual that is of type MEP. If k th individual is of type MEP, we will show it by xk ∈ MEP.

Element to be deleted from population

An element that is needed to be deleted from population (colony) at PUT is named an Element to be deleted from Population (EP) throughout all this paper. It is very important to note that it is possible that there is more than one population individual that is of type EP. If k th memory individual is of type EP, we will show it by xk ∈ EP where xk indicates k th population individual.

Cluster in memory

A number of memory individuals (bees) that are similar to each other and construct a concentrated group are considered as a Cluster in Memory (CM) throughout all this paper. Indeed a CM is a set containing a number of similar individuals in memory.

Cluster in population

A number of population (or colony) individuals (or bees) that are similar to each other and construct a concentrated group are considered as a Cluster in Population (CP) throughout all this paper. Indeed a CP is a set containing a number of similar individuals in population (colony).

Cluster size in memory

The number of clusters defined in the memory is named Cluster Size in Memory (CSM) throughout all this paper.

Cluster size in population

The number of clusters defined in the population (colony) is named Cluster Size in Population (CSP) throughout all this paper.

Cluster center in memory

Each cluster defined in the memory has a central assumptive element that is named Cluster Center in Memory (CCM) throughout all the paper. The j th feature of i th CM is denoted by CCMi(j) and is defined according to (9).

where CMi is i th Cluster in Memory, |CMi| is the number of memory individuals (bees) in the i th Cluster in Memory, and memk is k th memory individual.

Cluster center in population (colony)

Each cluster defined in the population (colony) has a central assumptive element that is named Cluster Center in Population (CCP) throughout all the paper. The j th feature of i th CP is denoted by CCPi(j) and is defined according to (10).

where CPi is i th Cluster in Population, |CPi| is the number of population individuals in the i th Cluster in Population, and xk is k th population individual.

All of the seven questions mentioned above should be responded in a right manner. If we don’t control the above questions properly, it will be highly likely that the memory usage is more harmful than useful. Now we answer seven questions respectively.

(A1) Assume MUTi be i th MUT. The first MUT is assumed to be zero at cycle zero; i.e. MUT0 = 0. Also assume 𝜗i be equal to MUTi+ 1 −MUTi. The 𝜗i is also a random integer number between [L𝜗, U𝜗]. So for obtaining MUTi+ 1, we first compute 𝜗i and then add it to MUTi; as presented in (11).

(A2) both UdS and UgS are to set by user as two parameters of algorithm throughout all the paper.

(A3) PUT is always done at the time of MEC throughout all the paper. It is assumed when environment changes, the population needs to be updated.

(A4) When it is decided to update memory, we should first of all select UdS individuals (it is worthy to mention that UdS is set by user, so in each MUT only UdS individuals are chosen) in the population that can be transformed to memory. All PEM s should be chosen in such a way that two constraints are satisfied. (1) Qualities of memory individuals (bees) should be as high as possible, and (2) Diversity among memory individuals (bees) should be guaranteed.

To satisfy constraint (2), the proposed algorithm first assumes that each population individual is a data sample and each dimension is a feature; so we have a dataset. A fast clustering algorithm partitions data samples (population individuals) into CSP clusters, where CSP = UdS. The proposed algorithm chooses one population individual per each cluster. The proposed algorithm chooses as an PEM individual for that cluster, the population individual that have the most quality among individuals of that cluster. By selecting the best individual in each cluster, the proposed algorithm guarantees constraint (1).

(A5) When it is decided to update memory and after making the clustering of population individuals, in the second phase we should select UdS memory individuals that can be removed (they should be replaced with PEM individuals). All EM individuals should be chosen in such a way that the two constraints mentioned in (A4) are guaranteed to be satisfied. To satisfy constraint (1), the proposed algorithm considers each memory individual as a data sample and each dimension as a feature; so we have a dataset again; we initially place each data sample in a cluster produced in population, i.e. we cluster data samples. The memi is placed in the j th cluster if we have ∀k : {1,2,…,UdS}⋅|memi − CCPj|≤|memi − CCPk|, where CCPk is the center of k th cluster produced right after clustering task accomplished on the population individuals and |⋅| indicates a norm function (here in the paper \(|a| = ({\sum }_{i = 1}^{p} {{a}_{i}^{2}})^{0.5})\). To satisfy constraint (2), the algorithm selects the worst memory element in each cluster as EM in that cluster and it should be replaced with PEM individual in that cluster.

Indeed the algorithm first partitions the population individuals into CP.

where t ∈{1,2,…,UdS} and CPUd stands for clustering of populations individuals when it is wanted to update memory and \({\mathit {CP}}_{t}^{Ud}\) stands for its t th cluster. Let \({\mathit {Best}}_{i}^{Ud} = \arg (\max _{j\in CP_{i}^{Ud}} f(pop_{j} ))\). Then it places any memory element in the cluster whose center is the nearest. i.e.

Let \({\mathit {Worst}}_{i}^{Ud} = \arg (\min _{j\in CM_{i}^{Ud}} f(\mathit {mem}_{j}))\). Now we have \(EM_{i} = \mathit {mem}_{{\mathit {Worst}}_{i}^{Ud}}\) and \(PEM_{i} = pop_{{\mathit {Best}}_{i}^{Ud}}\).

(A6) When it is decided to update population, we should first of all select UgS memory elements (it is worthy to mention that UgS is set by user, so in each PUT only UgS memory elements are chosen) in the memory that can be transformed to population. All MEP s should be chosen in such a way that two constraints are satisfied. (1) Qualities of population individuals (bees) should be as high as possible, and (2) Diversity among population individuals (bees) should be guaranteed.

To satisfy constraint (2), the proposed algorithm first assumes that each memory individual is a data sample and considers each dimension as a feature; so we have a dataset. A fast clustering algorithm partitions data samples (memory elements) into CSM clusters, where CSM = UgS. The proposed algorithm chooses one memory individual per each cluster. The proposed algorithm chooses the memory individual that has the most quality among individuals of that cluster, as an MEP individual for that cluster. By selecting the best individual in each cluster, the proposed algorithm guarantees constraint (1).

(A7) When it is decided to update population and after making the clustering of memory individuals, in the second phase we should select UgS population individuals that can be removed (they should be replaced with MEP individuals). All EP individuals should be chosen in such a way that the two constraints mentioned in (A4) are guaranteed to be satisfied. To satisfy constraint (1), the proposed algorithm considers each population individual as a data sample and each dimension as a feature; so we have a dataset again; we initially place each data sample in a cluster produced over memory individuals, i.e. we cluster data samples. The popi is placed in the j th cluster if we have ∀k : {1,2,…,UgS}⋅|popi −CCMj|≤|popi −CCMk|, where CCMk is the center of k th cluster produced right after clustering task accomplished on the memory individuals. To satisfy constraint (2), the algorithm selects the worst population element in each cluster as EP in that cluster and it should be replaced with MEP individual in that cluster.

Indeed the algorithm first partitions the memory individuals into CM.

where t ∈{1,2,…,UgS}. Let \({Best}_{i}^{Ug} = \arg (\max _{j\in {CM}_{i}^{Ug}} f(mem_{j}))\). Then it places any population element in the cluster whose center is the nearest. i.e.

Let \({\mathit {Worst}}_{i}^{Ug} = \arg (\min _{j\in {CM}_{i}^{Ug}} f(pop_{j} ))\). Now we have \(EP_{i} =pop_{{\mathit {Worst}}_{i}^{Ug}}\) and \(MEP_{i} = mem_{{\mathit {Best}}_{i}^{Ug}}\).

One of the main challenges in dynamic environments is to maintain the diversity when implementing the algorithm and in this method the diversity has been maintained stable in the population using clustering of memory and the population. In this method the memory and the population in the first phase are initialized. Then memory and the population are clustered after being initialized based (12) and (13). Each bee needs to be placed in his cluster for insertion and retrieval. Clustering is an unsupervised learning field in which the samples are divided into a series of clusters as the samples in a cluster are similar and they are different than the samples in other clusters. There are many criteria to measure the similarities between any pair of samples. One of them is Euclidean distance between the two samples. Similar samples have lower Euclidean distance and they are placed in a cluster. This clustering is called distance-based clustering. One of the distance-based clustering is k-means clustering. In k-means clustering the center of a cluster is the average amount of data within each cluster. Then the data are clustered based on the Euclidean distance to cluster centers. This method in each iteration improves the inside cluster changes of the model which is done by estimating the new cluster center in iteration phase. In this way the data are allocated to different clusters based on updated average. This work will continue until the center of the cluster is fixed and the value will remain unchanged in successive iterations and the clusters are stable. Pseudo-code of the proposed method is based on Form (5).

As mentioned previously in order to update the memory an alternative strategy should be used. The pseudo-code of the update function in the memory is presented in Form (6). In this pseudo-code it is mentioned that if there are two similar persons (with the closest distance to each other) exist in the memory the person with lower efficiency is removed from the memory and the person with higher efficiency remains in the memory.

5.2 Descriptions of the proposed algorithm pseudo code

At the beginning of the algorithm the required parameters are defined. In Phase 2 the population must be created. To initialize the population (16) and (17) are used. Initializing population for the base and memory population is done based on (16) and (17).

where |mem| and |pop| are memory and population size. In step 3 and 4 based on efficiency function, competence for each individual memory is calculated by the base or memory population. In phase 6 it becomes clear that memory update is done and a randomized range of rand (5,10). If the memory update time occurs in cycle iteration then the next update for memory is in Rand(5,10) + cycle. The algorithm cycle begins at stage 6. Steps 7 and 8 state the function of updating for the bees and memory population (in step 8 popfunction(x) is ABC algorithm (algorithm 4)). In step 9 the reassess is performed to calculate the efficiency of the bees and if the efficiency is changed even for a single bee, we understand that the environment has changed. Step 11 based on the changes in the environment, the data stored in the memory should be applied foe the new environment which is done in 6 various phases as follows:

-

1.

Efficiency is calculated for the base and memory population.

-

2.

Memory population is clustered.

-

3.

The best person in each cluster of the population is selected and the best person in this population is the one who has the best efficiency.

-

4.

The based population is clustered.

-

5.

The worst person in each base population cluster is selected (the worst individual from each cluster is the least efficient one).

-

6.

The worst person in each cluster is replaced by the best individual from each cluster in the base population.

Step 12 indicates that if update time is greater than the algorithm cycle, we activate update for active memory and otherwise one unit is added to the cycle and finally when we get to the cycle stop condition we leave the cycle and reach the end of algorithm.

Example 1

Figure 3 shows an example that helps to explain the proposed approach. As it is presented in Fig. 3, the problem space is divided into three clusters and in each cluster, the position of the bees is defined by a small star, the center of cluster is defined by the plus, the position of the memory is determined by a circle, the center of cluster of memory population is defined by the square, the center of each peak is marked by a diamond and the new center of the peal is marked by a bug star sign. As discussed before the worst individual of the population is the one who has the greatest distance from the center of the peak and in fact it can be said that the worst person has the least efficiency. If the environment change occurs and the peak center is changed then the efficiency of the members can be changed.

The closest memory to the new peak is considered as the best memory and this memory after the change and displacement of the optimum peak can lead members of the population toward the new peak. The question that arises here is that how a memory understands is close or far from the new peak center. In response to this question, we can say that, if the efficiency of a memory after the change of environment is increased the memory understands that it is closer to the peak and if the efficiency is lowered the memory understands that is far from it. The best individual of the population is the closest one to the center of the peak center.

6 Tests and results

In order to perform the tests on the proposed algorithm and its comparison with other algorithms in a dynamic environment Moving Peaks Benchmark [20] is used to test the efficiency of the proposed method.

6.1 Moving peaks benchmark problem

MPB problem [25] is a good simulator for simulating the dynamic environment. This problem includes m peaks in a dimension space of the size n with real value parameters and the heights, widths and positions of peaks may change over time. MPB function is formulated based on (18):

where, \(B(\vec {{x}})\) is the base value of the environment that is independent of time and P is a function that defines the shape of the peak, that each of m peaks has their time variable parameters, height (h), width (w), and their own position (p). In each ΔE fitness evaluations, height, width and position of each peak are changed. The height and width of each peak changes by adding a Gaussian random variable. The change frequency parameter indicates when the environment is changed or when the algorithm must respond to changes in the environment. Moving Peaks Benchmark function has various parameters that by changing each one of these parameters, the problem nature can be changed. Figure 4 presents the change of the peaks in this problem with multiple peaks.

The parameter s controls the amount of variation, ΔE determines the frequency of changes, the parameter λ determines how the position of a peak is changed based on its previous motion.

If λ = 0, every motion can be random and if λ = 1, peaks can move in a determined path. Whenever a change occurs in the environment this change is mention on the location, height and width of a peak as the equations mentioned in (19).

Transmission vector \(\vec {{\nu } }_{i} (t)\) combines a random vector \(\vec {{r}}_{i} \) with the previous transmission vector \(\vec {{\nu } }_{i} (t-1)\). The random vector \(\vec {{\nu } }_{i} (t)\) is generated by producing the random numbers in [0,1] for each dimension and normalizing it to a length of s. Vector \(\vec {{\nu } }_{i} (t)\) can be created based on the previous change where the position of the peaks is aligned to the previous changes or it is created randomly which changes the position of the peaks and they would have no dependence to the last change. Vector \(\vec {{\nu }}_{i} (t)\) is calculated based on (20):

Peak function for height, width and position of each peak is calculated based on (21).

The part related to the radical mentions the distance between a point and a peak position.

Numerical experiments concerning the Moving Peaks Benchmark, scenario 2, as proposed by Branke (2001) were performed in order to test behavior of the proposed method. The default settings and definition of the benchmark used in the experiments of this paper can be found in Table 1. Parameter settings for the proposed algorithm are presented in Table 2.

6.2 Varying shift severity

The shift severity parameter of the MPB controls the severity of the change in height, width and position of peaks. From Table 3, it can be seen that the results achieved by the proposed algorithm are much better than the results of the other 11 state-of-the-art algorithms on the MPB problems with different shift severity. As we know, the peaks are more and more difficult to track with the increasing of the shift severity. Of course, the performance of any optimization algorithm degrades when the shift severity increases. However, the offline error of the proposed algorithm is better than the other 11 state-of-the-art algorithms. These results indicate the proposed algorithm to adapt better than others algorithm to more severe changes in the landscape.

6.3 Varying number of peaks

Table 4 presents the experimental results in terms of the offline error for 19 algorithms, where the results of the other 18 state-of-the-art algorithms are provided by the corresponding papers with their optimal configuration that enables them to achieve their best performance. In Table 4, mCPSO∗ and mQSO∗ denote mCPSO without anti-convergence and mQSO without anti-convergence, respectively. From Table 4, it can be seen that the performance of the proposed algorithm is not influenced too much when the number of peaks is increased. Usually, increasing the number of peaks makes it harder for algorithms to track the optima. However, the offline error is less when the number of peaks is larger than 50 for the proposed algorithm.

6.4 Coverage of bees around peaks

Figure 5 shows the distribution of bees around peaks that is drawn in 2 dimensions for 10 peaks and the default setting of the MPB after different numbers of the fitness evaluations.

6.5 Effect of the dimension size on the proposed algorithm

Table 5 shows the results of the proposed method with different dimensions when the number of peaks is 10, the change frequency is 5000 and shift severity is 1; it also represents the same results for mQSO, Adaptive mQSO, rPSO and mPSO. The results presented in Table 5 shows “the more dimensions of the landscape, the better the performance of the proposed algorithm comparing to the other algorithms”. Figure 6 presents the diagrams of the offline error for the proposed method when number of dimensions is 5 or 30, the frequency of changes is 5000 and the number of peaks is 10.

6.6 Effect of memory and clustering

Memory and clustering are of those facilities that are able to significantly improve both of the convergence time and the performance metric of dynamic optimizers. In previous sections, we have discussed about clustering in details. In this subsection, the effect of these two concepts is evaluated on dynamic optimization paradigm.

To determine how effective these two concepts are, the offline errors for four paradigms have been produced: With-Memory- withOut-Clustering (WMOC), withOut-Memory-With-Clustering (OMWC), withOut-Memory-withOut-Clustering (OMOC), and With-Memory-With-Clustering (WMWC). These results are depicted in Fig. 7. The parameters of these results are the same mentioned as default parameters.

6.7 Effect of frequency change on offline error

In this section, the offline error is plotted for different frequency changes (i.e. 500, 1000, 2500, 5000, 10000, and 15000) when there are 10 peaks, 5 dimensions, and parameter s is set to 1.

Figure 8a depicts the offline error curve of the proposed method when the frequency change is 500 and the number of the fitness evaluations is 50000 (it means that 100 changes occur). The number of peaks is 10 in all experiments of this section. Figure 8b depicts the offline error curve of the proposed method when the frequency change is 1000 and the number of the fitness evaluations is 100000 (it means that 100 changes occur again here). Figure 8c, d, e and f depict the offline error curves of the proposed method when the frequency changes are 2500, 5000, 10000, and 15000 respectively and the numbers of the fitness evaluations are 250000, 500000, 1000000, and 1500000 (it means that 100 changes occur in all of these cases).

6.8 Comparing with the state-of-the-art methods

In this section we have compared our method with 6 state-of-the-art methods including PCAFSA [41], Adaptive-SFA [42], CDEPSO [43], mNASA [44], Multi-pop-ABC [45], and CMBGA [46] in terms of the offline and standard errors. The employed parameters for these methods have been extracted based on their papers and have been presented in Table 6. The experimental comparison of the proposed method with the algorithms mentioned in Table 6 has been presented in Table 7. The results indicate that by increasing the number of the peaks, our method becomes more superior to state-of-the-art methods.

Effect of λ value on the offline error of the proposed method when the change frequency is 500 and there are 10 peaks has been investigated here. The experimental results presented in Table 8 indicate as the value of the parameter λ increases, our method performs better. It has been predictable, because by increasing λ value, the randomness in the movements of the peaks becomes more limited.

The memory size is the last parameter that should be investigated in our experimentations so as to find out what value is its best option. This parameter plays an important role in effectuality of the proposed method. Figure 9 depicts the proposed method efficacy in terms of the number of peaks when change frequency is 5000 for memory sizes 10, 20, 30, and 40. The best value for memory size is 10 according to these results.

The effect of the population size on performance of the proposed method in terms of average offline errors has been examined in Table 9.

The results presented in Table 9 indicate the best population size is no more than 150 and no less than 50. It can be 100 according to the results of Table 9. Due to two reasons: (a) if the population size is a large value, the algorithm should accomplish many fitness function evaluations in one generation; and (b) if population is very small, then the algorithm exploration is not guaranteed

7 Conclusions and future work

Intelligent optimization algorithms in dynamic environments should be designed in such a way that they could track the optima efficiently. In this article we use a combination of the memory and artificial bee colony algorithm to maintain good solutions. We increase algorithms’ efficiency by clustering of population and memory individuals. We employed an appropriate strategy to maintain diversity in population. We experimentally have shown that the proposed algorithm completely outperforms the state-of-the-art algorithms in terms of convergence speed. Usage of the proposed algorithm with chaos theory can be a good idea for future works.

References

Branke J (1999) Memory enhanced evolutionary algorithms for changing optimization problems. Proc Congr Evol Comput 3:1875–1882

Yang S (2006) Associative memory scheme for genetic algorithms in dynamic environments. In: Proceedings of EvoWorkshops: Appl. Evol. Comput., LNCS 3907, pp 788–799

Yang S, Yao X (2008) Population-based incremental learning with associative memory for dynamic environments. IEEE Trans Evol Comput 12(5):542–561

Cobb HG, Grefenstette JJ (1993) Genetic algorithms for tracking changing environments. In: Proceedings of the 5th international conference on genetic algorithms, pp 523–530

Grefenstette JJ (1992) Genetic algorithms for changing environments. In: Proceedings of the 2nd international conference on parallel problem solving from nature, pp 137–144

Yang S (2008) Genetic algorithms with memory and elitism-based immigrants in dynamic environment. Evol Comput 16(3):385–416

Ramsey CL, Grefenstette JJ (1993) Case-based initialization of genetic algorithms. In: Forrest S (ed) Proceedings of the fifth international conference on genetic algorithms. Morgan Kaufmann, pp 84–91

Louis SJ, Xu Z (1996) Genetic algorithms for open shop scheduling and re-scheduling. In: Cohen ME, Hudson DL (eds) Proceedings of the eleventh international conference on computers and their applications (ISCA), pp 99–102

Yang S, Tinos R (2007) A hybrid immigrants scheme for genetic algorithms in dynamic environments. Int J Autom Comput 3(4):243–254

Goldberg DE, Smith RE (1987) Non-stationary function optimization using genetic algorithms with dominance and diploidy. In: Grefenstette JJ (ed) Proceedings of the second international conference on genetic algorithms (ICGA 1987). Lawrence Erlbaum Associates, pp 5968

Ryan C (1997) Diploidy without dominance. In: Nordic workshop on genetic algorithms, pp 45–52

Ryan C (1997) Dyploidy without dominance. In: Alander JT (ed) Proceedings of the nordic workshop on genetic algorithms, pp 63–70

Lewis EHJ, Ritchie G (1998) A comparison of dominance mechanisms and simple mutation on non-stationary problems. In: Schoenauer M, Deb K, Rudolf G, Yao X, Lutton E, Merelo JJJ, Schwefel H-P (eds) Proceedings of the parallel problem solving from nature (PPSN V), vol 1917 of Lecture notes on computer science. Springer, pp 139–148

Uyar AS, Harmanci AE (1999) Investigation of new operators for a diploid genetic algorithm. In: Proceedings of SPIE’s annual meeting

Uyar AS, Harmanci AE (2005) A new population based adaptive dominance change mechanism for diploid genetic algorithms in dynamic environments. Soft Comput 9(11):803–814

Yang S (2006) Dominance learning in diploid genetic algorithms for dynamic optimization problems. In: Keijzer M et al. (eds) Proceedings of the eighth international genetic and evolutionary computation. Conference (GECCO 2006). ACM Press, pp 1435–1436

Yang S (2007) Explicit memory schemes for evolutionary algorithms in dynamic environments. In: Yang S, Ong Y-S, Jin Y (eds) Evolutionary computation in dynamic and uncertain environments, vol 51 of Studies in computational intelligence, pp 3–28

Ramsey CL, Grefenstette JJ (1993) Case-based initialization of genetic algorithms. In: Forrest S (ed) Proceedings of the fifth international conference on genetic algorithms. Morgan Kaufmann, pp 84–91

Louis SJ, Xu Z (1996) Genetic algorithms for open shop scheduling and rescheduling. In: Cohen ME, Hudson DL (eds) Proceedings of the eleventh international conference on computers and their applications (ISCA), pp 99–102

Trojanowski K, Michalewicz Z (1999) Searching for optima in non-stationary environments. In: Proceedings of the IEEE congress on evolutionary computation (CEC 1999). IEEE Press, pp 1843–1850

Barlow GJ, Smith SF (2008) A memory enhanced evolutionary algorithm for dynamic scheduling problems. In: Springer (ed) Applications of evolutionary computing, vol 4974 of Lecture notes in computer science, pp 606–615

Ryan C (1997) Dyploidy without dominance. In: Alander JT (ed) Proceedings of the nordic workshop on genetic algorithms, pp 63–70

Bird S, Li X (2007) Using regression to improve local convergence. In: Proceedings of congress on evolutionary computation, pp 592–599

Karaboga D, Basturk B (2009) A powerful and efficient algorithm for numerical function optimization: artificial bee colony (ABC) algorithm. J Glob Optim 39:459–471

Branke J (1999) Memory enhanced evolutionary algorithms for changing optimization problems. In: Proceedings of the IEEE congress on evolutionary computation (CEC 1999). IEEE Press, pp 1875–1882

Yang S, Li C (2009) A clustering particle swarm optimizer for dynamic optimization. In: Proceedings of congress on evolutionary computation, pp 439–446

Blackwell T, Branke J, Li X (2008) Particle swarms for dynamic optimization problems. In: Swarm intelligence. Springer, Berlin, pp 193–217

Blackwell TM, Branke J (2006) Multiswarms, exclusion, and anticon vergence in dynamic environments. IEEE Trans Evol Comput 10(4):459–472

Lung RI, Dumitrescu D (2010) Evolutionary swarm cooperative optimization in dynamic environments. Nat Comput 9(1):83–94

Lung RI, Dumitrescu D (2007) A collaborative model for tracking optima in dynamic environments. In: Proceedings of congress on evolutionary computation, pp 564–567

Blackwell TM, Branke J (2006) Multiswarms, exclusion, and anticon vergence in dynamic environments. IEEE Trans Evol Comput 10(4):459–472

Li X (2004) Adaptively choosing neighborhood bests using species in a particle swarm optimizer for multimodal function optimization. In: Proceedings of genetic and evolutionary computation conference, pp 105–116

Yang S, Li C (2012) A clustering particle swarm optimizer for locating and tracking multiple optima in dynamic environments. IEEE Trans 4:16

Liu L, Yang S, Wang D (2010) Particle swarm optimization with composite particles in dynamic environments. IEEE Trans Syst Man Cybern B Cybern 40(6):1634–1648

Kamosi M, Hashemi AB, Meybodi MR (2010) A hibernating multiswarm optimization algorithm for dynamic environments. In: Proceedings of world congress on NaBIC, pp 363–369

Woldesenbet YG, Yen GG (2009) Dynamic evolutionary algorithm with variable relocation. IEEE Trans Evol Comput 13(3):500–513

Yang S, Li C (2008) Fast multi-swarm optimization for dynamic optimization problems. In: Proceedings of international conference on natural computation, vol 7, no 3, pp 624–628

Hashemi B, Meybodi M (2009) Cellular PSO: a PSO for dynamic environments. In: Advances in computation and intelligence. Lecture notes in computer science, vol 5821, pp 422–433

Wang H, Yang S, Ip WH, Wang D (2012) A memetic particle swarm optimization algorithm for dyanamic multi modal optimization problems. Int J Syst Sci 43(7):1268–1283

Blackwell T, Branke J, Li X (2008) Particle swarms for dynamic optimization problems. In: Swarm S, Yang C, Li A (eds) Clustering particle swarm optimizer for locating and intelligence. Springer, Berlin, pp 193–217

Yazdani D, Sepas-Moghaddam A, Dehban A, Horta N (2016) A novel approach for optimization in dynamic environments based on modified artificial fish swarm algorithm. Int J Comput Intell Appl 15(2):1650010 (23 pages)

Nasiri B, Meybodi MR (2016) Improved speciation-based firefly algorithm in dynamic and uncertain environment. Int J Bio-Inspir Comput (in press)

Kordestani JK, Rezvanian A, Meybodi MR (2014) CDEPSO: a bi-population hybrid approach for dynamic optimization problems. Appl Intell 40:682–694

Yazdani D, Nasiri B, Sepas-Moghaddam A, Meybodi M, Akbarzadeh-Totonchi M (2014) MNAFSA: a novel approach for optimization in dynamic environments with global changes. Swarm Evolut Comput 18:38–53

Shams KN, Abdullah S, Turky A, Kendall G (2016) An adaptive multi-population artificial bee colony algorithm for dynamic optimisation problems. In: Knowledge-based systems ⋅ 104 April 2016 with 138 reads. https://doi.org/10.1016/j.knosys.2016.04.005

Mohammadpour M, Parvin H, Sina M (2018) Chaotic genetic algorithm based on explicit memory with a new strategy for updating and retrieval of memory in dynamic environments. J AI Data Min 6:191–205 (in press)

Rezvanian A, Meybodi MR (2010) Tracking extrema in dynamic environments using a learning Automata-Based immune algorithm, grid and distributed computing. Control Autom 121:216–225

Xin Y, Ke T, Xin Y (2011) Immigrant schemes for evolutionary algorithms in dynamic environments: adapting the replacement rate. Science in China Series F - Information Sciences II:543–552

Baktash N, Mahmoudi F, Meybodi MR (2012) Cellular PSO-ABC: a new hybrid model for dynamic environment. Int J Comput Theory Eng 4(3):365–368

Yang S (2007) Explicit memory schemes for evolutionary algorithms in dynamic environments.. In: Evolutionary computation in dynamic and uncertain environments, vol 51. Springer, Heidelberg, pp 3–28

Yazdani D, Akbarzadeh-Totonchi MR, Nasiri B, Meybodi MR (2012) A new artificial fish swarm algorithm for dynamic optimization problems. In: IEEE congress on evolutionary computation (CEC), pp 1–8

Saxena N, Mishra KK (2017) Improved multi-objective particle swarm optimization algorithm for optimizing watermark strength in color image watermarking. Appl Intell 47(2):362–381

Sharma B, Prakash R, Tiwari S, Mishra KK (2017) A variant of environmental adaptation method with real parameter encoding and its application in economic load dispatch problem. Appl Intell 47(2):409–429

Tripathi A, Saxena N, Mishra KK, Misra AK (2017) A nature inspired hybrid optimisation algorithm for dynamic environment with real parameter encoding. IJBIC 10(1):24–32

Acknowledgements

We want to be thankful of Yasooj Branch, Islamic Azad University, Yasooj, Iran, for supporting this research.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Parvin, H., Nejatian, S. & Mohamadpour, M. Explicit memory based ABC with a clustering strategy for updating and retrieval of memory in dynamic environments. Appl Intell 48, 4317–4337 (2018). https://doi.org/10.1007/s10489-018-1197-z

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10489-018-1197-z