Abstract

In this paper, we propose a differential evolution (DE) algorithm variant with a combination of multiple mutation strategies based on roulette wheel selection, which we call MMRDE. We first propose a new, reflection-based mutation operation inspired by the reflection operations in the Nelder–Mead method. We design an experiment to compare its performance with seven mutation strategies, and we prove its effectiveness at balancing exploration and exploitation of DE. Although our reflection-based mutation strategy can balance exploration and exploitation of DE, it is still prone to premature convergence or evolutionary stagnation when solving complex multimodal optimization problems. Therefore, we add two basic strategies to help maintain population diversity and increase the robustness. We use roulette wheel selection to arrange mutation strategies based on their success rates for each individual. MMRDE is tested with some improved DE variants based on 28 benchmark functions for real-parameter optimization that have been recommended by the Institute of Electrical and Electronics Engineers CEC2013 special session. Experimental results indicate that the proposed algorithm shows its effectiveness at cooperative work with multiple strategies. It can obtain a good balance between exploration and exploitation. The proposed algorithm can guide the search for a global optimal solution with quick convergence compared with other improved DE variants.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

The differential evolution (DE) algorithm proposed by Price and Storn [1] is a simple but powerful implementation of stochastic search techniques. It has the advantages of simple principles, few controlled parameters, and strong robustness [1,2,3] for real-valued parameter optimization. Population-based optimization algorithms, including DE, tend to suffer from long computational times because of their evolutionary stochastic nature. This crucial drawback sometimes limits their applications to problems with few or no real-time constraints. Despite this drawback, the DE algorithm has a strong real-number domain-search capability, and the improved DE algorithm is still an important research field whose purpose is to adapt to more complex optimization problems and achieve high-quality solutions.

DE mainly has three control parameters, population size NP, scaling factor F, and crossover rate CR, and many mutation strategies have been proposed. [5, 9, 16, 24] Some mutation strategies are more suitable for global convergence, [34] some are more effective in the local search, [7] and some are more suitable when solving rotated problems. [4] Control parameters of the classical DE are fixed, and a single mutation strategy is used. The performance of DE may vary greatly for different optimization problems using the same control parameters and single offspring generation strategy. [34] However, for a given optimization problem, the most suitable control parameters and the proper mutation strategy may not be the same at different stages of evolution. [34] There are many large-scale optimization problems in the real world. For some problems, we may have to spend a large amount of time to determine the best strategy and tuning parameters [5, 6] to obtain better performance. A DE with outstanding performance is required to achieve a global optimum without considering the characteristics of the problem.

Much attention has been focused on methods to automatically tune parameters and to develop an excellent mutation strategy or ensemble of mutation strategies. This focus has resulted in many improved DE variants, such as SaDE [7] (ensemble of two mutation strategies and self-adapted parameters), jDE [8] (self-adaptive tuning parameters), JADE [9] (to develop an excellent mutation strategy and self-adapted parameters), EPSDE [10] (ensemble of mutation strategies and parameters), CODE [11] (composition of mutation strategies with their own parameters), SHADE [12] (self-adapted parameters), HSDE [13] (hybrid of two mutation strategies), GADE [14] (greedy adapted parameters), MPEDE [15] (ensemble of multiple mutation strategies), and DE/Alopex/1 [16] (to develop an excellent mutation strategy).

The Nelder–Mead method is a traditional direct algorithm for unconstrained nonlinear optimization problems. It has excellent local search capability, but it has strong dependence on the initial solution and it falls easily into a local minimum. Reflection, expansion, contraction, and shrinking are its primary operations. In this paper, inspired by the reflection operations in the Nelder–Mead method, we propose a new, reflection-based mutation operation. For each individual to be mutated, we randomly select four individuals from the current population, calculate the weighted center of the three individuals with better fitness based on their object function values, and use the difference vector of the best and worst individuals as the perturbation vector. We use the center we have calculated as the target individual, thus completing a mutation operation. We compare the reflection-based strategy and seven other mutation strategies in an experiment to prove that the proposed mutation strategy has good exploration and exploitation capability.

Although the reflection-based strategy can balance exploration and exploitation better than other basic mutation strategies, it is still a greedy mutation strategy and is prone to premature convergence when solving complex multimodal optimization problems. We add two basic strategies to generate perturbation vectors to maintain population diversity and to increase robustness. We use a roulette wheel selection strategy to arrange mutation strategies based on their success rates for each individual. We call this variant DE with multiple mutation strategies based on roulette wheel selection (MMRDE). We used 28 benchmark functions to test the performance of MMRDE with some improved DE variants. Experimental results indicate that the proposed algorithm can balance between exploration and exploitation well. The algorithm shows the effectiveness of three mutation strategies in cooperative work. It can guide the search for a global optimal solution with fast convergence compared with other improved DE variants.

This paper has six sections. Section 2 discusses relevant works. Section 3 explains the traditional DE algorithm. We introduce the details of our proposed MMRDE in Section 4. Section 5 discusses the experimental study to test the new reflection-based mutation strategy with other mutation strategies and compares the performance of MMRDE to some improved DE variants. We provide our conclusions in Section 6.

2 Relevant works

The DE algorithm is an excellent evolutionary algorithm (EA) that is simple, has few controlled parameters, and is robust. DE, however, is prone to suffer from premature convergence, evolution stagnation, and long computational times. The performance of DE strongly depends on the setting of control parameters and/or the mutation strategy used.

DE mainly uses the three control parameters NP, F, and CR. NP is used to deal with the problem of different dimensionalities, and F has greater influence on the convergence speed of DE. [7] The choice of F is more flexible. Storn et al. [1] said that a value of F in [0.5, 1] is reasonable, a value less than 0.4 or more than 1.0 can sometimes be effective, and a value of 0.6 or 0.5 is often a good choice. As discussed in the literature, [17] an initialF value of 0.9 is recommended. According to Qin et al., [7] a value of F generated randomly in the range (0, 1] is usually preferred. CR mainly affects the adaptability of DE to solve complex problems. A proper value of CR may result in good performance in some learning strategies, and the wrong choice may lead to deterioration in the performance of any learning strategy. A good CR parameter value usually falls in a small range, and the algorithm always performs well on a complex problem. As discussed in the literature, [1] a large CR can accelerate the convergence rate, an initial value of 0.1 is a good choice, and setting CR to 0.9 or 1.0 can accelerate convergence. Price and Storn [18] proposed setting CR in the range (0, 0.2) for separable problems, and (0.9, 0.1) for multimodal problems. In the previously noted research, we see different conclusions for the tuning of control parameters, but we know that the proper parameters will vary according to the type of problem. Adaptively controlling the parameters within the entire evolution process can enhance the performance of DE. [19]

The control parameter settings of DE can presently be classified into the three categories of deterministic, adaptive, and self-adaptive parameter control. [20] Lampinen [21] proposed a fuzzy adaptive DE in which the values of F and CR are dynamically adapted based on fuzzy logic controllers. Brest et al. [8] proposed a self-adapting control parameter scheme (jDE), which may be the first in a category of literature discussing self-adapting control parameters. Ghosh et al. [22] presented an improved DE (FiADE) algorithm with tuning control parameters based on individual object function values. Leon et al. [14] proposed an adapting DE (GADE) that performs a greedy search to adjust the control parameters. Zhang et al. [9] proposed a self-adaptive control parameter adjustment scheme (JADE) in which F and CR are generated randomly according to Cauchy and normal distributions, respectively.

The chosen mutation strategy has a profound effect on the performance of DE. [23] The idea of designing a new mutation strategy [24] or introducing a technique that automatically selects the classical mutation strategy is used to improve DE performance. A new mutation strategy, DE/current-to-p best, with an optional external archive proposed by Zhang et al., [9] is a variant of DE/current-to-best. DE/current-to-p best/1 randomly chooses p% of the population’s best individuals instead of the best individual, as in DE/current-to-best/1. A novel mutation strategy called DE/current-to-gr_best/1 [25] is a variant of DE/current-to-best/1 proposed for the binomial crossover of DE. DE/current-to-gr_best/1 randomly chooses q% of the population as a group and uses the best solutions of the group to perturb the target vector. An improved DE variant named IDE [26] proposes a new triangular mutation strategy to balance global exploration and exploitation of DE. This strategy uses three randomly chosen vectors to form a convex combination vector, which is used as the base vector of the mutation strategy. This triangular mutation strategy uses the difference between the best and worst individuals among the three randomly selected vectors as the disturbance vector. This triangular mutation strategy combines with the basic mutation strategy (DE/rand/1/bin) through a nonlinear decreasing probability. Wang et al. [27] proposed an improved DE variant (IMSaDE) by using an improved mutation strategy that incorporated an elite archive strategy and parameter-adaptation strategy in the basic mutation strategy DE/rand/2. Using different offspring individual generation patterns in an optimization loop can improve the quality of subsequent offspring individuals. Moreover, the optimal individual’s relevant information can be passed to the rest of the individuals, which may involve different variation strategies. So, it can also improve the performance of the next generation. The use of different mutation strategies [28] can provide a balance between exploration and exploitation. This also reduces stagnation and premature convergence in DE. Two DE variants with adaptive strategy selection were proposed by Gong et al. [29] to autonomously select the most suitable strategy. Yi et al. [13] proposed an improved DE variant with a hybrid mutation strategy and self-adapting control parameters. The hybrid mutation strategy uses two types of mutation operators and autonomously selects the mutation operators for each individual. Leon et al. [16] proposed a novel mutation strategy called DE/Alopex/1, which calculates the probabilities of move directions based on the fitness value between two individuals, while the movement direction of other mutation strategies is independent of the fitness of selected individuals.

3 The basic DE

DE has proven to be an efficient and effective global algorithm for real-code optimization, and it has been shown to outperform the genetic algorithm. DE is similar to other intelligent optimization algorithms (e.g., genetic algorithms). It also randomly generates a population of NP feasible solutions PG = {X1,G,X2,G,…,XNP,G}. Any one feasible solution is based on constraint-optimization decision variables in the equation. We call it an individual \(X_{i,G} =\left ({x_{i,G}^{1} ,x_{i,G}^{2} ,\ldots ,x_{i,G}^{D}} \right ),i = 1,2,\mathellipsis ,NP\), where G denotes the number of generations and D is the number of individual dimensions. Each dimension of the individual is determined randomly and uniformly in the range [Xmin,Xmax], where Xmin = (x min1,x min2,…,x minD) and Xmax = (x max1,x max2,…,x maxD). Such a series of random individuals constitute the initial population, which can be expressed as

where rand(0,1) denotes a real number that is determined randomly and uniformly between 0 and 1.

3.1 Mutation

DE utilizes the difference vector of parents as its basic variance components. Each difference vector is obtained by subtraction between two different individuals from the parent. The mutation vector is obtained by the addition of the scaled difference vector and different individuals. The DE operator is usually represented by DE/x/y/z, where x represents the perturbed vector (also called the base vector), y represents the number of differential vectors, and z represents the crossover operator. The classical DE algorithm uses DE/rand/1 to generate the mutant vectors,

where Xa,G, Xb,G, and Xc,G are chosen from the current population, and Xi,g is the target individual. Xa,G, Xb,G, and Xc,G are three random individuals. F is a real and constant factor to scale the difference vector.

Some other mutation strategies are used in the literature, as shown in Table 1.

Xbest,G represents the vector with the best fitness value at generationG. Xa,G, Xb,G, Xc,G, Xd,G, and Xe,G are five individuals randomly chosen from the current population. Xi,G is the target vector.

3.2 Crossover operation

To improve the diversity of the population, DE introduces the crossover operation so that at least one component of the vector comes from the variation vector. The details are as follows:

where randj(0,1)∈[0,1] is the j th evaluation of a uniform random generator number, CR is a user-specified crossover probability in the range [0,1], and the index jrandrefers to a randomly chosen dimension, which is used to ensure that the trail vector Ui,G+ 1 gets at least one element from \(V_{i,G} =\left \{ {\begin {array}{l} U_{i,G + 1} ,if\;f\left ({U_{i,G + 1}} \right )<f\left ({X_{i,G}} \right ) \\ X_{i,G} ,otherwise \\ \end {array}} \right .,i = 1,2,\mathellipsis ,NP\). This cross-operation, called a binomial uniform crossover, is widely used in the literature. When the binomial uniform crossover is used, the strategies in Table 1 are named DE/rand/1/bin, DE/best/1/bin, DE/current-to-best/1/bin, DE/best/2/bin, DE/rand/2/bin, andDE/current-to-rand/1/bin, respectively.

3.3 Selection operation

Good selection strategies can significantly improve the convergence performance of the algorithm. DE uses a greedy selection strategy. The individual generated through mutation and crossover operations is compared with its parent individual. The better-performing individual is selected as one of the next generation of individuals in a population, as follows:

A series of mutations, crossovers, and selection operations can produce the same number of new individuals comprising the next-generation population. Then, the previous generation population continues the operation until termination conditions are reached. The termination conditions are usually met when the maximum number of iterations or the optimal solution of the relative equations is reached.

4 DE with multiple mutation strategies based on roulette wheel selection

In this section, we will introduce the details of the MMRDE algorithm. First, we propose a new reflection-based mutation operation inspired by the reflection operations in the Nelder–Mead method.

4.1 Reflection-based mutation

The Nelder–Mead method is a traditional direct algorithm for unconstrained nonlinear optimization problems. The method first constructs a simplex, which is a polyhedron containing n + 1 vertices for n-dimensional optimization. After calculating the object function of each vertex, we determine the best point, the reciprocal second-worst point, and the worst point. Through reflection, expansion, contraction, and shrink operations, a better solution can be found and obtain a new simplex by instead of the worst point with the better solution, then an approximate global optimal solution can be obtained after many iterations. The Nelder–Mead method is simple and easy to implement. It has excellent local search capability and does not require the function to be derivable, so it has a wide range of applications. It has strong dependence on the initial solution, however, and easily falls into a local minimum. The search direction of reflection and expansion operations is consistent. The expansion operation is performed on the premise that the reflected point is best so far. The contraction operation is performed when the reflected point is worse than the reciprocal second-worst point. Compression operations are performed when both reflection and contraction operations are unable to search for a better solution than the reciprocal second-worst point, and their main function is to compress the search space.

After a certain generation of a DE algorithm, the population individual shows the tendency to gather near the optimal solution. [30, 31] Therefore, the whole DE algorithm’s optimization process is similar to the compression operation in the Nelder–Mead method. The reflected point is generated with the worst point reflected through the centroid of the remaining n points after removing the worst point.

Motivated by this operation, we designed what we call a reflection-based mutation strategy, which is similar to the reflection operations in the Nelder–Mead method for the DE algorithm. We randomly select four individuals from the current population. We sort them from good to bad according to their object function values, f(Xa,G) < f(Xb,G) < f(Xc,G) < f(Xd,G). Then, the center Xo,G of the first three individuals is generated according to their function values. The trial vectors are generated with the worst point reflected through the best individual. The details are as follows:

where w1,w2, and w3 are the respective weights for Xa,G, Xb,G, and Xc,G; and F is a mutation scaling factor.

The Nelder–Mead method has excellent local search capability. But it has strong dependence on the initial solution and easily falls into a local minimum. Nelder–Mead approximates the optimum solution of a problem with n variables when the object function is continuous and unimodal in the search space. The method, however, is prone to becoming trapped in a basin of attraction of locally optimal solutions and to converge prematurely when solving multimodal optimization problems. The lack of diversity is an important reason for premature convergence of the algorithm. Population diversity and the convergence rate often can be antagonistic. Because the Nelder–Mead method uses all the points except the worst point to calculate the center point and uses a determined evolutionary direction, it can easily lose diversity and converge prematurely. Our reflection-based mutation strategy uses four random individuals, which are a small part of the population when solving high-dimensional problems, to perform mutation operations. When considering the solution of low-dimensional problems when the population is not large, four individuals are not a small number relative to the population, so we allow for the existence of duplicates of these four individuals, but there are at least two individuals. Therefore, this mutation operation can balance exploration and exploitation. Its performance will be confirmed in Section 5.2.

4.2 Assisted mutation strategies

The reflection-based mutation strategy is a greedy strategy. Although this mutation operation can balance exploration and exploitation better than other basic mutation strategies, it is still prone to premature convergence when solving complex multimodal optimization problems. To make it more suitable for solving as many optimization problems as possible, we must increase the robustness of the algorithm. We add two basic strategies to generate perturbation vectors to maintain population diversity. The characteristics of these two mutation strategies are described below:

-

1.

DE/rand/1/bin: DE/rand/1 is a widely used basic mutation strategy for random searches that is always used together with a binomial crossover. [15, 32, 33] It has strong exploration ability and can effectively maintain the diversity of populations. DE/rand/1 is suitable for solving multimodal optimization problems. It converges more slowly, however, when solving single modal problems. [34]

-

2.

DE/current-to-rand/1 without crossover: This mutation strategy uses information of a random individual in the current population to guide the mutation of the current individual. This mutation operation is applied without crossover. It is especially suitable for solving rotation problems because it is rotation-invariant. [11, 15, 35]

4.3 Selection of mutation strategies

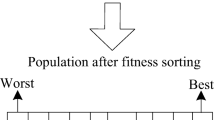

Like the original DE algorithm, only one population is used in our algorithm. Therefore, we face the problem of how to arrange the mutation strategy for each individual. In this study, we use a roulette wheel selection strategy [36,37,38] to arrange mutation strategies for each individual. Roulette strategies are based on the success rate of each strategy, which is the ratio of the number of better offspring individuals generated by one mutation strategy to the total number of individuals using that strategy.

In the initial generation, all mutation strategies have equal success rates. This ensures that each mutation strategy can be used by almost the same number of individuals. As evolution proceeds, some variation strategies may not be appropriate for the evolution of the current generation, so their success rates will be recalculated. The success rate of mutation strategy k (srk) is calculated as follows:

where Nk is the total number of individuals assigned mutation strategy k; srk refers to the success rate of mutation strategy k (it is a real value and varies between 0 and 1); NP is a population size; Ui,k,G indicates an individual with an index of i that uses mutation strategy k; and sci,k is an indicator that denotes whether individual Ui,k,G generates better offspring Xi,k,G. The higher a mutation strategy’s success rate, the greater the probability it is chosen. To ensure that the algorithm does not lose some mutation strategies during evolution, we set the same minimum value of the success rate for all mutation strategies. We then calculate the mutation strategy success rate to be below the predetermined minimum, discard the calculated success rate, and replace this rate with the predetermined minimum. We set the minimum success rate of each mutation strategy to 0.08. In addition, to ensure that the success rate of each strategy can be fully calculated, we calculate the success rate every 10 generations.

4.4 Parameter self-adaptive adjustment mechanism

The parameter self-adaptive adjustment mechanism allows an individual to use a scaling factor and crossover factor to generate new individuals, greatly improving convergence performance. The parameter adaptive regulation method we adopt is based on Tanabe et al., [12] which in turn is based on JADE. [9] Two history memories, MF and MCR, each with H entries, store the mean values of a Cauchy and normal distribution, respectively. Fi and CRi are scale and crossover factors, respectively, of individuals from the current population. Fi and CRi are randomly generated according to their respective distributions. The variation factors and crossover factors of an individual are self-adaptively generated as follows:

where MF,ri and MCR,ri are elements that are randomly selected from MF and MCR, respectively. Both MF and MCR are initialized to 0.5. To update MF and MCR expediently, we introduce an index pos to remember the update position, with pos initialized to 1. If pos> H, then pos= 1, and 1 is added to pos at the end of each generation. Fi is truncated to 1 when Fi > 1; when Fi < 0, Fi is considered to have an invalid value and it must be regenerated. If CRi < 0, then CRi is truncated to 0, and if CRi > 1 then CRi is truncated to 1. MF,pos and MCR,pos are recalculated at the end of each generation as follows:

where SF and SCR are sets of all success mutation factors and crossover factors, respectively. They inherit the Fi and CRi of the successful individuals in each generation. That is, if a trial vector Ui,G has a lower object value and then takes this operator, then Fi → SF, CRi → SCR. A weight strategy is introduced to control the generation of MF,i andMCR,i. This operation effectively alleviates the obvious bias of MCR,i to a small value during the evolution process, according to Peng et al., [39] by introducing an array Δf to record the deviation of the old and new fitness values of the successful individuals, that is, Δf(k) = fold(k) − fnew(k). The size of the array Δf is equal to the number of successful individuals in the current generation. To aid in understanding the details of MMRDE, Fig. 1 displays its framework.

5 Experimental Setup

This section shows all the experiments we performed for this study. Our algorithms were coded using Fortran 90. The compiler was Intel Visual FORTRAN Composer XE 2013 with VS2010. We ran the algorithms on a PC with 2.83 GHz Intel(R) Core(TM)2 Quad CPU and 4GB RAM on Windows 7. A benchmark set was used to test the performance of the proposed algorithm (MMRDE).

5.1 Introduction of the benchmark set

The benchmark set used in this study consists of 28 benchmark functions from the Institute of Electrical and Electronics Engineers CEC2013 special session on real-parameter optimization. A detailed description of the 28 benchmark functions can be found in Liang et al. [40] Overall, these 28 benchmark functions can be divided into three classes:

-

1.

Unimodal Functions: f1 ∼ f5

-

2.

Basic Multimodal Functions: f6 ∼ f20

-

3.

Composition Functions: f21 ∼ f28

Given theoretical thinking that the error values all converge to 0, to avoid influence of the algorithm on the accuracy of the program language, when the optimal individual reached the precision (10− 8), the algorithm was deemed to converge, and it exited the calculation.

5.2 Performance test of DE/Reflection/1

5.3 Experimental settings

To demonstrate the performance of our reflection-based strategy, we compare the performance of the algorithm with reflection-based DE/Reflection/1 and with seven other mutation strategies: DE/Alopex/1, [16] DE/rand/1, DE/best/1, DE/current-to-best/1, DE/best/2, DE/rand/2, and DE/current-to-rand/1. The dimensions of all functions are set to 30.

To ensure fair comparisons, all mutation strategies are combined with the binomial cross strategy, and all adopt the same fixed control parameters: mutation factor F = 0.5, crossover rate CR= 0.5. The population size (NP) of all variants is set to 100. The maximum function evaluations number is max_FES = 30000*D Each algorithm runs independently 30 times, and the average function value (mean) and standard deviation (Std.) of results of these 30 runs are listed in Table 2. To clearly see the performance of the algorithm in each test function, the best performing algorithm results are set in bold. For comparison purposes, the Wilcoxon signed-rank test for pairwise samples is conducted between DE/Reflection/1 and seven other variants. The symbols +, =, and −, respectively, indicate that performance of DE/Reflection/1 is significantly better, not significantly better or worse, or significantly worse than other compared algorithms according to the Wilcoxon signed-rank test at the α = 0.05 level. The three lines at the bottom represent the count results of +, =, and −.

5.4 Comparison of DE/Reflection/1 with other basic DE variants

We can observe from Table 2 that DE/Reflection/1 has the highest number of best results among all the variants, accounting for 32.1% of the test functions. DE/Alopex/1 follows with 28.6%. DE/rand/2 has the worst overall performance because it has the highest probability of achieving the worst result, accounting for 64.3% of the functions. DE/Reflection/1 mostly achieves the best result on composition functions, so it is more suitable for solving this type of function. DE/Alopex/1 mostly gets the best result on basic multimodal functions. DE/current-to-best/1 is more suitable for solving unimodal functions.

The bottom three lines of Table 2 represent the results of pairwise comparisons between DE/1Reflection/1 and other algorithms. DE/Reflection/1 is better at more functions than other mutation strategies, in addition to DE/Alopex/1 and DE/current-to-best/1. DE/Reflection/1 achieves similar performance to DE/Alopex/1 and DE/current-to-best/1 Table 3 shows more detailed results. From this we see that DE/Reflection/1 performs well for unimodal functions and only slightly worse than DE/best/1. DE/Alopex/1 performs best when calculating basic multimodal functions, and DE/Reflection/1 achieves similar performance to DE/current-to-best/1 and is only worse than DE/Alopex/1. DE/Reflection/1 performs best when solving composition functions. With an average ranking of 3.29, DE/Reflection/1was the second-best algorithm

In Table 4, the Wilcoxon test is applied to all functions. DE/Reflection/1 is significantly better than DE/rand/1, DE/best/2, and DE/rand/2. It, however, is not significantly better or worse than DE/Alopex/1, DE/best/1, DE/current-to-best/1, and DE/current-to-rand/1.

To compare the convergence speed of each mutation strategy, we choose a set of results from 30 independent runs to draw the convergence process curve. Figure 2 shows the convergence curve of DE/Reflection/1 and other DE variants on some functions from the benchmark set. From this we see that the convergence speed of DE/Reflection/1 is one of the fastest among all the mutation strategies in functions F1, F10, F24, and F25. In Fig. 2a, DE/Reflection/1 is seen to have good local search ability. In Fig. 2g and h, the reflection-variation strategy seems to effectively maintain the population diversity.

5.5 Performance test of MMRDE

5.6 Experimental settings

To compare the overall performance of the DE algorithm with multiple mutation strategies based on roulette wheel selection (MMRDE), we conducted direct and indirect comparisons for all functions on the benchmark set. The four DE variants SHADE, [12] CODE, [11] EPSDE, [10] and JADE [9] are used to perform direct performance comparisons with MMRDE. Their data come directly from the literature [12] . We then compare the performance of MMRDE, HSDE, JADE, and SHADE by our experiment. All the algorithms we used for comparisons were coded using Fortran 90. To establish a consistent test environment, we coded the JADE and HSDE algorithms in FORTRAN based on the literature [9, 13]. The SHADE code was translated from the original C+ + program (found in the CEC Shared DocumentsFootnote 1) to Fortran 90.

We did not specifically tune the parameters of other algorithms for our experiment. To fairly compare the performance of the algorithms, the population size of each algorithm was set to 100 for all dimensions. Each algorithm ran 30 times independently. The dimension of all 28 test functions in the benchmark set was set to 30. The search space was [− 100,100] for all dimensions. The maximum functions number was max_FES = 10000*D.

The average value (mean) and standard deviation (Std.) of 30 independent calculation results for each function are listed in Table 5 for indirect comparisons and in Table 6 for self-comparisons. To clearly see which algorithm performs best on each test function, the best outcomes among all algorithms are shown in boldface. For comparison, the Wilcoxon signed-rank test is conducted between the state-of-the-art DE variants and MMRDE in Table 6 using KEEL, [41] where +, =, and −, respectively, indicate that performance of our algorithm is significantly better, not significantly better or worse, or significantly worse than other compared state-of-the-art DE algorithms according to the Wilcoxon signed-rank test at the α = 0.05 level. The three lines at the bottom of Table 6 represent the count results of +, =, and −, and Table 7 shows more detailed results. At the bottom of Table 6, the average ranks and summation of relative errors between all algorithms are shown.

5.7 Comparison of MMRDE with other DE variants

Table 5 shows the experimental results of indirect comparison with other state-of-the-art DE variants when solving the 28 benchmark functions. The experimental results obtained by running each state-of-the-art DE algorithm 30 times on each function in the benchmark set are shown in Table 6. From the results in Tables 5 and 6, we can summarize the experimental results as follows.

-

1.

Unimodal Functions f1 ∼ f5: MMRDE performs better than HSDE and JADE, because MMRDE obtains significantly better results in more functions. MMRDE has similar performance to SHADE. All the competing algorithms can converge globally for functions f1 and f5. MMRDE obtains significantly better results than its competitors for f4. Based on the performance in functions f1 ∼ f5, we can conclude that MMRDE has a strong exploitation ability and achieves the performance level of SHADE in unimodal functions.

-

2.

Basic Multimodal Functions f6 ∼ f20: MMRDE performs better than its peers. MMRDE clearly is the best among all the algorithms for functions f7, f8, f12, f16,f18, and f20. This shows that the method of using a roulette wheel to choose a mutation strategy seems effective. Our proposed mutation strategy works well with rand/1 and current-to-rand/1. Overall, we see that MMRDE has a strong ability to converge globally.

-

3.

Composition Functions f21 ∼ f28: These hybrid composition functions are made up of several different types of functions and are difficult to optimize. Tables 5, 6, and 7 show that MMRDE achieves similar performance to SHADE and performs better than JADE and HSDE. Specifically, MMRDE obtains the best solution on functions 23, 25, and 26. This shows that MMRDE has a strong ability to solve composition functions.

-

4.

Table 8 shows the results of the application of Wilcoxon’s test to all functions. MMRDE is significantly better than JADE and HSDE, but it is not significantly better or worse than SHADE. Both the average ranking and summation of relative errors of MMRDE are the lowest, however, indicating that MMRDE performs better overall than its competitors.

To intuitively reflect the convergence speed of MMRDE relative to other algorithms, we choose a set of results from 30 independent runs to draw the convergence-process curve in eight representative test functions from the benchmark set. These curves are shown in Fig. 3. We see that SHADE has the fastest optimization speed overall. MMRDE obviously converges faster than HSDE. Figure 3c, e and f show that MMRDE seems to have a proper convergence speed to strike a good balance between exploration and exploitation.

5.8 Evolution of mutation strategies

It is interesting to find out which mutation strategies have a higher success rate of generating excellent offspring. A mutation strategy with higher success rates can be allocated to more individuals using the roulette wheel selection method. We select eight representative functions from the benchmark set to represent the success rates of each mutation strategy in different DE stages of one problem. Figure 4 shows the success rate of each mutation strategy and the changes in error value during the evolution.

From Fig. 4, we see that the reflection-based mutation strategy and current-to-rand/1 have higher success rates than mutation strategy rand/1, and they usually dominate the evolutionary process. Mutation strategy rand/1 has less impact on evolution. All strategies have a high success rate in early evolution; however, as evolution proceeds, the success rates of the three mutation strategies vary in different functions. When solving simple problems (such as F1), the success rate of each mutation strategy is high and there is little change in the whole evolution process. But, when a complex function is solved, the success rate of each mutation strategy usually fluctuates greatly. It is worth noting that we set a lower limit on the success rate so that each mutation strategy will not become extinct in some evolutionary stages that were unfavorable to them, and they have the opportunity of dominant evolution at any stage of evolution. Figure 4d, e, and f clearly show the effect of the lower limit of success rate on evolution. The use of roulette options for the three mutation strategies seems to mitigate the process of evolutionary stagnation.

In general, the three strategies used in MMRDE work well, and the algorithm achieves a better balance between exploration and exploitation.

6 Conclusions

In this paper we propose a new mutation operation, called reflection-based mutation, inspired by the reflection operations in the Nelder-Mead method. For each individual to be mutated, we randomly select four individuals from the current population. We then calculate the weighted center of three individuals with better fitness based on their object function values, use the difference vector of the best individual and the worst individual as the perturbation vector, and use the center we have calculated as the target individual, thus completing a mutation operation. We compare DE/Reflection/1 and other mutation strategies in an experiment and prove that the proposed mutation strategy has good exploration and exploitation capability.

Although reflection-based mutation can better balance exploration and exploitation than other compared mutation strategies, it is still a greedy mutation strategy that is prone to premature convergence when solving complex multimodal optimization problems. We add two basic strategies for a population to generate perturbation vectors to maintain population diversity and increase robustness. We use a roulette wheel selection strategy to arrange mutation strategies based on their success rate for each individual. This DE variant, with a combination of multiple mutation strategies based on roulette wheel selection, is named MMRDE. We use a benchmark set, including 28 functions for real-parameter optimization recommended by the Institute of Electrical and Electronics Engineers CEC2013 special session, to test the performance of MMRDE against some improved DE variants. Experimental results indicate that the proposed algorithm can balance between exploration and exploitation. The algorithm shows the effectiveness of three mutation strategies in cooperative work. The proposed algorithm shows it can guide the search for a global optimal solution with fast convergence compared with other improved DE variants.

References

Storn R, Price K (1997) Differential evolution – A simple and efficient heuristic for global optimization over continuous spaces. J Glob Optim 11(4):341–359

Chang CF et al (2007) Robust searching hybrid differential evolution method for optimal reactive power planning in large-scale distribution systems. Electr Power Syst Res 77(5-6):430–437

Dragoi EN et al (2013) Optimization methodology based on neural networks and self-adaptive differential evolution algorithm applied to an aerobic fermentation process. Appl Soft Comput 13(1):222–238

Iorio AW, Li X (2004) Solving rotated multi-objective optimization problems using differential evolution. AI 2004: Advances in Artificial Intelligence 3339:861–872

Das S, Konar A, Chakraborty U K (2005) Two improved differential evolution schemes for faster global search. In: Genetic And Evolutionary Computation Conference, pp 991– 998

Chakraborty UK, Das S, Konar A (2006) Differential Evolution with Local Neighborhood. In: 2006 IEEE International Conference on Evolutionary Computation 2042–2049

Qin AK, Suganthan PN (2005) Self-adaptive differential evolution algorithm for numerical optimization. In: 2005 IEEE Congress on Evolutionary Computation 2:1785–1791

Brest J et al (2006) Self-adapting control parameters in differential evolution: A comparative study on numerical benchmark problems. IEEE Trans Evol Comput 10(5):646–657

Zhang J, Sanderson A C (2009) JADE: adaptive differential evolution with optional external archive. IEEE Trans Evol Comput 13(5):945–958

Mallipeddi R et al (2011) Differential evolution algorithm with ensemble of parameters and mutation strategies. Appl Soft Comput 11(2):1679–1696

Wang Y, Cai Z, Zhang Q (2011) Differential evolution with composite trial vector generation strategies and control parameters. IEEE Trans Evol Comput 15(1):55–66

Tanabe R, Fukunaga A (2013) Success-history based parameter adaptation for differential evolution. In: 2013 IEEE Congress on Evolutionary Computation 71–78

Yi W et al (2015) A new differential evolution algorithm with a hybrid mutation operator and self-adapting control parameters for global optimization problems. Appl Intell 42(4):642–660

Leon M, Xiong N (2016) Adapting differential evolution algorithms for continuous optimization via greedy adjustment of control parameters. J Artificial Intell Soft Comput Res 6(2):103–118

Wu G et al (2016) Differential evolution with multi-population based ensemble of mutation strategies. Inf Sci 329(C):329– 345

Leon M, Xiong N (2017) Alopex-based mutation strategy in differential evolution. In: 2017 IEEE Congress on Evolutionary Computation 1978–1984

Ronkkonen J, Kukkonen S, Price KV (2005) Real-parameter optimization with differential evolution. In: 2005 IEEE Congress on Evolutionary Computation, vol 1, pp 506–513

Price K, Storn RM, Lampinen JA (2005) Differential evolution: a practical approach to global optimization (natural computing series). Springer-Verlag, New York, pp 1–24

Jia D, Zheng G, Khurram Khan M (2011) An effective memetic differential evolution algorithm based on chaotic local search. Inf Sci 181(15):3175–3187

Ali MZ et al (2017) An Adaptive Multipopulation Differential Evolution with Dynamic Population Reduction. IEEE Trans Cybern 47(7):2768–2779

Lampinen J (2002) A Fuzzy Adaptive Differential Evolution Algorithm. Soft Comput 9(5):448–462

Ghosh A et al (2011) An improved differential evolution algorithm with fitness-based adaptation of the control parameters. Inf Sci 181(18):3749–3765

Mezura-Montes E, Velazquez-Reyes J, Coello Coello CA (2006) A comparative study of differential evolution variants for global optimization. In: 8th Annual Genetic and Evolutionary Computation Conference 485–492

Dorronsoro B, Bouvry P (2011) Improving classical and decentralized differential evolution with new mutation operator and population topologies. IEEE Trans Evol Comput 15(1):67–98

Islam SM et al (2012) An adaptive differential evolution algorithm with novel mutation and crossover strategies for global numerical optimization. IEEE Trans Syst Man Cybern B Cybern 42(2):482–500

Mohamed AW (2015) An improved differential evolution algorithm with triangular mutation for global numerical optimization. Comput Ind Eng 85(C):359–375

Wang S et al (2017) Self-adaptive differential evolution algorithm with improved mutation strategy. Soft Computing, pp 1–15. Springer Berlin Heidelberg. https://doi.org/10.1007/s00500-017-2588-5. Online ISSN: 1433-7479

Piotrowski AP (2013) Adaptive memetic differential evolution with global and local neighborhood-based mutation operators. Inf Sci 241(10):164–194

Gong W et al (2011) Adaptive strategy selection in differential evolution for numerical optimization: An empirical study. Inf Sci 181(24):5364–5386

Tasoulis DK, Plagianakos VP, Vrahatis MN (2005) Clustering in Evolutionary Algorithms to efficiently compute simultaneously local and global minima. In: 2005 IEEE Congress on Evolutionary Computation, vol 2, pp 1847–1854

Epitropakis MG, Plagianakos VP, Vrahatis MN (2008) Balancing the exploration and exploitation capabilities of the differential evolution algorithm. In: 2008 IEEE Congress on Evolutionary Computation 2686–2693

Ali MZ, Awad NH, Suganthan PN (2015) Multi-population differential evolution with balanced ensemble of mutation strategies for large-scale global optimization. Appl Soft Comput 33(C):304–327

Piotrowski AP, Napiorkowski JJ, Kiczko A (2012) Differential Evolution algorithm with Separated Groups for multi-dimensional optimization problems. Eur J Oper Res 216(1):33–46

Qin AK, Huang VL, Suganthan PN (2009) Differential evolution algorithm with strategy adaptation for global numerical optimization. IEEE Trans Evol Comput 13(2):398–417

Das S et al (2009) Differential evolution using a neighborhood-based mutation operator. IEEE Trans Evol Comput 13(3):526–553

Pencheva T, Atanassov K, Shannon A (2009) Modelling of a roulette wheel selection operator in genetic algorithms using generalized nets. Int J Bio 13(4):101–105

Lipowski A, Lipowska D (2012) Roulette-wheel selection via stochastic acceptance. Physica A: Stat Mech Appl 391(5):2193–2196

Ho-Huu V et al (2018) An improved differential evolution based on roulette wheel selection for shape and size optimization of truss structures with frequency constraints. Neural Comput Applic 29(1):167–185

Peng F et al (2009) Multi-start JADE with knowledge Transfer for numerical optimization. In: 2009 IEEE Congress on Evolutionary Computation 1889–1895

Liang J et al (2013) Problem definitions and evaluation criteria for the CEC 2013 Special Session on Real-Parameter Optimization

Alcala-Fdez J et al (2009) KEEL: A software tool to assess evolutionary algorithms for data mining problems. Soft Comput 13(3):307–318

Acknowledgments

We thank Miguel Leon (from the School of Innovation, Design and Engineering, Malardalen University) for his great help with this study. We greatly appreciate the reviewers for their thoughtful and encouraging comments on our manuscript, which we all think is helpful for improving the quality of our paper. This study was supported by program 2013KCT-15 of the Shanxi Provincial Key Innovative Research Team and the National Natural Science Foundation of China (51409206). We thank LetPub (www.letpub.com) for its linguistic assistance during the preparation of this manuscript.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Qian, W., Chai, J., Xu, Z. et al. Differential evolution algorithm with multiple mutation strategies based on roulette wheel selection. Appl Intell 48, 3612–3629 (2018). https://doi.org/10.1007/s10489-018-1153-y

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10489-018-1153-y