Abstract

Targeting systemic risk, we propose a two-stage analysis of a large collection of stock markets by considering their interconnections. First, we characterize the joint dynamics of stock returns using a multivariate GARCH model in the presence of regime changes. The model detects three volatility regimes arising from two unknown but common endogenous breaks. We compute filtered returns by normalizing them using the dynamic GARCH volatility. Second, we build a Gaussian, signed, weighted and undirected worldwide financial network from filtered stock returns that evolves across regimes. The best network is built from the partial correlation matrix of filtered stock returns over each regime using regularisation and the minimum Extended Bayesian Information Criterion. To gain insights into the resilience of the financial network and its systemic risk over time, we then compute relevant nodal centrality measures, including the clustering coefficient, over each regime. Thus, we characterize the ever-changing network topology and structure by detecting group-like and community-like patterns (e.g., clustering and community detection, network cohesion). Under the resilience framework and depending on the studied regime, we analyse the propensity of a shock to propagate across the network thanks to positive weights, and the network’s ability to mitigate shocks thanks to negative weights. The balance between spreading and inhibiting node influences drives the network’s frailty and resilience to shocks. Hence, the network exhibits a high level of systemic risk when its connectivity is large and most edge weights are significantly positive (i.e., strong and multiple conditional dependencies of world stock markets). This finding is highly relevant to policymakers because systemic risk/financial frailty is potentially costly (i.e., loss) while resilience is rewarding (i.e., gain).


1 Introduction

In 2007, the subprime mortgage crisis and its spread to global financial markets shed light on systemic risk. The various interconnections across asset classes and marketplaces highlighted the need to account for the topology of financial markets as a global network and the strength of existing linkages (Hasse, 2022; So et al., 2022). Such patterns drive potential contagion across financial markets and financial stability (Jackson & Pernoud, 2021). In particular, higher connectivity implies a higher risk of shock transmission and, therefore, a higher risk of sharing failure (Elliott et al., 2021), which threatens the financial network's resilience.

We aim to study the network of the world stock markets over time. Specifically, we consider the daily and weekly returns of a qualified collection of relevant stock indices worldwide in the period 2000–2022. Our analysis handles the time-varying nature of financial markets along with their interconnections. Therefore, we identify common worldwide stock market regimes.

It is well-known that financial data such as stock returns exhibit time-varying variance (also known as volatility clustering) and regime changes (Connolly et al., 2007; Gatfaoui, 2013; Gatfaoui & de Peretti, 2019; Hafner et al., 2022; Kenourgios et al., 2011; Malik et al., 2005; Strohsal & Weber, 2015). In this light, the best representation that can capture their underlying data-generating process consists of Generalized Autoregressive Conditionally Heteroscedastic, also called GARCH, models (Bollerslev, 1986; Engle, 2002a, 2002b). In particular, GARCH representations handling volatility clustering and multiple change point detections are required to capture the structural changes in the data-generating process of financial returns (Cho & Fryzlewicz, 2015; Cho & Korkas, 2022).

In this paper, we deal with a GARCH-based framework for managing and measuring systemic risk. There are several methodological approaches to defining systemic risk. One of the most popular systemic risk measures is the CoVaR, introduced by Adrian and Brunnermeier (2016). In this framework, the authors proposed the effect of the distress of one institution on the others as a systemic risk measure. The grounding idea is the presence of interconnections among institutions, so that something occurring somewhere might be able to propagate everywhere. CoVaR is a measure that implicitly states the presence of a network structure among the institutions without formalizing it through a mathematical model. Along the same lines, Braverman and Minca (2018), Cont and Schaaning (2019) and Cerqueti et al. (2021a) proposed a market-impact model for measuring systemic risk (i.e., they introduce the impact of a collapse of asset prices on the market value). The idea that the links between two institutions could be driven by the influence of a negative shock occurring on a node to another one is the basis of Cerqueti et al. (2022b). In the quoted paper, the authors provided a network structure among companies on the basis of the financial interflows, hence leading to an influence-based analysis of systemic risk.

In the present case, we use a two-stage approach as follows.

In the first stage, we use a novel methodology developed by Cho and Korkas (2022). Such a method is based on a multivariate GARCH representation with multiple change points to characterize stock market returns. Such an approach allows for both identifying common volatility regimes for world stock markets and describing the time-varying volatility and correlation of those stock markets. The GARCH-inferred individual volatilities are then used to normalize stock market returns. In the second stage, a robust approach is used to build a regime-specific network of filtered stock returns. After having identified change points in regional index returns, we estimate a Dynamic Conditional Correlation (DCC) GARCH model over each related index returns regime and for each bundle of regional stock indices as well as all regional indices (Engle, 2002a, 2002b).

Using the obtained networks, we compute the regime-specific centrality measures and the nodal weighted clustering coefficient to gain insights into the resilience of the network and the systemic risk of the overall financial framework.

As regards change point detection, the modelling scheme assumes that the studied sample of time series exhibits the same change points and, therefore, the same regimes, which conforms to the regional analysis of stock prices daily, but also their study at the world scale weekly. Based on cumulative sum-type statistics (CUSUM), the authors develop a methodology that identifies change points and captures the multivariate GARCH patterns across the identified regimes. Specifically, the GARCH parameters are allowed to evolve across regimes, and the multiple change points can be well-identified even when they are close to each other. Under such a setting, the dependence structure within and between time series is also handled, and the high-dimensionality pattern is well-managed. The two latter features are strong added values and enhancements compared to classic change point tests and classic multivariate GARCH representations. The previous arguments support choosing their methodology in the prospect of a financial network analysis, which may require considering many time series.

In the present paper, we therefore adopt a robust and genuine approach which handles several worldwide stock markets at the same time and for which joint structural breaks are investigated. The added value of our approach is twofold since the multivariate GARCH setting:

(1) Handles the joint dynamics of several stock markets (i.e., multivariate GARCH process of dimension 20),

(2) With endogenous structural breaks (i.e., multiple breakpoints that are unknown but common to all stock marketplaces).

As a result, our approach:

- Is more powerful than Gatfaoui (2013) thanks to (1) and (2), and Gatfaoui and de Peretti (2019) thanks to (1),

- And also improves Gatfaoui and de Peretti (2019) thanks to (2) by identifying market regimes that are specific to the world stock market.

Moreover, we consider the instantaneous and significant non-zero correlations between the filtered log-returns across detected global market regimes. Finally, we recall that we target a characterization of systemic risk in world stock markets. Current high-frequency trading practices and the high-speed flow of information over the internet support the study of instantaneous pairwise correlations across stock market returns. In this light, we are looking at strong links and influences between nodes (i.e., stock markets), so that small edges are not of interest (i.e., weakly correlated or independent stock markets).

The considered approach allows for eliciting time-varying financial networks and characterizes their properties (e.g., topology, the strength of linkages). We can study the propensity of the world financial stock markets to absorb external shocks based on the nature, density and strength of their connections.

Indeed, it is well acknowledged now that financial interconnectedness is a source of instability and systemic risk (Renn et al., 2022) with an unfavourable impact on economic activity (Simaan et al., 2020). According to Simaan et al. (2020), instability leads to a disruption in the financial system's functioning, coupled with harmful spillover effects across the whole network. Such a pattern requires modelling financial markets as a network where the failure results from an instability in the dynamical system (Markose et al., 2021). In particular, the structure and topology of the financial network determine the risk of contagion (Hübsch & Walther, 2017) and, therefore, the resilience of the financial network (Bastidon & Parent, 2022).

Under such a setting, systemic risk is often measured by considering a node's centrality and the density and connectivity of its neighbours within the financial network (Zhu et al., 2018). For example, clustering coefficients serve as good descriptors of a network's systemic risk (Cerqueti et al., 2021a, 2021b).

Importantly, Cerqueti et al. (2022b) elaborate on the systemic risk as the resilience of the underlying network—as we do in the present paper. As a further analogy with the present study, Cerqueti et al. (2022b) can be inserted in the strand of topological-based resilience models, where the occurrence of a microscopic shock in the system gives a variation in the community structure of the network (see also Dong et al., 2018) or the modification of the nodal centralities (see e.g., Ghanbari et al., 2018 and Cerqueti et al., 2021b, 2022a).

The rest of the paper is organized as follows. Section 2 outlines the methodological devices used for the analysis. Section 3 presents the statement and the results of the empirical exercise. The last section offers some concluding remarks.

2 Methodology

2.1 Multivariate GARCH with change points

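Consider N stock market indices. We follow Cho and Korkas (2022) and design a time-varying multivariate GARCH(p,q) model as follows:

\[{r}_{i,t}=\sqrt{{h}_{i,t}}\,{\varepsilon }_{i,t}\quad (1)\]

where

\[{h}_{i,t}={\omega }_{i}\left(t\right)+\sum_{j=1}^{p}{\alpha }_{i,j}\left(t\right){r}_{i,t-j}^{2}+\sum_{k=1}^{q}{\beta }_{i,k}\left(t\right){h}_{i,t-k}\quad (2)\]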

for any time t = 1,…,T, any stock index i = 1,…,N and any corresponding index return \({r}_{i,t}\). In the above equation of conditional variance, p is the ARCH lag, q is the GARCH lag, \({\omega }_{i}\left(t\right)>0\) is the unconditional variance while \({\alpha }_{i,j}\left(t\right)\) and \({\beta }_{i,k}\left(t\right)\) are the positive ARCH and GARCH coefficients.
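As a minimal sketch of the conditional variance recursion in the GARCH(1,1) case, with parameters that stay constant within a regime and switch at given break dates, one might write the following. The function name `piecewise_garch_variance`, the 0-based break convention and the initialisation at the sample variance are illustrative assumptions and are not taken from Cho and Korkas (2022).

```python
import numpy as np

def piecewise_garch_variance(r, breaks, params):
    """Conditional variance of one return series under a regime-specific GARCH(1,1).

    r      : 1-D array of returns
    breaks : sorted 0-based indices at which a new regime starts (hypothetical convention)
    params : one (omega, alpha, beta) tuple per regime, i.e. len(breaks) + 1 tuples
    """
    r = np.asarray(r, dtype=float)
    T = r.size
    starts = [0] + list(breaks)
    ends = list(breaks) + [T]
    h = np.empty(T)
    for (omega, alpha, beta), s, e in zip(params, starts, ends):
        for t in range(s, e):
            if t == 0:
                # Assumption: start the recursion at the sample variance.
                h[t] = np.var(r)
            else:
                # h_t = omega + alpha * r_{t-1}^2 + beta * h_{t-1},
                # using the parameters of the regime that contains t.
                h[t] = omega + alpha * r[t - 1] ** 2 + beta * h[t - 1]
    return h
```

The recursion carries the last variance of one regime into the first step of the next, so only the parameters change at a break date.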

The vector of independent innovations \({\varepsilon }_{t}={\left({\varepsilon }_{1,t},...,{\varepsilon }_{N,t}\right)}^{\prime}\) has a zero mean \({\mathbb{E}}\left({\varepsilon }_{t}\right)={0}_{N}\) and a finite and symmetric covariance matrix \(var\left({\varepsilon }_{t}\right)={\Sigma }_{\varepsilon }\left(t\right)={\left[{\sigma }_{i,m}\left(t\right)\right]}_{i,m=1}^{N}\) with \({\sigma }_{i,i}\left(t\right)=1\) and \({\sigma }_{i,m}\left(t\right)={\sigma }_{m,i}\left(t\right)\) for all \(1\le i,m\le N\) and t. Assume that B change points are detected, with corresponding change point dates (\({\eta }_{1}<...<{\eta }_{B}\)) and with the conventions \({\eta }_{0}=1\) and \({\eta }_{B+1}=T\). The set of studied time series then exhibits \((B+1)\) regimes bounded by the identified change points. For example, the first regime runs over the period [\({\eta }_{0}\),\({\eta }_{1}\)], the second regime runs over the period [\({\eta }_{1}+1\),\({\eta }_{2}\)] while the (B + 1)-th regime runs over the period [\({\eta }_{B}+1\),\({\eta }_{B+1}\)]. During the two-stage estimation process, a double CUSUM binary segmentation algorithm is performed to detect and locate change points, and then a bootstrap algorithm is applied to confirm the appropriate threshold used for the test statistic in the previous algorithm. Moreover, the correlation structure between index returns is also considered during the estimation process. Thus, the multivariate GARCH model is updated over each regime, and its parameters are allowed to change across regimes so that we get a piecewise stationary multivariate GARCH model. The corresponding set of parameters is regime-specific (i.e., constant over each regime).
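To illustrate the idea behind CUSUM-type statistics, the sketch below locates a single change in the mean of one series (for instance, squared returns as a rough proxy for variance). It is a deliberately simplified, univariate stand-in: it is not the double CUSUM binary segmentation of Cho and Korkas (2022), it does not handle multiple or common change points, and it omits the bootstrap threshold. The function name `cusum_change_point` is hypothetical.

```python
import numpy as np

def cusum_change_point(x):
    """Return (b, stat): the split size b maximising a weighted CUSUM statistic.

    b is the number of observations in the left segment, i.e. the 0-based
    index of the first observation of the right segment.
    """
    x = np.asarray(x, dtype=float)
    T = x.size
    b = np.arange(1, T)                      # candidate left-segment sizes 1..T-1
    csum = np.cumsum(x)
    left_mean = csum[:-1] / b
    right_mean = (csum[-1] - csum[:-1]) / (T - b)
    # Weighted absolute difference of segment means.
    stat = np.sqrt(b * (T - b) / T) * np.abs(left_mean - right_mean)
    k = int(np.argmax(stat))
    return int(b[k]), float(stat[k])
```

In practice the maximal statistic would be compared with a threshold before a change point is declared, which is the role played by the bootstrap step mentioned above.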

As regards DCC GARCH(1,1) modelling, letting the conditional covariance matrix of returns be \({E}_{t-1}\left[{r}_{t} {r}_{t}{^\prime}\right]={H}_{t}={D}_{t} {R}_{t} {D}_{t}\), Engle (2002a, 2002b) introduced the following specifications for \(1\le i\le N\):

where \({\mathcal{F}}_{t-1}\) is the available information set at time t − 1, \({r}_{t}=\left({r}_{1,t},...,{r}_{N,t}\right)^{\prime}\) is the vector of returns, \({E}_{t-1}\left[.\right]\) is the expectation conditional on past information up to time t − 1, \(\mathcal{N}\left(.,.\right)\) is the Gaussian probability distribution with given first and second moments, \(\circ \) is the Hadamard product of two matrices, S is the unconditional correlation/covariance matrix of \({\varepsilon }_{t}\) (i.e., the standardized returns), \({R}_{t}\) is the time-varying correlation matrix. Note that Q is an \(N\times N\) symmetric positive semi-definite matrix approximation of covariances, A, B and \(\left(1 1{^\prime}-A-B\right)\) are \(N\times N\) positive semi-definite matrices, \({E}_{t-1}\left[{\varepsilon }_{t} {\varepsilon }_{t}{^\prime}\right]={D}_{t}^{-1} {H}_{t} {D}_{t}^{-1}={R}_{t}={\left({\rho }_{ijt}\right)}_{1\le i,j\le N}\) with \({\rho }_{ijt}\) the pairwise conditional correlation between the returns of assets i and j at time t so that \({H}_{t}={\left({\rho }_{ijt} \sqrt{{h}_{iit} {h}_{jjt}}\right)}_{1\le i,j\le N}\). Moreover, Eq. (4) indicates that the respective conditional variances of index returns follow univariate GARCH(1,1) processes with \({\omega }_{i}>0\), \({\alpha }_{1i}\ge 0\), \({\beta }_{1i}\ge 0\) and \({\alpha }_{1i}+{\beta }_{1i}<1\) for \(1\le i\le N\). When the number of observations is large enough over a given regime (e.g., at least 100), the DCC GARCH(1,1) is estimated by maximizing the log-likelihood implied by Eq. (3). In contrast, when the number of observations is quite limited, we estimate the DCC GARCH(1,1) model by considering its Bayesian version (Fioruci et al., 2014) and applying Markov Chain Monte Carlo (MCMC) simulations with 10,000 draws. In Sect. 3.1 we present the dataset employed for our empirical experiment. As we will see, we identify three regimes. According to the arguments above, regimes 1 and 2 require a log-likelihood maximization procedure, while regime 3 requires a Bayesian estimation of the DCC GARCH(1,1) model.

2.2 Network construction

Targeting a study of the worldwide financial market, we set our focus on weekly data and their common regime changes (as a joint dynamic pattern). The GARCH(1,1) representation serves to obtain the filtered stock index returns that will be scrutinized as a worldwide financial network. According to (1), we consider the standardized returns as follows:

All stock index returns are assumed to form a global financial network whose evolution is studied across each regime. The network is built based on the Pearson correlation matrix of standardized residuals from the multivariate DCC GARCH output.
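The filtering step and the regime-wise Pearson correlation can be sketched as follows, assuming the conditional variances have already been estimated and are passed in as an array. The function name `regime_correlations` and the 0-based break convention are illustrative assumptions.

```python
import numpy as np

def regime_correlations(returns, cond_var, breaks):
    """Pearson correlation of standardized returns within each regime.

    returns, cond_var : (T, N) arrays of returns and their conditional variances
    breaks            : sorted 0-based row indices at which a new regime starts
    """
    # Standardized (filtered) returns: each return divided by its conditional volatility.
    z = np.asarray(returns, dtype=float) / np.sqrt(np.asarray(cond_var, dtype=float))
    bounds = [0] + list(breaks) + [z.shape[0]]
    # One (N, N) correlation matrix per regime; columns are the variables.
    return [np.corrcoef(z[s:e], rowvar=False) for s, e in zip(bounds[:-1], bounds[1:])]
```

Each returned matrix then serves as the starting point for the network of the corresponding regime.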

The correlation matrix is treated to ensure that it is positive semi-definite (e.g., the precision matrix is used to build the network). The eigenvalues and eigenvectors are computed to keep only orthogonal series (dimension reduction of redundant series) and to handle small eigenvalues that make the inversion unstable, e.g., through a regularisation process, a sparse precision matrix or Gaussian graphical models (Pourahmadi, 2011).

To obtain a robust correlation matrix, which is necessary to compute the network's adjacency matrix and its weights, we apply an optimal estimation process over each period (Foygel & Drton, 2010; Guillemot et al., 2013; van Borkulo et al., 2014). The estimation process relies on five steps as follows:

(1) Compute the covariance matrix of standardized residuals.

(2) Compute the related precision matrix (as its inverse; Whittaker, 1990).

(3) Regularise the precision matrix by keeping only the eigenvectors with the most significant eigenvalues through the thresholding process of Jankova and van de Geer (2018). We get an orthogonalized precision matrix since the absolute elements of the original precision matrix that fall below the threshold are set to zero. The threshold is ln(N(N − 1)/2)/√T, where T is the sample size and N is the number of nodes.

(4) Then, convert the regularised precision matrix to a partial correlation matrix (i.e., pairwise correlations conditional on all other network variables, Baba et al., 2004; Ghanbari et al., 2019).

(5) Use the obtained partial correlation matrix to infer the adjacency matrix and its weights to build the related network of index returns.
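The five steps above can be sketched in a few lines of NumPy. This is a simplified illustration under stated assumptions: the hard threshold is applied to off-diagonal precision entries only (the diagonal is kept so that the conversion in step 4 stays well defined), and the function names are hypothetical.

```python
import numpy as np

def precision_to_partial_correlation(prec):
    """Step 4: rho_ij = -theta_ij / sqrt(theta_ii * theta_jj), with unit diagonal."""
    d = np.sqrt(np.diag(prec))
    pcor = -prec / np.outer(d, d)
    np.fill_diagonal(pcor, 1.0)
    return pcor

def partial_correlation_network(z):
    """Return (partial correlation matrix, weighted adjacency matrix) from (T, N) residuals."""
    z = np.asarray(z, dtype=float)
    T, N = z.shape
    cov = np.cov(z, rowvar=False)                  # step 1: covariance matrix
    prec = np.linalg.inv(cov)                      # step 2: precision matrix
    prec = (prec + prec.T) / 2.0                   # remove numerical asymmetry
    thr = np.log(N * (N - 1) / 2) / np.sqrt(T)     # step 3: threshold ln(N(N-1)/2)/sqrt(T)
    off_diag = ~np.eye(N, dtype=bool)
    prec_reg = prec.copy()
    # Assumption: only off-diagonal entries are thresholded.
    prec_reg[off_diag & (np.abs(prec) < thr)] = 0.0
    pcor = precision_to_partial_correlation(prec_reg)   # step 4
    adjacency = pcor.copy()                        # step 5: signed weights, no self-edges
    np.fill_diagonal(adjacency, 0.0)
    return pcor, adjacency
```

A zero entry in the returned adjacency matrix means that the two nodes are not connected; non-zero entries are the signed edge weights.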

Within the estimation process, edges are considered significant when their weights are non-zero. Only significant weights are considered in the computation of network attributes and related centrality measures. The rationale for such a criterion is straightforward. Given that we target financial network resilience analysis, the financial network exhibits a substantial risk of failure when nodes are strongly and positively linked. Indeed, such nodes transmit shocks to each other more easily, and with a potentially higher magnitude. Thus, financial networks exhibit higher positive weights and stronger linkages during crisis periods, which worsens the global network's frailty. Such features contribute to describing a greater fragility of the financial network.

Moreover, the above-mentioned estimation process is further optimized as follows:

(1) Given that several different thresholds can yield various corresponding partial correlation matrices (e.g., several different network structures), all possible resulting networks are obtained using the Least Absolute Shrinkage and Selection Operator (LASSO) of Tibshirani (1996). However, each obtained network still depends on a fine-tuning parameter lambda that serves as a penalization parameter disentangling useful/significant network parameters from useless/nonsignificant parameters (Opgen-Rhein & Strimmer, 2007; Schäfer & Strimmer, 2005). Hence, several resulting networks can be built as a function of such a fine-tuning parameter (Friedman et al., 2008). Such a setting raises the question of how to choose an appropriate estimate for the fine-tuning parameter lambda.

(2) First, a wide range of lambda values is considered, and the corresponding networks are built. Then, a comparison between the obtained networks is performed, and the best network representation (resulting from the most appropriate partial correlation matrix) is selected by minimizing the Extended Bayesian Information Criterion (EBIC) of Chen and Chen (2012). The minimum EBIC also yields the optimal shrinkage parameter (i.e., the best estimate of the fine-tuning/penalization parameter).

By using filtered stock index returns, we implement the analysis of Gaussian weighted and undirected networks that are regime-specific. Each network relies on the correlation between stock market places (i.e., the correlation matrix of filtered returns describes a Markov random field), such correlation matrix being used to build the adjacency matrix of each network. The values of the correlation elements are used as edges’ weights, and thus:

- (Conditionally) independent nodes, identified as having a zero pairwise correlation, are not connected in the network;

- Only (conditionally) dependent nodes are connected in the network;

- Connected nodes that are positively correlated have a positive weight, while connected nodes that are negatively correlated have a negative weight.

2.3 Centrality measures and clustering coefficient

We describe the network by computing key centrality measures and the clustering coefficient (Mason & Watts, 2012; Ward et al., 2011). Indeed, we can use them to gain insights into the resilience of the considered financial networks.

According to the considered model, we deal with weighted undirected networks with the set of nodes \(V=\{1, \dots , N\}\) and with weights collected in an \(N\)-square weighted adjacency matrix \(W=({w}_{ij}:i,j\in V)\).

We compute centrality measures such as strength, betweenness and closeness as first representatives of network patterns. Strength centrality (Strength) sums the weights of all incident edges of node j (i.e., edges for which node j is an endpoint). Then, to account for the node j’s participation in the shortest paths within the network, betweenness centrality (Betweenness) is computed for any node j as:

where \({n}_{ik}\left(j\right)\) is the number of shortest paths between nodes i and k that pass through j as an intermediate node, \({n}_{ik}\) is the number of all shortest paths between/from i and/to k. When betweenness is high, more information and shocks flow through this node (i.e., a higher tendency to transmit shocks on a "local" basis).
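As an illustration, betweenness can be computed by counting shortest paths with Dijkstra's algorithm and summing the ratios \({n}_{ik}\left(j\right)/{n}_{ik}\) over unordered pairs (i, k). The sketch assumes that the length of an edge is the inverse of its absolute weight, which is a common convention but is not stated in the text; the function names are hypothetical.

```python
import heapq
import math

TOL = 1e-12  # tolerance used to compare path lengths

def shortest_paths(adj, s):
    """Dijkstra from s. Returns (dist, count): count[v] = number of shortest s-v paths."""
    n = len(adj)
    dist, count, done = [math.inf] * n, [0] * n, [False] * n
    dist[s], count[s] = 0.0, 1
    heap = [(0.0, s)]
    while heap:
        d, u = heapq.heappop(heap)
        if done[u]:
            continue
        done[u] = True
        for v, length in adj[u]:
            nd = d + length
            if nd < dist[v] - TOL:            # strictly shorter path found
                dist[v], count[v] = nd, count[u]
                heapq.heappush(heap, (nd, v))
            elif abs(nd - dist[v]) <= TOL:    # another path of equal length
                count[v] += count[u]
    return dist, count

def betweenness(weights):
    """Betweenness of each node of an undirected weighted network (list of lists)."""
    n = len(weights)
    # Assumption: edge length = 1 / |weight|, so strong links are short.
    adj = [[(k, 1.0 / abs(weights[i][k])) for k in range(n)
            if k != i and weights[i][k] != 0] for i in range(n)]
    sp = [shortest_paths(adj, s) for s in range(n)]
    b = [0.0] * n
    for i in range(n):
        for k in range(i + 1, n):
            d_ik, n_ik = sp[i][0][k], sp[i][1][k]
            if n_ik == 0:
                continue                       # i and k are not connected
            for j in range(n):
                if j == i or j == k:
                    continue
                # j lies on a shortest i-k path iff the two legs add up to d_ik.
                if abs(sp[i][0][j] + sp[j][0][k] - d_ik) <= TOL:
                    b[j] += sp[i][1][j] * sp[j][1][k] / n_ik
    return b
```

On a three-node chain the middle node receives a betweenness of 1 and the end nodes 0, since the only shortest path between the ends passes through the middle.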

The closeness centrality (Closeness) is computed for any node j as:

where \(dist\left(i,j\right)\) is the distance or length of the shortest path between nodes i and j. Closeness is the inverse of the average distance of node j to all the other nodes of the network. The higher the closeness, the more central to the network graph node j is.
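A compact way to obtain closeness as the inverse of the average shortest-path distance is an all-pairs shortest-path pass (Floyd–Warshall), sketched below. As before, taking the edge length as the inverse of the absolute weight is an assumption, and the function name is hypothetical.

```python
import numpy as np

def closeness(weights):
    """Closeness of each node: (N - 1) divided by the sum of its shortest-path distances."""
    w = np.abs(np.asarray(weights, dtype=float))
    n = w.shape[0]
    dist = np.full((n, n), np.inf)
    mask = w > 0
    dist[mask] = 1.0 / w[mask]          # assumption: edge length = 1 / |weight|
    np.fill_diagonal(dist, 0.0)
    # Floyd-Warshall: allow node k as an intermediate stop, one k at a time.
    for k in range(n):
        dist = np.minimum(dist, dist[:, [k]] + dist[[k], :])
    total = dist.sum(axis=1)            # the diagonal contributes zero
    return (n - 1) / total              # unreachable nodes give total = inf, closeness 0
```

A node that cannot reach some other node gets a closeness of zero under this convention.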

As regards the clustering coefficient, it captures network connectivity and illustrates the propensity of neighbour nodes to connect around a given node (i.e., the density of interconnections within the neighbourhood of a given node). Hence, the value of the clustering coefficient illustrates the propensity for a shock to diffuse over the (financial) network and, therefore, its potential vulnerability to shocks (i.e., loss in resilience).

For our weighted undirected network, we consider the clustering coefficient proposed by Onnela et al. (2005), which is a generalization of the clustering coefficients defined in Watts and Strogatz (1998) and Zhang and Horvath (2005). Onnela et al.'s coefficient replaces the number of links in the neighbourhood of node j with the intensity or weights of such links, while Zhang's coefficient computes clustering coefficients of a matrix of weights rather than those of an adjacency matrix.

We report here below the weighted clustering coefficient \(C\left(i\right)\) of Onnela et al. (2005) for a generic node \(i\in V\).

where \({k}_{i}\) is the number of edges connected to node i, the edge weights \({\widehat{w}}_{ij}={w}_{ij}/{\text{max}}(w)\) are normalized by the maximum weight in the network, and self-edges do not exist so that \({w}_{ii}=0\), \(\forall i\in \left\{1,\cdots ,N\right\}\).
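A minimal sketch of the weighted clustering coefficient of Onnela et al. (2005), computed on absolute weights, is given below: for each node, the cube roots of the normalized weights are multiplied around every triangle through the node, and the sum is divided by \({k}_{i}({k}_{i}-1)\). The function name is hypothetical.

```python
import numpy as np

def onnela_clustering(weights):
    """Weighted clustering coefficient of each node, using absolute weights."""
    w = np.abs(np.asarray(weights, dtype=float))
    np.fill_diagonal(w, 0.0)                 # no self-edges
    n = w.shape[0]
    out = np.zeros(n)
    if w.max() == 0:
        return out                           # empty network
    c = np.cbrt(w / w.max())                 # cube roots of normalized weights
    # (c @ c @ c)[i, i] sums c_ij * c_jk * c_ki over ordered pairs (j, k),
    # i.e. each triangle through i is counted twice.
    triangles = np.diag(c @ c @ c)
    k = np.count_nonzero(w, axis=1)          # number of edges at each node
    denom = k * (k - 1)                      # number of ordered neighbour pairs
    valid = denom > 0
    out[valid] = triangles[valid] / denom[valid]
    return out
```

For a triangle with equal weights every node has coefficient 1, while a star-shaped network, which contains no triangle, gives 0 everywhere.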

In the following, we will examine each centrality measure's contribution and the clustering coefficient because of their significance for resilience. In the appendix, we also display the extension of Onnela et al. (2005) clustering coefficient to signed networks, as proposed by Costantini and Perugini (2014). We focus on the clustering coefficient of Onnela et al. (2005) below for comparability purposes with network centrality measures (for which only absolute weights are considered, because of the presence of negative cycles in the networks).

3 Empirical experiment

3.1 Data

We select broad stock market indices from worldwide stock markets. Such selection will allow for an approximate view of the level of dependence on the world's stock marketplaces and, later, its consequences for the resilience of the global marketplace to shocks (e.g., systemic risk).

We extract the weekly closing values of several stock indices worldwide (see Table 1 below). The weekly frequency allows for ignoring the time zone of financial data and eases the identification of common changepoints in time series at the world level. The stock indices are gathered by region. Hence, any region's financial data should react simultaneously to a significant economic, financial, or geopolitical event.

We compute the percentage logarithmic returns of the indices considered. The indices cover three major regions/continents, namely America (five time series), Europe (nine time series) and Asia (six time series). The returns of those 20 stock indices run weekly from 3 January 2000 to 8 July 2022, with a sample size of T = 1175 (observations per series).
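For concreteness, percentage logarithmic returns are obtained from closing values as 100 times the first difference of log prices. The helper name below is hypothetical.

```python
import numpy as np

def pct_log_returns(prices):
    """Percentage log returns, 100 * ln(P_t / P_{t-1}), computed along the time axis.

    prices : (T + 1,) or (T + 1, N) array of closing values, one column per index.
    """
    p = np.asarray(prices, dtype=float)
    return 100.0 * np.diff(np.log(p), axis=0)
```

A series of T + 1 closing values yields T returns, so one observation is lost at the start of the sample.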

4 Results

4.1 Multivariate GARCH with changepoints

In unreported results, individual time series of index returns exhibit the following patterns: returns are not independent, and strong ARCH(1) effects exist. The latter pattern advocates the application of a time-varying multivariate GARCH(1,1) representation to stock index returns (we compared ARCH(1) output with GARCH(1,1), and GARCH(1,1) was the more robust representation). Moreover, we apply the above-mentioned DCC-GARCH(1,1) specification by setting matrices A and B to scalar values a and b, respectively, which satisfy the conditions a ≥ 0, b ≥ 0, and a + b < 1. The off-diagonal elements of Q approximate the correlations between returns. These correlations vary over time in response to new information on returns. Parameters a and b govern the speed at which correlations rise above their average level for a while when returns move in the same direction or temporarily fall below their unconditional value when returns move in opposite directions (Engle, 2009). We can interpret parameter a as a short-run response in correlations and parameter b as a long-run or persisting correlation component.
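The role of the scalars a and b can be seen in a short sketch of the scalar DCC correlation recursion of Engle (2002a), applied to already standardized returns. Estimation of a and b is not shown; the initialisation of Q at the sample second-moment matrix S and the function name are illustrative assumptions.

```python
import numpy as np

def scalar_dcc_correlations(eps, a, b):
    """Time-varying correlation matrices R_t from standardized returns eps of shape (T, N)."""
    if a < 0 or b < 0 or a + b >= 1:
        raise ValueError("require a >= 0, b >= 0 and a + b < 1")
    eps = np.asarray(eps, dtype=float)
    T, N = eps.shape
    S = eps.T @ eps / T                  # unconditional second-moment matrix of eps
    Q = S.copy()                         # assumption: start the recursion at S
    R = np.empty((T, N, N))
    for t in range(T):
        if t > 0:
            e = eps[t - 1][:, None]
            # Q_t = (1 - a - b) S + a * eps_{t-1} eps_{t-1}' + b * Q_{t-1}
            Q = (1.0 - a - b) * S + a * (e @ e.T) + b * Q
        d = 1.0 / np.sqrt(np.diag(Q))
        R[t] = Q * np.outer(d, d)        # rescale Q_t to a correlation matrix
    return R
```

With a = b = 0 the correlations are constant and equal to the rescaled S; a larger a makes correlations react more strongly to the latest co-movement, while a larger b makes past correlation levels persist longer.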

The region-specific weekly results are displayed in the forthcoming tables as follows. Table 2 displays the estimated changepoints (i.e., break dates) for each region, while Tables 3, 4, and 5 present the estimated GARCH(1,1) parameters across regimes and for each region on a weekly basis. We find two changepoints; therefore, three regimes describe the weekly stock returns of the worldwide financial network.

Over period 1, stock indices exhibit strong persistence in their volatility coupled with persistence in their correlations. Period 2 exhibits globally small or medium persistence in stock index volatilities and small persistence in correlations. Over period 3, stock indices' individual volatilities and correlations exhibit strong persistence across all stock marketplaces.

4.1.1 Regime-specific network graphs

We find an optimal shrinkage coefficient lambda of 0.2363, 0.0827, and 0.0072 for regimes 1, 2, and 3, respectively. Using the orthogonalized precision matrix of the standardized residuals' correlation matrix (see step 3 in the estimation procedure described in Sect. 2.2), we get the regime-specific undirected and weighted network graphs displayed below (Figs. 1, 2, and 3). In all network graphs, the same layout prevails so that:

- Negative links are red coloured while positive ones are green coloured.

- The stronger the link, the thicker the corresponding edge.

- Only significant weights are considered in the network (i.e., nonsignificant edges are omitted).

4.1.2 Centrality measures and clustering coefficient for network resilience

We computed the centrality measures and the clustering coefficient (Onnela et al., 2005) for the three regimes, which are displayed in Tables 6, 7 and 8. Moreover, the centrality measures (i.e., betweenness, closeness and strength) are standardized for comparability purposes, while the clustering coefficient is left as is.

The analysis of the network's resilience—hence, of the systemic risk of the associated financial framework—can be carried out based on the centrality measures and the clustering coefficient.

Indeed, resilience refers to the tolerance of the complex dynamical system to perturbations and the ability of the network system to survive component failures. It is often linked to the network topology and geometry as represented by centrality and clustering measures. Hence, a financial network with high connectivity and many cycles (i.e., paths or motifs) along which information/shocks spread has low resilience. The more financial markets become interconnected, and the more different patterns of shock spreading exist, the more the fragility of the network of stock markets increases. Of course, the link between these three features is nonlinear.

According to the arguments above, the higher the nodal clustering coefficient, the stronger the community structure around the considered node. Indeed, the propagation of an exogenous shock starting from a node \(i\in V\) is facilitated when the neighbours of \(i\) are strongly positively interconnected (i.e., the clustering coefficient \(C(i)\) is high). Therefore, a network with high (low) levels of nodal clustering coefficients is weakly (highly) resilient. At the same time, a high value of the centrality measures is associated with nodes having strong interconnections (high strength), highly relevant nodes for flow propagation (high betweenness) and short connection paths among the nodes (high closeness). In all these cases, shocks propagate easily (i.e., the network is weakly resilient). With this in mind, we can examine the centrality measures and the clustering coefficient in more detail from the resilience perspective.

Let us start with the strength.

Stylized facts suggest that the concept of resilience is increasingly appearing in the portfolios of investing companies (Hua et al., 2020; Kim & Kim, 2019; Kritzman et al., 2011). Portfolios are examined under the criterion of the amount of time that it takes to recover and the level to which the portfolio must climb to restore what was lost (Chen & Hackbarth, 2020; Geczy, 2014). Downside protection plays a key role due to the asymmetric relationship between losses and gains. The relevance of downside protection passes through the construction of well-diversified portfolios. The concept of resilience then becomes quite close to, and overlaps with, hedging, where the risk of loss must be mitigated. A system that returns to its mean after some shock can surely be defined as resilient. When the risk is considered as the deviation from the mean, a hedged portfolio is resilient (Kim & Kim, 2019).

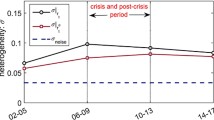

In portfolio theory, it is well established that a portfolio with non-negative weights can be hedged when negative correlations are present among its assets. In these terms, the set of worldwide markets, considered as an equally weighted portfolio, is most stable when both positive and negative correlations are present. Such a naïve portfolio thus satisfies the international diversification principle. This is the rationale for considering the average of the strength as a proxy for hedging, and hence for resilience (Barrat et al., 2004). An average strength closer to zero implies a good balance between positive and negative partial correlations. In our data, the average strengths in regimes 1, 2, and 3 are, respectively, equal to − 0.0667, − 0.0288 and 0.1699. This implies that regime 2 shows the highest resilience and regime 3 the lowest.

For closeness, we notice that the average closeness in regimes 1, 2, and 3 is, respectively, equal to − 0.0916, − 0.2163 and − 0.2734.

Onnela et al. (2002) show that the distance among the assets increases during expansions and decreases during recessions. The analysis in that paper considers only the topology of the network, without the weights. Elliott et al. (2021) also study the topology of the network: the closer the nodes are, the more easily a shock may propagate through the network. Their paper does not consider the weights either.

Importantly, the weights provide relevant information.

The negative sign of the closeness in each of the three regimes signals an overall prevalence of negative partial correlations. Once more, this is a positive fact for resilience since it mitigates the risk of local failures. More precisely, negative weights may not slow the propagation of shocks, but they do change their sign, contributing to overall stability in the face of individual fluctuations. Hence, it is worth considering the standard deviations of the closeness, which are, respectively, equal to 0.35, 0.54 and 0.51 in regimes 1, 2 and 3. The lower variability in regime 1 confirms it as the best balanced with respect to closeness. Regime 2 has a low closeness but the highest standard deviation, while regime 3 has the lowest closeness and a slightly smaller standard deviation. Therefore, closeness provides information not already captured by the strength and yields a different ranking in terms of resilience.
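The remark that negative weights change the sign of a shock rather than slow it can be illustrated with a toy calculation. The sketch below assumes, purely for illustration, that a shock travelling along a path is multiplied by each edge weight it crosses. This linear rule, the function name and the weights are hypothetical and are not the paper's propagation model.

```python
from math import prod

def transmitted_shock(shock, path_weights):
    """Shock reaching the end of a path when each edge scales it by its weight.

    Assumption: a simple multiplicative rule, used only as an illustration.
    """
    return shock * prod(path_weights)

# Same path length and same absolute weights, different signs.
all_positive = transmitted_shock(1.0, [0.5, 0.4])    # keeps the sign of the shock
one_negative = transmitted_shock(1.0, [0.5, -0.4])   # sign is flipped once
two_negative = transmitted_shock(1.0, [-0.5, -0.4])  # flipped twice, sign restored
```

In this toy setting the magnitude of the transmitted shock is the same in all three cases; only its sign differs, so positive and negative contributions reaching the same node can offset one another.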

Let us discuss the betweenness.

Betweenness represents the ability of a node to drive the communication (i.e., the propagation/spreading process of a shock) between a pair of nodes as a function of their location in the network. Hence, it contributes to determining whether a node is influential within the network and whether it acts as a shock broker/mitigator or a shock propagator. The higher the betweenness of the nodes, the more they "bridge" groups that would otherwise be more distant. Therefore, the betweenness outlines the presence of non-uniform aggregations, which are precursors of groups. On our data, the average betweenness values in regimes 1, 2, and 3 are, respectively, equal to − 0.0626, − 0.1287 and − 0.0719. The farther the betweenness is from 0, the more the coarse-grained structure emerges. Regime 2 is the one where the phenomenon is most evident, while regimes 1 and 3 give similar results. On the one hand, the presence of groups delays the overall propagation of shocks; on the other hand, it implies a less uniform sensitivity to shocks: if the nodes with the highest betweenness undergo fluctuations, the spread is faster than for in-group fluctuations. Therefore, the information provided by the betweenness does not overlap with that of the other centrality measures.
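The bridging role of high-betweenness nodes can be illustrated with a minimal sketch. It computes the classical unweighted shortest-path betweenness with Brandes' algorithm on a hypothetical toy graph. The paper works with a signed weighted network and reports negative averages, which this simplified version does not reproduce; the graph, the node labels and the function name are illustrative assumptions.

```python
from collections import deque

def betweenness(adj):
    """Unweighted shortest-path betweenness (Brandes' algorithm), undirected graph."""
    score = {v: 0.0 for v in adj}
    for s in adj:
        # Breadth-first search from s, counting shortest paths to every node.
        order = []
        preds = {v: [] for v in adj}
        sigma = {v: 0 for v in adj}
        dist = {v: -1 for v in adj}
        sigma[s] = 1
        dist[s] = 0
        queue = deque([s])
        while queue:
            v = queue.popleft()
            order.append(v)
            for w in adj[v]:
                if dist[w] < 0:
                    dist[w] = dist[v] + 1
                    queue.append(w)
                if dist[w] == dist[v] + 1:
                    sigma[w] += sigma[v]
                    preds[w].append(v)
        # Accumulate pair dependencies, starting from the farthest nodes.
        delta = {v: 0.0 for v in adj}
        for w in reversed(order):
            for v in preds[w]:
                delta[v] += sigma[v] / sigma[w] * (1.0 + delta[w])
            if w != s:
                score[w] += delta[w]
    # Each unordered pair was visited from both of its endpoints.
    return {v: b / 2.0 for v, b in score.items()}

# Two triangles {a, b, c} and {d, e, f} joined only through the bridge node "m".
graph = {
    "a": ["b", "c"], "b": ["a", "c"], "c": ["a", "b", "m"],
    "m": ["c", "d"],
    "d": ["m", "e", "f"], "e": ["d", "f"], "f": ["d", "e"],
}
scores = betweenness(graph)
bridge = max(scores, key=scores.get)
```

In this toy graph every shortest path between the two triangles passes through the bridge node, so a fluctuation hitting it reaches both groups, whereas a fluctuation at a peripheral node first has to cross its own group.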

Finally, we explore the clustering coefficients.

To this aim, we present bar plots whose x-axis shows the index labels of Tables 6, 7 and 8 in the same order (see Figs. 4, 5 and 6 for regimes 1, 2 and 3, respectively).

Regimes 1 and 3 present a small number of nodes with high positive clustering coefficients and a large set of indices with small and negative clustering coefficients. This implies that a few nodes carry a major part of the network community structure and are well counterbalanced by the majority of nodes, which have negative correlations around them. In these circumstances, resilience strongly depends on which nodes are hit by an external shock. If the shock to be absorbed involves one of the nodes with a high clustering coefficient, then its propagation is rapid and not easily stopped. We notice that regime 3 has a node with an exceptionally high positive clustering coefficient, pointing to a strong community structure around that node and a weakly resilient network in the presence of a shock related to that node. Regime 2 shows a fair balance among clustering coefficients in terms of size and sign.

In general, the clustering coefficient does not provide a clear-cut answer about the resilience of the network. Specifically, it highlights that the ability to absorb a nodal shock depends strongly on the node where the shock appears, leading to a heterogeneous role of the stock indices in this context.

5 Conclusions

This paper analyses the world's main stock indices as connected entities, with a specific focus on the resilience of the resulting network models. The indices are assumed to be connected through their correlation structure. We also detect the presence of regimes over a large period and discuss the regime-based resilience of the financial model.

The proposed worldwide-scale analysis is useful for studying and diagnosing the implications of financial integration across stock marketplaces. Highlighting the frailty of the global financial market will help policymakers identify key weaknesses and take preventive actions should a financial crisis occur.

In this respect, we are in line with a large strand of literature. A prominent contribution is due to Ben Bernanke, former chairman of the Federal Reserve, who was awarded the 2022 Nobel Memorial Prize in Economic Sciences. Quoting Bernanke (2010): “In discussing the causes of the crisis, it is essential to distinguish between triggers (the particular events or factors that touched off the crisis) and vulnerabilities (the structural weaknesses in the financial system and in regulation and supervision that propagated and amplified the initial shocks)”. In this respect, we also mention Mynhardt et al. (2014), who discussed the long-run properties of financial market performance before and after the 2007 crisis and showed the differences in market efficiency before and after systemic collapses. Longstaff (2010) showed that the subprime crisis was predicted by the (negative) variation of some financial market performance indicators. In a different vein, Junior and Franca (2012) showed that high market volatility is associated with a high level of positive correlation among markets, hence pointing to a concrete connection between some aspects of financial frailty and the occurrence of a crisis.

Unlike the quoted papers, we identify critical elements from a multifocal perspective, first by creating a novel market network model and then by exploring its critical elements on the basis of several nodal centrality measures. The identification of a stochastic dependence structure among the assets through a multivariate GARCH process and the inclusion of critical change points in the considered framework make the proposed setting a new contribution to systemic risk management and measurement, which motivates and supports the worthiness of the presented model.

We link resilience with some meaningful nodal centrality measures and clustering coefficients, which describe a network's connectivity and information spreading patterns.

Focusing on financial networks, centrality measures help identify critical stock marketplaces.

They are useful to stock investors for identifying risk sources and contagion risks within their international stock portfolios. Moreover, they are handy devices for implementing investment strategies and diversifying stock portfolios. In addition, centrality metrics are useful to policymakers for preventing or mitigating volatility spillovers from one marketplace to another, thus avoiding the well-known domino effect in financial markets. For example, they may help identify influential marketplaces acting as volatility shock propagators. Policymakers may use such information to set up strategies that minimize the contaminating influence of these critical marketplaces within the financial network. At the same time, policymakers may also build strategies that maximize the influence of balancing marketplaces that act as volatility mitigators in the process of financial shock propagation.

In the considered paradigmatic empirical instance, the propagation process may rely on a set of nodes, making the network vulnerable to shock propagation because they may spread the shocks significantly. A set of counterbalancing nodes may mitigate such a propagation process. Specifically, positive weights highlight the reinforcing pattern of nodes' interrelationships, while negative weights stress the balancing impact nodes can have on one another. Clustering refers to the density and connectivity features of a node's neighbourhood.

By embedding several robust and efficient stages of study and estimation, our approach is flexible enough to be applied to other similar topics, such as the financial dependence between various asset classes across the world or the economic dependence across the world. From the point of view of big or institutional investors, our approach can be applied to large portfolios of assets from the same market segment, various market segments and/or worldwide exchanges. It provides a useful tool to diagnose and measure such portfolios' resilience to financial shocks over time. In particular, the underlying regime-specific analysis of portfolios allows for describing the dependence of asset returns over time, which is especially useful to help build active management strategies. Such active management strategies focus on the regular mitigation of returns' dependence and the improvement of portfolio performance over time to establish or revise the composition of the optimal portfolios and the resulting asset allocation (i.e., the distribution of wealth across assets, which maximizes the performance and reduces the risk of the portfolio).

Change history

09 February 2024

A Correction to this paper has been published: https://doi.org/10.1007/s10479-024-05853-5

Notes

On a daily basis, regional stock markets exhibit different numbers of structural breaks, which happen at different dates. Pooling the stock markets worldwide yields a very large number of breaks with several short-lived regimes, whose samples are too small for a network analysis. Thus, we moved to weekly data to get significant market regimes with a convenient duration and sample size. Moreover, weekly data avoid the need to handle asynchronous daily closing prices by lagging relevant market prices, but at the cost of dropping some insightful informational content.

The appendix provides results for daily data for extra information.

As an approximation, the quasi-correlation matrix Q needs to be scaled.
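In the dynamic conditional correlation model of Engle (2002a), this scaling usually takes the form \(R_t = \mathrm{diag}(Q_t)^{-1/2}\, Q_t\, \mathrm{diag}(Q_t)^{-1/2}\), which forces a unit diagonal. The sketch below assumes that this is the scaling meant in the note; the function name and the numerical values are illustrative only.

```python
from math import sqrt

def rescale_to_correlation(q):
    """Scale a quasi-correlation matrix Q so that its diagonal equals one.

    Assumption: the usual DCC rescaling, R = diag(Q)^(-1/2) Q diag(Q)^(-1/2).
    """
    n = len(q)
    d = [sqrt(q[i][i]) for i in range(n)]
    return [[q[i][j] / (d[i] * d[j]) for j in range(n)] for i in range(n)]

# Illustrative 2 x 2 quasi-correlation matrix whose diagonal is not exactly one.
q_t = [[1.21, 0.55],
       [0.55, 1.00]]
r_t = rescale_to_correlation(q_t)  # off-diagonal entry becomes roughly 0.5
```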

References

Adrian, T., & Brunnermeier, M. K. (2016). CoVaR. The American Economic Review, 106(7), 1705.

Baba, K., Shibata, R., & Sibuya, M. (2004). Partial correlation and conditional correlation as measures of conditional independence. Australian and New Zealand Journal of Statistics, 46(4), 657–664.

Barrat, A., Barthelemy, M., Pastor-Satorras, R., & Vespignani, A. (2004). The architecture of complex weighted networks. Proceedings of the National Academy of Sciences, 101(11), 3747–3752.

Bastidon, C., & Parent, A. (2022). Cliometrics of world stock markets evolving networks. Annals of Operations Research.

Bernanke, B. S. (2010). Chairman of the Board of Governors Federal Reserve System, Ben Bernanke written testimony before the FCIC. YPFS Documents, 4204.

Bollerslev, T. (1986). Generalized autoregressive conditional heteroscedasticity. Journal of Econometrics, 31, 307–327.

Braverman, A., & Minca, A. (2018). Networks of common asset holdings: Aggregation and measures of vulnerability. The Journal of Network Theory in Finance, 4(3), 53–78.

Cerqueti, R., Ciciretti, R., Dalò, A., & Nicolosi, M. (2021a). ESG investing: A chance to reduce systemic risk. Journal of Financial Stability, 54, 100887.

Cerqueti, R., Ciciretti, R., Dalò, A., & Nicolosi, M. (2022a). A new measure of the resilience for networks of funds with applications to socially responsible investments. Physica A: Statistical Mechanics and Its Applications, 593, 126976.

Cerqueti, R., Clemente, G. P., & Grassi, R. (2021b). Systemic risk assessment through high order clustering coefficient. Annals of Operations Research, 299, 1165–1187.

Cerqueti, R., Pampurini, F., Pezzola, A., & Quaranta, A. G. (2022b). Dangerous liaisons and hot customers for banks. Review of Quantitative Finance and Accounting, 59(1), 65–89.

Chen, H., & Hackbarth, D. (2020). Active sector funds and fund manager skill. Journal of Portfolio Management, 46(8), 64–85.

Chen, J., & Chen, Z. (2012). Extended BIC for small-n-large-p sparse GLM. Statistica Sinica, 22(2), 555–574.

Cho, H., & Fryzlewicz, P. (2015). Multiple-change-point detection for high dimensional time series via sparsified binary segmentation. Journal of the Royal Statistical Society B, 77(2), 475–507.

Cho, H., & Korkas, K. K. (2022). High-dimensional GARCH process segmentation with an application to value-at-risk. Econometrics and Statistics, 23, 187–203.

Connolly, R. A., Stivers, C., & Sun, L. (2007). Commonality in the time-variation of stock–stock and stock–bond return comovements. Journal of Financial Markets, 10(2), 192–218.

Cont, R., & Schaanning, E. (2019). Monitoring indirect contagion. Journal of Banking & Finance, 104, 85–102.

Costantini, G., & Perugini, M. (2014). Generalization of clustering coefficients to signed correlation networks. PLoS ONE, 9(2), e88669.

Dong, G., Fan, J., Shekhtman, L. M., Shai, S., Du, R., Tian, L., Chen, X., Stanley, H. E., & Havlin, S. (2018). Resilience of networks with community structure behaves as if under an external field. Proceedings of the National Academy of Sciences, 115(27), 6911–6915.

Elliott, M., Georg, C.-P., & Hazell, J. (2021). Systemic risk shifting in financial networks. Journal of Economic Theory, 191, 105157.

Engle, R. F. (2002a). Dynamic conditional correlation: A simple class of multivariate generalized autoregressive conditional heteroskedasticity models. Journal of Business & Economic Statistics, 20(3), 339–350.

Engle, R. F. (2002b). Anticipating correlations: A new paradigm for risk management. Princeton University Press.

Fioruci, J. A., Ehlers, R. S., & Andrade Filho, M. G. (2014). Bayesian multivariate GARCH models with dynamic correlations and asymmetric error distributions. Journal of Applied Statistics, 41(2), 320–331.

Foygel, R., & Drton, M. (2010). Extended Bayesian information criteria for Gaussian graphical models. In Advances in Neural Information Processing Systems (pp. 604–612).

Friedman, J., Hastie, T., & Tibshirani, R. (2008). Sparse inverse covariance estimation with the graphical lasso. Biostatistics, 9, 432–441.

Gatfaoui, H. (2013). Translating financial integration into correlation risk: A weekly reporting’s viewpoint for the volatility behavior of stock markets. Economic Modelling, 30, 776–791.

Gatfaoui, H., & de Peretti, P. (2019). Flickering in information spreading precedes critical transitions in financial markets. Scientific Reports, 9, 5671.

Geczy, C. (2014). The new diversification: Open your eyes to alternatives. Journal of Portfolio Management, 40(5), 146–155.

Ghanbari, M., Lasserre, J., & Vingron, M. (2019). The distance precision matrix: Computing networks from nonlinear relationships. Bioinformatics, 35(6), 1009–1017.

Ghanbari, R., Jalili, M., & Yu, X. (2018). Correlation of cascade failures and centrality measures in complex networks. Future Generation Computer Systems, 83, 390–400.

Guillemot, V., Bender, A., & Boulesteix, A.-L. (2013). Iterative reconstruction of high-dimensional Gaussian graphical models based on a new method to estimate partial correlations under constraints. PLoS ONE, 8(4), e60536.

Hafner, C. M., Herwartz, H., & Maxand, S. (2022). Identification of structural multivariate GARCH models. Journal of Econometrics, 227(1), 212–227.

Hasse, J.-B. (2022). Systemic risk: A network approach. Empirical Economics, 63, 313–344.

Hua, J., Peng, L., Schwartz, R. A., & Alan, N. S. (2020). Resiliency and stock returns. Review of Financial Studies, 33(2), 747–782.

Hübsch, A., & Walther, U. (2017). The impact of network inhomogeneities on contagion and system stability. Annals of Operations Research, 254, 61–87.

Jackson, M. O., & Pernoud, A. (2021). Systemic risk in financial networks: A survey. Annual Review of Economics, 13, 171–202.

Jankova, J., & van de Geer, S. (2018). Inference for high-dimensional graphical models. In: Handbook of graphical models (editors: Drton, M., Maathuis, M., Lauritzen, S., Wainwright, M.). CRC Press: Boca Raton, Florida, USA.

Junior, L. S., & Franca, I. D. P. (2012). Correlation of financial markets in times of crisis. Physica A: Statistical Mechanics and Its Applications, 391(1–2), 187–208.

Kenourgios, D., Samitas, A., & Paltalidis, N. (2011). Financial crises and stock market contagion in a multivariate time-varying asymmetric framework. Journal of International Financial Markets, Institutions and Money, 21(1), 92–106.

Kim, J., & Kim, Y. (2019). Transitory prices, resiliency, and the cross-section of stock returns. International Review of Financial Analysis, 63(C), 243–256.

Kritzman, M., Li, Y., Page, S., & Rigobon, R. (2011). Principal components as a measure of systemic risk. Journal of Portfolio Management, 37(4), 112–126.

Longstaff, F. A. (2010). The subprime credit crisis and contagion in financial markets. Journal of Financial Economics, 97(3), 436–450.

Malik, F., Ewing, B. T., & Payne, J. E. (2005). Measuring volatility persistence in the presence of sudden changes in the variance of Canadian stock returns. Canadian Journal of Economics, 38(3), 1037–1056.

Markose, S., Giansante, S., Eterovic, N. A., & Gatkowski, M. (2021). Early warning of systemic risk in global banking: Eigen-pair R number for financial contagion and market price-based methods. Annals of Operations Research. https://doi.org/10.1007/s10479-021-04120-1

Mason, W., & Watts, D. J. (2012). Collaborative learning in networks. Proceedings of the National Academy of Sciences, 109(3), 764–769.

Mynhardt, R. H., Plastun, A., & Makarenko, I. (2014). Behavior of financial markets efficiency during the financial market crisis: 2007–2009. Corporate Ownership and Control, 11(2), 473–485.

Onnela, J. P., Chakraborti, A., Kaski, K., & Kertész, J. (2002). Dynamic asset trees and portfolio analysis. The European Physical Journal B-Condensed Matter and Complex Systems, 30(3), 285–288.

Onnela, J. P., Saramaki, J., Kertesz, J., & Kaski, K. (2005). Intensity and coherence of motifs in weighted complex networks. Physical Review E, 71(6), 065103.

Opgen-Rhein, R., & Strimmer, K. (2007). Accurate ranking of differentially expressed genes by a distribution-free shrinkage approach. Statistical Applications in Genetics and Molecular Biology, 6, 1.

Pourahmadi, M. (2011). Covariance estimation: The GLM and regularization perspectives. Statistical Science, 26(3), 369–387.

Renn, O., Laubichler, M., Lucas, K., Kröger, W., Schanze, J., Scholz, R. W., & Schweizer, P. J. (2022). Systemic risks from different perspectives. Risk Analysis, 42(9), 1902–1920.

Schäfer, J., & Strimmer, K. (2005). A shrinkage approach to large-scale covariance estimation and implications for functional genomics. Statistical Applications in Genetics and Molecular Biology, 4, 32.

Simaan, M., Gupta, A., & Kar, K. (2020). Filtering for risk assessment of interbank network. European Journal of Operational Research, 280(1), 279–294.

So, M. K. P., Mak, A. S. W., & Chu, A. M. Y. (2022). Assessing systemic risk in financial markets using dynamic topic networks. Scientific Reports, 12, 2668.

Strohsal, T., & Weber, E. (2015). Time-varying international stock market interaction and the identification of volatility signals. Journal of Banking & Finance, 56, 28–36.

Tibshirani, R. (1996). Regression Shrinkage and selection via the Lasso. Journal of the Royal Statistical Society Series B, 58(1), 267–288.

van Borkulo, C. D., Borsboom, D., Epskamp, S., Blanken, T. F., Boschloo, L., Schoevers, R. A., & Waldorp, L. J. (2014). A new method for constructing networks from binary data. Scientific Reports, 4(1), 1–10.

Ward, M. D., Stovel, K., & Sacks, A. (2011). Network analysis and political science. Annual Review of Political Science, 14(1), 245–264.

Watts, D. J., & Strogatz, S. H. (1998). Collective dynamics of “small-world” networks. Nature, 393(6684), 440–442.

Whittaker, J. (1990). Graphical models in applied multivariate statistics. John Wiley.

Zhang, B., & Horvath, S. (2005). A general framework for weighted gene co-expression network analysis. Statistical Applications in Genetics and Molecular Biology, 4(1), 17.

Zhu, Y., Yang, F., & Ye, W. (2018). Financial contagion behavior analysis based on complex network approach. Annals of Operations Research, 268, 93–111.

Acknowledgements

We thank two anonymous referees for their questions and comments.

Funding

R. Cerqueti and G. Rotundo have no financial interests. Hayette Gatfaoui received a supporting grant from the “Sapienza Visiting Professor Programme 2020” of Sapienza University of Rome, Italy.

Ethics declarations

Conflict of interests

G. Rotundo is an unpaid member of GNFM-INdAM and COST Action CA18232 and she thanks these organizations for fruitful discussions.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix: Daily GARCH estimates

From the 3rd January 2000 to the 8th July 2022, the daily sample size is T = 5875 (observations per series). The region-specific daily results are displayed in the forthcoming tables as follows. Table 9 displays the estimated changepoints (i.e., break dates) for each region, while Tables 10, 11 and 12 present the estimated GARCH(1,1) parameters across regimes and for each region on a daily basis. We find different regimes for stock returns across regional marketplaces.

In America, stock market indices exhibit (strong) persistence in volatility over time, except for the IPC index during period 3. Correlations persist over all periods except period 3. In Europe, the individual volatilities of indices are strongly persistent across periods, except for the following cases. The CACALL index exhibits weak volatility persistence over period 2, while the DAX, SMI, AEX, IBEX35, and OMXS indices exhibit small volatility persistence over period 3. Periods 1 and 2 exhibit almost no correlation persistence, while a low correlation persistence arises over period 3, followed by strongly persistent correlations from period 4 to period 6. In Asia, stock market indices globally exhibit small persistence in volatility over periods 1, 2 and 5, while volatilities exhibit strong persistence over the remaining periods 3, 4, 6, 7 and 8. Likewise, periods 1, 2 and 5 exhibit a small persistence in correlations, while the remaining periods 3, 4, 6, 7 and 8 exhibit strong persistence in correlations.
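For reference, volatility persistence in a GARCH(1,1) model with variance equation \(\sigma_t^2 = \omega + \alpha \varepsilon_{t-1}^2 + \beta \sigma_{t-1}^2\) is commonly summarised by \(\alpha + \beta\). The sketch below assumes this convention, since the section does not state how persistence was assessed. The parameter values are hypothetical and are not the estimates reported in the tables.

```python
from math import log

def garch11_persistence(alpha, beta):
    """Persistence of a GARCH(1,1) process, taken here as alpha + beta."""
    return alpha + beta

def half_life(persistence):
    """Periods needed for a volatility shock to decay by half."""
    if not 0.0 < persistence < 1.0:
        raise ValueError("half-life is defined only for 0 < persistence < 1")
    return log(0.5) / log(persistence)

# Hypothetical parameter pairs, for illustration only.
strong = garch11_persistence(alpha=0.08, beta=0.90)  # close to one
weak = garch11_persistence(alpha=0.10, beta=0.40)    # well below one

strong_half_life = half_life(strong)  # shocks fade over many periods
weak_half_life = half_life(weak)      # shocks fade almost immediately
```

A persistence close to one corresponds to the "strong persistence" cases described above, in which a volatility shock takes many periods to die out.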

1.1 Clustering coefficient for signed networks

We apply the extension proposed by Costantini and Perugini (2014). The corresponding clustering coefficients per regime are displayed in the table below.

See Table

Obviously, considering weights' signs yields narrower standardized clustering coefficients because of the interplay between negative and positive node influences. Indeed, positive edges illustrate amplifying connections (e.g., risk frailty) within the network, and interact with negative edges that reflect inhibiting connections (e.g., risk resistance). Such interplay balances the network (shock spreading versus shock mitigation).
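The interplay between positive and negative edges can be made concrete with a small sketch. Costantini and Perugini (2014) propose signed versions of several clustering coefficients; the variant used for the table is not stated in this section, so the sketch assumes a signed Zhang-Horvath-type form, \(C(i) = \sum_{j \ne q} w_{ij} w_{iq} w_{jq} \big/ \sum_{j \ne q} |w_{ij} w_{iq}|\), with \(j, q \ne i\). The function name and the weights are illustrative.

```python
def signed_clustering(w, i):
    """Signed Zhang-Horvath-type clustering coefficient of node i.

    Numerator: signed weight products over triangles through i.
    Denominator: absolute weight products over pairs of neighbours of i.
    Assumption: this variant is chosen for illustration only.
    """
    others = [j for j in range(len(w)) if j != i]
    num = 0.0
    den = 0.0
    for j in others:
        for q in others:
            if j == q:
                continue
            num += w[i][j] * w[i][q] * w[j][q]
            den += abs(w[i][j] * w[i][q])
    return num / den if den > 0 else 0.0

# Node 0 has two positively linked neighbours that are themselves positively linked.
amplifying = [[0.0, 0.5, 0.5],
              [0.5, 0.0, 0.8],
              [0.5, 0.8, 0.0]]
# Same network, but the two neighbours are negatively linked to each other.
inhibiting = [[0.0, 0.5, 0.5],
              [0.5, 0.0, -0.8],
              [0.5, -0.8, 0.0]]

c_amplifying = signed_clustering(amplifying, 0)  # positive coefficient
c_inhibiting = signed_clustering(inhibiting, 0)  # negative coefficient
```

With mixed signs in a larger neighbourhood, positive and negative triangle products partly cancel in the numerator, which is one way to see why signed coefficients span a narrower range than unsigned ones.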

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Cerqueti, R., Gatfaoui, H. & Rotundo, G. Resilience for financial networks under a multivariate GARCH model of stock index returns with multiple regimes. Ann Oper Res (2024). https://doi.org/10.1007/s10479-023-05756-x

DOI: https://doi.org/10.1007/s10479-023-05756-x