Abstract

Primal and dual algorithms are developed for solving the n-dimensional convex optimization problem of finding the Euclidean ball of minimum radius that covers m given Euclidean balls, each with given center and radius. Each algorithm is based on a directional search method in which a search path may be a ray or a two-dimensional conic section in \(I\!\!R^n\). At each iteration, a search path is constructed by the intersection of bisectors of pairs of points, where the bisectors are either hyperplanes or n-dimensional hyperboloids. The optimal stopping point along each search path is determined explicitly.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

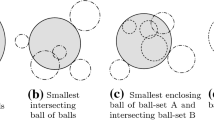

Let \(P=\{\textbf{p}_1,\ldots ,\textbf{p}_m\}\) be a given set of m distinct points in \(I\!\!R^n\), and for each point \(\textbf{p}_i \in P\), let \(r_i\) be a non-negative radius. Let \([\textbf{p}_i, r_i] = \{ \textbf{x}: \Vert \textbf{x} - \textbf{p}_i \Vert \le r_i \}\) denote the closed Euclidean ball where \(\textbf{p}_i\) is the center, \(r_i\) is the radius, and \(\Vert \textbf{x} - \textbf{p}_i \Vert \) is the Euclidean distance between \(\textbf{p}_i\) and \(\textbf{x}\). The problem of determining the minimum covering Euclidean ball of a set of Euclidean balls requires the ball \([\textbf{x}^*, z^*]\), with center \(\textbf{x}^*\) and minimum radius \(z^*\), that covers, or contains, the balls \([\textbf{p}_i, r_i]\) for all \(\textbf{p}_i \in P\). The problem is denoted by M(P), and is written as:

Adding a real constant to all the radii yields an equivalent problem, so the assumption of non-negative radii is not necessary. However, non-negative radii are assumed to simplify the presentatiion. If all the radii are equal, then all the radii may be assumed to be zero, and the problem is to find the minimum covering ball of the points \(\textbf{p}_i \in P\), called the minimum covering ball problem. Problem M(P) has the following equivalent representation:

This version of the problem is known as the min–max location problem with fixed distance and as the one-center delivery problem (Plastria, 2004). The center \(\textbf{x}\) is the location of a facility that minimizes the maximum service to points \(\textbf{p}_i \in P\) , where service is measured as the travel distance from \(\textbf{x}\) to \(\textbf{p}_i\) plus a fixed travel distance \(r_i\).

This paper presents primal and dual algorithms for solving problem M(P). At each iteration of either algorithm, bisectors of pairs of points are intersected to construct a search path, that is either a ray or a two-dimensional conic section in \(I\!\!R^n\). For the primal (dual) algorithm, points on the search path maintain primal (dual) feasibility and complementary slackness. The optimal stopping point along the search path is determined explicitly.

2 Literature

The problem of finding the minimum covering circle of a set of points in \(I\!\!R^2\) was first posed by Sylvester (1857). Various geometric solutions were reported by Sylvester (1860), Chrystal (1885), Blumenthal and Wahlin (1941), Rademacher and Toeplitz (1990), and Elzinga and Hearn (1972a). Voronoi diagrams (Aurenhammer and Klein (2000)) have also been used to solve the problem in \(I\!\!R^2\). Megiddo (1983a) reported an algorithm for M(P), with \(n = 3\), that is linear in m.

For the minimum covering ball problem of a set of points in \(I\!\!R^n\), Elzinga and Hearn (1972a) solved the dual problem as a convex, quadratic programming problem. Hopp and Reeve (1996) extended the Sylvester (1860) and Chrystal (1885) algorithm to \(I\!\!R^n\) relying on a heuristic without proof of convergence. Fischer et al. (2003) expanded the Hopp and Reeve algorithm, gave a proof of finite convergence, and were able to solve problems for m up to 10,000 and n up to 2000. Megiddo (1983b) reported an algorithm that is linear in m but exponential in n. Dyer (1992) improved the time-complexity of Meggido’s algorithm. Dearing and Zeck (2009) reported a dual algorithm based on search paths constructed from bisectors of pairs of points. Cavaleiro and Alizadeh (2018) presented computational improvements to the Dearing and Zeck approach.

The literature for the minimum covering ball of a set of balls in \(I\!\!R^n\) is more limited. Elzinga and Hearn (1972b) presented a geometrical algorithm for the problem in \(I\!\!R^2\). Xu et al. (2003) reported computational results for four general approaches to problem M(P) in \(I\!\!R^2\): a second-order cone reformulation, a sub-gradient approach, a quadratic programming scheme, and a randomized incremental algorithm. Two approaches for problem M(P) in \(I\!\!R^n\) were reported by Zhou et al. (2005): an unconstrained convex program whose objective function approximates the maximum objective function, and a reformulation of M(P) as a second-order cone programming problem. They solve problems with n up to 10,000, and m up to 5000. Applications of problem M(P) are referenced in Fischer et al. (2003) and Megiddo (1983b). Plastria (2004) presents a survey of the min–max location problems. Cavaleiro and Alizadeh (2021) present a dual simplex-type algorithm for the smallest enclosing ball of balls using a search path parameterized by the objective function value.

3 Properties of problem M(P)

The following properties of problem M(P) are used to develop the algorithms.

Property 1

There exists a unique minimum solution \(\textbf{x}^* \in I\!\!R^{n}\) to M(P).

Proof

The function \(f(\textbf{x}) = \max _{\textbf{p}_i \in P} \{f_{i}(\textbf{x}) \}\), where \(f_{i}(\textbf{x}) = \Vert \textbf{x} - \textbf{p}_i \Vert + r_{i}\), is strictly convex, and therefore continuous, on \(I\!\!R^n\) since each \(f_{i}(\textbf{x})\) is strictly convex. For any \(\textbf{x}_{0} \in I\!\!R^{n}\), with \(z_{0} = \max \{f_{i}(\textbf{x}_{0}) \}\), the sub-level set \(L_{z_0}(f) = \{ \textbf{x} \in I\!\!R^n: f(\textbf{x}) \le z_0 \}\) is non-empty and compact since it is the intersection of non-empty compact sub-level sets: \(L_{z_0}(f) = \cap _{\textbf{p}_i \in P}\{ \textbf{x} \in I\!\!R^n: f_{i}(\textbf{x}) \le z_0 \}\). Thus, there exists a unique \(\textbf{x}^* \in L_{z_0}(f)\) that minimizes \(f(\textbf{x})\) over \(L_{z_0}(f)\), and \(\textbf{x}^*\) minimizes \(f(\textbf{x})\) over \(I\!\!R^n\) since for \(\textbf{x} \in I\!\!R^n \setminus L_{z_0}(f)\), \(f(\textbf{x}) > f(\textbf{x}^*)\). \(\square \)

For points \(\textbf{p}_j, \textbf{p}_k \in P\), the ball \([\textbf{p}_k, r_k]\) is contained in the ball \([\textbf{p}_j, r_j]\) if and only if \(r_j - r_k \ge \; \Vert \textbf{p}_{k} - \textbf{p}_j \Vert \). In this case, the point \(\textbf{p}_k\) is redundant to \(\textbf{p}_j\), since any ball \([\textbf{x}, z]\) that covers \([\textbf{p}_j, r_j]\) also covers \([\textbf{p}_k, r_k]\). The point \(\textbf{p}_k\) can be eliminated from the set P with no effect on the optimal solution. If each point in P is redundant to \(\textbf{p}_j\), then the ball \([\textbf{x}, z ] = [\textbf{p}_j, r_j ]\) is the optimal solution for M(P) and is called the trivial solution.

The point \(\textbf{p}_k\) is non-redundant to \(\textbf{p}_j\) if and only if \(r_j - r_k < \; \Vert \textbf{p}_{k} - \textbf{p}_j \Vert \), and two points \(\textbf{p}_j, \textbf{p}_k\) are (mutually) non-redundant if neither is redundant to the other, that is, \(| r_j - r_k |< \; \Vert \textbf{p}_{j} - \textbf{p}_k \Vert \). Observe that if \(\textbf{p}_k\) is redundant to \(\textbf{p}_j\), then \(\textbf{p}_j\) is non-redundant to \(\textbf{p}_k\), and if \(r_k \ge r_j\), \(\textbf{p}_k\) is non-redundant to \(\textbf{p}_j\).

The bisector of two non-redundant points \(\textbf{p}_{j}, \textbf{p}_{k} \in P\), with radii \(r_{j}\) and \(r_{k}\) respectively, is the set

If \(r_j = r_k\), then \(\textbf{p}_{j}\) and \(\textbf{p}_{k}\) are two non-redundant points (since P consists of distinct points), and \(B_{j,k} = \{ \textbf{x}: (\textbf{p}_j - \textbf{p}_k)\textbf{x} = (\textbf{p}_j - \textbf{p}_k) (\textbf{p}_j + \textbf{p}_k) /2 \}\), is the hyperplane that is orthogonal to the line through \(\textbf{p}_{j}\) and \(\textbf{p}_{k}\), and contains the midpoint between \(\textbf{p}_{j}\) and \(\textbf{p}_{k}\).

If \(r_j > r_k\), and if \(\textbf{p}_k\) is non-redundant to \(\textbf{p}_j\), then the bisector \(B_{j,k} = \{ \textbf{x} : \Vert \textbf{p}_k - \textbf{x} \Vert - \Vert \textbf{p}_j - \textbf{x} \Vert = r_j - r_k \}\) is one sheet of an n-dimensional hyperboloid of two sheets, symmetric about the line through the focal points \(\textbf{p}_j\) and \(\textbf{p}_k\). This follows by setting \(2a_{j,k} = r_j - r_k > 0\), and noting that \(\textbf{p}_k\) is non-redundant to \(\textbf{p}_j\) if and only if \(2a_{j,k} < \Vert \textbf{p}_j - \textbf{p}_j \Vert \). Then the set \(H\!B_{j,k} = \{ \textbf{x} : | \Vert \textbf{p}_k - \textbf{x} \Vert - \Vert \textbf{p}_j - \textbf{x} \Vert | = 2a_{j,k} \}\) satisfies the definition, given by equation (32) in the “Appendix”, of an n-dimensional hyperboloid of two sheets with focal points \(\textbf{p}_j\) and \(\textbf{p}_k\), and the bisector \(B_{j,k}\) satisfies the definition, given by equation (33), of the sheet of \(H\!B_{j,k}\) closest to \(\textbf{p}_j\).

The bisector \(B_{j,k}\) is characterized by the axis vector \(\textbf{v}_{j,k} = (\textbf{p}_j - \textbf{p}_k)/\Vert \textbf{p}_j - \textbf{p}_k \Vert \), center \(\textbf{c}_{j,k} = (\textbf{p}_j + \textbf{p}_k)/2\), vertex \(\textbf{a}_{j,k} = \textbf{c}_{j,k} + a_{j,k}\textbf{v}_{j,k}\), which is the intersection of \(B_{j,k}\) and the line through the focal points, focal distance \(c_{j,k} = \Vert \textbf{p}_j - \textbf{p}_k \Vert /2\), and eccentricity \(\epsilon _{j,k} = c_{j,k}/a_{j,k}\).

Each point \(\textbf{x} \in B_{j,k}\) is the center of a ball \([\textbf{x},z_{\textbf{x}}]\), with radius \(z_{\textbf{x}} = \Vert \textbf{p}_j -\textbf{x} \Vert + r_j = \Vert \textbf{p}_k -\textbf{x} \Vert + r_k\), that contains, and is internally tangent to, the two balls \([\textbf{p}_j, r_j]\) and \([\textbf{p}_k, r_k]\). If \(B_{j,k}\) is the sheet of the hyperboloid closest to \(\textbf{p}_j\), its vertex \(\textbf{a}_{j,k}\) is the center of the smallest ball containing, and internally tangent to, the two balls \([\textbf{p}_j, r_j]\) and \([\textbf{p}_k, r_k]\). If \(B_{j,k}\) is the hyperplane, then \((\textbf{p}_{j} + \textbf{p}_{k})/2\) is the center of the smallest ball containing, and internally tangent to, the two balls \([\textbf{p}_j, r_j]\) and \([\textbf{p}_k, r_k]\).

Property 2

For non-redundant points \(\textbf{p}_j\), \(\textbf{p}_k\) \(\in P\), and for each \(\textbf{x} \in B_{j,k}\), \(\textbf{x} \ne \textbf{p}_j\) and \(\textbf{x} \ne \textbf{p}_k\).

Proof

The Property is true for any \(\textbf{x}\) not on the line through \(\textbf{p}_j\) and \(\textbf{p}_k\). The only point on the line through \(\textbf{p}_j\) and \(\textbf{p}_k\) and on \(B_{j,k}\) is the vertex \(\textbf{a}_{j,k}\). Substitution of the vector and parameter definitions implies \(\Vert \textbf{a}_{j,k}- \textbf{p}_j \Vert = c_{j,k} - a_{j,k} > 0\) and \(\Vert \textbf{a}_{j,k}- \textbf{p}_{k} \Vert = c_{j,k} + a_{j,k} >0\). \(\square \)

The primal and dual algorithms developed here are examples of the active set method of mathematical programming, Gill et al. (1991). A set \(S = \{ \textbf{p}_{i_1}, \ldots , \textbf{p}_{i_s} \} \subset P\), with its points ordered by non-decreasing radii, \(r_{i_1} \ge \ldots \ge r_{i_s}\), is an active set of a ball \([\textbf{x}_S, z_S]\) if S satisfies the following three conditions: (1) the points in S are affinely independent, (2) the constraint of M(P) corresponding to each point in S holds at equality, that is, \( \Vert \textbf{p}_{i_j} - \textbf{x}_S \Vert + r_{i_j} = z_S\), for each \(\textbf{p}_{i_j} \in S\), and (3) if \(s > 1\), \(\textbf{p}_{i_j}\) is non-redundant to \(\textbf{p}_{i_1}\), for \(j = 2, \ldots ,s\), that is, \(B_{i_1,i_j}\) is a bisector for \(j = 2, \ldots ,s\). Observe that if a set \(S \subset P\) satisfies conditions (1) and (2), but not (3), S may be transformed into an active set by deleting the points \(\textbf{p}_{i_j}\) that are redundant to \(\textbf{p}_{i_1}\). Also, there may be more than one active set corresponding to a ball, and any subset of an active set of a ball is also an active set of that ball.

Each iteration of the primal and dual algorithms corresponds to a ball \([\textbf{x}_S, z_S]\) and an active set S. The points in S are used to check for optimality and to determine the search path. The size of S may increase, decrease, or remain unchanged at each iteration.

Observe that a ball \([\textbf{x}_S, z_S]\) and an active set S with \(s = 1\) are optimal if and only if \([\textbf{x}_S, z_S]\) is the trivial solution, that is, \([\textbf{x}_S, z_S] = [\textbf{p}_{i_1}, r_{i_1}]\) and \(S = \{ \textbf{p}_{i_1} \}\).

Property 3

At any iteration of the primal or the dual algorithm, let \(S = \{ \textbf{p}_{i_1}, \ldots , \textbf{p}_{i_s} \}\), with points ordered by non-increasing radii, and with \(s > 1\), be an active set corresponding to the ball \([\textbf{x}_S, z_S ]\). Then \(\textbf{x}_S \ne \textbf{p}_{i_j}\), for \(j = 1, \ldots , s\).

Proof

For each \(\textbf{p}_{i_j} \in S\), \(B_{i_1,i_j}\) is a bisector and by Property 2, \(\textbf{x}_S \ne \textbf{p}_{i_1}\) and \(\textbf{x}_S \ne \textbf{p}_{i_j}\). \(\square \)

Property 3 implies that at each iteration of the primal or the dual algorithm with active set S, and for each \(\textbf{p}_{i_j} \in S\), the Euclidean distance \(\Vert \textbf{x} - \textbf{p}_{i_j} \Vert \) is differentiable with respect to \(\textbf{x}\) over the set \(I\!\!R^n\setminus S\). Then the Karush Kuhn Tucker (KKT) conditions for optimality, Gill et al. (1991), may be written as follows.

Property 4

A ball \([\textbf{x}_S, z_S]\), with active set S and \(|S| > 1,\) is the optimal solution to M(P) if and only if there exist variables \(\lambda _j \ge 0\) for \(\textbf{p}_{i_j} \in S\) and \(\lambda _j = 0\) for \(\textbf{p}_{i_j} \notin S\) such that

Property 5

At optimality, statements (3), (4), and (5) of the KKT conditions are equivalent to:

Proof

Since \(\Vert \textbf{x}_S - \textbf{p}_{i_j} \Vert > 0\), for all \(\textbf{p}_{i_j} \in S\), the change of variables \(\pi _j =\frac{\lambda _j/ \Vert \textbf{x}_S - \textbf{p}_{i_j} \Vert }{\sum _{\textbf{p}_{i_j} \in S} \lambda _j/ \Vert \textbf{x}_S - \textbf{p}_{i_j} \Vert }\) and \(\lambda _j = \frac{ \Vert \textbf{x}_S - \textbf{p}_{i_j} \Vert \pi _{j}}{ \sum _{\textbf{p}_{i_j} \in S} \Vert \textbf{x}_S - \textbf{p}_{i_j} \Vert \pi _j}\) for each \(\textbf{p}_{i_j} \in S\), shows the equivalence of conditions (3), (4), and (5) to conditions (7), (8), and (9). \(\square \)

Properties 4 and 5 lead to additional properties of an optimal ball \([\textbf{x}_S, z_S]\).

Property 6

The non-trivial minimum covering ball \([\textbf{x}_S,z_S]\) for problem M(P) is determined by an active set S of at most \(n+1\) affinely independent points in P, and the optimal center \(\textbf{x}_S \in \textrm{conv}(S)\), the convex hull of S.

Proof

Conditions (7) and (8) determine a linear system with \(n+1\) linear equations. A solution is determined by at most \(n+1\) linearly independent columns of the system which correspond to at most \(n+1\) affinely independent points from P. Conditions (7), (8), and (9) are equivalent to \(\textbf{x}_S \in \textrm{conv}(S)\). \(\square \)

The complementary slackness conditions (6) imply the following necessary condition for optimality.

Property 7

If \([\textbf{x}_S,z_S]\) is optimal to problem M(P) with active set S, and \(|S| > 1\), then \(\textbf{x}_S\) is on at least one bisector of a pair of points in S.

If S is an active set of size s corresponding to an optimal covering ball \([\textbf{x}_S, z_S]\), there may be more than s constraints that are active. At each iteration of either the primal or dual algorithm, the points in the active set S are sufficient to determine the ball \([\textbf{x}_S, z_S]\).

If S is an active set corresponding of the ball \([\textbf{x}_S, z_S]\), then \(\textbf{x}_S \in B_{i_j,i_k}\) for each pair of points \(\textbf{p}_{i_j}, \textbf{p}_{i_k} \in S\), so that \(\textbf{x}_S\) is on the intersection of bisectors \(B_{i_j,i_k}\) over all pairs of points \(\textbf{p}_{i_j}, \textbf{p}_{i_k} \in S\), denoted by \(B_S\). That is, \(\textbf{x}_S \in B_S = \cap _{\{\textbf{p}_{i_j}, \textbf{p}_{i_k}\} \subseteq S} B_{i_j,i_k}\).

At each iteration of the primal and dual algorithms a search path \(X_S = \{ \textbf{x}(\alpha ), \alpha _S \le \alpha \le \alpha _m \}\) is constructed so that \(X_S \subset B_S\). Property 23 shows that \(\textbf{x}(\alpha ) \in B_{i_j,i_k}\) for all \(\{\textbf{p}_{i_j}, \textbf{p}_{i_k}\} \subseteq S\), and \(\alpha _S \le \alpha \le \alpha _m\), and that the complementary slackness conditions (6) are maintained at each point on the search path \(X_S\).

If all the points in S have equal radii, then all the bisectors are hyperplanes, and \(B_S\) is a linear manifold of dimension \(n-|S|+1\). In this case, the search path is a ray.

If some points in S have unequal radii, then at least one bisector is a hyperboloid. Property 22 shows that the vectors and parameters of \(B_S\) may be computed by intersecting the hyperboloid bisector with \(|S|-2\) hyperplanes, and Property 19 shows that the resulting intersection is a conic section of dimension \(n-|S|+2\).

4 Primal algorithm

At each iteration of the primal algorithm there is a ball \([ \textbf{x}_S, z_S ]\) and an active set S that satisfy expressions (2) and (6) of the KKT conditions, but do not satisfy expressions (3), (4), and (5) of the KKT conditions. That is, S and \([ \textbf{x}_S, z_S ]\) are primal feasible but not dual feasible.

Since \([ \textbf{x}_S, z_S ]\) is primal feasible at each iteration, \(z_S\) is an upper bound on the optimal objective function value of M(P). Assuming non-degeneracy, the radius \(z_S\) is shown to decrease at each iteration of the primal algorithm.

The primal algorithm may be initialized by choosing \(\textbf{x}_S\) to be any point in \(I\!\!R^n\!,\) computing \(z_S = \max _{\textbf{p}_i \in P} \Vert \textbf{x}_S - \textbf{p}_i \Vert + r_i = \Vert \textbf{x}_S - \textbf{p}_j \Vert + r_j\), for some \(\textbf{p}_j \in P\), and choosing \(S = \{ \textbf{p}_j \}\). Observe that \([ \textbf{x}_S, z_S ]\) is primal feasible, but \(\textbf{x}_S \notin \textrm{aff}(S)\), which implies \(\textbf{x}_S \notin \textrm{conv}(S)\) so that \(\textbf{x}_S\) is not dual feasible.

Primal search phase

Given a primal feasible ball \([ \textbf{x}_S, z_S ]\) and an active set S, a search path \(X_S = \{ \textbf{x}(\alpha ) : \alpha _S \le \alpha \le \alpha _m \}\) is constructed so that \(X_S \subset B_S\), \(\textbf{x}(\alpha _S) = \textbf{x}_S\), and \(\textbf{x}(\alpha _m) \in \textrm{aff}(S)\). If all the points in S have equal radii, the search path will be a ray, but if some points in S have unequal radii, the search path will be a two-dimensional conic section in \(I\!\!R^n\).

For a search path \(X_S\) that is either a ray or a conic section, Property 23 shows that S is an active set for the ball \([\textbf{x}(\alpha ), z(\alpha )]\) for \(\alpha \ge \alpha _S\), and that S and \([\textbf{x}(\alpha ), z(\alpha )]\) satisfy the complementary slackness conditions (6). Property 24 shows that \(z(\alpha )\) is decreasing for \(\alpha \ge \alpha _S\).

For each \(\textbf{p}_k \in P \setminus S\), the parameter \(\alpha _k \ge \alpha _S\) is determined, if it exists, so that \(X_S\) intersects the bisector \(B_{i_1,k}\) at \(\textbf{x}(\alpha _k)\). If \(\textbf{x}(\alpha _k) \in X_S \cap B_{i_1,k}\), then \(\textbf{x}(\alpha _k) \in X_S \cap B_{i_j,k}\) for each \(\textbf{p}_{i_j} \in S\). That is, \(X_S\) simultaneously intersects the bisectors \(B_{i_j,k}\) at \(\textbf{x}(\alpha _k)\). Thus, it suffices to consider the intersection of \(X_S\) with only \(B_{i_1,k}\). At the point \(\textbf{x}(\alpha _k) \in X_S \cap B_{i_1,k}\), the constraint corresponding to the point \(\textbf{p}_k\) is active. The Update Phase chooses \(\alpha ^* = \min \{\alpha _m,\min _{\textbf{p}_k \in P \setminus S} \alpha _k \}\), and the ball \([\textbf{x}(\alpha ^*), z(\alpha ^*)]\) is checked for optimality.

Case 1: All points in S have equal radii

The points in the active set are denoted by \(S = \{ \textbf{p}_{i_1}, \ldots , \textbf{p}_{i_{s}} \}\), where \(r_{i_j} = r_{i_1}\) for \(\textbf{p}_{i_j} \in S\). In this case, the search path is the ray

where \(\alpha _S = 0\), \(\alpha _m = [( \textbf{p}_{i_1} - \textbf{x}_S ) \textbf{d}_S]/ \Vert \textbf{d}_S \Vert ^2 > 0\), and \(\textbf{d}_S \leftarrow \) Projection\(((\textbf{p}_{i_1} - \textbf{x}_S), R)\), with \(R = \{ (\textbf{p}_{i_1} - \textbf{p}_{i_2}), \ldots , (\textbf{p}_{i_1} - \textbf{p}_{i_s}) \}\). If \(|S| = 1\), \(\textbf{d}_S = ( \textbf{p}_{i_1} - \textbf{x}_S )/ \Vert \textbf{p}_{i_1} - \textbf{x}_S \Vert \).

For each \(\textbf{p}_k \in P \setminus S\), the parameter \(\alpha _k \ge \alpha _{S}\) is determined, if it exists, so that \(X_S\) intersects the bisector \(B_{i_1,k}\) at \(\textbf{x}(\alpha _k)\). There are two sub-cases to consider for computing \(\alpha _k\) depending on whether the radius \(r_{i_1}\) equals the radius \(r_{k}\).

Case 1a: \(r_{i_1} = r_{k}\) Then \(B_{i_1,k}\) is a hyperplane. The point \(\textbf{x}(\alpha _k) \in X_S \cap B_{i_1,k}\) is determined by solving for \(\alpha \) in the equation \((\textbf{p}_{i_1} - \textbf{p}_k)\textbf{x}(\alpha ) = (\textbf{p}_{i_1} - \textbf{p}_k)(\textbf{p}_{i_1} + \textbf{p}_k)/2\). If \((\textbf{p}_{i_1} - \textbf{p}_{k}) \textbf{d}_S = 0\), \(\alpha _k \leftarrow \infty \), else

If \(\alpha _k < 0\), \(\alpha _k \leftarrow \infty \).

Case 1b(i) \(r_{i_1} > r_{k}\). Then \(\textbf{p}_{i_1}\) is non-redundant to \(\textbf{p}_{k}\). If \(\textbf{p}_k\) is redundant to \(\textbf{p}_{i_1}\), eliminate \(\textbf{p}_k\) from P, and continue. Otherwise, \(\textbf{p}_k\) is non-redundant to \(\textbf{p}_{i_1}\) so that \( B_{i_1,k}\) is a hyperboloid and the parameter \(\alpha _k\) is determined so that \(\textbf{x}(\alpha _k) \in X_S \cap B_{i_1,k}\).

Case 1b(ii) \(r_{i_1}< r_{k}\). Then \(\textbf{p}_{k}\) is non-redundant to \(\textbf{p}_{i_1}\). The point \(\textbf{p}_{i_1}\) is redundant to \(\textbf{p}_{k}\) if and only if \(r_{k} - r_{i_1} = \Vert \textbf{p}_{i_1} - \textbf{p}_{k} \Vert \), in which case, \(\alpha _k = 0\). Otherwise, \(\textbf{p}_{i_1}\) is non-redundant to \(\textbf{p}_{k}\) so that \( B_{i_1,k}\) is a hyperboloid and the parameter \(\alpha _k\) is determined so that \(\textbf{x}(\alpha _k) \in X_S \cap B_{k, i_1}\).

The intersection of \(X_S\) and \(B_{i_1,k}\) is determined by substituting \(\textbf{x}(\alpha ) = \textbf{x}_S + \alpha \textbf{d}_S\) for \(\textbf{x}\), and \(c_{i_1,k}\), \(\textbf{c}_{i_1,k}\), \(a_{i_1,k}\), \(\textbf{v}_{i_1,k}\), and \(\epsilon _{i_1,k}\), for c, \(\textbf{c}\), a, \(\textbf{v}\), and \(\epsilon \), respectively, in expression (36) for \(B_{i_1,k}\) as a quadratic form. This gives the quadratic equation

where \(A = (\textbf{d}_S)^2 - \epsilon _{i_1,k}^2(\textbf{d}_S \textbf{v}_{i_1,k})^2\), \(B = 2(\textbf{x}_S - \textbf{c}_{i_1,k}) \textbf{d}_S - 2\epsilon _{i_1,k}^2[(\textbf{x}_S - \textbf{c}_{i_1,k}) \textbf{v}_{i_1,k}][ \textbf{d}_S \textbf{v}_{i_1,k}]\), and \(C = (\textbf{x}_S - \textbf{c}_{i_1,k})^2 - \epsilon _{i_1,k}^2[(\textbf{x}_S - \textbf{c}_{i_1,k}) \textbf{v}_{i_1,k}]^2 - a_{i_1,k}^2 + c_{i_1,k}^2.\) The parameter \(\alpha _k\) is chosen as the smallest positive real solution to (12).

Case 2: At least two points in S have unequal radii

The points in the active set are denoted by \(S = \{ \textbf{p}_{i_1}, \dots , \textbf{p}_{i_s} \}\), where \(r_{i_1} \ge \cdots \ge r_{i_s}\). By assumption \(r_{i_1} > r_{i_{s}}\), and by construction \(B_{i_1,i_s}\) is a bisector. Property 22 shows that \(B_S = B_{i_1,i_s} \cap _{k=2}^{s-1} H_k\), where each \(H_k = \{ \textbf{x}: \textbf{h}_k\textbf{x} = \textbf{h}_k\textbf{d}_k \}\) is a hyperplane such that \(B_{i_1,i_k} \cap B_{i_1,i_s} = B_{i_1,i_k} \cap H_k = B_{i_1,i_s} \cap H_k\), for \(k = 2 \ldots ,s-1\). Thus, \(B_S\) is a conic section of dimension \(n-s+2\).

Algorithm Intersections gives the closed-form expressions, based on Property 19, for computing the parameters and vectors of \(B_S\): \((\textbf{v}_{S}, \textbf{c}_{S}, \epsilon _{S}, a_{S}, b_{S}, c_{S}) \leftarrow \) Intersections \((S, \{r_{i_j} : \textbf{p}_{i_j} \in S \} )\). The algorithm starts with the vectors and parameters of \(B_{i_1,i_s}\), and each iteration computes the vectors and parameters of \(B_{i_1,i_s} \cap _{j=2}^{k-1} H_j \cap H_k\) given the vectors and parameters of \(B_{i_1,i_s} \cap _{j=2}^{k-1} H_j \), for \(k = 2, \ldots , s-1\).

If \(\epsilon _S >1\), then \(B_S\) is one branch of a hyperboloid; if \(\epsilon _S <1\), \(B_S\) is an ellipsoid; and if \(\epsilon _S = 1\), \(B_S\) is a paraboloid.

Case 2a: \(\epsilon _S > 1\) Then \(B_S\) is a hyperboloid. Property 14 shows that for any vector \(\textbf{u}\) orthogonal to \(\textbf{v}_S\), \(\hat{X}_S = \{ \textbf{x}(\alpha ) = \textbf{c}_S + a_S \sec (\alpha ) \textbf{v}_S + b_S \tan (\alpha ) \textbf{u} : -\pi< \alpha < \pi \}\) is one branch of a two-dimensional hyperbola with \(\hat{X}_S \subset B_S\; \cap \textrm{aff}(\textbf{c}_S, \textbf{v}_S, \textbf{u})\). Also, \(\hat{X}_S\) has the same vectors and parameters as \(B_S\), with vertex \(\textbf{x}(0) = \textbf{a}_S\).

The search path \(X_S\) is constructed by computing the vector \(\textbf{u}_S \leftarrow {\textsc {Projection}} ((\textbf{c}_S - \textbf{x}_S), \{ \textbf{v}_S \})\), so that \(\textbf{u}_S\) is orthogonal to \(\textbf{v}_S\), and by restricting the domain to \(\alpha _S \le \alpha \le \alpha _m\), where \(\alpha _S = \arctan \{ (\textbf{u}_S(\textbf{x}_S - \textbf{c}_S))/b_S\} < 0\), and \(\alpha _m = 0\). Then \(\textbf{x}_S = \textbf{x}(\alpha _S)\), and \(\textbf{x}(0) = \textbf{a}_S \in \textrm{aff}(S)\). The search path is defined by

For each \(\textbf{p}_k \in P \setminus S\), compute \(\alpha _k\), if it exists, such that \(\textbf{x}(\alpha _k) \in X_S \cap B_{i_1,k}\). If \(\textbf{p}_k\) is redundant to \(\textbf{p}_{i_1}\), eliminate \(\textbf{p}_k\) from P and continue to the next point in \(P \setminus S\). If \(\textbf{p}_{i_1}\) is redundant to \(\textbf{p}_k\), \(\alpha _k = 0\), and the algorithm continues to the next point in \(P \setminus S\). Else, \(\textbf{p}_{k}\) is not redundant to \(\textbf{p}_{i_1}\) so that \(B_{i_1,k}\) is a bisector and the parameter \(\alpha _k\) is determined so that \(\textbf{x}(\alpha ) \in X_S \in B_{i_1,k}\).

Property 20 shows that \(X_S \cap B_{i_1,k} = X_S \cap H_k\) for the hyperplane \(H_k = \{ \textbf{x}: \textbf{h}_k\textbf{x} = \textbf{h}_k\textbf{d}_k \}\), where \((\textbf{h}_k, \textbf{d}_k) \leftarrow \) Hyperplane \((\textbf{p}_{i_1}, \textbf{p}_{i_s}, \textbf{p}_k, r_{i_1}, r_{i_s}, r_{k} )\). The point \(\textbf{x}(\alpha _k) \in X_S \cap H_k\) is determined by solving for \(\alpha \) using the equation \( \textbf{h}_k\textbf{x}(\alpha ) = \textbf{h}_k\textbf{d}_k\), which is equivalent to the equation \(a_S\textbf{h}_k\textbf{v}_S\sec (\alpha ) + b_S\textbf{h}_k\textbf{u}_S\tan (\alpha ) = \textbf{h}_k(\textbf{d}_k - \textbf{c}_S)\). Multiplying through by \(\cos (\alpha )\), which is positive for \(-\pi /2< \alpha < 0\), and rearranging gives

where \(A = \textbf{h}_k(\textbf{d}_k - \textbf{c}_S)\), \(B = -b_S\textbf{h}_k\textbf{u}_S\), and \(C = a_S\textbf{h}_k\textbf{v}_S\). Define \(\phi = \sin (\alpha )\) for \(-\pi /2< \alpha < 0\). Note that \(\phi _S = \sin (\alpha _S)\). The solution \(\alpha _k\) is determined by \(\phi _k \leftarrow {\textsc {Compute}}\, \phi (\phi _S, A, B, C)\), and \(\alpha _k = \arcsin (\phi _k)\).

Case 2b: \(\epsilon _S < 1\) Then \(B_S\) is an ellipsoid. Corollary 1 shows that for any vector \(\textbf{u}\) orthogonal to \(\textbf{v}_S\), \(\hat{X}_S = \{ \textbf{x}(\alpha ) = \textbf{c}_S + a_S \cos (\alpha ) \textbf{v}_S + b_S \sin (\alpha ) \textbf{u} : 0 \le \alpha \le 2\pi \}\) is a two-dimensional ellipse with \(\hat{X}_S \subset B_S \;\cap \textrm{aff}(\textbf{c}_S, \textbf{v}_S, \textbf{u})\).

The search path \(X_S\) is constructed by computing \(\textbf{u}_S \leftarrow {\textsc {Projection}}((\textbf{c}_S - \textbf{x}_S), \{ \textbf{v}_S \} )\), so that \(\textbf{u}_S\) is orthogonal to \(\textbf{v}_S\), and by restricting the domain to \(\alpha _S \le \alpha \le \alpha _m\), where \(\alpha _S = \arcsin \{ (\textbf{u}_S(\textbf{x}_S - \textbf{c}_S))/b_S\} < 0\), and \(\alpha _m = 0\). Then \(\textbf{x}_S = \textbf{x}(\alpha _S)\), and \(\textbf{x}(\alpha _m) = \textbf{a}_S \in \textrm{aff}(S)\). The search path is defined by

The analysis for the elliptic search path is analogous to the hyperbolic search path. For each \(\textbf{p}_k \in P \setminus S\), the parameter \(\alpha _k\) is determined, if it exists, so that \(X_S\) intersects the bisector \(B_{i_1,k}\) at \(\textbf{x}(\alpha _k)\), with \(\alpha _S \le \alpha \le 0\). If \(\textbf{p}_k\) is redundant to \(\textbf{p}_{i_1}\), eliminate \(\textbf{p}_k\) from P and continue to the next point in \(P \setminus S\). If \(\textbf{p}_{i_1}\) is redundant to \(\textbf{p}_k\), \(\alpha _k = 0\), and the algorithm continues to the next point in \(P \setminus S\). Else, \(\textbf{p}_{k}\) is not redundant to \(\textbf{p}_{i_1}\) so that \(B_{i_1,k}\) is a bisector and the parameter \(\alpha _k\) is determined so that \(\textbf{x}(\alpha ) \in X_S \in B_{i_1,k}\).

Property 20 shows that \(X_S \cap B_{i_1,k} = X_S \cap H_k\) for the hyperplane \(H_k = \{ \textbf{x}: \textbf{h}_k\textbf{x} = \textbf{h}_k\textbf{d}_k \}\), where \((\textbf{h}_k, \textbf{d}_k) \leftarrow \) Hyperplane \((\textbf{p}_{i_1}, \textbf{p}_{i_s}, \textbf{p}_k, r_{i_1}, r_{i_s}, r_{k} )\). The point \(\textbf{x}(\alpha _k) \in X_S \cap H_k\) is determined by solving for \(\alpha \) using the equation \( \textbf{h}_k\textbf{x}(\alpha ) = \textbf{h}_k\textbf{d}_k\), which is equivalent to the equation

where \(A = a_S \textbf{h}_k\textbf{v}_S\), \(B = b_S \textbf{h}_k\textbf{u}_S\), and \(C = \textbf{h}_k(\textbf{d}_e - \textbf{c}_S)\). Define \(\phi = \sin (\alpha )\) for \(-\pi /2< \alpha < \pi /2\). Note that \(\phi _S = \sin (\alpha _S)\). The solution \(\alpha _k\) is determined by \(\phi _k \leftarrow {\textsc {Compute}} \phi (\phi _S, A, B, C)\), and \(\alpha _k = \arcsin (\phi _k)\).

Case 2c: \(\epsilon _S = 1\) Then \(B_S\) is a paraboloid. Property 17 shows that for any vector \(\textbf{u}\) orthogonal to \(\textbf{v}_S\), \( \hat{X}_{S} = \{ \textbf{x}(\alpha ) = \textbf{c}_{S} + \tilde{c}_{S} \alpha ^2 \textbf{v}_{S} + 2 \tilde{c}_{S}\alpha \textbf{u} : -\infty< \alpha < \infty \}\) is a two-dimensional parabola with \(\hat{X}_S \subset B_S \cap \textrm{aff}( \textbf{c}_{S}, \textbf{v}_{S}, \textbf{u})\), and \(\hat{X}_S\) has the same vectors and parameters as \(B_S\). The search path \(X_S\) is constructed by computing the vector \(\textbf{u}_S \leftarrow \) Projection\(((\textbf{c}_S - \textbf{x}_S), \{\textbf{v}_S \} )\). Also, the domain is restricted to \(\alpha _S \le \alpha \le \alpha _m\), where \(\alpha _S = -(\textbf{u}_S(\textbf{c}_S - \textbf{x}_S))/(2\tilde{c}_S) \le 0\), and \(\alpha _m = 0\). Then \(\textbf{x}(\alpha _S) = \textbf{x}_S\), \(\textbf{x}(\alpha _m) = \textbf{c}_S \in \textrm{aff}(S)\), and the search path is defined by

For each \(\textbf{p}_k \in P \setminus S\), the parameter \(\alpha _k\) is determined, if it exists, so that \(X_S\) intersects the bisector \(B_{i_1,k}\) at \(\textbf{x}(\alpha _k)\) for \(\alpha _k \le \alpha \le 0\).

Property 20 shows that \(X_S \cap B_{i_1,k} = X_S \cap H_k\) for the hyperplane \(H_k = \{ \textbf{x} : \textbf{h}_k\textbf{x} = \textbf{h}_e\textbf{d}_k \}\) where \((\textbf{h}_k, \textbf{d}_k) \leftarrow \) Hyperplane \(( \textbf{p}_{i_1}, \textbf{p}_{i_s}, \textbf{p}_{k}, r_{i_1}, r_{i_s}, r_{k} )\). The point \(\textbf{x}(\alpha _k) \in X_{S} \cap H_k\) is determined by solving the equation \(\textbf{h}_e\textbf{x}(\alpha _k) = \textbf{h}_k\textbf{d}_k\), for \(\alpha _k,\) which is equivalent to the quadratic equation

where \(A = \tilde{c}_S \textbf{h}_k\textbf{v}_S\), \(B = 2\tilde{c}_S \textbf{h}_k \textbf{u}_S\), and \(C = \textbf{h}_k(\textbf{d}_k - \textbf{c}_S)\). Then \(\alpha _k\) is chosen as the smallest real solution such that \(\alpha _S \le \alpha _k\).

Primal update phase

Let \(\alpha ^* = \min \{\alpha _m,\min _{\textbf{p}_k \in P \setminus S} \alpha _k \}\). If \(\alpha ^* = \alpha _m\), then \([\textbf{x}_S, z_S] \leftarrow [\textbf{x}(\alpha _m), z(\alpha _m)]\) and \(\textbf{x}_S \in \textrm{aff}(S)\). If \(\textbf{x}_S \in \textrm{conv}(S)\), that is, there exists a solution to equations (3) and (4) of the KKT conditions over the set S, where \(\lambda _{i_j} \ge 0\) for all \(p_{i_{j}} \in S\), then \([\textbf{x}_S, z_S]\) is the optimal solution. Else, \(\textbf{x}_S \notin \textrm{conv}(S)\), and \(\lambda _{i_l} < 0\), for some \(\textbf{p}_{i_l} \in S\). Set \(S \leftarrow S \setminus \{ \textbf{p}_{i_l} \}\). The algorithm returns to the Search Phase with \([\textbf{x}_S, z_S] \) and active set S.

If \(\alpha ^* = \min _{\textbf{p}_k \in P \setminus S} \{\alpha _k \}\), set \(\alpha _e \leftarrow \alpha ^*\), \([\textbf{x}_S, z_S] \leftarrow [\textbf{x}(\alpha _e), z(\alpha _e)]\), and \(S \leftarrow S \cup \{\textbf{p}_e\}\). In this case, \(\alpha _e\) is the smallest parameter such that the ball \([\textbf{x}_S, z_S]\) remains primal feasible and contains the ball \([\textbf{p}_e, r_e]\). If \(\textbf{x}_S \in \textrm{conv}(S)\), then \([\textbf{x}_S, z_S] \) is the optimal solution. If \(\textbf{x}_S \notin \textrm{conv}(S)\), but \(\textbf{x}_S \in \textrm{aff}(S)\), then \(\lambda _{i_l} < 0\) for some \(\textbf{p}_{i_l} \in S\) and \(S \leftarrow S \setminus \{ \textbf{p}_{i_l} \}\). The algorithm returns to the Search Phase with \([\textbf{x}_S, z_S] \) and active set S. Else, \(\textbf{x}_S \notin \textrm{aff}(S)\), then the points in S are affinely independent, and the algorithm returns to the Search Phase with \([\textbf{x}_S, z_S] \) and active set S.

There may be a tie for the entering point \(\textbf{p}_{e}\), in which case the next iteration of the Search Phase may be degenerate with zero change in the parameter \(\alpha _k\). Cycling can be avoided by an adaptation of Bland’s rule that chooses the point with the smallest index among all points that are candidates for entering. That is, \(e = \min \{ k : \alpha _k = \alpha ^*, \textbf{p}_k \in P \setminus S \}\).

Property 8

If equations (3) and (4) of the KKT conditions, over the set \(S \cup \{ \textbf{p}_e \}\), with \([\textbf{x}^*, z^*] =[ \textbf{x}_S, z_S]\) have a solution with \(\lambda _{i_l} < 0\), then the points in \(S \cup \{ \textbf{p}_e \}\setminus \{ \textbf{p}_{i_l} \}\) are affinely independent.

Proof

Each column of the linear system over the set S determined by equations (3) and (4) from the KKT conditions with \([\textbf{x}^*, z^*] =[ \textbf{x}_S, z_S]\) corresponds to a point in S. Since the points in S are affinely independent, the columns of this linear system are linearly independent. The linear system over the set \(S \cup \{ \textbf{p}_e \} \setminus \{ \textbf{p}_{i_l} \}\) has the same columns as the linear system over the set S, except that the column corresponding to \(\textbf{p}_e\) has replaced the column corresponding to \(\textbf{p}_{i_l}\). Therefore the columns of the linear system over the set \(S \cup \{ \textbf{p}_e \} \setminus \{ \textbf{p}_{i_l} \}\) are linearly independent since the column corresponding to \(\textbf{p}_e\) is a linear combination of the columns determined by the set S, with a non-zero multiplier for the column corresponding to \(\textbf{p}_{i_l}\). Therefore, the points in the set \(S \cup \{ \textbf{p}_e \} \setminus \{ \textbf{p}_{i_l} \}\) are affinely independent. \(\square \)

The next property shows that if the point \(\textbf{p}_{i_l}\) leaves S, because \(\lambda _{i_l} < 0\), then the point \(\textbf{p}_{i_l}\) is covered by the ball \([ \textbf{x}(\alpha ),z(\alpha ) ]\) during the next search phase.

Property 9

In the Update Phase, suppose the point \(\textbf{p}_{i_l}\) is chosen to leave the set S because \(\lambda _{i_l} < 0\) in the solution to equations (3) and (4) of the KKT conditions over the set \(S \cup \{ \textbf{p}_e \}\). In the Search Phase, the active set is \(S \cup \{ \textbf{p}_e \} \setminus \{\textbf{p}_{i_l} \}\), and the search path is \(X_{S \cup \{ \textbf{p}_e \} \setminus \{\textbf{p}_{i_l} \}} = \{ \textbf{x}(\alpha ): \alpha _{S \cup \{ \textbf{p}_e \} \setminus \{\textbf{p}_{i_l} \}} \le \alpha \le 0 \}\). Then the ball \([\textbf{x}(\alpha ), z(\alpha )]\) remains feasible with respect to the leaving point \(\textbf{p}_{i_l}\), that is, \([\textbf{p}_{i_l}, r_{i_l}] \subset [\textbf{x}(\alpha ), z(\alpha )]\) for \(\alpha _{S \cup \{ \textbf{p}_e \} \setminus \{\textbf{p}_{i_l} \}} \le \alpha \le 0\).

Proof

Let \(z_{i_j}(\alpha ) = \Vert \textbf{x}(\alpha ) - \textbf{p}_{i_j} \Vert +r_{i_j}\), for each \(\textbf{p}_{i_j} \in S\), and let \(z'_{i_j}(\alpha ) = \frac{(\textbf{x}(\alpha ) - \textbf{p}_{i_j} )}{\Vert \textbf{x}(\alpha ) - \textbf{p}_{i_j} \Vert } \textbf{x}'(\alpha )\), where \(\textbf{x}'(\alpha )\) is the normalized tangent vector to the search path at \(\textbf{x}(\alpha )\). By construction of the search path, with \(\alpha _{S \cup \{ \textbf{p}_e \} \setminus \{\textbf{p}_{i_l} \}} \le \alpha \le 0\), \(z_{i_1}(\alpha ) = z_{i_j}(\alpha )\), for each \(\textbf{p}_{i_j} \in S \setminus \{\textbf{p}_{i_l} \}\), which implies the derivatives are equal, that is, \(z'_{i_1}(\alpha ) =z'_{i_j}(\alpha )\), for each \(\textbf{p}_{i_j} \in S \cup \{ \textbf{p}_e \} \setminus \{\textbf{p}_{i_l} \}\) and for \(\alpha _{S \cup \{ \textbf{p}_e \} \setminus \{\textbf{p}_{i_l} \}} \le \alpha \le 0\). Property 24 shows that \(z_{i_j}(\alpha )\) is decreasing along the search path, that is, \( z'_{i_j}(\alpha ) < 0,\) for each \(\textbf{p}_{i_j} \in S \setminus \{\textbf{p}_{i_l} \}\), and for \(\alpha _{S \cup \{ \textbf{p}_e \} \setminus \{\textbf{p}_{i_l} \}} \le \alpha \le 0\).

In equation (4) substitute \(\textbf{x}(\alpha )\) for \(\textbf{x}_S\), and take the dot product of each summand with the tangent vector \(\textbf{x}'(\alpha )\), so that \(\sum _{\textbf{p}_{i_j} \in S \setminus \{\textbf{p}_{i_l} \}} \frac{ (\textbf{x}(\alpha ) - \textbf{p}_{i_j} )}{ \Vert \textbf{x}(\alpha ) - \textbf{p}_{i_j} \Vert }\textbf{x}'(\alpha ) \lambda _{i_j} + \frac{ (\textbf{x}(\alpha ) - \textbf{p}_{i_l} )}{ \Vert \textbf{x}(\alpha ) - \textbf{p}_{i_l} \Vert }\textbf{x}'(\alpha )(\lambda _{i_l} ) = \sum _{\textbf{p}_{i_j} \in S \setminus \{\textbf{p}_{i_l} \}} z'_{i_j}(\alpha ) \lambda _{i_j} + z'_{i_l}(\alpha ) \lambda _{i_l} = 0.\) Substitute \(z'_{i_1}(\alpha ) =z'_{i_j}(\alpha )\), for each \(\textbf{p}_{i_j} \in S \setminus \{\textbf{p}_{i_l} \}\), and apply equation (3), to get \(z'_{i_1}(\alpha ) (1-\lambda _{i_l}) + z_{i_l}'(\alpha )( \lambda _{i_l} ) = z'_{i_1}(\alpha ) + (z'_{i_l}(\alpha ) - z'_{i_1}(\alpha ))\lambda _{i_l} = 0\), or \(z'_{i_1}(\alpha ) = - \lambda _{i_l} (z'_{i_l}(\alpha ) - z'_{i_1}(\alpha ))\). Since \(z'_{i_1}(\alpha ) < 0\) and \(\lambda _{i_l} < 0\), then \(z'_{i_l}(\alpha )< z'_{i_1}(\alpha ) < 0\) so that \(z_{i_l}(\alpha )\) is decreasing and decreasing at a faster rate than \(z_{i_1}(\alpha )\). This shows that \([\textbf{p}_{i_l}, r_{i_l}] \subset [\textbf{x}(\alpha ), z(\alpha )]\) for \(\alpha _{S \cup \{ \textbf{p}_e \} \setminus \{\textbf{p}_{i_l} \}} \le \alpha \le 0\). \(\square \)

If there is more than one component \(\lambda _{i_j} < 0\) in the solution to (3) and (4), an adaptation of Bland’s rule is to choose the point to be deleted from S with the smallest index \(i_j\) such that \(\lambda _{i_j} < 0\), that is, \(i_l = \min \{ i_j: \lambda _{i_j} < 0 \}\).

Property 10

The primal algorithm solves M(P) in a finite number of iterations.

Proof

At each iteration of the search phase there is a primal feasible, but not dual feasible, ball \([\textbf{x}_S, z_S]\), and an active set S, such that \(\textbf{x}_S \notin \textrm{aff}(S)\). If the search phase yields \(\alpha ^* = \alpha _m > \alpha _{S}\), then \(z(\alpha ^*) < z(\alpha _{S})\) by Property 24, and \(\textbf{x}(\alpha ^*) \in \textrm{aff}(S)\).

If the search phase yields \(\alpha ^* = \alpha _k > \alpha _{S}\), then \(z(\alpha ^*) < z(\alpha _{S})\), and \(S \leftarrow S \cup \{\textbf{p}_{k} \} \).

The search phase yields \(\alpha ^* = \alpha _k = \alpha _{S}\) if and only if \(\textbf{p}_k\) is active for the ball \([\textbf{x}_S, z_S]\). If \(\textbf{x}(\alpha ^*) \in \textrm{conv}(S \cup \{ \textbf{p}_k \})\), then \([\textbf{x}(\alpha ^*), z(\alpha ^*)]\) is optimal. Else, if \(\textbf{x}(\alpha ^*) \in \textrm{aff}(S \cup \{ \textbf{p}_k \})\), some point \(\textbf{p}_{i_l}\) leaves S, and a new search is initiated with \([\textbf{x}_S, z_S] = [\textbf{x}(\alpha ^*), z(\alpha ^*)]\) and \(S \setminus \{\textbf{p}_{i_l} \} \cup \{\textbf{p}_{k} \}\).

To prohibit an active set from being repeated in the sequence of degenerate steps, a list of points that have been eliminated from active sets occurring in the sequence of degenerate steps is generated, and these points are prohibited from consideration as entering points. After a finite number of degenerate iterations, either \(\alpha ^* = \alpha _m > \alpha _{S}\) or \(\alpha ^* = \alpha _k > \alpha _{S}\) and \(z(\alpha ^*)\) decreases. Since there are a finite number of active sets, and \(z(\alpha )\) is bounded below, the algorithm finds a minimum ball \([\textbf{x}_S, z_S]\) in a finite number of iterations. \(\square \)

5 Dual algorithm

At each iteration of the dual algorithm there is a ball \([ \textbf{x}_S, z_S ]\) and an active set S that satisfy expressions (3), (4), (5), and (6) of the KKT conditions, but do not satisfy expressions (2) of the KKT condition. That is, S and \([ \textbf{x}_S, z_S ]\) are dual feasible but not primal feasible.

Since \([\textbf{x}_S, z_S ]\) is a feasible covering of the balls \([ \textbf{p}_i, r_i ]\) for \(\textbf{p}_i \in S\), then \([\textbf{x}_S, z_S ]\) is an optimal solution to the problem M(S), a relaxation of M(P). Therefore, \(z_S\) is a lower bound on the optimal objective function value for M(P). Assuming non-degeneracy, the radius \(z_S\) is shown to increase at each iteration of the dual algorithm.

The dual algorithm may be initialized by choosing the ball \([\textbf{x}_S, z_S ] = [\textbf{p}_j, r_j ]\) for any point \(\textbf{p}_j \in P\), and \(S = \{ \textbf{p}_{j} \}\) as an active set. Then \([\textbf{x}_S, z_S ]\) and S are dual feasible.

Dual update phase

A dual feasible ball \([\textbf{x}_S, z_S ]\) and active set S are optimal to M(P) if they are primal feasible to M(P), that is, if \(z_S \ge \Vert \textbf{p}_i-\textbf{x}_S \Vert + r_i\), for all \(\textbf{p}_i \in P\). If not optimal, then an infeasible point \(\textbf{p}_{e} \in P \setminus S\) is chosen to enter.

If the points in \(S \cup \{ \textbf{p}_e \}\) are affinely independent, then \(|S \cup \{\textbf{p}_e \}| \le n+1\), and \(\textbf{x}_S \in \textrm{conv}(S \cup \{ \textbf{p}_e\})\), so that \([\textbf{x}_S, z_S]\) remains dual feasible with respect to \(S \cup \{\textbf{p}_e \}\). The algorithm enters the Search Phase with the ball \([\textbf{x}_S, z_S ]\), active set S, and the entering point \(\textbf{p}_e\). The Search Phase searches for a new solution for which the set \(S \cup \{\textbf{p}_e \}\) is active.

If the points in \(S \cup \{ \textbf{p}_e \}\) are affinely dependent, then a point \(\textbf{p}_{l} \in S\) is chosen to leave S. Since \([\textbf{x}_S, z_S ]\) is dual feasible to M(P), there exists a non-negative solution \((\pi _{1},\ldots ,\pi _{s})\) to the linear system (7), (8), over the set S. Since the points in \(S \cup \{\textbf{p}_{e}\}\) are affinely dependent, the linear system (19) and (20) has a solution, and (19) implies \(\lambda _{j}<0\), for some \(\textbf{p}_j \in S\).

A leaving point \(\textbf{p}_l\) is chosen by the “minimum ratio rule”:

The next property states that the points in \(S \cup \{\textbf{p}_{e}\} \setminus \{\textbf{p}_{l}\}\) are affinely independent and that \(\textbf{x}_S\) remains dual feasible for the set \(S \cup \{\textbf{p}_{e}\} \setminus \{\textbf{p}_{l}\}\). The proof is given in Dearing and Thipwiwatpotjana (2006).

Property 11

Suppose that \([\textbf{x}_S, z_S]\), and active set S, are dual feasible, but not optimal, to M(P). Suppose that \(\textbf{p}_{e}\) is the point chosen to enter the set S and that the set \(S \cup \{\textbf{p}_{e} \}\) is affinely dependent. If the leaving point \(\textbf{p}_{l}\) is chosen by (21), then the points in \(S \cup \{\textbf{p}_{e}\} \setminus \{\textbf{p}_{l}\}\) are affinely independent, and \(\textbf{x}_S \in \textrm{conv}(S \cup \{\textbf{p}_{e}\} \setminus \{\textbf{p}_{l}\})\).

If the point \(\textbf{p}_l\) leaves S, then the set S is reset to \(S \leftarrow S \setminus \{ \textbf{p}_{l} \}\). Thus \(|S \cup \{\textbf{p}_e \}| \le n+1\), and \(\textbf{x}_S \in \textrm{conv}(S \cup \{ \textbf{p}_e\})\), so that \([\textbf{x}_S, z_S]\) remains dual feasible with respect to \(S \cup \{\textbf{p}_e \}\). The updated set S remains active for \([\textbf{x}_S, z_S ]\). The algorithm enters the Search Phase with the set S, the ball \([\textbf{x}_S, z_S ]\), and the entering point \(\textbf{p}_e\). The Search Phase searches for a new solution for which the set \(S \cup \{\textbf{p}_e \}\) will be active.

Dual search phase

Given a dual feasible ball \([ \textbf{x}_S, z_S ]\), an active set S, and an entering point \(\textbf{p}_e\) as determined in the Update Phase, a search path \(X_{S} = \{ \textbf{x}(\alpha ): \alpha \ge \alpha _S \}\) is constructed so that \(\textbf{x}(\alpha _S) = \textbf{x}_S\) and \(X_S \subset B_S\). If all the points in S have equal radii, the search path will be a ray, but if some points in S have unequal radii, the search path will be a two-dimensional conic section in \(I\!\!R^n\).

For a search path \(X_S\) that is either a ray or a conic section, Property 23 shows that S is an active set for the ball \([\textbf{x}(\alpha ), z(\alpha ) ]\), and that S and \([\textbf{x}(\alpha ), z(\alpha ) ]\) satisfy the complementary slackness conditions (6) for \(\alpha \ge \alpha _S\). Property 24 shows that \(z(\alpha )\) is increasing for \(\alpha \ge \alpha _S\).

The parameter \(\alpha _e \ge \alpha _S\) is determined, if it exists, so that \(X_{S}\) intersects the bisector \(B_{i_1,e}\) at \(\textbf{x}(\alpha _e)\). If \(\textbf{x}(\alpha _e) \in X_{S} \cap B_{i_1,e}\), then \(\textbf{x}(\alpha _e) \in X_{S} \cap B_{i_j,e}\) for each \(\textbf{p}_{i_j} \in S\). Thus it suffices to consider the intersection of \(X_{S}\) with only \(B_{i_1,e}\).

Geometrically, the search moves the center \(\textbf{x}(\alpha )\) of the ball \([\textbf{x}(\alpha ), z(\alpha )]\) along the path \(X_{S}\), while the radius \(z(\alpha )\) increases. At the point \(\textbf{x}(\alpha _e) \in X_{S} \cap B_{i_1,e}\), the constraint corresponding to \(\textbf{p}_e\) is active. If \(\textbf{x}(\alpha _e) \in \textrm{conv}(S \cup \{ \textbf{p}_e \})\), then the ball \([\textbf{x}(\alpha _e) , z(\alpha _e)]\) and S are checked for optimality. Otherwise, the parameter \(\alpha ^*\) is determined so that \(\textbf{x}(\alpha ^*) \in \textrm{conv}(S \cup \{ \textbf{p}_e \})\). In this case some point \(\textbf{p}_{i_l} \in S\) is deleted from S, and the Update Phase is entered with the ball \([\textbf{x}(\alpha ^*) , z(\alpha ^*)]\), the set \(S \setminus \{ \textbf{p}_{i_l} \}\), and entering point \(\textbf{p}_e\).

Case 1: All points in S have equal radii

The points in the active set are denoted by \(S = \{ \textbf{p}_{i_1}, \ldots , \textbf{p}_{i_{s}} \}\), where \(r_{i_j} = r_{i_1}\), for \(\textbf{p}_{i_j} \in S\). In this case, the search path is the ray

where \(\alpha _S = 0\) and \(\textbf{d}_S \leftarrow \) Projection\(((\textbf{p}_{e} - \textbf{x}_S), R)\) with \(R = \{ (\textbf{p}_{i_1} - \textbf{p}_{i_2}), \ldots , (\textbf{p}_{i_1} - \textbf{p}_{i_s}) \}\). If \(s = 1\), with \(S = \{ \textbf{p}_{i_1} \}\), \(\textbf{d}_{S} = (\textbf{p}_{e} - \textbf{p}_{i_1})/\Vert \textbf{p}_{e} - \textbf{p}_{i_1}\Vert \).

There are two sub-cases to consider for computing \(\alpha _e\) depending on whether the radius \(r_{i_1}\) equals the radius \(r_e\).

Case 1a: \(r_{i_1} = r_e\) Then \(B_{i_1,e}\) is a hyperplane. The intersection of \(X_S\) and \(B_{i_1,e}\) is determined by solving for \(\alpha \) using the equation \((\textbf{p}_{i_1} - \textbf{p}_e)\textbf{x}(\alpha ) = (\textbf{p}_{i_1} - \textbf{p}_e)(\textbf{p}_{i_1} + \textbf{p}_e)/2\). If \((\textbf{p}_{i_1} - \textbf{p}_{e}) \textbf{d}_S = 0\), \(\alpha _e \leftarrow \infty \). Otherwise,

If \(\alpha _e < 0\), \(\alpha _e \leftarrow \infty \).

Case 1b: \(r_{i_1} \ne r_e\) Since \(z_S = \Vert \textbf{p}_{i_j} - \textbf{x}_S \Vert + r_j\) for each \(\textbf{p}_{i_j} \in S\), and \(z_S < \Vert \textbf{p}_e-\textbf{x}_S \Vert + r_e\), then \(\textbf{p}_e\) is non-redundant to each \(\textbf{p}_{i_j} \in S\), and \(B_{i_1,e}\) is a bisector. The intersection of \(X_S\) and \(B_{i_1,e}\) is determined by substituting \(\textbf{x}(\alpha ) = \textbf{x}_S + \alpha \textbf{d}_S\) for \(\textbf{x}\), and \(c_{i_1,e}\), \(\textbf{c}_{i_1,e}\), \(a_{i_1,e}\), \(\textbf{v}_{i_1,e}\), and \(\epsilon _{i_1,e}\) for c, \(\textbf{c}\), a, \(\textbf{v}\), and \(\epsilon \), respectively, in the quadratic expression (36) for \(B_{i_1,e}\). This gives the quadratic equation

where \(A = (\textbf{d}_S)^2 - \epsilon _{i_1,e}^2(\textbf{d}_S \textbf{v}_{i_1,e})^2,\) \(B = 2(\textbf{x}_S - \textbf{c}_{i_1,e}) \textbf{d}_S - 2\epsilon _{i_1,e}^2 [(\textbf{x}_S - \textbf{c}_{i_1,e}) \textbf{v}_{i_1,e} ] [\textbf{d}_S \textbf{v}_{i_1,e}] ,\) and \(C = (\textbf{x}_S - \textbf{c}_{i_1,e})^2 - \epsilon _{i_1,e}^2[(\textbf{x}_S - \textbf{c}_{i_1,e}) \textbf{v}_{i_1,e}]^2 - a_{i_1,e}^2 + c_{i_1,e}^2\). The parameter \(\alpha _e\) is chosen as the smallest positive real solution \(\alpha \) to (24).

The following procedure, implemented by Algorithm ConvexHull, is used to determine whether \(\textbf{x}(\alpha _e) \in \textrm{conv}(S \cup \{ \textbf{p}_e \})\). Substitute \(\textbf{x}(\alpha ) = \textbf{x}_{S} +\alpha \textbf{d}_{S}\) for \(\textbf{x}_S\) in equation (8), expand, and simplify to obtain the linear system

where T is defined in Step 2 of Algorithm ConvexHull, with \(\textbf{w}_S = \textbf{x}_S\), and the right-hand side of (25) is defined by \(\tilde{\textbf{v}}_S = \textbf{d}_S\) and \(\tilde{\textbf{u}}_S = \textbf{0}\). The matrix T is \((n+1)\)-by-\((s+1)\) with rank \(s+1\). The linear system (25) has a solution since \(\textbf{x}(\alpha ) \in \textrm{aff}(S \cup \textbf{p}_e)\) for \(\alpha \ge 0\). To solve (25) for some value of \(\alpha \), solve \(T \varvec{\gamma } = \textbf{e}_1\) for \(\varvec{\gamma }\), and solve \(T \varvec{\nu } = [0, \tilde{\textbf{v}}^{T}_{S}]^{T}\) for \(\varvec{\nu }\). Then \( \varvec{\pi }(\alpha ) = \varvec{\gamma } - \alpha \varvec{\nu }\).

If \(\varvec{\pi }(\alpha _e) \ge 0\), then \(\textbf{x}(\alpha _e) \in \textrm{conv}(S \cup \{ \textbf{p}_e \})\). If some component of \(\varvec{\pi }(\alpha _e)\) is negative, then \(\textbf{x}(\alpha _e) \notin \textrm{conv}(S \cup \{ \textbf{p}_e \})\). In this case, a new parameter \(\alpha _{i_l}\) is determined so that \(\alpha _S = 0 \le \alpha _{i_l} < \alpha _e\), and \(\textbf{x}(\alpha _{i_l}) \in \textrm{conv}(S \cup \{ \textbf{p}_e \})\). Since the \(s+1\) points in \(S \cup \{ \textbf{p}_e\}\) are affinely independent, \(\textrm{conv}(S \cup \{ \textbf{p}_e\})\) is a simplex with \(s+1\) vertices and \(s+1\) facets. The \(s+1\) vertices are the points in \(S \cup \{ \textbf{p}_e\}\). The \(s+1\) facets are denoted by \(F_{i_j} = \textrm{conv}(S \cup \{ \textbf{p}_e\} \setminus \{\textbf{p}_{i_j} \})\), for each \(\textbf{p}_{i_j} \in S\), and by \(F_e = \textrm{conv}(S)\), for \(\textbf{p}_e\). Each point \(\textbf{p}_{i_j} \in S\) corresponds to the component \(\pi _{i_j}(\alpha )\) of \(\varvec{\pi }(\alpha )\) and to the facet \(F_{i_j}\). The point \(\textbf{p}_e\) corresponds to the component \(\pi _e(\alpha )\) of \(\varvec{\pi }(\alpha )\) and to the facet \(F_e\).

Since \(\textbf{x}(0) \in \textrm{conv}(S \cup \{ \textbf{p}_e \})\), and \(\textbf{x}(\alpha _e) \notin \textrm{conv}(S \cup \{ \textbf{p}_e \})\), \(X_{S}\) must intersect some facet of \(\textrm{conv}(S \cup \{ \textbf{p}_e \})\) between \(\textbf{x}(0)\) and \(\textbf{x}(\alpha _e)\). For each \(\textbf{p}_{i_j} \in S\), the parameter \(\alpha _{i_j} \ge 0\) is computed, if it exists, so that \(\textbf{x}(\alpha _{i_j}) \in X_S \cap F_{i_j}\), which is equivalent to \(\pi _{i_j}(\alpha _{i_j}) = \gamma _{i_j} - \alpha _{i_j}\delta _{i_j} = 0\). That is, for each \(\textbf{p}_{i_j} \in S\), with \(\delta _{i_j} > 0\), set \( \alpha _{i_j} =\gamma _{i_j} /\delta _{i_j}\). If \(\delta _{i_j} \le 0\), \(\alpha _{i_j} \leftarrow \infty \). For the point \(\textbf{p}_e\), \(\gamma _e = 0\), implies \(\alpha _e = 0\), so \(\alpha _e \leftarrow \infty \). Cavaleiro and Alizadeh (2018) present an equivalent procedure for finding \(\alpha _{i_l}\) in their approach to the minimum covering ball problem of a set of points.

The intersection of \(X_S\) and a facet of \(\textrm{conv}(S \cup \{ \textbf{p}_e \})\) first encountered along \(X_S\) occurs at \(\alpha _{i_l}\), where \( \alpha _{i_l} = \min _{\textbf{p}_{i_j} \in S } \{ \alpha _{i_j} \}\). The point \(\textbf{p}_{i_l} \in S\) is chosen to leave S. Then the solution to the linear system (25) with \(\textbf{x}(\alpha _{i_l})\) substituted for \(\textbf{x}_S\), yields \(\pi _{i_l} (\alpha _{i_l})= 0\), and \(\pi _{i_j}(\alpha _{i_l}) \ge 0\), for \(\textbf{p}_{i_j} \in S \cup \{ \textbf{p}_e \} \setminus \{ \textbf{p}_{i_l} \}\), so that \(\textbf{x}(\alpha _{i_l}) \in F_{i_l}\). This construction shows that \(S \setminus \{ \textbf{p}_{i_l} \}\) is an active set for \([\textbf{x}(\alpha _{i_l}), z(\alpha _{i_l})]\) and that \([\textbf{x}(\alpha _{i_l}), z(\alpha _{i_l})]\) is dual feasible with respect to \(S \cup \{ \textbf{p}_e \} \setminus \{ \textbf{p}_{i_l} \}\). However, the constraint corresponding to \(\textbf{p}_e\) is not active at \([\textbf{x}(\alpha _{i_l}), z(\alpha _{i_l})]\), and \(\textbf{p}_e\) is not added to S.

Compute \((\text{ FLAG }, i_l, \alpha _{i_l}) \leftarrow \) ConvexHull\((\epsilon _{S}, \textbf{x}_S, \textbf{d}_S, \textbf{0}, 0, 0, \alpha _e, 0)\), and if FLAG = TRUE, then \(\textbf{x}(\alpha _e) \in \textrm{conv}(S \cup \{ \textbf{p}_e \})\). In this case, \(S \leftarrow S \cup \{\textbf{p}_e\}\), and \([\textbf{x}_S, z_S ] \leftarrow [\textbf{x}(\alpha _e),z(\alpha _e)]\) are checked for optimality by the Update Phase. If FLAG = FALSE, delete the point \(\textbf{p}_{i_l}\) from S. Reset \(S \leftarrow S \setminus \{ \textbf{p}_{i_l} \}\), and \([\textbf{x}_S, z_S] \leftarrow [ \textbf{x}(\alpha _{i_l}), z(\alpha _{i_l})]\). The Search Phase is re-entered with the active set S, the entering point \(\textbf{p}_e\), and the ball \([\textbf{x}_S, z_{S}]\).

Case 2: At least two points in S have unequal radii

The points in the active set are denoted by \(S = \{ \textbf{p}_{i_1}, \dots , \textbf{p}_{i_{s}} \}\), where \(r_{i_1} \ge \cdots \ge r_{i_s}\). By assumption \(r_{i_1} > r_{i_s}\), and by construction \(B_{i_1,i_s}\) is a bisector. The vectors and parameters of \(B_S = B_{i_1,i_s} \cap _{k=2}^{s-1} H_k\) are computed using \( \left( \textbf{v}_{S}, \textbf{c}_{S}, \epsilon _{S}, a_{S}, b_S, c_{S} \right) \leftarrow \) Intersections \((S, \{ r_{i_j} : \textbf{p}_{i_j} \in S\} )\). If \(\epsilon _S > 1\), then \(B_S\) is one branch of a hyperboloid. If \(\epsilon _S <1\), then \(B_S\) is an ellipsoid. If \(\epsilon _S = 1\), then \(B_S\) is a paraboloid.

Case 2a: \(\epsilon _S > 1\) Then \(B_S\) is a hyperboloid. Property 14 shows that for any vector \(\textbf{u}\) orthogonal to \(\textbf{v}_S\), \( \hat{X}_{S} = \{ \textbf{x}(\alpha ) = \textbf{c}_{S} + a_{S} \sec (\alpha ) \textbf{v}_{S} + b_{S} \tan (\alpha ) \textbf{u} : -\pi< \alpha < \pi \} \) is one branch of a two-dimensional hyperbola with \(\hat{X}_S \subset B_S \cap \textrm{aff}(\textbf{c}_{S}, \textbf{v}_{S}, \textbf{u})\). Also, \(\hat{X}_S\) has the same vectors and parameters as \(B_S\), with vertex \(\textbf{x}(0) = \textbf{a}_{S}\).

The search path \(X_S\) is constructed by computing the vector \(\textbf{u}_{S} \leftarrow \) Projection\(( (\textbf{p}_{e} - \textbf{c}_{S}), R)\) where \(R = \{ (\textbf{p}_{i_1} - \textbf{p}_{i_2}), \ldots , (\textbf{p}_{i_1} - \textbf{p}_{i_s}) \}\), and by restricting the domain to \(\{\alpha _S \le \alpha \}\), where \(\alpha _S = \arctan \{(\textbf{u}_S(\textbf{x}_S - \textbf{c}_S))/b_S\}\). If \(\textbf{x}_S \in \textrm{aff}(S)\), then \(\alpha _S = 0\), else \(\alpha _S > 0\). Then

The parameter \(\alpha _e\) is determined, if it exists, so that \(\textbf{x}(\alpha _e) \in B_{i_1,e}\) and \(\alpha _S \le \alpha _e\). Property 20 shows that \(X_S \cap B_{i_1,e} = X_S \cap H_e\) for the hyperplane \(H_e = \{ \textbf{x}: \textbf{h}_e\textbf{x} = \textbf{h}_e\textbf{d}_e \}\), where \((\textbf{h}_e, \textbf{d}_e) \leftarrow \) Hyperplane \((\textbf{p}_{i_1}, \textbf{p}_{i_s}, \textbf{p}_e, r_{i_1}, r_{i_s}, r_{e} )\). The point \(\textbf{x}(\alpha _e) \in X_S \cap H_e\) is determined by solving for \(\alpha \) using the equation \( \textbf{h}_e\textbf{x}(\alpha ) = \textbf{h}_e\textbf{d}_e\), which is equivalent to the equation \(a_S\textbf{h}_e\textbf{v}_S\sec (\alpha ) + b_S\textbf{h}_e\textbf{u}_S\tan (\alpha ) = \textbf{h}_e(\textbf{d}_e - \textbf{c}_S)\). Multiplying through by \(\cos (\alpha )\), which is positive for \(-\pi /2< \alpha < \pi /2\), and rearranging gives

where \(A = \textbf{h}_e(\textbf{d}_e - \textbf{c}_S)\), \(B = -b_S\textbf{h}_e\textbf{u}_S\), and \(C = a_S\textbf{h}_e\textbf{v}_S\). Let \(\phi = \sin (\alpha )\) for \(-\pi /2< \alpha < \pi /2\). Note that \(\phi _S = \sin (\alpha _S)\). The solution \(\alpha _e\) is determined by \(\phi _e \leftarrow {\textsc {Compute}} \phi (\phi _S, A, B, C).\) and \(\alpha _e = \arcsin (\phi _e)\).

Compute \((\text{ FLAG }, i_l, \alpha _{i_l}) \leftarrow \) ConvexHull\((\epsilon _{S}, \textbf{c}_S, \textbf{v}_S, \textbf{u}_S, a_S, b_S, \alpha _e, \phi _S)\). If FLAG = TRUE, \(\textbf{x}(\alpha _e) \in \textrm{conv}(S \cup \{ \textbf{p}_e \})\). In this case, \(S \leftarrow S \cup \{\textbf{p}_e\}\), and \([\textbf{x}_S, z_S ] \leftarrow [\textbf{x}(\alpha _e),z(\alpha _e)]\) are checked for optimality by the Update Phase. If FLAG = FALSE, delete the point \(\textbf{p}_{i_l}\) from S. Reset \(S \leftarrow S \setminus \{ \textbf{p}_{i_l} \}\), and \([\textbf{x}_S, z_S] \leftarrow [ \textbf{x}(\alpha _{i_l}), z(\alpha _{i_l})]\). The set S is active with respect to the ball \([\textbf{x}_S, z_{S}]\), and \([\textbf{x}_S, z_{S}]\) is dual feasible with respect to \(S \cup \{ \textbf{p}_e \} \). The Search Phase is re-entered with the active set S, the entering point \(\textbf{p}_e\), and the ball \([\textbf{x}_S, z_{S}]\).

Case 2b: \(\epsilon _{S} < 1\) Then \(B_S\) is an ellipsoid. Corollary 1 shows that for any vector \(\textbf{u}\) orthogonal to \(\textbf{v}_S\), \(\hat{X}_{S} = \{ \textbf{x}(\alpha ) = \textbf{c}_{S} + a_{S} \cos (\alpha ) \textbf{v}_{S} + b_{S} \sin (\alpha ) \textbf{u} : -\pi \le \alpha \le \pi \}\) is a two-dimensional ellipse with \(\hat{X}_S \subset B_S \cap \textrm{aff}(\textbf{c}_{S}, \textbf{v}_{S}, \textbf{u})\), and with the same vectors and parameters as \(B_S\).

The search path \(X_S\) is constructed by computing \(\textbf{u}_{S} \leftarrow {\textsc {Projection}} ( (\textbf{p}_{e} - \textbf{c}_{S}), R)\), where \(R = \{ (\textbf{p}_{i_1} - \textbf{p}_{i_2}), \ldots , (\textbf{p}_{i_1} - \textbf{p}_{i_s}) \}\), and by restricting the domain to \(\{\alpha _S \le \alpha \le \pi \}\), where \(\alpha _S = \arcsin \{(\textbf{u}_S(\textbf{x}_S - \textbf{c}_S))/b_S\}\). If \(\textbf{x}_S \in \textrm{aff}(S)\), then \(\alpha _S = 0\), else \(\alpha _S > 0\). Then the search path \(X_S\) is defined by

The parameter \(\alpha _e\) is determined, if it exists, so that \(\textbf{x}(\alpha _e) \in B_{i_1,e}\) and \(\alpha _S \le \alpha _e\). Property 20 shows that \(X_S \cap B_{i_1,e} = X_S \cap H_e\) for the hyperplane \(H_e = \{ \textbf{x}: \textbf{h}_e\textbf{x} = \textbf{h}_e\textbf{d}_e \}\), where \((\textbf{h}_e, \textbf{d}_e) \leftarrow \) Hyperplane \((\textbf{p}_{i_1}, \textbf{p}_{i_s}, \textbf{p}_e, r_{i_1}, r_{i_s}, r_{e} )\). The point \(\textbf{x}(\alpha _e) \in X_S \cap H_e\) is determined by solving for \(\alpha \) using the equation \( \textbf{h}_e\textbf{x}(\alpha ) = \textbf{h}_e\textbf{d}_e\), which is equivalent to the equation

where \(A = a_S \textbf{h}_e\textbf{v}_S\), \(B = b_S \textbf{h}_e \textbf{u}_S\), and \(C = \textbf{h}_e (\textbf{d}_e - \textbf{c}_S)\). The solution \(\alpha _e\) is determined by \(\phi _e \leftarrow {\textsc {Compute}}\phi (\phi _S, A, B, C)\), and \(\alpha _e = \arcsin (\phi _e)\).

Compute \((\text{ FLAG }, i_l, \alpha _{i_l}) \leftarrow \) ConvexHull\((\epsilon _{S}, \textbf{c}_S, \textbf{v}_S, \textbf{u}_S, a_S, b_S, \alpha _e, \phi _S)\). If FLAG = TRUE, \(\textbf{x}(\alpha _e) \in \textrm{conv}(S \cup \{ \textbf{p}_e \})\). In this case, \(S \leftarrow S \cup \{\textbf{p}_e\}\), and \([\textbf{x}_S, z_S ] \leftarrow [\textbf{x}(\alpha _e),z(\alpha _e)]\) are checked for optimality by the Update Phase. If FLAG = FALSE, delete the point \(\textbf{p}_{i_l}\) from S. Reset \(S \leftarrow S \setminus \{ \textbf{p}_{i_l} \}\), and \([\textbf{x}_S, z_S] \leftarrow [ \textbf{x}(\alpha _{i_l}), z(\alpha _{i_l})]\). The set S is active with respect to the ball \([\textbf{x}_S, z_{S}]\), and \([\textbf{x}_S, z_{S}]\) is dual feasible with respect to \(S \cup \{ \textbf{p}_e \} \). The Search Phase is re-entered with the active set S, the entering point \(\textbf{p}_e\), and the ball \([\textbf{x}_S, z_{S}]\).

Case 2c: \(\epsilon _S = 1\) Then \(B_S\) is a paraboloid. Property 17 shows that for any vector \(\textbf{u}\) orthogonal to \(\textbf{v}_S\), \( \hat{X}_{S} = \{ \textbf{x}(\alpha ) = \textbf{c}_{S} + \tilde{c}_{S} \alpha ^2 \textbf{v}_{S} + 2 \tilde{c}_{S}\alpha \textbf{u}: -\infty< \alpha < \infty \}\) is a two-dimensional parabola with \(\hat{X}_S \subset B_S \cap \textrm{aff}( \textbf{c}_{S}, \textbf{v}_{S}, \textbf{u})\), and \(\hat{X}_S\) has the same vectors and parameters as \(B_S\). The search path \(X_S\) is constructed by computing the vector \(\textbf{u}_S \leftarrow \) Projection\(((\textbf{p}_e - \textbf{c}_S), R )\), where \(R = \{ (\textbf{p}_{i_1} - \textbf{p}_{i_2}), \ldots , (\textbf{p}_{i_1} - \textbf{p}_{i_s}) \}\). Also, the domain is restricted to \(\alpha _S \le \alpha \), where \(\alpha _S = 0\) if \(\textbf{x}_S = \textbf{c}_S\); otherwise \(\alpha _S = (\textbf{u}_S(\textbf{x}_S - \textbf{c}_S))/(2\tilde{c}_S) \ge 0\). Then the search path is defined by

The parameter \(\alpha _e \ge \alpha _S\) is determined, if it exists, so that \(\textbf{x}(\alpha _e) \in X_S \cap B_{i_1,e}\). Since \(\textbf{p}_e\) is infeasible, \(\textbf{p}_e\) is non-redundant to \(\textbf{p}_{i_1}\) so that \(B_{i_1,e}\) is a bisector. If \(\textbf{x}(\alpha _e) \in X_S \cap B_{i_1,e}\), then \(\textbf{x}(\alpha _e) \in X_S \cap B_{i_j,e}\), for all \(\textbf{p}_{i_j} \in S\). Thus, it suffices to determine the intersection of \(X_S\) with only \(B_{i_1,e}\).

Property 20 shows that \(X_S \cap B_{i_1,e} = X_S \cap H_e\) for the hyperplane \(H_e = \{ \textbf{x} : \textbf{h}_e\textbf{x} = \textbf{h}_e\textbf{d}_e \}\), where \((\textbf{h}_e, \textbf{d}_e) \leftarrow \) Hyperplane \(( \textbf{p}_{i_1}, \textbf{p}_{i_s}, \textbf{p}_{e}, r_{i_1}, r_{i_s}, r_{e} )\). The point \(\textbf{x}(\alpha _e) \in X_{S} \cap H_e\) is determined by solving the equation \(\textbf{h}_e\textbf{x}(\alpha ) = \textbf{h}_e\textbf{d}_e\), for \(\alpha \), which gives the quadratic equation

where \(A = \tilde{c}_S \textbf{h}_e\textbf{v}_S\), \(B = 2\tilde{c}_S \textbf{h}_e \textbf{u}_S\), and \(C = \textbf{h}_e(\textbf{d}_e - \textbf{c}_S)\). Then \(\alpha _e\) is chosen as the smallest real solution such that \(\alpha _S \le \alpha _e\), which must exist since \(\textbf{p}_e\) is infeasible and \(z(\alpha )\) is increasing.

Compute \((\text{ FLAG }, \alpha _{i_l}) \leftarrow \) ConvexHull\((\epsilon _{S}, \textbf{c}_S, \textbf{v}_S, \textbf{u}_S, a_S, b_S, \alpha _e, \phi _S)\). If FLAG = TRUE, \(\textbf{x}(\alpha _e) \in \textrm{conv}(S \cup \{ \textbf{p}_e \})\). In this case, \(S \leftarrow S \cup \{\textbf{p}_e\}\), and \([\textbf{x}_S, z_S ] \leftarrow [\textbf{x}(\alpha _e),z(\alpha _e)]\) are checked for optimality by the Update Phase. If FLAG = FALSE, delete the point \(\textbf{p}_{i_l}\) from S. Reset \(S \leftarrow S \setminus \{ \textbf{p}_{i_l} \}\), and \([\textbf{x}_S, z_S] \leftarrow [ \textbf{x}(\alpha _{i_l}), z(\alpha _{i_l})]\). The set S is active with respect to the ball \([\textbf{x}_S, z_{S}]\), and \([\textbf{x}_S, z_{S}]\) is dual feasible with respect to \(S \cup \{ \textbf{p}_e \} \). The Search Phase is re-entered with the active set S, the entering point \(\textbf{p}_e\), and the ball \([\textbf{x}_S, z_{S}]\).

Regardless of the type of search path: a ray, hyperbola, ellipse, or parabola, if there is more than one point \(\textbf{p}_{i_l} \in S \cup \{\textbf{p}_{e}\}\) such that \(\min _{\textbf{p}_{i_j} \in S} \{\alpha _{i_j} \} = \alpha _{i_l}\), then \(X_S\) intersects the corresponding facets simultaneously, and there is a tie for the leaving point. In this case choose any candidate point to be deleted from S. The next Search Phase may lead to a degenerate iteration at the next step with \(\alpha _{i_l} =0\) for some \(\textbf{p}_{i_l}\). Cycling will not occur since at each degenerate iteration one point is deleted from the finite set S. After a finite number of points are deleted, S is reduced to two points, and the parameter \(\alpha \) will be positive at the next iteration.

Property 12

The dual algorithm solves M(P) in a finite number of iterations.

Proof

Given a dual feasible ball \([\textbf{x}_S, z_S]\), an active set S, and an entering point \(\textbf{p}_e \notin S\) such that \(\Vert \textbf{x}_S - \textbf{p}_e \Vert + r_S > z_S\), the search phase finds a step size \(\alpha _e > \alpha _S\), and a set \(S' \subseteq S \cup \{ \textbf{p}_e \}\) such that the ball \([\textbf{x}_{S'}, z_{S'}] \leftarrow [\textbf{x}(\alpha _e), z(\alpha _e)]\) is dual feasible with active set \(S'\). The search phase may require intermediate iterations, each identifying a step size \(\alpha _{i_l} > \alpha _S\) and resulting in a point \(\textbf{p}_{i_l}\) leaving the current active set \(S' \subseteq S\). At most \(|S| -1\) intermediate iterations are possible before \(\textbf{x}(\alpha _{i_l}) \in \text {conv}(S' \cup \{ \textbf{p}_e \})\). Property 24 shows that \(z(\alpha _e) > z(\alpha _S)\) at each iteration, and \(z(\alpha _{i_l}) > z(\alpha _S)\) at each intermediate iteration. Since the objective function value is bounded above and there are only a finite number of active sets, the algorithm finds the optimal solution in a finite number of iterations. \(\square \)

6 Computational results

Both algorithms were implemented in MATLAB [Release R2017a (9.20.556344)] on a MacBook Pro with a 2.7 GHz Intel Core i5 processor running macOS Catalina Version 10.15.5. The primal algorithm code is roughly 700 lines long, and the dual algorithm code is roughly 800 lines long. Both algorithms were run to solve Problem M(P) for problems with balls whose centers and radii were drawn randomly from the following distributions:

-

Points drawn from the uniform distribution within a hypercube centered at the origin with each side of length 20. Test problems were created for each of the dimensions \(n=50, 100, 500, 1000\) and for each of the number of points \(m=n/10, n/5, n/2, n, 2n, 5n, 10n\). Once a point was sampled, a value R was drawn from the uniform distribution on the interval [0, 1]. This value of R was then multiplied by each of the radius parameters \(r = 0, 1, 2, 4, 6, 8\) to generate a problem instance with m points in n dimensions, with radii rR. This generated problem instances in which the balls were growing progressively larger for the same set of points. Forty values of R were sampled, yielding a suite of 6,720 different hypercube test problems.

-

In a similar manner, points drawn uniformly on the surface of a hypersphere of radius 10 centered at the origin. Test problems were created for each of the dimensions \(n=50, 100, 500, 1000\); for each of the number of points \(m=n/10, n/5, n/2, n, 2n, 4n\); and for each of the radius parameters \(r = 1, 1/2, 1/4, 1/16\). Again, R was drawn from the uniform distribution on the interval [0, 1], and forty values of R were sampled, yielding a suite of 3,840 different hypersphere test problems. Geometrically, the test problems resembled a bumpy sphere.

All test problems were solved to optimality by both the primal and dual algorithms. For the primal algorithm, the center of the initial covering ball was selected to be the arithmetic mean of the set of points P. For the dual algorithm, the initial set S was the ball whose center plus corresponding radius was farthest from the origin. For the dual algorithm, the entering point was selected to be the center of the ball that was most infeasible. To avoid redundancy, if there was a tie between two balls for being most infeasible, the ball with largest radius was selected to enter S.

A numerical tolerance was set to \(8n\epsilon _{mach}/(1 - 8n\epsilon _{mach})\) for the dual algorithm and \(100n\epsilon _{mach}/(1 - 100n\epsilon _{mach})\) for the primal algorithm. This quantity was used to detect primal feasibility, dual feasibility, eccentricity, etc. In solving all of the test problems, neither the primal nor the dual algorithms ever encountered an elliptic or a parabolic search path. Small test problems were constructed that did require elliptic or parabolic search paths, and were easily solved. These examples required careful placements of points and assignments of radius values.

For the primal algorithm, the computational complexity for each iteration is as follows:

-

1.

For the Primal Search Phase, the most expensive operation is the calculation of the vectors \(\textbf{hp}_{k}\), \(k = 2,\dots ,(|S|-1)\). This was done using the Gram-Schmidt algorithm, which has complexity of \(O(2n|S|^2)\).

-

2.

For the calculation of the parameter \(\alpha ^*\), each point \(\textbf{p}_k\) in \(P \setminus S\) must be examined and the associated parameter \(\alpha _k\) must be calculated. This has a complexity of O(53mn).

-

3.

In the Primal Update Phase, the algorithm tests whether the KKT conditions are satisfied. This involves using least squares to calculate the solution to an n-by-|S| system of linear equations, which has a complexity of \(O(4n|S|^2 - 4|S|^{3}/3)\).

In conclusion, each iteration of the primal algorithm has complexity \(O(6n|S|^2 - 4|S|^{3}/3 + 53mn)\).

For the dual algorithm, the computational complexity for each iteration is as follows:

-

1.

In the Dual Update Phase, it first must be determined whether the points in \(S \cup \{ \textbf{p}_e \}\) are affinely independent, which has complexity \(O(4n|S|^2 - 4|S|^3/3)\). If they are affinely dependent, then solutions must be calculated for equations (7), (8), and (9), and for equations (19) and (20). These computations have complexity \(O(2n|S|^2 - 2|S|^3/3)\) and \(O(6n|S|^2 - 2|S|^3)\), respectively.

-

2.

In the Dual Search Phase, vectors \(\textbf{hp}_{k}\), \(k = 2,\dots ,(|S|-1)\) were calculated using the Gram-Schmidt algorithm, which has complexity of \(O(2n|S|^2)\), and the calculation of the vector \(\textbf{u}_{S}\) has complexity \(O(2n|S|^2 - 2|S|^3/3)\). Finally, the calculation of the parameter \(\alpha ^*\) requires \(O(2n|S|^2 - 2|S|^3/3)\) operations.

-

3.

Calculating whether the new dual feasible solution is also primal feasible requires O(3mn) operations.

In conclusion, each iteration of the dual algorithm has complexity \(O(18n|S|^2 - 4|S|^{3} + 3mn)\).

Observations

-

1.

Table 1 shows that for the Hypercube data, the average number of iterations for both the primal and dual closely tracts the average value of |S| at optimality. This means that a point is added to S in most iterations, and there are very few iterations in which a point leaves S.

-

2.

Tables 1 and 2 show that the dual algorithm is faster than the primal algorithm over almost all problem classes (n, m). This can be explained by the computational complexity of the algorithms because at each iteration, the primal algorithm requires an operation for each point in \(P \setminus S\), while the dual algorithm requires an operation for each point in S. Since |S| is small initially and increases by at most one at each iteration, mn dominates |S| and contributes more to the computational effort.

-

3.

Except in the Hypersphere test cases in which \((n, m) = (50,200)\) and \((n, m) = (100,400)\), the algorithms use a similar number of iterations to solve the test problems to optimality.

Areas of continued research for improving the computational efficiency of the algorithms include the following:

-

Use column update methods to solve sequential linear systems that differ by only one column.

-

Investigate alternative rules for starting solutions and for choosing candidate points to enter an active set.

-

For the primal algorithm, investigate optimizing sequentially over subsets of a partition of P.

-

For the primal algorithm, investigate whether there are structures that allow points covered by a feasible ball to remain covered by subsequent iterations.

-

Investigate a primal-dual approach using the bisector search paths.

-

Investigate the use of search paths generated by bisectors to other optimization problems.

References

Aurenhammer, F., & Klein, R. (2000). Voronoi diagrams. Handbook of Computational Geometry, 5(10), 201–290.

Blumenthal, L., & Wahlin, G. (1941). On the spherical surface of smallest radius enclosing a bounded subset of \(n\)-dimensional Euclidean space. Bulletin of the American Mathematical Society, 47(10), 771–777.

Cavaleiro, M., & Alizadeh, F. (2018). A faster dual algorithm for the Euclidean minimum covering ball problem. Annals of Operations Research, pp. 1–13.

Cavaleiro, M., & Alizadeh, F. (2021). A dual simplex-type algorithm for the smallest enclosing ball of balls. Computational Optimization and Applications, 79(3), 767–787.

Chrystal, G. (1885). On the problem to construct the minimum circle enclosing \(n\) given points in the plane. Proceedings of the Edinburgh Mathematical Society, 3(1885), 30–33.

Dearing, P. (2017). Intersections of hyperplanes and conic sections in \(R^{n}\). arXiv preprint arXiv:1702.03205

Dearing, P., & Thipwiwatpotjana, P. (2006). One-center location with block and Euclidean distance. Journal of research of the National Institute of Standards and Technology, 111(2), 89–96.

Dearing, P., & Zeck, C. R. (2009). A dual algorithm for the minimum covering ball problem in \(R^{n}\). Operations Research Letters, 37(3), 171–175.

Dyer M (1992) A class of convex programs with applications to computational geometry. In Proceedings of the eighth annual symposium on computational geometry, pp. 9–15.

Elzinga, D. J., & Hearn, D. W. (1972). Geometrical solutions for some minimax location problems. Transportation Science, 6(4), 379–394.

Elzinga, D. J., & Hearn, D. W. (1972). The minimum covering sphere problem. Management Science, 19(1), 96–104.

Fischer, K., Gärtner, B., Kutz, M. (2003). Fast smallest-enclosing-ball computation in high dimensions. In European symposium on algorithms, Springer, pp. 630–641.

Gill, P. E., Murray, W., & Wright, M. H. (1991). Numerical linear algebra and optimization (Vol. 1). Redwood City, CA: Addison-Wesley.

Hopp, T. H., Reeve, C. P. (1996). An algorithm for computing the minimum covering sphere in any dimension. Tech. rep., Technical Report NISTIR 5831, National Institute of Standards and Technology.

Leva, J. L. (1996). An alternative closed-form solution to the gps pseudo-range equations. IEEE Transactions on Aerospace and Electronic Systems, 32(4), 1430–1439.

Megiddo, N. (1983). Linear-time algorithms for linear programming in \(R^3\) and related problems. SIAM Journal on Computing, 12(4), 759–776.

Megiddo, N. (1983). The weighted Euclidean 1-center problem. Mathematics of Operations Research, 8(4), 498–504.

Plastria, F. (2004). Continuous covering location problems. In Z. Drezner, H. W. Hamacher, (Eds) Facility location: Applications and theory, Springer, Berlin, pp. 37–79.

Rademacher, H., & Toeplitz, O. (1990). The enjoyment of mathematics: Selections from mathematics for the amateur. Courier Corporation.

Sylvester, J. J. (1857). A question in the geometry of situation. Quarterly Journal of Pure and Applied Mathematics, 1(1), 79–80.

Sylvester, J. J. (1860). XXVII. on Poncelet’s approximate linear valuation of surd forms. The London, Edinburgh, and Dublin Philosophical Magazine and Journal of Science, 20(132), 203–222.

Xu, S., Freund, R. M., & Sun, J. (2003). Solution methodologies for the smallest enclosing circle problem. Computational Optimization and Applications, 25(1–3), 283–292.

Zhou, G., Tohemail, K. C., & Sun, J. (2005). Efficient algorithms for the smallest enclosing ball problem. Computational Optimization and Applications, 30(2), 147–160.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflicts of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix: Results on conic sections and applications to the primal and dual algorithms

Appendix: Results on conic sections and applications to the primal and dual algorithms