Abstract

Twin support vector machine (TWSVM) and twin support vector regression (TSVR) are newly emerging efficient machine learning techniques which offer promising solutions for classification and regression challenges respectively. TWSVM is based upon the idea to identify two nonparallel hyperplanes which classify the data points to their respective classes. It requires to solve two small sized quadratic programming problems (QPPs) in lieu of solving single large size QPP in support vector machine (SVM) while TSVR is formulated on the lines of TWSVM and requires to solve two SVM kind problems. Although there has been good research progress on these techniques; there is limited literature on the comparison of different variants of TSVR. Thus, this review presents a rigorous analysis of recent research in TWSVM and TSVR simultaneously mentioning their limitations and advantages. To begin with, we first introduce the basic theory of support vector machine, TWSVM and then focus on the various improvements and applications of TWSVM, and then we introduce TSVR and its various enhancements. Finally, we suggest future research and development prospects.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

SVM (Cortes and Vapnik 1995) is a prominent classification technique widely used since its inception. It was first introduced by Cortes and Vapnik (1995) in 1995 for binary classification problems. SVM seeks to find decision hyperplanes that determine the decision boundary which can classify the data points into two classes. These decision planes are called support hyperplanes and the distance between them is maximized by solving a quadratic programming problem (QPP). SVM is computationally powerful even in non-linearly separable cases by using kernel trick (Cristianini et al. 2000). SVM has remarkable advantages as it utilizes the idea of structural risk minimization (SRM) principle which provides better generalization as well as reduces error in the training phase. As a result of its superior performance even in non-linear classification problems, it has been implemented in a diverse spectrum of research fields, ranging from text classification, face recognition, financial application, brain-computer interface, bio-medicine, human action recognition, horse race odds prediction and multiple instance learning (Tong and Koller 2001; Agarwal and Tomar 2014; Schölkopf et al. 2004; Tay and Cao 2001; Gupta et al. 2019a; Noble 2004; Osuna et al. 1997; Hua and Sun 2001; Byvatov and Schneider 2003; Morra et al. 2010; Tanveer et al. 2020b; Vapnik 2013; Edelman 2007; Poursaeidi and Kundakcioglu 2014). SVM has also been used in feature selection methods (Le Thi and Nguyen 2017). Robust SVM penalized all the samples via new loss function for better generalization in presence of noise (Wang et al. 2010b). Although SVM has outperformed most other systems, it still has many limitations in dealing with complex data due to its high computational cost of solving QPPs and its performance highly depends upon the choice of kernel functions and its parameters. Many improvements have been made in the last decade to enhance the accuracy of SVM (Li et al. 2019, 2020). One such critical enhancement was generalized eigenvalue proximal SVM (GEPSVM) (Mangasarian and Wild 2001; Khemchandani and Chandra 2017; Shao et al. 2013b) that led the foundation of TWSVM (Khemchandani and Chandra 2007; Jayadeva and Chandra 2016). The idea behind GEPSVM is to determine two nonparallel hyperplanes via solving two generalized eigenvalue problems. TWSVM (Khemchandani and Chandra 2007; Jayadeva and Chandra 2016) enhanced the generalization ability of GEPSVM. TWSVM also determines two nonparallel hyperplanes and needs to solve a pair of small QPPs in lieu of solving one complex QPP in SVM. Computational cost of TWSVM is approximately one-fourth of the SVM. TWSVM is faster and has better generalization performance than SVM and GEPSVM. Authors also extended TWSVM for nonlinear classification problems by introducing kernel. But, in nonlinear cases when a kernel is used, the formulation requires to solve inverse of matrices. Also, its performance highly depends upon the selection of kernel functions and its parameters for nonlinear classification. Although TWSVM is still in its rudimentary stage, many improvements and variants have been proposed by researchers due to its favorable performance especially in-case of handling large datasets which is not possible with the conventional SVM. Huang et al. (2018) reviewed the research progress of TWSVM until 2017 for classification problems.

Support Vector Machine has also been used effectively for regression and is called support vector regression (SVR) (Basak et al. 2007). SVR is different than SVM in some aspects as it sets a margin of tolerance \(\epsilon \) and find the optimal hyperplane such that the error is minimized. Thus, it finds a function such that error can be maximum of \(\epsilon \) distance, thus any error within \(\epsilon \) deviation is acceptable. Learning speed of SVR is low as it needs to solve a large sized QPP. Researchers have proposed many variants of SVR to improve its performance in terms of reducing computational complexity and improving accuracy (Drucker et al. 1997; Smola and Schölkopf 2004). Some other variants of SVR includes \(\epsilon \)–support vector regression (\(\epsilon \)–SVR) (Shao et al. 2013e), Fuzzy SVM (Chuang 2007), \(\nu \)–SVR (Chang and Lin 2002), robust and sparse SVR (Tanveer et al. 2016b) and some other algorithms (Yang et al. 2009; Elattar et al. 2010). \(\epsilon \)–SVR has high computational cost and in order to remediate this, an efficient twin support vector regression (TSVR) (Peng 2010b) determined two nonparallel hyperplanes that clusters the data points in two classes by optimizing the regression function. TSVR uses \(\epsilon \)–insensitive up and down-bound functions to optimize the final regression function. TSVR formulation is computationally less complex as it needs to solve a pair of smaller QPPs in lieu of solving one large QPP in SVR, thus it’s speed is much more than SVR. However, in TSVR, the spirit of TWSVM was missing, improved TSVR (TWSVR) (Khemchandani et al. 2016) which works on the similar lines of TWSVM was proposed. To further boost the performance of TSVR, many algorithms have been introduced by researchers which are discussed in Sect. 3.

In the past years, twin support vector classification and regression algorithms have been developed rapidly and are implemented to solve some real-life challenges. However, there is limited literature on twin support vector regression as it is a relatively new theory and needs further study and improvement. Thus, the objective of this paper is to present an compendium of recent developments in TWSVM and TSVR, identify limitations and advantages of these techniques and promote future developments.

The framework of this paper is as follows, Sect. 2 presents brief about SVM and TWSVM, Sect. 3 and 4 include the recent advancements and applications of TWSVM respectively, Sect. 5 presents a brief about TSVR, Sects. 6 and 7 include the recent advancements and applications of TSVR respectively and at last Sect. 8 provides synopsis, future research and development prospects.

2 Related work

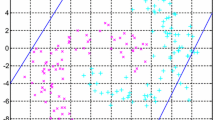

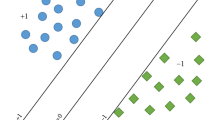

Suppose a binary classification problem with dataset \(X \in {\mathbb {R}}^{l\times n}\) in which \(l_1\) data points are in class \(+1\) (termed as positive class) and \(l_2\) data points are in class \(-1\) (termed as negative class) and these are represented by matrix A and B respectively. For classification problems, the data label \(Y\in \{-1,1\}\) and for regression \(Y\in {\mathbb {R}}\). Accordingly, these matrices will be of size \((l_1 \times n)\) and \((l_2 \times n)\) where n is feature space dimension and \(l=l_1+l_2.\)

2.1 Support vector machine (Cortes and Vapnik 1995)

The optimization problem of support vector machine is given as follows:

where, \(c>0\) is the regularisation parameter, and \(\phi (x)\) represents the non-linear mapping of the input x and \(\xi \) is the vector of slack variables.

Form the above given optimization problem, one can see that all the data samples appear in the constraints of the optimization problem. Hence, the complexity of the model is high as it involves solving a single large quadratic programming problem. Thus, the complexity of SVM is \(O(l^3)\).

2.2 Twin support vector machines (Khemchandani and Chandra 2007)

Standard twin SVM is a binary classification model. To categorize data samples which cannot be separated by linear functions, TWSVM uses kernel functions to convert the higher dimensional data space to the required form. The two kernel generated surfaces are given as below:

here \(D=[A;B]\); and K is a kernel function. The formulation for nonlinear TWSVM classification is defined as below:

and

here \(c_1, c_2 \ge 0, e_1, e_2\) are vectors of ones of suitable dimensions and \(\xi _1, \xi _2\) are called slack variables. After using the Lagrange multipliers \(\alpha \ge 0, \beta \ge 0\) and the Karush–Kuhn–Tucker (K.K.T.) (Kuhn andTucker 1951) conditions, the duals of the QPPs in (3) and (4) are defined as below:

and

where \( P =[K(A,\,D^T)\,\,e_1]\,\,\text{ and }\,\, Q=[K(B,\,D^T)\,\,e_2].\) After solving (5) and (6), the proximal hyperplanes are given as follows:

where \(\delta I\) is a regularization term and \(\delta >0.\)

A new sample \(x\in {\mathbb {R}}^n \) is allocated to either class on the basis of the proximity of kernel generated surface to x, i.e.,

where \(sign(\cdot )\) is signum function.

From optimization problems (3) and (4), one can see that the constraints of only one class appear in the optimization problem while generating the hyperplane for the other class. Thus, the size of the constraints in QPPs of TWSVM is approximately half compared to the SVM formulation (assuming the data is balanced between the classes). TWSVM is approximately four times faster than the usual SVM. The complexity of TWSVM is \(2*O((l/2)^3)\). In terms of generalization, TWSVM and SVM have comparable generalization performance (Khemchandani and Chandra 2007).

3 Research progress on twin support vector machines

In this section, we discuss the progress of TWSVM based models in classification problems. The variants of TWSVM (given in Fig. 1) are

3.1 Least squares twin support vector machines

To reduce TWSVM training time, Kumar and Gopal (2009) formulated least squares TWSVM (LS-TWSVM) algorithm. The major advantage of LS-TWSVM over TWSVM is that it only deals with linear equations in place of QPPs in TWSVM which reduces the computational complexity of the model. LS-TWSVM has comparable accuracy but low computational time than TWSVM. Although the computational time of LS-TWSVM is less than TWSVM, its generalization performance is poor as same penalties are allotted to the samples either being positive or negative. LS-TWSVM apply the empirical risk minimization (ERM) principle which affects accuracy and also causes over-fitting problem. Also, it doesn’t take into account the effects of samples having different locations. To avoid this limitation, weighted LS-TWSVM (Xu et al. 2014a) gives different weights to the samples depending upon their locations. Experimental results have shown that weighted LS-TWSVM yields better testing accuracy than TWSVM and LS-TWSVM but its computational time is more than these algorithms.

To incorporate expert’s knowledge in LS-TWSVM classifier, knowledge-based LS-TWSVM (KBLS-TWSVM) (Kumar et al. 2010) included polyhedral knowledge sets in the formulation of LS-TWSVM. Experimental results have shown that KBLS-TWSVM is simple and more apt classifier compared to LS-TWSVM. In order to improve accuracy of LS-TWSVM, Wang et al. (2010a) incorporated the manifold geometric structure of data of each class. It requires to solve a set of linear equations.

Although, LS-TWSVM performed well with large datasets compared to TWSVM, Gao et al. (2011) designed \(l_1\)-norm LS-TWSVM (NLS-TWSVM) to automatically select the relevant features in order to strengthen the algorithm in dealing with high-dimensional datasets. NLS-TWSVM is based on LS-TWSVM and includes a Tikhonov regularization term. To outdo LS-TWSVM in accuracy and avoid over-fitting problem, Improved LS-TWSVM (ILS-TWSVM) (Xu et al. 2012) improved the classification accuracy by implementing structural risk minimization principle. ILS-TWSVM is faster and yields comparable generalization and computational time to LS-TWSVM. Since \(L_2\) norm magnifies the outlier effect in least squares TWSVM models, hence, capped \(L_{2,p}\) norm based least squares TWSVM (Yuan and Yang 2021) was formulated to reduce the effect of outliers and noise.

To take advantage of the correlation between some data points and reduce the effect of noise, Ye et al. (2012a) used density of the sample to allot weights for each sample (DWLSC). Experimental results demonstrate better classification accuracy of the DWLSC than TWSVM and LS-TWSVM.

There are several real-life problems which are essentially multicategory problems. The extensions of TWSVM to multicategory is also an active research domain where several research papers have evolved addressing the same. However, class imbalance problem is common in multicategory classification as all binary SVMs are trained with all patterns. To deal with these issues Nasiri et al. (2014) formulated energy-based LS-TWSVM (ELS-TWSVM) in which the constraints of LS-TWSVM are converted to an energy model to decrease the impact of noise and outliers. TWSVM, LS-TWSVM, and ELS-TWSVM satisfy the empirical risk minimization (ERM) principle and also in these techniques the matrices are positive semi-definite. Thus, to remediate this problem, Tanveer et al. (2016a) embodied the SRM principle in ELS-TWSVM and proposed robust ELS-TWSVM (RELSTSVM) which makes the matrices positive definite. Moreover, RELSTSVM uses an energy parameter to handle the noise and thus make the algorithm more robust. Results have shown the promising generalization performance of RELSTSVM with less computational time when compared with the baseline algorithms (Table 1). In the recent comprehensive evaluation (Tanveer et al. 2019) of 187 classifiers on 90 datasets, RELSTSVM (Tanveer et al. 2016a) emerged as the best classifier. Khemchandani and Sharma (2016) proposed robust least squares TWSVM (RLS-TWSVM) which is fast and yields better generalization performance and is also robust to handle the noise. Further, the application in the field of human activity recognition is explored in the paper. The authors in Khemchandani and Sharma (2016) also proposed Incremental RLS-TWSVM to increase the training speed of RLS-TWSVM and Incremental RLS-TWSVM also deals with noise and outliers effectively. Tomar and Agarwal (2014) proposed feature selection based LS-TWSVM to diagnose heart diseases using F-score to choose the most relevant features which enhances the accuracy of the model. To handle imbalanced datasets, a novel weighted LS-TWSVM (Tomar et al. 2014c) for imbalanced data, which has better accuracy and geometric mean than SVM and TWSVM, was proposed. To further enhance the accuracy on imbalanced datasets, Hybrid Feature Selection Based WLS-TWSVM (HFS based WLS-TWSVM) (Tomar and Agarwal 2015a) approach was proposed for diagnosing diseases like Breast Cancer, Diabetes and Hepatitis which can tackle data imbalance issues. Xu et al. (2015) included data distribution information into the structural LS-TWSVM (SLS-TWSVM) classifier. It is based on structural TWSVM and performs clustering before classification and embody the structural information into the model. SLS-TWSVM has a better generalization performance than \(\nu \)-SVM (Chen et al. 2005), \(\nu \)-TWSVM (Peng 2010c), STWSVM (Qi et al. 2013a) and LS-TWSVM (Kumar and Gopal 2009). It also improves the noise insensitivity of LS-TWSVM.

Mei and Xu (2019) proposed a novel multi-task LS-TWSVM algorithm which is build on directed multi-task TWSVM (DMTWSVM) and LS-TWSVM. It focuses on multitask learning instead of commonly applied single task learning in TWSVM and LS-TWSVM. This algorithm is computationally effective as it only requires to solve linear equations in lieu of QPPs in DMTWSVM.

Least square twin support vector hypersphere (LSTSVH) (Tomar and Agarwal 2015a) is an enhancement of twin support vector hypersphere (TSVH) (Peng and Xu 2013b). TSVH is different from TWSVM as it obtains two hyperspheres through solving two small SVM type problems. Experimental results demonstrate that LSTSVH has almost same accuracy as SVM, LS-SVM, TWSVM, LS-TWSVM, and TSVH but has high computational time than LS-SVM and LS-TWSVM and less than SVM and TWSVM. Tanveer et al. (2020a) proposed efficient large scale least squares twin support vector machines (LS-LSTWSVM) which uses different Lagrangian functions to eliminate the need for calculating the inverse matrices and thus enhance the performance of TWSVM on large scale datasets.

3.2 Projection twin support vector machine

Projection TWSVM (P-TWSVM) (Chen et al. 2011) is a multiple-surface classification (MSC) technique based on the multi-weight vector projection SVM (MVSVM) and TWSVM. Chen et al. (2011) introduced P-TWSVM with minimizing within class variance principle. Unlike finding hyperplanes in TWSVM, P-TWSVM finds projection axes to distinctly separate samples. To enhance performance of P-TWSVM, authors proposed a recursive algorithm in which for each class multiple projection axes are considered. P-TWSVM is a better classifier as hyperplanes are more fitted for single plane based samples while for datasets having complex sample distribution like XOR problems, projection directions can generate better accuracy. Experimental results have shown that P-TWSVM has better accuracy than GEPSVM, TWSVM, LS-TWSVM, and MVSVM. However, it is computationally more complex than TWSVM. Thus, Shao et al. (2012a) proposed improved P-TWSVM and used the idea in LS-SVM (Suykens et al. 1999) and LS-TWSVM (Kumar et al. 2010) and added a regularization term into P-TWSVM simultaneously maintaining the optimization problem to be positive definite. It is simple, fast and has similar classification accuracy but less computational time than P-TWSVM.

Although P-TWSVM is an efficient classifier, it only implements empirical risk minimization principle which can incur possible singularity problems which need to be dealt with principal component analysis (PCA) and linear discriminant analysis (LDA). Shao et al. (2013c) formulated a new P-TWSVM variant by introducing a maximum margin regularization term called RP-TWSVM in which empirical risk is replaced by regularized risk. Authors further proposed a successive over-relaxation (SOR) technique and a genetic algorithm (GA) for parameter selection to optimally solve the primal problems. RP-TWSVM not only has better accuracy but also has a better generalization than P-TWSVM.

To implement least square P-TWSVM to non-linear problems, Ding and Hua (2014) introduced kernel to LSP-TWSVM (Shao et al. 2012a) which also deals with linear equations similar to LSP-TWSVM and opposed to P-TWSVM. Authors introduced nonlinear recursive algorithm in order to improve its performance. Experimental results have shown good classification accuracy than LSP-TWSVM and P-TWSVM.

Guo et al. (2014) proposed a feature selection approach for LSP-TWSVM which finds two projection directions and has comparable prediction accuracy to that of TWSVM, LS-TWSVM and LSP-TWSVM and a similar generalization ability to TWSVM and LS-TWSVM.

LSP-TWSVM enhances the performance of P-TWSVM but fails to include underlying data information to enhance classification accuracy. To overcome this limitation, weighted LSP-TWSVM (LIWLSP-TWSVM) (Hua and Ding 2015) exploited local information into the algorithm. LIWLSP-TWSVM is more effective than LSP-TWSVM as it uses weighted mean rather than standard mean in LSP-TWSVM. It has better generalization ability but its computational cost is high when solving multi class problems (Table 2).

Hua et al. (2017) formulated a novel projection TWSVM (NP-TWSVM). NP-TWSVM has many advantages over P-TWSVM. It is faster than P-TWSVM as calculation of inverse matrices is not avoided in NP-TWSVM. It determines the projection axes so that margin across the sample and class is greater than or equal to zero. Experimental results have shown that it obtains better generalization and accuracy when compared with other baseline algorithms. An efficient nonparallel sparse projection SVM (NPrSVM) (Chen et al. 2020c) finds optimal projection for each class such that the projection values of within class instance are clustered as much as possible within an insensitive tube while those of other class instance are kept away. Extensive experiments show that NPrSVM is superior than PTWSVM in terms of generalization performance, training time, sparsity and robustness to outliers. \(\nu -\) projection twin SVM (Chen et al. 2020b) seeks projection axis for each class in a manner that \(\nu \) controls the fraction of support vectors and error margin, and also avoids the matrix inversions. Robust rescaled hinge loss based projection TWSVM model (Ren and Yang 2021) use different parameters to control the effect of outliers and the model results in better performance.

3.3 Twin parametric margin support vector machine

Motivated by the idea of TWSVM and Par-\(\nu \)-SVM (Hao 2010), Peng (2011a) formulated twin parametric-margin SVM (TPMSVM). Like par-\(\nu \)-SVM, TPMSVM seeks to determine two parametric margin hyperplanes which defines the positive and negative margin respectively. It’s an indirect classifier even suitable when noise is heteroscedastic as it automatically adjusts a flexible margin. Experimental results have shown that TPMSVM has comparable generalization ability to SVM, Par-\(\nu \)-SVM, and TWSVM. Also, TPMSVM has much lower training time than Par-\(\nu \)-SVM as it solves two small sized QPPs like TWSVM. TPMSVM classifier is not sparse due to the formulations of its parametric- margin hyperplanes. TPMSVM has good generalization but it is computationally complex as it solves two QPPs. Wang et al. (2013) added a quadratic function to TPMSVM in primal space to get better training speed. The proposed algorithm is called smooth twin parametric-margin SVM (STPMSVM). Also, the authors proposed the use of genetic algorithm (GA) in STPMSVM to overcome the drawback of regularizing at least four parameters in TPMSVM. To obtain sparsity and feature noise insensitivity, truncated pinball loss TPMSVM (Wang et al. 2021a) was proposed. The optimal separating hyperplanes are obtained via concave-convex procedure (CCCP).

Shao et al. (2013d) proposed least squares TPMSVM (LSTPMSVM) to decrease the training cost of TPMSVM as LSTPMSVM solves two primal problems rather than dual problems. This change makes it less complex and increases training speed. It has comparable or better classification accuracy but with remarkably less computational time than TPMSVM. For model selection, the authors proposed a particle swarm optimizer (PSO) which effectively optimizes the four parameters defined in LSTPMSVM. In terms of generalization, LSTPMSVM performs better than TPMSVM and LS-TWSVM. In order to consider prior structural data information, Peng et al. (2013) proposed structural twin parametric-margin SVM (STPMSVM). Based on cluster granularity, the class data structures are included in the problem. STPMSVM not only obtained good generalization ability but also showed fast learning speed, and better performance than TPMSVM. TPMSVM is an efficient classifier but it losses the sparsity due to the weight vectors of the hyperplanes. To solve this issue, centroid-based TPSVM (CTPSVM) (Peng et al. 2015b) was introduced which uses the projection of the centroid points and leads to a sparse optimal hyperplane by optimizing the centroid projection values.

To incorporate structural information present in data, Rastogi et al. (2018d) proposed robust parametric TWSVM for pattern classification (RP-TWSVM) which seek to find two parametric margin hyperplanes that has the capability to adjust margin to capture heteroscedastic noise data information. Khemchandani et al. (2018b), formulated angle-based TPSVM (ATPSVM) is proposed, which can efficiently handle heteroscedastic noise. Other improvements which maximizes angle between the twin hyperplanes are proposed by Rastogi et al. Rastogi et al. (2018c). Taking motivation from TPMSVM, ATPSVM determines a pair of hyperplanes so that the angle between their normals is maximized. Recently, Richhariya and Tanveer proposed a novel angle based universum LS-TWSVM (AULSTWSVM) for pattern classification. In contrast to ATPSVM (Rastogi et al. 2018c), the AULSTWSVM minimizes the angle between universum hyperplane and classifying hyperplane. To incorporate the prior information about the distribution of the data using the universum, AULSTWSVM used linear loss (Shao et al. 2015) in the formulation. Numerical experiments show the promising generalization performance with very less computational time. Further, the application in the diagnosis of Alzheimer’s disease is explored.

Moreover, the proposed AULSTWSVM includes linear loss (Shao et al. 2015) in the optimization problem, while incorporating prior information about data distribution using the universum. Moreover, the solution of AULSTWSVM involves system of linear equations leading to less computation time (Kumar and Gopal 2009)

3.4 Robust and sparse twin support vector machine

TWSVM achieves better accuracy and is faster when compared to conventional SVM but it loses sparsity as it considers \(l_2\) norm of distances in the objective function. Thus, in order to make TWSVM sparse, Peng (2011b) proposed TWSVM in primal space which provides a sparse hyperplane with better generalization ability. To improve the robustness, a regularization term is also added. It has comparable generalization and a rapid learning speed to TWSVM and LS-TWSVM but the computational cost is high. Peng and Xu (2013c) proposed robust minimum class variance TWSVM (RMCV-TWSVM) classifier to enhance generalization and robustness of TWSVM. This algorithm has an extra regularization term which makes its learning speed comparable to TWSVM but obtains better generalization ability than TWSVM.

Qi et al. (2013b) proposed robust TWSVM using second-order cone programming formulation. It is effective with noise-corrupted data. This algorithm overcomes the limitation of TWSVM and computes the inverse of matrices which are not suitable for large datasets, it takes only the inner products about samples by which kernel trick can be applied directly and does not need to solve the extra inverse of matrices. It is superior in computational time and accuracy than other TWSVMs. Tian et al. (2014a) proposed sparse nonparallel support vector machine (SN-SVM). While TWSVM losses the sparseness, SN-SVM has the inherent sparseness as it uses the two loss functions instead of one in existing TWSVM. Only the empirical risk is considered in TWSVM, SN-SVM introduces the regularization term by maximizing the margin. TWSVM minimizes the loss function based on \(l_1\) or \(l_2\)-norm. Thus, Zhang et al. (2014) proposed \(l_p\)-norm-LS-TWSVM which is an adaptive as p can be automatically chosen by data. Robust non-parallel SVM via second order cone programming (López et al. 2019) is robust to outliers and noise, and constructs the two separating hyperplanes via maximisation of probabilistic framework.

In order to intensify the robustness and sparsity in the original formulation of TWSVM, Tanveer (2015b), incorporated regularization technique and formulated a linear programming \(l_1\)-norm TWSVM (NLP-TWSVM) which needs to solve linear equations rather than solving QPPs in TWSVM which makes it fast, robust, sparse and a simple algorithm. Experimental results demonstrate that NLP-TWSVM’s generalization ability is better and computational time is less than GEPSVM, SVM, and TWSVM. Tanveer (2015c, 2013) proposed smoothing approaches for 1-norm linear programming TWSVM (SLPTWSVM). The solution of SLPTWSVM reduces to solving two systems of linear equations which makes the algorithm extremely simple and computationally efficient. Tanveer et al. (2019c) proposed sparse pinball TWSVM (SP-TWSVM) by introducing \(\epsilon \) insensitive zone pinball loss function in the orginal TWSVM formulation. SP-TWSVM is noise insensitive, retain sparsity and more stable for re-sampling. Robust capped \(l_1\) norm TWSVM (Wang et al. 2019a) reduced the effect of outliers which resulted in better performance. To reduce the overfitting issues, capped \(l_1\) norm twin bounded support vector machines (Ma et al. 2020) was proposed. Efficient and robust \(l_1\) norm TWSVM (Yan et al. 2019) used \(l_1\) norm to maximise the ratio of interclass scatter to intraclass scatter. The authors used iterative procedure to get the optimal separating hyperplanes. Adaptive capped \(L_{\theta ,\epsilon }\)-loss based TWSVM (Ma et al. 2021) is a generalized TWSVM model wherein \(\theta \) and \(\epsilon \) parameters are optimized to meet the objectives of robust to noise and outliers.

3.5 Pinball loss twin support vector machine

Xu et al. (2016c) proposed a novel TPMSVM with the pinball loss (Pin-TPMSVM). The authors introduced pinball loss function that is based on maximizing quantile distances between the two classes instead of hinge loss function which maximizes the distance between the closest samples of the two classes. This leads to noise insensitivity and as well as re-sampling stability. Pin-TPMSVM has excellent capability to handle noise which makes the model a more robust classifier but little attention is given on sparsity due to which the testing time is high and there is instability in re-sampling. Xu et al. (2016d) also formulated a maximum margin and minimum volume hyperspheres with pinball loss (Pin-\(M^3\)HM). Authors proposed this algorithm to enhance the generalization of twin hypersphere SVM (TH-SVM) for noise present in datasets. The algorithm classifies samples of two classes by a pair of hyper-spheres, each containing either majority or the minority class samples simultaneously maintaining maximum margin between the hyperplanes. Pin-\(M^3\)HM is fast and has better accuracy than TWSVM. Sharma and Rastogi (2018) proposed two models called \(\epsilon \)-Pin-TWSVM and Flex-Pin-TWSVM. \(\epsilon \)-Pin-TWSVM introduces \(\epsilon \) parameter to reduce the impact of noise and to attain a sparse solution while Flex-Pin-TWSVM uses a self-optimized framework which makes this algorithm flexible to estimate the size of insensitive zone. It adapts to the structure of data. Pin-TPMSVM has impressive ability to handle noise but to reduce parameter tuning time, Yang et al. (2018) introduced a new solution approach for the TPMSVM in which one instance is considered at a time and it is solved analytically without solving optimization problem. It is fast, simple and flexible. Since pinball loss function is not differentiable at zero, hence, smooth pinball loss TWSVM (Li and Lv 2021) used smooth approximation function and solved the objective functions via Newton-Armijo method.

For imbalanced data classification Xu et al. (2018b) proposed a maximum margin twin spheres which uses pinball loss. This algorithm finds homocentric spheres so that the smaller one captures positive class samples and the larger one repel negative samples. It requires to solve a QPP and a Linear programming (LP) problem. This algorithm has good generalization performance and noise insensitivity, however, suffers due to large complexity. To reduce the complexity, bound estimation-based safe acceleration for maximum margin of twin spheres machine with pinball loss (Yuan and Xu 2021) was proposed. Sharma et al. (2019) proposed a Stochastic Quasi-Netwon method based TPSVM using Pinball Loss Function (SQN-PTWSVM) which can scale the training process to handle millions of data points while simultaneously deals with noise and re-sampling data issues. Twin neural networks (Pant et al. 2019) uses feature maps which allows better discrimination among classes. The twin neural network is also extended to multiclass problems wherein the number of neural networks trained is proportional to number of classes.

The aforementioned pinball loss TWSVM algorithms are based on TPMSVM and not on the original TWSVM algorithms. Tanveer et al. (2019b) introduced pinball loss to the original TWSVM, termed as general TWSVM with pinball loss (Pin-GTSVM). Pin-GTSVM (Tanveer et al. 2019b; Ganaie and Tanveer 2021) is less sensitive to the outliers and more stable algorithm for re-sampling as compared to the original TWSVM. To retain the sparsity of original TWSVM and Pin-GTSVM, Tanveer et al. (2019c) proposed a novel sparse pinball TWSVM (SP-TWSVM) which uses \(\epsilon \)-insensitive zone pinball loss function. SP-TWSVM has better classification accuracy than TWSVM and Sparse Pin SVM and it is insensitive to outliers, retains sparseness and suitable for re-sampling. Recently, Tanveer et al. (2019a) proposed improved sparse pinball TWSVM (ISPTWSVM) by adding a regularization term to the objective function of SP-TWSVM. ISPTWSVM implements SRM principle and also the matrices appear in the dual formulation are positive definite which makes the proposed algorithm computationally less complex and it also achieves better accuracy than other baseline algorithms. Most of the twin SVM based models involve matrix inversion operations which limits their applicability to large scale data. Hence, large scale pinball TWSVM (Tanveer et al. 2021g) uses pinball loss function to reduce the issues of feature noise and reformulated the Lagrangian in a manner that matrix inversions are no longer involved in the optimization problems. This scaled the model to large scale data.

3.6 Twin support vector machine for universum data and imbalanced datasets

Qi et al. (2012a) formulated TWSVM for universum data classification (U-TWSVM). Universum data is defined as the data samples which does not belong to any given class. This algorithm utilizes the universum data to enhance TWSVM classification accuracy. U-TWSVM employs new data points to the either class based on its proximity to the hyperplanes. Experimental results have shown that U-TWSVM has better accuracy than TWSVM. In order to construct a robust classifier by including the prior information embedded in the universum samples Qi et al. (2014) formulated nonparallel SVM (U-NSVM) which maximizes the two margins related to the two nearest adjacent classes.

As many real life problems consists of datasets which are imbalanced in nature i.e. classes do not contain same number of data samples, due to which many machine learning algorithms cannot be implemented. Thus, to enhance the performance of TWSVM while dealing with imbalanced datasets, Shao et al. (2014b) proposed weighted Lagrangian TWSVM for imbalanced data classification. Authors introduced a quadratic loss function to the formulation of TWSVM which enabled faster training of data points. Also, this is more robust to outliers and has better computational speed and accuracy than TWSVM. Tomar et al. (2014c) proposed weighted LS-TWSVM for imbalanced datasets by adjusting the classifier and assigning distinct weight to training samples. The results of the experiment shows that its accuracy is more than SVM, TWSVM, and LS-TWSVM. Furthermore, in 2015, Xu et al. (2016a) formulated least squares TWSVM (ULS-TWSVM) which exploits the universum data. It introduces a regularization term thus implements SRM principle and is computationally less complex as it requires to solve linear equations in place of QPPs in TWSVM. Cao and Shen (2016) proposed combining re-sampling with TWSVM for imbalanced data classification. In this algorithm, authors presented a hybrid re-sampling technique which utilizes the one side selection (OSS) algorithm and synthetic minority oversampling technique (SMOTE) algorithm to balance the training data. This is combined with TWSVM for classification purpose. However, SMOTE algorithm based on K-nearest neighbors can often result in over-fitting. Robust rescaled hinge loss TWSVM (Huang et al. 2019) and density weighted TWSVM (Hazarika and Gupta 2021) for imbalanced datasets resulted in improved performance.

Encouraged by the performance of \(\nu \)-TWSVM (Peng 2010c) and U-TWSVM (Qi et al. 2012a), Xu et al. (2016b) proposed \(\nu \)-TWSVM for universum data classification (\(U\nu \)-TWSVM). It is more flexible in using the prior knowledge from universum data to improve the generalization ability. It uses two hinge loss functions so that data can remain in a nonparallel insensitive loss tube. Experimental results have shown that \(U\nu \)-TWSVM has better accuracy and also costs lower running time than other baseline algorithms.

Xu (2017) proposed maximum margin twin spheres SVM algorithm (MMTSSVM) to overcome many limitations of TWSVM while dealing with imbalanced data. This algorithm seeks to determine two homocentric spheres so that smaller one captures as many positive class samples and the larger one repel negative samples simultaneously increases the margin between the spheres.

Richhariya et al. (2018) added a regularization term into the optimization problem of universum TWSVM to make matrices non-singular and improve the generalization performance. The proposed algorithm is called improved universum TWSVM. It has better generalization and training time than USVM and UTWSVM. Richhariya and Tanveer (2019) proposed a fuzzy universum SVM (FUSVM) based on information entropy. Universum support vector machines are more efficient than other SVM methods as it includes prior information of samples. But, it is not effective in case of noise-corrupted datasets. FUSVM introduces weights such that it gives fewer weights to the outlier universum points. Further, the authors proposed a fuzzy universum TWSVM (FUTWSVM). Both the algorithms have better generalization performance than SVM, USVM, TWSVM, and UTWSVM. Recently, Richhariya and Tanveer (2020b) proposed a reduced universum twin support vector machine for class imbalance learning (RUTWSVM-CIL) with the idea of prior information about the data distribution. RUTWSVM-CIL used reduced kernel matrix, and thus applicable for the large sized imbalanced datasets. Numerical experiments on several imbalanced benchmark datasets showed the applicability of RUTWSVM-CIL. Richhariya and Tanveer (2020a) also proposed a novel parametric model for universum based twin support vector machine and extended its application for the classification of Alzheimer’s disease data. Fuzzy universum least squares TWSVM (Richhariya and Tanveer 2021a; Borah et al. 2018) and fuzzy least squares TWSVM (Ganaie et al. 2021b; Gupta and Richhariya 2018) solved a linear system of equations instead of solving the QPPs, hence, the model is fast and requires no external toolbox for solving the optimization problems. Robust TBSVM (Borah and Gupta 2021) is proposed to make the model more robust to noise in imbalance datasets.

3.7 Fuzzy twin support vector machines

Chen and Wu (2018) proposed a novel fuzzy TWSVM (NFTWSVM) which takes care of the problem of dealing with one class playing major role in classification, by assigning fuzzy membership to different samples. The proposed NFTWSVM includes fuzzy neural networks and provides more generalized results. Richhariya and Tanveer (2019) proposed a fuzzy universum SVM (FUSVM) based on information entropy. This algorithm is also discussed in Sect. 3.6. Richhariya and Tanveer (2018a) also proposed a robust fuzzy LS-TWSVM (RFLS-TWSVM-CIL) to boost the performance of TWSVM on imbalanced datasets. The optimization problem in this algorithm is strictly convex as it uses 2-norm of the slack variables. Authors further proposed a fuzzy membership function to deal with imbalanced problems as it provides weights to samples and includes data imbalance ratio. RFLS-TWSVM-CIL obtains better generalization and is computationally more efficient as it requires to solve two linear equations. However, RFLSTWSVM-CIL algorithm implements empirical risk minimization principle which requires inverse of matrices to be positive semi-definite. In order to improvise, Ganaie et al. (2020b) introduced a regularization term to the primal formulation of RFLSTWSVM-CIL. The proposed algorithm is regularized robust fuzzy least squares (RRFLSTWSVM) which doesn’t require the extra assumption of inverse of matrices to be positive semi-definite and performs better than RFLSTWSVM-CIL.

Khemchandani et al. (2018a) formulated fuzzy LS version of TSVC in which each data point has a fuzzy membership value and is allotted to different clusters. This algorithm is also discussed in Section 3.11. Chen et al. (2020a) proposed entropy-based fuzzy least squares TWSVM which considers the fuzzy membership for each data point basis entropy. Rezvani et al. (2019) formulated intuitionistic fuzzy TWSVM (IFTWSVM) which is modified version of fuzzy TWSVM as it considers the position of input data in the feature space and calculate adequate fuzzy membership and also reduces the effect of noise. Zhang et al. (2019) proposed fuzzy TWSVM which assigns fuzzy membership based on intra-class hyperplane and sample affinity. Experimental results showed that this algorithm has better accuracy than TWSVM and classic fuzzy TWSVM. Gupta et al. (2019b) proposed entropy-based fuzzy twin support vector machine (EFTWSVM-CIL) which is an efficient algorithm for imbalanced datasets as it assigns fuzzy membership values based on entropy of the samples. Chen et al. (2020a) proposed entropy-based fuzzy least squares twin support vector machine (EFLSTWSVM) which is an improvised version of EFTWSVM-CIL by formulating the QPPs in least squares sense and retains the superior characteristics of LSTWSVM. Experimental results shown that this algorithm performed better than other baseline fuzzy TWSVM algorithms (Table 3).

3.8 Some other improvements of twin support vector machines

Kumar and Gopal (2008) enhanced TWSVM using smoothing techniques. Authors proposed to transform the primal problems of TWSVM into smooth unconstrained minimization problems and used Newton−Armijo algorithm for optimization. Smooth TWSVM (STWSVM) has comparable generalization to TWSVM but is significantly a faster algorithm. Khemchandani and Chandra (2009) proposed iterative alternating algorithm to make kernels learn efficiently. Zhang (2009) proposed boosting based TWSVM for clustered microcalcifications detection. Results have shown that this method improved detection accuracy. Bagging algorithm was combined with boosting to solve the unstable problem of TWSVM. This method is called BB-TWSVM. Twin support vector machine in linear programs and robust TWSVM (Qi et al. 2013b) have also been proposed for the classification problems (Li and Tian 2014).

Shao et al. (2010) proposed a bi-level programming method to multiple instance classification, called MI-TWSVM. Multiple instance learning (MIL) is a supervised technique wherein the training data points consist of labeled bags and these bags include multiple unlabeled instances. For binary classification, a bag is a negative sample if all the instances in it are negative and it is labeled a positive sample if at least one instance is positive. Similar to TWSVM, the proposed algorithm seeks two hyperplanes thereby the positive hyperplane is closest to the positive instances and as distant as possible from the negative instances and vice-versa. Ghorai et al. (2010) proposed a unity norm TWSVM classifier (UNTWSVM) which includes unity norm equality constraints and a quadratic loss function in the objective function of TWSVM. This algorithm can be solved by sequential quadratic optimization method. UNTWSVM has more computational cost than TWSVM especially for large datasets because of the nonlinear formulation. Generalized TWSVM (Moosaei et al. 2021) proposed two models, in the first model the authors used the \(l_1\) and \(l_\infty \) norm in the optimization problems and in second model the convex, piecewise quadratic objective function is solved via generalized Newton method.

In order to improve TWSVM’s generalization ability and to decrease support vectors (SVs), Peng (2010c) introduced a variable p and parameter \(\nu \) in TWSVM and proposed \(\nu \)-TWSVM. An improved \(\nu \)-TWSVM (Xu and Guo 2014a) has also been proposed for the classification problems. The parameter \(\nu \) controls the trade-off between the SV and marginal error while adaptive p overcomes the limitations of constraints being over-restricted in TWSVM and thus reduces the number of support vectors. But, it gives the same penalties to each misclassified sample and results in over-fitting. Thus, Xu et al. (2012) introduced the rough set theory into \(\nu \)-TWSVM to remediate this limitation. This algorithm is more effective in avoiding over-fitting as it gives different penalties to different points depending upon location. It also has better generalization than \(\nu \)-TWSVM.

Although rough \(\nu \)-TWSVM (Xu et al. 2012) performs better than \(\nu \)-TWSVM, it provides different weights to negative samples and same weights to all the positive samples. Thus, Xu et al. (2014b) formulated K nearest neighbor (KNN) weighted rough \(\nu \)-TWSVM which gives different penalties to the samples of both the classes. It has better accuracy and lower computational cost than \(\nu \)-TWSVM and Rough \(\nu \)-TWSVM. In another attempt to enhance the results of \(\nu \)-TWSVM, Khemchandani et al. (2016) formulated two models for binary classification: \(I\nu \)TWSVM and \(I\nu \)TWSVM Fast. Both the algorithms are faster than \(\nu \)-TWSVM as \(I\nu \)TWSVM solves one small QPP and an unconstrained minimization problem (UMP) while \(I\nu \)TWSVM Fast solves one unimodal function and one UMP. To overcome the disadvantage of TWSVM being sensitive to outliers, Xie and Sun (2015) implemented class centroid and proposed multitask centroid TWSVM for multitask problems.

For handling large scale datasets, Shao et al. (2015) introduce weighted linear loss in TWSVM and proposed weighted linear loss TWSVM (WL-TWSVM) to classify large scale datasets. The proposed algorithm only deals with linear equations which increases the training speed and also has better generalization than TWSVM. To further fasten up the training process, Sharma and Rastogi (2018) and Wang et al. (2018), recently proposed stochastic conjugate gradient method based twin support vector machine (SCH-TWSVM). The resulting model showed effective performance on binary activity classification problem.

Shao et al. (2011) introduced the regularization term in TWSVM to embody SRM principle and formulated a new algorithm called twin bounded support vector machines (TBSVM). To further speed up training, SOR technique is used. Numerical results on various datasets show that TBSVM is faster and has better generalization ability than TWSVM. Ye et al. (2011) formulated localized TWSVM via convex minimization (LC-TWSVM) which effectively constructed two nearest-neighbor graphs in the original input space to reduce the space complexity of TWSVM. Shao and Deng (2013) in 2012 considered the unity norm constraints and added a regularization term to minimize structural risk in TWSVM and proposed margin-based TWSVM with unity norm hyperplanes (UNHMTWSVM). The proposed algorithm is fast, has better generalization and accurate compared to TWSVM. Lagrangian TBSVM with \(L_2\) norm (Gupta and Gupta 2019) replaced \(L_1\) norm of the slack variables with \(L_2\) norm to improve the performance.

To include statistical data information in TWSVM, Peng and Xu (2012) formulated twin Mahalanobis distance-based SVM (TMSVM) that uses each classes covariance to determine hyperplanes. It has better training speed and generalization than TWSVM. Shao et al. (2012b) introduced a probability based TWSVM model (P-TWSVM) which is more accurate than TWSVM. In 2012, Shao and Deng (2012) formulated a coordinate descent based TWSVM to increase the efficiency of TWSVM by introducing a regularization term. Further, to solve the dual problems, the authors proposed a coordinate descent method which reduces the training time even in case of large datasets as it deals with single data point at once.

To include the underlying correlation information between data points in TWSVM, Ye et al. (2012b) formulated a novel nonparallel plane classifier, called Weighted TWSVM with Local Information (WL-TWSVM). A major limitation of this algorithm is that it doesn’t work for large-scale problems as it finds the K-nearest neighbors for all the samples. Based on TWSVM, Peng and Xu (2013b) formulated a twin-hypersphere SVM (TH-SVM). Unlike TWSVM, it determines two hyperspheres and avoids the matrix inversions in its dual formulations.

To incorporate the structural data information in TWSVM, Qi et al. (2013a) formulated structural-TWSVM which is superior in training time as well as accuracy to that of TWSVM. However, it still ignores the importance of different samples within each cluster. To overcome this drawback, Pan et al. (2015) uses K-nearest neighbor based structural TWSVM which provides different penalties to the samples of different classes. However, it suffers from overfitting due to empirical risk minimisation. To reduce the overfitting issues, efficient KNN weighted TWSVM (Xie and Xu 2019) introduced the regularisation term to avoid the issues of overfitting. This also ensured the minimization of structural risk which results in better generalization.

Shao et al. (2014a) formulated a different nonparallel hyperplane SVM in which hyperplanes are determined by clustering the samples based on similarity between the two classes. This algorithm has better or comparable accuracy with low computational time. To optimally select the parameters in TWSVM, Ding et al. (2016) proposed TWSVM build on Fruit Fly Optimization Algorithm (FOA-TWSVM). It can optimally select the parameters in less time and with better accuracy. In 2017, Pan et al. (2017) proposed safe screening rules to make TWSVM efficient for large-scale classification problems. The safe screening rules reduce the scale by eliminating training samples and giving same solution as the original problem. Experimental results show that safe screening rules can greatly reduce the computational time while giving the same solutions as original ones. Yang and Xu (2018) proposed safe sample screening rule (SSSR) for Laplacian twin parametric-margin SVM (LTPSVM) to address the problems while handling large-scale problems. Pang and Xu (2019) proposed safe screening rule for Weighted TWSVM with local information (WLTWSVM) to implement the algorithm for large-scale datasets. Experimental results demonstrate the effectiveness of SSSR for WLTWSVM as it performes better than SVM and TWSVM with significantly less computational time. Recently, Zhao et al. (2019) proposed an efficient non-parallel hyperplane Universum support vector machine (U-NHSVM) for classification problems. U-NHSVM is flexible to exploit the prior information in universum.

Tanveer (2015c) proposed unconstrained minimization problem (UMP) formulation of Linear programming TWSVM to enhance robustness and sparsity in TWSVM. Tanveer (2015a) also proposed an implicit Lagrangian TWSVM which is solved by using finite Newton method. Tanveer and Shubham (2017a) proposed smooth TWSVM via UMP which increases the generalization ability and training speed of TWSVM.

Multi-label learning deals with data having multiple labels and has gained a lot of attention recently. Chen et al. (2016) proposed multi-label TWSVM (MLTWSVM) that exploits multi-label information from instances. Azad-Manjiri et al. (2020) proposed structural twin support vector machine for multi-label learning (ML-STWSVM) which embeds the prior structural information of data into the optimization function of MLTWSVM based on the same clustering technology of S-TWSVM. This algorithm achieved better performance compared to other baseline multi-label learning algorithms.

Rastogi et al. (2018b) proposed a new loss function termed as \((\epsilon _1, \epsilon _2)\)-insensitive zone pinball loss function which generalizes other existing loss functions e.g. pinball loss, hinge loss. The resulting model takes care of noise insensitivity, instability to re-sampling and scatterdness present in the datasets. Tanveer et al. (2019) presented rigorous comparison of 187 classifiers which includes 8 variants of TWSVM and exhaustive evaluation of these classifiers was performed on 90 UCI benchmark datasets. Results have shown that RELS TSVM achieved highest performance than all other classifiers for binary classification task. Thus, RELSTSVM is the best TWSVM based classifier. For more details, one can refer to Tanveer et al. (2019). Ganaie and Tanveer (2020) proposed a novel classification approach using pre-trained functional link to enhance the feature space. Authors performed the classification task by LSTWSVM on the enhanced features and validated the performance on various datasets. Some other recent research on TWSVM include (Ganaie et al. 2020a) where authors proposed a novel way for generating oblique decision trees. The classification of training samples is done based on the Bhattachrayya distance with randomly selected feature subset and then hyperplanes are generated using TBSVM. The major advantage of the proposed model is that there is no need for any extra regularization as matrices are positive definite. The ensemble (Ganaie et al. 2021a) based models of twin SVM based models was proposed in Tanveer et al. (2021a).

3.9 Twin support vector machine for multi-class classification

Earlier, TWSVM was only implemented to solve binary class problems, however, the majority of problems in the real-world applications are generally based on multicategory classification. Thus, Xu et al. (2013) formulated Twin-KSVC. It implements “1-versus-1-rest" form to provide ternary output \((-1, 0, 1)\). This algorithm requires to solve two smaller-sized QPPs. It has better accuracy than 1-versus-rest TWSVM but losses sparsity. Yang et al. (2013) proposed multiple birth SVM (MBSVM). It is computationally better than TWSVM even when number of classes are large.

Xie et al. (2013) extended TWSVM application for multi-class problems and proposed one-versus-all TWSVM (OVA-TWSVM) which solve k-category problem using one-versus-all (OVA) approach to develop k TWSVM. To strengthen the performance of multi-TWSVM, Shao et al. (2013a) formulated a separating decision tree TWSVM (DTTWSVM). The basic idea of DTTWSVM is to embody the best separating principle rule to create a binary tree and then built binary TWSVM model on each node. Experimental results have shown that DTTWSVM has low computational complexity and better generalization.

Xu and Guo (2014b) formulated twin hyper-sphere multi-class SVM (THKSVM) which employs the “rest-versus-1” structure instead of “1-versus-rest” structure in TWSVM. In order to find hyperspheres, it constructs k (no. of classes) classifiers and finds k centers and k radiuses for each hypersphere. Each hypersphere covers maximum points of \(K_1\) classes and is as distant as possible from the rest classes. It has fast computational speed as compared to Twin-KSVC and also inverse operation of matrices are not required while solving dual QPPs of THKSVM. Thus, it performs better on large datasets and has better accuracy than“1-versus-rest” TWSVM but lower than Twin-KSVC. To enhance the performance of Twin-KSVC, Nasiri et al. (2015) formulated LS version of Twin-KSVC that works similar to Twin-KSVC but solves linear system of equations rather than pair of QPPs in Twin-KSVC. The proposed algorithm is fast, simple and has better accuracy and lower training time than Twin-KSVC. To enhance the performance of Twin-KSVC and to include local information of samples, Xu (2016) proposed K-nearest neighbor-based weighted multi-class TWSVM which uses information from within class and applies a weight in the objective function. This algorithm has low computational cost and better accuracy than Twin-KSVC.

Based on the multi-class extension of the binary LS-TWSVM, weighted multi-class LSTWSVM algorithm (Tomar and Agarwal 2015b) and regularized least squares twin SVM (Ali et al. 2022) have been proposed for multi-class imbalanced data. To control sensitivity of classifier, weight setting is employed in loss function for determining each hyperplane. Experimental results have shown the superiority and feasibility of the proposed algorithm for multi-class imbalanced problems. The authors (Tomar and Agarwal 2015c) extended LS-TWSVM for multi-class classification and compared various concepts of a multi-class classifier like “One-versus-All", “One-versus-One", “All-versus-One" and “Directed Acyclic Graph (DAG)". DAG MLS-TWSVM performance is superior and has high computational efficiency.

Yang et al. (2016) formulated least squares recursive projection TWSVM. For each class, it determines k projection axes and needs to solve linear equations. It has similar performance as MP-TWSVM. Based on P-TWSVM, Li et al. (2016) proposed multiple recursive projection TWSVM (Multi-P-TWSVM) which solves k QPPs in order to determine k-projection axes (for k classes). Authors introduced regularization term and recursive procedure which increases the generalization but this algorithm is complex when more orthogonal projection axes are generated.

Ding et al. (2017) review various multi-class algorithms as per their structures: “one-versus-rest”, “one-versus-one”, “binary tree”, “one-versus-one-versus-rest”, “all-versus-one” and “direct acyclic graph” based multi-class TWSVM. All these multi-class TWSVMs have some advantages and disadvantages. In general, one-versus-one TWSVMs have higher performance. Ai et al. (2018) proposed a multi-class classification weighted least squares TSVH using local density information in order to improve the performance of LSTSVH. Authors introduced local density information into LSTSVH to provide weight for each data point in order to avoid noise sensitivity. Pang et al. (2018) proposed K-nearest neighbor-based weighted multi-class TWSVM (KMTWSVM) that incorporated "1-versus-1-versus-rest" strategy for multi-class classification and also takes into account the distribution of all instances. However, it is computationally extensive especially for large-scale problems. Thus, authors in Pang et al. (2018) also proposed safe instance reduction rule (SIR-KMTWSVM) to reduce its computational time. de Lima et al. (2018) proposed improvements on least squares twin multi-class classification SVM which is “one-versus-one-versus-rest” strategy and generated ternary output. The proposed algorithm only needs to deal with linear equations. Numerical results demonstrate that it achieves better classification accuracy than Twin-KSVC (Xu et al. 2013) and LSTKSVC (Nasiri et al. 2015). Qiang et al. (2020) proposed improvement on LSTKSVC by proposing robust weighted linear loss twin multi-class support vector machine (WLT-KSVC) which takes care of the two drawbacks of LSTKSVC; sensitive to outliers and misclassifying some rest class samples due to the use of quadratic loss. Experiments on the UCI and NDC datasets showed promising results of this algorithm but its training accuracy significantly decreases as the number of classes increases. Li et al. (2019) proposed a nonparallel support vector machine (NSVM) for multiclass classification problem. Numerical experiments on several benchmark datasets clearly show the advantage of NSVM. In 2020, Tanveer et al. (2021d) proposed a fast and improved version of KWMTWSVM (Xu and Wang 2014) called least square K-nearest neighbor weighted multi-class TWSVM (LS-KWMTWSVM). Numerical experiments on various KEEL imbalance datasets showed high accuracy and low computational time for the proposed LS-KWMTWSVM as compared to other baseline algorithms. A multiclass nonparallel parametric margin SVM (Du et al. 2021) has also been proposed for multiclass classification. Kernel free least squares TWSVM (Gao et al. 2021) via special fourth order polynomial surface resulted in improved performance in multiclass problems.

3.10 Twin support vector machine for semi-supervised learning

Semi-supervised learning (SSL) techniques have achieved extensive attention from many researchers in the last few years due to its promising applications in machine learning and data mining. In many real-world challenges, labeled data is not easily available and thus it deteriorates the performance of supervised learning algorithms due to insufficient supervised information. SSL overcomes this limitation and uses both unlabeled and labeled data.

Qi et al. (2012b) formulated a novel Laplacian – TWSVM for the semi-supervised classification problem. This algorithm assumes that data points lie in low dimensional space and uses the geometric information of the unlabeled data points. Similar to TWSVM it solves a pair of QPPs with inverse matrix operations. Results have shown that Lap-TWSVM has better flexibility, prediction accuracy and generalization performance than conventional TWSVM. This algorithm has excellent performance for semi-supervised classification problems but due to QPPs and inverse matrix operations, its computational cost is high. Thus, to increase the training speed of Lap-TWSVM, Chen et al. (2014a) formulated LS version of Lap-TWSVM (Lap-LS-TWSVM). Unlike Lap-TWSVM, it needs to deal with linear equations and is done using conjugate gradient algorithm. It has less training time than Lap-TWSVM. Another algorithm was formulated by Chen et al. (2014b) to improve the performance of Lap-TWSVM by transforming the QPPs to UMPs in primal space and smoothing method is used which is effectively solved by Newton-Armijo algorithm. It achieved comparable accuracy as Lap-TWSVM with less computational time. Khemchandani and Pal (2016b) extended this to multi-class classification and formulated Lap-LS-TWSVM which evaluates training samples to “1-versus-1-versus-rest” and provides ternary output \((-1, 0,+1)\). It has better accuracy and less training time than Lap-TWSVM.

Based on the similar idea of Lap-TWSVM, Yang and Xu (2016) proposed an extension of traditional TPMSVM, which makes use of graph Laplacian and creates a connection graph of the training data points whose solution can be obtained by solving two SVM-type QPPs. Experiments reveal that LTPMSVM has higher classification accuracy than SVM, TPMSVM, Lap-TWSVM, and TWSVM.

Khemchandani and Pal (2017), blended the Laplacian—TWSVM and Decision Tree–TWSVM classifier and formulated a tree based classifier for semi-supervised multi-class classification. Extensive experiments on color images shows the feasibility of the model. Another interesting approach in this direction in 2019, has been suggested by Rastogi and Sharma (2019) called as Fast Laplacian TWSVM for Active Learning (\(FLap-TWSVM_{AL}\)) where the authors proposed to identify the most informative and representative training points whose labels are queried for domain experts for annotations. Once the corresponding labels are acquired, this limited labeled and unlabeled data are used to train a fast model that involves solving a QPP and an unconstrained minimization problem to seek the classification hyperplanes.

3.11 Twin support vector machine for clustering

Wang et al. (2015b) formulated twin support vector clustering algorithm (TSVC) build upon TWSVM. It divides the data samples into k clusters such that the data samples are around k cluster center points. It exploits the information from clusters (both between and within clusters) and the center planes are determined by solving a series of QPP. Authors in Wang et al. (2015b) also proposed a nearest neighbor graph (NNG)-based initialization to make the model more stable and efficient. Improved TSVC (Moezzi et al. 2019) decomposed the multiclass clustering problem into multiple two cluster problems.

Khemchandani et al. (2018a) formulated fuzzy least squares version of TSVC in which each data point has a fuzzy membership value and is allotted to different clusters. The proposed algorithm solves primal problems instead of dual problems in TSVC. Experimental results show that the proposed algorithm obtains comparable clustering accuracy to that of TSVC but has less training time.

Khemchandani and Pal (2016a) replaced the hinge loss function of TSVC with weighted linear loss and introduced a regularization term in TSVC which needs to solve linear equations and also implements the structural risk component. The proposed algorithm called weighted linear loss TSVC achieves higher accuracy than TSVC. The loss function proposed in Khemchandani and Pal (2016a) is not continuous and therefore, authors in Rastogi and Pal (2019) modified the weighted linear loss function to form a continuous loss function and implemented TSVC and WLL-TSVC in semi-supervised framework and also introduced a fuzzy extension of semi-supervised WLL-TSVC to make a robust algorithm which is less sensitive to noise. Experimental results have demonstrated that the proposed algorithm achieved better clustering accuracy and less computational time than TSVC and WLL-TSVC. Tree-based localized fuzzy twin support vector clustering (Tree-TSVC) was proposed by Rastogi and Saigal (2017). Tree-TSVC is a novel clustering algorithm that builds the cluster that represents a node on a binary tree, where each node comprises of proposed TWSVM based classifier. Due to the tree structure and the formulation that leads to solving a series of systems of linear equations, Tree-TSVC model is efficient in terms of training time and accuracy.

TSVC uses squared \(L_2\)-norm distance which leads to noise sensitivity. Also, each cluster plane learning iteration requires to solve a QPP which makes it computationally complex. To address these issues in TSVC, Ye et al. (2018) used \(L_1\) norm distance and proposed \(L_1\)-norm distance minimization-based robust TSVC which only deals with linear equations instead of series of QPPs. Ye et al. (2018) further proposed RTSVC and Fast RTSVC to speed up the computation of TSVC in nonlinear case. Numerical experiments shown that this model has higher accuracy as compared to other k-clustering methods and has less computational time than TSVC. Zhen et al. (2019c) proposed ramp-based TSVC by introducing the ramp cost function in TSVC. The solution of the proposed algorithm is obtained by solving non-convex programming problem using an alternating iteration algorithm. Bai et al. (2019) introduced regularization in clustering and proposed large margin TSVC. Bai et al. (2019) proposed a fast least squares TSVC with uniform coding output. The algorithm achieves better performance than TSVC and other plane-clustering methods. Wang et al. (2019d) proposed a general model for plane-based clustering which includes various extensions of k-plane clustering (kPC). It optimizes the problem by minimizing the total loss which is derived from both within-cluster and between-cluster. It can capture data distribution accurately. Richhariya et al. (2020a) proposed projection based least square TSVC (LSPTSVC) and employed the concave-convex procedure (CCCP) to solve the optimization problem. LSPTSVC only needs to solve a set of linear equations and thus leads to significantly less computational time. TSVC uses hinge loss function, hence suffers from the issues of noise and has low sampling stability. To overcome these issues, pinball loss twin support vector clustering (Tanveer et al. 2021b) used pinball loss function to penalize the samples.However, it suffers from the issues of overfitting as it minimises the empirical risk. Hence, pinball loss twin bounded support vector clustering (Tanveer et al. 2021e) introduced the regularisation term to minimise the structural risk and avoids the issues of overfitting. Introduction of pinball loss function leads to loss of sparsity in the model. Hence, sparse pinball loss TSVC (Tanveer et al. 2021c, f) used \(\epsilon \)-insensitive pinball loss function to make the model sparse. It implements the empirical risk minimisation principle and thus suffers due to overfitting. To minimise the overfitting issues, sparse pinball twin bounded support vector clustering (Tanveer et al. 2021e) used the regularisation term to minimise the structural risk and hence, avoid the issues of overfitting.

4 Applications of twin support vector classification

TWSVM has been implemented to solve many real-life classification challenges and has shown promising results in some applications (Fig. 2).

4.1 Biomedical applications

TWSVM has been applied to detect breast cancer in early stages by detecting a mass in digital mammograms (Si and Jing 2009). A hybrid feature selection based approach has also been implemented for detecting breast cancer, Hepatitis, and Diabetes (Tomar and Agarwal 2015a). Wang et al. (2016b) proposed TWSVM in combination with dual-tree complex wavelet transform (DTCWT) method for Pathological Brain Detection. TWSVM is also implemented for detecting cardiac diseases, one such application was proposed by Houssein et al. Houssein et al. (2018), heartbeats were detected using Swarm-TWSVM and this algorithm achieved better accuracy than TWSVM. Refahi et al. (2018) used LSTWSVM and DAG LS-TWSVM classifiers for predicting arrhythmia heart disease. Chandra and Bedi (2018) proposed linear norm fuzzy based TWSVM for color based classification of human skin which achieved better accuracy than other conventional methods. Xu et al. (2015) proposed semi-supervised TWSVM for detection of Acute-on-chronic liver failure (ACLF). Also, many researchers have applied TWSVM to detect Alzheimer’s disease in its early stages (Tanveer et al. 2020; Zhang and Wang 2015; Wang et al. 2016c; Alam et al. 2017; Tomar and Agarwal 2014; Wang et al. 2016b; Tomar et al. 2014b; Wang et al. 2016a; Tomar et al. 2014a; Wang et al. 2015a).

4.2 Alzheimer’s disease prediction

Richhariya and Tanveer (2021b) proposed an angle based universum least squares TWSVM (AULSTWSVM) which performed with 95% accuracy for detecting Alzheimer’s disease (AD). Richhariya et al. (2020b) also proposed universum support vector machine based recursive feature elimination (USVM-RFE) for detecting AD. Khan et al. (2021) proposed an approach to improve the classification accuracy in mild cognitive impairment (MCI), normal control (NC), and AD subjects using structural magnetic resonance imaging (sMRI). Authors used FreeSurfer to process MRI data and derive cortical features which are used in TWSVM, LSTWSVM and RELSTSVM to detect AD. Sharma et al. (2022) proposed fuzzy LS-TWSVM based deep learning network for prognosis of the Alzheimer’s disease.

4.3 Speaker recognition

Cong et al. (2008) formulated multi-class TWSVM for speaker recognition with feature extraction based on gaussian mixture models (GMMs). It gives better results than traditional SVM. Yang and Wu (2009) proposed multi-TWSVM which find hyperplane for every class and takes constraints from other classes separately on the QPP. This algorithm performed better than many other algorithms for speaker recognition.

4.4 Text categorization

Kumar and Gopal (2009) formulated a least squares TWSVM and experiments have shown the validity of the model for text applications. Francis and Sreenath (2019) proposed manifold regularized TWSVM for text recognition and the proposed method achieved highest accuracy among SVM, LSTWSVM and other methods. Non-parallel SVM (Tian et al. 2014b) and efficient pinball TWSVM (Rastogi and Pal 2021) has also demonstrated better performance in text categorization.

4.5 Intrusion detection

It is a system which monitors or protects the network against any malicious activity. It has been a critical component for network security. Researchers (He and Zheng 2014; Ding et al. 2008; Mousavi et al. 2015; Nie et al. 2013) applied TWSVM for intrusion detection and results have shown that it achieves better accuracy than other intrusion detection algorithms.

4.6 Human activity recognition

Khemchandani and Sharma (2016) proposed least square TWSVM for human activity recognition which gives promising results even with the outliers. Nasiri et al. (2014) formulated energy-based LS-TWSVM algorithm. Khemchandani and Sharma (2017) also proposed robust parametric twin support vector machine which can effectively deal with the noise. Mozafari et al. (2011) used the Harris detector algorithm and applied LS-TWSVM for action recognition and achieved the highest accuracy than other state-of-the-art methods. Kumar and Rajagopal (2018) proposed Multi-class TWSVM for detecting human face happiness combined with Constrained Local Model. Kumar and Rajagopal (2019) also proposed semi-supervised multi TWSVM to predict human facial emotions with 13 minimal features that can detect six basic human emotions. Algorithm achieved highest accuracy and least computation time with minimal feature vectors.

4.7 Image denoising

Yang et al. (2014) proposed edge/texture-preserving image denoising based on TWSVM which is very effective to preserve the informative features such as edges and textures and better than other image denoising methods available. Shahdoosti and Hazavei (2018) proposed a ripplet formulation of the total variation method for denoising images. This algorithm attains promising results in improving the image quality in terms of both subjective and objective inspections.

4.8 Electroencephalogram (EEG)

Classification of electroencephalogram (EEG) for different mental activities has been an active research topic. Richhariya and Tanveer (2018b) proposed universum TWSVM which is insensitive to outliers as it selects universum from the EEG datasets itself to generate universum data points which remove the effect of outliers. Soman (2015) used the classifiable metric to choose discriminative frequency bands and used the TWSVM to learn imbalanced datasets. Tanveer et al. (2018) proposed entropy based features in Flexible analytic wavelet transform (FAWT) framework and RELSTSVM Tanveer et al. (2016a) for classification to detect epileptic seizure EEG. Tanveer et al. (2018) used FAWT framework for classification of seizure and seizure-free EEG signals with Hjorth parameters as features and implemented TWSVM, LSTWSVM and RELSTSVM (Tanveer et al. 2016a) for classifying signals. Li et al. (2018) proposed LSTWSVM with a frequency band selection common spatial pattern algorithm for detecting motor imagery electroencephalography. This algorithm achieved faster training time compared to other SVM baseline algorithms. Some more researchers have applied TWSVM for EEG classification, biometric identification and other leak detection challenges (Kumar and Gupta 2021; Gupta et al. 2021; She et al. 2015; Li et al. 2018; Kostílek and Št’astnỳ 2012; Lang et al. 2017; Dalal et al. 2019)

4.9 Other applications

Cao et al. (2018) proposed improved TWSVM with multi-objective cuckoo search to predict software bugs. Authors employed TWSVM to predict defected modules and used cuckoo search to optimize TWSVM and this proposed method achieved better accuracy than other software defect prediction methods. Chu et al. (2018) proposed Multi-information TWSVM for detecting steel surface defects. The TWSVM models have also been used in image recognition or face recognition (Qi et al. 2013b; Peng and Xu 2012; Chen and Wu 2018; Peng and Xu 2013a) and facial expression recognition (Richhariya and Gupta 2019) and privacy preservation (Anand et al. 2019).

The pinball loss function is defined as follows:

where \(g_y(x)=g(x;w_y,b_y)=w_y^Tx+b_y=0\), \({\underline{D}}(g_y(A,0))\) denotes the intraclass distance which represents objective function while \({\overline{D}}(g_y(A),g_y(B))\) is interclass distance which corresponds to constraints, \(D(g_y(x))\) is the perpendicular distance of point x from the hyperplane \(g_y(x)=0\).

Optimization framework of various TWSVM algorithms are discussed in Table 4 (Li et al. 2019).

Table 5 shows the differences in major TWSVM methods based on the SRM principle, sparsity, matrix inversion and noise insensitivity.

5 Basic theory of twin support vector regression

Peng (2010b) proposed an efficient twin support vector regression (TSVR) algorithm in line with TWSVM, called twin support vector regresson (TSVR). Like TWSVM, it also requires to solve two QPPs. It finds an end regressor that is the mean of \(\varepsilon \)-insensitive up and down bound functions.TSVR has less computational time than a standard SVR and has better generalization ability. The down- and up-bound functions for linear case are given below:

For any \(x\in R^{n}\), the two hyperplanes are defined as follows:

The two QPPs in linear case are defined as below:

and

here, \(C_{1} ,C_{2} >0\); \(\varepsilon _{1} ,\varepsilon _{2} >0\) and \(\zeta _1 ,\zeta _2 \) are slack variables. The final regressor is the mean of up and down regressors in (11), which is given as follows

For nonlinear case, kernel surfaces are used rather than hyperplanes which are given below:

The two QPPs in non-linear case are defined as below:

and

For more details, one can refer to Peng (2010b).

Although taking motivation from TWSVM formulation, Peng (2010b) attempted to propose TSVR where the regressor is obtained via solving a pair of quadratic programming problems (QPPs). However, later authors in Khemchandani et al. (2016) argued that TSVR formulation is not in the true spirit of TWSVM. Further, taking the motivation from Bi and Bennett (2003), they proposed an alternative approach to find a formulation for TSVR which is in the true spirit of TWSVM. They have shown that their proposed TSVR formulation can be derived from TWSVM for an appropriately constructed classification problem.

6 Research progress on twin support vector regression

In this section, we discuss the progress of twin SVM based models for the regression problems.

6.1 Weighted twin support vector regression