Abstract

Big data systems for reinforcement learning have often exhibited problems (e.g., failures or errors) when their components involve stochastic nature with the continuous control actions of reliability and quality. The complexity of big data systems and their stochastic features raise the challenge of uncertainty. This article proposes a dynamic coherent quality measure focusing on an axiomatic framework by characterizing the probability of critical errors that can be used to evaluate if the conveyed information of big data interacts efficiently with the integrated system (i.e., system of systems) to achieve desired performance. Herein, we consider two new measures that compute the higher-than-expected error,—that is, the tail error and its conditional expectation of the excessive error (conditional tail error)—as a quality measure of a big data system. We illustrate several properties (that suffice stochastic time-invariance) of the proposed dynamic coherent quality measure for a big data system. We apply the proposed measures in an empirical study with three wavelet-based big data systems in monitoring and forecasting electricity demand to conduct the reliability and quality management in terms of minimizing decision-making errors. Performance of using our approach in the assessment illustrates its superiority and confirms the efficiency and robustness of the proposed method.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Big data have become a torrent, flowing into every area of business and service along with the integration of information and communication technology and the increasingly development of decentralized and self-organizing infrastructures (see Sun et al. 2015, for example). A big data system (BDS), which in our article refers to the big data-initiated system of systems, is an incorporation of a task-oriented or dedicated system that interoperates with big data resources, integrates their proprietary infrastructural components, and improves their analytic capabilities to create a new and more sophisticated system for more functional and superior performance. For example, the Internet of Things (IoT), as described by Deichmann et al. (2015) is a BDS, and its network’s physical objects through the application of embedded sensors, actuators, and other devices can collect or transmit data about the objects. BDS generates an enormous amount of data through devices that are networked through computer systems.

Challenges to BDS are therefore unavoidable with the tremendously ever-increasing scale and complexity of systems. Hazen et al. (2014) address the data quality problem in the context of supply chain management and propose methods for monitoring and controlling data quality. The fundamental challenge of BDS is that there exists an increasing number of software and hardware failures when the scale of an application increases. Pham (2006) discusses the system software reliability in theory and practice. Component failure is the norm rather than exception, and applications must be designed to be resilient to failures and diligently handle them to ensure continued operations. The second challenge is that complexity increases with an increase in scale. There are more component interactions, increasingly unpredictable requests on the processing of each component, and greater competition for shared resources among interconnected components. This inherent complexity and non-deterministic behavior makes diagnosing aberrant behavior an immense challenge. Identifying whether the interruption is caused by the processing implementation itself or unexpected interactions with other components turns out to be rather ambiguous. The third challenge is that scale makes a thorough testing of big data applications before deployment impractical and infeasible.

BDS pools all resources and capabilities together and offers more functionality and performance with sophisticated technologies to ensure the efficiency of such an integrated system. Therefore, the efficiency of BDS should be higher than the merged efficiency of all constituent systems. Deichmann et al. (2015) point out that the replacement cycle for networked data may be longer than the innovation cycle for the algorithms embedded within those systems. BDS therefore should consider ways to upgrade its computational capabilities to enable continuous delivery of data (information) updates. Modular designs are required so the system can refresh discrete components (e.g., data) of a BDS on a rolling basis without having to upgrade the whole database. To pursue a continuous-delivery model of information or decision, BDS must be enabled to review its computational processes and be focused simultaneously on supporting data flows that must be updated quickly and frequently, such as auto-maintenance. In addition, BDS has to ensure speed and fidelity in computation and stability and security in operation. Networking efficiency is huge, but there is a lot of uncertainty over it. Questions thus arise about how to accurately assess the performance of BDS, and how to build a technology stackFootnote 1 to support it.

Quality measures quantify a system’s noncompliance with the desirable or intended standard or objective. Such noncompliance may occur for a process, outcome, component, structure, product, service, procedure, or system, and its occurrence usually causes loss. Enlightened by the literature of managing the extreme events and disasters, in this article we work on an axiomatic framework of new measures (based on the tail distribution of critical defect or error) to address how to conduct optimal quality management for BDS with respect to a dynamic coherent measurement that has been well established particularly for managing risk or loss—see, for example, Riedel (2004), Artzner et al. (2007), Sun et al. (2009), Bion-Nadal (2009) and Chun et al. (2012) as well as references therein. Conventional quality measures focus on quantizing either the central moment (i.e., the mean) or the extreme value (e.g., six sigma) of error (or failure). The newly proposed measure focuses on a specific range of distribution instead of a specific point, which modulates the overall exposure of defects for quality management.

Conventional measurement based on average performance such as mean absolute error (MAE) is particularly susceptible to the influence of outliers. When we conduct system engineering, systems have their individual uncertainties that lead to error propagation that will distort the overall efficiency. When these individual uncertainties are correlated with an unknown pattern, simple aggregating a central tendency measure gives rise to erroneous judgment. The six sigma approach that investigates the extremumFootnote 2 has received considerable attention in the quality literature and general business and management literature. However, this approach has shown various deficiencies such as rigidity, inadequate for complex system, and non-subadditivity, particularly, not suitable for an integrated system or system of dynamic systems. However, Baucells and Borgonovo (2013) and Sun et al. (2007) suggested the Kolmogorov–Smirnov (KS) distance, Cramér–von Mises (CVM) distance, and Kuiper (K) distance as the metrics for evaluation. These measures are based on the probability distribution of malfunction and only provide information for relative comparison of extremum. They fail to identify the absolute difference when we need to know the substantiality of difference. In order to overcome these shortcomings, we propose a dynamic measurement applying tail error (TE) and its derivation, conditional tail error (CTE), to quantify the higher-than-expected failure (or critical error) of a BDS.

Tail error (TE) describes the performance of a system in a worst-case scenario or the critical error for a really bad performance with a given confidence level. TE measures an error below a given probability \(\alpha \) that can be treated as a percentage of all errors. \(\alpha \) is then the probability such that an error greater than TE is less than or equal to \(\alpha \), whereas an error less than TE is less than or equal to \(1-\alpha \). For example, one can state that it is 95% (i.e., \(\alpha =5\%\)) sure for a given system processing that the highest defect rate is no more than 15% (which is the tail error that can be tolerated). TE can also be technically defined as a higher-than-expected error of an outcome moving more than a certain tolerance level,—for example, a latency with three standard deviations away from the mean, or even six standard deviations away from the mean (i.e., the six sigma). For mere mortals, it has come to signify any big downward move in dependability. Because an expectation is fairly subjective, a decision maker can appropriately adjust it with respect to different severities.

When we assess (e.g., zoom in) tail-end (far distance from zero) along with the error (or failure) distribution, the conditional tail error (CTE) is a type of sensitivity measure derived from TE describing the shape of the error (or failure) distribution for the far-end tail departing from TE. CTE also quantifies the likelihood (at a specific confidence level) that a specific error will exceed the tail error (i.e., the worst case for a given confidence level), which is mathematically derived by taking a weighted average between the tail error and the errors exceeding it. CTE evaluates the value of an error in a conservative way, focusing on the most severe (i.e., intolerable) outcomes above the tail error. The dynamic coherent version of CTE is the upper bound of the exponentially decreasing bounds for TE and CTE. CTE is convex and can be additive for an independent system. A preternatural character of them is to quantitate the density of errors by choosing different \(\alpha \) values (or percentages). Therefore, a subjective tolerance (determined by \(\alpha \)) of error can be predetermined by a specific utility function of the decision maker. Intrinsic features of the conditional probability guarantee its consistency and coherence when applying it to dynamic quality management throughout the entire process. We show several properties of these measures in our study and explain how to compute them for quality management of a BDS.

We organize the article as follows. Section 2 introduces some background information focusing on BDS and quality management. We describe the prevailing paradigm for the quality management of a big data system. We then introduce the methodology in more details and illustrate several properties of the proposed framework. Section 3 presents three big data systems (i.e., SOWDA, GOWDA, and WRASA) based on wavelet algorithms. Section 4 conducts an empirical study to evaluate these three systems’ performances on the big electricity demand data from France through analysis and forecasting. It also provides a discussion with simulation for several special cases that have not been observed in the empirical study. Our empirical and simulation results confirm the efficiency and robustness of the method proposed herein. We summarize our conclusions in Sect. 5.

2 Quality management for big data system

Optimal quality management refers to the methodologies, strategies, technologies, systems, and applications that analyze critical data to help an institution or individual better understand the viability, stability, and performance of systems with timely beneficial decisions in order to maintain a desired level of excellence. In addition to the data processing and analytical technologies, quality management for BDS assesses macroscopic and microscopic aspects of system processing and then combines all relevant information to conduct various high-impact operations to ensure the system’s consistency. Therefore, the foundation of quality management for BDS is how to efficiently evaluate the interoperations of all components dealing with the big data through robust methodologies (see Sun et al. 2015, for example). In this section we first classify BDS—that is, the fundamentals for the system of systems engineering. We then discuss quality lifecycle management (QLM) for systematic quality management. We propose the framework of dynamic coherent quality management, which is a continuous process to consistently identify critical errors. We then set up the quantitative framework of metrics to evaluate the decision-making quality of BDS in terms of error control.

2.1 Big data system

The integration and assessment of a broad spectrum of data—where such data usually illustrate the typical “6Vs” (i. e., variety, velocity, volume, veracity, viability, and value)—affect a big data system. Sun et al. (2015) summarize four features of big data as follows: (1) autonomous and heterogeneous resource, (2) diverse dimensionality, (3) unconventional processing, and (4) dynamic complexity. Monitoring and controlling data quality turn out to be more critical (see Hazen et al. 2014, for example). In our article we refer to the big data initiated a system of systems as BDS, which is an incorporation of task-oriented or dedicated systems that interoperate their big data resources, integrate their proprietary infrastructural components, and improve their analytic capabilities to create a new and more sophisticated system for more functional and superior performance. A complete BDS contains (1) data engineering that intends to design, develop, integrate data from various resources, and run ETL (extract, transform and load) on top of big datasets and (2) data science that applies data mining, machine learning, and statistics approaches to discovery knowledge and develop intelligence (such as decision making).

When integrating all resources and capabilities together to a BDS for more functionality and performance, such a processing is called system of systems engineering (SoSE) (see Keating and Katina 2011, for example). SoSE for BDS integrates various, distributed, and autonomous systems to a large sophisticated system that pools interacting, interrelated, and interdependent components as an incorporation. We illustrate three fundamental architectures of BDS with Fig. 1 centralized, fragmented, and distributed.

Fundamental architectures of a big data system (BDS). Dots indicate components (processors and terminals) and edges are links. The component could be a system or a unit. a Centralized BDS that has a central location, using terminals that are attached to the central. b Fragmented (or decentralized) BDS that allocates one central operator to many local clusters that communicate with associated processors (or terminals). c Distributed BDS that spreads out computer programming and data on many equally-weighted processors (or terminals) that directly communicate with each other

Centralized BDS has a central operator that serves as the acting agent for all communications with associated processors (or terminals). Fragmented (or decentralized) BDS allocates one central operator to many local clusters that communicate with associated processors (or terminals). Distributed BDS spreads out computer programming and data on many equally weighted processors (or terminals) that directly communicate with each other. For more details about data center network topologies, see Liu et al. (2013) and references therein.

We can identify five properties of BDS following “Maier’s criteria” (see Maier 1998): (1) operational independence, i.e., each subsystem of BDS is independent and achieves its purposes by itself; (2) managerial independence, that is, each subsystem of BDS is managed in large part for its own purposes rather than the purposes of the whole; (3) heterogeneity, that is, different technologies and implementation media are involved and a large geographic extent will be considered; (4) emergent behavior, that is, BDS has capabilities and properties in which the interaction of BDS inevitably results in behaviors that are not predictable in advance for the component systems; and (5) evolutionary development, that is, a BDS evolves with time and experience. This classification shares some joint properties that can be used to characterize a complex system,—for example, the last three properties of heterogeneity, emergence, and evolution.

2.2 Systematic quality management

Modeling a system’s featuring quality helps characterize the availability and reliability of the underlying system. The unavailability and unreliability of a system presents an overshoot with respect to its asymptotic metrics of performance with the hazard that characterizes the failure rate associated with design inadequacy. Levels of uncertainty in modeling and control are likely to be significantly higher with BDS. Greater attention must be paid to the stochastic aspects of learning and identification. Uncertainty and risk must be rigorously managed.

These arguments push for a renewed mechanism on system monitoring, fault detection and diagnosis, and fault-tolerant control that include protective redundancies at the hardware and software level (see Shooman 2002). Rigorous, scalable, and sophisticated algorithms shall be applied for assuring the quality, reliability, and safety of the BDS. Achieving consistent quality in the intelligent decision of the BDS is a multidimensional challenge with increasingly global data flows, a widespread network of subsystems, technical complexity, and a highly demanding computational efficiency.

To be most effective, quality management should be considered as a continuous improvement program with learning as its core. Wu and Zhang (2013) point out that exploitative-oriented and explorative-oriented quality management need to consistently ensure quality control on the operation lifecycle throughout the entire process. Parast and Adams (2012) highlight two theoretical perspectives (i.e., convergence theory and institutional theory) of quality management over time. Both of them emphasize that quality management evolves over time by using cross-sectional, adaptive, flexible, and collaborative methods so that the quality information obtained in one lifecycle stage can be transferred to relevant processes in other lifecycle stages. Contingency theory also recommends that the operation of quality control be continually adapted (see Agarwal et al. 2013, for example), and quality information must therefore be highly available throughout the whole system to ensure that any and all decisions that may require quality data or affect performance quality are informed in a timely, efficient, and accurate fashion.

Quality lifecycle management (QLM)Footnote 3 is system-wide, cross-functional solution to ensure that processing performance, reliability, and safety are aligned with the requirements set for them over the course of the system performance. Mellat-Parst and Digman (2008) extend the concept of quality beyond the scope of a firm by providing a network perspective of quality. QLM is used to build quality, reliability, and risk planning into every part of the processing lifecycle by aligning functional needs with performance requirements, ensuring that these requirements are met by specific characteristics and tracking these characteristics systematically throughout design, testing, development, and operation to ensure the requirements are met at every lifecycle stage. Outputs from each lifecycle stage, including analysis results, performance failures, corrective actions, systemic calibrations, and algorithmic verifications, are compiled within a single database platform using QLM. They are made accessible to other relevant lifecycle stages using automated processes. This guarantees the continuous improvement of systemic performance over the course of development and further improvement. QLM links together the quality, reliability, and safety activities that take place across every stage of the product development lifecycle. Through QLM, one lifecycle stage informs the next, and the feedback from each stage is automatically fed into other stages to which it relates, we then obtain a unified and holistic view of overall quality of performance.

2.3 The methodology

In this section, we describe the axiomatic framework for QLM with respect to the dynamic coherent error measures. Based on the Kullback–Leibler distance, we propose two concepts—that is, the tail error (TE) and conditional tail error (CTE)—and their dynamic setting to guarantee the feature of time consistency. These two error measures can be applied dynamically to control performance errors at each stage of BDS in order to conduct QLM.

2.3.1 Notation and definitions

The uncertainty of future states shall be represented by a probability space (\(\Omega , {\mathcal {F}}, P\)) that is, the domain of all random variables. At time t a big data system is employed to make a decision about future scenario after a given time horizon \(\Delta \), for example, to predict price change from t to \(t+\Delta \). We denote a quantitative measure of the future scenario at time t by V(t) that is a random variable and observable through time. The difference between the predicted scenario at time t and its true observation at time \(t+\Delta \) is then measured by \(V(t+\Delta )-V(t)\). If we denote \({\widetilde{V}}(\cdot )\) as the prediction and \(V(\cdot )\) the observation, then the Kullback–Leibler distance or divergence of the prediction error is given by the following definition:

Definition 1

For given probability density functions V(x) and \({\widetilde{V}}(x)\) on an open set \({\mathcal {A}} \in \mathfrak {R}^{N}\), the Kullback–Leibler distance or divergence of \({\widetilde{V}}(x) \) from V(x) is defined by

Axiom 1

Assume that V(x) and \({\widetilde{V}}(x)\) are continuous on an open set \({\mathcal {A}}\), then for arbitrary V(x) and \({\widetilde{V}}(x)\), \({\mathcal {K}}(V\Vert {\widetilde{V}})\ge 0\); and \({\mathcal {K}}(V\Vert {\widetilde{V}})= 0\) if and only if \(V(x)={\widetilde{V}}(x)\) for any \(x \in {\mathcal {A}} \).

Because \({\widetilde{V}}(x)\) and V(x) are density functions, we have \(\int {\widetilde{V}}(x)\mathrm {d}x=1\) and \(\int V(x)\mathrm {d}x=1\), which shows Axiom 1.

Axiom 2

Consider a set \({\mathcal {K}}\) of real-valued random variables. A functional \(\rho : {\mathcal {D}} \rightarrow {\mathbb {R}}\) is referred to be a consistent error measure for \({\mathcal {D}}\) if it satisfies all of the following axioms:

-

(1)

\(\forall \,{\mathcal {K}}_1, {\mathcal {K}}_2, \in {\mathcal {D}}\) such that \({\mathcal {K}}_2\ge {\mathcal {K}}_1\) a.s., we have \(\rho ({\mathcal {K}}_1) \le \rho ({\mathcal {K}}_2)\).

-

(2)

\(\forall \,{\mathcal {K}}_1, {\mathcal {K}}_2, \in {\mathcal {D}}\) such that \({\mathcal {K}}_1 + {\mathcal {K}}_2 \in {\mathcal {D}}\), we have \(\rho ({\mathcal {K}}_1 + {\mathcal {K}}_2) \le \rho ({\mathcal {K}}_1) + \rho ({\mathcal {K}}_2)\); for \({\mathcal {K}}_1\) and \({\mathcal {K}}_2\) are comonotonic random variables, that is, for every \(\omega _1,\omega _2 \in \Omega : \; ({\mathcal {K}}_1(\omega _2) - {\mathcal {K}}_1(\omega _1))({\mathcal {K}}_2(\omega _2) - {\mathcal {K}}_2(\omega _1)) \ge 0\), we have \(\rho ({\mathcal {K}}_1+{\mathcal {K}}_2) = \rho ({\mathcal {K}}_1) + \rho ({\mathcal {K}}_2)\).

-

(3)

\( \forall \, {\mathcal {K}} \in {\mathcal {D}}\) and \(\gamma \in \mathfrak {R}_{+}\) such that \(\gamma {\mathcal {K}} \in {\mathcal {D}}\), we have \(\rho (\gamma {\mathcal {K}}) \le \gamma \rho ({\mathcal {K}})\).

-

(4)

\(\forall \,{\mathcal {K}} \in {\mathcal {D}}\) and \(a \in \mathfrak {R}\) such that \({\mathcal {K}} + a \in {\mathcal {D}}\), we have \(\rho (X + a) = \rho (X)+a\).

-

(5)

\( \forall \,{\mathcal {K}}_1, {\mathcal {K}}_2, \in {\mathcal {D}}\) with cumulative distribution functions \(F_{{\mathcal {K}}_1}\) and \(F_{{\mathcal {K}}_2}\) respectively, if \(F_{{\mathcal {K}}_1} = F_{{\mathcal {K}}_2}\) then \(\rho ({\mathcal {K}}_1) = \rho ({\mathcal {K}}_2)\).

This axiom is motivated by the coherent measure proposed by Artzner et al. (2007). Here, we modify the axiomatic framework of consistency in describing the error function. By Axiom 2-(1), a monotonicity of the error function is proposed: the larger the Kullback–Leibler distance is, the larger the error metric will be. Axiom 2-(2) says that if we combine two tasks, then the total error of them is not greater than the sum of the error associated with each of them, if these two tasks are independent. When these two tasks are dependent, the total error of them is equal to the sum of the error associated with each of them. It is also called subadditivity in risk management and also is applied in systematic computing such that an integration does not create any extra error. Axiom 2-(3) can be derived if Axiom 2-(2) holds, and it reflects positive homogeneity. It shows that an error reduction requires that processing uncertainty should linearly influence task loans. Axiom 2-(4) shows that the error will not be altered by adding or subtracting a deterministic quantity a to a task. Axiom 2-(5) states the probabilistic equivalence that is derived directly from Axiom 1.

2.3.2 Dynamic error measures

A dynamic error measure induces for each operation a quality control process describing the error associated with the operation over the time. O’Neill et al. (2015) point out that quality management helps maintain the inherent characteristics (distinguishing features) in order to fulfill certain requirements. The inherence of quality requires a dynamic measure to conduct continuous processing of error assessment in the rapidly changing circumstances of an operational system. During the dynamic phase, the decision-making process involves investigating the errors generated by the big data systems, selecting an appropriate response (system of work), and making a judgment on whether the errors indicate a systematic failure. How to interrelate quality control process over different periods of time is important for the dynamic measure. Consequently, a dynamic error measure should take into account the information available at the time of assessment and update the new information continuously over time. Time consistency then plays a crucial role in evaluating the performance of dynamic measures, particularly for big data systems that run everlastingly before stopping. Therefore, the quality measure for errors shall preserve the property of consistency.

Bion-Nadal (2008) defines a dynamic risk measures based on a continuous time filtered probability space as (\(\Omega , {\mathcal {F}}_{\infty }, ({\mathcal {F}}_t)_{t\in \mathfrak {R}^+}, P\)) where the filtration \(({\mathcal {F}}_t)_{t\in \mathfrak {R}^+}\) is right continuous. Similarly, following the Axiom 2, we can extend it to a dynamic error measure following Bion-Nadal (2008), Chen et al. (2017), and references therein.

Definition 2

For any stopping time \(\tau \), we define the \(\sigma \)-algebra \({\mathcal {F}}_{\tau }\) by \({\mathcal {F}}_{\tau }=\{ {\mathcal {K}} \in {\mathcal {F}}_{\infty }\, |\, \forall \, t \in \mathfrak {R}^+:{\mathcal {K}} \cap \{\tau \le t\} \in {\mathcal {F}}_t \}\). Then \(L^{\infty }(\Omega , {\mathcal {F}}_{\tau }, P)\) is the Banach algebra of essentially bounded real valued \({\mathcal {F}}_{\tau }\)-measurable functions.

The Kullback–Leibler distance for the system error will be identified as an essentially bounded \({\mathcal {F}}_{\tau }\)-measurable function with its class in \(L^{\infty }(\Omega , {\mathcal {F}}_{\tau }, P)\). Therefore, an error at a stopping time \(\tau \) is an element of \(L^{\infty }(\Omega , {\mathcal {F}}_{\tau }, P)\). We can distinguish a finite time horizon as a case defined by \({\mathcal {F}}_t\,=\,{\mathcal {F}}_T \;\forall \, t\ge T\).

Axiom 3

For all \({\mathcal {K}} \in L^{\infty }({\mathcal {F}}_{\tau })\), a dynamic error measure \((\varrho _{\tau _1, \tau _2})_{0\le \tau _1 \le \tau _2}\) on (\(\Omega , {\mathcal {F}}_{\infty }, ({\mathcal {F}}_t)_{t\in \mathfrak {R}^+}, P\)), where \(\tau _1\le \tau _2\) are two stopping times, is a family of maps defined on \(L^{\infty }(\Omega , {\mathcal {F}}_{\tau _2}, P)\) with values in \(L^{\infty }(\Omega , {\mathcal {F}}_{\tau _1}, P)\). \((\varrho _{\tau _1, \tau _2})\) is consistent if it satisfies the following properties:

-

(1)

\(\forall \,{\mathcal {K}}_1, {\mathcal {K}}_2, \in L^{\infty }({\mathcal {F}}_{\tau })^2\) such that \({\mathcal {K}}_2\ge {\mathcal {K}}_1\) a.s., we have \(\varrho _{\tau _1, \tau _2}({\mathcal {K}}_1) \le \varrho _{\tau _1, \tau _2}({\mathcal {K}}_2)\).

-

(2)

\(\forall \, {\mathcal {K}}_1, {\mathcal {K}}_2, \in L^{\infty }({\mathcal {F}}_{\tau })^2\) such that \({\mathcal {K}}_1 + {\mathcal {K}}_2 \in L^{\infty }({\mathcal {F}}_{\tau })^2\), we have \(\varrho _{\tau _1, \tau _2}({\mathcal {K}}_1 + {\mathcal {K}}_2) \le \varrho _{\tau _1, \tau _2}({\mathcal {K}}_1) + \varrho _{\tau _1, \tau _2}({\mathcal {K}}_2)\); for \({\mathcal {K}}_1\) and \({\mathcal {K}}_2\) are comonotonic random variables, i.e., for every \(\omega _1,\omega _2 \in \Omega : \; ({\mathcal {K}}_1(\omega _2) - {\mathcal {K}}_1(\omega _1))({\mathcal {K}}_2(\omega _2) - {\mathcal {K}}_2(\omega _1)) \ge 0\), we have \(\varrho _{\tau _1, \tau _2}({\mathcal {K}}_1+{\mathcal {K}}_2) = \varrho _{\tau _1, \tau _2}({\mathcal {K}}_1) + \varrho _{\tau _1, \tau _2}({\mathcal {K}}_2)\).

-

(3)

\(\forall \, {\mathcal {K}} \in L^{\infty }({\mathcal {F}}_{\tau })\) and \(\gamma \in \mathfrak {R}_{+}\) such that \(\gamma {\mathcal {K}} \in L^{\infty }({\mathcal {F}}_{\tau })\), we have \(\varrho _{\tau _1, \tau _2}(\gamma {\mathcal {K}}) \le \gamma \varrho _{\tau _1, \tau _2}({\mathcal {K}})\).

-

(4)

\(\forall \, {\mathcal {K}} \in L^{\infty }({\mathcal {F}}_{\tau })\) and \(a \in L^{\infty }({\mathcal {F}}_{\sigma })\) such that \({\mathcal {K}} + a \in L^{\infty }({\mathcal {F}}_{\sigma })\), we have \(\varrho _{\tau _1, \tau _2} (X + a) = \varrho _{\tau _1, \tau _2} (X)+a\).

-

(5)

For any increasing (resp. decreasing) sequence \({\mathcal {K}}_n \in L^{\infty }({\mathcal {F}}_{\tau })^n\) such that \({\mathcal {K}}=\lim {\mathcal {K}}_n\), the decreasing (resp. increasing) sequence \(\varrho _{\tau _1, \tau _2}({\mathcal {K}}_n)\) has the limit \(\varrho _{\tau _1, \tau _2}({\mathcal {K}})\) and continuous from below (resp. above).

This axiom is motivated by Axiom 2 in consistency with respect to the dynamic setting for error assessment. Following Bion-Nadal (2008), Chen et al. (2017), and references therein, we can define time invariance as follows:

Definition 3

For all \({\mathcal {K}} \in L^{\infty }({\mathcal {F}}_{\tau })\), a dynamic error measure \((\varrho _{\tau })\) on (\(\Omega , {\mathcal {F}}_{\infty }, ({\mathcal {F}}_t)_{t\in \mathfrak {R}^+}, P\)), where \({\tau _1} ,{\tau _2}\in \{0, 1, \dots , T-1\}\) are consecutive stopping times and s is a constant of time, is said to be time invariant if it satisfies the following properties:

-

(1)

\(\forall \,{\mathcal {K}}_1, {\mathcal {K}}_2, \in L^{\infty }({\mathcal {F}}_{\tau })^2\): \(\varrho _{\tau _1+s}({\mathcal {K}}_1) = \varrho _{\tau _1+s}({\mathcal {K}}_2) \Rightarrow \varrho _{\tau _1}({\mathcal {K}}_1) = \varrho _{\tau _1}({\mathcal {K}}_2)\) and \(\varrho _{\tau _2+s}({\mathcal {K}}_1) = \varrho _{\tau _2+s}({\mathcal {K}}_2) \Rightarrow \varrho _{\tau _2}({\mathcal {K}}_1) = \varrho _{\tau _2}({\mathcal {K}}_2)\).

-

(2)

\(\forall \, {\mathcal {K}} \in L^{\infty }({\mathcal {F}}_{\tau })\): \(\varrho _{\tau _1+s}({\mathcal {K}}) = \varrho _{\tau _2+s}({\mathcal {K}}) \Rightarrow \varrho _{\tau _1}({\mathcal {K}}) = \varrho _{\tau _2}({\mathcal {K}})\).

-

(3)

\(\forall \, {\mathcal {K}} \in L^{\infty }({\mathcal {F}}_{\tau })\): \(\varrho _{\tau _1}({\mathcal {K}}) = \varrho _{\tau _1}(-\varrho _{\tau _1+s}({\mathcal {K}}))\) and \(\varrho _{\tau _2}({\mathcal {K}}) = \varrho _{\tau _2}(-\varrho _{\tau _2+s}({\mathcal {K}}))\).

Based on the equality and recursive property in Definition 3, we can construct a time invariant error measure by composing one-period (i.e., \(s=1\)) measures over time such that for all \( t < T-1\) and \({\mathcal {K}} \in L^{\infty }({\mathcal {F}}_{\tau })\) the composed measure \(\tilde{\varrho _{\tau }}\) is (1) \({\tilde{\varrho }}_{T-1}({\mathcal {K}})=\varrho _{T-1}({\mathcal {K}})\) and (2) \({\tilde{\varrho }}_{\tau }({\mathcal {K}})=\varrho _{\tau }(-\tilde{\varrho }_{\tau +1}({\mathcal {K}}))\) (see Cheridito and Stadje 2009, for example).

2.3.3 Tail error (TE) and conditional tail error (CTE)

Enlightened by the literature of the extreme value theory, we define the tail error (TE) and its coherent extension, that is, the conditional tail error (CTE). Considering some outcomes generated from a system, an error or distance is measured by the Kullback–Leibler distance \({\mathcal {K}}\), and there exists any arbitrary value \(\varepsilon \), we denote \(\mathrm {F}_{{\mathcal {K}}}(\varepsilon )= \mathrm {P}({\mathcal {K}}\le \varepsilon )\) as the distribution of the corresponding error. We intend to define a statistic based on \(\mathrm {F}_{{\mathcal {K}}}\) that can measure the severity of the erroneous outcome over a fixed time period. We can directly use the maximum error (e.g., \(\varepsilon \)), such that \(\inf \{ \varepsilon \in \mathfrak {R}: \mathrm {F}_{{\mathcal {K}}}(\varepsilon )=1\}\). When the support of \(\mathrm {F}_{{\mathcal {K}}}\) is not bounded, the maximum error becomes infinity. However, the maximum error does not provide us the probabilistic information of \(\mathrm {F}_{{\mathcal {K}}}\). The tail error then extends it to the maximum error that will not be exceeded with a given probability.

Definition 4

Given some confidence level \(\alpha \in (0,1)\), the tail error of outcomes from a system at the confidence level \(\alpha \) is given by the smallest value \(\varepsilon \) such that the probability that the error \({\mathcal {K}}\) exceeds \(\varepsilon \) is not larger than \(1-\alpha \), that is, the tail error is a quantile of the error distribution shown as follows:

Definition 5

For an error measured by the Kullback–Leibler distance \({\mathcal {K}}\) with \(\mathtt {E}({\mathcal {K}}) <\infty \), and a confidence level \(\alpha \in (0,1)\), the conditional tail error (CTE) of system’s outcomes at a confidence level \(\alpha \) is defined as follows:

where \(\beta \in (0,1)\).

We can formally present \(\mathrm {TE}_{\alpha }({\mathcal {K}})\) in Eq. (2) as a minimum contrast (discrepancy) parameter as follows:

where \(\alpha \in (0,1)\) identifies the location of \({\mathcal {K}}\) on the distribution of errors. Comparing with TE, CTE averages TE over all levels \(\beta \ge \alpha \) and investigates further into the tail of the error distribution. We can see \(\mathrm {CTE}_{\alpha }\) is determined only by the distribution of \({\mathcal {K}}\) and \(\mathrm {CTE}_{\alpha }\ge \mathrm {TE}_{\alpha } \). We see TE is monotonic, that is, given by Axiom 2-(1), positive homogenous by Axiom 2-(3), translation invariant shown by Axiom 2-(4), and equivalent in probability by Axiom 2-(5). However, the subadditivity property cannot be satisfied in general for TE, and it is thus not a coherent error measure. The conditional tail error satisfies all properties given by Axiom 2 and it is a coherent error measure. In addition, under the dynamic setting, CTE satisfies all properties given by Axiom 3, it is also a dynamic coherent error measure.

In other words, TE measures an error below a given percentage (i.e., \(\alpha \)) of all errors. \(\alpha \) is then the probability such that an error greater than TE is less than or equal to \(\alpha \), whereas an error less than TE is less or than or equal to \(1-\alpha \). CTE measures the average error of the performance in a given percentage (i.e., \(\alpha \)) of the worst cases (above TE). Given the expected value of error \({\mathcal {K}}\) is strictly exceeding \(\mathrm {TE}\) such that \(\mathrm {CTE}_{\alpha }({\mathcal {K}})^+ = \mathrm {E}[{\mathcal {K}}| {\mathcal {K}} > \mathrm {TE}_{\alpha }({\mathcal {K}})]\) and the expected value of error \({\mathcal {K}}\) is weakly exceeding \(\mathrm {TE}\) where \(\mathrm {CTE}_{\alpha }({\mathcal {K}})^- = \mathrm {E}[{\mathcal {K}}| {\mathcal {K}} \ge \mathrm {TE}_{\alpha }({\mathcal {K}})]\), \(\mathrm {CTE}_{\alpha }({\mathcal {K}})\) can be treated as the weighted average of \(\mathrm {CTE}_{\alpha }({\mathcal {K}})^+\) and \(\mathrm {TE}_{\alpha }({\mathcal {K}})\) such that:

where

We can see that \(\mathrm {TE}_{\alpha }({\mathcal {K}})\) and \(\mathrm {CTE}_{\alpha }({\mathcal {K}})^+\) are discontinuous functions for the error distributions of \(({\mathcal {K}})\), whereas \(\mathrm {CTE}_{\alpha }({\mathcal {K}})\) is continuous with respect to \(\alpha \); see Sun et al. (2009) and references therein. We then obtain \(\mathrm {TE}_{\alpha }({\mathcal {K}})\le \mathrm {CTE}_{\alpha }({\mathcal {K}})^-\le \mathrm {CTE}_{\alpha }({\mathcal {K}})^+ \le \mathrm {CTE}_{\alpha }({\mathcal {K}})\). It is clear that CTE evaluates the performance quality in a conservative way by focusing on the less accurate outcomes. \(\alpha \) for TE and CTE can be used to scale the user’s tolerance of critical errors.

2.3.4 Computational methods

The \(\mathrm {TE}_{\alpha }\) represents the error that with probability \(\alpha \) it will not be exceeded. For continuous distributions, it is an \(\alpha \)-quantile such that \(\mathrm {TE}_{\alpha }({\mathcal {K}})=F_{{\mathcal {K}}}^{-1}(\alpha )\) where \(F_{{\mathcal {K}}}(\cdot )\) is the cumulative distribution function of the random variable of error \({\mathcal {K}}\). For the discrete case, there might not be a defined unique value but a probability mass around the value; we then can compute \(\mathrm {TE}_{\alpha }({\mathcal {K}})\) with \(\mathrm {TE}_{\alpha }({\mathcal {K}})=\min \{\mathrm {TE}({\mathcal {K}}): \mathrm {P}({\mathcal {K}}\le \mathrm {TE}({\mathcal {K}}))\ge \alpha \}\).

The \(\mathrm {CTE}_{\alpha }({\mathcal {K}})\) represents the worst (\(1-\alpha \)) part of the error distribution of \({\mathcal {K}}\) that is above the \(\mathrm {TE}_{\alpha }({\mathcal {K}})\) (i.e., conditional on the error above the \(\mathrm {TE}_{\alpha }\)). When \(\mathrm {TE}_{\alpha }({\mathcal {K}})\) has continuous part of the error distribution, we can derive \(\alpha \)th CTE of \(({\mathcal {K}})\) with \(\mathrm {CTE}_{\alpha }({\mathcal {K}})=\mathrm {E}({\mathcal {K}}|{\mathcal {K}}>\mathrm {TE}_{\alpha }({\mathcal {K}}))\). However, when \(\mathrm {TE}_{\alpha }\) falls in a probability mass, we compute \(\mathrm {CTE}_{\alpha }({\mathcal {K}})\) following Eq. (5):

where \({\hat{\beta }}=\max \{\,\beta : \mathrm {TE}_{\alpha }({\mathcal {K}})\,=\,\mathrm {TE}_{\beta }({\mathcal {K}})\}\).

It is obvious that the \(\alpha \)-th quantile of \(({\mathcal {K}})\) is critical. The classical method for computing is based on the order statistics; see David and Nagaraja (2003) and references therein. Given a set of values \({\mathcal {K}}_1\), \({\mathcal {K}}_1,\dots ,{\mathcal {K}}_n\), we can define the quantile for any fraction p as follows:

-

1.

Sort the values in order, such that \({\mathcal {K}}_{(1)} \le {\mathcal {K}}_{(2)} \le \cdots \le {\mathcal {K}}_{(n)} \). The values \({\mathcal {K}}_{(1)}, \dots , {\mathcal {K}}_{(n)}\) are called the order statistics of the original sample.

-

2.

Take the order statistics to be the quantile that correspond to the fraction:

$$\begin{aligned} p_i\,=\, \frac{i-1}{n-1}, \end{aligned}$$for \(i=1, 2, \dots , n\).

-

3.

Use the linear interpolation between two consecutive \(p_i\) to define the p-th quantile if p lies a fraction f to be:

$$\begin{aligned} \mathrm {TE}_p({\mathcal {K}})\,=\, (1-f)\, \mathrm {TE}_{p_i}({\mathcal {K}})+ f \mathrm {TE}_{p_{i+1}}({\mathcal {K}}). \end{aligned}$$

\({\mathcal {K}}\) in Axiom 1 measures the distance (i.e., error) between \({\widetilde{V}}(x)\) and V(x). If the error illustrate homogeneity and isotropy, for example, the distribution of errors are characterized by a known probability function, a parametric method then can be applied, see Sun et al. (2009) and references therein.

3 Wavelet-based big data systems

We are now going to evaluate big data systems under the framework we proposed in Sect. 2. We first describe three big data systems that have been used for intelligent decision making with different functions in this section before we conduct a numerical investigation. These three big data systems have been built with wavelet algorithms that were applied in big data analytics; see Sun and Meinl (2012) and references therein. The first big data system is named SOWDA (smoothness-oriented wavelet denoising algorithm), which optimizes a decision function with two control variables as proposed by Chen et al. (2015). It is originally used to preserve the smoothness (low-frequency information) when processing big data. The second is GOWDA (generalized optimal wavelet decomposition algorithm) introduced by Sun et al. (2015), which is an efficient system for data processing with multiple criteria (i.e., six equally weighted control variables). Because GOWDA has a more sophisticated decision function, an equal weight framework significantly improves computational efficiency. It is particularly suitable for decomposing multiple-frequency information with heterogeneity. The third one is called WRASA (wavelet recurrently adaptive separation algorithm), an extension of GOWDA as suggested by Chen et al. (2015), which determines the decision function of multiple criteria with convex optimization to optimally weight the criteria. Chen and Sun (2018) illustrate how to incorporate these systems with a reinforcement learning framework. All three big data systems have open access to adopt components of wavelet analysis.

As we have discussed in Sect. 2, a complete BDS contains two technology stacks: data engineering and data science. The former intends to design, develop, and integrate data from various resources and run ETL on top of big datasets and the latter applies data mining, machine learning, and statistics approaches to discovering knowledge and developing intelligence (such as decision making). In this section, we briefly introduce the three BDS focusing on their engineering and learning.

3.1 Data engineering with wavelet

Big data has several unique characteristics (see Sect. 2.1) and requires special processing before mining it. When dealing with heterogeneity, multi-resolution transformation of the input data enables us to analyze the information simultaneously on different dimensions. We are going to decompose the input data X as follows:

where \(S_t\) is the trend and we can estimate it with \({\tilde{S}}_t\) and \(N_t\) is the additive random features sampled at time t. Let \({\mathcal {W}}(\cdot ; \omega , \zeta )\) denote the wavelet transform operators with specific wavelet function \(\omega \) for \(\zeta \) level of decomposition and \({\mathcal {W}}^{-1}(\cdot )\) is its corresponding inverse transform. Let \({\mathcal {D}}(\cdot ; \gamma )\) denote the denoising operator with thresholding rule \(\gamma \). Therefore, wavelet data engineering is then to extract \(\tilde{S_t}\) from \(X_t\) as an optimal approximation of \(S_t\) after removing the randomness. We summarize the processing as follows:

The complete procedure shall implement the operators \({\mathcal {W}}(\cdot )\) and \({\mathcal {D}}(\cdot )\) after careful selection of \(\omega \), \(\zeta \), and \(\gamma \); see Sun et al. (2015).

Wavelets are bases of \(L^2({\mathbb {R}})\) and enable a localized time-frequency analysis with wavelet functions that usually have either compact support or decay exponentially fast to zero. Wavelet function (or the mother wavelet) \(\psi (t)\) is defined as:

Given \(\alpha ,\beta \in {\mathbb {R}}, \alpha \ne 0\), with compressing and expanding \(\psi (t)\), we obtain a successive wavelet \(\psi _{\alpha , \beta }(t)\), such that,

where we call \(\alpha \) the scale or frequency factor and \(\beta \) the time factor. Given \({\bar{\psi }}(t)\) as the complex conjugate function of \(\psi (t)\), a successive wavelet transform of the time series or finite signals \(f(t)\in L^2({\mathbb {R}})\) can be defined as:

Equation (8) shows that the wavelet transform is the decomposition of f(t) under a different resolution level (scale). In other words, the idea of the wavelet transform \({\mathcal {W}}\) is to translate and dilate a single function (the mother wavelet) \(\psi (t)\). The degree of compression is determined by the scaling parameter \(\alpha \), and the time location of the wavelet is set by the translation parameter \(\beta \). Here, \(\left| a \right| < 1\) leads to compression and thus higher frequencies. The opposite (lower frequencies) is true for \(\left| a \right| > 1\), leading to time widths adapted to their frequencies, see Chen et al. (2017) and references therein.

The continuous wavelet transform (CWT) is defined as the integral over all time of the data multiplied by scaled (stretching or compressing), shifted (moving forward or backward) version of wavelet function. Because most big data applications have a finite length of time, therefore, only a finite range of scales and shifts are practically meaningful. Therefore, we consider the discrete wavelet transform (DWT), which is an orthogonal transform of a vector (discrete big data) X of length N (which must be a multiple of \(2^J\)) into J wavelet coefficient vectors \(W_j \in {\mathbb {R}}^{N/{2^j}}\), \(1 \le j \le J\) and one scaling coefficient vector \(V_J \in {\mathbb {R}}^{N/{2^J}}\). The maximal overlap discrete wavelet transform (MODWT) has also been applied to reduce the sensitivity of DWT when choosing an initial value. The MODWT follows the same pyramid algorithm as the DWT by using the rescaled filters; see Sun et al. (2015) for details. All three BDSs can apply the DWT and MODWT. We focus on the wavelet transform on MODWT in this study.

Sun et al. (2015) pointed out that wavelet transform can lead to different outcomes when choosing different input variables. Since wavelets are oscillating functions and transform the signal orthogonally in the Hilbert space, there are many wavelet functions that satisfy the requirement for the transform. Chen et al. (2015) show that different wavelets are used for different reasons. For example, the Haar wavelet has very small support, whereas wavelets of higher orders such as Daubechies DB(4) and least asymmetric (LA) have bigger support. Bigger support can ensure a smoother shape of \(S_j\), for \(1 \le j \le J\) with each wavelet and that the scaling coefficient carries more information because of the increased filter width. Sun et al. (2015) highlighted three factors that influence the quality of wavelet transform: wavelet function (or mother wavelet), number of maximal iterations (or level of decomposition), and the thresholding rule. However, there is no straightforward method to determine these three factors simultaneously. Therefore, different BDS applies different decision functions when conducting machine learning.

3.2 Machine learning

In order to perform the wavelet transform to optimize the analysis, that is, to see how close \({\tilde{S}}_t\) is toward \(S_t\), three big data systems have been applied with different decision processing. First, let us name a separation factor \(\theta \), which is the combination of wavelet function, number of maximal iterations, and thresholding rule, as well as a factor space \(\Theta \subseteq {\mathbb {R}}^p, p \ge 0\), then \(\theta \in \Theta \). Different \(\theta \) will lead to different wavelet performances. We then consider a random variable \({\mathcal {K}}\) and \({\mathcal {K}}=S-{\tilde{S}}\). Here, \({\mathcal {K}}\) is in fact the approximation error and determined by a real value approximating function \(m({\mathcal {K}},\theta ) \in {\mathcal {R}}\) and \(E(m({\mathcal {K}},\theta ))=0\).

Definition 6

Let \({\mathcal {K}}_t \in {\mathbb {R}}^d\) be i.i.d. random vectors, and given that \(\theta \in {\mathbb {R}}^p\) is uniquely determined by \(E(m({\mathcal {K}},\theta ))=0\), where \(m({\mathcal {K}},\theta )\) is called the approximating function and takes values in \({\mathbb {R}}^{p+q}\) for \(q \ge 0\). Algorithm determines \({{\tilde{\theta }}}\) such that \({{\tilde{\theta }}}=\arg \max _{\theta } {\mathcal {R}}(\theta )\), where

where \(\omega _i\) is the weight for the approximating function.

If we use only one type of approximation—that is, a single criterion—then the weight is one. If we need to apply multivariate criteria for approximation, then we have to determine the associated weight for each approximating function.

3.2.1 Decision criteria

Six model selection criteria (i. e., \(m_1, \dots , m_6 \)) have been suggested by Sun et al. (2015) to evaluate \(m(\cdot )\). Given \({\mathcal {K}}_t=S_t-\tilde{S_t}\), for all \(t=1, 2, \dots , T\) where T is the data length and p the number of parameters estimated, the first three criteria are shown as follows:

and

Another three criteria are based on specific indicating functions. They are given as follows:

where

and z is the \(\alpha \)-th quantile of a probability distribution (e.g. \(\alpha = 0.05\)) for \({\mathcal {K}}\).

where

when \(\Lambda \) is the local maxima and

when \(\lambda \) is the local minima.

where

Summaried by Sun et al. (2015) is \(m_4\) to indicate the global extrema and \(m_5\) for the local extrema. Both of them have the ability to detect boundary problems—that is, an inefficient approximation at the beginning and the end of the signal. In addition, \(m_6\) detects the severity of \({\mathcal {K}}\) focusing on directional consistence.

For the three big data systems we compare in our study, SOWDA uses \(m_1\) and \(m_2\) and GOWDA and WRASA use all six criteria when formulating their decision making process.

3.2.2 Decision making

With the approximation error vector \(\mathbf{m }=[m_1, m_2, \dots , m_6]\), the next step is to determine the weights of these factors, that is, \(\varvec{\omega }\in {\mathbb {R}}^6\). Because the approximation error \(\mathbf{m }\) is random, for any given combination of the wavelet, level of decomposition, and thresholding function, the weighted error \(\mathbf{m }^T\varvec{\omega }\) can be expressed as a random variable with mean \(\mathbb {E[\mathbf{m }}^T\varvec{\omega }]\) and variance \(\mathbb {V(\mathbf{m }}^T\varvec{\omega })\) where \( \mathbb {E[\mathbf{m }}^T\varvec{\omega }]= \bar{\mathbf{m }}^T\varvec{\omega }\) and \(\mathbb {V(\mathbf{m }}^T\varvec{\omega })=\mathbb {E[\mathbf{m }}^T \varvec{\omega }- \mathbb {E[\mathbf{m }}^T\varvec{\omega }]]^2 = \varvec{\omega }^T \Sigma \, \varvec{\omega }\). In our work, we apply convex optimization suggested by Chen et al. (2017) to determine the weight \(\varvec{\omega }\) for minimizing a linear combination of the expected value and variance of the approximation error, that is,

where \(\gamma \) is a sensitivity measure that trades off between small expected approximation error and small error variance because of computational efficiency. In this article, we allow \(\gamma > 0\) which ensures a sufficiently large decrease in error variance, when the expected approximation error is trivial with respect to computational tolerance.

Figure 2 illustrates the workflow for the three big data systems (i.e., SOWDA, GOWDA and WRASA) that we investigated in this article. As we can see, the major difference of these three big data systems is in the decision-making process. WRASA minimizes the resulting difference sequence between \({\tilde{S}}_t\) and \(S_t\) based on choosing an optimal combination of approximating functions \(m(\cdot )\). Therefore, WRASA determines the approximating function \(m(\cdot )\) and its corresponding weight with convex optimization. SOWDA and GOWDA simply take the predetermined value of \(\omega _i\) (i.e., at equal weight in our study) rather than optimizing them. There has to be a trade-off between accuracy and computational efficiency if we want to keep the latency as low as possible.

4 Empirical study

4.1 The data

In our empirical study, we use electricity power consumption in France from 01 January 2009 to 31 December 2014. The consumption data are taken from real-time measurements of production units. The National Control Center (Centre National d’Exploitation du Système - CNES) constantly matches electricity generation with consumers’ power demands, covering total power consumed in France nationwide (except Corsica). The input data for our empirical study are provided by RTE (Ré seau de Transport d’Èlectricitè—Electricity Transmission Network), which is the electricity transmission system operator of France. RTE is in charge of operating, maintaining and developing high-voltage transmission systems in France, which is also the Europe’s largest one at approximately 100,000 kilometers in length. The data supplied are calculated on the basis of load curves at 10-min frequency. RTE’s regional units validate the data by adding missing values and by correcting erroneous data then provide the information data at 30-min frequency. These data are completed by the estimated values for consumed power from electricity generation on the distribution networks and private industrial networks.Footnote 4

4.2 The method

In our empirical study, we apply three big data systems (i.e., SOWDA, GOWDA, and WRASA) to complete the whole decision making process, that is, process the original power demand data and conduct a predictive analytics. We evaluate the quality of decision-making process (forecasting accuracy) with the TE and CTE discussed in Sect. 2.3.

Following Sun et al. (2015) and Chen et al. (2017), we chose Haar, Daubechies (DB), Symlet (LA), and Coiflets (Coif) as wavelet functions. We apply the MODWT with pyramid algorithm in our empirical study. Several threshold rules have been considered as well. The components served for the three systems in our study are listed as follows:

-

\(\omega \in \{\) Haar, DB(2), DB(4), DB(8), LA(2), LA(4), LA(8), Coif(4), Coif(6), Coif(8)\(\}\);

-

\(\zeta \in \{i: i=1,2,3\}\);

-

\(\gamma \in \{\)Heuristic SURE, Minimax, SURE, Universal\(\}\).

The subsample series that are used for the in-sample study are randomly selected by a moving window with length T. Replacement is allowed in the sampling. Letting \(T_F\) denote the length of the forecasting series, we perform the 1 day ahead out-of-sample forecasting (\(1\le T \le T+T_F \le N\)). In our analysis, the total sample length is \(T=17{,}520\) for a normal year and \(T=17{,}568\) for a leap year. The subsample length (i.e., the window length) of \(T=672\) (2 weeks) was chosen for the in-sample simulation and \(T_F=48\) (1 day) for the out-of-sample forecasting.

We compare the system’s performance by using the root mean squared error (RMSE), the mean absolute error (MAE), and the mean absolute percentage error (MAPE) as the goodness-of-fit measures:

where \(S_t\) denotes the actual value at time t, and \(\tilde{S_t}\) is its corresponding predicted value generated by the big data system.

Baucells and Borgonovo (2013) and Sun et al. (2007) suggested the Kolmogorov–Smirnov (KS) distance, the Cramér–von Mises (CVM) distance, and the Kuiper (K) distance as the metrics for evaluation. We use them here to compare the quality performance of the big data systems in our study.

Let \(F_n (S)\) denote the actual sample distribution of S, and \(F({{\tilde{S}}})\) is the distribution function of the approximation or forecasts (i. e., the output of BDS), Kolmogorov–Smirnov (KS) distance, Cramér von Mises (CVM), and Kuiper distance (K), which are defined as follows:

We conclude that the smaller these distances are, the better the performance of the system. The KS distance focuses on deviations near the median of the distribution. Thus, it tends to be more sensitive near the center of the error distribution than at the tails, whereas the CVM distance measures the sensitivity of dispersion between the output and its trend with respect to the change of the data filter of BDS. The Kuiper distance considers the extreme errors.

4.3 Results

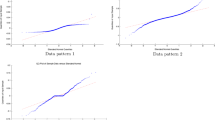

We show the forecasting performance of three big data systems (i.e., SOWDA, GOWDA, and WRASA) in Fig. 3. Panel (a) illustrates the predictive power demand generated from the three big data systems when compared with the actual power demand. Panel (b) illustrates the forecasting errors versus the actual power demand. It is not easy to identify which one performs better than the others. Therefore, we report different error evaluation results.

Comparison for forecasting performance of the three big data systems (i.e., SOWDA, GOWDA, and WRASA). a Illustrates the predictive electricity power demand generated from the three systems compared with the actual electricity power demand. b Illustrates the forecasting errors compared with the actual electricity power demand

Table 1 reports the in-sample (training) and out-of-sample (forecasting) performances of SOWDA, GOWDA, and WRASA measured by MAPE, MAE, and RMSE as the goodness-of-fit criteria. For the in-sample modeling performances, we see that the values of MAPE, MAE, and RMSE on average are all reduced after applying WRASA, compared with the results of SOWDA and GOWDA. Compared to SOWDA, WRASA reduces the error on average by 27.75, 27.87, and 24.17% for the MAPE, MAE, and RMSE criteria, respectively. Compared to GOWDA, WRASA reduces the error on average by 24.55, 24.56, and 21.47% for the MAPE, MAE, and RMSE, respectively.

We also focus on the results of the out-of-sample forecasting performances of SOWDA, GOWDA, and WRASA and evaluate them by using MAPE, MAE, and RMSE as the quality criteria. For the forecasting results, WRASA increases the accuracy on average by 28.06, 28.13, and 28.95% compared with SOWDA, and 10.49, 10.12, and 10.22% compared with GOWDA, based on the MAPE, MAE, and RMSE criteria, respectively.

Table 2 reports the in-sample and out-of-sample performance of SOWDA, GOWDA, and WRASA when we take the Cramér von Mises (CVM), Kolmogorov–Smirnov (KS), and Kuiper statistics into consideration as the goodness-of-fit criteria. In comparison with the results of SOWDA, WRASA improves the performance by 56.77, 38.26, and 32.94% measured by the CVM, KS, and Kuiper criteria, respectively. In addition, WRASA reduces the error by 49.84, 19.79, and 24.54% compared with GOWDA when evaluating by the CVM, KS, and Kuiper distance, respectively. From the CVM, KS, and Kuiper criteria, WRASA achieves better forecasting performance than SOWDA by 54.99, 34.58, and 30.80%, respectively. Under the same measurement, WRASA outperforms GOWDA by 31.41, 10.88, and 6.84%, respectively.

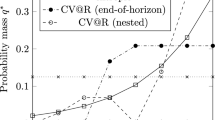

We next evaluate the performances of three big data systems with the methods we have discussed in Sect. 2.3—that is, TE and CTE. With these tools, we can identify the performance quality with respect to their cumulative absolute errors. We choose the significance level \(\alpha \) at \(50\%\), \(25\%\), \(10\%\), \(5\%\), and \(1\%\). When \(\alpha =50\%\), TE is then the median of the absolute error. Table 3 shows the comparison of SOWDA, GOWDA, and WRASA for their training performance measured by TE and CTE, and Table 4 shows the comparison of forecasting performance.

As we have mentioned, TE is exactly the \(\alpha \)-th quantile of the error distribution. We could also say that, the smaller TE is, the better the system’s performance will be. CTE assesses the average error between TE at \(\alpha \) confidence and the errors exceeding it. Obviously, the smaller CTE is, the better the system’s performance is as well.

We find that the values of TE and CTE for WRASA are the smallest compared with that of SOWDA and GOWDA for all \(\alpha \)s in Tables 3 and 4. For example, in Table 3 when we compare the training performance with TE, for \(\alpha =5\%\) WRASA reduces the tail error on average by 21.43 and 16.58% compared with the results of SOWDA and GOWDA, respectively. When \(\alpha =1\%\), the reduction of WRASA then turns to be 15.78 and 15.12%, respectively. For CTE, when \(\alpha =5\%\) WRASA reduces the conditional tail error on average by 17.63 and 15.47% in comparison with SOWDA and GOWDA, respectively and when \(\alpha =1\%\), WRASA reduces the conditional tail error by 12.62 and 11.73%, respectively.

When we compare the forecasting performance in Table 4, for \(\alpha =5\%\) WRASA reduces TE by 27.49 and 10.91% comparing with SOWDA and GOWDA and CTE by 43.36 and 8.91%, respectively. For \(\alpha =1\%\) WRASA reduces TE by 33.10 and 7.6% versus SOWDA and GOWDA and CTE 49.15 and 6.13%, respectively.

As we have pointed out that, when \(\alpha =0.5\), \(\mathrm {TE}_{0.5}\) becomes the median of absolute error. Comparing with MAE, we can identify the skewness of the underlying error distribution. When the mean is larger than the median, we call it positively skewed (i.e., the mass of the error is concentrated on the left approaching to zero). The better the performance of the BDS, the more positive skewness of the mass of error approaching to zero. On the other hand, given a skewness, we prefer the light tail (i.e., small tail or conditional tail error). Our results in Tables 3 and 4 illustrate that the error distributions of three systems are all positively skewed to zero.

In addition, from Tables 3 and 4, we can sketch the error distribution above the median. For any given two confidences \(\alpha _1\) and \(\alpha _2\), if \(\alpha _1 > \alpha _2\), we can ensure that \(\mathrm {TE}_{(1-\alpha _1)}({\mathcal {K}}) \le \mathrm {TE}_{(1-\alpha _2)}({\mathcal {K}})\) and \(\mathrm {CTE}_{(1-\alpha _1)}({\mathcal {K}}) \le \mathrm {CTE}_{(1-\alpha _2)}({\mathcal {K}})\), see Sect. 2.3.3. This is a robust feature for TE and CTE. Furthermore, TE and CTE show their efficiency. When we compare two performance (\({\mathcal {K}}_1\) and \({\mathcal {K}}_2\)), there must exist a \(\alpha \) such that \(\mathrm {TE}_{(1-\alpha )}({\mathcal {K}}_1) \ne \mathrm {TE}_{(1-\alpha )}({\mathcal {K}}_2)\) and \(\mathrm {CTE}_{(1-\alpha )}({\mathcal {K}}_1) \ne \mathrm {CTE}_{(1-\alpha )}({\mathcal {K}}_2)\) if the underlying performance are not identical. With these features, we can compare the system performance by choosing a proper \(\alpha \) that reflects the decision maker’s utility. For example, when we choose \(\mathrm {TE}_{0.9}\), we can conclude that WRASA performs better than GWODA and SOWDA in both in-sample training and out-of-sample forecasting because the corresponding tail and conditional tail errors of WRASA are smallest. Similarly, when we apply \(\mathrm {TE}\) and \(\mathrm {CTE}\) for all \(\alpha \) values, we conclude the same.

In order to graphically illustrate the performances we exhibit the complete plot of forecasting errors for the three big data systems in Fig. 4. Panel (a) in Fig. 4 presents the error density of the system’ outcomes. We can see that all error densities are positively skewed to zero, but we cannot easily distinguish the difference. Panel (b) in Fig. 4 exhibits the error distribution where we can easily identify the quantile (i.e., TE) that is used to quantify the tail error. From the right panel of Fig. 4, we see the error distribution of WRASA is shifted to the left, which means that the performance of WRASA is better comparing with SOWDA and GOWDA. Table 4 illustrates this with concrete quantile values for \(\alpha \ge 0.5\). With these values we can consistently compare the system performance after definite ranking TE and CTE values. We can thus verify that the proposed measures (i.e., TE and CTE) for evaluating quality perform well and can be confirmed to be very robust and efficient measures.

4.4 Discussion with simulation

In our empirical study of forecasting power consumption, all quality measurement tools we applied suggest to us the best performance in conformity. As we have seen in Fig. 3, the power consumption illustrates seasonality. Not surprisingly, WRASA works well for such a weak irregularity. In this case, the preternatural feature of the proposed measure (i.e., CTE) based on tail distribution is not startlingly clear. We are going to conduct a simulation study to investigate the performance of the proposed quality measure for assessing the performance of a BDS with strong irregularity.

4.4.1 Simulation method

In this study we perform Monte Carlo simulations where extreme irregular observations (jumps) are generated from two different patterns: (1) excessive volatility and (2) excessive volatility with regime switching. We generate 100 sequences of length \(2^{12}\). This trend is based on a sine function, whose amplitude and frequency are drawn from a uniform distribution. For generating the pattern (1) signals, following Sun and Meinl (2012), we add jumps to the trend. Jump occurrences are uniformly distributed (coinciding with a Poisson arrival rate observed in many systems), with the jump heights being a random number drawn from a skewed contaminated normal noise suggested by Chun et al. (2012). For generating pattern (2) data, we repeat the method used for pattern (2) signals, but shift the trend up and down once in order to characterize a sequence of excessive volatility with regime switching. The amplitude of the shift is four times the previous trend; see Sun et al. (2015).

Similar to how the method was applied in our empirical study, we also need to decide a moving window for the training and forecasting operations. We set the in-sample size as 200 and the out-of-sample size as 35, 80, and 100. The number of window moves is then 720, and we generate 100 data series for each pattern. Therefore, for each pattern we test our algorithm 72,000 times for in-sample approximation and one-step ahead forecasting. In our simulation, we have 216,000 runs in total. In our study, cases 1–3 and cases 4–6 are based on the data pattern (1) and (2), respectively. The out-of-sample forecasting length is set as 35 for cases 1 and 4, 80 for cases 2 and 5, and 100 for cases 3 and 6.

For each run we collect the values of six conventional criteria and five newly proposed measures. We report the results in next subsection.

4.4.2 Results and discussion

Table 5 reports the performance quality of three BDS evaluated by the six conventional methods. We can see that when comparing the performances, WRASA is considered superior by MAPE, MAE, and RMSE in Case 1 in training while SOWDA is preferred by CVM, KS, and Kuiper. Similarly, MAPE, MAE, and RMSE support WRASA and CVM, KS, and Kuiper support SOWDA for out-of-sample performance in Case 1. In Table 5 we highlight the best performance in bold. Obviously, we encounter difficulty in synchronizing because there are contradictive results.

The contradiction can be identified by four different classes. The first is noncoincidence among three mean error based methods, (i.e., MAPE, MAE, and RMSE). For example, for the out-of-sample performance of Case 3, GOWDA is preferred by RMSE whereas MAE and MAPE support WRASA. Second, there is noncoincidence among three extreme error based methods, (i.e., CVM, KS, and Kuiper). For example, for the in-sample performance of Case 4, SOWDA is preferred by CVM whereas KS and Kuiper support WRASA. Third, there is noncoincidence between the mean error based methods and extreme error based methods. For example, for the out-of-sample performance of Case 2, all mean error based methods support GOWDA but all extreme error based methods benefit WRASA. Fourth, one method is not distinguishable from another method. For example, for the out-of-sample performance of Case 5, MAE cannot distinguish which one is better between GOWDA and WRASA because a tie occurs in comparison. KS cannot distinguish SOWDA and WRASA when comparing the in-sample performance of Case 6.

When we apply TE and CTE, it turns out to be much easier. In Table 6, when we evaluate the in-sample performance, all smallest values of TE and CTE lead to WRASA. Therefore, its in-sample performance is better than other two. When we compare the out-of-sample performance, we recognize that TE cannot always provide a coherent conclusion with the results reported in Table 7. For example, for Case 2, \(\mathrm {TE}_{0.95}\) supports GOWDA whereas \(\mathrm {TE}_{0.90}\) benefit WRASA. Meantime, \(\mathrm {TE}_{0.99}\) cannot distinguishFootnote 5 the quality of GOWDA and WRASA. Not surprisingly, CTE can provide the coherent conclusion that WRASA always performs better. We have shown that CTE is a dynamic coherent measure since it satisfies all properties given by Axiom 3.

There are many advantages of TE. First, it is a simple quality measure and has a straightforward interpretation. Second, it defines the complete error distribution by specifying TE for all \(\alpha \). Third, it focuses only on the part of the error distribution specified by \(\alpha \). Fourth, it can be estimated with parametric models once the error distribution can be clarified and the estimation procedures are stable with different methods. However, quality control using TE may lead to undesirable results for skewed error distributions. It does not consider the properties of the error distribution beyond \(\alpha \). In addition, it is non-convex and discontinuous for discrete error distributions.

The advantages of applying CTE are threefold. First, it has superior mathematical properties, as we have shown, and preserves some properties of TE, such as easy interpretation and complete definition of error distribution with all \(\alpha \). Second, it is continuous with respect to \(\alpha \) and coherent. Third, when making decision, we can optimize it with convex programming since \(\mathrm {CTE}_{\alpha }(\omega _1 {\mathcal {K}}_1+\cdots + \omega _n {\mathcal {K}}_n)\) is a convex function with respect to weights \(\omega _1, \dots , \omega _n\) where \(\sum _1^n \omega _n=1\). However, challenges still remain when estimating CTE as it is heavily affected by tail modeling.

Coherence matters when we conduct system engineering either by reconfiguring systems or aggregating tasks. System engineering refers to simple configurations, such as parallelization, and complex ones, such as centralization, fragmentation, or distribution, as we show in Fig. 1. TE is not coherent because it does not satisfy Axiom 2-(2) in Sect. 2.3.1. Axiom 2-(2) states that if we combine two independent tasks, then the total error of them is not greater than the sum of the error associated with each of them. When we reconfigure systems, we try to avoid creating any extra errors (or system instability), but no one can guarantee it. In our empirical study, all three big data systems apply only plain configuration because the input data are simple high-frequency time series and work on a single task of forecasting the load. Therefore, we can apply TE and CTE for dynamical measuring following Definition 3.

Accuracy is given by systemic computability. Many computational methods can be used to accurately describe the probability distribution of errors; therefore, we can obtain a definite value for TE and CTE. Compared with conventional methods, with more suitable models or computational methods, we could optimally reduce the incapability of tail measures. If we refer to robustness as consistency and efficiency as comparability, then we can confidentially apply CTE, since it is a preferred method for quality control due to its robustness and efficiency. Therefore, the only thing we need for conducting the quality control is to choose or search for a proper \(\alpha \) that reflects our own utility.

5 Conclusion

In this article we highlighted the challenge of big data systems and introduced some background information focusing on their quality management. We looked to describe the prevailing paradigm for quality management of these systems. We proposed a new axiomatic framework for quality management of a big data system (BDS) based on the tail error (TE) and conditional tail error (CTE) of the system. From a decision analysis perspective, particularly for the CTE, a dynamic coherent mechanism can be applied to perform these quality errors by continuously updating the new information through out the whole systemic processing. In addition, the time invariance property of the conditional tail error has been well defined to ensure the inherent characteristics of a BDS are able to satisfy quality requirements.

We applied the proposed methods with three big data systems (i.e., SOWDA, GOWDA, and WRASA) based on wavelet algorithms and conducted an empirical study to evaluate their performances with big electricity demand data from France through analysis and forecasting. Our empirical results confirmed the efficiency and robustness of the method we proposed herein. In order to fully illustrate the advantage of the proposed method, we also provided a simulation study to highlight some features that were not obtained from our empirical study. We believe our results will enrich the future applications in quality management.

As we have seen, the three big data systems we investigated in this article are mainly from fragmented (decentralized) systems where the interconnection of each component is still hierarchical in algorithms. When the underlying big data system exhibits strong distributed features—for example, there are tremendous interoperations—how to execute the dynamic coherent quality measure we proposed in this article should be paid more attention. In addition, some robust tests focusing on validating its conditional correlation over time should be proposed for future study.

Notes

These are layers of hardware, firmware, software applications, operating platforms, and networks that make up IT architecture.

A six sigma process is one in which 99.99966% of all opportunities are statistically expected to be free of defects (i.e., 3.4 defective features per million opportunities).

PTC white paper. PTC is a global provider of technology platforms and solutions that transform how companies create, operate, and service the “things” in the Internet of Things (IoT). See www.ptc.com.

See www.rte-france.com.

Not because of making a round number.

References

Agarwal, R., Green, R., Brown, P., Tan, H., & Randhawa, K. (2013). Determinants of quality management practices: An empirical study of New Zealand manufacturing firms. International Journal of Production Economics, 142, 130–145.

Artzner, P., Delbaen, F., Eber, J., Heath, D., & Ku, K. (2007). Coherent multiperiod risk adjusted values and Bellman’s principle. Annals of Operations Research, 152, 5–22.

Baucells, M., & Borgonovo, E. (2013). Invariant probabilistic sensitivity analysis. Management Science, 59(11), 2536–2549.

Bion-Nadal, J. (2008). Dynamic risk measures: Time consistency and risk measures from BMO martingales. Finance and Stochastics, 12(2), 219–244.

Bion-Nadal, J. (2009). Time consistent dynamic risk processes. Stochastic Processes and their Applications, 119(2), 633–654.

Chen, Y., & Sun, E. (2015). Jump detection and noise separation with singular wavelet method for high-frequency data. Working paper of KEDGE BS.

Chen, Y., & Sun, E. (2018). Chapter 8: Automated business analytics for artificial intelligence in big data \(@\)x 4.0 era. In M. Dehmer & F. Emmert-Streib (Eds.), Frontiers in Data Science (pp. 223–251). Boca Raton: CRC Press.

Chen, Y., Sun, E., & Yu, M. (2015). Improving model performance with the integrated wavelet denoising method. Studies in Nonlinear Dynamics and Econometrics, 19(4), 445–467.

Chen, Y., Sun, E., & Yu, M. (2017). Risk assessment with wavelet feature engineering for high-frequency portfolio trading. Computational Economics. https://doi.org/10.1007/s10614-017-9711-7.

Cheridito, P., & Stadje, M. (2009). Time-inconsistency of VaR and time-consistent alternatives. Finance Research Letters, 6, 40–46.

Chun, S., Shapiro, A., & Uryasev, S. (2012). Conditional value-at-risk and average value-at-risk: Estimation and asymptotics. Operations Research, 60(4), 739–756.

David, H., & Nagaraja, H. (2003). Order statistics (3rd ed.). Hoboken: Wiley.

Deichmann, J., Roggendorf, M., & Wee, D. (2015). McKinsey quarterly november: Preparing IT systems and organizations for the Internet of Things. McKinsey & Company.

Hazen, B., Boone, C., Ezell, J., & Jones-Farmer, J. (2014). Data quality for data science, predictive analytics, and big data in supply chain management: An introduction to the problem and suggestions for research and applications. International Journal of Production Economics, 154, 72–80.

Keating, C., & Katina, P. (2011). Systems of systems engineering: Prospects and challenges for the emerging field. International Journal of System of Systems Engineering, 2(2/3), 234–256.

Liu, Y., Muppala, J., Veeraraghavan, M., Lin, D., & Hamdi, M. (2013). Data center networks: Topologies architechtures and fault-tolerance characteristics. Berlin: Springer.

Maier, M. (1998). Architecting principles for systems-of-systems. Systems Engineering, 1(4), 267–284.

Mellat-Parst, M., & Digman, L. (2008). Learning: The interface of quality management and strategic alliances. International Journal of Production Economics, 114, 820–829.

O’Neill, P., Sohal, A., & Teng, W. (2015). Quality management approaches and their impact on firms’ financial performance—An Australian study. International Journal of Production Economics. https://doi.org/10.1016/j.ijpe.2015.07.015i.

Parast, M., & Adams, S. (2012). Corporate social responsibility, benchmarking, and organizational performance in the petroleum industry: A quality management perspective. International Journal of Production Economics, 139, 447–458.

Pham, H. (2006). System software reliability. Berlin: Springer.

Riedel, F. (2004). Dynamic coherent risk measures. Stochastic Processes and their Applications, 112(2), 185–200.

Shooman, M. (2002). Reliability of computer systems and networks: Fault tolerance analysis and design. Hoboken: Wiley.

Sun, E., Chen, Y., & Yu, M. (2015). Generalized optimal wavelet decomposing algorithm for big financial data. International Journal of Production Economics, 165, 161–177.