Abstract

Support vector machine (SVM) is a widely used machine learning method in analog circuit fault diagnosis. However, SVM parameters such as kernel parameters and penalty parameters can seriously affect the classification accuracy. The current parameter optimization methods have defects such as slow convergence speed, easy falling into local optimal solutions, and premature convergence. Because of this, an improved grey wolf optimization algorithm (GWO) based on the nonlinear control parameter strategy, the first Kepler’s law strategy, and chaotic search strategy (NKCGWO) is proposed to overcome the shortcomings of the traditional optimization methods in this paper. In the NKCGWO method, three strategies are developed to improve the performance of GWO. Thereafter, the optimal parameters of SVM are obtained using NKCGWO-SVM. To evaluate the performance of NKCGWO-SVM for analog circuit diagnosis, two analog circuits are employed for fault diagnosis. The proposed method is compared with GA-SVM, PSO-SVM and GWO-SVM. The experimental results show that the proposed method has higher diagnosis accuracy than the other compared methods for analog circuit diagnosis.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

With the rapid development of modern electronic technology, analog circuits are widely used and play a vital role in various electronic systems. An unexpected failure of the analog circuit may lead to sudden breakdown of the entire equipment, resulting in huge economic losses or even casualties [1,2,3]. However, analog fault diagnosis is still a challenging task because of poor fault models, component tolerances and nonlinear effects of analog circuits [4, 5]. Consequently, effective fault detection and isolation for analog circuits to avoid system failure has become an active research field, and many different methods have been proposed [6,7,8,9]. Analog diagnosis approaches are usually classified into two main categories: simulation before test (SBT) and simulation after test (SAT) [4, 10,11,12]. The SAT method is limited by computational time in the testing process. In contrast, the SBT method is more acceptable because only off-line computation is needed before testing [13]. Among all SBT methods, data-driven methods, such as artificial neural networks (ANNs) and support vector machines (SVMs), are very popular and more suitable for analog fault diagnosis because they do not need an explicit model [6,7,8,9, 14, 15]. Considering the trade-off between the global optimizing solution and generalizing ability, SVM has been regarded as an effective tool for fault diagnosis of analog circuits [16,17,18,19,20].

Support vector machine (SVM) is a machine learning method based on statistic learning theory and has good classification ability for small-sample, nonlinear, high-dimension problems [21]. However, SVM classification accuracy heavily depends on the SVM parameters, such as the penalty parameter C and the kernel parameter γ the RBF kernel function, and it is very difficult to determine the optimal SVM parameters. Currently, different optimization algorithms are used to select the optimal SVM parameters. A straightforward method, the exhaustive grid search (GS), is proposed to optimize the SVM parameters [22]. Li and Zhang [23] used a genetic algorithm (GA) to optimize the SVM parameters. Sun et al. [24] successfully used the particle swarm optimization (PSO) method to obtain the optimal SVM parameters. Soroor and Hossein [25] presented an SVM parameters optimization method based on a gravitational search algorithm (GSA). However, the aforementioned methods are not ideal. GS is time-consuming and difficult as the optimization parameters increase. GA and PSO easily fall into local optima and exhibit premature convergence in the search space.

The grey wolf optimization (GWO) algorithm was first proposed by Seyedali et al. [26] as a novel heuristic optimization method based on the leadership behavior and hunting mechanism of grey wolves. GWO can avoid local optima to some extent. The performance of GWO is superior to that of PSO, GA, and GSA on twenty-nine benchmark test functions. However, in some cases, GWO also suffers from premature convergence and fails to find a global optimal solution. This may be attributed to the fact that the wolves lack information sharing among them [27]. GWO needs a better trade-off between exploitation and exploration. Hence, it cannot always deal with optimization problems successfully.

In this paper, an improved GWO is introduced to optimize the penalty parameter C and the kernel parameter γ of SVM. In this method, the nonlinear control parameter strategy can genuinely reflect the actual search process of the GWO algorithm. Since the search process of the GWO algorithm is nonlinear and highly complicated, the first Kepler’s law strategy can better balance the exploration and exploitation of the GWO algorithm, and the chaos search strategy is introduced to avoid falling into a local optimum. These improvements evidently enhance optimization efficiency.

The rest of this paper is organized as follows. In Sect. 2, the principle of SVM is briefly introduced. In Sect. 3, the concepts of GWO are described, and NKCGWO is proposed. In Sect. 4, the proposed NKCGWO-SVM is put forward in detail. The experimental research is presented in Sect. 5. In Sect. 6, the conclusions are given.

2 The principle of SVM

Support vector machine (SVM) was first proposed by Vapnik based on the principle of structural risk minimization, with good classification ability [28]. the purpose of SVM is to identify hype-plane to separate different classes by maximizing the distance between classes. the basic principle of SVM is briefly described as follows.

Assume we have training sample sets \(X={\{{x}_{i},{y}_{i}\}}_{i=1}^{n}\), where \({x}_{i}\in {R}^{d}\) represents the i-th training sample and \({y}_{i}\) is the class label of \({x}_{i}\). If the training samples can be separated linearly, the hyper-plane is defined as follows:

where \(w represents\) a weight vector, and b is a bias value. The hyperplane can correctly separate training samples belonging to different categories, and it is satisfactory that the margin between the two classes that point closest to the hyperplane is the largest. The hyperplane is called the optimal separating hyperplane. The problem of the optimal separating hyperplane based on the principle of structural risk minimization can be described as the following convex quadratic programming problem:

If the training samples are linearly non-separable, the penalty parameter and the slack variable must be introduced. Hence, the SVM classification optimal problem can be found after resolving the following constrained optimization problem:

where, C is the penalty parameter and \({\xi }_{i}\) represents the slack variable.

If the training samples are nonlinearly separable, a kernel function is used to transform the training samples into a high-dimensional dot product space using a nonlinear function Φ, where the data can be separated linearly. The definition of the kernel function is shown in formula (4). The convex quadratic programming problem of the SVM classifier is given in formula (5).

To solve the optimal problem of Eq. (5), the Lagrange multiplier is used. Then, the quadratic programming (QP) problem can be transformed into the dual problem as follows:

where, \({\alpha }_{i}\) is the Lagrange multiplier. The final optimal classification surface function can be given as follows:

From Eqs. (5) and (6), we can see that the performance of SVM largely depends on the type of kernel function, the parameter of the kernel function, and the penalty parameter C.

In this paper, we choose the RBF kernel as the kernel function of SVM, which is shown in the following formula:

where γ is inversely proportional to the width of the kernel.

Traditionally, the SVM classifier uses a default set of C and γ in solving the pattern classification problems, which usually cannot obtain a satisfactory classification result because the SVM classifier with a different set of C and γ has a different performance. Finding an effective way to obtain the optimal parameters C and γ is crucial for improving the SVM performance.

3 Improved GWO

3.1 Classical grey wolf optimization algorithm

The grey wolf optimization algorithm (GWO) is a new metaheuristic algorithm inspired by the social hierarchy and hunting strategies of grey wolves in nature [26].

To establish a social hierarchy of grey wolves, all grey wolves are categorized into four groups according to the fitness value: alpha (\(\alpha\)), beta (\(\beta\)), delta (\(\delta\)), and omega (\(\omega\)) wolves. The best solution in the population is denoted as alpha (\(\alpha\)). Similarly, the second and third best solutions are named beta (\(\beta\)) and delta (\(\delta\)), respectively. The remaining solutions are considered as omega (\(\omega\)). In GWO, the \(\omega\) wolves are mainly guided by \(\alpha\), \(\beta ,\) and \(\delta\) toward promising areas of the search space. The social hierarchy of grey wolves is shown in Fig. 1.

The hunting behavior of the grey wolf is mainly divided into three steps: tracking, encircling and attacking the prey. Encircling the prey can be mathematically expressed as follows:

where t represents the current iteration. D is the distance between the position of the prey and the grey wolf. Xp and X denote the position vectors of the prey and a grey wolf, respectively. A and C are coefficient vectors that are calculated as follows:

where r1 and r2 are random variables in [0,1]. au is a control parameter, called the convergence factor, whose value linearly decreases from 2 to 0 during the iteration process. tmax is the maximum number of iterations. The exploration and exploitation decisions are made based on the value of A.

The positions of \(\omega\) are updated according to the positions of \(\alpha\), \(\beta ,\) and \(\delta\) as follows:

The GWO algorithm begins with the initialization of the grey wolf population. The search process is mainly guided by \(\alpha\), \(\beta\) and \(\delta\). When \(\left|A\right|>1\), they diverge from each other to search for prey. When \(\left|A\right|<1\), they converge to attack prey. Finally, the optimal solution is obtained if the maximum number of iterations is reached.

The pseudo code of the GWO algorithm is presented in Fig. 2.

The grey wolf optimization algorithm.

3.2 The proposed NKCGWO

To prevent the GWO algorithm from premature convergence and falling into a local optimal solution, enhance the abilities of exploration and exploitation and improve the performance of the GWO algorithm, the nonlinear control parameter strategy, the first Kepler’s law strategy and the chaotic search strategy are simultaneously embedded into the GWO algorithm, which is called NKCGWO. The NKCGWO algorithm can simulate the grey wolf predation process more realistically. The construction of the NKCGWO will be introduced in this section.

3.2.1 Nonlinear control parameter strategy

In the GWO algorithm, au plays an important role in the trade-off between exploration and exploitation. A smaller au is conducive to local exploitation, while a larger au is beneficial to global exploration. However, in the classical GWO algorithm, the linearly decreasing au strategy cannot truly reflect the search process because the search process of grey wolves is nonlinear and highly complex. The value of au should be a nonlinearly decreasing value rather than being a linearly decreasing quantity. As a result, a nonlinear control parameter strategy is presented [29]. The au is modified as follows:

where e is the base of the natural logarithm. The t is the current iteration. The tmax is the maximum number of iterations. The k is the adjustment parameter. The k is set to 2 [29]. The auinit and aufin are the initial value and final value of control parameter au, respectively. The variation trend of au with increasing iteration is shown in Fig. 3.

From Fig. 3, it can be seen that when k = 2, the improved control parameter au nonlinearly decreases from 2 to 0 during the iteration process. At the beginning of the iteration, the decay rate of the improved au is slower than that of the original au, which can better find the global optimal solution. At the end of the iteration, the decay rate of the improved au is faster than that of the original au, in order to make the search for the local optimal solution more accurate. Therefore, the nonlinear control parameter strategy is more practical, ensuring the abilities of exploration and exploitation, balancing global and local search performance, and further enhancing the algorithm’s global optimization ability.

3.2.2 The first Kepler’ law strategy

The first Kepler’s law is that the orbits of the planets revolving around the Sun are ellipses [30]. In other words, the distance between the planets and the Sun is different in different times. The concept is introduced into the GWO algorithm. This means that grey wolves (the planets) move around the prey (the Sun) in an elliptical orbit. The first Kepler’s law strategy can make a good trade-off between exploration and exploitation. Meanwhile, it also makes exploration and exploitation more efficient. The steps of the first Kepler’s law strategy are described as follows:

Suppose the group size of the grey wolf pack is N, and K grey wolves (K < N) are randomly selected from the grey wolf pack. The new positions of K grey wolves (Xi,new, i = 1, …, K) are calculated by Eq. (18). Meanwhile, the new position of \(\alpha \left({X}_{\alpha ,new}\right)\) is changed by Eq. (19) as follows [31]:

where, U (− 2,2) is a uniformly distributed random number in the interval [− 2,2]. t is the current iteration. \({R}_{i,\alpha }\) is the Euclidean distance between \({X}_{i}\) and \({X}_{\alpha }\). If the return value of U (− 2,2) is close to 1, the proposed algorithm performs exploration; otherwise, it executes exploitation.

Only when the fitness values of the new positions are better than those of the original positions can the positions of K grey wolves be updated for the next iteration. It is described as follows:

The pseudo code of the first Kepler’s law strategy is given in Fig. 4.

The first Kepler’s law algorithm.

3.2.3 Chaotic search strategy

The lack of ergodicity in the entire search space can make the GWO algorithm easily fall into a local optimum. Chaos is a nonlinear phenomenon, and it is stochastic, regular and ergodic. Based on the ergodicity of chaos, the chaotic search strategy and the GWO algorithm are combined to prevent the GWO algorithm from falling into a local optimum and to improve the overall searching ability of the algorithm.

A typical chaotic map, called a logistic map is used in this paper, and the logistic map is defined as follows [32, 33]:

where the value of a is set to 4 [34], the chaotic behavior of logistic map besprinkles between intervals of [0,1]. \({x}_{i}\in [\mathrm{0,1}]\), \(i=\mathrm{1,2},\cdots ,Max\_iter\), \({x}_{1}\notin [\mathrm{0.25,0.5,0.75}]\).

The steps of the chaotic search strategy are described as follows:

Step 1: Assume that xi(t) represents the i-th dimension value of x(t) in the current iteration. t is the current iteration. Min_xi and Max_xi are the upper and lower bounds of xi, respectively. Set t = 0.

Step 2: Map xi(t) to the chaotic variable cxi(t) using the following equation [35].

Step 3: Calculate the next iteration cxi(t + 1) of cxi(t) using Eq. (21).

Step 4: Transform the chaotic variable cxi(t + 1) to the original space xi(t + 1) using the following equation.

Step 5: Assess xi(t + 1) according to the decision function fit(xi(t + 1)).

Step 6: If the value of fit(xi(t + 1)) is better than that of fit(xi(t)), the offspring will be preserved; otherwise, the value of xi(t) is kept unchanged. t = t + 1 and go back to Step 2 to continue searching until the maximum number of iterations is reached.

3.2.4 The NKCGWO algorithm

Combining the advantages of the nonlinear control parameter strategy, the first Kepler’s law strategy and the chaotic search strategy, an improved GWO algorithm is proposed, which is NKCGWO. The pseudo code of NKCGWO is described in Fig. 5. The changes from GWO are underlined.

The NKCGWO algorithm.

4 SVM parameter optimization by the proposed NKCGWO

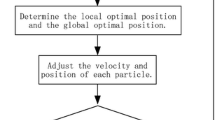

In this section, we describe the proposed NKCGWO algorithm to optimize the penalty parameter C and the kernel parameter γ of SVM. The detailed process of SVM parameter optimization based on NKCGWO is illustrated in Fig. 6. The implementation steps of the NKCGWO are described as follows:

Step 1: Preprocess the dataset: Extract the feature value of the dataset, and scale each feature to the range [0,1]. Divide the dataset into training set and testing set.

Step 2: Initialize the parameters of NKCGWO: Set the maximum number of iterations, population size and dimension number of the particle. Initial au, A and C. Finite the upper and lower bounds of C and γ for SVM. Generate the initial position of every particle.

Step 3: Optimize the SVM parameters: Input the training set into the SVM classifier, which is trained with each parameter combination (C, γ). The SVM classifier is evaluated by using the five-fold cross-validation average error as the objective function of NKCGWO. The best solution (Copt, γopt) is obtained when the termination condition is satisfied.

Step 4: Test classification: Input the testing set into the trained SVM classifier with the optimal parameters Copt andγopt. Get the classification results.

5 Experiments circuits and simulation results

In this section, we consider two example circuits, including a video amplifier circuit and an active band-stop filter circuit, to demonstrate the effectiveness of the proposed method. OrCAD 16.6 software is implemented to simulate circuits, and the NKCGWO-SVM algorithm is programmed in Matlab2016b. The steps of analog fault diagnosis based on NKCGWO-SVM are given in Fig. 7.

5.1 Example circuits and fault type

5.1.1 CTSV filter circuit

The first CUT used in this paper is a CTSV filter circuit. A CTSV filter circuit with nominal values of all the components is shown in Fig. 8. The tolerance of the resistors and capacitors are set to 5% and 10%, respectively. An excitation sinewave voltage source with an amplitude of 5 V and a frequency of 100 Hz is loaded as the input of the circuit, and the test node, labelled Vout in Fig. 8, is chosen to acquire the fault voltage signal.

In this paper, the fault modes of the CTSV filter circuit include 5 hard faults, 8 soft faults, and a fault free to be diagnosed, as shown in Table 1. Here, soft faults indicate that the actual value of the component is higher and lower than its nominal value by 50%. Hard faults are caused by components that are short or open. In the simulation circuit, hard faults (open and short faults) are modelled by connecting a 100 MΩ resistor in series and paralleling a 0.01Ω resistor.

In each fault mode, Monte Carlo analysis based on time domain transient analysis is run 80 times, and 80 original samples of each fault class are obtained. The original samples are divided into 50 training sets and 30 testing sets. Then, wavelet packet analysis is used to extract fault features.

5.1.2 Active band-stop filter circuit

The second CUT is an active band-stop filter circuit, as shown in Fig. 9. The tolerance of the resistors and capacitors are set to 5% and 10%, respectively. A single pulse with a height of 5 V and a duration of 10 us is adopted as the input of the circuit. The fault modes of the active band-stop filter circuit include 5 hard faults, 8 soft faults, and a fault free to be diagnosed, as shown in Table 2.

5.2 Simulation results

To demonstrate the advantage of the proposed method in Sect. 4, the proposed method is compared with the following three methods: the GA-SVM [36], PSO-SVM [37], and GWO-SVM [38]. The parameter settings of the above-mentioned methods are listed in Table 3.

The Figs. 10 and 11 show the iterative curves of the best classification accuracy with the four involved methods for hard faults and soft faults in the first CUT, respectively. Figures 12 and 13 show the best fitness curves for hard faults and soft faults in the second CUT, respectively. In Fig. 10, the best classification accuracy of NKCGWO-SVM for hard faults reach 97.6% in the seventh iteration. The maximum classification accuracies of the other three methods, GWO-SVM, PSO-SVM, and GA-SVM, are 96.6%, 94.5%, and 93.6%, respectively. NKCGWO-SVM has a faster convergence rate than the other three methods. In Fig. 11, the maximum classification accuracy of NKCGWO-SVM for soft faults is 95.1%, which is higher than the other three methods, but it is lower than the maximum classification accuracy of the method for hard faults in Fig. 10. The reason is that fault diagnosis for hard faults is easier than fault diagnosis for soft faults. In Figs. 12 and 13, the same results can be obtained; that is, for the second CUT, the proposed method has better recognition ability than GA-SVM, PSO-SVM, and GWO-SVM.

The average diagnosis results of two CUTs using the four involved methods are shown in Tables 4 and 5, respectively. It is shown that NKCGWO-SVM has higher average recognition capability than the other methods in the two CUTs.

To further study the diagnosis performance of the proposed method in the analog circuit, the detailed misclassification analyses of the four involved methods for the second CUT are shown in Figures. 14, 15, 18, 17. Figure 14 shows that 31 of 420 cases are misclassified using GA-SVM. Figures 15, 16, 17 show that 27 of 420 cases are misclassified using PSO-SVM, 21 of 420 cases are misclassified using GWO-SVM, and 19 of 420 cases are misclassified using NKCGWO-SVM. From Fig. 14, 15, 16, 17, we conclude that NKCGWO-SVM has the smallest misclassification compared to GA-SVM, PSO-SVM and GWO-SVM in fault diagnosis of analog circuits.

For different training sets, training sets and testing sets consisting of 20, 40, 60, 80 and 30 samples for each fault class are used. Figure 18 shows that the rate of correct classification of the proposed method is higher than that of the other three methods in different numbers of training samples in the first CUT.

6 Conclusions

In this paper, the NKCGWO method is used to optimize the parameters of SVM for fault diagnosis of analog circuits. The proposed method has good search and convergence performance. NKCGWO-SVM can improve the classification accuracy of SVM by optimizing the penalty parameter C and the kernel parameter γ to evaluate the recognition capability of the proposed method. Experiments on two analog circuits, a video amplifier circuit and an active band-stop filter circuit, are performed. Other methods are also used to perform comparisons. The experimental results demonstrate that the proposed method has higher performance in terms of fault classification than GA-SVM, PSO-SVM and GWO-SVM.

Data availability

The data that have been used in confidential.

References

Liu, Z. B., Jia, Z., Yong, C. M., & Bu, S. H. (2017). Capturing high-discriminative fault features for electronics-rich analog system via deep learning. IEEE Transactions on Industrial Informatics, 13(3), 1213–1226.

Long, B., Tian, S. L., & Wang, H. J. (2012). Diagnostics of filtered analog circuits with tolerance based on LS-SVM using frequency features. Journal of Electronic Testing, 28(3), 291–300.

Michal, T., & Stanislaw, H. (2022). A method for parametric and catastrophic fault diagnosis of analog linear circuits. IEEE Access, 10, 27002–27013.

Bandler, J. W., & Salama, A. E. (1985). Fault diagnostic of analog circuits. Proceedings of the IEEE, 20(2), 1279–1325.

Spina, R., & Upadhyaya, S. (1997). Linear circuit fault diagnosis using neuromorphic analyzers. IEEE Transactions on Circuits and Systems. Part II: Express briefs, 44(3), 188–196.

Aminian, M., & Aminian, F. (2007). A modular fault-diagnostic system for analog electronic circuits using neural networks with wavelet transform as a preprocessor. IEEE Transactions on Instrumentation and Measurement, 56(5), 1546–1554.

Aminian, F., Aminian, M., & Collins, H. W. (2002). Analog fault diagnosis of actual circuits using neural networks. IEEE Transactions on Instrumentation and Measurement, 51(3), 544–550.

Aminian, F., & Aminian, M. (2001). Fault diagnosis of analog circuits using Bayesian neural networks with wavelet transform as preprocessor. Journal of Electronic Testing, 17(1), 29–36.

Yuan, L. F., He, Y. G., Huang, J. Y., & Sun, Y. C. (2010). A new neural- network-based fault diagnosis approach for analog circuits by using Kurtosis and entropy as a preprocessor. IEEE Transactions on Instrumentation and Measurement, 59(3), 586–595.

Zhang, Y., Wei, X. Y., & Jiang, H. F. (2008). One-class classifier based on SBT for analog circuit fault diagnosis. Measurement, 41(4), 371–380.

Duhamal, P., & Rault, J. C. (1979). Automatic tests generation techniques for analog circuits and systems: A review. IEEE Transactions on Circuits and Systems I, 26, 411–440.

Lin, P. M., & Elcherif, Y. S. (1985). Analogue circuits fault dictionary-new approaches and implementation. International Journal of Circuit Theory and Applications, 13(2), 149–172.

Huang, J., Hu, X. G., & Yang, F. (2011). Support vector machine with genetic algorithm for machinery fault diagnosis of high voltage circuit breaker. Measurement, 44(6), 1018–1027.

Tan, Y. H., He, Y. G., Cui, C., & Qiu, G. Y. (2008). A novel method for analog fault diagnosis based on neural networks and genetic algorithms. IEEE Transactions on Instrumentation and Measurement, 57(11), 2631–2639.

Cui, J., & Wang, Y. (2011). A novel approach of analog circuit fault diagnosis using support vector machines classifier. Measurement, 44(1), 281–289.

Mao, J. K., & Mao, X. B. (2012). Application of SVM classifier and fractal feature in circuit fault diagnosis. Advanced Materials Research, 490, 942–945.

Sun, Y. K, Chen, G. J., Li, H. (2007). SVM method for diagnosing analog circuits fault based on testability analysis. Proceedings of the 2007 IEEE International Conference on Mechatronics and Automation, pp. 3452–3456.

Gao, T., Yang, J. L., & Jiang, S. D. (2021). A novel incipient fault diagnosis method for analog circuits based on GMKL-SVM and wavelet fusion features. IEEE Transactions on Instrumentation and Measurement, 70, 1–15.

Sun, X. Y., Cao, C. Q., & Zeng, X. D. (2021). Application of DBN and GWO-SVM in analog circuit fault diagnosis. Scientific Reports, 11(1), 1–14.

Liang, H., Zhu, Y. M., & Zhang, D. Y. (2021). Analog circuit fault diagnosis based on support vector machine classifier and fuzzy feature selection. Electronics, 10(12), 1496–1516.

Vapnik, V., & Cortes, C. (1995). Support-vector networks. Machine Learning, 20(3), 273–297.

Jiang, L. L., Liu, Y. L., Li, X. J., & Chen, A. H. (2010). Gear fault diagnosis based on SVM and multi-sensor information fusion. Journal of Central South University, 41(6), 2184–2188.

Li, H., Zhang, Y. (2009). An algorithm of soft fault diagnosis for analog circuit based on the optimized SVM by GA. In 9th International Conference on Electronic Measurement Instruments, Beijing, pp. 1023–1027.

Sun, J., Wang, C. H., Sun, J., & Wang, L. (2013). Analog circuit soft fault diagnosis based on PCA and PSO-SVM. Journal of Networks, 8(12), 2792–2796.

Soroor, S., & Hossein, N. (2013). Facing the classification of binary problems with a GSA-SVM hybrid system. Mathematical and Computer Modelling, 57(2), 270–278.

Mirjalili, S., Mirjalili, S. M., & Lewis, A. (2014). Grey wolf optimizer. Advances in engineering software, 69(3), 46–61.

Kishor, A., Singh, P. K. (2016). Empirical study of grey wolf optimizer. In 5th International Conference on Soft Computing for Problem Solving, 436, 1037–1049.

Vapnik, V. N. (1999). The Nature of Statistical Learning Theory. Springer-verlag.

Tan, F. M., Zhao, J. J., & Wang, Q. (2019). A grey wolf optimization algorithm with improved nonlinear convergence. Micro-electronics and Computer, 36(5), 89–95.

Abdel-Basset, M., Mohamed, R., & Azeem, S. A. A. (2023). Kepler optimization algorithm: A new metaheuristic algorithm inspired by Kepler’s laws of planetary motion. Knowledge-Based Systems, 268, 1–31.

Sarafrazi, S., & Seydnejad, S. R. (2015). A novel hybrid algorithm of GSA with Kepler algorithm for numerical optimization. Journal of King Saud University Computer and Information Sciences, 27(3), 288–296.

Kohli, M., & Arara, S. (2018). Chaotic grey wolf optimization algorithm for constrained optimization problems. Journal of Computational Design and Engineering, 5(4), 458–472.

Gao, Z. M., Zhao, J., & Zhang, Y. J. (2022). Review of chaotic mapping enabled nature-inspired algorithms. Mathematical Bio-sciences and Engineering, 19(8), 8215–8258.

Mittal, H., Pal,R., Kulhari,A., Saraswat,M. (2016). Chaotic kbest gravitational search algorithm. 2016 Ninth International Conference on Contemporary Computing, Noida, pp. 355–360.

Zhang, Y., Sun, H. X., Wei, Z. L., & Han, B. (2017). Chaotic grey wolf optimization algorithm with adaptive adjustment strategy. Computer Science, 44(11), 119–122.

Chen, S., Zhao, S., Wang, C. (2014). A new analog circuit fault diagnosis approach based on GA-SVM. Tencon IEEE region 10 Conference, Xi’an, pp. 1–4.

Tang, J. Y., Shi, Y. B., & Jiang, D. (2009). Analog circuit fault diagnosis with hybrid PSO-SVM. IEEE Circuit and Systems International Conference on Testing and Diagnosis, 7(2), 1–5.

Eswaramoorthy, S., Sivakumaran, N., & Sekaran, S. (2016). Grey wolf optimization based parameter selection for support vector machines. Compel International Journal for Computation and Mathematics in Electrical and Electronic Engineering, 35(5), 1513–1523.

Author information

Authors and Affiliations

Contributions

P.S.: Methodology, software, investigation, writing—original draft. L.C.: Conceptualization, supervision. K.C.: Validation, writing—review & editing. T.G.:Validation, formal analysis. Y.X.:Validation, formal analysis.

Corresponding author

Ethics declarations

Competing interests

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Song, P., Chen, L., Cai, K. et al. Analog circuit diagnosis based on support vector machine with parameter optimization by improved NKCGWO. Analog Integr Circ Sig Process 119, 497–510 (2024). https://doi.org/10.1007/s10470-023-02194-4

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10470-023-02194-4